Abstract

In this article, we propose a robust star classification methodology that leverages light curves from 15 datasets within the Kepler field in the visible optical spectrum. Using a bagging-based neural network ensemble, the Bagging-Performance Approach Neural Network (BAPANN), which integrates three supervised neural network architectures, we classified 760 light curves representing nine types of stellar variability. Our method achieved classification accuracies of up to 97% using light curve datasets containing 13, 20, 50, 150, and 450 points per star. The BAPANN achieved a minimum error rate of 0.1559 and learned efficiently, requiring an average of 29 epochs. The nine types of stellar variability were classified in 45 tests, considering error levels of 0, 5, and 10 for the light curve samples. These results highlight the BAPANN model’s robustness to uncertainty and its ability to converge in few iterations during learning, training, and validation.

1. Introduction

The classification of the objects in the universe is crucial for building accurate physical models in astrophysics. However, the enormous volume of observational data collected worldwide has become overwhelming and intractable for manual analysis, which calls for automated analysis through fast and powerful methods such as artificial intelligence algorithms. Artificial intelligence offers methods and approaches that can effectively support this heavy task; in particular, applications that identify or classify light curves according to their stellar variability have shown promising results. Some studies have achieved accuracies close to 90% using light curves with about 2000 points, employing Fourier transform techniques and, in some cases, up to 7000 parametric statistics as input for identifying stellar variability. These works, detailed in Table 1, highlight the classification contributions of various authors.

Table 1.

In the 1st and 2nd columns, the references and titles of the works are displayed. The 3rd column presents the number of characteristics each work utilizes, including the type of data (whether a light curve or statistical data), the number of points on the curve, and the number of classes the respective authors consider. The 4th column specifies the method used for either characterization or classification. The 5th column lists the accuracy percentage obtained by the authors and the number of stars included in their respective observation collections.

Multi-wavelength observations are essential to understanding the nature and evolution of astrophysical objects and phenomena, and the volume of such data emphasizes the importance of automatic classification through artificial intelligence and the need to catalog all stellar objects. For stars in particular, observations in the ultraviolet range complement optical, infrared, and X-ray data, among others. The UV band specifically allows the determination of atmospheric properties of solar-type stars, which affect the conditions under which life can exist on exoplanets. In this work, we study light curves in the visible spectrum from the Kepler mission using artificial intelligence methods for variability detection. We consider nine classes of variability: Eclipse; Constant; Aperiodic; Contact/Rotational; Delta Scuti/Beta Cephei; Flare; Gamma Doradus/SPB; RR Lyrae/Cepheids (noting that Beta Cephei stars and Cepheids are distinct categories); and Solar-like stars. Our research is based on the collection of stars provided by Audenaert [5], excluding the Instrument class and adding a class of Flare-type stars. As described below, we analyze 15 datasets with varying numbers of light curve points in the optical domain, with the aim of subsequently classifying datasets in the ultraviolet domain. The approach could be applied to other regions of the sky that, unlike the Kepler field, lack optical light curves, which would require classifying light curves in the NUV. This research aims to establish a standard that can be applied to other regions of the GALEX (Galaxy Evolution Explorer) sky, as referenced in the works of Miles [7], Olmedo [2], and Olmedo [4].

The paper is organized as follows: Section 1 provides the background information. Section 2 introduces the methodology for classifying Kepler light curves using artificial intelligence. Section 3 discusses the experiments conducted, followed by Section 4, which presents the results obtained. Finally, we conclude the paper with our final remarks in Section 5.

2. Methodology for Classifying Kepler Light Curves Using Artificial Intelligence

The first step of this research is to become familiar with the photometric database. The dataset comprises approximately 400,000 photometric data points of stellar sources obtained with the Galaxy Evolution Explorer (GALEX) space telescope [2,4]. These near-ultraviolet (NUV; 2000–2800 Å) light curves correspond to point sources in the field observed by the Kepler telescope. Starting from the GALEX collection, the presence of each source in the Kepler Input Catalog (KIC) is verified. When a match is found, the light curves of sources with multiple observations are selected to form the input dataset, simulating the NUV sources while confirming their presence in the KIC. These data are then used to train the learning algorithms.

Light curves of the selected sources are generated taking into account attributes such as exposure time and NUV flux. Samples with 400, 200, 100, 50, and 20 points per light curve are obtained for each source in the GALEX collection. This stage aims to highlight the characteristics of the light curve points, providing a distinctive profile for each type of light curve to be classified. Twenty-seven parametric statistics are then calculated as a function of the NUV flux (simulated from the optical flux), based on the works of [5,7,8,9]. The neural network architectures used in this research require real-valued inputs normalized between 0 and 1. Therefore, the photometric data are normalized for each vector in the GALEX collection, and each of the 27 statistics is scaled to the range from 0 to 1, as recommended for backpropagation networks [10]; the backpropagation algorithm operates on real values and performs more effectively when its inputs lie in this range. The project aims to classify the types of ultraviolet variability observed in stars or planetary systems into the following categories:

- Group 1 ECLIPSE: Eclipsing Binaries and Transit Events.

- Group 2 CONSTANT: Constant.

- Group 3 APERIODIC: Aperiodic and Long Period.

- Group 4 CONTACT-ROT (Rotational): Rotational and Binary Contact, including Chemically Peculiar stars.

- Group 5 DSCT-BCEP: Delta Scuti (DSCT) and Beta Cephei (BCEP) stars.

- Group 6 FLARE: Stars with Transient Outbursts or Flares.

- Group 7 GDOR-SPB: Gamma Doradus (GDOR) and Slowly Pulsating B-type (SPB) stars.

- Group 8 RRLYR-CEPHEID: RR Lyrae (RRLYR) stars and Cepheid Variables (CEPHEID).

- Group 9 SOLAR-LIKE: Solar-like stars.
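The normalization to the [0, 1] range and the nine-class labeling described above can be sketched as follows. This is a minimal illustration under stated assumptions: each statistic is min-max scaled over all samples, and each group is encoded as a one-hot target vector for the nine output nodes. The names `GROUPS`, `minmax_normalize`, and `one_hot` are hypothetical; the paper does not give its exact normalization formula beyond the target range.

```python
import numpy as np

# The nine variability groups, in the order listed above (hypothetical encoding).
GROUPS = [
    "ECLIPSE", "CONSTANT", "APERIODIC", "CONTACT-ROT", "DSCT-BCEP",
    "FLARE", "GDOR-SPB", "RRLYR-CEPHEID", "SOLAR-LIKE",
]

def minmax_normalize(features: np.ndarray) -> np.ndarray:
    """Scale each column (one statistic) of an (n_samples, n_stats) array to [0, 1]."""
    col_min = features.min(axis=0)
    col_range = features.max(axis=0) - col_min
    # Avoid division by zero for a constant statistic; such columns map to 0.
    col_range[col_range == 0] = 1.0
    return (features - col_min) / col_range

def one_hot(labels: list[str]) -> np.ndarray:
    """Encode group names as one-hot target vectors with nine entries."""
    targets = np.zeros((len(labels), len(GROUPS)))
    for row, label in enumerate(labels):
        targets[row, GROUPS.index(label)] = 1.0
    return targets

# Usage with random stand-in data: 4 stars, 27 raw statistics each.
rng = np.random.default_rng(0)
raw = rng.normal(size=(4, 27)) * 100.0
x = minmax_normalize(raw)
t = one_hot(["ECLIPSE", "FLARE", "SOLAR-LIKE", "ECLIPSE"])
print(x.min(), x.max())  # 0.0 1.0
print(t.shape)           # (4, 9)
```

Min-max scaling is used here because it maps every statistic exactly onto [0, 1]; other scalings would also satisfy the stated range requirement.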

This classification is performed using three distinct neural network architectures:

1. Applying a backpropagation neural network algorithm with pre-labeled samples under a multilayer perceptron architecture with supervised learning, consisting of 17 input nodes, five hidden nodes, and nine output nodes [10].

2. Applying a backpropagation neural network algorithm with pre-labeled samples under a multilayer perceptron architecture with supervised learning, consisting of 17 input nodes, 10 hidden nodes, and nine output nodes [10].

3. Applying a backpropagation neural network algorithm with pre-labeled samples under a multilayer perceptron architecture with supervised learning, consisting of 17 input nodes, 15 hidden nodes, and nine output nodes [10,11,12].
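The three architectures above differ only in the size of the hidden layer. As a quick worked comparison, the sketch below counts the trainable weights and biases of each fully connected 17-H-9 network, assuming one bias per hidden and output node as in the standard backpropagation network of [10]; the helper name `mlp_parameter_count` is hypothetical.

```python
def mlp_parameter_count(n_in: int, n_hidden: int, n_out: int) -> int:
    """Weights plus biases of a single-hidden-layer, fully connected perceptron."""
    input_to_hidden = n_in * n_hidden + n_hidden   # weights + hidden biases
    hidden_to_output = n_hidden * n_out + n_out    # weights + output biases
    return input_to_hidden + hidden_to_output

for hidden in (5, 10, 15):
    print(f"17-{hidden}-9: {mlp_parameter_count(17, hidden, 9)} parameters")
# 17-5-9: 144 parameters
# 17-10-9: 279 parameters
# 17-15-9: 414 parameters
```

Even the largest network (414 parameters) has fewer parameters than the 760 stars in the collection, although the margin narrows as hidden nodes are added.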

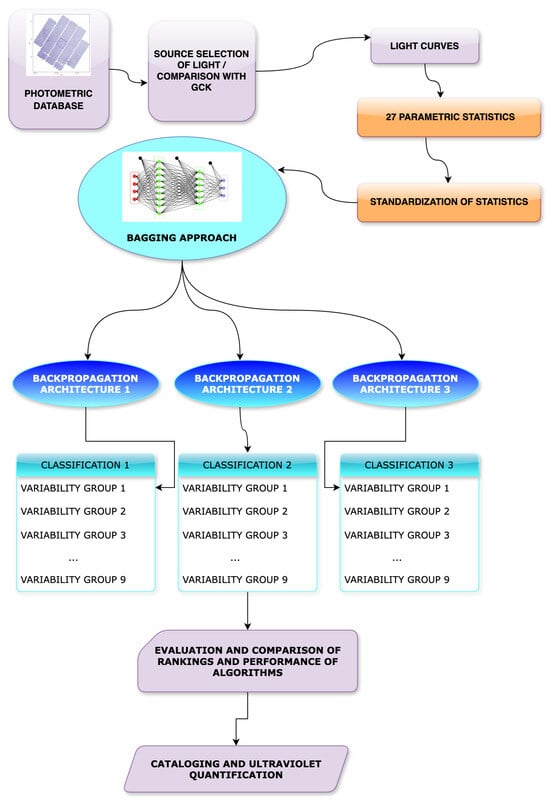

The final result will be a catalog of new datasets labeled with their respective categories and with quantification data, retaining classifications with a certainty percentage above 30%. Figure 1 shows the methodology for classifying Kepler light curves using artificial intelligence.

Figure 1.

Methodology for Classifying Kepler Light Curves Using Artificial Intelligence.
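The text does not define how the certainty percentage is computed. One plausible reading, shown here only as a sketch, treats the output activations of the nine output nodes as class scores, rescales them to sum to 1, and keeps a star in the catalog only when its highest score exceeds 30%. The function `label_with_certainty` and this rescaling are assumptions, not the authors’ procedure.

```python
import numpy as np

# Hypothetical class order for the nine output nodes.
GROUPS = [
    "ECLIPSE", "CONSTANT", "APERIODIC", "CONTACT-ROT", "DSCT-BCEP",
    "FLARE", "GDOR-SPB", "RRLYR-CEPHEID", "SOLAR-LIKE",
]

def label_with_certainty(outputs, threshold=0.30):
    """Return (group or None, certainty) for one star's nine output activations.

    Assumption: certainty is the largest output after rescaling the
    non-negative activations so that they sum to 1.
    """
    scores = np.maximum(np.asarray(outputs, dtype=float), 0.0)
    total = scores.sum()
    if total == 0.0:
        return None, 0.0
    scores = scores / total
    best = int(np.argmax(scores))
    certainty = float(scores[best])
    label = GROUPS[best] if certainty > threshold else None
    return label, certainty

# A confident output is catalogued; a flat one is left unlabeled.
print(label_with_certainty([0.9, 0.05, 0.05, 0, 0, 0, 0, 0, 0])[0])  # ECLIPSE
print(label_with_certainty([0.5] * 9)[0])                            # None
```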

3. Experiments

We begin with the collection of Kepler data and the classification of stellar variability categories, and then outline the experimental setup.

3.1. Kepler Data Collection

The collection contains 760 stars covering nine types of stellar variability. For each star, it includes the observation time, the NUV flux, and the flux error (signal-to-noise error). The 15 datasets are defined in Table 2.

Table 2.

Star data sets show different errors and resolutions.

Each type of variability is trained with 100 examples, except for the APERIODIC class, which includes only 28 examples, and the RRLYR-CEPHEID class, which has 32 training examples. Table 2 provides detailed information on this topic.

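As illustrated in Table 2, the data are divided into 15 sets, corresponding to the five light curve resolutions (13, 20, 50, 150, and 450 points) combined with the three error levels (0, 5, and 10); each set contains multiple data points and a corresponding error value.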
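The class counts and the dataset grid can be checked with a few lines of arithmetic. This sketch only restates numbers given in the text: seven classes with 100 examples plus 28 APERIODIC and 32 RRLYR-CEPHEID examples, and five resolutions with three error levels (Section 4). Pairing every resolution with every error level is an assumption about how Table 2 is organized.

```python
from itertools import product

# Training examples per class, as stated in the text.
class_counts = {
    "ECLIPSE": 100, "CONSTANT": 100, "APERIODIC": 28, "CONTACT-ROT": 100,
    "DSCT-BCEP": 100, "FLARE": 100, "GDOR-SPB": 100, "RRLYR-CEPHEID": 32,
    "SOLAR-LIKE": 100,
}
total_stars = sum(class_counts.values())
print(total_stars)  # 760

# Share of the smallest class, which signals the class imbalance.
print(f"APERIODIC share: {class_counts['APERIODIC'] / total_stars:.1%}")  # APERIODIC share: 3.7%

# Assumed organization of Table 2: every resolution paired with every error level.
resolutions = (13, 20, 50, 150, 450)   # points per light curve
error_levels = (0, 5, 10)              # error levels considered in Section 4
datasets = list(product(resolutions, error_levels))
print(len(datasets))  # 15
```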

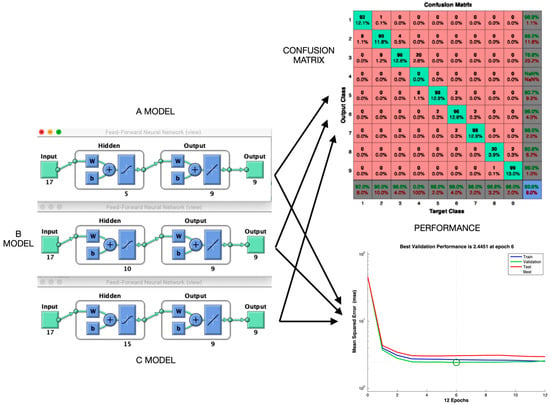

The classification approach employs an ensemble method that runs multiple algorithms independently to improve classification accuracy. Specifically, we use the Bagging-Performance Approach Neural Network (BAPANN), a modified bagging technique. This method runs three neural network algorithms independently, each with a different number of hidden nodes (5, 10, and 15). The network with the highest classification percentage and a performance value closest to zero is selected as the best performer. The performance is computed from the errors, that is, the differences between targets and outputs, obtained as learning progresses over the training samples; Figure 2 shows a histogram of these errors, with the training samples shown in blue.

Figure 2.

The Bagging-Performance Approach Neural Network (BAPANN) is an ensemble system built from three neural networks configured with different numbers of hidden nodes. The optimal architecture is determined by evaluating each network’s confusion matrices and performance metrics based on the training set.

The Bagging-Performance Approach Neural Network (BAPANN) is illustrated in Figure 2.

The core of BAPANN is a multilayer perceptron trained with backpropagation [10], a well-established model known for its high classification performance that often achieves accuracies above 80%. Selecting the best of the three networks is intended to optimize both the classification percentage and the performance value.
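The selection rule described in this section can be sketched as follows. This is a minimal NumPy illustration, not the authors’ implementation: it assumes sigmoid hidden and output units, full-batch gradient descent on the mean squared error (used here as the “performance” value), a fixed learning rate of 1.0, uniform random initial weights, and synthetic stand-in data; all function names are hypothetical. As in the text, the three networks are trained independently on the same data and evaluated on the training set; the highest accuracy wins, and ties are broken by the lower MSE. Unlike classical bagging, no bootstrap resampling is performed.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(params, x):
    v, b_v, w, b_w = params
    return sigmoid(sigmoid(x @ v + b_v) @ w + b_w)

def train_mlp(x, t, n_hidden, epochs=1000, lr=1.0, seed=0):
    """Batch backpropagation for a single-hidden-layer perceptron.

    x: (n_samples, n_inputs) in [0, 1]; t: (n_samples, n_classes) one-hot targets.
    Returns the trained weights and the final mean squared error ("performance").
    """
    rng = np.random.default_rng(seed)
    n, n_in = x.shape
    n_out = t.shape[1]
    v = rng.uniform(-0.5, 0.5, size=(n_in, n_hidden))   # input-to-hidden weights
    b_v = np.zeros(n_hidden)
    w = rng.uniform(-0.5, 0.5, size=(n_hidden, n_out))  # hidden-to-output weights
    b_w = np.zeros(n_out)
    for _ in range(epochs):
        z = sigmoid(x @ v + b_v)   # hidden-node outputs
        y = sigmoid(z @ w + b_w)   # output-node activations
        # Error terms for squared error with sigmoid units (computed before updating w).
        delta_out = (y - t) * y * (1.0 - y)
        delta_hid = (delta_out @ w.T) * z * (1.0 - z)
        # Gradient-descent updates averaged over the batch.
        w -= lr * (z.T @ delta_out) / n
        b_w -= lr * delta_out.mean(axis=0)
        v -= lr * (x.T @ delta_hid) / n
        b_v -= lr * delta_hid.mean(axis=0)
    params = (v, b_v, w, b_w)
    mse = float(np.mean((t - forward(params, x)) ** 2))
    return params, mse

def bapann_select(x, t, hidden_sizes=(5, 10, 15)):
    """Train one network per hidden size; keep the highest accuracy, then lowest MSE."""
    candidates = []
    for h in hidden_sizes:
        params, mse = train_mlp(x, t, h)
        acc = float(np.mean(forward(params, x).argmax(axis=1) == t.argmax(axis=1)))
        candidates.append((acc, mse, h))
    best_acc, best_mse, best_h = max(candidates, key=lambda c: (c[0], -c[1]))
    return best_h, best_acc, best_mse

# Synthetic stand-in data: 9 classes x 20 samples, 17 normalized statistics each.
rng = np.random.default_rng(1)
centers = rng.uniform(0.2, 0.8, size=(9, 17))
labels = np.repeat(np.arange(9), 20)
x = np.clip(centers[labels] + rng.normal(0.0, 0.05, size=(180, 17)), 0.0, 1.0)
t = np.eye(9)[labels]
hidden, acc, mse = bapann_select(x, t)
print(f"selected 17-{hidden}-9: accuracy {acc:.1%}, MSE {mse:.4f}")
```

Ranking by accuracy first and MSE second mirrors the two criteria named in the text; a different tie-breaking order would be an equally valid reading.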

3.2. Stellar Variability Categories

Each type of stellar variability to be recognized by the artificial intelligence code is defined below. This research aims to classify nine groups of stellar variability using optical fluxes that simulate ultraviolet photometry. A sample light curve of each of the nine classes is shown in Figures 3 to 11. The dataset was carefully selected; however, some irregularities can be seen in the data because of instrument noise and interruptions in the readings between points during sampling. Nonetheless, the results in Section 4 indicate that the neural network models are robust to disturbances, uncertainty, and noise in the dataset, and their performance was not adversely affected during the training or learning stages.

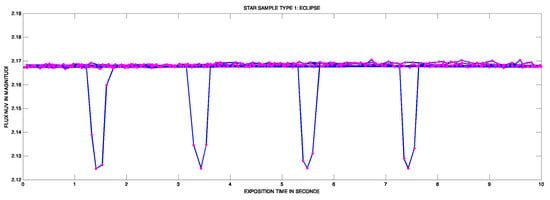

Figure 3.

The light curve of a Group 1 star, specifically an eclipse event, consists of 450 data points collected over 27.5 days. The flux value is measured in terms of the ultraviolet light magnitude, which is represented on the y-axis. The x-axis denotes the exposure time in seconds.

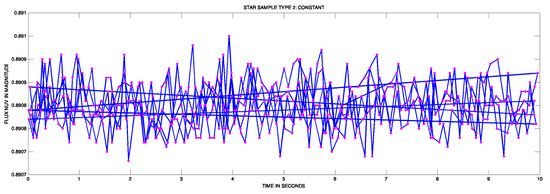

Figure 4.

The light curve of a Group 2 star, which exhibits a constant flux, consists of 450 data points collected over 27.5 days. The flux value is measured in terms of the ultraviolet light magnitude, represented on the y-axis, while the x-axis indicates the exposure time in seconds.

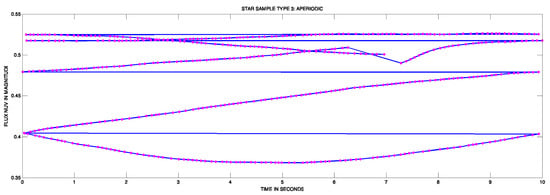

Figure 5.

The light curve of a Group 3 star, characterized by aperiodic behavior, consists of 450 data points collected over 27.5 days. The flux value is measured in terms of the ultraviolet light magnitude, represented on the y-axis, while the x-axis denotes the exposure time in seconds.

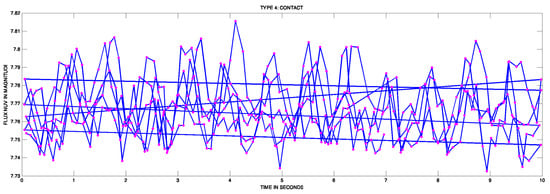

Figure 6.

The light curve of a Group 4 star exhibiting CONTACT-ROT behavior consists of 450 data points collected over 27.5 days. The flux value is measured in terms of the ultraviolet light magnitude, represented on the y-axis, while the x-axis indicates the exposure time in seconds.

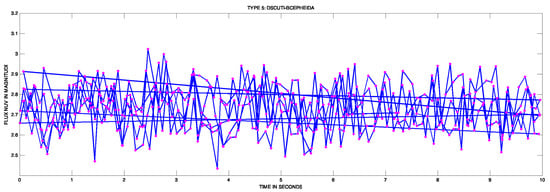

Figure 7.

The light curve of a Group 5 star exhibiting DSCT-BCEP (Delta Scuti/Beta Cephei) characteristics consists of 450 data points collected over 27.5 days. The flux value is measured in terms of the ultraviolet light magnitude, represented on the y-axis, while the x-axis indicates the exposure time in seconds.

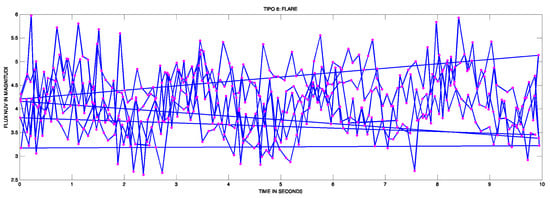

Figure 8.

The light curve of a Group 6 star, identified as a Flare star, comprises 450 data points collected over 27.5 days. The flux value, measured in ultraviolet light magnitude, is represented on the y-axis, while the x-axis indicates the exposure time in seconds.

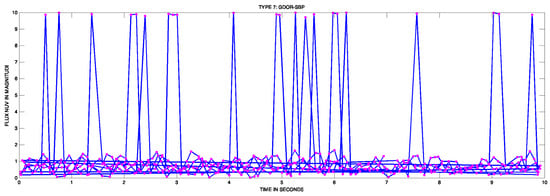

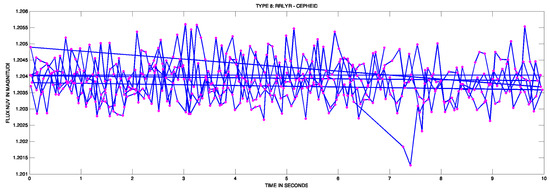

Figure 9.

The light curve of a Group 7 star, classified as Gdor-SPB, consists of 450 data points collected over 27.5 days. The flux value, measured in terms of ultraviolet light magnitude, is represented on the y-axis, while the x-axis signifies the exposure time in seconds.

Figure 10.

The light curve of a Group 8 star, identified as RRLYR-CEPHEID, comprises 450 data points collected over 27.5 days. The flux value, measured in ultraviolet light magnitude, is represented on the y-axis, while the x-axis indicates the exposure time in seconds.

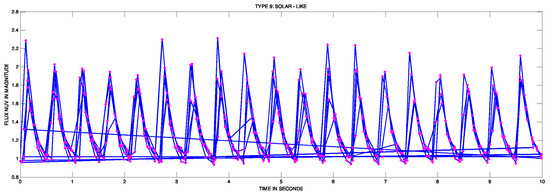

Figure 11.

The light curve of a Group 9 star, classified as Solar-like, consists of 450 data points collected over 27.5 days. The flux value, measured in terms of ultraviolet light magnitude, is represented on the y-axis, while the x-axis indicates the exposure time in seconds.

The definitions for these groups are provided below:

- Group 1 ECLIPSE (Eclipsing Binaries and Transit Events): Eclipsing and transiting systems are a class of objects that exhibit extrinsic variability in the form of periodic transits or eclipses [5]. An example light curve is shown in Figure 3.

- Group 2 CONSTANT (Constant): Constant stars are a class of objects that do not show any statistically significant variability on a timescale of 27.5 days [5]. An example light curve is shown in Figure 4.

- Group 3 APERIODIC (Aperiodic and Long Period): This group comprises aperiodic variable stars, whose brightness changes do not show any regular or repeating pattern over time, and long-period variables such as Mira and semiregular stars (following the definitions of the American Association of Variable Star Observers, AAVSO). An example light curve is shown in Figure 5.

- Group 4 CONTACT-ROT (Rotational and Binary Contact): Another form of geometric variability is caused by stellar rotation. Rotating, or rotational, variables are stars that dim by a few percent as they rotate because of starspots [13]. For this light curve, 450 points were taken over 10 months, with time and the near-ultraviolet flux measured on the x and y axes, respectively.

Chemically peculiar (CP) stars, on the other hand, have a variability period equal to the star’s rotation period. CP stars are a class of stars with distinctly unusual metal abundances, at least in their surface layers [13]. A sample light curve of this group is shown in Figure 6.

- Group 5 DSCT-BCEP (Delta (δ) Scuti and Beta (β) Cephei): δ Scuti and β Cephei stars are two classes of pulsating variables with low-order radial and non-radial pressure and gravity modes, excited mainly through the κ (opacity) mechanism acting in the partial ionization zone of helium (δ Scuti stars) and of the iron group (β Cephei stars). β Cep stars have masses between 8 and 25 M☉, and their pulsation periods range from 2 to 8 h. The less massive δ Sct stars cover the 1.5 to 2.5 M☉ mass range and have periods from about 15 min to about 8 h. Therefore, the two classes overlap significantly in terms of pulsation periods [5]. A sample light curve of this type of star is shown in Figure 7.

- Group 6 FLARE (Transient or Flare Stars): Flares are believed to occur because of a magnetic reconnection event that creates a beam of charged particles impacting the stellar photosphere, generating rapid heating and emission at almost all wavelengths. Because the initial heating produces very hot gas, the flare is most pronounced at short wavelengths, namely the ultraviolet and X-rays [14]. A sample light curve of this type is shown in Figure 8.

- Group 7 GDOR-SPB (Gamma (γ) Doradus and Slowly Pulsating B-type Stars): Gamma Doradus stars are variable stars that exhibit luminosity variations due to non-radial pulsations in their surface layers [15]. An example light curve is shown in Figure 9.

- Group 8 RRLYR-CEPHEID (RR Lyrae and Cepheid Stars): Classical pulsators (RRLyr/Ceph class) are low- to intermediate-mass evolved stars whose intrinsic pulsation variability is driven by the opacity mechanism acting in the partial ionization zone of helium [5]. An example light curve is shown in Figure 10.

- Group 9 SOLAR-LIKE (Solar-like Stars): Solar-like pulsators (solar class) are intrinsically variable stars showing oscillations driven by turbulent convective motions near their surfaces. Any star with an outer convective zone is expected to show such stochastically excited oscillations [5]. An example light curve is shown in Figure 11.

4. Results

The Bagging-Performance Approach Neural Network (BAPANN) training process begins with an initial training session to test the classification level that BAPANN can achieve. It was initially tested with 760 stars, each with 13, 20, 50, 150, and 450 light curve points to be classified, considering error levels of 0, 5, and 10.

The set initially comprised 27 statistics. However, some statistics hindered the training of the BAPANN. After a study of discriminant and non-discriminant features, the input was reduced to 17 parametric statistics.

The number of hidden nodes was selected following a recommendation in [10] that the number of hidden nodes be around 50% of the number of input nodes. For our case, 17 input nodes imply approximately 8.5 hidden nodes, which we rounded up to 10. We therefore tested this recommendation (10 hidden nodes) and varied it with an upper bound of 15 and a lower bound of 5 hidden nodes. This range was chosen to prevent overtraining and to keep learning stable even if some architectures fail to learn the training examples correctly. Our methodology trained three architectures for 46 training tests:

- (a) BAPANN configuration 1: 17 input nodes, five hidden nodes, and nine output nodes (17-5-9 configuration).

- (b) BAPANN configuration 2: 17 input nodes, 10 hidden nodes, and nine output nodes (17-10-9 configuration).

- (c) BAPANN configuration 3: 17 input nodes, 15 hidden nodes, and nine output nodes (17-15-9 configuration).

The results were obtained through an initial setup to select discriminant features, followed by a second configuration in Parametric Statistics, a Test of the Training Process, and an Analysis of Confusion Matrices.

4.1. Initial Setup to Choose Discriminant Features

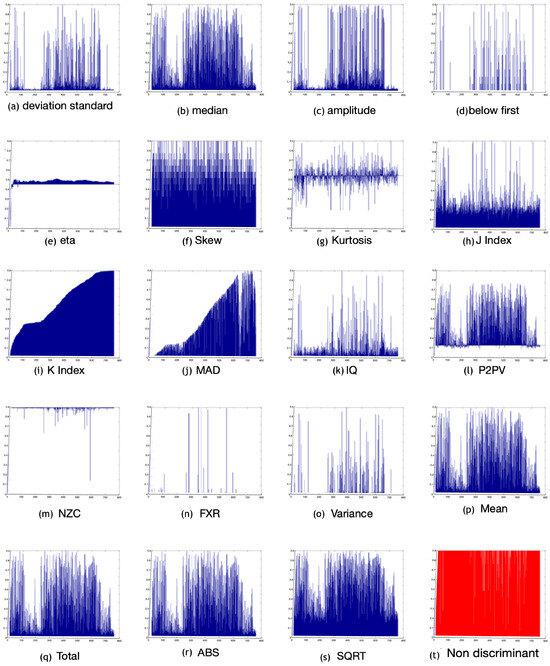

The advantage of the BAPANN approach is that the features can be refined by carefully analyzing the 27 statistics and separating discriminant from non-discriminant ones. Each statistic was graphed to visually identify those relevant to learning and to eliminate those that hinder it. Non-discriminant statistics appear in red in Figure 12 because their graphs show no variation; that is, their feature vector is essentially flat and unchanging across the samples.

Figure 12.

Discriminant statistics and an example of a non-discriminant statistic. A discriminant statistic displays variations in its graph (blue), whereas a non-discriminant statistic shows a filled, continuous, and unchanging graph (red). The x-axis represents the 760 star samples, and the y-axis represents the value of each statistic, normalized to real values between 0 and 1.

The non-discriminant statistics, which do not contribute to the neural network’s classification process, were identified and removed. This left 17 discriminant statistics that influence the classification process.

The non-discriminant features include beyond the first, sum of square standard deviation, max slope, median slope, absolute slope, consecutive points, the absolute value of the total flux multiplied by the inverse of the number of points in the observation, the ratio of the difference to the sum of the maximum and minimum of the flux and errors, and sign.

The discriminant features include standard deviation, median flux, amplitude, below the first, the third power of the difference between two successive flux values, kurtosis (rth moment about the mean), J index, K index, the median of the differences between the flux and the median of the flux, interquartile range, non-zero crossing, flux ratio, average, total flux, absolute, and square root.

An example of the discriminant statistics selected, along with an example of non-discriminant statistics, can be seen in Figure 12.
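The feature extraction and screening steps can be sketched as follows. The formulas are common textbook definitions assumed for illustration (for example, amplitude is taken as half the peak-to-peak flux and the K index follows Stetson’s definition); the paper does not state its exact formulas. The function `drop_flat_statistics` replaces the visual inspection of Figure 12 with a simple spread threshold, a hypothetical automated proxy for discarding flat, non-discriminant statistics.

```python
import numpy as np

def light_curve_features(flux, err):
    """Compute a subset of the discriminant statistics (assumed definitions)."""
    flux = np.asarray(flux, dtype=float)
    err = np.asarray(err, dtype=float)
    n = flux.size
    mean = flux.mean()
    median = np.median(flux)
    q75, q25 = np.percentile(flux, [75, 25])
    # Stetson K index: mean absolute over RMS of the error-normalized residuals.
    delta = np.sqrt(n / (n - 1)) * (flux - mean) / err
    stetson_k = np.mean(np.abs(delta)) / np.sqrt(np.mean(delta ** 2))
    # Excess kurtosis from the 2nd and 4th central moments.
    m2 = np.mean((flux - mean) ** 2)
    m4 = np.mean((flux - mean) ** 4)
    return {
        "std": flux.std(ddof=1),
        "median": median,
        "amplitude": (flux.max() - flux.min()) / 2.0,  # half peak-to-peak
        "kurtosis": m4 / m2 ** 2 - 3.0,
        "mad": np.median(np.abs(flux - median)),      # median absolute deviation
        "iqr": q75 - q25,
        "stetson_k": stetson_k,
    }

def drop_flat_statistics(matrix, names, min_std=1e-3):
    """Keep only statistics (columns) whose spread across stars (rows) exceeds min_std."""
    keep = matrix.std(axis=0) > min_std
    return matrix[:, keep], [name for name, k in zip(names, keep) if k]

# Four synthetic sinusoidal stars, 450 points over 27.5 days, plus a flat column.
rng = np.random.default_rng(0)
time = np.linspace(0.0, 27.5, 450)
err = np.full(time.size, 0.005)
rows = []
for amp in (0.01, 0.05, 0.1, 0.2):
    flux = 1.0 + amp * np.sin(2 * np.pi * time / 3.0) + rng.normal(0.0, 0.005, time.size)
    feats = light_curve_features(flux, err)
    rows.append(list(feats.values()) + [0.5])  # last column never changes
names = list(feats.keys()) + ["constant"]
kept, kept_names = drop_flat_statistics(np.array(rows), names)
print(kept_names)
```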

4.2. Second Configuration in Parametric Statistics

Once it was established which statistics were effective for classification, the type of architecture for more robust training was determined. Seventeen statistics were identified as adequate for training, leading to the three BAPANN architectures detailed above.

Some training sessions revealed that certain statistics yielded values too small to be considered relevant for learning when the datasets contained 50, 150, or 450 light points per star curve. In such cases, the Average, Total, and Absolute statistics were eliminated from the input training set, leaving only 17 statistics for tests 20 and 21, which achieved the highest classification accuracy of 97.8% for nine stellar variability types and 760 stars (see Table 3).

Table 3.

Star datasets are provided at different error levels and resolutions for training purposes.

4.3. Test of Training Process

The training was performed on each dataset with the three BAPANN architectures detailed above. Each test reported the following elements:

- The trained architecture: BAPANN architecture 1, 2, or 3, with 17 input nodes, nine output nodes, and a varying number of hidden nodes.

- The number of epochs required for each architecture to learn the dataset.

- The classification percentage obtained in each case.

- The performance of the neural network.

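Forty-five detailed tests are described in Table 4. Additionally, the update operation of the hidden nodes is given in expression (3):

$$z_j = f\left(z_{\mathrm{in},j}\right), \qquad z_{\mathrm{in},j} = v_{0j} + \sum_{i=1}^{n} x_i\, v_{ij} \tag{3}$$

where $z_j$ is the output of the hidden-node activation function, $z_{\mathrm{in},j}$ is the net input to hidden node $j$, $x_i$ is the $i$-th input statistic, and $v_{ij}$ and $v_{0j}$ are the input-to-hidden weights and bias.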

Table 4.

Correct classification by class and by instance for the five confusion matrices that yielded classification percentages near 99%. (*In this test, only 17 statistics were required; (1) Average, (2) Total, and (3) Absolute were removed from the input data.) DOTS indicates the resolution of the light curve.

4.4. Analysis of Confusion Matrices

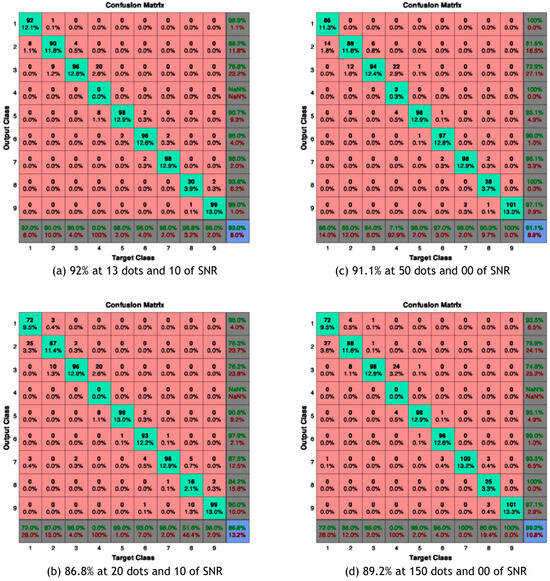

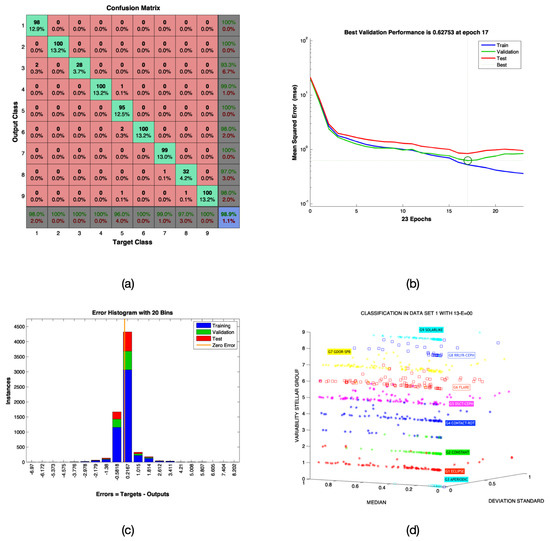

The confusion matrices show the percentages of correct and incorrect classifications, false positives, and false negatives, and help identify categories that are difficult for the model to classify. This information may indicate the need to reconsider some categories or to reinforce the model architecture. Five examples of confusion matrices, yielding percentages of 92.6%, 96.8%, 97.8%, 96.1%, and 97.4% for the nine classes of stellar variability, are shown in Figure 13 and Figure 14a.

Figure 13.

Four confusion matrices (CMs) with 92.0%, 96.6%, 97.8%, and 95.1% recognized instances.

Figure 14.

(a) Confusion matrix resulting from test 9, with 98.9% (blue square) recognized instances. (b) Convergence of learning performance, reached at epoch 17 with a value of 0.62753. (c) Example of a histogram of learning errors in 20 bins, achieving a minimum error of 0.2167, which reflects that the difference between the labels and what the model learns is very close to 0. (d) Stellar variability classification of 760 stars, represented in three dimensions, with x = mean, y = amplitude (w), and z = stellar variability class.

In particular, GROUP 3 APERIODIC, the class with the fewest training examples (28), is the class that obtains 0% correct classification in some of the confusion matrices, as shown in Figure 14a.

Table 4 lists the best percentages selected for different cases, where DOTS is the number of points that describe the light curve; each entry corresponds to the dataset and error level for which the best percentage was achieved among the 15 light curve datasets. The error originates from the instrument providing the data, in the form of noise or static in the signal or telescope. Although this noise is visible in the graphs, it did not prevent the ensemble of neural networks from classifying effectively: despite these artifacts in the light curve samples, the ensemble was robust to the uncertainty, ambiguity, and noise introduced by static or instrument measurements, and their effect on the classification performed by the neural networks was not significant.
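The per-class and overall percentages read from the confusion matrices can be computed as in the following sketch. It assumes integer class indices 0 to 8 in the order of the nine groups and uses toy labels, not the paper’s results; the function names are hypothetical. A class such as APERIODIC receives 0% when none of its instances fall on the diagonal, even when other classes are classified well.

```python
import numpy as np

def confusion_matrix(true_labels, predicted_labels, n_classes=9):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for true_c, pred_c in zip(true_labels, predicted_labels):
        cm[true_c, pred_c] += 1
    return cm

def class_rates(cm):
    """Per-class recall and precision, plus overall accuracy."""
    correct = np.diag(cm).astype(float)
    support = cm.sum(axis=1)    # true instances of each class
    assigned = cm.sum(axis=0)   # instances predicted as each class
    recall = np.divide(correct, support, out=np.zeros(len(cm)), where=support > 0)
    precision = np.divide(correct, assigned, out=np.zeros(len(cm)), where=assigned > 0)
    accuracy = correct.sum() / cm.sum()
    return recall, precision, accuracy

# Toy labels (indices follow the group order); index 2 stands for APERIODIC.
true_labels = [0, 0, 1, 1, 2, 2, 2, 3, 3]
predicted = [0, 0, 1, 1, 3, 3, 3, 3, 3]
cm = confusion_matrix(true_labels, predicted)
recall, precision, accuracy = class_rates(cm)
print(f"APERIODIC recall: {recall[2]:.0%}")  # APERIODIC recall: 0%
print(f"overall accuracy: {accuracy:.1%}")   # overall accuracy: 66.7%
```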

5. Conclusions

It is essential to mention that for datasets with fewer points on the light curve, the classification percentage decreases more markedly than for datasets with more points. Another determining factor is the noise error in the signal accompanying each dataset: the smaller the dataset and the greater the noise, the more difficult the classification. Regarding the architectures, having more nodes in the hidden layer can improve learning, which also leads to a higher classification percentage. In addition, input features must be selected carefully, visually distinguishing the discriminant ones, which helps improve or even accelerate learning and avoid overtraining. The architectures with the highest percentages have 10 to 15 nodes in the hidden layer, while the architecture with five nodes does not achieve the highest percentages. Achieving percentages of up to 91% with only 13 light curve points suggests that BAPANN architectures 2 and 3 (with 10 and 15 hidden nodes, respectively) are reliable models for categorizing stellar variability using only 17 features (parametric statistics) in the input layer. With 50 light curve points, eight types of stellar variability could be classified, which surpasses all the state-of-the-art works reviewed. A maximum correct classification rate of 98.9% is achieved, and 97.8% in the best case. Both values are above the state-of-the-art maximum of 94.9%. The most effective architecture in our BAPANN ensemble is a multilayer perceptron trained with the backpropagation algorithm with 10 hidden nodes. Even with just 17 carefully selected statistics, percentages above 90% are possible.

Author Contributions

Conceptualization, methodology and software: L.F.-P.; Investigation, writing—original draft preparation, writing—review, visualization and editing: L.I.B.-S.; Validation, data curation resources, and formal analysis, M.T.O.-A.; Supervision, project administration, funding acquisition, B.P.G.-V. All authors have read and agreed to the published version of the manuscript.

Funding

We thank CONAHCYT for providing the National Sabbatical Stay scholarship, code 2022-000013-01NACV-00106.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

We extend our heartfelt gratitude to Jeroen Audenaert from the Massachusetts Institute of Technology (MIT) in Cambridge, USA, for his generosity in providing us with his collection of stars, which constitutes a substantial portion of our test data for this research. The data are available upon request to the corresponding authors.

Acknowledgments

We extend our sincere gratitude to INAOE for their invaluable support. Special thanks to Miguel Chávez and Emanuele Bertone for their time, and to José Ramón Valdés for facilitating essential connections and resources.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

| Abbreviation | Meaning |
|---|---|
| BAPANN | Bagging-Performance Approach Neural Network |
| MGaussianM | Mixture Gaussian Model |
| BNC | Bayesian networks classifier |
| ANNC | Artificial neural networks classifier |
| SVM | Support Vector Machine |
| GALEX UV | Galaxy Evolution Explorer space telescope |
| NUV | Near-ultraviolet band |
| TFD | TrES Lyr1 (which includes the Kepler field) light curves, parameterized through a discrete Fourier analysis |
| SAX | Symbolic Aggregate Approximation |
| SMOTE | Synthetic Minority Over-sampling Technique |
| BN | Bayesian Network |
| RFGC | Random Forest method |
| TESS | NASA Transiting Exoplanet Survey Satellite |
| SORTING HAT | Sorting Hat method |
| GBGC | Gradient Boosting |
| T’DA | TESS Data for Asteroseismology |
| Q9 | Kepler observing quarter 9 |
| UV | Ultraviolet band |
| DOTS | Dots (points) per light curve of the star |
| SNR | Signal-to-noise ratio |

References

1. Debosscher, J.; Sarro, L.M.; Aerts, C.; Cuypers, J.; Vandenbussche, B.; Garrido, R.; Solano, E. Automated supervised classification of variable stars. I. Methodology. Astron. Astrophys. 2007, 475, 1159–1183.

2. Olmedo, M.; Lloyd, J.; Mamajek, E.E.; Chávez, M.; Bertone, E.; Martin, D.C.; Neill, J.D. Deep GALEX UV Survey of the Kepler Field. I. Point Source Catalog. Astrophys. J. 2015, 813, 100.

3. Bass, G.; Borne, K. Supervised ensemble classification of Kepler variable stars. Mon. Not. R. Astron. Soc. 2016, 459, 3721–3737.

4. Olmedo Aguilar, N.D. Fuentes Variables Ultravioleta en el campo de Kepler. PhD Thesis, INAOE, San Andrés Cholula, Mexico, 2017.

5. Audenaert, J.; Kuszlewicz, J.S.; Handberg, R.; Tkachenko, A.; Armstrong, D.J.; Hon, M.; Kgoadi, R.; Lund, M.N.; Bell, K.J.; Bugnet, L.; et al. TESS data for asteroseismology (T’DA) stellar variability classification pipeline: Setup and application to the Kepler Q9 data. Astron. J. 2021, 162, 209.

6. Barbara, N.H.; Bedding, T.R.; Fulcher, B.D.; Murphy, S.J.; Reeth, T.V. Classifying Kepler light curves for 12,000 A and F stars using supervised feature-based machine learning. Mon. Not. R. Astron. Soc. 2022, 514, 2793–2804.

7. Miles, B.E.; Shkolnik, E.L. HAZMAT. II. Ultraviolet variability of low-mass stars in the GALEX Archive. Astron. J. 2017, 154, 67.

8. Kim, S.T.; Cai, W.; Jin, F.F.; Yu, J.Y. ENSO stability in coupled climate models and its association with mean state. Clim. Dyn. 2014, 42, 3313–3321.

9. Wittenmyer, R.A.; Butler, R.P.; Tinney, C.G.; Horner, J.; Carter, B.D.; Wright, D.J.; Jones, H.R.; Bailey, J.; O’Toole, S.J. The Anglo-Australian planet search XXIV: The frequency of Jupiter analogs. Astrophys. J. 2016, 819, 28.

10. Fausett, L.V. Fundamentals of Neural Networks: Architectures, Algorithms, and Applications; Prentice-Hall: Hoboken, NJ, USA, 1994.

11. Rokach, L. Pattern classification using ensemble methods. Ser. Mach. Percept. Artif. Intell. 2010, 75, 225.

12. Sewell, M. Ensemble learning. Res Note 2008, 11, 1–34.

13. Preston, G.W. The chemically peculiar stars of the upper main sequence. Annu. Rev. Astron. Astrophys. 1974, 12, 257–277.

14. Segura, A.; Walkowicz, L.M.; Meadows, V.; Kasting, J.; Hawley, S. The effect of a strong stellar flare on the atmospheric chemistry of an Earth-like planet orbiting an M dwarf. Astrobiology 2010, 10, 751–771.

15. Antoci, V.; Cunha, M.S.; Bowman, D.M.; Murphy, S.J.; Kurtz, D.W.; Bedding, T.R.; Borre, C.C.; Christophe, S.; Daszyńska-Daszkiewicz, J.; Fox-Machado, L. The first view of δ Scuti and γ Doradus stars with the TESS mission. Mon. Not. R. Astron. Soc. 2019, 490, 4040–4059.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).