RTOB SLAM: Real-Time Onboard Laser-Based Localization and Mapping

Abstract

1. Introduction

1.1. Context

1.2. Related Work

1.3. Contributions

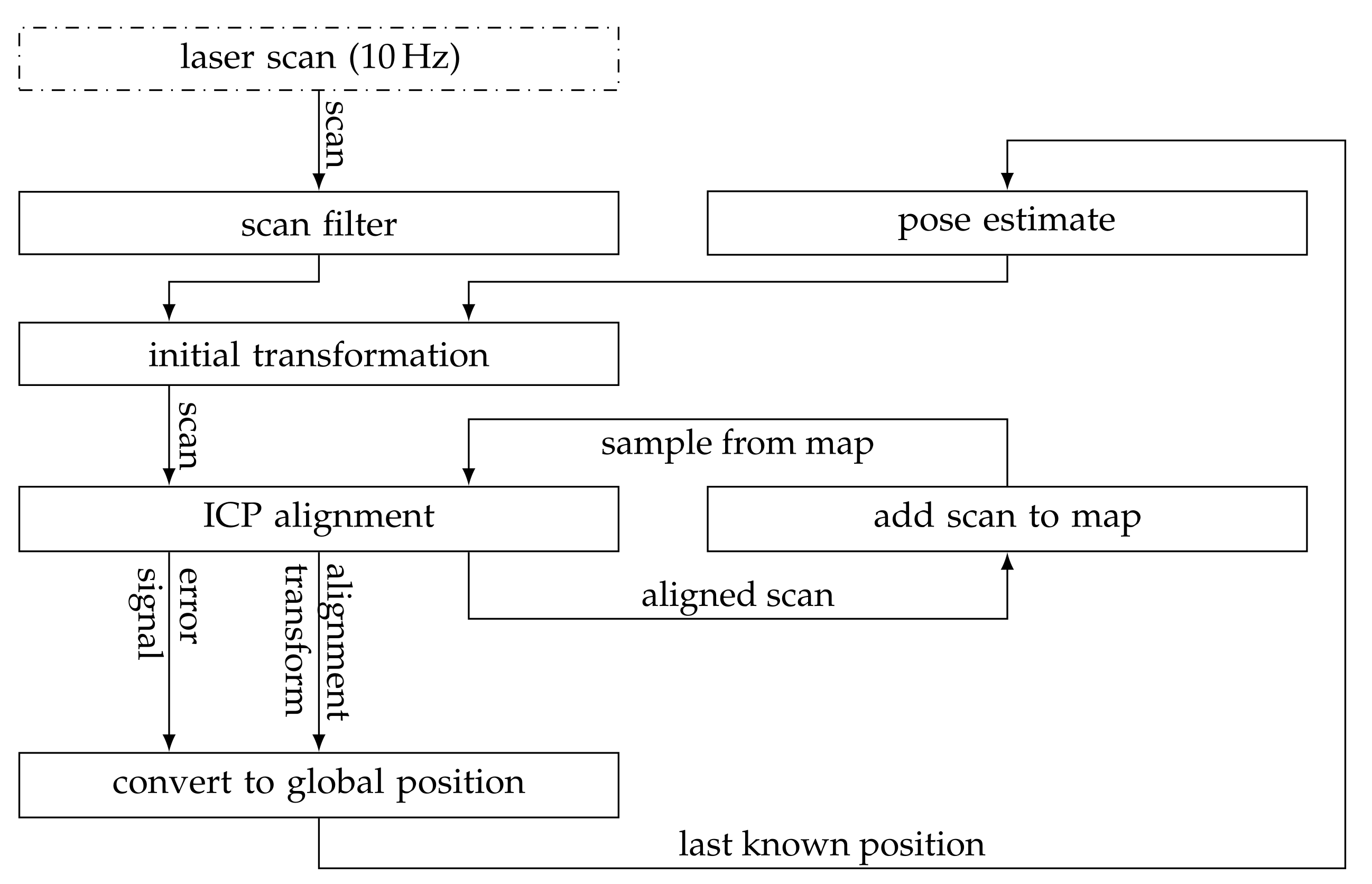

2. Localization and Mapping

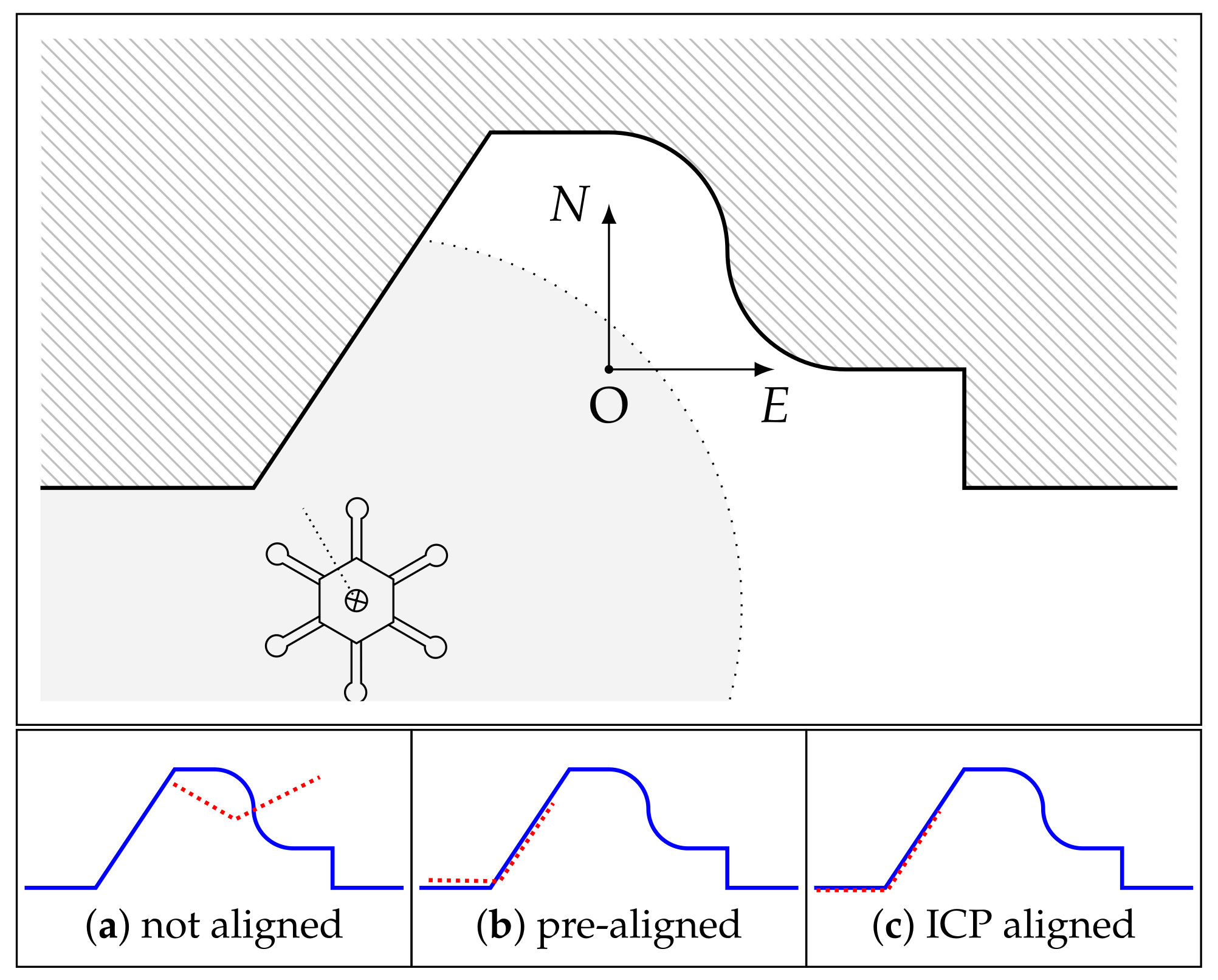

2.1. Scan Alignment

2.1.1. Scan Filter

2.1.2. Initial Alignment

2.1.3. ICP Alignment

- For each point in the scan, find the nearest neighbor point in the map.

- Find the transformation that minimizes the cost function J, where J is given by the sum of all point-to-point distances between the scan points and their nearest neighbors. The cost is minimized by solving a least-squares system. The commonly used Point Cloud Library (PCL) uses the highly efficient singular value decomposition for this [21].

- Transform all points with the transformation found in the previous step and iterate until the problem has converged (see Section 2.3).
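The three steps above can be sketched in a few lines. The following is a minimal 2D point-to-point ICP illustration, not the authors' implementation: it assumes J is the sum of squared point-to-point distances (what a least-squares solution minimizes), uses a brute-force nearest-neighbor search, and replaces the SVD used by PCL with the equivalent closed-form solution for a 2D rotation angle. All function names, `max_iterations`, and `tol` are hypothetical.

```python
import math

def nearest_neighbor(p, map_points):
    """Brute-force nearest neighbor of point p among map_points."""
    return min(map_points, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)

def best_rigid_transform(src, dst):
    """Least-squares 2D rotation angle and translation mapping src onto dst.

    Closed-form 2D solution (assumption: used here instead of an SVD).
    """
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    s_cos = s_sin = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy          # centered source point
        bx, by = qx - dx, qy - dy          # centered target point
        s_cos += ax * bx + ay * by         # dot-product term
        s_sin += ax * by - ay * bx         # cross-product term
    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)

def apply_transform(points, theta, tx, ty):
    """Rotate points by theta, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]

def icp(scan, map_points, max_iterations=20, tol=1e-12):
    """Align scan to map_points; returns aligned points and the total (theta, tx, ty)."""
    pts = list(scan)
    total_theta, total_tx, total_ty = 0.0, 0.0, 0.0
    prev_err = float("inf")
    for _ in range(max_iterations):
        # Step 1: nearest-neighbor correspondences
        matches = [nearest_neighbor(p, map_points) for p in pts]
        # Step 2: transformation minimizing the squared-distance cost
        theta, tx, ty = best_rigid_transform(pts, matches)
        # Step 3: transform all points and accumulate the overall pose change
        pts = apply_transform(pts, theta, tx, ty)
        c, s = math.cos(theta), math.sin(theta)
        total_tx, total_ty = (c * total_tx - s * total_ty + tx,
                              s * total_tx + c * total_ty + ty)
        total_theta += theta
        err = sum((p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2
                  for p, q in zip(pts, matches)) / len(pts)
        if abs(prev_err - err) < tol:      # converged: error no longer changes
            break
        prev_err = err
    return pts, (total_theta, total_tx, total_ty)
```

The brute-force search keeps the sketch short; a real-time implementation would typically use a spatial index such as a k-d tree for the nearest-neighbor step.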

2.1.4. Update and Output

2.2. Position Estimation

2.3. ICP Alignment Details

2.3.1. Number of Iterations

2.3.2. Sampling from the Map

- Case a): If and the reference map is a random sample drawn from the whole past. This is very close to an occupancy grid.

- Case b): If and the reference map can be based—depending on the choice of — on the most recent observations. If the environment changed at time where is less than the RTOB-SLAM performance will not be influenced by this change.
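The difference between the two cases can be illustrated with a small sketch. It is only an illustration of "sample from the whole past" versus "sample from the most recent observations" and does not reproduce the paper's actual parameters; the names `history`, `map_size`, and `window` are hypothetical and are not the symbols used in the original text.

```python
import random

def sample_reference_map(history, map_size, window=None, rng=None):
    """Draw a reference map from past observations.

    history:  list of (scan_index, point) tuples (hypothetical layout)
    map_size: maximum number of points in the reference map
    window:   None -> sample from the whole past (case a);
              k    -> sample only from the k most recent scans (case b)
    """
    rng = rng or random.Random()
    if not history:
        return []
    if window is None:
        candidates = history
    else:
        newest = max(idx for idx, _ in history)
        candidates = [(idx, pt) for idx, pt in history if idx > newest - window]
    k = min(map_size, len(candidates))
    return rng.sample(candidates, k)

# Example: 100 past scans with one point each
history = [(i, (float(i), 0.0)) for i in range(100)]
whole_past = sample_reference_map(history, map_size=20)              # case a
recent_only = sample_reference_map(history, map_size=20, window=10)  # case b
```

With a finite window, observations older than the window can never enter the reference map, which matches the statement that sufficiently old changes in the environment no longer influence the alignment.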

2.3.3. Loop-Closure in Static Environments

3. Results

3.1. Experimental Setup

3.2. Experimental Results

3.2.1. Simulation

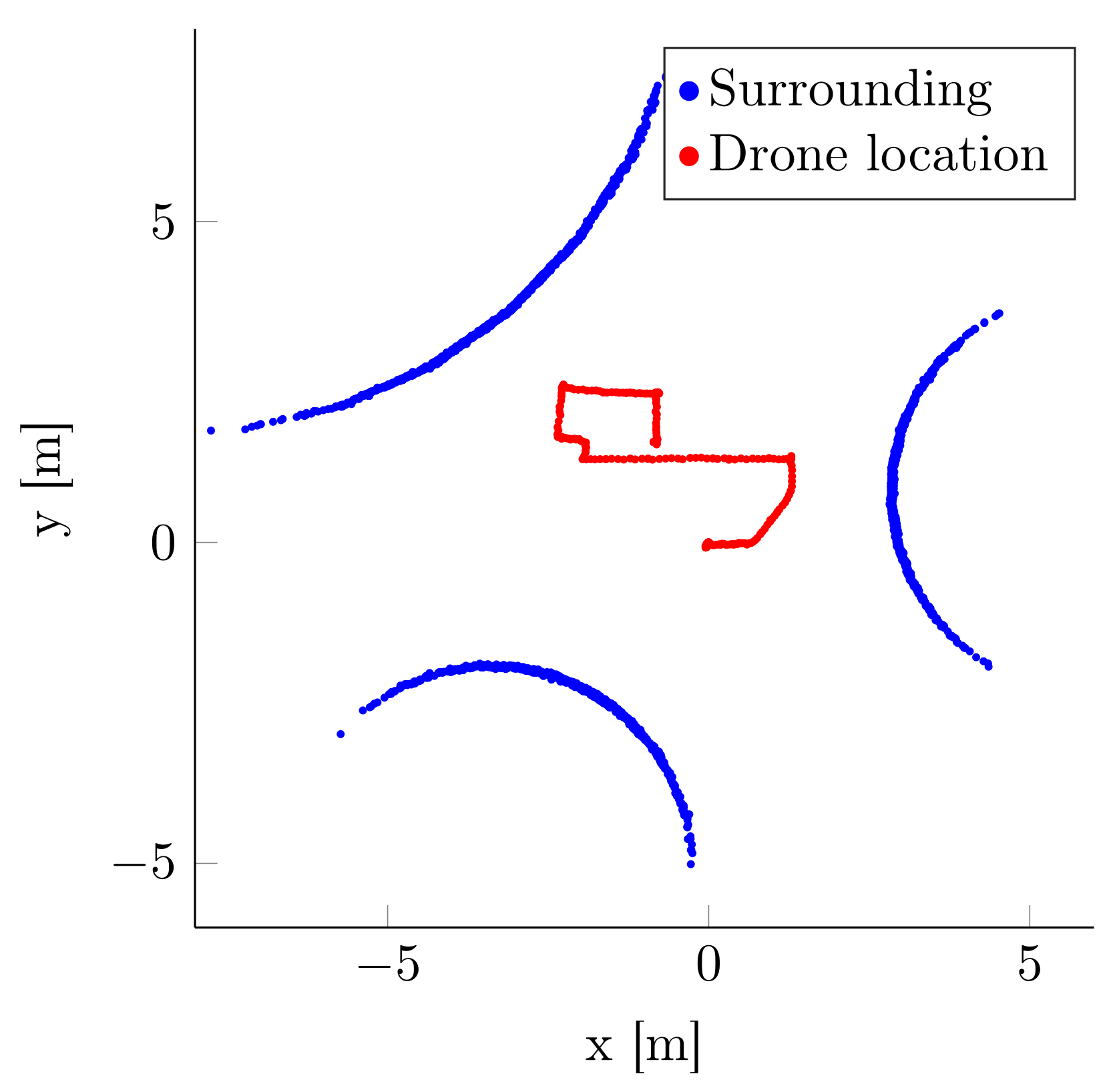

3.2.2. Indoor Experiments

- to map indoor environments

- to hover stationary in the presence of severe turbulence

3.2.3. Outdoor Experiments

- It can cope with a dynamic environment: the side wall and the trash bin are not visible at higher altitudes.

- It can discover new areas: everything outside of the garage was not visible when the flight started.

- When revisiting areas that have already been discovered, no loop-closing issues are visible.

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| RTOB SLAM | Real-time On-Board Simultaneous Localisation and Mapping |
| PCL | Point Cloud Library |
| UAV | Unmanned Aerial Vehicle |
| ROS | Robot Operating System |
| ICP | Iterative Closest Point |
| GPS | Global Positioning System |
| WCET | Worst-case Execution Time |

References

- Li, Y.; Liu, Y.; Wang, Y.; Lin, Y.; Shen, W. The Millimeter-Wave Radar SLAM Assisted by the RCS Feature of the Target and IMU. Sensors 2020, 20, 5421.

- Liu, Y.; Chen, Z.; Zheng, W.; Wang, H.; Liu, J. Monocular Visual-Inertial SLAM: Continuous Preintegration and Reliable Initialization. Sensors 2017, 17, 2613.

- Munguía, R.; Urzua, S.; Bolea, Y.; Grau, A. Vision-Based SLAM System for Unmanned Aerial Vehicles. Sensors 2016, 16, 372.

- López, E.; García, S.; Barea, R.; Bergasa, L.M.; Molinos, E.J.; Arroyo, R.; Romera, E.; Pardo, S. A Multi-Sensorial Simultaneous Localization and Mapping (SLAM) System for Low-Cost Micro Aerial Vehicles in GPS-Denied Environments. Sensors 2017, 17, 802.

- Bauersfeld, L.; Ducard, G. Low-cost 3D Laser Design and Evaluation with Mapping Techniques Review. In Proceedings of the 2019 IEEE Sensors Applications Symposium (SAS), Sophia Antipolis, France, 11–13 March 2019; pp. 81–86.

- Krul, S.; Pantos, C.; Frangulea, M.; Valente, J. Visual SLAM for Indoor Livestock and Farming Using a Small Drone with a Monocular Camera: A Feasibility Study. Drones 2021, 5, 41.

- Nguyen, V.; Harati, A.; Martinelli, A.; Siegwart, R. Orthogonal SLAM: A Step toward Lightweight Indoor Autonomous Navigation. In Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; pp. 5007–5012.

- Alpen, M.; Willrodt, C.; Frick, K.; Horn, J. On-board SLAM for indoor UAV using a laser range finder. In Unmanned Systems Technology XII; SPIE: Wallisellen, Switzerland, 2010; Volume 7692.

- Alpen, M.; Frick, K.; Horn, J. An Autonomous Indoor UAV with a Real-Time On-Board Orthogonal SLAM. In Proceedings of the 2013 IFAC Intelligent Autonomous Vehicles Symposium, the International Federation of Automatic Control, Gold Coast, QLD, Australia, 26–28 June 2013; pp. 268–273.

- Censi, A. An ICP variant using a point-to-line metric. In Proceedings of the 2008 IEEE International Conference on Robotics and Automation, Chengdu, China, 21–24 September 2008; pp. 19–25.

- Dryanovski, I.; Valenti, R.G.; Xiao, J. An open-source navigation system for micro aerial vehicles. Auton Robot 2013, 34, 177–188.

- Friedman, C.; Chopra, I.; Rand, O. Indoor/Outdoor Scan-Matching Based Mapping Technique with a Helicopter MAV in GPS-Denied Environment. Int. J. Micro Air Veh. 2015, 7, 55–70.

- Shen, S.; Michael, N.; Kumar, V. Autonomous Multi-Floor Indoor Navigation with a Computationally Constrained MAV. In Proceedings of the International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 20–25.

- Grzonka, S.; Grisetti, G.; Burgard, W. A Fully Autonomous Indoor Quadcopter. IEEE Trans. Robot. 2012, 28, 90–100.

- Steux, B.; Hamzaoui, O.E. tinySLAM: A SLAM algorithm in less than 200 lines C-language program. In Proceedings of the 2010 11th International Conference on Control Automation Robotics Vision, Singapore, 7–10 December 2010; pp. 1975–1979.

- Doera, C.; Scholza, G.; Trommera, G.F. Indoor Laser-based SLAM for Micro Aerial Vehicles. Gyroscopy Navig. 2017, 8, 181–189.

- Lee, T.j.; Kim, C.h.; Cho, D.i.D. A Monocular Vision Sensor-Based Efficient SLAM Method for Indoor Service Robots. IEEE Trans. Ind. Electron. 2019, 66, 318–328.

- Hess, W.; Kohler, D.; Rapp, H.; Andor, D. Real-time loop closure in 2D LIDAR SLAM. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation, Stockholm, Sweden, 16–21 May 2016; pp. 1271–1278.

- Gmapping. 2021. Available online: http://wiki.ros.org/gmapping (accessed on 10 September 2021).

- Chen, H.; Hu, W.; Yang, K.; Bai, J.; Wang, K. Panoramic annular SLAM with loop closure and global optimization. Appl. Opt. 2021, 60, 6264–6274.

- Rusu, R.; Cousins, S. 3D is here: Point Cloud Library (PCL). In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011.

- Point Cloud Library. 2021. Available online: http://pointclouds.org/ (accessed on 7 September 2021).

| Algorithm | Arbitrary Environment | Real-Time Onboard | Dynamic Environment |
|---|---|---|---|
| RTOB-SLAM | Yes | Yes | Yes |
| OrthoSLAM | No | Yes | No |
| Cartographer | Yes | Yes | (Yes) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bauersfeld, L.; Ducard, G. RTOB SLAM: Real-Time Onboard Laser-Based Localization and Mapping. Vehicles 2021, 3, 778-789. https://doi.org/10.3390/vehicles3040046
