Abstract

The implementation of the NIS2 Directive expands the scope of cybersecurity regulation across the European Union, placing new demands on both essential and important entities. Despite its importance, organizations face multiple barriers that undermine compliance, including lack of awareness, technical complexity, financial constraints, and regulatory uncertainty. This study identifies and models these barriers to provide a clearer view of the systemic challenges of NIS2 implementation. Building on a structured literature review, fourteen barriers were defined and validated through expert input. The Decision-Making Trial and Evaluation Laboratory (DEMATEL) method was then applied to examine their interdependencies and to map causal relationships. The analysis highlights lack of awareness and the evolving threat landscape as key drivers (i.e., causal factors) that reinforce each other. Technical complexity and financial constraints act as mediators transmitting the influence of these causal factors toward operational and governance failures. Operational disruptions, high reporting costs, and inadequate risk assessment emerge as the most dependent outcomes (i.e., effect factors), absorbing the impact of the driving and mediating factors. The findings suggest that interventions targeted at awareness-building, resource allocation, and risk management capacity have the greatest leverage for improving compliance and resilience. By clarifying the cause-and-effect dynamics among barriers, this study supports policymakers and managers in designing more effective strategies for NIS2 implementation and contributes to current debates on cybersecurity governance in critical infrastructures.

1. Introduction

The growing integration of information and communication technologies (ICT) has transformed modern society. While this integration creates opportunities, it also increases exposure to cyber threats, attacks, and criminal activity [1]. Cybersecurity, therefore, has become essential for protecting the information and infrastructure ecosystem of organizations by deploying technical safeguards, while also guiding the identification and mitigation of risks [2]. It is widely acknowledged that cybersecurity is socio-technical in nature and depends strongly on human factors, which remain central to digital resilience.

The digital transformation has also introduced new forms of strategic risk. Sophisticated attackers exploit vulnerabilities, potentially disrupting critical services and undermining societal security [3]. To counter this, stakeholders must identify and address barriers that weaken cybersecurity frameworks. Addressing these barriers in a structured way enables the development of more resilient systems [4]. Although some incidents are caused by hardware or software failures, the volume of malicious events has increased sharply [5]. This escalation has driven the development of regulatory frameworks that combine technical, legal, and strategic perspectives. Prominent examples include ISO/IEC 27001, the international standard for information security management systems [6], the National Institute of Standards and Technology (NIST) Cybersecurity Framework, and the General Data Protection Regulation (GDPR). These frameworks highlight the importance of risk disclosure, which has gained traction given the potential impact of cyber incidents [7]. Still, fragmented security practices often prove insufficient for meeting compliance requirements, resulting in inconsistent implementation, weak communication across organizational units, and limited alignment with regulatory expectations [8].

Within Europe, regulatory efforts have historically emphasized competition, social welfare, and healthcare, with less attention paid to ICT infrastructure as a service of general interest [9]. Yet with digitalization and automation embedded in nearly all aspects of daily life, the resilience of ICT systems is a public concern. Recognizing this, the European Union adopted the Network and Information Systems Directive (NIS Directive) in 2016 [10], the first comprehensive EU-wide cybersecurity legislation [3]. While the NIS Directive improved Member States’ cybersecurity capacity, its implementation revealed shortcomings. To address these, the European Commission proposed a revision, which resulted in the adoption of the Network and Information Systems Directive 2 (NIS2 Directive) [11]. The NIS2 Directive entered into force on 16 January 2023; Member States had to transpose it by 17 October 2024, and it has applied since 18 October 2024, replacing the original framework [12].

The NIS2 Directive outlines security requirements, including confidentiality, authorization, control, and reliability; however, its effectiveness depends on proper organizational structures and architectural support [13]. It obliges Member States to establish national policies, though it does not prescribe specific content [14]. Implementation challenges are expected, especially as the Directive expands its scope to new entities and requires coordination with Computer Security Incident Response Teams. Common difficulties include scalability, uneven access to support services, and risks linked to coordination across stakeholders [15].

Several studies have examined barriers to compliance with NIS2. Reported barriers include insufficient awareness of security frameworks [16], limited financial resources [5,17], and the complexity of the cybersecurity environment [17,18]. However, these barriers have primarily been studied in isolation. Their interdependencies, and the ways in which they reinforce or mitigate one another, remain understudied. This gap limits understanding of the systemic nature of challenges faced by organizations attempting to comply with NIS2.

Addressing this research gap is important for both academic and policy debates. Cybersecurity today is not only a technical necessity but also a strategic priority for organizations and governments. Understanding the barriers to compliance, and the relationships between them, can inform the design of policies and practices that promote resilience.

This study responds to the gap by identifying and modeling barriers to NIS2 compliance using the Decision-Making Trial and Evaluation Laboratory (DEMATEL) method. DEMATEL is well-suited to explore the causal relationships between complex factors. In this context, it is applied to examine how barriers such as technical limitations, regulatory complexity, resistance to change, and resource constraints interact and influence each other. The analysis aims to provide a structured view of the most influential barriers, supporting decision-makers in prioritizing interventions and allocating resources more effectively.

The remainder of this paper is organized as follows. Section 2 presents the background of the study, including an overview of cybersecurity regulations, the transition from NIS to NIS2, and the Directive’s operational, legal, and economic dimensions. Section 3 reviews the literature on barriers to NIS2 compliance. Section 4 outlines the DEMATEL methodology, including data collection and sample details. Section 5 reports the empirical results, focusing on the cause-and-effect relationships among barriers. Section 6 discusses these findings and situates them within the broader context of cybersecurity governance. Section 7 examines the managerial, societal, and policy implications, while Section 8 discusses the study’s limitations and proposes directions for future research. Section 9 concludes the paper by summarizing the main insights and highlighting the study’s overall contribution.

2. Background

This section provides the background for this study by reviewing key cybersecurity regulations and principles, outlining the transition from the NIS Directive to NIS2, and examining the Directive’s operational, legal, and economic dimensions.

2.1. Cybersecurity Regulations and Principles

The dependence on ICT has made cybersecurity a central policy and operational concern. Traditional security threats have shifted into cyberspace, creating new risks that require regulatory responses [1]. Over the last two decades, several frameworks have been developed to protect the confidentiality, integrity, and availability of information. In Europe, the GDPR governs the processing of personal data, while industry standards such as the Payment Card Industry Data Security Standard (PCI DSS) focus on the secure handling of payment card data. Internationally, the NIST Cybersecurity Framework provides widely adopted guidance, and the ISO/IEC 27001 family of standards defines requirements for information security management systems. The Digital Operational Resilience Act (DORA) addresses risks in the financial sector, highlighting the increasing role of regulatory specialization.

A shared foundation across these frameworks is the CIA triad (i.e., confidentiality, integrity, and availability), which remains the reference model for designing controls [1]. Effective cybersecurity depends on a layered approach that includes authentication mechanisms, access control, incident management, intrusion detection, and encryption. The combination of these measures provides resilience against diverse threats, from ransomware to advanced persistent threats.

At the European level, the European Union Agency for Cybersecurity (ENISA) has emphasized that cybersecurity regulation should be clear, consistent, balanced, and non-discriminatory. Clear rules reduce compliance ambiguity and help smaller organizations avoid misinterpretations. Consistency across Member States is essential to prevent fragmentation, which increases costs and weakens cross-border security cooperation. A balanced approach ensures that regulations mitigate risks without imposing excessive burdens, while non-discrimination safeguards fair competition [19].

These principles are not abstract. The SolarWinds supply chain breach in 2020 and the sharp rise in ransomware incidents, which accounted for about 35% of intrusions in 2021 [20], show how regulatory clarity and coordination can affect real outcomes. ENISA’s Threat Landscape report [19] further highlights the growing role of supply chain risks, disinformation, and the weaponization of digital platforms in the context of geopolitical conflicts. Such developments show why cybersecurity cannot be left solely to organizational discretion and why regulation has become a cornerstone of digital resilience [19,21].

ISO standards play a central role in connecting regulatory requirements with operational practices. ISO/IEC 27001 provides structured processes for risk management, gap analysis, audits, and continuous improvement, aligning with broader principles of organizational governance [8,22]. By mapping regulatory obligations to ISO controls, organizations can simultaneously meet compliance requirements and strengthen internal security practices. This integration reflects a broader trend in which regulations are most effective when they are supported by established management frameworks that balance technical, human, and cultural dimensions of security [23].

2.2. Transition from NIS to NIS2

The original NIS Directive, adopted in 2016, was the first comprehensive piece of EU-wide cybersecurity legislation [3]. It required Member States to designate national Computer Security Incident Response Teams, establish cybersecurity strategies, and ensure incident monitoring and reporting in sectors deemed essential, including energy, transport, health, banking, water, and digital infrastructure. The Directive also applied to Digital Service Providers such as online marketplaces and cloud services, although under a lighter regulatory regime [24].

Despite these advances, the NIS Directive faced significant implementation challenges. Fragmentation across Member States created inconsistent levels of protection, and the scope was too narrow to reflect the critical role of other sectors in modern economies [24]. Differences in how obligations were enforced undermined the Directive’s harmonization goal, leaving gaps in cross-border coordination.

The NIS2 Directive was designed to address these shortcomings [12,25]. NIS2 broadens the scope substantially by introducing two categories of covered entities: (i) Essential Entities, which operate in sectors such as healthcare, transport, energy, and digital infrastructure, and (ii) Important Entities, which include waste management, food production, manufacturing, postal services, and research organizations [7]. This expansion reflects recognition that disruptions in these sectors can have cascading effects across societies and economies.

NIS2 also strengthens reporting obligations, improves supervisory powers, and enhances EU-wide coordination through an expanded role for ENISA and the introduction of Coordinated Vulnerability Disclosure mechanisms [26]. Unlike the GDPR, which regulates personal data protection, NIS2 focuses on the resilience of network and information systems. The GDPR thus remains the primary instrument for data privacy, whereas NIS2 is the primary instrument for cybersecurity and critical infrastructure protection [3]. Maintaining this distinction helps avoid regulatory overlap while ensuring that both frameworks support complementary goals.

The Directive also embeds accountability more deeply. Organizations must not only adopt preventive measures but also demonstrate the adequacy of their practices to supervisory authorities. This shift reflects an evolution in EU cybersecurity regulation from prescriptive compliance to performance-based accountability.

2.3. Operational, Legal, and Economic Dimensions

NIS2 introduces obligations that extend across technical, organizational, and governance layers. Operationally, the Directive expands cybersecurity responsibilities into both information technology (IT) and operational technology (OT) environments. While IT security focuses on protecting data, networks, and applications, OT security emphasizes system availability and the continuity of physical processes, where downtime can endanger public safety and disrupt essential services. The inclusion of OT reflects the EU’s recognition that critical infrastructures are increasingly digitalized and interconnected [27,28,29].

From a legal perspective, NIS2 retains a directive-based model, requiring Member States to transpose its provisions into national law. This minimum harmonization approach establishes a shared baseline while allowing adaptation to national contexts [14]. However, such flexibility carries risks, as divergent interpretations and uneven enforcement can create compliance uncertainty, particularly for entities operating across borders. The Directive seeks to mitigate this risk by strengthening EU-level coordination and introducing a peer-review mechanism among Member States [26].

Economically, NIS2 compliance imposes significant costs. Obliged organizations must conduct regular risk assessments, develop incident response capabilities, and implement governance, risk, and compliance structures. These requirements place particular strain on smaller entities with limited financial and human resources. Failure to comply carries serious consequences: for essential entities, maximum fines must be set at no less than €10 million or 2% of total worldwide annual turnover, whichever is higher, and sanctions may also include operational restrictions and mandatory audits [30,31]. Such penalties aim to ensure accountability but may also deter investment in innovation if compliance costs are perceived as excessive.
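The penalty ceiling combines a fixed amount and a turnover share, so the binding figure depends on company size. The following minimal sketch assumes our reading of Article 34 of the Directive: the ceiling is the higher of €10 million or 2% of worldwide annual turnover for essential entities, and of €7 million or 1.4% for important entities. These are floors that national law may exceed, and the function name `nis2_fine_ceiling_eur` is hypothetical.

```python
def nis2_fine_ceiling_eur(annual_turnover_eur: int, essential: bool = True) -> float:
    """Minimum value a Member State's maximum fine must reach (assumed Art. 34 reading).

    The ceiling is the higher of a fixed amount and a share of total worldwide
    annual turnover; national law may set a higher ceiling.
    """
    # Shares are expressed per mille (20 = 2%, 14 = 1.4%) to keep the arithmetic exact.
    fixed_eur, per_mille = (10_000_000, 20) if essential else (7_000_000, 14)
    return max(fixed_eur, annual_turnover_eur * per_mille / 1000)


if __name__ == "__main__":
    # Large essential entity: 2% of €2 bn exceeds the €10 m floor.
    print(nis2_fine_ceiling_eur(2_000_000_000))
    # Smaller essential entity: 2% of €100 m is below €10 m, so the fixed amount applies.
    print(nis2_fine_ceiling_eur(100_000_000))
    # Important entity with €1 bn turnover: 1.4% share applies.
    print(nis2_fine_ceiling_eur(1_000_000_000, essential=False))
```

The crossover for essential entities lies at €500 million in turnover; below it the fixed amount dominates, which is one reason the regime weighs proportionally more on smaller organizations.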

NIS2 also interacts with other EU instruments. DORA, proposed in 2020 and adopted in 2022, sets requirements for financial institutions, focusing on operational continuity and resilience during crises [32]. While NIS2 functions as lex generalis (i.e., general framework) for cybersecurity across sectors, DORA serves as lex specialis (i.e., sector-specific framework) for the financial sector, establishing more detailed rules [5]. For financial entities covered by DORA, its requirements apply in place of the corresponding NIS2 obligations, but both frameworks share objectives of resilience and risk management, highlighting the growing specialization of EU regulation.

The Directive’s operational, legal, and economic provisions demonstrate the EU’s effort to create a cybersecurity framework that is comprehensive, clear, enforceable, and adaptable. Yet they also illustrate why compliance is challenging, since organizations must deal with overlapping frameworks, align IT and OT practices, and allocate resources to meet demanding requirements under the threat of financial and reputational penalties.

3. Literature Review

This section examines the barriers to NIS2 compliance identified in prior academic and policy work. To build a comprehensive view, the review draws on published studies, reports, and regulatory frameworks that analyze the challenges associated with the Directive.

The search strategy was designed to capture research explicitly addressing NIS2 and its compliance difficulties. Scopus served as the primary database, with queries run between late 2024 and early 2025 using the keywords “NIS2”, “cybersecurity”, “challenges”, and “barriers”. This initial step returned 62 publications. After practical screening and qualitative assessment, 27 met the inclusion criteria. These comprised 14 journal articles, 12 conference papers, and one book chapter, as categorized by Scopus. Inclusion criteria were English-language publications that explicitly discussed NIS2 implementation challenges and offered empirical or theoretical contributions to cybersecurity policy or regulation. Exclusion criteria were applied to items that, despite containing relevant keywords, did not address NIS2 directly or lacked methodological grounding.

To broaden the coverage, forward and backward citation searches were conducted [33,34]. This resulted in the addition of 15 relevant publications that were not indexed in Scopus but were central to understanding the Directive’s application and development, bringing the total to 42. These included policy and regulatory documents from the European Commission and ENISA, as well as business reports. This approach ensured that the review included both academic and practitioner perspectives.

The final corpus of materials provided a structured basis for analysis. Across the 42 selected publications, 14 distinct barriers to NIS2 compliance were identified. Each barrier is discussed in the following subsections, with attention to the ways it has been characterized in the literature and its implications for organizations seeking to align with the Directive. A concise overview of the main insights from the literature, along with the corresponding references for each barrier, is provided in Appendix A, Table A1.

3.1. Lack of Awareness and Understanding

Many organizations, especially Small and Medium-sized Enterprises (SMEs), struggle to interpret what NIS2 requires and how those requirements apply to their context. Limited understanding reduces the priority given to compliance activities and weakens risk assessment and control selection [35,36]. Sectoral examples echo this pattern; in the space domain, smaller actors face knowledge gaps that hinder competitive response to NIS2 [37]. Incomplete grasp of the threat picture compounds the problem, making it harder to align defensive measures with NIS2 expectations [16].

Part of the difficulty is legal–technical complexity. New or revised definitions and scope elements can be hard to parse even for legal professionals, with cloud-related concepts cited as areas of confusion [38]. Clear, practical guidance remains uneven across sectors and sizes, despite the socio-technical nature of cybersecurity and the central role of human factors [2,13,21]. ENISA reports encouraging familiarity rates (92% of 1,350 organizations) but also persistent pockets of unawareness, highlighting the need for targeted communication through national authorities and coordinated EU campaigns [39,40]. Fundamentally, effective implementation presupposes a sound grasp of core concepts and principles [1].

3.2. Insufficient Financial Resources

Compliance requires upfront and recurring investment in technology, training, risk assessment, incident response, and governance capacity. Many entities lack the budgetary headroom to establish these foundations [13]. Earlier work already noted that NIS-era policies did not prescribe specific methods or budgets, leaving financial prioritization contentious [24]. Regulators themselves reported resource constraints, signaling systemic pressure in the compliance chain [17]. At the same time, the macro-level economics of cyber risk are worsening, as breach costs remain high and projected aggregate losses are rising, which strengthens the business case for investment but also heightens funding strain [5].

Survey evidence suggests a reallocation rather than net-new funding. In 2024, 68% of firms reported drawing NIS2 budgets from other cost centers amid shrinking IT budgets [41]. Scholarly and industry analyses characterize full compliance as costly and organizationally demanding, proposing structured approaches to reduce complexity and align spending with risk [12]. Yet budget gaps are common, with roughly three-quarters of organizations reporting no dedicated allocation, and aggregate annual costs estimated at €31.2 billion for already regulated sectors plus €29.9 billion for newly covered ones [35,36]. Limited funding impedes security awareness initiatives and maturity gains, especially where systems are internet-facing and financial reporting relies on IT platforms [42]. Boards also demand quantifiable evidence for spend, a long-standing pain point for Chief Information Security Officers that complicates stakeholder buy-in in ambiguous risk environments [24].

3.3. Technical Complexity

NIS2 introduces technical and organizational requirements that are especially difficult for entities with outdated or legacy systems [13]. Implementation requires structured governance frameworks that enforce policies and risk management strategies, but EU organizations often face overlapping regulatory demands that add to the complexity [8]. Some industries, such as space technology, face additional hurdles due to specialized systems and limited expertise, while certification processes can increase administrative burden [37]. Fragmentation at the national level exacerbates the problem, as Member States rely on different security assessment instruments, such as Estonia’s Public Information Act, creating variability in compliance obligations [43]. Technical complexity is also evident in the Internet of Things (IoT) ecosystem, where multi-layered security frameworks and heterogeneous risks complicate consistent application of NIS2 [44]. ENISA has begun issuing guidance and mapping tables to align NIS2 obligations with national frameworks [45]. Without coordinated approaches, heterogeneous frameworks risk undermining critical infrastructure protection, as noted by Passerini and Kountche [46].

3.4. Shortage of Cybersecurity Professionals

Although the global cybersecurity workforce reached 5.5 million in 2023, demand continues to outpace supply, leaving organizations without the talent needed for NIS2 compliance [47]. Workforce shortages stem from macroeconomic conditions, hiring freezes, and insufficient early-career opportunities, with universities struggling to align programs with industry needs [48]. Entering the field typically requires education, certification, and experience, making the pipeline slow to develop. NIS2 may further intensify demand by creating requirements for specialized skills across socio-technical domains [2,24]. National instruments shaped by local risks amplify the call for professionals, yet standardized methods for renewing skills remain limited [43]. In sectors such as space, the shortage may lead to consolidation as only firms with cybersecurity leadership can compete [37].

3.5. Complex and Evolving Cybersecurity Threat Landscape

Cybersecurity incidents vary significantly in scope and impact, making it difficult for organizations to maintain consistent defenses [16]. Frameworks such as Zero Trust Architecture have been promoted to mitigate these risks, but their adoption requires resources and technical maturity. ENISA surveys show that while 90% of organizations expect attacks to increase in frequency and cost, only 4% report investing adequately in new technologies [39]. Limited regulatory capacity also constrains enforcement of NIS2 [17]. OT adds another layer of uncertainty, because industry-specific differences mean that applying generic “best practices” can unintentionally introduce vulnerabilities [24]. Legal changes and risk-disclosure requirements complicate the situation further [7,12]. IoT ecosystems, with diverse devices and contexts, reinforce the point that risks are dynamic and context-dependent [44]. Broader crises such as COVID-19 have amplified uncertainty and blurred risk boundaries [49].

3.6. Fragmented Regulatory Landscape

Although NIS2 aims to harmonize cybersecurity rules, Member States interpret and implement the Directive in different ways. Key terms such as “significance” or “assets” remain undefined at the EU level, leading to divergent national approaches and uncertainty for cross-border organizations [1,16]. For example, Luxembourg defines significance through regulatory measures, while other countries provide little guidance. This variation creates challenges for firms operating in multiple jurisdictions. Industries such as finance, healthcare, and energy also face overlapping sectoral regulations in addition to NIS2 [43]. National frameworks, such as Latvia’s ICT compliance rules and Estonia’s information security standard E-ITS, illustrate this fragmentation [9]. The European Commission [50] emphasizes the need for national cybersecurity strategies aligned with EU-wide objectives, but inconsistencies persist.

3.7. Operational Disruptions

Cyber incidents can trigger cascading failures with financial, reputational, and legal consequences. NIS2 requires essential and important entities to notify authorities of incidents and adopt response and recovery measures to ensure continuity [7,44]. In manufacturing and industrial automation, IoT networks are particularly exposed, where disruptions may cause downtime or safety risks. Attacks such as phishing can provide gateways to system failures, including power outages [51]. Addressing operational risks requires intelligence-driven assessments that draw on internal data and external sources such as government advisories [37]. A coordinated approach that aligns NIS2 with GDPR and other sectoral standards can strengthen resilience through multi-layered defense [7].

3.8. Resistance to Change and Organizational Culture

Resistance to change slows the adoption of NIS2 measures. Executives often prioritize operational continuity, while technical teams struggle with added responsibilities [43]. Some Member States lack updated instruments and data-collection mechanisms, which reinforces inertia in organizational practices. A strong risk-aware culture is critical to embed cybersecurity requirements within broader IT and operational frameworks [52]. The rapid expansion of IoT and cyber–physical systems (CPS), especially in energy and smart grids, introduces new risks that organizations may hesitate to confront [44]. Without leadership to drive change and overcome cultural barriers, compliance remains superficial. Threat detection and structured security approaches can help organizations adapt [51].

3.9. Lack of Clear Guidance and Support from Authorities

Uncertainty about reporting and compliance obligations remains a major concern. Under the original NIS Directive, only severe incidents were reported, limiting early detection. Under NIS2, broader reporting increases demands on organizations but lacks consistent guidance [16]. Ambiguities persist in defining cloud services, disclosure obligations, and third-party requirements [17]. National authorities design their own assessment instruments, which vary in quality and frequency [43]. This lack of clarity weakens crisis coordination among Member States and organizations [53] and has prompted criticism from industry, including Microsoft [54]. Although some jurisdictions and sectors have introduced their own initiatives, international cooperation remains limited [55].

3.10. Supply Chain Vulnerabilities

NIS2 highlights the importance of strengthening ICT/OT supply chain security, as many entities depend on distributed systems outside their direct control [56]. While organizations are encouraged to assess supplier practices, the Directive does not prescribe a specific approach, leaving significant flexibility [13]. Cross-functional teams and structured reviews of interdependencies are recommended to address these risks [2]. Complex global supply chains, especially in sectors such as space, heighten exposure and impact [37]. Certification schemes for suppliers may be necessary to increase transparency and trust [57]. Evidence from case studies suggests that third-party audits, monitoring, and contractual obligations are critical for ensuring compliance.

3.11. High Cost of Incident Reporting and Management

Incident reporting and management, while central to NIS2, impose significant financial and operational burdens. Aligning legislative and ISO requirements can reduce duplication, but many organizations still face high costs for staffing, training, and outsourcing [8]. Security Operations Centers require specialized teams such as blue (defensive), red (offensive), and purple (collaborative), which are resource-intensive to maintain [58]. ENISA [59] notes that compliance drives additional legal and administrative costs, while poor reporting processes can damage market position and reputation. The 24-hour early-warning obligation requires upgraded mechanisms and skilled personnel, increasing demand in an already tight labor market [15]. A well-structured incident response framework, supported by regular training, helps reduce outsourcing costs and ensures compliance [24].
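Part of the reporting burden is simply tracking overlapping deadlines. The sketch below assumes our reading of Article 23(4): an early warning within 24 hours of becoming aware of a significant incident, an incident notification within 72 hours, and a final report within one month of submitting that notification. Intermediate reports, sector-specific variants, and national deviations are not modeled, and the helper names are hypothetical.

```python
import calendar
from datetime import datetime, timedelta
from typing import Dict, Optional


def add_one_month(moment: datetime) -> datetime:
    """Same day in the following month, clamped to that month's last day."""
    year = moment.year + moment.month // 12
    month = moment.month % 12 + 1
    day = min(moment.day, calendar.monthrange(year, month)[1])
    return moment.replace(year=year, month=month, day=day)


def reporting_deadlines(
    aware_at: datetime, notification_sent_at: Optional[datetime] = None
) -> Dict[str, datetime]:
    """Simplified NIS2 reporting timeline for one significant incident (assumed reading)."""
    early_warning = aware_at + timedelta(hours=24)
    notification = aware_at + timedelta(hours=72)
    # The final report runs from actual submission of the notification; if that
    # time is not yet known, assume the latest permitted moment.
    sent = notification_sent_at or notification
    return {
        "early_warning": early_warning,
        "incident_notification": notification,
        "final_report": add_one_month(sent),
    }


if __name__ == "__main__":
    for step, due in reporting_deadlines(datetime(2025, 1, 31, 9, 0)).items():
        print(f"{step:>22}: {due:%Y-%m-%d %H:%M}")
```

Submitting the notification early also pulls the final-report deadline forward, which the optional second argument captures.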

3.12. Data Privacy and Confidentiality Concerns

NIS2 mandates reporting of incidents that may expose sensitive data, raising concerns about confidentiality and privacy. Reports must often be anonymized or pseudonymized to prevent misuse [16]. While intelligence sharing strengthens resilience, it must respect privacy requirements and rely on trusted networks [24]. Companies increasingly disclose cybersecurity risks in sustainability and annual reports, linking them to broader governance frameworks [7]. Article 12(1) of NIS2 permits anonymous vulnerability reporting, but Member States may lack experience in supporting this process [14]. Encouraging responsible disclosure by ethical hackers is crucial to prevent vulnerabilities from being sold illicitly. ENISA [40] stresses the importance of national coordinated vulnerability disclosure procedures that safeguard sensitive information while ensuring timely reporting.
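One common way to pseudonymize identifiers before sharing incident information is keyed hashing. The following minimal sketch is not a method prescribed by NIS2 or ENISA; it assumes HMAC-SHA256 pseudonyms and uses hypothetical report field names. Keyed pseudonyms stay stable across reports (so recipients can correlate incidents) but cannot feasibly be linked back to identifiers without the key. Pseudonymized data generally still counts as personal data under the GDPR, so this reduces rather than removes privacy obligations.

```python
import hashlib
import hmac

# Hypothetical field names; real reporting templates differ across Member States.
SENSITIVE_FIELDS = ("reporter_email", "affected_host", "customer_id")


def pseudonym(value: str, key: bytes) -> str:
    """HMAC-SHA256 pseudonym: the same value and key always yield the same token."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def pseudonymize_report(report: dict, key: bytes, fields=SENSITIVE_FIELDS) -> dict:
    """Return a copy of the report with the listed fields replaced by pseudonyms."""
    cleaned = dict(report)  # leave the caller's original report untouched
    for name in fields:
        if name in cleaned:
            cleaned[name] = pseudonym(str(cleaned[name]), key)
    return cleaned


if __name__ == "__main__":
    report = {"reporter_email": "soc@example.org", "affected_host": "srv01",
              "summary": "ransomware on file server"}
    print(pseudonymize_report(report, key=b"keep-this-secret"))
```

Truncating the digest to 16 hex characters keeps reports readable; the key must be managed as a secret, since anyone holding it can test candidate identifiers.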

3.13. Inadequate Risk Assessment and Management Capabilities

Risk assessment maturity is uneven across organizations, undermining NIS2 compliance. Ambiguous reporting obligations often discourage disclosure, while national authorities may lack the resources to handle large volumes of reports [17]. IEC 31010:2019 [23] stresses that the choice of risk assessment techniques depends on expertise, time, and resources, yet many organizations lack such capacity. A structured security strategy with clear objectives is essential for compliance [13]. Larger corporations typically have mature systems, whereas SMEs often lag behind; 34% of surveyed organizations reported no management involvement in risk management [35]. Mapping and classification approaches can improve scalability [8]. Integration of proactive detection with structured risk management helps address these gaps [51,60].

3.14. Lack of Inter-Organizational Collaboration

Despite NIS2’s emphasis on cooperation, organizations remain reluctant to share sensitive information. Concerns over confidentiality hinder collaboration across sectors and supply chains [2]. Manufacturing firms, for instance, must balance vendor assessments with selective data sharing [35]. In the space sector, standardized channels between satellites and ground stations are needed to address supply chain risks [37]. Silos further complicate classification of entities spanning multiple sectors [13]. This weakens collective situational awareness, which requires operational, tactical, and strategic coordination [61]. EU-level initiatives such as the one-stop-shop mechanism [3] and joint cybersecurity exercises can support better collaboration, but jurisdictional gaps persist [9].

4. Methodology

This section outlines the DEMATEL methodology, including data collection and sample details.

4.1. DEMATEL Framework

This study employs the DEMATEL method to identify and analyze the interrelationships among the barriers to NIS2 compliance. The DEMATEL approach is widely recognized for its ability to capture complex cause-and-effect relationships and provide structured insights into interdependent systems [62,63]. Originally developed in the 1970s at the Battelle Memorial Institute in Geneva, DEMATEL is grounded in graph theory and constructs directed graphs that illustrate how factors influence one another [64,65]. The procedure followed in this study consisted of five main stages [66,67,68]: (i) building the average direct relation matrix, (ii) normalizing the matrix, (iii) deriving the total relation matrix, (iv) computing prominence and net effects, and (v) visualizing the causal structure. The DEMATEL calculations were based on the mathematical formulations of Moktadir et al. [69].

4.1.1. Average Direct Relation Matrix

The first step establishes the average direct influence among the identified barriers. Each expert evaluates how strongly barrier i affects barrier j, using a scale from 0 (no influence) to 4 (very strong influence). If there are n barriers and H experts, each expert k provides an $n \times n$ matrix $X^{k} = [x_{ij}^{k}]$, where $x_{ij}^{k}$ is expert k's score for the influence of barrier i on barrier j. The aggregated Average Direct Relation Matrix $M = [m_{ij}]$ is then obtained by averaging across all expert inputs:

$$m_{ij} = \frac{1}{H} \sum_{k=1}^{H} x_{ij}^{k}, \qquad i, j = 1, \ldots, n$$

This matrix serves as the baseline representation of direct influences within the system.
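The averaging step can be illustrated with a minimal Python sketch. It assumes each expert's judgments are stored as a nested list of 0-4 scores with zeros on the diagonal (a barrier is not rated against itself); the function name `average_direct_relation` and the three-expert, three-barrier ratings are hypothetical and do not come from the study's data.

```python
def average_direct_relation(expert_matrices):
    """Element-wise mean of H expert matrices, each n x n with 0-4 scores."""
    h = len(expert_matrices)
    n = len(expert_matrices[0])
    for x in expert_matrices:
        # Every expert must rate the same n x n set of barrier pairs.
        if len(x) != n or any(len(row) != n for row in x):
            raise ValueError("each expert matrix must be n x n")
        # Scores outside the 0 (no influence) to 4 (very strong) scale are rejected.
        if any(not 0 <= v <= 4 for row in x for v in row):
            raise ValueError("scores must lie on the 0-4 scale")
    # m_ij = (1/H) * sum over experts k of x_ij^k
    return [[sum(x[i][j] for x in expert_matrices) / h for j in range(n)]
            for i in range(n)]


# Hypothetical ratings: three experts, three barriers (diagonal left at 0).
experts = [
    [[0, 3, 2], [1, 0, 4], [0, 2, 0]],
    [[0, 4, 2], [2, 0, 3], [1, 1, 0]],
    [[0, 2, 2], [0, 0, 4], [2, 3, 0]],
]
M = average_direct_relation(experts)
for row in M:
    print([round(v, 3) for v in row])
```

Validating the scale inside the averaging function is a design choice of this sketch: a single mistyped score would otherwise propagate silently into every later matrix.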

4.1.2. Normalization of the Direct Relation Matrix

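To make the influence values comparable, the average matrix M is normalized. A scaling factor S is calculated as the smaller of the reciprocals of the maximum row sum and the maximum column sum of M:

$$S = \min\left( \frac{1}{\max_{1 \le i \le n} \sum_{j=1}^{n} m_{ij}}, \; \frac{1}{\max_{1 \le j \le n} \sum_{i=1}^{n} m_{ij}} \right)$$

The normalized direct relation matrix is then defined as $N = S \cdot M$, ensuring that no row or column of N sums to more than 1 (so all values fall between 0 and 1) while preserving proportional relationships.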
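A minimal Python sketch of the normalization step follows. It assumes M is a nested list of non-negative averages with at least one non-zero entry (otherwise the reciprocals are undefined); the function name `normalize_direct_relation` and the example matrix are hypothetical.

```python
def normalize_direct_relation(M):
    """Return the scaling factor S and the normalized matrix N = S * M."""
    n = len(M)
    max_row_sum = max(sum(row) for row in M)
    max_col_sum = max(sum(M[i][j] for i in range(n)) for j in range(n))
    if max_row_sum == 0 or max_col_sum == 0:
        raise ValueError("M must contain at least one non-zero influence score")
    # S is the smaller of the two reciprocals, so no row or column of N exceeds 1.
    S = min(1 / max_row_sum, 1 / max_col_sum)
    N = [[S * v for v in row] for row in M]
    return S, N


# Hypothetical average direct relation matrix for three barriers.
M = [[0.0, 3.0, 2.0],
     [1.0, 0.0, 4.0],
     [1.0, 2.0, 0.0]]
S, N = normalize_direct_relation(M)
print(S)  # the largest column sum (6.0) is binding, so S = 1/6
```

Here the row sums are 5, 5, and 3 while the column sums are 2, 5, and 6, so the column side determines S; taking the minimum guarantees that both constraints hold at once.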

4.1.3. Total Relation Matrix

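The normalized matrix N captures only direct effects. To account for both direct and indirect influences, the Total Relation Matrix T is computed as:

$$T = N + N^{2} + N^{3} + \cdots = N(I - N)^{-1}$$

where I is the $n \times n$ identity matrix. Each power $N^{k}$ adds the influence transmitted through chains of $k - 1$ intermediate barriers, so T provides a complete view of the interactions among barriers, including feedback and indirect effects.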
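The total relation matrix can be computed without external libraries, as in the minimal Python sketch below. It inverts I − N by Gauss-Jordan elimination with partial pivoting (a generic numerical choice; the study does not state which routine was used) and cross-checks the result against a truncated sum of powers of N. The helper names and the example N (a hypothetical 0-4 matrix scaled by 1/6) are illustrative assumptions.

```python
def mat_mul(A, B):
    """Plain matrix product of two nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


def invert(A):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    # Augment A with the identity matrix: [A | I].
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(A)]
    for col in range(n):
        # Choose the row with the largest pivot to limit rounding error.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    # The right half of the augmented matrix is now the inverse of A.
    return [row[n:] for row in aug]


def total_relation(N):
    """T = N (I - N)^{-1}: direct plus all indirect influences."""
    n = len(N)
    i_minus_n = [[(1.0 if i == j else 0.0) - N[i][j] for j in range(n)]
                 for i in range(n)]
    return mat_mul(N, invert(i_minus_n))


# Hypothetical normalized matrix (a 0-4 matrix scaled by S = 1/6).
N = [[v / 6 for v in row] for row in [[0, 3, 2], [1, 0, 4], [1, 2, 0]]]
T = total_relation(N)

# Cross-check against the truncated series N + N^2 + ... + N^200.
series, power = [row[:] for row in N], [row[:] for row in N]
for _ in range(199):
    power = mat_mul(power, N)
    series = [[s + p for s, p in zip(rs, rp)] for rs, rp in zip(series, power)]
print(max(abs(t - s) for rt, rs in zip(T, series) for t, s in zip(rt, rs)))
```

The printed difference is at rounding-error level, which illustrates that the closed form and the power series describe the same matrix; in practice a library routine such as a linear solver would replace the hand-written inversion.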

4.1.4. Prominence and Net Effect

From $T = [t_{ij}]$, two measures are obtained for each barrier i. The row sum gives the total influence exerted:

$$r_i = \sum_{j=1}^{n} t_{ij}$$

The column sum gives the total influence received:

$$c_i = \sum_{j=1}^{n} t_{ji}$$

Prominence is defined as $r_i + c_i$, indicating the overall importance of a barrier. The net effect is $r_i - c_i$; a positive value shows that the barrier mainly influences others (a causal factor), while a negative value means it is mainly influenced by others (an effect factor). Plotting these values on a two-dimensional diagram helps distinguish driving and dependent barriers.
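The following minimal Python sketch derives prominence (row sum plus column sum), net effect (row sum minus column sum), and the cause/effect split from a total relation matrix. The 3 × 3 matrix T and the function name are hypothetical, and labelling a net effect of exactly zero as "balanced" is an assumption of the sketch, since the text only defines the positive and negative cases.

```python
def prominence_and_net_effect(T):
    """Return (prominence, net effect, group) for each barrier in T."""
    n = len(T)
    r = [sum(T[i]) for i in range(n)]                       # influence exerted (row sums)
    c = [sum(T[j][i] for j in range(n)) for i in range(n)]  # influence received (column sums)
    results = []
    for i in range(n):
        prominence = r[i] + c[i]
        net = r[i] - c[i]
        # Positive net effect: mainly influences others (cause).
        # Negative: mainly influenced by others (effect).
        # Exactly zero is labelled "balanced", an assumption of this sketch.
        group = "cause" if net > 0 else "effect" if net < 0 else "balanced"
        results.append((prominence, net, group))
    return results


# Hypothetical total relation matrix for three barriers.
T = [[0.1, 0.5, 0.4],
     [0.2, 0.1, 0.5],
     [0.1, 0.3, 0.1]]
for label, (p, e, g) in zip(["B1", "B2", "B3"], prominence_and_net_effect(T)):
    print(f"{label}: prominence={p:.2f}, net effect={e:+.2f} ({g})")
```

Because every entry of T is counted once in a row sum and once in a column sum, the net effects always add up to zero, so a cause group and an effect group coexist unless every net effect is zero.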

4.1.5. Visualization and Threshold Determination

Finally, an impact-relation map is generated to display the most important causal connections. To avoid clutter and highlight only meaningful influences, a threshold $\theta = \mu + \sigma$ is applied, where μ is the mean and σ the standard deviation of all values in T. With this approach, as applied by Moktadir et al. [69], Kouhizadeh et al. [70], and Chountalas et al. [71], only relationships above θ are retained in the diagram, ensuring clarity while capturing the strongest causal structure.
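The thresholding rule can be sketched in a few lines of Python. The sketch takes μ and σ over all n² entries of T, including the diagonal, and uses the population standard deviation (`statistics.pstdev`); the text does not say whether a population or sample estimate was used, so that choice is an assumption. The matrix and barrier labels are hypothetical.

```python
import statistics


def strongest_links(T, labels):
    """Return theta = mu + sigma and the (source, target, t_ij) links above it."""
    values = [v for row in T for v in row]  # all entries of T, diagonal included
    # Population standard deviation is an assumption of this sketch.
    theta = statistics.mean(values) + statistics.pstdev(values)
    links = [(labels[i], labels[j], T[i][j])
             for i in range(len(T)) for j in range(len(T))
             if T[i][j] > theta]  # keep only above-threshold influences
    return theta, links


# Hypothetical total relation matrix for three barriers.
T = [[0.1, 0.5, 0.4],
     [0.2, 0.1, 0.5],
     [0.1, 0.3, 0.1]]
theta, links = strongest_links(T, ["B1", "B2", "B3"])
print(f"theta = {theta:.3f}")
for source, target, weight in links:
    print(f"{source} -> {target} ({weight:.2f})")
```

In this toy case θ is roughly 0.42, so only B1 → B2 and B2 → B3 survive; the 0.4 link from B1 to B3 is dropped even though it lies well above the mean, which is exactly the decluttering effect the threshold is meant to have.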

4.2. Sample Information and Data Collection Process

Recruiting cybersecurity and compliance professionals poses practical difficulties, as they represent a specialized group in high demand. A census-type approach is not feasible in this context. Instead, non-probability techniques were employed, specifically purposive and snowball sampling, which offer a pragmatic balance of efficiency, cost, and access.

The barriers to NIS2 Directive compliance examined in this study were first drawn from the academic and policy literature (see Section 2). Their suitability was then reviewed by a small panel of practitioners, each with more than a decade of experience. The panel consisted of a finance-sector C-level executive and two senior managers from the technology/IT sector, whose feedback helped confirm the clarity and practical relevance of the selected barriers.

Participants were asked to complete a structured questionnaire assessing the influence of each barrier on the others. A 14 × 14 pairwise comparison matrix was used, applying a five-point scale from 0 (no influence) to 4 (very strong influence). The barriers included in the matrix were those drawn from the literature review. In line with research ethics standards, participation was voluntary, and respondents were informed about the study’s purpose and the use of their data. Demographic information was also collected to contextualize the findings.

The final dataset included 26 professionals working in IT and cybersecurity roles across multiple organizations and sectors, including finance, energy, transport, and digital services. The sample was predominantly male, reflecting the gender imbalance commonly reported in these sectors. Most participants had between 11 and 20 years of professional experience, with an overall mean of 16.65 years, and a large proportion held a master’s degree.

This group represented a mix of perspectives, combining strategic, managerial, and technical expertise. Senior executives, compliance officers, and operational cybersecurity specialists were all represented, allowing for insights that reflect both governance and implementation realities of NIS2. Such heterogeneity enhances the study’s ability to capture cross-sectoral patterns and common challenges while remaining consistent with the qualitative and expert-oriented nature of DEMATEL. A detailed profile of respondents is provided in Appendix A, Table A2.

It should be stressed that DEMATEL does not depend on large samples to yield meaningful outcomes. As an expert-based approach, its strength lies in the knowledge and experience of participants rather than in statistical generalization. Previous studies indicate that groups as small as 10 to 15 domain experts are often sufficient to generate dependable insights when the panel is relatively uniform in background and expertise [68,72,73]. To examine the consistency of the judgments provided in this study, the Intraclass Correlation Coefficient (ICC) was calculated using a two-way mixed-effects model with average measures [ICC(3,k)].
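For readers unfamiliar with ICC(3,k), the minimal Python sketch below computes it from a two-way ANOVA decomposition as (MS_rows − MS_error) / MS_rows, where rows are the rated items and columns are the raters, and also returns F = MS_rows / MS_error, the statistic behind the usual significance test. How the study arranged its data is not stated; treating each cell of the 14 × 14 matrix as a rated item and each expert as a rater is an assumption, and the six-item, four-rater dataset shown is purely illustrative. The sketch assumes at least two items and two raters.

```python
def icc_3k(ratings):
    """ICC(3,k): two-way mixed effects, consistency, average of k raters.

    ratings[i][j] is the score rater j gave to item i (n items x k raters).
    Returns (icc, F), where F = MS_rows / MS_error.
    """
    n, k = len(ratings), len(ratings[0])
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(ratings[i][j] for i in range(n)) / n for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)  # between items
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)  # between raters
    ss_total = sum((v - grand) ** 2 for row in ratings for v in row)
    ss_error = ss_total - ss_rows - ss_cols                  # residual

    ms_rows = ss_rows / (n - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / ms_rows, ms_rows / ms_error


# Illustrative data: six rated items scored by four raters.
ratings = [[9, 2, 5, 8],
           [6, 1, 3, 2],
           [8, 4, 6, 8],
           [7, 1, 2, 6],
           [10, 5, 6, 9],
           [6, 2, 4, 7]]
icc, f_stat = icc_3k(ratings)
print(f"ICC(3,k) = {icc:.3f}, F = {f_stat:.2f}")
```

The rater (column) sum of squares is removed from the error term, which is why ICC(3,k) measures consistency: raters who differ only by a constant offset still count as agreeing.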

5. Results

This section reports the outputs of the DEMATEL analysis. The first part presents the reliability check of the expert input. The second outlines the relative importance of each barrier and whether it functions primarily as a driver or a dependent factor. The third identifies the strongest causal links among barriers. The supporting matrices used in these calculations (i.e., the Average Direct Relation Matrix, the Normalized Direct Relation Matrix, and the Total Relation Matrix) are provided in Appendix A, Table A3.

5.1. Reliability of Expert Evaluations

Consistency in expert assessments was examined through the Intraclass Correlation Coefficient. The analysis produced a value of 0.777 (p < 0.001), indicating a moderately strong level of agreement. While individual variation was present, the overall level of coherence across the group was high enough to justify treating the judgments as a credible basis for building the causal structure of the barriers to NIS2 compliance.

5.2. Significance and Overall Impact of the Barriers

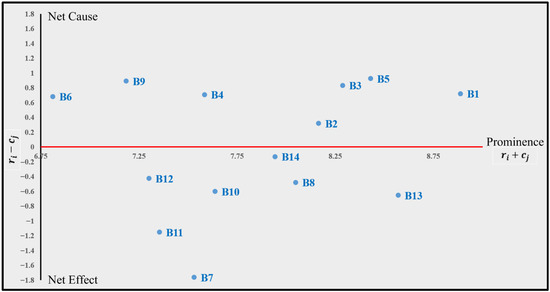

Table 1 presents the prominence and net effect of the 14 barriers to NIS2 compliance. The classification into cause and effect groups was based on the sign of the net effect. Seven barriers were identified as drivers of influence (cause group), while the remaining seven were more often shaped by others (effect group). This balance suggests that no single cluster of barriers dominates the system, thereby reinforcing the suitability of DEMATEL for this analysis.

Table 1.

Prominence and net effect values.

Among the cause group, lack of awareness and understanding (B1) stands out with the highest prominence (8.888), followed by complex and evolving cybersecurity threat landscape (B5) at 8.429. Both are central in terms of prominence and also exert strong net effects (0.721 and 0.926, respectively), underscoring their role as key drivers. Technical complexity (B3) also emerges as influential (8.287 prominence, 0.833 net effect), suggesting that technical issues amplify the compliance burden. Insufficient financial resources (B2), although slightly less prominent (8.165), remains a significant driver with a positive net effect (0.318).

The shortage of cybersecurity professionals (B4) also emerges as a meaningful driver, with a prominence of 7.583 and a positive net effect (0.707). While its centrality is lower than that of awareness or technical barriers, its influence highlights how workforce limitations can amplify both operational and managerial weaknesses across the system.

Other causes, such as lack of clear guidance and support from authorities (B9), display a notable net effect (0.891) despite moderate prominence, indicating that regulatory clarity may disproportionately shape the system. Fragmented regulatory landscape (B6), on the other hand, shows a weaker prominence (6.811) but still maintains a positive net effect (0.681), positioning it as a niche yet influential cause.

The effect group includes barriers that absorb influence from others. Inadequate risk assessment and management capabilities (B13) has the highest prominence among effects (8.570) and a negative net effect (−0.654), suggesting that while central, it is heavily dependent on upstream causes. Resistance to change and organizational culture (B8) also shows strong prominence (8.049) with a modest negative net effect (−0.479). Operational disruptions (B7) and high cost of incident reporting and management (B11) record the most extreme negative net effects (−1.763 and −1.153, respectively), making them highly dependent barriers.
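Because prominence is the sum, and net effect the difference, of a barrier's row sum $r_i$ (influence exerted) and column sum $c_i$ (influence received), both sums can be recovered from the reported values as $r_i = (\text{prominence} + \text{net effect})/2$ and $c_i = (\text{prominence} - \text{net effect})/2$. A worked check for the most prominent cause (B1) and the most prominent effect (B13):

$$
\begin{aligned}
r_{1} &= \tfrac{8.888 + 0.721}{2} = 4.8045, & c_{1} &= \tfrac{8.888 - 0.721}{2} = 4.0835,\\
r_{13} &= \tfrac{8.570 - 0.654}{2} = 3.958, & c_{13} &= \tfrac{8.570 + 0.654}{2} = 4.612.
\end{aligned}
$$

B1 therefore exerts more total influence than it receives, whereas B13 receives more than it exerts, which is what places them in the cause and effect groups respectively.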

Other effects, such as supply chain vulnerabilities (B10) and data privacy and confidentiality concerns (B12), cluster around mid-range prominence (7.637 and 7.301), while lack of inter-organizational collaboration (B14) exhibits a near-zero net effect (−0.130), indicating that it exerts and receives influence in roughly equal measure and plays a marginal role as either driver or outcome.

Figure 1 illustrates the distribution of barriers on the prominence–net effect plane. Barriers in the upper-right quadrant, such as B1, B3, and B5, function as strong causal drivers. Conversely, barriers like B7 and B11 occupy the lower-left quadrant, highlighting their dependence on systemic factors. The positioning of B13 and B8 confirms their dual role as critical but reactive barriers. This graphical mapping highlights where intervention could be most effective: strengthening cause factors while mitigating the vulnerabilities observed in dependent ones.

Figure 1.

Prominence and net effect diagram.

5.3. Key Causal Relationships Between the Barriers

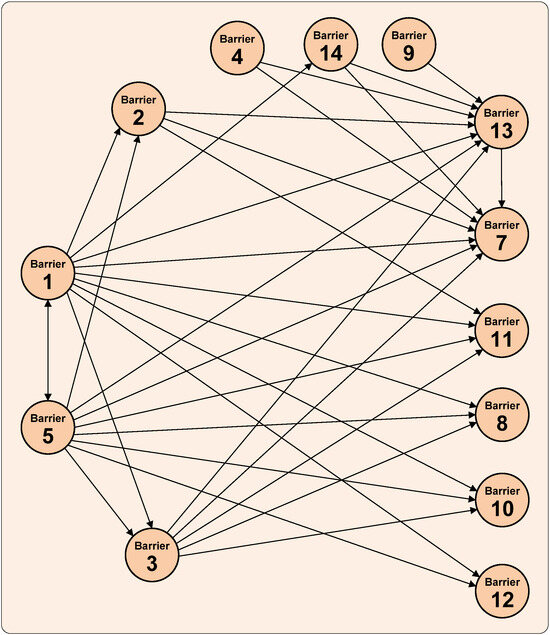

The Total Relation Matrix (see Appendix A, Table A3) highlights the interactions exceeding the established threshold (θ = 0.359), ensuring that only the most meaningful causal links are retained for interpretation. Figure 2 presents these connections in the form of an influence map.

Figure 2.

Key causal relationships.

The results show that B1 and B5 exert the strongest outward influence on other barriers. B1 is especially central, with above-threshold effects on B2, B3, B7, B8, B10, B11, B12, B13, and B14. This spread illustrates B1’s role as a foundational driver in shaping both technical and organizational barriers.

B5 also emerges as a strong causal factor, influencing B2, B3, B7, B8, B10, B11, B12, and B13. Its influence pattern mirrors B1’s reach but with particular strength on B7 and B13, highlighting the role of a shifting threat environment in triggering operational disruptions and exposing weaknesses in risk management.

Among the receiving barriers, B7 and B13 show the largest incoming effects, confirming their dependence on upstream factors. This makes them critical endpoints in the network, where upstream deficiencies appear as operational or management failures.

The analysis also reveals a reciprocal relationship between B1 and B5. This two-way link suggests that limited awareness hampers preparedness for emerging threats, while new threats in turn expose awareness gaps, creating a reinforcing cycle at the core of the system. Acting as mediators, B2 and B3 channel the effects of B1 and B5 toward several dependent barriers. These dynamics suggest a layered structure in which B1 and B5 act as primary drivers, B2 and B3 function as mediators, and barriers such as B7, B11, and B13 emerge as the main dependent outcomes.

Other observed interactions include B4’s influence on B7 and B13, suggesting that workforce shortages translate into operational weaknesses and higher reporting burdens. Similarly, B14 maintains weaker but still above-threshold ties with B7 and B13. At the periphery, B9 shows only one above-threshold outgoing link, influencing B13. These findings position these barriers as secondary influencers with localized impact.

In contrast, B6 did not surpass the threshold in any of its interactions, and thus does not appear in the influence map. This suggests that while it has importance in net effect analysis, its direct relational strength with other barriers is comparatively limited.

6. Discussion

The DEMATEL analysis provides a structured view of how barriers to NIS2 compliance interact, highlighting which issues drive systemic weaknesses and which are primarily consequences of upstream gaps. These findings show that cybersecurity risks do not exist in isolation; instead, they spread through interconnected links, where addressing one weakness can trigger further effects across the system. This interconnectedness reflects the principles of IEC 31010:2019 [23], which emphasizes that risk dependencies must be understood to prevent mitigation efforts from inadvertently triggering secondary problems.

A central observation is the reinforcing relationship between lack of awareness and understanding and the evolving cybersecurity threat landscape. The reciprocal link between these two barriers suggests that limited awareness weakens the ability to anticipate new threats, while shifting threats expose the shortcomings of low awareness. This cycle forms a structural feedback loop at the top of the system, amplifying vulnerabilities across multiple domains. Such dynamics align with ENISA’s NIS360 report [40], which stresses the importance of investment in awareness initiatives and proactive preparedness to strengthen resilience.

Technical complexity and insufficient financial resources were found to mediate much of the influence from lack of awareness and understanding and evolving cybersecurity threat landscape toward downstream effects. Both act as mediators rather than primary initiators. For example, insufficient financial resources channels the lack of awareness and threat-driven pressure into operational disruptions and cost burdens, while technical complexity translates those earlier weaknesses into problems with risk management and resilience. This layered structure illustrates how technical and financial limitations can intensify systemic risks when coupled with weak awareness and shifting threats. ENISA [40] similarly notes that managing complexity and improving resource allocation are prerequisites for strengthening cybersecurity capacities.

Among the dependent factors, operational disruptions and inadequate risk assessment and management capabilities absorb the highest levels of incoming influence. These outcomes demonstrate how upstream deficiencies appear as tangible operational failures or systemic weaknesses in risk governance. The results suggest that reactive responses to disruptions are insufficient, as ENISA [40] has also argued. Instead, efforts must focus on strengthening the underlying causes, particularly awareness, technical capabilities, and financial investment.

The role of risk management is especially notable, as it functions both as a dependent barrier and as an intermediary linking upstream causes to operational outcomes. Weaknesses in risk assessment therefore act as a bottleneck, amplifying systemic vulnerabilities. Strengthening this capacity could provide leverage across multiple areas, as noted by Coppolino et al. [51] and ENISA [59], who argue for a shift from threat-centric to risk-centric approaches in cybersecurity governance.

Sector-specific findings from ENISA support these systemic observations. For example, the finance and energy sectors face increased exposure due to complex infrastructures and emerging attack vectors such as AI-driven phishing and deepfake fraud [59]. The persistent shortage of cybersecurity professionals further compounds this, linking workforce gaps to operational weaknesses and higher costs. This demand–supply imbalance in skilled labor has been repeatedly flagged in both academic and policy reports.

Some barriers appear weaker in terms of direct causal weight but remain relevant in shaping broader contexts. Fragmented regulatory landscape, while excluded from the causal graph due to falling below the threshold, still holds structural importance given its impact on compliance uncertainty. Similarly, lack of clear guidance showed only one above-threshold outgoing link, suggesting that while regulatory clarity matters, it does not drive systemic failures to the same extent as technical or organizational weaknesses. This aligns with Vandezande [5], who emphasizes that the EU Cybersecurity Act and NIS2 strengthen ENISA’s mandate as a coordinating authority rather than acting as isolated regulatory levers.

Cultural and organizational dimensions also remain critical. Resistance to change, supply chain vulnerabilities, and lack of inter-organizational collaboration carry less systemic influence but still reflect challenges with leadership, culture, and external dependencies. Historical breaches, such as Yahoo’s large-scale compromise [74], demonstrate how organizational behavior and weak vendor oversight can escalate into systemic risks. Although these barriers occupy a less central structural role, ignoring them would weaken the overall cybersecurity posture.

Therefore, the results suggest a layered system. At the top are reciprocal and reinforcing drivers (lack of awareness and understanding, and evolving cybersecurity threat landscape). In the middle are mediators that transmit earlier weaknesses into multiple domains (insufficient financial resources and technical complexity). At the bottom are dependent outcomes (mostly operational disruptions, high cost of incident reporting and management, and inadequate risk assessment and management capabilities) where upstream deficiencies manifest as tangible failures. This structure highlights that targeting root causes (particularly awareness, threat anticipation, and technical and financial capacity) offers the greatest leverage for improving compliance and resilience.

Finally, aligning these findings with a PESTLE perspective shows how the barriers span political (B6, B9), economic (B2, B11), societal (B1, B4, B8, B13, B14), technological (B3, B5, B7, B10), and legal (B12) domains. This framing highlights that NIS2 compliance is not a narrow technical challenge but a multi-dimensional governance problem that requires coordinated responses across policy, organizational practice, and technological investment.

7. Managerial, Societal, and Policy Implications

The results highlight several implications for both organizational management and wider society.

For managers, the strong reciprocal link between lack of awareness and the evolving threat landscape means that cybersecurity education and situational awareness cannot be treated as one-off initiatives. Training must be continuous and adaptive to shifting threats. Awareness campaigns that remain static risk becoming obsolete, leaving organizations unprepared for new attack vectors. This insight extends beyond compliance. Organizations that institutionalize dynamic awareness programs are better positioned to anticipate risks and avoid the reinforcing cycle of low preparedness and growing threats.

Technical complexity and financial constraints emerged as mediators that convert earlier weaknesses into operational and financial burdens. From a managerial standpoint, this suggests that isolated investments, such as purchasing advanced tools without addressing staff expertise, or budgeting cybersecurity reactively, are likely to amplify rather than mitigate risk. Managers need to treat financial planning and technical capability-building as systemic enablers, not supplementary measures. Practical steps could include prioritizing scalable security architectures, adopting open standards to reduce unnecessary complexity, and allocating resources for long-term capacity-building rather than short-term fixes.

Operational disruptions and inadequate risk management were shown to absorb the heaviest incoming pressures. For organizations, this means that disruptions should be treated as symptoms rather than root causes. Responding only at the level of incident handling or continuity planning risks leaving the underlying weaknesses intact. Strengthening risk assessment practices, by embedding them into decision-making processes rather than treating them as compliance exercises, can provide resilience dividends across the organization.

The shortage of cybersecurity professionals also has direct managerial consequences. Workforce limitations were found to intensify both operational failures and reporting burdens. Organizations cannot rely solely on recruitment to address this gap, especially given the systemic talent shortage across sectors. Instead, managers should consider strategies such as internal reskilling, sector-level talent-sharing initiatives, and greater reliance on automation in low-value, repetitive security tasks to free scarce expertise for high-value activities.

From a societal perspective, the findings underline that cybersecurity resilience is shaped as much by governance and collective action as by individual organizations. The limited role of fragmented regulation in direct causal pathways may appear counterintuitive but reflects a deeper issue, namely that rules without clarity or harmonization do not translate into stronger security outcomes. Policymakers should focus less on adding regulatory layers and more on ensuring coherence, guidance, and practical applicability. Otherwise, organizations risk expending resources on dealing with overlaps and ambiguities rather than improving their security posture.

Cultural and collaborative barriers such as resistance to change and lack of inter-organizational collaboration showed weaker systemic influence but still carry important societal implications. Past incidents, such as the Yahoo breach, show that organizational culture can magnify technical failures into systemic crises. On a broader level, limited collaboration between firms and across sectors hampers the ability to recognize cross-cutting risks. Societal resilience therefore requires stronger mechanisms for information-sharing, including trusted platforms where sensitive insights can be exchanged without fear of reputational or legal repercussions.

The layered structure identified in this study (drivers at the top, mediators in the middle, and dependent outcomes at the bottom) suggests that both managerial and societal interventions are most effective when directed at the upper tiers. Awareness-building, investment in technical and financial capacity, and risk management integration create ripple effects that reduce pressures downstream. This reinforces that compliance with the NIS2 Directive should not be framed solely as a regulatory burden but as a governance opportunity to address systemic vulnerabilities that affect both organizations and the societies they serve.

From a policy perspective, the analysis points to areas where targeted intervention could deliver the greatest systemic benefit. Policy measures should focus on breaking the chain of influence at its most impactful points rather than dispersing resources across all barriers. Three directions emerge as particularly effective. First, addressing the lack of awareness and understanding through sector-specific, ready-to-use templates and concise playbooks could help organizations translate regulatory obligations into concrete actions. Such tools shorten the time between awareness and implementation, especially for smaller entities with limited expertise. Second, continuous horizon-scanning advisories and periodic, actionable updates, such as quarterly lists of key emerging threats and recommended mitigations, could help stabilize the volatility created by a constantly shifting threat landscape. Third, introducing proportional, risk-tiered reporting requirements, supported by machine-readable templates, would simplify compliance and lower the cost burden associated with incident management. These measures work at the source of influence by reducing the impact of the most dominant and mediating factors, including awareness gaps, threat uncertainty, technical complexity, and financial strain. In doing so, they can lessen downstream pressures such as operational disruptions, high reporting costs, and weak risk management capabilities without requiring large-scale investment at the organizational level.

A second policy priority concerns regulatory coherence. Although fragmentation across jurisdictions and sectors did not appear as a dominant causal link, its positive net effect indicates that it acts as a cause rather than an outcome, suggesting that greater standardization would yield indirect gains. Establishing authoritative control mappings and developing reference architectures tailored to SMEs could simplify technical design decisions and enhance governance capacity. Policy instruments such as targeted tax credits or vouchers for initial cybersecurity baselines, covering areas like risk assessment, logging, or incident readiness, would help organizations with limited financial resources meet core requirements. Regional initiatives, such as shared hotlines or tabletop exercises, could further relieve capacity pressures, particularly where expertise is scarce.

Finally, maintaining clear and ongoing guidance from authorities is essential. Guidance should be treated as a continuous service rather than a one-off communication. A stable and responsive advisory function would help prevent the recurrence of awareness-driven weaknesses and ensure that new regulatory or threat developments are translated into practical direction.

8. Limitations and Future Research Directions

This study has several limitations that shape the scope of its findings and suggest directions for future research. The analysis is perception-based, relying on expert judgments that capture relationships among barriers at a single point in time. While DEMATEL provides a useful method for uncovering interdependencies, it cannot confirm whether the identified patterns translate into measurable outcomes such as incident reduction, faster recovery times, or improved audit results. In addition, the model is static and cross-sectional, meaning it does not reflect how relationships between barriers may shift over time as organizations mature in their compliance with the Directive or as the external threat environment changes. Certain barriers were also defined at a broad level, which may compress distinct mechanisms into a single factor and reduce precision in interpreting their roles.

The expert panel consisted of 26 professionals from different sectors and roles, which supports the validity of insights across diverse contexts. However, the relatively small sample size and concentration in certain professional categories limit the breadth of perspectives compared with the full spectrum of stakeholders involved in NIS2 implementation. Future studies could expand participation to include regulators, auditors, and sector-specific authorities to test whether varying professional backgrounds or industry contexts lead to different causal structures.

A further line of research could involve linking the identified systemic patterns to operational and compliance data. For instance, the finding that operational disruptions and incident reporting costs are highly dependent outcomes could be tested by associating the prominence of these barriers with quantitative indicators such as downtime, mean time to recover, or regulatory penalties. Methods such as structural equation modeling or Bayesian networks could be employed to test these relationships, allowing researchers to validate whether weak awareness, financial constraints, and technical complexity indeed act as upstream drivers of these performance outcomes.

Another important step would be to move beyond the static representation offered here and explore how the barrier system evolves over time. Longitudinal research could follow organizations across different phases of Directive implementation, examining whether continuous awareness programs reduce the reinforcing cycle between poor preparedness and the evolving threat environment, or whether new threat vectors renew this loop despite awareness efforts. System dynamics modeling could be especially valuable in this regard, as it allows for simulation of policy interventions, such as training subsidies or new supervisory guidance, and their potential ripple effects across the network of barriers.

The sectoral dimension also needs closer attention. While this study pooled expert input across industries, different contexts exhibit distinct vulnerabilities. For example, energy and transport operators face heightened exposure to operational technology (OT) risks, while financial institutions deal with overlapping compliance under both cybersecurity and financial stability regulations. Developing sector-specific models would allow researchers to identify whether technical complexity, financial constraints, or workforce shortages dominate in each setting. Such models could combine DEMATEL with industry-specific surveys or case studies, producing tailored insights for policymakers and managers.

Collaboration barriers also deserve further consideration. Although the lack of inter-organizational cooperation did not appear central in the influence map, its societal relevance remains significant. Future research could examine whether structured information-sharing platforms, such as Information Sharing and Analysis Centers, mitigate downstream vulnerabilities in incident response and risk management even when their direct causal weight is modest. A mixed-method approach, combining social network analysis of collaboration structures with qualitative interviews, would be well-suited to uncover these dynamics.

The shortage of cybersecurity professionals is another area where further study is needed. Workforce gaps were shown to intensify operational failures and increase reporting burdens, yet most existing research treats this problem in terms of headcount. Future work could examine capability configurations rather than absolute numbers, investigating how reskilling initiatives, automation of routine security tasks, or shared security operation centers alter the burden on organizations. Contingency theory may provide a useful lens here, suggesting that the effectiveness of workforce strategies depends on contextual factors such as organizational size, sector, and regulatory exposure.

Finally, the present study employed barrier categories that, while practical, may have obscured finer-grained mechanisms. Technical complexity, for example, combines issues of legacy system integration with tool sprawl from poorly coordinated investments, while awareness encompasses both executive commitment and employee-level behavior. Future research could disaggregate these categories and reapply influence-mapping methods to test whether more precise definitions change the structure of causes and effects. Resource-based and complexity theories could guide such work, framing cybersecurity capabilities as both scarce organizational assets and elements in a complex adaptive system.

Beyond the NIS2 context, this framework could also be applied to other regulatory domains that share similar compliance challenges. Regulations such as DORA or the Artificial Intelligence Act present comparable patterns of organizational adaptation, cross-functional coordination, and resource constraints. Applying the same causal mapping approach to these frameworks could reveal whether the drivers and mediators identified here, such as awareness, technical complexity, and financial readiness, play similar roles in shaping compliance outcomes. Such extensions would test the generalizability of the proposed model and help develop a more integrated understanding of regulatory implementation across digital governance frameworks.

9. Conclusions

This study examined the systemic barriers that hinder organizational compliance with the NIS2 Directive by applying the DEMATEL method to identify and model their causal relationships. The analysis revealed that cybersecurity compliance challenges form an interconnected system, where weaknesses in one domain often spread into others.

The results indicate that compliance with NIS2 is shaped by reinforcing dynamics among organizational awareness, technical capacity, financial readiness, and regulatory clarity. These elements interact in ways that extend beyond linear cause-effect reasoning, so progress in one area depends on coordinated improvement across the others. The broader significance of these findings is that many compliance difficulties originate at the organizational level (where awareness, capability, and funding intersect) and then translate into operational and governance failures. This pattern underlines the importance of addressing cybersecurity as a management and policy problem, not only as a technical one. A systemic response must strengthen both institutional capacity and the consistency of regulatory guidance to prevent these weaknesses from spreading further.

The study contributes to the literature and practice in several ways. To the authors' knowledge, it provides the first empirically grounded, structured causal model of barriers to NIS2 compliance, offering a transparent, data-driven view of how organizational, financial, and technical factors interact. By framing these interactions as a connected system rather than isolated challenges, the model helps decision-makers target interventions that achieve meaningful, system-wide effects with limited resources. The findings also offer a foundation for designing policy tools that reduce fragmentation, encourage shared learning, and make compliance more attainable, particularly for smaller organizations with constrained capabilities.

Beyond its immediate scope, the study advances the understanding of cybersecurity governance as a socio-technical system. It demonstrates how expert-based analytical methods such as DEMATEL can uncover interdependencies that traditional approaches often overlook. By linking organizational behavior, resource allocation, and regulatory design, the study contributes to both theory and policy debates on how to achieve effective and sustainable cybersecurity compliance across institutional and sectoral contexts.

Author Contributions

Conceptualization, K.M. and P.T.C.; methodology, P.T.C. and T.K.D.; software, P.T.C.; validation, F.C.K. and A.I.M.; formal analysis, K.M., P.T.C. and A.I.M.; investigation, K.M. and P.T.C.; resources, K.M.; data curation, K.M. and P.T.C.; writing—original draft preparation, K.M., P.T.C., F.C.K., A.I.M. and T.K.D.; writing—review and editing, K.M., P.T.C., F.C.K., A.I.M. and T.K.D.; visualization, K.M. and P.T.C.; supervision, P.T.C. and F.C.K.; project administration, A.I.M. and T.K.D.; funding acquisition, P.T.C. All authors have read and agreed to the published version of the manuscript.

Funding

The publication of this paper has been partly supported by the University of Piraeus Research Center.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| DEMATEL | Decision-Making Trial and Evaluation Laboratory |
| ICT | Information and Communication Technologies |
| NIST | National Institute of Standards and Technology |
| GDPR | General Data Protection Regulation |
| NIS Directive | Network and Information Systems Directive |
| NIS2 Directive | Network and Information Systems Directive 2 |
| DORA | Digital Operational Resilience Act |
| CIA | Confidentiality, Integrity, and Availability |
| ENISA | European Union Agency for Cybersecurity |
| IT | Information Technology |
| OT | Operational Technology |
| SMEs | Small and Medium-sized Enterprises |
| IoT | Internet of Things |
| CPS | Cyber-Physical Systems |

Appendix A

Table A1. Overview of barriers to NIS2 Directive compliance.


| Barrier | Description | References |
|---|---|---|
| B1: Lack of awareness and understanding | Weak organizational understanding of NIS2 obligations (driven by legal–technical complexity, uneven guidance, and limited visibility of threats) delays or distorts compliance planning and execution, particularly in SMEs. | [1,2,13,16,21,35,36,37,38,39,40] |
| B2: Insufficient financial resources | Tight budgets and competing priorities constrain the investments needed for NIS2-level capability, with documented funding gaps, reallocation from other cost centers, and board-level demands for proof slowing progress across technology, people, and process. | [5,13,17,24,35,36,41,42] |
| B3: Technical complexity | Compliance is hindered by the technical complexity of NIS2 requirements, aggravated by legacy systems, sector-specific challenges, and fragmented national frameworks. | [8,13,37,43,44,45,46] |
| B4: Shortage of cybersecurity professionals | A persistent shortage of skilled professionals leaves organizations without the expertise needed to meet NIS2 requirements, widening the compliance gap. | [2,24,37,43,47,48] |
| B5: Complex and evolving cybersecurity threat landscape | The constantly changing nature of cyber threats makes it difficult for organizations to sustain compliance, especially when requirements must adapt across IT, OT, and IoT contexts. | [7,12,16,17,24,39,44,49] |
| B6: Fragmented regulatory landscape | Divergent national interpretations and overlapping sectoral rules create a fragmented regulatory environment that complicates NIS2 compliance, especially for cross-border organizations. | [1,9,16,43,50] |
| B7: Operational disruptions | NIS2 entities face high exposure to operational disruptions, where cascading cyber incidents demand rapid detection, intelligence-driven response, and recovery planning. | [7,37,44,51] |
| B8: Resistance to change and organizational culture | Organizational inertia and cultural resistance to new security practices delay NIS2 adoption, especially in sectors where IoT and CPS amplify risks. | [43,44,51,52] |
| B9: Lack of clear guidance and support from authorities | Limited guidance, inconsistent national instruments, and weak crisis coordination hinder organizations’ ability to understand and comply with NIS2 obligations. | [16,17,43,53,54,55] |
| B10: Supply chain vulnerabilities | Supply chain vulnerabilities remain a critical challenge, as organizations often lack control over third-party risks, requiring certification, monitoring, and coordinated oversight to achieve NIS2 compliance. | [2,13,37,56,57] |
| B11: High cost of incident reporting and management | Incident reporting under NIS2 is resource-intensive. The 24-hour early-warning deadline, specialized staffing needs, and legal risks create high costs that organizations (especially smaller ones) struggle to absorb. | [8,15,24,58,59] |
| B12: Data privacy and confidentiality concerns | Compliance with NIS2 requires balancing transparency in incident reporting with privacy and confidentiality, a challenge compounded by limited state capacity and inconsistent coordinated vulnerability disclosure (CVD) practices. | [7,14,16,24,40] |
| B13: Inadequate risk assessment and management capabilities | Many organizations, especially SMEs, lack the expertise and resources to conduct mature risk assessments, leading to gaps in compliance and ineffective threat management. | [8,13,17,23,35,51,60] |
| B14: Lack of inter-organizational collaboration | Reluctance to share sensitive data and persistent sectoral silos limit inter-organizational collaboration, undermining NIS2’s goal of coordinated resilience across Member States. | [2,3,9,13,35,37,61] |

Table A2. Detailed participant profiles.


| ID | Age Range | Gender | Highest Degree | Company Classification | Rank | Experience (Years) |
|---|---|---|---|---|---|---|
| 1 | 55–64 | Male | Master’s | Finance/Banking | Executive (C-Level) | 20–25 |
| 2 | 35–44 | Female | Master’s | Technology/IT | Manager/Department Head | 16–20 |
| 3 | 35–44 | Male | Master’s | Finance/Banking | Manager/Department Head | 16–20 |
| 4 | 35–44 | Male | Master’s | Energy/Oil and Gas | Other | 6–10 |
| 5 | <34 | Male | Master’s | Government/Public Sector | Other | 3–5 |
| 6 | 45–54 | Female | Master’s | Non-Governmental Organization | Executive (C-Level) | >30 |
| 7 | 35–44 | Male | Master’s | Technology/IT | Senior Manager/Director | 16–20 |
| 8 | <34 | Male | Master’s | Technology/IT | Senior Manager/Director | 11–15 |
| 9 | 55–64 | Male | Master’s | Non-Governmental Organization | Executive (C-Level) | 20–25 |
| 10 | 45–54 | Male | Master’s | Non-Governmental Organization | Team Lead/Supervisor | 11–15 |
| 11 | <34 | Female | Master’s | Technology/IT | Entry-Level/Junior Staff | 3–5 |
| 12 | 55–64 | Male | Master’s | Finance/Banking | Executive (C-Level) | >30 |
| 13 | 35–44 | Male | Master’s | Technology/IT | Manager/Department Head | 11–15 |
| 14 | <34 | Male | Master’s | Technology/IT | Senior Manager/Director | 6–10 |
| 15 | <34 | Male | Master’s | Technology/IT | Team Lead/Supervisor | 6–10 |
| 16 | 35–44 | Male | Bachelor’s | Technology/IT | Team Lead/Supervisor | 11–15 |
| 17 | 35–44 | Female | Bachelor’s | Technology/IT | Team Lead/Supervisor | 16–20 |
| 18 | 35–44 | Female | Bachelor’s | Technology/IT | Other | 16–20 |
| 19 | 45–54 | Male | Bachelor’s | Technology/IT | Senior Manager/Director | 20–25 |
| 20 | 55–64 | Male | Bachelor’s | Technology/IT | Executive (C-Level) | >30 |
| 21 | 35–44 | Male | Master’s | Technology/IT | Senior Manager/Director | 16–20 |
| 22 | 35–44 | Male | Master’s | Technology/IT | Executive (C-Level) | 20–25 |
| 23 | 45–54 | Male | Master’s | Non-Governmental Organization | Senior Manager/Director | 16–20 |
| 24 | <34 | Male | Master’s | Technology/IT | Team Lead/Supervisor | 6–10 |
| 25 | 35–44 | Male | Master’s | Technology/IT | Manager/Department Head | 11–15 |
| 26 | 35–44 | Male | Master’s | Energy/Oil and Gas | Manager/Department Head | 16–20 |

Table A3. DEMATEL matrices.


Average Direct Relation Matrix

| | B1 | B2 | B3 | B4 | B5 | B6 | B7 | B8 | B9 | B10 | B11 | B12 | B13 | B14 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| B1 | 0.000 | 2.346 | 1.808 | 1.538 | 1.808 | 1.615 | 1.615 | 2.731 | 1.577 | 1.462 | 1.154 | 1.923 | 2.423 | 1.846 |
| B2 | 1.731 | 0.000 | 1.538 | 2.423 | 1.192 | 0.885 | 2.192 | 1.692 | 0.769 | 1.654 | 2.269 | 1.538 | 2.000 | 1.308 |
| B3 | 1.500 | 1.500 | 0.000 | 1.923 | 1.808 | 1.192 | 2.731 | 2.000 | 1.115 | 2.000 | 2.038 | 1.500 | 2.269 | 1.346 |
| B4 | 2.192 | 1.269 | 1.692 | 0.000 | 1.538 | 0.923 | 1.962 | 1.692 | 0.923 | 1.731 | 1.500 | 1.308 | 2.269 | 1.423 |
| B5 | 1.885 | 1.654 | 2.115 | 1.885 | 0.000 | 1.346 | 2.115 | 1.500 | 1.385 | 2.077 | 2.077 | 1.923 | 1.962 | 1.423 |
| B6 | 1.346 | 1.115 | 1.308 | 0.923 | 1.538 | 0.000 | 0.923 | 1.269 | 2.846 | 1.231 | 1.308 | 1.808 | 1.385 | 1.308 |
| B7 | 0.808 | 1.308 | 1.308 | 0.808 | 1.115 | 0.577 | 0.000 | 1.462 | 0.692 | 1.538 | 1.538 | 0.923 | 0.885 | 1.154 |
| B8 | 2.115 | 1.500 | 1.308 | 1.000 | 1.154 | 0.962 | 1.385 | 0.000 | 1.231 | 1.385 | 1.423 | 1.154 | 1.769 | 2.308 |
| B9 | 2.308 | 1.000 | 1.385 | 1.115 | 1.308 | 2.231 | 1.192 | 1.423 | 0.000 | 1.615 | 1.500 | 1.385 | 2.000 | 1.346 |
| B10 | 1.000 | 0.846 | 1.154 | 0.731 | 1.885 | 0.923 | 2.308 | 1.192 | 0.731 | 0.000 | 1.923 | 1.731 | 1.615 | 1.654 |
| B11 | 0.923 | 1.808 | 0.885 | 0.846 | 1.115 | 1.038 | 1.346 | 1.462 | 0.808 | 1.154 | 0.000 | 1.154 | 1.500 | 1.154 |
| B12 | 1.038 | 1.192 | 1.077 | 1.346 | 1.462 | 1.538 | 1.192 | 1.154 | 1.269 | 1.000 | 1.769 | 0.000 | 1.077 | 1.923 |
| B13 | 1.923 | 2.115 | 1.231 | 1.231 | 1.346 | 0.885 | 2.115 | 1.808 | 1.077 | 1.962 | 1.154 | 1.346 | 0.000 | 1.346 |
| B14 | 1.615 | 1.385 | 1.615 | 1.077 | 1.192 | 1.038 | 1.885 | 1.615 | 1.269 | 1.500 | 1.385 | 1.462 | 2.000 | 0.000 |
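
The two matrices in Table A3 are linked by the standard DEMATEL normalization step. The entries of the average matrix are consistent with averaging integer ratings from the 26 experts in Table A2 (for example, 2.346 ≈ 61/26), and dividing every entry by the largest row sum (row B1, 23.846) appears to reproduce the normalized B1 to B5 rows shown below to three decimals. The following Python sketch assumes the conventional DEMATEL formulation: a zero diagonal, normalization by the larger of the maximum row and column sums (here both choices give 23.846), the total-relation matrix T = N(I − N)^(-1), and prominence (R + C) and relation (R − C) indicators for separating causes from effects. It is not the authors' code; the helper names (`normalize`, `matmul`, `invert`, `total_relation`) are hypothetical, and plain Python is used so no numerical library is required.

```python
# Hypothetical sketch of the conventional DEMATEL pipeline (not the authors' code).
# A: average direct-relation matrix from Table A3 (rows/columns B1..B14),
# with the diagonal (self-influence) set to 0.000.
A = [
    [0.000, 2.346, 1.808, 1.538, 1.808, 1.615, 1.615, 2.731, 1.577, 1.462, 1.154, 1.923, 2.423, 1.846],
    [1.731, 0.000, 1.538, 2.423, 1.192, 0.885, 2.192, 1.692, 0.769, 1.654, 2.269, 1.538, 2.000, 1.308],
    [1.500, 1.500, 0.000, 1.923, 1.808, 1.192, 2.731, 2.000, 1.115, 2.000, 2.038, 1.500, 2.269, 1.346],
    [2.192, 1.269, 1.692, 0.000, 1.538, 0.923, 1.962, 1.692, 0.923, 1.731, 1.500, 1.308, 2.269, 1.423],
    [1.885, 1.654, 2.115, 1.885, 0.000, 1.346, 2.115, 1.500, 1.385, 2.077, 2.077, 1.923, 1.962, 1.423],
    [1.346, 1.115, 1.308, 0.923, 1.538, 0.000, 0.923, 1.269, 2.846, 1.231, 1.308, 1.808, 1.385, 1.308],
    [0.808, 1.308, 1.308, 0.808, 1.115, 0.577, 0.000, 1.462, 0.692, 1.538, 1.538, 0.923, 0.885, 1.154],
    [2.115, 1.500, 1.308, 1.000, 1.154, 0.962, 1.385, 0.000, 1.231, 1.385, 1.423, 1.154, 1.769, 2.308],
    [2.308, 1.000, 1.385, 1.115, 1.308, 2.231, 1.192, 1.423, 0.000, 1.615, 1.500, 1.385, 2.000, 1.346],
    [1.000, 0.846, 1.154, 0.731, 1.885, 0.923, 2.308, 1.192, 0.731, 0.000, 1.923, 1.731, 1.615, 1.654],
    [0.923, 1.808, 0.885, 0.846, 1.115, 1.038, 1.346, 1.462, 0.808, 1.154, 0.000, 1.154, 1.500, 1.154],
    [1.038, 1.192, 1.077, 1.346, 1.462, 1.538, 1.192, 1.154, 1.269, 1.000, 1.769, 0.000, 1.077, 1.923],
    [1.923, 2.115, 1.231, 1.231, 1.346, 0.885, 2.115, 1.808, 1.077, 1.962, 1.154, 1.346, 0.000, 1.346],
    [1.615, 1.385, 1.615, 1.077, 1.192, 1.038, 1.885, 1.615, 1.269, 1.500, 1.385, 1.462, 2.000, 0.000],
]


def normalize(a):
    """Divide every entry by the largest row or column sum (assumed choice of s)."""
    n = len(a)
    row_sums = [sum(row) for row in a]
    col_sums = [sum(a[i][j] for i in range(n)) for j in range(n)]
    s = max(max(row_sums), max(col_sums))
    return [[x / s for x in row] for row in a]


def matmul(x, y):
    """Plain-Python matrix product x @ y."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def invert(m):
    """Gauss-Jordan inversion with partial pivoting."""
    n = len(m)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        # Swap in the row with the largest absolute pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [v - f * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def total_relation(nm):
    """T = N (I - N)^-1, i.e. direct plus all indirect influence."""
    n = len(nm)
    i_minus_n = [[(1.0 if i == j else 0.0) - nm[i][j] for j in range(n)] for i in range(n)]
    return matmul(nm, invert(i_minus_n))


N = normalize(A)
T = total_relation(N)
R = [sum(row) for row in T]  # total influence each barrier exerts (row sums)
C = [sum(T[i][j] for i in range(len(T))) for j in range(len(T))]  # influence received (column sums)

for k, (r, c) in enumerate(zip(R, C), start=1):
    group = "cause" if r - c > 0 else "effect"
    print(f"B{k}: prominence R+C = {r + c:.3f}, relation R-C = {r - c:+.3f} ({group})")
```

The script prints one line per barrier. Because its R − C values depend on the assumed zero diagonal and normalization constant, they are best read as a cross-check against, not a replacement for, the cause and effect groups reported in the main text.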

Normalized Direct Relation Matrix

| | B1 | B2 | B3 | B4 | B5 | B6 | B7 | B8 | B9 | B10 | B11 | B12 | B13 | B14 |

| B1 | 0.000 | 0.098 | 0.076 | 0.065 | 0.076 | 0.068 | 0.068 | 0.115 | 0.066 | 0.061 | 0.048 | 0.081 | 0.102 | 0.077 |

| B2 | 0.073 | 0.000 | 0.065 | 0.102 | 0.050 | 0.037 | 0.092 | 0.071 | 0.032 | 0.069 | 0.095 | 0.065 | 0.084 | 0.055 |

| B3 | 0.063 | 0.063 | 0.000 | 0.081 | 0.076 | 0.050 | 0.115 | 0.084 | 0.047 | 0.084 | 0.085 | 0.063 | 0.095 | 0.056 |

| B4 | 0.092 | 0.053 | 0.071 | 0.000 | 0.065 | 0.039 | 0.082 | 0.071 | 0.039 | 0.073 | 0.063 | 0.055 | 0.095 | 0.060 |

| B5 | 0.079 | 0.069 | 0.089 | 0.079 | 0.000 | 0.056 | 0.089 | 0.063 | 0.058 | 0.087 | 0.087 | 0.081 | 0.082 | 0.060 |