1. Introduction

Ransomware encrypts a victim’s data and demands payment in exchange for decryption. Modern ransomware attacks often employ a combination of techniques, including file encryption, data exfiltration, and double extortion. In addition to encrypting files, attackers threaten to release sensitive information on the dark web if the ransom is not paid [1]. Organizations affected by ransomware attacks typically experience severe financial losses, operational disruptions, and reputational damage as critical information is encrypted or leaked [2].

The emergence of ransomware-as-a-service (RaaS) has further exacerbated this threat, enabling even non-technical criminal groups to conduct ransomware campaigns [3]. Terrorist and extremist groups have also leveraged ransomware to fund their activities or disrupt governmental operations. Moreover, state-sponsored actors increasingly combine cryptocurrency with ransomware to maximize profits and evade detection. Recently, ransomware developers have begun exploiting Generative AI (GenAI) to produce more sophisticated binaries with advanced evasion techniques. In addition, criminally oriented large language models, such as EscapeGPT, BlackhatGPT, and FraudGPT, are being used to support the development of malware and related tools [4].

Despite the growing threat, most research on ransomware has focused on recovery and mitigation. Traditional approaches such as static analysis and signature-based detection have become less effective due to the advanced evasion techniques adopted by modern ransomware. These techniques often fail to capture the complexity of ransomware behavior, limiting their ability to detect or classify ransomware families. Each ransomware family exhibits distinctive behavioral patterns during execution, which are often overlooked by static methods.

API call analysis offers an effective complement to signature-based techniques by enabling dynamic behavioral analysis. Ransomware leverages Windows API calls for various purposes, including encrypting files, establishing command-and-control (C2) communications, manipulating files, and modifying the system registry. Analyzing these API calls not only reveals ransomware behavior but also helps detect evasion strategies, thereby improving detection and classification of both known and emerging threats.

In this study, we focus on monitoring functional API calls using API tracking tools to observe and log ransomware behavior. This research is structured into two main parts based on machine learning (ML) and deep learning (DL) approaches. Two datasets were prepared: (i) a dataset from VirusTotal containing prevalent ransomware families such as Darkside, Bad Rabbit, CryptLocker, Locker, and Reveton, and (ii) a benchmark dataset from Cuckoo Sandbox, including families such as Critroni, CryptLocker, CryptoWall, Kollah, Kovter, Locker, Matsnu, Reveton, and Trojan-Ransom. The threat level of each ransomware family was determined based on MITRE ATT&CK reports. The MITRE ATT&CK (Adversarial Tactics, Techniques, and Common Knowledge) framework is a globally recognized knowledge base that systematically categorizes adversarial behaviors based on real-world observations. It provides a structured mapping of attack techniques across different stages of an intrusion, helping researchers and defenders analyze, classify, and mitigate threats effectively.

This paper proposes a classification framework to identify emerging ransomware families by analyzing their functional API usage patterns. Unlike prior studies, which often relied on limited feature sets and static analysis, our approach incorporates diverse features, including API calls, DLL usage, and registry modifications, derived from dynamic analysis. This richer feature set enables more accurate behavioral characterization and family-level classification. This paper evaluates both ML and DL models using two datasets: a benchmark dataset comprising 1096 samples and a custom dataset containing 473 ransomware samples, both characterized by functional API features.

The contributions of our paper are as follows:

Novel framework: We propose a classification framework for recent ransomware families based on functional API usage patterns, with results correlated to threat severity levels, thereby addressing gaps in prior research.

Behavioral analysis: By focusing on functional API calls, this study provides a detailed analysis of ransomware behavior, supporting more effective monitoring and logging through API tracking tools.

Balanced datasets: Datasets were constructed from reputable sources to comprehensively capture ransomware behaviors based on API call patterns, improving the accuracy of threat analysis.

Comparative evaluation: We introduce a new evaluation dimension by comparing ML and DL models at the family level and conducting cross-sandbox experiments, where models are trained on one analysis platform and tested on another, to assess adaptability across environments.

The remainder of this paper is organized as follows:

Section 2 reviews the literature on ML and the use of APIs for ransomware detection and classification;

Section 3 describes the methodology, including dataset preparation and model design;

Section 4 presents experimental results and analysis;

Section 5 discusses limitations and future directions; and

Section 6 concludes the paper with key findings and recommendations.

2. Literature Review

Ransomware is widely used in cybercrime, and numerous studies have been conducted to classify and detect it, with much of this research employing ML and DL techniques. This section reviews recent works, grouping them by approach, and concludes by identifying research gaps that motivate our proposed approach.

Singh et al. [5] introduced a novel approach for detecting RaaS attacks using a set of DL models. Their RansoDetect Fusion model combines the predictive power of three multilayer perceptron (MLP) models with different activation functions, trained on the UNSW-NB15 network intrusion detection dataset. The model demonstrated excellent performance, achieving 98.79% accuracy and recall, 98.85% precision, and an F1 score of 98.80%. However, as the evaluation was limited to a single, network-based dataset, the findings lack validation against emerging ransomware threats in real-world environments.

Kim and Park [6] proposed a behavioral performance visualization method that classifies ransomware attacks by analyzing CPU, memory, and I/O usage patterns. The method achieved a low loss rate of 3.69% and at least 98.94% accuracy, showing strong detection capability. Nevertheless, its narrow focus on resource usage overlooks other critical behavioral indicators, potentially reducing effectiveness against stealthy attacks. This highlights the limitation of relying on a single feature type. Azzedin et al. [7] suggested evolving strategies for defending against ransomware by advocating a shift from traditional victim-centric approaches to attacker-centric methodologies. While conceptually valuable, the study was limited to qualitative frameworks without empirical validation, making it difficult to assess practical impact.

Davies et al. [8] analyzed hybrid ransomware that combines worm-like propagation with destructive encryption, such as Bad Rabbit and NotPetya. Using digital forensic tools, they uncovered encryption keys and studied activation times during live attacks. However, newer variants were excluded, restricting applicability to the current threat landscape. Davies et al. [9] modeled encryption and destruction times for WannaCry and Ryuk using 130,000 samples, achieving 99% accuracy. While impactful, the exclusion of emerging or lesser-known ransomware limits coverage. Talukder et al. [10] demonstrated that ransomware exhibits distinct behavioral patterns useful for dynamic classification. However, a focus on established patterns may fail to fully account for new or evolving ransomware techniques that deviate from these patterns.

Ullah et al. [11] focused on real-time API call extraction, analyzing 78,550 samples with Random Forest (RF) to achieve 99% precision. While effective for speed, exclusive reliance on API calls risks missing obfuscated behaviors. Additional behavioral features could improve resilience. Xiao et al. [12] built a graphical model dividing API calls into rewarding behaviors, achieving 99% detection for malware and 95% classification accuracy. However, the small dataset size limits generalization. Nguyen et al. [13] used API calls from dynamic analysis to classify WannaCry and Win32:File Coder. The narrow scope and limited dataset reduce applicability to unknown threats. Aslam et al. [14] addressed dataset imbalance in Android systems via oversampling and undersampling, achieving 99.53% accuracy. Yet, such balancing methods can introduce bias in real-world scenarios. Sihwail et al. [15] proposed an SVM-based classifier with 98.5% accuracy. While reducing features simplified the models, it risked omitting critical behavioral data. Ban et al. [16] studied Android ransomware infections, focusing on API calls, and Du et al. [17] classified Windows ransomware families using KNN on 2019–2020 data. Such OS-specific approaches inevitably face limitations in broader applicability, a constraint our study shares because it focuses on Windows API calls. Nevertheless, platform-specific research remains valuable, as it provides deeper insights into ransomware behavior within the targeted operating system.

Schofield et al. [18] applied a 1-D CNN model to API calls, achieving 98% accuracy. However, adaptability to diverse behaviors requires further study. Rabadi et al. [19] stressed understanding API functions and context, achieving 99.98% accuracy. While comprehensive, some evasion methods remain unaddressed. Jethva et al. [20] incorporated system and registry file features, complementing API-based approaches and illustrating the value of multi-source data. Ramachandran et al. [21] demonstrated DLL injection and CreateFileW hooking as encryption prevention methods. Although innovative, broader validation is necessary. Zakaria et al. [22] developed RENTAKA for early cryptographic ransomware detection via API call patterns, achieving 93.8% accuracy. Adaptation mechanisms are needed to handle evolving threats. Alhashmi et al. [23] proposed HAPI-MDM, a hybrid static-dynamic API model using XGBoost and ANN. Despite strength against obfuscation, dataset limitations affect generalizability.

Azhar et al. [24] evaluated ML models for predicting ransomware severity by analyzing over 50 strains with MITRE ATT&CK and MITRE Cyber Analytics Repository (CAR), comparing six models in classification and regression tasks. Axali et al. [25] proposed an MCDM-based framework integrating MITRE ATT&CK for automated real-time ransomware detection, dynamically prioritizing mitigation actions without human intervention, and outperforming traditional rule-based systems.

Table 1 summarizes recent studies using API calls for ransomware classification with ML and DL. High detection rates are common; however, limitations remain, including dataset imbalance, narrow family coverage, and insufficient handling of evasion tactics such as API call obfuscation and sandbox detection.

While detection rates in prior work are high, many approaches rely on limited or imbalanced datasets, focus narrowly on certain families, and depend solely on API sequences. Few studies incorporate additional behavioral features such as DLL usage or registry modifications, and even fewer address advanced evasion techniques such as API call obfuscation or sandbox detection.

To address these shortcomings, our study combines API sequences with additional behavioral features extracted from dynamic analysis and evaluates models on both a benchmark dataset and a custom-built dataset that reflects real-world ransomware activity. This ensures greater dataset diversity, richer feature representations, and improved resilience against sophisticated evasion techniques.

3. Methodology

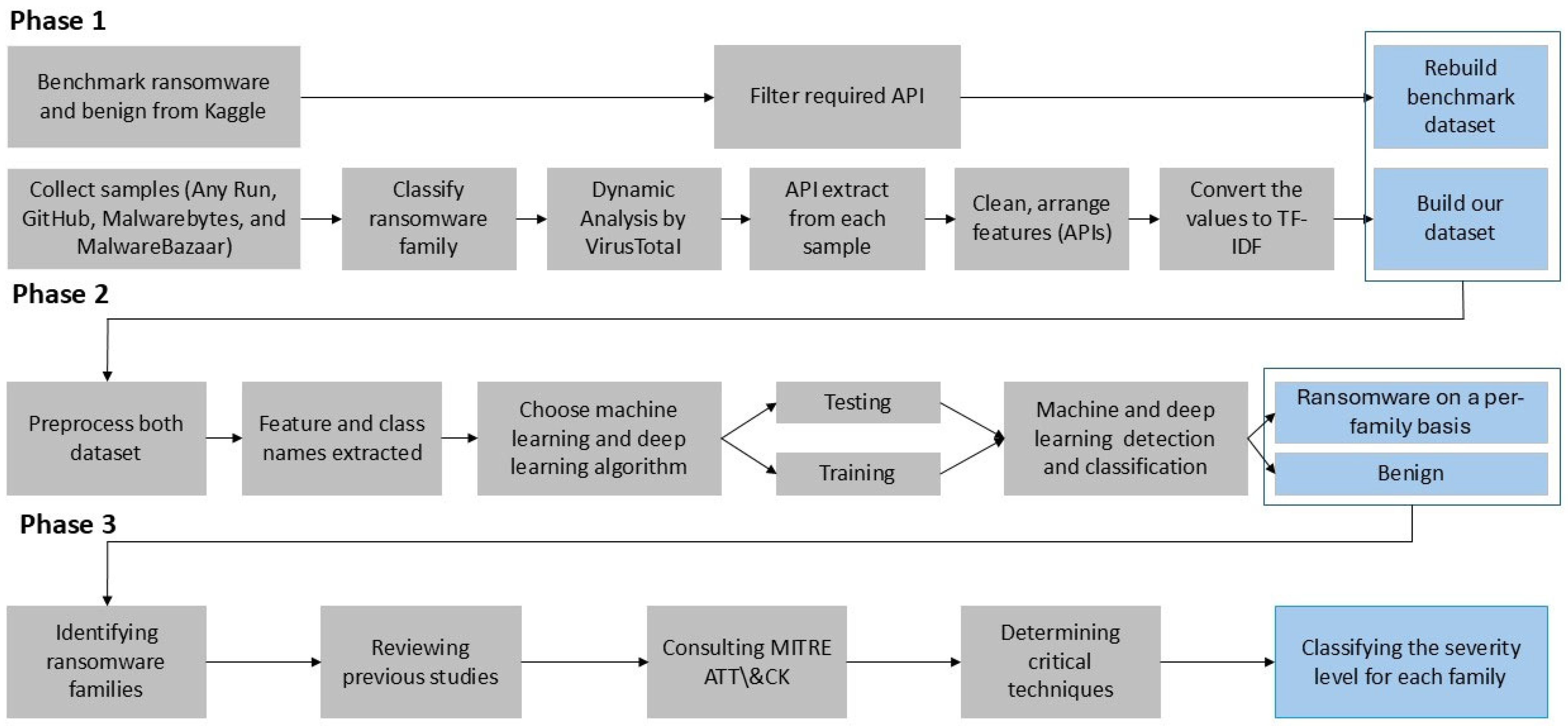

The methodology for ransomware classification in this study is divided into three main phases, as illustrated in Figure 1, which outlines the overall process of detection and classification. All experiments were conducted on a Windows 11 Pro environment.

Phase 1 focuses on sourcing both benign and ransomware samples and consists of two parallel parts. First, we collected a benchmark dataset containing samples of ransomware families targeting Windows operating systems. At the same time, additional ransomware samples were obtained from publicly available and reputable repositories such as MalwareBazaar and Any.Run.

These platforms provide pre-assigned family classifications based on expert malware analysis, which were directly adopted in our study. Specifically, MalwareBazaar relies on community researchers to assign family tags, while Any.Run determines families through interactive sandbox-based behavioral analysis. To maintain consistency and reliability, family labels were cross-referenced across multiple sources whenever possible.

After collection, the samples were analyzed using the VirusTotal platform, which aggregates results from multiple antivirus engines and incorporates a sandbox environment for extracting API calls. The extracted API calls were then converted into term frequency–inverse document frequency (TF-IDF) representations—a statistical method that quantifies the importance of a term within a collection of documents. In this context, each API call sequence is treated as textual data, and TF-IDF is applied to assign higher weights to API calls that occur frequently within a specific ransomware family but less frequently across others. This transformation supports further analysis and ensures compatibility with the classification algorithms.
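The weighting described above can be sketched without any external library. The source does not state which TF-IDF variant was used, so the smoothed inverse document frequency and the L2 normalisation below are assumptions, and the API traces are toy data invented for illustration.

```python
import math
from collections import Counter


def tfidf(docs):
    """Return one {api_call: weight} dict per sample, L2-normalised."""
    n_docs = len(docs)
    # Document frequency: number of samples in which each API call appears.
    df = Counter(term for doc in docs for term in set(doc))
    # Smoothed IDF (assumed variant): ln((1 + N) / (1 + df)) + 1
    idf = {t: math.log((1 + n_docs) / (1 + c)) + 1.0 for t, c in df.items()}
    vectors = []
    for doc in docs:
        tf = Counter(doc)
        raw = {t: tf[t] * idf[t] for t in tf}
        norm = math.sqrt(sum(w * w for w in raw.values())) or 1.0
        vectors.append({t: w / norm for t, w in raw.items()})
    return vectors


# Toy traces (hypothetical): each list is the API call log of one sample.
samples = [
    ["CreateFileW", "WriteFile", "CryptEncrypt", "CryptEncrypt"],
    ["CreateFileW", "ReadFile", "RegSetValueExW"],
    ["CreateFileW", "WriteFile", "InternetOpenA"],
]
weights = tfidf(samples)
```

In this toy corpus, `CreateFileW` occurs in every sample and therefore receives the minimum IDF, while `CryptEncrypt` occurs in only one sample and dominates that sample's vector.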

The diversity of the dataset, achieved by collecting samples from multiple sources and cross-verifying family labels, is essential for improving the generalization and robustness of the classification model. This approach reduces potential bias towards specific ransomware families or sources, enabling the model to better capture behavioral variations across different ransomware samples.

Phase 2 involves selecting and applying ML and DL algorithms, including XGBoost, LightGBM, Support Vector Machines (SVMs), k-Nearest Neighbors (KNNs), and Deep Neural Networks (DNNs). The TF-IDF features guide these algorithms toward the most discriminative API call patterns by down-weighting common system calls that appear in nearly all programs and emphasizing distinctive behavioral features. Next, class labels were extracted from the dataset, and the algorithms were trained and tested to classify ransomware families. Finally, the models were used to determine whether samples were ransomware or benign.

Phase 3 builds on the extracted features and classification results from Phase 2. In this phase, the MITRE ATT&CK framework, together with relevant previous studies [26,27], was employed to identify the techniques used by ransomware families and map them to severity criteria. These criteria consider factors such as threat level, impact, encryption complexity, propagation speed, and other key characteristics of these malicious programs. In Figure 1, blue boxes represent the output datasets, grey boxes indicate processing steps, and arrows illustrate the sequential workflow.

3.1. Phase 1: First Part—Benchmark Dataset Collection

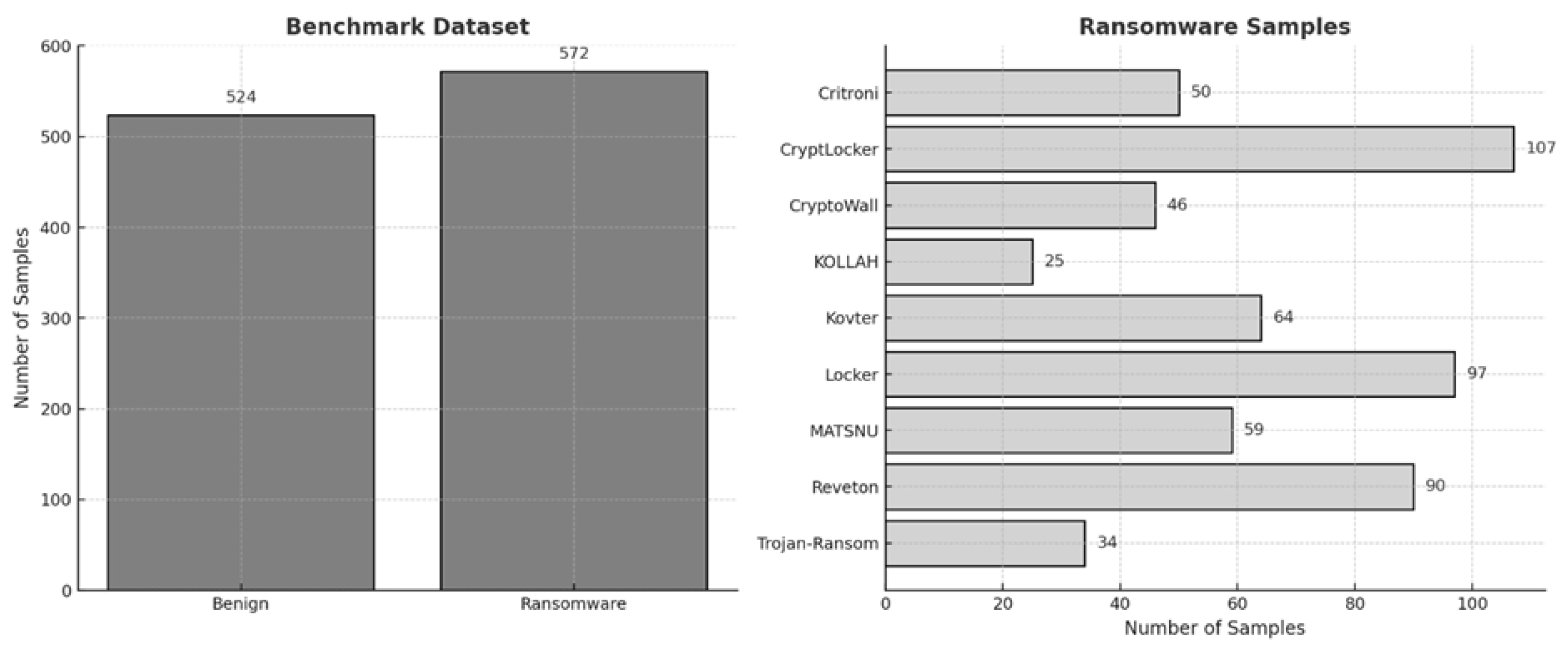

The benchmark dataset was obtained from the Kaggle website [28], containing 572 ransomware samples and 524 benign applications, a total of 1096 samples. This dataset includes 9 ransomware families, namely, Critroni, CryptLocker, CryptoWall, KOLLAH, Kovter, Locker, MATSNU, Reveton, and Trojan-Ransom (Figure 2). To analyze these samples, we used Cuckoo Sandbox v2.0.7, an open-source platform for examining suspicious files and URLs. Within a controlled virtual environment, the files were executed, and detailed reports were generated, capturing their behavior, including API call sequences and timing information.

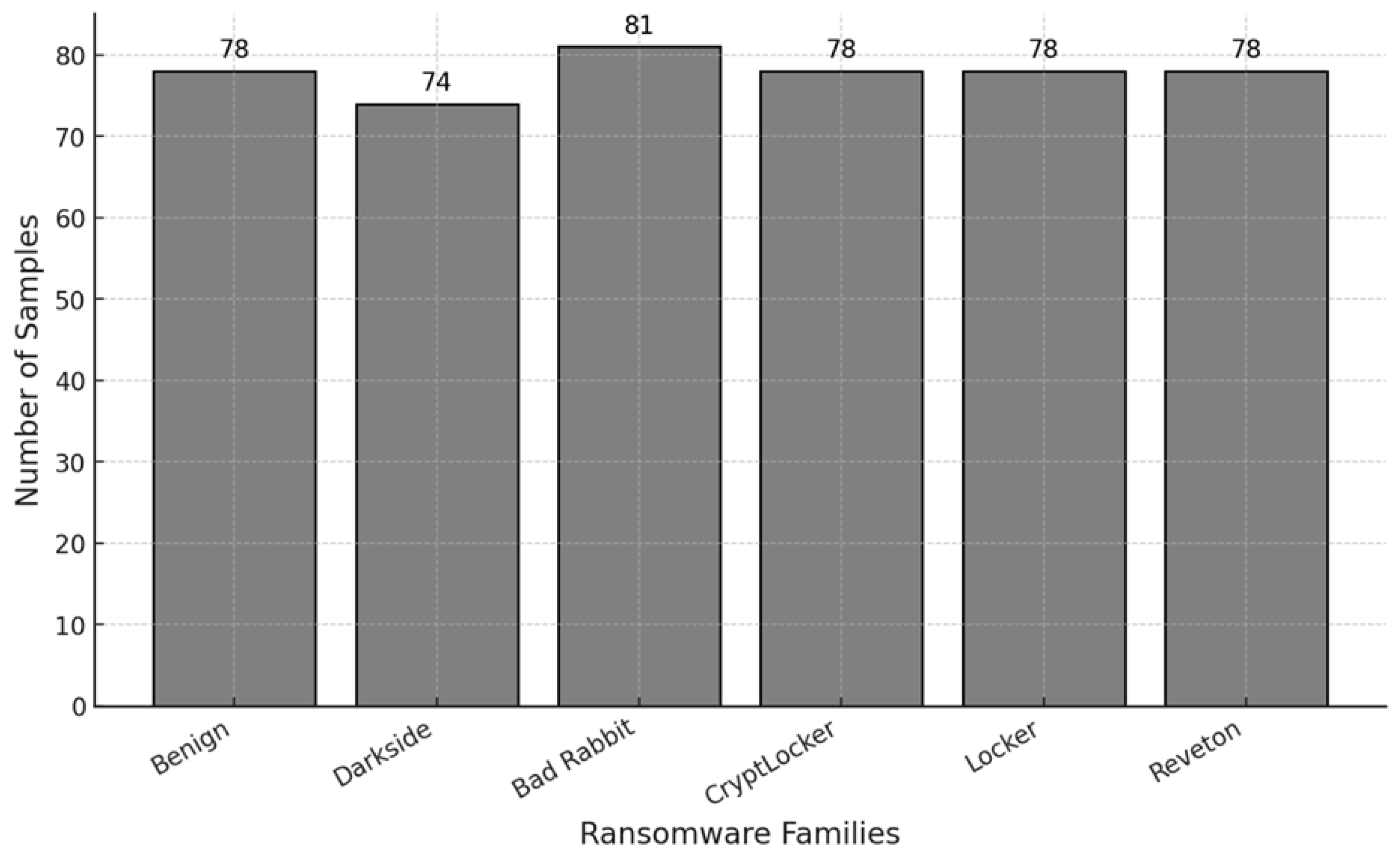

3.2. Phase 1: Second Part—Custom Dataset Collection

In addition to the benchmark dataset, we collected 467 ransomware samples and their corresponding hash values from sources such as Any.Run, GitHub, Malwarebytes, and MalwareBazaar. This dataset includes notable ransomware families such as Darkside, Bad Rabbit, CryptLocker, Locker, and Reveton (Figure 3). We used the VirusTotal platform to conduct dynamic analysis, executing the ransomware in a controlled sandbox environment. This allowed us to observe its behavior closely without endangering real systems. The sandbox captured the following:

API calls: Functions or procedures used by ransomware to interact with the operating system.

Dynamic link libraries (DLLs): Reusable code modules loaded by ransomware.

Registry entries: Modifications or additions to the Windows registry, often related to persistence or configuration.

In this study, we focus exclusively on dynamic analysis, which executes samples in a controlled environment to capture real-time API call behavior. This approach is more suitable for our purpose than static analysis, as it provides richer behavioral information that can be directly leveraged by ML and DL models for ransomware classification.

3.3. Phase 1: API Extraction and Categorization

API calls were grouped into two categories: functional APIs and malicious-intent APIs. Functional APIs were further divided into eight subcategories: Network, Registry, Service, File, Hardware and Systems, Message, Process and Thread, and System. Malicious-intent APIs were grouped into six subcategories: Shellcode, Keylogging, Obfuscation, Password Dumping and Hashing, Anti-Debugging and Anti-Reversing, and Handle Manipulation (Table 2).

This study focuses on functional APIs. Table 2 provides an overview of the classification scheme, while Table 3 presents representative API examples extracted from the VirusTotal platform. These samples include executions across multiple versions of the Windows operating systems, and the listed APIs represent core functions that are commonly shared among different Windows environments.
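As a rough illustration of how logged calls might be assigned to functional subcategories, the sketch below uses a small lookup table. The API names are real Windows functions, but their assignment to subcategories here is an assumption made for the example; the scheme actually used is the one defined in Tables 2 and 3.

```python
from collections import Counter

# Illustrative mapping only (assumed); Tables 2 and 3 define the real scheme.
FUNCTIONAL_CATEGORIES = {
    "InternetOpenA": "Network",
    "RegSetValueExW": "Registry",
    "OpenSCManagerW": "Service",
    "CreateFileW": "File",
    "WriteFile": "File",
    "CreateProcessW": "Process and Thread",
    "GetSystemInfo": "System",
}


def categorize(api_calls):
    """Count how many logged calls fall into each functional subcategory."""
    counts = Counter()
    for name in api_calls:
        counts[FUNCTIONAL_CATEGORIES.get(name, "Uncategorized")] += 1
    return counts


# Hypothetical trace of one sample.
trace = ["CreateFileW", "WriteFile", "RegSetValueExW", "VirtualAlloc"]
profile = categorize(trace)
```

Calls missing from the table are counted as `Uncategorized` rather than dropped, which makes gaps in the mapping visible.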

The benchmark dataset contains a total of 30,970 variables, including API, REG, DIR, STR, and others, among which 232 variables correspond to APIs. In addition, from our custom dataset, a total of 1236 API calls were extracted for analysis.

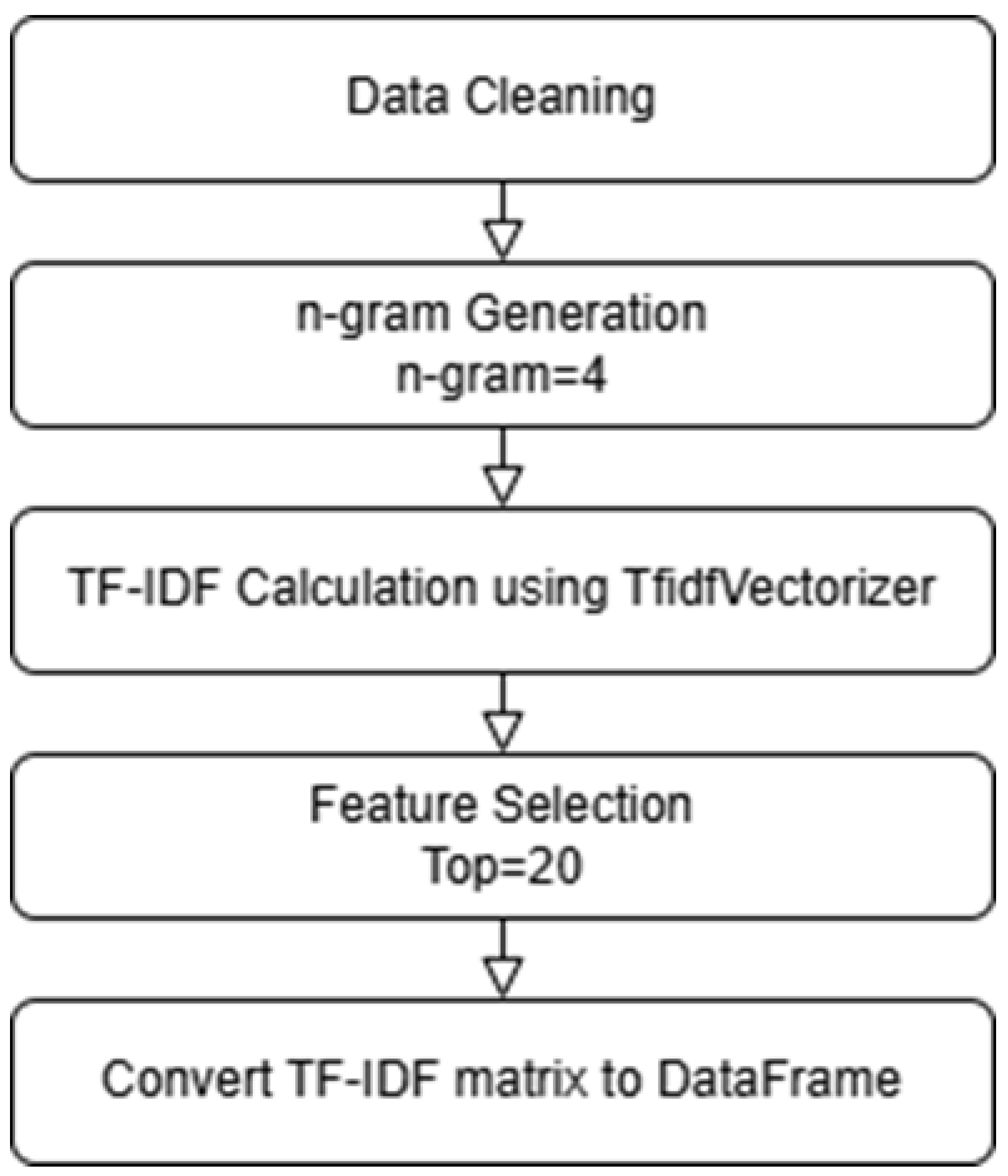

3.4. Phase 1: API Conversion to TF-IDF

The extracted API calls were processed as follows: API calls were extracted from the JSON file; the data were cleaned to remove duplicates and irrelevant values; API calls were aggregated as dataset columns; and each row was labeled with the corresponding ransomware family. Finally, using Python v3.11.5, the API call names were converted into TF-IDF values, as shown in Figure 4. TF-IDF represents the relative importance of each API call across samples, enabling models to better distinguish ransomware behaviors. This step is essential for handling large datasets and improving ML and DL model performance in classifying ransomware families.
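The extraction and aggregation steps can be sketched as follows. The JSON layout (`sha256`, `api_calls`) is hypothetical, since real sandbox reports use their own schemas, and "removing duplicates" is interpreted here as dropping repeated samples, which is an assumption.

```python
import json
from collections import Counter

# Hypothetical report layout and toy data; each entry is (report text, family).
raw_reports = [
    ('{"sha256": "aa11", "api_calls": ["CreateFileW", "WriteFile", "WriteFile"]}', "Locker"),
    ('{"sha256": "bb22", "api_calls": ["RegSetValueExW", "CreateFileW"]}', "Reveton"),
    ('{"sha256": "aa11", "api_calls": ["CreateFileW", "WriteFile", "WriteFile"]}', "Locker"),
]


def build_table(reports):
    """Parse reports, drop duplicate samples, and build a labeled count matrix."""
    seen, rows = set(), []
    for text, family in reports:
        report = json.loads(text)
        if report["sha256"] in seen:  # skip a sample that was already processed
            continue
        seen.add(report["sha256"])
        # Ignore empty or missing call names.
        counts = Counter(c for c in report.get("api_calls", []) if c)
        rows.append((counts, family))
    # One column per distinct API call name, in a stable order.
    columns = sorted({api for counts, _ in rows for api in counts})
    matrix = [[counts.get(api, 0) for api in columns] for counts, _ in rows]
    labels = [family for _, family in rows]
    return columns, matrix, labels


columns, matrix, labels = build_table(raw_reports)
```

The resulting `matrix` holds raw call counts per sample; TF-IDF weighting would then be applied on top of these counts.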

In this study, API sequences were represented using 4-gram modeling, which preserves the contextual relationship between consecutive calls and better reflects the behavioral patterns of ransomware families. To improve efficiency and reduce noise, we retained only the top 20 features ranked by TF-IDF, thereby ensuring that the most distinctive patterns were emphasized while minimizing redundant or less informative features.
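A minimal sketch of both steps is given below. How the per-feature TF-IDF score was aggregated for ranking is not stated in the source, so `top_k_features` simply takes a precomputed score per feature (for example a mean weight across samples, which is an assumption); the trace and the helper names are hypothetical.

```python
def api_ngrams(calls, n=4):
    """Slide a window of n consecutive API calls over a trace."""
    return ["|".join(calls[i:i + n]) for i in range(len(calls) - n + 1)]


def top_k_features(scores, k=20):
    """Keep the k highest-scoring features; ties are broken by name."""
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [name for name, _ in ranked[:k]]


# Hypothetical trace of one sample.
trace = ["CreateFileW", "ReadFile", "CryptEncrypt", "WriteFile", "CloseHandle"]
grams = api_ngrams(trace)
```

A trace of five calls yields two overlapping 4-grams, so each feature encodes a short ordered context rather than a single call name.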

3.5. Phase 2: Selection of ML and DL Classifiers

We employed a range of machine learning (ML) and deep learning (DL) algorithms for ransomware classification, using API calls transformed into TF-IDF features. TF-IDF is a statistical technique used to quantify the importance of a term within a document relative to a corpus. In this study, each ransomware sample was treated as a “document,” and the extracted API call names were treated as “terms.” The TF-IDF vectorization converted the frequency and distinctiveness of API calls into numerical feature representations suitable for ML/DL algorithms, ensuring that frequently used yet uninformative calls were downweighted while distinctive calls were emphasized.

The selected techniques included XGBoost, LightGBM, SVM, KNN, and DNN, chosen to mitigate issues such as overfitting and class imbalance. To address these challenges, established strategies were applied, including SMOTE, RandomOverSampler, Dropout, k-fold cross-validation, stratified splitting, and hyperparameter tuning. Specifically, an 80/20 train–test split was used with stratification to preserve class distributions. For hyperparameter optimization, GridSearchCV with 2-fold StratifiedKFold cross-validation was applied across predefined parameter ranges for each model, such as n_estimators, max_depth, and learning_rate for XGBoost and LightGBM, and hidden layer sizes and activation functions for MLP.
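The stratified 80/20 split can be illustrated with a dependency-free sketch. The text names library utilities such as GridSearchCV and StratifiedKFold; the function below is not that implementation, only a simplified stand-in showing what stratification preserves. The seed, the rounding rule, and the toy label counts are assumptions.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_fraction=0.2, seed=42):
    """Split sample indices so each class keeps roughly the same share in both parts."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    train, test = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        # At least one test sample per class (assumed rule for tiny classes).
        n_test = max(1, round(len(indices) * test_fraction))
        test.extend(indices[:n_test])
        train.extend(indices[n_test:])
    return sorted(train), sorted(test)


# Toy, deliberately imbalanced label list (hypothetical counts).
labels = ["Locker"] * 10 + ["Reveton"] * 5 + ["Benign"] * 20
train_idx, test_idx = stratified_split(labels)
```

Because the split is done per class, the smallest family still contributes a test sample, which a purely random split does not guarantee.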

For deep learning, we designed a DNN architecture consisting of three Dense layers with 128, 64, and 32 units using ReLU activation, followed by a Softmax output layer for multi-class classification. Batch Normalization and Dropout (0.5 and 0.3) were incorporated to prevent overfitting. The model was optimized using the Adam optimizer with a learning rate of 0.0005 and the sparse_categorical_crossentropy loss function. Early Stopping was employed with a patience of 10 epochs based on validation loss. Model performance was further validated using StratifiedShuffleSplit across multiple iterations, with average precision, recall, and F1-scores reported.
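For reference, the Softmax output and the sparse categorical cross-entropy loss named above have the standard forms below. The symbols are introduced here for illustration: $z_k$ is the output-layer logit for class $k$, $K$ the number of classes, $N$ the number of samples in a batch, and $y_i$ the integer class label of sample $i$.

```latex
p_{i,k} = \frac{e^{z_{i,k}}}{\sum_{j=1}^{K} e^{z_{i,j}}},
\qquad
\mathcal{L} = -\frac{1}{N} \sum_{i=1}^{N} \ln p_{i,\,y_i}
```

The "sparse" form takes integer labels directly, so the family labels do not need to be one-hot encoded before training.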

All classifiers were evaluated on two datasets, recording training and prediction times along with performance metrics, including precision, recall, F1-score, and accuracy, to assess their effectiveness in ransomware classification. These procedures provide a rigorous and reliable evaluation framework, thereby strengthening the credibility of the results.
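The reported metrics follow their usual one-vs-rest definitions, sketched below in plain Python. The label vectors are toy data, not results from the study.

```python
def per_class_metrics(y_true, y_pred):
    """Compute one-vs-rest precision, recall, and F1 for every class."""
    metrics = {}
    for cls in sorted(set(y_true) | set(y_pred)):
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == cls and p == cls for t, p in pairs)
        fp = sum(t != cls and p == cls for t, p in pairs)
        fn = sum(t == cls and p != cls for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics[cls] = {"precision": precision, "recall": recall, "f1": f1}
    return metrics


# Toy labels (hypothetical): 0 = benign, 1 and 2 = two ransomware families.
y_true = [0, 0, 1, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 2, 2]
report = per_class_metrics(y_true, y_pred)
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
```

Reporting metrics per class matters here because overall accuracy can hide a family whose recall is low.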

3.6. Phase 3: Assessing the Severity Level of Ransomware Families

In this phase, the ransomware families under study were first identified. The specific techniques employed by these families were then analyzed using the MITRE ATT&CK framework.

Additionally, several prior studies on ransomware families [29], including those on Kovter and Reveton, were reviewed to broaden the analysis and support the findings. Based on this investigation, the most critical techniques indicative of threat severity were determined. These included the complexity and resilience of encryption, the speed of propagation across systems and networks, the extent of damage caused, the malware’s ability to obfuscate and evade detection, and its capacity to bypass security defenses. By integrating these criteria, the severity level of each family was assessed individually.
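The source does not describe how the criteria were combined into a single level. One possible way to make such an assessment repeatable is a weighted rubric, sketched below. Everything in it (the 0 to 1 scale, the weights, the thresholds, and the example scores) is hypothetical and is not the procedure used in this study.

```python
# Hypothetical weights for the five criteria listed above; they sum to 1.
CRITERIA_WEIGHTS = {
    "encryption_strength": 0.30,
    "propagation_speed": 0.20,
    "damage_extent": 0.25,
    "evasion": 0.15,
    "defense_bypass": 0.10,
}
# Hypothetical thresholds, checked from the highest level down.
LEVELS = [(0.75, "Very high"), (0.55, "High"), (0.35, "Medium"), (0.0, "Low")]


def severity_level(scores):
    """Combine per-criterion scores in [0, 1] into a level and a weighted total."""
    total = sum(CRITERIA_WEIGHTS[name] * scores[name] for name in CRITERIA_WEIGHTS)
    for threshold, level in LEVELS:
        if total >= threshold:
            return level, total


# Invented scores for an unnamed example family.
example = {
    "encryption_strength": 0.9,
    "propagation_speed": 0.4,
    "damage_extent": 0.8,
    "evasion": 0.6,
    "defense_bypass": 0.5,
}
level, score = severity_level(example)
```

With these invented inputs the weighted total is 0.69, which falls in the "High" band of the hypothetical thresholds.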

Table 4 presents the key techniques associated with each ransomware family, along with their corresponding severity levels.

4. Results and Discussion

A cross-sandbox approach was employed to evaluate the models’ generalization capabilities across different analysis environments. The models were tested on two datasets containing API calls extracted from distinct dynamic analysis platforms: the first was obtained from Cuckoo Sandbox and used as a benchmark, and the second was collected from the VirusTotal sandbox.
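Training on one platform and testing on another requires both datasets to share a feature space. The source does not say how features were aligned; a common approach, assumed here, is to project each test sample onto the vocabulary learned from the training platform and to track calls the model has never seen. The names and counts below are toy data.

```python
def project_onto_vocabulary(api_counts, vocabulary):
    """Map a sample's {api: count} dict onto a fixed, ordered training vocabulary."""
    vector = [api_counts.get(api, 0) for api in vocabulary]
    # Calls logged by the other sandbox that the trained model cannot use.
    unseen = sorted(set(api_counts) - set(vocabulary))
    return vector, unseen


# Hypothetical vocabulary learned from the training platform.
train_vocabulary = ["CreateFileW", "CryptEncrypt", "RegSetValueExW", "WriteFile"]
# Hypothetical sample logged by a different sandbox.
other_sandbox_sample = {"CreateFileW": 3, "WriteFile": 5, "NtCreateFile": 2}
vector, unseen = project_onto_vocabulary(other_sandbox_sample, train_vocabulary)
```

A large `unseen` list would indicate that the two platforms log different API layers, which is one plausible source of cross-sandbox performance loss.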

4.1. ML for Benchmark

The benchmark dataset includes nine ransomware families and benign programs, comprising a total of 1096 samples after minor balancing between classes. Initially, a slight imbalance existed between ransomware and benign samples, which was corrected during preprocessing. API calls were transformed into TF-IDF features to effectively capture behavioral patterns, and all samples were classified into their respective categories.

The categories were represented numerically, with ‘0’ indicating benign programs and ‘1’ through ‘9’ corresponding to different ransomware families and their severity levels (Table 5). Based on this classification scheme, the performance results for benign programs and each ransomware family in the benchmark dataset are presented in Table 6.

The experimental results indicate that ransomware families classified as high and very high severity—such as Critroni, CryptLocker, CryptoWall, Kovter, Matsnu, and Trojan-Ransom—represent the most critical threats, where achieving high recall is essential to minimize false negatives and prevent overlooking severe attacks. Advanced ensemble methods, particularly XGBoost and LightGBM, consistently demonstrated superior detection performance, combining strong recall and high F1 scores with low false positive rates, underscoring their suitability for practical deployment while ensuring both accuracy and efficient resource utilization.

Medium-severity families, such as Kollah and Locker, exhibited balanced performance in terms of precision and recall, whereas low-severity families, like Reveton, were detected with high recall and minimal false negatives, reflecting the robustness of the proposed approach across varying risk levels. In algorithm comparisons, XGBoost and LightGBM provided the best trade-off between accuracy and execution time, SVM achieved high accuracy, albeit with longer training times, and KNN yielded moderate but acceptable results. These findings suggest that modern ensemble models are particularly well-suited for prioritizing the detection of high-severity ransomware families while maintaining adequate performance for medium- and low-severity cases.

Despite these promising outcomes, certain limitations must be acknowledged. Some families, such as CryptLocker and Locker, exhibited weaker detection rates, highlighting challenges in achieving generalization across diverse behavioral profiles. Notably, these two families shared 145 API calls out of 234 recorded, meaning that roughly 62% of features were identical or behaviorally similar. This high level of similarity increases the likelihood of classification confusion, leading to trade-offs between false positives (FP) and false negatives (FN) and higher misclassification rates when relying solely on features based on API call names or frequencies (e.g., limited TF-IDF features).
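The degree of overlap between two families can be quantified as the share of API calls they have in common. The sketch below assumes the denominator is the union of both families' recorded calls, which the source does not state explicitly; the two sets are toy data. The arithmetic for the reported figures is included as a check: 145 of 234 is about 62%.

```python
def overlap_ratio(apis_a, apis_b):
    """Fraction of the combined API set that both families share."""
    a, b = set(apis_a), set(apis_b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


# Toy API sets (hypothetical), not the families' real profiles.
family_a = {"CreateFileW", "WriteFile", "CryptEncrypt", "RegSetValueExW"}
family_b = {"CreateFileW", "WriteFile", "CryptEncrypt", "InternetOpenA"}
toy_ratio = overlap_ratio(family_a, family_b)

# Figures reported in the text: 145 shared calls out of 234 recorded.
reported_ratio = 145 / 234
```

When most features are shared, the few non-shared calls carry nearly all of the discriminative signal, which is consistent with the confusion observed between the two families.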

Furthermore, both families employ advanced encryption and obfuscation techniques. CryptLocker was classified as high-severity due to its significant system impact (wide-scale encryption and disabling backups) and the presence of evasion mechanisms that reduce detectability by current systems. In contrast, Locker was classified as medium severity because its impact and behavior may vary across different Windows versions (some API calls may be unavailable or behave differently), potentially reducing effective detection in certain environments.

Therefore, it is recommended that future models expand the feature set beyond API calls to include multiple behavioral characteristics and the temporal sequencing of these calls. Such enhancements would improve the model’s ability to differentiate between families with high behavioral similarity and strengthen both detection accuracy and resilience.

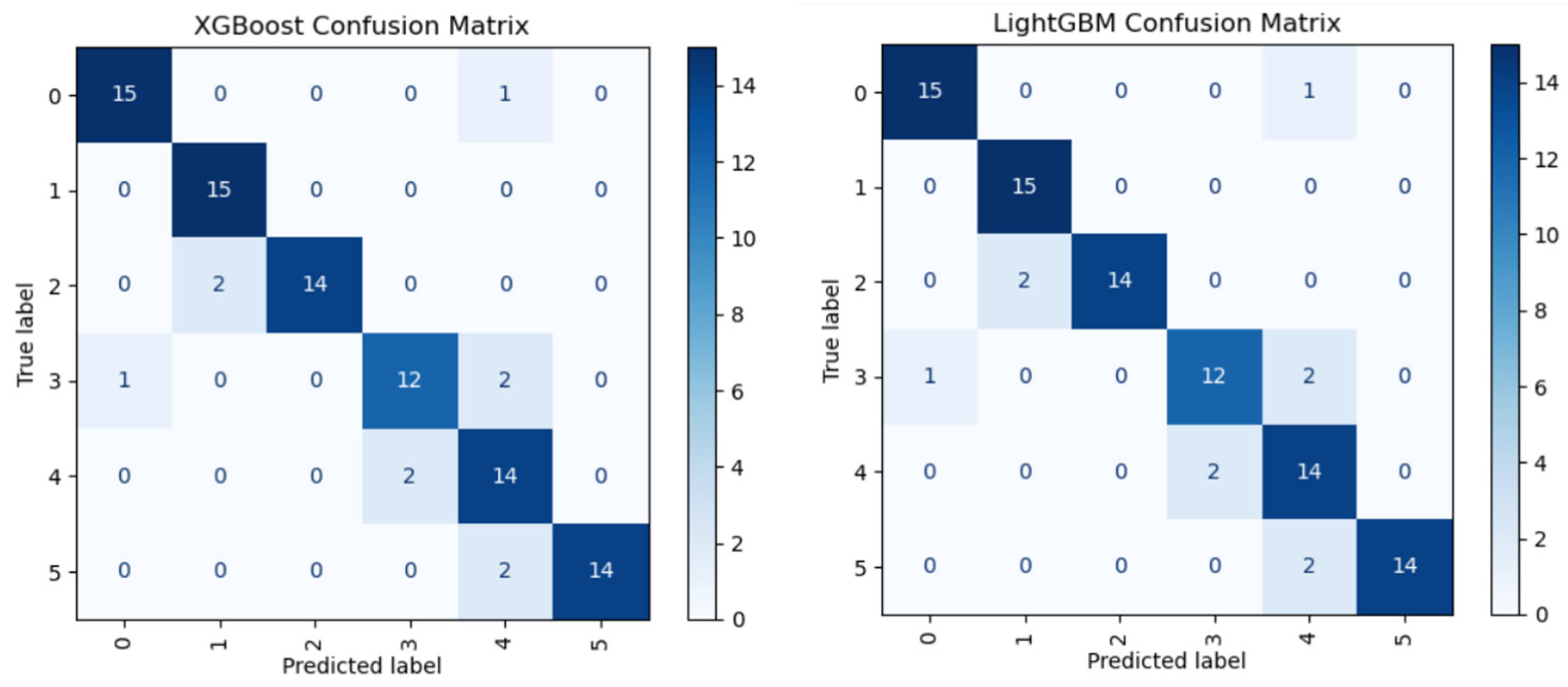

To evaluate the classification performance, confusion matrices for the XGBoost and LightGBM models are presented in Figure 5. Both models achieved high overall accuracy (~93%), with strong true positive rates and relatively low FP and FN across ransomware families.

XGBoost demonstrated consistent detection accuracy, confirming its robustness in distinguishing between families. Similarly, LightGBM maintained a high level of precision, with misclassifications scattered rather than concentrated, indicating good discriminative capability. The primary limitation remains reducing false negatives, as undetected ransomware can delay response and recovery efforts.
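A confusion matrix and the per-family false negative counts discussed above can be derived directly from true and predicted labels, as in the following sketch. The labels are toy data and do not reproduce Figure 5.

```python
def confusion_matrix(y_true, y_pred, classes):
    """Rows are true classes, columns are predicted classes."""
    index = {c: i for i, c in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix


def false_negatives(matrix):
    """Samples of each true class that were assigned to any other class."""
    return [sum(row) - row[i] for i, row in enumerate(matrix)]


# Toy labels (hypothetical).
classes = ["Benign", "CryptLocker", "Locker"]
y_true = ["Benign", "CryptLocker", "CryptLocker", "Locker", "Locker", "Locker"]
y_pred = ["Benign", "Locker", "CryptLocker", "Locker", "CryptLocker", "Locker"]
cm = confusion_matrix(y_true, y_pred, classes)
fn = false_negatives(cm)
```

Off-diagonal cells show which pairs of families are confused with each other, which an aggregate accuracy figure cannot reveal.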

4.2. DL for Benchmark Dataset

We applied DL models to the benchmark dataset to evaluate their effectiveness in classifying ransomware families. As shown in Table 7, this step enables a direct comparison between the performance of traditional ML algorithms and DL approaches, highlighting differences in accuracy, precision, recall, and F1-score across all classes.

The evaluation of the benchmark dataset demonstrates that several ransomware families achieved high detection rates, confirming the effectiveness of the classification model. CryptLocker, Locker, and Trojan-Ransom exhibited strong recall values of 0.8571, 0.8381, and 0.8381, respectively, indicating the model’s ability to correctly identify most samples from these families. CryptoWall and Kollah achieved balanced detection performance with precision values of 0.7953 and 0.8240, showing that the model can accurately detect these families while maintaining consistent classification results. The best overall balanced performance was observed for Matsnu (F1 = 0.9561), Reveton (F1 = 0.9390), and Kovter (F1 = 0.9041), suggesting that the model can achieve both high detection rates and accurate classification across different ransomware families.

Despite these promising results, several limitations should be noted. Some families, such as CryptLocker, Locker, and Trojan-Ransom, showed relatively lower recall values compared to others, indicating challenges in generalizing the model across diverse behavioral patterns. This limitation may be partly attributed to the reliance on API call features alone, as adversaries frequently use obfuscation techniques, code packing, and anti-analysis strategies such as anti-sandboxing to evade detection. Moreover, the temporal sequence of API calls, rather than their mere frequency, can play a crucial role in differentiating between families, and the current model may not fully exploit this aspect. Therefore, while the model demonstrates robustness across various risk levels, additional strategies, such as incorporating sequential patterns and dynamic behavioral features, are recommended to enhance detection, particularly for high- and medium-severity ransomware families.

Furthermore, the detection time of approximately 0.42 s per sample demonstrates that the system is highly efficient and suitable for near real-time ransomware identification, making it practical for deployment in operational cybersecurity environments.

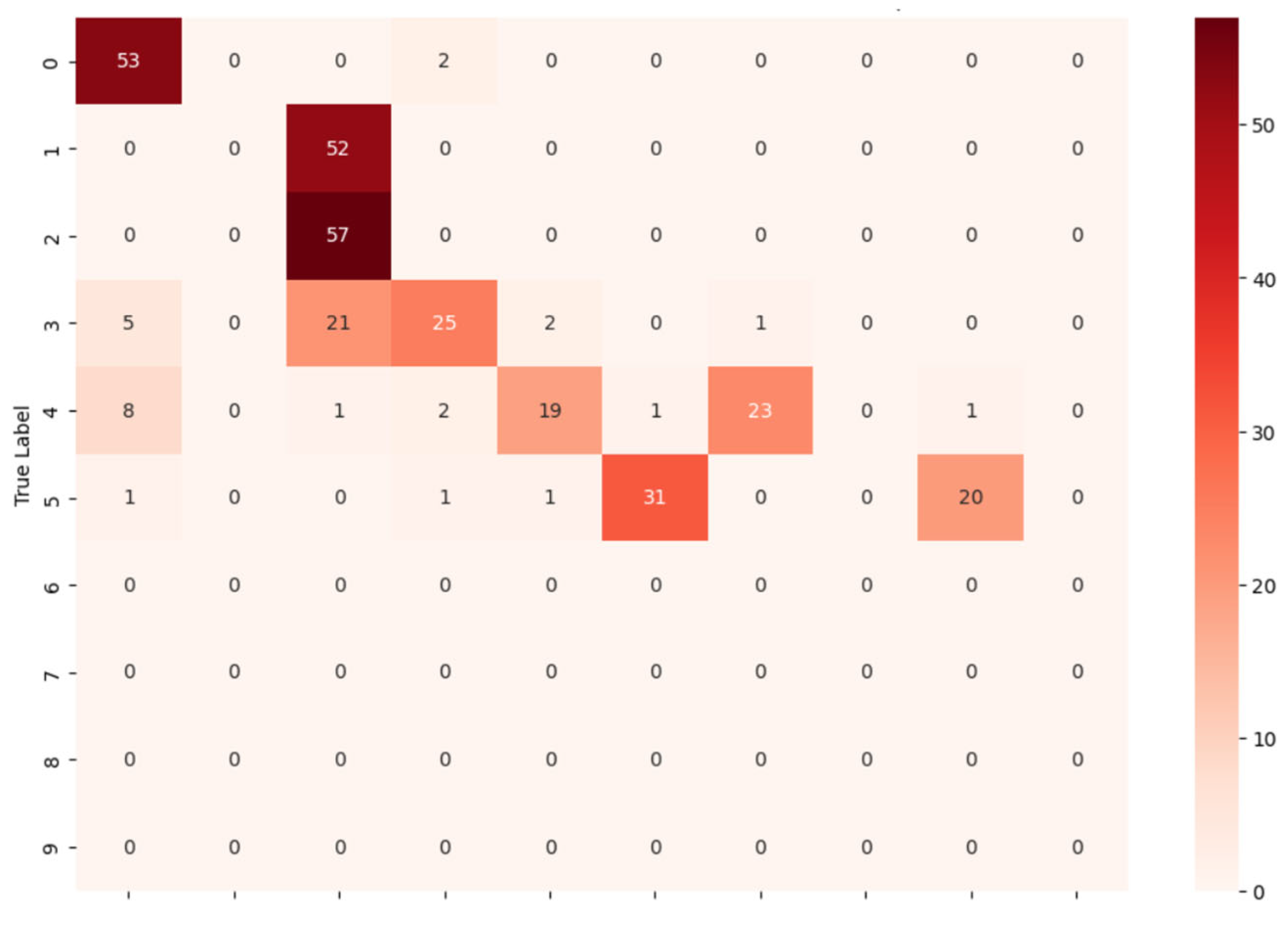

Figure 6 presents the confusion matrix for the DNN model, summarizing its classification performance across ransomware families.

Comparison Between ML and DL on the Benchmark Dataset

For the benchmark dataset, the ML algorithms, particularly XGBoost and LightGBM, achieved higher precision, recall, and F1-scores compared to the DL model, while also requiring significantly less computational time. Although the DL model (DNN) demonstrated strong detection capabilities and potential for scalability, it was less efficient for this benchmark dataset due to its relatively small size and limited complexity.

4.3. ML for Custom Dataset

The custom dataset consists of several ransomware families, including Darkside (74 samples), Bad Rabbit (81 samples), CryptLocker, Locker, and Reveton, as well as benign programs. For each family, a total of 1442 API call features were extracted using dynamic analysis platforms to capture comprehensive behavioral patterns.

Table 8 lists the labels and severity levels assigned to each ransomware family and benign category, while Table 9 presents the performance results of XGBoost, LightGBM, SVM, and KNN models.

Results of Severity Level

The evaluation of the benchmark dataset shows that several ransomware families, such as CryptLocker, Locker, and Trojan-Ransom, achieved high detection rates, confirming the effectiveness of the classification model. CryptoWall and Kollah demonstrated balanced performance, while Matsnu, Reveton, and Kovter achieved the best overall balanced performance, reflecting the model’s ability to adapt to different risk levels.

However, some limitations should be noted. Certain families, such as CryptLocker, Locker, and Trojan-Ransom, exhibited relatively lower recall, indicating challenges in generalizing the model across diverse behavioral patterns. This limitation can be partly attributed to relying solely on API call features, while attackers may employ obfuscation techniques, code packing, and anti-analysis strategies. Moreover, the temporal sequence of API calls could improve differentiation between families, which the current model does not fully exploit.

When classifying ransomware families by risk level, modern ensemble algorithms such as XGBoost and LightGBM achieved the highest precision, recall, and F1 scores while maintaining low execution times.

Figure 7 presents the confusion matrices for XGBoost and LightGBM. Both models achieved high true positive rates and few false positives (FPs) across families, confirming their robustness in detecting and distinguishing ransomware variants. However, certain families such as CryptLocker showed slightly lower true positive rates, indicating ongoing challenges in detecting high-risk or obfuscated samples. Reducing false negatives (FNs) remains an important direction for improving detection reliability in future work.

4.4. DL for Custom Dataset

The DNN model was applied to the custom dataset to evaluate its performance in classifying ransomware families. As shown in Table 10, the results highlight the model’s precision, recall, F1-score, and execution time.

The DNN model achieved strong performance across most ransomware families with a low execution time of 4.45 s, demonstrating its suitability for practical detection systems. For example, the Darkside family achieved 88.24% precision and 100% recall with a balanced F1-score of 0.9375, while Bad Rabbit reached 100% precision and 87.5% recall. Reveton showed excellent results with 100% precision, 93.75% recall, and an F1-score of 0.9677. In contrast, some families, such as CryptLocker (85.71% precision, 80% recall) and Locker (73.33% precision, 68.75% recall), exhibited weaker performance, highlighting challenges in detecting all true positives. These limitations may stem from reliance on API call features alone, which can be circumvented by obfuscation, code packing, and anti-analysis techniques. Incorporating temporal sequences of API calls or dynamic behavioral features could further improve detection, particularly for these more challenging families.
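The F1-scores quoted above follow directly from the reported precision and recall, since F1 is their harmonic mean. The short check below recomputes them; it is only an arithmetic illustration using the percentages stated in the text, and the names `f1_score` and `reported` are introduced here for the example.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, both given as fractions in [0, 1]."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs as quoted in the text for the DNN on the custom dataset.
reported = {
    "Darkside": (0.8824, 1.0),
    "Bad Rabbit": (1.0, 0.875),
    "Reveton": (1.0, 0.9375),
    "CryptLocker": (0.8571, 0.80),
    "Locker": (0.7333, 0.6875),
}

f1_by_family = {name: f1_score(p, r) for name, (p, r) in reported.items()}

for name, f1 in f1_by_family.items():
    print(f"{name}: F1 = {f1:.4f}")
```

The recomputed values for Darkside and Reveton agree with the 0.9375 and 0.9677 given in the text, and the same formula yields the F1-scores for the families whose F1 is not quoted.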

Following the evaluation of performance metrics, Figure 8 presents the confusion matrix for the DNN model, which achieved the best overall performance among the tested algorithms. The model demonstrated consistently high precision and recall across ransomware families, particularly excelling in detecting high-risk samples such as Darkside, with perfect recall and few false positives. These results confirm that the DNN effectively captures complex nonlinear API patterns and maintains strong generalization, achieving an average prediction time of approximately 4.45 s per sample, suitable for near real-time ransomware detection in operational environments.

Comparison Between ML and DL on the Custom Dataset

For the custom dataset, XGBoost and LightGBM delivered the best and most balanced performance in ransomware detection, combining high precision and strong recall with relatively few FPs and FNs. In contrast, SVM and DNN showed promising but less stable results, requiring further tuning to handle small datasets or complex ransomware families more effectively. Although KNN offers faster computation and simplicity, its higher FP rate makes it less reliable for detecting high-risk ransomware, as it may lead to inaccurate alerts.

4.5. Model Generalization

To evaluate the generalization capability of the proposed models, we conducted cross-dataset experiments where training was performed on one dataset and testing on the other, and vice versa. The two datasets originated from distinct dynamic analysis platforms, ensuring diversity in behavioral data and potential variations in extracted features. To maintain compatibility, only common API call features were retained, and dimensionality reduction was applied using Truncated Singular Value Decomposition (TruncatedSVD), which is suitable for sparse representations such as TF-IDF-transformed API calls.
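The feature-alignment step described above can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the function name `align_to_common_features`, the dictionary-per-sample layout, and the toy counts are all assumptions made for the example. In practice the aligned matrices would then be TF-IDF weighted and reduced with TruncatedSVD, which is omitted here.

```python
from typing import Dict, List, Tuple


def align_to_common_features(
    source: List[Dict[str, float]],
    target: List[Dict[str, float]],
) -> Tuple[List[str], List[List[float]], List[List[float]]]:
    """Keep only API names seen in both datasets and emit column-aligned rows."""
    source_vocab = {api for row in source for api in row}
    target_vocab = {api for row in target for api in row}
    common = sorted(source_vocab & target_vocab)  # fixed, shared column order

    def to_rows(rows: List[Dict[str, float]]) -> List[List[float]]:
        # APIs missing from a sample get a zero count.
        return [[row.get(api, 0.0) for api in common] for row in rows]

    return common, to_rows(source), to_rows(target)


# Hypothetical per-sample API call counts from two different sandboxes.
benchmark_rows = [
    {"NtWriteFile": 3, "NtOpenKey": 1, "CreateThread": 2},
    {"NtWriteFile": 1, "UuidCreate": 4},
]
custom_rows = [
    {"NtWriteFile": 5, "CreateThread": 1, "RegSetValueExW": 2},
]

common, bench_matrix, custom_matrix = align_to_common_features(benchmark_rows, custom_rows)
print(common)
print(bench_matrix)
print(custom_matrix)
```

Restricting both datasets to the shared vocabulary guarantees that a model trained on one matrix sees the same columns, in the same order, when it is evaluated on the other.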

Three gradient boosting algorithms—XGBoost, CatBoost, and LightGBM—were evaluated. Hyperparameters were optimized via GridSearchCV with macro-F1 as the objective metric, and SMOTE oversampling was incorporated to address class imbalance. Two evaluation scenarios were considered: (1) no fine-tuning, where the model trained on the source dataset was directly evaluated on the target dataset, and (2) fine-tuning, where a small portion of the target dataset was included in training before final evaluation.
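The two evaluation scenarios differ only in how the target dataset is partitioned. The sketch below shows one plausible way to do this with a per-class (stratified) split; the helper name `split_target_for_finetuning`, the stratification, and the toy labels are assumptions for illustration, and the source does not state how the target portion was sampled. A fraction of 0 corresponds to the no-fine-tuning scenario.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple


def split_target_for_finetuning(
    labels: Sequence[Hashable], fraction: float, seed: int = 0
) -> Tuple[List[int], List[int]]:
    """Return (finetune_idx, eval_idx) for the target dataset.

    A `fraction` of each class is moved into training; the rest is held out
    for the final evaluation.
    """
    if not 0.0 <= fraction < 1.0:
        raise ValueError("fraction must be in [0, 1)")
    rng = random.Random(seed)
    by_class: Dict[Hashable, List[int]] = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    finetune_idx: List[int] = []
    eval_idx: List[int] = []
    for indices in by_class.values():
        rng.shuffle(indices)
        k = int(round(fraction * len(indices)))
        k = min(k, len(indices) - 1)  # always keep at least one sample for evaluation
        finetune_idx.extend(indices[:k])
        eval_idx.extend(indices[k:])
    return sorted(finetune_idx), sorted(eval_idx)


# Hypothetical target labels; the counts are illustrative only.
target_labels = ["Reveton"] * 10 + ["Darkside"] * 10 + ["benign"] * 20
finetune_idx, eval_idx = split_target_for_finetuning(target_labels, fraction=0.3)
print(len(finetune_idx), len(eval_idx))
```

The indices in `finetune_idx` would be appended to the source training set, while `eval_idx` stays untouched until the final evaluation, so no target sample is used for both purposes.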

Table 11 summarizes the performance comparison of XGBoost and LightGBM, and the confusion matrix in Figure 9 demonstrates that model performance improves significantly with fine-tuning. The best results are achieved when 30% of the target data is incorporated. This indicates that even a small portion of target data can substantially enhance model adaptability and robustness across heterogeneous datasets, thereby confirming bidirectional generalization capability.

4.5.1. Experiment Direction: Custom→Benchmark

When trained on the custom dataset and tested on the benchmark dataset, XGBoost achieved strong performance for Reveton (0.97) and CryptLocker (0.68), while struggling with CryptoWall (0.14) and Kollah (0.11). LightGBM followed a similar trend, showing moderate improvements for CryptLocker (0.63) and Matsnu (0.55), but consistently low precision for small or behaviorally similar families. These results indicate that benchmark families not well represented in the custom dataset pose challenges, although distinct families such as Reveton and CryptLocker remain reliably identifiable.

4.5.2. Experiment Direction: Benchmark→Custom

In the reverse direction, both XGBoost and LightGBM achieved high precision for Reveton (0.97 and 0.92, respectively) and CryptLocker (0.90 and 0.93). However, performance dropped significantly for Bad Rabbit (0) and Darkside (0.48), reflecting either insufficient representation in the benchmark dataset or distinct behavioral differences across environments. These findings suggest that while the benchmark dataset supports robust generalization for well-represented families, it fails to capture unseen or rare families effectively.

4.5.3. Model Comparison

Across both directions, XGBoost achieved higher peak performance for distinct families but exhibited greater variability for less common ones, while LightGBM delivered more consistent results across intermediate categories such as Matsnu and Locker. Overall, both models demonstrated strong generalization for families with distinctive features but struggled with families of overlapping behaviors or limited representation.

The results highlight several key observations regarding model performance and dataset influence. First, fine-tuning with 30% of the test set significantly improved precision compared with experiments without fine-tuning or with smaller fractions, underscoring the importance of incorporating new samples to enhance generalization. Second, families with clear and distinctive features, such as Reveton and CryptLocker, were consistently well recognized, whereas less common or behaviorally similar families, including Bad Rabbit, CryptoWall, and Kollah, exhibited lower precision. Third, the direction of training also played a critical role: transferring knowledge from Benchmark → Custom generally provided better generalization when the benchmark dataset adequately represented the full distribution of families; however, performance declined for unseen families. Conversely, the Custom → Benchmark direction posed greater challenges due to the limited coverage of the custom dataset. Overall, introducing 30% of test samples during fine-tuning proved particularly effective in improving precision for new or imbalanced families, demonstrating the practical benefit of selective dataset augmentation in advancing multi-class malware classification.

4.5.4. Analysis of the Model’s Confusion Matrix (Benchmark→Custom)

High accuracy for very high-risk families: Critroni, CryptLocker, and CryptoWall were mostly correctly classified (52/52, 57/57, 25/26), reflecting distinctive API call patterns.

Misclassification in medium- and high-risk families: Kollah and Kovter showed moderate confusion due to similar behavioral traits. For example, 23 Kollah samples were misclassified as Kovter, and Kovter samples were sometimes misclassified as Kollah or Reveton.

Underrepresented or low-confidence families: Locker, Matsnu, Reveton, and Trojan-Ransom had limited correct identification, likely due to small sample sizes in training/testing.

Directionality and dataset effect: Training on the larger, more diverse benchmark dataset improved generalization to the custom dataset compared to the reverse scenario. The model performed particularly well in classifying very high-risk families such as Critroni, CryptLocker, and CryptoWall, owing to their distinctive behavioral signatures. In contrast, families with similar patterns or limited sample representation—including Kollah, Kovter, Reveton, Locker, Matsnu, and Trojan-Ransom—showed higher misclassification rates. These findings underscore the importance of increasing sample sizes for medium- and high-risk families and adopting more advanced feature extraction techniques, such as sequential API call analysis, rather than relying solely on frequency-based features.
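The kind of reading applied to the confusion matrix above (per-family hit rates and the most frequently confused pair) can be automated. The sketch below is a minimal illustration on a toy matrix with generic class names and made-up counts; it does not reproduce the matrix from this study, and the function names are introduced only for the example.

```python
from typing import Dict, List, Optional, Tuple


def per_class_metrics(
    matrix: List[List[int]], labels: List[str]
) -> Dict[str, Tuple[float, float]]:
    """Map each label to (precision, recall); rows are true classes, columns are predictions."""
    metrics = {}
    for i, label in enumerate(labels):
        tp = matrix[i][i]
        actual = sum(matrix[i])                    # row sum: samples truly in this class
        predicted = sum(row[i] for row in matrix)  # column sum: samples assigned to this class
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        metrics[label] = (precision, recall)
    return metrics


def most_confused_pair(
    matrix: List[List[int]], labels: List[str]
) -> Optional[Tuple[str, str, int]]:
    """Return (true_label, predicted_label, count) for the largest off-diagonal cell."""
    best = None
    for i, row in enumerate(matrix):
        for j, count in enumerate(row):
            if i != j and (best is None or count > best[2]):
                best = (labels[i], labels[j], count)
    return best


# Toy matrix with hypothetical counts, for illustration only.
toy_labels = ["family_a", "family_b", "family_c"]
toy_matrix = [
    [50, 0, 0],
    [2, 30, 8],
    [0, 5, 15],
]

toy_metrics = per_class_metrics(toy_matrix, toy_labels)
toy_worst = most_confused_pair(toy_matrix, toy_labels)
print(toy_metrics)
print(toy_worst)
```

In this toy case `family_a` is always recognized, while `family_b` is most often mistaken for `family_c`, the same pattern of reasoning used above for Kollah and Kovter.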

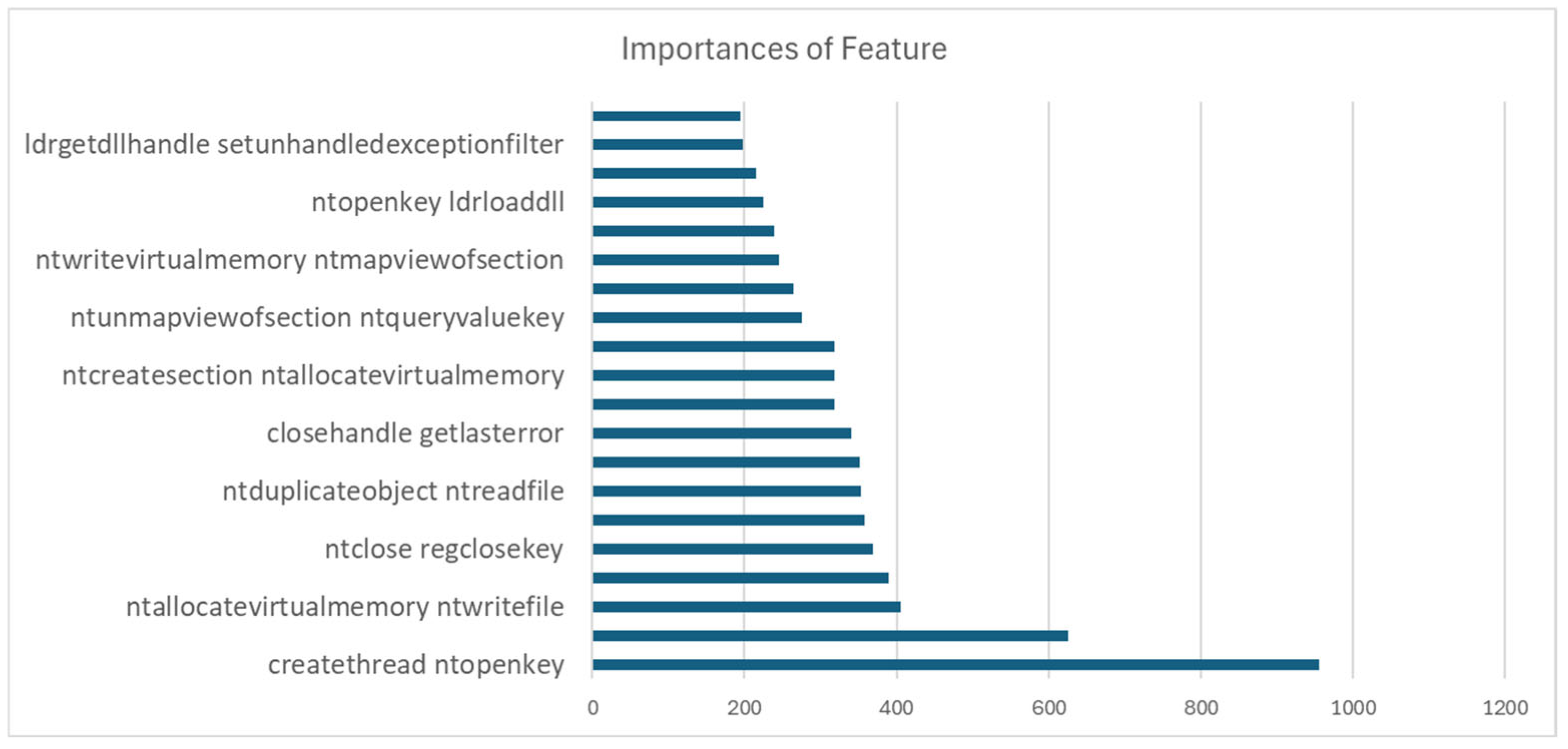

The most influential features extracted from the LightGBM model were key API calls such as CreateThread and NtOpenKey (importance = 956), GetSystemTimeAsFileTime and UuidCreate (importance = 625), and NtAllocateVirtualMemory and NtWriteFile (importance = 405). These features capture essential malware behaviors in Windows systems, including thread creation, registry access, memory management, file writing, and temporal operations for encryption or internal tracking. By modeling these distinctive patterns, the features account for the model’s ability to differentiate ransomware families.

Figure 10 illustrates a behavioral map of these features, providing quantitative and qualitative insights to improve future models and enhance ransomware detection accuracy.

4.5.5. Comparison with Recent Studies

The current study differs from most recent research in several key aspects. While many prior studies have focused on malware classification from specific perspectives or single data representations, our approach integrates multiple analytical domains to evaluate the overall performance across diverse datasets and learning techniques. This holistic design enables a more comprehensive understanding of ransomware family behavior.

Most recent studies relied primarily on binary feature representation, which, although efficient, tends to overlook the semantic and behavioral relationships among API call sequences. In contrast, our study adopted a TF–IDF–based representation for API call features. This approach provided richer contextual information and enhanced the model’s ability to distinguish between similar ransomware behaviors, leading to more interpretable and flexible feature extraction.
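The difference between the two representations can be seen on a toy example. The sketch below uses a plain TF-IDF variant (term frequency normalized by trace length, inverse document frequency as ln(N/df)); this is an assumption for illustration, and library implementations, including the one used in this study, may apply smoothing or normalization that changes the exact weights. The traces are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List


def binary_features(trace: List[str]) -> Dict[str, int]:
    """Presence/absence of each API call in a single trace."""
    return {api: 1 for api in set(trace)}


def tfidf_features(traces: List[List[str]]) -> List[Dict[str, float]]:
    """Unsmoothed TF-IDF weights: (count / trace length) * ln(N / document frequency)."""
    n_docs = len(traces)
    doc_freq = Counter(api for trace in traces for api in set(trace))
    weighted = []
    for trace in traces:
        counts = Counter(trace)
        total = len(trace)
        if total == 0:
            weighted.append({})
            continue
        weighted.append(
            {api: (c / total) * math.log(n_docs / doc_freq[api]) for api, c in counts.items()}
        )
    return weighted


# Hypothetical traces: the first two call the same APIs in different proportions.
traces = [
    ["NtOpenKey", "NtWriteFile", "NtWriteFile", "NtWriteFile"],
    ["NtOpenKey", "NtOpenKey", "NtOpenKey", "NtWriteFile"],
    ["CreateThread", "UuidCreate"],
]

weights = tfidf_features(traces)
print(binary_features(traces[0]) == binary_features(traces[1]))
print(weights[0])
print(weights[1])
```

The first two traces are indistinguishable under the binary representation, whereas their TF-IDF vectors differ because the write-heavy trace puts more weight on `NtWriteFile` and the registry-heavy trace on `NtOpenKey`.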

Additionally, the proposed model was evaluated for its generalization capability, which achieved a reasonable level of performance given the limited and noisy dataset. This demonstrates the robustness of the approach, particularly in scenarios where data are obfuscated or partially corrupted.

At the ransomware family classification level, our framework included different severity levels of ransomware families, allowing for a finer-grained analysis of family-specific behaviors. Most ransomware families were effectively classified, except for two families that showed reduced accuracy due to advanced obfuscation and evasion techniques. This finding highlights the continuing challenge of detecting and classifying highly obfuscated malware, which will be further addressed in future work by expanding the dataset and applying transformer-based models under cloud-based training environments.

4.5.6. Threats to Validity

Data Diversity and Size: The dataset used in this study is relatively small (1500 samples), which may limit the generalizability of the results across all ransomware families or real-world conditions. Moreover, the distribution of families is not fully balanced, which could influence model accuracy for certain classes.

Cross-System and Windows Version Generalization: This study focused on Windows-based systems, where API call patterns may differ across versions. Such variation could restrict the model’s ability to generalize to other versions or operating systems. However, the impact is expected to be limited, as most modern Windows environments share similar architectures and core API behaviors remain consistent. Continuous monitoring of system-level changes that might affect ransomware behavior is nevertheless recommended.

Obfuscation and Anti-Analysis Techniques: Relying solely on API call features exposes the models to evasion methods such as code obfuscation, packing, and anti-sandboxing strategies. These techniques may conceal critical behaviors and reduce the model’s effectiveness against more sophisticated ransomware samples.

Temporal Sequence of API Calls: The current approach considers the frequency of API calls but not their temporal order, which may hinder the model’s ability to distinguish families with similar behavioral profiles. Future models should therefore incorporate sequential or temporal analysis to better capture these relationships.
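The limitation of frequency-only features can be made concrete with two traces that contain the same calls in a different order. The sketch below is illustrative only: the traces are hypothetical, and n-gram counting is just one possible way to encode order, not a method used in this study.

```python
from collections import Counter
from typing import List


def call_frequencies(trace: List[str]) -> Counter:
    """Order-insensitive representation: how often each API call occurs."""
    return Counter(trace)


def call_ngrams(trace: List[str], n: int = 2) -> Counter:
    """Order-sensitive representation: counts of consecutive n-call windows."""
    return Counter(tuple(trace[i:i + n]) for i in range(len(trace) - n + 1))


# Two hypothetical traces with identical calls in reversed order.
trace_a = ["NtOpenKey", "NtAllocateVirtualMemory", "NtWriteFile", "CreateThread"]
trace_b = ["CreateThread", "NtWriteFile", "NtAllocateVirtualMemory", "NtOpenKey"]

same_frequencies = call_frequencies(trace_a) == call_frequencies(trace_b)
same_bigrams = call_ngrams(trace_a) == call_ngrams(trace_b)
print(same_frequencies, same_bigrams)
```

A frequency-based model receives identical inputs for both traces, while the bigram counts separate them, which is the kind of information sequential analysis would add.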

4.5.7. Computational Resource Requirements and Deployment Feasibility

To ensure transparency and reproducibility of the experimental setup, as well as to provide practical insights for real-world implementation, Table 12 summarizes the computational resources used during model development and training.

Furthermore, as ransomware detection frameworks may be deployed in various operational environments, Table 13 outlines recommended deployment scenarios, highlighting suitable configurations and model types for each environment, from lightweight edge systems to large-scale production infrastructures.

5. Limitations and Further Research

This study provides a comprehensive analysis of ransomware family classification using API call data with both ML and DL methodologies. However, several limitations affect the generalizability of the findings.

First, the analysis was constrained by the scarcity of recent ransomware samples. The lack of up-to-date datasets reduces representativeness and limits the model’s ability to generalize to emerging ransomware threats. A small sample size may also result in incomplete patterns of behavior and introduce biases into model performance.

Second, many modern ransomware families can detect virtualized environments such as VirusTotal or Joe Sandbox and may terminate or alter execution once detection occurs. This behavior produces an insufficient number of observable API calls, hindering accurate behavioral analysis. Although Cuckoo Sandbox provides valuable information, its restriction to Windows 7—a deprecated operating system—further limited the analysis. Ransomware often behaves differently on newer systems, thereby reducing the ecological validity of results. Moreover, advanced obfuscation and anti-analysis techniques, including delaying execution, misleading API call sequences, and sandbox-aware adaptations, further complicate efforts to capture genuine operational patterns.

Future research should address these issues by acquiring larger and more diverse datasets of recent ransomware samples. Enhancing monitoring tools to capture a wider range of API interactions across modern operating systems would improve both accuracy and robustness. Additionally, exploring alternative data sources and developing advanced instrumentation for API monitoring could provide deeper insights and further strengthen the classification performance.

Moreover, expanding the framework to other operating systems, such as Linux, macOS, and Android, would help evaluate its generalizability and adaptability beyond Windows environments, offering a broader perspective on ransomware behavior across platforms.

6. Conclusions

This study classified ransomware families based on API call analysis and systematically compared the performance of ML and DL models. By reducing the number of families while increasing the sample size, we were able to capture more representative behavioral patterns. Families with clear patterns, such as Critroni, CryptLocker, and CryptoWall, achieved the highest accuracy, while families with overlapping behaviors or limited samples showed lower performance. This highlights the strong correlation between risk level and classification accuracy, underscoring the importance of data quality and balanced representation.

Experimental results demonstrated that XGBoost and LightGBM achieved 84–100% accuracy, particularly excelling at detecting high-risk families. The proposed classification framework can be integrated into endpoint detection and response (EDR) systems, intrusion detection systems (IDSs), and malware sandboxes to provide automated family-level detection. By linking classification outcomes to risk levels, this framework supports security teams and forensic investigators in prioritizing high-risk threats and accelerating incident response.

Overall, this study contributes to the classification of recent ransomware families and offers practical implications for enhancing both prevention and forensic investigation. Future work should focus on acquiring larger and more diverse datasets, incorporating alternative monitoring sources, and applying advanced feature extraction techniques to further improve generalization and robustness.