AI-Augmented SOC: A Survey of LLMs and Agents for Security Automation

Abstract

1. Introduction

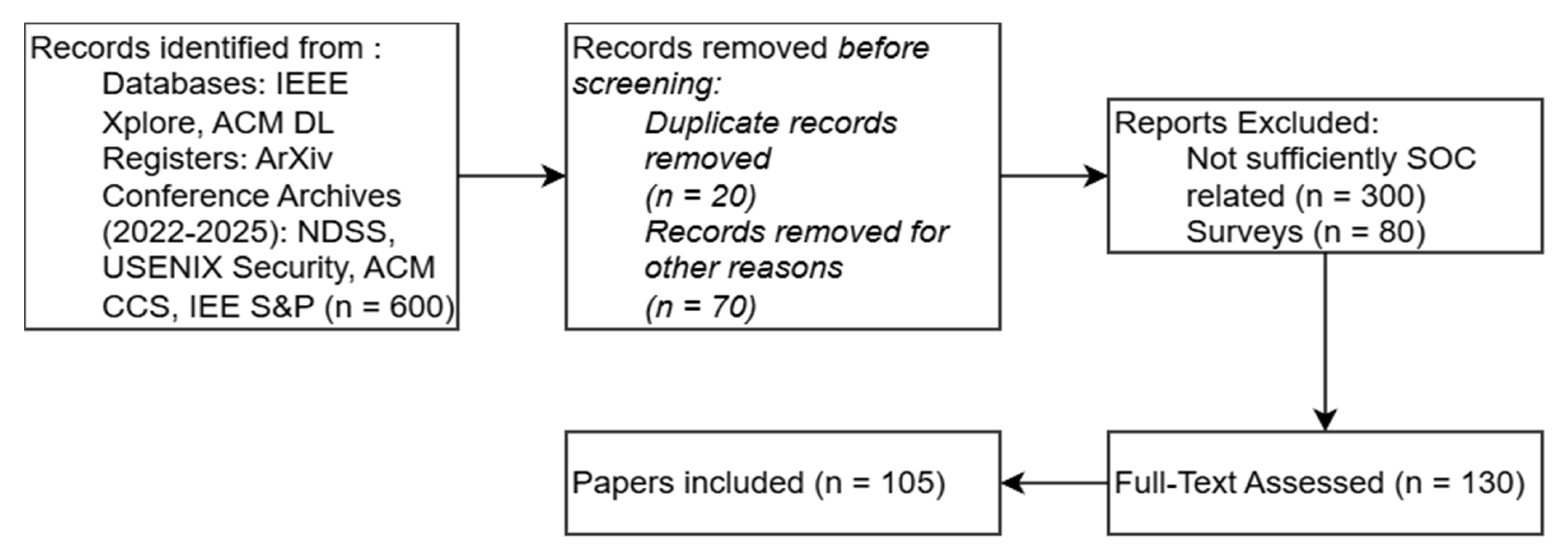

2. Methodology

3. Integration of AI Agents and LLMs in Security Operations Center Tasks

3.1. Log Summarization

3.2. Alert Triage

3.3. Threat Intelligence

3.4. Ticket Handling

3.5. Incident Response

3.6. Report Generation

3.7. Asset Discovery and Management

3.8. Vulnerability Management

4. Capability Maturity Model

4.1. Relevance Compared to SOC-CMM

4.2. Validity of Capability Maturity Model

5. Challenges

5.1. Integration Challenges

5.2. Operational Challenges

5.3. Model-Related Challenges

5.4. Data-Related Challenges

5.5. Integrated and Critical Discussion of Challenges

6. Future Directions

7. Threats to Validity

8. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

[1] Binbeshr, F.; Imam, M.; Ghaleb, M.; Hamdan, M.; Rahim, M.A.; Hammoudeh, M. The Rise of Cognitive SOCs: A Systematic Literature Review on AI Approaches. IEEE Open J. Comput. Soc. 2025, 6, 360–379.

[2] Hassanin, M.; Moustafa, N. A Comprehensive Overview of Large Language Models (LLMs) for Cyber Defences: Opportunities and Directions. arXiv 2024, arXiv:2405.14487v1.

[3] Mohsin, A.; Janicke, H.; Ibrahim, A.; Sarker, I.H.; Camtepe, S. A Unified Framework for Human AI Collaboration in Security Operations Centers with Trusted Autonomy. arXiv 2025, arXiv:2505.23397v2.

[4] Chigurupati, M.; Malviya, R.K.; Toorpu, A.R.; Anand, K. AI Agents for Cloud Reliability: Autonomous Threat Detection and Mitigation Aligned with Site Reliability Engineering Principles. In Proceedings of the 2025 IEEE 4th International Conference on AI in Cybersecurity (ICAIC), Houston, TX, USA, 5–7 February 2025; IEEE: Piscataway, NJ, USA, 2025.

[5] Song, C.; Ma, L.; Zheng, J.; Liao, J.; Kuang, H.; Yang, L. Audit-LLM: Multi-Agent Collaboration for Log-based Insider Threat Detection. arXiv 2024, arXiv:2408.08902v1.

[6] Gupta, M.; Akiri, C.; Aryal, K.; Parker, E.; Praharaj, L. From ChatGPT to ThreatGPT: Impact of Generative AI in Cybersecurity and Privacy. IEEE Access 2023, 11, 80218–80245.

[7] IEEE Xplore. IEEE Xplore Digital Library; IEEE: Piscataway, NJ, USA, 2025; Available online: https://ieeexplore.ieee.org/ (accessed on 4 June 2025).

[8] arXiv. arXiv.org e-Print Archive; Cornell University: Ithaca, NY, USA, 2025; Available online: https://arxiv.org/ (accessed on 4 June 2025).

[9] ACM Digital Library. ACM Digital Library; Association for Computing Machinery; Available online: https://dl.acm.org/ (accessed on 4 June 2025).

[10] Zhong, A.; Mo, D.; Liu, G.; Liu, J.; Lu, Q.; Zhou, Q.; Wu, J.; Li, Q.; Wen, Q. LogParser-LLM: Advancing efficient log parsing with large language models. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD ’24), Barcelona, Spain, 25–29 August 2024.

[11] Huang, J.; Jiang, Z.; Chen, Z.; Lyu, M.R. LUNAR: Unsupervised LLM-based Log Parsing. arXiv 2024, arXiv:2406.07174v2.

[12] Balasubramanian, P.; Seby, J.; Kostakos, P. CYGENT: A cybersecurity conversational agent with log summarization powered by GPT-3. arXiv 2024, arXiv:2403.17160.

[13] Liu, X.; Liang, J.; Yan, Q.; Jang, J.; Mao, S.; Ye, M.; Jia, J.; Xi, Z. CyLens: Towards Reinventing Cyber Threat Intelligence in the Paradigm of Agentic Large Language Models. arXiv 2025, arXiv:2502.20791v2.

[14] Ma, Z.; Kim, D.J.; Chen, T.-H.P. LibreLog: Accurate and efficient unsupervised log parsing using open-source large language models. arXiv 2024, arXiv:2408.01585.

[15] Akhtar, S.; Khan, S.; Parkinson, S. LLM-based event log analysis techniques: A survey. arXiv 2025, arXiv:2502.00677.

[16] Ma, Z.; Chen, A.R.; Kim, D.J.; Chen, T.-H.; Wang, S. LLMParser: An Exploratory Study on Using Large Language Models for Log Parsing. In Proceedings of the IEEE/ACM 46th International Conference on Software Engineering, Lisbon, Portugal, 14–20 April 2024; ACM: New York, NY, USA, 2024; pp. 1–13.

[17] Fieblinger, R.; Alam, T.; Rastogi, N. Actionable Cyber Threat Intelligence using Knowledge Graphs and Large Language Models. arXiv 2024, arXiv:2407.02528v1.

[18] Liu, Y.; Tao, S.; Meng, W.; Wang, J.; Ma, W.; Chen, Y.; Zhao, Y.; Yang, H.; Jiang, Y. Interpretable Online Log Analysis Using Large Language Models with Prompt Strategies. In Proceedings of the 32nd IEEE/ACM International Conference on Program Comprehension (ICPC ’24), Lisbon, Portugal, 15–16 April 2024; pp. 35–46.

[19] Gupta, P.; Bhukar, K.; Kumar, H.; Nagar, S.; Mohapatra, P.; Kar, D. LogAn: An LLM-Based Log Analytics Tool with Causal Inferencing. In Proceedings of the 16th ACM/SPEC International Conference on Performance Engineering Companion (ICPE Companion), Toronto, ON, Canada, 5–9 May 2025; pp. 1–3.

[20] Al Siam, A.; Hassan, M.; Bhuiyan, T. Artificial Intelligence for Cybersecurity: A State of the Art. In Proceedings of the 2025 IEEE 4th International Conference on AI in Cybersecurity (ICAIC), Houston, TX, USA, 5–7 February 2025; IEEE: Piscataway, NJ, USA, 2025.

[21] Jiang, Z.; Liu, J.; Chen, Z.; Li, Y.; Huang, J.; Huo, Y.; He, P.; Gu, J.; Lyu, M.R. LILAC: Log Parsing using LLMs with Adaptive Parsing Cache. arXiv 2023, arXiv:2310.01796v3.

[22] Karlsen, E.; Luo, X.; Zincir-Heywood, N.; Heywood, M. Benchmarking Large Language Models for Log Analysis, Security, and Interpretation. arXiv 2023, arXiv:2311.14519v1.

[23] Fayyazi, R.; Taghdimi, R.; Yang, S.J. Advancing TTP Analysis: Harnessing the Power of Large Language Models with Retrieval-Augmented Generation. arXiv 2024, arXiv:2401.00280.

[24] Zhang, H.; Huang, J.; Mei, K.; Yao, Y.; Wang, Z.; Zhan, C.; Wang, H.; Zhang, Y. Agent Security Bench (ASB): Formalizing and Benchmarking Attacks and Defenses in LLM-based Agents. arXiv 2024, arXiv:2410.02644v4.

[25] Liu, X.; Yu, F.; Li, X.; Yan, G.; Yang, P.; Xi, Z. Benchmarking LLMs in an Embodied Environment for Blue Team Threat Hunting. arXiv 2025, arXiv:2505.11901v1.

[26] Loumachi, F.Y.; Ghanem, M.C.; Ferrag, M.A. GenDFIR: Advancing Cyber Incident Timeline Analysis Through Retrieval Augmented Generation and Large Language Models. arXiv 2024, arXiv:2409.02572v4.

[27] Ali, T.; Kostakos, P. HuntGPT: Integrating Machine Learning-Based Anomaly Detection and Explainable AI with Large Language Models (LLMs). arXiv 2023, arXiv:2309.16021.

[28] Cheng, Y.; Bajaber, O.; Tsegai, S.A.; Song, D.; Gao, P. CTINexus: Automatic Cyber Threat Intelligence Knowledge Graph Construction Using LLMs. arXiv 2025, arXiv:2410.21060.

[29] Jalalvand, F.; Chhetri, M.B.; Nepal, S.; Paris, C. Alert Prioritisation in Security Operations Centres: A Systematic Survey on Criteria and Methods. ACM Comput. Surv. 2024, 57, 1–36.

[30] Shah, S.; Parast, F.K. AI-Driven Cyber Threat Intelligence Automation. arXiv 2024, arXiv:2410.20287v1.

[31] Cuong Nguyen, H.; Tariq, S.; Baruwal Chhetri, M.; Quoc Vo, B. Towards Effective Identification of Attack Techniques in Cyber Threat Intelligence Reports Using Large Language Models. In Proceedings of the Companion of the ACM on Web Conference 2025 (WWW Companion’25), Sydney, Australia, 28 April–2 May 2025.

[32] Jin, P.; Zhang, S.; Ma, M.; Li, H.; Kang, Y.; Li, L.; Liu, Y.; Qiao, B.; Zhang, C.; Zhao, P.; et al. Assess and Summarize: Improve Outage Understanding with Large Language Models. arXiv 2023.

[33] de Witt, C.S. Open Challenges in Multi-Agent Security: Towards Secure Systems of Interacting AI Agents. arXiv 2025, arXiv:2505.02077.

[34] Sharma, A.N.; Akbar, K.A.; Thuraisingham, B.; Khan, L. Enhancing Security Insights with KnowGen-RAG: Combining Knowledge Graphs, LLMs, and Multimodal Interpretability. In Proceedings of the 10th ACM International Workshop on Security and Privacy Analytics, Pittsburgh, PA, USA, 6 June 2025; pp. 2–12.

[35] Daniel, N.; Kaiser, F.K.; Giladi, S.; Sharabi, S.; Moyal, R.; Shpolyansky, S.; Murillo, A.; Elyashar, A.; Puzis, R. Labeling NIDS Rules with MITRE ATT&CK Techniques: Machine Learning vs. Large Language Models. arXiv 2024, arXiv:2412.10978.

[36] Froudakis, E.; Avgetidis, A.; Frankum, S.T.; Perdisci, R.; Antonakakis, M.; Keromytis, A. Uncovering Reliable Indicators: Improving IoC Extraction from Threat Reports. arXiv 2025, arXiv:2506.11325.

[37] Alnahdi, A.; Narain, S. Towards Transparent Intrusion Detection: A Coherence-Based Framework in Explainable AI Integrating Large Language Models. In Proceedings of the 2024 IEEE 6th International Conference on Trust, Privacy and Security in Intelligent Systems, and Applications (TPS-ISA), Washington, DC, USA, 28–31 October 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 87–96.

[38] Jain, S.; Gupta, A.; Neha, K. AI Enhanced Ticket Management System for Optimized Support. In Proceedings of the 4th International Conference on AI-ML Systems (AIMLSystems 2024), Baton Rouge, LA, USA, 8–11 October 2024; pp. 1–7.

[39] Pei, C.; Wang, Z.; Liu, F.; Li, Z.; Liu, Y.; He, X.; Kang, R.; Zhang, T.; Chen, J.; Li, J.; et al. Flow-of-Action: SOP Enhanced LLM-Based Multi-Agent System for Root Cause Analysis. In Proceedings of the Companion ACM Web Conf. 2025 (WWW Companion’25), Sydney, NSW, Australia, 28 April–2 May 2025; pp. 1–10.

[40] Chen, Y.; Xie, H.; Ma, M.; Kang, Y.; Gao, X.; Shi, L.; Cao, Y.; Gao, X.; Fan, H.; Wen, M.; et al. Automatic Root Cause Analysis via Large Language Models for Cloud Incidents. arXiv 2023, arXiv:2305.15778v4.

[41] Yang, Y.; Deng, Y.; Xiong, Y.; Li, B.; Xu, H.; Cheng, P. AidAI: Automated Incident Diagnosis for AI Workloads in the Cloud. arXiv 2025, arXiv:2506.01481.

[42] Liu, Z.; Benge, C.; Jiang, S. Ticket-BERT: Labeling incident management tickets with language models. arXiv 2023, arXiv:2307.00108.

[43] Li, C.; Zhu, Z.; He, J.; Zhang, X. RedChronos: A Large Language Model-Based Log Analysis System for Insider Threat Detection in Enterprises. arXiv 2025, arXiv:2503.02702.

[44] Liu, F.; He, X.; Zhang, T.; Chen, J.; Li, Y.; Yi, L.; Zhang, H.; Wu, G.; Shi, R. TickIt: Leveraging Large Language Models for Automated Ticket Escalation. arXiv 2025.

[45] Nong, Y.; Yang, H.; Cheng, L.; Hu, H.; Cai, H. APPATCH: Automated Adaptive Prompting Large Language Models for Real-World Software Vulnerability Patching. In Proceedings of the 2025 Network and Distributed System Security Symposium (NDSS 2025), San Diego, CA, USA, 23–26 February 2025; Internet Society: Fredericksburg, VA, USA, 2025; pp. 1–15.

[46] Lin, J.; Mohaisen, D. Evaluating Large Language Models in Vulnerability Detection Under Variable Context Windows. arXiv 2025.

[47] Lin, X.; Zhang, J.; Deng, G.; Liu, T.; Zhang, T.; Guo, Q.; Chen, R. IRCopilot: Automated Incident Response with Large Language Models. arXiv 2025.

[48] Liu, Z. Multi-Agent Collaboration in Incident Response with Large Language Models. arXiv 2024.

[49] Perrina, F.; Marchiori, F.; Conti, M.; Verde, N.V. AGIR: Automating Cyber Threat Intelligence Reporting with Natural Language Generation. arXiv 2023, arXiv:2310.02655.

[50] Wudali, P.N.; Kravchik, M.; Malul, E.; Gandhi, P.A.; Elovici, Y.; Shabtai, A. Rule-ATT&CK Mapper (RAM): Mapping SIEM Rules to TTPs Using LLMs. arXiv 2025, arXiv:2502.02337.

[51] Goel, D.; Husain, F.; Singh, A.; Ghosh, S.; Parayil, A.; Bansal, C.; Zhang, X.; Rajmohan, S. X-lifecycle Learning for Cloud Incident Management using LLMs. arXiv 2024.

[52] Albanese, M.; Ou, X.; Lybarger, K.; Lende, D.; Goldgof, D. Towards AI-driven human-machine co-teaming for adaptive and agile cyber security operation centers. arXiv 2025, arXiv:2505.06394.

[53] Patel, D.; Lin, S.; Rayfield, J.; Zhou, N.; Vaculin, R.; Martinez, N.; O’Donncha, F.; Kalagnanam, J. AssetOpsBench: Benchmarking AI Agents for Task Automation in Industrial Asset Operations and Maintenance. arXiv 2025, arXiv:2506.03828.

[54] Mitra, S.; Neupane, S.; Chakraborty, T.; Mittal, S.; Piplai, A.; Gaur, M.; Rahimi, S. LocalIntel: Generating organizational threat intelligence from global and local cyber knowledge. arXiv 2025, arXiv:2401.10036.

[55] Chopra, S.; Ahmad, H.; Goel, D.; Szabo, C. ChatNVD: Advancing Cybersecurity Vulnerability Assessment with Large Language Models. arXiv 2025, arXiv:2412.04756.

[56] Rondanini, C.; Carminati, B.; Ferrari, E.; Kundu, A.; Gaudiano, A. Malware Detection at the Edge with Lightweight LLMs: A Performance Evaluation. arXiv 2025.

[57] Jin, Y.; Li, C.; Fan, P.; Liu, P.; Li, X.; Liu, C.; Qiu, W. LLM-BSCVM: An LLM-based blockchain smart contract vulnerability management framework. arXiv 2025, arXiv:2505.17416.

[58] Pasca, E.M.; Delinschi, D.; Erdei, R.; Matei, O. LLM-Driven, Self-Improving Framework for Security Test Automation: Leveraging Karate DSL for Augmented API Resilience. IEEE Access 2025, 13, 56861–56886.

[59] Torkamani, M.J.; Ng, J.; Mehrotra, N.; Chandramohan, M.; Krishnan, P.; Purandare, R. Streamlining security vulnerability triage with large language models. arXiv 2025, arXiv:2501.18908.

[60] Applebaum, A.; Dennler, C.; Dwyer, P.; Moskowitz, M.; Nguyen, H.; Nichols, N.; Park, N.; Rachwalski, P.; Rau, F.; Webster, A.; et al. Bridging Automated to Autonomous Cyber Defense. In Proceedings of the 15th ACM Workshop on Artificial Intelligence and Security, Los Angeles, CA, USA, 11 November 2022; ACM: New York, NY, USA, 2022; pp. 149–159.

[61] Alam, M.T.; Bhusal, D.; Nguyen, L.; Rastogi, N. CTIBench: A Benchmark for Evaluating LLMs in Cyber Threat Intelligence. arXiv 2024.

[62] Xiao, Y.; Le, V.H.; Zhang, H. Stronger, Faster, and Cheaper Log Parsing with LLMs. arXiv 2024, arXiv:2406.06156.

[63] Boffa, M.; Drago, I.; Mellia, M.; Vassio, L.; Giordano, D.; Valentim, R.; Ben Houidi, Z. LogPrécis: Unleashing language models for automated malicious log analysis. Comput. Secur. 2024, 141, 103805.

[64] Khayat, M.; Barka, E.; Serhani, M.A.; Sallabi, F.; Shuaib, K.; Khater, H.M. Empowering Security Operation Center with Artificial Intelligence and Machine Learning—A Systematic Literature Review. IEEE Access 2025, 13, 19162–19194.

[65] Aung, Y.L.; Christian, I.; Dong, Y.; Ye, X.; Chattopadhyay, S.; Zhou, J. Generative AI for Internet of Things Security: Challenges and Opportunities. arXiv 2025, arXiv:2502.08886.

[66] Tran, K.T.; Dao, D.; Nguyen, M.D.; Pham, Q.V.; O’Sullivan, B.; Nguyen, H.D. Multi-Agent Collaboration Mechanisms: A Survey of LLMs. arXiv 2025.

[67] Jin, H.; Papadimitriou, G.; Raghavan, K.; Zuk, P.; Balaprakash, P.; Wang, C.; Mandal, A.; Deelman, E. Large Language Models for Anomaly Detection in Computational Workflows: From Supervised Fine-Tuning to In-Context Learning. arXiv 2024.

[68] Wong, M.Y.; Valakuzhy, K.; Ahamad, M.; Blough, D.; Monrose, F. Understanding LLMs Ability to Aid Malware Analysts in Bypassing Evasion Techniques. In Proceedings of the Companion 26th International Conference on Multimodal Interaction, San Jose, Costa Rica, 4–8 November 2024; ACM: New York, NY, USA, 2024; pp. 36–40.

[69] Chhetri, M.B.; Tariq, S.; Singh, R.; Jalalvand, F.; Paris, C.; Nepal, S. Towards Human-AI Teaming to Mitigate Alert Fatigue in Security Operations Centres. ACM Trans. Internet Technol. 2024, 24, 22.

[70] Freitas, S.; Kalajdjieski, J.; Gharib, A.; McCann, R. AI-Driven Guided Response for Security Operation Centers with Microsoft Copilot for Security. In Proceedings of the Companion ACM Web Conference 2025 (WWW Companion’25), Sydney, NSW, Australia, 28 April–2 May 2025; pp. 1–10.

[71] Kim, M.; Wang, J.; Moore, K.; Goel, D.; Wang, D.; Mohsin, A.; Ibrahim, A.; Doss, R.; Camtepe, S.; Janicke, H. CyberAlly: Leveraging LLMs and Knowledge Graphs to Empower Cyber Defenders. In Companion Proceedings of the ACM on Web Conference 2025, Sydney, NSW, Australia, 28 April–2 May 2025; ACM: New York, NY, USA, 2025; pp. 2851–2854.

[72] Arikkat, D.R.; Abhinav, M.; Binu, N.; Parvathi, M.; Biju, N.; Arunima, K.S.; Vinod, P.; Rafidha Rehiman, K.A.; Conti, M. IntellBot: Retrieval Augmented LLM Chatbot for Cyber Threat Knowledge Delivery. arXiv 2024, arXiv:2411.05442.

[73] Xu, M.; Wang, H.; Liu, J.; Lin, Y.; Liu, C.X.Y.; Lim, H.W.; Dong, J.S. IntelEX: A LLM-driven attack-level threat intelligence extraction framework. arXiv 2024, arXiv:2412.10872.

[74] Chen, M.; Zhu, K.; Lu, B.; Li, D.; Yuan, Q.; Zhu, Y. AECR: Automatic attack technique intelligence extraction based on fine-tuned large language model. Comput. Secur. 2025, 150, 104213.

[75] Paul, S.; Alemi, F.; Macwan, R. LLM-assisted proactive threat intelligence for automated reasoning. arXiv 2025, arXiv:2504.00428.

[76] Ghosh, S.K.; Gjomemo, R.; Venkatakrishnan, V.N. Citar: Cyberthreat Intelligence-driven Attack Reconstruction. In Proceedings of the Fifteenth ACM Conference on Data and Application Security and Privacy, Pittsburgh, PA, USA, 19 June 2024; ACM: New York, NY, USA, 2024; pp. 245–256.

[77] Las-Casas, P.; Kumbhare, A.G.; Fonseca, R.; Agarwal, S. LLexus: An AI agent system for incident management. SIGOPS Oper. Syst. Rev. 2024, 58, 23–36.

[78] Hays, S.; White, J. Employing LLMs for Incident-Response Planning and Review. arXiv 2024, arXiv:2403.01271.

[79] Liu, Z. AutoBnB: Multi-Agent Incident Response with Large Language Models. In Proceedings of the 2025 13th International Symposium on Digital Forensics and Security (ISDFS), Boston, MA, USA, 24–25 April 2025; IEEE: Piscataway, NJ, USA, 2025; pp. 1–6.

[80] Sun, Y.; Luo, Y.; Wen, X.; Yuan, Y.; Nie, X.; Zhang, S.; Liu, T.; Luo, X. TrioXpert: An Automated Incident Management Framework for Microservice Systems. arXiv 2025, arXiv:2506.10043.

[81] Kramer, D.; Rosique, L.; Narotam, A.; Bursztein, E.; Kelley, P.G.; Thomas, K.; Woodruff, A. Integrating Large Language Models into Security Incident Response. In Proceedings of the Twenty-First Symposium on Usable Privacy and Security (SOUPS 2025), Seattle, WA, USA, 11–12 August 2025; USENIX Association: Berkeley, CA, USA, 2025; pp. 133–144.

[82] Singh, R.; Chhetri, M.B.; Nepal, S.; Paris, C. ContextBuddy: AI-Enhanced Contextual Insights for Security Alert Investigation. arXiv 2025, arXiv:2506.09365.

[83] Jensen, R.I.T.; Tawosi, V.; Alamir, S. Software Vulnerability and Functionality Assessment using LLMs. arXiv 2024.

[84] Lian, X.; Chen, Y.; Cheng, R.; Huang, J.; Thakkar, P.; Zhang, M.; Xu, T. Configuration Validation with Large Language Models. arXiv 2023.

[85] Sheng, Z.; Wu, F.; Zuo, X.; Li, C.; Qiao, Y.; Hang, L. LProtector: An LLM-driven Vulnerability Detection System. arXiv 2024.

[86] Xu, M.; Fan, J.; Huang, X.; Zhou, C.; Kang, J.; Niyato, D.; Mao, S.; Han, Z.; Shen, X.; Lam, K.Y.; et al. Forewarned is Forearmed: A Survey on Large Language Model-based Agents in Autonomous Cyberattacks. arXiv 2025.

[87] Wang, D.; Zhou, G.; Chen, L.; Li, D.; Miao, Y. ProphetFuzz: Fully Automated Prediction and Fuzzing of High-Risk Option Combinations with Only Documentation via Large Language Model. In Proceedings of the 2024 on ACM SIGSAC Conference on Computer and Communications Security, Salt Lake City, UT, USA, 14–18 October 2024; ACM: New York, NY, USA, 2024; pp. 735–749.

[88] Toprani, D.; Madisetti, V.K. LLM Agentic Workflow for Automated Vulnerability Detection and Remediation in Infrastructure-as-Code. IEEE Access 2025, 13, 69175–69190.

[89] Wang, X.; Tian, Y.; Huang, K.; Liang, B. Practically implementing an LLM-supported collaborative vulnerability remediation process: A team-based approach. Comput. Secur. 2025, 148, 104113.

[90] Beck, V.; Landauer, M.; Wurzenberger, M.; Skopik, F.; Rauber, A. System Log Parsing with Large Language Models: A Review. arXiv 2025, arXiv:2504.04877.

[91] Kshetri, N.; Voas, J. Agentic Artificial Intelligence for Cyber Threat Management. Computer 2025, 58, 86–90.

[92] Massengale, S.; Huff, P. Linking Threat Agents to Targeted Organizations: A Pipeline for Enhanced Cybersecurity Risk Metrics. In Proceedings of the 2024 4th Intelligent Cybersecurity Conference (ICSC), Valencia, Spain, 17–20 September 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 132–141.

[93] Shukla, A.; Gandhi, P.A.; Elovici, Y.; Shabtai, A. RuleGenie: SIEM Detection Rule Set Optimization. arXiv 2025.

[94] Fu, Y.; Yuan, X.; Wang, D. RAS-Eval: A Comprehensive Benchmark for Security Evaluation of LLM Agents in Real-World Environments. arXiv 2025.

[95] Hamadanian, P.; Arzani, B.; Fouladi, S.; Kakarla, S.K.R.; Fonseca, R.; Billor, D.; Cheema, A.; Nkposong, E.; Chandra, R. A Holistic View of AI-Driven Network Incident Management. In Proceedings of the 22nd ACM Workshop on Hot Topics in Networks (HotNets ’23), Cambridge, MA, USA, 28–29 November 2023; pp. 1–9.

[96] Bono, J.; Grana, J.; Xu, A. Generative AI and Security Operations Center Productivity: Evidence from Live Operations. arXiv 2024.

[97] Gandhi, P.A.; Shukla, A.; Tayouri, D.; Ifland, B.; Elovici, Y.; Puzis, R.; Shabtai, A. ATAG: AI-Agent Application Threat Assessment with Attack Graphs. arXiv 2025, arXiv:2506.02859.

[98] Bountakas, P.; Fysarakis, K.; Kyriakakis, T.; Karafotis, P.; Aristeidis, S.; Tasouli, M.; Alcaraz, C.; Alexandris, G.; Andronikou, V.; Koutsouri, T.; et al. SYNAPSE—An Integrated Cyber Security Risk & Resilience Management Platform, With Holistic Situational Awareness, Incident Response & Preparedness Capabilities: SYNAPSE. In Proceedings of the 19th International Conference on Availability, Reliability and Security, Vienna, Austria, 30 July–2 August 2024; ACM: New York, NY, USA, 2024; pp. 1–10.

[99] Sarkar, A.; Sarkar, S. Survey of LLM Agent Communication with MCP: A Software Design Pattern Centric Review. arXiv 2025.

[100] Tseng, P.; Yeh, Z.; Dai, X.; Liu, P. Using LLMs to Automate Threat Intelligence Analysis Workflows in Security Operation Centers. arXiv 2024.

[101] Ding, A.; Li, G.; Yi, X.; Lin, X.; Li, J.; Zhang, C. Generative AI for Software Security Analysis: Fundamentals, Applications, and Challenges. IEEE Softw. 2024, 41, 46–55.

[102] Castro, S.R.; Campbell, R.; Lau, N.; Villalobos, O.; Duan, J.; Cardenas, A.A. Large Language Models are Autonomous Cyber Defenders. arXiv 2025.

[103] Saura, P.F.; Jayaram, K.R.; Isahagian, V.; Bernabé, J.B.; Skarmeta, A. On Automating Security Policies with Contemporary LLMs. arXiv 2025, arXiv:2506.04838.

[104] Oesch, S.; Chaulagain, A.; Weber, B.; Dixson, M.; Sadovnik, A.; Roberson, B.; Watson, C.; Austria, P. Towards a High Fidelity Training Environment for Autonomous Cyber Defense Agents. In Proceedings of the 17th Cyber Security Experimentation and Test Workshop, Philadelphia, PA, USA, 13 August 2024; ACM: New York, NY, USA, 2024; pp. 91–99.

[105] Subramaniam, P.; Krishnan, S. DePLOI: Applying NL2SQL to Synthesize and Audit Database Access Control. arXiv 2024.

[106] Roy, D.; Zhang, X.; Bhave, R.; Bansal, C.; Las-Casas, P.; Fonseca, R.; Rajmohan, S. Exploring LLM-based agents for root cause analysis. In Proceedings of the ACM International Conference on the Foundations of Software Engineering (FSE Companion), Porto de Galinhas, Brazil, 15–19 July 2024.

[107] Shah, S.P.; Deshpande, A.V. Addressing Data Poisoning and Model Manipulation Risks using LLM Models in Web Security. In Proceedings of the 2024 International Conference on Distributed Systems, Computer Networks and Cybersecurity (ICDSCNC), Bengaluru, India, 20–21 September 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6.

[108] Kalakoti, R.; Vaarandi, R.; Bahşi, H.; Nõmm, S. Evaluating Explainable AI for Deep Learning-Based Network Intrusion Detection System Alert Classification. arXiv 2025, arXiv:2506.07882.

[109] Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

| SOC Task | LLM/AI Agent Techniques | Model Type | Evaluation Method | Dataset |
|---|---|---|---|---|
| Log Summarization | Log Parsing [10,11], Fine-tuning [12], Domain-Specific Processing [13], RAG [14] | GPT (3.5, 4, 4o) [15], LLaMA (7B, 2, 3.1) [16], Zephyr [17], CodeT5 [12], LogPrompt [18], CYGENT [12] | F1 Score, Precision, Recall [18], Efficiency/Scalability [19], Accuracy [20] | Loghub-2.0 [21], Real-world logs [18], BGL, Spirit, Thunderbird [22], HDFS [15] |
| Alert Triage | NLP [2], RAG [23], LLM-based Agents [24], In-Context Learning (ICL) [25] | GPT (3.5, 4o, 4o mini) [2], LLaMA [26], Gemini Pro [25], SecureBERT [13], HuntGPT [27], CyLens [13] | Accuracy [20], Precision, F1 Score [28], Area Under the Curve (AUC) [29], BERTScore [13] | IoT traffic [15], LogPub [10], CTI reports [30] |
| Threat Intelligence | IoC extraction, TTP mapping [2,31], RAG [32], Multi-Agent Systems [33] | GPT (3.5, 4, 4o), LLaMA-3 [31,34], Claude [35], Transformer-based models [3] | Simulated environments [3,33], Human-in-the-Loop validation [36], Standardized benchmarks [27,37] | Real-world data, synthetic data [1], MITRE-CVE [4], NVD [13] |
| Ticket Handling | Unified AI-driven architecture, Context-based Operations [38], Automated Root Cause Analysis [39] | Flow-of-Action [39], RCACopilot [40], AidAI, TickIt [41], Ticket-BERT [42] | Accuracy, Precision, Recall, F1 Score [43], Mean Time to Repair [44], Refuse Rate [24] | National Vulnerability Database (NVD) [13], ExtractFix [45], Vul4J [46] |
| Incident Response | RAG [3], XAI [5,26], Multi-agent systems [47,48] | GPT-4, LLaMA [3], Claude, AidAI [41], GenDFIR [26], IRCopilot [47] | F1 Score [1], Accuracy, Precision [35] | NSL-KDD [4], KDD99 [27] |
| Report Generation | Prompt Engineering, RAG, Multi-agent [3] | GPT-4 [3], LLaMA, Gemini [17] | Precision, Recall, F1 Score [49] | Thunderbird [50], BGL, Spirit [31] |
| Asset Discovery and Management | Asset Categorization [51], Data Normalization [52], IoT Agent, Work Order Agent [53], Multi-Agent [3] | GPT (3.5-turbo, 4o), LLaMA (2-7b-chat-hf), Qwen, Prometheus [54], AI-Avatar [3], AssetOpsBench [53] | Accuracy [55], F1 Score [45], Detection Rate, False Positive Rate [56] | AssetOpsBench dataset [53], NSL-KDD, CICIDS2017 [37] |
| Vulnerability Management | NLP, LLM Code Analysis [31,55], Knowledge Graphs, Agentic AI [5,50], RAG, Explainable AI [57,58] | GPT, LLaMA, Gemini, Mistral, Zephyr, BERT, SciBERT, CyBERT [34,35,59], CyLens, Audit-LLM, ChatNVD [59,60] | Accuracy, Precision, Recall, F1 Score [15] | SARD, PatchDB, CVEFixes, ExtractFix, IoT datasets [45,56], NVD, LLM Vulnerability Database (LVD), CTIBench datasets [57,61] |
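
Precision, recall, F1 score and accuracy appear as evaluation methods for almost every SOC task in the table above. The sketch below is a minimal illustration of how these four metrics follow from confusion-matrix counts. It assumes a binary alert-triage setting (1 = escalate as malicious, 0 = close as benign), and the analyst and model labels are hypothetical. It is not the evaluation code of any cited study.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    precision: float
    recall: float
    f1: float
    accuracy: float


def evaluate(y_true: list[int], y_pred: list[int]) -> Metrics:
    """Binary metrics, assuming 1 = malicious/escalate and 0 = benign/close."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # correctly escalated
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed threats
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # correctly closed
    # Define each ratio as 0.0 when its denominator is empty.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    return Metrics(precision, recall, f1, accuracy)


if __name__ == "__main__":
    # Hypothetical analyst ground truth vs. hypothetical LLM triage verdicts.
    analyst = [1, 0, 1, 1, 0, 0, 1, 0]
    llm = [1, 0, 0, 1, 1, 0, 1, 0]
    print(evaluate(analyst, llm))
```

In this toy run there are three true positives, one false positive, one false negative and three true negatives, so all four metrics equal 0.75. In practice, libraries such as scikit-learn provide equivalent functions.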

| SOC Task | Conventional Approaches | LLM Methods | AI Agent Methods | Key Quantitative Metric | Key Qualitative Finding |

|---|---|---|---|---|---|

| Log Summarization | Manual Review [18], Log Parsing [21], Rule-based systems, Source-code based methods [62], Manual regex patterns [90] | CYGENT (GPT-3.5, GPT-3 Davinci) [12], LogPrompt [18], LibreLog [14], LogParser-LLM [10] | CYGENT (as conversational agent) [12] | LogParser-LLM required only 272.5 LLM calls for 3.6M logs, GPT-3 Davinci outperformed other LLMs [10] | LLMs outperform manual analysis; LibreLog reduces LLM query load; CYGENT [10] showed data generalization issues |

| Alert Triage | Manual triage [76], Rule-based correlation [3], SIEM systems (80% false positives) [1] | LLMs for NIDS rule labeling [35], incident summarization [32], prioritization [1] | ReliaQuest agent [91], ContextBuddy [82], multi-agent triage systems [39] | ReliaQuest: 20×faster, 98% alert automation, 5 min containment, 30% improved detection [91] | Reduced alert fatigue and manual burden [1], enhanced contextual understanding [52] |

| Threat Intelligence | Manual analysis from diverse sources [92], traditional NLP [22], rule-based systems [93] | CTI extraction [2], IntellBot [72], CTINexus [28], LANCE [36], IntelEX [73], LocalIntel [54] | CyLens [13], IntellBot agents [72], LANCE engine [36], Multi-agent CTI extractors [31] | IntelEX F1 up to 0.902 [69], IntellBot: BERT >0.8 [72], CTINexus recall/precision ↑10% [28] | LLMs reduce CTI creation time by 75–87.5%, hallucination [30], low precision in decoder-only models still challenges [23] |

| Ticket Handling | Manual categorization [1] and resolution, rule-based mapping [3] | LLMs for grouping [1,3], prioritization, Ticket-BERT for fine-grained labeling [42] | Unified microservice agent architectures [38,83] | Rand score 0.96 for clustering [38], Ticket-BERT outperforms baselines [42] | LLMs reduce delay [94], traditional methods inefficient under volume [38,95] |

| Incident Response | Manual response protocols [1], AIOps with limited scope [4], isolated management [64] | GenDFIR [26], IRCopilot [47], LLexus [77], LLM-BSCVM [57] | AidAI [41], AutoBnB, Audit-LLM [79], Multi-agent IRCopilot [47] | 6 faster detection/mitigation; task completion time ↓30.69% (IT Admins) [4] | LLMs enhance planning, IRCopilot has hallucination/context issues [47], human oversight remains essential |

| Report Generation | Manual CTI report writing [1], data aggregation, prone to errors [30] | GPT models for CTI summary [2], AGIR [49], Microsoft Copilot [96], LLM-BSCVM [57] | AidAI [41], multi-agent CTI generators [49], autonomous audit agents [57] | AGIR recall: 0.99, report time ↓42.6%, CTI effort ↓75–87.5% [49] | AI reduces manual workload [1], outputs need review for consistency [2], TTP accuracy still lower than human reports [30] |

| Asset Discovery and Management | Manual monitoring, planning and interventions [64] | LLMs [94] | AssetOps agent + specialized IoT/maintenance agents [53] | gpt-4.1 scored 100% in FMSR, llama-4-maverick excelled in WO tasks [53] | Enables end-to-end lifecycle automation, WO tasks still depend on structured comprehension [53] |

| Vulnerability Management | Manual bug triaging [38], static analysis tools [45] | LLMs for prediction and CWE/severity assessment (CASEY) [59] | ATAG agent [97], multi-agent IaC analyzers [88], LLM-BSCVM [57] | CASEY: CWE accuracy 68%, severity 73.6%, combined 51.2% [59] | LLMs outperform static tools [88]; documentation is still emerging [97] and privacy concerns persist [26] |
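The Ticket Handling and Threat Intelligence rows above report clustering quality as a Rand score and extraction quality as F1 without defining either metric. The following is a minimal sketch of both, assuming the plain (unadjusted) definitions. The function names and the sample ticket labels are hypothetical, and the cited studies may have used variants such as the adjusted Rand index or micro/macro-averaged F1. This is not the evaluation code of any cited system.

```python
from itertools import combinations


def rand_index(labels_true, labels_pred):
    """Plain Rand index: the fraction of item pairs on which two
    clusterings agree (both group the pair together, or both split it)."""
    if len(labels_true) != len(labels_pred):
        raise ValueError("label sequences must have the same length")
    pairs = list(combinations(range(len(labels_true)), 2))
    if not pairs:
        return 1.0  # fewer than two items: trivially identical clusterings
    agreements = sum(
        (labels_true[i] == labels_true[j]) == (labels_pred[i] == labels_pred[j])
        for i, j in pairs
    )
    return agreements / len(pairs)


def f1_from_counts(tp, fp, fn):
    """F1 score (harmonic mean of precision and recall) from raw counts
    of true positives, false positives, and false negatives."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Hypothetical analyst categories vs. hypothetical LLM-assigned cluster ids
    analyst = ["phishing", "phishing", "malware", "malware", "dos"]
    llm_clusters = [0, 0, 1, 2, 2]
    print(rand_index(analyst, llm_clusters))  # 0.8
    # Hypothetical extraction counts: 9 correct entities, 1 spurious, 2 missed
    print(round(f1_from_counts(tp=9, fp=1, fn=2), 3))  # 0.857
```

In this toy example, 8 of the 10 ticket pairs are grouped consistently by both labelings, which gives a Rand index of 0.8. Because the Rand index counts pairs that are correctly kept apart, it can look high even for mediocre clusterings when most items belong to different groups. This is one reason some evaluations prefer the adjusted variant. For real evaluations, scikit-learn's `sklearn.metrics.rand_score` and `sklearn.metrics.f1_score` are established alternatives.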

| Category | Agent/System | Topology | Autonomy Level | Primary Data Source |
|---|---|---|---|---|
| Log Summarization | CYGENT [12] | AI Agent | 1 | Uploaded Log Files |
| | LibreLog [14] | LLM | 3 | LogHub-2.0 dataset |
| | LogBatcher [62] | LLM | 3 | Public Software Log |
| Alert Triage | Microsoft Copilot for Security Guided Response (CGR) [70] | AI Agent | 1 | Microsoft Defender alerts and telemetry |
| | CyberAlly [3] | AI Agent | 2 | Network telemetry and endpoint event data |
| | sHuntGPT [27] | LLM + AI Agent | 3 | Anomaly detection engine outputs |
| Threat Intelligence | LocalIntel [54] | AI Agent | 1 | CTI Reports |
| | CyLens [13] | LLMs | 2 | Event Logs |
| | CTINexus [28] | LLMs | 3 | CTI Reports |
| Ticket Handling | LLexus [77] | AI Agent | 3 | Generated incidents |
| | AidAI [41] | LLMs | 3 | Historical data |
| | TickIT [44] | LLMs + CoT | 3 | Customer Support tickets and dialogue |
| Incident Response | AidAI [41] | AI Agent + LLMs + CoT | 3 | Historical Ticket Content |
| | IRCopilot [47] | LLMs | 3 | User Activity Logs |
| | TrioXpert [83] | Multi-agent LLM | 3 | Event Logs, D1 and D2 datasets |
| Report Generation | AGIR [49] | LLM + NLG | 3 | Intelligence sources |
| | GenDFIR [26] | LLM + RAG | 3 | Incident events |
| | AttackGen [65] | LLM | 3 | Threat intelligence data |
| Asset Discovery and Management | AssetOps Agent [53] | Multi-Agent + Global Coordinator | 2 | Multi-modal data |
| | GreyMatter [91] | AI Agent | 3 | Security alerts and incident response data |
| | SYNAPSE [98] | Multi-layer toolset AI Agents | 1 | Raw events and evidence |
| Vulnerability Management | LLM-BSCVM [57] | Multi-agent + RAG | 2 | TrustLLM and Dappscan |
| | LProtector [25] | LLM | 2 | National Vulnerability Database (NVD) |
| | CASEY [59] | LLM + AI Agent | 2 | Augmented NVD |

| Evaluation | Strengths of AI Agents | Limitations of AI Agents | Strengths of LLMs | Limitations of LLMs |
|---|---|---|---|---|
| Scalability | Adapt well to increasing data volumes and SOC complexity [57]. | Scalability can be hindered by growing communication overhead [99]. | Generalize across domains and scale efficiently [2]. | Require high compute for deployment and fine-tuning [2]. |
| Interpretability | Provide context-aware, human-comprehensible insights and audit opinions via CoT reasoning [3,5]. | Limited by the “black-box” nature of some models and the potential for cascading errors from hallucinations [1,3]. | Enable natural-language interaction for insights and summarized reports; CoT prompting and RAG increase accuracy [37,49,65]. | Susceptible to hallucinations and output variability [30,100,101]. |
| Latency & Efficiency | Efficient processing and multi-agent collaboration for high-volume cybersecurity operations [1,3,4]. | High computational resource demands, limited scalability in the number of AI agents, and the need for high-quality training data [3,64,91]. | Efficiently process and analyze vast, diverse data, automate complex tasks, and generate structured insights [1,64,69]. | High computational resource demands; their probabilistic nature leads to output variability [2,64,93]. |
| SOC Integration | Streamline SOC workflows by autonomously managing tasks and coordinating responses across security tools [3]. | Complex dynamics [33], emergent behaviors, and communication overhead that hinder predictability [97]. | Enhance integration through natural language understanding [3], automated reporting, context-aware insights [95], and streamlined information handling [6]. | Demand high computational resources and produce variable outputs, requiring human oversight and extensive fine-tuning for precision [52]. |
| Human-AI Teaming | Can autonomously detect, classify, and respond to threats in real time, significantly reducing time to detect (TTD) [29]. | Vulnerable to attacks such as prompt injection and memory poisoning [4]. | Can process vast amounts of unstructured security data [82]. | Can suffer from factual errors or “hallucinations” [3]. |
| Privacy & Security | Automate tasks in secure, private data environments [99]. | Multi-agent systems introduce complex vulnerabilities [33], risking sensitive data exfiltration [97]. | Enhance security via threat detection [2], incident response, and secure code generation [6]. | Risk sensitive data exposure, hallucinations, and adversarial attacks [2,101]. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Srinivas, S.; Kirk, B.; Zendejas, J.; Espino, M.; Boskovich, M.; Bari, A.; Dajani, K.; Alzahrani, N. AI-Augmented SOC: A Survey of LLMs and Agents for Security Automation. J. Cybersecur. Priv. 2025, 5, 95. https://doi.org/10.3390/jcp5040095

Srinivas S, Kirk B, Zendejas J, Espino M, Boskovich M, Bari A, Dajani K, Alzahrani N. AI-Augmented SOC: A Survey of LLMs and Agents for Security Automation. Journal of Cybersecurity and Privacy. 2025; 5(4):95. https://doi.org/10.3390/jcp5040095

Chicago/Turabian Style: Srinivas, Siddhant, Brandon Kirk, Julissa Zendejas, Michael Espino, Matthew Boskovich, Abdul Bari, Khalil Dajani, and Nabeel Alzahrani. 2025. "AI-Augmented SOC: A Survey of LLMs and Agents for Security Automation" Journal of Cybersecurity and Privacy 5, no. 4: 95. https://doi.org/10.3390/jcp5040095

APA Style: Srinivas, S., Kirk, B., Zendejas, J., Espino, M., Boskovich, M., Bari, A., Dajani, K., & Alzahrani, N. (2025). AI-Augmented SOC: A Survey of LLMs and Agents for Security Automation. Journal of Cybersecurity and Privacy, 5(4), 95. https://doi.org/10.3390/jcp5040095