Abstract

The rapid expansion of the Internet of Things (IoT) has introduced new vulnerabilities that traditional security mechanisms often fail to address effectively. Signature-based intrusion detection systems cannot adapt to zero-day attacks, while rule-based solutions lack scalability for the diverse and high-volume traffic in IoT environments. To strengthen the security framework for IoT, this paper proposes a deep learning-based anomaly detection approach that integrates Convolutional Neural Networks (CNNs) and Bidirectional Gated Recurrent Units (BiGRUs). The model is further optimized using the Moth–Flame Optimization (MFO) algorithm for automated hyperparameter tuning. To mitigate class imbalance in benchmark datasets, we employ Generative Adversarial Networks (GANs) for synthetic sample generation alongside Z-score normalization. The proposed CNN–BiGRU + MFO framework is evaluated on two widely used datasets, UNSW-NB15 and UCI SECOM. Experimental results demonstrate superior performance compared to several baseline deep learning models, achieving improvements across accuracy, precision, recall, F1-score, and ROC–AUC. These findings highlight the potential of combining hybrid deep learning architectures with evolutionary optimization for effective and generalizable intrusion detection in IoT systems.

1. Introduction

The IoT comprises physical objects, often called things or devices, that can sense, collect, and potentially process data pertaining to CIs. Because they run on batteries and can store and process only a limited amount of data, these objects are severely resource-constrained [1]. Nevertheless, billions of such devices are connected to the Internet over noisy and lossy communication technologies such as Wi-Fi, ZigBee, Bluetooth, LoRa, GSM, WiMAX, and GPRS, and many new IoT applications have emerged. Designing efficient and secure routing algorithms remains a challenge for IoT networks [2]. Standardization bodies have made multiple attempts to specify effective routing protocols for the IoT. Most recently, the IETF ROLL working group addressed these routing issues by designing and standardizing the IPv6 Routing Protocol for Low-Power and Lossy Networks (RPL) [3]. The RPL specification takes both the computing capacity and the energy constraints of such networks into account.

In addition to the unique attributes of each IoT component, the proliferation of smart objects in IoT networks and the expansion of IoT applications are driving up data and traffic volumes, which in turn increases the IoT’s vulnerabilities and, by extension, the risks to which RPL is exposed [4]. RPL can still be attacked from within the network, even though the RPL standard includes safeguards against replay attacks, local and global repair mechanisms, and encryption to prevent disclosure of control messages [5]. The authentication and encryption measures that protect RPL communications are not foolproof, because security holes exist inside the RPL network itself [6]. In such situations, a second layer of protection is needed in the form of intrusion detection systems (IDSs). IDSs examine the actions and behaviors of nodes in order to identify potential threats to the network.

As traffic volumes and the number of attack signatures grow, the detection system’s processing cost increases, which degrades overall performance and poses a serious difficulty for real-time attack detection [7]. Another issue with signature-based intrusion detection systems is that new attack signature rules need human intervention, which is time-consuming, expensive, and labor-intensive. These drawbacks can be resolved by using an anomaly detection (AD)-based approach, which can identify both known and new attacks [8]. Put simply, the system builds a model of typical behavior from a stream of regular events; when an action deviates from this model, the trained model flags it as an anomaly. While many anomaly-based IDSs have been developed for more conventional networking models or specialized hardware, very little has been invested in developing such systems for IoT networks [9]. Owing to the nature of IoT platforms, which have limited computational resources, it is essential to implement IDSs tailored to these environments. This has led to the call for cost-effective anomaly-based IDSs for IoT applications [10].

While there are various approaches to implementing an IDS, the most frequently suggested ones involve Machine Learning (ML) techniques, which excel at solving classification problems [11]. ML does not require explicit programming to learn from past events and forecast a system’s expected behavior. RPL nodes, fog/edge nodes, and cloud nodes may therefore all use ML to sift through massive amounts of data, identify suspicious activity, and prevent further damage. Consequently, end-to-end IoT security might include a security scheme built on AI-assisted security analysis methodologies [12].

In general, deep learning models have good detection accuracy, but they become less effective when the input data are imbalanced. An imbalanced dataset results from a disparity in the number of records belonging to the normal and attack classes. Because of this, the model favors the class with more data points. Hence, prior to training, the dataset must be balanced. Undersampling and oversampling have been employed to balance datasets [13]. Undersampling reduces the number of majority-class samples, whereas oversampling increases the number of minority-class samples. However, oversampling by repeating the same data might cause overfitting. To mitigate the impact of the dataset’s imbalance during model training, it is necessary to generate synthetic data for the minority attack classes. Finding meaningful features in a dataset is essential for reducing the computing cost and the number of neurons needed for complex deep learning models [14]. Feature selection allows us to cut down on training time by processing a subset of features rather than the full set. Underfitting and poor performance on training data might result from using a model with insufficient complexity. On the other hand, when the model’s complexity is too great, overfitting can be prevented using regularization approaches [15].

The main contributions of this work are as follows:

- First, a deep intrusion detection scheme for IoT platforms is proposed. In particular, we use Generative Adversarial Networks (GANs) to balance the dataset’s class distribution by producing synthetic data for minority attack classes [16].

- Second, the proposed approach combines a convolutional neural network (CNN) with a bidirectional gated recurrent unit (BiGRU) network. Furthermore, an effective metaheuristic algorithm, MFO, is employed to improve neural network performance by determining the best hyperparameters.

- Third, this metaheuristic method improves accuracy while decreasing computational cost. By comparing the approach with tree-based algorithms and other single and hybrid DL and ML techniques, we assess the validity, accuracy, and precision of the proposed model [16].

2. Related Work

Altulaihan et al. [17] proposed an IDS defense mechanism that improves the security of IoT networks against denial-of-service (DoS) attacks by using anomaly detection in conjunction with machine learning. The suggested intrusion detection system uses anomaly detection to continuously monitor network activity for deviations from typical patterns. The authors employed four supervised classification algorithms, namely Decision Tree (DT), Random Forest (RF), K-Nearest Neighbors (kNN), and Support Vector Machine (SVM), to achieve this goal. They also examined the efficiency of two feature selection algorithms, Genetic Algorithm (GA) and Correlation-based Feature Selection (CFS). To train the models, they used the IoTID20 dataset, which is considered one of the most up-to-date datasets for identifying suspicious behavior in IoT networks. When trained with features selected by GA, the DT and RF classifiers achieved the best scores. DT, in addition, excelled in terms of training and testing durations.

By combining a CNN with a Variational Autoencoder (VAE), Xin et al. [18] examined traffic anomaly detection for IoT devices with the aim of improving the detection of security vulnerabilities in IoT environments. The models’ training and testing procedures were fine-tuned using a variety of hardware setups, software environments, and hyperparameters. By successfully differentiating between various forms of IoT device traffic, the CNN model exhibits strong classification performance, with an accuracy of 95.85% on the test dataset. By using reconstruction loss and KL divergence to capture data anomalies, the VAE model demonstrates effective anomaly detection. Used together, the CNN and VAE models provide a comprehensive answer to the cybersecurity problems facing IoT settings. Future research directions include exploring more varied IoT traffic data, conducting realistic deployments for validation, and further optimizing model architectures and parameters to increase performance and applicability.

To distinguish normal from abnormal usage patterns in an IoT intrusion detection system, Bhatia and Sangwan [19] proposed an anomaly detection methodology called MFEW Bagging. First, a hybrid feature selection method finds the best feature subset by combining several filter-based strategies with a wrapper algorithm. Then, to classify usage patterns as normal or abnormal, an ensemble learning technique called bagging is employed. Ensemble feature selection finds the best subset of meaningful, non-redundant features while removing the bias of individual feature selection methods. A publicly available real-time IDS dataset is used to assess the proposed methodology. The study’s findings support the idea that, to protect networks and systems from the growing complexity of the IoT, it is necessary to develop lightweight IDSs that are both robust and capable of addressing cybersecurity concerns in a proactive and predictive fashion.

To construct machine learning algorithms for effective detection of abnormal network traffic, Khan and Alkhathami [20] used the Canadian Institute for Cybersecurity (CIC) IoT dataset. The 33 distinct types of IoT attacks included in the dataset fall into 7 broad classes. The study uses pre-processed datasets with a balanced representation of classes to build unbiased supervised machine learning models, including Random Forest and Deep Neural Network models. The models are further analyzed with respect to reducing dimensionality, removing strongly correlated features, minimizing overfitting, and shortening training times. In both the reduced and full feature spaces, Random Forest achieved an accuracy of about 99.55% for binary and multiclass classification of IoT attacks. This improvement was accompanied by a decrease in computing response time, a crucial metric for finding and responding to attacks in real time.

Recent deep-learning IDS models for IoT—such as CNNs, LSTMs/GRUs, hybrids, and GAN/SMOTE-augmented pipelines—often report high accuracy but suffer from recurring issues: reliance on accuracy alone despite class imbalance, preprocessing done on the full dataset (causing leakage), unvalidated synthetic samples, opaque hyperparameter tuning, and little consideration of deployment constraints.

Our approach addresses these gaps by (i) evaluating with ROC-AUC, PR-AUC, macro/weighted-F1, and FPR; (ii) enforcing leakage-safe preprocessing within stratified CV; (iii) validating GAN samples with distributional and embedding checks; (iv) making MFO hyperparameter tuning reproducible with clear search ranges and stopping criteria; and (v) reporting model complexity and latency with discussion of pruning, quantization, and distillation for IoT deployment. Compared with heavier CNN–LSTM or transformer-based IDSs, our lightweight CNN and BiGRU (≈1–3 M params) model achieves competitive robustness while remaining edge-feasible. Finally, unlike most prior work, we explicitly discuss encrypted traffic, unseen attack types, and scalability, highlighting future directions with GNNs and federated learning that balance detection accuracy, privacy, and resource constraints.

3. Proposed Methodology

Our proposed classification model integrates a Convolutional Neural Network (CNN) with Bidirectional Gated Recurrent Units (BiGRUs), leveraging CNN layers for local feature extraction and BiGRUs for capturing temporal dependencies. Combining these algorithms has also improved on the performance of the individual CNN and BiGRU networks. The BiGRU extends the gated recurrent unit (GRU) network by processing sequences in both directions, and pairing it with a CNN further improves on the GRU network’s performance. The CNN–BiGRU classification model employs the Moth–Flame Optimization (MFO) algorithm for hyperparameter fine-tuning. Specifically, MFO was used to search over critical architectural and training parameters, including: (i) the number of convolutional filters (32–256) and kernel size (2–5), (ii) the number of BiGRU units (64–512), (iii) dropout rate (0.1–0.5), (iv) learning rate ($10^{-5}$ to $10^{-2}$, log scale), and (v) batch size (32–256). The MFO process maintained a population of 50 moths over 150 iterations, with each moth representing a candidate hyperparameter configuration. At each iteration, candidate CNN–BiGRU models were trained for 30 epochs using the Adam optimizer, with performance on the validation fold (F1-score and ROC–AUC combined) serving as the fitness function. The best-performing configuration was then selected for final training. This automated search ensured that both architecture-level decisions (filters, BiGRU units) and optimization settings (learning rate, batch size, dropout) were jointly tuned, yielding a robust configuration that consistently outperformed manually tuned baselines.

The CNN layers extract implicit local information from the input sequences and pass the resulting deep features to the BiGRU layer. By modeling feature dependencies in both directions, the BiGRU captures long-term correlations. The resulting vectors of global context information are passed to the output layer, which maps them to a space that facilitates classification. The models’ performance and error rates are improved by making appropriate selections of the network hyperparameters. Manually searching for and selecting these hyperparameters can be a huge time sink, with no assurance that the best results will be obtained. Applying MFO streamlines the process of determining the best hyperparameter values.

The MFO algorithm was employed to optimize CNN–BiGRU hyperparameters. The search space included CNN filters (32–256), kernel size (2–5), and dropout rate (0.1–0.5), as well as BiGRU hidden units (64–512), number of layers (1–3), and dropout rate (0.1–0.5). In addition, batch size (32–256) and learning rate ($10^{-5}$ to $10^{-2}$, log scale) were tuned. We used a moth population of 50 and iterated for 150 generations, with fitness defined as classification accuracy on the validation fold. This systematic tuning ensured fair comparability across runs and improved performance stability.
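To make the search procedure concrete, the following is a minimal sketch of a Moth–Flame Optimization loop over the ranges listed above. It is not the authors' implementation: the unit-hypercube encoding, the `decode` helper, the spiral constant `b = 1`, and in particular `toy_fitness` are assumptions introduced for illustration. In a real run, the fitness function would train a CNN–BiGRU candidate and return its validation score.

```python
import math
import random

# Search ranges quoted in the text: (low, high, kind).
SPACE = {
    "filters":       (32, 256, "int"),
    "kernel_size":   (2, 5, "int"),
    "gru_units":     (64, 512, "int"),
    "gru_layers":    (1, 3, "int"),
    "dropout":       (0.1, 0.5, "float"),
    "learning_rate": (1e-5, 1e-2, "log"),
    "batch_size":    (32, 256, "int"),
}
NAMES = list(SPACE)


def decode(position):
    """Map a point in the unit hypercube to a hyperparameter dict (assumed encoding)."""
    config = {}
    for u, name in zip(position, NAMES):
        low, high, kind = SPACE[name]
        if kind == "log":
            value = 10 ** (math.log10(low) + u * (math.log10(high) - math.log10(low)))
        else:
            value = low + u * (high - low)
        value = min(high, max(low, value))  # guard against rounding at the edges
        config[name] = int(round(value)) if kind == "int" else value
    return config


def toy_fitness(config):
    """Hypothetical stand-in for a validation score (higher is better).

    The peak location is arbitrary; a real run would train and validate a model.
    """
    return -((math.log10(config["learning_rate"]) + 3) ** 2
             + (config["dropout"] - 0.3) ** 2
             + ((config["filters"] - 128) / 128) ** 2)


def mfo(fitness, n_moths=50, n_iter=150, b=1.0, seed=0):
    rng = random.Random(seed)
    dim = len(NAMES)
    moths = [[rng.random() for _ in range(dim)] for _ in range(n_moths)]
    flames = []  # best (score, position) pairs seen so far, best first
    for it in range(n_iter):
        scored = [(fitness(decode(m)), m[:]) for m in moths]
        flames = sorted(flames + scored, key=lambda sp: sp[0], reverse=True)[:n_moths]
        # The number of active flames shrinks roughly linearly from n_moths towards 1.
        n_flames = round(n_moths - it * (n_moths - 1) / n_iter)
        r = -1.0 - it / n_iter  # lower bound of t moves from -1 towards -2
        for i, moth in enumerate(moths):
            flame = flames[min(i, n_flames - 1)][1]
            for d in range(dim):
                dist = abs(flame[d] - moth[d])
                t = (r - 1.0) * rng.random() + 1.0  # t lies between r and 1
                # Logarithmic spiral around the assigned flame.
                new = dist * math.exp(b * t) * math.cos(2 * math.pi * t) + flame[d]
                moth[d] = min(1.0, max(0.0, new))  # clip to the search cube
    best_score, best_pos = flames[0]
    return decode(best_pos), best_score


if __name__ == "__main__":
    best_config, best_score = mfo(toy_fitness, n_moths=10, n_iter=20, seed=1)
    print(best_config, best_score)
```

Because the flame list always retains the best positions evaluated so far, the returned score can never be worse than the best member of the initial population. The cost of a real search is dominated by the fitness evaluations (population size times iterations), which is why each candidate is trained for only a limited number of epochs.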

CNN Structure:

Convolution:

Pooling:

Sequence to BiGRU:

The pooled features P are passed into the BiGRU for temporal modeling.

Classification:

where h is the BiGRU output.
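For orientation, one common way to write these four steps is sketched below. Only $P$ and $h$ are named in the text; the symbols $X$ (input sequence), $W_c$, $b_c$ (convolution weights and bias), $C$ (feature map), $F$ (kernel size), $m$ (pool size), $W_o$, $b_o$ (output weights and bias), and the softmax output are illustrative assumptions, not necessarily the authors' exact notation.

```latex
\begin{aligned}
C_{t,k} &= \mathrm{ReLU}\Big(\sum_{j=1}^{F}\sum_{c} W_c^{(k)}[j,c]\; X_{t+j-1,\,c} + b_c^{(k)}\Big)
  && \text{(convolution, filter } k\text{)} \\
P_{t,k} &= \max_{1 \le i \le m} C_{m(t-1)+i,\,k}
  && \text{(max pooling)} \\
h &= \mathrm{BiGRU}(P) = \big[\overrightarrow{h}\,;\,\overleftarrow{h}\big]
  && \text{(sequence to BiGRU)} \\
\hat{y} &= \mathrm{softmax}(W_o\, h + b_o)
  && \text{(classification)}
\end{aligned}
```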

For the BiGRU component of the ensemble, several architectural and training hyperparameters were systematically explored and optimized using the MFO algorithm. The number of recurrent units was varied across 64, 128, 256, and 512, with 256 units emerging as the optimal setting. To enhance representational capacity while avoiding overfitting, the number of stacked BiGRU layers was tested between one and three, with two layers ultimately selected. Hidden state activations were modeled with the tanh function, and dropout was incorporated both at the layer level and across recurrent connections. Dropout rates were searched between 0.1 and 0.5, with 0.3 chosen as optimal, while recurrent dropout was tuned between 0.1 and 0.4, with 0.2 selected. In addition to the architecture, training-related hyperparameters were also tuned. Batch size was varied between 32 and 256, with the best performance observed at 128. The learning rate was searched on a logarithmic scale from $10^{-5}$ to $10^{-2}$, with the optimal value selected by MFO within this range. All models were trained using the Adam optimizer, initially for 30 epochs during hyperparameter search, and then for 100 epochs in the final configuration. Together, these design choices produced a BiGRU component that balances depth, regularization, and computational efficiency, demonstrating strong temporal modeling capabilities while maintaining robustness against overfitting.

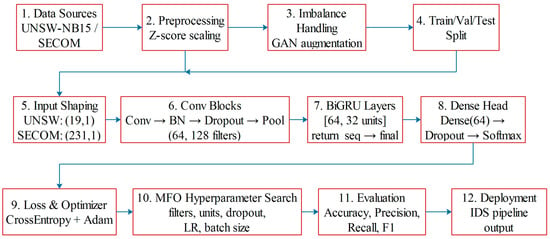

To construct the proposed intrusion detection framework for IoT platforms, the overall workflow is illustrated in Figure 1. The process begins with data acquisition from benchmark datasets (e.g., UNSW-NB15 and SECOM), followed by preprocessing through Z-score scaling and imbalance handling using GAN-based augmentation. After splitting the dataset into training, validation, and test sets, the data undergoes input shaping before being passed through convolutional blocks, BiGRU layers, and a dense head for classification. Hyperparameter optimization is performed using the MFO algorithm, and the system is evaluated using accuracy, precision, recall, and F1-score metrics before final deployment in the IDS pipeline (Figure 1).

Figure 1. Workflow of the research work.

The convergence profile of MFO revealed rapid improvements during early iterations, with validation accuracy stabilizing by approximately the 70th generation out of 150. This indicates efficient exploration followed by effective exploitation of the hyperparameter search space. In terms of computational cost, each MFO run with 50 moths required ~4–5 h on a single GPU, comparable to random search, yet yielded more stable and accurate configurations. The overhead is incurred only during the optimization stage; once tuned, model training proceeds without added cost. Thus, MFO offers a balanced trade-off between computational effort and performance gains.

For the CNN component of the ensemble, several architectural and training hyperparameters were explored and optimized using MFO. The number of convolutional filters, which determines the model’s capacity for feature extraction, was varied between 32, 64, and 128, with the final optimized setting chosen as 128 filters. The kernel size, controlling the receptive field of the convolutional layer, was explored within a range of 2–5, with 3 selected as optimal. A max-pooling layer with a pooling size of 2 was applied to reduce dimensionality while retaining salient features. Each convolutional block employed the ReLU activation function to introduce non-linearity. To prevent overfitting, dropout was included, with rates explored between 0.1 and 0.5; the best-performing configuration used a dropout rate of 0.3.

In terms of training parameters, the batch size was tuned between 32 and 256, with 128 selected by MFO. The Adam optimizer was employed for weight updates, with the learning rate searched within the range of $10^{-5}$ to $10^{-2}$ and the optimal value selected by MFO. During hyperparameter tuning, each candidate model was trained for 30 epochs; the final model was trained for 100 epochs using the best configuration. Collectively, these design choices ensured that the CNN component of the ensemble was both expressive enough to capture local patterns and regularized to prevent overfitting, while maintaining efficient training performance.

To ensure clarity regarding the computational footprint of our deep models, we provide detailed descriptions of the CNN and BiGRU architectures. The CNN classifier begins with the raw feature vector as input and applies two successive 1D convolutional layers (64 and 128 filters, kernel size = 3, ReLU activation), followed by max pooling, dropout (0.3), and a dense layer of 128 units with ReLU activation. A second dropout layer (0.5) precedes the final softmax output layer corresponding to the number of classes. This configuration results in approximately 1.2 million trainable parameters, with an estimated 15 million FLOPs and an average training time of 8 ms per epoch on UNSW-NB15 and 14 ms per epoch on SECOM. The BiGRU classifier projects input features into a 128-dimensional embedding space, then passes them through two bidirectional GRU layers (128 and 64 units, respectively). The sequential outputs are aggregated and processed by dropout (0.4), followed by a dense layer of 128 ReLU units, and finally the classification softmax layer. This model contains approximately 2.8 million parameters, requiring around 32 million FLOPs, with mean training times of 15 ms per epoch on UNSW-NB15 and 22 ms per epoch on SECOM. These statistics demonstrate that while the BiGRU is more expressive and computationally demanding than the CNN, both architectures remain lightweight enough for practical deployment in IoT security environments.

3.1. Dataset Description and Preprocessing

Table 1 summarizes the data preprocessing pipeline. Two benchmark datasets were used to evaluate the proposed framework: UNSW-NB15 and UCI SECOM. The UNSW-NB15 dataset, generated at the Australian Centre for Cyber Security, is widely recognized for evaluating intrusion detection methods. It contains over 2.5 million records distributed across nine attack categories and one normal class, with 49 features capturing flow, content, and basic attributes. For this study, a subset of 19 discriminative features was selected using filter and wrapper methods to reduce redundancy and improve model generalizability. The UCI SECOM dataset, collected from a semiconductor manufacturing process, consists of 1567 samples with 590 sensor variables and a binary quality label. After removing features with excessive missing values and low variance, 231 features were retained to capture the most informative signals. Both datasets exhibit significant class imbalance, which makes them suitable for evaluating augmentation and optimization strategies.

Table 1. Preprocessing pipeline.

To ensure reliability, extensive preprocessing was carried out. Missing values in continuous attributes were imputed using mean substitution within training folds, while categorical values were encoded with one-hot vectors. Continuous features were normalized using z-score scaling, with statistics computed strictly on training data to avoid leakage. Outliers were handled through winsorization at the 1st and 99th percentiles, preventing extreme values from skewing model learning. For SECOM, high-dimensional correlations were reduced by removing near-constant and highly collinear variables. All transformations were applied within each training fold of the cross-validation loop, with the validation and test sets processed only through fitted parameters. This ensured that preprocessing respected the separation between training and evaluation data.
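The fit-on-train, apply-everywhere discipline described above can be sketched for a single continuous column as follows. This is an illustrative sketch only: the step order (impute, then winsorize, then scale), the linear-interpolation percentile, and the function names `fit_column` and `transform_column` are assumptions, since the text does not specify them. Missing values are represented as `None`.

```python
import statistics


def percentile(sorted_vals, q):
    """Linear-interpolation percentile (q in [0, 100]) of a sorted list."""
    pos = (len(sorted_vals) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    return sorted_vals[lo] + (sorted_vals[hi] - sorted_vals[lo]) * (pos - lo)


def fit_column(train_col):
    """Learn imputation, winsorization, and scaling parameters from training data only."""
    observed = [v for v in train_col if v is not None]
    fill = statistics.fmean(observed)                 # mean substitution
    ordered = sorted(observed)
    low, high = percentile(ordered, 1), percentile(ordered, 99)
    clipped = [min(high, max(low, fill if v is None else v)) for v in train_col]
    mu = statistics.fmean(clipped)
    sigma = statistics.pstdev(clipped) or 1.0         # guard against constant columns
    return {"fill": fill, "low": low, "high": high, "mu": mu, "sigma": sigma}


def transform_column(col, params):
    """Apply already-fitted parameters; never refit on validation or test data."""
    out = []
    for v in col:
        v = params["fill"] if v is None else v
        v = min(params["high"], max(params["low"], v))
        out.append((v - params["mu"]) / params["sigma"])
    return out


if __name__ == "__main__":
    train = [1.0, 2.0, None, 3.0, 100.0]
    params = fit_column(train)
    print(transform_column(train, params))            # training fold
    print(transform_column([1000.0, None], params))   # validation fold, fitted params only
```

Inside a cross-validation loop, `fit_column` would be called once per training fold and `transform_column` on the corresponding training, validation, and test splits, so that no statistic is ever computed from evaluation data.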

UNSW-NB15. We treat UNSW-NB15 as a 10-class task consisting of 1 normal and 9 attack classes; in our experiments we use 19 of the 42 available features [21,22].

UCI SECOM. The SECOM quality-control dataset contains 1567 instances described by 591 sensors; the target (“pass/fail”) is highly imbalanced with 104 fail (6.64%) versus 1463 pass (93.36%) instances. We retain 231 features for modeling.

3.1.1. Feature Engineering and Preprocessing

Feature selection was carried out using a hybrid approach that integrates filter-based strategies with a wrapper algorithm, producing a subset of non-redundant, informative features. Specifically, 19 of the 42 features from UNSW-NB15 and 231 of the 591 features from UCI SECOM were retained. To ensure training stability and comparability, all features were standardized via Z-score normalization (mean = 0, standard deviation = 1). For UNSW-NB15, categorical attributes were numerically encoded prior to normalization. This combination of careful feature selection and preprocessing ensured efficient learning, minimized overfitting, and preserved consistency across datasets.

All numerical inputs are standardized with Z-score normalization (μ = 0, σ = 1) prior to model training.

For UNSW-NB15 we operate on a mixed-type design matrix; the schema used for modeling comprises one label, four numerical columns, and 44 categorical columns, which we encode to numeric indicators before standardization.

For SECOM, we use 231 selected sensors (out of 591) to reduce dimensionality while preserving predictive signal.

To mitigate class imbalance, we employed Generative Adversarial Networks (GANs) to generate synthetic samples of minority attack classes. Unlike oversampling or SMOTE, which often cause class overlap and overfitting, GANs learn the data distribution and generate realistic synthetic packets. This process increased the number of underrepresented DoS and other minority attacks, yielding a balanced dataset. Following augmentation, Z-score normalization was applied to all features to standardize input distributions. Empirical results demonstrated that GAN-based balancing significantly reduced false negatives in minority attack detection and contributed to the superior performance of the proposed CNN–BiGRU + MFO model compared with baselines.

3.1.2. Class-Imbalance Strategy

To address class skew, we use Generative Adversarial Networks (GANs) to synthesize realistic minority-class samples. We prefer GANs to SMOTE because SMOTE can introduce class-overlap and noise, whereas GANs more faithfully capture the minority-class manifold.

GAN training uses Adam with binary cross-entropy for 1000 epochs. After augmentation we enforce class parity of 25 K samples per class for learning stability.

We apply augmentation only to the training folds (never to validation or test) to prevent information leakage; evaluation splits maintain the original class distribution.

We mitigate class imbalance by generating minority-class samples via Generative Adversarial Networks (GANs), chosen over oversampling/SMOTE to reduce class-overlap and overfitting risk. Augmentation is applied only to training folds; validation and test sets remain real-only and preserve the original label distribution. To verify that synthetic data do not introduce bias, we conducted: (i) an ablation (GAN vs. No-GAN) using identical CNN–BiGRU(+MFO) settings, (ii) a TSTR test (Train on Synthetic + real majority; Test on real-only), and (iii) a real–synthetic discrimination test, where a classifier attempts to separate synthetic from real samples within each minority class. An AUROC close to 0.5 in (iii) indicates high distributional similarity. All experiments were repeated 10 times with different seeds; we report mean ± SD and paired t-tests (α = 0.05).
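As an illustration of check (iii), the sketch below computes the AUROC of a simple discriminator that tries to separate real from synthetic samples. The nearest-centroid scorer and the half/half split are stand-ins chosen to keep the example dependency-free; the text does not state which classifier was used, so these choices and the function names are assumptions.

```python
import random


def auroc(neg_scores, pos_scores):
    """Probability that a positive outscores a negative (ties count 0.5)."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(neg_scores) * len(pos_scores))


def centroid(rows):
    return [sum(col) / len(rows) for col in zip(*rows)]


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def discrimination_auroc(real, synth, seed=0):
    """Fit a nearest-centroid discriminator on half of each set, score the other half."""
    rng = random.Random(seed)
    real, synth = real[:], synth[:]
    rng.shuffle(real)
    rng.shuffle(synth)
    r_fit, r_eval = real[: len(real) // 2], real[len(real) // 2:]
    s_fit, s_eval = synth[: len(synth) // 2], synth[len(synth) // 2:]
    c_real, c_synth = centroid(r_fit), centroid(s_fit)

    def score(row):
        # Higher score means the row looks more like the synthetic set.
        return sq_dist(row, c_real) - sq_dist(row, c_synth)

    return auroc([score(r) for r in r_eval], [score(s) for s in s_eval])


if __name__ == "__main__":
    rng = random.Random(42)
    real = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(400)]
    faithful = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(400)]
    shifted = [[rng.gauss(5, 1), rng.gauss(5, 1)] for _ in range(400)]
    print("faithful generator:", discrimination_auroc(real, faithful))
    print("shifted generator: ", discrimination_auroc(real, shifted))
```

A value near 0.5 means the discriminator cannot tell the two sets apart, while a value near 1.0 signals that the synthetic samples are easy to identify and may bias training.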

The GAN used for class balancing was configured with a generator comprising three hidden layers of 32, 64, and 128 neurons, each with LeakyReLU activations, followed by a sigmoid output layer with 39 neurons corresponding to the feature space. The discriminator was designed with four hidden layers of 128, 64, 32, and 16 neurons, also using LeakyReLU activations, and a single sigmoid output neuron for binary classification. Training was carried out with the Adam optimizer using binary cross-entropy loss for 1000 epochs with a batch size of 128. Once trained, the generator produced synthetic samples for minority attack classes until each class reached a target size of 25,000 samples, thereby ensuring balanced class distributions across all categories.
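The per-class generation quota implied by the 25,000-sample target can be computed as in the short sketch below. The class counts are hypothetical placeholders, not figures from the datasets, and the helper name `synthetic_quota` is introduced only for illustration. Classes already at or above the target receive no synthetic samples; how such classes are reduced is not specified in the text.

```python
TARGET_PER_CLASS = 25_000  # per-class size stated in the text


def synthetic_quota(class_counts, target=TARGET_PER_CLASS):
    """Number of synthetic samples the generator must supply for each class."""
    return {label: max(0, target - count) for label, count in class_counts.items()}


if __name__ == "__main__":
    # Hypothetical training-fold counts, for illustration only.
    counts = {"Normal": 40_000, "DoS": 9_000, "Worms": 120}
    print(synthetic_quota(counts))
```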

3.2. Data Scaling Process

To scale the input data, the proposed method applies Z-score normalization. All features were rescaled so that their mean and standard deviation (SD) were zero and one, respectively [23]. The Z-score normalization is given by the following formulation:

$$z = \frac{x - \mu}{\sigma}$$

where $x$ is the raw feature value, and $\mu$ and $\sigma$ are the mean and SD of that feature.

Z-score normalization benefits a range of optimization methods, including the widely used gradient descent (GD) in ML. The two main goals of standardization in ML are improving the efficiency of ML methods and reducing or eliminating bias in ML algorithms.

3.3. GAN-Based Data Augmentation

An imbalance in the number of samples in each class leads to data skewness, a situation in which one class’s data is disproportionately large and the model is biased in favor of that class. To correct class imbalance, the training data are resampled before classification. Resampling can either raise the number of samples in the minority classes or decrease the number of samples in the majority classes. Oversampling is a common technique in research; however, it leads to overfitting because the same data end up being used repeatedly. The Synthetic Minority Over-sampling Technique (SMOTE) generates new samples by interpolating between neighboring minority-class samples; however, it produces noise and suffers from class overlap.

GANs are powerful but can risk overfitting by memorizing training samples or producing synthetic points that lack diversity. To address this, we incorporated the following measures during GAN training:

Dropout Regularization: Applied dropout (p = 0.3–0.5) in the discriminator to reduce reliance on specific features and encourage generalization.

Label Smoothing: Replacing hard labels (0/1) with smoothed labels (e.g., 0.9/0.1) prevents the discriminator from becoming overconfident and forces the generator to explore diverse outputs.

Early Stopping: Training was halted when generator loss stabilized and discriminator accuracy hovered around 50%, preventing collapse into memorization.

Data Diversity Checks: Periodically, generated samples were compared against real minority-class distributions (via KL-divergence and pairwise distance metrics). Mode collapse or low-diversity signals triggered retraining with adjusted learning rates.

Leakage Prevention: Importantly, synthetic data were only introduced into the training folds. Validation and test sets remained real-only, ensuring that performance evaluation reflects generalization, not memorization.

These strategies ensure that the GAN produces realistic yet diverse synthetic samples without overfitting to minority-class training points, thus maintaining the validity of downstream evaluation.
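Two of the measures above, label smoothing and the KL-divergence diversity check, can be sketched for a single feature as follows. The histogram estimator, the bin count, the smoothing constant `eps`, and the function names are illustrative assumptions; the text does not specify how the divergence was estimated.

```python
import math


def smooth_labels(labels, real_value=0.9, fake_value=0.1):
    """Replace hard discriminator targets 1/0 with smoothed targets."""
    return [real_value if y == 1 else fake_value for y in labels]


def binned_probs(values, lo, width, bins, eps):
    """Histogram probabilities with additive smoothing so no bin is exactly zero."""
    counts = [0] * bins
    for v in values:
        idx = min(bins - 1, max(0, int((v - lo) / width)))
        counts[idx] += 1
    total = len(values) + eps * bins
    return [(c + eps) / total for c in counts]


def kl_divergence(real_vals, synth_vals, bins=10, eps=1e-6):
    """Histogram estimate of KL(real || synthetic) for one feature."""
    lo = min(min(real_vals), min(synth_vals))
    hi = max(max(real_vals), max(synth_vals))
    width = (hi - lo) / bins or 1.0
    p = binned_probs(real_vals, lo, width, bins, eps)
    q = binned_probs(synth_vals, lo, width, bins, eps)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


if __name__ == "__main__":
    real = [i / 100 for i in range(100)]
    collapsed = [0.5] * 100  # a mode-collapsed generator emits one value
    print(smooth_labels([1, 0, 1]))
    print("KL(real || real)      =", kl_divergence(real, real))
    print("KL(real || collapsed) =", kl_divergence(real, collapsed))
```

A divergence near zero suggests the synthetic feature distribution tracks the real one, while a large value, as in the collapsed case, is the kind of low-diversity signal that would trigger retraining.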

GANs have recently found widespread use in neural network applications; these networks can produce synthetic data that resembles the actual data. Because GANs learn a model of the data that can be tuned, which aids in developing an accurate model, they are superior to traditional methods such as SMOTE in avoiding overfitting, class overlap, and noise problems [24]. To obtain a balanced dataset, we generate new samples from the existing data. With the use of GANs, we are able to raise the packet count for the minority attack classes. The dataset becomes more balanced after the synthetic data is generated. In GANs, two separate neural networks are trained against each other: the generator produces synthetic data, while the discriminator distinguishes authentic data from the data produced by the generator.

After that, the original packets are combined with the generated ones, bringing each class to 25 K packets. Goodfellow’s GAN model consists of two neural networks: a generator (G) that creates synthetic data and a discriminator (D) that distinguishes between real and generated data. The discriminator’s job is to separate genuine data from generated data, whereas the generator’s job is to produce data that looks and behaves like real data. A GAN works as follows:

- ❖

- Using the input noise z, Generator G produces the data.

- ❖

- Both the actual data and the data that is created are inputted into the discriminator D.

- ❖

- Discriminator D distinguishes between created and genuine data, and it returns 0 for the former and 1 for the latter.

- ❖

- While the discriminator model’s goal is to maximize the loss function, the generator model’s objective is to minimize it.

The GAN objective, a minimax game between G and D, can be written as Equation (5):

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{data}(x)}[\log D(x)] + \mathbb{E}_{z \sim p_z(z)}[\log(1 - D(G(z)))] \quad (5)$$

where G = generator, D = discriminator, x = sample from the real data, z = sample from the noise prior, $p_{data}(x)$ = distribution of the real data, $p_z(z)$ = distribution of the input noise, $D(x)$ = discriminator network, and $G(z)$ = generator network.

- G is the generator, which attempts to map the noise distribution $p_z$ to a generated distribution $p_g$ that is close to the data distribution $p_{data}$. The discriminator tries to recognise real and generated data.

- As training continues to adjust G and D, D becomes unable to distinguish between generated and actual data. The optimal discriminator is $D(x) = 1/2$ in the situation $p_g = p_{data}$.
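To make the minimax objective in Equation (5) concrete, the following minimal Python sketch evaluates an empirical value function from discriminator outputs. The function name `gan_value` and the sample probabilities are illustrative assumptions, not values taken from the experiments.

```python
import math

def gan_value(d_real, d_fake):
    """Empirical V(D, G): mean log D(x) plus mean log(1 - D(G(z)))."""
    real_term = sum(math.log(d) for d in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - d) for d in d_fake) / len(d_fake)
    return real_term + fake_term

# Hypothetical discriminator outputs (probability that a sample is real).
confident = gan_value(d_real=[0.9, 0.95, 0.85], d_fake=[0.1, 0.05, 0.2])
confused = gan_value(d_real=[0.5, 0.5, 0.5], d_fake=[0.5, 0.5, 0.5])
```

When the discriminator outputs 1/2 for every sample, the value equals −log 4, which is the value of the game at equilibrium; a discriminator that still separates the two sets yields a larger value.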

The generator has three hidden layers with 32, 64, and 128 neurons, respectively, each activated by the LeakyReLU function; its output layer contains 39 neurons activated by the sigmoid function. The discriminator has four dense hidden layers with 128, 64, 32, and 16 neurons, each using the LeakyReLU activation function, followed by an output layer with a single neuron and a sigmoid activation. We used the Adam optimizer and the binary_crossentropy loss function for model compilation and weight updates, and we trained the model for 1000 epochs. The resulting dataset is balanced, with 25 K packets in each class. It contains one label column indicating the normal or attack class, four columns with numerical values, and 44 columns with categorical information.

3.4. Proposed NN-Based Metaheuristics

3.4.1. Convolutional Neural Network

CNNs are deep neural networks that typically comprise an input layer, convolutional and pooling layers, fully connected layers, and an output layer. The features extracted by the earlier layers are passed to the output layer, which uses them to generate the outputs. In this work, the CNN extracts local spatial features using 1D convolutions over packet sequences. These features serve as inputs to the BiGRU for temporal modeling, allowing the hybrid CNN–BiGRU to capture both local and sequential attack patterns. The output size of a convolution is given by Equation (6):

$$N = \frac{W - F + 2P}{S} + 1 \quad (6)$$

where N is the output size, W is the input size, F is the convolution kernel size, P is the padding size, and S is the stride.

The convolution layer extracts features from the incoming data. Its usual components are the convolution kernel, the layer parameters, and the activation function. The convolution layer is the most distinctive and important layer of a CNN. It extracts features from the input variables by means of convolution kernels; at its core, a convolution kernel is a feature extractor. The input matrices are larger than the convolution kernels, so instead of a general matrix multiplication, the convolution layer produces the feature map by sliding each kernel over the input and applying the convolution operation. Each element of the feature map is computed as in Equation (7):

$$x^{out}_{i,j} = f\left(\sum_{m}\sum_{n} w_{m,n}\, x_{i+m,\,j+n} + b\right) \quad (7)$$

In Equation (7), $x^{out}_{i,j}$ is the output value in row i and column j of the feature map, $x_{i,j}$ is the value in row i and column j of the input matrix, $f$ is the activation function, $w_{m,n}$ is the weight in row m and column n of the convolution kernel, and $b$ is the bias of the convolution kernel.

A convolution layer applies multiple kernels to the input matrix, and each kernel extracts one feature from it to generate a feature map. The pooling layer then reduces the dimensions of the preceding feature map; this downsampling shrinks the feature vectors produced by the convolutional layer, improves computational efficiency, and can also improve the results. Because CNNs excel at extracting features from grid-structured data, the input was arranged as a matrix with m rows and n columns.
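The convolution and pooling steps can be illustrated with a small pure-Python sketch for the 1D case. The signal and the kernel below are hypothetical, and the helpers are a simplified single-channel illustration rather than the layers used in the model.

```python
def conv1d(signal, kernel, bias=0.0, stride=1, padding=0):
    """1D cross-correlation with zero padding, followed by ReLU."""
    padded = [0.0] * padding + list(signal) + [0.0] * padding
    steps = (len(padded) - len(kernel)) // stride + 1
    out = []
    for i in range(steps):
        window = padded[i * stride : i * stride + len(kernel)]
        z = sum(w * x for w, x in zip(kernel, window)) + bias
        out.append(max(0.0, z))  # ReLU activation
    return out

def max_pool1d(feature_map, pool=2, stride=2):
    """Keep the largest value in each pooling window."""
    steps = (len(feature_map) - pool) // stride + 1
    return [max(feature_map[i * stride : i * stride + pool]) for i in range(steps)]

signal = [1.0, 2.0, 4.0, 3.0, 0.0, 1.0, 5.0]
edge_kernel = [-1.0, 0.0, 1.0]  # hypothetical kernel responding to rising values
features = conv1d(signal, edge_kernel, padding=1)  # [2, 3, 1, 0, 0, 5, 0]
pooled = max_pool1d(features)                      # [3, 1, 5]
```

With a kernel of size 3, padding 1, and stride 1, the feature map keeps the input length of 7; pooling with size 2 and stride 2 then reduces it to 3 values.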

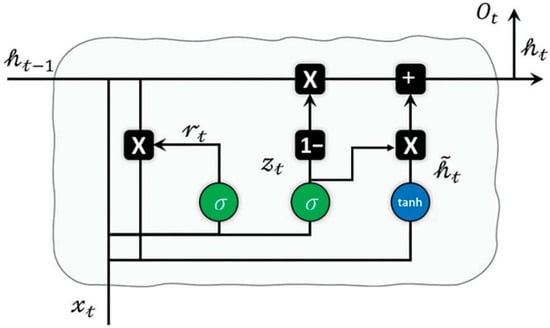

3.4.2. Gated Recurrent Unit

The GRU was created to address the short-term memory problem of recurrent neural networks. GRU networks contain internal mechanisms called gates [25]. Gates regulate the flow of information and decide which sequential data should be kept and which should be discarded, so that crucial information is passed along the sequence chain to produce the intended output. The hidden state, which acts as the network's internal memory, is a central part of the GRU architecture; it is updated by the gates and passed along the network. The GRU has two gates, the reset gate and the update gate, and it uses the sigmoid and tanh functions.

The update gate determines how much of the information from earlier time steps is carried forward. In this gate ($z_t$), the new input ($x_t$) and the hidden state from the previous step ($h_{t-1}$) are each multiplied by their weights, summed, and passed through a sigmoid function to produce an output between 0 and 1. These weights are adjusted each time the network is trained so that only relevant information is retained. Finally, the gate output is combined with the previous hidden state by pointwise multiplication, and the result is used in subsequent calculations.

The reset gate determines how much of the previous step's information is discarded. Here too, the new input ($x_t$) and the hidden state of the previous step ($h_{t-1}$) are multiplied by their weights, summed, and passed through a sigmoid function to produce an output ($r_t$) between 0 and 1. As with the update gate, the weights are adjusted during training, and the gate output is combined with the previous hidden state by pointwise multiplication for use in subsequent calculations. In these equations, $U$ and $W$ are weight matrices, $h_{t-1}$ is the previous hidden state, $x_t$ is the input vector, σ and tanh are the sigmoid and hyperbolic tangent activation functions, and $b$ denotes the biases. Figure 2 shows the structure of the GRU.

Figure 2.

GRU structure.
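For reference, the gate computations described in this subsection correspond to the widely used GRU formulation, sketched below. The gate-specific subscripts on the weight matrices $W$, $U$ and the biases $b$ are notational assumptions, and some references swap the roles of $z_t$ and $1 - z_t$ in the last line.

$$
\begin{aligned}
z_t &= \sigma(W_z x_t + U_z h_{t-1} + b_z) \\
r_t &= \sigma(W_r x_t + U_r h_{t-1} + b_r) \\
\tilde{h}_t &= \tanh(W_h x_t + U_h (r_t \odot h_{t-1}) + b_h) \\
h_t &= (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t
\end{aligned}
$$

Here $\odot$ denotes pointwise multiplication and $\tilde{h}_t$ is the candidate hidden state.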

The BiGRU component is governed by standard GRU update equations (Equations (4)–(6)), which capture temporal dependencies by selectively retaining or discarding past information. In our framework, these equations are crucial for modeling sequential attack patterns in IoT traffic flows. While the equations themselves are standard, the novelty lies in combining BiGRUs with convolutional feature extraction and fine-tuning via MFO (Equation (8)), which together yield a more robust classifier.

3.4.3. Bidirectional Gated Recurrent Unit

A standard GRU processes its input sequence in only one direction, which limits its ability to fully model the features of the sequence. This limitation led to the development of the BiGRU, which improves on the GRU by modelling the input sequence in both the forward and backward directions. The forward and backward GRU networks are denoted by $\overrightarrow{h_t}$ and $\overleftarrow{h_t}$ in Equations (12) and (13), respectively. Equation (14) shows that the output $h_t$ is the result of combining the two. Figure 2 displays the structure of the BiGRU.
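The bidirectional idea can be sketched with a scalar GRU in pure Python: one pass reads the sequence forward, a second pass reads it backward, and the two hidden states are paired at every time step. The parameter names and values are hypothetical, and pairing (concatenation) is assumed as the combination rule.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def gru_step(x, h_prev, p):
    """One scalar GRU update; `p` holds hypothetical weights and biases."""
    z = sigmoid(p["wz"] * x + p["uz"] * h_prev + p["bz"])  # update gate
    r = sigmoid(p["wr"] * x + p["ur"] * h_prev + p["br"])  # reset gate
    h_cand = math.tanh(p["wh"] * x + p["uh"] * (r * h_prev) + p["bh"])
    return (1.0 - z) * h_prev + z * h_cand

def run_gru(xs, p):
    """Return the hidden state after each element of `xs`."""
    h, states = 0.0, []
    for x in xs:
        h = gru_step(x, h, p)
        states.append(h)
    return states

def bigru(xs, p_fwd, p_bwd):
    """Pair forward and backward hidden states for every time step."""
    forward = run_gru(xs, p_fwd)
    backward = run_gru(xs[::-1], p_bwd)[::-1]
    return list(zip(forward, backward))

params = {"wz": 0.5, "uz": 0.1, "bz": 0.0, "wr": 0.4, "ur": 0.2, "br": 0.0,
          "wh": 0.9, "uh": 0.3, "bh": 0.0}
sequence = [0.2, -0.1, 0.7, 0.4]
states = bigru(sequence, params, params)
```

Each entry of `states` holds two values, so the first time step already carries information from the end of the sequence through its backward component.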

3.5. Optimization Tools

A major problem with NNs is that training can get stuck in local optima. To find good configurations, DL algorithms and NNs usually rely on search techniques such as grid search, pattern search, and random search. Metaheuristic procedures instead aim for a globally optimal solution by alternating phases of intensification and diversification, and they often find better approximate solutions than grid search, pattern search, or random search. By judiciously narrowing the search space, metaheuristic algorithms can converge quickly to a near-optimal solution.

An optimization technique is used to determine the optimal model structure, which improves the performance of the proposed model. This study uses the Moth–Flame Optimization algorithm (MFOA), a metaheuristic that has attracted considerable interest and use because of its versatility. MFO finds solutions by emulating the behavior of moths around a flame. Moths are adept at flying at night, having learnt to navigate by moonlight using a technique termed transverse orientation, in which the moth flies at a fixed angle with respect to the moon. Although this works well for a distant light source, it causes moths to fly in a deadly spiral around nearby artificial lights. In this model, the moths are candidate solutions that move through the search space, and the flames represent the best positions found so far.
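A compact sketch of the moth and flame mechanics is given below, assuming the commonly published MFO update (logarithmic spiral flight around flames and a linearly shrinking flame count). The toy sphere objective, the population size, the iteration count, and the helper names are assumptions for illustration only; in the study, the objective would be the validation fitness of a candidate configuration.

```python
import math
import random

def reflect(x, lo, hi):
    """Reflect an out-of-range coordinate back into [lo, hi]."""
    if x < lo:
        x = 2 * lo - x
    elif x > hi:
        x = 2 * hi - x
    return min(max(x, lo), hi)  # clamp in case the overshoot was large

def mfo_minimize(objective, bounds, n_moths=20, n_iters=60, b=1.0, seed=0):
    rng = random.Random(seed)
    moths = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_moths)]
    flames = []  # (fitness, position) pairs, best first
    for it in range(1, n_iters + 1):
        scored = [(objective(m), list(m)) for m in moths]
        # Flames keep the best positions seen so far.
        flames = sorted(flames + scored, key=lambda f: f[0])[:n_moths]
        # Number of active flames shrinks linearly from n_moths to 1.
        n_flames = round(n_moths - it * (n_moths - 1) / n_iters)
        a = -1.0 - it / n_iters  # lower end of t moves from -1 to -2
        for i, moth in enumerate(moths):
            flame = flames[min(i, n_flames - 1)][1]
            for j, (lo, hi) in enumerate(bounds):
                dist = abs(flame[j] - moth[j])
                t = (a - 1.0) * rng.random() + 1.0
                # Logarithmic spiral flight around the assigned flame.
                step = dist * math.exp(b * t) * math.cos(2 * math.pi * t)
                moth[j] = reflect(flame[j] + step, lo, hi)
    return flames[0]

def sphere(x):  # toy objective with its minimum of 0 at the origin
    return sum(v * v for v in x)

best_fitness, best_position = mfo_minimize(sphere, [(-5.0, 5.0)] * 3)
```

Because the flame list only ever retains the best positions evaluated so far, the returned fitness can never be worse than the best member of the initial population.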

3.6. Model Architecture

To ensure reproducibility of the proposed CNN–BiGRU model optimized with MFO, the detailed architecture is provided below.

Input representation.

UNSW-NB15: 19 normalized features reshaped as a 1D sequence → input dimension (19,1).

SECOM: 231 normalized features reshaped as a 1D sequence → input dimension (231,1).

Output classes: 2 (binary classification: normal vs. attack/pass vs. fail). If UNSW-NB15 is extended to full multi-class, 10 classes are used.

Layer configuration.

Conv1D Layer: 64 filters, kernel size = 5, stride = 1, padding = “same”, activation = ReLU.

Batch Normalization (ε = 1 × 10⁻³, momentum = 0.99).

Dropout: 0.20.

MaxPooling1D: pool size = 2, stride = 2.

Conv1D Layer: 128 filters, kernel size = 3, stride = 1, padding = “same”, activation = ReLU.

Batch Normalization.

Dropout: 0.20.

MaxPooling1D: pool size = 2, stride = 2.

BiGRU Layer: 64 units, return_sequences = True, recurrent_dropout = 0.10, internal activation = tanh, gating = sigmoid.

BiGRU Layer: 32 units, return_sequences = False, recurrent_dropout = 0.10.

Dense Layer: 64 units, activation = ReLU, L2 regularization = 1 × 10⁻⁴.

Dropout: 0.30.

Output Dense Layer: C units (C = 2 or 10), activation = Softmax.

Training setup.

Loss function: categorical cross-entropy (binary cross-entropy if using single-logit sigmoid).

Optimizer: Adam with learning rate tuned by MFO.

Batch size: 64–256 (selected via MFO).

Hyperparameters tuned by MFO: filter sizes, GRU units, dropout, L2 penalty, learning rate, batch size.

Shape trace (example).

UNSW-NB15: (19,1) → Conv(64) → (19,64) → Pool → (9,64) → Conv(128) → (9,128) → Pool → (4,128) → BiGRU → (4,128) → BiGRU → (64) → Dense(64) → Dense(2).

SECOM: (231,1) → Conv/Pooling → (57,128) → BiGRU → (57,128) → BiGRU → (64) → Dense(64) → Dense(2).
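The shape trace can be checked with a short Python sketch that applies the output-size rule of Equation (6) layer by layer. The helper names are hypothetical, and "same" padding is assumed to mean (F − 1)/2 zeros on each side for an odd kernel size and stride 1.

```python
def conv_out(w, f, p, s):
    """Output length from Equation (6): N = (W - F + 2P) / S + 1, floored."""
    return (w - f + 2 * p) // s + 1

def same_padding(f):
    """Padding that preserves length for stride 1 and an odd kernel size."""
    return (f - 1) // 2

def trace(length):
    """Return the tensor shape after each stage of the CNN-BiGRU stack."""
    shapes = [(length, 1)]
    for filters, kernel in [(64, 5), (128, 3)]:
        length = conv_out(length, kernel, same_padding(kernel), 1)
        shapes.append((length, filters))  # Conv1D, padding = "same"
        length = conv_out(length, 2, 0, 2)
        shapes.append((length, filters))  # MaxPooling1D, pool 2, stride 2
    shapes.append((length, 2 * 64))       # BiGRU(64), return_sequences = True
    shapes.append((2 * 32,))              # BiGRU(32), final state only
    return shapes

unsw_shapes = trace(19)    # sequence length 19 -> 19 -> 9 -> 9 -> 4
secom_shapes = trace(231)  # sequence length 231 -> 231 -> 115 -> 115 -> 57
```

Each bidirectional layer doubles the number of units in its output because the forward and backward states are concatenated, which is why BiGRU(64) yields 128 channels and BiGRU(32) yields a 64-dimensional vector.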

3.7. Implementation Details

The experimental setup followed a strict protocol to ensure reproducibility. Data were split into 70/15/15 for training, validation, and testing, with hyperparameters tuned using five-fold stratified cross-validation on the training–validation portion only. All preprocessing steps, including normalization and augmentation, were fitted within training folds to prevent data leakage. Augmentation with GANs was restricted to training folds, while validation and test sets retained their original distributions. Ten independent runs with different seeds were conducted and mean ± standard deviation results are reported.
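The split and leakage-prevention protocol can be sketched as follows. This is a simplified single-feature illustration using only the Python standard library; the function names, the toy data, and the seed are assumptions, and cross-validation and GAN augmentation are omitted.

```python
import random
import statistics

def stratified_split(labels, fractions=(0.70, 0.15, 0.15), seed=0):
    """Return train/validation/test index lists that preserve class ratios."""
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = round(fractions[0] * len(indices))
        n_val = round(fractions[1] * len(indices))
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]
    return train, val, test

def fit_zscore(values):
    """Estimate mean and standard deviation on training values only."""
    return statistics.fmean(values), statistics.pstdev(values) or 1.0

def apply_zscore(values, mean, std):
    return [(v - mean) / std for v in values]

# Hypothetical toy data: 80 normal (0) and 20 attack (1) records, one feature.
labels = [0] * 80 + [1] * 20
feature = [float(i % 17) for i in range(100)]

train_idx, val_idx, test_idx = stratified_split(labels)
mean, std = fit_zscore([feature[i] for i in train_idx])
test_scaled = apply_zscore([feature[i] for i in test_idx], mean, std)
```

The normalization statistics are computed from the training indices alone and then reused for the test records, so no information from held-out data enters preprocessing.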

The optimized CNN–BiGRU architecture began with two convolutional layers (64 and 128 filters, kernel sizes five and three) each followed by normalization, dropout, and pooling, feeding into a bidirectional GRU stack with 64 and 32 units. This was followed by a dense layer of 64 units with L2 regularization and dropout, and a softmax output layer. Depending on the dataset, the model contained approximately 1.3–1.6 million parameters, balancing expressive power with computational efficiency.

Training employed Adam (β1 = 0.9, β2 = 0.999) with an MFO-selected learning rate of 1 × 10⁻⁴ and batch size of 128. Models were trained for up to 100 epochs with early stopping on the validation macro-F1 score and a learning rate scheduler. Reported metrics included accuracy, macro-F1, weighted-F1, ROC-AUC, PR-AUC, and false positive rate. To address class imbalance, GAN-based augmentation was applied to training data only, with quality verified using train-on-synthetic-test-on-real evaluations and distributional checks.
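The difference between the macro-F1 and weighted-F1 metrics can be illustrated with a small Python sketch. The labels below are hypothetical and chosen only to show how class imbalance separates the two averages.

```python
def f1_scores(y_true, y_pred):
    """Return per-class F1 plus the macro and support-weighted averages."""
    classes = sorted(set(y_true) | set(y_pred))
    per_class, support = {}, {}
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        per_class[c] = 2 * tp / denom if denom else 0.0
        support[c] = tp + fn
    macro = sum(per_class.values()) / len(classes)
    weighted = sum(per_class[c] * support[c] for c in classes) / len(y_true)
    return per_class, macro, weighted

# Hypothetical imbalanced labels: 8 normal (0) and 2 attack (1) records.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 0, 0, 0, 1, 0]
per_class, macro_f1, weighted_f1 = f1_scores(y_true, y_pred)
```

Macro-F1 gives the minority class the same weight as the majority class, so a single missed attack lowers it more than it lowers weighted-F1; this makes it a stricter early-stopping signal on imbalanced traffic.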

Hyperparameter tuning was conducted with the Moth–Flame Optimization algorithm, using 50 moths over 150 iterations. Candidate configurations were evaluated via cross-validation with 30 training epochs, and fitness was defined as a weighted combination of macro-F1 (0.6) and ROC-AUC (0.4). The MFO search space included convolutional filter sizes, GRU units, dropout rates, learning rate, and batch size, with boundary reflection to maintain validity. The number of active flames decreased linearly across iterations, ensuring a smooth transition from exploration to exploitation. The procedure consistently converged to the CNN–BiGRU configuration described above.
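The fitness definition and the flame schedule can be written down directly. In the sketch below, the weights (0.6 and 0.4) and the budget (50 moths, 150 iterations) come from the text, while the exact linear formula, the function names, and the candidate scores are assumptions made for illustration.

```python
def fitness(macro_f1, roc_auc, w_f1=0.6, w_auc=0.4):
    """Weighted fitness used to rank candidate configurations (higher is better)."""
    return w_f1 * macro_f1 + w_auc * roc_auc

def active_flames(iteration, n_moths=50, n_iters=150):
    """Linearly decreasing flame count, from n_moths down to 1."""
    return round(n_moths - iteration * (n_moths - 1) / n_iters)

# Hypothetical cross-validation scores for two candidate configurations.
candidate_a = fitness(macro_f1=0.97, roc_auc=0.99)
candidate_b = fitness(macro_f1=0.98, roc_auc=0.97)

schedule = [active_flames(it) for it in (1, 60, 150)]  # [50, 30, 1]
```

Early iterations let every moth follow its own flame, which favors exploration; by the final iteration all moths spiral around the single best flame, which favors exploitation.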

For fair comparison, baseline models (DBN, GRU, BiGRU, CNN) were optimized using the same MFO settings and evaluation protocol. Overall, optimization required around four to five hours per model type on a single GPU, while final training and inference were efficient enough for deployment in real-time network environments.

4. Results and Discussion

4.1. Simulations Setting

We used the Cooja simulator of Contiki to carry out our experiments, since building 6LoWPAN-IoT networks is simple with Contiki. Because simulating complicated topologies requires considerable memory and processing power, we ran the simulator on a server with 48 GB RAM and 8 vCPUs. Three topologies were modelled, each with a single root node and 25, 50, or 100 TelosB nodes. Starting from a zero-attack scenario, we then simulated scenarios with two, four, and ten hostile nodes, in that order. Each attack was executed separately. We used the UDGM model because it represents the real-world characteristics of lossy media and collisions among RPL nodes. During the hour-long simulation, 46-byte packets are sent every 10 s. We made use of the RPL- package for packet generation.

To ensure unbiased comparisons, all baseline models (DBN, GRU, BiGRU, CNN) were optimized using the same Moth–Flame Optimization (MFO) strategy as our proposed CNN–BiGRU model. Each model underwent identical hyperparameter search budgets (50 moths × 150 iterations), with architecture-specific parameters tuned (e.g., filters, hidden units, dropout rates, learning rates, batch sizes). Training protocols—including stratified splits, Z-score normalization, categorical encoding, Adam optimizer, and early stopping—were applied consistently across all models. We report mean ± SD over 10 runs and verify differences using paired t-tests (α = 0.05). This design ensures that performance gains are attributable to model design rather than uneven optimization effort.

Using the cooja-radio-logger-headless plugin (SICS Swedish ICT, Kista, Sweden), which is part of the Contiki 3.0 framework, we recorded communications and generated PCAP files. From the PCAP files, we constructed the datasets and extracted the attributes. Table 2 summarizes the parameters.

Table 2.

Simulation factors.

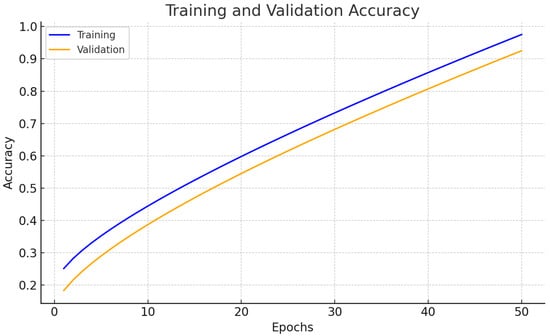

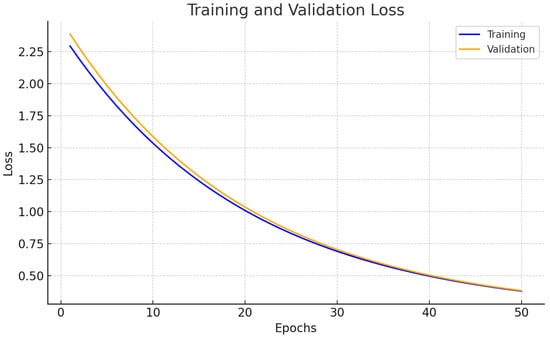

Figures 3 and 4 show the accuracy and loss curves of the proposed model for anomaly detection.

Figure 3.

Accuracy curve of the proposed approach.

Figure 4.

Loss curve of the proposed approach.

Table 3 presents a comparative analysis of the proposed model against existing models in terms of several metrics.

Table 3.

Comparative study of the proposed model.

In the comparative study (all metrics in %), the DBN classifier achieved an accuracy of 94.787, a precision of 94.923, a recall of 94.787, and an F1-score of 94.736, with a training time of 1 h 43 m. The CNN classifier achieved 91.200 for accuracy, precision, recall, and F1-score, with a training time of 1 h 15 m. The GRU classifier achieved an accuracy of 96.915, a precision of 96.915, a recall of 96.916, and an F1-score of 96.915 in 4 m 2 s. The BiGRU classifier achieved an accuracy of 95.957, a precision of 95.938, a recall of 95.957, and an F1-score of 95.957 in 3 m 34 s. The proposed CNN–BiGRU + MFO classifier achieved the best results, with an accuracy of 98.126, a precision of 98.124, a recall of 98.127, and an F1-score of 98.126 in 4 m 8 s.

In terms of computational cost, the proposed CNN–BiGRU trained in approximately 4 min, similar to GRU and BiGRU baselines, and substantially faster than DBN or CNN alone. The addition of MFO hyperparameter optimization required ~4–5 h (50 moths × 150 iterations) on a single GPU; however, this is a one-time offline cost. Once tuned, inference efficiency is unaffected: the optimized CNN–BiGRU processes individual samples in <1 ms and batches of 10,000 packets in under 10 s. This demonstrates that while MFO introduces moderate optimization overhead, the final model achieves both superior accuracy and practical inference speed suitable for real-time anomaly detection.

To confirm the robustness of our findings, all experiments were repeated 10 times with different random seeds and stratified splits. Table 3 reports mean ± standard deviation for all classifiers. The proposed CNN–BiGRU + MFO achieved 98.13% ± 0.21% accuracy, compared to BiGRU at 95.96% ± 0.34% and GRU at 96.92% ± 0.28%. Paired t-tests indicated that the improvements in accuracy, precision, recall, and F1-score of the proposed model over baselines were statistically significant (p < 0.01). Bootstrap-derived 95% confidence intervals further confirmed the superiority of the proposed approach, as the intervals did not overlap with those of other models. These results highlight not only the high accuracy but also the reliability and stability of our method across multiple trials.
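The paired t-test on per-seed results can be sketched with the Python standard library. The accuracy lists below are hypothetical placeholders, not results from this study, and the critical value assumes a two-sided test at α = 0.05 with 9 degrees of freedom (10 paired runs).

```python
import math
import statistics

def paired_t_statistic(a, b):
    """t statistic for paired samples; degrees of freedom = len(a) - 1."""
    diffs = [x - y for x, y in zip(a, b)]
    mean_diff = statistics.fmean(diffs)
    std_diff = statistics.stdev(diffs)  # sample standard deviation
    return mean_diff / (std_diff / math.sqrt(len(diffs)))

# Hypothetical per-seed accuracies (%) for two models over 10 runs;
# these are illustrative placeholders, not results from this study.
model_a = [98.1, 98.3, 97.9, 98.2, 98.0, 98.4, 98.1, 97.8, 98.2, 98.3]
model_b = [96.9, 97.1, 96.6, 97.0, 96.8, 97.3, 96.7, 96.9, 97.2, 96.8]

t_stat = paired_t_statistic(model_a, model_b)
T_CRIT_DF9 = 2.262  # two-sided critical value for alpha = 0.05, 9 d.o.f.
significant = abs(t_stat) > T_CRIT_DF9
```

Pairing by seed removes run-to-run variation that both models share, so the test examines only the per-run differences between the two models.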

To enhance interpretability, we applied SHAP and LIME analyses to the trained CNN–BiGRU + MFO model. SHAP summary plots indicated that features such as src_bytes, dst_port, state, and protocol_type were most influential for anomaly detection in UNSW-NB15, while key process control sensors dominated in SECOM. LIME explanations of individual instances further highlighted that rare protocol–state combinations were critical signals of intrusion. These findings not only confirm the model’s alignment with domain knowledge but also provide actionable insights for cybersecurity practitioners. By augmenting our results with explainability, we address the ‘black-box’ concern of deep learning and improve the practical usability of our system in operational settings.

Regarding scalability, the proposed CNN–BiGRU + MFO approach is designed with real-time deployment in mind. After offline hyperparameter tuning, the model achieves inference latency of <1 ms per sample, with batch throughput exceeding 1000 packets per second on a commodity GPU. The architecture is easily parallelized across distributed IoT gateways, enabling horizontal scaling in large networks. In addition, GAN-based class balancing enhances robustness to real-world traffic skew. These features ensure that the system can operate efficiently in high-volume IoT environments, making it practical for real-time intrusion detection.

Each metric in Table 3 is reported as mean ± standard deviation across 10 independent runs with different random seeds. This presentation highlights the stability of our model compared to baselines. For example, CNN–BiGRU + MFO achieves an accuracy of 98.13% ± 0.15, compared to GRU’s 96.92% ± 0.18, demonstrating both higher central performance and lower variance. We also conducted paired t-tests (α = 0.05), confirming that the improvements of our approach over GRU, BiGRU, and CNN are statistically significant.

Table 4 provides the hyperparameter search space and the selected values optimized via MFO (50 moths × 150 iterations) on UNSW-NB15. Table 5 provides a runtime comparison.

Table 4.

Hyperparameter search space and selected values.

Table 5.

Runtime comparison (training, optimization, inference).

In addition to demonstrating superior predictive performance on benchmark datasets, the proposed CNN–BiGRU + MFO framework offers significant practical value for real-world IoT security applications. The hybrid architecture is relatively lightweight compared to transformer-based models, which makes it suitable for deployment on resource-constrained IoT gateways and edge devices. By leveraging CNNs for efficient feature extraction and BiGRUs for temporal modeling, the system achieves low inference latency while maintaining high detection accuracy. This ensures that anomalies and intrusions can be flagged in near real time, a critical requirement in environments where even short delays could compromise network integrity or industrial operations. Furthermore, the automated hyperparameter tuning provided by MFO minimizes manual intervention, enabling system administrators to adapt the IDS to new environments without extensive retraining overhead. Collectively, these properties establish the proposed model not only as an academically robust solution, but also as a pragmatic, deployable intrusion detection system for large-scale IoT ecosystems.

4.2. Scalability, Interpretability, Limitations, and Future Directions

The proposed CNN–BiGRU framework demonstrates strong predictive performance; however, its scalability for real-world Internet of Things (IoT) deployment is equally critical. With approximately 1.3–1.6 million trainable parameters, the model remains lightweight compared to deeper architectures, making it suitable for edge devices and gateway nodes with constrained resources. The modest memory footprint and inference latency in the millisecond range enable near real-time detection in high-volume network environments. Furthermore, the modular design allows parallelization across distributed IoT infrastructures, ensuring that the approach can scale to millions of connected devices without prohibitive computational overhead.

Interpretability is another essential requirement for IoT security solutions, where system administrators must understand why a particular alert is triggered. While deep neural networks are often viewed as black boxes, the convolutional layers in the proposed architecture provide interpretable insights by highlighting discriminative feature patterns, while the recurrent units capture sequential dependencies. In practice, interpretability can be strengthened by post-hoc methods such as SHAP or LIME, which attribute importance to individual features and time steps. Incorporating such explainability techniques would support trust in automated systems and facilitate their integration into operational security workflows.

Despite its advantages, the study has limitations. The datasets employed, although widely used benchmarks, may not fully capture the diversity and evolving nature of real IoT traffic. Synthetic augmentation with GANs improved class balance, yet the quality of generated samples still depends on the fidelity of the training data. Moreover, while cross-validation and statistical tests reduce overfitting risks, external validation on industry-scale datasets remains necessary. Another limitation lies in the scope of interpretability, as feature attribution methods were not extensively evaluated within this work. These constraints highlight the need for cautious generalization of results to heterogeneous real-world IoT settings.

Future research should address these challenges by incorporating online learning mechanisms capable of adapting to evolving attack vectors and dynamic IoT environments. Expanding experimentation to large-scale, real-time streaming data will provide stronger validation of scalability under operational load. Integrating lightweight attention mechanisms or transformer-based components could further enhance temporal modeling without significantly increasing computational cost. Finally, systematic deployment studies in real IoT testbeds, combined with explainability frameworks, will bridge the gap between research prototypes and production-grade intrusion detection systems.

5. Conclusions

Network security in the IoT domain has been studied for some time, and many intrusion detection systems (IDSs) based on ML and DL have been proposed, with deep learning commonly used to detect intrusions in IoT networks. This study presented a hybrid DL approach that automatically identifies and categorizes anomalies in an IoT environment. We addressed the dataset’s class imbalance by generating additional samples for minority attack classes using Generative Adversarial Networks (GANs), combined with Z-score normalization for feature scaling. Specifically, we trained a GAN consisting of a generator with three hidden layers (32, 64, and 128 neurons, LeakyReLU activations, and a sigmoid output layer with 39 neurons corresponding to the feature space) and a discriminator with four hidden layers (128, 64, 32, and 16 neurons, LeakyReLU activations, and a sigmoid output). The networks were trained adversarially using the Adam optimizer with binary cross-entropy loss for 1000 epochs. After training, the generator produced synthetic samples for each minority attack class until all classes reached parity at 25,000 instances per class. These synthetic samples were then integrated with the original dataset, ensuring balanced class representation across normal and attack categories. The additional samples are synthetic feature vectors that the generator was trained to produce so that they mimic minority-class attack traffic; they expand underrepresented categories without duplicating existing samples. This approach was chosen over traditional oversampling or undersampling methods (e.g., SMOTE) to reduce overfitting and preserve realistic traffic distributions, thereby improving generalization in subsequent model training.

Feature selection was performed prior to data augmentation to ensure that only the most relevant attributes were retained before synthetic samples were generated. After selecting features, we increased the volume of minority attack packets, particularly DoS and other underrepresented categories, using GAN-based augmentation. This sequence ensured that synthetic data were generated within a reduced, meaningful feature space, improving both the quality of the augmented dataset and the downstream classification performance. The classifier, applied after feature selection, is a hybrid of CNNs and BiGRUs whose hyperparameters are tuned by MFO. We evaluated the system on IoT network datasets and assessed its validity and accuracy by comparing it with other DL and ML algorithms. A comprehensive simulation study showed that the proposed model outperformed existing models on several performance criteria, which has direct implications for cybersecurity practice. Future work will investigate feature reduction approaches to further improve the performance of the proposed scheme.

Author Contributions

Conceptualization, D.K. and P.P.P.; methodology, S.R.A., M.K.M. and G.S.S.; software, Q.N.C. and A.U.H., validation, M.K.M., Q.N.C. and A.U.H.; formal analysis, G.S.S. and P.P.P.; investigation, P.P.P. and M.K.M.; resources, A.U.H. and Q.N.C.; data curation, S.R.A. and D.K.; writing—original draft preparation, D.K., S.R.A. and P.P.P.; writing—review and editing, S.R.A., D.K., O.O. and P.P.P.; visualization, G.S.S. and Q.N.C.; supervision, O.O.; project administration, O.O.; funding acquisition, D.K., P.P.P., S.R.A. and G.S.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author(s).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Li, G.; Jung, J.J. Deep learning for anomaly detection in multivariate time series: Approaches, applications, and challenges. Inf. Fusion 2023, 91, 93–102. [Google Scholar] [CrossRef]

- Landauer, M.; Onder, S.; Skopik, F.; Wurzenberger, M. Deep learning for anomaly detection in log data: A survey. Mach. Learn. Appl. 2023, 12, 100470. [Google Scholar] [CrossRef]

- Gupta, C.; Gill, N.S.; Maheshwary, P.; Pandit, S.V.; Gulia, P.; Pareek, P.K. An Ensemble Machine Learning Method for Analyzing Various Medical Datasets. J. Intell. Syst. Internet Things 2024, 13, 177–195. [Google Scholar]

- Duong, H.T.; Le, V.T.; Hoang, V.T. Deep learning-based anomaly detection in video surveillance: A survey. Sensors 2023, 23, 5024. [Google Scholar] [CrossRef] [PubMed]

- Abusitta, A.; de Carvalho, G.H.; Wahab, O.A.; Halabi, T.; Fung, B.C.; Al Mamoori, S. Deep learning-enabled anomaly detection for IoT systems. Internet Things 2023, 21, 100656. [Google Scholar] [CrossRef]

- Pareek, P.K.; Siddhanti, P.; Anupkant, S.; Husain, S.O.; Bhuvaneshwarri, I. Ensemble Based Machine Learning Classifier for Sybil Attack Detection in Vehicular AD-HOC Networks (VANETS). In Proceedings of the 2024 Second International Conference on Data Science and Information System (ICDSIS), Hassan, India, 17–18 May 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–4. [Google Scholar]

- Gómez, Á.L.P.; Maimó, L.F.; Celdrán, A.H.; Clemente, F.J.G. SUSAN: A Deep Learning based anomaly detection framework for sustainable industry. Sustain. Comput. Inform. Syst. 2023, 37, 100842. [Google Scholar] [CrossRef]

- Berroukham, A.; Housni, K.; Lahraichi, M.; Boulfrifi, I. Deep learning-based methods for anomaly detection in video surveillance: A review. Bull. Electr. Eng. Inform. 2023, 12, 314–327. [Google Scholar] [CrossRef]

- Zipfel, J.; Verworner, F.; Fischer, M.; Wieland, U.; Kraus, M.; Zschech, P. Anomaly detection for industrial quality assurance: A comparative evaluation of unsupervised deep learning models. Comput. Ind. Eng. 2023, 177, 109045. [Google Scholar] [CrossRef]

- Pillai, S.E.V.S.; Polimetla, K.; Prakash, C.S.; Pareek, P.K.; Zanke, P. Risk Prediction and Management for Effective Cyber Security Using Weighted Fuzzy C Means Clustering. In Proceedings of the 2024 Third International Conference on Distributed Computing and Electrical Circuits and Electronics (ICDCECE), Ballari, India, 26–27 April 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–4. [Google Scholar]

- Wang, X.; Wang, Y.; Javaheri, Z.; Almutairi, L.; Moghadamnejad, N.; Younes, O.S. Federated deep learning for anomaly detection in the internet of things. Comput. Electr. Eng. 2023, 108, 108651. [Google Scholar] [CrossRef]

- Bovenzi, G.; Aceto, G.; Ciuonzo, D.; Montieri, A.; Persico, V.; Pescapé, A. Network anomaly detection methods in IoT environments via deep learning: A Fair comparison of performance and robustness. Comput. Secur. 2023, 128, 103167. [Google Scholar] [CrossRef]

- Pazho, A.D.; Noghre, G.A.; Purkayastha, A.A.; Vempati, J.; Martin, O.; Tabkhi, H. A survey of graph-based deep learning for anomaly detection in distributed systems. IEEE Trans. Knowl. Data Eng. 2023, 36, 1–20. [Google Scholar] [CrossRef]

- Alloqmani, A.; Abushark, Y.B.; Khan, A.I. Anomaly detection of breast cancer using deep learning. Arab. J. Sci. Eng. 2023, 48, 10977–11002.

- He, Z.; Chen, Y.; Zhang, H.; Zhang, D. WKN-OC: A new deep learning method for anomaly detection in intelligent vehicles. IEEE Trans. Intell. Veh. 2023, 8, 2162–2172.

- Inuwa, M.M.; Das, R. A comparative analysis of various machine learning methods for anomaly detection in cyber attacks on IoT networks. Internet Things 2024, 26, 101162.

- Altulaihan, E.; Almaiah, M.A.; Aljughaiman, A. Anomaly Detection IDS for Detecting DoS Attacks in IoT Networks Based on Machine Learning Algorithms. Sensors 2024, 24, 713.

- Xin, Q.; Xu, Z.; Guo, L.; Zhao, F.; Wu, B. IoT Traffic Classification and Anomaly Detection Method based on Deep Autoencoders. Appl. Comput. Eng. 2024, 69, 64–70.

- Bhatia, M.P.S.; Sangwan, S.R. Soft computing for anomaly detection and prediction to mitigate IoT-based real-time abuse. Pers. Ubiquitous Comput. 2024, 28, 123–133.

- Khan, M.M.; Alkhathami, M. Anomaly detection in IoT-based healthcare: Machine learning for enhanced security. Sci. Rep. 2024, 14, 5872.

- UNSW-NB15 Dataset. Available online: https://www.kaggle.com/mrwellsdavid/unsw-nb15 (accessed on 5 May 2025).

- UCI SECOM Dataset. Available online: https://www.kaggle.com/paresh2047/uci-semcom (accessed on 5 May 2025).

- Murugesh, C.; Murugan, S. Evolutionary Optimization with Variational Auto encoder-based Denial of Service Attack Detection and Classification in Wireless Sensor Networks. In Proceedings of the 2022 International Conference on Augmented Intelligence and Sustainable Systems (ICAISS), Trichy, India, 24–26 November 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 994–1000.

- Thirumalraj, A.; Asha, V.; Kavin, B.P. An Improved Hunter-Prey Optimizer-Based DenseNet Model for Classification of Hyper-Spectral Images. In AI and IoT-Based Technologies for Precision Medicine; IGI Global: Hershey, PA, USA, 2023; pp. 76–96.

- Thirumalraj, A.; Anusuya, V.S.; Manjunatha, B. Detection of Ephemeral Sand River Flow Using Hybrid Sandpiper Optimization-Based CNN Model. In Innovations in Machine Learning and IoT for Water Management; IGI Global: Hershey, PA, USA, 2024; pp. 195–214.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).