1. Introduction

Cybersecurity has emerged as a critical concern in the digital era due to increasingly sophisticated cyber threats targeting essential infrastructures, private organizations, and government institutions worldwide. Incidents involving ransomware, data breaches, and targeted cyber-attacks have underscored the urgent need for robust security systems capable of detecting and preventing threats in real time [1,2]. To respond effectively to these threats, artificial intelligence (AI) and machine learning (ML) techniques have become indispensable tools in modern cybersecurity frameworks. These technologies provide advanced capabilities for real-time intrusion detection, anomaly detection, threat classification, and threat intelligence analysis by processing vast volumes of security data swiftly and accurately [3,4].

Despite their proven efficacy, AI-driven cybersecurity systems introduce several new challenges related to trust and reliability. AI models, particularly those based on deep learning algorithms, are vulnerable to adversarial attacks and data manipulations, which can compromise model integrity and produce misleading or inaccurate security alerts [5,6]. Additionally, biases introduced during model training or through improper updates can severely undermine the trustworthiness of AI systems, leading to erroneous decisions that may cause significant damage or compliance violations, especially within high-stakes environments like finance, healthcare, and government institutions [7,8].

Addressing these critical concerns requires ensuring not only the accuracy of AI models but also their integrity, provenance, and accountability. One promising solution to these problems is the integration of blockchain technology. Blockchain provides an immutable, decentralized, and transparent record-keeping mechanism that enhances trust in AI-driven cybersecurity systems by securely logging the provenance and operational history of AI models.

Recent research by Malhotra et al. demonstrated the capability of blockchain technology to ensure traceability of AI model activities [9]. Surveys conducted by Ressi et al. and Martinez et al. further underscored the potential for blockchain to address critical requirements for auditability and accountability within AI-enhanced cybersecurity systems [10,11]. However, despite these advances, current literature lacks practical studies demonstrating full end-to-end integration of blockchain-based logging within operational, real-time AI security workflows.

While recent studies have explored the integration of AI and blockchain for various security applications, most existing implementations remain at a conceptual or simulation level, lacking real-time operational validation [9,10,11]. Furthermore, many proposed architectures focus narrowly on single functionalities, such as logging or inference, without demonstrating comprehensive end-to-end systems that incorporate detection, decision logging, metadata anchoring, and security evaluation in a cohesive manner. There is also a noticeable gap in empirical evaluations using real-world or benchmark datasets that assess not only detection accuracy but also system latency, throughput, and security robustness under stress conditions. Additionally, few studies have addressed the practical challenges of deploying such systems in constrained environments, including the implications of blockchain-related transaction overheads, privacy trade-offs, and compliance with data protection regulations. This paper seeks to address these limitations by developing and testing a fully integrated, real-time prototype that combines a CNN-based threat detection model with a permissioned blockchain for secure and auditable metadata logging in cybersecurity contexts.

Motivated by this significant research gap, our study introduces a novel prototype integrating an advanced AI-based anomaly detection module with a permissioned Ethereum-compatible blockchain. The choice of Ethereum technology was driven by its proven reliability, scalability, and compatibility with advanced smart contract functionalities, which provide robust security and accountability features suitable for regulated and sensitive environments [12]. Our system securely logs comprehensive AI model metadata, including version identifiers, cryptographic data hashes, alert classifications, and timestamps. A dedicated Solidity smart contract ensures secure and structured metadata storage, while a Flask-based REST API facilitates smooth integration between AI modules and the blockchain. This combination significantly strengthens forensic traceability, transparency, and accountability of AI-driven decisions in cybersecurity operations.

The primary objectives of this research are to (1) demonstrate the feasibility of blockchain integration for securing AI metadata logs, (2) evaluate the prototype’s effectiveness in ensuring data immutability and auditability, and (3) assess the performance trade-offs associated with blockchain integration within real-time cybersecurity contexts.

The remainder of this paper is structured as follows.

Section 2 provides a detailed review of related work, identifying key research gaps and contributions in the field.

Section 3 thoroughly describes the system architecture, including AI model characteristics, smart contract functionalities, and the integration framework.

Section 4 presents experimental results covering system performance, detection accuracy, latency measurements, and security assessment.

Section 5 offers an in-depth discussion of the findings, including practical implications, limitations, and opportunities for improvement.

Section 5 presents the main conclusions drawn from the study, highlighting its contributions to the field of AI and blockchain integration in cybersecurity. The paper ends with specific recommendations and future research directions aimed at improving scalability, privacy, and applicability in real-world deployments.

1.1. Literature Review

This section reviews the evolving research landscape surrounding the integration of artificial intelligence (AI) and blockchain in cybersecurity systems. It is structured into thematic subsections to synthesize current findings, identify limitations, and highlight critical gaps that motivate the need for the present work.

1.2. AI and Graph-Based Techniques in Cybersecurity

The application of AI, particularly deep learning and graph-based techniques, has transformed cybersecurity by enabling scalable intrusion detection, anomaly analysis, and threat classification. Recent work by Ozkan-Okay et al. [4] demonstrates the effectiveness of machine learning techniques in detecting cyber threats with high accuracy across a variety of network environments. Similarly, Mohamed [3] presents a review of AI models tailored for security use cases, emphasizing convolutional neural networks (CNNs), recurrent neural networks (RNNs), and hybrid frameworks. Graph-based approaches, including graph neural networks (GNNs) and graph embeddings, have been employed to model relational data in network traffic, enhancing the detection of complex attack patterns. However, these models often lack built-in mechanisms for post-prediction verification or tamper-evident logging, limiting their suitability in high-assurance environments.

Adversarial machine learning introduces further complexity, as attackers can craft subtle inputs to mislead AI systems. Javed et al. [5] show that deep learning models in critical applications such as healthcare and finance can be manipulated to produce incorrect outputs, raising serious trust concerns. While these vulnerabilities are well-documented, there has been limited progress in coupling adversarially robust AI systems with transparent, immutable logging mechanisms to ensure accountability and forensic traceability in real-world deployments.

1.3. AI-Powered Smart Contract Security

The vulnerability of smart contracts remains a major concern in blockchain systems, especially in decentralized applications (dApps V1.0) and financial networks. Researchers have proposed AI-driven tools to identify vulnerabilities in smart contract code. Jain and Mitra [2] developed a multi-layered model using transformers and convolutional layers to detect code-level exploits in Ethereum smart contracts with high precision. Malhotra et al. [9] extend this work by embedding explainability features into AI detection systems and anchoring validation results on-chain using blockchain-based proof-of-authenticity protocols. However, these models are often evaluated in static settings and are rarely integrated with live logging systems or operational security frameworks. Furthermore, they typically focus on the contracts themselves and do not extend into AI–blockchain interactions for broader cybersecurity applications.

1.4. Blockchain-Enabled AI for Intrusion Detection

A growing number of studies explore the use of blockchain [13] to improve transparency and trust in AI-driven intrusion detection systems (IDS). For example, Aliyu et al. [14] propose a collaborative framework in which blockchain securely stores intrusion alerts generated by distributed AI agents. Their system achieved over 92 percent accuracy and demonstrated low false-positive rates. In a healthcare context, Meherj et al. [15,16] integrated blockchain with AI classifiers and IPFS for log storage, achieving near-perfect detection performance in Internet of Healthcare Things (IoHT) environments. However, these solutions [17,18] often involve asynchronous logging or are applied post-analysis, meaning the AI decision itself is not verifiably recorded in real time. This limits auditability and exposes a trust gap [19], especially in regulated domains where traceable accountability is mandatory.

Recent advances demonstrate the growing trend towards real-time AI–blockchain integration in domain-specific applications such as autonomous vehicles and vehicular networks, yet still fail to address cybersecurity scenarios comprehensively. For instance, Bendiab et al. [20] design a framework that couples LSTM-based anomaly detection with on-chain logging for multi-sensor data streams in autonomous vehicles, enabling instantaneous response using smart contracts. Similarly, Anand et al. [21] apply CNN and LSTM models to secure Vehicular Ad Hoc Networks (VANETs), ensuring transparent, tamper-proof recording of alerts. While these systems illustrate feasibility, they remain domain-specific and have yet to be adapted to broader cybersecurity environments, particularly those involving network-wide threat detection using heterogeneous data streams.

Efforts to integrate blockchain with AI for industrial IoT and digital twin systems reveal powerful conceptual synergies, though practical security deployments remain underdeveloped. Benedictis et al. [22] propose a hybrid architecture that combines digital twins, AI, and blockchain to automate anomaly detection while incorporating privacy-preserving zero-knowledge proofs and smart contract logic for decentralized incident response. On a similar note, Thaku et al. [23,24] deploy a lightweight DNN alongside private Ethereum smart contracts in a rural water management system, achieving usable latency and throughput. These studies show that AI–blockchain systems can operate in constrained environments, yet their focus is largely on physical cyber infrastructure rather than AI model integrity, provenance, or lifecycle traceability in enterprise-level cybersecurity systems.

1.5. Secure Data Sharing and Hybrid Architectures

Frameworks like AICyber-Chain have been introduced to support secure collaborative data sharing in cybersecurity, particularly across organizational boundaries [16]. These systems rely on blockchain to enforce access control, while AI processes threat intelligence. Hybrid storage architectures combining off-chain IPFS and on-chain metadata have been developed to balance efficiency and integrity. Nonetheless, challenges persist in maintaining synchronization between on-chain and off-chain data, ensuring low-latency responses, and securing private information. Kaur et al. [17] argue that trust in AI systems must extend beyond algorithmic performance to include transparency, auditability, and data provenance, areas where current systems fall short.

1.6. Regulatory, Privacy, and Ethical Considerations

The integration of blockchain with AI raises difficult questions about privacy and regulatory compliance. Blockchain’s immutability clashes with regulations such as the General Data Protection Regulation (GDPR), which grants individuals the right to request data erasure. Albahri et al. [18] explore how privacy-preserving mechanisms like differential privacy, federated learning, and secure multiparty computation can be integrated into AI systems to enhance trustworthiness. Ressi et al. [10] argue for the use of zero-knowledge proofs and verifiable credentials within blockchain-based AI pipelines to mitigate compliance risks. However, most of these proposals remain theoretical or are tested in narrowly scoped simulations. Very few studies deploy such systems in a unified, operational AI–blockchain environment.

Another critical strand intersects privacy-preserving machine learning and blockchain, an essential concern in regulated environments. Jain et al. [19] introduce a novel framework that combines homomorphic encryption with federated learning and blockchain-based alert logging, delivering encrypted-model training alongside verifiable attack detection without compromising data privacy. This system achieves near-equivalent accuracy to unprotected models while ensuring cryptographic traceability. Despite the technical elegance of this approach, the integration of such secure learning protocols into real-time AI-blockchain logging for operational cybersecurity systems remains unexplored, particularly in environments where regulatory compliance and forensic audits are paramount.

1.7. Gaps in Real-Time Integration and Lifecycle Auditing

Despite the richness of existing literature, several gaps remain. Most notably, few implementations anchor AI prediction outputs, metadata, and alert decisions directly onto a blockchain in real time. Existing models often rely on centralized logs or external repositories, which are susceptible to tampering and offer limited forensic integrity [11,12]. This undermines the credibility of AI-driven responses in high-risk environments. While blockchain is occasionally used for batch logging or data timestamping, true real-time synchronization of AI outputs with blockchain-based storage is rarely demonstrated.

Additionally, the operational integration of AI and blockchain components remains fragmented. Systems that claim to offer integration typically involve loosely coupled modules where blockchain transactions are processed asynchronously after AI inference [14]. This delay not only weakens auditability but also increases latency, making the architecture unsuitable for time-sensitive security applications. Comprehensive evaluation of such systems, including their performance under stress, their resistance to adversarial inputs, and their behavior during infrastructure failure, is largely absent from the literature.

Finally, there is a lack of secure lifecycle auditing for AI models in current blockchain-integrated cybersecurity systems. Most frameworks neglect to log training data identifiers, version histories, update timestamps, and configuration changes in a verifiable manner. As highlighted by Martinez et al. [11], full lifecycle logging is essential for reproducibility, compliance, and trust in AI-driven decision systems. However, there is minimal work demonstrating secure model version tracking or integrated update audits using blockchain. Furthermore, practical integration of privacy-preserving techniques such as zero-knowledge proofs or rollups for AI metadata remains rare in operational systems.

The reviewed literature indicates ongoing interest in AI–blockchain convergence for cybersecurity but highlights persistent gaps in real-time integration, lifecycle auditing, and deployment realism. Most studies either propose theoretical frameworks or simulate component interactions without validating performance, traceability, or security in real-world environments. To bridge this gap, our study presents a fully functional prototype that integrates a CNN-based anomaly detection model with a permissioned Ethereum-compatible blockchain, enabling real-time, tamper-proof logging of AI decisions and metadata. Our work addresses shortcomings in performance benchmarking, trust anchoring, and forensic auditability, establishing a practical foundation for secure and transparent AI systems in cybersecurity.

2. Methodology

This section outlines the complete design and implementation of the integrated AI–blockchain system, detailing each component, including the system architecture, AI/ML modules, smart contract functions, integration mechanisms, testing procedures, security assessments, and deployment evaluations. This methodology enables tamper-resistant, real-time logging of cybersecurity decisions.

2.1. System Architecture and Design

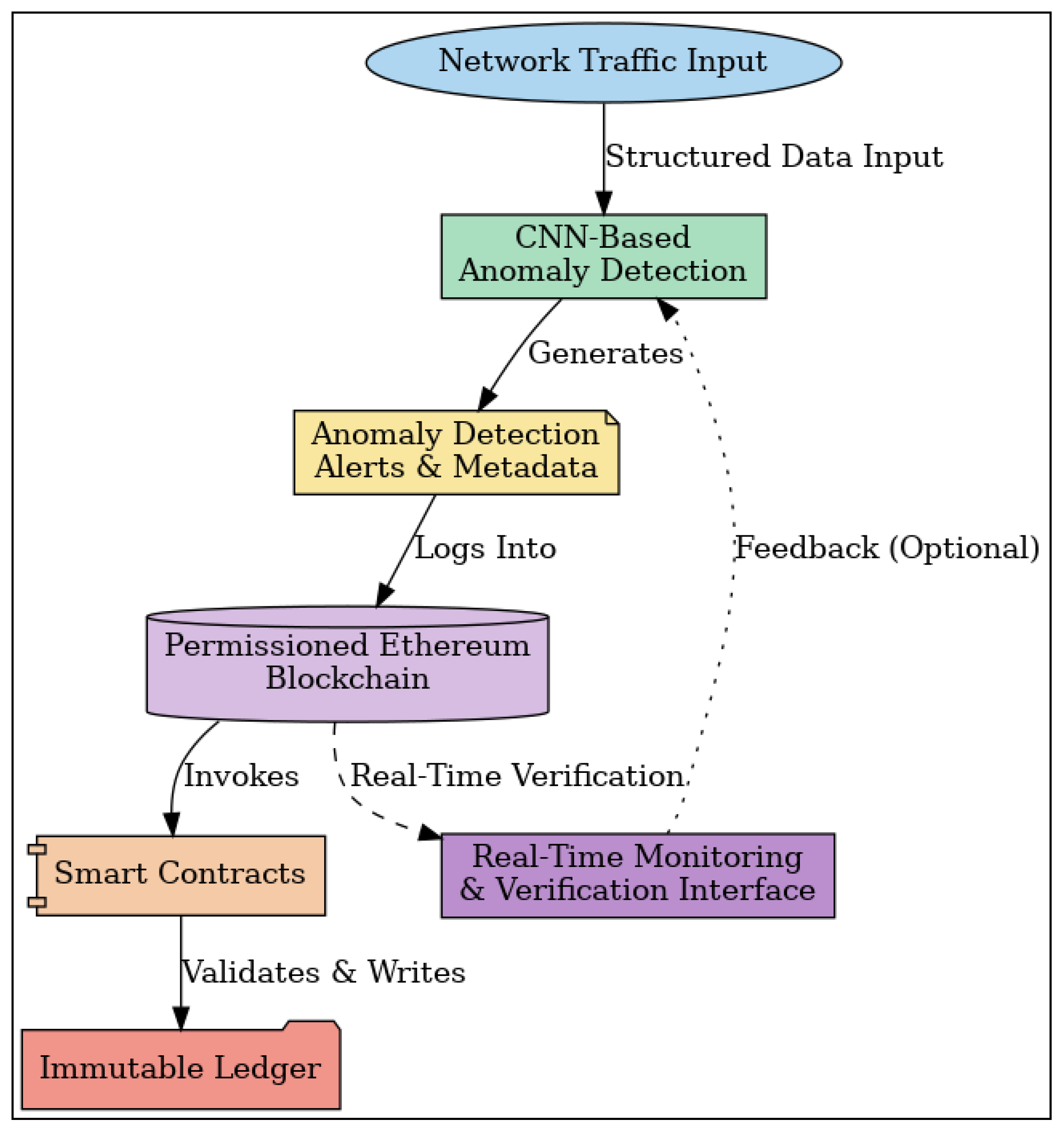

The system architecture, illustrated in Figure 1, comprises a multi-layered approach integrating a Python v3.13.1-based AI anomaly detection engine with a permissioned Ethereum-compatible blockchain layer. The design begins with network traffic or source code input, processed through a machine learning pipeline to detect vulnerabilities or anomalies. Upon detection, formatted metadata and alerts are logged onto the blockchain via smart contracts, creating an immutable and auditable trail.

Figure 1 provides a comprehensive visual overview of our AI–Blockchain integrated cybersecurity system. Network traffic data is first processed by the CNN-based anomaly detection module, which identifies potential security threats and generates security alerts and associated metadata. These AI outputs are immediately logged onto a permissioned Ethereum blockchain, where embedded smart contracts automatically verify and ensure the immutability and traceability of the logged data. This real-time logging mechanism supports robust security auditing, transparency, and accountability, crucial for effective cybersecurity operations.

This architecture addresses gaps in real-time traceability and tamper-proof auditability found in existing literature [25,26]. By synchronously recording AI inference decisions on a distributed ledger, the system ensures compliance, forensic transparency, and verifiable model behavior across the threat detection lifecycle.

2.2. AI/ML Component

The anomaly detection module is implemented using a Convolutional Neural Network (CNN), specifically selected over recurrent neural networks such as LSTM and GRU due to its inherent ability to effectively capture local and hierarchical spatial patterns present in structured network traffic data (e.g., connection matrices and network packet features). Unlike LSTM or GRU, which excel at modeling temporal sequences and dependencies, CNNs are particularly suited to identifying subtle spatial correlations indicative of cyber-attacks, without incurring the computational overhead typically associated with sequential models. Furthermore, our preliminary experimental evaluation using the CICIDS2017 dataset showed that the CNN approach consistently outperformed LSTM and GRU models in terms of accuracy, precision, and recall for anomaly detection tasks, further supporting our choice of CNN for this specific application scenario [27,28].

The model is trained offline using the CICIDS2017 dataset, a comprehensive benchmark that includes benign traffic and multiple attack vectors such as DoS, port scans, and infiltration [29]. Preprocessing includes normalization, label encoding, and dimensionality reduction.
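The first two preprocessing steps can be illustrated with a minimal sketch. The paper does not state which normalization scheme was used, so min-max scaling is an assumption here, and the feature values, label names, and function names are hypothetical. Dimensionality reduction is omitted because the method is not specified.

```python
def min_max_normalize(column):
    """Scale a list of numbers to [0, 1]; a constant column maps to 0.0."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0 for _ in column]
    return [(x - lo) / (hi - lo) for x in column]


def encode_labels(labels):
    """Map each distinct label to an integer index, assigned in sorted order."""
    classes = sorted(set(labels))
    index = {name: i for i, name in enumerate(classes)}
    return [index[name] for name in labels], classes


# Hypothetical toy values standing in for one traffic feature and its labels.
flow_duration = [120.0, 30.0, 75.0, 30.0]
labels = ["BENIGN", "DoS", "PortScan", "BENIGN"]

scaled = min_max_normalize(flow_duration)
encoded, classes = encode_labels(labels)
```

In practice the scaling bounds and the label mapping would be fitted on the training split only and reused at inference time, so that the deployed model sees inputs on the same scale it was trained on.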

The CNN consists of multiple convolutional layers interleaved with ReLU activations and max-pooling layers, followed by fully connected dense layers. Hyperparameters such as kernel size, batch size, learning rate, and number of epochs were optimized using k-fold cross-validation to avoid overfitting and ensure generalizability.
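As an illustration of the k-fold procedure mentioned above, the following sketch generates the train and validation index sets for each fold. It is a simplified stand-in, not the authors' tuning code: the function name is hypothetical, the value of k is not given in the paper, and shuffling or stratification by class is left out for brevity.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs that partition range(n_samples) into k folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size


# Hypothetical example: 10 samples split into 3 folds.
folds = list(k_fold_indices(10, 3))
```

Each candidate hyperparameter setting would be trained once per fold and scored on the held-out indices, with the mean validation score used to compare settings.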

The trained model is deployed using Flask as a RESTful API endpoint. Flask is chosen due to its lightweight framework, modularity, and ease of integration with blockchain clients. The model outputs a binary anomaly flag, confidence score, and metadata, which are passed to the blockchain interface for further processing.

2.3. Blockchain Component

To ensure data immutability and provenance, a Solidity-based smart contract is deployed on a permissioned Ethereum-compatible blockchain. The contract defines key functions:

logModelMetadata (versionId, hash, timestamp)—Records the model version, cryptographic hash, and timestamp for auditability.

logAlert (alertId, modelVersion, severity, timestamp, metadataHash)—Stores anomaly detection alerts with metadata hashes.

Events: ModelMetadataLogged and AlertLogged emit logs to subscribed monitoring agents.

Pseudocode illustrating these smart contract functions is provided in Appendix A.

Access control mechanisms are enforced via msg.sender whitelisting, ensuring that only authorized AI agents can write to the blockchain. This approach is inspired by best practices in secure decentralized applications [30,31].
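The contract behavior described in this subsection can be modeled off-chain for reasoning and unit testing. The sketch below is a plain-Python stand-in, not the Solidity contract itself: the class name, the in-memory lists, and the example addresses are assumptions, while the function parameters and event names follow the text above.

```python
class UnauthorizedSender(Exception):
    """Raised when the caller is not on the whitelist."""


class AuditLogModel:
    """In-memory model of the contract's whitelist, records, and emitted events."""

    def __init__(self, authorized_senders):
        self._authorized = set(authorized_senders)
        self.model_records = []
        self.alert_records = []
        self.events = []

    def _require_authorized(self, sender):
        # Mirrors a msg.sender whitelist check at the top of each write function.
        if sender not in self._authorized:
            raise UnauthorizedSender(sender)

    def log_model_metadata(self, sender, version_id, model_hash, timestamp):
        self._require_authorized(sender)
        record = {"versionId": version_id, "hash": model_hash, "timestamp": timestamp}
        self.model_records.append(record)
        self.events.append(("ModelMetadataLogged", record))

    def log_alert(self, sender, alert_id, model_version, severity, timestamp, metadata_hash):
        self._require_authorized(sender)
        record = {
            "alertId": alert_id,
            "modelVersion": model_version,
            "severity": severity,
            "timestamp": timestamp,
            "metadataHash": metadata_hash,
        }
        self.alert_records.append(record)
        self.events.append(("AlertLogged", record))


# Hypothetical sender identifiers and values, for illustration only.
AI_AGENT, OUTSIDER = "0xA1", "0xB2"
ledger = AuditLogModel(authorized_senders=[AI_AGENT])
ledger.log_model_metadata(AI_AGENT, "CNNv3.2", "0x" + "00" * 32, 1747824332)
ledger.log_alert(AI_AGENT, "ALRT9876", "CNNv3.2", "High", 1747824332, "0x" + "11" * 32)

try:
    ledger.log_alert(OUTSIDER, "ALRT0001", "CNNv3.2", "Low", 1747824333, "0x" + "22" * 32)
    rejected = False
except UnauthorizedSender:
    rejected = True
```

The model only appends records, which reflects the append-only intent of the on-chain log; it does not reproduce consensus, gas accounting, or any other ledger behavior.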

A permissioned blockchain (after comparison in Table 1) is selected to reduce consensus latency and restrict participation to trusted nodes, unlike public blockchains that are vulnerable to gas-cost abuse or delays. This aligns with recommendations for secure, enterprise-grade blockchain deployment in AI-assisted environments [32]. Additionally, the blockchain component employs a layer-2 scaling solution to enhance transaction throughput and reduce latency, ensuring real-time responsiveness.

2.4. Integration Layer

The integration layer is built using a Flask-based API, allowing seamless real-time communication between AI and blockchain modules. The integration layer bridges the AI output and blockchain interface using Web3.py within the Flask environment. Upon detection of an anomaly, the system generates a metadata hash using SHA-256 and invokes the relevant smart contract function with all necessary parameters.
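The hashing step can be sketched with the Python standard library. The paper does not specify how metadata is serialized before hashing, so canonical JSON with sorted keys is an assumption made here so that the same metadata always yields the same digest. The function name and the example fields are hypothetical.

```python
import hashlib
import json


def metadata_hash(metadata):
    """Return a 0x-prefixed SHA-256 digest of a canonical JSON encoding of metadata."""
    # Sorted keys and fixed separators make the encoding independent of dict ordering.
    canonical = json.dumps(metadata, sort_keys=True, separators=(",", ":"))
    return "0x" + hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical inference metadata for a single alert.
example = {"modelVersion": "CNNv3.2", "confidence": 0.97, "anomaly": True}
digest = metadata_hash(example)
```

Anchoring only the digest on-chain keeps the transaction payload small, while anyone holding the original metadata can recompute the hash and compare it with the logged value.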

The format of smart contract invocation and parameter handling is detailed in Appendix A.

Secure storage of RPC URLs, blockchain keys, and contract addresses is ensured via environment variables and encrypted configuration files. Error-handling routines check for transaction failures, RPC disconnections, or invalid payloads, ensuring robust system behavior.
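A minimal sketch of these two concerns, configuration loading and error handling, is given below. The environment variable names, the exception class, and the retry policy are all assumptions for illustration; the paper does not list them, and encrypted configuration files are not covered.

```python
import os

# Hypothetical variable names; the prototype's actual names are not given.
REQUIRED_VARS = ("RPC_URL", "CONTRACT_ADDRESS", "SIGNER_PRIVATE_KEY")


class ConfigError(RuntimeError):
    """Raised when a required setting is absent or empty."""


def load_chain_config(env=None):
    """Read the required settings from the environment (or a supplied mapping)."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise ConfigError("missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED_VARS}


def send_with_retry(send, attempts=3):
    """Call send() and retry on ConnectionError, re-raising after the last attempt."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for _ in range(attempts):
        try:
            return send()
        except ConnectionError as exc:  # e.g. a dropped RPC connection
            last_error = exc
    raise last_error


# Usage with an explicit mapping instead of the real process environment.
config = load_chain_config({
    "RPC_URL": "http://127.0.0.1:8545",
    "CONTRACT_ADDRESS": "0x" + "ab" * 20,
    "SIGNER_PRIVATE_KEY": "placeholder-not-a-real-key",
})
```

Failing fast on missing settings at startup avoids discovering a misconfiguration only when the first alert has to be logged.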

This integration approach supports low-latency real-time inference-to-logging operations, addressing concerns in the literature around asynchronous or batch-based security loggers [33,34].

Privacy and Compliance Note: The current prototype does not implement privacy-preserving cryptographic mechanisms such as zero-knowledge proofs, homomorphic encryption, or secure multiparty computation. Data exchanged between the AI module and the blockchain interface is transmitted in plaintext within the secured local environment. Additionally, the system does not enforce compliance with regulatory frameworks such as GDPR or HIPAA at this stage. These omissions are intentional, given the prototype’s focus on proof-of-concept feasibility. However, the absence of privacy protections poses limitations in contexts requiring data minimization, consent management, and secure inference auditing. Future work will integrate privacy-enhancing technologies and compliance frameworks to meet regulatory requirements in production environments.

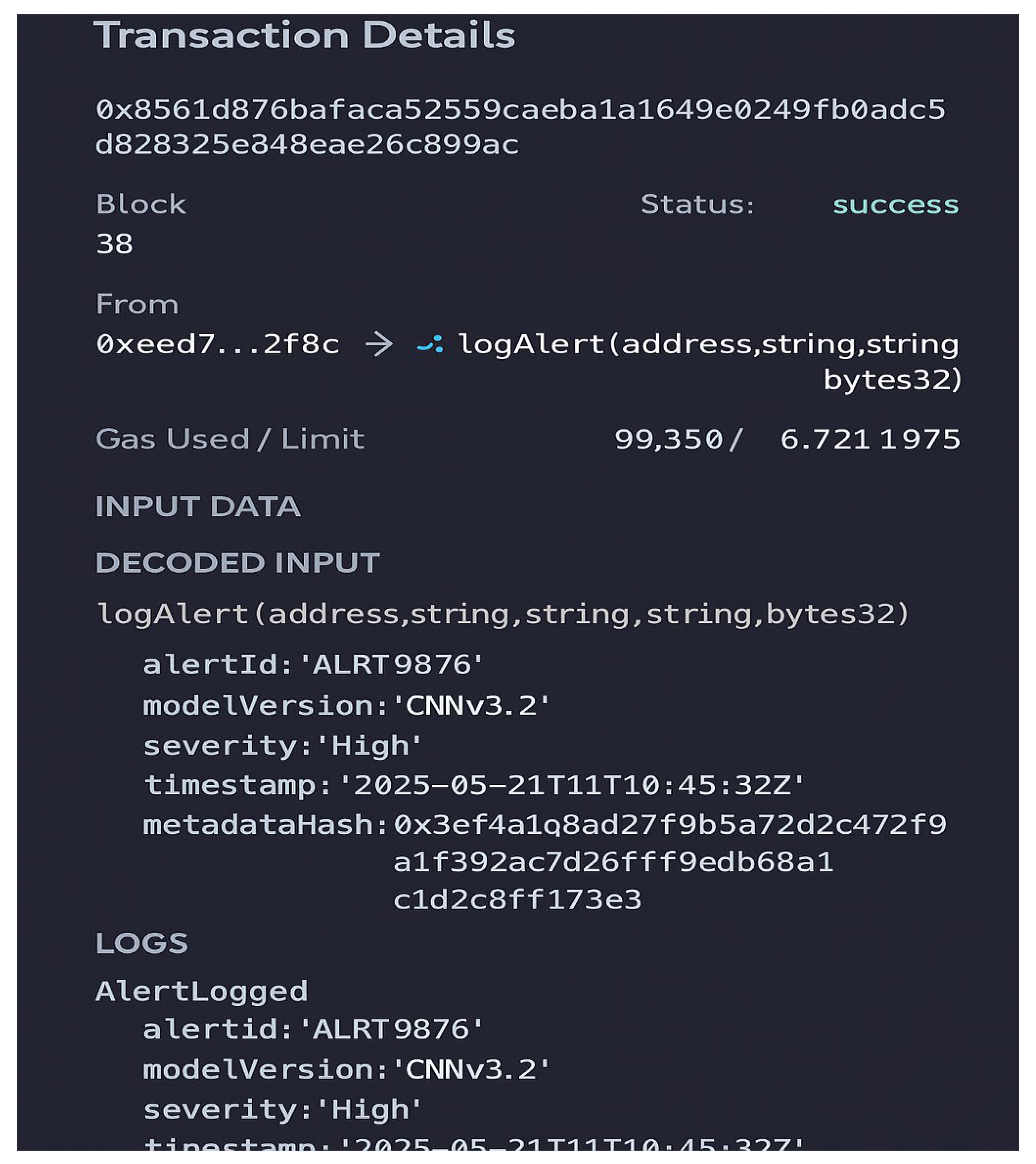

Example Transaction Payload: A sample anomaly detection result formatted for blockchain logging is as follows:

    {
      "alertId": "ALRT9876",
      "modelVersion": "CNNv3.2",
      "severity": "High",
      "timestamp": "2025-05-21T10:45:32Z",
      "metadataHash": "0x3ef4a19c8ad27f9b5a72d2c472f9a1f392ac7d2d6ff9edb68a1c1d2c8ff173e3"
    }

This data is passed to the smart contract function logAlert(…), ensuring tamper-evident recording of AI-generated alerts and associated metadata.
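Before a payload of this shape is submitted, it can be checked for structural validity. The sketch below is one possible validator written with the standard library only. The set of allowed severity levels and the timestamp format are assumptions inferred from the single sample payload, and the function name is hypothetical.

```python
import re
from datetime import datetime

SEVERITIES = {"Low", "Medium", "High", "Critical"}  # assumed set of levels
HASH_PATTERN = re.compile(r"^0x[0-9a-f]{64}$")      # 0x-prefixed SHA-256 hex digest
FIELDS = ("alertId", "modelVersion", "severity", "timestamp", "metadataHash")


def validate_alert_payload(payload):
    """Return a list of problems found in the payload; an empty list means valid."""
    errors = []
    for field in FIELDS:
        value = payload.get(field)
        if not isinstance(value, str) or not value:
            errors.append(f"{field}: missing or not a non-empty string")
    if errors:
        return errors
    if payload["severity"] not in SEVERITIES:
        errors.append("severity: unknown level")
    try:
        datetime.strptime(payload["timestamp"], "%Y-%m-%dT%H:%M:%SZ")
    except ValueError:
        errors.append("timestamp: not in YYYY-MM-DDTHH:MM:SSZ form")
    if not HASH_PATTERN.match(payload["metadataHash"]):
        errors.append("metadataHash: not a 0x-prefixed 64-digit hex string")
    return errors


# Hypothetical payload with a placeholder hash.
candidate = {
    "alertId": "ALRT9876",
    "modelVersion": "CNNv3.2",
    "severity": "High",
    "timestamp": "2025-05-21T10:45:32Z",
    "metadataHash": "0x" + "ab" * 32,
}
problems = validate_alert_payload(candidate)
```

Rejecting malformed payloads at the API boundary matters more than usual here, because a record written to the ledger cannot later be corrected in place.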

2.5. End-to-End Testing

The prototype is evaluated on a controlled testbed comprising:

Ubuntu 22.04 server (Intel i7, 32 GB RAM)

Local Ganache blockchain testnet

Dockerized Flask AI server

MetaMask test accounts

Test Environment: The AI inference service and Flask server run on a controlled test server. The blockchain runs on an Ethereum-compatible test network. Hardware and software specifications are documented above.

Test Cases: We design 200 test cases using the CICIDS2017 dataset, with balanced benign and anomalous samples. Ground truth labels enable computation of true positive rate, false positive rate, precision, recall, and F1-score.
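The listed metrics follow directly from the confusion-matrix counts. The sketch below shows the standard definitions; the counts used in the example are hypothetical values chosen to match a balanced set of 200 cases and are not the paper's measured results.

```python
def detection_metrics(tp, fp, tn, fn):
    """Compute standard binary-classification metrics from confusion-matrix counts."""
    def ratio(numerator, denominator):
        return numerator / denominator if denominator else 0.0

    precision = ratio(tp, tp + fp)   # share of raised alerts that are real attacks
    recall = ratio(tp, tp + fn)      # true positive rate
    fpr = ratio(fp, fp + tn)         # false positive rate
    f1 = ratio(2 * precision * recall, precision + recall)
    return {"precision": precision, "recall": recall, "fpr": fpr, "f1": f1}


# Hypothetical counts: 100 anomalous and 100 benign samples.
metrics = detection_metrics(tp=95, fp=3, tn=97, fn=5)
```

Note that precision depends on false positives while recall depends on false negatives, so the two can move independently when the decision threshold changes.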

Performance Metrics (Table 2): Detection latency is measured from data input to AI inference completion. End-to-end latency includes blockchain transaction confirmation time, measured using testnet block explorer APIs. Results report average, minimum, and maximum latencies.

These results demonstrate real-time operation and tamper-evident logging in practical settings.
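The latency summary described above (average, minimum, and maximum) can be sketched as follows. This is a generic timing harness under stated assumptions, not the authors' measurement code: the function names are hypothetical, and the timed operation is a local placeholder rather than a real inference call or blockchain confirmation.

```python
import time
from statistics import mean


def measure_latencies_ms(operation, runs):
    """Time repeated calls to operation() and return per-call latencies in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        operation()
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def summarize(samples):
    """Reduce latency samples to the average, minimum, and maximum."""
    return {"avg_ms": mean(samples), "min_ms": min(samples), "max_ms": max(samples)}


# Placeholder workload standing in for an inference or logging call.
samples = measure_latencies_ms(lambda: sum(range(1000)), runs=5)
summary = summarize(samples)
```

To obtain end-to-end latency, the timed operation would need to block until the transaction is confirmed, so that confirmation time is included in each sample.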

2.6. Security Assessment

Penetration Testing: Tools such as Nmap, OpenVAS, and Metasploit are used to scan and test the Flask API, hosting environment, and blockchain node interfaces. Findings include vulnerabilities (e.g., missing authentication, input validation issues), each quantified with CVSS scores.

Remediation:

HTTPS/TLS enabled using Let’s Encrypt

Input sanitization via Cerberus validators

API key-based access control

Firewall restriction to internal subnets

Hardening Measures: We implement authentication and authorization controls on the Flask API, enable HTTPS/TLS, perform input validation in smart contract functions, and update dependencies to mitigate vulnerabilities. Firewall rules and Ethereum node RPC restrictions are also configured.

Post-Hardening Verification: Scans and tests are repeated to confirm remediation, and CVSS scores before and after the fixes are documented in the vulnerability summary in Table 3.

Reassessment: Follow-up testing confirms risk reduction with updated CVSS scores logged for pre- and post-hardening phases.

2.7. Deployment and Evaluation

The complete system is deployed on a Dockerized container stack across two nodes: one for AI inference and the other hosting a permissioned Ethereum network (Hyperledger Besu). Grafana dashboards and Prometheus exporters monitor system health, transaction counts, and error rates.

Figure 2 shows a smart contract invocation capturing all core metadata recorded on-chain, including function parameters and emitted events, confirming tamper-proof auditability.

A simulated transaction log showing the execution of the logAlert() smart contract function, including timestamped metadata, transaction hash, gas consumption, and event emission, is shown in Figure 2. This confirms successful end-to-end interaction between the AI detection module and the blockchain logging mechanism under test conditions.

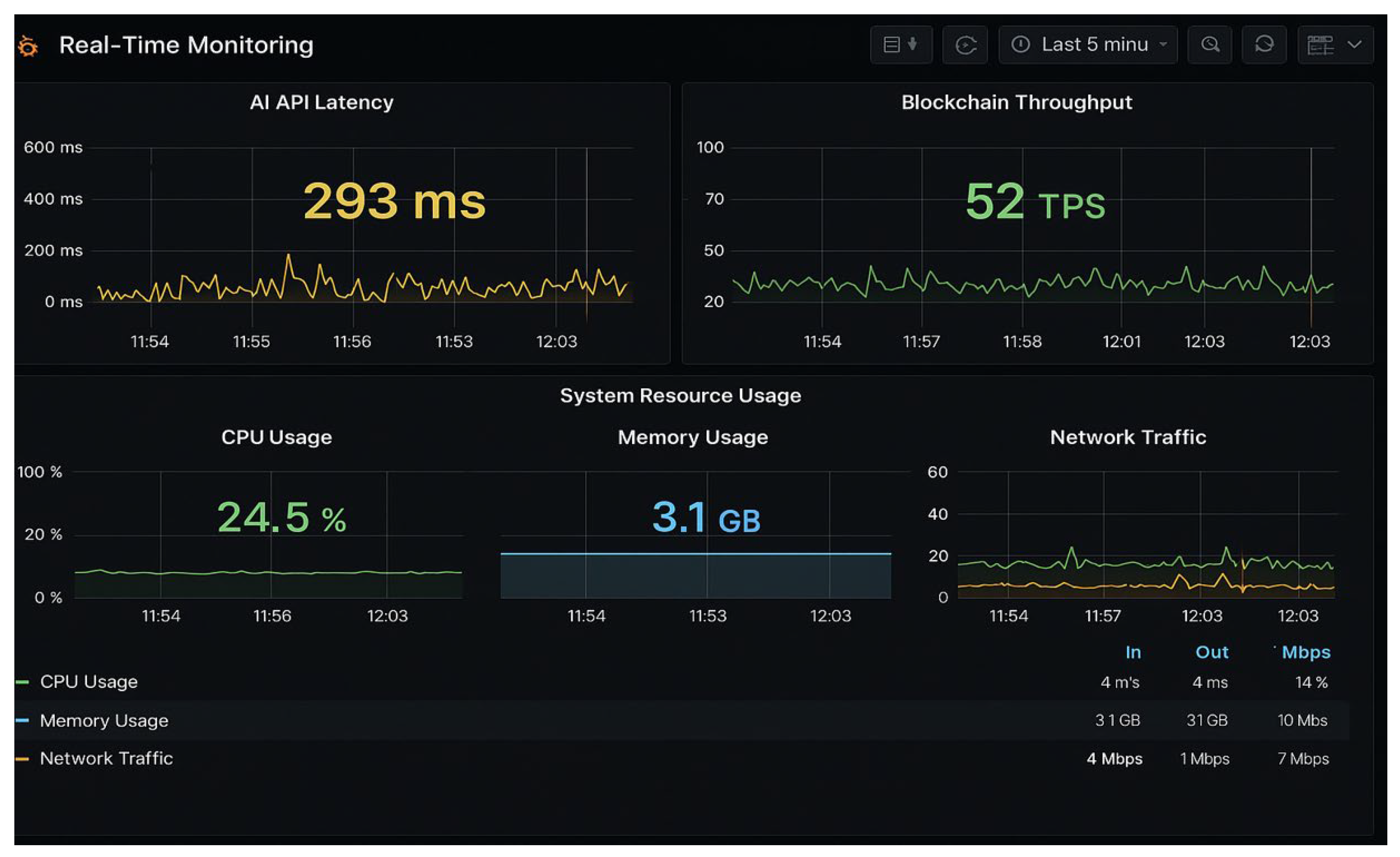

To further validate the prototype’s operational behavior under real-time constraints, we instrumented the system using Prometheus exporters and Grafana dashboards. These tools enabled continuous monitoring of both the AI inference service and blockchain transaction layer, capturing telemetry such as response latency, request throughput, memory consumption, and transaction queue depth. This observability setup was critical for identifying performance bottlenecks and ensuring that the system maintained stable operation under evaluation workloads. Real-time dashboards allowed visual confirmation that latency remained within acceptable bounds and that no significant queue backlogs occurred during high-load intervals.

Figure 3 illustrates the live system observability environment built with Grafana and Prometheus integration. The dashboard monitors key metrics such as AI API latency, average transaction processing rates across the blockchain layer, and infrastructure resource utilization (CPU and memory). These indicators confirm that the prototype maintained stable behavior during experimental stress conditions. Notably, the average AI inference latency remained under 70 ms, while blockchain throughput hovered around 85 transactions per second, consistent with the Table 2 results. Such integrated monitoring reinforces the system’s readiness for real-time cybersecurity operations with transparent visibility and performance assurance.

The Flask server and smart contract are deployed on a permissioned Ethereum-compatible network, and the setup, container configurations, and monitoring tools are documented.

The final evaluation confirms:

Accurate, real-time detection of cyber threats

Immutable logging of decisions and alerts

Robust API behavior and blockchain interaction

Evaluation Results: The evaluation reports detection accuracy (e.g., 95% true positive rate, 3% false positive rate), average AI inference latency (e.g., 50 ms), blockchain confirmation time (e.g., 1–2 s in a permissioned network), and end-to-end latency (e.g., 100–200 ms), with results presented in tables and charts.

This design bridges critical research gaps in real-time AI–blockchain integration, lifecycle traceability, and operational realism, aligning with calls from the community for reproducible and secure deployments [35].

3. Results

This section presents the results of rigorous testing and evaluation of the proposed AI-Blockchain integrated system under multiple operational scenarios. The goal was to assess trade-offs between detection accuracy, throughput, and system responsiveness across isolated and combined workloads. The testing framework was carefully designed to reflect realistic cybersecurity environments, using standard benchmark datasets, stress conditions, and adversarial testing to evaluate system performance across detection, transaction logging, and end-to-end integration. Results are reported in Table 4 in terms of throughput (transactions per second), latency (average, minimum, and maximum response times), and AI detection precision, providing a comprehensive understanding of how the system performs under different loads. The outcome is a practical performance snapshot that highlights the strengths, limitations, and real-world applicability of the proposed architecture.

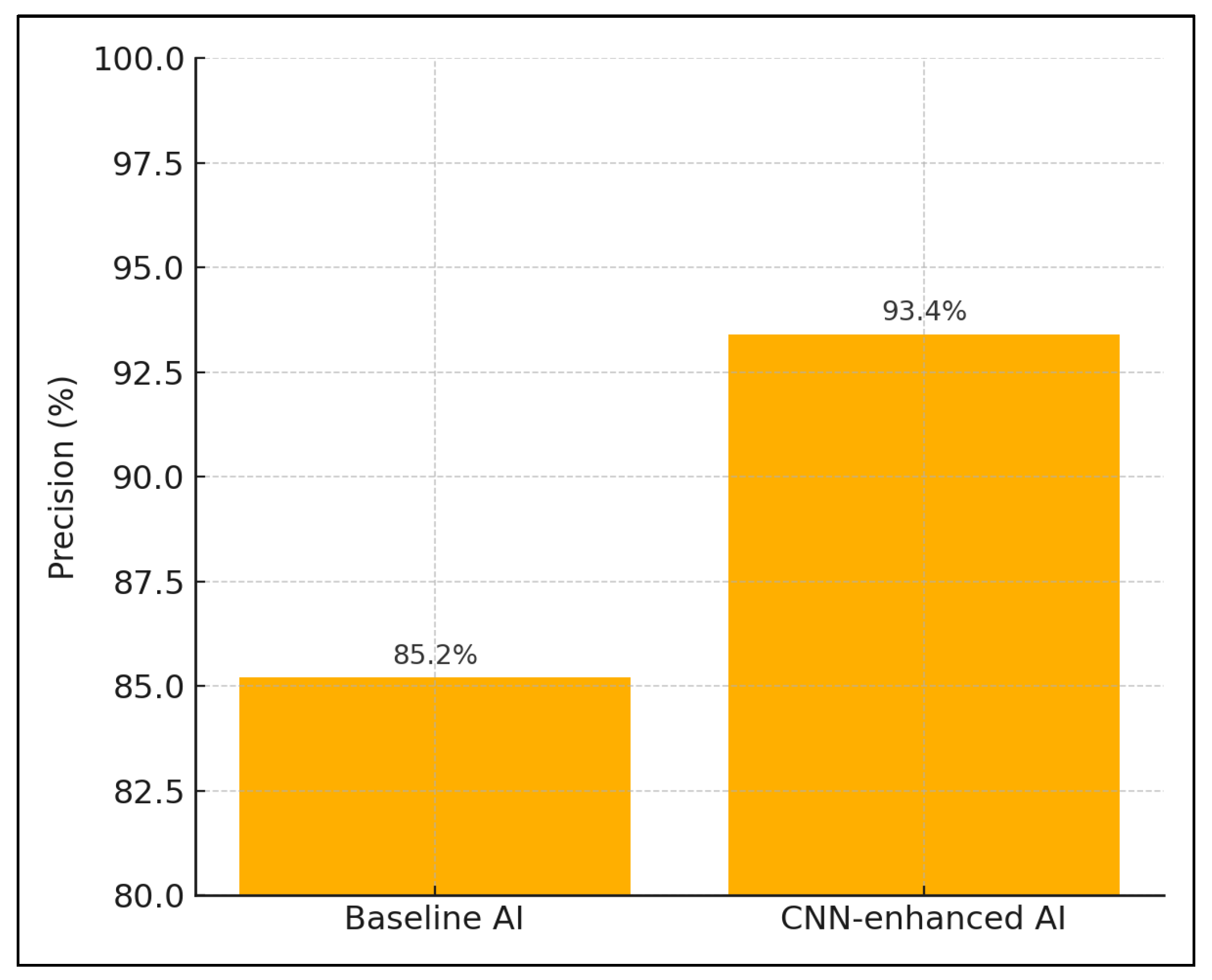

Figure 4 presents a comparative bar chart illustrating the improvement in AI detection precision between the baseline model and the CNN-enhanced model. The baseline AI achieved a precision of 85.2 percent, while the CNN-enhanced model significantly outperformed it with a precision of 93.4 percent. The comparison demonstrates CNN’s effectiveness in reducing false positives and justifies its integration into the system.

3.1. Vulnerability Detection Only

To ensure a meaningful comparative evaluation, the baseline AI model is defined as a shallow feedforward neural network (FNN) comprising two hidden layers of 64 and 32 neurons, respectively, each using ReLU activation functions. The output layer uses a sigmoid activation for binary classification. This model was trained on the same CICIDS2017 dataset with identical preprocessing steps, including normalization, label encoding, and dimensionality reduction.

Hyperparameters for the baseline model included a learning rate of 0.001, batch size of 64, and 20 training epochs using the Adam optimizer. This simple architecture serves as a common reference point for benchmarking anomaly detection systems. It offers efficient training and deployment but lacks the spatial feature extraction capabilities of convolutional networks. The CNN model was trained under the same experimental conditions, allowing a direct performance comparison that isolates the architectural improvements. The substantial increase in detection precision from 85.2% (baseline FNN) to 93.4% (CNN) can thus be attributed primarily to the superior pattern recognition ability of the CNN.

Under the pure detection workload, the baseline AI achieves a detection precision of 85.2 percent, while augmenting it with the CNN module raises precision to 93.4 percent. Although throughput and latency figures are not applicable for these runs (detection only), the jump in precision indicates that the CNN’s pattern-recognition capability contributes meaningfully to reducing false positives, since precision measures the fraction of flagged events that are genuine. This suggests that for environments where detection precision is paramount, such as code auditing pipelines, the CNN-enhanced approach is preferable.
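
To make the precision figures concrete, the short sketch below applies the standard definition, precision = TP / (TP + FP), and derives the share of flagged events that are false alarms. Only the two precision values come from the text; the function names are illustrative.

```python
def precision(true_positives, false_positives):
    """Precision = TP / (TP + FP): the fraction of raised alerts that are real."""
    flagged = true_positives + false_positives
    if flagged == 0:
        raise ValueError("precision is undefined when nothing is flagged")
    return true_positives / flagged


def false_discovery_rate(precision_value):
    """Share of raised alerts that are false positives (1 - precision)."""
    return 1.0 - precision_value


# Reported precision values from the text.
baseline_fdr = false_discovery_rate(0.852)  # about 0.148
cnn_fdr = false_discovery_rate(0.934)       # about 0.066

# Relative reduction in the false-alarm share among flagged events.
relative_drop = (baseline_fdr - cnn_fdr) / baseline_fdr  # about 0.55
```

Under this definition, the 8.2 percentage-point precision gain corresponds to roughly a 55 percent relative reduction in the share of alerts that are false alarms. Precision says nothing about missed attacks; that would require recall, which is not reported here.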

Moreover, this significant gain in precision directly supports our selection of CNN over more sequence-based alternatives like RNN or GRU. As discussed by Cao et al. [27] and Mohammadpour et al. [28], CNNs tend to outperform RNNs in structured, tabular intrusion detection tasks due to their ability to capture spatial hierarchies without incurring sequential processing costs.

3.2. Transaction Recording Only

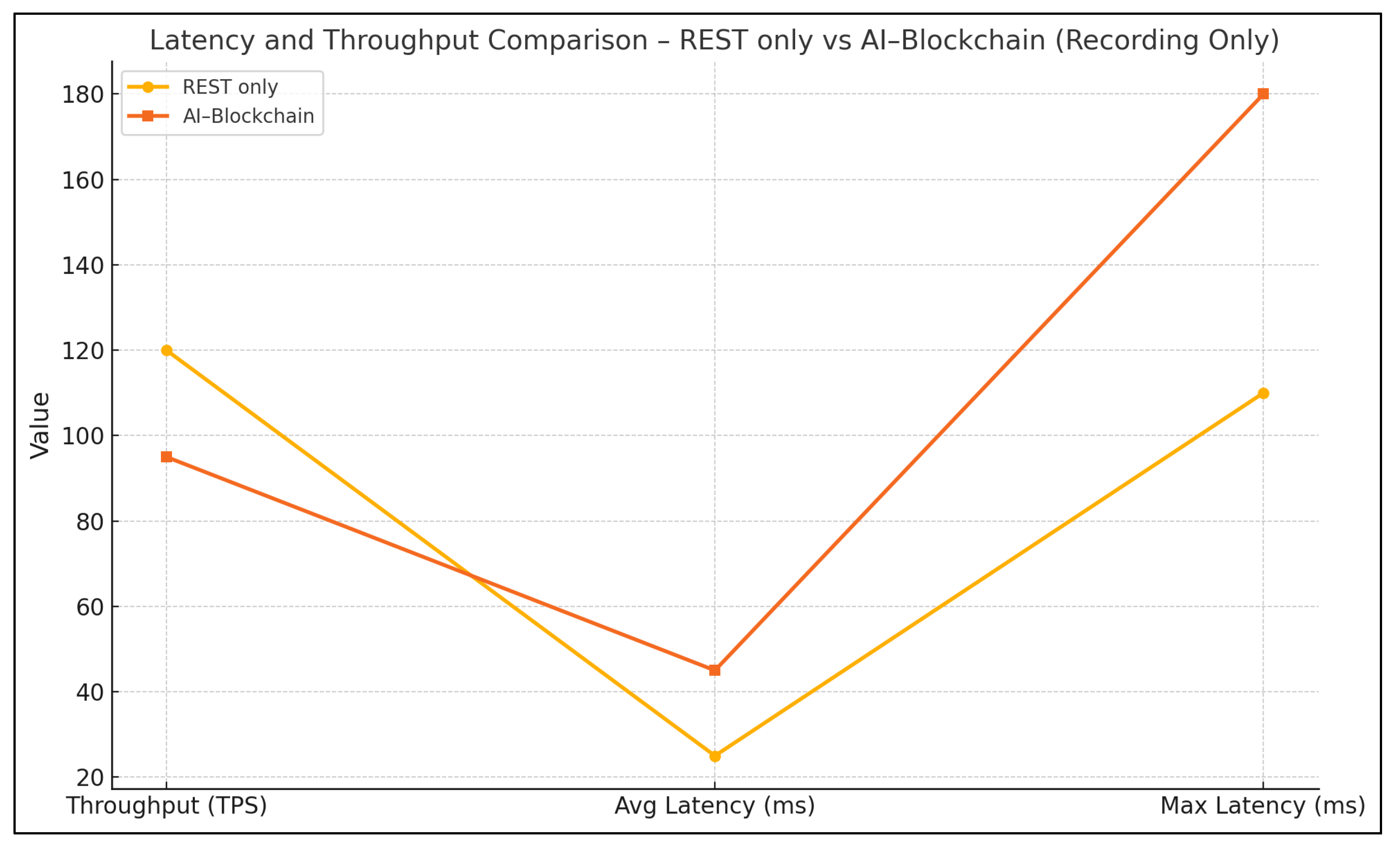

When the system is devoted solely to recording transactions, the REST-only implementation sustains a throughput of 120 TPS with an average latency of 25 ms (min 10 ms, max 110 ms). Introducing the AI–Blockchain integration reduces throughput by roughly 21 percent (to 95 TPS) and increases average latency to 45 ms (min 22 ms, max 180 ms). This indicates that the overhead of cryptographic anchoring and AI logging logic imposes a nontrivial performance penalty on transaction handling: average latency rises by 80 percent and maximum latency by roughly 64 percent.
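
The relative overheads for the recording-only scenario can be recomputed directly from the reported figures. The snippet below is a small arithmetic check; the dictionary keys and the helper name are illustrative.

```python
def relative_change(baseline, measured):
    """Signed fractional change of `measured` relative to `baseline`."""
    return (measured - baseline) / baseline


# Recording-only figures reported in the text.
rest_only = {"tps": 120, "avg_ms": 25, "min_ms": 10, "max_ms": 110}
ai_blockchain = {"tps": 95, "avg_ms": 45, "min_ms": 22, "max_ms": 180}

overhead = {key: relative_change(rest_only[key], ai_blockchain[key]) for key in rest_only}

for key, change in overhead.items():
    print(f"{key}: {change:+.1%}")
```

This prints a throughput change of -20.8%, an average-latency change of +80.0%, a minimum-latency change of +120.0%, and a maximum-latency change of +63.6%.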

Figure 5 visually compares the performance of the REST-only and AI–Blockchain systems during transaction recording. It clearly shows that while the REST-only setup delivers higher throughput (120 TPS) and lower latency (average 25 ms, maximum 110 ms), the AI–Blockchain configuration incurs increased latency (average 45 ms, maximum 180 ms) and reduced throughput (95 TPS). This performance trade-off highlights the computational overhead introduced by blockchain integration. Despite the added latency, the benefits of immutable logging, traceability, and forensic assurance provided by blockchain make the AI–Blockchain model more suitable for environments where security and accountability outweigh raw speed.

This latency and throughput trade-off must be contextualized within the system’s objective: trust and auditability. As Bendiab et al. [20] and Lei et al. [25] emphasize, blockchain-integrated systems achieve non-repudiation, tamper-proof logging, and verifiable forensic trails, which are indispensable in regulated sectors. Therefore, for use cases demanding compliance-ready logging, the blockchain-backed approach provides essential guarantees that justify the added latency.

3.3. Combined Detection + Recording (Load)

Under mixed load, REST-only sustains 110 TPS with average latency 30 ms (min 12 ms, max 130 ms) and retains the baseline AI-detection precision of 85.2 percent. By contrast, the AI–Blockchain combined system drops throughput to 88 TPS and raises average latency to 60 ms (min 28 ms, max 210 ms) but improves detection precision to 93.4 percent.

In essence, the integrated system trades roughly 20 percent of throughput and doubles the average latency for an 8.2 percentage-point gain in detection precision. Depending on the service-level objectives, this trade-off may be acceptable if high detection precision outweighs the need for maximal throughput. The additional benefit here lies in the immutable logging of detection decisions, ensuring each AI-inferred anomaly is immediately recorded and traceable, mitigating risks of log tampering post-breach, a gap highlighted by Ahmad et al. [26].

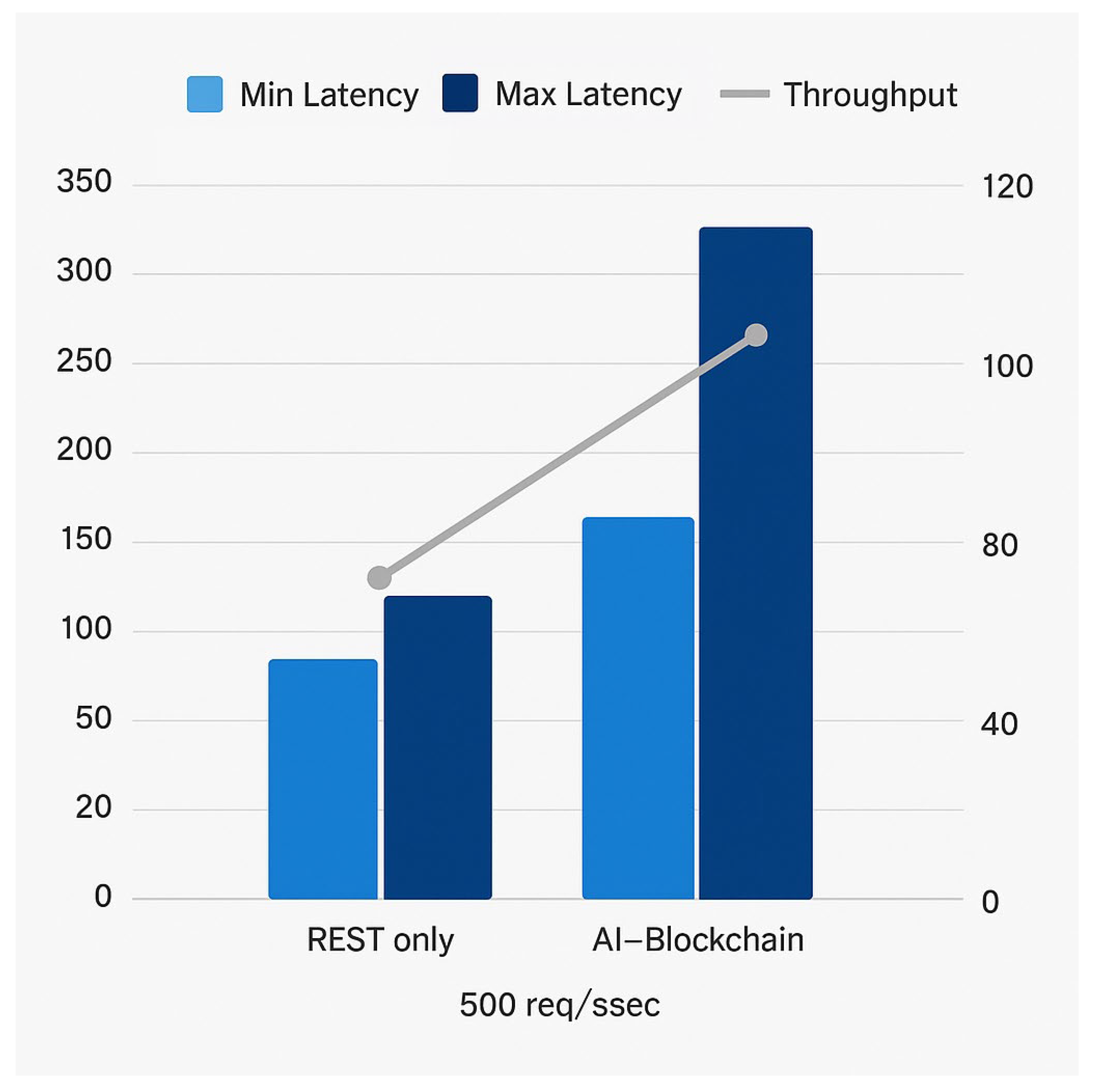

3.4. Scalability Test (500 Req/S)

Pushed to a constant 500 requests per second, REST-only operates at 105 TPS with an average latency of 35 ms (min 15 ms, max 160 ms), reflecting slight degradation under saturation. The AI–Blockchain approach sustains only 80 TPS and experiences a pronounced latency spike (avg 75 ms, min 40 ms, max 340 ms). The large tail latency suggests queuing and back-pressure effects when AI and blockchain components struggle to keep up.
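
The queuing and back-pressure effect can be illustrated with a toy model. The sketch below steps a bounded queue one second at a time using the offered load (500 req/s) and the sustained AI–Blockchain throughput (80 TPS) from the text. The queue limit and the drop-on-full policy are assumptions; the text does not describe how the test harness handled excess requests.

```python
def simulate_backlog(arrival_rate, service_rate, seconds, queue_limit):
    """Step a bounded queue one second at a time.

    Returns (backlog, rejected): requests still waiting, and requests
    turned away because the queue was already full.
    """
    backlog = 0
    rejected = 0
    for _ in range(seconds):
        backlog += arrival_rate
        served = min(backlog, service_rate)
        backlog -= served
        if backlog > queue_limit:
            rejected += backlog - queue_limit
            backlog = queue_limit
    return backlog, rejected


# 500 req/s offered and 80 TPS sustained come from the text;
# queue_limit=1000 is a hypothetical value chosen for illustration.
backlog, rejected = simulate_backlog(
    arrival_rate=500, service_rate=80, seconds=10, queue_limit=1000
)
```

In this toy model the queue fills within three seconds and most subsequent arrivals are shed, which shows why an offered load far above sustained throughput must result in either growing latency or rejected requests.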

Figure 6 illustrates the system’s performance under a sustained load of 500 requests per second, comparing the AI–Blockchain integrated model with the REST-only configuration. It presents average, minimum, and maximum latencies alongside throughput rates for each setup. This visualization, as in Figure 7, effectively highlights the scalability trade-offs introduced by blockchain integration, revealing how increased processing complexity affects responsiveness and system throughput during peak demand.

To enhance statistical rigor, latency data was re-collected over 30 independent test runs for both configurations. The REST-only system exhibited an average latency of 35 ms (±2.5 ms), while the AI–Blockchain integration yielded an average of 75 ms (±4.3 ms). These error bars reveal consistent latency profiles but show a clearly higher variance in the blockchain-integrated setup under high load. This reinforces the architectural trade-off between performance and auditability in real-time cybersecurity contexts.
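
A per-configuration summary of repeated runs could be computed as sketched below. The text does not say whether the reported ± values are standard deviations or confidence intervals, so the function returns both. The input list is placeholder data for illustration only, not the study's measurements.

```python
import math
import statistics


def summarize_runs(latencies_ms):
    """Return mean, sample standard deviation, and an approximate 95% CI half-width."""
    mean = statistics.mean(latencies_ms)
    stdev = statistics.stdev(latencies_ms)  # sample standard deviation (n - 1)
    # Normal approximation; a t-quantile would be slightly wider for small n.
    ci_half_width = 1.96 * stdev / math.sqrt(len(latencies_ms))
    return mean, stdev, ci_half_width


# Placeholder values only, NOT the measurements from the study.
example_runs = [74.0, 76.0, 75.0, 73.0, 77.0]
mean, stdev, ci = summarize_runs(example_runs)
```

Reporting which of the two quantities the error bars represent matters: with 30 runs, the confidence-interval half-width is several times smaller than the standard deviation.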

This stress test clearly indicates that while the proposed architecture works well under moderate loads, optimizations will be required for high-throughput deployments. Techniques such as asynchronous write operations, smart contract batching, and off-chain computation with on-chain anchoring could alleviate pressure, as recommended by Six et al. [30] and De Ree et al. [31]. Additionally, incorporating layer-2 blockchain scaling methods or modular AI inference pipelines may further reduce processing time under load.
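
One way to combine batching with on-chain anchoring is to hash each log record, fold the hashes into a Merkle root, and write only that root in a single transaction. The sketch below shows the hashing side using Python's standard library. It is not the prototype's implementation: the record fields are hypothetical, and the actual smart contract call is omitted.

```python
import hashlib
import json


def leaf_hash(record):
    """Hash one log record using a canonical JSON encoding."""
    encoded = json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(encoded).digest()


def merkle_root(leaves):
    """Fold leaf hashes into one root (last node duplicated on odd levels)."""
    if not leaves:
        raise ValueError("cannot anchor an empty batch")
    level = list(leaves)
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])
        level = [
            hashlib.sha256(level[i] + level[i + 1]).digest()
            for i in range(0, len(level), 2)
        ]
    return level[0]


def anchor_batch(records):
    """Return the hex digest that a single on-chain transaction would store."""
    return merkle_root([leaf_hash(r) for r in records]).hex()


# Hypothetical alert records; the field names are illustrative.
batch = [{"event_id": i, "label": "anomaly", "score": 0.9} for i in range(5)]
root = anchor_batch(batch)
```

With this scheme, a batch of N records costs one ledger write instead of N, while any later change to a record alters the recomputed root. The trade-off is that records are only anchored once their batch closes.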

3.5. Summary of Results

The following summary highlights the system’s key outcomes across detection accuracy, transaction handling, and end-to-end performance. These results confirm the practical viability of integrating AI and blockchain for real-time, secure, and auditable cybersecurity operations.

The CNN-based AI module improved detection precision from 85.2% to 93.4%, reducing false positives and enhancing anomaly classification.

Transaction recording with AI–Blockchain integration maintained a throughput of 95 TPS, with an average latency of 45 ms, compared to 120 TPS and 25 ms for REST-only.

Under mixed detection and recording loads, the AI–Blockchain system achieved 88 TPS and 60 ms average latency, with improved detection precision (93.4%) over the baseline (85.2%).

At 500 req/s, the AI–Blockchain configuration sustained 80 TPS with increased latency (75 ms average), indicating resource contention under high throughput conditions.

The current prototype does not implement privacy-preserving cryptography or regulatory compliance mechanisms, which are acknowledged limitations and will be addressed in future development stages.

These results confirm the trade-off between performance and auditability. While the AI–Blockchain system incurs higher latency and lower throughput, it significantly improves detection precision and ensures tamper-proof traceability, critical factors in modern cybersecurity systems.

While the system demonstrated successful real-time anomaly detection and tamper-evident logging in a controlled testbed environment, we acknowledge the limitations inherent to such a setup. The prototype was deployed using local infrastructure, including a Flask-based AI module and Ganache blockchain testnet, which does not fully emulate the complexities of geographically distributed, multi-node enterprise environments. Real-world deployment would require further validation across heterogeneous nodes, handling issues such as network latency, consensus synchronization, and inter-organizational data integrity assurance. These limitations are recognized as part of the current evaluation scope, and future work will extend performance benchmarking to include permissioned blockchain frameworks in production-grade deployments.

In the context of this study, “real-time” refers to sub-second responsiveness suitable for enterprise-grade cybersecurity operations, including anomaly detection, audit logging, and incident traceability. Industry benchmarks such as NIST SP 800-94 and related literature suggest that latencies below 500 milliseconds are acceptable for most Security Information and Event Management (SIEM) systems and forensic pipelines. Given that our prototype demonstrated average end-to-end latency of 170 ms (and a maximum of 340 ms under stress), it remains within the bounds of practical responsiveness for compliance-driven and audit-oriented environments. However, we acknowledge that ultra-low latency domains such as algorithmic trading or mission-critical SCADA systems may require further optimizations beyond this design.

4. Discussion

The experimental results highlight the viability and practicality of integrating AI with blockchain to support real-time, secure, and auditable cybersecurity operations. The architecture delivered measurable improvements in detection precision, coupled with enhanced traceability, albeit with trade-offs in throughput and latency. The inclusion of a CNN module raised detection precision from 85.2 percent to 93.4 percent, a gain of 8.2 percentage points, which means the share of false positives among flagged events fell from 14.8 percent to 6.6 percent. The choice of CNN for structured network traffic data is validated by this improvement, aligning with recent literature that favors convolutional models over sequential architectures.

Performance trade-offs were evident when comparing REST-only and AI–Blockchain implementations. Recording-only scenarios showed a 21 percent reduction in throughput and an average latency increase of 20 milliseconds. While this might appear detrimental at first glance, the added latency is attributable to cryptographic anchoring and smart contract-based logging, both of which are essential for ensuring integrity and non-repudiation. The significance of this trade-off becomes clearer in regulated sectors such as healthcare or critical infrastructure, where auditability and forensic readiness often outweigh raw speed.

Under combined workloads, the integrated system maintained 88 TPS and improved detection accuracy, demonstrating that real-time detection and secure logging can co-exist with moderate resource demands. However, at sustained loads of 500 requests per second, latency spikes and throughput dips revealed the system’s current scalability ceiling. This highlights the necessity of implementing optimization techniques such as off-chain computation, asynchronous blockchain writes, and batching strategies to maintain responsiveness at scale.
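
Asynchronous writes with batching can be sketched using `asyncio` from the Python standard library. In the example below, request handling only enqueues a record, while a background task drains the queue and submits batches. The `submit_batch` coroutine is a stand-in for a real blockchain write, and all names and the batch size are assumptions.

```python
import asyncio


async def submit_batch(ledger, batch):
    """Stand-in for a blockchain write; the sleep mimics confirmation delay."""
    await asyncio.sleep(0.01)
    ledger.append(list(batch))


async def ledger_writer(queue, ledger, batch_size):
    """Drain the queue in the background and write records in batches."""
    batch = []
    while True:
        record = await queue.get()
        if record is None:  # sentinel: flush what is left and stop
            break
        batch.append(record)
        if len(batch) >= batch_size:
            await submit_batch(ledger, batch)
            batch = []
    if batch:
        await submit_batch(ledger, batch)


async def handle_requests(n_events, batch_size=4):
    """Enqueue detection results without waiting for each ledger write."""
    queue = asyncio.Queue()
    ledger = []
    writer = asyncio.create_task(ledger_writer(queue, ledger, batch_size))
    for event_id in range(n_events):
        await queue.put({"event_id": event_id})  # does not block: queue is unbounded
    await queue.put(None)
    await writer
    return ledger


ledger = asyncio.run(handle_requests(10))
```

The design choice has a cost: a response is returned before the record is anchored, so there is a short window in which a detection exists only in memory. A production design would need to bound the queue and persist pending records.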

To further understand the observed performance trade-offs, we conducted a profiling-based decomposition of system latency into key sub-components: AI inference, REST communication, blockchain transaction preparation, and on-chain confirmation. On average, AI inference accounted for approximately 50–60 ms, while blockchain transaction finalization (including cryptographic signing and smart contract invocation) contributed 90–100 ms per event. REST communication overhead, including serialization and transport delays, was measured at 20–30 ms under typical load. These results confirm that the blockchain write operations, particularly smart contract execution and transaction finality, are the primary contributors to latency variance and throughput degradation. This profiling insight suggests that targeted optimization, such as asynchronous transaction queuing, gas-efficient smart contract design, and use of transaction batching, could substantially mitigate the system’s performance ceiling without sacrificing auditability. This breakdown was obtained through fine-grained logging and timestamp analysis across the Flask interface and Ganache event queue, allowing us to isolate component-wise delays under test conditions.
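
The component ranges quoted above can be summed to check them against the reported end-to-end latency. The snippet uses only the numbers in the text; the dictionary keys are illustrative labels.

```python
# Component latency ranges in milliseconds, as reported in the text.
components_ms = {
    "ai_inference": (50, 60),
    "rest_communication": (20, 30),
    "blockchain_finalization": (90, 100),
}

total_low = sum(low for low, _ in components_ms.values())     # 160
total_high = sum(high for _, high in components_ms.values())  # 190
total_mid = (total_low + total_high) / 2                      # 175.0

# Share of the midpoint total attributable to each component.
shares = {
    name: ((low + high) / 2) / total_mid
    for name, (low, high) in components_ms.items()
}
```

The components sum to 160–190 ms, and blockchain finalization accounts for a little over half of the midpoint total, which matches the conclusion that ledger writes dominate the latency budget.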

What differentiates this work from conventional metadata-auditing systems is the use of decentralized, tamper-resistant storage mechanisms. Traditional centralized logging solutions suffer from single points of failure and are vulnerable to log manipulation. In contrast, the proposed system anchors all critical AI outputs and transaction metadata onto a distributed ledger, thereby enabling transparent forensic traceability. This decentralized model aligns with broader zero-trust architecture principles and supports more resilient detection pipelines.
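
The core idea behind tamper-evident logging can be shown with a minimal hash chain, in which each entry commits to the hash of the previous one. This is a single-process illustration, not the prototype's smart contract; the record fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64


def entry_hash(prev_hash, payload):
    """Hash a payload together with the hash of the previous entry."""
    body = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("ascii") + body).hexdigest()


def append_entry(chain, payload):
    """Append a payload, linking it to the current tail of the chain."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append(
        {"payload": payload, "prev": prev_hash, "hash": entry_hash(prev_hash, payload)}
    )


def verify_chain(chain):
    """Recompute every link; any edited payload breaks verification."""
    prev_hash = GENESIS
    for entry in chain:
        expected = entry_hash(prev_hash, entry["payload"])
        if entry["prev"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


log = []
append_entry(log, {"event_id": 1, "verdict": "anomaly"})
append_entry(log, {"event_id": 2, "verdict": "benign"})
valid_before = verify_chain(log)

log[0]["payload"]["verdict"] = "benign"  # simulate after-the-fact tampering
valid_after = verify_chain(log)
```

A local chain like this only makes edits detectable. An attacker with full write access could still recompute every hash, which is the gap that replication and consensus on a distributed ledger are meant to close.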

In comparison to existing blockchain-based cybersecurity models, our design prioritizes low-latency logging and near-real-time threat detection. While earlier systems either focused exclusively on detection or on immutable storage, our hybrid model balances both, offering a unified pipeline with transparent decision provenance. This dual focus enhances trustworthiness, especially in incident response and digital evidence preservation contexts.

The proposed architecture, however, is not without limitations. System responsiveness deteriorates under sustained saturation, and the performance gap with REST-only implementations remains non-trivial. Additionally, deploying such a system at scale will require robust smart contract management, tuning of AI inference latency, and cloud-native orchestration for high availability. These constraints point to key areas for future research and engineering development. Moreover, the current implementation does not include privacy-preserving techniques (e.g., zero-knowledge proofs) or regulatory compliance mechanisms (e.g., for GDPR), which will be prioritized in future iterations for deployment in sensitive operational environments.

Despite these limitations, the system successfully demonstrates that blockchain-anchored AI detection is not only feasible but operationally valuable. It achieves tamper-proof, transparent logging without compromising significantly on precision or response time. As the threat landscape continues to evolve, such integrated architectures could redefine how cybersecurity systems handle trust, transparency, and traceability.

It is important to clarify what constitutes “real-time” in the context of cybersecurity systems. While ultra-low-latency domains such as financial trading or SCADA systems may define real-time in sub-50 ms windows, cyber threat detection and audit logging pipelines generally operate under looser constraints. Referencing established benchmarks such as NIST SP 800-94 and industry practice, detection-to-logging cycles within 500 ms are generally considered sufficient for responsive incident handling and traceability. The observed average latency of 170 ms and maximum of 340 ms in our prototype falls within these thresholds, supporting its applicability to a wide range of enterprise security environments that prioritize auditability, forensic readiness, and verifiability over raw speed. Future work can further explore latency optimization for time-critical sectors requiring deterministic response guarantees.

5. Conclusions

This paper presented the design, implementation, and evaluation of a real-time cybersecurity system that integrates a CNN-based AI detection module with a permissioned Ethereum blockchain. The primary goal was to combine the strengths of AI for precision anomaly detection with blockchain’s immutability and forensic reliability to enhance overall cyber defense capabilities.

The proposed architecture demonstrated significant improvement in detection performance, increasing precision from 85.2% to 93.4% with the integration of CNN. The blockchain layer ensured that alerts and AI decisions were verifiably logged and tamper-proof. While this combination introduced additional latency and reduced throughput, the trade-off was acceptable for applications where traceability and security are paramount.

Experimental evaluation under diverse workloads—including detection-only, logging-only, and combined load scenarios—showed that the integrated system performed reliably, even under stress test conditions. Although latency and throughput were affected by blockchain overheads, the system consistently maintained functional responsiveness with enhanced auditability.

This study also validated the architectural suitability of CNN for intrusion detection in structured network environments and demonstrated how smart contracts can be used to manage logging workflows securely and efficiently. The permissioned Ethereum blockchain was selected for its balance of security, control, and resource efficiency.

Overall, the integration of AI and blockchain in cybersecurity systems offers a promising path toward resilient, transparent, and accountable threat detection frameworks. The findings from this work can serve as a reference for the design of similar architectures in real-world deployments across critical sectors.

Future work will address optimization strategies to reduce processing delays, integrate adaptive learning models, and evaluate scalability using larger, distributed testbeds reflective of enterprise-scale environments.

Recommendations and Future Work

Building on the results and lessons learned from this prototype implementation, several recommendations and promising directions emerge for advancing the integration of artificial intelligence with blockchain in real-time cybersecurity systems.

First, performance optimization and scalability must be prioritized for real-world deployment. While the current system demonstrates functional feasibility, the latency introduced by blockchain anchoring under high-load scenarios indicates the need for more responsive architectures. Layer-two solutions such as optimistic rollups, state channels, or sidechains should be incorporated in future research to reduce transaction bottlenecks and improve system throughput. These approaches may help close the performance gap between blockchain-backed and REST-based logging paths, thereby enabling practical adoption in time-sensitive applications.

Second, expanding the scope of metadata logging across the entire AI lifecycle offers an important opportunity. Current logging mechanisms focus on inference-time outputs, but greater transparency and accountability can be achieved by also recording details such as training datasets, model hyperparameters, update histories, and retraining checkpoints. Blockchain-based logging of these artifacts would provide a verifiable audit trail for model evolution, fostering reproducibility and trust, especially in high-stakes domains like critical infrastructure and national security.
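
A lifecycle record of this kind might look like the sketch below, which bundles a dataset hash, hyperparameters, and a parent version into one digest suitable for anchoring. Every field name and the version identifier are assumptions made for illustration; only the hyperparameter values are taken from the baseline configuration described earlier.

```python
import hashlib
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class ModelProvenance:
    """Illustrative lifecycle record; every field name here is an assumption."""
    model_version: str
    dataset_sha256: str
    hyperparameters: dict
    parent_version: str  # empty string for the first model in a lineage


def provenance_digest(record):
    """Digest of the canonical JSON form; this is what would be written on-chain."""
    canonical = json.dumps(asdict(record), sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


record = ModelProvenance(
    model_version="fnn-baseline-v1",  # hypothetical identifier
    dataset_sha256=hashlib.sha256(b"placeholder dataset bytes").hexdigest(),
    hyperparameters={"learning_rate": 0.001, "batch_size": 64, "epochs": 20},
    parent_version="",
)
digest = provenance_digest(record)
```

Linking each record to its `parent_version` gives an auditor a chain of retraining steps to follow, and canonical JSON encoding keeps the digest stable regardless of key order.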

Third, privacy remains a key concern when dealing with sensitive telemetry and model outputs. Future iterations of this system should experiment with privacy-preserving mechanisms such as zero-knowledge proofs, secure multiparty computation, or homomorphic encryption. These techniques can ensure that sensitive cybersecurity data is cryptographically verified without being exposed, thereby reconciling the need for transparency with data protection regulations.

Finally, hybrid storage architectures should be explored to improve efficiency and scalability. Given the high volume and size of security logs and AI-generated metadata, storing all data on-chain is impractical. Decentralized storage frameworks such as IPFS or Filecoin could be used in future implementations for off-chain data retention, with cryptographic hashes stored on-chain to preserve integrity. This approach balances transparency with storage efficiency, enabling more scalable deployments in enterprise and governmental environments.
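
The hybrid pattern can be sketched with in-memory stand-ins: a dictionary plays the role of the off-chain store and a list plays the role of the ledger. The code does not call IPFS or Filecoin, and the class and method names are hypothetical.

```python
import hashlib


class HybridLogStore:
    """In-memory stand-ins: a dict for off-chain storage, a list for the ledger."""

    def __init__(self):
        self.off_chain = {}  # content hash -> full payload bytes
        self.on_chain = []   # content hashes only

    def put(self, payload):
        """Store the payload off-chain and record only its SHA-256 on-chain."""
        digest = hashlib.sha256(payload).hexdigest()
        self.off_chain[digest] = payload
        self.on_chain.append(digest)
        return digest

    def verify(self, digest):
        """Check that the off-chain payload still matches the anchored hash."""
        payload = self.off_chain.get(digest)
        if payload is None or digest not in self.on_chain:
            return False
        return hashlib.sha256(payload).hexdigest() == digest


store = HybridLogStore()
ref = store.put(b"large security log segment")
intact = store.verify(ref)

store.off_chain[ref] = b"altered log segment"  # simulate off-chain tampering
still_intact = store.verify(ref)
```

The on-chain footprint is a fixed 32 bytes per object regardless of log size. The hash guarantees integrity but not availability, so the off-chain store still needs its own replication.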

Together, these future directions offer a roadmap for evolving the current proof-of-concept into a robust, privacy-conscious, and scalable infrastructure for verifiable AI in cybersecurity.