Security Hardening and Compliance Assessment of Kubernetes Control Plane and Workloads

Abstract

1. Introduction

2. Related Works

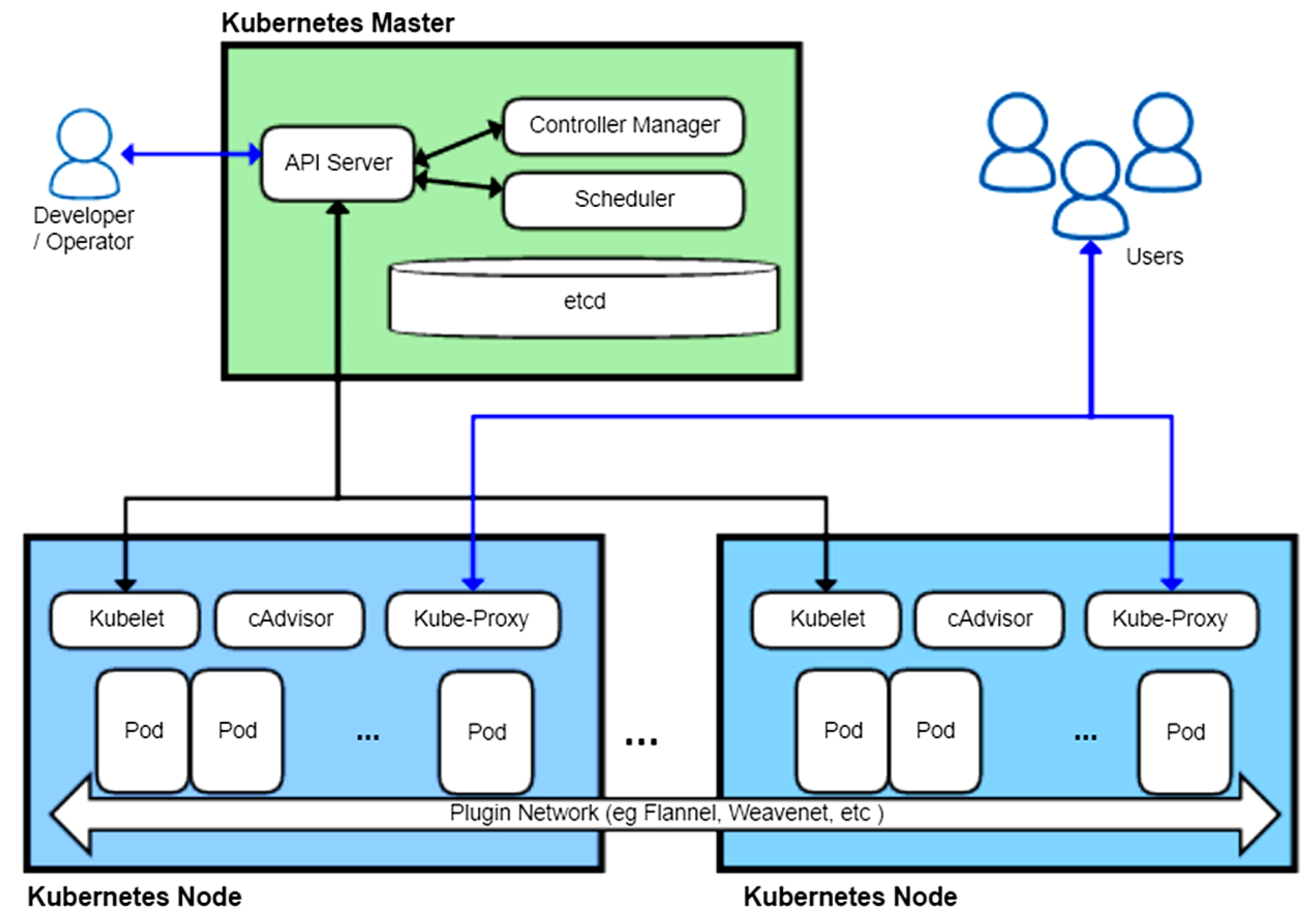

3. Kubernetes Architecture

3.1. Control Plane Elements

- Kubernetes API Server: functions as the front-end interface for the cluster. All operations, whether initiated by users or automated, interface with the cluster through this RESTful API.

- etcd: a distributed key-value store that holds the complete cluster state, including configuration, metadata, and status data. Its consistency and availability are essential to the reliability of the cluster.

- Controller Manager: runs multiple controllers that monitor the status of cluster resources and reconcile them with the desired state. For example, the ReplicationController ensures that the specified number of pod replicas is running.

- Scheduler: assigns pods to nodes. Its placement decisions take into account resource requirements, affinity rules, and policy constraints, with the aim of distributing workloads efficiently.

3.2. Node Components

- Kubelet: the primary node agent, which registers the node with the control plane and ensures that the containers specified in a pod definition are running and healthy.

- Container Runtime: responsible for initiating and overseeing container lifecycles. Kubernetes accommodates multiple runtimes, such as containerd, CRI-O, and, previously, Docker.

- Kube-proxy: maintains the network rules on every node that implement Service routing. It enables internal communication among services and pods across nodes, as well as external communication when services are exposed, for example through an ingress.

3.3. Networking and Service Identification

- Cluster networking provides a flat network in which all pods can communicate directly without NAT. It is typically implemented through CNI plugins such as Antrea, Calico, Flannel, or Cilium.

- Services provide stable front-ends for accessing pods; they are frequently load-balanced and reachable through DNS. Kubernetes includes an internal DNS service that automatically creates DNS records for services and pods.

- Ingress controllers regulate external access to services, typically through HTTP/HTTPS routing rules and TLS termination, and play a crucial role in securely managing north-south traffic.
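To illustrate the DNS records mentioned above, the sketch below builds the names a cluster DNS service (typically CoreDNS) conventionally assigns to a Service and to a pod. It assumes the default cluster domain `cluster.local`, which is configurable per cluster, and covers only ordinary Service names and IPv4 pod A records; the service and namespace names in the usage lines are hypothetical.

```python
"""Build conventional in-cluster DNS names for Services and pods (sketch)."""
import ipaddress


def service_fqdn(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    # Service record: <service>.<namespace>.svc.<cluster-domain>
    return f"{service}.{namespace}.svc.{cluster_domain}"


def pod_fqdn(pod_ip: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    # Pod A record: the IPv4 address with dots replaced by dashes.
    ip = ipaddress.IPv4Address(pod_ip)  # raises ValueError for malformed input
    return f"{str(ip).replace('.', '-')}.{namespace}.pod.{cluster_domain}"


if __name__ == "__main__":
    print(service_fqdn("payments", "prod"))  # payments.prod.svc.cluster.local
    print(pod_fqdn("10.244.1.5", "prod"))    # 10-244-1-5.prod.pod.cluster.local
```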

3.4. Extensibility and Declarative Configuration

- Custom Resource Definitions (CRDs) allow new resource types to be introduced, enabling integration with domain-specific logic.

- Operators extend the controller pattern to manage complex, stateful applications by encoding domain expertise in automation.

- Admission controllers are modular components that intercept API requests to enforce cluster policies and to validate or mutate objects before they are persisted.

4. Security Hardening of Kubernetes Components

4.1. Control Plane Security

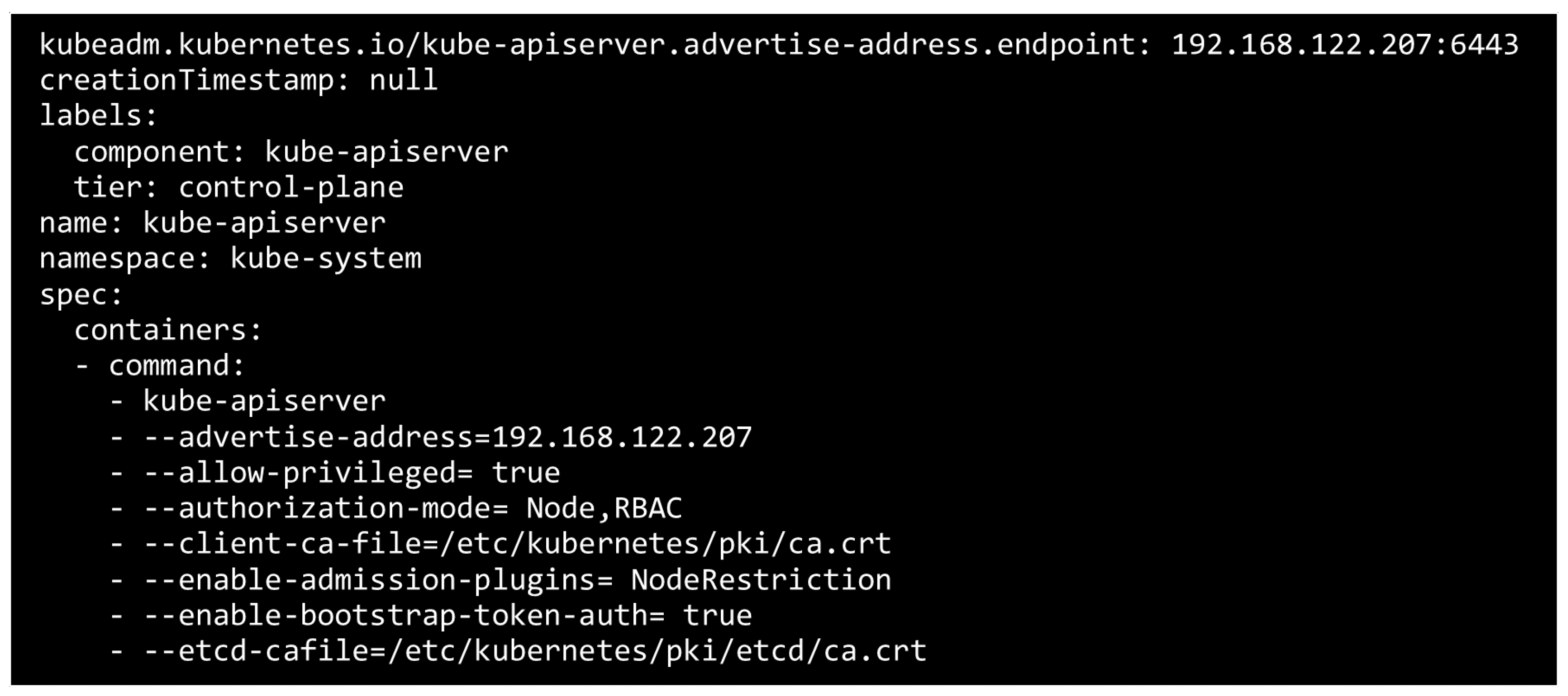

4.1.1. Securing Kubernetes API

4.1.2. Securing etcd

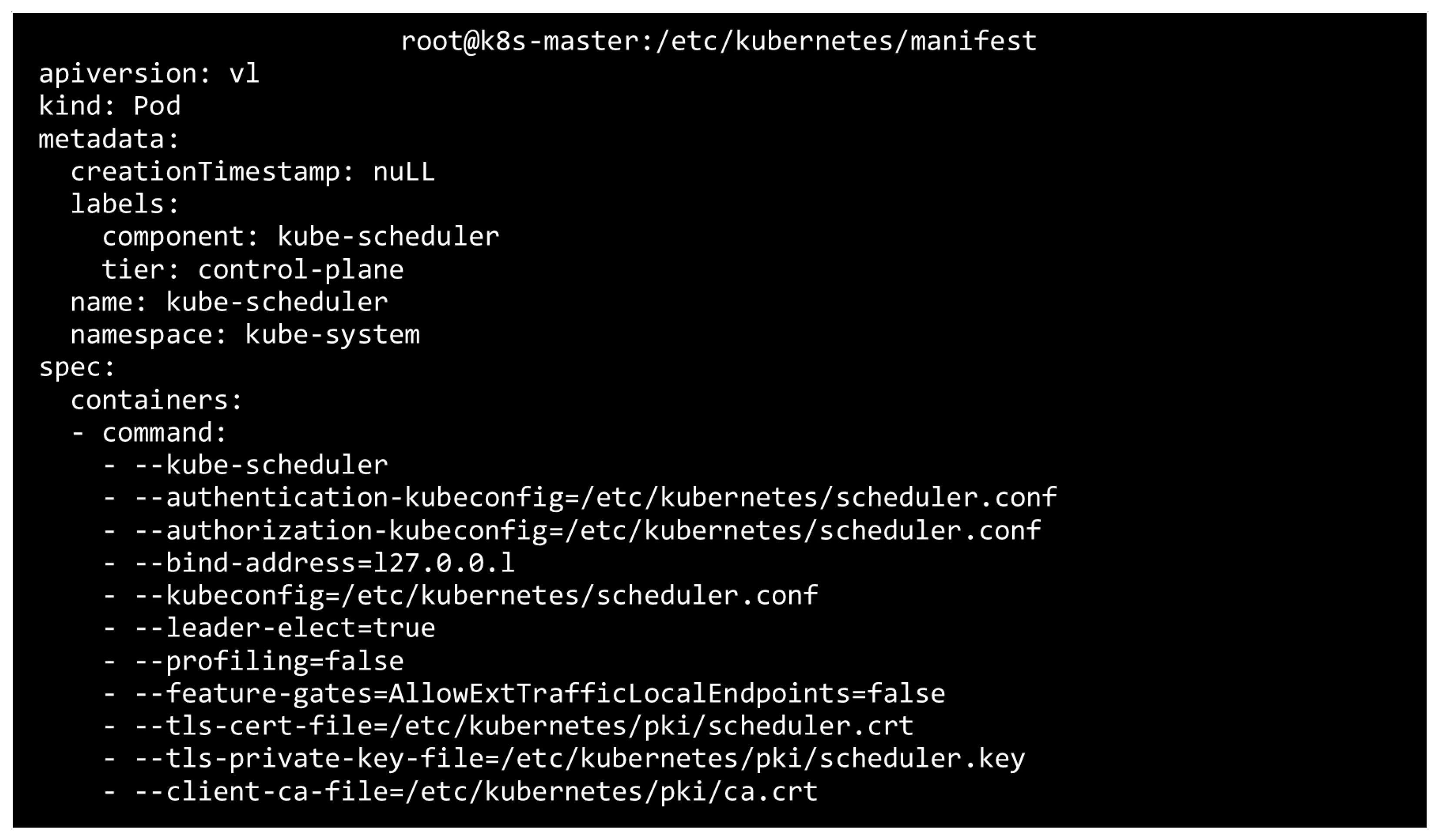

4.1.3. Securing Kube-Scheduler

- --tls-cert-file=/etc/kubernetes/pki/scheduler.crt: specifies the kube-scheduler’s TLS certificate for secure communication.

- --tls-private-key-file=/etc/kubernetes/pki/scheduler.key: specifies the private key associated with the TLS certificate.

- --client-ca-file=/etc/kubernetes/pki/ca.crt: CA file for validating incoming client certificates.
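The three flags can be checked mechanically. This minimal sketch assumes the scheduler's command line has already been read from its static pod manifest (on kubeadm clusters, typically /etc/kubernetes/manifests/kube-scheduler.yaml) into a Python list of `--flag=value` strings; the function names are hypothetical and not part of any Kubernetes tool.

```python
"""Check a kube-scheduler command line for the TLS flags listed above (sketch)."""

# Flags the hardened kube-scheduler is expected to set.
REQUIRED_FLAGS = ("--tls-cert-file", "--tls-private-key-file", "--client-ca-file")


def parse_flags(command: list[str]) -> dict[str, str]:
    """Turn ['--name=value', ...] into {'--name': 'value'}.

    Assumption: flags use the '--name=value' form found in static pod manifests;
    the space-separated '--name value' form is not handled.
    """
    flags: dict[str, str] = {}
    for arg in command:
        if arg.startswith("--"):
            name, _, value = arg.partition("=")
            flags[name] = value
    return flags


def missing_tls_flags(command: list[str]) -> list[str]:
    """Return the required TLS flags that are absent or set to an empty value."""
    flags = parse_flags(command)
    return [flag for flag in REQUIRED_FLAGS if not flags.get(flag)]


if __name__ == "__main__":
    scheduler_cmd = [
        "kube-scheduler",
        "--kubeconfig=/etc/kubernetes/scheduler.conf",
        "--tls-cert-file=/etc/kubernetes/pki/scheduler.crt",
        "--tls-private-key-file=/etc/kubernetes/pki/scheduler.key",
    ]
    print(missing_tls_flags(scheduler_cmd))  # ['--client-ca-file']
```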

4.2. Node Security

Securing Kubelet

4.3. Image and Workload Hardening

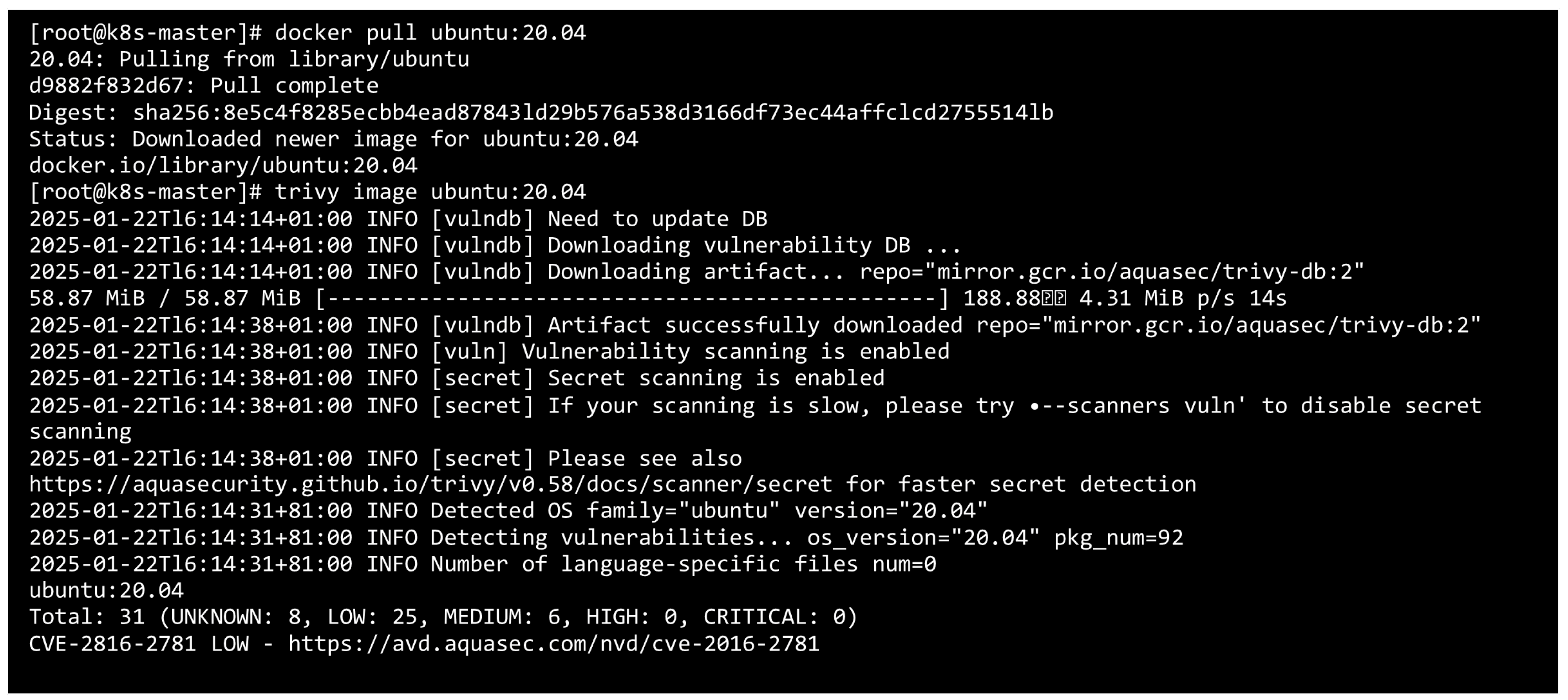

- Proactive detection of vulnerabilities: Container vulnerability scanning allows organizations to identify security concerns in container images during the development phase and before deployment in production environments.

- Compliance: Many organizations must comply with regulatory standards that mandate specific security practices and controls. Vulnerability scanning helps demonstrate that container images are free of known vulnerabilities.

- Minimizing the attack surface: Scanning container images helps organizations reduce their attack surface by revealing superfluous components, packages, and dependencies that may present security threats and can be removed.

- Retrieving images: Images must be retrieved from a local store or a trusted registry.

- Extracting image layers: To extract an image's layers, a vulnerability scanner must support the Open Container Initiative (OCI) image format and be able to unpack tar archives.

- Identifying packages and dependencies: The dependencies within a container include operating system packages, non-OS packages, files, application libraries, and other components.

- Querying vulnerability databases: Most databases identify vulnerabilities using the CVE system. An essential task of a vulnerability scanner is to accurately identify every vulnerability in the database and map it to the affected package or range of package versions.

- Matching: A fundamental component of a vulnerability scanner compares installed packages against known vulnerabilities. Various methods can be used for this comparison; the most direct is name-based matching.

- Cleaning results: A generic matching algorithm may return multiple results for the same package, and name-based matching in particular is prone to false positives. The results must therefore be analyzed and filtered to remove inaccuracies before reporting.

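The matching and cleaning steps above can be illustrated with a deliberately simplified sketch. It assumes a toy in-memory database in which each advisory names a package and a half-open affected range [introduced, fixed), and it compares versions as dotted integers; real scanners such as Trivy rely on distribution-specific version rules and much richer advisory data. The CVE identifiers and package versions are hypothetical placeholders.

```python
"""Toy scanner: name-based matching, then version filtering and de-duplication."""
from dataclasses import dataclass


@dataclass(frozen=True)
class Advisory:
    cve_id: str
    package: str
    introduced: str  # first affected version (inclusive)
    fixed: str       # first fixed version (exclusive upper bound)


def version_key(version: str) -> tuple[int, ...]:
    """Compare dotted numeric versions such as '1.2.10' (assumption: digits only)."""
    return tuple(int(part) for part in version.split("."))


def match(installed: dict[str, str], db: list[Advisory]) -> list[tuple[str, str, str]]:
    """Return sorted (cve_id, package, version) findings."""
    findings = set()  # a set also drops duplicate hits from repeated feed entries
    for adv in db:
        version = installed.get(adv.package)
        if version is None:
            continue  # package name not installed: no match
        # Name matched; keep it only if the installed version is in the affected
        # range, which removes name-only false positives.
        if version_key(adv.introduced) <= version_key(version) < version_key(adv.fixed):
            findings.add((adv.cve_id, adv.package, version))
    return sorted(findings)


if __name__ == "__main__":
    db = [
        Advisory("CVE-0000-0001", "openssl", "3.0.0", "3.0.7"),
        Advisory("CVE-0000-0002", "zlib", "1.2.0", "1.2.12"),
        Advisory("CVE-0000-0001", "openssl", "3.0.0", "3.0.7"),  # duplicate entry
    ]
    installed = {"openssl": "3.0.2", "zlib": "1.2.13", "busybox": "1.36.1"}
    print(match(installed, db))  # [('CVE-0000-0001', 'openssl', '3.0.2')]
```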

4.3.1. Trivy (v0.62)

4.3.2. Preventing Containers from Running as Root

- runAsNonRoot: requires the container to run as a non-root user; the kubelet refuses to start the container if it would run with UID 0 (root).

- runAsUser and runAsGroup: specify the user and group IDs under which the container's processes run, making it possible to enforce a specific non-root user.

- allowPrivilegeEscalation: when set to false, prevents a process from gaining more privileges than its parent process.

- privileged: when set to true, the container gains access to host devices and can modify kernel settings; it should therefore remain disabled for ordinary workloads.
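The four settings above can also be audited outside the cluster. The sketch assumes a pod manifest already loaded into a Python dict (YAML parsing is omitted) and applies the checks per container; the function name and rule set are illustrative. Pod-level `runAsNonRoot` and `runAsUser` act as defaults that container-level values override, as in Kubernetes, whereas `allowPrivilegeEscalation` and `privileged` are container-level fields.

```python
"""Audit container securityContext settings in a pod manifest (dict form)."""


def audit_pod(pod: dict) -> list[str]:
    """Return human-readable findings; an empty list means every check passed."""
    spec = pod.get("spec", {})
    pod_ctx = spec.get("securityContext") or {}
    findings: list[str] = []
    for container in spec.get("containers", []):
        ctx = container.get("securityContext") or {}
        name = container.get("name", "<unnamed>")
        # Container-level values override pod-level defaults for these two fields.
        non_root = ctx.get("runAsNonRoot", pod_ctx.get("runAsNonRoot"))
        uid = ctx.get("runAsUser", pod_ctx.get("runAsUser"))
        if non_root is not True:
            findings.append(f"{name}: runAsNonRoot is not true")
        if uid == 0:
            findings.append(f"{name}: runAsUser is 0 (root)")
        # An unset allowPrivilegeEscalation behaves as true, so require explicit false.
        if ctx.get("allowPrivilegeEscalation") is not False:
            findings.append(f"{name}: allowPrivilegeEscalation is not false")
        if ctx.get("privileged") is True:
            findings.append(f"{name}: privileged is true")
    return findings


if __name__ == "__main__":
    hardened = {
        "spec": {
            "securityContext": {"runAsNonRoot": True, "runAsUser": 1000, "runAsGroup": 1000},
            "containers": [
                {"name": "app",
                 "securityContext": {"allowPrivilegeEscalation": False, "privileged": False}},
            ],
        }
    }
    print(audit_pod(hardened))  # []
```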

4.3.3. Integrating Hardening with CI/CD Pipelines

4.4. Identity and Access Management

4.4.1. Using Certificates

4.4.2. Using Tokens

- Static tokens are a simple mechanism for authenticating users to the Kubernetes API server. The tokens are defined in advance and stored in a static file on the control plane node. They offer an easy way to configure basic authentication in small-scale or testing environments, but they are not advisable for production: the tokens remain valid indefinitely, and the token list cannot be changed without restarting the API server. The file is a CSV file in which each row contains a token, a user name, a user ID, and an optional quoted, comma-separated list of group names; at least the first three columns are required. The API server reads bearer tokens from this file when it is started with the --token-auth-file=FILENAME option. For example, the file can be created as /etc/kubernetes/static-token.csv on the control plane node, after which the API server manifest at /etc/kubernetes/manifests/kube-apiserver.yaml must be updated to include the --token-auth-file flag.

- Bootstrap tokens are simple bearer tokens designed for creating new clusters or joining new nodes to an existing cluster. They are short-lived tokens intended specifically for the bootstrapping process, in which a node authenticates to the cluster's control plane. They were designed to support the kubeadm workflow but can also be used in other contexts. Bootstrap tokens are stored as Secrets in the kube-system namespace. The Bootstrap Token authenticator is enabled with the following API server flag: --enable-bootstrap-token-auth. Figure 8 illustrates the default bootstrap token created by the kubeadm init process and stored as a Secret in the kube-system namespace.


- OpenID Connect (OIDC) extends the OAuth2 protocol and is supported by many OAuth2 providers. Its principal addition to OAuth2 is an ID token, returned alongside the access token, which is a JSON Web Token (JWT). Kubernetes does not include its own OpenID Connect identity provider; instead, external identity providers such as Google or Azure can be integrated into Kubernetes authentication workflows as needed. To use OpenID Connect with Kubernetes, a user first authenticates with an identity provider, such as Microsoft Entra ID, Salesforce, or Google. After login, the identity provider issues an access_token, an id_token, and a refresh_token. When kubectl is used to interact with the cluster, the id_token must be supplied either via the --token flag or in the kubeconfig file. The kubectl client then sends the id_token in the Authorization header of each request to the API server. The API server verifies the JWT signature to confirm that the token is authentic and checks that it has not expired. If the token is valid and the user is authorized, the API server performs the request and returns the response to kubectl.
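The three token types above can be inspected with a few standard-library helpers. This is an illustrative sketch, not how the API server processes tokens: the static-token parser follows the documented CSV layout (token, user name, user ID, then an optional quoted group list); the bootstrap-token check follows the documented `<token-id>.<token-secret>` format (6 and 16 lowercase alphanumeric characters) and derives the Secret name and identity Kubernetes associates with it; and the JWT helper decodes an ID token's claims and checks `exp` without verifying the signature, which the API server must still do against the identity provider's keys. All token values are placeholders.

```python
"""Illustrative helpers for static, bootstrap, and OIDC (JWT) tokens."""
import base64
import csv
import io
import json
import re
import time
from typing import Optional


# Static tokens: CSV rows of token,user,uid followed by an optional quoted,
# comma-separated group list (the format read via --token-auth-file).
def parse_static_tokens(text: str) -> list:
    entries = []
    for row in csv.reader(io.StringIO(text)):
        if not row:
            continue  # skip blank lines
        if len(row) < 3:
            raise ValueError(f"expected at least 3 columns, got {row!r}")
        groups = [g.strip() for g in row[3].split(",") if g.strip()] if len(row) > 3 else []
        entries.append({"token": row[0], "user": row[1], "uid": row[2], "groups": groups})
    return entries


# Bootstrap tokens: 6-character ID, a dot, 16-character secret (a-z, 0-9).
BOOTSTRAP_TOKEN_RE = re.compile(r"([a-z0-9]{6})\.([a-z0-9]{16})")


def describe_bootstrap_token(token: str) -> dict:
    match = BOOTSTRAP_TOKEN_RE.fullmatch(token)
    if match is None:
        raise ValueError("not a valid bootstrap token")
    token_id = match.group(1)
    return {
        "secret_name": f"bootstrap-token-{token_id}",  # Secret in kube-system
        "username": f"system:bootstrap:{token_id}",
        "groups": ["system:bootstrappers"],
    }


# OIDC ID tokens are JWTs: header.payload.signature, each base64url-encoded.
def b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(segment: str) -> bytes:
    # JWTs omit base64 padding; restore it before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def decode_claims(id_token: str) -> dict:
    """Return the payload claims. The signature is NOT verified (inspection only)."""
    _header, payload, _signature = id_token.split(".")
    return json.loads(b64url_decode(payload))


def is_expired(claims: dict, now: Optional[float] = None) -> bool:
    # "exp" is in seconds since the epoch; a missing claim is treated as expired.
    now = time.time() if now is None else now
    return claims.get("exp", 0) <= now
```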

4.4.3. Using an Authentication Proxy

- --requestheader-client-ca-file=/etc/kubernetes/pki/ca.crt: specifies the Certificate Authority (CA) certificate used to verify the client certificate presented by the proxy, ensuring that only a trusted proxy can assert user identities to the API server.

- --requestheader-username-headers=X-Remote-User: specifies the HTTP header (X-Remote-User) in which the proxy passes the authenticated user's identity. If several headers are listed, the first one that contains a value is used as the username. The proxy sets this header, and the API server uses it to identify the authenticated user.

- --requestheader-group-headers=X-Remote-Group: specifies the header (X-Remote-Group) that lists the groups of the authenticated user.

- --proxy-client-cert-file=/etc/kubernetes/pki/apiserver.crt: supplies the client certificate that the API server presents when it calls out to other servers during a request, for example when proxying requests to aggregated API servers or calling admission webhooks. This certificate is expected to be signed by the CA given in --requestheader-client-ca-file.

- --proxy-client-key-file=/etc/kubernetes/pki/apiserver.key: specifies the private key for the certificate above (apiserver.crt), used to establish these outbound mutual TLS connections.
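The header handling configured by these flags can be modelled in a few lines. The sketch assumes the proxy's client certificate has already been verified against --requestheader-client-ca-file, represents headers as name/value pairs, takes the user name from the first configured username header with a non-empty value, and collects every value of the group headers. It is a simplified model, not the API server's code, and it ignores extra-attribute headers.

```python
"""Simplified model of request-header authentication via an authenticating proxy."""
from typing import Optional

USERNAME_HEADERS = ["X-Remote-User"]  # mirrors --requestheader-username-headers
GROUP_HEADERS = ["X-Remote-Group"]    # mirrors --requestheader-group-headers


def identity_from_headers(headers: list) -> Optional[tuple]:
    """Return (user, groups), or None if no username header carries a value.

    Assumes the proxy's client certificate was already verified; headers arriving
    without that check must never be trusted.
    """
    def values(name: str) -> list:
        # HTTP header names are case-insensitive; a header may appear several times.
        return [v for k, v in headers if k.lower() == name.lower()]

    user = None
    for header in USERNAME_HEADERS:
        non_empty = [v for v in values(header) if v]
        if non_empty:
            user = non_empty[0]
            break
    if user is None:
        return None
    groups = [v for header in GROUP_HEADERS for v in values(header)]
    return user, groups
```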

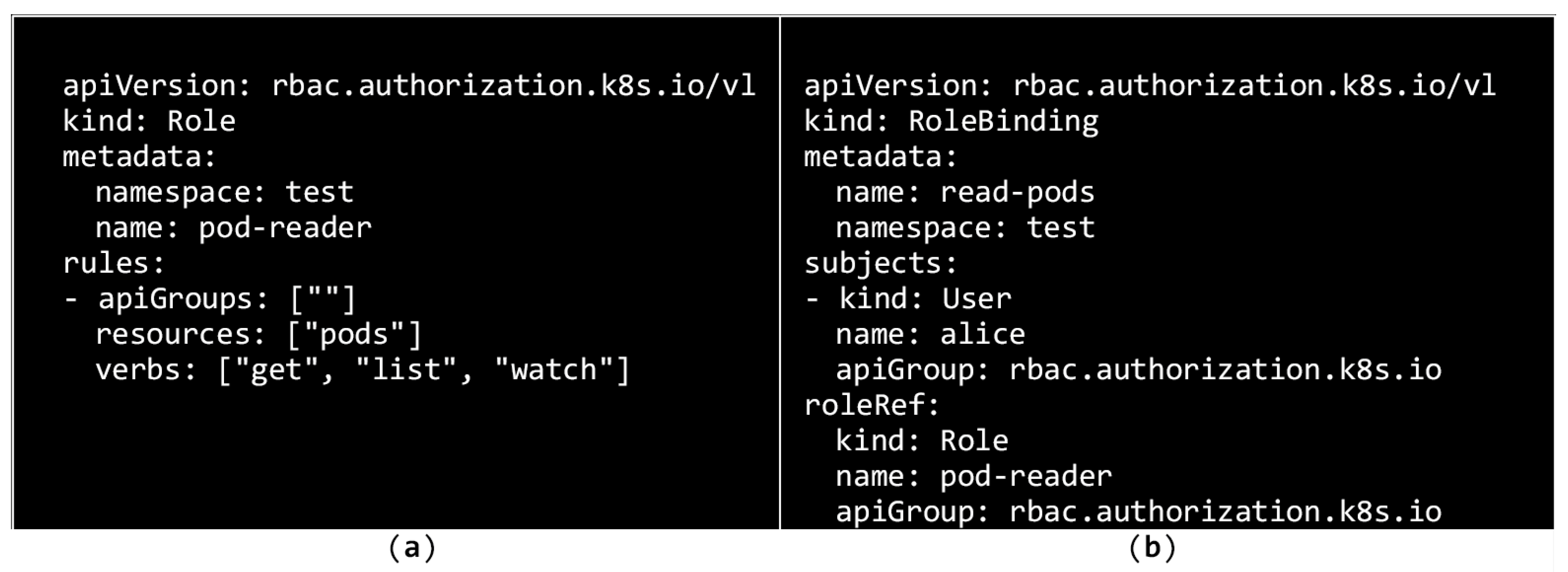

4.4.4. Role-Based Access Control

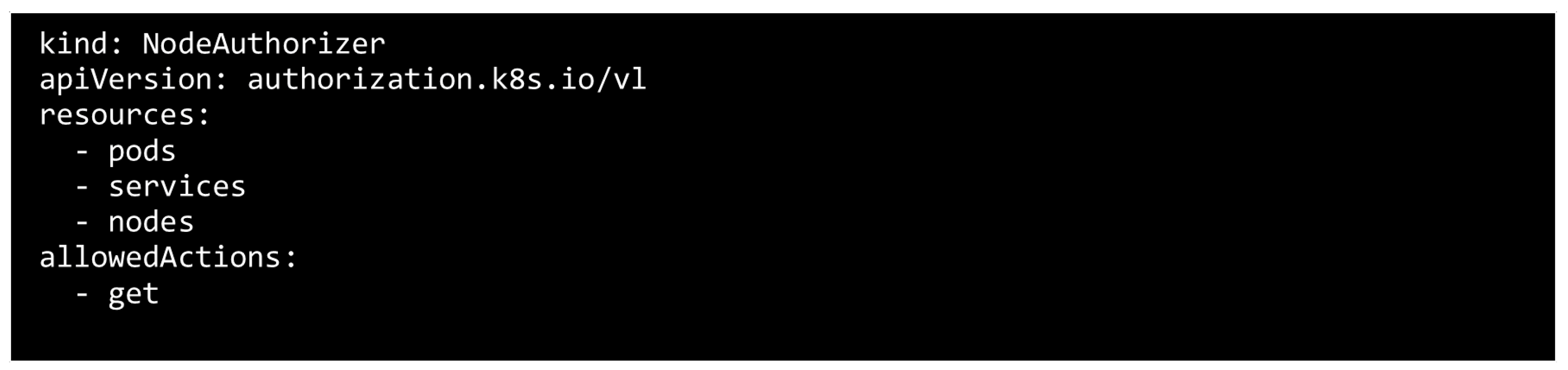

4.4.5. Node Authorization

- Read operations: services, endpoints, nodes, and pods, as well as secrets, config maps, persistent volume claims, and persistent volumes related to pods bound to the kubelet's node.

- Write operations: nodes and node status, pods and pod status, and events.

- Authentication-related operations: read/write access to CertificateSigningRequests for TLS bootstrapping, and the ability to create TokenReviews and SubjectAccessReviews for delegated authentication and authorization checks.
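The Node authorizer only applies to requests whose user name has the form `system:node:<nodeName>` and whose groups include `system:nodes`; the node name then limits which objects the kubelet may read. The sketch models that identity check and a simplified secret-read rule, assuming a secret is readable only when a pod bound to the caller's node (matching `spec.nodeName` and namespace) references it through a secret volume or `imagePullSecrets`. The real authorizer maintains a graph of these relationships and covers more reference types; the node, namespace, and secret names in the test are hypothetical.

```python
"""Simplified model of two Node authorizer checks (not the real implementation)."""
from typing import Optional

NODE_USER_PREFIX = "system:node:"
NODES_GROUP = "system:nodes"


def node_name_for(user: str, groups: list) -> Optional[str]:
    """Return the node name for a kubelet identity, or None for any other identity."""
    if NODES_GROUP not in groups or not user.startswith(NODE_USER_PREFIX):
        return None
    return user[len(NODE_USER_PREFIX):] or None


def secrets_referenced(pod: dict) -> set:
    """Secrets a pod references via secret volumes or imagePullSecrets.

    Simplification: env/envFrom and projected-volume references are ignored.
    """
    spec = pod.get("spec", {})
    names = {v["secret"].get("secretName") for v in spec.get("volumes", []) if "secret" in v}
    names |= {ref.get("name") for ref in spec.get("imagePullSecrets", [])}
    names.discard(None)
    return names


def may_read_secret(user: str, groups: list, namespace: str, secret: str, pods: list) -> bool:
    """Allow the read only if a pod bound to the caller's node references the secret."""
    node = node_name_for(user, groups)
    if node is None:
        return False
    return any(
        pod.get("spec", {}).get("nodeName") == node
        and pod.get("metadata", {}).get("namespace") == namespace
        and secret in secrets_referenced(pod)
        for pod in pods
    )
```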

4.5. Observability and Auditing

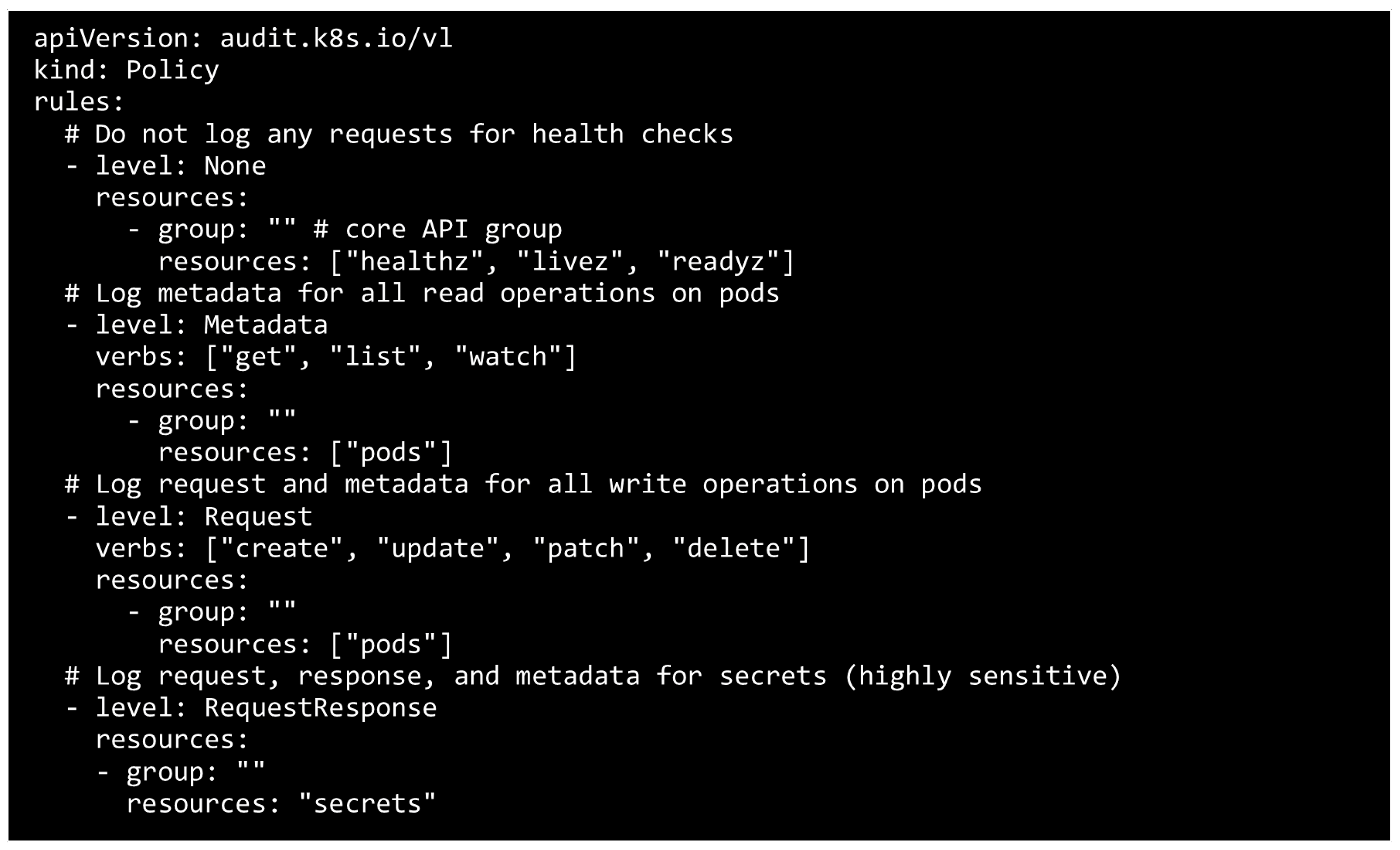

4.5.1. Auditing

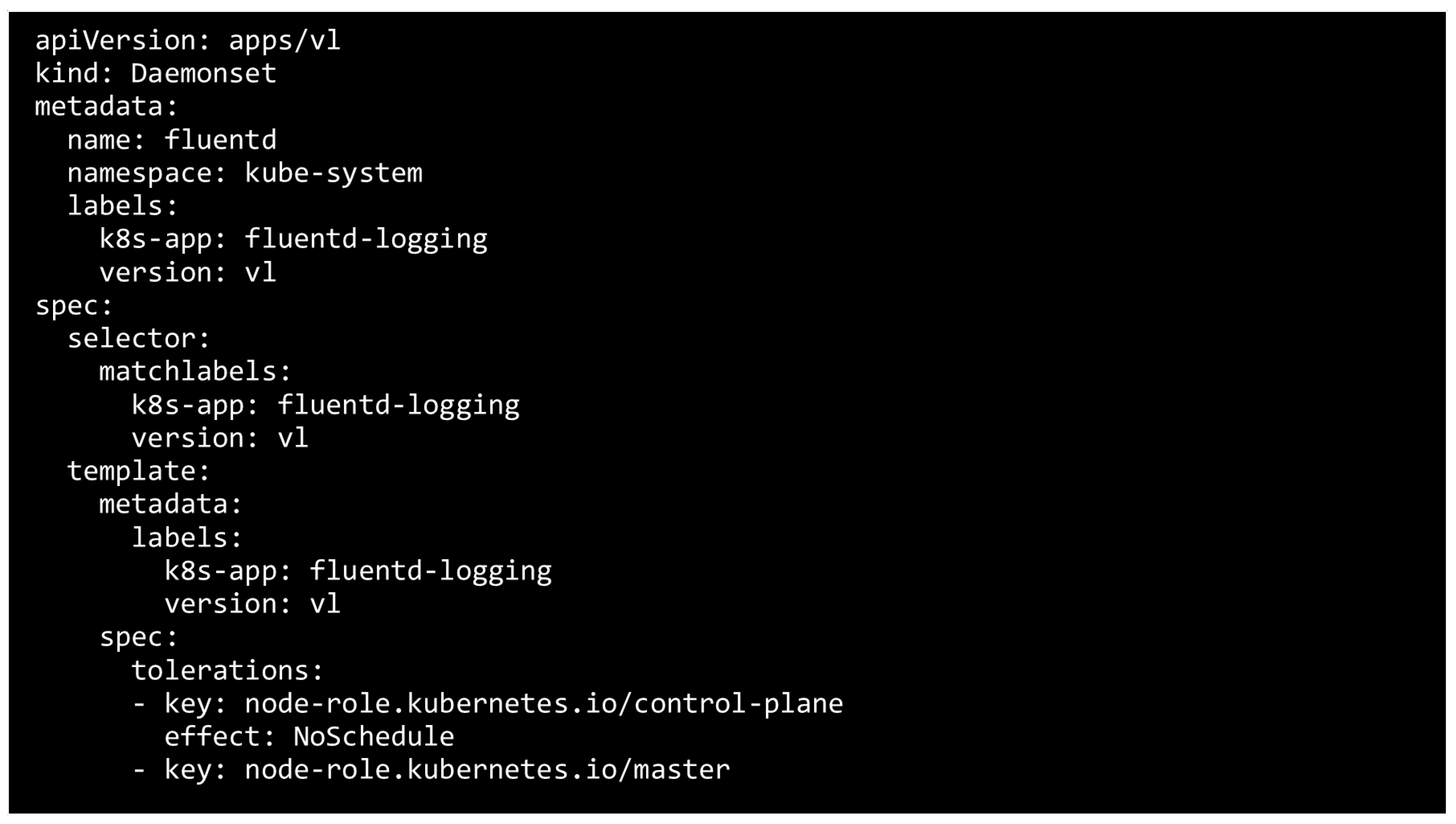

4.5.2. Logging

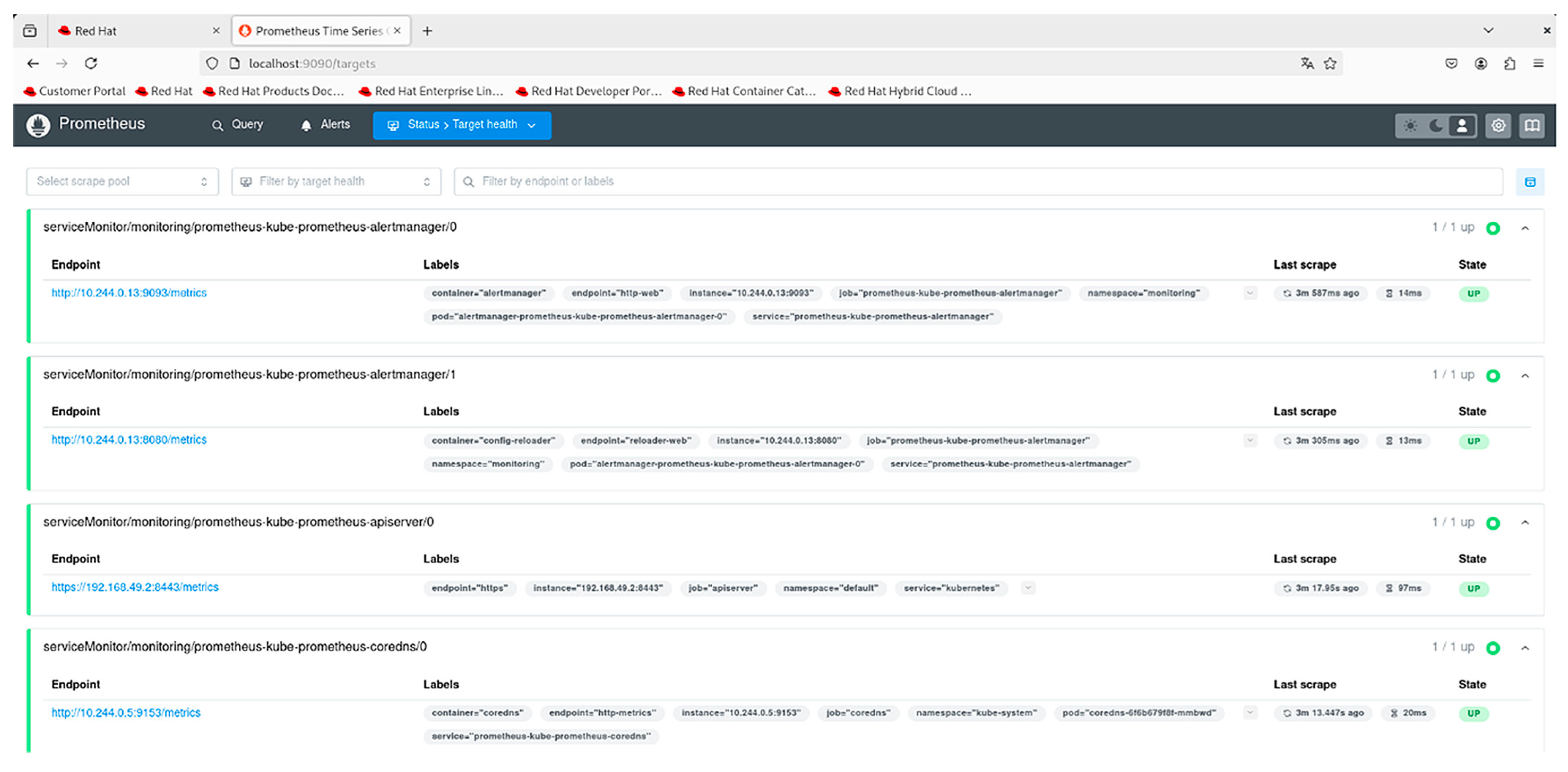

4.5.3. Kubernetes Monitoring

5. Kubernetes Vulnerabilities and Kubernetes Penetration Testing

5.1. Vulnerability Databases

5.2. Kubernetes CVEs

- CVE-2025-1974

- CVE-2025-1098

- CVE-2025-1097

- CVE-2025-24514

- CVE-2024-9042

- CVE-2024-7646

- CVE-2024-29990

- CVE-2024-9486

- CVE-2024-10220

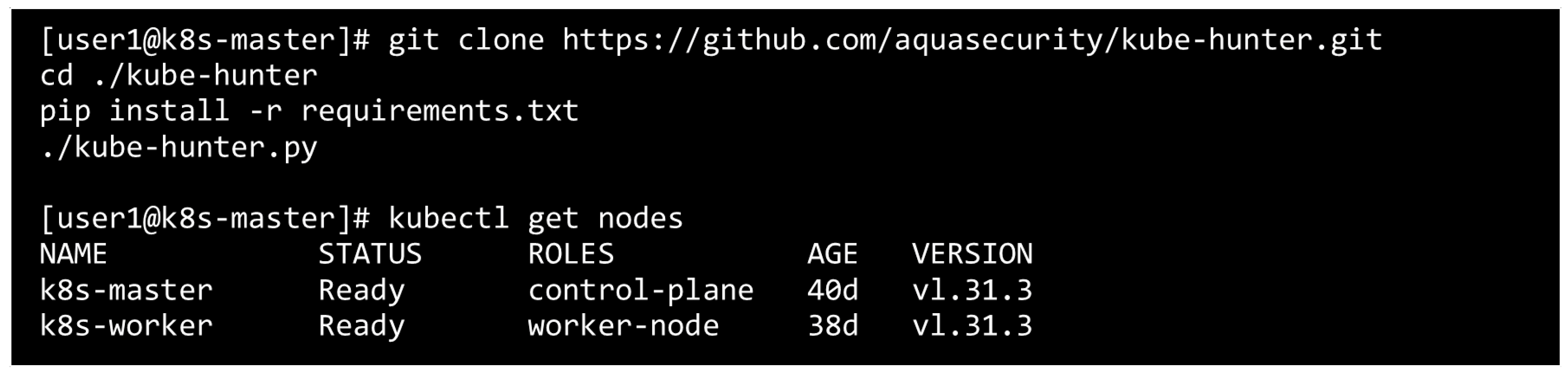

5.3. Penetration Testing Tools, Demonstration, and Security Compliance Assessment

- Remote scanning: To specify a remote machine for scanning, kube-hunter should be started with the --remote option.

- Interface scanning: When selecting interface scanning, the --interface option must be specified; this option will scan all network interfaces on the localhost.

- Network scanning: A specific CIDR range can be selected for scanning using the --cidr option.
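The three scanning modes map directly onto kube-hunter command lines. The helper below only assembles the argument list and runs nothing; the target addresses are hypothetical, and the optional `--active` flag (kube-hunter's active hunting, which attempts to exploit findings) should be used only against clusters one is authorized to test.

```python
"""Assemble kube-hunter command lines for the scanning modes described above."""
import ipaddress
from typing import Optional


def kube_hunter_args(mode: str, target: Optional[str] = None, active: bool = False) -> list:
    """Return an argv list for kube-hunter; nothing is executed here."""
    args = ["kube-hunter"]
    if mode == "remote":
        if not target:
            raise ValueError("remote mode needs a host name or IP address")
        args += ["--remote", target]
    elif mode == "interface":
        args.append("--interface")  # scan all local network interfaces
    elif mode == "cidr":
        if not target:
            raise ValueError("cidr mode needs a network range")
        ipaddress.ip_network(target, strict=False)  # raises ValueError if malformed
        args += ["--cidr", target]
    else:
        raise ValueError(f"unknown mode: {mode!r}")
    if active:
        args.append("--active")  # active hunting: only with explicit authorization
    return args


if __name__ == "__main__":
    # Hypothetical target from the documentation range 192.0.2.0/24.
    print(" ".join(kube_hunter_args("remote", "192.0.2.10")))
    # To execute: subprocess.run(kube_hunter_args("remote", "192.0.2.10"), check=True)
```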

5.4. Use Case: Hardening a Local Kubernetes Cluster

5.4.1. Baseline Vulnerability Assessment Using Trivy

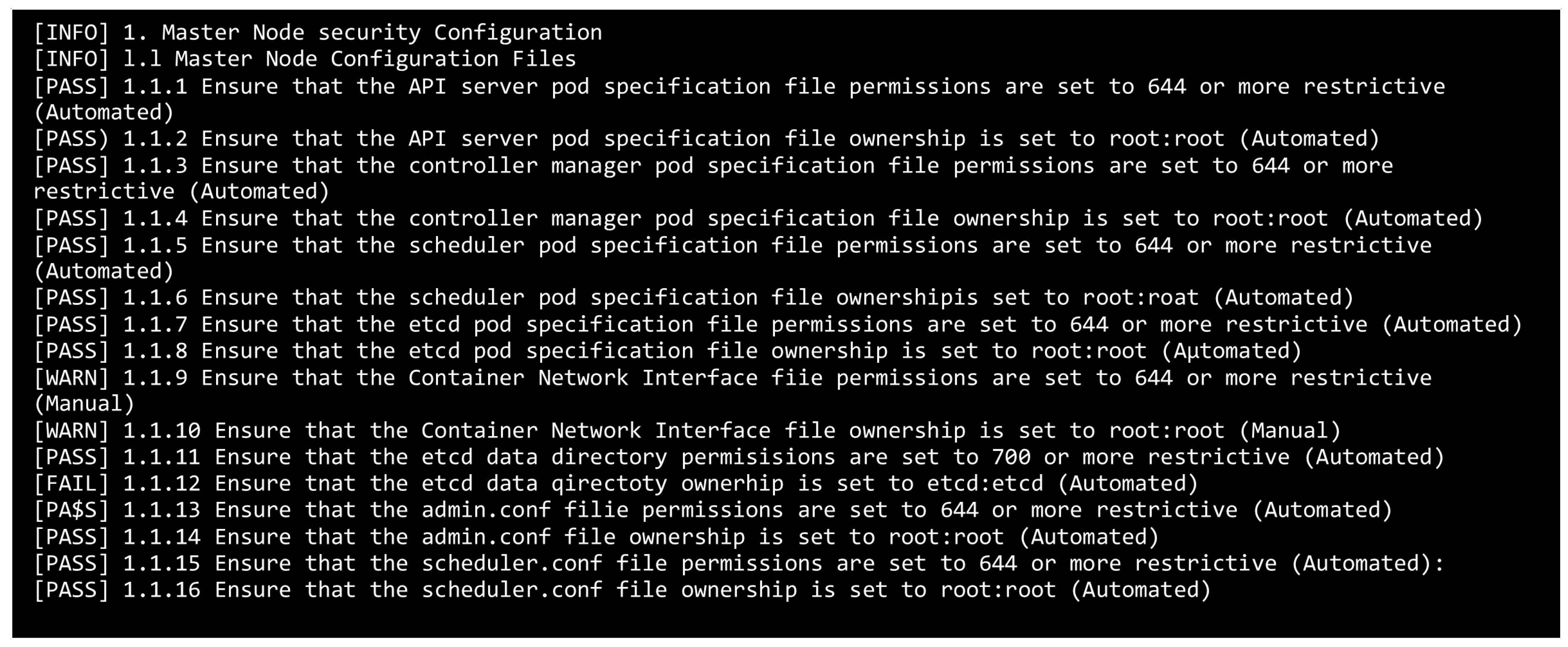

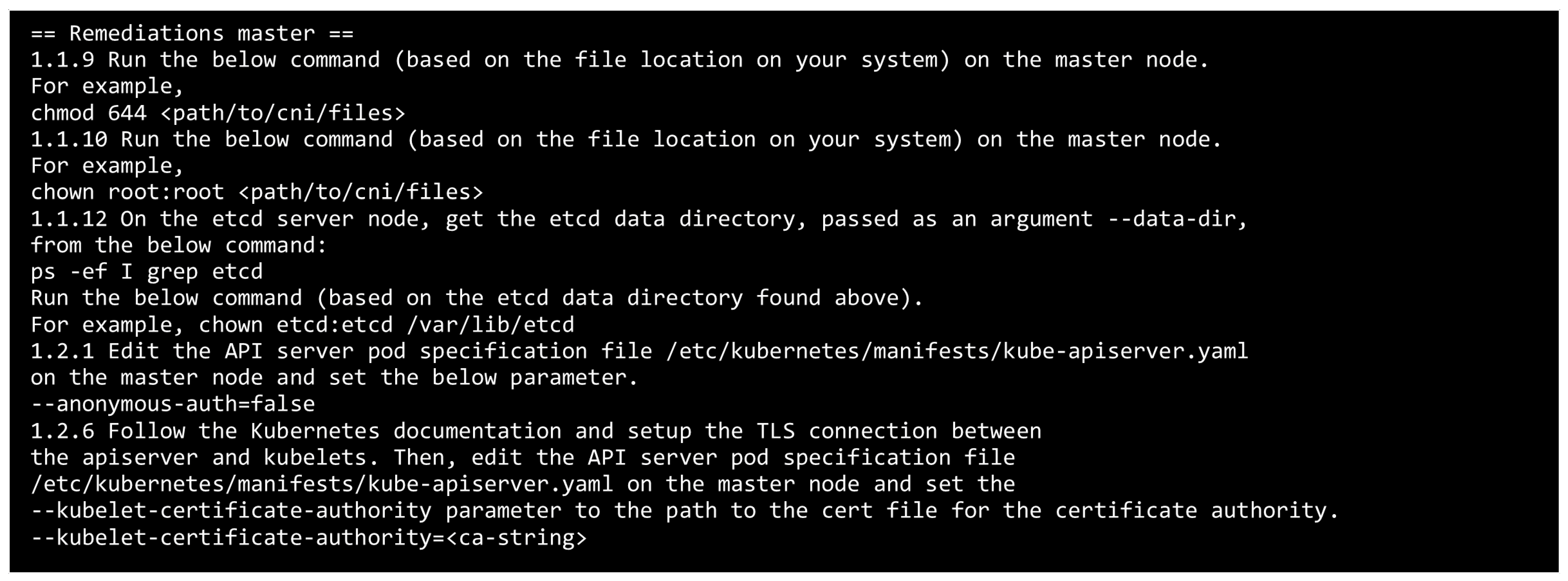

5.4.2. Compliance Scanning with Kube-Bench

- API server accepting anonymous requests (--anonymous-auth=true);

- Unsecured etcd communication channels;

- Kubelet authorization set to AlwaysAllow.

- --anonymous-auth=false was added to the API server manifest;

- Mutual TLS was enforced for etcd using custom CA-signed certificates;

- Kubelet authorization mode was changed to Webhook, and the cache TTL for unauthorized webhook responses was tightened.
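Before re-running kube-bench, the remediations above can be spot-checked with a short script. The sketch assumes the kube-apiserver and etcd command lines and the kubelet's KubeletConfiguration have already been loaded into Python structures (the function names are hypothetical), and it checks only the settings named above: `--anonymous-auth=false`, etcd's client-certificate flags `--client-cert-auth=true` and `--trusted-ca-file`, anonymous kubelet authentication, and the kubelet authorization mode. Missing kubelet values are treated conservatively as findings.

```python
"""Re-check the kube-bench findings after remediation (simplified sketch)."""


def flag_map(command: list) -> dict:
    """Map '--name=value' arguments to {'--name': 'value'}."""
    # partition() returns (name, '=', value); [::2] keeps (name, value).
    return dict(arg.partition("=")[::2] for arg in command if arg.startswith("--"))


def remaining_findings(apiserver_cmd: list, etcd_cmd: list, kubelet_config: dict) -> list:
    findings = []
    if flag_map(apiserver_cmd).get("--anonymous-auth") != "false":
        findings.append("kube-apiserver: --anonymous-auth is not false")
    etcd = flag_map(etcd_cmd)
    if etcd.get("--client-cert-auth") != "true" or not etcd.get("--trusted-ca-file"):
        findings.append("etcd: client certificate authentication not enforced")
    # Conservative: a missing setting is reported rather than assumed safe.
    anonymous = kubelet_config.get("authentication", {}).get("anonymous", {})
    if anonymous.get("enabled", True):
        findings.append("kubelet: anonymous authentication enabled")
    if kubelet_config.get("authorization", {}).get("mode") != "Webhook":
        findings.append("kubelet: authorization mode is not Webhook")
    return findings
```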

5.4.3. Penetration Testing with Kube-Hunter

- Disclosure of API Server version;

- Kubelet API accessible on port 10250;

- etcd port 2379 accessible without firewall restrictions.
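The two exposed ports can be re-tested from a host outside the control plane with a plain TCP connection attempt. The sketch only checks whether a TCP handshake completes; it does not authenticate to or probe the services, so a reachable port indicates network exposure rather than exploitability. The port-to-service mapping uses the defaults named above, and any host address passed in is an assumption about the environment.

```python
"""Check whether sensitive Kubernetes ports accept TCP connections."""
import socket

SENSITIVE_PORTS = {10250: "kubelet API", 2379: "etcd client"}


def is_reachable(host: str, port: int, timeout: float = 2.0) -> bool:
    """True if a TCP handshake to host:port completes within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out (timeouts are OSErrors too)
        return False


def exposed_ports(host: str, ports: dict = SENSITIVE_PORTS) -> dict:
    """Return the subset of ports (with labels) that accept connections."""
    return {port: name for port, name in ports.items() if is_reachable(host, port)}
```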

6. Future Works

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| CVE ID | Affected Component | Vulnerability/Risk | Recommended Mitigation |
|---|---|---|---|
| CVE-2025-1974 | Ingress-NGINX Controller | Unauthenticated remote code execution | Upgrade ingress-nginx; apply network restrictions and RBAC |
| CVE-2025-1098 | Ingress-NGINX Controller | Remote code execution | Update ingress-nginx; enforce network segmentation and review ingress policies |
| CVE-2025-1097 | Ingress-NGINX Controller | Remote code execution via misconfiguration | Patch ingress-nginx; implement configuration validation and least privilege |
| CVE-2025-24514 | Ingress-NGINX Controller | Unauthorized code execution through malicious requests | Upgrade ingress-nginx; monitor ingress logs; sanitize input annotations |
| CVE-2024-9042 | Kubelet on Windows Nodes | Command injection via insecure parameter handling | Upgrade Windows kubelet; restrict access using RBAC and firewalls |
| CVE-2024-7646 | Ingress-NGINX Controller | Privilege escalation via annotation injection | Upgrade to ingress-nginx ≥ v1.11.2; apply OPA/Gatekeeper policies |
| CVE-2024-29990 | Azure Kubernetes Service (AKS) | Privilege escalation within AKS | Apply the latest AKS patches; enforce Azure role and policy restrictions |
| CVE-2024-9486 | Kubernetes Image Builder | Root access exposure in image-building environments | Upgrade the builder; isolate build infrastructure from production workloads |
| CVE-2024-10220 | gitRepo Volume Plugin | Data exfiltration via pod-to-pod repository access | Upgrade Kubernetes; replace gitRepo volumes with init containers for controlled cloning |

| Kubernetes Component | Applied Security Controls | Security Outcome |
|---|---|---|
| API Server | TLS encryption, client certs, RBAC, token auth, restricting anonymous access | Secure API access, authenticated requests only, reduced attack surface |
| etcd | TLS encryption, certificate authentication, restricted file permissions, snapshot encryption | Confidentiality and integrity of the cluster state and secrets |
| Kubelet | Disabled anonymous access, webhook authorization, certificate-based TLS, restricted permissions | Controlled access to workloads and node-level operations |
| kube-scheduler | TLS encryption, profiling disabled, access restriction via bind-address | Prevention of workload manipulation or unauthorized scheduling |
| Container Images | Trivy scanning, rootless containers, minimal base images, secure build pipeline | Reduced supply chain risk and minimized the attack surface of workloads |
| Access Management (IAM) | Client certificates, bootstrap/static tokens, OpenID Connect, RBAC, node authorization | Enforced least privilege access and contextual identity control |
| Auditing & Logging | Audit policies, Fluentd log collection, Prometheus metrics, alert integration | Detection of misconfigurations, threat visibility, and compliance support |
| CI/CD Integration | Trivy in pipeline, conditional build failure on vulnerability detection | Early detection and prevention of insecure image deployments |
| Compliance Monitoring | kube-bench scanning with CIS benchmarks | Measurable security baseline and identification of hardening gaps |
| Penetration Testing | kube-hunter (active/passive scanning) | Validation of cluster surface exposure and misconfiguration detection |


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Morić, Z.; Dakić, V.; Čavala, T. Security Hardening and Compliance Assessment of Kubernetes Control Plane and Workloads. J. Cybersecur. Priv. 2025, 5, 30. https://doi.org/10.3390/jcp5020030
