A Human–AI Collaborative Framework for Cybersecurity Consulting in Capstone Projects for Small Businesses

Abstract

1. Introduction

1.1. Background and Motivation

1.2. Purpose and Scope of the R&D Agenda

1.3. Structure of the Paper

2. Literature Review

2.1. Methodologies for Development-Oriented Projects

2.2. Methodologies for Research-Oriented Projects

2.3. Human–AI Collaboration

3. RQs and Objectives

3.1. Primary RQs

- RQ1: What are the core requirements and methodologies for designing an R&D agenda that supports a scalable cybersecurity consulting firm? This question examines the essential requirements and methodologies that form the basis of the agenda, ensuring that it meets the demands of both consulting and educational contexts.

- RQ2: How can the R&D agenda structure human–computer collaboration to enhance productivity and scalability in consulting projects? This question investigates how collaborative workflows between human expertise and AI tools, including AI agents, can be embedded within the agenda to improve productivity and establish a scalable model. It also explores the respective roles of humans and AI agents, examining how each can complement the other to maximise effectiveness in consulting tasks.

- RQ3: How can the R&D agenda provide both educational value and industry relevance for capstone projects within a consulting context? This question explores how the agenda aligns with capstone objectives and industry requirements, ensuring that students gain practical experience while contributing to the goals of a consulting firm.

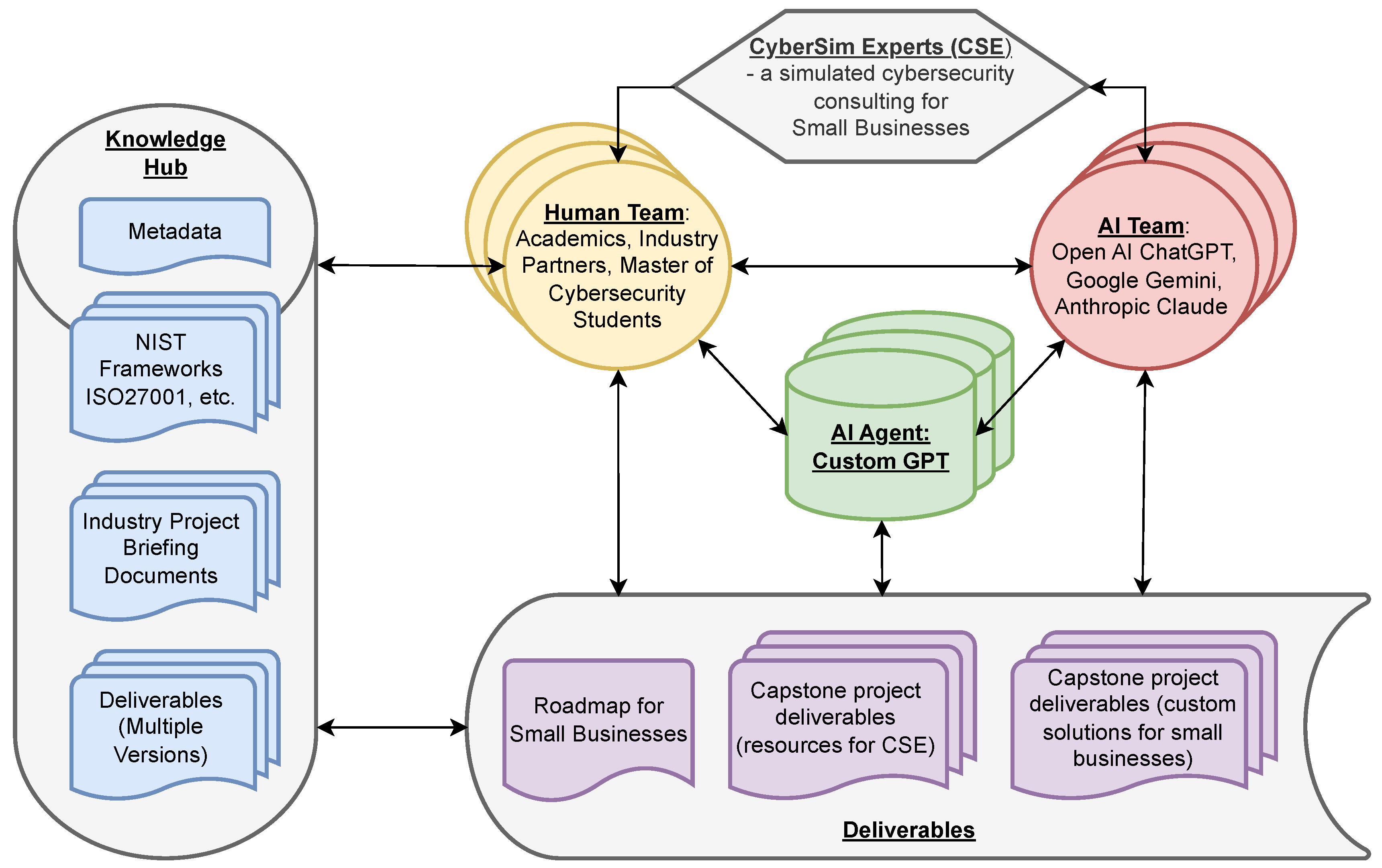

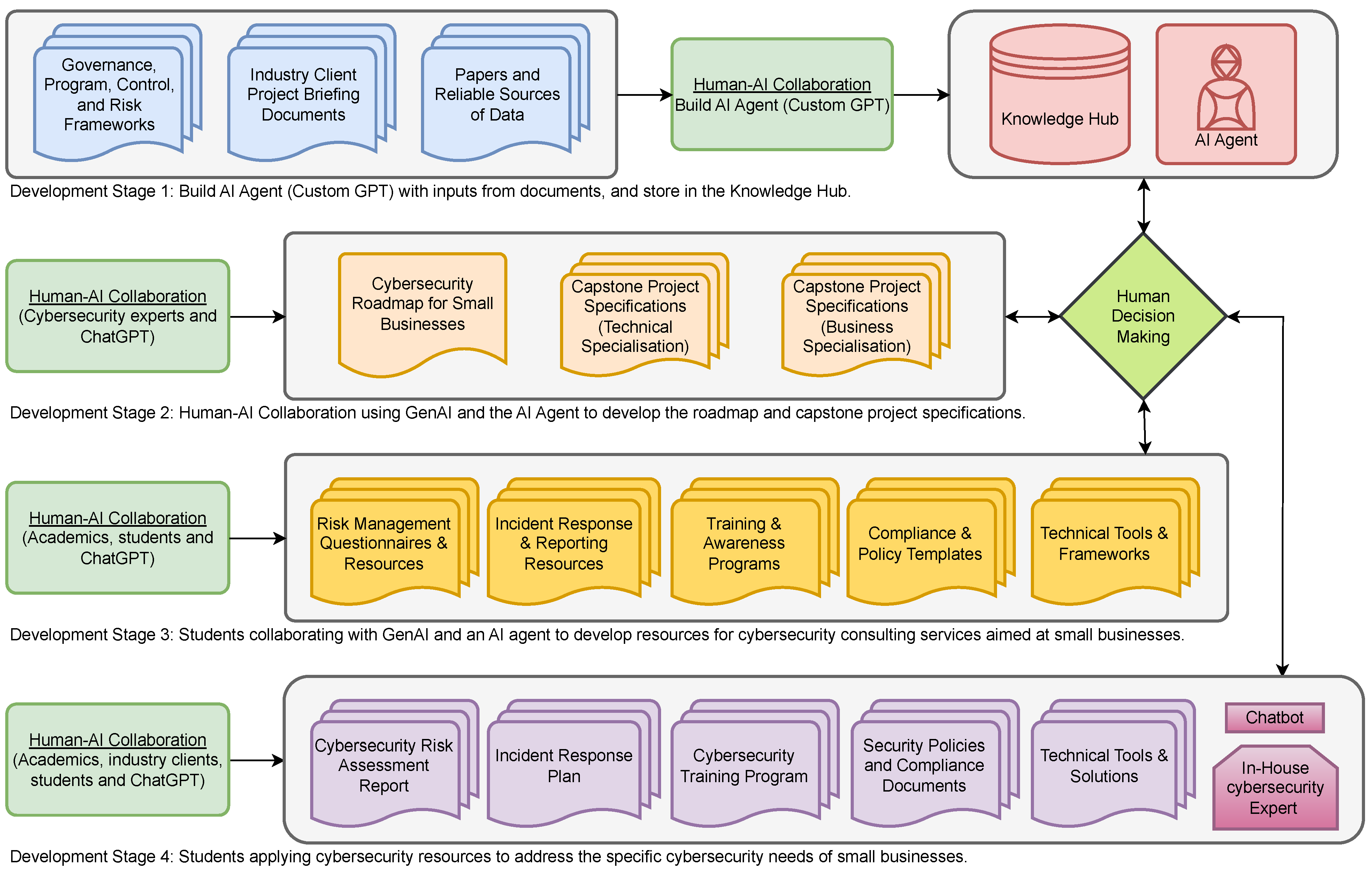

3.2. Overview of the Four Development Stages

- Stage 1: AI agent development—focuses on designing an AI agent trained on cybersecurity frameworks and industry-specific documents to address small business cybersecurity needs, supporting consulting tasks and enhancing productivity.

- Stage 2: Cybersecurity roadmap creation—involves developing a structured cybersecurity roadmap tailored to small businesses, offering a practical guide for these businesses to follow as they advance their cybersecurity practices.

- Stage 3: Resource development—dedicated to creating tools, templates, and training programs that consultants can use and share with clients, establishing an adaptable set of consulting resources.

- Stage 4: Industry application—emphasises the real-world application and testing of resources, gathering feedback from small business clients to inform ongoing refinement and development.

3.3. SRQs by Development Stage

- Stage 1: AI Agent Development

- SRQ1.1: What are the specific design requirements and methodologies for the AI agent to meet consulting and capstone needs?

- SRQ1.2: In what ways can the AI agent enhance consulting productivity and accessibility, and what roles does it serve?

- SRQ1.3: How will the performance and impact of the AI agent be evaluated in cybersecurity consulting contexts, using key indicators such as accuracy and user satisfaction?

- Stage 2: Cybersecurity Roadmap Creation

- SRQ2.1: How does the agenda structure the process for creating customised cybersecurity roadmaps for small businesses?

- SRQ2.2: How does the roadmap align with both educational standards and industry requirements?

- Stage 3: Resource Development

- SRQ3.1: What design principles guide the creation of consulting tools, templates, and training programs within the agenda?

- SRQ3.2: How do iterative feedback and human–computer collaboration enhance the usability and effectiveness of consulting resources?

- Stage 4: Industry Application

- SRQ4.1: What insights are gained from applying the R&D agenda’s resources in real-world industry settings, and how effective are they?

- SRQ4.2: What lessons can be learned from applying the consulting resources with small business clients?
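
SRQ1.3 names accuracy and user satisfaction as example indicators for evaluating the AI agent. As a minimal sketch, assuming each test query is judged correct or incorrect by a human expert and each user rates the response on a 1–5 scale, the two indicators could be computed as below. The `EvaluationRecord` type, its fields, the rating scale, and the sample queries are illustrative assumptions, not part of the agenda.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class EvaluationRecord:
    """One evaluated interaction with the AI agent (hypothetical schema)."""
    query: str
    expert_judged_correct: bool  # a human consultant's verdict on the answer
    user_rating: int  # assumed 1-5 satisfaction rating


def accuracy(records: list[EvaluationRecord]) -> float:
    """Share of answers that a human expert judged correct."""
    if not records:
        raise ValueError("no records to evaluate")
    return sum(r.expert_judged_correct for r in records) / len(records)


def mean_satisfaction(records: list[EvaluationRecord]) -> float:
    """Average user rating, rejecting values outside the assumed 1-5 scale."""
    if not records:
        raise ValueError("no records to evaluate")
    for r in records:
        if not 1 <= r.user_rating <= 5:
            raise ValueError(f"rating out of range: {r.user_rating}")
    return mean(r.user_rating for r in records)


# Illustrative records only; no real evaluation data is implied.
records = [
    EvaluationRecord("What is multi-factor authentication?", True, 5),
    EvaluationRecord("Which Essential Eight strategy covers backups?", True, 4),
    EvaluationRecord("How often should we patch?", False, 2),
    EvaluationRecord("Do we need ISO/IEC 27001?", True, 3),
]
print(f"accuracy={accuracy(records):.2f}")  # 3 of 4 judged correct
print(f"satisfaction={mean_satisfaction(records):.2f}")
```

Keeping the expert verdict and the user rating as separate fields reflects that the two indicators measure different things: whether the answer is right, and whether the user found it useful.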

3.4. Key Objectives of the R&D Agenda

- Scalable development of consulting resources: enable the rapid, scalable creation of consulting resources tailored to small business needs, with flexibility for future adjustments.

- Facilitating human–computer collaboration: establish initial workflows that integrate human expertise and AI-driven tools, enhancing productivity and operational efficiency.

- Supporting educational and industry alignment: ensure that the agenda provides educational value aligned with academic standards and real-world industry requirements, offering students hands-on, practical experience.

- Continuous improvement and stakeholder collaboration: foster collaboration among multiple stakeholders, including academics, program directors, industry partners, work-integrated learning (WIL) teams, and students, to allow for iterative feedback and refinement.

4. Methodology

4.1. Research Design Overview

- Development-oriented projects: focusing on creating practical consulting tools, resources, and industry applications; or

- Research-oriented projects: generating insights and frameworks for further refinement and academic contributions.

4.2. Methodology Options and Selection for Designing the R&D Agenda

4.3. Human–AI Collaboration for the Cybersecurity Consulting Firm

4.4. Workflow for Resource Development

4.5. Summary of Methodology

5. Design, Structure, and Initial Results of the R&D Agenda

5.1. Overview of the Four Development Stages

- AI agent development—focuses on building a custom AI agent (Custom GPT) that can draw on cybersecurity documents and other inputs to create a knowledge hub. This agent serves as a foundational tool for subsequent stages, providing accessible, relevant information for consulting tasks. Documents such as widely adopted frameworks and best practices [20,21,22,23,24,25,26], government resources [27,28,29,30,31] and industry resources [32,33] are categorised and included in the training of the custom AI agent.

- Cybersecurity roadmap creation—utilises Human–AI collaboration, integrating both GenAI and the AI agent to co-develop a cybersecurity roadmap and detailed capstone project specifications. The roadmap is structured as a flexible, five-phase maturity model that can be tailored to a variety of business contexts. Each phase is accompanied by modular templates and diagnostic tools that guide implementation according to the client’s size, sector, and technical capacity. This customisation ensures accessibility for low-resourced businesses while preserving scalability for more mature organisations. Additionally, the roadmap is designed to incorporate feedback from industry partners and capstone participants, allowing it to evolve with changing cybersecurity threats and client needs.

- Resource development—involves collaboration between students, GenAI, and the AI agent to create consulting resources aimed at supporting small businesses’ cybersecurity needs.

- Industry application—enables students to apply developed resources to real-world cybersecurity challenges faced by small businesses, adapting resources to fit specific needs and gathering feedback for further refinement.
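
The first stage categorises source documents into widely adopted frameworks and best practices [20–26], government resources [27–31], and industry resources [32,33] before they are used by the custom AI agent. A minimal sketch of that categorisation as a data structure follows. The `KnowledgeHub` class, its `add` and `by_category` methods, and the short document titles are hypothetical, and the sketch does not model how a Custom GPT actually ingests files.

```python
from collections import defaultdict

# Category labels mirroring the three groups of source documents (assumed names).
CATEGORIES = ("frameworks and best practices", "government resources", "industry resources")


class KnowledgeHub:
    """Hypothetical registry that groups source documents by category."""

    def __init__(self) -> None:
        self._documents: dict[str, list[str]] = defaultdict(list)

    def add(self, title: str, category: str) -> None:
        """Register a document under one of the known categories."""
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category}")
        self._documents[category].append(title)

    def by_category(self, category: str) -> list[str]:
        """Return a copy of the titles filed under a category."""
        return list(self._documents.get(category, []))


hub = KnowledgeHub()
frameworks, government, industry = CATEGORIES
for title in ["NIST CSF 2.0", "NIST SP 1300 Small Business Quick-Start Guide", "ISO/IEC 27001",
              "CIS Critical Security Controls v8", "COBIT 2019", "CMMC 2.0", "ACSC Essential Eight"]:
    hub.add(title, frameworks)
for title in ["ACSC Small Business Cyber Security Guide", "ACSC Small Business Cyber Security Checklist",
              "USAID Guide to Cybersecurity for SMBs, Part 1", "USAID Guide to Cybersecurity for SMBs, Part 2",
              "FTC Cybersecurity for Small Business"]:
    hub.add(title, government)
for title in ["Spanning SMB cybersecurity whitepaper", "Microsoft Small Business Resource Center"]:
    hub.add(title, industry)

for category in CATEGORIES:
    print(f"{category}: {len(hub.by_category(category))} documents")
```

Rejecting unknown categories keeps the hub's grouping aligned with the three source types, which makes it easier to see later which kind of guidance an answer drew on.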

5.2. Development-Oriented Projects Across Stages

- Purpose: defines the primary goal of each project within the context of consulting needs.

- Human–AI Collaboration: highlights how AI tools and human expertise are integrated to enhance productivity and resource creation.

- Methodology: specifies the selected methodology for guiding project execution (DBTL in Stages 1–3 and Agile development in Stage 4).

- Developers: identifies the team members or stakeholders involved in each project.

- Development outputs: lists the expected deliverables for each stage, such as consulting tools or templates.

- Pedagogy principles: emphasises the educational principles guiding project design to ensure relevance to capstone learning objectives.
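
The six attributes above act as a common template for describing each development-oriented project. One way to make that template explicit is a small record type. The sketch below is hypothetical: the `DevelopmentProject` name and the completeness check are illustrative. Its Stage 1 values are taken from the stage-by-stage summary table of development-oriented projects.

```python
from dataclasses import dataclass, fields


@dataclass
class DevelopmentProject:
    """Hypothetical record mirroring the six development-project attributes."""
    stage: int
    purpose: str
    human_ai_collaboration: str
    methodology: str
    developers: list[str]
    development_outputs: list[str]
    pedagogy_principle: str

    def missing_attributes(self) -> list[str]:
        """Names of attributes left empty, in declaration order."""
        return [f.name for f in fields(self)
                if f.name != "stage" and not getattr(self, f.name)]


stage1 = DevelopmentProject(
    stage=1,
    purpose="Develop Custom GPT and Knowledge Hub",
    human_ai_collaboration="Academics, AI tools, and industry partners collaborate to build the AI agent",
    methodology="DBTL",
    developers=["Academics", "Industry Partners"],
    development_outputs=["Custom GPT", "Knowledge Hub"],
    pedagogy_principle="Constructivism",
)
print(stage1.missing_attributes())  # [] because every attribute is filled in

# A partly drafted specification (illustrative) is flagged field by field.
draft = DevelopmentProject(2, "", "With AI tools and stakeholders", "DBTL", [],
                           ["Cybersecurity Roadmap"], "Experiential Learning")
print(draft.missing_attributes())
```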

5.3. Research-Oriented Projects Across Stages

- Purpose: defines the primary objective of each project in terms of generating research insights.

- Example RQs: lists specific questions that each project aims to explore, contributing to the agenda’s SRQs.

- Human–AI Collaboration: describes how Human–AI collaboration supports data collection and analysis processes.

- Methodology: specifies the guiding methodology, fostering systematic exploration and reflection (Reflexive DSR in Stages 1, 3, and 4 and Action DSR in Stage 2).

- Researchers: identifies the researchers involved in each stage of the project.

- Research Outputs: lists the expected outcomes for each stage, such as frameworks or academic papers.

- Data collection: outlines the primary data collection methods, which may include feedback from participants or case study analysis.
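
The stage tables assign DBTL to development-oriented projects in Stages 1–3 and Agile development in Stage 4. Research-oriented projects use Reflexive DSR in every stage except Stage 2, which uses Action DSR. The lookup below puts that mapping in one place so that a project specification can be checked against it. The `Orientation` enum, `METHODOLOGY_BY_STAGE`, and `methodology_for` are hypothetical names used for illustration.

```python
from enum import Enum


class Orientation(Enum):
    DEVELOPMENT = "development"
    RESEARCH = "research"


# Methodology per stage, as summarised in the stage-by-stage project tables.
METHODOLOGY_BY_STAGE = {
    Orientation.DEVELOPMENT: {1: "DBTL", 2: "DBTL", 3: "DBTL", 4: "Agile development"},
    Orientation.RESEARCH: {1: "Reflexive DSR", 2: "Action DSR", 3: "Reflexive DSR", 4: "Reflexive DSR"},
}


def methodology_for(orientation: Orientation, stage: int) -> str:
    """Return the methodology the agenda assigns to a project type and stage."""
    try:
        return METHODOLOGY_BY_STAGE[orientation][stage]
    except KeyError:
        raise ValueError(f"stage must be 1-4, got {stage}") from None


for stage in range(1, 5):
    print(stage, methodology_for(Orientation.DEVELOPMENT, stage), "|",
          methodology_for(Orientation.RESEARCH, stage))
```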

5.4. Initial Findings and Practical Insights from Development Stages

6. Discussion

6.1. Methodology Effectiveness and Alignment (RQ1)

6.2. High-Level Impact of Human–AI Collaboration (RQ2)

6.3. Educational and Industry Alignment (RQ3)

6.4. Challenges and Lessons Learned

6.5. Future Research Directions and Opportunities for SRQs

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Saha, B.; Anwar, Z. A review of cybersecurity challenges in small business: The imperative for a future governance framework. J. Inf. Secur. 2024, 15, 24–39.

2. Chidukwani, A.; Zander, S.; Koutsakis, P. A survey on the cyber security of small-to-medium businesses: Challenges, research focus and recommendations. IEEE Access 2022, 10, 85701–85719.

3. Elger, D.F.; Beyerlein, S.W.; Budwig, R.S. Using design, build, and test projects to teach engineering. In Proceedings of the 30th Annual Frontiers in Education Conference: Building on A Century of Progress in Engineering Education, Conference Proceedings (IEEE Cat. No. 00CH37135), Kansas City, MO, USA, 18–21 October 2000; Volume 2, p. F3C-9.

4. Chan, F.K.; Thong, J.Y. Acceptance of agile methodologies: A critical review and conceptual framework. Decis. Support Syst. 2009, 46, 803–814.

5. Ilieva, S.; Ivanov, P.; Stefanova, E. Analyses of an agile methodology implementation. In Proceedings of the 30th Euromicro Conference, Rennes, France, 3 September 2004; pp. 326–333.

6. Kensing, F.; Blomberg, J. Participatory design: Issues and concerns. Comput. Support. Coop. Work CSCW 1998, 7, 167–185.

7. Bossen, C.; Dindler, C.; Iversen, O.S. Evaluation in participatory design: A literature survey. In Proceedings of the 14th Participatory Design Conference: Full Papers-Volume 1; Association for Computing Machinery: New York, NY, USA, 2016; pp. 151–160.

8. Spinuzzi, C. The methodology of participatory design. Tech. Commun. 2005, 52, 163–174.

9. Abras, C.; Maloney-Krichmar, D.; Preece, J. User-centered design. In Encyclopedia of Human-Computer Interaction; Bainbridge, W., Ed.; Sage Publications: Thousand Oaks, CA, USA, 2004; pp. 445–456.

10. Vredenburg, K.; Mao, J.Y.; Smith, P.W.; Carey, T. A survey of user-centered design practice. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Minneapolis, MN, USA, 20–25 April 2002; pp. 471–478.

11. Hevner, A.R.; Chatterjee, S. Design Research in Information Systems: Theory and Practice; Springer: New York, NY, USA, 2010.

12. Kuechler, B.; Vaishnavi, V. On theory development in design science research: Anatomy of an artifact. J. Assoc. Inf. Syst. 2012, 13, 490–507.

13. Haj-Bolouri, A.; Purao, S. Action Design Research as a Method-in-Use. DiVA Portal. 2018. Available online: https://www.diva-portal.org/smash/get/diva2:1109694/FULLTEXT01.pdf (accessed on 5 January 2025).

14. Cronholm, S.; Göbel, H.; Hjalmarsson, A. Empirical evaluation of action design research. In Proceedings of the Australasian Conference on Information Systems, Wollongong, Australia, 5–7 December 2016.

15. Sein, M.K.; Henfridsson, O.; Purao, S.; Rossi, M.; Lindgren, R. Action design research. MIS Q. 2011, 35, 37–56.

16. Hendricks, C.C. Improving Schools Through Action Research: A Reflective Practice Approach; Pearson: Upper Saddle River, NJ, USA, 2017.

17. Feucht, F.C.; Lunn Brownlee, J.; Schraw, G. Moving beyond reflection: Reflexivity and epistemic cognition in teaching and teacher education. Educ. Psychol. 2017, 52, 234–241.

18. Chan, K.C. Building a Research Integrity Advisor with OpenAI GPT Builder. 2024. Available online: https://ssrn.com/abstract=4884418 (accessed on 5 January 2025).

19. Chan, K.C.; Lokuge, S.; Fahmideh, M.; Lane, M.S. AI-Assisted Educational Design: Academic-GPT Collaboration for Assessment Creation. 2024. Available online: https://ssrn.com/abstract=4996532 (accessed on 5 January 2025).

20. National Institute of Standards and Technology (NIST). NIST Cybersecurity Framework (CSF) 2.0; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2024. Available online: https://www.nist.gov/cyberframework (accessed on 5 January 2025).

21. National Institute of Standards and Technology (NIST). NIST Cybersecurity Framework 2.0: Small Business Quick-Start Guide; NIST Special Publication 1300; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2024.

22. ISO/IEC 27001; Information Security Management Systems—Requirements. International Organization for Standardization: Geneva, Switzerland, 2013. Available online: https://www.iso.org/standard/54534.html (accessed on 5 January 2025).

23. Center for Internet Security. CIS Critical Security Controls v8; Center for Internet Security: Washington, DC, USA, 2021. Available online: https://www.cisecurity.org/controls/v8 (accessed on 5 January 2025).

24. ISACA. COBIT 2019 Framework: Governance and Management Objectives; ISACA: Rolling Meadows, IL, USA, 2019. Available online: https://netmarket.oss.aliyuncs.com/df5c71cb-f91a-4bf8-85a6-991e1c2c0a3e.pdf (accessed on 5 January 2025).

25. Cybersecurity Maturity Model Certification Accreditation Body. CMMC 2.0 Model Overview; Cybersecurity Maturity Model Certification Accreditation Body. 2023. Available online: https://www.cyberab.org/ (accessed on 5 January 2025).

26. Australian Cyber Security Centre. Strategies to Mitigate Cybersecurity Incidents: Essential Eight; Australian Cyber Security Centre. Available online: https://www.cyber.gov.au/acsc/view-all-content/essential-eight (accessed on 5 January 2025).

27. Australian Cyber Security Centre. Small Business Cyber Security Guide; Australian Cyber Security Centre. 2023. Available online: https://www.cyber.gov.au/resources-business-and-government/essential-cybersecurity/small-business-cybersecurity/small-business-cybersecurity-guide (accessed on 5 January 2025).

28. Australian Cyber Security Centre. Small Business Cyber Security Checklist; Australian Cyber Security Centre. 2023. Available online: https://www.cyber.gov.au/sites/default/files/2023-06/Small%20business%20cyber%20security%20checklist.pdf (accessed on 5 January 2025).

29. Vazquez, D. Guide to Cybersecurity for Small and Medium Businesses, Part 1: Understanding Cybersecurity; USAID: Washington, DC, USA, 2022. Available online: https://pdf.usaid.gov/pdf_docs/PA00ZKCM.pdf (accessed on 5 January 2025).

30. Vazquez, D. Guide to Cybersecurity for Small and Medium Businesses, Part 2: Protecting Your Business; USAID: Washington, DC, USA, 2022. Available online: https://marketlink.org/ (accessed on 5 January 2025).

31. Federal Trade Commission. Cybersecurity for Small Business: Protect Your Small Business. Available online: https://www.ftc.gov/business-guidance/small-businesses/cybersecurity (accessed on 5 January 2025).

32. Spanning. Building Cybersecurity in Small and Midsize Businesses, Cybersecurity Whitepaper; Spanning. 2018. Available online: https://www.spanning.com/media/downloads/SB0365-whitepaper-cybersecurity.pdf (accessed on 5 January 2025).

33. Microsoft. Small Business Resource Center: Discover Tools, Guides, and Expert Advice to Elevate Your Business Success. Available online: https://www.microsoft.com/en-us/microsoft-365/small-business-resource-center (accessed on 5 January 2025).

34. Ciaccio, E.J. Use of artificial intelligence in scientific paper writing. Inform. Med. Unlocked 2023, 41, 101253.

| Methodology | DBTL (Design–Build–Test–Learn) | Agile Development | PD (Participatory Design) | UCD (User-Centred Design) |
|---|---|---|---|---|
| Focus/Goal | Developing and refining practical tools or frameworks through iterative cycles. | Improving tools through frequent iterations with continuous stakeholder feedback. | Co-creating solutions with stakeholders to ensure relevance and usability. | Designing solutions based on user needs, with continuous feedback shaping the outcome. |
| Core Activities | Design, build, test, learn, and refine continuously. | Develop, release, and gather user feedback iteratively. | Collaborate closely with stakeholders in the design process. | Prototype solutions and refine them based on user input. |
| Primary Output | Functional tools, templates, or systems. | Tools or processes improved through iterative releases. | Usable solutions aligned with stakeholder needs. | User-centred artefacts that meet real-world needs. |
| Stakeholder Involvement | Developers only. | Developers and users. | Developers and users. | Developers and users. |
| Role of Reflection | Reflection occurs during the learning phase to drive future improvements. | Reflection occurs at the end of each sprint to adjust the next iteration. | Reflection is continuous, focusing on user involvement to improve outcomes. | Reflection occurs after each user feedback session to refine prototypes. |
| When to Use | When developing and refining tools or frameworks over time. | When quick feedback and iterative improvement are essential. | When stakeholders must be co-creators throughout the process. | When user needs guide the design and development process. |
| Examples of Application | Developing chatbots or cybersecurity tools through iterative prototyping. | Developing tools or templates through frequent sprints and feedback. | Co-creating templates or tools with students and industry partners. | Designing chatbots with continuous client input and feedback. |
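
The DBTL column above describes a cycle of designing, building, testing, and learning that repeats until the artefact is good enough. A generic sketch of that loop follows. The `dbtl_cycle` function, its callback parameters, the 0.8 score threshold, the iteration cap, and the toy template example are all illustrative assumptions rather than parameters defined by the agenda.

```python
from typing import Callable, TypeVar

Plan = TypeVar("Plan")
Artefact = TypeVar("Artefact")


def dbtl_cycle(
    design: Callable[[list[str]], Plan],
    build: Callable[[Plan], Artefact],
    test: Callable[[Artefact], float],
    learn: Callable[[Artefact, float], str],
    threshold: float = 0.8,
    max_iterations: int = 5,
) -> tuple[Artefact, float, int]:
    """Repeat Design-Build-Test-Learn until the test score reaches the threshold.

    Returns the last artefact, its score, and the number of iterations used.
    """
    if max_iterations < 1:
        raise ValueError("max_iterations must be at least 1")
    lessons: list[str] = []
    for iteration in range(1, max_iterations + 1):
        plan = design(lessons)      # Design: plan the next version using lessons so far
        artefact = build(plan)      # Build: produce the artefact from the plan
        score = test(artefact)      # Test: score it, e.g. a stakeholder review in [0, 1]
        if score >= threshold:
            break
        lessons.append(learn(artefact, score))  # Learn: record what to change next
    return artefact, score, iteration


# Toy usage: a consulting template is "tested" by how many required sections it covers.
REQUIRED = {"scope", "assets", "controls", "incident response"}


def design_outline(lessons: list[str]) -> list[str]:
    return ["scope", *lessons]


def build_template(outline: list[str]) -> set[str]:
    return set(outline)


def score_template(template: set[str]) -> float:
    return len(template & REQUIRED) / len(REQUIRED)


def next_missing_section(template: set[str], score: float) -> str:
    return sorted(REQUIRED - template)[0]


template, score, iterations = dbtl_cycle(design_outline, build_template,
                                         score_template, next_missing_section)
print(iterations, score)
```

Passing each phase in as a function keeps the loop independent of what is being built, so the same structure could describe a chatbot, a template, or the roadmap; only the callbacks change.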

| Methodology | Reflexive DSR | Action DSR | Action Research (AR) | Mixed Methods Research (MMR) |
|---|---|---|---|---|
| Focus/Core Goal | Reflecting on internal processes to generate generalisable knowledge and insights. | Designing artefacts and engaging stakeholders to refine and apply solutions. | Solving practical problems collaboratively with continuous reflection in action. | Generating comprehensive insights by combining qualitative and quantitative data. |
| Core Activities | Analyse, reflect on, and refine internal frameworks and decisions. | Co-create and apply artefacts with stakeholders, reflecting on outcomes. | Apply solutions and reflect within the action cycle. | Collect, integrate, and analyse qualitative and quantitative data. |
| Primary Output | Generalisable frameworks and design principles. | Refined artefacts and practical knowledge. | Practical improvements and process insights. | Balanced insights from both qualitative and quantitative data. |
| Stakeholder Involvement | Developers only. | Developers and users. | Developers and users. | Developers and users. |
| Role of Reflection | Reflection is continuous, focusing on lessons learned from decisions. | Reflection occurs through engagement with stakeholders and application of solutions. | Reflection is integrated into the action process to refine outcomes. | Reflection occurs during data analysis, balancing insights from multiple sources. |
| When to Use | When critical reflection on internal processes is necessary. | When engaging stakeholders to apply and refine solutions. | When solving real-world problems with continuous reflection. | When both qualitative and quantitative data are needed. |
| Examples of Application | Reflecting on the development process of a cybersecurity roadmap. | Testing tools such as chatbots and roadmaps with clients and refining them based on feedback. | Implementing and refining business strategy frameworks for small businesses. | Collecting qualitative and quantitative feedback from clients and students. |

| Aspect | Stage 1: Building the AI Agent | Stage 2: Collaborative Roadmap Creation | Stage 3: Resource Development | Stage 4: Industry Application |
|---|---|---|---|---|
| Purpose | Develop Custom GPT and Knowledge Hub | Develop cybersecurity roadmap and define capstone projects | Create usable resources for the cybersecurity consulting firm | Implement resources in real-world small businesses |
| Human–AI Collaboration | Academics, AI tools, and industry partners collaborate to build the AI agent | Joint development of the roadmap and capstone projects with AI tools and stakeholders | Joint development of resources (templates, chatbot) with AI tools, students, academics, and industry partners | Real-world application, with students working with AI tools and industry partners to apply consulting resources |
| Methodology | DBTL: Iterative development of AI agent and Knowledge Hub | DBTL: Iterative development of roadmap and capstone projects | DBTL: Iterative development of templates, tools, and chatbot, with ongoing feedback | Agile development: Apply resources with real clients and gather feedback |
| Developers | Academics, Industry Partners | Academics, Students, Industry Partners, University Engagement Team | Academics, Students, Industry Partners | Students, Industry Partners |
| Development Outputs | Custom GPT, Knowledge Hub | Cybersecurity Roadmap, Capstone Project Specifications | Templates, Training Programs, Cybersecurity Advisor Chatbot, Cybersecurity Management Systems | Client-specific cybersecurity documents, processes, and systems; refined resources; updated Knowledge Hub |
| Pedagogy Principles | Constructivism: Learning through collaborative building | Experiential Learning: Hands-on involvement in project creation | Collaborative Learning: Co-creation of knowledge and solutions | Reflective Learning: Gaining insights through reflection and feedback |

| Aspect | Stage 1: Building the AI Agent | Stage 2: Collaborative Roadmap Creation | Stage 3: Resource Development | Stage 4: Industry Application |
|---|---|---|---|---|
| Purpose | Develop frameworks for AI agent creation | Create collaborative frameworks for project development | Generate best practices for collaborative resource development | Produce insights into continuous improvement and feedback loops |
| Example RQs | How can cybersecurity frameworks be integrated into an AI agent for SMEs? | How can students and industry partners collaborate on roadmap development? | What practices ensure effective resource development for SMEs? | How does continuous feedback improve resources and processes over time? |
| Human–AI Collaboration | Reflection on how AI tools and industry data integrate within the AI agent | Co-creation of collaborative frameworks with input from students and industry | Evaluation of collaboration and resource co-creation processes with stakeholders | Continuous feedback for refining resources and improving processes |
| Methodology | Reflexive DSR: Reflection on frameworks and input sources | Action DSR: Co-create roadmap and projects with stakeholder input | Reflexive DSR: Evaluate collaborative process and outcomes | Reflexive DSR: Reflect on feedback and refine resources |
| Researchers | Academics, Industry Partners | Academics, Students, Industry Partners | Academics, Students | Students, Industry Partners, Academics |
| Research Outputs (Artefacts) | Framework for AI agent development | Framework for collaborative project creation | Best practices for resource co-creation | Frameworks for continuous feedback and refinement |
| Data Collection | Interviews with academics and industry partners | Workshops and focus groups with students and stakeholders | Observations of collaboration sessions, student reflections | Client feedback, post-implementation reviews, student reflections |
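
In Stage 4, client feedback and post-implementation reviews determine which resources are refined next. The sketch below makes several assumptions. Feedback arrives as hypothetical (resource, rating) pairs on a 1–5 scale, and any resource whose mean rating falls below an illustrative threshold of 3.5 is queued for refinement, worst first. The function name, the threshold, and the sample resource names are not prescribed by the agenda.

```python
from collections import defaultdict
from statistics import mean


def resources_to_refine(feedback: list[tuple[str, int]], threshold: float = 3.5) -> list[str]:
    """Return resources whose mean client rating is below the threshold, lowest first."""
    ratings: dict[str, list[int]] = defaultdict(list)
    for resource, rating in feedback:
        ratings[resource].append(rating)
    averages = {resource: mean(values) for resource, values in ratings.items()}
    flagged = [resource for resource, avg in averages.items() if avg < threshold]
    return sorted(flagged, key=lambda resource: averages[resource])


# Illustrative feedback only; no real client data is implied.
feedback = [
    ("Incident response template", 4),
    ("Incident response template", 5),
    ("Cybersecurity Advisor Chatbot", 3),
    ("Cybersecurity Advisor Chatbot", 2),
    ("Staff training program", 3),
    ("Staff training program", 3),
]
print(resources_to_refine(feedback))
```

Sorting the flagged resources by mean rating gives a simple priority order for the next refinement cycle. In practice, qualitative comments from reviews and student reflections would sit alongside these scores.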

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chan, K.C.; Gururajan, R.; Carmignani, F. A Human–AI Collaborative Framework for Cybersecurity Consulting in Capstone Projects for Small Businesses. J. Cybersecur. Priv. 2025, 5, 21. https://doi.org/10.3390/jcp5020021

Chan KC, Gururajan R, Carmignani F. A Human–AI Collaborative Framework for Cybersecurity Consulting in Capstone Projects for Small Businesses. Journal of Cybersecurity and Privacy. 2025; 5(2):21. https://doi.org/10.3390/jcp5020021

Chicago/Turabian Style: Chan, Ka Ching, Raj Gururajan, and Fabrizio Carmignani. 2025. "A Human–AI Collaborative Framework for Cybersecurity Consulting in Capstone Projects for Small Businesses" Journal of Cybersecurity and Privacy 5, no. 2: 21. https://doi.org/10.3390/jcp5020021

APA Style: Chan, K. C., Gururajan, R., & Carmignani, F. (2025). A Human–AI Collaborative Framework for Cybersecurity Consulting in Capstone Projects for Small Businesses. Journal of Cybersecurity and Privacy, 5(2), 21. https://doi.org/10.3390/jcp5020021