Smart Edge Computing Framework for Real-Time Brinjal Harvest Decision Optimization

Abstract

1. Introduction

2. Materials and Methods

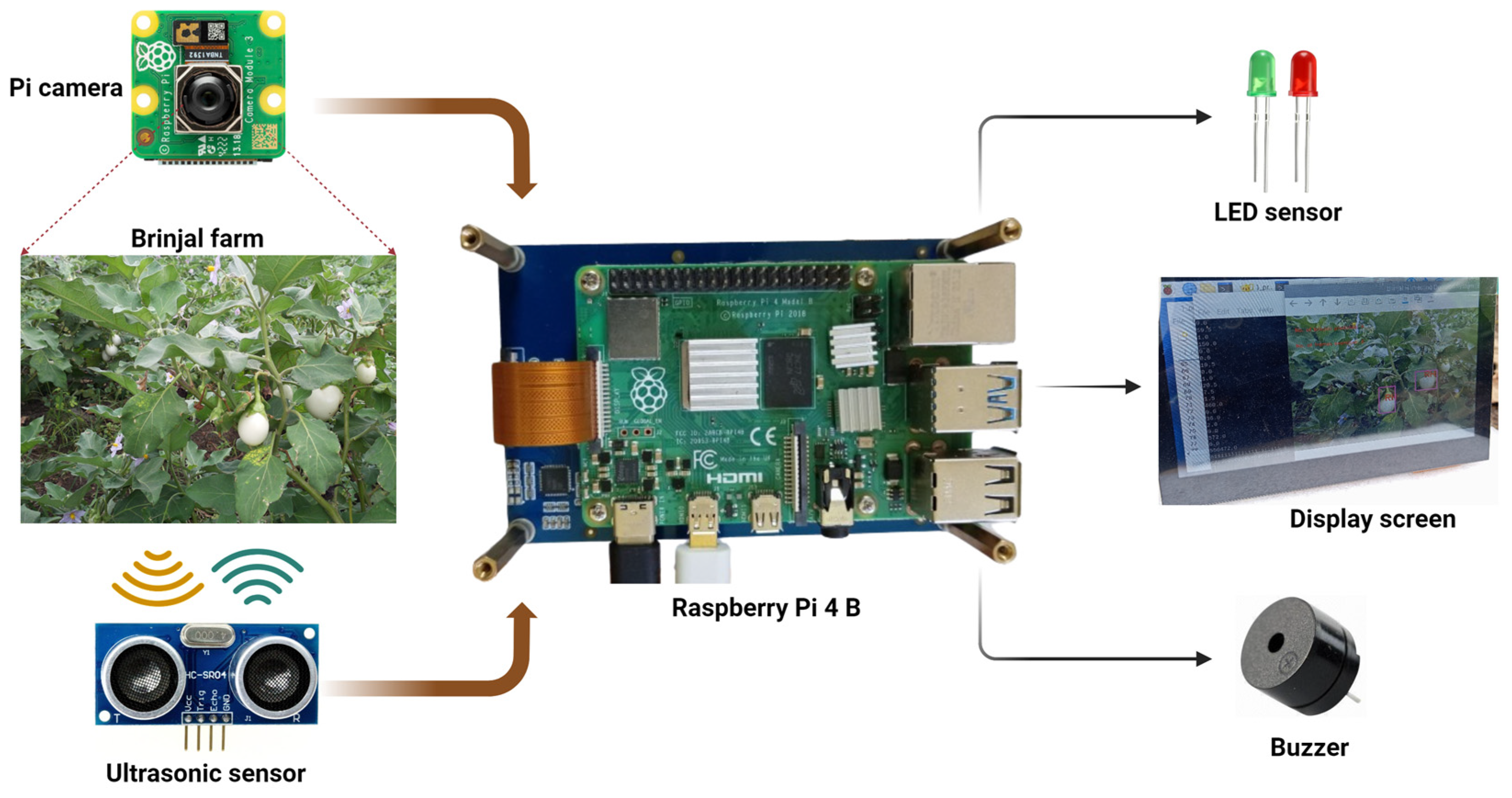

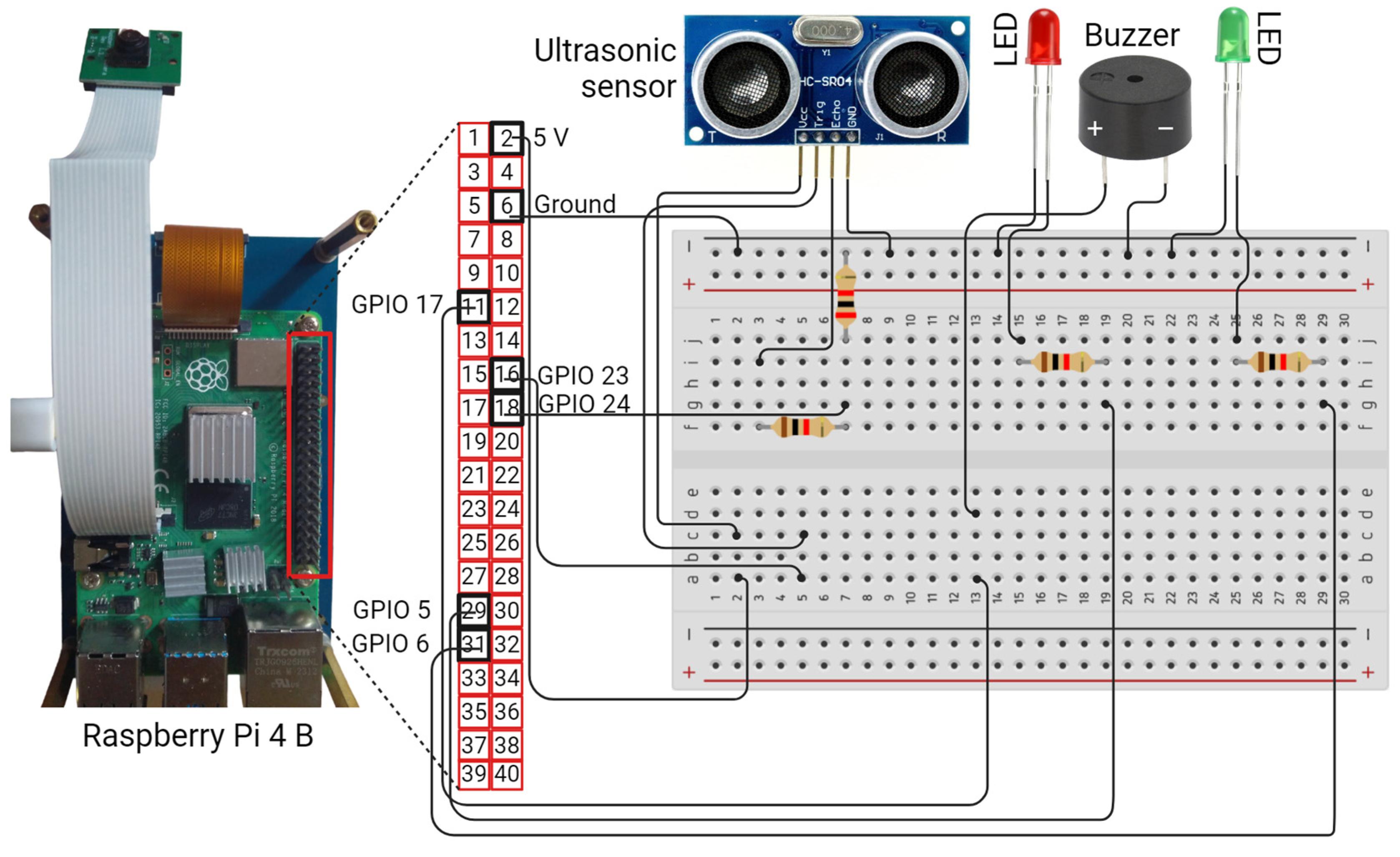

2.1. BHDS Prototype

2.2. Automated Image Acquisition

2.2.1. Determination of Distance

2.2.2. Computation of Focus Measure

Algorithm 1. Focus measure threshold calculation

Input: Video frames from the Pi camera

Output: Threshold value ‘fm’

Steps:

1. Initialize focus_measure = 0;
2. For i = 1 to 25:
3. If (time elapsed = 10 s) and (30 cm <= distance between object and camera <= 50 cm):
4. image = current frame;
5. focus_measure = focus_measure + Laplacian(image);
6. End If;
7. End For;
8. fm = focus_measure/25;
9. Return fm.
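Algorithm 1 can be sketched in Python as follows. This is a minimal, dependency-free illustration and not the authors' implementation. It assumes that `Laplacian(image)` denotes the variance of the Laplacian response (a common sharpness score), that frames arrive as 2-D grayscale arrays paired with the ultrasonic distance in cm, and that averaging runs until 25 in-range frames have been seen. The names `laplacian_variance`, `focus_threshold`, and `samples` are hypothetical, and the 10 s sampling interval is left to whatever produces `samples`.

```python
from statistics import pvariance
from typing import Iterable, Sequence, Tuple

Gray = Sequence[Sequence[float]]  # 2-D grayscale image as rows of intensities


def laplacian_variance(gray: Gray) -> float:
    """Variance of the 4-neighbour Laplacian over interior pixels (assumed focus score)."""
    h, w = len(gray), len(gray[0])
    responses = [
        gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1] - 4 * gray[y][x]
        for y in range(1, h - 1)
        for x in range(1, w - 1)
    ]
    return float(pvariance(responses))


def focus_threshold(
    samples: Iterable[Tuple[Gray, float]],
    n_frames: int = 25,
    min_cm: float = 30.0,
    max_cm: float = 50.0,
) -> float:
    """Average the focus score of the first n_frames frames taken within range.

    `samples` yields (gray_frame, distance_cm) pairs, one per sampling interval.
    """
    total = 0.0
    used = 0
    for gray, distance_cm in samples:
        if min_cm <= distance_cm <= max_cm:  # distance window from Algorithm 1
            total += laplacian_variance(gray)
            used += 1
            if used == n_frames:
                return total / n_frames
    raise ValueError("fewer than n_frames in-range frames were supplied")
```

On the Raspberry Pi itself, an OpenCV call such as `cv2.Laplacian(gray, cv2.CV_64F).var()` would likely replace the pure-Python kernel for speed.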

2.2.3. Good-Quality Image Acquisition

2.3. Brinjal Detection

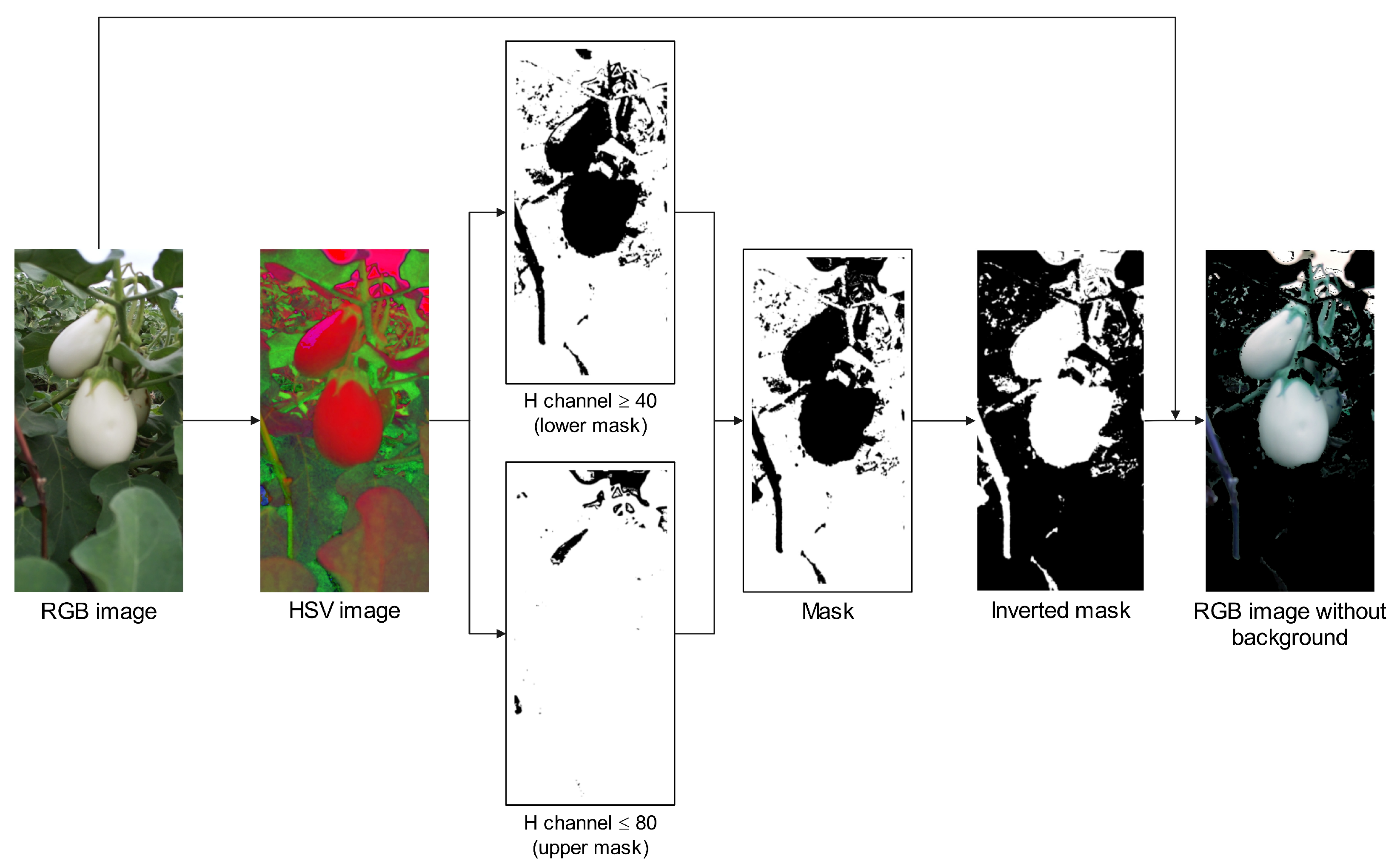

2.3.1. Elimination of Background

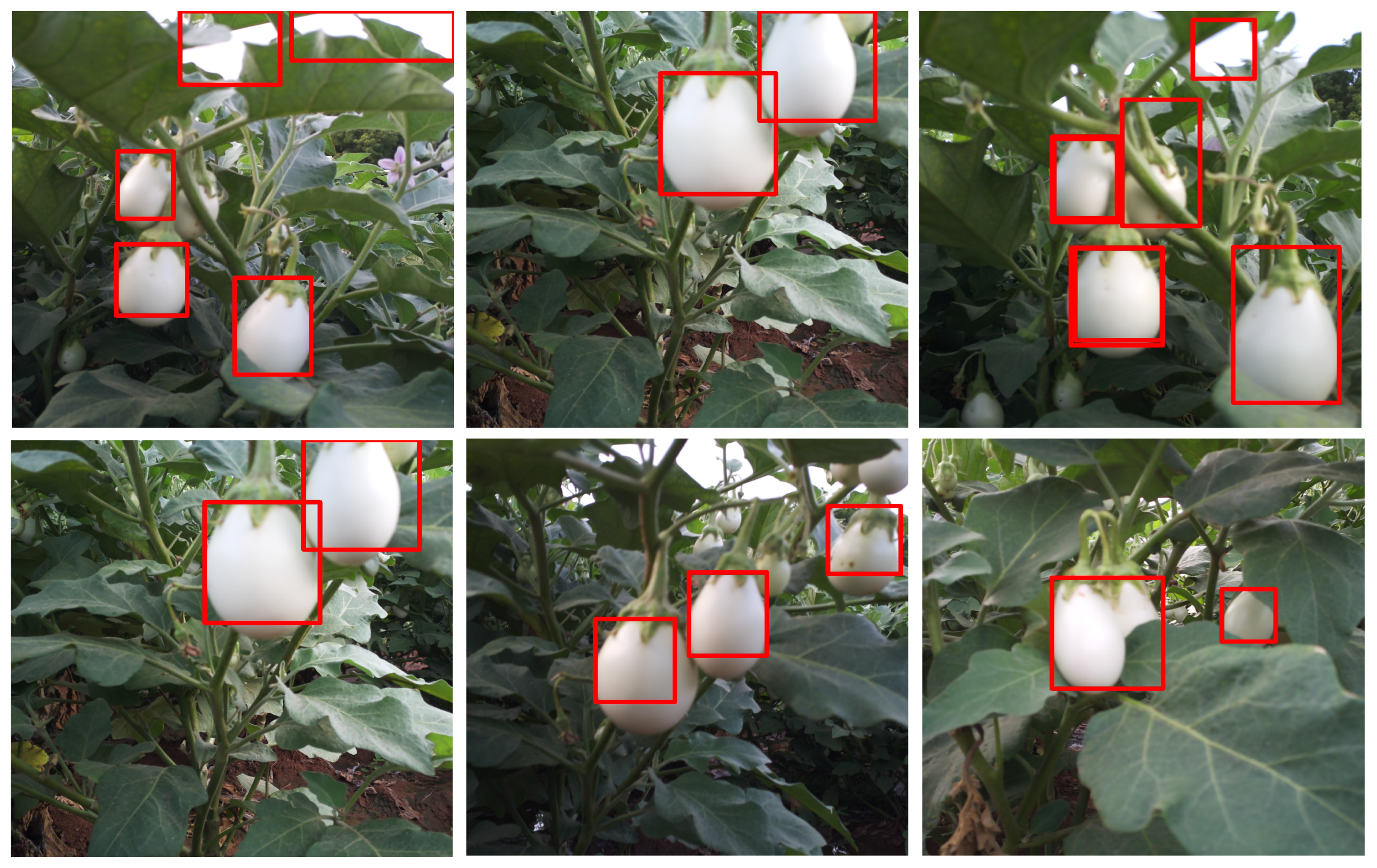

2.3.2. Removal of Non-Interested Regions

2.3.3. Clustering Pixels to Detect Brinjals

2.4. Training Data Acquisition and Brinjal Area Calculation

Algorithm 2. Training data collection and area calculation

Input: Video frames from the Pi camera and receiver response time from the ultrasonic sensor.

Output: b_area.csv file.

Steps:

1. Initialize image_count = 0;
2. While (image_count < 50):
3. Capture a valid image using the method in Section 2.2.3;
4. Detect the brinjals using the method in Section 2.3;
5. If (number of brinjals > 0):
6. For (each brinjal):
7. Calculate the area of the brinjal and save it in the b_area.csv file;
8. End For;
9. Increase image_count by 1;
10. Else:
11. Go to step 2;
12. End If;
13. End While;
14. Return b_area.csv.
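The control flow of Algorithm 2 can be sketched as below. This is an illustration under stated assumptions, not the authors' code: `capture_valid_image` and `detect_brinjals` are hypothetical callables standing in for Sections 2.2.3 and 2.3, each detected brinjal is assumed to be available as an ordered list of contour vertices, and the area is computed in pixel units with the shoelace formula. How the ultrasonic reading enters the area calculation is not specified in the algorithm, so it is left out here.

```python
import csv
from typing import Any, Callable, List, Sequence, Tuple

Point = Tuple[float, float]
Contour = Sequence[Point]


def polygon_area(contour: Contour) -> float:
    """Shoelace area of a closed polygon given as ordered (x, y) vertices."""
    twice_area = 0.0
    closed = list(contour[1:]) + [contour[0]]  # pair each vertex with its successor
    for (x1, y1), (x2, y2) in zip(contour, closed):
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def collect_areas(
    capture_valid_image: Callable[[], Any],
    detect_brinjals: Callable[[Any], List[Contour]],
    csv_path: str = "b_area.csv",
    target_images: int = 50,
) -> int:
    """Write one CSV row per detected brinjal until target_images images contain brinjals."""
    image_count = 0
    rows_written = 0
    with open(csv_path, "w", newline="") as fh:
        writer = csv.writer(fh)
        writer.writerow(["image_index", "area_px"])  # column names are illustrative
        while image_count < target_images:
            image = capture_valid_image()
            contours = detect_brinjals(image)
            if not contours:
                continue  # no brinjal found: capture again (step 11)
            for contour in contours:
                writer.writerow([image_count, polygon_area(contour)])
                rows_written += 1
            image_count += 1
    return rows_written
```

Only images that contain at least one brinjal advance `image_count`, which mirrors the `Else: Go to step 2` branch of the algorithm.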

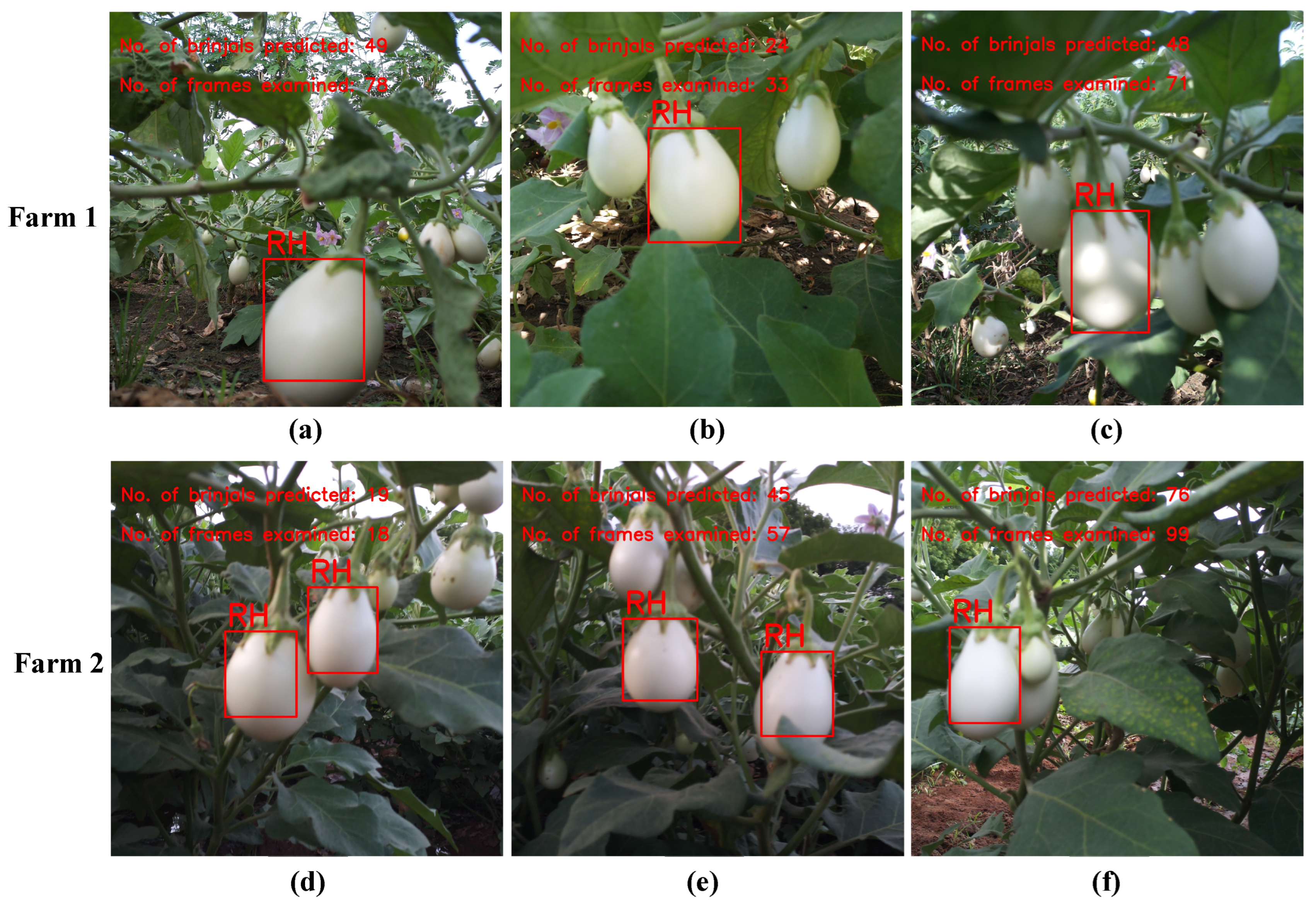

2.5. Brinjal Maturity Prediction

Algorithm 3. Predict the ready-to-harvest brinjal

Input: Frames from the Pi camera, receiver response time from the ultrasonic sensor, and the threshold values ‘min_h’ and ‘max_h’ used to predict the ready-to-harvest brinjal.

Output: Ready-to-harvest brinjals marked with bounding boxes, and the total number of brinjals ready to harvest.

Steps:

1. RH_count = 0;
2. Do:
3. Capture a valid image using the method in Section 2.2.3;
4. Detect the brinjals in the image using the brinjal detection method derived in Section 2.3;
5. For (each brinjal in the image):
6. Calculate the area;
7. If (min_h <= area of the brinjal <= max_h):
8. Mark the brinjal “READY to HARVEST”;
9. Increase RH_count by 1;
10. Else:
11. Ignore the brinjal;
12. End If;
13. End For;
14. Until (the entire farm is navigated to capture the images);
15. Return RH_count.
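The decision rule at the core of Algorithm 3 is a closed-interval test on the brinjal area. The sketch below isolates that rule; it assumes the areas have already been computed per image, and the function name `count_ready_to_harvest` and the returned index pairs are hypothetical stand-ins for the bounding-box marking step.

```python
from typing import Iterable, List, Sequence, Tuple


def count_ready_to_harvest(
    areas_per_image: Iterable[Sequence[float]],
    min_h: float,
    max_h: float,
) -> Tuple[int, List[Tuple[int, int]]]:
    """Return RH_count and the (image_index, brinjal_index) pairs marked as ready."""
    rh_count = 0
    marked: List[Tuple[int, int]] = []
    for image_index, areas in enumerate(areas_per_image):
        for brinjal_index, area in enumerate(areas):
            if min_h <= area <= max_h:  # step 7: area inside the harvest window
                marked.append((image_index, brinjal_index))
                rh_count += 1
            # otherwise the brinjal is ignored (step 11)
    return rh_count, marked
```

The thresholds are passed in as inputs, as in the algorithm; the example values used to exercise the function would be arbitrary and carry no agronomic meaning.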

2.6. Hardware and Software Configurations

2.7. Evaluation Metrics

2.8. Roboflow 3.0 Configuration for Brinjal Detection

3. Experimental Results

3.1. Field Evaluation Sites and Conditions

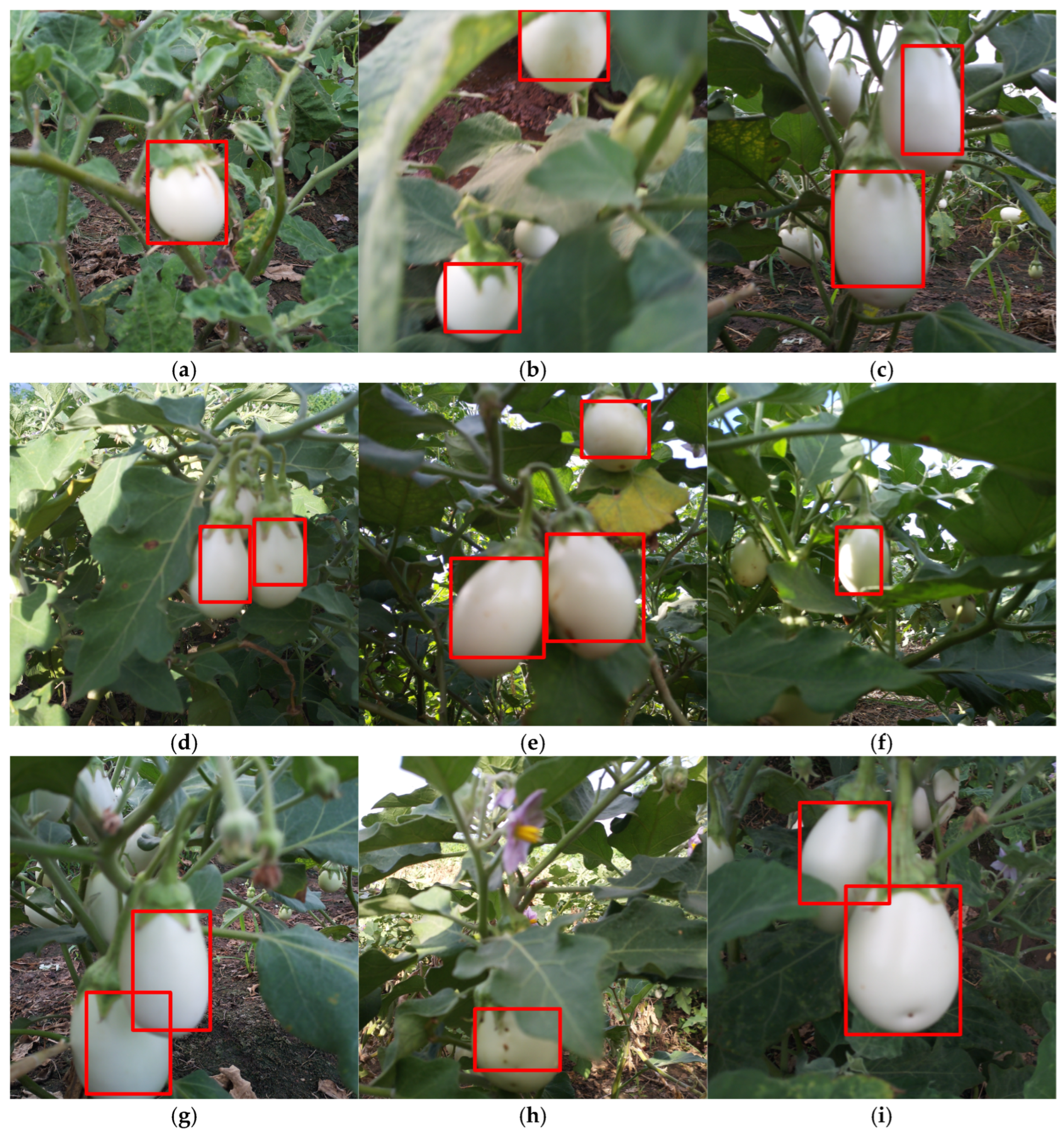

3.2. Evaluation of Brinjal Detection

3.3. Accuracy of Training Image Acquisition

3.4. Performance of Prediction

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Sandhya, K. India: Production Volume of Eggplant 2023. Statista. Available online: https://www.statista.com/statistics/1038975/india-production-of-eggplant/ (accessed on 1 August 2024).

- Peng, J.; Zhao, Z.; Liu, D. Impact of agricultural mechanization on agricultural production, income, and mechanism: Evidence from Hubei province, China. Front. Environ. Sci. 2022, 10, 838686.

- Kahya, E.; Ozduven, F.F.; Aslan, Y. YOLOv5 model application in real-time robotic eggplant harvesting. J. Agric. Sci. 2024, 16, 9.

- Zeng, T.; Li, S.; Song, Q.; Zhong, F.; Wei, X. Lightweight tomato real-time detection method based on improved YOLO and mobile deployment. Comput. Electron. Agric. 2023, 205, 107625.

- Jiao, Z.; Huang, K.; Wang, Q.; Zhong, Z.; Cai, Y. Real-time litchi detection in complex orchard environments: A portable, low-energy edge computing approach for enhanced automated harvesting. Artif. Intell. Agric. 2024, 11, 13–22.

- Tang, Y.; Chen, M.; Wang, C.; Luo, L.; Li, J.; Lian, G.; Zou, X. Recognition and localization methods for vision-based fruit picking robots: A review. Front. Plant Sci. 2020, 11, 510.

- Wan, S.; Goudos, S. Faster R-CNN for multi-class fruit detection using a robotic vision system. Comput. Netw. 2020, 168, 107036.

- Zhuang, J.J.; Luo, S.M.; Hou, C.J.; Tang, Y.; He, Y.; Xue, X.Y. Detection of orchard citrus fruits using a monocular machine vision-based method for automatic fruit picking applications. Comput. Electron. Agric. 2018, 152, 64–73.

- Song, Y. Application of image recognition technology in agriculture. In Proceedings of the International Conference on Electrical Engineering and Intelligent Control (EEIC 2024), Singapore, 11–13 October 2024; pp. 339–342.

- Dorj, U.O.; Lee, M.; Yun, S.S. An yield estimation in citrus orchards via fruit detection and counting using image processing. Comput. Electron. Agric. 2017, 140, 103–112.

- Malik, M.H.; Zhang, T.; Li, H.; Zhang, M.; Shabbir, S.; Saeed, A. Mature tomato fruit detection algorithm based on improved HSV and Watershed algorithm. IFAC-PapersOnLine 2018, 51, 431–436.

- Tu, S.; Xue, Y.; Zheng, C.; Qi, Y.; Wan, H.; Mao, L. Detection of passion fruits and maturity classification using Red-Green-Blue Depth images. Biosyst. Eng. 2018, 175, 156–167.

- Li, T.; Fang, W.; Zhao, G.; Gao, F.; Wu, Z.; Li, R.; Fu, L.; Dhupia, J. An improved binocular localization method for apple based on fruit detection using deep learning. Inf. Process. Agric. 2023, 10, 276–287.

- Yu, T.; Hu, C.; Xie, Y.; Liu, J.; Li, P. Mature pomegranate fruit detection and location combining improved F-PointNet with 3D point cloud clustering in orchard. Comput. Electron. Agric. 2022, 200, 107233.

- Suo, R.; Gao, F.; Zhou, Z.; Fu, L.; Song, Z.; Dhupia, J.; Li, R.; Cui, Y. Improved multi-classes kiwifruit detection in orchard to avoid collisions during robotic picking. Comput. Electron. Agric. 2021, 182, 106052.

- Rong, J.; Zhou, H.; Zhang, F.; Yuan, T.; Wang, P. Tomato cluster detection and counting using improved YOLOv5 based on RGB-D fusion. Comput. Electron. Agric. 2023, 207, 107741.

- Li, T.; Sun, M.; He, Q.; Zhang, G.; Shi, G.; Ding, X.; Lin, S. Tomato recognition and location algorithm based on improved YOLOv5. Comput. Electron. Agric. 2023, 208, 107759.

- Guo, J.; Yang, Y.; Lin, X.; Memon, M.S.; Liu, W.; Zhang, M.; Sun, E. Revolutionizing agriculture: Real-time ripe tomato detection with the enhanced tomato-YOLOv7 system. IEEE Access 2023, 11, 133086–133098.

- Hou, G.; Chen, H.; Ma, Y.; Jiang, M.; Hua, C.; Jiang, C.; Niu, R. An occluded cherry tomato recognition model based on improved YOLOv7. Front. Plant Sci. 2023, 14, 1260808.

- Wang, A.; Qian, W.; Li, A.; Xu, Y.; Hu, J.; Xie, Y.; Zhang, L. NVW-YOLOv8s: An improved YOLOv8s network for real-time detection and segmentation of tomato fruits at different ripeness stages. Comput. Electron. Agric. 2024, 219, 108833.

- Hayashi, S.; Ganno, K.; Ishii, Y. Machine vision algorithm of eggplant recognition for robotic harvesting. J. Soc. High Technol. Agric. 2000, 12, 38–46.

- Hayashi, S.; Ganno, K.; Ishii, Y.; Tanaka, I. Robotic harvesting system for eggplants. Jpn. Agric. Res. Q. 2002, 36, 163–168.

- Chong, V.K.; Monta, M.; Ninomiya, K.; Kondo, N.; Namba, K.; Terasaki, E.; Nishi, T.; Goto, T. Development of mobile eggplant grading robot for dynamic in-field variability sensing: Manufacture of robot and performance test. Eng. Agric. Environ. Food 2008, 1, 68–76.

- Jian, S. Research on image-based fuzzy visual servo for picking robot. In Proceedings of the International Conference on Computer and Computing Technologies in Agriculture, Boston, MA, USA, 18 October 2008; pp. 751–760.

- Miraei Ashtiani, S.H.; Golzarian, M.R.; Baradaran Motie, J.; Emadi, B.; Nikoo Jamal, N.; Mohammadinezhad, H. Effect of loading position and storage duration on the textural properties of eggplant. Int. J. Food Prop. 2016, 19, 814–825.

- Tamilarasi, T.; Muthulakshmi, P. Machine vision algorithm for detection and maturity prediction of Brinjal. Smart Agric. Technol. 2024, 7, 100402.

- Top Computer Vision Opportunities and Challenges for 2024. Available online: https://medium.com/sciforce/top-computer-vision-opportunities-and-challenges-for-2024-31a238cb9ff2 (accessed on 2 August 2024).

- Valente, J.; António, J.; Mora, C.; Jardim, S. Developments in image processing using deep learning and reinforcement learning. J. Imaging 2023, 9, 207.

- Wakchaure, G.C.; Nikam, S.B.; Barge, K.R.; Kumar, S.; Meena, K.K.; Nagalkar, V.J.; Choudhari, J.D.; Kad, V.P.; Reddy, K.S. Maturity stages detection prototype device for classifying custard apple (Annona squamosa L.) fruit using image processing approach. Smart Agric. Technol. 2024, 7, 100394.

- Sepulveda, D.; Fernandez, R.; Navas, E.; Armada, M.; Gonzalez-De-Santos, P. Robotic aubergine harvesting using dual-arm manipulation. IEEE Access 2020, 8, 121889–121904.

- Eskicioglu, A.M.; Fisher, P.S.; Chen, S. Image quality measures and their performance. IEEE Trans. Commun. 1995, 43, 2959–2965.

- Shiddiq, M.; Arief, D.S.; Defrianto; Dasta, V.V.; Panjaitan, D.M.; Saputra, D. Counting of oil palm fresh fruit bunches using computer vision. J. Oil Palm Res. 2023, 35, 111–120.

- MacQueen, J. Some methods for classification and analysis of multivariate observations. In Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability, Volume 1: Statistics; University of California Press: Berkeley, CA, USA, 1967; pp. 281–297.

- Douglas, D.H.; Peucker, T.K. Algorithms for the reduction of the number of points required to represent a digitized line or its caricature. Cartographica 1973, 10, 112–122.

- Ramer, U. An iterative procedure for the polygonal approximation of plane curves. Comput. Graph. Image Process. 1972, 1, 244–256.

- Zhou, X.; Lee, W.S.; Ampatzidis, Y.; Chen, Y.; Peres, N.; Fraisse, C. Strawberry maturity classification from UAV and near-ground imaging using deep learning. Smart Agric. Technol. 2021, 1, 100001.

- Parr, B.; Legg, M.; Alam, F. Grape yield estimation with a smartphone’s colour and depth cameras using machine learning and computer vision techniques. Comput. Electron. Agric. 2023, 213, 108174.

- Zhang, X.; Toudeshki, A.; Ehsani, R.; Li, H.; Zhang, W.; Ma, R. Yield estimation of citrus fruit using rapid image processing in natural background. Smart Agric. Technol. 2022, 2, 100027.

- Mayuri, A.V.R.; Manoharan, R.K.; Subramani, N.; Aridoss, M.; Galety, M.G. Robust facial expression recognition using an evolutionary algorithm with a deep learning model. Appl. Sci. 2023, 13, 468.

- Roboflow Everything You Need to Build and Deploy Computer Vision Models. Available online: https://roboflow.com (accessed on 24 August 2024).

- Carvalho, J.; Cunha, L.; Pinto, S.; Gomes, T. FESTA: FPGA-enabled ground segmentation technique for automotive LiDAR. IEEE Sens. J. 2024, 24, 38005–38014.

- Liang, C.; Xiong, J.; Zheng, Z.; Zhong, Z.; Li, Z.; Chen, S.; Yang, Z. A visual detection method for nighttime litchi fruits and fruiting stems. Comput. Electron. Agric. 2020, 169, 105192.

- Badgujar, C.M.; Poulose, A.; Gan, H. Agricultural object detection with You Only Look Once (YOLO) Algorithm: A bibliometric and systematic literature review. Comput. Electron. Agric. 2024, 223, 109090.

- Nahiduzzaman, M.; Sarmun, R.; Khandakar, A.; Faisal, M.A.; Islam, M.S.; Alam, M.K.; Rahman, T.; Al-Emadi, N.; Murugappan, M.; Chowdhury, M.E. Deep learning-based real-time detection and classification of tomato ripeness stages using yolov8 on Raspberry Pi. Eng. Res. Express 2025, 7, 015219.

| Model | Dataset | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| Proposed method | Farm 1 | 88.59 | 86.44 | 87.50 |
| Proposed method | Farm 2 | 86.36 | 88.78 | 87.55 |
| Method from [26] | Farm 1 | 92.24 | 82.94 | 87.34 |
| Method from [26] | Farm 2 | 74.00 | 88.09 | 80.43 |
| YOLO8 | Farm 1 | 74.10 | 96.40 | 83.79 |
| YOLO8 | Farm 2 | 59.30 | 62.90 | 61.05 |
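The F1-scores in this table can be checked against the standard definition of F1 as the harmonic mean of precision and recall. The snippet below is a small verification sketch; the helper name `f1_score` and the dictionary layout are illustrative choices, and the numbers are copied from the table above.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both in the same units, here %)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision %, recall %) pairs as reported in the comparison table
reported = {
    ("Proposed method", "Farm 1"): (88.59, 86.44),
    ("Proposed method", "Farm 2"): (86.36, 88.78),
    ("Method from [26]", "Farm 1"): (92.24, 82.94),
    ("Method from [26]", "Farm 2"): (74.00, 88.09),
    ("YOLO8", "Farm 1"): (74.10, 96.40),
    ("YOLO8", "Farm 2"): (59.30, 62.90),
}

# Recompute F1 for every row, rounded to two decimals as in the table
recomputed = {key: round(f1_score(p, r), 2) for key, (p, r) in reported.items()}

for (model, farm), value in recomputed.items():
    print(f"{model:18s} {farm}: F1 = {value:.2f}%")
```

Recomputing each row this way reproduces the F1-Score column to two decimal places.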

Average time (s):

| Method | Farm | RoIT | C | CB | LB | Total |
|---|---|---|---|---|---|---|
| Proposed | Farm 1 | 0.34 | 1.82 | 0.86 | 0.03 | 3.05 |
| Proposed | Farm 2 | 0.31 | 2.89 | 0.8 | 0.04 | 4.04 |
| [26] | Farm 1 | - | 22.83 | 0.22 | 0.15 | 23.2 |
| [26] | Farm 2 | - | 22.11 | 0.26 | 0.14 | 22.51 |

| Farm | Captured Images | Curated Images | NPI | TDCAR (%) | Error Rate (%) | Avg. Time (s) |
|---|---|---|---|---|---|---|
| Farm 1 | 91 | 25 | 24 | 98 | 2 | 2.33 |
| Farm 1 | 113 | 50 | 48 | 96 | 4 | 2.15 |
| Farm 1 | 162 | 75 | 71 | 94.66 | 5.34 | 2.23 |
| Farm 1 | 252 | 100 | 95 | 95 | 5 | 2.34 |
| Farm 2 | 120 | 50 | 47 | 94 | 6 | 3.44 |

| Test Field | Ground Truth | Actual Detection | True Detection | Missed Detection | False Detection | TRHDR (%) | MRHDR (%) | FRHDR (%) |
|---|---|---|---|---|---|---|---|---|
| Farm 1 | 52 | 54 | 47 | 5 | 7 | 90.38 | 9.61 | 12.96 |
| Farm 2 | 65 | 76 | 58 | 7 | 11 | 89.23 | 10.76 | 14 |

| Study | Sensors Used | Hardware Platform | Detection Method | Target Fruit | Results |
|---|---|---|---|---|---|
| [29] | - | Raspberry Pi 4B | SVM | Custard Apple | 100% accuracy |
| [30] | Prosilica GC2450C, Mesa SwissRanger | Intel i7-4790 | SVM | Brinjal | 88.35% precision; 88.10% recall |
| [44] | Pi camera | Raspberry Pi 4B | YOLOv8 | Tomato | 73.5% precision; 76.9% recall; 80.8% mAP@50 |
| This work | Pi camera, ultrasonic sensor, LEDs, buzzer | Raspberry Pi 4B | K-means clustering, region merging, symmetry analysis | Brinjal | 87.48% precision; 87.61% recall; 87.53% F1-score |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tamilarasi, T.; Muthulakshmi, P.; Ashtiani, S.-H.M. Smart Edge Computing Framework for Real-Time Brinjal Harvest Decision Optimization. AgriEngineering 2025, 7, 196. https://doi.org/10.3390/agriengineering7060196
