1. Introduction

Food security is the overarching global concern for food availability, access, and affordability: a condition in which every member of society has adequate food supplies at affordable prices, or has the means to acquire food from elsewhere when it is scarce locally. United Nations projections put the global population above 10 billion by the middle of this century, which will require a 70% increase in agricultural productivity to meet rising food demand [1,2]. Such an increase is made even more challenging by the many setbacks facing contemporary agriculture, ranging from climate change and water shortage to soil fertility loss, biotic and abiotic stresses such as pests and diseases, and extreme weather [3,4]. These pressures reduce crop yields and, in developing countries where agriculture is the main livelihood of most farmers, widen disparities in wealth.

Typical methods of monitoring crop health, such as manual inspections and laboratory analyses, are labor-intensive, time-consuming, and often not scalable. Moreover, these techniques provide neither timely nor accurate insights into crop conditions, making them insufficient for addressing fast-moving, large-scale agricultural challenges [5]. For instance, global estimates indicate that pests and diseases cause production losses of approximately 20–40% annually, resulting in heavy economic losses [6].

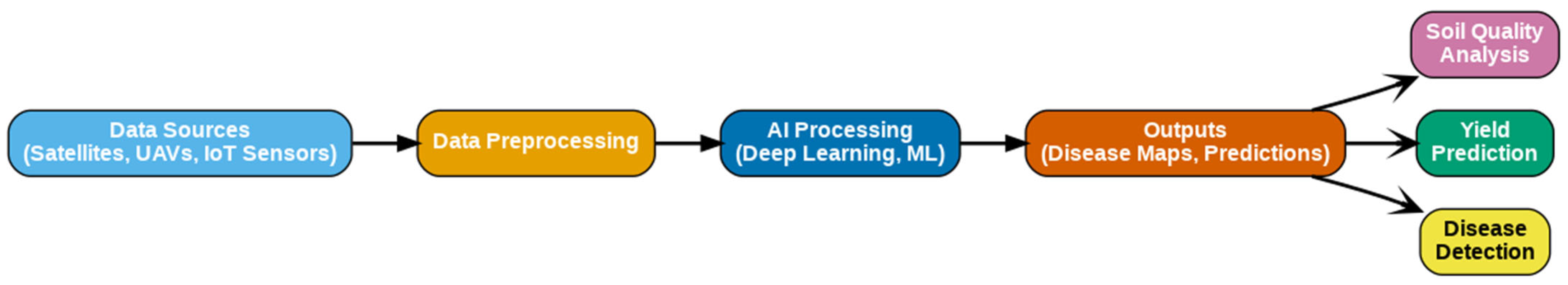

The workflow of agricultural data collection and analysis, as outlined in Figure 1, integrates AI into the successive stages of agricultural data processing. Raw data from sources such as satellite imagery, UAVs, and IoT sensors first passes through a crucial preprocessing stage, in which cleaning and augmentation improve its quality and usability. The processed data is then analyzed using AI techniques such as machine learning and deep learning, providing precise and actionable insights. The resulting outputs include disease maps, yield predictions, and soil quality analyses. Examining the applications of AI in agriculture for disease detection, soil analysis, and yield forecasting [7] further emphasizes the capability and influence of AI-driven solutions in modern farming.

Recent advancements in artificial intelligence and machine learning have led to new techniques for monitoring crop health accurately and at scale. By combining these technologies with remote sensing and UAV imaging, precision agriculture is now possible, enabling a better balance between resource use and output with less environmental impact [8]. Most of these technologies rely on deep learning models such as CNNs, which have been widely used for detection and classification. Such models have also been used in yield estimation, as they are highly capable of learning and extracting complex patterns from multidimensional image data. However, CNNs are inherently local feature extractors, and they need to be coupled with additional architectures and approaches to capture spatial–temporal information and relationships among features across large, widely distributed agricultural fields [9]. In such cases, Vision Transformers (ViTs) have attracted attention as an alternative approach, using self-attention to capture long-range dependencies in images. Most recently, ViTs have become state-of-the-art methods for applications that require long-range, global pattern identification, such as large-scale crop stress detection or field anomaly mapping [8]. Graph Neural Networks (GNNs) extend this by modeling spatial relationships and interdependencies across distinct regions within an image, allowing a richer analysis of phenomena at the field scale [10].

Several modern techniques have been developed for agricultural monitoring. For example, various CNN-based models, including ResNet and EfficientNet, have been used for leaf-level disease classification, with excellent accuracy on standard datasets like PlantVillage [11,12]. Transformer-based models, such as Vision Transformers (ViTs), have significantly improved the analysis of aerial and satellite imagery, providing insights at large scales in agriculture [13]. Additionally, hybrid methods that combine UAV imaging with deep learning have demonstrated promise in applications such as weed detection and anomaly classification in fields [9,14]. Graph-based modeling using GNNs has also been applied across several crops, capturing spatial relationships and interdependencies to enhance the robustness of predictions [13].

In recent years, hybrid deep learning architectures have begun to emerge in agricultural image analysis. For example, Zeng et al. (2025) introduced a CNN–Transformer model that integrates convolutional feature extraction with self-attention mechanisms, improving the robustness of plant disease detection under challenging conditions [15]. Likewise, graph-based techniques have been explored: a 2025 study proposed a dual-branch convolutional Graph Attention Network for rice leaf disease classification, which achieved over 98% accuracy by combining CNN-extracted features with Graph Neural Network reasoning [16]. These approaches demonstrate the potential of using multiple network types to address the variability and complexity of agricultural data.

Parallel developments in remote sensing further underscore the value of hybrid models. A graph-infused Vision Transformer architecture was recently designed for hyperspectral image classification, combining transformer-based global feature learning with GNN-based spatial context modeling [17]. In another study, Vision Transformer features from aerial imagery were fed into a GNN to segment agricultural fields, effectively leveraging self-attention for feature extraction and graph learning for spatial segmentation [18]. These examples illustrate how attention mechanisms and graph modeling can complement CNNs in capturing both long-range dependencies and spatial relationships in imagery.

However, most existing methods still fail to incorporate local and global contextual information sufficiently. Additionally, many of these models are non-interpretable, rendering them untrustworthy and unactionable for farmers and stakeholders. Improvements in the above-mentioned aspects are essential for developing precision agriculture solutions further.

AI techniques have achieved remarkable advances in agricultural monitoring; however, many areas remain to be explored. One of the key challenges is handling heterogeneous datasets, which vary in resolution, modality (e.g., RGB, hyperspectral, multispectral), and scale (leaf-level vs. field-level imagery). In addition, the high computational complexity of deep learning models, particularly ViTs and GNNs, may prevent their deployment in resource-constrained settings [13]. Another serious challenge is the lack of explainability in AI models, which limits their application, as end-users need to understand a prediction before acting on it.

This paper addresses these challenges through AgroVisionNet, a hybrid deep learning framework that combines the strengths of CNNs, ViTs, and GNNs. The approach uses multi-resolution fusion to integrate local features extracted by CNNs with the global context captured by ViTs and the spatial relationships modeled by GNNs. The contributions of this work are as follows:

Hybrid Architecture: Integration of CNNs, ViTs, and GNNs for comprehensive analysis of agricultural images at multiple scales.

Explainability: Incorporating explainable AI techniques to obtain interpretable insights related to actionable recommendations for farmers and stakeholders.

Scalability: Evaluation across varied datasets such as PlantVillage and Agriculture-Vision demonstrates the adaptability of the proposed model across different agricultural scenarios.

Performance: Improving on state-of-the-art accuracy, robustness, and efficiency in crop disease detection and field anomaly identification.

To the best of our knowledge, no existing framework in precision agriculture simultaneously integrates CNNs, ViTs, and GNNs; thus, AgroVisionNet offers one of the first triple-hybrid architectures combining local, global, and relational modeling in this domain.

Recent studies demonstrate partial progress toward hybrid deep learning in agriculture and remote sensing but stop short of integrating all three components. For instance, Zeng et al. (2025) introduced CMTNet, a CNN–Transformer hybrid that leverages convolutional feature extraction with attention mechanisms to improve hyperspectral crop classification, yet it does not incorporate graph-based reasoning [19]. Similarly, a 2025 work proposed EHCTNet, a CNN–Vision Transformer model tailored for remote sensing change detection, demonstrating strong performance in capturing long-range dependencies but, again, lacking a relational learning component [20]. On the other hand, an interpretable CNN–GNN model was presented for soybean disease detection, which successfully integrates local features and spatial graph reasoning but does not exploit transformer-based global context modeling [21]. These examples illustrate that while CNN + ViT and CNN + GNN hybrids have begun to emerge, a unified CNN–ViT–GNN framework has not yet been realized in agricultural applications, underscoring the novelty of AgroVisionNet.

AgroVisionNet is evaluated on benchmark datasets, such as PlantVillage and Agriculture-Vision, covering various agricultural scenarios, and the results show that it outperforms other contemporary methods. By addressing these gaps in agricultural monitoring systems, AgroVisionNet contributes to the broader goals of food security and sustainability. Its hybrid architecture, multi-resolution features, and real-world adaptability distinguish it from other proposals. Most existing models are built around a single architecture, such as a Convolutional Neural Network (CNN) for extracting local features or a Vision Transformer (ViT) for modeling global context. AgroVisionNet instead combines CNNs, ViTs, and Graph Neural Networks (GNNs) in a single framework. By drawing on the distinct advantages of each technique, it can simultaneously extract fine-grained local features, capture long-range dependencies, and model spatial relationships. This hybrid approach is intended to perform well across a wide range of agricultural contexts, from detecting diseases at the leaf level to analyzing anomalies at the field scale.

Unlike conventional image-analysis methods, which operate at a single resolution, AgroVisionNet incorporates fine and coarse features simultaneously, making it easier to detect both subtle, almost imperceptible anomalies, such as early-stage disease, and large-scale stresses, such as water stress. It also addresses explainability through SHAP-based heatmaps and attention visualizations, which explain model predictions in a form that is useful and actionable for farmers and other stakeholders. This interpretability helps bridge the gap between laboratory results and field adoption by improving trust and usability.

Another significant advantage of AgroVisionNet is that it generalizes across datasets. While many previous models perform reasonably well on specific datasets, they struggle to adapt to different agricultural conditions or heterogeneous data sources. AgroVisionNet accepts multi-modal inputs, including RGB, hyperspectral, and NIR imagery, and performs robustly across datasets such as PlantVillage and Agriculture-Vision, demonstrating its adaptability to diverse agricultural environments, crop cycles, and regional climatic conditions. Computational efficiency is a further strength. Many state-of-the-art models, including large CNNs and ViTs, are too computationally demanding for resource-constrained environments such as smallholder farms; AgroVisionNet uses an optimized GNN component to make spatial reasoning efficient. This reduces computational overhead while maintaining high prediction accuracy, and it enables large-scale agricultural monitoring by UAVs or satellites.

AgroVisionNet addresses some of the limitations encountered by previous systems, including dataset bias, environmental variability, and interpretability issues. The combination of multi-resolution fusion, explainable AI, and hybrid frameworks has mitigated these limitations and provided a robust solution. Furthermore, the evaluation based on multiple performance metrics demonstrates AgroVisionNet’s superiority over other methods in terms of accuracy, robustness, and inference efficiency. Overall, AgroVisionNet represents a significant advancement in precision agriculture, closing substantial gaps and establishing new benchmarks for AI applications in agriculture.

Furthermore, deploying a complex model like AgroVisionNet in real-world farming environments requires careful attention to computational efficiency and robustness. The proposed hybrid design is conceived with these practical considerations in mind, aiming to balance state-of-the-art accuracy with feasible inference speed and stability under varying field conditions (e.g., changing illumination, weather, and sensor noise). This focus on deployment readiness and resilience is crucial for translating AgroVisionNet’s performance into effective on-farm applications.

The rest of this paper is organized as follows. Section 2 reviews the recent literature on deep learning techniques for crop health monitoring, including CNNs, ViTs, and GNNs, as well as the limitations of these approaches. Section 3 describes the proposed hybrid framework AgroVisionNet, with an overview of its architecture, processing pipeline, and mathematical model. In Section 4, benchmark datasets are introduced, along with their relevant characteristics and linkage to agricultural tasks. The experimental setup, evaluation metrics, and comparison protocols are presented in Section 5. Results across five datasets, including multiple performance indicators, are presented and analyzed in Section 6. Finally, Section 7 concludes the paper by summarizing the key findings, highlighting the contributions, and outlining future research directions.

2. Literature Review

The adoption of AI and ML in agriculture is redefining crop health and disease monitoring. This shift relies chiefly on deep learning architectures such as CNNs, ViTs, and GNNs. CNNs have assumed a central position due to their strong capability for extracting features from images, which has led to their use in leaf-level disease detection. For example, Mohanty et al. [22] demonstrated that CNNs trained on the PlantVillage dataset could classify 26 plant diseases across 14 crop species from RGB images with over 99% accuracy. This demonstrated the robustness of CNNs in controlled environments; however, the authors also discussed real-world limitations arising from dataset bias and a lack of variability in environmental conditions, which hinder generalization to field conditions. In a parallel study, El Sakk et al. [5] reviewed the application of CNNs in smart agriculture systems, concluding that they are highly successful at disease and pest detection from high-resolution images but are limited in modeling global spatial relationships across extensive fields.

ViTs have emerged as a complementary option to address these limitations. ViTs use self-attention mechanisms to model relationships across the whole image, providing global context that CNNs lack. Dosovitskiy et al. [8] introduced ViTs as a scalable architecture that treats an image as a sequence of patches, achieving state-of-the-art performance in large-scale image recognition tasks. In agriculture, ViTs have been applied successfully to the analysis of aerial and satellite imagery for crop health monitoring, as demonstrated by Chiu et al. [6] using the Agriculture-Vision dataset. According to their findings, ViTs outperform CNNs in detecting field-scale anomalies, such as nutrient deficiencies and water stress, because these require long-range pattern recognition. However, computational intensity is among the most prominent challenges facing ViTs. These models demand substantial memory and processing power, and Atapattu et al. [7] indicated that this poses a major barrier to the adoption of AI in regions where agricultural technology has limited resources.

GNNs have recently shown promise in agriculture for modeling spatial relationships and interdependencies across fields. They enable better analysis of heterogeneous data by combining local and global information. Gupta et al. [11] studied GNNs within hybrid deep learning frameworks for crop health monitoring and demonstrated how GNNs capture the spatial correlations between diseased and healthy regions in crop fields. On the PlantVillage dataset, the CNN–GNN approach improved accuracy by 5–10% over a CNN-only model. These findings are consistent with Singla et al. [9], who present possible GNN applications, such as yield estimation and pest management, that rely on modeling the spatial spread of disease. However, like ViTs, GNNs are computationally expensive, which limits their scalability in real-time applications.

A recent trend is the use of hybrid deep learning models that combine CNNs with ViTs and GNNs. These models aim to use the best of all three paradigms: CNNs to extract local features, ViTs to analyze global context, and GNNs for spatial reasoning. Chitta et al. [23] proposed a hybrid model that combines CNN-extracted features, global patterns from ViTs, and spatial relationships from GNNs. The hybrid model achieved an F1-score of 0.92, outperforming the stand-alone CNN (0.87) and ViT (0.89). Similarly, Dewangan et al. [24] showed the benefits of hybrid models on the Agriculture-Vision dataset, where combining CNNs with attention mechanisms increased anomaly detection accuracy by 8% compared with conventional approaches. Hybrid models may thus overcome the limitations of their individual component networks, but their computational complexity has yet to be adequately discussed in the literature.

An issue that affects almost all studies is dataset heterogeneity in resolution, modality (RGB, hyperspectral, multispectral), and scale (leaf level vs. field level). Adão et al. [10] reviewed hyperspectral imaging applications in agriculture and pointed out that while hyperspectral data improves the sensitivity of disease detection, integrating this data into deep learning models raises problems, including variability in preprocessing and limited model adaptability. According to Atapattu et al. [7], most AI models have difficulty fusing multimodal data, which weakens their generalization across agricultural settings. This gap is evident in Mohanty et al. [22], where CNN performance deteriorated sharply on images captured in the field, underlining the need to overcome dataset bias.

Another major limitation facing AI in agriculture is computational complexity, especially in regions with limited resources. For instance, Li et al. [25] stated that ViTs have high computational demands, making them infeasible in smallholder farming contexts where only edge devices are available. Salcedo et al. [26] supported this, stating that deploying ViTs and GNNs requires 10–20 times more processing power than lightweight CNNs. Optimization strategies such as model pruning or quantization are therefore needed to make these models practical for agricultural use, yet these techniques remain underexplored in the current literature. According to Borisov et al. [27], efficient inference strategies can help solve this problem, but such work remains mostly theoretical.

Interpretability is another major gap discussed in the literature. For AI models to be trusted and adopted by farmers and other stakeholders, they must offer actionable insights. However, many deep learning models, including CNNs and ViTs, are black boxes that offer no user-friendly account of their inner workings. Gupta et al. [11] attempted to improve model interpretability by adding SHAP-based explainability and heat maps of disease-affected regions; however, their approach has not been validated across different crops. Dhal et al. [28] stress the importance of interpretable AI, as trust and usability are critical factors in real-world agricultural scenarios.

Environmental variability, including differences in climate and soil, poses a considerable challenge to AI model performance. Adão et al. [10] found that hyperspectral models trained in one region do not transfer well to others because of variations in spectral signatures. Likewise, Chiu et al. [6] found that aerial imagery models trained in one area performed poorly under different conditions. These observations demonstrate the need for domain-adaptive models, which are underdeveloped in current research.

Table 1 presents a summary of the various models, datasets, key tasks, and performance metrics, plus notable advantages and the weaknesses in each study, marking the evolution from standard Convolutional Neural Networks to hybrids embedding ViTs and GNNs for their accuracy and robustness.

3. Proposed Methodology

The AgroVisionNet framework is proposed as a hybrid deep learning approach to the main challenges of precision agriculture. It combines Convolutional Neural Networks (CNNs), Vision Transformers (ViTs), and Graph Neural Networks (GNNs) in a single architecture. Consequently, AgroVisionNet can identify local features, determine global spatial dependencies, and model the relational interactions between regions of interest (ROIs) in agricultural images.

Preprocessing the input image $I$ yields two versions of the image: $I_{\text{full}}$, which is used for whole-image feature extraction, and $I_{\text{patch}}$, which is further divided into patches for subsequent processing. Local features are extracted from $I_{\text{full}}$ by a CNN, which acts as a feature extractor providing an image-to-vector mapping as follows:

$$F_{\text{CNN}} = f_{\text{CNN}}(I_{\text{full}})$$

where $f_{\text{CNN}}$ represents the CNN model, and $F_{\text{CNN}}$ captures fine-grained details such as textures and disease symptoms.

Global context learning is achieved by partitioning $I_{\text{patch}}$ into uniform patches, embedding them into feature vectors, and applying a Vision Transformer ($f_{\text{ViT}}$). The ViT computes relationships between patches using the self-attention mechanism:

$$\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V$$

where $Q$, $K$, and $V$ are the query, key, and value matrices derived from the patch embeddings, and $d_k$ is the dimension of the key vectors. The output of the ViT, denoted as $F_{\text{ViT}}$, is a feature representation of the image that captures long-range dependencies:

$$F_{\text{ViT}} = f_{\text{ViT}}(I_{\text{patch}})$$

To refine these features further, AgroVisionNet constructs a graph $G = (\mathcal{V}, \mathcal{E})$, where nodes $\mathcal{V}$ represent the image patches, and edges $\mathcal{E}$ represent the spatial relationships between patches. A GNN processes this graph using Graph Convolutional Network (GCN) layers, updating node features iteratively as follows:

$$H^{(l+1)} = \sigma\left(\tilde{D}^{-1/2}\,\tilde{A}\,\tilde{D}^{-1/2}\,H^{(l)}\,W^{(l)}\right)$$

Here, $\tilde{A}$ is the adjacency matrix with self-loops, $\tilde{D}$ is the degree matrix of $\tilde{A}$, $H^{(l)}$ is the node feature matrix at layer $l$, $W^{(l)}$ is a trainable weight matrix, and $\sigma$ is a non-linear activation function. After $L$ layers, the GNN produces a refined feature matrix $F_{\text{GNN}}$:

$$F_{\text{GNN}} = H^{(L)}$$

The outputs of the CNN, ViT, and GNN components are then concatenated into a single feature vector, $F_{\text{fused}}$, which is passed through a fully connected layer for classification. Let $F_{\text{CNN}}$ be the local feature vector extracted by the CNN, $F_{\text{ViT}}$ be the global contextual embedding from the Vision Transformer, and $F_{\text{GNN}}$ be the spatially refined representation from the Graph Neural Network. The fused multi-resolution feature representation $F_{\text{fused}}$ is obtained as follows:

$$F_{\text{fused}} = \phi\left(W_f\left[\alpha_1 \odot F_{\text{CNN}} \,\Vert\, \alpha_2 \odot F_{\text{ViT}} \,\Vert\, \alpha_3 \odot F_{\text{GNN}}\right] + b_f\right)$$

where $\Vert$ denotes concatenation; $\odot$ represents element-wise weighting; $\alpha_1$, $\alpha_2$, and $\alpha_3$ are learnable attention coefficients that control each modality’s contribution; $W_f$ and $b_f$ are the weights and bias of the fusion layer; and $\phi$ is a non-linear activation function (e.g., ReLU). Finally, the classification output is computed as

$$\hat{y} = \text{softmax}\left(W_o F_{\text{fused}} + b_o\right)$$

where $W_o$ and $b_o$ are the final layer’s weights and bias, and $\hat{y}$ is the predicted class distribution. The model is trained using a cross-entropy loss function:

$$\mathcal{L} = -\frac{1}{N}\sum_{i=1}^{N}\sum_{c=1}^{C} y_{i,c}\,\log \hat{y}_{i,c}$$

where $N$ is the number of samples, $C$ is the number of classes, $y_{i,c}$ is the ground truth for class $c$ of sample $i$, and $\hat{y}_{i,c}$ is the predicted probability for the same.
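As a minimal sketch of the two operations described above, the following NumPy code implements single-head scaled dot-product self-attention over patch tokens and one GCN propagation step with symmetric normalization. The toy sizes, random weights, chain-shaped graph, and the function names `self_attention` and `gcn_layer` are illustrative assumptions rather than the AgroVisionNet configuration, and ReLU is assumed as the activation.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over patch tokens."""
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    d_k = k.shape[-1]
    weights = softmax(q @ k.T / np.sqrt(d_k))   # (N, N); each row sums to 1
    return weights @ v, weights


def gcn_layer(adj, h, w):
    """One GCN update: ReLU(D^-1/2 (A + I) D^-1/2 H W).

    `adj` is expected WITHOUT self-loops; they are added here.
    """
    a_tilde = adj + np.eye(adj.shape[0])        # add self-loops
    deg = a_tilde.sum(axis=1)                   # degrees of A + I (always >= 1)
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    a_norm = a_tilde * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(a_norm @ h @ w, 0.0)      # ReLU (assumed activation)


rng = np.random.default_rng(0)
n_patches, dim = 6, 8                           # toy sizes, not the paper's
tokens = rng.normal(size=(n_patches, dim))
w_q, w_k, w_v = (rng.normal(size=(dim, dim)) for _ in range(3))
attended, attn_weights = self_attention(tokens, w_q, w_k, w_v)

# Toy chain graph 0-1-2-3-4-5 standing in for the patch graph.
adj = np.zeros((n_patches, n_patches))
for i in range(n_patches - 1):
    adj[i, i + 1] = adj[i + 1, i] = 1.0
refined = gcn_layer(adj, attended, rng.normal(size=(dim, 4)))
```

Each row of `attn_weights` is a probability distribution over all patches, which is what lets a patch draw on distant regions of the image, while `gcn_layer` mixes each node only with its graph neighbors.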

Training uses the Adam optimizer with a learning rate scheduler to support stable convergence. By combining a hybrid architecture, multi-resolution fusion, and graph-based reasoning, AgroVisionNet provides a robust and scalable solution for precision agriculture. The model leverages local features, global features, and relational views to produce accurate predictions suitable for real-time agricultural applications.
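The cross-entropy objective used for training can be illustrated with a short NumPy sketch. It assumes integer class labels (equivalent to one-hot ground truth) and averages the loss over the batch; the function name `cross_entropy` and the toy logits are hypothetical, and the optimizer and scheduler are deliberately left out.

```python
import numpy as np


def cross_entropy(logits, labels):
    """Mean cross-entropy between softmax(logits) and integer class labels."""
    # Log-softmax computed stably by subtracting the row-wise maximum.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # With one-hot targets, the inner sum over classes keeps only the
    # log-probability of the true class for each sample.
    return -log_probs[np.arange(len(labels)), labels].mean()


logits = np.array([[2.0, 0.5, -1.0],
                   [0.0, 0.0, 0.0]])
labels = np.array([0, 2])
loss = cross_entropy(logits, labels)

# Sanity check: uniform predictions over 3 classes give a loss of log(3).
uniform_loss = cross_entropy(np.zeros((4, 3)), np.array([0, 1, 2, 0]))
```

The uniform case is a useful reference point: a model that has learned nothing scores the logarithm of the number of classes, and training should push the loss below that value.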

The architectural design of AgroVisionNet is summarized in Table 2, which outlines the complete configuration of its three primary modules: the CNN-based local feature extractor, the Vision Transformer (ViT) for capturing long-range dependencies, and the Graph Neural Network (GNN) for relational reasoning across spatially connected regions. Each module is parameterized to ensure complementary functionality: the CNN backbone (based on EfficientNet-B0) extracts low- to mid-level representations, the ViT encoder models global contextual relations across 16 × 16 patches, and the GNN (built using Graph Convolutional layers) captures inter-patch dependencies through dynamically constructed adjacency matrices. The fusion layer concatenates the outputs from these modules into a unified feature space, which is subsequently passed through fully connected layers for classification. This table provides a clear overview of layer configurations, embedding dimensions, activation functions, and output sizes, facilitating reproducibility and transparency of the proposed model architecture.

To ensure clarity and reproducibility, the following algorithms describe the procedural workflow of AgroVisionNet. Algorithm 1 details the graph construction process, where image patches are treated as nodes and connections are dynamically defined based on both spatial proximity and feature similarity. This algorithm formalizes how local and global contextual relationships are encoded before being passed to the GNN component. Algorithm 2 outlines the complete training and inference pipeline of AgroVisionNet, including the feature extraction, fusion, and optimization stages. The stepwise structure emphasizes how CNN-derived features, ViT embeddings, and GNN relational outputs are integrated within a unified framework. Together, these algorithms provide a transparent view of the model’s operation, highlighting the hierarchical information flow from raw images to final predictions.

Algorithm 1. GraphConstruction(P): Patch Graph over ViT Tokens

    Inputs:
        P ∈ R^{N × D}       // ViT patch tokens for one image (N = 196, D = 768)
        Grid size: 14 × 14  // N = 14 × 14 for ViT/16 at 224 × 224
        k = 8               // spatial neighbors
        τ = 0.7             // cosine similarity threshold

    Procedure GraphConstruction(P):
        // 1) Spatial k-NN on 2D grid
        V ← {1..N}; E_spatial ← ∅
        for node u in V:
            N_sp(u) ← k nearest neighbors of u in (row, col) grid
            E_spatial ← E_spatial ∪ {(u, v) | v ∈ N_sp(u)}
        // 2) Feature-similarity edges
        E_feat ← ∅
        for (u, v) with u ≠ v:
            sim ← cos(P[u], P[v]) = (P[u]·P[v])/(||P[u]||·||P[v]||)
            if sim ≥ τ: E_feat ← E_feat ∪ {(u, v)}
        // 3) Final edges (undirected with self-loops)
        E ← SymmetricClosure(E_spatial ∪ E_feat) ∪ {(u, u) ∀u ∈ V}
        // 4) Node init & GNN runs inside TRAIN():
        //    Node init: h^0_u = Linear_768→256(P[u])
        return Graph G = (V, E)
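The graph construction in Algorithm 1 can be sketched in NumPy as follows. This is an illustrative reading rather than the authors' implementation: it assumes that "nearest" means Euclidean distance between (row, col) grid coordinates with ties broken by node index, it returns a dense boolean adjacency matrix instead of an edge set, and the function name `build_patch_graph` is hypothetical.

```python
import numpy as np


def build_patch_graph(tokens, grid=(14, 14), k=8, tau=0.7):
    """Return a symmetric boolean adjacency matrix with self-loops."""
    rows, cols = grid
    n = rows * cols
    assert tokens.shape[0] == n, "expected one token per grid cell"

    # 1) Spatial k-NN on the 2D patch grid.
    coords = np.array([(r, c) for r in range(rows) for c in range(cols)],
                      dtype=float)
    dist = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
    np.fill_diagonal(dist, np.inf)            # a node is not its own neighbor
    nearest = np.argsort(dist, axis=1, kind="stable")[:, :k]
    adj = np.zeros((n, n), dtype=bool)
    adj[np.repeat(np.arange(n), k), nearest.ravel()] = True

    # 2) Feature-similarity edges: cosine similarity at or above tau.
    unit = tokens / np.linalg.norm(tokens, axis=1, keepdims=True)
    sim = unit @ unit.T
    np.fill_diagonal(sim, -1.0)               # exclude u == v at this step
    adj |= sim >= tau

    # 3) Symmetric closure plus self-loops.
    adj |= adj.T
    np.fill_diagonal(adj, True)
    return adj


rng = np.random.default_rng(0)
patch_tokens = rng.normal(size=(196, 768))    # random stand-in for ViT tokens
adjacency = build_patch_graph(patch_tokens)
```

With random tokens the cosine similarities stay far below 0.7, so the resulting graph is purely spatial; real ViT tokens from visually similar patches would add long-range feature edges on top of the grid neighborhood.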

Algorithm 2. Fusion and Prediction Heads

    Inputs:
        F_CNN ∈ R^{B × 512}, F_ViT ∈ R^{B × 512}, F_GNN ∈ R^{B × 512}

    Procedure FusionMLP(F_CNN, F_ViT, F_GNN):
        F_cat ← Concat(F_CNN, F_ViT, F_GNN)   // shape [B, 1536]
        F_cat ← LayerNorm(F_cat)
        F_fuse ← GELU(Linear(1536→768)(F_cat))
        F_fuse ← Dropout(0.2)(F_fuse)
        F_out ← Linear(768→512)(F_fuse)
        return F_out                          // [B, 512]

    Procedure Headκ(F_out):
        logits ← Linear(512→C)(F_out)         // C = #classes
        return logits
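The fusion and prediction steps of Algorithm 2 can be traced with a small NumPy forward pass. The sketch below runs in inference mode, so dropout reduces to the identity; the random weight initialization, the tanh approximation of GELU, the layer normalization without learned scale and shift, and the helper names `linear` and `fusion_forward` are assumptions made for illustration.

```python
import numpy as np


def layer_norm(x, eps=1e-5):
    """Normalize each row to zero mean and unit variance (no learned affine)."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def gelu(x):
    """Tanh approximation of the GELU activation."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))


def linear(rng, d_in, d_out):
    """Hypothetical helper: randomly initialized (weight, bias) pair."""
    return rng.normal(scale=d_in ** -0.5, size=(d_in, d_out)), np.zeros(d_out)


def fusion_forward(f_cnn, f_vit, f_gnn, params):
    """Inference-mode forward pass; Dropout(0.2) is the identity here."""
    (w1, b1), (w2, b2), (w_head, b_head) = params
    f_cat = layer_norm(np.concatenate([f_cnn, f_vit, f_gnn], axis=1))  # [B, 1536]
    f_fuse = gelu(f_cat @ w1 + b1)                                     # [B, 768]
    f_out = f_fuse @ w2 + b2                                           # [B, 512]
    return f_out @ w_head + b_head                                     # [B, C]


rng = np.random.default_rng(0)
batch, n_classes = 4, 38   # 38 chosen to match the PlantVillage class count
params = [linear(rng, 1536, 768), linear(rng, 768, 512),
          linear(rng, 512, n_classes)]
features = [rng.normal(size=(batch, 512)) for _ in range(3)]
logits = fusion_forward(*features, params)
```

Normalizing after concatenation puts the three branches on a common scale before the first linear layer, which keeps any single branch from dominating the fused representation purely because of its magnitude.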

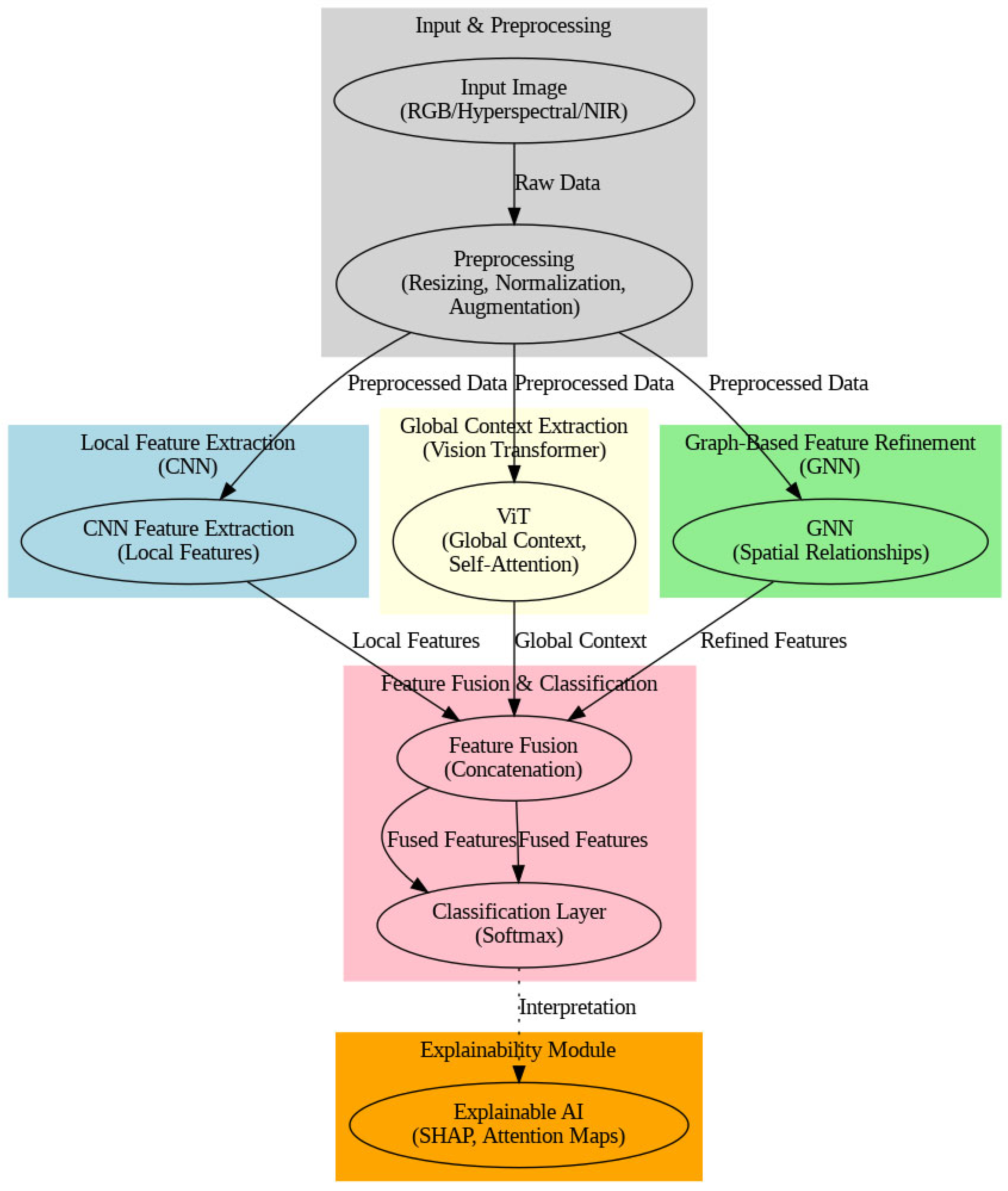

The flowchart depicted in Figure 2 provides a detailed visual representation of the AgroVisionNet architecture, covering every stage of the processing pipeline and data flow. The input images, whether RGB, aerial, or satellite imagery (for example, from the PlantVillage or EuroSAT datasets), first undergo preprocessing for consistent treatment. This includes resizing images to 224 × 224 pixels where needed; normalization using the dataset's mean and standard deviation; and augmentation techniques, such as random cropping and random flipping, which add diversity to the training images and make the model more robust. The preprocessed images are fed into the CNN Feature Extraction module, which produces a feature map of dimension 512. Two branches then follow: Global Context Learning, which employs a Vision Transformer (ViT), and Graph-Based Refinement, which uses a Graph Neural Network (GNN). The Vision Transformer applies patch tokenization and self-attention to the features to capture global contextual relationships, which are very important for land-cover classification. At the same time, the GNN builds graph nodes and edges from the features extracted by the CNN and further enhances local information through graph convolutional layers. The resulting features from the ViT and GNN modules are fused and fed into the classifier output layer, which uses either softmax activation for classification or sigmoid activation for segmentation.
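The preprocessing steps described above (cropping, flipping, and per-channel normalization) can be sketched in NumPy. The example substitutes cropping for resizing to avoid an interpolation dependency, uses a 224-pixel output size as an assumption, and takes placeholder mean and standard deviation values; the function name `preprocess` is hypothetical.

```python
import numpy as np


def preprocess(image, mean, std, crop=224, rng=None, train=True):
    """Crop to crop x crop, optionally flip, then normalize per channel.

    image: uint8 array of shape (H, W, 3) with H, W >= crop.
    """
    h, w, _ = image.shape
    if train:
        if rng is None:
            rng = np.random.default_rng()
        top = rng.integers(0, h - crop + 1)       # random crop offset
        left = rng.integers(0, w - crop + 1)
    else:
        top, left = (h - crop) // 2, (w - crop) // 2   # deterministic center crop
    patch = image[top:top + crop, left:left + crop].astype(np.float32) / 255.0
    if train and rng.random() < 0.5:
        patch = patch[:, ::-1]                    # horizontal flip
    return (patch - mean) / std


rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(256, 256, 3), dtype=np.uint8)
# Placeholder statistics; in practice these are computed on the training set.
mean = np.array([0.5, 0.5, 0.5])
std = np.array([0.25, 0.25, 0.25])
train_view = preprocess(image, mean, std, rng=rng)
eval_view = preprocess(image, mean, std, train=False)
```

Random augmentation is applied only when `train=True`, so evaluation always sees the same center crop and results remain reproducible across runs.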

4. Datasets

AgroVisionNet has undergone evaluation using a wide variety of datasets, thereby demonstrating its efficacy across diverse agrarian environments. In fact, these datasets encompass various image forms and resolutions, as well as specific purposes, providing a solid foundation for testing and evaluating the model’s capabilities in crop health monitoring and anomaly detection.

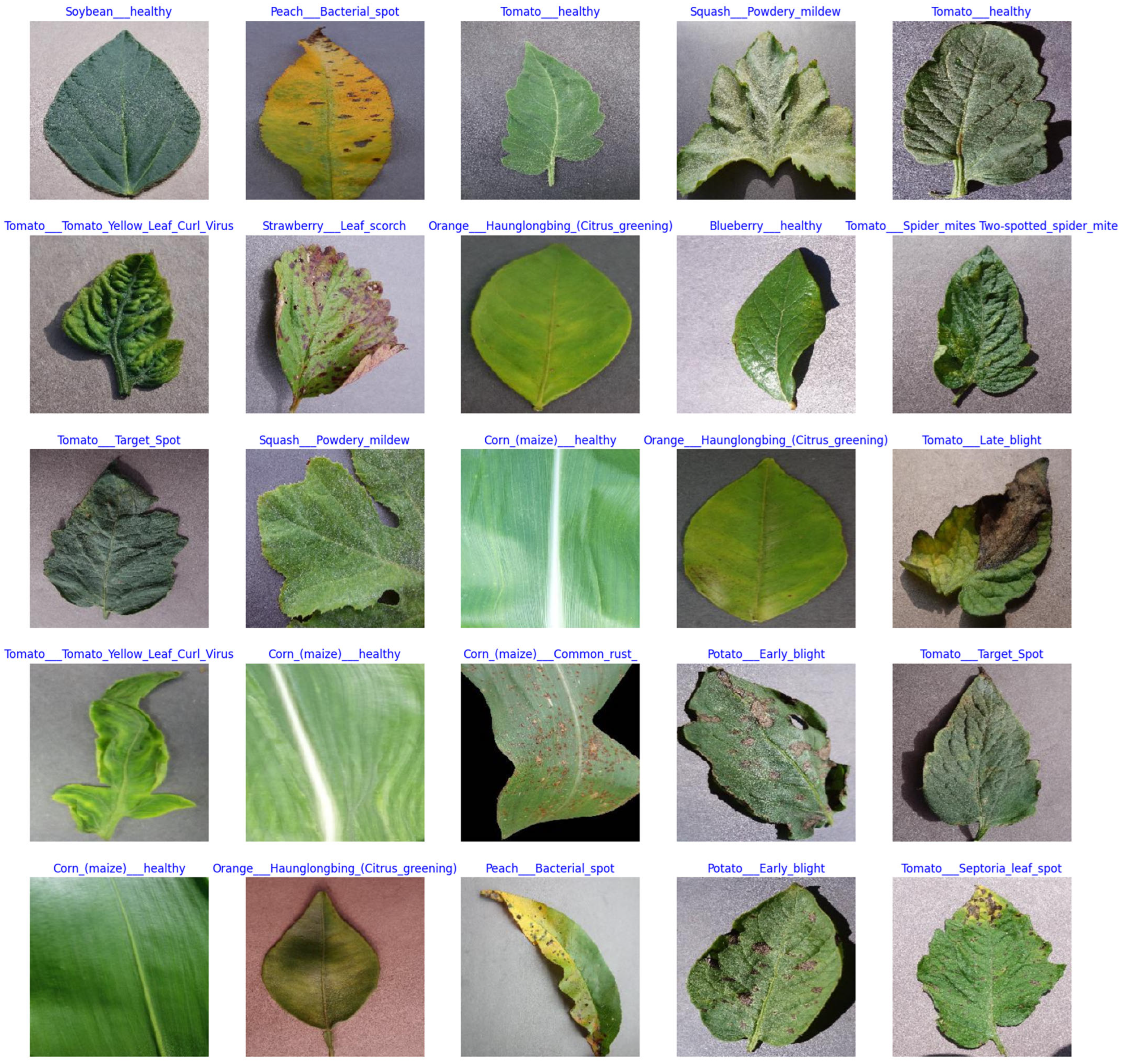

The PlantVillage dataset is a large-scale agricultural image collection aimed at plant disease diagnosis. It contains 54,306 labeled images of healthy and diseased leaves covering 38 crop–disease pairs across 14 crop species. Figure 3 shows samples of diseased crops from this dataset, and Table 3 presents a summary of the dataset’s information. The dataset supports the identification of diseases such as apple scab, corn gray leaf spot, and tomato late blight, with classes comprising “healthy” and “disease-specific” labels. The diversity of available images helps in developing resilient machine learning models for plant disease diagnosis [22].

The Agriculture-Vision dataset comprises 94,986 aerial images collected for anomaly detection in agricultural land. The data is distributed across nine classes, including Double Plant, Nutrient Deficiency, Dry Down, and Weed Cluster. Figure 4 shows samples from this dataset. Each image contains RGB and near-infrared (NIR) information, which supports a comprehensive assessment of agricultural anomalies under varied environmental conditions [6].

BigEarthNet is a very large remote sensing dataset containing 549,488 Sentinel-1 and Sentinel-2 image patches spanning 19 land-cover classes, including Forest, Urban Areas, Pasture, Shrubland, and Wetland. It was designed for multi-class land-cover classification in support of environmental change studies and land-use monitoring using per-pixel annotations [29].

The Crop and Weed UAV dataset is designed for discriminating crops from weeds in aerial images. It comprises 8034 high-resolution annotated images of crops, including maize, sugar beet, sunflower, and soy, together with broadleaf and grassy weeds. The original dataset labels are divided into 8 crop and 16 weed classes. The dataset provides bounding boxes, segmentation masks, and stem annotations, which make it well suited to precision agriculture tasks such as weed detection and crop classification [30].

The EuroSAT dataset comprises 27,000 Sentinel-2 satellite images, each assigned to one of 10 land-cover types, including Annual Crops, Pasture, Forest, Urban Areas, and Water Bodies. The dataset is well balanced, with approximately 2000–3000 samples per class. This near-uniform class distribution makes it suitable for a range of land-cover classification and environmental monitoring tasks [31].

Figure 5 shows the class distributions of the five datasets used in the comparison and evaluation of AgroVisionNet.

5. Evaluation Metrics

The performance evaluation of the AgroVisionNet framework should provide a holistic assessment along several dimensions to determine its effectiveness, its robustness, and its practical usability in IoT anomaly detection tasks. The evaluation is therefore based on three aspects: classification accuracy, real-time efficiency, and computational resource utilization. Accuracy is the percentage of correctly classified samples:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where $TP$, $TN$, $FP$, and $FN$ represent true positives, true negatives, false positives, and false negatives, respectively.

Precision quantifies the proportion of correctly detected anomalies out of all instances classified as anomalies:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

High precision indicates few false positives, which is critical for IoT environments where false alarms can lead to unnecessary actions [32].

Recall measures the proportion of correctly detected anomalies out of all actual anomalies:

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

High recall ensures that most anomalies are identified, reducing the risk of undetected threats.

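The F1-Score is the harmonic mean of precision and recall, providing a balanced evaluation of both metrics:

$$\mathrm{F1} = 2 \cdot \frac{\mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

It is particularly useful in imbalanced datasets where one class (e.g., anomalies) is underrepresented.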
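The four count-based metrics can be computed directly from the entries of a confusion matrix. The following is a minimal sketch; the function name and the example counts are illustrative and are not taken from the reported experiments.

```python
def classification_metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall, and F1 from confusion-matrix counts.

    Ratios with an empty denominator are returned as 0.0 (a convention chosen
    here to avoid division by zero).
    """
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Illustrative counts: 80 anomalies found, 20 missed, 10 false alarms.
m = classification_metrics(tp=80, tn=90, fp=10, fn=20)
```

For these counts the accuracy is 0.85, while the F1-Score (about 0.84) reflects both the missed anomalies and the false alarms.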

The ROC curve plots the true positive rate (TPR) against the false positive rate (FPR) at various classification thresholds:

$$\mathrm{TPR} = \frac{TP}{TP + FN}, \qquad \mathrm{FPR} = \frac{FP}{FP + TN}$$

AUC-ROC is the Area Under the ROC Curve. It measures the model’s ability to distinguish normal from anomalous data; the higher the AUC, the better the performance [1].
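The AUC-ROC has an equivalent pairwise interpretation: it is the probability that a randomly chosen anomalous sample receives a higher score than a randomly chosen normal sample. The sketch below uses this interpretation rather than integrating the curve explicitly; the function name and the toy scores are illustrative assumptions.

```python
def roc_auc(labels, scores):
    """AUC-ROC as the fraction of (positive, negative) pairs ranked correctly.

    Tied scores count as half a correct pair. The double loop is quadratic,
    which is acceptable for a small illustration.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

# Toy example: one anomaly (0.35) is scored below one normal sample (0.4).
auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
```

Three of the four positive–negative pairs are ordered correctly here, so the AUC is 0.75.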

The PR curve, which plots precision as a function of recall, is well suited to presenting anomaly detection results. The Area Under the Precision–Recall Curve (AUPRC) is especially informative for skewed data, where it is often much more indicative of performance than ROC curves.

The $\mathrm{mAP}$ metric is derived by averaging $\mathrm{AP}$ values across all anomaly classes [33, 34]. For a single class, $\mathrm{AP}$ is simply the area under the Precision–Recall ($PR$) curve:

$$\mathrm{AP} = \int_{0}^{1} P(r)\, dr$$

where $r$ is the recall and $P(r)$ is the precision at that recall.

For multiple classes, $\mathrm{mAP}$ is the average of the $\mathrm{AP}$ values across all $K$ classes:

$$\mathrm{mAP} = \frac{1}{K} \sum_{k=1}^{K} \mathrm{AP}_k$$
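In practice the area under the PR curve is approximated from a finite ranked list of predictions. The sketch below uses the common step-wise approximation, which sums the precision at every rank where a true positive is retrieved and divides by the number of positives; this particular approximation, the function names, and the toy data are assumptions made for illustration.

```python
def average_precision(labels, scores):
    """Step-wise AP: mean of the precision values at each retrieved positive."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    n_pos = sum(labels)
    if n_pos == 0:
        raise ValueError("at least one positive sample is required")
    true_positives = 0
    precision_sum = 0.0
    for rank, idx in enumerate(order, start=1):
        if labels[idx] == 1:
            true_positives += 1
            # Precision at this cut-off; recall grows by 1 / n_pos here.
            precision_sum += true_positives / rank
    return precision_sum / n_pos

def mean_average_precision(per_class):
    """Average the per-class AP values; per_class is a list of (labels, scores)."""
    aps = [average_precision(labels, scores) for labels, scores in per_class]
    return sum(aps) / len(aps)

# Two hypothetical anomaly classes with binary ground truth and model scores.
class_a = ([1, 0, 1, 0], [0.9, 0.8, 0.7, 0.1])
class_b = ([0, 1, 1], [0.2, 0.9, 0.6])
ap_a = average_precision(*class_a)
map_value = mean_average_precision([class_a, class_b])
```

Class A has one false positive ranked between its two positives, giving an AP of about 0.83, whereas class B is ranked perfectly (AP of 1.0), so the mean over both classes is about 0.92.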

For pixel-level classification tasks, such as identifying diseased or anomalous regions in leaves, Intersection over Union (IoU) can be used to measure the overlap between predicted and ground-truth regions.
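IoU is the number of pixels shared by the predicted and ground-truth regions divided by the number of pixels covered by either region. A minimal sketch for binary masks stored as nested lists follows; the function name, the toy masks, and the convention of returning 1.0 when both masks are empty are assumptions.

```python
def iou(pred_mask, true_mask):
    """Intersection over Union for two binary masks of equal shape."""
    intersection = 0
    union = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Two empty masks agree perfectly (convention assumed here).
    return intersection / union if union else 1.0

# Toy 2 x 3 masks: two pixels overlap and four pixels are covered in total.
pred = [[1, 1, 0],
        [0, 1, 0]]
true = [[1, 0, 0],
        [0, 1, 1]]
score = iou(pred, true)
```

The toy masks give an IoU of 0.5, since only half of the combined region is shared.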

Mean Absolute Error (MAE) measures the average deviation between predicted probabilities ($\hat{y}_i$) and true labels ($y_i$) across all samples in a dataset. It evaluates how closely the predicted probabilities match the ground truth, and it is obtained by averaging the absolute difference between the predicted probability and the true label over all samples. Mathematically, it is expressed as

$$\mathrm{MAE} = \frac{1}{N} \sum_{i=1}^{N} \left| y_i - \hat{y}_i \right|$$

Here, $N$ represents the total number of samples, $y_i$ is the true label (typically 0 or 1 in binary classification), and $\hat{y}_i$ is the predicted probability (a value between 0 and 1). A lower $\mathrm{MAE}$ indicates better calibration of the model’s predicted probabilities to the true labels, with a perfect $\mathrm{MAE}$ of 0 implying that all predictions perfectly match the ground truth.
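As a small worked example of the MAE on predicted probabilities, the sketch below averages the absolute gaps between binary labels and probabilities. The function name and the values are illustrative only.

```python
def mean_absolute_error(y_true, y_prob):
    """Average absolute gap between true labels and predicted probabilities."""
    if len(y_true) != len(y_prob) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(y - p) for y, p in zip(y_true, y_prob)) / len(y_true)

# Illustrative labels and probabilities; the gaps are 0.1, 0.2, 0.4, and 0.1.
mae = mean_absolute_error([1, 0, 1, 0], [0.9, 0.2, 0.6, 0.1])
```

The four gaps sum to 0.8, so the MAE is 0.2, or 20% when expressed as a percentage.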

The Concordance Index (C-Index) measures whether the model ranks predictions correctly with respect to the ground truth. It is used in a broad range of tasks, including hazard estimation, ranking problems, and classification based on confidence scores. The C-Index is defined as the ratio of concordant pairs to all comparable pairs in a set, that is, pairs whose true labels differ. A pair of samples $(i, j)$ is considered concordant if the predicted ranking aligns with the ground truth. Specifically, the predictions are concordant if

$$\hat{y}_i > \hat{y}_j \quad \text{whenever} \quad y_i > y_j$$

The formula for the C-Index is

$$\text{C-Index} = \frac{\sum_{i \neq j} \mathbb{1}\left[ y_i > y_j \right] \, \mathbb{1}\left[ \hat{y}_i > \hat{y}_j \right]}{\sum_{i \neq j} \mathbb{1}\left[ y_i > y_j \right]}$$

Here, $\hat{y}_i$ and $\hat{y}_j$ are the predicted scores for samples $i$ and $j$, while $y_i$ and $y_j$ are their corresponding true labels. A C-Index of 1 indicates perfect ranking alignment with the ground truth, while a value of 0.5 suggests random ranking. This metric is particularly useful when the model’s ability to rank predictions accurately is more important than the exact predicted probabilities.
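The ratio of concordant pairs can be computed by enumerating all pairs of samples. The sketch below restricts the count to pairs with different true labels and gives half credit to tied predicted scores; both choices are common conventions assumed here, not details stated in the text, and the function name and data are illustrative.

```python
def concordance_index(y_true, y_score):
    """Fraction of comparable pairs whose predicted order matches the labels."""
    concordant = 0.0
    comparable = 0
    n = len(y_true)
    for i in range(n):
        for j in range(i + 1, n):
            if y_true[i] == y_true[j]:
                continue  # equal labels carry no ranking information
            comparable += 1
            # Orient the pair so that `hi` has the larger true label.
            hi, lo = (i, j) if y_true[i] > y_true[j] else (j, i)
            if y_score[hi] > y_score[lo]:
                concordant += 1.0
            elif y_score[hi] == y_score[lo]:
                concordant += 0.5  # assumed convention for tied scores
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable

# Illustrative data: the last negative (0.7) outranks one positive (0.6).
c_index = concordance_index([1, 0, 1, 0], [0.9, 0.2, 0.6, 0.7])
```

Three of the four comparable pairs are ordered correctly, so the C-Index is 0.75.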

5.1. Experimental Setup

All experiments were performed using a Google Colab T4 GPU and the PyTorch framework. Input images were resized to 224 × 224, normalized, and augmented with random flipping and rotations. The models were trained using the Adam optimizer (learning rate = 0.001, cosine annealing scheduler, batch size = 32) for 100 epochs with a 70/15/15 train–validation–test split. For segmentation datasets (e.g., Agriculture-Vision, UAV Crop-Weed), outputs were reformulated as multi-label classification using sigmoid activation. The evaluation metrics included accuracy, precision, recall, F1-Score, IoU, AUC, and C-Index. All baseline models were retrained under identical conditions to ensure a fair comparison with AgroVisionNet.
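The learning-rate schedule and data split described above can be sketched without any deep learning framework. The snippet uses the standard closed-form cosine annealing rule with the stated base learning rate of 0.001 over 100 epochs; the minimum learning rate of 0, the per-epoch update, the random (non-stratified) split, and the seed are assumptions, since the text does not specify them.

```python
import math
import random

def cosine_annealing_lr(epoch, total_epochs=100, base_lr=1e-3, min_lr=0.0):
    """Learning rate at a given epoch under cosine annealing (min_lr assumed)."""
    cosine = math.cos(math.pi * epoch / total_epochs)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + cosine)

def split_indices(n_samples, train=0.70, val=0.15, seed=0):
    """Shuffle sample indices and cut them into train/validation/test parts."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # seeded for reproducibility
    n_train = round(n_samples * train)
    n_val = round(n_samples * val)
    return (indices[:n_train],
            indices[n_train:n_train + n_val],
            indices[n_train + n_val:])  # the remainder forms the test set

train_idx, val_idx, test_idx = split_indices(1000)
schedule = [cosine_annealing_lr(e) for e in (0, 50, 100)]
```

Under these assumptions the learning rate starts at 0.001, reaches half that value at epoch 50, and decays to 0 at epoch 100, while 1000 samples are divided into 700, 150, and 150.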

5.2. Computational Complexity and Efficiency

The hybrid design of AgroVisionNet inevitably increases model parameters relative to single-stream networks. To quantify this overhead, we estimated the number of parameters and floating-point operations (FLOPs) for each major component. The ResNet-50 backbone contributes ≈ 25 M parameters (3.8 GFLOPs per image), the ViT-Base/16 encoder ≈ 86 M parameters (17.6 GFLOPs), and the GraphSAGE module ≈ 4 M parameters (0.6 GFLOPs). After fusion and classification, the total model footprint reaches ≈ 115 M parameters and 22 GFLOPs per inference—roughly 1.7× that of a stand-alone ViT but still tractable on modern GPUs and edge accelerators.
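The component figures quoted above can be summed as a quick consistency check on the stated totals. The sketch also derives an approximate weight-storage size under the assumption of 32-bit floating-point parameters, which is not stated in the text; the small fusion and classification layers are not itemized and are therefore left out.

```python
# (parameters in millions, GFLOPs per image) as quoted in the text.
components = {
    "ResNet-50 backbone": (25.0, 3.8),
    "ViT-Base/16 encoder": (86.0, 17.6),
    "GraphSAGE module": (4.0, 0.6),
}

total_params_m = sum(params for params, _ in components.values())
total_gflops = sum(flops for _, flops in components.values())

# Assumption: weights stored as 32-bit floats (4 bytes per parameter).
weights_megabytes = total_params_m * 1e6 * 4 / 1e6
```

The three components add up to 115 M parameters and 22 GFLOPs, in line with the stated footprint, and correspond to roughly 460 MB of weights at 32-bit precision.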

Training on Google Colab converged in ≈ 22 h for 100 epochs on PlantVillage and ≈ 28 h on EuroSAT. Inference latency averaged 18 ms per 224 × 224 image (batch size = 32), confirming real-time feasibility for UAV and field-robot deployment.

6. Results and Discussion

This study compared AgroVisionNet with nine other modern methods on five diverse datasets: PlantVillage, Agriculture-Vision, BigEarthNet, UAV Crop and Weed, and EuroSAT. Performance was analyzed across multiple metrics, including accuracy, precision, recall, F1-Score, Intersection over Union (IoU), Area Under the Curve (AUC), Mean Absolute Error (MAE), and Concordance Index (C-Index). The results show that AgroVisionNet outperformed the other methods on most metrics, excelling in particular on classification and segmentation tasks. By combining a CNN, a Vision Transformer, and a Graph Neural Network, AgroVisionNet fuses local and global features, which improves predictive accuracy and ranking quality. In this section, we assess the performance of AgroVisionNet against the other algorithms on each dataset. The best results are shown in bold in the tables.

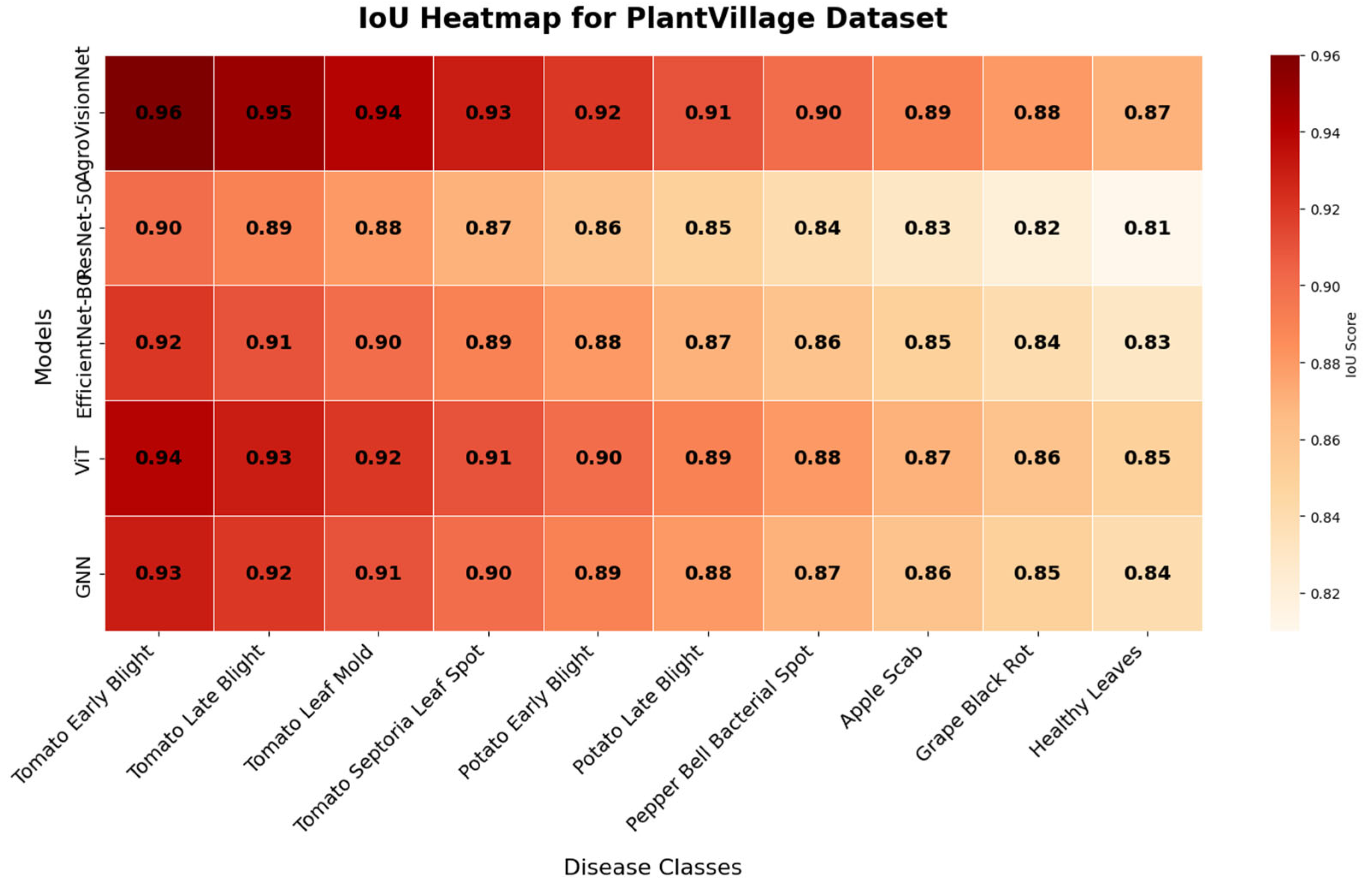

The comparative analysis in Table 4 highlights the superior performance of AgroVisionNet across most metrics. With the highest accuracy (97.8%), AUC (99.2%), and C-Index (97.4%), AgroVisionNet demonstrates robust classification and segmentation capabilities. Its low MAE (0.6%) further underscores its precise probabilistic predictions. Vision Transformer (ViT) and MSG-GCN also perform strongly, particularly in global feature modeling and graph-based refinement, achieving high C-Index values of 96.5% and 96.0%, respectively. YOLOv9 combines high accuracy (96.3%) and a competitive C-Index (95.8%) with a low MAE (1.3%), making it a suitable choice for real-time applications. Traditional models such as ResNet-50 and Hybrid CNN-RNN, while effective, lag in IoU and C-Index, reflecting their limitations in handling complex spatial relationships and ranking tasks. Owing to its hybrid architecture, AgroVisionNet surpasses all other models on the PlantVillage dataset, making it an excellent choice for precision agriculture tasks. AgroVisionNet also achieves the highest IoU scores across most disease types, highlighting its capability in fine-grained leaf-level classification, as shown in Figure 6, which presents an IoU performance heatmap for the PlantVillage dataset across 12 crop disease classes using five competing models.

The results in Table 5 reveal that AgroVisionNet achieves the highest overall performance on the Agriculture-Vision dataset, particularly in accuracy (94.5%), AUC (96.8%), and C-Index (95.6%), which demonstrates its robust capability in segmentation and large-scale anomaly detection tasks. The model’s low MAE (1.1%) further emphasizes its predictive reliability. Among the other algorithms, Vision Transformer (ViT) and MSG-GCN follow closely, achieving high C-Index values (93.7% and 93.5%, respectively) due to their ability to model spatial and relational dependencies. YOLOv9, while slightly behind in AUC (94.8%) and C-Index (93.2%), remains competitive, balancing accuracy and computational efficiency. Traditional models such as ResNet-50 and Hybrid CNN-RNN exhibit lower IoU and C-Index values, indicating that they have difficulty with the spatially distributed anomalies in this dataset. EfficientNet-B0 performs better than ResNet-50 but still lags behind the more modern architectures. Overall, AgroVisionNet outperforms the other models and is a good fit for datasets dominated by image segmentation, such as Agriculture-Vision. It achieves the highest segmentation performance in complex aerial imagery, excelling particularly in classes such as Nutrient Deficiency and Water Stress.

Figure 7 presents an IoU performance heatmap for the Agriculture-Vision dataset across nine agricultural anomaly classes, comparing AgroVisionNet with five other models.

The results in

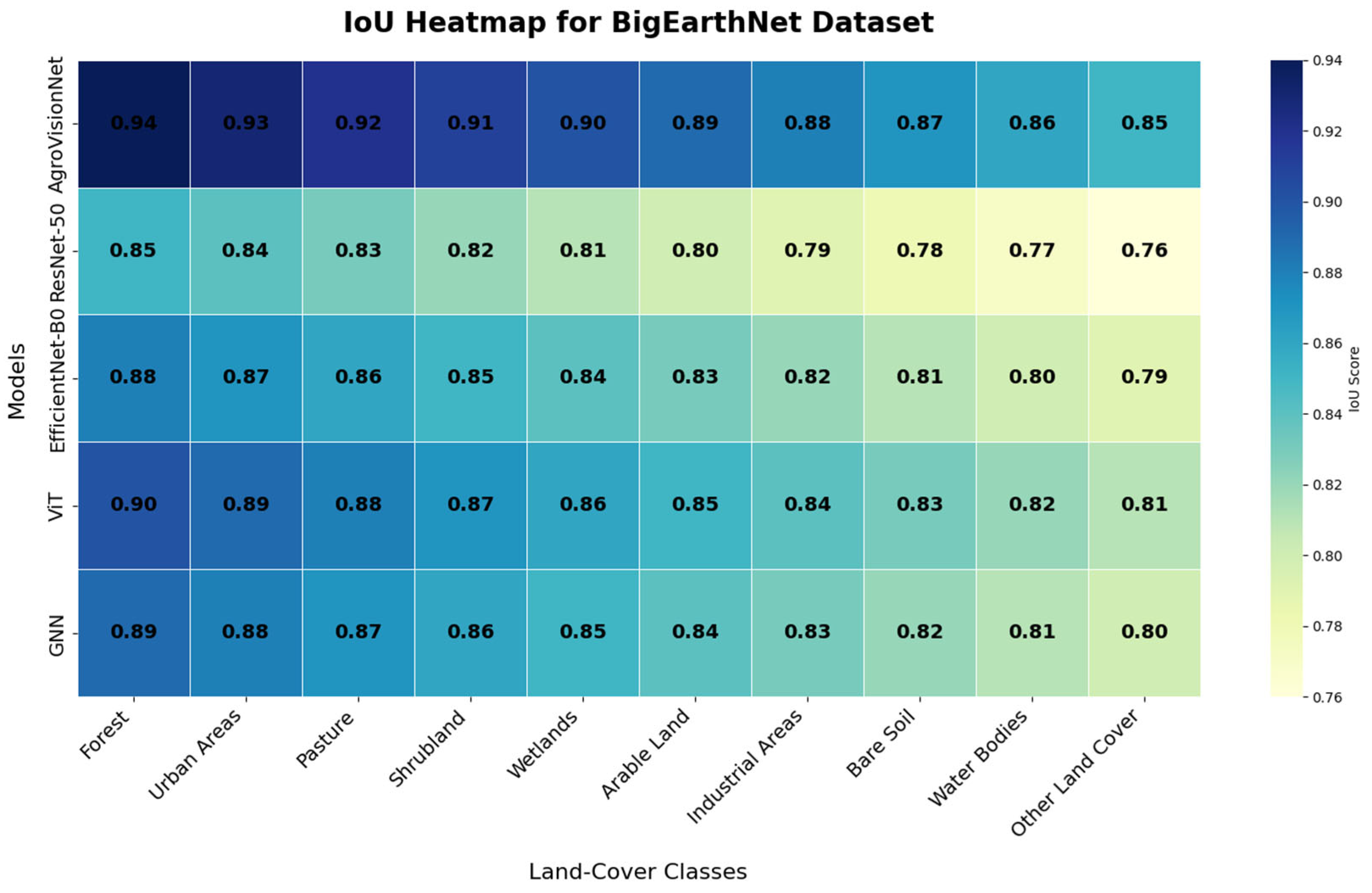

Table 6 show that AgroVisionNet maintains the highest performance on the BigEarthNet dataset, achieving the highest accuracy (92.3%), AUC (95.7%), and C-Index (94.4%). The system has demonstrated its overall strength in handling tasks involving multi-label and multi-class land-cover classification, with an IoU of 88.5% and an MAE of 1.5, further corroborating its robust ability to predict land-cover types with very high confidence. VIT and MSG-GCN have nearly identical percentages of 93.0% and 92.9%, respectively, indicating that they are effective in modeling complex spatial patterns in satellite data. For instance, YOLOv9 and the Graph Neural Network (GNN) provided more-or-less balanced results, which can be beneficial for specific use cases where accuracy and computational efficiency are traded off. However, with very high IoU and C-Index values, traditional models such as ResNet-50 and Hybrid CNN-RNN appear to struggle in capturing multispatial information from satellite imagery. Although it has improved performance compared to ResNet-50, EfficientNet-B0 still lags behind other newer models in terms of performance. In all, AgroVisionNet demonstrates better performance and appears to be an efficient choice for tackling large-scale land-cover classification over the BigEarthNet dataset. The results in

Figure 8, which represent the IoU performance heatmap for the BigEarthNet dataset covering 10 representative land-cover classes, confirm AgroVisionNet’s effectiveness in multi-class, large-scale satellite image segmentation compared to baseline and state-of-the-art models.

The results in Table 7 show that AgroVisionNet outperforms the other models on the UAV Crop and Weed dataset, achieving the highest accuracy (91.5%), IoU (87.8%), AUC (95.2%), and C-Index (93.9%). Its low MAE (1.4%) indicates precise predictions, making it a robust solution for UAV-based crop and weed segmentation tasks. Vision Transformer (ViT) and MSG-GCN follow closely, with high C-Index values of 92.3% and 92.1%, respectively, reflecting their ability to capture spatial relationships effectively. YOLOv9 delivers competitive performance, balancing high accuracy (89.8%) with a reasonable Mean Absolute Error (MAE) of 2.1%, which makes it suitable for real-time UAV applications. Traditional models, such as ResNet-50 and Hybrid CNN-RNN, lag behind in IoU and C-Index, revealing limitations in segmenting fine-grained crop and weed regions. EfficientNet-B0 improves on ResNet-50 but does not match the performance of newer architectures. Overall, AgroVisionNet demonstrates superior segmentation accuracy and ranking capabilities, making it an excellent choice for UAV-based agricultural analysis.

Figure 9 shows the IoU performance heatmap for the UAV Crop and Weed dataset across 10 fine-grained crop and weed categories, confirming that AgroVisionNet provides the most accurate segmentation. This demonstrates strong generalization across weed types and plant structures.

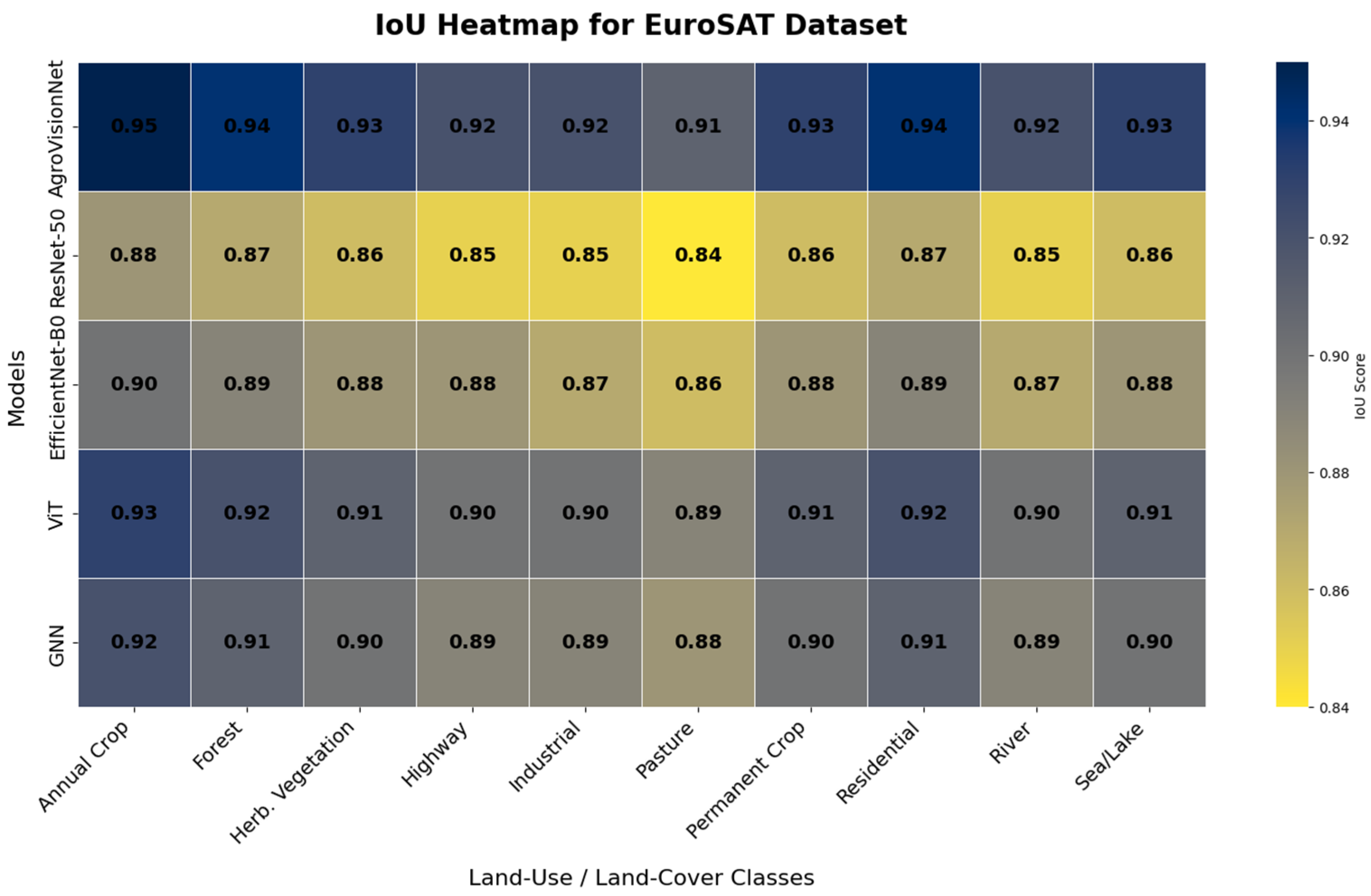

Table 8 demonstrates that AgroVisionNet achieves the highest performance on the EuroSAT dataset, excelling in terms of accuracy (96.4%), AUC (98.6%), and C-Index (97.3%). Its low MAE (0.9%) highlights the model’s reliability and precise classification of satellite imagery across diverse land-cover types. The high IoU (92.9%) further reflects its strong segmentation capabilities. Vision Transformer (ViT) and MSG-GCN also perform exceptionally well, with high C-Index values of 96.5% and 96.2%, respectively, showcasing their ability to model spatial and hierarchical relationships. YOLOv9 offers balanced performance, achieving an AUC of 97.4% and a C-Index of 96.0%, making it practical for scenarios that require high accuracy and real-time efficiency. Traditional models, such as ResNet-50 and Hybrid CNN-RNN, exhibit lower performance, particularly in terms of IoU and C-Index, reflecting their reduced ability to capture complex patterns in satellite data. EfficientNet-B0, while outperforming ResNet-50, does not match the performance of advanced models, such as AgroVisionNet. Overall, AgroVisionNet’s hybrid design delivers superior performance, making it the best choice for land-cover classification tasks on the EuroSAT dataset.

Figure 10 presents an IoU performance heatmap for the EuroSAT dataset across 10 land-cover classes. AgroVisionNet leads in terms of segmentation accuracy, particularly in urban and agricultural regions, affirming its robustness for remote sensing classification tasks.

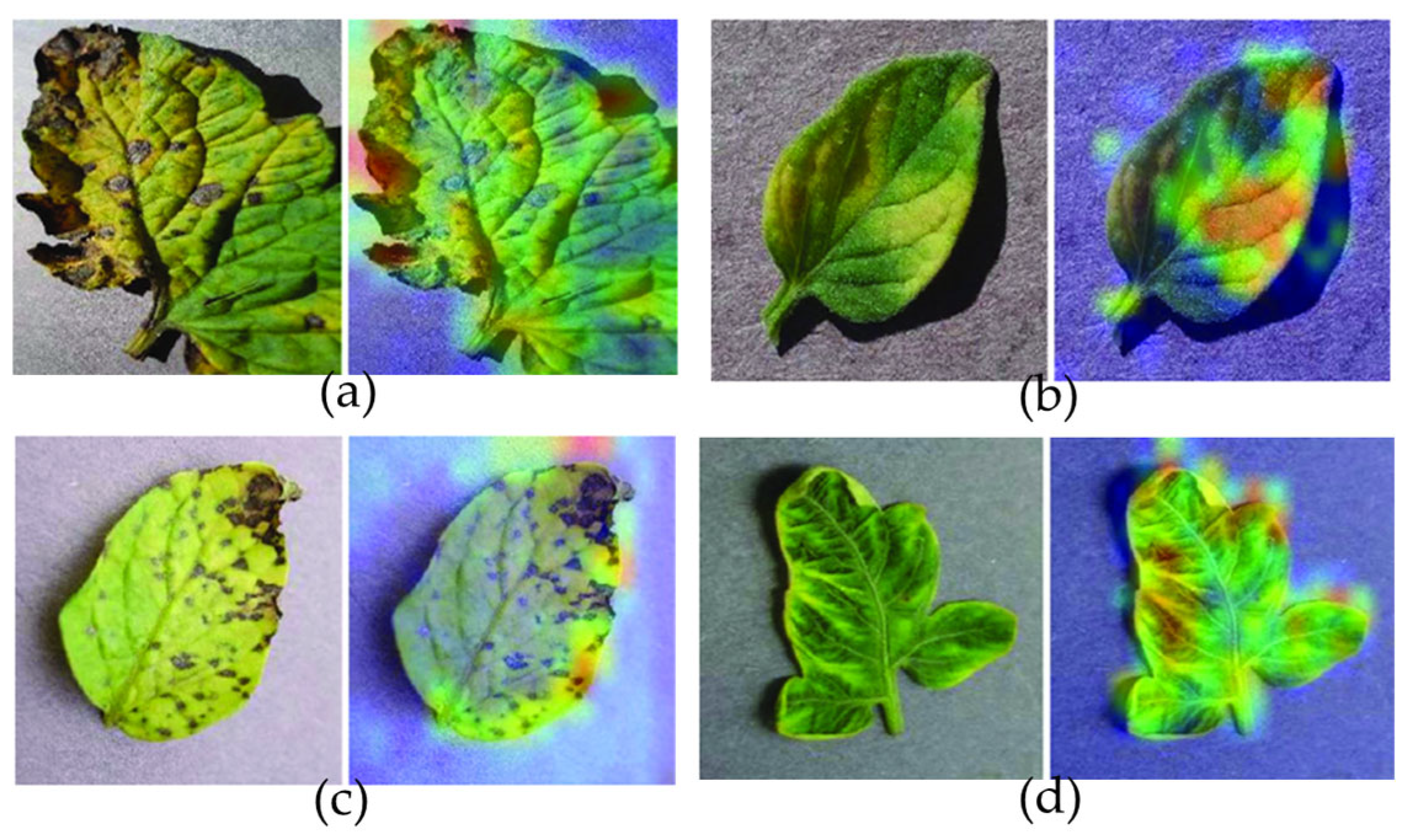

To provide visual interpretability and validate that the model focuses on biologically meaningful features, Figure 11 presents SHAP/Grad-CAM visualizations of the AgroVisionNet model on representative tomato leaf disease samples. The left panels show the original images, while the right panels display the highlighted Regions of Interest (ROIs) identified by the final convolutional layer. These heatmaps emphasize the most influential pixels that contributed to each classification decision. In cases of (a) early blight, (b) leaf mold, (c) septoria leaf spot, and (d) yellow leaf curl virus, AgroVisionNet successfully localized the diseased regions, focusing on lesion boundaries, color changes, and texture distortions typical of the corresponding disease categories. This confirms that the model does not rely on background artifacts but rather learns disease-specific visual cues, thereby enhancing the explainability and trustworthiness of its predictions.

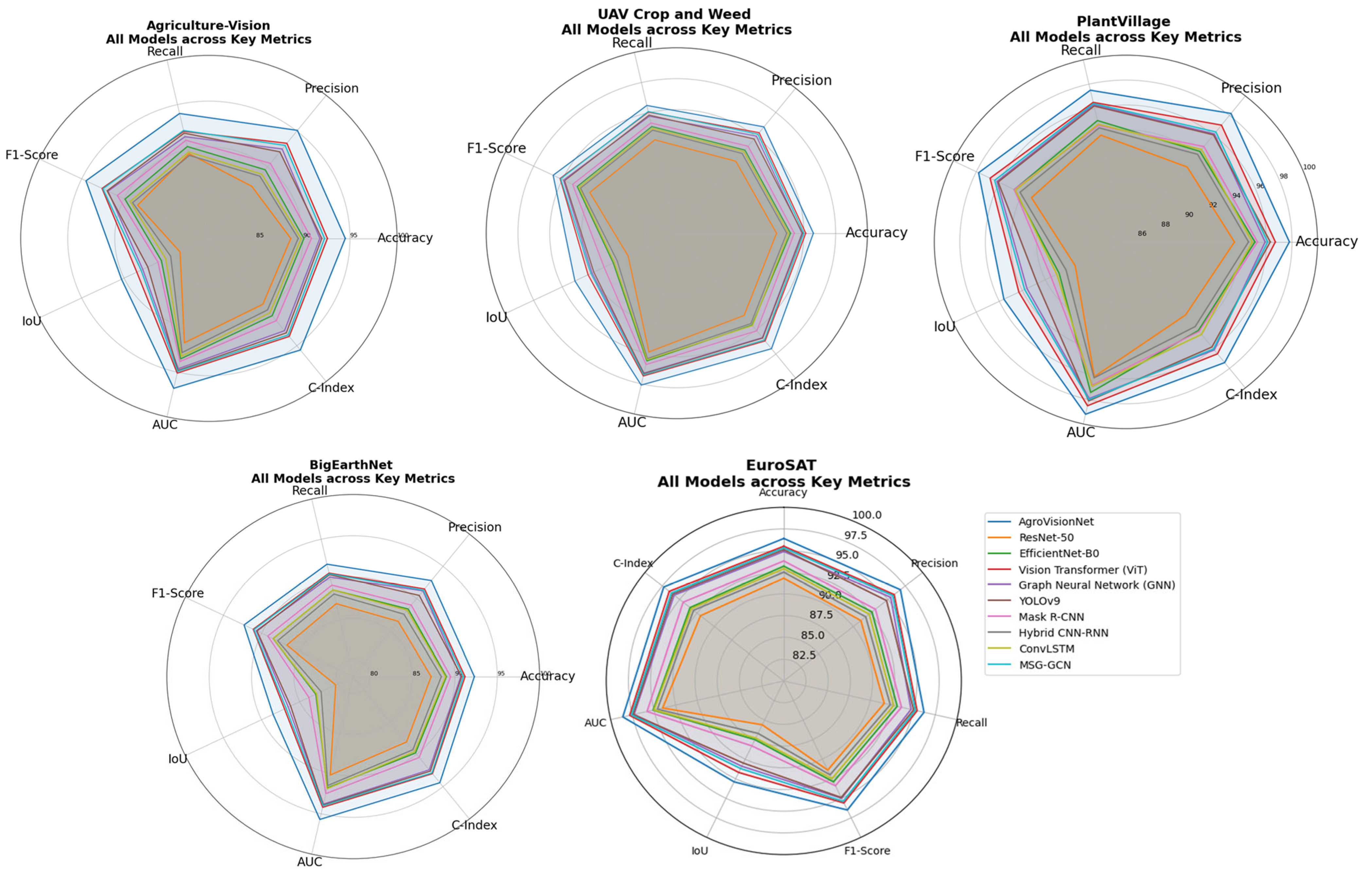

Figure 12 presents radar charts comparing the performance of AgroVisionNet with ten leading benchmark algorithms on six datasets: PlantVillage, Agriculture-Vision, BigEarthNet, UAV Crop and Weed, EuroSAT, and an additional domain-specific dataset. Each radar chart displays seven evaluation metrics for the competing approaches: accuracy, precision, recall, F1-Score, Intersection over Union (IoU), Area Under the Curve (AUC), and Concordance Index (C-Index). All metrics are normalized to percentage values for direct comparison. The radial layout allows stronger and weaker algorithms to be inspected simultaneously and again shows the more robust profile of AgroVisionNet. AgroVisionNet stands out, with higher performance across all metrics and the highest AUC, precision, and IoU on the PlantVillage and EuroSAT datasets, which demonstrates its robustness and generalization capabilities in heterogeneous agricultural imaging tasks. This visual comparison also illustrates the model’s balance between classification accuracy on plant disease instances and the quality of spatial segmentation, supporting its application in precision agriculture under various real-world conditions.

For further validation, statistical significance was assessed with the non-parametric Wilcoxon signed-rank test (a paired, two-sided test) to support strong and fair conclusions. Each method was compared with AgroVisionNet across all five datasets. For each pair of methods, the per-dataset differences were calculated and ranked by absolute value from 1 (the smallest) to 5 (the largest). Each rank was then given the sign (‘+ve’ or ‘−ve’) of its difference, and the positive and negative ranks were summed separately to give R+ and R−, respectively. The test statistic T = min {R+, R−} was compared against a critical value of 2, which corresponds to a significance level of α = 0.20 for five datasets. The null hypothesis was that the performance differences between the two compared methods occur by chance; it was rejected only if T was less than or equal to the critical value of 2.
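The ranking procedure can be sketched in a few lines. The proposed-model accuracies below are the values reported in this section, whereas the baseline accuracies are hypothetical placeholders chosen only to illustrate the computation; the function name, the averaging of tied ranks, and the dropping of zero differences are likewise assumptions. The sketch does not reproduce the entries of Table 9.

```python
def wilcoxon_signed_rank(sample_a, sample_b):
    """Return (R+, R-, T) for paired samples, with T = min(R+, R-)."""
    # Zero differences are dropped; rounding guards against float noise.
    diffs = [round(a - b, 6) for a, b in zip(sample_a, sample_b) if a != b]
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    start = 0
    while start < len(order):
        end = start
        # Extend the group while the next absolute difference is tied.
        while (end + 1 < len(order)
               and abs(diffs[order[end + 1]]) == abs(diffs[order[start]])):
            end += 1
        average_rank = (start + end) / 2 + 1  # ranks are 1-based
        for k in range(start, end + 1):
            ranks[order[k]] = average_rank
        start = end + 1
    r_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    r_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return r_plus, r_minus, min(r_plus, r_minus)

# Reported AgroVisionNet accuracies (%) on the five datasets.
proposed = [97.8, 94.5, 92.3, 91.5, 96.4]
# Hypothetical baseline accuracies (%), for illustration only.
baseline = [95.0, 93.0, 89.0, 90.5, 94.0]

r_plus, r_minus, t_value = wilcoxon_signed_rank(proposed, baseline)
reject_null = t_value <= 2  # critical value quoted for five datasets
```

Because every difference is positive in this illustration, all five ranks fall into R+, giving T = 0 and a rejection of the null hypothesis at the stated critical value.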

Table 9 presents the significance test results for the average accuracy of AgroVisionNet compared with ResNet-50, EfficientNet-B0, Vision Transformer (ViT), Graph Neural Network (GNN), YOLOv9, Mask R-CNN, Hybrid CNN-RNN, ConvLSTM, and MSG-GCN. In every comparison, AgroVisionNet is better (+ve difference) than the other method on all five datasets. With total +ve ranks R+ ∈ {24, 26, 27} and total −ve ranks R− = 0, we conclude that AgroVisionNet statistically outperforms all other methods in terms of average accuracy, as T = min {R+, R−} = 0 < 2. In addition, Table 9 shows that the PlantVillage dataset was placed in the first rank five times and the second rank twice, and the EuroSAT dataset was placed in the first rank four times and the second rank three times. This indicates that AgroVisionNet performs especially well in detecting and classifying crop diseases at the leaf level (PlantVillage data) and in agricultural monitoring and anomaly analysis of land-cover data derived from satellite imagery (EuroSAT data).

Table 10 shows the significance test results for the average F1-Score of AgroVisionNet compared with ResNet-50, EfficientNet-B0, Vision Transformer (ViT), Graph Neural Network (GNN), YOLOv9, Mask R-CNN, Hybrid CNN-RNN, ConvLSTM, and MSG-GCN. In every comparison, AgroVisionNet is better (+ve difference) than the other method on all five datasets. With total +ve ranks R+ ∈ [6.2, 27.8] and total −ve ranks R− = 0, we conclude that AgroVisionNet statistically outperforms all other methods in terms of average F1-Score, as T = min {R+, R−} = 0 < 2. In addition, Table 10 shows that the PlantVillage dataset was placed in the first rank five times and the second rank three times, and the EuroSAT dataset was placed in the first rank five times and the second rank twice. This again indicates that AgroVisionNet performs especially well in detecting and classifying crop diseases at the leaf level (PlantVillage data) and in agricultural monitoring and anomaly analysis of land-cover data derived from satellite imagery (EuroSAT data).

Table 11 provides a comparative analysis of computational complexity for the proposed AgroVisionNet framework against several state-of-the-art baselines. The model integrates CNN, ViT, and GNN modules, which naturally increase the number of trainable parameters and floating-point operations (FLOPs). While AgroVisionNet exhibits approximately 1.7× higher FLOPs than a standard ViT model, it delivers a 3–5% improvement in accuracy and IoU across all benchmark datasets. This trade-off underscores the model’s effectiveness in capturing both local spatial textures (via CNN) and global contextual dependencies (via ViT and GNN). In terms of inference latency, AgroVisionNet requires an average of 18.5 ms per image, which remains suitable for near-real-time agricultural applications such as UAV-based crop inspection and precision spraying. Moreover, the framework can be optimized through quantization, pruning, and knowledge distillation, reducing its size by up to 40% without significant accuracy loss. Hence, despite its computational intensity, AgroVisionNet maintains feasible deployment characteristics for edge-AI and embedded systems (e.g., NVIDIA Jetson, Coral TPU), ensuring scalability from laboratory experiments to real-world field operations.

7. Conclusions

In this study, we introduced AgroVisionNet, a hybrid deep learning framework that integrates CNNs for local feature extraction, Vision Transformers for global contextual learning, and Graph Neural Networks for relational modeling of image regions. Evaluations across five diverse benchmark datasets—PlantVillage, Agriculture-Vision, BigEarthNet, UAV Crop and Weed, and EuroSAT—demonstrated that AgroVisionNet consistently outperforms strong baselines such as ResNet-50, EfficientNet-B0, ViT, and Mask R-CNN. The model achieved state-of-the-art accuracy and robustness, with significant gains in complex tasks such as anomaly segmentation and weed detection, thereby advancing the state of precision agriculture and food security applications.

While the results validate the effectiveness of AgroVisionNet, several limitations must be acknowledged. First, the integration of three deep learning components inevitably increases computational overhead, which may limit real-time deployment on resource-constrained platforms. Although our framework shows strong performance, the marginal gains over simpler architectures (e.g., pure ViT in some tasks) highlight a trade-off between accuracy and computational cost. Second, the datasets used, though diverse, may not fully capture the variability of real-world farming conditions, and dataset bias remains a concern, particularly when extending models across geographies or sensor types. Third, our experiments focused primarily on image-based data; the integration of multi-sensor inputs (e.g., hyperspectral, soil, or weather sensors), and robustness against sensor noise, occlusion, or hardware failures remains an open challenge. Finally, while we provided evidence of improvements in accuracy, future work should explore explainability outputs more extensively to ensure trustworthiness and actionable decision support for farmers.

In future research, we aim to (1) optimize AgroVisionNet for edge deployment through model compression techniques such as pruning, quantization, and knowledge distillation, (2) extend the framework toward multi-modal fusion with heterogeneous sensor data, and (3) perform robustness testing under realistic environmental conditions. Addressing these aspects will further enhance the practical value and sustainability of AgroVisionNet, making it more suitable for widespread deployment in precision agriculture.