1. Introduction

Food insecurity is a growing concern due to the rising global population, which places significant pressure on the agricultural sector to produce enough food to sustain the expanding population. The latest report from the Food and Agriculture Organization (FAO) on food security and nutrition has shown an increasing trend in the number of people affected by hunger worldwide since 2014 [1]. Therefore, it has become essential to implement sustainable crop production techniques to reduce crop losses and enhance productivity. These techniques may include integrated weed management, variable-rate agrochemical applications, irrigation management, crop vegetation growth monitoring, and yield estimation.

One critical tool for ensuring future food security is the emergence of precision agriculture (PA) technologies, which integrate Global Positioning System (GPS), Geographic Information System (GIS), and remote sensing technologies. By managing, analyzing, and processing a large amount of real-time agricultural data, PA provides farm management services that assist farmers in on-the-spot decisions. PA technologies have been used for a wide array of applications, such as delineating management zones for variable-rate (VR) operations and monitoring crop health [2]. Other examples demonstrate that with real-time remote sensing imagery, a crop health monitoring framework can be instrumental in identifying the impacts caused by insects, weeds, fungal infestations, or weather-related damage [3]. Other implementations of PA technologies included using digital color cameras to determine plant leaf areas for spot application of fungicide [4] or detecting fruit orangeness when estimating yields within citrus orchards [5]. Rehman et al. [6] developed a Graphical User Interface (GUI) system to acquire imagery for controlling spray nozzles of a VR sprayer, resulting in agrochemical savings when applied to spot application.

To provide PA-based farm management solutions to farmers, it is crucial to consider not only the collection of spatiotemporal data but also the processing, analysis, management, and storage of that data [7]. Guo et al. [8] demonstrated that monitoring crop growth status is possible by tracking vegetation indices along with state-of-the-art GPS location information derived from the crop stress maps. Moreover, real-time remote sensing data can significantly enhance decision making in PA if high spatial and spectral resolutions are ensured [9].

Farmers demand faster processing and real-time, actionable information for analyzing the status of their field using image processing [10]. However, processing speed and cost-effectiveness remain significant obstacles for existing PA image-processing techniques operating in real time and at field scale [11]. Although real-time control over farmlands has been achieved using the latest high-performance multiprocessor data-computing systems, such as clusters of networks of central processing units (CPUs) [12], this approach is cost-prohibitive. A field-programmable gate array (FPGA), however, may present an ideal alternative for image-processing applications [13] due to its high processing speed, ability to run multiple tasks simultaneously and in parallel, and cost-effectiveness [13,14,15,16,17].

For site-specific crop management decisions, Ruß and Brenning [18] established spatial relationships among geographical data, soil parameters, and crop properties. However, georeferencing using a GPS with a positional accuracy of less than 1 m is needed for monitoring plant canopy status, yield mapping, weed mapping, and weed detection techniques to construct the most accurate map for VR and PA applications [19,20]. To address this issue, real-time kinematic (RTK)-GPS can achieve positional accuracies at a few-centimeter scale; for example, Sun et al. [21] proved its feasibility through the automated mapping of transplanted row crops.

To meet the need for high positional accuracy in crop monitoring, this study integrated RTK-GPS with FPGA-based real-time image acquisition and processing as the foundation for real-time crop monitoring. The study aims to address current limitations in PA related to computational complexity and develop cost-effective and efficient solutions for field-scale farm management applications. The specific research goals of this study were (1) to develop a real-time crop-monitoring system that includes a custom-developed real-time FPGA-based image-processing (RFIP) system and RTK-GPS for monitoring crop growth and/or for other related purposes; (2) to create a real-time data collection and post-processing system; and (3) to establish a geo-visualization layer to support crop management decisions.

2. Materials and Methods

To achieve the research goals, an RFIP, i.e., a real-time FPGA-based image-processing system, was developed and evaluated in the laboratory environment using colored objects. Subsequently, outdoor testing was conducted on Romaine lettuce (Lactuca sativa L. var. longifolia) plants [22] and image processing was carried out on real-time imagery. An overview of the proposed system and performance evaluation techniques are explained in the following subsections.

2.1. Overview of the Real-Time Crop-Monitoring System

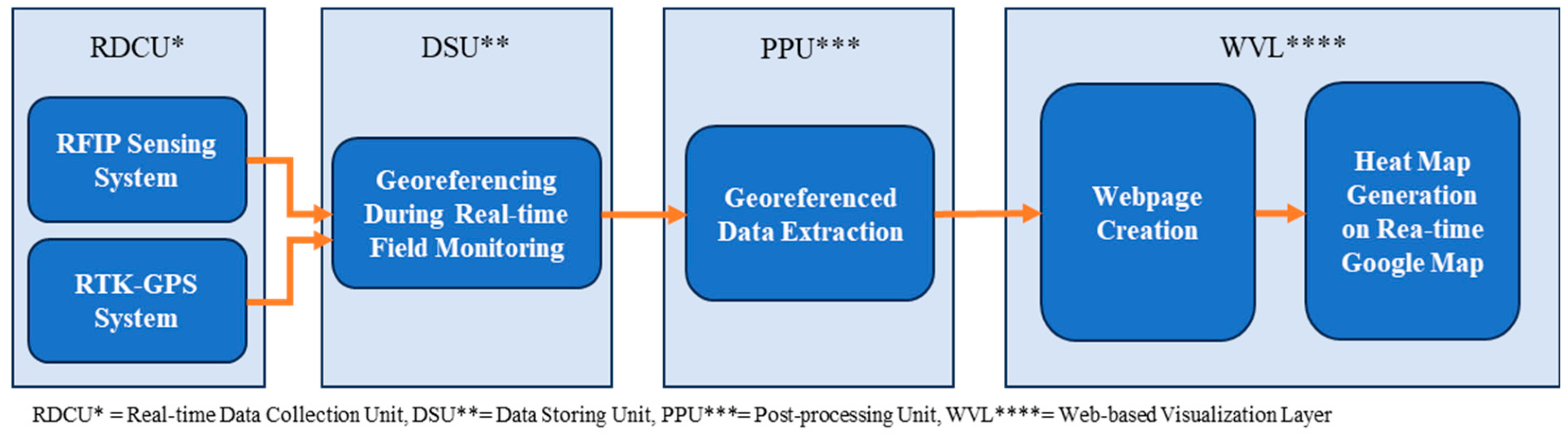

The overall functionality of the real-time crop-monitoring system is depicted in Figure 1. Firstly, an RTK high-accuracy solution using the u-blox NEO-M8P-2 module and the C94-M8P application board (u-blox Inc.; Thalwil, Switzerland) was configured to achieve an accuracy of 8 cm. The RFIP and RTK-GPS served as real-time data collection devices and are collectively referred to as the real-time data collection unit (RDCU). Here, a personal computer (PC) was connected to the output ports of the RDCU and acted as a data-storing unit (DSU). The Python programming tool V10.0 (Python Software Foundation Inc.; Wilmington, DE, USA) was installed in the DSU to read the RDCU output ports and geotag the real-time field data using reference images from the AVERMEDIA Live Streamer CAM 313 (AVerMedia Inc.; New Taipei City, Taiwan) web camera. A digital single-lens reflex (DSLR) camera could not be used for this study because of its heavy weight and high cost. However, the web camera showed good correlation with the DSLR camera in a previous study [22]; therefore, we selected the web camera as an alternative reference camera system.

The collected real-time georeferenced images were then processed by the post-processing unit (PPU) to extract metadata and save it in a specified format for use in the web-based visualization layer (WVL). The PPU used the same PC and programming tool as the DSU; however, the processing did not require real-time data acquisition. The WVL involves two steps: creating and serving local files on a localhost web server and displaying a heat map layer on a real-time Google Map using Maps JavaScript API on the webpage.

The final product is a heat map that displays recorded geographic locations along with real-time field data. The color scheme on the marked locations represents the intensity of the green areas, which is the ratio of the detected Romaine lettuce (Lactuca sativa L. var. longifolia) plant leaf areas to the ground resolution of the RFIP system at corresponding locations.

2.2. Real-Time Data Collection Unit

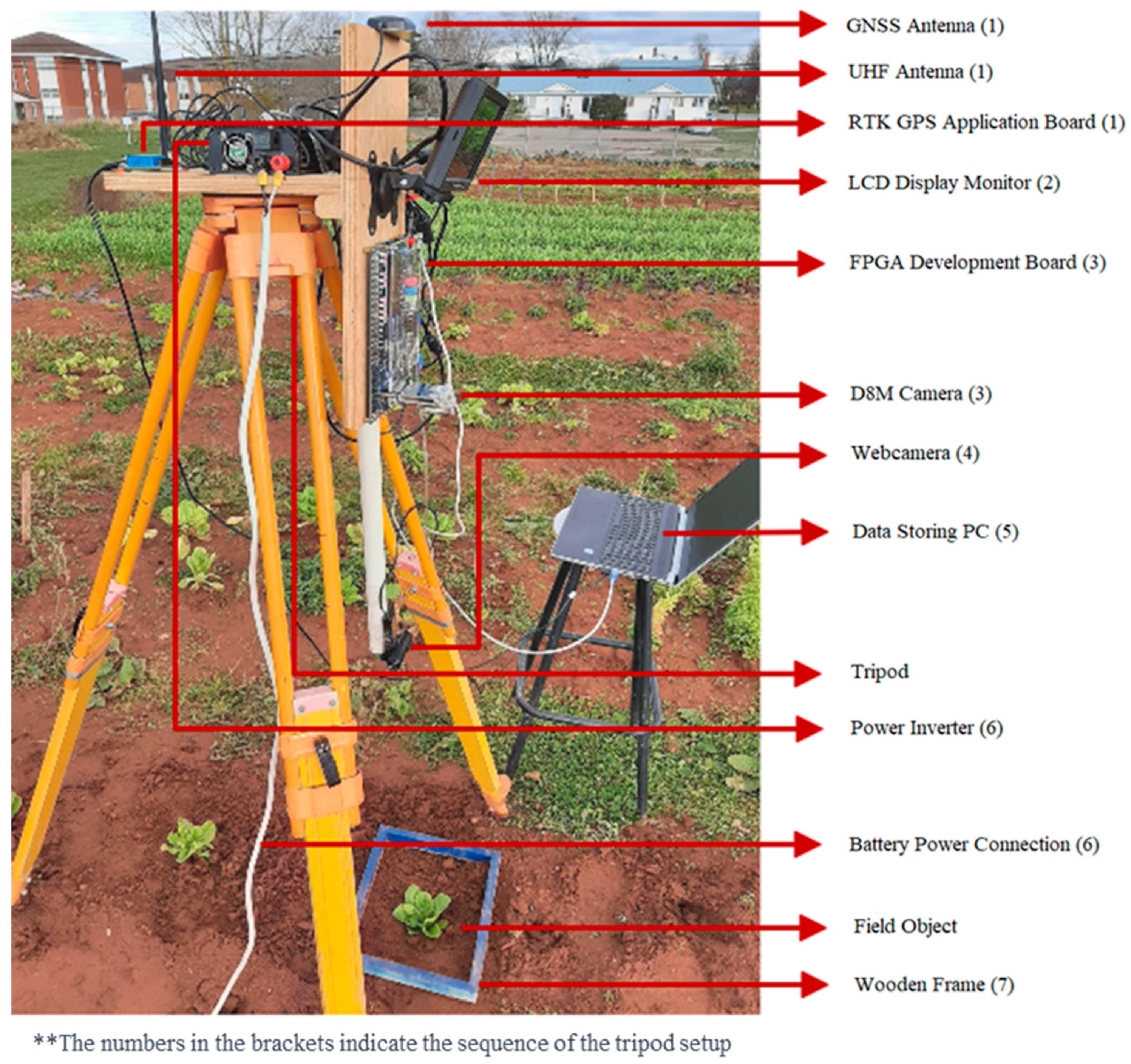

One of the major parts of this research was the RDCU, a prototype of an agile, real-time, FPGA-based, lightweight, and cost-effective crop-monitoring system. For development and testing, the necessary hardware and devices were installed on a horizontal, T-shaped, wooden frame. After installation, the custom-built wooden frame was affixed to a tripod using a metal screw. The tripod had adjustable legs that could be extended to a length of 5 feet and could rotate both left and right.

The tripod setup (Figure 2) includes the following components: (1) one of two identical RTK boards, configured as a rover with two antennas for global navigation satellite system (GNSS) and UHF; (2) a liquid crystal display (LCD) monitor connected to the VGA output port of the FPGA development board, displaying output images based on specific switching logic [22]; (3) the RFIP system, consisting of the FPGA board and D8M camera board, which acquires and processes images and transfers the detected pixel area in real-time; (4) a web camera used for geotagging the GPS location and the detected pixel area; (5) one PC that supplies power to the USB web camera and to the RTK rover, connected with the USB output for downloading the Quartus Prime (Intel Inc.; Santa Clara, CA, USA) program configuration file to the FPGA board, and links with the RS232 serial output port of the RFIP system; (6) a battery source with a 400 W power inverter to supply power to the FPGA device and the LCD monitor; and (7) a blue-painted wooden frame measuring 30.0 cm × 22.5 cm, designed to maintain a consistent camera projection at a fixed ground resolution over the selected spots.

2.2.1. RFIP Sensing System

The RFIP sensing system consisted of three distinct units: the image acquisition, image-processing, and data transfer units [22]. In this setup, the same hardware and software configuration was used to instruct the camera to capture images. The analog gain, digital gain (i.e., red, green, and blue channel gain), and exposure gain were optimized through several experiments and adjustments to acquire imagery at a resolution of 800 × 600 px and at a rate of 40 frames per second. These images were then processed onboard using the G-ratio formula: (255 × G)/(R + G + B) [22]. An intensity threshold of 90 was selected for each of the color ratio filters to produce a binary image, where the detected area appeared white by setting the processed G output color components to the maximum intensity of 255. The resulting binary image was displayed on the LCD monitor attached to the RDCU. Due to the automatic exposure control limitation of the RFIP system, field data collection was scheduled during clear, mainly sunny, or slightly cloudy weather conditions. To avoid potential effects of occasional cloudiness on plant intensity and maintain consistent brightness during data collection, an umbrella was positioned as a shade in the camera’s ground view at all sample points.
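The G-ratio thresholding described above can be illustrated in software. The following is a minimal pure-Python sketch, not the FPGA implementation: the function names are hypothetical, the treatment of black pixels (R + G + B = 0) and the use of a strict ">" comparison at the threshold are assumptions, and only the formula and the threshold of 90 come from the text.

```python
# Sketch of G-ratio thresholding; helper names are hypothetical.
G_RATIO_THRESHOLD = 90  # intensity threshold stated in the text


def g_ratio(r, g, b):
    """Return (255 * G) / (R + G + B); a black pixel yields 0.0 (assumption)."""
    total = r + g + b
    return 0.0 if total == 0 else (255 * g) / total


def detect_green(image, threshold=G_RATIO_THRESHOLD):
    """Binarize an image given as rows of (R, G, B) tuples.

    Returns (binary_image, detected_pixel_count). Detected pixels are set
    to 255 and all others to 0. Using '>' rather than '>=' is an assumption.
    """
    binary = []
    detected = 0
    for row in image:
        out_row = []
        for r, g, b in row:
            hit = g_ratio(r, g, b) > threshold
            out_row.append(255 if hit else 0)
            detected += int(hit)
        binary.append(out_row)
    return binary, detected


# Tiny made-up 2 x 2 image: two greenish pixels, one gray, one black.
sample = [
    [(40, 160, 30), (120, 110, 100)],
    [(0, 0, 0), (60, 200, 60)],
]
binary, count = detect_green(sample)
```

The returned pixel count corresponds to the "detected pixel area" that the data transfer unit reports for each frame.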

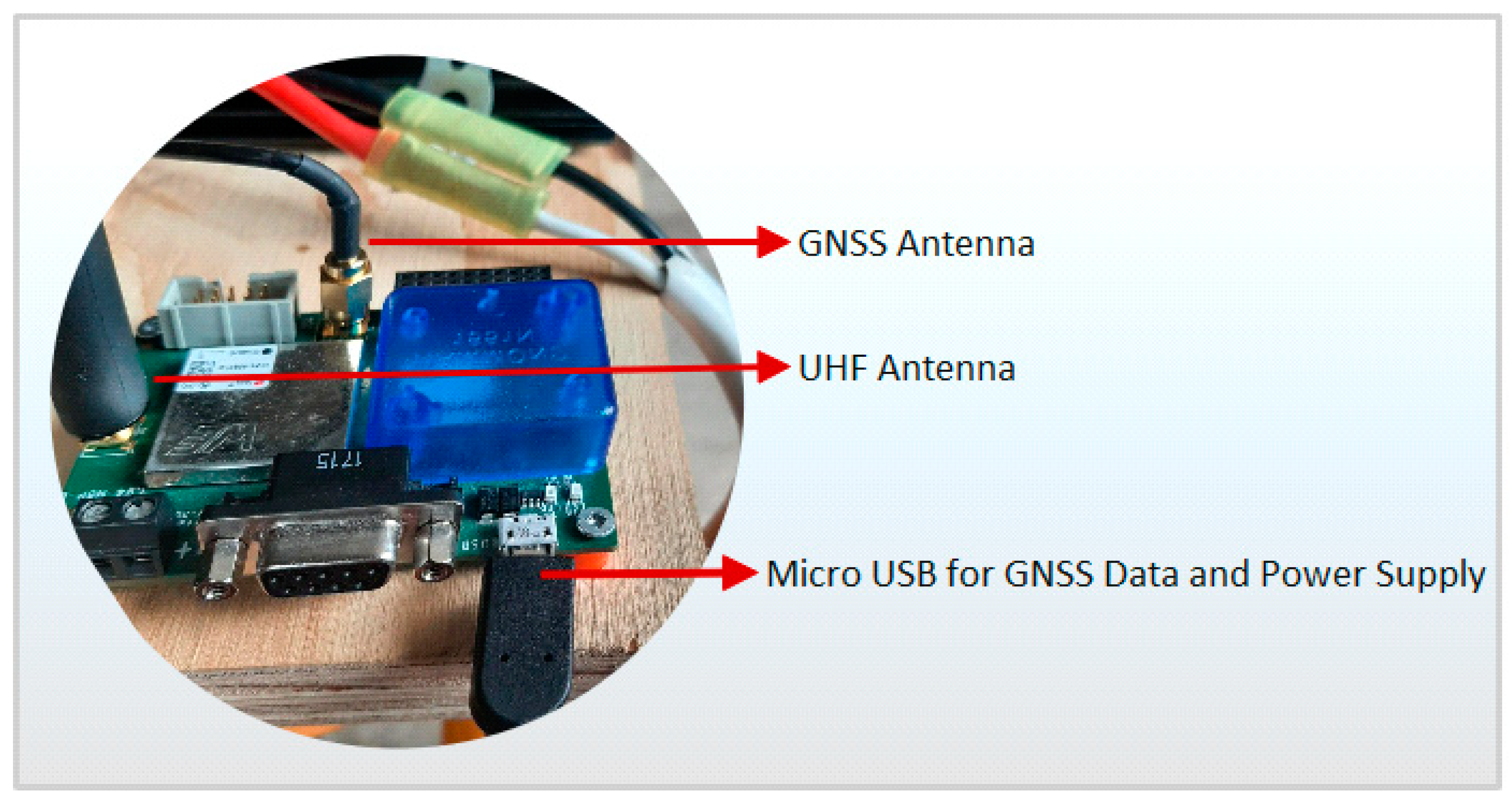

2.2.2. RTK-GPS System

Geolocational information, specifically latitude and longitude, is a crucial factor in the decision support system that assists end-users in applying site-specific farm management solutions in the practical field. A review of current variable-rate PA solutions revealed that GPS accuracy within the meter range is sometimes inadequate for practical ground-truth prescription map generation [

19]. Therefore, another significant aspect of this study was our focus on real-time GPS accuracy in the cm range. To achieve this, two identical RTK boards from u-blox’s M8 high-precision positioning module were configured to serve as RTK rover and RTK base station. Each board required three basic connections (

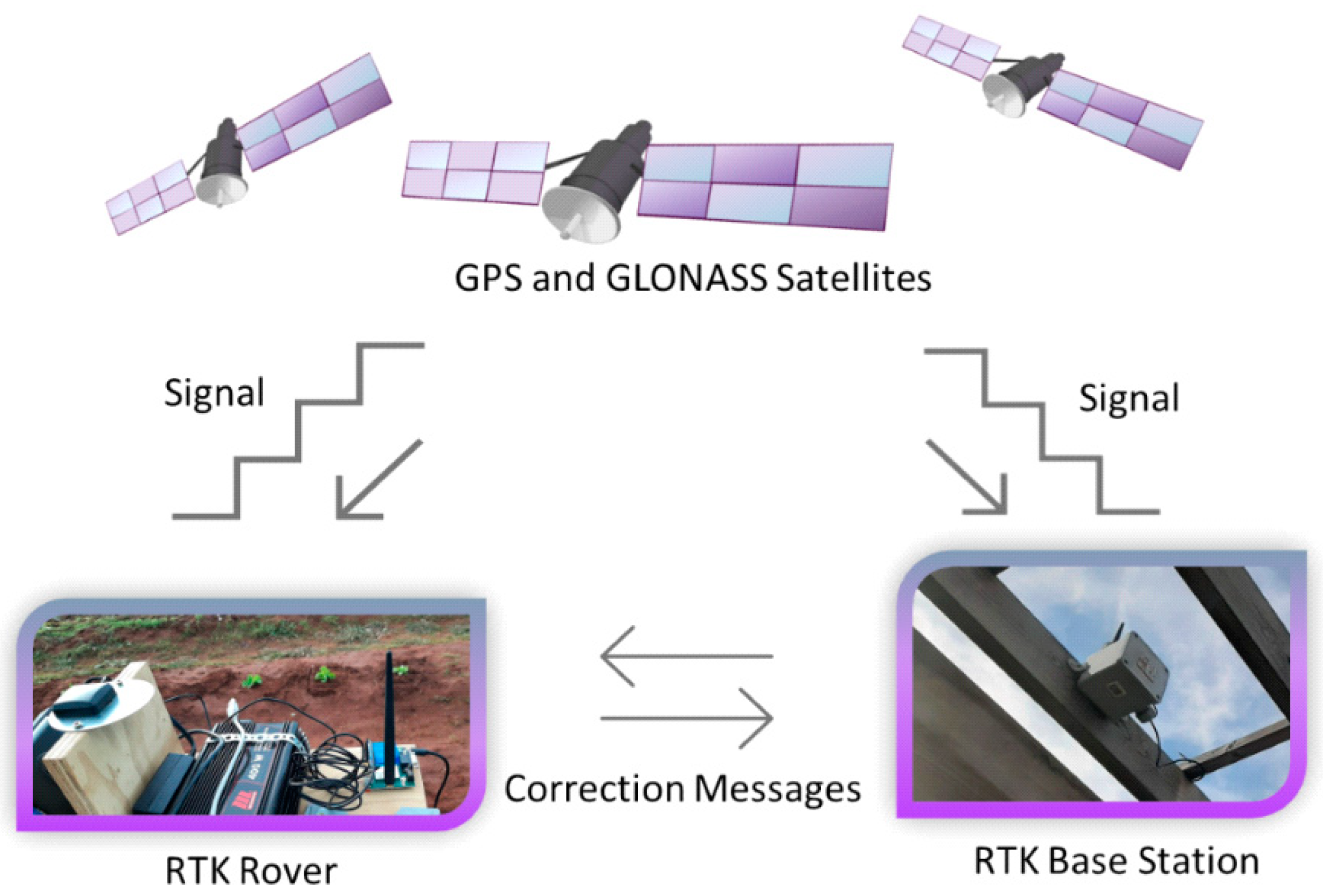

Figure 3): a GNSS patch antenna that responds to the radio signals from GNSS satellites to compute the position, a UHF whip antenna that provides maximum flexibility to assess GPS signals in the high-frequency range, and a micro-USB to supply both the 5-volt power and the configuration setup.

During field data collection with the RTK base station running in TIME mode, the RTK rover took a couple of minutes to go into RTK FLOAT mode and eventually RTK FIXED mode after receiving Radio Technical Commission for Maritime Services (RTCM) corrections and resolving carrier ambiguities. Throughout the data collection period, it was crucial for the RTK rover to be in FIXED mode to provide accurate latitude and longitude. The functionality of the RTK-GPS is depicted in Figure 4.
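Checking for FIXED mode can be illustrated with the fix-quality field of an NMEA GGA sentence, where code 4 denotes an RTK fixed solution and code 5 an RTK float solution. The sketch below is a hand-written parser under stated assumptions: it assumes the rover emits GGA sentences, it ignores the checksum, and the example sentence contains made-up values. The function names are hypothetical.

```python
RTK_FIXED = 4  # NMEA GGA fix-quality code for an RTK fixed solution
RTK_FLOAT = 5  # NMEA GGA fix-quality code for an RTK float solution


def _to_decimal_degrees(value, hemisphere):
    """Convert NMEA (d)ddmm.mmmm text to signed decimal degrees."""
    raw = float(value)
    degrees = int(raw // 100)
    minutes = raw - degrees * 100
    decimal = degrees + minutes / 60
    return -decimal if hemisphere in ("S", "W") else decimal


def parse_gga(sentence):
    """Return (latitude, longitude, fix_quality) from a GGA sentence.

    The checksum after '*' is not validated in this sketch.
    """
    body = sentence.strip().split("*")[0]
    fields = body.split(",")
    if not fields[0].endswith("GGA"):
        raise ValueError("not a GGA sentence")
    lat = _to_decimal_degrees(fields[2], fields[3])
    lon = _to_decimal_degrees(fields[4], fields[5])
    return lat, lon, int(fields[6])


# Illustrative sentence with made-up values and a placeholder checksum.
example = (
    "$GNGGA,150000.00,4522.5300,N,06315.7860,W,"
    "4,12,0.8,30.0,M,-20.0,M,1.0,0000*00"
)
lat, lon, quality = parse_gga(example)
is_fixed = quality == RTK_FIXED
```

A logging loop could discard any sample for which `is_fixed` is false, so that only FIXED-mode positions are recorded.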

2.3. Data Storing Unit

The data-storing unit (DSU) is a crucial component of the real-time crop-monitoring system, enabling software communication between different hardware units through the physical ports of a PC (Figure 5). The DSU consists of a small PC or laptop with an Intel® Core™ i5-8250U CPU @ 1.60 GHz–1.80 GHz, x64-based processor, running the Windows 10 64-bit operating system. Python serves as the core programming tool for designing and operating the DSU, which collects real-time data from the serial output of the RFIP hardware and the RTK rover and geotags them with corresponding reference images at a 1920 × 1080 pixel resolution via USB connection with the web camera. The piexif, PIL, cv2, pynmea2, serial, and fractions Python packages were employed. The freely available integrated development environment (IDE), known as Spyder, from the Anaconda navigator desktop GUI was used to write and run the Python scripts for data collection and storage.
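Geotagging an image requires the decimal latitude and longitude to be expressed as Exif GPS rationals (degrees, minutes, and seconds as numerator/denominator pairs). The sketch below shows one way to do that conversion with the standard fractions module; the function name and the denominator limit are assumptions, and writing the result into the image (for example with piexif) is left out.

```python
from fractions import Fraction


def to_exif_dms(decimal_degrees, is_latitude):
    """Convert signed decimal degrees to an Exif-style reference and DMS rationals.

    Returns (ref, ((deg, 1), (min, 1), (sec_num, sec_den))).
    """
    if is_latitude:
        ref = "N" if decimal_degrees >= 0 else "S"
    else:
        ref = "E" if decimal_degrees >= 0 else "W"

    # Build the fraction from the decimal text to avoid binary rounding noise.
    value = abs(Fraction(str(decimal_degrees)))
    degrees = int(value)
    minutes_full = (value - degrees) * 60
    minutes = int(minutes_full)
    # Limiting the denominator to 10000 is an assumption of this sketch.
    seconds = ((minutes_full - minutes) * 60).limit_denominator(10000)
    return ref, ((degrees, 1), (minutes, 1), (seconds.numerator, seconds.denominator))


# Coordinates of the test site quoted in the text.
lat_ref, lat_dms = to_exif_dms(45.3755, is_latitude=True)
lon_ref, lon_dms = to_exif_dms(-63.2631, is_latitude=False)
```

The reference letter and the three rational pairs map onto the Exif GPSLatitudeRef/GPSLatitude and GPSLongitudeRef/GPSLongitude tags.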

2.4. Post-Processing Unit

In many current real-time crop-monitoring services, analyzing field data is often time-consuming. However, in this research, the RFIP prototype was developed not only to acquire field images but also to process them and deliver results in real time. As a result, the PPU only extracts the image metadata from the georeferenced field images and stores the detected area with the corresponding GPS location in a specified format for use by the WVL.

The PPU uses the same PC and programming tool as the DSU but employs different Python packages. The software for the PPU is simpler than that of the DSU, utilizing only two Python packages: piexif and PIL. Furthermore, it is straightforward, without the need for multiple functions or layers. The Exif metadata of saved images is read and saved as a comma-separated value (CSV) file in a specified location on the PC.
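The CSV export step can be sketched with the standard library alone. The records below stand in for values that would already have been read from the image Exif metadata; the column names, file names, and sample values are hypothetical.

```python
import csv
import io

# Hypothetical column names for the exported file.
FIELDS = ["image", "latitude", "longitude", "detected_pixels"]


def write_records(records, stream):
    """Write one CSV row per georeferenced image to an open text stream."""
    writer = csv.DictWriter(stream, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(records)


# Made-up records standing in for metadata extracted from saved images.
records = [
    {"image": "point01_s1.jpg", "latitude": 45.3755, "longitude": -63.2631,
     "detected_pixels": 120000},
    {"image": "point01_s2.jpg", "latitude": 45.3755, "longitude": -63.2631,
     "detected_pixels": 118500},
]

buffer = io.StringIO()
write_records(records, buffer)
csv_text = buffer.getvalue()
```

To write to disk instead, the same function can be given a file opened with `open(path, "w", newline="")`.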

2.5. Web-Based Visualization Layer

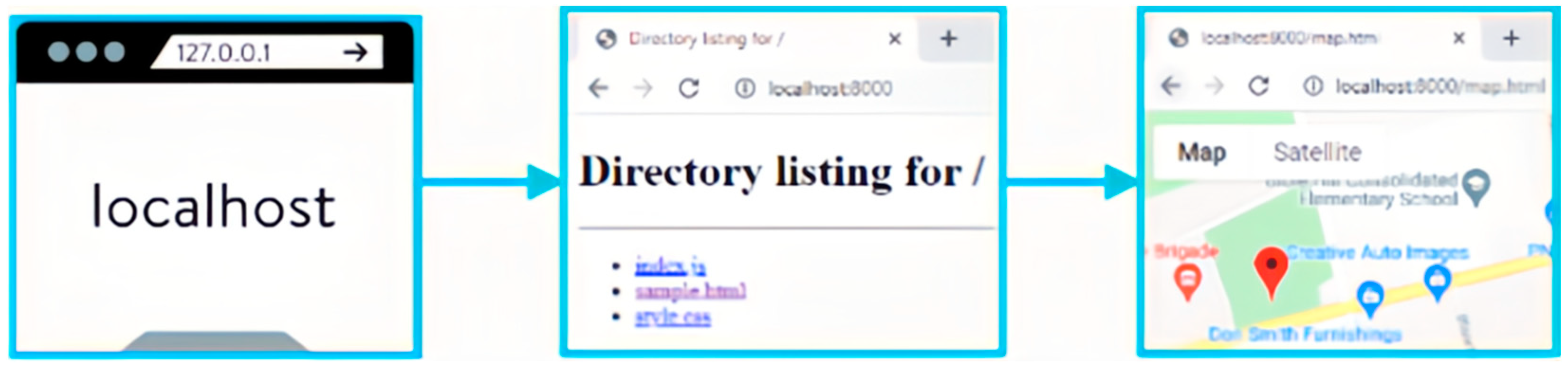

In the pursuit of cost-free map visualization techniques with limited field data, a simpler and cost-effective service has been identified (Figure 6). This approach involves setting up a localhost web server and using the PC as a local server from port 8000. This setup enables the serving of local files, including Hypertext Markup Language (HTML), Cascading Style Sheets (CSS), and JavaScript (JS), on the local port. By accessing Google Maps services and following the heat map layer example, it was straightforward to represent the intensities with corresponding GPS locations as a heat map layer on top of the real-time Google map.

2.5.1. Webpage Creation

The decision was made to use the same Python programming tool as the DSU and the PPU, which was installed on the PC. To establish a simple web server using Python, the ‘hello Handler’ class was created and port 8000 was defined to serve indefinitely, utilizing the HTTPServer and BaseHTTPRequestHandler classes from Python’s http.server module. This made it easy to serve local files in the specified folder directory directly on the localhost:8000 website using the command prompt terminal.

In this setup, the PC was designated as a local server and connected with the web browser through port 8000, as defined in the Python script. The command written in the specified directory inside the command prompt terminal was python -m http.server 8000 (Figure 7). Finally, by typing the http://localhost:8000/ web address into the address bar of the Google web browser, the files within the specified folder directory on the PC became visible as directory listings. After creating an HTML file with any intended content within the folder directory, viewing the webpage from the Google browser became straightforward.
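A handler-based server of the kind described can be sketched as follows. This is an illustration rather than the script used in the study: the class name mirrors the one mentioned in the text, while the response body and the `make_server` helper are hypothetical.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Answer every GET request with a small fixed HTML page."""

    def do_GET(self):
        body = b"<html><body><h1>Crop monitoring heat map</h1></body></html>"
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        # Silence the default per-request logging to stderr.
        pass


def make_server(port=8000):
    """Create (but do not start) a server bound to localhost on the given port."""
    return HTTPServer(("localhost", port), HelloHandler)


# To serve indefinitely on port 8000, one would call:
#     make_server(8000).serve_forever()
```

For plain directory listings and static files, the built-in command `python -m http.server 8000` is sufficient and no custom handler is needed.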

2.5.2. Heat Map Generation on Real-Time Google Maps

The first step in generating a heat map is to display the Google map on the created webpage. This requires having a Google account and using that account to create a new project in the Google Cloud Platform along with a billing account. The next step involves obtaining the application programming interface (API) key by creating credentials for the Maps JavaScript API and Places API. During the 90-day free trial, this API key allows up to 100 data points to be uploaded and up to 1000 visits to the webpage at no cost.

For simplicity, the example scripts have not been extensively modified except for the JS, which includes the latitude and longitude of the map, gradient scheme, radius, opacity to change color appearance, and the data points. The data points were replaced by the latitude and longitude collected during real-time crop monitoring. An important modification was made to the weight field, influencing the visual color scheme on the heat map. The weight field values were generated as intensity values using the detected pixel area during real-time crop monitoring, using Equation (1). We chose shades of green for the heat map color scheme to represent greater or lower percentage areas detected as areas of plant leafage.
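As an illustration of how a weight value might be prepared, the sketch below expresses the detected pixel area as a percentage of the 800 × 600 px image and formats it as a weighted location for the heat map layer. Taking the whole image as the denominator is an assumption based on the description of intensity as the ratio of detected leaf area to the RFIP ground resolution; the function names and the sample pixel count are hypothetical.

```python
ROI_PIXELS = 800 * 600  # RFIP image resolution stated in the text


def intensity_percent(detected_pixels, roi_pixels=ROI_PIXELS):
    """Detected area as a percentage of the region of interest (assumed form)."""
    return 100.0 * detected_pixels / roi_pixels


def to_js_point(lat, lng, weight):
    """Format one weighted location for a Maps JavaScript API heat map layer."""
    return (
        f"{{location: new google.maps.LatLng({lat:.7f}, {lng:.7f}), "
        f"weight: {weight:.2f}}}"
    )


# Made-up pixel count at the test-site coordinates quoted in the text.
weight = intensity_percent(120000)
point = to_js_point(45.3755, -63.2631, weight)
```

Strings produced this way can be pasted into the data array of the heat map example script, one entry per data point.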

2.6. Testing of the RTK-GPS

This study had two primary objectives: to assess the accuracy of the RTK-GPS system at the centimeter level, and to collect the real-time field data, including geographic location and the detected pixel area from the region of interest (ROI). Although the entire system needed to be transported and positioned at selected points on a field, the data were recorded in stationary mode to minimize the potential for human errors.

Data Collection Using RTK-GPS

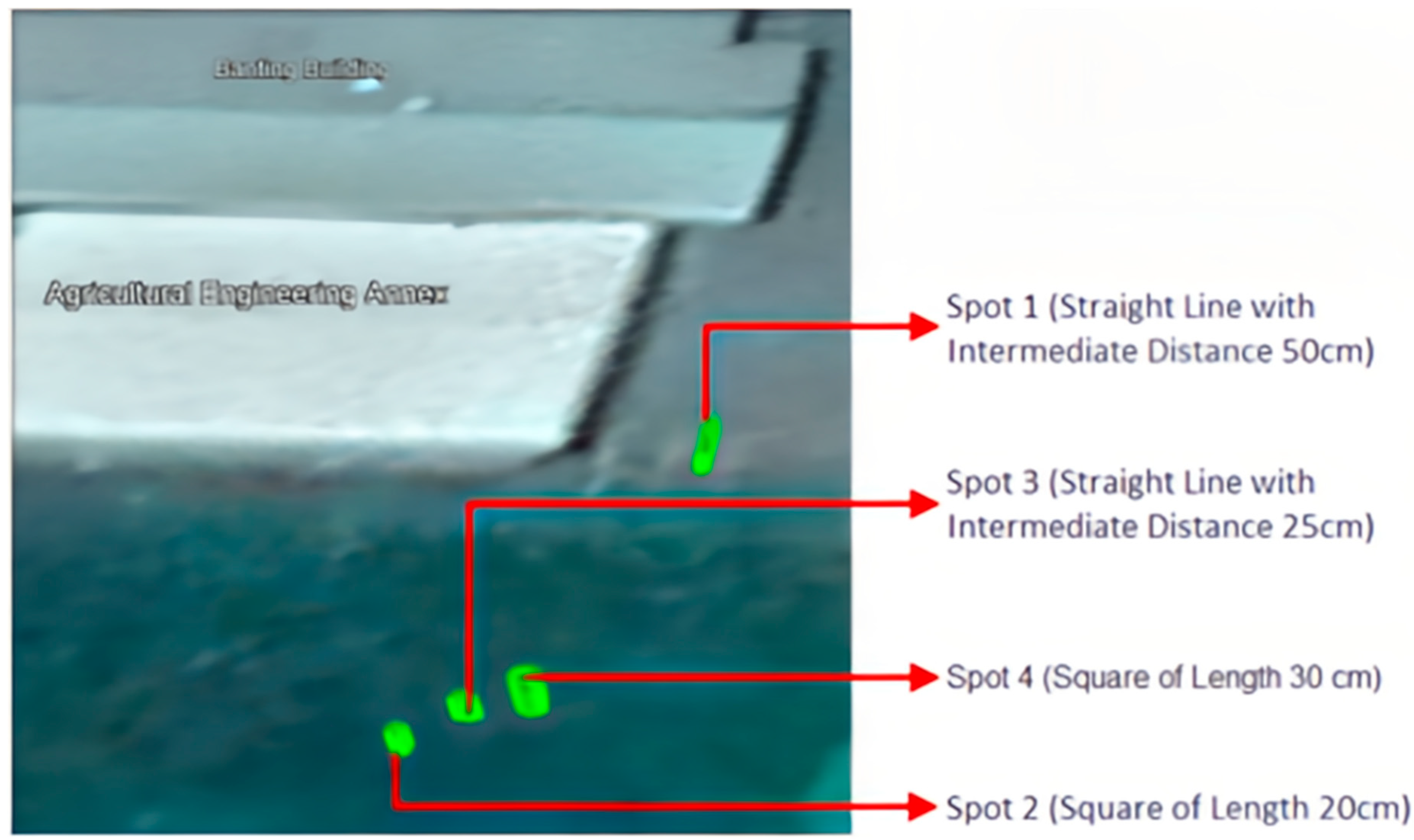

To evaluate the accuracy of the RTK rover, four spots were selected (Spot 1: a straight line with an intermediate distance of 50 cm; Spot 2: a square with a side length of 20 cm; Spot 3: a straight line with an intermediate distance of 20 cm; and Spot 4: a square with a side length of 30 cm) (Figure 8). These spots were located within a 50 m radius of the RTK base station. The GPS data of the RTK rover were recorded using u-center 21.05 (u-blox Inc.; Thalwil, Switzerland) GNSS evaluation software, ensuring the rover’s FIXED status. Subsequently, Google KML files were generated from the four recorded data files using u-center software to visualize the GPS data on a real-time Google map through Google Earth Pro software 7.3 (Google Inc.; Mountain View, CA, USA).
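Besides visual inspection on a map, the spacing between recorded positions could be checked numerically. The following sketch computes the great-circle distance between two latitude/longitude pairs with the haversine formula; it is not part of the described workflow, and the spherical Earth radius is an approximation that is adequate over sub-meter spacings.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius (spherical approximation)


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two points given in degrees."""
    phi1 = math.radians(lat1)
    phi2 = math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (
        math.sin(dphi / 2) ** 2
        + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    )
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


# One degree of latitude along a meridian, as a sanity check.
one_degree = haversine_m(0.0, 0.0, 1.0, 0.0)
```

Applied to consecutive FIXED-mode positions, the result can be compared with the tape-measured spacing of each spot.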

2.7. Testing of the Real-Time Crop-Monitoring System

To test the real-time crop-monitoring system, we selected Dalhousie University’s Demonstration Garden (45.3755° N, 63.2631° W), located within a 100 m range of the RTK base station. We specifically chose a portion of three plant rows within a Romaine lettuce (Lactuca sativa L. var. longifolia) crop area, consisting of 21 plants (7 in each row; Figure 9). The system was repositioned and adjusted by changing the lengths and angles of the tripod’s three legs to maintain a consistent ground resolution of 30 cm × 22.5 cm with an image resolution of 800 × 600 pixels for each of 21 data points. At each data point, we collected a total of 7 samples, resulting in a comprehensive dataset of 147 samples.

The field data were collected from two serial outputs: the latitude and longitude with the RTK rover in FIXED mode, and the pixel area detected as a plant. These data were geotagged as image metadata with the web camera reference image of corresponding data points while maintaining 1920 × 1080 pixels. The reference images were then cropped and resized to match the FPGA imagery. The cropped and resized reference images were processed using the NumPy and cv2 packages in the Python programming language. A Python script was written to perform mathematical operations pixel by pixel, following the G-ratio formula [22]. This resulted in a list of 147 reference pixel areas against which to compare the performance of the RFIP system. Simultaneously, the 147 GPS data points were extracted from the reference images’ Exif metadata and saved as a CSV file. The latitude and longitude from the CSV file were utilized by the Google Earth Pro software to visualize the data points on the Google map for validation.

Finally, to visualize the real-time field data in the form of a heat map on a localhost webpage, an average was computed for every 7 samples from 21 data points, resulting in averaged latitude, longitude, and detected area values. To display the color scheme on the heat map based on the detected area, an additional step was required to prepare the dataset by performing the percentage area calculation for 21 points (using the previously mentioned formula) to assess the intensity of the corresponding geographic location. In this process, latitude, longitude, and intensity values of 21 data points were included in the JS of the local server webpage to create a layer serving as a heat map on top of the Google map.
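The averaging step can be sketched as below, assuming the samples are stored in collection order so that each consecutive block of 7 belongs to one data point. The function name and the sample values are hypothetical.

```python
from statistics import mean

SAMPLES_PER_POINT = 7  # samples collected at each data point, per the text


def average_by_point(samples, group_size=SAMPLES_PER_POINT):
    """Average consecutive groups of (lat, lon, detected_pixels) samples.

    Assumes samples are ordered so that each block of `group_size`
    entries belongs to the same data point.
    """
    if len(samples) % group_size:
        raise ValueError("sample count is not a multiple of the group size")
    averaged = []
    for start in range(0, len(samples), group_size):
        group = samples[start:start + group_size]
        lats, lons, areas = zip(*group)
        averaged.append((mean(lats), mean(lons), mean(areas)))
    return averaged


# Two made-up data points with 7 samples each.
samples = [(45.0, -63.0, 100 + i) for i in range(7)] + [(46.0, -64.0, 200)] * 7
averaged = average_by_point(samples)
```

With the full dataset of 147 samples, the same call would return 21 averaged rows.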

2.8. Performance Evaluation

Since this research aimed to provide a cost-effective, faster, and reliable real-time image-processing system alternative, the web camera imagery was used as the gold standard to compare and evaluate the performance of the RFIP system. The web camera has been widely utilized in real-time image-processing systems in recent years [6,23]. Statistical analyses on collected data were conducted using Minitab 19 (Minitab Inc., State College, PA, USA). Basic statistical measures, including the mean and standard deviation (SD) of the detected pixel area, formed the basis of our comparisons.

For the field evaluation of the RFIP system, there were 7 samples for each of the 21 plants, totaling 147 samples from both the FPGA output and web camera reference system. The FPGA data and web camera data were averaged from 7 samples per plant to produce 21 samples for each system. These data were then analyzed and compared using the G-ratio algorithm for real-time detection in the field environment. The detected pixel area predicted by the RFIP system was correlated with the area detected by the web camera using regression analyses. The coefficient of determination (R²) was calculated to compare the RFIP system’s performance with the web camera’s image acquisition.
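The study ran its regression analyses in Minitab; the sketch below only illustrates the kind of calculation involved. It fits a line through the origin by least squares and reports the squared Pearson correlation as R². Treating R² as the squared Pearson correlation is an assumption, since definitions of R² differ for zero-intercept models, and the data are made up.

```python
import math


def zero_intercept_slope(x, y):
    """Least-squares slope of y = slope * x (line forced through the origin)."""
    return sum(a * b for a, b in zip(x, y)) / sum(a * a for a in x)


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Made-up reference (x) and RFIP (y) areas with a perfect linear relation.
reference = [1.0, 2.0, 3.0, 4.0]
rfip = [2.0, 4.0, 6.0, 8.0]
slope = zero_intercept_slope(reference, rfip)
r_squared = pearson_r(reference, rfip) ** 2
```

A slope close to 1 together with a high R² would indicate that the two systems report nearly the same area.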

Since the RFIP system integrates image acquisition and image processing, the reference images were processed pixel by pixel using Python, applying the same algorithm used in the RFIP system’s image-processing unit. To further assess the degree of agreement between the RFIP data and the reference data, Lin’s concordance correlation coefficient (CCC) was computed using the field test findings [24]. According to Lin [24], when carrying out hypothesis testing, it makes more sense to test whether the CCC is larger than a threshold value, CCC₀, than merely to determine whether the CCC is zero. Equation (2) was used to calculate the threshold, in which R² is the R-squared achieved when the RFIP data were regressed on the reference data; the measure of precision is determined by Equation (3); υ and ω are functions of the mean and standard deviation; and d is the percentage loss in precision that can be tolerated (Lin, 1992) [24].

The null and alternative hypotheses are H₀: CCC ≤ CCC₀ (indicating no significant concordance between the RFIP data and the reference data) and H₁: CCC > CCC₀ (indicating significant concordance between the RFIP data and the reference data). If CCC > CCC₀, the null hypothesis is rejected, establishing the concordance of the new test procedure.
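For reference, the point estimate of Lin’s CCC can be computed directly from paired measurements as twice the covariance divided by the sum of the two variances and the squared difference of the means. The sketch below uses the 1/n (population) moments; it does not reproduce the threshold calculation or the confidence interval, and the data are made up.

```python
def lins_ccc(x, y):
    """Lin's concordance correlation coefficient for paired measurements.

    Uses population (1/n) variances and covariance:
        CCC = 2 * s_xy / (s_x^2 + s_y^2 + (mean_x - mean_y)^2)
    """
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x) / n
    syy = sum((b - my) ** 2 for b in y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    return 2 * sxy / (sxx + syy + (mx - my) ** 2)


# Made-up data: a constant offset lowers concordance even though the
# correlation between the two series is perfect.
reference = [1.0, 2.0, 3.0, 4.0]
shifted = [2.0, 3.0, 4.0, 5.0]
ccc_identical = lins_ccc(reference, reference)
ccc_shifted = lins_ccc(reference, shifted)
```

The shifted example shows why the CCC is stricter than a correlation coefficient: it penalizes systematic bias as well as scatter.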

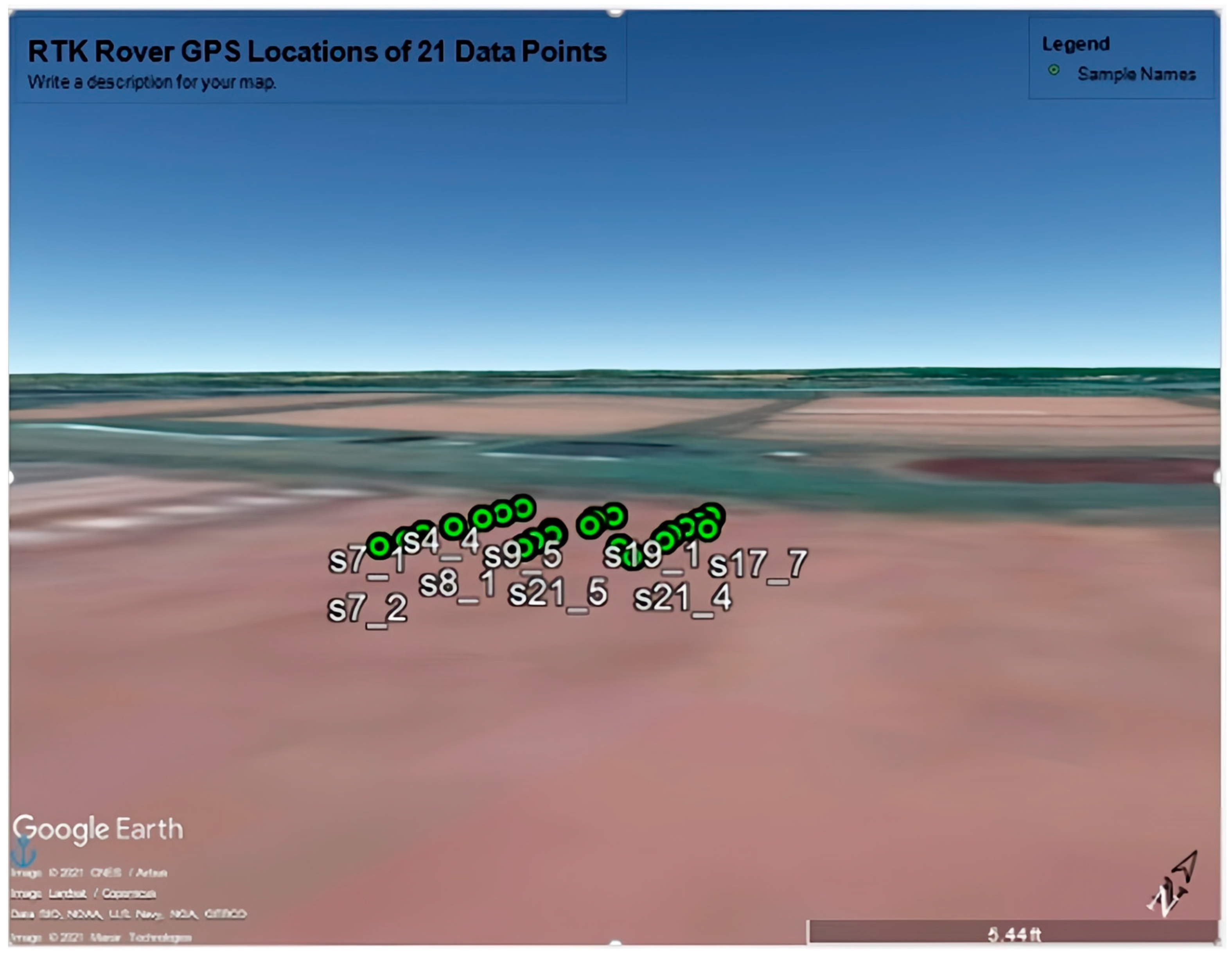

In addition, Google Earth Pro software was used for evaluating GPS data. As this research was conducted within a limited geographical range, the available data points or latitude and longitude values were insufficient to generate a prominent visualization on the Google map. Therefore, instead of using the full-screen images from the Google Earth Pro software, small areas with a few data points were cropped and found to represent the minimum visualization for this prototype.

3. Results

The RTK-GPS data collected from four selected spots during the experiment were visualized using Google Earth Pro software to assess the RTK-GPS performance at an 8 cm scale. The spots were chosen at distances of 50 cm (Spot 1), 30 cm (Spot 2), 25 cm (Spot 3), and 20 cm (Spot 4) (Figure 10). In the Google Earth Pro map visualization presented in Figure 10, the selected lines and squares from the experimental setup are easily identifiable, demonstrating the excellent performance of the configured RTK-GPS in accurately locating geographical places.

The RFIP system’s output from the field experiment, which includes the number of pixels detected as the plant leaf area of Romaine lettuce (Lactuca sativa L. var. longifolia) by applying a green color detection algorithm, was compared with the reference images captured by the web camera, continuing the same experimental setup for both cases. The complete data, including all the resulting numbers, are listed in Table 1.

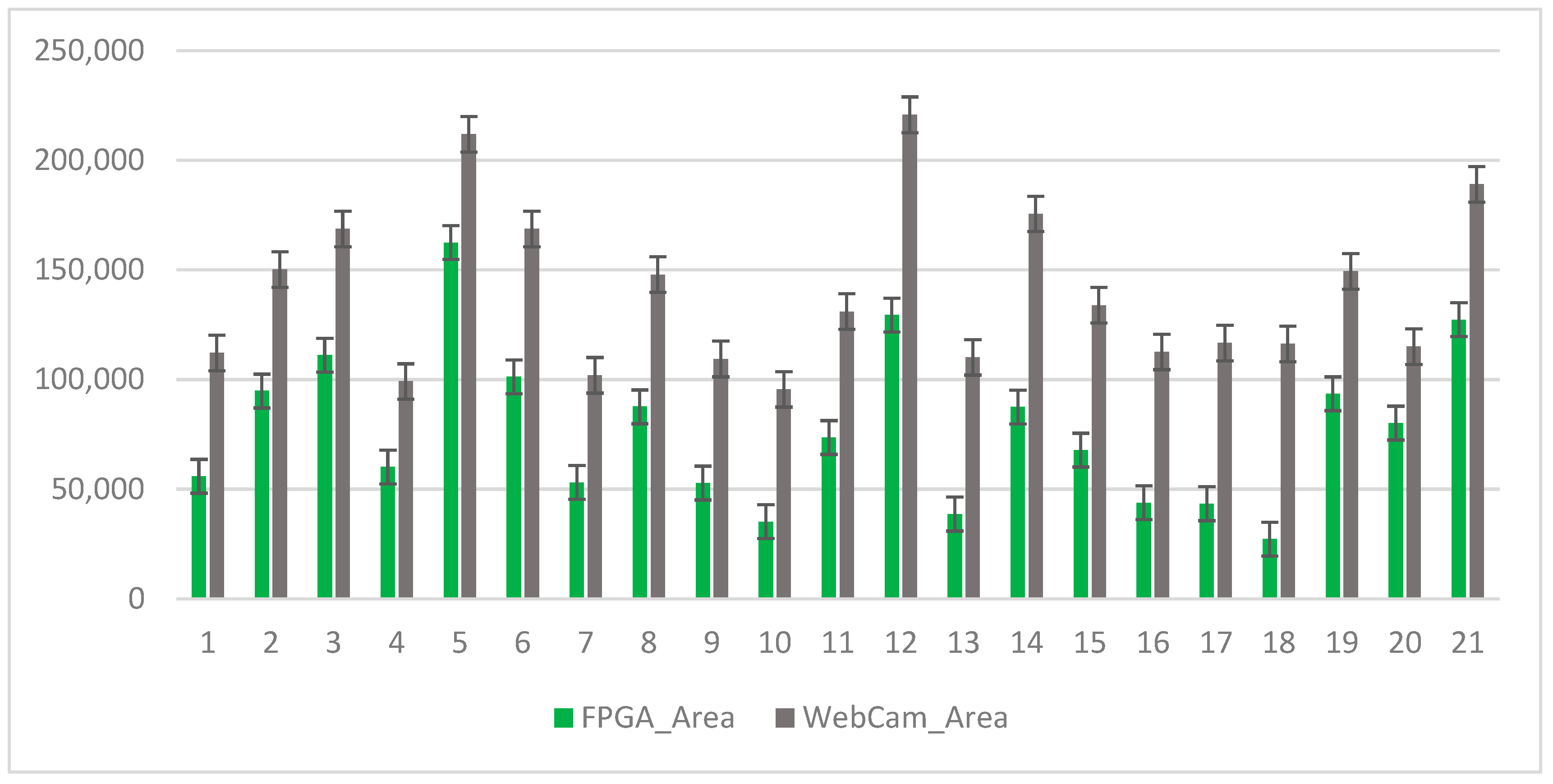

From the numerical data analysis, the variability in the SD of the 21 objects [Romaine lettuce (Lactuca sativa L. var. longifolia) plants] was found to be considerably low, ranging from 0.027% to 8.32% of the ROI. During the field trials, the RFIP system performed well in terms of detecting plant leaf area. The results are further represented as a bar graph showing the area detected by the RFIP system and reference system, along with the SD values as error bars on top of the corresponding data columns (Figure 11). The bar graph illustrates that the RFIP system worked considerably well when evaluated against the reference system.

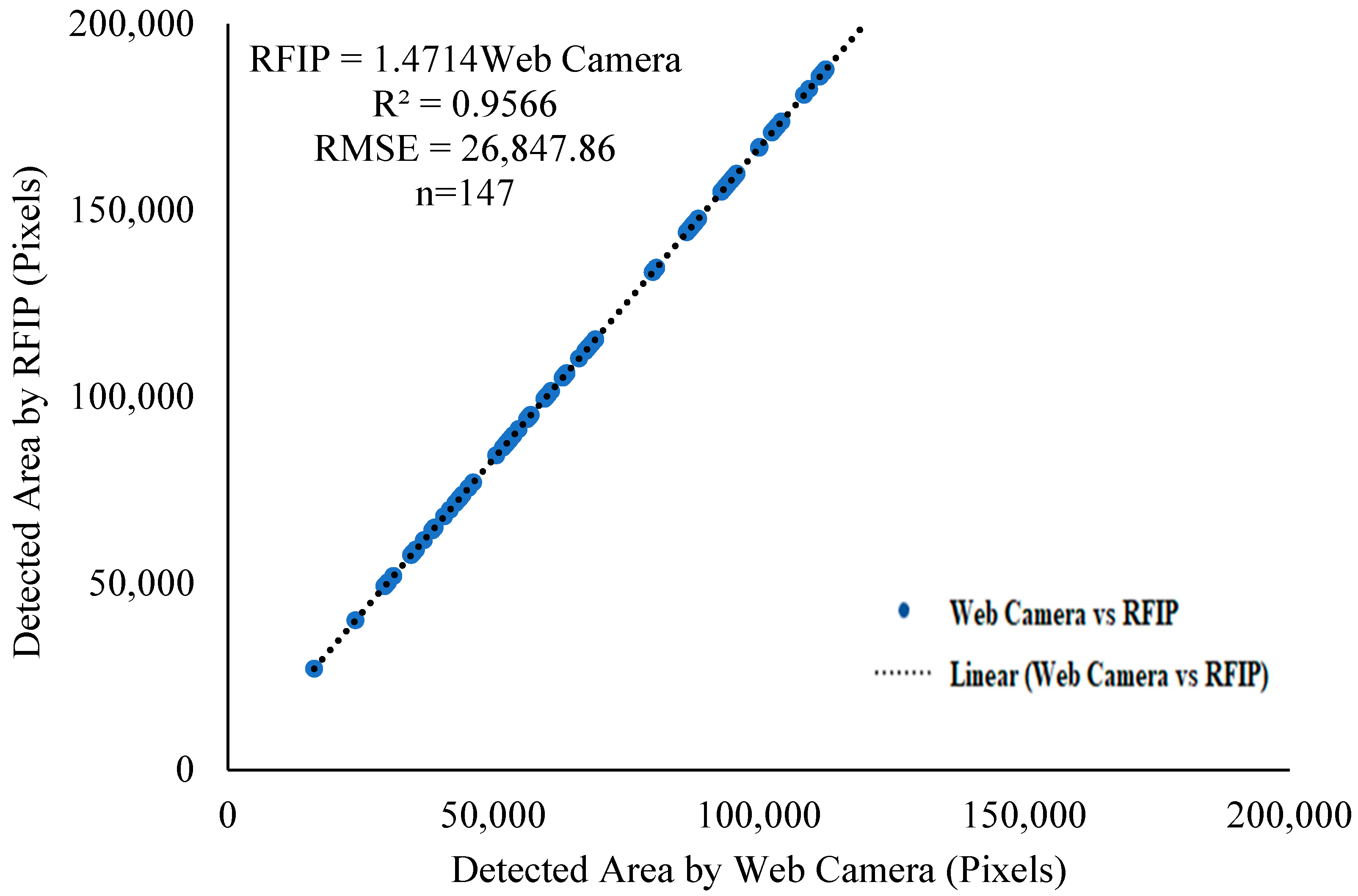

The performance of the developed system was compared to the web camera system using the G-ratio algorithm. The area detected by the RFIP system showed a strong correlation with the web camera reference system (R² = 0.9566), which implies that the developed system could explain 95.66% of the variability in the area detected by the web camera with substantial accuracy (CCC = 0.8292). A regression model, having a zero y-intercept, was generated to visualize the performance of the RFIP system and compare it with an ideal system (Figure 12). Additionally, Lin’s CCC = 0.8292 with a 95% confidence interval (0.7804, 0.8656) and

of 0.8468 with a tolerable 5% loss of precision were obtained from the hypothesis testing based on Equations (2) and (3). As the lower limit of the CCC exceeds the threshold, the test is statistically significant, and the null hypothesis is rejected. Therefore, the field experiment result shows that the proposed RFIP system can be utilized to determine the area that the web camera detects.

Google Earth Pro software was used to visualize the real-time geographic locations of the RTK rover using the 147 GPS locations from the georeferenced web camera images. The best possible view from the ground-level map is shown in Figure 13.

For the final product, 147 RTK data points and the intensity of 147 FPGA data were averaged using 7 samples for each plant to obtain 21 samples, whereby the data were then formatted into a CSV file with the corresponding latitude, longitude, and intensity values. This final dataset was submitted to the webpage’s JS file, which runs as localhost on port 8000 and serves the local files. A numerical representation of the complete data, including all resulting numbers, is presented in Table 2. Due to the limited number of data points, the heat map visualization is not very prominent on the Google map (Figure 14).

4. Discussion

In accordance with the primary prerequisites for evaluating the RFIP system in an outdoor environment [22], several important factors were taken into account during field data collection. Firstly, a mainly sunny day was chosen with a temperature of 4 °C, wind speed of 14 km/h NW, and a maximum wind gust of 30 km/h. Secondly, a large, 7.5-foot, summer outdoor umbrella and a small rain umbrella were used to maintain consistent shade and mitigate the effects of clouds over the plant. However, some unavoidable challenges during the field experiment could impact precise data collection, such as the wind’s effect on plant leaves, the shadowing of leaves on plants with multiple leaves, the color shadow effect from the umbrella, and the significant influence of ambient sunlight and clouds in the open field. Despite these challenges, maintaining a consistent experimental setup improved the system’s performance, as the same real-time crop growth monitoring setup remained stationary while recording the data.

In addition, the RFIP system demonstrated better performance in the outdoor evaluation (99.56% correlation) than in the real crop field evaluation (95.66% correlation) [22]. This difference may be attributed to the reduced impact of wind, the more pronounced shadow over the ground ROI, and the greater number of replications for each object (10 samples per object for outdoor evaluation and 7 samples per object for field evaluation). The RTK-GPS accuracy was precise enough to locate the field data points on the real-time Google map. Although the RFIP system did not show superior performance in real-time crop growth monitoring, it remains useful in assisting the decision support system by providing an accurate ground truth map to farmers for on-the-spot farm management decisions.

5. Conclusions

This study aimed to address the current limitations of high-resolution imagery and to achieve GPS data accuracy of better than 1 m for budget-friendly, real-time monitoring of crop growth and/or germination PA applications. Here, we demonstrated the RFIP system’s significant potential by applying it in field conditions. When validated, the RFIP system achieved a 95.66% correlation with the reference data during field testing. The proposed system has the ability to overcome the challenges in agricultural imaging associated with computational complexity, image resolution, time, and cost involved in deploying photographic technology, thereby providing real-time actionable insights for field management in smart agriculture.

This proposed system primarily focused on the development and initial evaluation of real-time crop monitoring with enhanced accuracy using cost-effective technology. However, the project faced several constraints that impacted its implementation and data collection process. The RFIP system’s automatic exposure control limitations required scheduling field data collection during specific weather conditions and using an umbrella to maintain consistent lighting. Additionally, the RTK GPS required several minutes to achieve the crucial FIXED mode for accurate positioning, potentially delaying data collection. Weight and cost constraints also prevented the use of a high-quality DSLR camera, leading to the adoption of a web camera as an alternative reference system. While this camera correlated with DSLR performance in [22], it introduced some compromises in image quality.

In the future, we aim to develop an advanced automatic exposure control system for the RFIP system that can adapt to varying weather conditions, reducing reliance on specific environmental factors. We will also investigate methods to reduce RTK GPS initialization time and improve stability in FIXED mode. Additionally, we plan to integrate the system with other mobile platforms, such as unmanned ground and aerial vehicles, for real-time crop health monitoring, yield assessment, and crop disease detection.