1. Introduction

Digital-to-Analog Converters (DACs) are indispensable components in mixed-signal integrated circuits, serving as the critical interface between digital computation and the analog world. They are foundational to systems requiring precise analog waveforms, including audio and video processing, instrumentation, communication systems, and sensor interfaces [1]. As the backbone of signal generation and control, DACs must balance competing demands of resolution, linearity, speed, power, and silicon area [2,3,4,5,6].

Popular DAC architectures, such as resistor-string, R-2R ladder, current-steering, and capacitive DACs, are selected based on application-specific trade-offs [7]. In emerging domains such as the Internet-of-Things (IoT) and edge artificial intelligence (AI), the trend toward integrating thousands of DACs on a single chip has heightened the need for ultra-compact, energy-efficient designs [8]. For instance, in analog neural networks and in-memory computing platforms, DACs are deployed to modulate weights stored as charge, conductance, or current. A notable example is the phase-change memory-based AI accelerator published in [9], where on-chip DACs played a pivotal role in determining computational precision and power efficiency.

Similarly, in applications like ultrasound imaging, LiDAR, or MEMS control, DACs are required at the channel or pixel level for biasing or actuation [

10]. Scaling such systems to hundreds or thousands of channels demands DACs that are ultra-compact and energy-efficient. However, aggressive area reduction typically exacerbates mismatch and compromises linearity, resulting in deviation of the DAC transfer function from its ideal behavior (

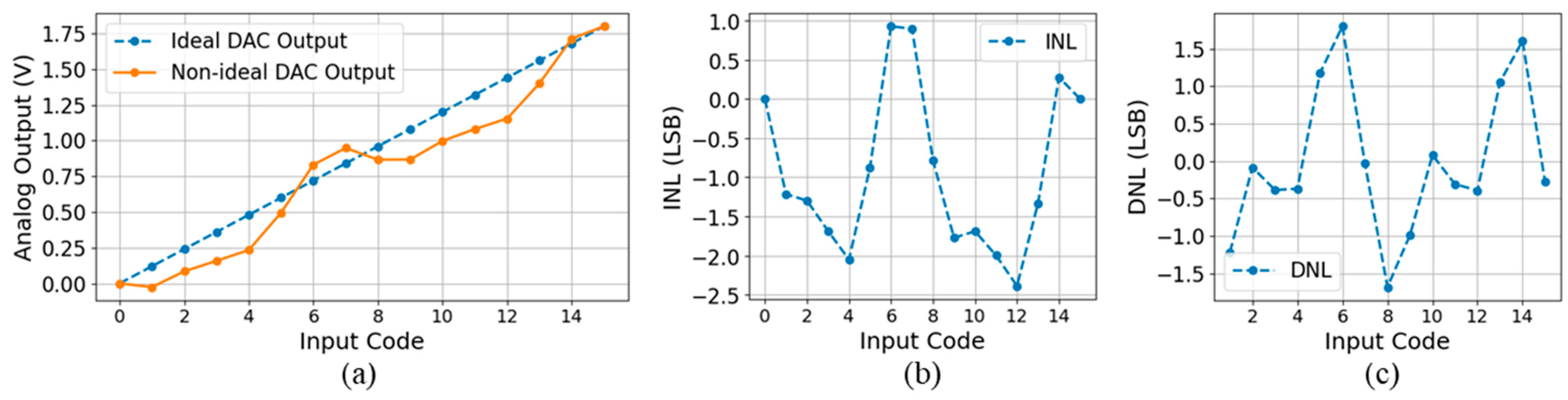

Figure 1a). These deviations are quantitatively characterized by integral nonlinearity (INL) and differential nonlinearity (DNL). INL measures the deviation of each output level from an ideal linear reference, which is typically defined by a straight line connecting the first and last output codes of the DAC, this line iscommonly referred to as the end-point fit. The expression for this ideal linear voltage is given in Equation (1).

where

and

denote the first and last digital input codes, respectively, and

n represents the resolution of the DAC in bits. The INL at each code is given in Equation (2) and illustrated in Figure 1b. The overall INL of the DAC is then defined as the maximum absolute value of the code-level INLs, as expressed in Equation (3).

DNL quantifies the deviation of each output step from the ideal step size. It is mathematically defined in Equation (4) and graphically illustrated in Figure 1c. DNL captures irregularities in step size that may result in missing codes or non-uniform output transitions. The overall DNL is typically expressed as the maximum of the absolute values of DNL(k), as defined in Equation (5). The ideal

LSB, denoted as

is defined as

, where

is the full-scale output voltage and

is the resolution of the DAC.

It should be noted that if

or

the transfer characteristic of the DAC is monotonic [11].
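As a minimal sketch of the end-point definitions above, the following Python snippet computes code-level INL and DNL from a list of measured output voltages. The function name, the sample data, and the choice of deriving the LSB from the end-point line (rather than from a nominal full-scale voltage) are illustrative assumptions, not part of the original formulation.

```python
from typing import List, Tuple


def endpoint_inl_dnl(v_out: List[float]) -> Tuple[List[float], List[float], float, float]:
    """Return per-code INL and DNL (in LSB) and their worst-case magnitudes.

    v_out[k] is the measured output for input code k, k = 0 .. 2**n - 1.
    Assumption: one LSB is the end-point span divided by the number of steps.
    """
    steps = len(v_out) - 1
    lsb = (v_out[-1] - v_out[0]) / steps
    # INL(k): distance of each level from the end-point line, in LSB.
    inl = [(v - (v_out[0] + k * lsb)) / lsb for k, v in enumerate(v_out)]
    # DNL(k): deviation of each step from one ideal LSB, in LSB.
    dnl = [(v_out[k] - v_out[k - 1]) / lsb - 1.0 for k in range(1, len(v_out))]
    return inl, dnl, max(abs(x) for x in inl), max(abs(x) for x in dnl)


# Hypothetical 3-bit DAC whose mid-scale step is 0.4 LSB too large.
measured = [0.0, 1.0, 2.0, 3.0, 4.4, 5.4, 6.4, 7.0]
inl, dnl, inl_max, dnl_max = endpoint_inl_dnl(measured)

# A transfer characteristic is monotonic when no step is negative,
# i.e. when every DNL(k) stays above -1 LSB.
is_monotonic = all(d > -1.0 for d in dnl)
```

By construction, the end-point fit forces the INL of the first and last codes to zero, so any gain or offset error is excluded from the reported nonlinearity.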

Traditional DACs achieve high linearity through meticulous device matching [12], laser trimming [13], or the use of large components [14]. These techniques, while effective, result in significant silicon area overhead. To overcome this trade-off between linearity and area, we introduce a redundancy-based design methodology. The central concept is to design the DAC with relaxed matching constraints by employing smaller, mismatch-prone components to save area, and subsequently restore accuracy through digital calibration. This approach ensures the final DAC achieves near-ideal performance with minimal area cost, enabling aggressive area reduction without sacrificing linearity.

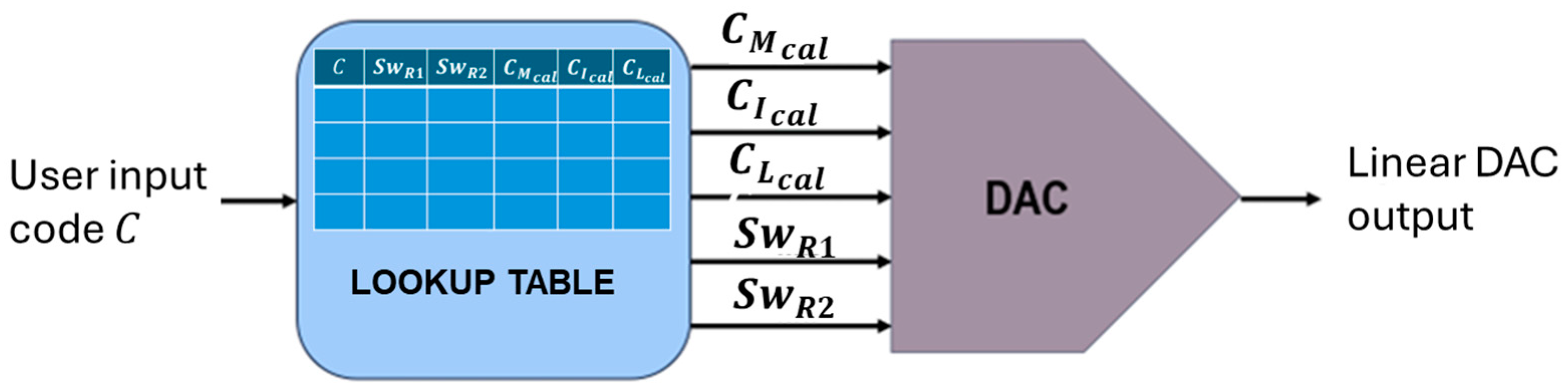

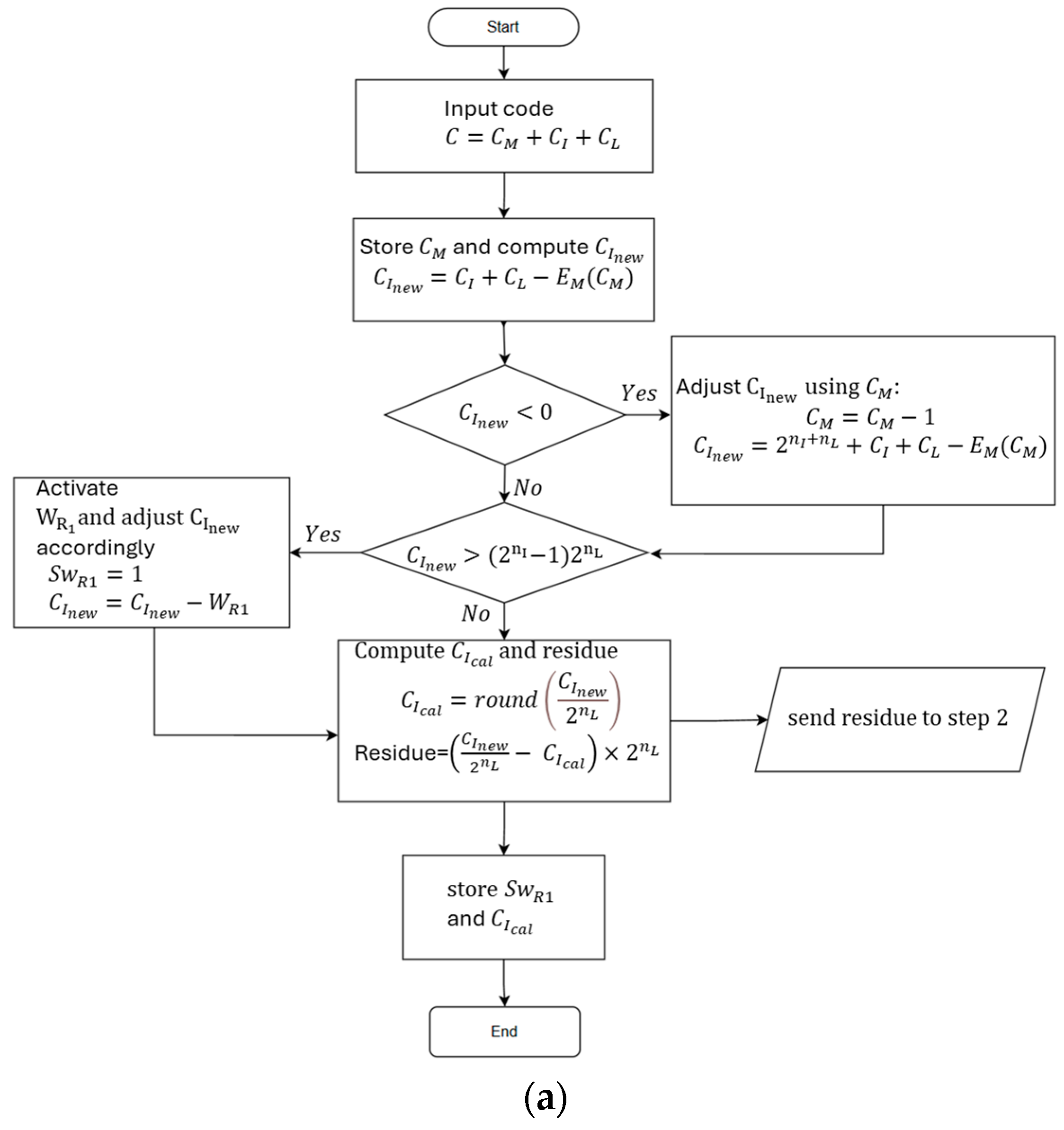

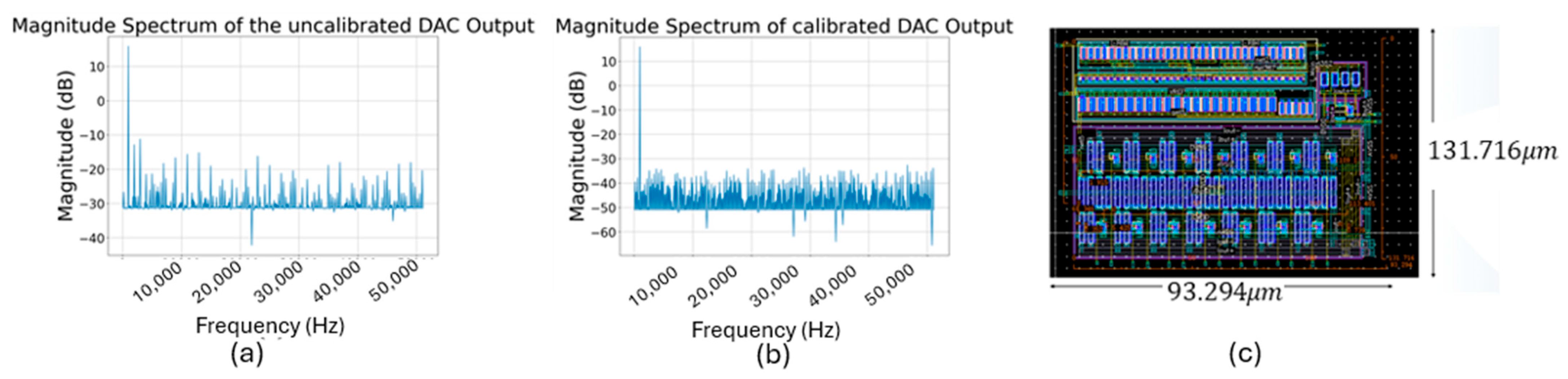

Figure 2 shows a simplified flowchart of our proposed design methodology.

We begin by designing a compact DAC architecture with relaxed matching constraints, while ensuring that specific conditions are met to make the DAC calibratable. The fabricated DAC is subsequently tested, and the integral nonlinearity (INL) at major code transitions is measured and stored in memory. These INL measurements are then used to generate a predistortion or calibration code that linearizes the DAC output. Depending on system requirements, the predistortion codes may be applied directly as they are generated or stored in a lookup table (LUT). During normal operation, the LUT maps user input codes to their corresponding calibration codes, thereby ensuring a linear output response.
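The LUT remapping step can be illustrated with a short Python sketch. It is not the calibration algorithm proposed here, which works from INL measured at major code transitions; instead it uses an exhaustive nearest-output search over every physical code as a simple stand-in. The function names, the toy weights, and the 2-bit user range are all hypothetical.

```python
from typing import Callable, List


def build_lut(n_bits: int, n_phys_codes: int,
              dac_out: Callable[[int], float],
              v_min: float, v_max: float) -> List[int]:
    """Map each user code to the physical code whose output is nearest to ideal."""
    outputs = [dac_out(c) for c in range(n_phys_codes)]
    lsb = (v_max - v_min) / (2 ** n_bits - 1)
    lut = []
    for k in range(2 ** n_bits):
        target = v_min + k * lsb  # ideal output for user code k
        best = min(range(n_phys_codes), key=lambda c: abs(outputs[c] - target))
        lut.append(best)
    return lut


# Hypothetical mismatched DAC with three physical bits (the third acts as
# a redundant bit); the weights are in volts and chosen only for illustration.
WEIGHTS = [1.0, 1.9, 1.15]


def toy_dac(code: int) -> float:
    """Sum the weights of all bits that are set in the physical code."""
    return sum(w for i, w in enumerate(WEIGHTS) if (code >> i) & 1)


# 2-bit user codes spanning 0 V to 3 V, remapped onto the 8 physical codes.
lut = build_lut(2, 8, toy_dac, 0.0, 3.0)
```

In this toy case the last user code is served by a physical code that uses the extra bit, because that combination lands closer to the ideal level than the plain binary code does.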

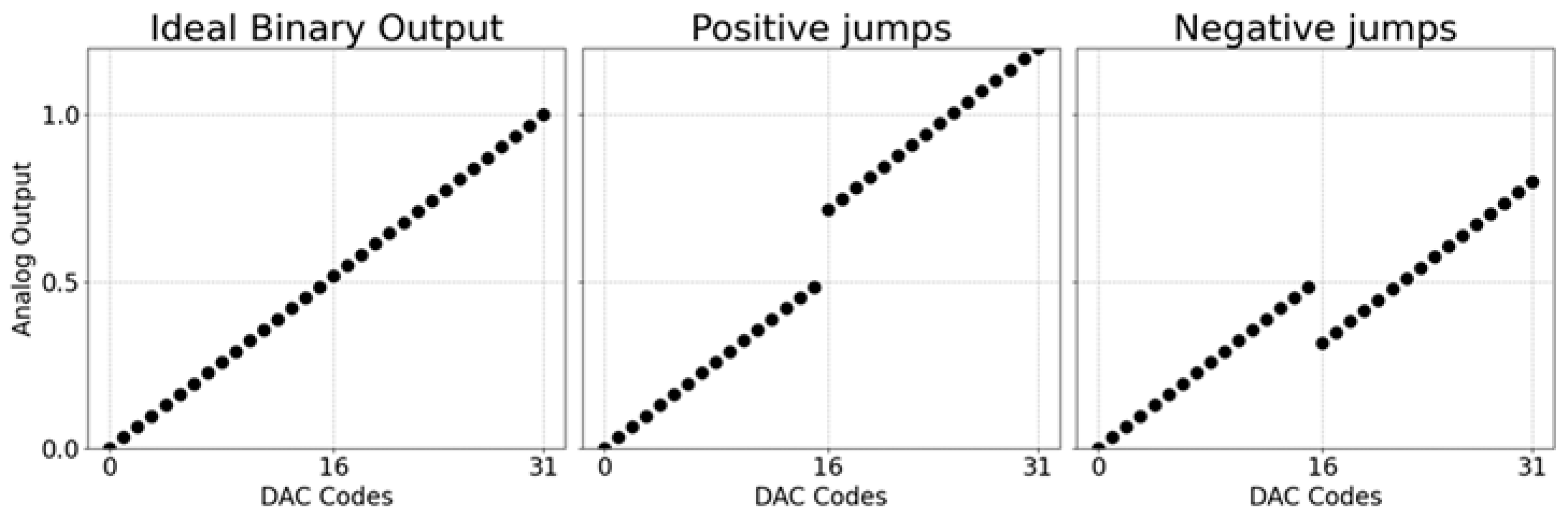

Importantly, our proposed digital calibration algorithm addresses both negative and positive INL jumps in the transfer function. Negative INL jumps, in which the output voltage for a given code is substantially lower than that of the previous code, are typically calibratable. However, positive INL jumps, in which the output voltage for a given input code increases abruptly relative to that of the preceding code, may render intermediate output levels unreachable, leading to non-recoverable errors, as illustrated in Figure 3.

While negative jumps can be corrected by existing codes, positive jumps necessitate redundancy. By introducing redundant bits and switching schemes, we create additional degrees of freedom in the DAC transfer function, enabling smooth interpolation of previously unreachable voltage levels (Figure 4).

As shown in Figure 4a, the non-ideal DAC transfer function exhibits a discontinuity marked by a large positive jump: the output voltage abruptly increases from approximately 0.95 V to 1.5 V between two adjacent digital input codes. This creates a 0.55 V gap in the output range, where no intermediate code can generate a valid output voltage. Such missing regions are particularly problematic because they represent non-recoverable linearity errors, rendering portions of the analog output space unreachable.

Figure 4b demonstrates the application of a redundancy-based technique to eliminate this discontinuity. By activating a redundant bit, a controlled offset is introduced into the transfer function, effectively bridging the output gap. This approach ensures complete output coverage across the full-scale range. The redundant bit does not modify the core DAC architecture or its nominal transfer function. Instead, it enables small, precise shifts in the output that, when combined with calibration, allow alternate input codes to reconstruct the missing output levels. This synergy between redundancy and calibration significantly improves overall linearity without architectural disruption.
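The effect of a redundant bit on output coverage can be checked numerically. The Python sketch below enumerates every level a small bank of binary switches can reach and reports the largest spacing between neighbouring levels, with and without a redundant bit. The 4-bit weights (expressed in LSB), the oversized MSB, and the redundant weight are hypothetical values chosen to make the gap easy to see.

```python
from itertools import product
from typing import List, Sequence


def reachable_levels(weights: Sequence[float]) -> List[float]:
    """All output levels the switch bank can produce, sorted ascending."""
    return sorted(sum(b * w for b, w in zip(bits, weights))
                  for bits in product((0, 1), repeat=len(weights)))


def largest_gap(levels: Sequence[float]) -> float:
    """Largest spacing between neighbouring reachable levels."""
    return max(b - a for a, b in zip(levels, levels[1:]))


# Hypothetical 4-bit binary DAC whose MSB is 3 LSB too heavy (11 instead of 8),
# which produces a positive jump at the major code transition.
core = [1.0, 2.0, 4.0, 11.0]
# Hypothetical redundant bit sized to bridge that jump.
redundant = 3.0

gap_without = largest_gap(reachable_levels(core))
gap_with = largest_gap(reachable_levels(core + [redundant]))
```

Without the redundant bit, the levels between 7 and 11 LSB cannot be produced by any code. With it, alternate code combinations fill those levels and the spacing returns to one LSB everywhere, which is what a remapping step needs in order to linearize the output.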

Existing calibration techniques such as Dynamic Element Matching (DEM) [15] work by randomizing mismatch errors across conversions. While effective in reducing static mismatch, DEM is often inadequate for high-speed or highly mismatched systems due to its non-deterministic nature and limited effectiveness in dynamic conditions.

Ref. [16] presented a low-complexity calibration algorithm that leverages segmentation alongside Successive Approximation Register (SAR) logic and a high-resolution comparator. While this approach offers the benefit of tracking temperature variations and component parameter drift, it suffers from several limitations. Notably, the reliance on multiple feedback loops increases the overall circuit complexity and introduces additional latency due to the time required for loop convergence. This makes the algorithm less suitable for rapidly changing conditions. Furthermore, the presence of multiple feedback paths raises the risk of instability, particularly under aggressive correction gains or noisy operating environments. Other approaches, including the digital foreground calibration techniques presented in [17,18], propose switching-based calibration algorithms tailored primarily for unary or segmented DAC architectures. While effective at reducing static nonlinearity due to random mismatch, these approaches exhibit limited capability in correcting systematic errors such as gradient-induced mismatches. They also introduce non-trivial digital control complexity due to the required folding and reordering of switching sequences, and their applicability is largely restricted to unary DACs, limiting generalizability across broader DAC architectures.

Compared to the methods presented above, the proposed calibration algorithm offers a more general, robust, and scalable solution. It is applicable to a broad range of DAC architectures, including those where the superposition principle holds (e.g., R-2R ladders) as well as those where it does not (e.g., segmented resistor-string DACs). Unlike prior approaches that are restricted to foreground-only calibration and unary configurations, the proposed algorithm supports both foreground and background calibration. In particular, an integrated ADC can periodically monitor the DAC output for deviations, enabling adaptive recalibration of correction codes over time. This approach effectively compensates for both random and systematic errors through a combination of hierarchical redundancy and deterministic digital correction, thereby maintaining high linearity and ensuring reliable performance throughout the DAC’s operational lifespan. Overall, the proposed method achieves high resolution, low latency, and robust operation under process, voltage, temperature, and aging variations, making it well-suited for modern, high-performance, and deeply scaled mixed-signal systems.

This article is a revised and expanded version of the following three conference papers [8,19,20].

The remainder of this paper is organized as follows. Section 2 introduces the conceptual DAC design. Section 3 describes the proposed redundancy-based calibration algorithm. Section 4 presents several representative design examples. Section 5 reports the simulation and measurement results, while Section 6 compares the proposed work with state-of-the-art DACs. Finally, Section 7 concludes the paper.

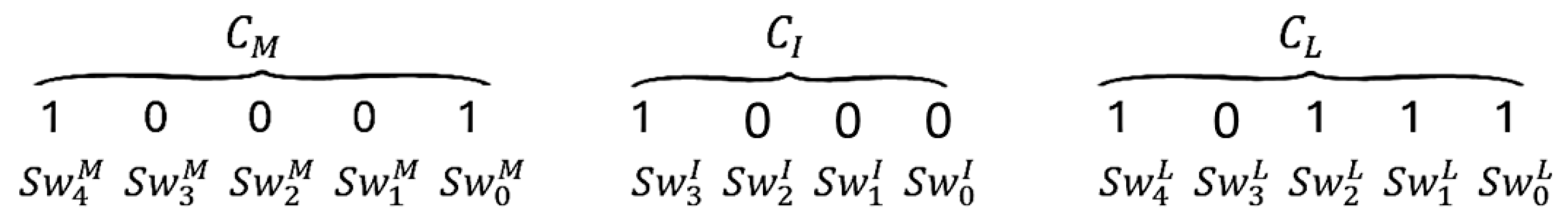

2. Conceptual Design of the DAC

Figure 5 presents the conceptual block diagram of the proposed DAC architecture. At its core lies a segmented DAC structure, which may be implemented using any conventional topology, such as resistor-string, capacitive (CDAC), or current-steering, depending on the application requirements. The architecture is partitioned into three segments: the Most Significant Bit (MSB), Intermediate Significant Bit (ISB), and Least Significant Bit (LSB). In addition to the core analog segments, the system includes a digital controller, an embedded memory unit, and a redundant bit segment. To achieve substantial area savings, the DAC is deliberately designed with relaxed matching constraints, particularly in the MSB and ISB segments, while preserving precise matching only in the LSB segment. This relaxed matching requirement yields significant area savings, following the rule of thumb that each additional bit of ENOB requires approximately four times the area. This area-efficient design inherently introduces nonlinearities at the major code transitions of the MSB and ISB segments, resulting in deviations from the ideal transfer function. These non-idealities are modeled as deterministic, code-dependent error functions

, and

, where

and

, respectively, represent the code for the MSB and ISB segments. These error values or INL, recorded during a post-fabrication testing phase, are stored in a local memory.

The extracted INL data are processed by a digital controller, which computes accurate calibration codes that linearize the DAC output during normal operation. When activated, the corresponding calibration code triggers additional control logic—including redundant bit segments—to selectively compensate for nonlinearity in the MSB and ISB segments.

The proposed algorithm can be operated in one of two modes, depending on the application: an inline calibration mode and a full lookup table calibration mode. In the inline calibration mode, the user input code is processed in real time by a digital controller, which computes the corresponding calibrated code that is then sent to the nonlinear DAC to generate a linearized output. In this calibration mode, memory requirements are minimized by storing only key segment-level error terms:

entries of

,

entries of

, and a single gain error term. For a 14-bit DAC with segmentation

,

and

, this results in a total of only 32 + 16 + 1 = 49 stored error values. Assuming the calibration error terms are stored in a 49-entry lookup table with 8-bit resolution, and the SRAM is implemented using the TSMC 180 nm technology node (with a reported 6T bit-cell size of 4.4–4.65 μm² [21,22]), the total core memory capacity is 49 × 8 = 392 bits. This corresponds to an estimated core cell area of approximately 392 bits × 4.4 μm² ≈ 1724.8 μm². Accounting for an additional 30–100% peripheral overhead, the overall memory area is projected to be in the range of 2242.2 μm² to 3449.6 μm². This area can be substantially reduced by migrating to more advanced technology nodes. For instance, in the TSMC N2 (2 nm) node, which has a projected 6T SRAM bit-cell area of 0.0199 μm² [23,24], the same 392-bit memory would occupy only about 392 × 0.0199 μm² ≈ 7.8 μm², and including the same peripheral overhead, the total area would be expected to fall within 10.14 μm² to 15.6 μm². While this approach is highly memory-efficient, it introduces additional latency due to real-time computation and increases power consumption due to the active digital processing logic required during operation.

In latency-sensitive applications, a lookup table (LUT)-based calibration mode is preferable. In this approach, calibration codes are precomputed and stored in memory during a one-time calibration phase. During normal operation, each user input code is directly remapped to its calibrated output through a simple memory fetch. This method significantly reduces both latency and runtime power consumption, as it eliminates the need for real-time computation and involves minimal combinational logic. The primary trade-off is an increased memory requirement to store all calibrated codes, with an estimated area within

and

when implemented in the TSMC 180 nm process, and within

and

when implemented in the TSMC N2 technology. Nevertheless, the overall power overhead remains low, and the method avoids the use of feedback loops or toggling of analog circuitry. This is demonstrated in Figure 6.
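The inline-mode memory estimate is straightforward arithmetic and can be reproduced with a few lines of Python. The helper name and its default overhead range are illustrative; the entry count, word width, bit-cell areas, and the 30–100% peripheral overhead are the figures quoted in the text.

```python
from typing import Tuple


def sram_area_um2(entries: int, bits_per_entry: int, cell_um2: float,
                  overhead_min: float = 0.30,
                  overhead_max: float = 1.00) -> Tuple[float, float, float]:
    """Return (core area, minimum total, maximum total) in square micrometres."""
    core = entries * bits_per_entry * cell_um2
    return core, core * (1.0 + overhead_min), core * (1.0 + overhead_max)


# 49 error terms of 8 bits each, using the lower quoted 180 nm bit-cell area.
core_180, lo_180, hi_180 = sram_area_um2(49, 8, 4.4)

# Same memory using the projected N2 bit-cell area.
core_n2, lo_n2, hi_n2 = sram_area_um2(49, 8, 0.0199)
```

Because the estimate scales linearly with the number of stored bits, the same helper can be used to size a full lookup table once its entry count and word width are fixed.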

For high-density systems such as analog AI accelerators or large-scale mixed-signal SoCs, both power consumption and memory requirements can be significantly reduced through calibration reuse strategies. DACs exhibiting similar mismatch profiles—resulting from symmetric layout placement or shared environmental conditions—can share a common calibration profile. In scenarios where chip-to-chip variation is minimal, a single calibration instance can be reused across multiple identical DACs, effectively minimizing calibration overhead. Moreover, the proposed architecture is inherently compatible with modern System-on-Chip (SoC) platforms. The digital controller and memory required for calibration can be implemented using existing on-chip computational resources, eliminating the need for external calibration circuitry. In large-scale AI accelerators, embedded processors can autonomously execute the calibration algorithms—including those augmented by machine learning techniques—to dynamically correct for analog non-idealities. This self-correcting capability enhances system linearity, yield, and robustness while incurring minimal area and power overhead per DAC cell.

4. Design Examples

To show the universality of our design methodology, we present three design examples: an all-MOSFET R-2R DAC, a three-segment interpolating resistor-string DAC, and a hybrid current-source capacitor DAC. All three are 14-bit architectures, and each incorporates two additional redundant bits.

4.1. All MOSFET DAC

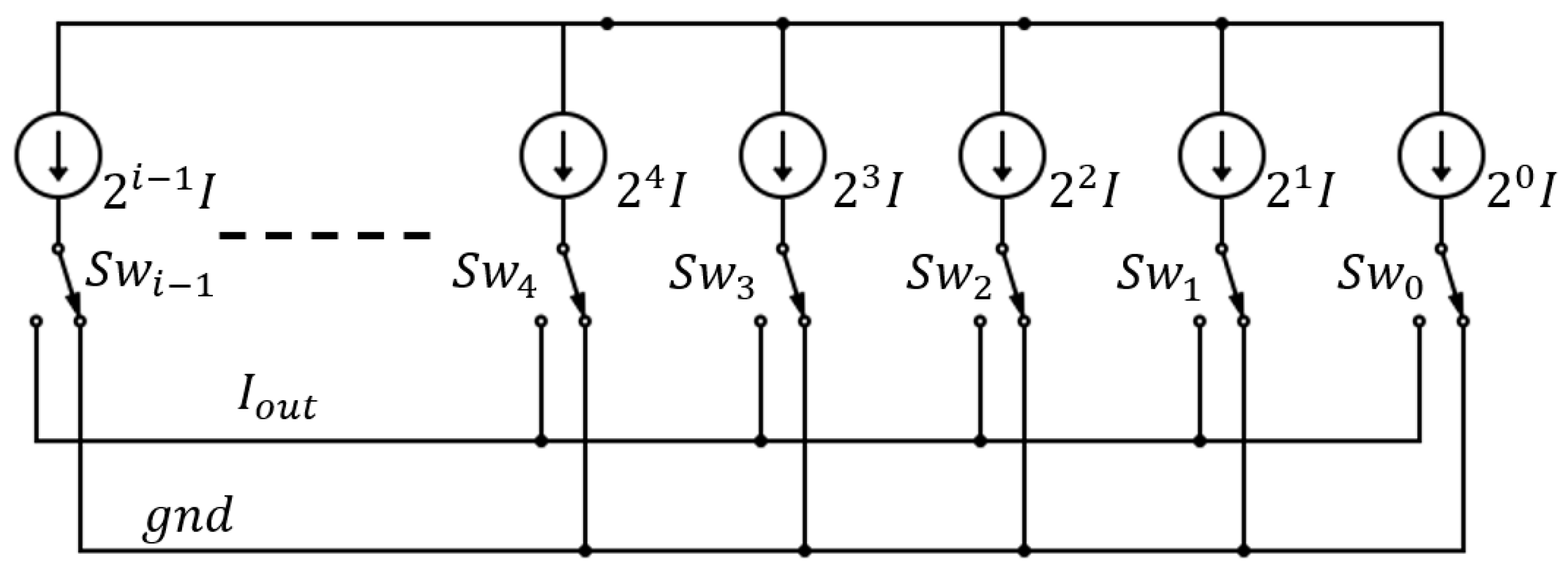

Figure 10 depicts the schematic circuit of the modified all MOSFET R-2R current steering DAC with redundant bits. The core structure, as illustrated in Figure 10a, is a 14-bit all MOSFET R-2R structure previously introduced in [26]. The MOSFET structure operates based on the linear current division principle. It divides an input current

into two equal currents:

.

passes through

and is switched between

, which represent the differential outputs of the DAC. Meanwhile,

passes through

and is equally divided into

and

, and so on. This configuration results in an R-2R structure, where

and

or

form the 2R part depending on which one is activated, while

constitutes the R part. In addition to the core structure, which comprises a 14-bit all MOSFET R-2R ladder, the proposed design incorporates two extra redundant bits, as depicted in Figure 10b.

The DAC design process consists of two key stages. The first involves determining the minimum MOSFET area required to achieve adequate matching in the LSB segment. The second focuses on sizing the redundant bit to ensure it can correct the worst-case INL.

To determine the minimum required MOSFET area for an

-bit matching, the standard deviation of the relative current mismatch,

is first estimated using Monte Carlo simulation, as described in [

27]. This mismatch is directly related to the transistor gate area

WL, as expressed in Equation (17), and is further linked to the target resolution

and the standard deviation of INL, as shown in Equation (18), where

, and

are Pelgrom mismatch coefficients, and

is excess bias voltage.

Using Equations (17) and (18), we can estimate the required MOSFET area for a desired INL or, conversely, predict the maximum achievable INL for a given area as expressed in Equation (19).
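The area estimate can be sketched in Python under the assumption that the current-mismatch expression takes the widely used Pelgrom form, σ²(ΔI/I) = (A_β² + 4·A_VT²/V_ov²)/(WL). Whether this matches Equation (17) term for term cannot be confirmed from the text, and the mapping from INL to the target σ (Equation (18)) is not reproduced, so the target σ is passed in directly. All coefficient values below are hypothetical.

```python
import math


def pelgrom_numerator(a_vt: float, a_beta: float, v_ov: float) -> float:
    """Area-independent part of the variance; a_vt in V*um, a_beta in um."""
    return a_beta ** 2 + 4.0 * a_vt ** 2 / v_ov ** 2


def sigma_rel_current(wl_um2: float, a_vt: float, a_beta: float, v_ov: float) -> float:
    """Relative drain-current mismatch sigma(dI/I) for a gate area WL in um^2."""
    return math.sqrt(pelgrom_numerator(a_vt, a_beta, v_ov) / wl_um2)


def required_gate_area(sigma_target: float, a_vt: float, a_beta: float, v_ov: float) -> float:
    """Gate area WL (um^2) needed to reach a target sigma(dI/I)."""
    return pelgrom_numerator(a_vt, a_beta, v_ov) / sigma_target ** 2


# Hypothetical process values: A_VT = 5 mV*um, A_beta = 1 %*um, V_ov = 0.2 V.
A_VT, A_BETA, V_OV = 5e-3, 0.01, 0.2

area = required_gate_area(0.01, A_VT, A_BETA, V_OV)        # area for 1 % mismatch
sigma_4x = sigma_rel_current(4.0 * area, A_VT, A_BETA, V_OV)  # sigma at 4x the area
```

Since σ scales with the inverse square root of the gate area, quadrupling the area halves the mismatch, which is the origin of the roughly fourfold area cost per additional bit of matching.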

For high yield, the redundant-bit weights are sized to three times this standard deviation, ensuring they can correct errors from both the MSB and ISB segments.

The first redundant bit

is sized to correct the worst-case cumulative error

from the MSB segment, estimated in Equation (20).

The second redundant bit

is sized to cover both the worst case cumulative ISB error

and the quantization residue from the MSB segment, as in Equation (21), where

represents the quantization residue with a maximum value of

.

Equations (20) and (21) define the lower bounds for

and

. The upper bounds are defined by the maximum output current that can be produced by the ISB and

LSB segments. Hence the redundant bits must satisfy the conditions in Equations (22) and (23) below.

If the weight of a redundant bit is too small, it will be insufficient to bridge large integral nonlinearity (INL) gaps, rendering calibration ineffective. Conversely, if the weight is too large, it may lead to overcorrection or create unusable code regions. To ensure the redundant bit weight remains within this range even when subjected to mismatch, each redundant bit is conservatively sized as the average of its respective lower and upper bounds. Additionally, the area of the core DAC is sized such that the range of acceptable redundant bit weights is larger than the worst-case variation in the redundant bits due to mismatch. This design strategy ensures that, even under mismatch, the actual (measured) weight of the redundant bit remains within the predefined calibratable range. The calibration algorithm then uses this measured weight during calibration code generation/remapping. As long as the redundant bit’s weight remains within the valid range, the DAC remains fully calibratable.
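The midpoint sizing rule and its mismatch margin can be expressed as a small Python helper. The function name, the use of a 3σ spread as the "worst-case variation", and the example numbers are assumptions made for illustration; the lower and upper bounds themselves would come from Equations (20)–(23).

```python
from typing import Tuple


def size_redundant_bit(lower: float, upper: float, sigma_weight: float,
                       k_sigma: float = 3.0) -> Tuple[float, bool]:
    """Pick the midpoint weight and check that a k-sigma spread stays in range.

    lower, upper : calibratable bounds on the redundant weight (same unit)
    sigma_weight : standard deviation of the redundant weight due to mismatch
    """
    if upper <= lower:
        raise ValueError("empty calibratable range")
    nominal = 0.5 * (lower + upper)   # average of the two bounds
    margin = 0.5 * (upper - lower)    # distance from nominal to either bound
    return nominal, k_sigma * sigma_weight <= margin


# Hypothetical bounds of 8 and 32 LSB with two different mismatch levels.
nominal_ok, within_ok = size_redundant_bit(8.0, 32.0, sigma_weight=2.0)
nominal_bad, within_bad = size_redundant_bit(8.0, 32.0, sigma_weight=5.0)
```

Placing the nominal weight at the midpoint gives equal headroom toward both bounds, so the check reduces to comparing the mismatch spread against half the width of the allowed range.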

The required MOSFET gate area

WL to ensure adequate matching in the

LSB segment is given by Equation (24). For high yield it is important to maintain

.

The overall DAC transfer function is thus expressed in Equation (25), where the first term represents the ideal current contribution from the MSB segment, combined with the error due to device mismatch, denoted as

. The second term corresponds to the ISB segment, including its mismatch-induced error

. The third term is the contribution from the

LSB segment, which may be subject to a gain error

. The final two terms represent the current contributions from redundant bits, used to facilitate digital calibration. The digital calibration algorithm described in Section 3 is then employed to compensate for the mismatch and gain errors in the MSB, ISB, and LSB segments, thereby restoring DAC linearity.

4.2. Three Segment String DAC

Figure 11 illustrates a modified three-segment resistor-string DAC with redundancy [19]. The architecture consists of three cascaded resistor ladders, labeled from left to right as the MSB, ISB, and LSB segments. These segments generate coarse, intermediate, and fine voltage levels, respectively. To minimize area and power consumption, the buffers that are traditionally used to isolate adjacent segments are removed, at the cost of increased susceptibility to loading effects. To further minimize area, the unit resistor is sized to meet the matching requirement for the LSB segment using Equations (26)–(28), where

represent the standard deviation of resistor mismatch,

is the resistor mismatch coefficient, and A represents the area of the unit resistor. For high yield, the design targets

for the

LSB segment.

Although this relaxed resistor sizing approach reduces area, it, along with the removal of inter-segment buffers, introduces nonlinearity in the DAC output due to mismatch and increased segment loading effects. To introduce redundancy and compensate for these errors, an additional resistor is added to the MSB segment, increasing the total number of resistors in the MSB segment () to . Furthermore, the tap voltages and in the ISB segment are connected across two MSB resistors instead of one, deviating from the conventional configuration. As a result, resistors are distributed across two MSB resistors. This modification effectively doubles the voltage span across the ISB segment, providing an additional LSB of redundancy. These extra codes enable correction of the mismatch-induced errors and in the MSB, ISB and LSB segments, respectively, as well as errors arising from segment loading.

The overall DAC transfer function, which is the sum of the contributions from the MSB, ISB, and LSB segments, is expressed in Equation (29), where

,

, and

are defined in Equations (30), (31) and (32), respectively.

In Equations (30)–(32), the first term denotes the nominal voltage contribution of the MSB, ISB, and LSB segments, respectively. The second term in Equation (30) captures the MSB-segment mismatch error, which propagates to the ISB and LSB outputs, as reflected by the second terms of Equations (31) and (32). The third term in Equation (31) represents mismatch-plus-loading error in the ISB segment; this error similarly manifests in the LSB output, as shown by the third term in Equation (32). The final term in Equation (32) corresponds to LSB-segment mismatch error, which remains below ±0.5 LSB.

and

represent the switch position of the MSB, ISB and

LSB segment.

,

and

is defined in Equation (33).

is the effective resistance of the ISB tap resistor due to the loading effect of the

LSB segment, similarly

is the effective resistance of the two MSB tap resistors due to the combined loading ISB and

LSB segments. These relationships are quantified in Equation (34).

Given the additional LSBs of redundancy in the ISB segment, errors originating from both mismatch and loading effects can be effectively compensated during calibration by adjustments within the ISB segment. A decoder is however necessary to map and to and

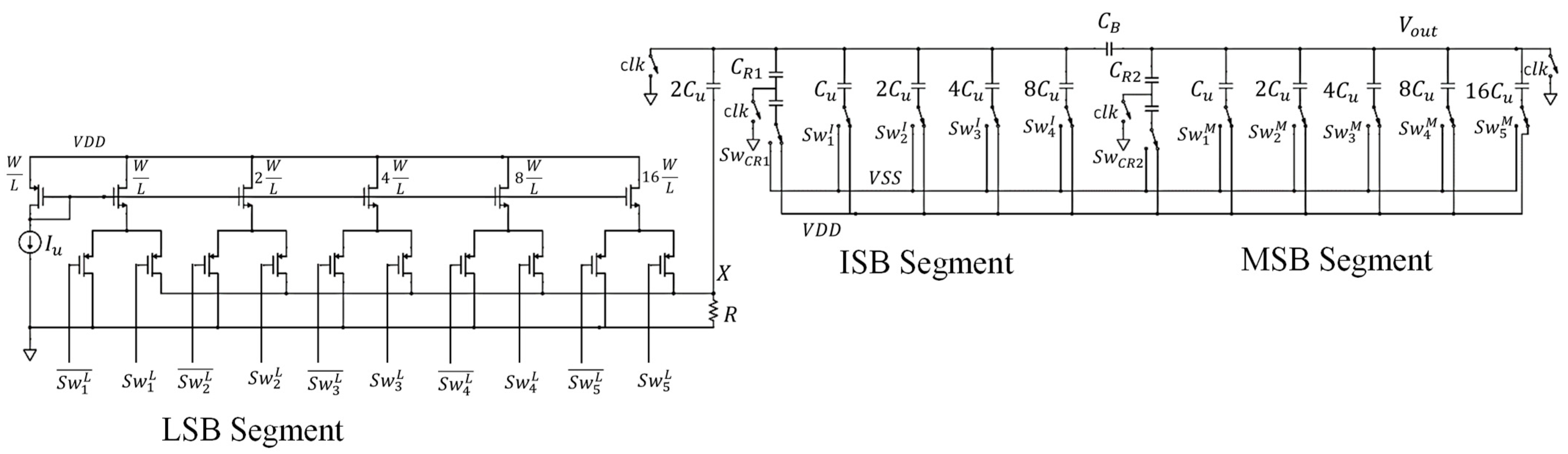

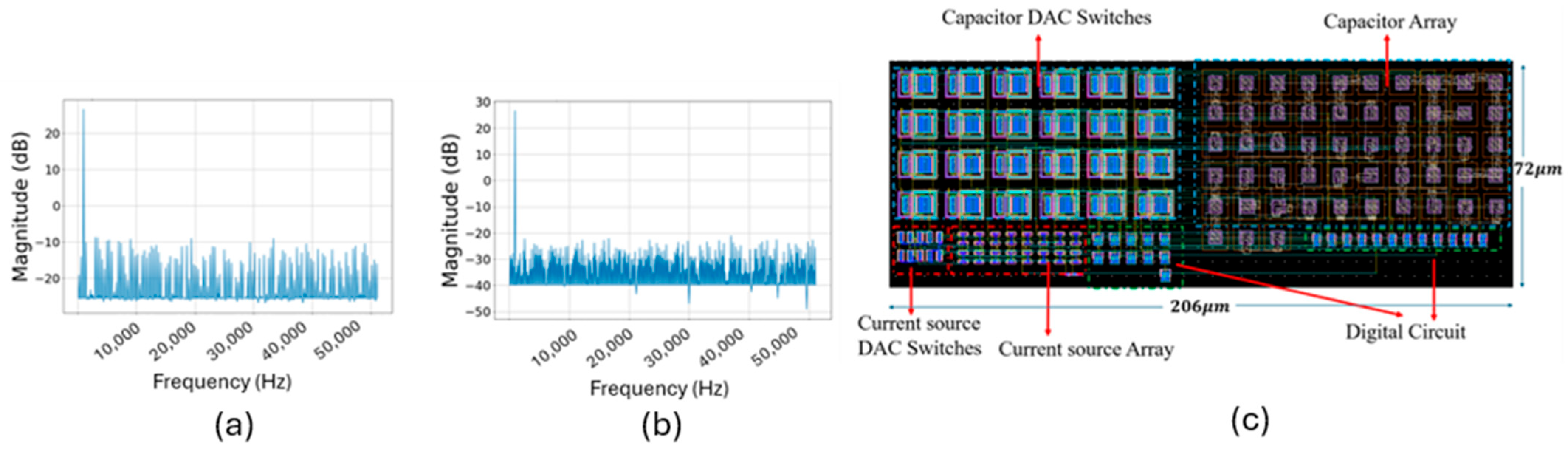

4.3. Current Source Capacitor DAC

Figure 12 illustrates the schematic of a proposed current source–CDAC (Capacitive DAC) architecture with redundancy. The DAC is implemented as a two-segment CDAC, comprising an

-bit Most Significant Bit (MSB) segment, an

-bit Intermediate Significant Bit (ISB) segment, and an

-bit Least Significant Bit (

LSB) segment, which utilizes a binary-weighted switched current source DAC.

A bridge capacitor which connects the MSB and ISB segments is used to minimize the capacitance spread. In the LSB segment, current from the switched current sources is steered between node X and ground based on the input LSB code. This current develops a voltage across a resistor R, which then charges the LSB capacitor (sized as ). This configuration constrains the maximum voltage to , ensuring adequate voltage headroom to keep all MOS devices in saturation.

To minimize channel length modulation, the lengths of all MOS transistors are set to at least twice the minimum feature length defined by the process. To reduce quiescent power consumption in the LSB current source DAC, the unit current

is minimized and the resistor R is chosen to have a high value. The unit current is given in Equation (35).

Given

, the transistor dimensions W/L and node voltages can be determined. Starting with the diode-connected transistor and the unit current source transistor

, the overdrive voltage

is selected to mitigate threshold voltage variation, enhancing current matching. From the square-law model Equation (36) we can find W/L.
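The sizing step can be sketched with the textbook long-channel square-law model, I_D = ½·μC_ox·(W/L)·V_ov², assuming Equation (36) has this form (channel-length modulation is neglected). The function names and the numeric values for the unit current, μC_ox, and the overdrive voltage are hypothetical.

```python
def aspect_ratio(i_d: float, mu_cox: float, v_ov: float) -> float:
    """W/L required to carry i_d (A) at overdrive v_ov (V); mu_cox in A/V^2."""
    return 2.0 * i_d / (mu_cox * v_ov ** 2)


def drain_current(w_over_l: float, mu_cox: float, v_ov: float) -> float:
    """Saturation drain current (A) from the square-law model."""
    return 0.5 * mu_cox * w_over_l * v_ov ** 2


# Hypothetical values: 1 uA unit current, mu*Cox = 200 uA/V^2, V_ov = 0.2 V.
I_UNIT, MU_COX, V_OV = 1e-6, 200e-6, 0.2

wl_unit = aspect_ratio(I_UNIT, MU_COX, V_OV)

# A device with four times the width (same length) and half the overdrive
# carries the same current, since (4 * W/L) * (V_ov / 2)^2 = (W/L) * V_ov^2.
i_pair = drain_current(4.0 * wl_unit, MU_COX, V_OV / 2.0)
```

Under this model, halving the overdrive while quadrupling the width leaves the current unchanged, which is consistent with a switching transistor passing the full unit current at a lower overdrive.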

The widths of subsequent current sources in the DAC are binary-scaled relative to that of

. The drain-source voltage

of

is set to 1.5

, yielding a drain voltage

given by

. For the differential pair transistors

and

the overdrive voltage is set to half that of

, and their widths four times that of

. The common-mode input gate voltage

is therefore given in Equation (37).

To ensure saturation of all transistors as

approaches

, the input differential voltage must satisfy:

To analytically determine the required area for accurate matching in the

-bit

LSB segment, we refer to Equation (24). The redundant bits, with weights

and

, are designed to correct for mismatch-induced errors originating from the ISB and MSB segments, which are collectively formed by the CDAC. Therefore, the sizing of

and

must be based on the worst-case mismatch in these segments. Specifically, their values are determined using the standard deviation of the unit capacitor mismatch,

as defined in Equation (38).

where

is the capacitor matching coefficient and

is the unit capacitor area. Assuming a metal-insulator-metal (MIM) implementation, the worst-case integral nonlinearity (INL) is given by:

where

is the capacitor density and

. To ensure high yield, the redundant weights must exceed three times the worst-case INL in their respective segments. This imposes the constraints in Equations (40) and (41).

The transfer function of the DAC is therefore defined in Equation (42), where

,

,

represent the binary switch state of the

switch in the MSB, ISB, and LSB segments.

and

denote the redundant bit capacitors, while

and

denote the redundant bit switches. The first four terms define the ideal contributions from the CDAC (MSB, ISB, and redundant bits), while the last term represents the LSB current-source DAC contribution.

and

represent the errors in the MSB and ISB segments, respectively, and

represents the gain error in the LSB segment.

6. Discussion

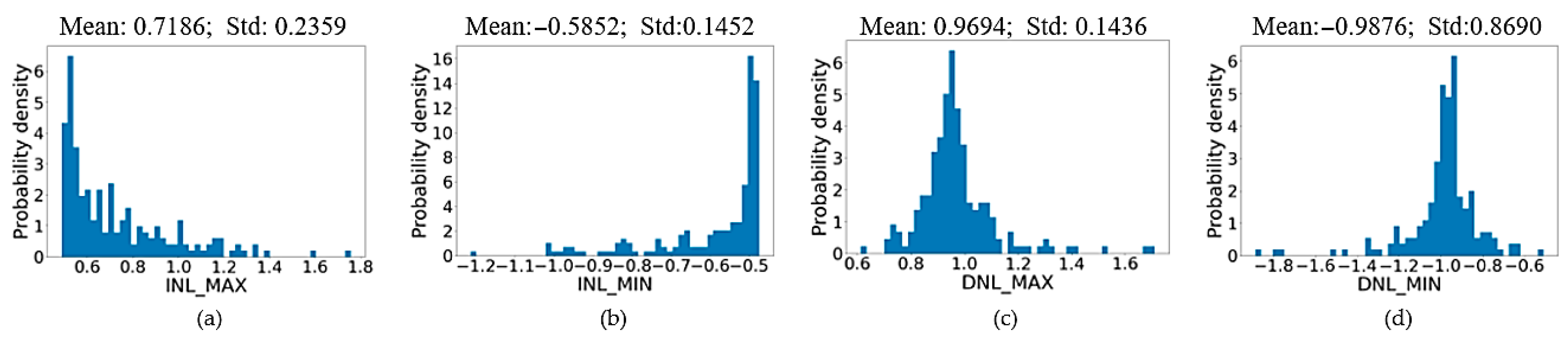

Table I benchmarks the three prototype DACs presented in this work against four representative state-of-the-art designs reported in JSSC’03 [27], T-VLSI’12 [28], ESSCIRC’10 [29], and JSSC’07 [30]. Taken together, the data highlight three main advantages of the proposed redundancy-based calibration strategy: superior linearity at higher resolution, dramatic area savings, and architecture-agnostic robustness.

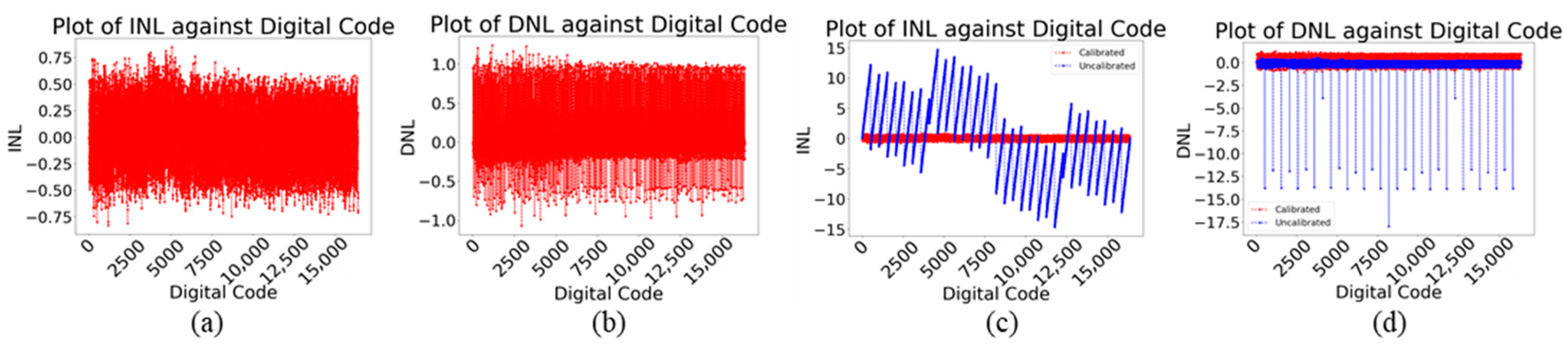

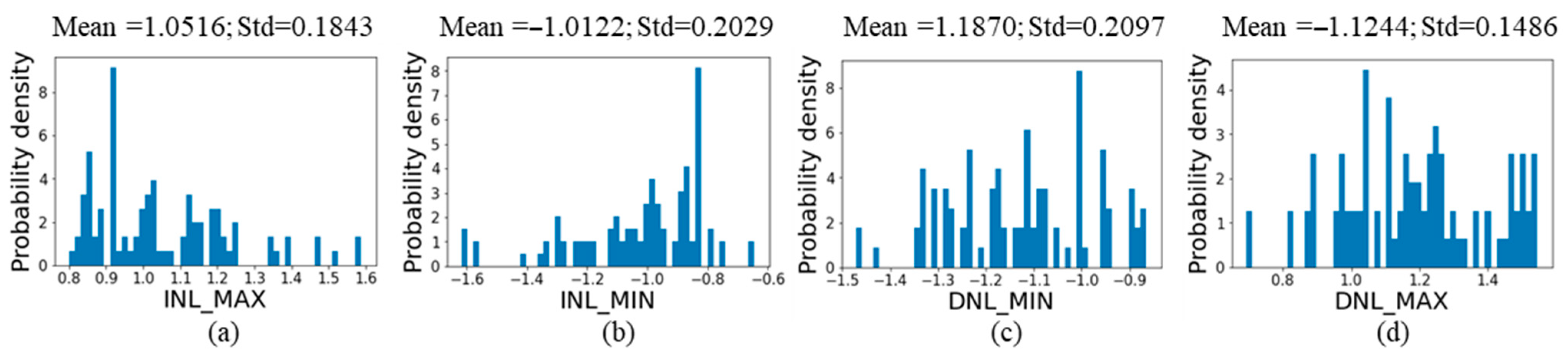

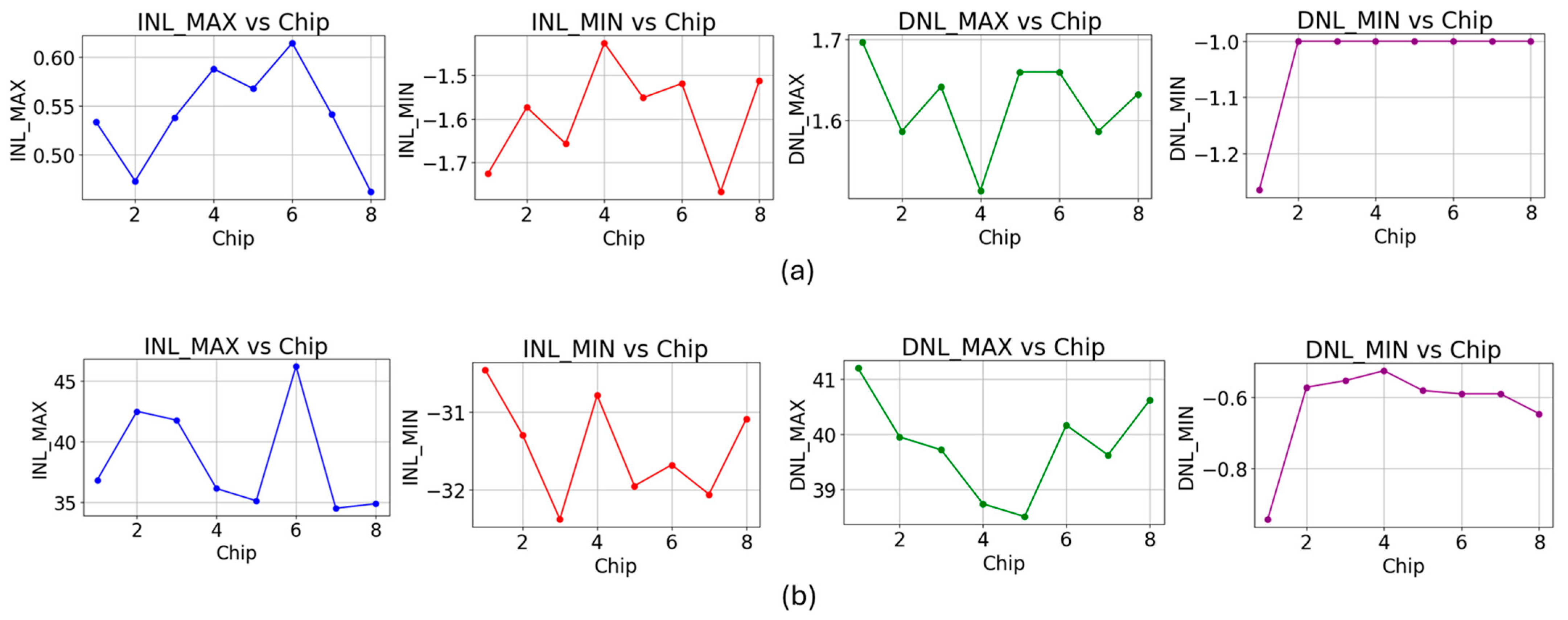

First, the proposed designs achieve high linearity despite being fabricated in an older 180 nm process. The 14-bit All-MOS R-2R DAC exhibits an INL of 0.6 LSB and a DNL of 1 LSB, surpassing the 12-bit linearity of the current-steering DACs in [27,28,29] and outperforming the 14-bit DAC in [30] by more than 2× in terms of INL. The three-segment resistor-string DAC, validated in silicon, achieves ±1.6 LSB INL and DNL, offering two extra bits of resolution compared to [27,28,29], with comparable or better static performance. Although the INL/DNL of the resistor-string DAC is slightly larger than the best-reported 12-bit performance, the gain in resolution and area efficiency remains substantial. Similarly, the hybrid current-source CDAC achieves 0.8 LSB INL and 1.2 LSB DNL, verifying that the proposed calibration method generalizes well across diverse architectures.

Second, the proposed designs demonstrate unprecedented area efficiency. The All-MOS DAC occupies just 0.017 mm², more than 60× smaller than the 1.04 mm² DAC in [27] and over 10× smaller than the 0.18 mm² design in [28], despite offering two more bits of resolution. The silicon-verified resistor-string DAC requires only 0.108 mm², a 28× reduction in area compared to the 3 mm² design in [30]. Remarkably, the current-source CDAC achieves the smallest footprint at 0.015 mm², making it the most compact entry in the table. These savings stem from the use of minimal-sized devices, with linearity restored digitally using the proposed redundancy-assisted predistortion strategy.
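The quoted area ratios follow directly from the areas listed above and can be checked with a few lines of Python. The dictionary keys are shorthand labels introduced here for readability; the area values are the ones stated in the text.

```python
# Areas in mm^2 as quoted in the comparison.
areas_mm2 = {
    "all_mos_r2r": 0.017,     # this work, All-MOS R-2R DAC
    "resistor_string": 0.108,  # this work, resistor-string DAC
    "ref27": 1.04,
    "ref28": 0.18,
    "ref30": 3.0,
}

ratio_vs_ref27 = areas_mm2["ref27"] / areas_mm2["all_mos_r2r"]
ratio_vs_ref28 = areas_mm2["ref28"] / areas_mm2["all_mos_r2r"]
ratio_vs_ref30 = areas_mm2["ref30"] / areas_mm2["resistor_string"]
```

These ratios compare raw silicon area only; they do not normalize for differences in process node, resolution, or sampling rate between the designs.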

Third, because the calibration operates purely in the digital domain and leverages redundant weights embedded in each segment, it is inherently deterministic and architecture-agnostic. Unlike dynamic-element-matching methods, which randomize rather than correct mismatch, the present approach remaps input codes to cancel both positive and negative INL jumps. Silicon measurements of the resistor-string prototype confirm close agreement with Monte-Carlo predictions, underscoring the method’s robustness to process variation and mismatch.

Overall, the comparison presented in Table 2 demonstrates that the proposed methodology allows designers to trade costly analog device matching for inexpensive and scalable digital logic, a trade-off that becomes increasingly advantageous as CMOS technology continues to scale. As technology nodes shrink, device mismatch worsens due to increased process variability. However, this trend has minimal impact on our design methodology, which requires precise matching only in the LSB segment. The redundant bits are appropriately sized to compensate for mismatch in the upper segments, ensuring calibratability without relying on precise analog layout techniques.

At the same time, digital resources—such as logic and memory—become smaller, faster, and more cost-effective at advanced nodes. Our redundancy-assisted digital calibration framework is designed to exploit this trend, making it particularly well-suited for sub-65 nm technologies. Moreover, as maintaining analog precision becomes increasingly costly and area-intensive in deeply scaled processes, digital correction techniques such as predistortion offer a highly scalable and energy-efficient alternative. This makes the proposed architecture an attractive option for modern mixed-signal and system-on-chip (SoC) designs targeting advanced CMOS nodes.

Ongoing work focuses on fully integrating the calibration controller and lookup table on-chip, scaling the technique to support ≥16-bit resolution and update rates exceeding 1 GS/s. The approach is also being ported to sub-65 nm CMOS nodes, where mismatch becomes more pronounced, yet the relative cost of digital logic is significantly reduced. While the current algorithm primarily addresses static nonlinearity, efforts are underway to extend its capabilities to dynamic nonlinearity correction. Additionally, future work will explore regression-based models and neural network techniques for predictive estimation of INL and DNL.