3.1. Dataset

The “KOA-PD-NM” gait dataset (Kour et al., 2022) [22] comprises demographic and gait video data from 96 subjects, categorized into three groups: individuals with Knee Osteoarthritis (KOA), individuals with Parkinson’s Disease (PD), and healthy/normal (NM) participants. The KOA group includes 50 subjects, subdivided into early (15), moderate (20), and severe (15) stages, with respective average ages and heights of 47.1 years/1.54 m, 59.8 years/1.58 m, and 62.4 years/1.54 m. The PD group consists of 16 subjects with mild (6), moderate (7), and severe (3) symptoms, averaging 66.5, 69.8, and 70 years of age, and heights of 1.67, 1.61, and 1.66 m, respectively. The NM group includes 30 healthy individuals with an average age of 43.7 years and an average height of 1.60 m. All participants are annotated with subject ID, gender, age, and height.

Gait data were collected using a single Nikon D5300 DSLR camera positioned 8 m from a walking mat in a clinical setting. Each subject was recorded in two sagittal-plane walking sequences (left-to-right and right-to-left), and each video is provided in MOV format. To enhance motion tracking, six red passive reflective markers were attached to key body joints. The dataset includes 100 KOA videos (30 early, 40 moderate, 30 severe), 31 PD videos (12 mild, 14 moderate, 5 severe), and 60 NM videos. The file naming convention embeds subject ID, sequence number, and severity level (for KOA and PD). The dataset therefore supports research in clinical gait analysis, disease progression modeling, and machine learning applications in healthcare, providing both quantitative demographic context and high-quality visual gait recordings.

In this study, we deliberately adopted a three-class classification approach instead of the original seven-class severity-based classification (KOA: early/moderate/severe; PD: mild/moderate/severe; NM) to focus on disease-type discrimination. The three target classes are:

KOA: All subjects with Knee Osteoarthritis (regardless of severity stage)

PD: All subjects with Parkinson’s Disease (regardless of severity stage)

NM: Healthy Normal subjects

This simplification allows the model to focus on distinguishing between different pathological gait patterns rather than staging disease severity, which is clinically relevant for initial screening and diagnosis.
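The collapse from seven severity-based labels to three disease-type labels can be sketched as a simple lookup. The label strings below are hypothetical placeholders (the dataset's actual file-name encoding is not reproduced here); the video counts are those reported above.

```python
# Hypothetical seven-class label strings; the real file-name format is not assumed.
SEVEN_TO_THREE = {
    "KOA_early": "KOA",
    "KOA_moderate": "KOA",
    "KOA_severe": "KOA",
    "PD_mild": "PD",
    "PD_moderate": "PD",
    "PD_severe": "PD",
    "NM": "NM",
}

# Video counts per severity level as reported for the dataset.
VIDEO_COUNTS = {
    "KOA_early": 30, "KOA_moderate": 40, "KOA_severe": 30,
    "PD_mild": 12, "PD_moderate": 14, "PD_severe": 5,
    "NM": 60,
}


def to_three_class(label):
    """Collapse a seven-class severity label into its disease-type class."""
    return SEVEN_TO_THREE[label]


def three_class_counts(counts):
    """Sum per-severity counts into per-disease counts."""
    merged = {}
    for label, n in counts.items():
        target = to_three_class(label)
        merged[target] = merged.get(target, 0) + n
    return merged


print(three_class_counts(VIDEO_COUNTS))
```

Merging the severity levels reproduces the per-class video totals used in the remainder of the study (100 KOA, 31 PD, 60 NM).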

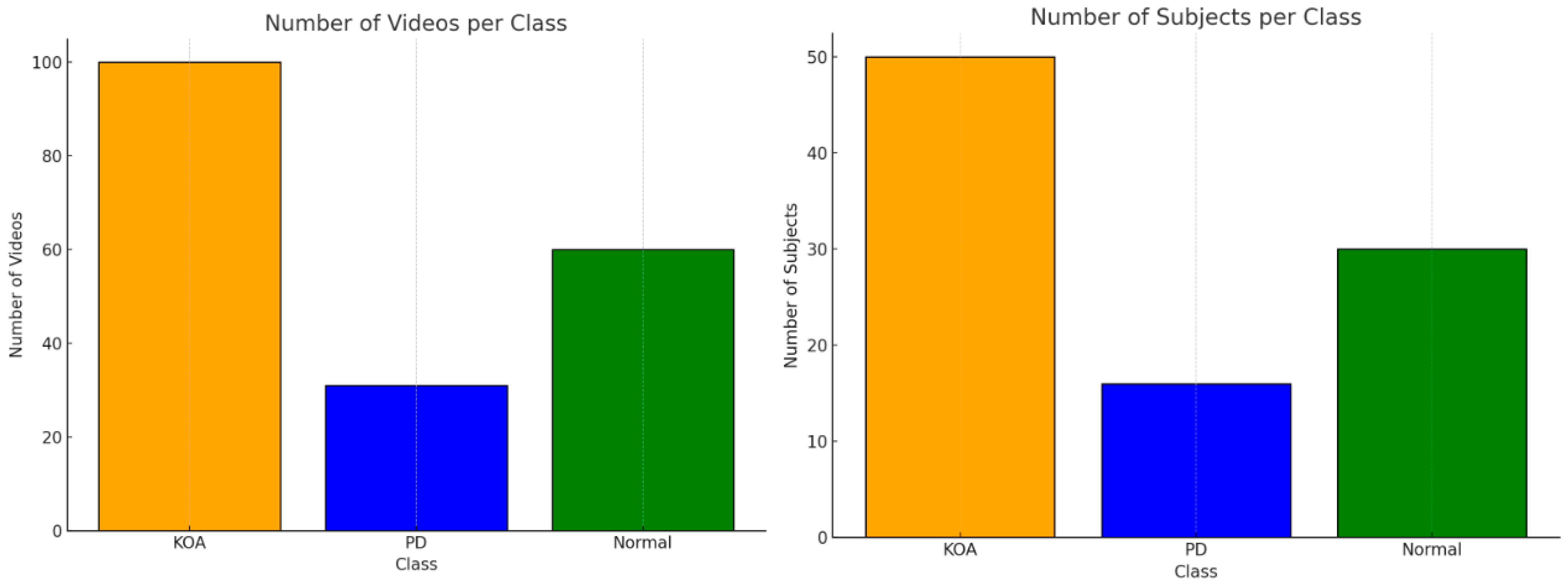

Figure 2 illustrates the class distribution in terms of both the number of subjects and the number of video sequences for each category: KOA, Parkinson’s Disease (PD), and Normal. A clear imbalance is observed, with the KOA group having the highest representation (50 subjects, 100 videos), followed by the Normal class (30 subjects, 60 videos), and PD being significantly underrepresented (16 subjects, 31 videos). This class imbalance may introduce bias during training and could negatively affect model generalization. Appropriate balancing techniques (such as data augmentation, class weighting, or synthetic oversampling) are therefore recommended when developing deep learning models for classification based on this dataset. In this study, SMOTE was used for this purpose.
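To make the degree of imbalance concrete, the sketch below computes inverse-frequency class weights from the video counts given above. Class weighting is only one of the balancing options mentioned and is not the technique adopted in this study; the weighting formula (total / (number of classes × class count)) is a common heuristic chosen here for illustration.

```python
# Video counts per class as reported for the dataset.
VIDEOS_PER_CLASS = {"KOA": 100, "Normal": 60, "PD": 31}


def inverse_frequency_weights(counts):
    """Weight each class by total / (n_classes * class_count).

    Rare classes receive weights above 1, frequent classes below 1.
    """
    total = sum(counts.values())
    n_classes = len(counts)
    return {c: total / (n_classes * n) for c, n in counts.items()}


weights = inverse_frequency_weights(VIDEOS_PER_CLASS)
for name, w in weights.items():
    print(f"{name}: {w:.3f}")
```

Under this heuristic the PD class is weighted roughly three times more heavily than KOA, which mirrors the roughly 3:1 ratio in available videos.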

3.2. Equipment, Software, and Experimental Setup

All the equipment, materials, and software used in this study are listed below with their corresponding manufacturers, locations, and version numbers. The gait video recordings were acquired using a Logitech HD Pro C920 camera (Logitech Inc., Lausanne, Switzerland) mounted on a fixed tripod to capture both frontal and sagittal views. Data preprocessing, model training, and evaluation were carried out using Python 3.10 (Python Software Foundation, Wilmington, DE, USA). The implementation involved OpenCV 4.8.1 (Intel Corporation, Santa Clara, CA, USA) for image and video processing, NumPy 1.26.0 and Pandas 2.1.1 for data handling, and Matplotlib 3.8.0 for figure generation and result visualization. The YOLOv8-based pose extraction was implemented using the Ultralytics library (Ultralytics, London, UK; version 8.1.0), and the spiking neural network module was developed with the SpikingJelly 0.0.15 framework (Tsinghua University, Beijing, China). The GAN-based data augmentation was implemented with PyTorch 2.1.0 (Meta Platforms, Inc., Menlo Park, CA, USA). All experiments were conducted on a workstation equipped with an Intel Core i7-12700 CPU, 32 GB RAM, and an NVIDIA Tesla P100 GPU (NVIDIA Corporation, Santa Clara, CA, USA). Statistical analyses were performed using IBM SPSS Statistics 29.0 (IBM Corp., Armonk, NY, USA).

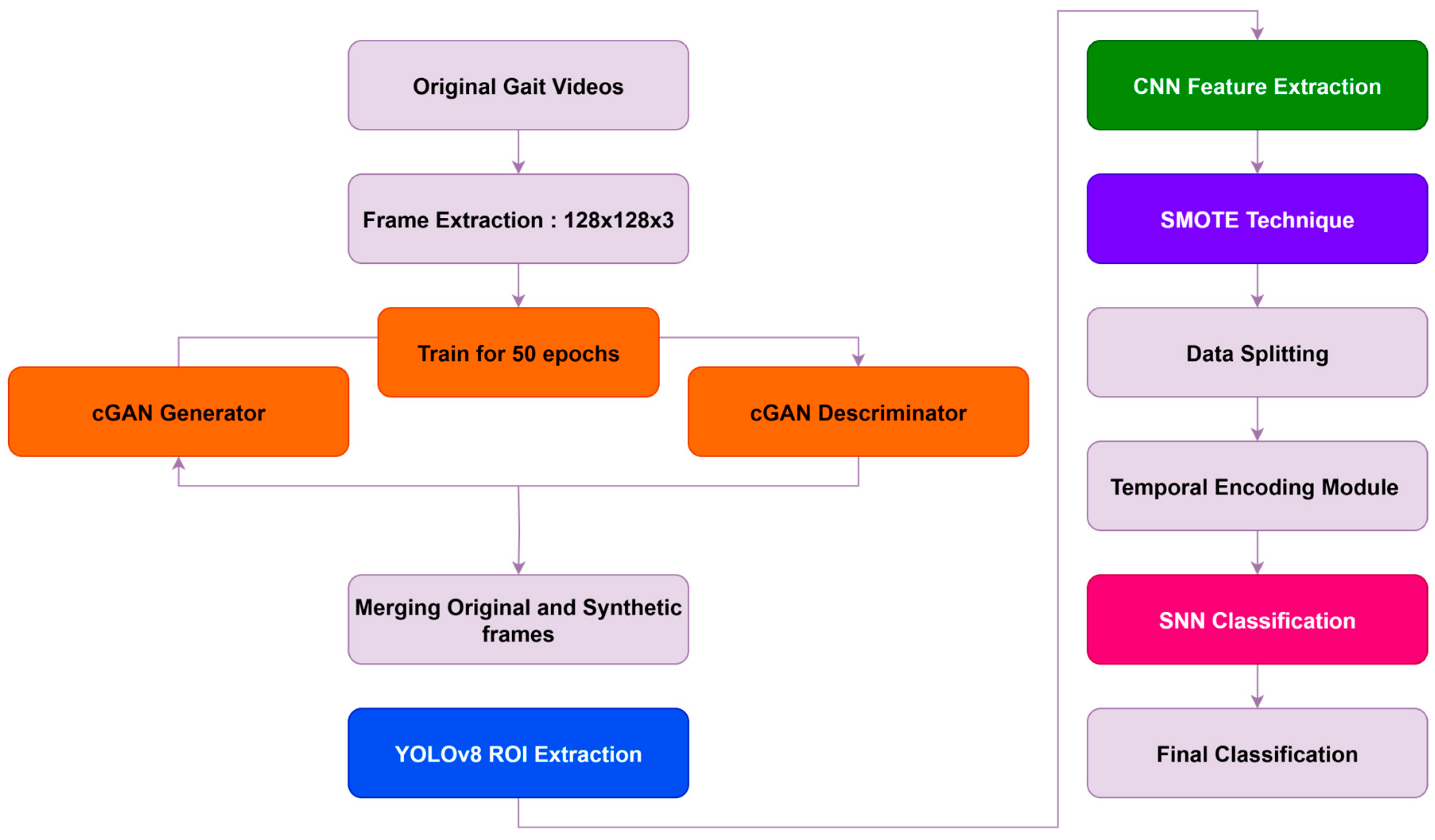

3.4. The Proposed Approach

This section describes the architecture and methodology adopted for classifying subjects into three categories: Knee Osteoarthritis (KOA), Parkinson’s Disease (PD), and Normal. The proposed framework integrates data augmentation, class balancing, deep feature extraction, temporal encoding, and biologically inspired classification through spiking neural networks (SNNs). The overall pipeline is illustrated in Figure 3 and is composed of the following stages:

The input to the system consists of gait videos recorded from KOA, PD, and Normal subjects. Each video is decomposed into individual frames, forming the raw dataset of 2D gait images. To ensure computational efficiency while preserving essential gait information, each frame is resized to 128 × 128 pixels. This resolution was empirically selected based on preliminary experiments that demonstrated a balance between visual fidelity and resource consumption. Although higher resolutions (e.g., 224 × 224 or 256 × 256) can capture finer anatomical detail, the gait dynamics relevant to this study—such as joint motion patterns—were sufficiently preserved at 128 × 128. Future improvements may include multi-resolution modeling or region-specific cropping using pose estimation or attention mechanisms.

To address class imbalance and enhance generalization, we implemented a sequential, dual-space augmentation strategy where cGAN and SMOTE were applied separately in distinct representational spaces.

Stage 1: cGAN-Based Augmentation in Raw Image Space

The conditional GAN was applied first, operating directly in the pixel domain on 128 × 128 × 3 RGB images. The cGAN generator takes a 100-dimensional latent noise vector and a class label (KOA, PD, or Normal) to synthesize realistic gait frames, while the discriminator distinguishes real from synthetic images.

After training for 50 epochs on approximately 5730 extracted frames, the cGAN generated approximately 15,000 synthetic frames distributed across all classes, with emphasis on the underrepresented PD class (originally only 31 videos vs. 100 KOA and 60 Normal). This increased visual diversity and produced realistic variation in body posture, joint angles, and motion blur.

Stage 2: SMOTE Application in CNN Feature Space

Following cGAN augmentation and YOLOv8-based ROI extraction, all images (both original and synthetic) were processed through the CNN backbone, which extracted 128-dimensional feature vectors representing high-level gait characteristics.

SMOTE was then applied exclusively in this 128-dimensional CNN feature space, not in the raw image domain. For each minority class sample, SMOTE identified its 5 nearest neighbors and generated synthetic feature vectors through linear interpolation, producing approximately 6000 balanced feature samples for SNN training. By operating in feature space rather than pixel space, SMOTE avoided visual artifacts while ensuring balanced class representation.

Rationale for Sequential, Multi-Space Application

This approach leverages complementary augmentation mechanisms: cGAN enriches raw data diversity before feature extraction by generating visually coherent, anatomically plausible gait frames, while SMOTE balances the extracted feature distribution through nearest-neighbor interpolation before classification. The sequential pipeline lets each method contribute its particular strength: cGAN adds visual diversity in image space, and SMOTE balances the feature-space representation without affecting visual quality.

Following the cGAN-based augmentation in image space, YOLOv8 (You Only Look Once, version 8) was applied to all gait images (both original and cGAN-generated synthetic frames) for automated detection and localization of human silhouettes. YOLOv8 is a state-of-the-art object detection model known for its high precision, speed, and lightweight architecture. In this study, it was used to identify and extract the region of interest (ROI), specifically the full-body bounding box of each subject within a frame. This step was introduced to eliminate background noise and irrelevant scene information, allowing the model to concentrate solely on the gait-relevant features of the human subject. By focusing on the detected ROI, the system benefits from improved spatial consistency and reduced input variability, which in turn enhances the performance of downstream components such as the CNN and SNN. Additionally, this approach reduces the dimensionality of the input, contributing to faster training and inference without compromising the fidelity of gait-related information. YOLOv8 was used in its pre-trained form (trained on the COCO dataset) and optionally fine-tuned on a small set of annotated gait images to improve detection accuracy in the specific application context. The resulting cropped images, containing only the subject’s body, were then passed to the CNN-based feature extractor for subsequent processing.
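The ROI step can be illustrated with a minimal, library-free sketch that takes detector output and crops the full-body bounding box. The detection tuple layout `(x1, y1, x2, y2, confidence, class_id)`, the confidence threshold of 0.5, and the choice of the single highest-confidence person are assumptions made for illustration; class index 0 corresponds to "person" in the COCO label set. Frames are represented as nested lists of pixels to keep the example self-contained.

```python
PERSON_CLASS_ID = 0  # "person" in the COCO label set


def select_person_box(detections, min_conf=0.5):
    """Return (x1, y1, x2, y2) of the most confident person detection, or None.

    Each detection is assumed to be (x1, y1, x2, y2, confidence, class_id).
    """
    persons = [d for d in detections
               if d[5] == PERSON_CLASS_ID and d[4] >= min_conf]
    if not persons:
        return None
    best = max(persons, key=lambda d: d[4])
    return best[:4]


def crop_roi(frame, box):
    """Crop a frame (list of pixel rows) to a box clamped to the frame bounds."""
    height, width = len(frame), len(frame[0])
    x1, y1, x2, y2 = (int(round(v)) for v in box)
    x1, x2 = max(0, x1), min(width, x2)
    y1, y2 = max(0, y1), min(height, y2)
    return [row[x1:x2] for row in frame[y1:y2]]


# Toy 4 x 6 frame whose "pixels" are (row, column) pairs.
frame = [[(r, c) for c in range(6)] for r in range(4)]
detections = [
    (1.0, 0.0, 4.0, 3.0, 0.90, 0),  # person, kept
    (0.0, 0.0, 6.0, 4.0, 0.95, 2),  # not a person, ignored
    (0.0, 0.0, 2.0, 2.0, 0.30, 0),  # person below the threshold, ignored
]
box = select_person_box(detections)
roi = crop_roi(frame, box)
print(len(roi), len(roi[0]))
```

Clamping the box to the frame bounds guards against detections that extend slightly past the image border, which would otherwise yield an empty or misaligned crop.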

A Convolutional Neural Network (CNN) is utilized to extract robust spatial features from the preprocessed gait frames (both original and cGAN-generated synthetic frames after YOLOv8 ROI extraction). The CNN architecture includes convolutional layers with ReLU activation, followed by pooling operations. To enhance generalization, Batch Normalization and Dropout are applied. The output of this module produces 128-dimensional feature vectors that encode discriminative spatial information representing gait characteristics such as stride patterns, posture alignment, and movement kinematics. These feature vectors serve as input to the subsequent SMOTE balancing stage.

Following CNN feature extraction, SMOTE (Synthetic Minority Over-sampling Technique) is applied exclusively in the 128-dimensional CNN feature space to address any residual class imbalance. For each minority class sample in the feature space, SMOTE identifies its k = 5 nearest neighbors based on Euclidean distance and generates synthetic feature vectors through linear interpolation between each minority sample and randomly selected neighbors. This process balances the feature distribution across the three classes (KOA, PD, Normal), yielding approximately 6000 balanced feature samples. By operating in the high-level feature space rather than raw pixel space, SMOTE avoids introducing visual artifacts while ensuring balanced class representation for the downstream SNN classifier.
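The interpolation step described above can be sketched in a few lines of plain Python. This is a simplified variant for a single minority class, not the implementation used in the study: base samples are drawn at random rather than visited in turn, and the function name, seed, and toy data are illustrative assumptions. Only k = 5 and the Euclidean metric come from the text.

```python
import math
import random


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def smote(minority, n_synthetic, k=5, seed=0):
    """Generate n_synthetic vectors by interpolating minority-class neighbours."""
    if len(minority) < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)
    synthetic = []
    for _ in range(n_synthetic):
        i = rng.randrange(len(minority))
        base = minority[i]
        # Indices of the k nearest neighbours of the base sample (itself excluded).
        others = [j for j in range(len(minority)) if j != i]
        others.sort(key=lambda j: euclidean(base, minority[j]))
        neighbour = minority[rng.choice(others[:k])]
        # New point lies on the segment between the base sample and its neighbour.
        gap = rng.random()
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbour)])
    return synthetic


# Toy 2-D "features" lying on the line y = 2x; real vectors would be 128-D.
minority = [[float(i), 2.0 * i] for i in range(8)]
new_points = smote(minority, n_synthetic=20)
print(len(new_points), new_points[0])
```

Because every synthetic vector is a convex combination of two existing minority samples, the generated points stay within the region already occupied by that class in feature space.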

Following SMOTE augmentation in the feature space, the balanced feature dataset is partitioned into training and testing sets using an 80/20 split. This ensures that the model is trained on the majority of the data while reserving a representative portion for unbiased evaluation. The splitting is performed in a stratified manner to preserve class distribution across both sets, thereby preventing skewed performance metrics. This split occurs after all augmentation and balancing procedures to ensure proper evaluation of truly unseen data.
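A stratified 80/20 partition of the balanced feature set can be sketched as follows. The per-class count of 2000 is an assumption derived from "approximately 6000 balanced samples" across three classes, and the seed and function name are illustrative.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_fraction=0.2, seed=0):
    """Return (train_indices, test_indices) preserving per-class proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return sorted(train_idx), sorted(test_idx)


# Assumed balanced label list: 2000 feature vectors per class.
labels = ["KOA"] * 2000 + ["PD"] * 2000 + ["Normal"] * 2000
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))
```

Shuffling within each class before slicing keeps the class proportions identical in both partitions while still randomizing which individual feature vectors are held out.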

The CNN feature vectors from the training set are converted into spike trains using temporal coding schemes, such as rate-based or latency-based encoding. These techniques enable the incorporation of temporal dynamics and facilitate the compatibility of the data with Spiking Neural Networks. This biologically inspired transformation embeds timing information, aligning the model more closely with neural computation paradigms observed in the brain.
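The two encoding families mentioned above can be illustrated with a minimal sketch. The min-max normalization, the number of time steps, and the linear latency mapping are assumptions chosen for illustration; the text does not specify these details.

```python
import random


def min_max_normalise(features):
    """Rescale a feature vector to the range [0, 1]."""
    lo, hi = min(features), max(features)
    if hi == lo:
        return [0.0 for _ in features]
    return [(x - lo) / (hi - lo) for x in features]


def rate_encode(features, n_steps=20, seed=0):
    """Rate coding: each feature fires at every step with probability equal
    to its normalised value. Returns n_steps rows of 0/1 spikes."""
    rng = random.Random(seed)
    probs = min_max_normalise(features)
    return [[1 if rng.random() < p else 0 for p in probs]
            for _ in range(n_steps)]


def latency_encode(features, n_steps=20):
    """Latency coding: each feature fires once; larger values fire earlier.
    Returns one spike time (step index) per feature."""
    probs = min_max_normalise(features)
    return [round((1.0 - p) * (n_steps - 1)) for p in probs]


features = [0.0, 0.5, 1.0, 0.25]  # toy vector; real vectors would be 128-D
spike_train = rate_encode(features, n_steps=50)
print(sum(step[1] for step in spike_train), "spikes from the 0.5 feature")
print(latency_encode(features, n_steps=50))
```

Rate coding spreads information over many stochastic spikes, whereas latency coding uses a single precisely timed spike per feature, trading robustness for sparsity.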

The temporally encoded spike trains are fed into a Spiking Neural Network (SNN) composed of Leaky Integrate-and-Fire (LIF) neurons. To enable effective supervised learning, the SNN is trained using surrogate gradient-based backpropagation. Since spike functions are inherently non-differentiable, surrogate gradient methods approximate the spike activity using smooth functions (e.g., fast sigmoid) during the backward pass, allowing the use of gradient descent optimization. This approach maintains the spiking behavior during inference while leveraging the power of deep learning during training. Although biologically plausible mechanisms like Spike-Timing Dependent Plasticity (STDP) were not employed in this version due to scalability concerns, integrating STDP with reward-modulated frameworks remains a promising direction for future work.
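The LIF dynamics and the fast-sigmoid surrogate can be sketched for a single neuron as follows. The discrete update rule, the hard reset, and the parameter values (tau = 2, threshold = 1, slope = 5) are assumptions chosen for illustration, not settings reported in this study.

```python
def lif_forward(inputs, tau=2.0, v_threshold=1.0, v_reset=0.0):
    """Simulate one LIF neuron; returns (spikes, membrane potentials)."""
    v = v_reset
    spikes, potentials = [], []
    for x in inputs:
        # Leaky integration: the potential relaxes toward the input current.
        v = v + (x - (v - v_reset)) / tau
        potentials.append(v)
        spike = 1 if v >= v_threshold else 0  # non-differentiable step
        spikes.append(spike)
        if spike:
            v = v_reset  # hard reset after firing
    return spikes, potentials


def fast_sigmoid(x, slope=5.0):
    """Smooth stand-in for the step function, centred on the threshold."""
    z = slope * x
    return z / (1.0 + abs(z))


def fast_sigmoid_grad(x, slope=5.0):
    """Derivative of fast_sigmoid, used in place of the step function's
    derivative during the backward pass."""
    return slope / (1.0 + abs(slope * x)) ** 2


spikes, potentials = lif_forward([1.5] * 6)
print(spikes)
# Surrogate gradient evaluated at each potential's distance from threshold.
print([round(fast_sigmoid_grad(v - 1.0), 3) for v in potentials])
```

In the forward pass the neuron still emits binary spikes; only the backward pass substitutes the smooth derivative, which is largest when the membrane potential is close to the threshold.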

The final class prediction is derived from the spiking activity in the output layer of the SNN. Each sample is ultimately classified into one of the three target categories: KOA, PD, or Normal.
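One common way to read out a class from output-layer activity is to count spikes per output neuron over the simulation window and select the most active neuron. The sketch below assumes this rate-based readout and a fixed neuron-to-class ordering; neither detail is specified in the text.

```python
CLASSES = ("KOA", "PD", "Normal")  # assumed order of the output neurons


def decode_prediction(output_spikes):
    """Return (predicted class, per-class spike counts).

    output_spikes holds one row per time step with one 0/1 entry per
    output neuron. Ties resolve to the lowest neuron index.
    """
    counts = [sum(step[i] for step in output_spikes)
              for i in range(len(CLASSES))]
    best = max(range(len(CLASSES)), key=lambda i: counts[i])
    return CLASSES[best], counts


output_spikes = [
    [0, 1, 0],
    [0, 1, 1],
    [1, 1, 0],
]
print(decode_prediction(output_spikes))
```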

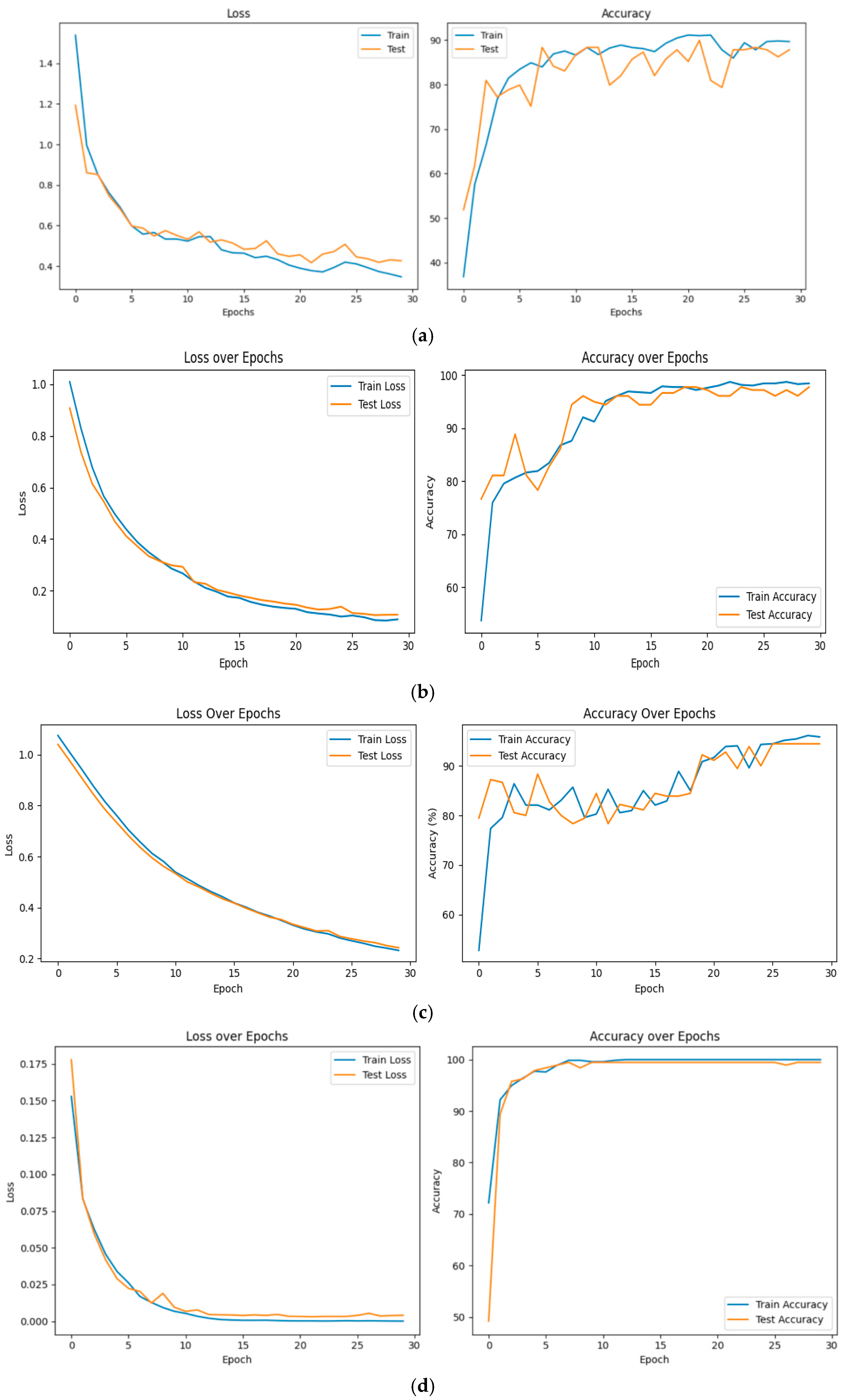

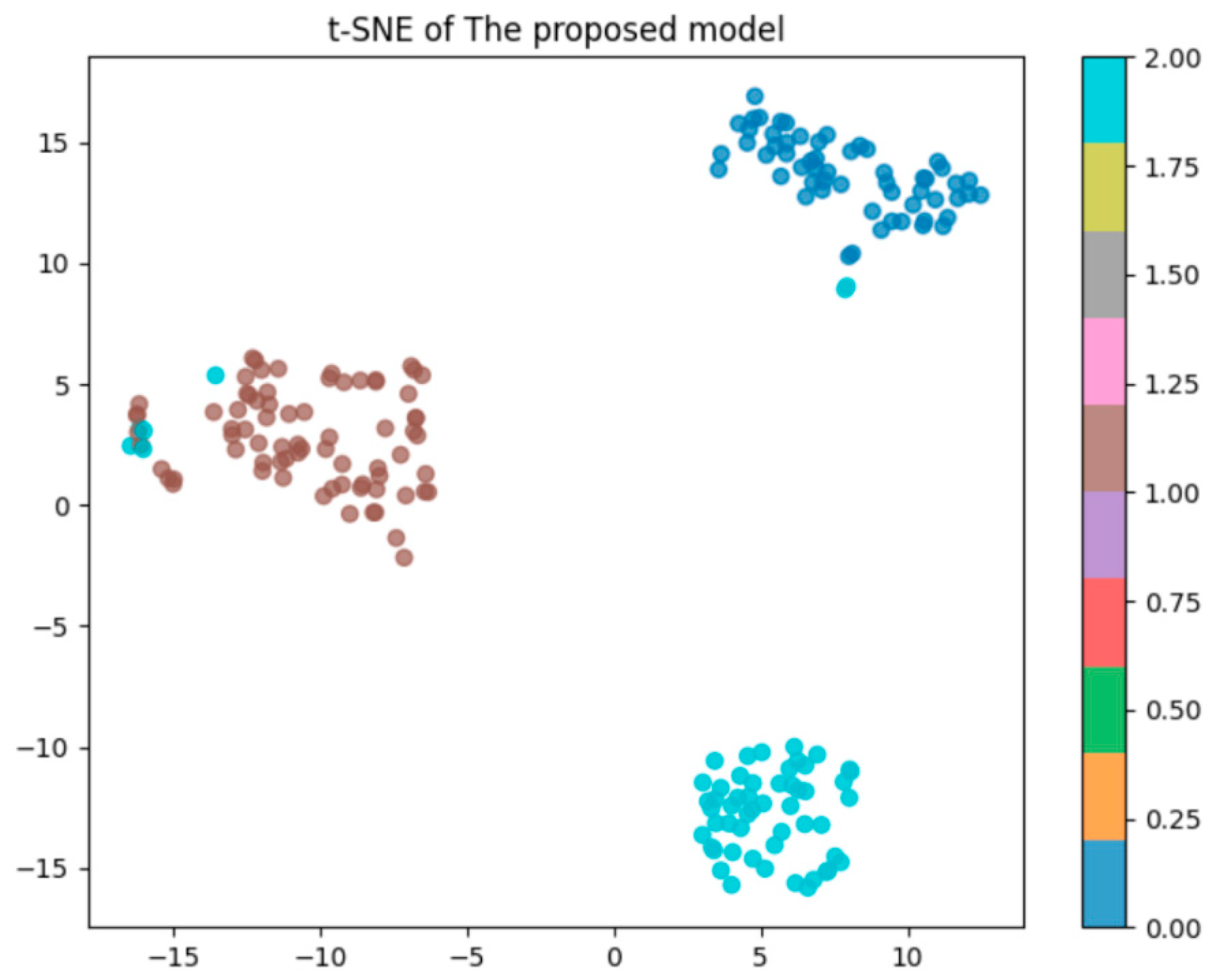

Figure 3, Figure 4, and Figure 5 show the main steps of the proposed approach: video preprocessing, data augmentation with cGAN, person detection with YOLOv8, feature extraction with a CNN, class balancing with SMOTE, data splitting, conversion of features into spikes, and final classification with a Spiking Neural Network.

Table 2 presents a comparative summary of recent studies addressing similar classification tasks, highlighting the techniques used, publication years, and achieved accuracies. The proposed study outperforms previous approaches, achieving the highest accuracy of 99.47%, which demonstrates the effectiveness of integrating GAN-based augmentation, YOLO for detection, and the hybrid CNN-SNN architecture for classification.