Learning with Errors: A Lattice-Based Keystone of Post-Quantum Cryptography

Abstract

1. Introduction

2. Preliminaries

3. Lattices

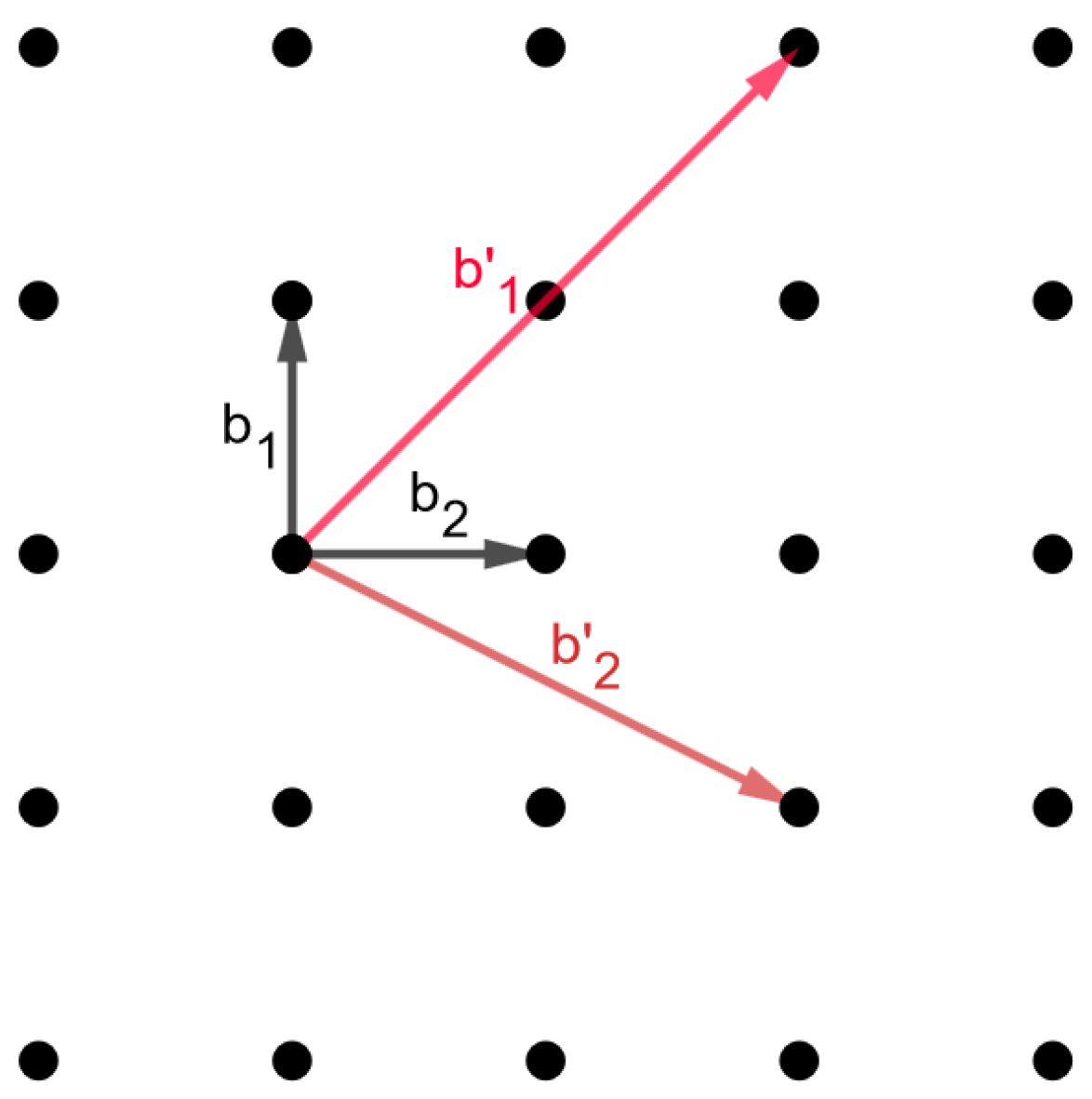

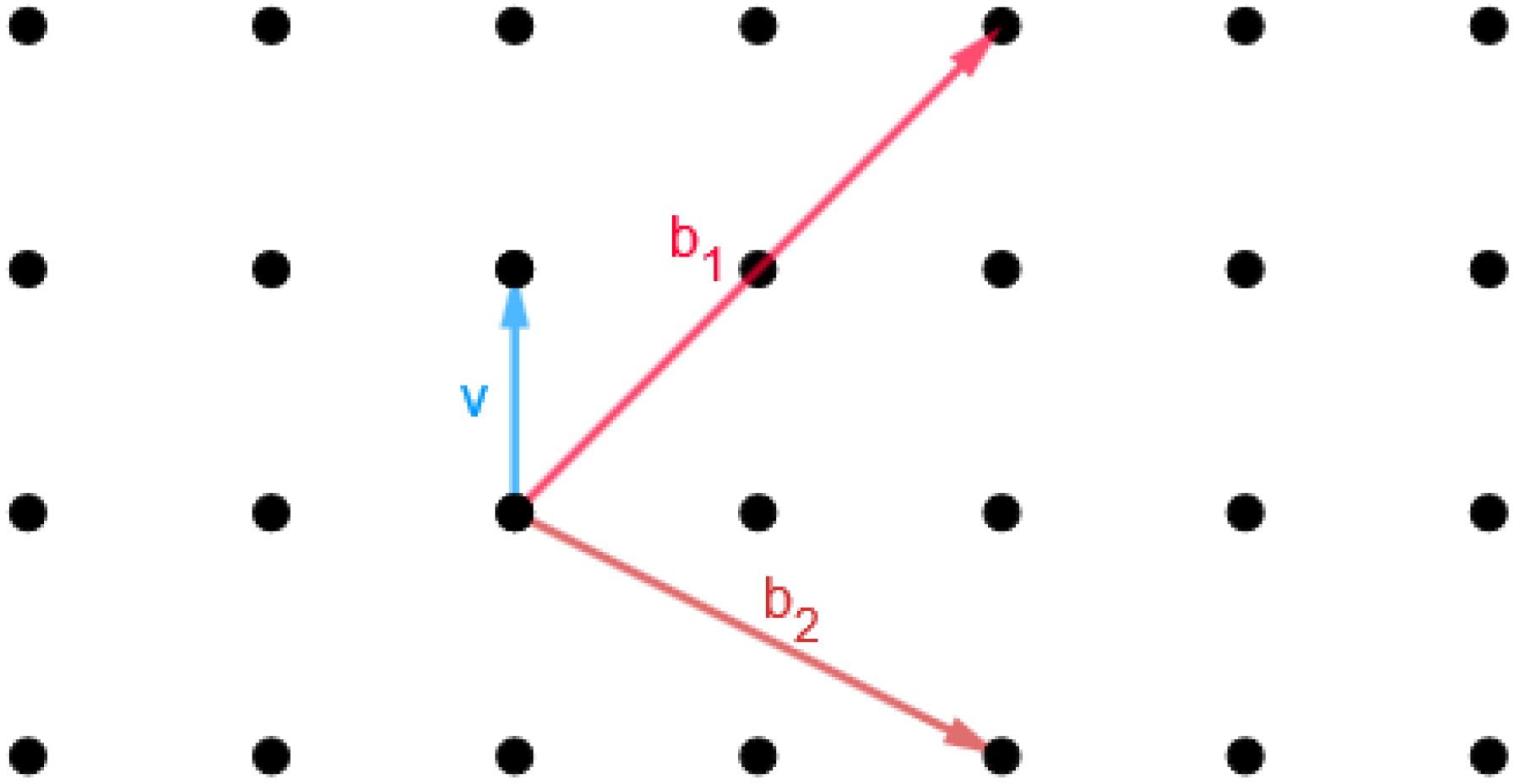

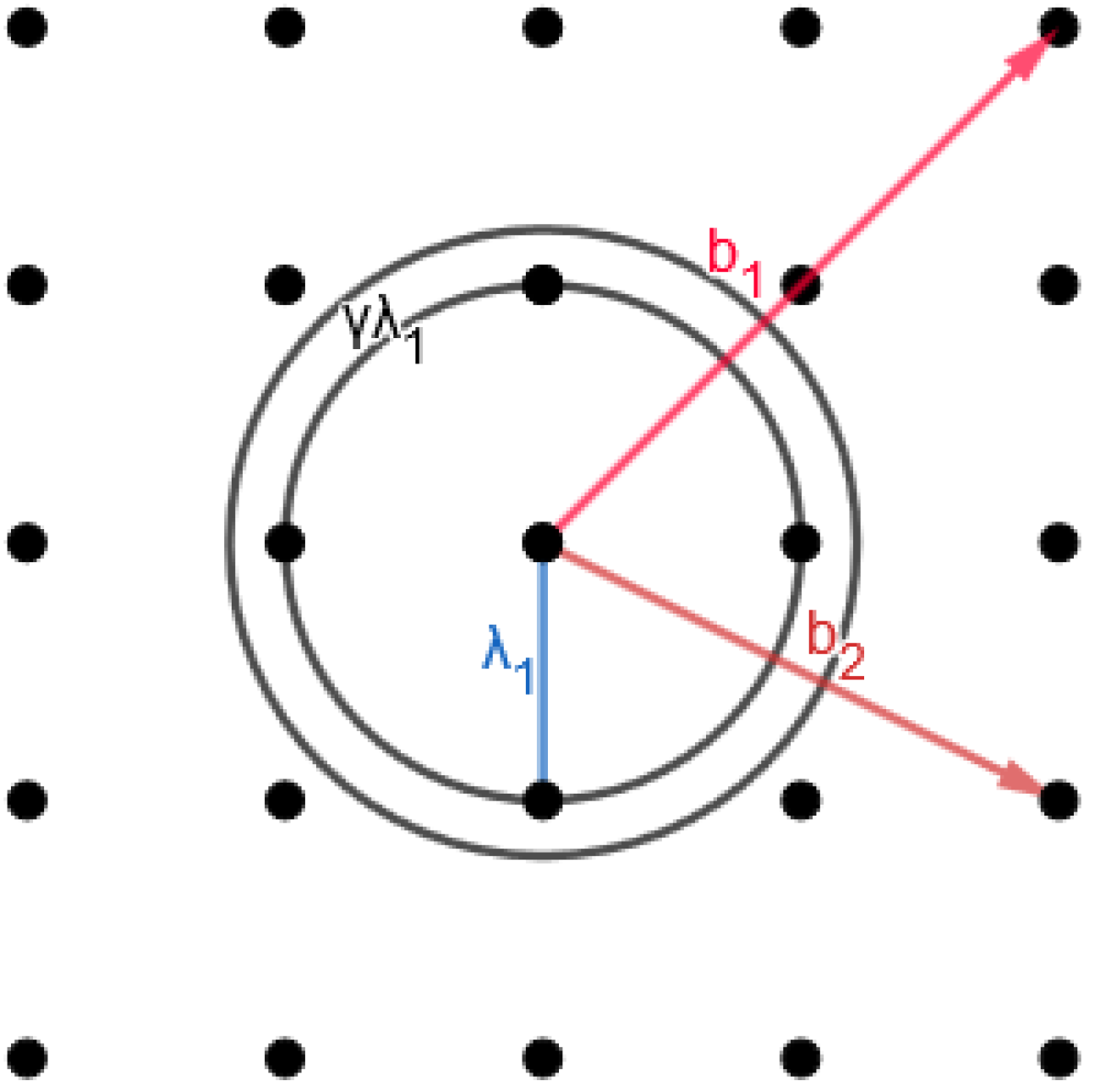

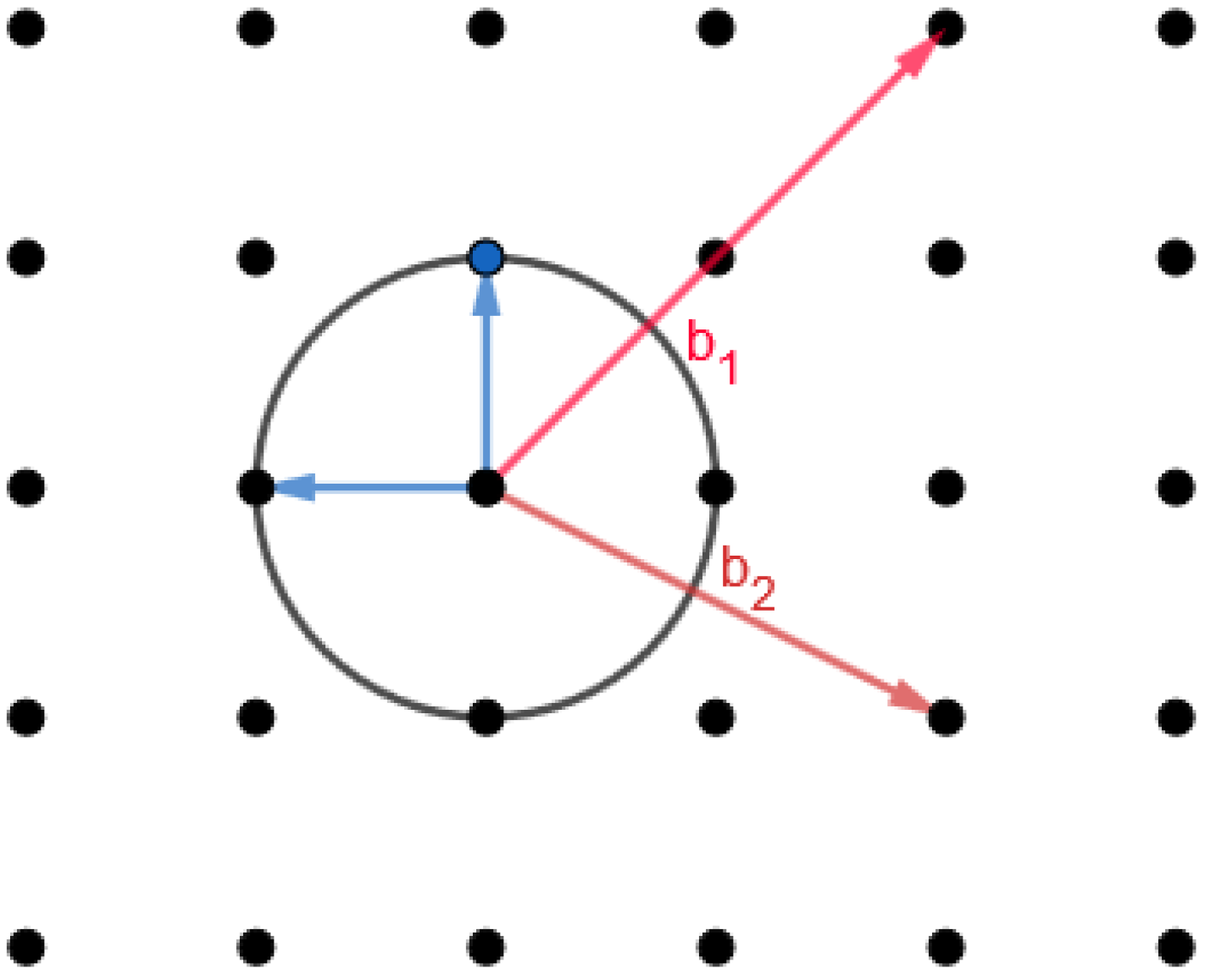

3.1. Basic Definitions

- 1.

- and iff

- 2.

- 3.

- and

- 4.

- , .

3.2. Computational Lattice Problems

3.2.1. Shortest Vector Problem (SVP)

3.2.2. Closest Vector Problem (CVP)

3.2.3. Shortest Independent Vector Problem (SIVP)

3.3. Computational Lattice Problems and Complexity

3.4. Quantum Computers and Lattices

4. Lattice-Based Cryptography

5. Learning with Errors (LWE)

5.1. The Learning with Errors Problem

5.2. The LWE Cryptosystem

- Private key. A uniformly random vector is chosen.s is the private key.

- Public key. m vectors are selected independently from the uniform distribution.There are elements (error offsets) chosen independently, , according to .The public key of the cryptosystem is , where .

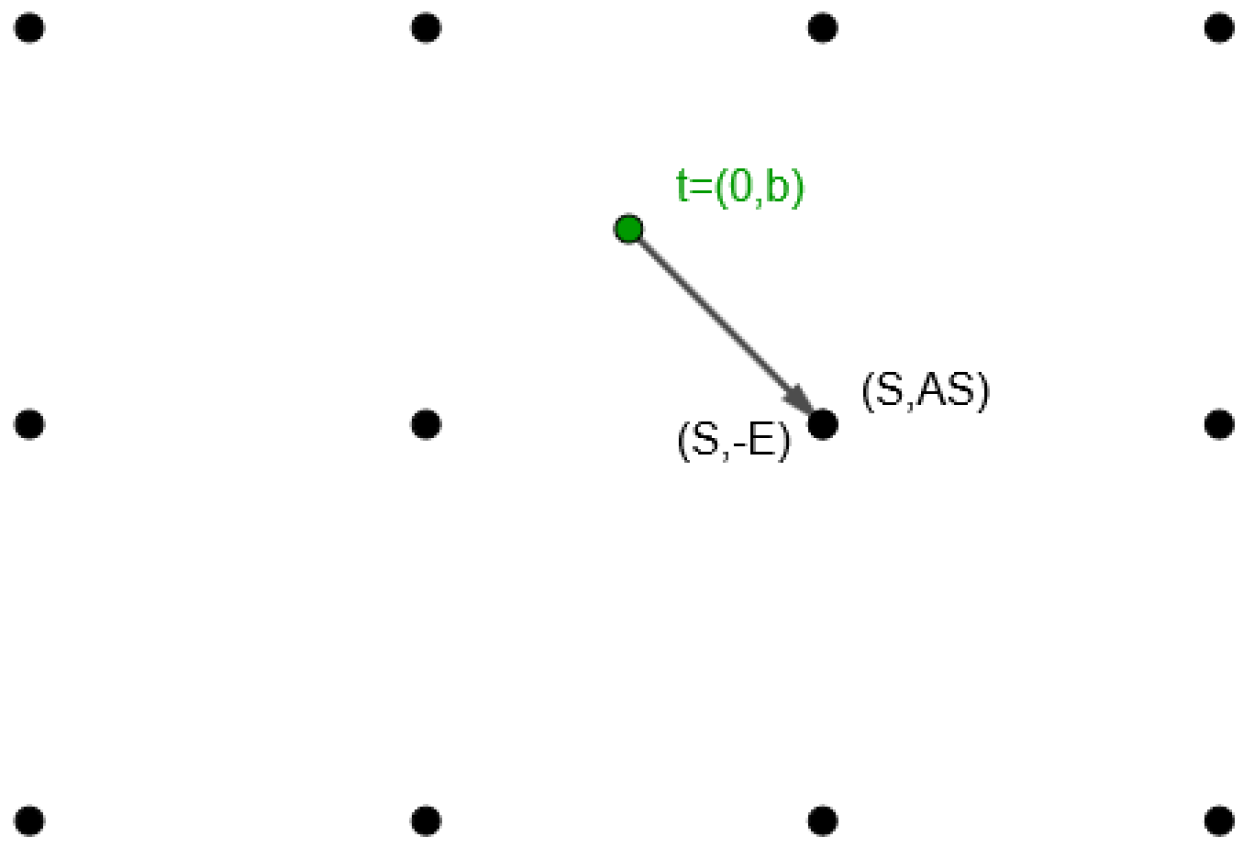

- Encryption. To be able to encrypt a bit, a random set S is chosen uniformly among all subsets of .The encryption holds if the bit is 0 and if the bit is 1.

- Decryption. The decryption of a pair is 0 if is closer to 0 than to modulo q. Otherwise, the decryption is 1.
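The four steps above can be sketched in a few lines of Python. This is a toy illustration only, written against the standard form of Regev's single-bit scheme ($b_i = \langle \mathbf{a}_i, \mathbf{s} \rangle + e_i$, subset-sum encryption, threshold decryption). Everything concrete in it is an assumption rather than something taken from the text: the parameters $n = 8$, $m = 16$, $q = 257$, the helper names `keygen`, `encrypt`, `decrypt` and `circ_dist`, and the bounded uniform error in $\{-1, 0, 1\}$ that stands in for the error distribution $\chi$.

```python
import random

def circ_dist(x, y, q):
    """Distance between x and y on the cycle Z_q."""
    d = (x - y) % q
    return min(d, q - d)

def keygen(n, m, q, noise_bound, rng):
    # Private key: uniformly random s in Z_q^n.
    s = [rng.randrange(q) for _ in range(n)]
    # Public key: m pairs (a_i, b_i) with b_i = <a_i, s> + e_i mod q.
    pk = []
    for _ in range(m):
        a = [rng.randrange(q) for _ in range(n)]
        e = rng.randint(-noise_bound, noise_bound)  # assumed stand-in for chi
        b = (sum(x * y for x, y in zip(a, s)) + e) % q
        pk.append((a, b))
    return s, pk

def encrypt(pk, bit, q, rng):
    n = len(pk[0][0])
    # Uniform random subset of the m samples: keep each index with probability 1/2.
    subset = [pair for pair in pk if rng.getrandbits(1)]
    a_sum, b_sum = [0] * n, 0
    for a, b in subset:
        a_sum = [(x + y) % q for x, y in zip(a_sum, a)]
        b_sum = (b_sum + b) % q
    if bit == 1:
        b_sum = (b_sum + q // 2) % q  # shift by floor(q/2) to encode a 1
    return a_sum, b_sum

def decrypt(s, ct, q):
    a, b = ct
    d = (b - sum(x * y for x, y in zip(a, s))) % q
    # 0 if d is closer to 0 than to floor(q/2) modulo q, otherwise 1.
    return 0 if circ_dist(d, 0, q) < circ_dist(d, q // 2, q) else 1

rng = random.Random(0)
s, pk = keygen(n=8, m=16, q=257, noise_bound=1, rng=rng)
print(decrypt(s, encrypt(pk, 1, 257, rng), 257))  # prints 1
```

With these toy values the accumulated error is at most $m \cdot 1 = 16$, well below $q/4$, so decryption never fails. The parameters are far too small to offer any security.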

| Algorithm 1: LWE |

|

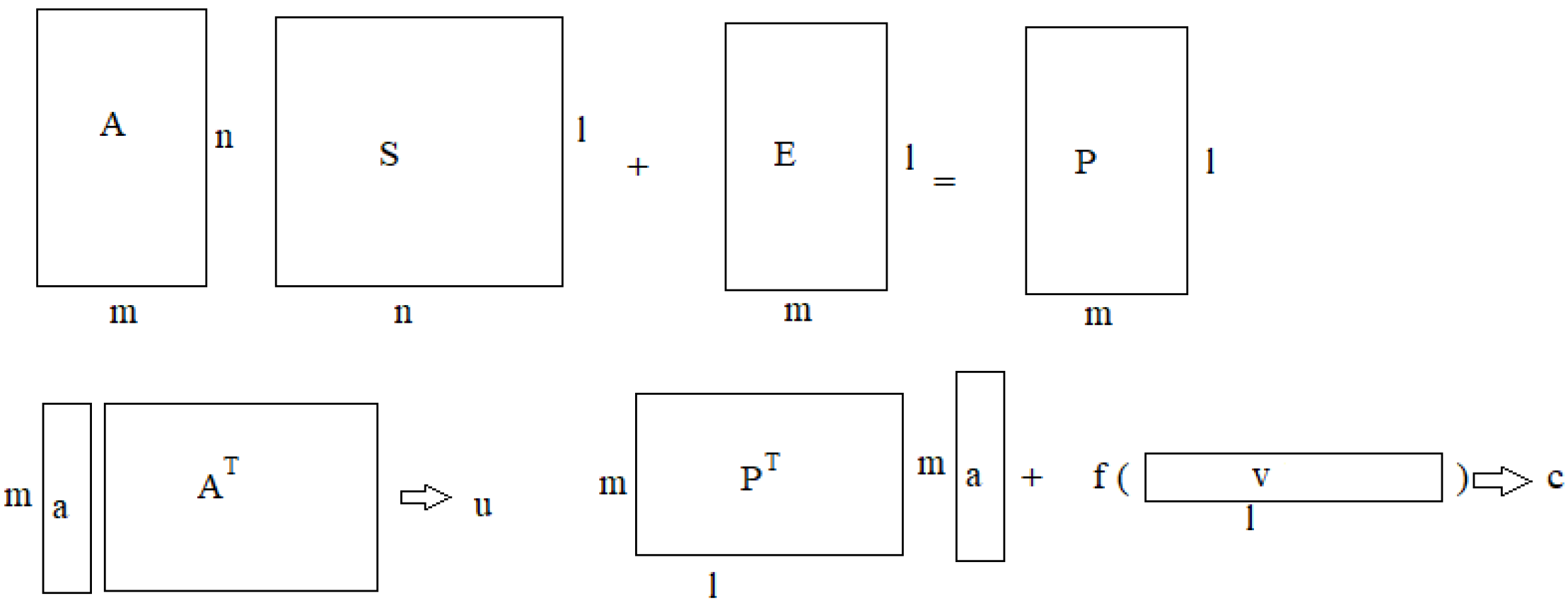

Private key. A matrix is chosen uniformly at random. S is the private key. Public key. A matrix is chosen uniformly at random and a matrix is chosen, whose each element entries are according to . The public key is . Encryption. Given a message and the public key , a vector is chosen uniformly at random. The output is the ciphertext . Decryption. Given the private key and the ciphertext , recover the plaintext . |
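As a rough illustration of the matrix form of the scheme, the sketch below follows the multi-bit LWE cryptosystem as it is commonly presented in the literature. The exact dimensions and maps are not recoverable from the text here, so all of the following are assumptions: $S \in \mathbb{Z}_q^{n \times l}$, $A \in \mathbb{Z}_q^{m \times n}$, $P = AS + E$, messages $v \in \mathbb{Z}_t^l$, a random vector $a \in \{-r, \ldots, r\}^m$, ciphertext $(u, c) = (A^T a, P^T a + \mathrm{encode}(v))$, and the scaling map `encode` that multiplies by $q/t$ and rounds. The function names and the toy parameters are likewise hypothetical.

```python
import random

def mat_T_vec(M, x, q):
    """Return M^T x mod q for a (rows x cols) matrix M and a length-rows vector x."""
    rows, cols = len(M), len(M[0])
    return [sum(M[i][j] * x[i] for i in range(rows)) % q for j in range(cols)]

def encode(v, q, t):
    # Assumed message encoding: scale Z_t into Z_q by q/t, rounding to nearest.
    return [((q * x + t // 2) // t) % q for x in v]

def decode(c, q, t):
    # Inverse of encode up to small errors: scale back by t/q and round.
    return [((t * x + q // 2) // q) % t for x in c]

def keygen(n, m, l, q, bound, rng):
    S = [[rng.randrange(q) for _ in range(l)] for _ in range(n)]  # private key
    A = [[rng.randrange(q) for _ in range(n)] for _ in range(m)]
    # Bounded uniform noise is an assumed stand-in for the error distribution.
    E = [[rng.randint(-bound, bound) for _ in range(l)] for _ in range(m)]
    P = [[(sum(A[i][k] * S[k][j] for k in range(n)) + E[i][j]) % q
          for j in range(l)] for i in range(m)]  # P = AS + E
    return S, (A, P)

def encrypt(pk, v, q, t, r, rng):
    A, P = pk
    a = [rng.randint(-r, r) for _ in range(len(A))]
    u = mat_T_vec(A, a, q)                                   # u = A^T a
    c = [(x + y) % q for x, y in zip(mat_T_vec(P, a, q), encode(v, q, t))]
    return u, c                                              # c = P^T a + encode(v)

def decrypt(S, ct, q, t):
    u, c = ct
    Su = mat_T_vec(S, u, q)                                  # S^T u
    return decode([(x - y) % q for x, y in zip(c, Su)], q, t)

rng = random.Random(0)
q, t = 4093, 4
S, pk = keygen(n=8, m=16, l=4, q=q, bound=1, rng=rng)
print(decrypt(S, encrypt(pk, [3, 0, 2, 1], q, t, 1, rng), q, t))  # prints [3, 0, 2, 1]
```

Under these assumptions $c - S^T u = E^T a + \mathrm{encode}(v)$, and each coordinate of $E^T a$ has magnitude at most $m \cdot r \cdot 1 = 16$, far below $q/(2t)$, so rounding recovers $v$ exactly. The values are chosen for readability and are not secure.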

5.3. An Efficient Variant of LWE

- Private key. Choose a matrix $S$ uniformly at random. $S$ is the private key.

- Public key. Choose a matrix $A$ uniformly at random and compute the matrix product $C = AS$. Choose a suitable mapping $f$ that transforms the elements of the matrix $C$ and maps them to elements of a matrix $D$, i.e., $f$ is a suitable correspondence. $D$ is obtained as a matrix product involving $F$, where $F$ is the matrix transformation of $f$. Choose an error matrix $E$, where each entry is chosen independently from an error distribution. The public key is $(A, P)$. Each entry of $P$ is built from the corresponding element of the matrix $D$, which results from the linear transformation and the matrix $C$.

- Encryption. To encrypt a message $v$, define the encoded vector by applying the message-encoding function coordinate-wise to $v$. Choose a vector uniformly at random. The resulting pair is the ciphertext.

- Decryption. Given a ciphertext and the private key $S$, compute the decryption vector. Output the plaintext $v$, where each coordinate $v_i$ is the message value whose encoding is closest to the corresponding coordinate of the decryption vector.

5.4. Certain Instances of the Variant

5.4.1. The Sum of Two Entries

| Algorithm 2: KeyGen(1): Key Generation 1 |

|

- The private key is chosen uniformly at random and is such that

- It is selected

- Compute the product , such that

- With the aid of the linear map and the matrix transformation, compute the matrix product , such that

- Select matrix

- The public key is

5.4.2. The Cantor Pairing Function
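For reference, the Cantor pairing function named in this subsection is the bijection $\pi(x, y) = \frac{(x + y)(x + y + 1)}{2} + y$ from pairs of non-negative integers to non-negative integers. The Python sketch below shows only the function and its inverse. The helper names `cantor_pair` and `cantor_unpair` are illustrative, and the way the pairing is fed into key generation is not reproduced here.

```python
import math

def cantor_pair(x, y):
    """Map a pair of non-negative integers to a single non-negative integer."""
    return (x + y) * (x + y + 1) // 2 + y

def cantor_unpair(z):
    """Invert cantor_pair: recover (x, y) from z."""
    # w = x + y is the largest integer with w(w+1)/2 <= z.
    w = (math.isqrt(8 * z + 1) - 1) // 2
    y = z - w * (w + 1) // 2
    return w - y, y

print(cantor_pair(2, 3))   # prints 18
print(cantor_unpair(18))   # prints (2, 3)
```

Using `math.isqrt` keeps the inverse exact for arbitrarily large integers, which a floating-point square root would not guarantee.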

| Algorithm 3: KeyGen(2): Key Generation 2 |

|

5.4.3. An Inverse Transformation

| Algorithm 4: KeyGen(3): Key Generation 3 |

|

- The private key is chosen uniformly at random and is such that

- The parameter is , so the public key is generated with the help of the uniformly at random .

- It is chosen

- Compute the product , such that

- With the aid of the linear map , with matrix transformation, compute the matrix product , such that

- Select matrix , each entry of which is selected according to .

- Compute

- The public key is .

5.5. Attacks and Threats

| Algorithm 5: Dual Attack |

|

6. Conclusions and Future Research

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest



© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sabani, M.E.; Savvas, I.K.; Garani, G. Learning with Errors: A Lattice-Based Keystone of Post-Quantum Cryptography. Signals 2024, 5, 216-243. https://doi.org/10.3390/signals5020012
