A Systematic Review of Electroencephalography-Based Emotion Recognition of Confusion Using Artificial Intelligence

Abstract

1. Introduction

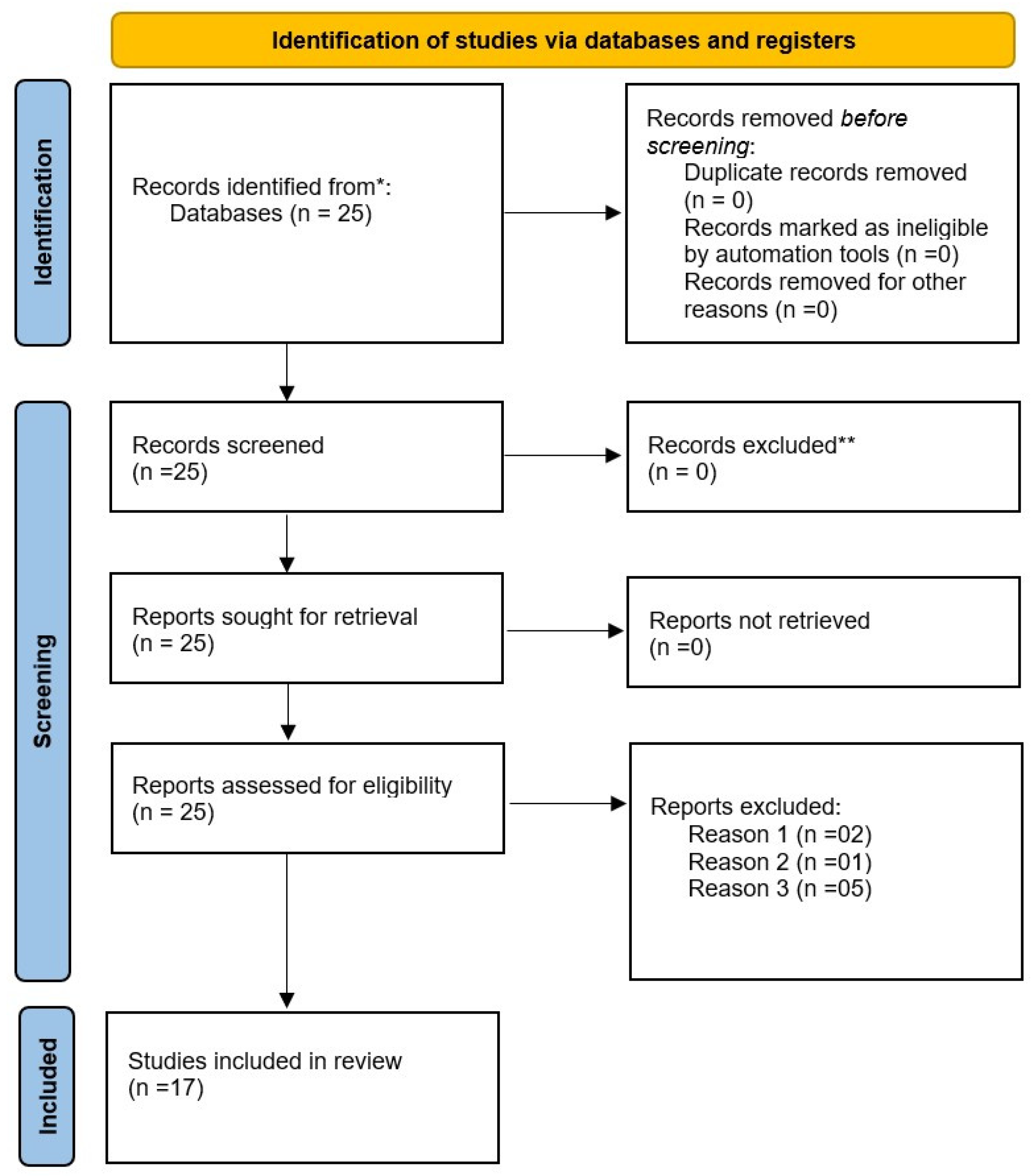

2. Methodology

- (a) Selection of Articles:

- (b) Screening of Articles:

3. Results

3.1. Datasets

3.2. Approaches for EEG Preprocessing

3.3. Types of EEG Features

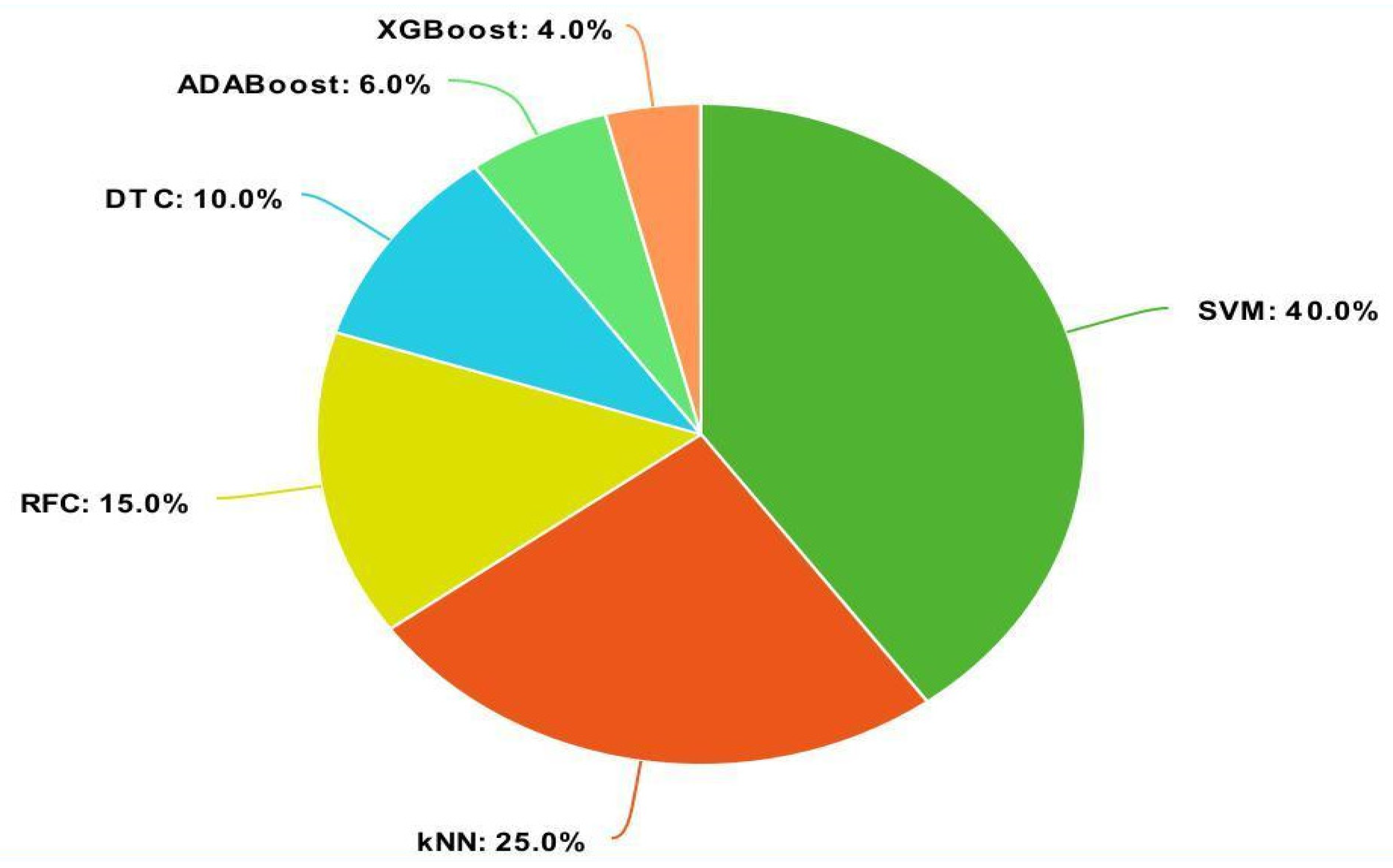

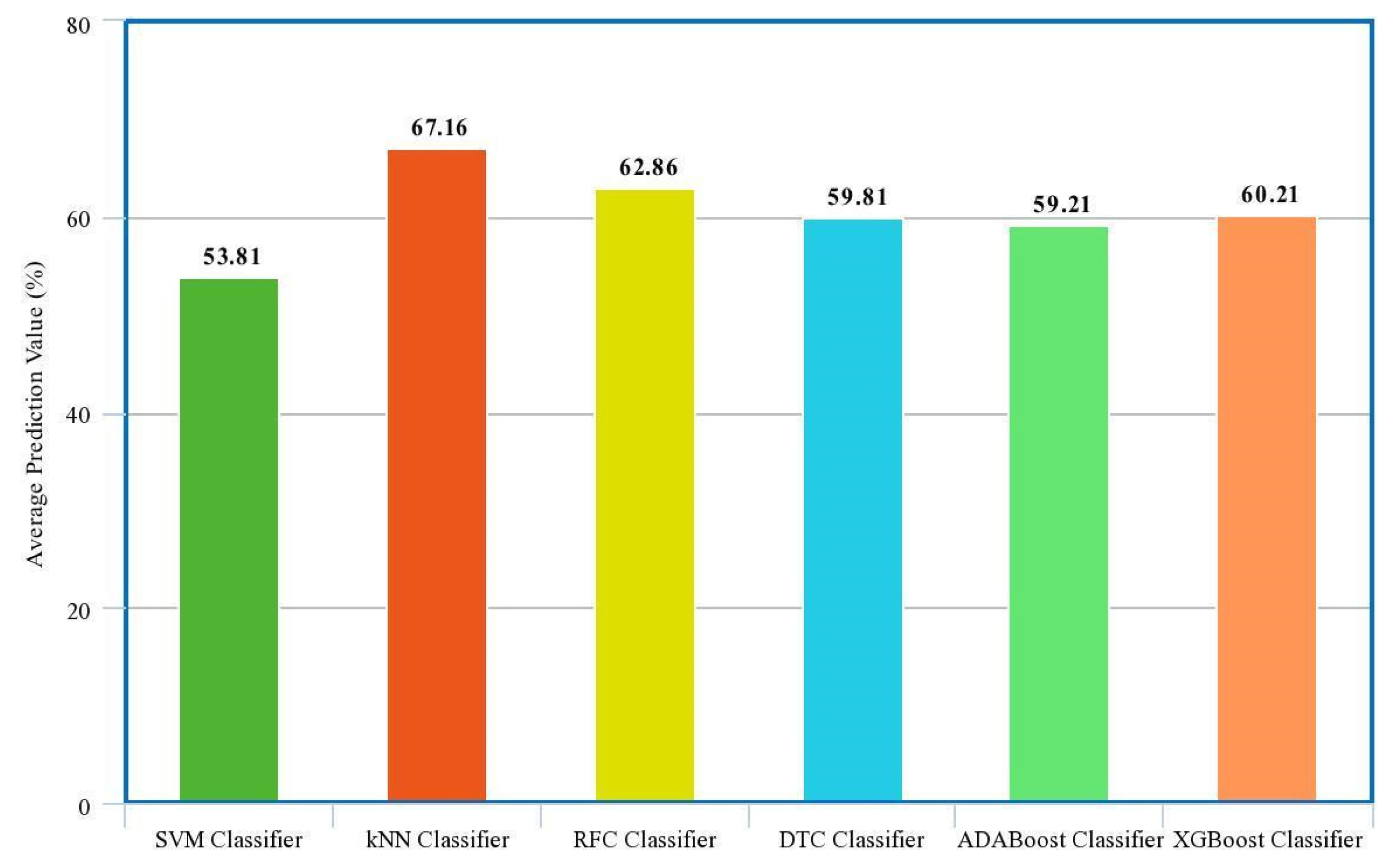

3.4. Machine Learning (ML) Architectures and Performance Comparison

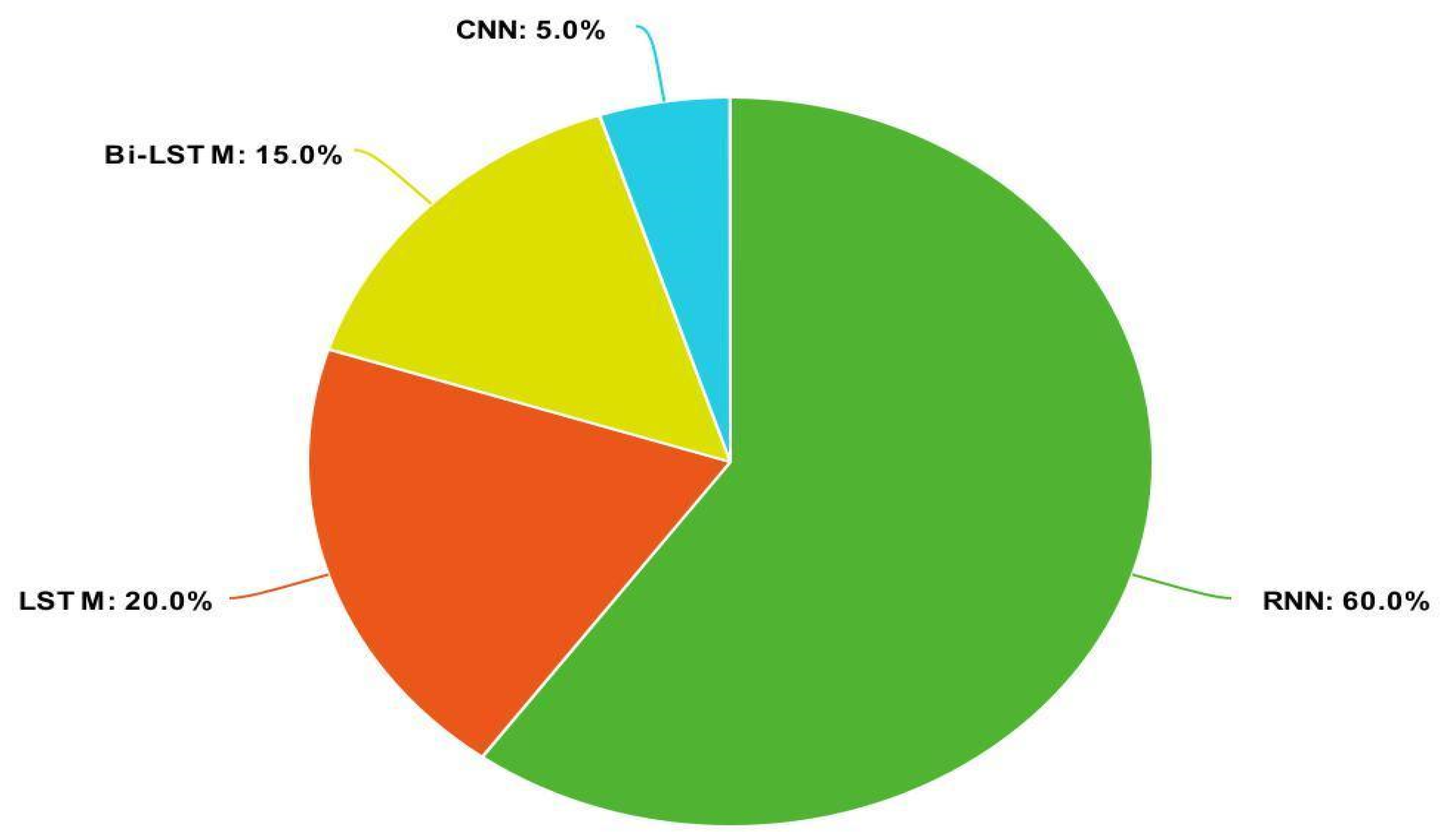

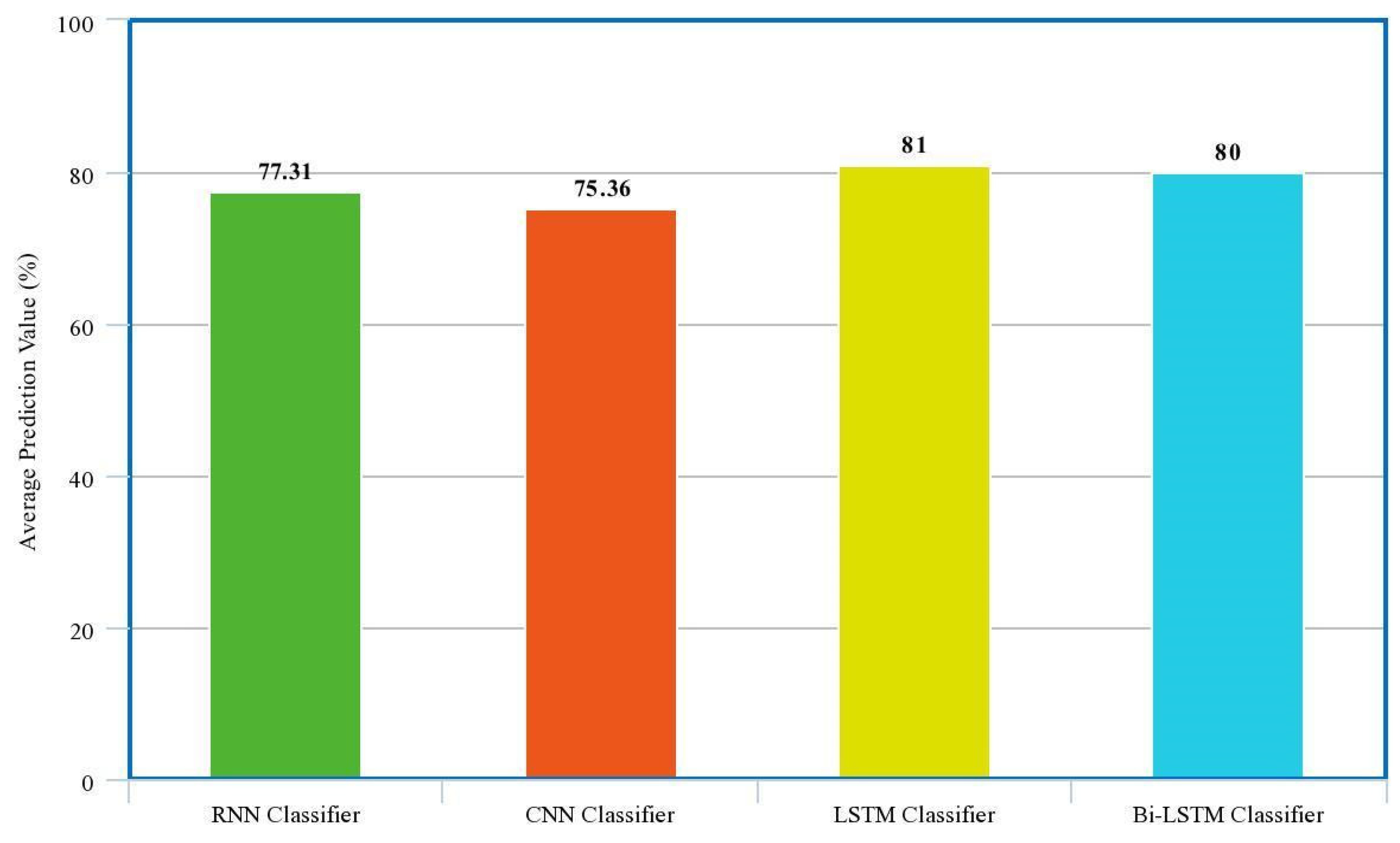

3.5. The Need for Deep Learning

| Research Gap | Associated Research Problem | Suggested Future Research |
|---|---|---|
| Bias–variance dilemma in existing confusion emotion models | Model performance is hindered, and predictions are unreliable for new and unseen data. | Metadata such as demographics play an important role in human emotional states [48] and can therefore be considered as inputs for model training. Use advanced EEG preprocessing techniques to remove noise from EEG data. Use advanced feature selection techniques to identify the optimal EEG features for predicting confusion. Construct models that balance the bias–variance tradeoff. |
| Limited research on the development of cost-sensitive confusion emotion models | Well-performing models are unaffordable for end users. | Research the construction of cost-sensitive models that trade off cost against model performance. |
| Limited datasets | Developed models cannot be tested on independent datasets to assess their prediction reliability on new and unseen data. | Conduct human experiments to collect EEG recordings and create datasets. |
| Low generalizability of existing confusion emotion models | Models would not perform well on new data with different levels of complexity. | Collect EEG recordings from a broader demographic population of learners. Test the models on EEG recordings from real-world populations rather than recordings obtained in a laboratory setup. |

3.6. Deep Learning Architectures and Performance Comparison

4. Discussion and Future Directions

4.1. Answering Research Question One

- (a) Limited creation of datasets that collect EEG recordings through human experiments. This is due to the following reasons: (i) the difficulty of designing stimuli that induce confusion within an educational setting; (ii) the unavailability of standardized psychological procedures for deliberately inducing confusion through learning activities; and (iii) practical challenges of EEG acquisition, such as the need for the necessary technological infrastructure, legal approvals, time, and financial commitment to acquire a significant number of EEG recordings from participants [46].

- (b) Limited publicly accessible datasets. Publicly accessible datasets help overcome the obstacles related to EEG acquisition. Their unavailability may discourage researchers who are interested in this domain but cannot overcome the above obstacles. The availability of a wide range of datasets offers diverse opportunities to analyze EEG data and extract previously undiscovered information, which would promote more research. Hence, in any field of study, increasing the availability of datasets is just as crucial as developing the datasets themselves.

4.2. Answering Research Question Two

4.3. Answering Research Question Three

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| No. | Year of Publication | Authors | Name of Journal/Conference Proceedings | Title | Citation |
|---|---|---|---|---|---|
| 1 | 2013 | Wang, Haohan, Yiwei Li, Xiaobo Hu, Yucong Yang, Zhu Meng, and Kai-min Chang | AIED Workshops | Using EEG to Improve Massive Open Online Courses Feedback Interaction | [5] |
| 2 | 2016 | Yang, Jingkang, Haohan Wang, Jun Zhu, and Eric P. Xing | arXiv preprint arXiv:1611.10252 | SeDMiD for Confusion Detection: Uncovering Mind State from Time Series Brain Wave Data | [8] |
| 3 | 2017 | Ni, Zhaoheng, Ahmet Cem Yuksel, Xiuyan Ni, Michael I. Mandel, and Lei Xie | 8th ACM International Conference on Bioinformatics, Computational Biology, and Health Informatics | Confused or not confused? Disentangling brain activity from EEG data using bi-directional LSTM recurrent neural networks | [9] |
| 4 | 2018 | Yun Zhou, Tao Xu, Shiqian Li, Shaoqi Li | 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) | Confusion State Induction and EEG-based Detection in Learning | [10] |
| 5 | 2021 | Nabil Ibtehaz, Mahmuda Naznin | NSysS’21: Proceedings of the 8th International Conference on Networking, Systems and Security | Determining confused brain activity from EEG sensor signals | [11] |
| 6 | 2018 | A. Tahmassebi, A. H. Gandomi, and A. Meyer-Baese | IEEE Congress on Evolutionary Computation (CEC), Rio de Janeiro, Brazil | An Evolutionary Online Framework for MOOC Performance Using EEG Data | [12] |
| 7 | 2019 | Zhou, Yun, Tao Xu, Shaoqi Li, and Ruifeng Shi | Universal Access in the Information Society | Beyond engagement: an EEG-based methodology for assessing user’s confusion in an educational game | [13] |
| 8 | 2019 | Bikram Kumar, Deepak Gupta, Rajat Subhra Goswami | International Journal of Innovative Technology and Exploring Engineering | Classification of Student’s Confusion Level in E-Learning using Machine Learning | [14] |
| 9 | 2019 | Erwianda, Maximillian Sheldy Ferdinand, Sri Suning Kusumawardani, Paulus Insap Santosa, and Meizar Raka Rimadana | International Seminar on Research of Information Technology and Intelligent Systems | Improving confusion-state classifier model using XGBoost and tree-structured Parzen estimator | [15] |
| 10 | 2019 | Wang, Yingying, Zijian Zhu, Biqing Chen, and Fang Fang | Cognition and Emotion, volume 33, no. 4 | Perceptual learning and recognition confusion reveal the underlying relationships among the six basic emotions | [16] |
| 11 | 2019 | Claire Receli M. Reñosa, Argel A. Bandala, Ryan Rhay P. Vicerra | 2019 IEEE 11th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management (HNICEM) | Classification of Confusion Level Using EEG Data and Artificial Neural Networks | [17] |
| 12 | 2021 | Dakoure, Caroline, Mohamed Sahbi Benlamine, and Claude Frasson | International FLAIRS Conference Proceedings, vol. 34 | Confusion detection using cognitive ability tests | [18] |
| 13 | 2021 | Benlamine, Mohamed Sahbi, and Claude Frasson | Intelligent Tutoring Systems: 17th International Conference, Proceedings. Springer International Publishing | Confusion Detection Within a 3D Adventure Game | [19] |
| 14 | 2021 | He, Shuwei, Yanran Xu, and Lanyi Zhong | IEEE 2nd International Conference on Artificial Intelligence and Computer Engineering (ICAICE) | EEG-based Confusion Recognition Using Different Machine Learning Methods | [20] |
| 15 | 2022 | Daghriri, Talal, Furqan Rustam, Wajdi Aljedaani, Abdullateef H. Bashiri, and Imran Ashraf | Electronics, volume 11, no. 18 | Electroencephalogram Signals for Detecting Confused Students in Online Education Platforms with Probability-Based Features | [21] |
| 16 | 2022 | Hashim Abu-gellban, Yu Zhuang, Long Nguyen, Zhenkai Zhang, Essa Imhmed | 2022 IEEE 46th Annual Computers, Software, and Applications Conference (COMPSAC) | CSDLEEG: identifying confused students based on EEG using multi-view deep learning | [22] |
| 17 | 2022 | Xiuping Men, Xia Li | International Journal of Education and Humanities | Detecting the confusion of students in massive open online courses using EEG | [23] |
| 18 | 2022 | Na Li, John D. Kelleher, Robert Ross | arXiv preprint arXiv:2206.02436 | Detecting interlocutor confusion in situated human-avatar dialogue: a pilot study | REJECTED (Reason 03 of Exclusion Criteria) |
| 19 | 2022 | Na Li, Robert Ross | arXiv preprint arXiv:2206.01493 | Transferring studies across embodiments: a case study in confusion detection | REJECTED (Reason 03 of Exclusion Criteria) |
| 20 | 2022 | Na Li, Robert Ross | arXiv preprint arXiv:2208.09367 | Dialogue policies for confusion mitigation in situated HRI | REJECTED (Reason 03 of Exclusion Criteria) |
| 21 | 2022 | Anala, Venkata Ajay Surendra Manikanta, and Ganesh Bhumireddy | Thesis submitted to the Faculty of Computing at Blekinge Institute of Technology | Comparison of machine learning algorithms on detecting the confusion of students while watching MOOCS | REJECTED (Reason 03 of Exclusion Criteria) |
| 22 | 2023 | Li, Na, and Robert Ross | Proceedings of the 2023 ACM/IEEE International Conference on Human-Robot Interaction, pp. 142–151 | Hmm, You Seem Confused! Tracking Interlocutor Confusion for Situated Task-Oriented HRI | REJECTED (Reason 03 of Exclusion Criteria) |
| 23 | 2023 | Rashmi Gupta, Jeetendra Kumar | 5th IEEE Biennial International Conference on Nascent Technologies in Engineering (ICNTE) | Uncovering of learner’s confusion using data during online learning | REJECTED (Reason 03 of Exclusion Criteria) |
| 24 | 2023 | Tao Xu, Jiabao Wang, Gaotian Zhang, Ling Zhang, and Yun Zhou | Journal of Neural Engineering | Confused or not: decoding brain activity and recognizing confusion in reasoning learning using EEG | REJECTED (Reason 02 of Exclusion Criteria) |
| 25 | 2019 | Borges, Niklas, Ludvig Lindblom, Ben Clarke, Anna Gander, and Robert Lowe | 2019 8th International Conference on Affective Computing and Intelligent Interaction Workshops and Demos (ACIIW) | Classifying confusion: autodetection of communicative misunderstandings using facial action units | REJECTED (Reason 01 of Exclusion Criteria) |
| 26 | 2020 | F. Ibrahim, S. Mutashar, and B. Hamed | IOP Conference Series: Materials Science and Engineering, volume 1105 | A Review of an Invasive and Non-invasive Automatic Confusion Detection Techniques | REJECTED (Reason 02 of Exclusion Criteria) |

References

- Bhattacharya, S.; Singh, A.; Hossain, M. Health system strengthening through massive open online courses (MOOCs) during the COVID-19 pandemic: An analysis from the available evidence. J. Educ. Health Promot. 2020, 9, 195.
- Ganepola, D. Assessment of Learner Emotions in Online Learning via Educational Process Mining. In Proceedings of the 2022 IEEE Frontiers in Education Conference (FIE), Uppsala, Sweden, 8–11 October 2022; pp. 1–3.
- El-Sabagh, H.A. Adaptive e-learning environment based on learning styles and its impact on development students’ engagement. Int. J. Educ. Technol. High. Educ. 2021, 18, 53.
- Wang, H.; Li, Y.; Hu, X.S.; Yang, Y.; Meng, Z.; Chang, K.K. Using EEG to Improve Massive Open Online Courses Feedback Interaction. CEUR Workshop Proc. 2013, 1009, 59–66. Available online: https://experts.illinois.edu/en/publications/using-eeg-to-improve-massive-open-online-courses-feedback-interac (accessed on 8 April 2024).
- Wang, H. Confused Student EEG Brainwave Data. Kaggle, 28 March 2018. Available online: https://www.kaggle.com/datasets/wanghaohan/confused-eeg/code (accessed on 11 February 2023).
- Xu, T.; Wang, J.; Zhang, G.; Zhou, Y. Confused or not: Decoding brain activity and recognizing confusion in reasoning learning using EEG. J. Neural Eng. 2023, 20, 026018.
- Xu, T.; Wang, X.; Wang, J.; Zhou, Y. From Textbook to Teacher: An Adaptive Intelligent Tutoring System Based on BCI. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine & Biology Society, Mexico City, Mexico, 1–5 November 2021; pp. 7621–7624.
- Yang, J.; Wang, H.; Zhu, J.; Xing, E.P. SeDMiD for Confusion Detection: Uncovering Mind State from Time Series Brain Wave Data. arXiv 2016, arXiv:1611.10252.
- Ni, Z.; Yuksel, A.C.; Ni, X.; Mandel, M.I.; Xie, L. Confused or not Confused? Disentangling Brain Activity from EEG Data Using Bidirectional LSTM Recurrent Neural Networks. In Proceedings of the 8th ACM International Conference on Bioinformatics, Computational Biology, and Health Informatics (ACM-BCB’17), Boston, MA, USA, 20–23 August 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 241–246.
- Zhou, Y.; Xu, T.; Li, S.; Li, S. Confusion State Induction and EEG-based Detection in Learning. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018; pp. 3290–3293.
- Ibtehaz, N.; Naznin, M. Determining Confused Brain Activity from EEG Sensor Signals. In Proceedings of the 8th International Conference on Networking, Systems and Security (NSysS’21), Cox’s Bazar, Bangladesh, 21–23 December 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 91–96.
- Tahmassebi, A.; Gandomi, A.H.; Meyer-Baese, A. An Evolutionary Online Framework for MOOC Performance Using EEG Data. In Proceedings of the 2018 IEEE Congress on Evolutionary Computation (CEC), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–8.
- Zhou, Y.; Xu, T.; Li, S.; Shi, R. Beyond engagement: An EEG-based methodology for assessing user’s confusion in an educational game. Univ. Access Inf. Soc. 2019, 18, 551–563.
- Kumar, B.; Gupta, D.; Goswami, R.S. Classification of student’s confusion level in e-learning using machine learning. Int. J. Innov. Technol. Explor. Eng. 2019, 9, 346–351. Available online: https://www.sciencegate.app/document/10.35940/ijitee.b1092.1292s19 (accessed on 1 June 2023).
- Erwianda, M.S.F.; Kusumawardani, S.S.; Santosa, P.I.; Rimadana, M.R. Improving Confusion-State Classifier Model Using XGBoost and Tree-Structured Parzen Estimator. In Proceedings of the 2019 International Seminar on Research of Information Technology and Intelligent Systems (ISRITI), Yogyakarta, Indonesia, 5–6 December 2019; pp. 309–313.
- Wang, J.; Wang, M. Review of the emotional feature extraction and classification using EEG signals. Cogn. Robot. 2021, 1, 29–40.
- Renosa, C.R.M.; Bandala, A.A.; Vicerra, R.R.P. Classification of Confusion Level Using EEG Data and Artificial Neural Networks. In Proceedings of the 2019 IEEE 11th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management (HNICEM), Laoag, Philippines, 29 November–1 December 2019; pp. 1–6.
- Dakoure, C.; Benlamine, M.S.; Frasson, C. Confusion detection using cognitive ability tests. Int. Flairs Conf. Proc. 2021, 34.
- Benlamine, M.S.; Frasson, C. Confusion Detection within a 3D Adventure Game. Intell. Tutoring Syst. 2021, 12677, 387–397.
- He, S.; Xu, Y.; Zhong, L. EEG-based Confusion Recognition Using Different Machine Learning Methods. In Proceedings of the 2021 2nd International Conference on Artificial Intelligence and Computer Engineering (ICAICE), Hangzhou, China, 5–7 November 2021; pp. 826–831.
- Daghriri, T.; Rustam, F.; Aljedaani, W.; Bashiri, A.H.; Ashraf, I. Electroencephalogram Signals for Detecting Confused Students in Online Education Platforms with Probability-Based Features. Electronics 2022, 11, 2855.
- Abu-Gellban, H.; Zhuang, Y.; Nguyen, L.; Zhang, Z.; Imhmed, E. CSDLEEG: Identifying Confused Students Based on EEG Using Multi-View Deep Learning. In Proceedings of the 2022 IEEE 46th Annual Computers, Software, and Applications Conference (COMPSAC), Los Alamitos, CA, USA, 1–27 July 2022; pp. 1217–1222.
- Men, X.; Li, X. Detecting the Confusion of Students in Massive Open Online Courses Using EEG. Int. J. Educ. Humanit. 2022, 4, 72–77.
- Islam, R.; Moni, M.A.; Islam, M.; Mahfuz, R.A.; Islam, S.; Hasan, K.; Hossain, S.; Ahmad, M.; Uddin, S.; Azad, A.; et al. Emotion Recognition From EEG Signal Focusing on Deep Learning and Shallow Learning Techniques. IEEE Access 2021, 9, 94601–94624.
- Background on Filters for EEG. Clinical Gate, 24 May 2015. Available online: https://clinicalgate.com/75-clinical-neurophysiology-and-electroencephalography/ (accessed on 13 February 2023).
- Preprocessing. NeurotechEDU. Available online: http://learn.neurotechedu.com/preprocessing/ (accessed on 13 February 2023).
- Al-Fahoum, A.S.; Al-Fraihat, A.A. Methods of EEG Signal Features Extraction Using Linear Analysis in Frequency and Time-Frequency Domains. ISRN Neurosci. 2014, 2014, 730218.
- Geng, X.; Li, D.; Chen, H.; Yu, P.; Yan, H.; Yue, M. An improved feature extraction algorithms of EEG signals based on motor imagery brain-computer interface. Alex. Eng. J. 2022, 61, 4807–4820.
- Nandi, A.; Ahamed, H. Time-Frequency Domain Analysis. Cond. Monit. Vib. Signals 2019, 79–114.
- Harpale, V.K.; Bairagi, V.K. Seizure detection methods and analysis. In Brain Seizure Detection and Classification Using Electroencephalographic Signals; Academic Press: Cambridge, MA, USA, 2022; pp. 51–100.
- Suviseshamuthu, E.S.; Handiru, V.S.; Allexandre, D.; Hoxha, A.; Saleh, S.; Yue, G.H. EEG-based spectral analysis showing brainwave changes related to modulating progressive fatigue during a prolonged intermittent motor task. Front. Hum. Neurosci. 2022, 16, 770053.
- Kim, D.W.; Im, C.H. EEG spectral analysis. In Biological and Medical Physics, Biomedical Engineering; Springer: Singapore, 2018; pp. 35–53.
- Tsipouras, M.G. Spectral information of EEG signals with respect to epilepsy classification. EURASIP J. Adv. Signal Process. 2019, 2019, 10.
- Vanhollebeke, G.; De Smet, S.; De Raedt, R.; Baeken, C.; van Mierlo, P.; Vanderhasselt, M.-A. The neural correlates of Psychosocial Stress: A systematic review and meta-analysis of spectral analysis EEG studies. Neurobiol. Stress 2022, 18, 100452.
- Mainieri, G.; Loddo, G.; Castelnovo, A.; Balella, G.; Cilea, R.; Mondini, S.; Manconi, M.; Provini, F. EEG activation does not differ in simple and complex episodes of disorders of arousal: A spectral analysis study. Nat. Sci. Sleep 2022, 14, 1097–1111.
- An, Y.; Hu, S.; Duan, X.; Zhao, L.; Xie, C.; Zhao, Y. Electroencephalogram emotion recognition based on 3D feature fusion and convolutional autoencoder. Front. Comput. Neurosci. 2021, 15, 743426.
- Uyanık, H.; Ozcelik, S.T.A.; Duranay, Z.B.; Sengur, A.; Acharya, U.R. Use of differential entropy for automated emotion recognition in a virtual reality environment with EEG signals. Diagnostics 2022, 12, 2508.
- Ding, L.; Duan, W.; Wang, Y.; Lei, X. Test-retest reproducibility comparison in resting and the mental task states: A sensor and source-level EEG spectral analysis. Int. J. Psychophysiol. 2022, 173, 20–28.
- Roy, Y.; Banville, H.; Albuquerque, I.; Gramfort, A.; Falk, T.H.; Faubert, J. Deep learning-based electroencephalography analysis: A systematic review. J. Neural Eng. 2019, 16, 051001.
- Ding, Y.; Robinson, N.; Zeng, Q.; Chen, D.; Wai, A.A.P.; Lee, T.-S.; Guan, C. TSception: A Deep Learning Framework for Emotion Detection Using EEG. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–7.
- Wang, J.; Song, Y.; Mao, Z.; Liu, J.; Gao, Q. EEG-Based Emotion Identification Using 1-D Deep Residual Shrinkage Network With Microstate Features. IEEE Sensors J. 2023, 23, 5165–5174.
- Altaheri, H.; Muhammad, G.; Alsulaiman, M.; Amin, S.U.; Altuwaijri, G.A.; Abdul, W.; Bencherif, M.A.; Faisal, M. Deep learning techniques for classification of electroencephalogram (EEG) motor imagery (MI) signals: A review. Neural Comput. Appl. 2021, 35, 14681–14722.
- Lun, X.; Yu, Z.; Chen, T.; Wang, F.; Hou, Y. A simplified CNN classification method for MI-EEG via the electrode pairs signals. Front. Hum. Neurosci. 2020, 14, 338.
- Amin, S.U.; Alsulaiman, M.; Muhammad, G.; Mekhtiche, M.A.; Hossain, M.S. Deep Learning for EEG motor imagery classification based on multi-layer CNNs feature fusion. Futur. Gener. Comput. Syst. 2019, 101, 542–554.
- Joshi, V.M.; Ghongade, R.B.; Joshi, A.M.; Kulkarni, R.V. Deep BiLSTM neural network model for emotion detection using cross-dataset approach. Biomed. Signal Process. Control 2021, 73, 103407.
- Wang, H.; Wu, Z.; Xing, E.P. Removing Confounding Factors Associated Weights in Deep Neural Networks Improves the Prediction Accuracy for Healthcare Applications. Biocomputing 2018, 24, 54–65.
- Khan, S.M.; Liu, X.; Nath, S.; Korot, E.; Faes, L.; Wagner, S.K.; Keane, P.A.; Sebire, N.J.; Burton, M.J.; Denniston, A.K. A global review of publicly available datasets for ophthalmological imaging: Barriers to access, usability, and generalizability. Lancet Digit. Health 2021, 3, 51–66.
- Lodge, J.M.; Kennedy, G.; Lockyer, L.; Arguel, A.; Pachman, M. Understanding difficulties and resulting confusion in Learning: An integrative review. Front. Educ. 2018, 3, 49.
- Young, A.T.; Xiong, M.; Pfau, J.; Keiser, M.J.; Wei, M.L. Artificial intelligence in dermatology: A primer. J. Investig. Dermatol. 2020, 140, 1504–1512.
- Maria, S.; Chandra, J. Preprocessing Pipelines for EEG. SHS Web Conf. 2022, 139, 03029.
- Donoghue, T.; Dominguez, J.; Voytek, B. Electrophysiological Frequency Band Ratio Measures Conflate Periodic and Aperiodic Neural Activity. eNeuro 2020, 7, ENEURO.0192-20.2020.
- Ganepola, D.; Maduranga, M.; Karunaratne, I. Comparison of Machine Learning Optimization Techniques for EEG-Based Confusion Emotion Recognition. In Proceedings of the 2023 IEEE 17th International Conference on Industrial and Information Systems (ICIIS), Peradeniya, Sri Lanka, 25–26 August 2023; pp. 341–346.
- Ganepola, D.; Karunaratne, I.; Maduranga, M.W.P. Investigation on Cost-Sensitivity in EEG-Based Confusion Emotion Recognition Systems via Ensemble Learning. In Asia Pacific Advanced Network; Herath, D., Date, S., Jayasinghe, U., Narayanan, V., Ragel, R., Wang, J., Eds.; APANConf 2023; Communications in Computer and Information Science; Springer: Cham, Switzerland, 2024; Volume 1995.
- Arguel, A.; Lockyer, L.; Lipp, O.V.; Lodge, J.M.; Kennedy, G. Inside Out: Detecting Learners’ Confusion to improve Interactive Digital Environments. J. Educ. Comput. Res. 2016, 55, 526–551.

| Research Database | No. of Extracted Literature |
|---|---|
| IEEE Xplore | 6 |
| ACM Digital Library | 10 |
| arXiv | 4 |
| Springer | 3 |
| Other | 2 |

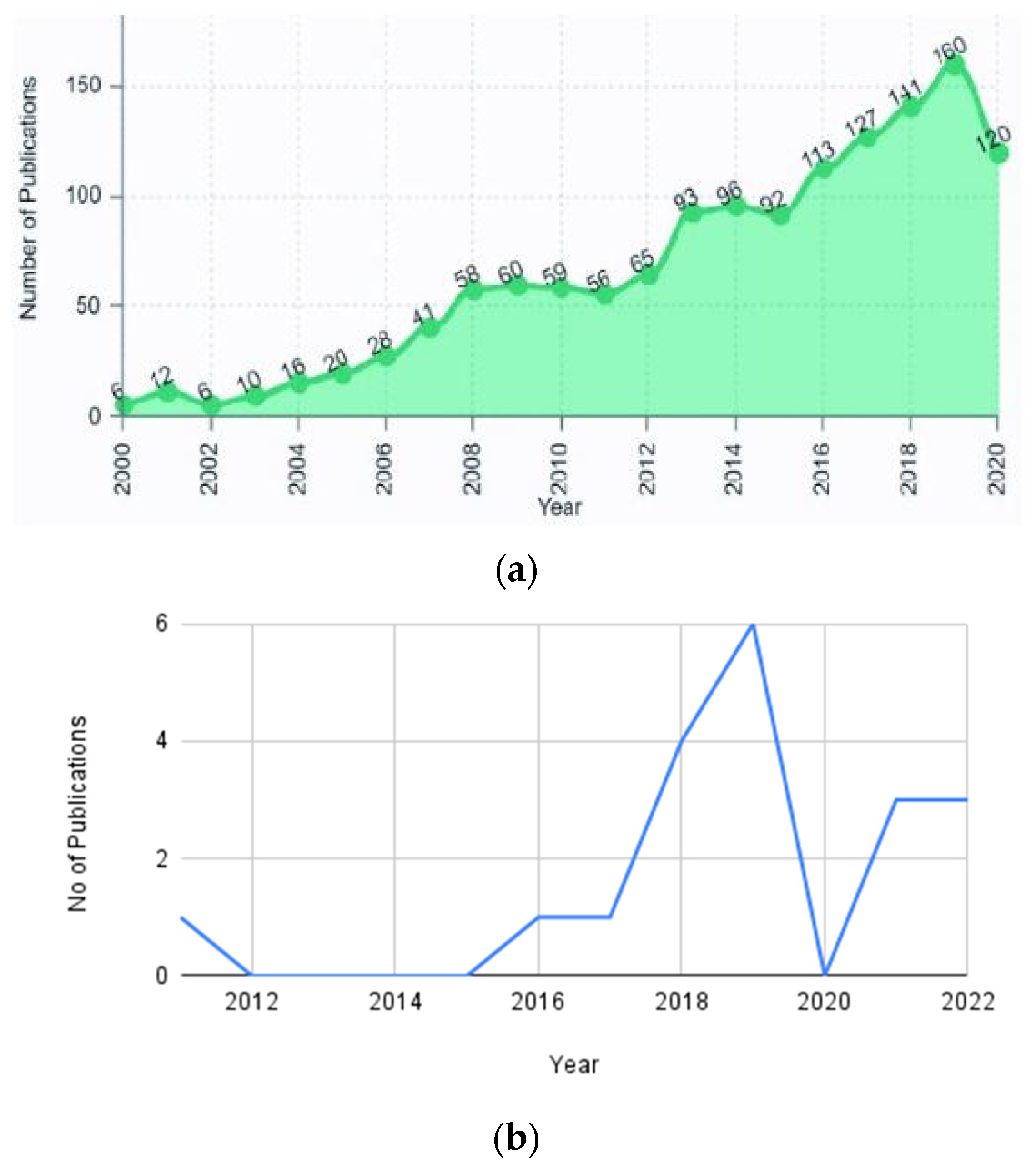

| Year | Publication |
|---|---|
| 2011 | Wang et al. [4] |
| 2016 | Yang et al. [8] |
| 2017 | Ni et al. [9] |
| 2018 | Zhou et al. [10], Ibtehaz et al. [11], Tahmassebi et al. [12] |
| 2019 | Zhou et al. [13], Kumar et al. [14], Erwianda et al. [15], Wang et al. [16], Reñosa et al. [17] |
| 2021 | Dakoure et al. [18], Benlamine et al. [19], He et al. [20] |
| 2022 | Daghriri et al. [21], Abu-gellban et al. [22], Men et al. [23] |

| Author | Year | Nature of Subjects | Stimuli | EEG Headset | No. of/Type of Electrodes Used | EEG Sampling Rate | No. of Data Samples | Access |
|---|---|---|---|---|---|---|---|---|
| Wang et al. [4] | 2011 | 10 college students | MOOC videos | Single-channel NeuroSky MindSet | Single channel (frontal lobe, Fp1 location) | 512 Hz | 12,811 | Free and public use |
| Zhou et al. [10] | 2018 | 16 college students | Raven’s tests | Emotiv Epoc+ | 16 channels (14 channels and 2 references) | Not reported | Not reported | Unavailable for public use |
| Zhou et al. [13] | 2019 | 28 (23 college students and 5 Master’s-level students) | Raven’s test and Sokoban cognitive game | OpenBCI | 10 channels (8 channels and 2 references) | 250 Hz | Not reported | Unavailable for public use |
| Dakoure et al. [18] | 2021 | 10 CS * undergraduates | Cognitive ability tests | Emotiv Epoc | 16 channels (14 channels and 2 references) | 128 Hz | 128 | Unavailable for public use |
| Benlamine et al. [19] | 2021 | 20 CS * undergraduates | 3D adventure serious game | Emotiv Epoc | 16 channels (14 channels and 2 references) | 128 Hz | 128 | Unavailable for public use |

| Technique | Overview |
|---|---|
| Down-sampling | This technique reduces the amount of data in the EEG signal. For example, signals recorded at 512 Hz can be down-sampled to 128 Hz. It is mostly chosen when signals are transmitted wirelessly [9,10]. |
| Filtering for artifact removal | Artifacts are noise or disturbances recorded in the EEG signal. They can be internal, such as eye blinks, or external, such as electrode displacement in the EEG headset. Independent Component Analysis (ICA) and regression are commonly used approaches for artifact removal [8,9,10]. |
| Re-referencing | When obtaining EEG recordings, researchers usually place a reference electrode at a mastoid or at Cz, and the voltages at the other electrodes are recorded relative to it. Re-referencing changes the reference electrode to another position. It is a good option when the initial data were collected without a proper reference [10]. |
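The down-sampling and re-referencing steps in the table above can be sketched as follows. This is a minimal illustration on synthetic data, not the pipeline of any reviewed study: the array layout (channels by samples), the 14-channel count, and the helper names `downsample` and `rereference` are assumptions made for the example, and the common average reference is only one of several possible re-referencing choices.

```python
import numpy as np
from scipy.signal import decimate


def downsample(eeg, fs_in=512, fs_out=128):
    """Down-sample an (n_channels, n_samples) array by an integer factor.

    scipy.signal.decimate applies an anti-aliasing low-pass filter before
    discarding samples; zero_phase=True avoids shifting the signal in time.
    """
    if fs_in % fs_out != 0:
        raise ValueError("fs_in must be an integer multiple of fs_out")
    factor = fs_in // fs_out
    return decimate(eeg, factor, axis=-1, zero_phase=True)


def rereference(eeg, ref_channel=None):
    """Re-reference an (n_channels, n_samples) array.

    With ref_channel=None the common average of all channels is subtracted
    (an assumed default); otherwise the chosen channel becomes the reference.
    """
    if ref_channel is None:
        reference = eeg.mean(axis=0, keepdims=True)
    else:
        reference = eeg[ref_channel:ref_channel + 1]
    return eeg - reference


# Synthetic stand-in for a recording: 14 channels, 4 s at 512 Hz.
rng = np.random.default_rng(0)
eeg = rng.standard_normal((14, 512 * 4))

eeg_128 = downsample(eeg)        # 512 Hz -> 128 Hz
eeg_avg = rereference(eeg_128)   # common average reference
```

Decimating with an anti-aliasing filter, rather than simply keeping every fourth sample, prevents activity above the new Nyquist frequency (64 Hz here) from folding back into the retained band.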

| Publication | Preprocessing Technique |
|---|---|
| Zhou et al. [7] | Z-score standardization was used to normalize the EEG and reduce individual differences in the signals. |
| Dakoure et al. [8] | Artifact removal was performed using high-pass filtering at 0.5 Hz and low-pass filtering at 43 Hz. The authors stated that the first filter was used because they wanted to deploy ICA, and the second because ICA was sensitive to low frequencies and the headset used for EEG acquisition did not record signals above 43 Hz. ICA was then deployed; this algorithm breaks down the EEG signal into independent signals coming from particular sources. |
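As a rough illustration of the two preprocessing steps reported in the table above, the sketch below combines a 0.5 Hz high-pass and a 43 Hz low-pass into one band-pass filter and then applies per-channel z-score standardization. The Butterworth design, the filter order, the 128 Hz sampling rate, and the function names are assumptions for the example; the reviewed papers do not specify these details here, and the ICA step is omitted.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def bandpass(eeg, fs=128.0, low=0.5, high=43.0, order=4):
    """Zero-phase Butterworth band-pass over the last axis (time)."""
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, eeg, axis=-1)


def zscore(eeg):
    """Standardize each channel to zero mean and unit variance."""
    mean = eeg.mean(axis=-1, keepdims=True)
    std = eeg.std(axis=-1, keepdims=True)
    return (eeg - mean) / std


# Synthetic two-channel signal: a 10 Hz rhythm plus a constant offset and noise.
fs = 128.0
t = np.arange(0, 10, 1 / fs)
rng = np.random.default_rng(1)
raw = np.vstack([
    np.sin(2 * np.pi * 10 * t) + 50.0 + 0.1 * rng.standard_normal(t.size),
    0.5 * np.sin(2 * np.pi * 10 * t) - 20.0 + 0.1 * rng.standard_normal(t.size),
])

filtered = bandpass(raw, fs=fs)
normalized = zscore(filtered)
```

Second-order sections (`output="sos"`) are used because very low cut-off frequencies such as 0.5 Hz can make transfer-function coefficients numerically unstable.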

| Domain | Feature Types | Significance to EEG Data Analysis |
|---|---|---|
| Frequency Domain (FD) | Statistical measures extracted from Power Spectral Density (PSD): energy, intensity-weighted mean frequency, intensity-weighted bandwidth, spectral edge frequency, spectral entropy, peak frequency, bandwidth of the dominant frequency, power ratio [18] | PSD serves as the base calculation of this domain. PSD values represent the distribution of the EEG series’ power over frequency. Computing PSD values is advantageous because neuroscientists believe they directly reflect the neural activity of the human brain [4,8,19,20]. |
| | Relative PSD | The most frequently used frequency-domain feature in EEG signal analysis [16]. Relative PSD is the ratio of the PSD of the frequency band being analyzed to that of the total frequency band. This measure reduces the inter-individual deviation associated with absolute power. However, its accuracy for analyzing brain changes is limited because the EEG signal is non-stationary [18,19,20,21]. |
| | Differential Entropy | The fundamental concept that quantifies the uncertainty or randomness of a continuous signal. It measures the amount of information contained in a signal per unit time [22]. Differential entropy is currently the most widely used measure for emotion classification. However, it considers only relative uncertainty and does not calculate absolute uncertainty [22,23,24,25]. |
| Time Domain (TD) | Statistical measures such as minimum and maximum values (to quantify the range of the data or the magnitude of the signal baseline), mean, mode, variance, skewness, and kurtosis [15] | Time-domain analysis describes the raw EEG signal with respect to time. It is assumed that statistical distributions can distinguish EEG seizure activity from normal activity [22,23,24,25]. |
| | Hjorth Parameters | A set of statistical descriptors introduced by Bengt Hjorth in 1970. They describe temporal-domain characteristics, mostly of EEG and ECG signals, and provide information about the activity, mobility, and complexity of these signals [23,24,25,26]. |
| Time–Frequency Domain (TFD) | Statistical measures such as mean, variance, standard deviation, absolute mean, absolute median | These features are estimated to differentiate EEG signal variations through the statistical properties of each designated frequency sub-band over a specific time window [18,19,20]. |
| | Energy, Root Mean Square (RMS), and Average Power | The signal amplitudes that correspond to frequency sub-bands over a specific time window are examined [18,19,20]. |
| | Short-Time Fourier Transform (STFT) | STFT applies a fixed window function that slides along the time axis, treating the non-stationary signal as a superposition of short stationary segments. However, because the window length is fixed, the requirements of fine frequency resolution at low frequencies and fine time resolution at high frequencies cannot both be satisfied [17]. |
| | Wavelet Transform (WT) | WT inherits the local analysis capability of STFT and offers higher resolution for investigating time-varying, non-stationary signals [17]. |
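To make three of the features in the table above concrete, the following sketch computes relative band power from a Welch PSD estimate, the Hjorth parameters, and differential entropy under a Gaussian assumption, for which the closed form is 0.5 ln(2πeσ²). The band edges in `BANDS`, the 2 s Welch segments, the 1 to 43 Hz total band, and all function names are illustrative assumptions, not values taken from the reviewed studies.

```python
import numpy as np
from scipy.signal import welch

# Conventional band edges in Hz (assumed for illustration).
BANDS = {
    "delta": (1, 4),
    "theta": (4, 8),
    "alpha": (8, 13),
    "beta": (13, 30),
    "gamma": (30, 43),
}


def relative_band_power(x, fs=128.0, bands=BANDS):
    """Ratio of each band's PSD to the PSD of the total analyzed band (1-43 Hz)."""
    freqs, psd = welch(x, fs=fs, nperseg=int(2 * fs))
    total = psd[(freqs >= 1) & (freqs < 43)].sum()
    return {
        name: psd[(freqs >= lo) & (freqs < hi)].sum() / total
        for name, (lo, hi) in bands.items()
    }


def hjorth_parameters(x):
    """Return (activity, mobility, complexity) of a 1-D signal."""
    dx = np.diff(x)
    ddx = np.diff(dx)
    activity = np.var(x)
    mobility = np.sqrt(np.var(dx) / activity)
    complexity = np.sqrt(np.var(ddx) / np.var(dx)) / mobility
    return activity, mobility, complexity


def differential_entropy_gaussian(x):
    """Differential entropy in nats, assuming the samples are Gaussian."""
    return 0.5 * np.log(2 * np.pi * np.e * np.var(x))


# Synthetic single-channel signal dominated by a 10 Hz (alpha-band) rhythm.
fs = 128.0
t = np.arange(0, 10, 1 / fs)
rng = np.random.default_rng(2)
signal = np.sin(2 * np.pi * 10 * t) + 0.1 * rng.standard_normal(t.size)

rel = relative_band_power(signal, fs=fs)
activity, mobility, complexity = hjorth_parameters(signal)
de = differential_entropy_gaussian(signal)
```

Because the bands tile the 1 to 43 Hz range without overlap, the relative powers sum to one, which is what removes the dependence on each participant's absolute signal amplitude.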

| Reference | ML Algorithm/s | Type of Features | Prediction Performance | Significance |

|---|---|---|---|---|

| Wang et al. [4] | Naïve Bayes—Gaussian | Power Spectral Density (PSD) values | 51–56% | The classifier was chosen as it works well with sparse and noisy training data. They stated that the difficulties in interpreting EEG data and their large dimensionality were found to have a negative impact on the accuracy of the classification, which was not significantly different from the applied educational researchers’ method of direct observation. |

| He et al. [26] | Naïve Bayes—Gaussian | PSD values | 58.4% | They found that Random Forest and XG Boost are approximately 10% higher when compared with Naïve Bayes and KNN. Concluded that Naïve Bayes is not suitable for this dataset as the classifier makes an incorrect assumption that each value in the multi-dimensional sample has an independent impact on categorization. |

| Naïve Bayes—Bernoulli | 52.4% | |||

| k Nearest Neighbor (kNN) | 56.5% | |||

| Random Forest (RF) | 66.1% | |||

| XG Boost | 68.4% | |||

| Dakoure et al. [8] | Support Vector Machine (SVM) | PSD values | 68.0% | They classified confusion levels into three (03) levels, which had not been previously done. They concluded that SVM is better suited for EEG classification than KNN with justifications stating that KNN does not abstract and learn patterns in data like SVM. It merely computed distances and formed clusters, and the instances of the same cluster are close in the feature space. |

| kNN | 65.2% (k = 20) | |||

| Kumar et al. [27] | kNN | PSD values | 54.86% (n = 1) | The authors employed 32 supervised learning algorithms with different parameter settings. (Algorithms with the highest accuracies are only displayed here.) They concluded that Random Forest with Bagging had the highest accuracy. However, they did not provide justifications for their conclusions. They recorded universal-based models to improve the accuracy. |

| | Logistic Regression CV | | 53.88% | |

| | Linear Discriminant Analysis | | 53.38% | |

| | Ridge Classifier | | 53.38% | |

| | SVM | | 55.18% | |

| | RF with Bagging | | 61.89% | |

| | XG Boost | | 59.21% | |

| Erwianda et al. [28] 2019 | XG Boost | PSD values | 82% | RFE was used as the feature selection technique and TPE as the hyperparameter optimization technique. The study found that Theta, Delta, and Gamma-2 were the best features for confusion detection but did not explain why. |

| | XG Boost-Recursive Feature Elimination (RFE) | | 83% | |

| | XG Boost-RFE with Tree-Structured Parzen Estimator (TPE) | | 87% | |

| Daghriri et al. [29] | Gradient Boosting (GB) | PSD values | 100% | A novel feature engineering approach was proposed in which class probabilities from RF and GB were used to build the feature vector. The authors reported 100% accuracy with this approach. They further stated that the DL models they trained did not perform as well as the ML models, attributing this to the dataset being too small. |

| | RF | | | |

| | Support Vector Classifier (SVC) | | | |

| | Logistic Regression (LR) | | | |

| Yang et al. [30] | SedMid Model | EEG time series data and data extracted from audio and video features of the lectures | 87.76% | The authors introduced a new model named Sequence Data-based Mind-Detecting (SedMid), the first of its kind to detect confusion levels by combining EEG with other data sources. The SedMid model combined time-series EEG signals with audio–visual features. |

| Benlamine et al. [9] | kNN | EEG time series | 92.08% (k = 5 ≈ log (number of samples)) | A novel approach in which the researchers recognized the confusion emotion of learners as a multi-class rather than a binary classification problem. Facial data showing the confusion level of each participant were recorded at fixed time frames together with the corresponding EEG signals, yielding separate EEG recordings for high, medium, and low levels of confusion. The model was trained using SVM and kNN; the authors preferred SVM because it is (a) popular in BCI research, (b) robust against nonlinear data, and (c) efficient in high-dimensional spaces. They did not examine why kNN produced higher results, although they stated that kNN is not suitable for EEG classification. |

| | SVM | | 92.08% | |
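
The independence assumption that He et al. [26] cite as the weakness of Naïve Bayes can be made concrete with a minimal Gaussian Naïve Bayes sketch. The function names, the two-feature layout, and all numeric values below are hypothetical toy data, not values from any of the reviewed datasets.

```python
import math
from collections import defaultdict

def fit_gaussian_nb(X, y, var_floor=1e-9):
    """Estimate a log-prior and per-feature mean/variance for each class."""
    by_class = defaultdict(list)
    for row, label in zip(X, y):
        by_class[label].append(row)
    model = {}
    for label, rows in by_class.items():
        n = len(rows)
        columns = list(zip(*rows))
        means = [sum(col) / n for col in columns]
        # var_floor avoids division by zero for constant features.
        variances = [
            sum((v - m) ** 2 for v in col) / n + var_floor
            for col, m in zip(columns, means)
        ]
        model[label] = (math.log(n / len(X)), means, variances)
    return model

def predict_gaussian_nb(model, x):
    """Return the class with the highest posterior log-score for sample x."""
    best_label, best_score = None, -math.inf
    for label, (log_prior, means, variances) in model.items():
        # Independence assumption: the joint log-likelihood is a plain sum
        # of one-dimensional Gaussian log-densities, one per feature.
        score = log_prior
        for value, m, var in zip(x, means, variances):
            score += -0.5 * math.log(2 * math.pi * var) - (value - m) ** 2 / (2 * var)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Hypothetical toy features (e.g., two band powers); not real EEG data.
X = [[4.0, 1.0], [4.2, 1.1], [3.8, 0.9], [2.0, 2.0], [2.1, 2.2], [1.9, 1.8]]
y = ["confused"] * 3 + ["not_confused"] * 3
model = fit_gaussian_nb(X, y)
pred_a = predict_gaussian_nb(model, [4.1, 1.0])
pred_b = predict_gaussian_nb(model, [2.0, 2.1])
```

Because each feature contributes its own term to the score, any correlation between features, such as between neighboring frequency bands, is ignored, which is the limitation the reviewed study points to.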

| Reference | Approach | Deployed Algorithm | Prediction Accuracy |

|---|---|---|---|

| Benlamine et al. [19] | ML | kNN | 92.08% |

| | DL | LSTM * | 94.8% |

| He et al. [20] | ML | XGBoost | 68.4% |

| | DL | LSTM * | 78.1% |

| Reference | DL Algorithm/s Deployed | Prediction Performance | Significance |

|---|---|---|---|

| Zhou et al. [13] | Convolutional Neural Network (CNN) | 71.36% | This study selected a CNN because it accepts raw data from several channels as direct input, reducing the need to transform the raw EEG data into the standard frequency bands and to perform the subsequent feature extraction. |

| Ni et al. [9] | CNN | 64.0% | This study was conducted to improve classification accuracy on the dataset of [4]. The authors concluded that CNNs and DBNs are not a good choice for this dataset because of a high risk of overfitting, and recommended LSTMs instead: although the different time steps (i.e., a feature for every 0.5 s) supplied to the LSTM share the same weights in the neural network, the forget gate can learn to utilize previous hidden states. Bi-directional LSTMs process the sequence in both directions and so learn a representation that includes context from both, which enables a more reliable and accurate model. |

| | Deep Belief Network (DBN) | 52.7% | |

| | LSTM | 69.0% | |

| | Bi-LSTM | 73.3% | |

| Wang et al. [16] | Confounder Bi-LSTM (CF-Bi-LSTM) | 75% | This study, by the same authors as [4], aimed to improve classification accuracy on that dataset. They introduced the Confounder Filtering method, which reduces confounders and improves the generalizability of the deep neural network, and concluded that this approach improves the performance of bioinformatics-related predictive models. |

| Abugellban et al. [22] | CNN with Rectified Linear Unit (ReLU) | 98% | This study addressed the performance issues of CDL 14, which had ignored the demographic information of the students. The authors concluded that confusion emotion detection is naturally influenced by demographic information. |

| Reñosa et al. [17] | Artificial Neural Network (ANN) | 99.78% | The research attempted to classify confusion levels of students as a percentage using combined averaged power spectra of all frequency bands and the standard deviation of each frequency band as inputs for the ANN. |

| Zhou et al. [13] | Five-layer CNN with Adaptive Moment Estimation (Adam) for optimization | 91.04% | The authors described in detail how they conducted the human experiment to collect EEG recordings by inducing confusion through game-based learning. They used Adam optimization to speed up gradient descent and damp excessive swings during CNN training. They noted that binary classification of the confusion state is only preliminary, since confusion is a complex emotional state, and recommended multi-class classification as a good alternative. |

| Men et al. [23] | LSTM | 77.3% | This paper addresses overfitting in LSTM and Bi-LSTM algorithms by introducing an attention layer, a neural network technique that attempts to mimic cognitive attention. The authors noted that attention layers have achieved good accuracy in text and image classification models. They evaluated two DL algorithms, LSTM and Bi-LSTM, but neither met their expectations: they concluded that the LSTM overfitted heavily and the Bi-LSTM did not learn features from the training data. |

| | LSTM + Attention Layer | 81.7% | |

| | Bi-LSTM | 67.9% | |

| | Bi-LSTM + Attention Layer | 69.7% | |

| Ibtehaz et al. [11] | CNN + Logistic Regression | 81.88% | The authors proposed a new algorithm in which spectral features of EEG signals are fused with temporal features. Spectral features were extracted using a CNN, and classification was performed by ML algorithms, making this a hybrid ML and DL model. The inputs to the classifiers were the activation maps from the Global Average Pooling layer. The authors concluded that their approach yielded higher accuracies because the CNN extracts better features for classification than manually selected features. |

| | CNN + Naïve Bayes (Gaussian) | 77.98% | |

| | CNN + Support Vector Machine | 78.075% | |

| | CNN + Decision Trees | 73.922% | |

| | CNN + Random Forest | 79.88% | |

| | CNN + k Nearest Neighbor (kNN) | 82.92% | |

| | CNN + AdaBoost | 80.79% | |

| Tahmassebi et al. [12] | NSGA-II (knee model) | 73.96% | This study aimed to distinguish confused from non-confused learners using Genetic Programming. The authors developed models using the NSGA-II algorithm and a multi-objective genetic programming approach. Based on fitness and complexity measures, they defined three models, of which the third, the knee model, produced the highest accuracy. They concluded that this model can be a good substitute for traditional algorithms as it has an 80% shorter computational run time. |
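
As a rough illustration of the Adam optimization that Zhou et al. [13] report using during CNN training, the following minimal sketch applies the standard Adam update rule to a one-dimensional quadratic. The objective function, starting point, learning rate, and step count are assumptions chosen for illustration and are not taken from the reviewed study; the remaining hyperparameters are the commonly used defaults.

```python
import math

def adam_minimize(grad, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Minimize a scalar function with Adam, given its gradient `grad`."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        # First moment: running average of gradients, which smooths the
        # update direction and damps oscillations.
        m = beta1 * m + (1 - beta1) * g
        # Second moment: running average of squared gradients, which
        # rescales the step size.
        v = beta2 * v + (1 - beta2) * g * g
        # Bias correction compensates for the zero initialization of m and v.
        m_hat = m / (1 - beta1 ** t)
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x

# Hypothetical objective f(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
x_min = adam_minimize(lambda x: 2 * (x - 3.0), x0=0.0)
```

In a CNN the same update would be applied independently to every weight, with the gradient supplied by backpropagation rather than by a closed-form expression.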

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ganepola, D.; Maduranga, M.W.P.; Tilwari, V.; Karunaratne, I. A Systematic Review of Electroencephalography-Based Emotion Recognition of Confusion Using Artificial Intelligence. Signals 2024, 5, 244-263. https://doi.org/10.3390/signals5020013
