Abstract

Digital platforms have become primary channels for cultural heritage transmission, yet how individuals visually represent their personal heritage online remains unexplored. This study investigates the visual patterns in personal heritage representation across digital platforms, examining whether platform affordances or demographics influence these patterns. Through the LAVIS multimodal AI system, we analyzed 588 heritage images from Instagram and “Personas y Patrimonios”, combining automated content, composition, color, and saturation analyses with human validation. Our findings revealed that intimate, portable objects—particularly jewelry (22.79%)—dominate personal heritage representations, with no content differences between platforms or genders. Small but statistically significant platform differences emerged in color patterns (Cohen’s d = −0.215) and compositional attention (Cohen’s d = 0.147), while gender showed no significant differences in any visual dimension. These findings may indicate that personal heritage representation follows universal visual patterns, emphasizing personal bonds that transcend both platform affordances and demographic differences. These results advance understanding of personal digital heritage communication by identifying the universal patterns in its visualization. Beyond establishing a methodological framework for AI-assisted heritage image analysis, this research provides practical insights for heritage educators and digital platform designers while illuminating how biographical objects function in digital environments, ultimately underscoring the pivotal role of imagery in contemporary cultural transmission.

1. Introduction

Cultural heritage represents the legacy transmitted between generations [1], a process that enables the understanding, respect, valuation, and enjoyment of one’s cultural inheritance [2,3]. Modern heritage approaches recognize that individuals, through their agency and personal values, determine which tangible or intangible assets constitute their legacy [4], and select the mechanisms for its transmission [5].

The digital revolution has fundamentally transformed these transmission processes, establishing new spaces and methods for heritage preservation and sharing. In digital environments, heritage assets—whether tangible elements or image records of intangible ones—endure as digital imprints that require specific transmission channels, primarily through social networks [6,7] and dedicated web platforms [8,9]. Although traditional institutions, such as museums and educational centers, maintain their vital roles, these digital contexts have emerged as essential channels [10,11,12] for heritage communication and the preservation of collective cultural imaginaries [13,14,15,16].

The characteristics and affordances of different digital platforms may influence how heritage is communicated and preserved. The concept of affordance becomes particularly relevant when examining heritage transmission in digital environments. As Costa [17] demonstrates, platform usage patterns are not solely determined by technical capabilities but are shaped by specific social and cultural contexts. Sun & Suthers’ [18] concept of “cultural affordances” further illuminates this dynamic, suggesting that platforms both reinforce existing cultural values and create opportunities for new forms of heritage expression. Beyond platform-specific factors, substantial empirical literature suggests that gender may systematically influence visual representation patterns in digital heritage environments. Large-scale research examining over one million images from Google, Wikipedia, and Internet Movie Database, alongside billions of words from these platforms, demonstrates that gender bias online is consistently more prevalent and psychologically potent in images than in text [19]. Controlled experiments with nationally representative samples have revealed that exposure to images rather than textual descriptions significantly amplifies the gender bias in people’s beliefs, with the participants who searched for images showing stronger explicit gender associations than those who searched for text. Critically, these effects persist over time, with the participants exposed to images maintaining a stronger implicit bias three days after the initial exposure, indicating its enduring psychological impacts.

This theoretical framework becomes especially relevant when comparing online heritage-sharing behaviors across different digital environments. General social networks like Instagram, with their emphasis on visual content and broad social reach, create distinct affordances for personal heritage communication compared to specialized platforms like “Personas y Patrimonios,” a dedicated website designed specifically for sharing and preserving personal heritage. While both platforms facilitate the creation of online communities centered on personal heritage sharing, their different architectures and user expectations may influence how individuals conceptualize and present their cultural inheritance. Although these platforms employ both textual and visual content, images play a dominant role in the heritage transmission process [20,21].

The analysis of these shared images helps us understand both the selection criteria for heritage assets and how the process of heritage formation develops [22,23], enabling the identification of valued asset typologies. This, in turn, makes it possible to infer generalized behavioral patterns in heritage transmission, derived from the conceptualization of heritage underlying each asset typology and linked to the virtual container used to make it globally visible.

Despite the growing application of visual methodologies in heritage research [24,25,26,27,28,29,30,31,32,33,34,35,36], significant limitations remain. Traditional approaches face scalability constraints when analyzing large-scale digital heritage representations, while AI-enhanced methods risk cultural insensitivity and exclusion of heritage dimensions [37]. To address these methodological gaps, a hybrid approach that combines multimodal AI analysis with human interpretation is needed.

Studies incorporating visual methodologies in heritage-related fields remain limited and fragmented across various disciplines, such as Sociology [24,25,26], Pedagogy [27], Anthropology [28,29], and Semiotics [30]. In the heritage field specifically, visual research has primarily focused on tourism [29,31,32], ethnology [33], social networks [34], cultural property restoration [35], and safeguarding [36]. Notably absent are studies examining how individuals visually represent and transmit their personal heritage through digital platforms.

This implies that there is a limited understanding of how individuals visually express their heritage, which may constrain educators’ ability to develop effective strategies for transmitting the value of cultural assets. To address these limitations in visual heritage analysis, this study builds on the Heritage Learning Sequence (HLS) framework [38], which defines heritage processes through seven key interconnected actions: know (cognitive recognition), understand (contextual comprehension), respect (acknowledgment of value), value (personal significance attribution), care (preservation commitment), enjoy (emotional connection), and transmit (sharing with others to make it known to them). In digital environments, these seven actions may occur simultaneously or in non-linear sequences, particularly when visual elements become the primary transmission vehicle.

Our literature review reveals that the published research rarely includes color metrics or image statistics. In heritage education research, no previous studies have examined how individuals use personal heritage photographs on social networks and the internet to transmit their intimate cultural property. While previous research has applied the HLS framework to various aspects of heritage education [39,40] and to digital contexts [6,7,39], this study focuses on the transmission phase of the HLS framework, as all the analyzed images were consciously chosen to communicate personal heritage in online communities.

This specific focus on transmission allows us to examine how intimate heritage is conceptualized and transmitted through images and explanatory text [41]. By applying artificial intelligence to a social semiotics analysis [42,43], we examine both the content and aesthetic features of heritage images, including color, structure, and saturation, as they significantly influence human aesthetic preferences and perceptions [44].

Therefore, this study aims to (O1) test the usefulness of relying on AI tools for the visual analysis of personal heritage images. Based on the theoretical frameworks outlined above, we hypothesize that (H1) the visual characteristics of personal heritage images will differ significantly between Instagram and “Personas y Patrimonios” due to their distinct platform affordances and community structures, and that (H2) systematic differences will exist in the visual representation of personal heritage between male and female users, reflecting documented gender differences in visual attention, compositional preferences, and narrative approaches.

2. Materials and Methods

2.1. Participants

A sample of N = 588 people from the Personas y Patrimonios community was used, with participants providing informed consent for their participation in the research. The sample included Instagram participants who subsequently migrated to the Personas y Patrimonios website (307 women, 83 men, and 74 with no binary preference), and direct users of the website www.personasypatrimonios.com (93 women, 29 men, and 2 with no binary preference). The participants were selected by convenience sampling, considering (1) the availability of the images they uploaded to the platforms and (2) the clarity, sharpness, and comprehensibility of the images. To analyze these images, we implemented a procedure that leveraged both traditional and AI-powered techniques.

2.2. Procedure

An orderly analysis procedure was followed in three consecutive stages:

- (1) Data were obtained from the participants in the community regarding gender and shared images. The objective of the analysis was to characterize the visual components of the images, as these are of great utility in the study of the representation and expression of heritage.

- (2) We then explored the color and structure of the images. Following standard protocols for image research, the Exif data were analyzed to determine the characteristics of each image: format, device, ISO, shutter time, and lens aperture.

- (3) The absence of metadata in most of the images led us to refocus our analysis on their content and quantitative aesthetic properties [45]. Our analysis strategy incorporated three complementary approaches: AI (artificial intelligence) for content analysis, color space analysis for aesthetic properties, and statistical testing for relationship verification. For implementation, we used R (v4.3.2; [46]) with the following specialized libraries: tidyverse (v2.0.0; [47]) for data management and ggplot2 (v3.4.4; [48]), reshape2 (v1.4.4; [49]), imager (v0.45.2; [50]), magick (v2.8.1; [51]) and colorspace (v2.1-0; [52]) for visualization and color analysis. Additionally, we employed Python v3.9.18 with pandas (v2.2; [53]) and numpy (v1.26.0; [54]) for data management, matplotlib (v3.7.4; [55]) for graphing, PIL (v10.2.0; [56]) for image processing, and Lavis (v1.0.2; [57]) for multimodal AI implementation.

2.3. Analysis Using Artificial Intelligence

The systematic extraction of image information was conducted using a multimodal AI system. In this context, multimodal refers to computational frameworks that can process heterogeneous data types (text, image, sound, or video) or modalities, and specifically, in this implementation, visual and linguistic modalities.

While standalone implementations like CLIP or ViLT [58] or alternatives like MMF were considered, we selected LAVIS [57] for its modular architecture, unified interface, and robust evaluation tools, which simplify the integration of various visual processing methods.

We implemented the multimodal framework using two distinct model architectures. For caption generation and visual question–answering tasks, we employed the BLIP-2 (Bootstrapping Language–Image Pre-Training) model with FlanT5-XL base architecture (pretrain_flant5xl configuration). The model was set to evaluation mode and processed the images through a standardized visual processor pipeline.

Automated image captions were generated with the pretrained model, and subject identification was performed using two standardized prompts: (1) “What is the subject of this image?” for the primary content analysis, and (2) “Is there a person in the image?” for human presence detection. When a human presence was detected through a positive response, an additional prompt (“What is the pose or stance of the person in the image?”) was used to characterize the subject’s posture. While we initially tested more complex role-playing prompts [59], they did not yield better results than simpler queries, leading us to opt for the more straightforward approach. Additional queries regarding the artistic nature, image age, and rule-of-thirds composition were also tested but performed poorly in our preliminary analysis and were consequently excluded.
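The conditional prompt sequence described above can be illustrated with a minimal Python sketch. It is not the pipeline used in the study: `ask` is a hypothetical callable standing in for any visual question-answering model bound to one image, and `fake_model` is a stub with invented answers used only to show the control flow.

```python
from typing import Callable, Dict

# Prompts quoted in the text above.
SUBJECT_PROMPT = "What is the subject of this image?"
PERSON_PROMPT = "Is there a person in the image?"
POSE_PROMPT = "What is the pose or stance of the person in the image?"


def describe_image(ask: Callable[[str], str]) -> Dict[str, object]:
    """Run the prompt sequence for a single image.

    `ask` (hypothetical) sends one prompt to a VQA model that has already
    been given the image and returns the model's free-text answer.
    """
    subject = ask(SUBJECT_PROMPT).strip().lower()
    has_person = ask(PERSON_PROMPT).strip().lower().startswith("yes")
    # The pose prompt is only issued after a positive person detection.
    pose = ask(POSE_PROMPT).strip().lower() if has_person else None
    return {"subject": subject, "has_person": has_person, "pose": pose}


def fake_model(prompt: str) -> str:
    """Stub model with invented answers, for illustration only."""
    answers = {
        SUBJECT_PROMPT: "A ring",
        PERSON_PROMPT: "yes",
        POSE_PROMPT: "Holding",
    }
    return answers[prompt]


result = describe_image(fake_model)
```

Keeping the model behind a single callable means the same sequence can be reused with any backend, and each answer remains available for the human review step.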

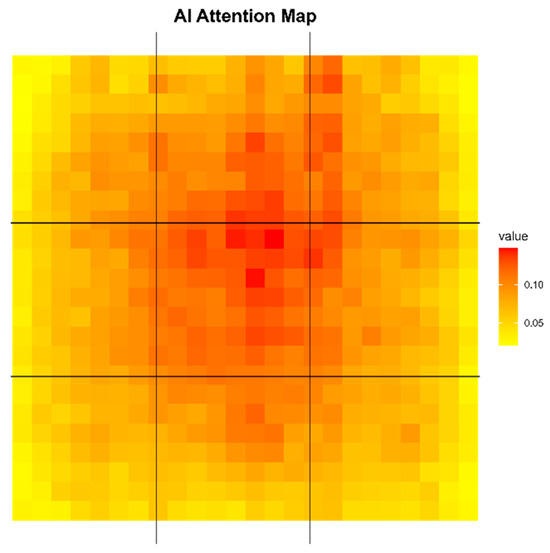

Attention maps aided in comprehending the provided responses by pinpointing the approximate location of identified subjects within the images. These maps are gradient-weighted matrices that constitute a spatial representation in which the intensity values correspond to the relative importance assigned to specific image areas during the model’s inference process.

For the generation of these maps, we utilized the BLIP Image–Text Matching model in its large configuration with a gradient-weighted class activation mapping (Grad-CAM) to generate normalized attention heatmaps. These heatmaps reveal where the multimodal model focuses when identifying heritage objects, effectively mapping the visual weight distribution across the images. When these attention maps are superimposed across all 588 images, they create a composite visualization showing the aggregate spatial distribution of visual focus. This composite pattern can be directly compared to established photographic composition principles by analyzing where the attention intensity peaks occur within the image frame.

To analyze the compositional patterns, we divided each image into a 3 × 3 grid corresponding to the rule of thirds framework, with additional assessment of central placement (the middle third of both horizontal and vertical axes). The high-intensity regions in the superimposed attention maps indicate the areas where heritage objects consistently draw the AI model’s focus. The rule of thirds is evidenced when the attention peaks align with the four intersection points of the grid lines (particularly the upper-right intersection), while central placement is indicated by a high attention intensity in the center quadrant of the image frame.
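As an illustration of this grid-based reading, the following Python sketch averages a stack of attention maps into a composite and summarizes it per cell of a 3 × 3 grid. It assumes that all maps have already been normalized and resampled to a common shape, which the text does not specify; the function name and the toy data are hypothetical.

```python
import numpy as np


def grid_attention(att_maps, rows=3, cols=3):
    """Composite attention map and mean attention per grid cell.

    att_maps: array of shape (n_images, H, W); assumed to be normalized
    and resampled to a common size beforehand.
    """
    composite = np.mean(np.asarray(att_maps, dtype=float), axis=0)
    h, w = composite.shape
    row_edges = np.linspace(0, h, rows + 1).astype(int)
    col_edges = np.linspace(0, w, cols + 1).astype(int)
    cells = np.zeros((rows, cols))
    for i in range(rows):
        for j in range(cols):
            block = composite[row_edges[i]:row_edges[i + 1],
                              col_edges[j]:col_edges[j + 1]]
            cells[i, j] = block.mean()
    return composite, cells


# Toy data: one map focused on the center, one on the upper-right cell.
maps = np.zeros((2, 6, 6))
maps[0, 2:4, 2:4] = 1.0
maps[1, 0:2, 4:6] = 0.5
composite, cells = grid_attention(maps)
peak_cell = tuple(int(i) for i in np.unravel_index(cells.argmax(), cells.shape))
```

In this toy case the central cell `(1, 1)` carries the highest mean attention and the upper-right cell `(0, 2)` the second highest, which is the kind of pattern the composite visualization is meant to expose.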

All the images were processed in RGB (Red–Green–Blue) format and standardized to a 720-pixel width while maintaining their original aspect ratio through proportional scaling. The preprocessing pipeline included normalization to float32 format in the 0–255 range before conversion to PyTorch tensors. The model’s attention-map outputs were generated as NumPy arrays of normalized floating-point values.

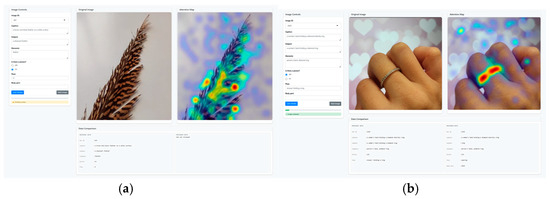

Given the probabilistic nature of these models, all the outputs were subject to review and correction by the study’s authors. For this purpose, an ad hoc application was implemented in Shiny (v1.8.0; [60]; Figure 1), which allowed us to compare the original image, the superimposed heat map, the captions, and the responses provided by AI and to edit them if necessary.

Figure 1.

Shiny application interfaces showing different object recognition. (a) Pre-review interface displaying a pheasant feather with its attention heatmap highlighting the structure. (b) Post-review interface showing a diamond ring on a hand. Both examples showcase the interface components: manual annotation controls, original images, and corresponding attention heatmaps. The data comparison panel displays the original AI-generated descriptions alongside the human-reviewed data, illustrating the validation workflow.

The review process involved systematic examination of AI-generated outputs by the research team. Of the 588 images analyzed, 117 (19.9%) required manual corrections due to AI misclassification or incomplete object recognition. The most common errors included misidentification of neckwear or metallic objects such as jewelry (n = 45), incorrect categorization of toys (n = 17), and failure to recognize religious elements (n = 4). Each image was individually assessed for accuracy of content identification, with corrections implemented through the Shiny interface when discrepancies were identified between the AI outputs and the actual image content.

The unique subjects obtained by this method (n = 182) were evaluated and classified into 15 categories based on the heritage conceptualization framework established in previous research [6]: jewelry, art, toys, clothing, animals, music, nature, containers, sports, architecture, food, everyday objects, religion, people, and miscellaneous.

After reviewing the data returned by the algorithm, we proceeded to obtain a second sample of AI attention maps. After visual verification of both maps, no differences were detected.

2.4. Classification of Heritage Objects

Following the manual review and validation of AI-generated descriptions, we implemented a systematic categorization process to group the identified heritage objects into meaningful thematic categories. The classification was conducted using previous classifications [6] improved through an iterative analysis of the initial object descriptions.

We established 15 distinct categories based on the nature and function of the identified objects: jewelry (including bracelets, necklaces, rings, earrings, pendants, medallions, brooches, watches, chains, and charms), art (encompassing drawings, tattoos, photos, paintings, pictures, sculptures, and crafts), toys (stuffed animals, dolls, trading cards, games, and collectibles), clothing (shoes, accessories, textiles, and personal garments), animals (pets and animal-related items), music (instruments, recordings, and musical accessories), nature (landscapes, plants, natural specimens, and outdoor scenes), containers (boxes, jars, bottles, bags, and storage items), sports (medals, equipment, and sports-related memorabilia), architecture (buildings, structures, and constructed environments), food (culinary items and dining objects), everyday objects (books, stationery, household items, and utilitarian objects), religion (religious symbols, figures, and ceremonial items), people (human subjects in various contexts), and miscellaneous (items that did not fit into the primary categories).

The classification process was implemented programmatically using R statistical software, where each simplified object description was automatically assigned to its corresponding category through a matching algorithm. The objects that could not be classified within the predefined categories were assigned to a general “other” category. This systematic approach ensured consistency in categorization while maintaining the ability to capture the diversity of personal heritage objects shared across both platforms.
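A keyword-matching step of this kind might look like the sketch below. The study implemented it in R and does not report the matching rules, so this Python version is only an illustration: the keyword lists are abbreviated from the category definitions above, and whole-word matching with a naive plural rule is an assumption.

```python
# Abbreviated, illustrative keyword lists drawn from the category
# definitions in the text; the study's actual lists are not reported.
CATEGORY_KEYWORDS = {
    "jewelry": ["bracelet", "necklace", "ring", "earring", "pendant", "watch"],
    "art": ["drawing", "tattoo", "photo", "painting", "sculpture"],
    "toys": ["doll", "game", "teddy"],
    "everyday objects": ["book", "pen", "coin", "key", "camera"],
}


def normalize(token):
    """Lowercase, strip punctuation, and drop a simple plural 's'."""
    token = token.lower().strip(".,;:!?\"'")
    if token.endswith("s") and not token.endswith("ss"):
        token = token[:-1]
    return token


def classify(description, keywords=CATEGORY_KEYWORDS, default="miscellaneous"):
    """Assign a description to the first category with a whole-word match."""
    tokens = {normalize(t) for t in description.split()}
    for category, terms in keywords.items():
        if any(term in tokens for term in terms):
            return category
    return default


labels = [classify(d) for d in
          ["a gold ring on a hand", "two old books", "a string of lights"]]
```

Matching on whole tokens rather than substrings avoids assigning, for example, "string" to jewelry because it contains "ring"; unmatched descriptions fall through to the default category.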

2.5. Color Analysis

For the color analysis, we utilized the CIE-Lab color space, a three-dimensional coordinate system for color quantification. This color space represents chromatic information through three parameters: L* (lightness), a* (chromatic coordinates along the green–red axis), and b* (chromatic coordinates along the blue–yellow axis) [61]. A fundamental characteristic of CIE-Lab is that it was designed to be perceptually uniform, meaning that a measured difference between two colors corresponds consistently to the perceived difference seen by human observers, regardless of where those colors fall in the color space. Despite being a non-Euclidean space, CIE-Lab was developed so that the color components correspond to perceived changes, enabling the use of the Euclidean distance equation for color difference calculations [62]. This property facilitates precise colorimetric measurements and comparative analyses across the color spectrum.

Subsequently, we applied K-means clustering to identify the dominant colors in each image. The optimal number of clusters (K) was determined using the elbow method, evaluating the within-cluster sum of squares for K values ranging from 2 to 6. For each image, the clustering was applied to the a* and b* coordinates of all pixels, and the cluster containing the largest number of pixels was taken as the dominant cluster. The most representative color of each image was defined as the median of the L*a*b* values from this dominant cluster. To position each image within the color space, we calculated the Euclidean distance in the ab plane from each representative color to the global sample centroid (a* = 2.779, b* = 5.517). This methodology enabled the characterization of the overall distribution of images within the color space while maintaining the essential chromatic characteristics that defined each image’s visual identity, with an average of 2.8 clusters per image and the dominant cluster representing approximately 58.02% of pixels in each image.
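The dominant-cluster procedure can be sketched in Python as follows. This is an illustration rather than the study's R implementation: it takes K as given instead of applying the elbow method, uses a small hand-written K-means with a deterministic farthest-point initialization (an assumption made for reproducibility), and runs on synthetic pixel values.

```python
import numpy as np

# (a*, b*) global sample centroid reported in the text.
GLOBAL_CENTROID = np.array([2.779, 5.517])


def _assign(points, centers):
    """Index of the nearest center for every point."""
    dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
    return dists.argmin(axis=1)


def kmeans(points, k, n_iter=100):
    """Minimal Lloyd's K-means with farthest-point initialization."""
    points = np.asarray(points, dtype=float)
    centers = [points[0]]
    for _ in range(1, k):
        d = np.linalg.norm(
            points[:, None, :] - np.array(centers)[None, :, :], axis=2
        ).min(axis=1)
        centers.append(points[d.argmax()])
    centers = np.array(centers)
    for _ in range(n_iter):
        labels = _assign(points, centers)
        new_centers = np.array([
            points[labels == c].mean(axis=0) if np.any(labels == c) else centers[c]
            for c in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return _assign(points, centers), centers


def representative_color(lab_pixels, k):
    """Median L*, a*, b* of the largest cluster found in the ab plane."""
    lab_pixels = np.asarray(lab_pixels, dtype=float)
    labels, _ = kmeans(lab_pixels[:, 1:3], k)
    dominant = np.bincount(labels, minlength=k).argmax()
    return np.median(lab_pixels[labels == dominant], axis=0)


def distance_to_centroid(rep_color, centroid=GLOBAL_CENTROID):
    """Euclidean distance in the ab plane to the global centroid."""
    return float(np.linalg.norm(np.asarray(rep_color)[1:3] - centroid))


# Synthetic image: 60 pixels of one color and 40 of another (L*, a*, b*).
lab = np.vstack([np.tile([50.0, 10.0, 20.0], (60, 1)),
                 np.tile([70.0, -30.0, -40.0], (40, 1))])
rep = representative_color(lab, k=2)
dist = distance_to_centroid(rep)
```

Clustering only on a* and b* but taking the median over all three coordinates mirrors the description above: lightness does not drive the grouping, yet it is retained in the representative color.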

2.6. Data Analysis

Our analysis employed multiple statistical approaches tailored to the nature of our data. For categorical relationships, we used chi-square tests of independence with Cramer’s V to assess the association strength, as these tests are appropriate for nominal data and robust to unequal sample sizes. The chi-square test assessed whether the observed frequencies differed significantly from the expected frequencies, while Cramer’s V provided a normalized measure of effect size.
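For reference, the chi-square statistic and Cramer's V can be computed from a contingency table as in the following Python sketch. The counts are hypothetical and the function name is ours; the p-value is omitted because it requires the chi-square distribution function from a statistics library.

```python
import math


def chi_square_cramers_v(table):
    """Pearson chi-square, degrees of freedom, and Cramer's V.

    table: list of rows of observed counts (hypothetical data below).
    """
    n_rows, n_cols = len(table), len(table[0])
    row_totals = [sum(row) for row in table]
    col_totals = [sum(table[i][j] for i in range(n_rows)) for j in range(n_cols)]
    n = sum(row_totals)
    chi2 = 0.0
    for i in range(n_rows):
        for j in range(n_cols):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (table[i][j] - expected) ** 2 / expected
    df = (n_rows - 1) * (n_cols - 1)
    # V normalizes chi-square by the sample size and the smaller table dimension.
    v = math.sqrt(chi2 / (n * min(n_rows - 1, n_cols - 1)))
    return chi2, df, v


# Hypothetical 2 x 2 table (e.g., platform by object category).
chi2, df, v = chi_square_cramers_v([[30, 10], [10, 30]])
```

Because V divides by both n and the smaller of (rows − 1) and (columns − 1), it stays between 0 and 1 regardless of table size, which is what makes it comparable across the platform and gender analyses.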

For the color distribution analysis in the CIE-Lab space, the preliminary Shapiro–Wilk tests revealed non-normal distributions (p < 0.001), necessitating non-parametric methods. We used Fligner–Killeen tests for variance homogeneity due to their robustness to non-normality, followed by Welch’s t-tests for the platform and gender comparisons to account for the unequal sample sizes and potential heteroscedasticity.

The image saturation analysis employed Hasler and Süsstrunk’s [63] classification methodology. We used both Kolmogorov–Smirnov and Wilcoxon rank-sum tests based on the data characteristics: 97.1% unique values with minimal ties (2.9%) satisfied the Kolmogorov–Smirnov assumptions, while similar right-skewed distributions across the platforms (skewness: Personas y Patrimonios = 0.69, Instagram = 0.81) validated the Wilcoxon test usage for location comparison.
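The metric underlying this classification, as we understand Hasler and Süsstrunk's proposal, combines the spread and the mean of two opponent-color channels. The Python sketch below computes only the metric; the category thresholds used in the study are not reproduced here, and the function name and test images are illustrative.

```python
import numpy as np


def colorfulness(rgb):
    """Hasler and Süsstrunk style colorfulness of an RGB image.

    rgb: array of shape (H, W, 3) with channel values in the 0-255 range.
    """
    rgb = np.asarray(rgb, dtype=float)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    rg = r - g                  # red-green opponent channel
    yb = 0.5 * (r + g) - b      # yellow-blue opponent channel
    std_root = np.sqrt(rg.std() ** 2 + yb.std() ** 2)
    mean_root = np.sqrt(rg.mean() ** 2 + yb.mean() ** 2)
    return float(std_root + 0.3 * mean_root)


# Illustrative inputs: a uniform gray image and a uniform red image.
gray = np.full((4, 4, 3), 128)
red = np.zeros((4, 4, 3))
red[..., 0] = 255
gray_score = colorfulness(gray)
red_score = colorfulness(red)
```

A gray image scores zero because both opponent channels vanish, while strongly colored images score higher; binning these scores yields ordered labels of the kind reported in the saturation results.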

For spatial patterns in AI-generated attention maps, we tested variance homogeneity using Levene’s test, followed by non-parametric Wilcoxon tests and Cohen’s d with confidence intervals for effect sizes. All analyses maintained α = 0.05, providing a robust framework for examining the relationships between variables.
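The two-group comparisons reported in the Results rest on Welch's t statistic and Cohen's d, which can be sketched in Python as follows. The data are invented, and the pooled-standard-deviation form of Cohen's d is an assumption, since the variant used in the study is not stated.

```python
import math
import statistics


def welch_t(x, y):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    n1, n2 = len(x), len(y)
    q1 = statistics.variance(x) / n1   # squared standard error, group 1
    q2 = statistics.variance(y) / n2   # squared standard error, group 2
    t = (statistics.mean(x) - statistics.mean(y)) / math.sqrt(q1 + q2)
    df = (q1 + q2) ** 2 / (q1 ** 2 / (n1 - 1) + q2 ** 2 / (n2 - 1))
    return t, df


def cohens_d(x, y):
    """Cohen's d with a pooled standard deviation (assumed variant)."""
    n1, n2 = len(x), len(y)
    pooled_var = ((n1 - 1) * statistics.variance(x)
                  + (n2 - 1) * statistics.variance(y)) / (n1 + n2 - 2)
    return (statistics.mean(x) - statistics.mean(y)) / math.sqrt(pooled_var)


# Invented samples for two groups.
group_a = [1, 2, 3, 4, 5]
group_b = [2, 4, 6, 8, 10]
t_stat, dof = welch_t(group_a, group_b)
d = cohens_d(group_a, group_b)
```

The non-integer degrees of freedom that Welch's correction produces explain values such as df = 242.7 in the color comparison.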

3. Results

3.1. Content

The analysis of the images indicated that the largest share of the personal heritage objects presented by the 588 subjects was jewelry (n = 134), representing 22.79% of the total. As detailed in Table 1, jewelry items such as bracelets, necklaces, and rings were the most prominently featured pieces within the dataset.

Table 1.

Frequency of personal heritage objects.

The category “everyday objects” (n = 66) was the second most prevalent (11.22% of the total). This category encompasses various personal objects, with books being the most frequent (n = 20), followed by other items, such as bookmarks, pens, coins, keys, key rings, and cameras. These two categories, together with “art” (n = 60, 10.20%; encompassing creative elements such as paintings, photos, and drawings), “toys” (n = 55), “nature” (n = 41), and “clothing” (n = 41), made up 67.51% of the subjects, which reinforces the idea that the link established with heritage goods is inherently individual, temporal, and socially rooted.

Pearson’s chi-square tests revealed no significant associations between the platform type (social network vs. heritage community) and heritage object categories (χ2 = 13.407, df = 14, p = 0.495), nor between the participant’s gender and heritage object categories (χ2 = 43.702, df = 42, p = 0.399). However, Cramer’s V (0.168) for the gender comparison suggested a mild association may be present.

Of the 588 images analyzed, 135 (22.95%) featured individuals either entirely or partially. A total of 22 distinct actions performed by these individuals were cataloged and examined. The predominant theme observed in the images involves the presentation of heritage objects to viewers and how the individuals interact with these objects. This pattern suggests that heritage objects are either in active use or that individuals demonstrate them intentionally for the viewer, as shown in Table 2.

Table 2.

Most frequent actions performed by individuals in heritage images.

Furthermore, our analysis revealed no discernible correlation between the gender of the individuals depicted and the actions they performed (χ2 = 39.632, df = 38, p = 0.397). This suggests that the actions carried out by individuals in the images are not contingent upon their gender. Having established these content patterns, we next examined how these heritage objects were visually represented through color choices.

3.2. Color

Using a cluster analysis, we obtained the most representative color of each image from its dominant color cluster. This allowed us to determine the distance of each color from a center, calculated as the average of the values of the a* and b* coordinates, thereby allowing for an assessment of color distribution.

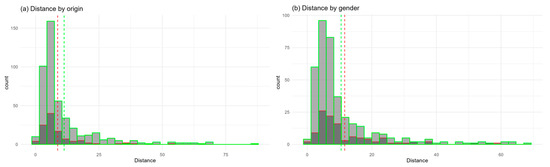

The analysis of color distributions revealed consistent patterns across the platforms. The Fligner–Killeen test showed adequate homogeneity of variances between the platforms (χ2 = 2.573, df = 1, p = 0.109). The subsequent Welch’s two-sample t-test comparing the mean color distances between Personas y Patrimonios (M = 8.712) and Instagram (M = 11.258) showed a significant difference with a small effect size (t = −2.596, df = 242.7, p = 0.010, 95% CI [−4.478, −0.614]; Cohen’s d = −0.215, 95% CI [−0.423, −0.007]; as shown in Figure 2a).

Figure 2.

(a) Distribution of the color distances for the Instagram and Personas y Patrimonios communities; the means of the two distributions differ significantly. (b) Distribution of the color distances as a function of gender.

Figure 2b compares the distribution of the calculated distance by gender. Although visual inspection suggested a difference between the means, the Fligner–Killeen test confirmed variance homogeneity (χ2 = 1.465, df = 1, p = 0.226), and the subsequent analysis showed that the difference was not statistically significant (t = −0.913, df = 180.9, p = 0.362), with means of 10.506 and 11.595 for males and females, respectively (95% CI [−3.441, 1.263]). Both genders exhibited a concentration of colors around the central color #80756E (hexadecimal notation). This implies that the images centered around this mean value lack a dominant color presence.

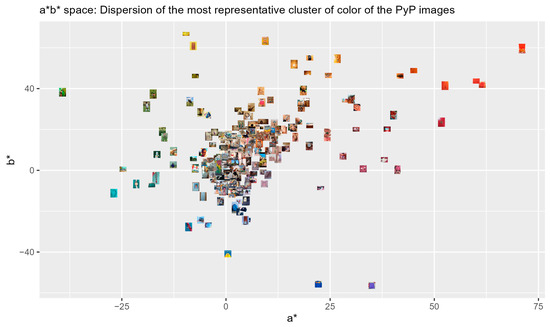

Another interesting aspect of using the CIE-Lab color model is that it allows us to place the images within the plane formed by a* and b* and, consequently, to check how the images are distributed in the plane according to their most representative color (Figure 3).

Figure 3.

Representation of the image sample in the color space as a function of centroid color.

In our sample, most of the images are grouped in the center, with more distinctive colors dispersed towards reds, yellows, and blues in the color space. To better understand the visual impact of these color choices, we conducted a detailed analysis of image saturation, which provides insight into the intensity of these color preferences.

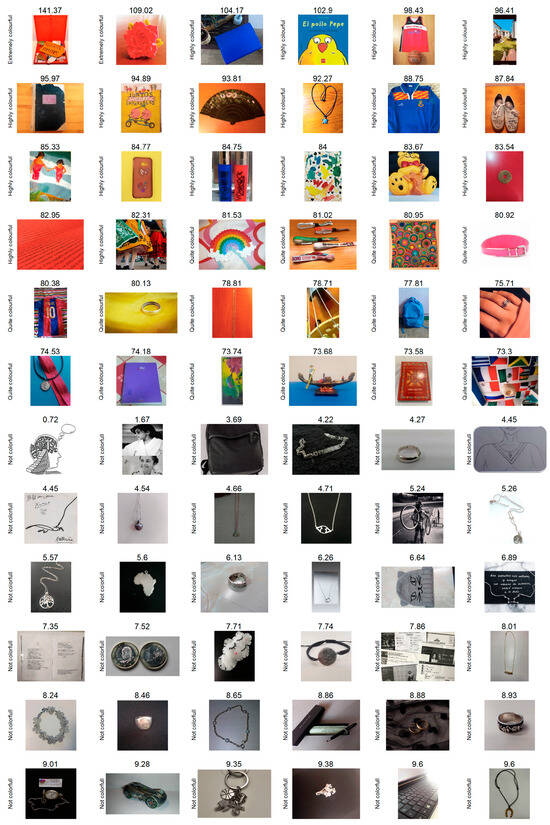

3.3. Saturation

In Figure 4, the images in the central positions have a slight saturation and are only slightly separated from those taken in black and white; the peripheral ones, on the other hand, have extreme saturation.

Figure 4.

Distribution of the images according to their saturation. We can see how the images are concentrated in a central band, with fewer images in the extreme values and a preponderance of low saturation levels.

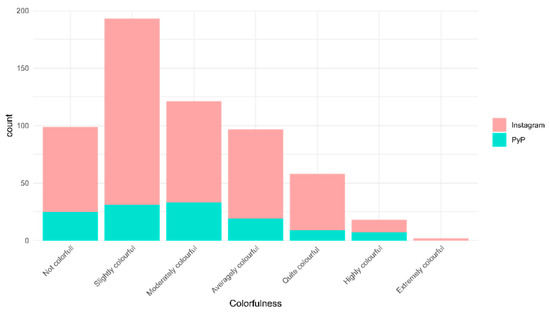

As observed in Figure 5, the most prevalent saturation levels fall within the categories “Slightly saturated” and “Moderately saturated.” Interestingly, both the Kolmogorov–Smirnov test, which assesses the overall distributional differences (D = 0.073, p = 0.682), and the Wilcoxon rank-sum test, examining the location shifts (W = 28744, p = 0.989), consistently indicate no significant differences in the saturation patterns between Instagram and the Personas y Patrimonios website.

Figure 5.

Distribution of the different saturation levels according to the source of the image.

Remarkably, 51.51% of images categorized as “not saturated” (n = 99) and 22.80% of “slightly saturated” images (n = 193) featured jewelry. This contrasts with the next most frequent category, “everyday objects,” which accounted for 11.40% of the “slightly saturated” images (n = 22) and 9.09% of the “not saturated” images (n = 9).

On the other hand, the images with higher saturation levels, whose visual components are more powerful (n = 18), allude to everyday objects (27.78%), clothing (22.22%), and artistic elements (16.67%), as shown in Figure 6.

Figure 6.

Figure showing elements with greater and lesser saturation. There is a greater variability in elements with higher color saturation; within the lower saturation levels we mainly find jewelry.

Finally, among the moderately saturated images, the most frequent categories were “art” (n = 16; 13.22%), “toys” (n = 16; 13.22%), “jewelry” (12.40%), and “everyday objects” (12.40%). Beyond these color and saturation patterns, we also examined how these heritage objects were compositionally arranged within the image frame using a multimodal AI system.

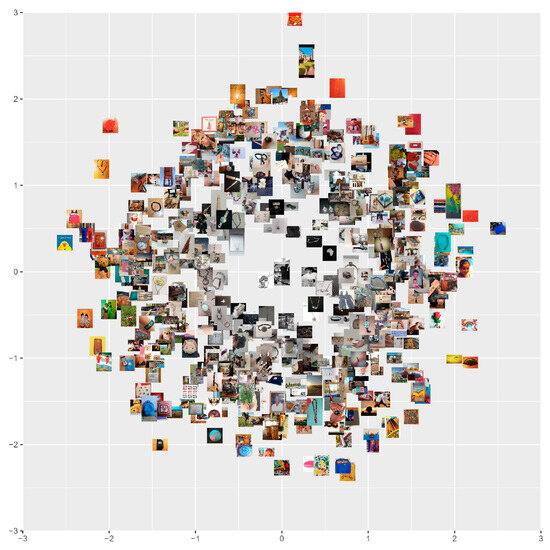

3.4. Structure

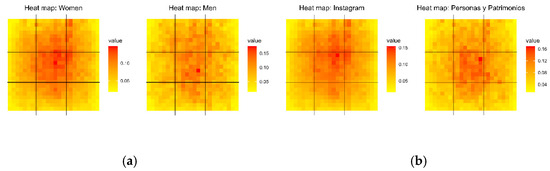

To analyze the compositional patterns, we employed AI attention maps instead of traditional bounding boxes, as the former were considered to perform a better segmentation of the image. The interpretation of these heat maps showed that placing subjects in the central area, following the rule of thirds (Figure 7), or positioning them along the upper-right-third axis were the most common compositional principles observed in the images.

Figure 7.

Set of all the superimposed attention maps. It can be seen how the subjects are in the central quadrant and the upper part of the right axis.

The heat map analyses based on gender also hint that females preferred the central quadrant and the upper part of the right axis, whereas males favored the central quadrant, with a slight inclination towards the upper third and the right axis (Figure 8). Nevertheless, these differences were not significant (t = 1.020, df = 1149.9, p = 0.308, 95% CI [−0.002, 0.005]) and the effect size was trivial (Cohen’s d = 0.060, 95% CI [−0.055, 0.176]). It is worth noting that the apparent differences may have been influenced by sample disparity or potential artifacts.

Figure 8.

Comparison of heat maps obtained by AI of images segregated by gender (a) and platform (b).

To conclude our analysis, we found statistically significant differences between the heat maps for Personas y Patrimonios and Instagram (t = −2.576, df = 1149.5, p = 0.010, 95% CI [−0.008, −0.001]). However, the effect size was small (Cohen’s d = 0.147, 95% CI [0.031, 0.262]), with Instagram users showing slightly higher attention values overall, particularly in the right third of the heat map. This finding, along with our observations on content preferences and color patterns, points to subtle but consistent patterns in digital heritage communication across platforms.
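The fractional degrees of freedom reported for these comparisons are consistent with a Welch (unequal-variance) t-test, although this section does not name the test or describe how the per-observation attention values were derived. The following sketch shows how the t statistic, the Welch–Satterthwaite degrees of freedom, and a pooled-SD Cohen's d could be computed. The function names and the two small samples are hypothetical and are not the study's data.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def welch_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    va, vb = variance(a) / len(a), variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


def cohens_d(a: Sequence[float], b: Sequence[float]) -> float:
    """Standardized mean difference using the pooled standard deviation."""
    na, nb = len(a), len(b)
    pooled = math.sqrt(((na - 1) * variance(a) + (nb - 1) * variance(b))
                       / (na + nb - 2))
    return (mean(a) - mean(b)) / pooled


# Hypothetical mean attention values for two groups of images.
group_a = [0.52, 0.48, 0.50, 0.55, 0.47]
group_b = [0.50, 0.46, 0.49, 0.51, 0.44, 0.48]

t_stat, dof = welch_t(group_a, group_b)
effect = cohens_d(group_a, group_b)
print(round(t_stat, 3), round(dof, 1), round(effect, 3))
```

Reporting the effect size alongside the test matters here because, with degrees of freedom above 1000, even very small mean differences can reach statistical significance.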

4. Discussion

In the current investigation, we conducted a comprehensive analysis of image content, color, and structural features, which reveals several key insights into how personal heritage is shared. The analysis of content demonstrates that personal heritage is predominantly represented through intimate, portable objects, particularly jewelry (22.79%), followed by everyday objects (11.22%) and creative or artistic elements (10.20%). This finding provides direct empirical validation of Fontal’s HLS theoretical framework [4], emphasizing “intimate or private assets” as central to the heritage bond. The HLS conceptual work on heritage education centered on bonds theorized that authentic heritage connections emerge through objects that maintain “close emotional and physical relationships with the owner,” but lacked large-scale quantitative verification. This interpretation is reinforced by the presence of human interactions in 28.92% of the images, primarily holding (n = 32) and carrying (n = 29), which emphasizes the intimate nature of these objects’ relationships with personal heritage.

The absence of a significant association between gender and types of actions performed (χ2 = 43.702, df = 42, p = 0.399) suggests that patterns of heritage interaction in online communities can transcend gender boundaries, which may create more egalitarian heritage expression patterns than those observed in conventional educational settings described in the heritage education literature [39,40]. These findings hint that pedagogical approaches may benefit from prioritizing personal heritage experience over traditional monument-focused curricula to foster a deeper understanding of the person–heritage bond. This aligns with heritage education frameworks that emphasize the centrality of bonds [4] between individuals, values, and material objects in meaningful heritage learning experiences.
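A chi-square test of independence of the kind reported above compares the observed counts in a contingency table with the counts expected if rows and columns were unrelated. The sketch below computes the statistic and its degrees of freedom in plain Python. The function name `chi_square` and both tables are hypothetical illustrations, not the study's gender-by-action data.

```python
from typing import List, Tuple


def chi_square(table: List[List[int]]) -> Tuple[float, int]:
    """Pearson chi-square statistic and degrees of freedom for an r x c table.

    Assumes every row total and column total is positive.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(col_totals) - 1)
    return stat, df


# Hypothetical counts: rows are two groups, columns are three action types.
toy_table = [[18, 15, 7],
             [14, 14, 12]]
stat, df = chi_square(toy_table)
print(round(stat, 3), df)

# A table whose rows are exactly proportional shows no association at all.
null_stat, null_df = chi_square([[10, 20, 30], [20, 40, 60]])
print(round(null_stat, 3), null_df)
```

The degrees of freedom depend only on the table's shape, (rows − 1) × (columns − 1), so a value such as df = 42 reflects how many gender and action categories were cross-tabulated rather than the sample size.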

The color analysis reveals consistent patterns across genders, with both females and males showing a concentration around the color #80756E. The high proportion of jewelry images with low saturation levels indicates a focus on the bond between the object and the person rather than on the object’s artistic properties. Statistically significant differences emerge between the platforms (p = 0.010, Cohen’s d = −0.215), probably due to Instagram’s enhanced editing capabilities, though the small effect size suggests that color preferences are similar in practice. This suggests that heritage representation is driven by the assets’ established bonds and remains largely unaffected by the visual and artistic qualities of the chosen image.
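To see why a color such as #80756E reads as muted, it helps to express it in CIE-Lab, where chroma (the distance from the neutral axis) is a direct measure of colorfulness. The following sketch converts an sRGB hex code to CIE-Lab using the standard sRGB-to-XYZ matrix and a D65 white point. It is an illustration only: the function name `hex_to_lab` is hypothetical, and the authors' own conversion settings are not described in this section.

```python
import math
from typing import Tuple


def hex_to_lab(hex_color: str) -> Tuple[float, float, float]:
    """Convert an sRGB hex string such as '#80756E' to CIE-Lab (D65 white)."""
    h = hex_color.lstrip("#")
    rgb = [int(h[i:i + 2], 16) / 255 for i in (0, 2, 4)]
    # Undo the sRGB transfer curve to obtain linear-light values.
    r, g, b = [c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4
               for c in rgb]
    # Linear sRGB -> CIE XYZ (D65).
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b

    def f(t: float) -> float:
        # Cube root with the linear segment CIE-Lab uses near black.
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    fx, fy, fz = f(x / 0.95047), f(y / 1.0), f(z / 1.08883)
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


lightness, a_star, b_star = hex_to_lab("#80756E")
chroma = math.hypot(a_star, b_star)
print(round(lightness, 1), round(a_star, 1), round(b_star, 1), round(chroma, 1))
```

For #80756E the conversion yields a mid-range lightness with small positive a* and b* values, that is, a low-chroma warm gray, which fits the low-saturation pattern discussed above.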

The analysis of structural composition through heat maps reveals subtle yet statistically significant differences between the platforms (Cohen’s d = 0.147, 95% CI [0.031, 0.262]), with Instagram users showing higher attention values in the right third. While the gender analysis shows non-significant variations in composition preferences (t = 1.020, df = 1149.9, p = 0.308, 95% CI [−0.002, 0.005]), the predominant use of central placement and rule of thirds across all groups suggests that heritage representation follows universal compositional patterns rather than platform- or gender-specific conventions.

These results partially contrast with Costa’s ethnographic findings on cultural variation in social media usage [17]. Costa documented significant cultural differences in how users adapt to different digital environments. Our analysis found small but significant differences in color patterns between Instagram and the specialized website Personas y Patrimonios (d = −0.215, 95% CI [−0.423, −0.007]), whereas the categorical comparisons were not significant (color distributions: χ2 = 2.573, df = 1, p = 0.109; content categories: χ2 = 13.407, df = 14, p = 0.495). However, this near-uniformity across genders and platforms may reflect our study’s scope, as we examined only two specific heritage-focused groups rather than broader user populations. The observed uniformity suggests that cultural transmission may follow different logics than general social media usage, though this conclusion requires cautious interpretation given our focused sample. While Costa’s participants demonstrated environment-specific adaptation strategies, patrimony sharing appears to follow consistent visual and content patterns regardless of digital architecture within the examined groups. Future research incorporating diverse cultural communities across multiple online spaces will be necessary to determine whether this consistency represents a universal characteristic of heritage communication or reflects the populations analyzed.

Our hybrid approach combining multimodal AI analysis with human validation addresses specific limitations identified in Rose’s comprehensive survey of visual methodologies [24]. While Rose’s framework emphasizes a “critical visual methodology” that considers “cultural significance, social practices and power relations” for individual images or small sets, our approach demonstrates how to scale up visual analysis to 588 heritage images while maintaining cultural sensitivity through systematic human oversight. The 19.9% correction rate for the AI classifications supports Rose’s warning about purely quantitative approaches and shows that automated methods, though scalable, require human review to capture culturally specific meanings. Our methodology synthesizes Rose’s interpretive emphasis with computational capabilities rather than replacing it. This is consistent with her argument that different methodological approaches reveal different aspects of visual meaning: the CIE-Lab color analysis revealed patterns invisible to human observers, while the human review corrected AI misinterpretations of cultural significance, supporting Rose’s advocacy for methodological pluralism in visual research.

5. Conclusions

This study successfully fulfills its primary objective (O1) by validating the effectiveness of AI tools for personal heritage image analysis. Our systematic examination of 588 heritage images across different platforms reveals that personal heritage visualization follows consistent patterns that transcend both platform architecture and gender boundaries.

Our empirical findings reveal that personal heritage representations center on intimate, portable objects, with jewelry (22.79%) and everyday objects (11.22%) dominating across gender and the platforms studied. This empirically validates the theoretical frameworks emphasizing the centrality of personal bonds in heritage conceptualization, providing the first large-scale quantitative evidence for these theoretical propositions. Despite the platforms’ differences in technical capabilities, both Instagram and “Personas y Patrimonios” show convergent color patterns around #80756E, suggesting that heritage representation prioritizes emotional bonds over aesthetic enhancement. Furthermore, the AI attention mapping reveals consistent adherence to central placement and rule of thirds composition principles across all groups, indicating universal visual communication patterns in heritage contexts.

From a methodological perspective, our hybrid approach combining multimodal AI analysis (LAVIS framework) with human validation (19.9% correction rate) provides a scalable yet culturally sensitive methodology for large-scale visual heritage analysis. This addresses the significant limitations identified in traditional visual methodologies, which are difficult to apply beyond individual images or small sets. The successful integration of these two components demonstrates that automated approaches, when properly supervised, can effectively scale up visual heritage research without sacrificing interpretive depth.

The absence of significant platform (H1 partially confirmed) and gender differences (H2 rejected) in content representation suggests that personal heritage transmission may operate through universal visual languages that transcend conventional social media usage patterns documented in broader digital communication research. For heritage educators and digital platform designers, these findings indicate that effective heritage communication strategies should prioritize universal accessibility and connection mechanisms rather than platform-specific or demographic-targeted approaches. The dominance of low-saturation imagery suggests the focus should remain on object–person relationships rather than aesthetic enhancement or technical capabilities.

However, several limitations must be acknowledged. Our use of incidental sampling may not fully represent all heritage communities or alternative platforms, potentially limiting the generalizability of findings to broader populations. While our gender-based analysis provides valuable insights, the inability to gather other demographic variables, such as age, education, and socioeconomic status, limits our understanding of potential cultural influences on heritage representation. Additionally, our focus on two specific platforms may not capture the full spectrum of digital heritage communication practices across diverse online environments.

Future research should explore category-specific patterns across demographic variables with larger sample sizes. The integration of additional contextual analysis through advanced image segmentation techniques could provide deeper insights into the spatial and environmental aspects of heritage representation. Furthermore, cross-cultural studies could illuminate how personal heritage conceptualization and representation vary across different cultural contexts.

This research ultimately establishes a methodological framework for AI-assisted heritage image analysis while demonstrating that personal heritage representation in digital environments follows consistent patterns that emphasize individual bonds over technological or social constraints, underscoring imagery’s pivotal role in contemporary cultural transmission and providing practical guidance for heritage education and digital platform development.

Author Contributions

Conceptualization, O.F.M., P.d.C.M. and V.E.G.-B.; methodology, V.E.G.-B.; software, V.E.G.-B.; validation, O.F.M. and V.E.G.-B.; formal analysis, V.E.G.-B.; investigation, O.F.M., P.d.C.M. and V.E.G.-B.; resources, O.F.M., P.d.C.M. and V.E.G.-B.; data curation, V.E.G.-B.; writing—original draft preparation, O.F.M., P.d.C.M. and V.E.G.-B.; writing—review and editing, O.F.M., P.d.C.M. and V.E.G.-B.; visualization, V.E.G.-B.; supervision, O.F.M. and P.d.C.M.; project administration, O.F.M.; funding acquisition, O.F.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Spain’s Ministry of Science and Innovation, the State Research Agency, within the framework of project PID2019-106539RB-I00, Learning Models in Digital Environments On Heritage Education. The principal investigators are Olaia Fontal Merillas and Alex Ibañez Etxeberria.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AI | Artificial Intelligence |
| HLS | Heritage Learning Sequence |
| LAVIS | Library for Language–Vision Intelligence |
| BLIP | Bootstrapping Language–Image Pre-Training |
| FlanT5-XL | Flan Text-to-Text Transfer Transformer (Extra Large) |
| RGB | Red–Green–Blue |
| Grad-CAM | Gradient-Weighted Class Activation Mapping |
| CIE-Lab | Commission Internationale de l’Éclairage Lab* |
| CLIP | Contrastive Language–Image Pre-Training |
| ViLT | Vision-and-Language Transformer |
| MMF | Multimodal Framework |
| PIL | Python Imaging Library |

References

1. Kumar, T.K.; Nair, R. Conserving knowledge heritage: Opportunities and challenges in conceptualizing cultural heritage information system (CHIS) in the Indian context. Glob. Knowl. Mem. Commun. 2022, 71, 564–583.

2. Khalaf, R.W. The Implementation of the UNESCO World Heritage Convention: Continuity and Compatibility as Qualifying Conditions of Integrity. Heritage 2020, 3, 384–401.

3. Khalaf, R.W. Continuity: A fundamental yet overlooked concept in World Heritage policy and practice. Int. J. Cult. Policy 2021, 27, 102–116.

4. Fontal, O. La Educación Patrimonial Centrada en los Vínculos. El Origami de Bienes, Valores y Personas; Trea: Gijón, Spain, 2022.

5. Martín Cáceres, M.J.; Cuenca López, J.M. Educomunicación del patrimonio. Educ. Siglo XXI 2015, 33, 33–54.

6. Fontal, O.; Arias, B.; Ballesteros-Colino, T.; de Castro Martín, P. Conceptualización del patrimonio en entornos digitales mediante referentes identitarios de maestros en formación. Educ. Soc. 2022, 43, 1–26.

7. Marín-Cepeda, S.; Fontal, O. La arquitectura del vínculo a través de la web Personas y Patrimonios. OBETS Rev. De Cienc. Soc. 2020, 15, 137–158.

8. Piñeiro-Naval, V.; Igartua, J.J.; Rodríguez-de-Dios, I. Identity-related implications of the dissemination of cultural heritage through the Internet: A study based on Framing Theory. Commun. Soc. 2018, 31, 1–21.

9. Poong, Y.S.; Yamaguchi, S.Y.; Takada, J.-i. Analysing Mobile Learning Acceptance in the World Heritage Town of Luang Prabang, Lao PDR. In Mobile Learning in Higher Education in the Asia-Pacific Region. Education in the Asia-Pacific Region: Issues, Concerns and Prospects; Murphy, A., Farley, H., Dyson, L., Jones, H., Eds.; Springer: Singapore, 2017; Volume 40.

10. Castro-Calviño, L.; Rodríguez-Medina, J.; López-Facal, R. Educación patrimonial para una ciudadanía participativa. Evaluación de resultados de aprendizaje del alumnado en el programa Patrimonializarte. Rev. Electrónica Interuniv. Form. Profr. 2021, 24, 205–219.

11. Luna, U.; Ibáñez-Etxeberria, A.; Rivero, P. El patrimonio aumentado. 8 apps de Realidad Aumentada para la enseñanza-aprendizaje del patrimonio. Rev. Interuniv. Form. Profr. 2018, 3, 43–62.

12. Luna, U.; Rivero, P.; Vicent, N. Augmented reality in heritage apps: Current trends in Europe. Appl. Sci. 2019, 9, 2756.

13. Ibáñez-Exeberria, A.; Kortabitarte, A.; De Castro, P.; Gillate, I. Digital competence using heritage theme apps in the DigComp framework. Rev. Interuniv. Form. Profr. 2019, 22, 13–27.

14. Narváez-Montoya, A. Comunicación educativa, educomunicación y educación mediática: Una propuesta de investigación y formación desde un enfoque culturalista. Palabra Clave 2019, 22, e22311.

15. Mason, M. The dimensions of the mobile visitor experience: Thinking beyond the technology design. Int. J. Incl. Mus. 2013, 5, 51–72.

16. Comes, P. ¿Cómo Pueden Ayudarnos las Nuevas Tecnologías a Desarrollar la Identidad Ciudadana? Rastres, un Ejemplo de Aplicación Didáctica Multimedia con Vocación Cívica. En Identidades y Territorios: Un Reto Para la Didáctica de las Ciencias Sociales; Asociación Universitaria de Profesores de Didáctica de las Ciencias Sociales: Madrid, Spain, 2001; pp. 529–538.

17. Costa, E. Affordances-in-practice: An ethnographic critique of social media logic and context collapse. New Media Soc. 2018, 20, 3641–3656.

18. Sun, Y.; Suthers, D.D. Cultural affordances and social media. In Proceedings of the Annual Hawaii International Conference on System Sciences, Kauai, HI, USA, 5–8 January 2021; pp. 3017–3026.

19. Guilbeault, D.; Delecourt, S.; Hull, T.; Desikan, B.S.; Chu, M.; Nadler, E. Online images amplify gender bias. Nature 2024, 626, 1049–1055.

20. De Castro Martín, P.; Sánchez-Macías, I. #Souvenirs1936. Transmedia y procesos de identización en el aprendizaje de la Guerra Civil Española. Rev. Interuniv. Form. Profr. 2019, 94, 63–82.

21. Vassilakis, C.; Antoniou, A.; Lepouras, G.; Poulopoulos, V.; Wallace, M.; Bampatzia, S.; Bourlakos, I. Stimulation of Reflection and Discussion in Museum Visits Through the Use of Social Media; Social Network Analysis and Mining: Heidelberg, Germany, 2017; Volume 7.

22. Fontal, O. (Coord.). La Educación Patrimonial. Del Patrimonio a las Personas; Trea: Gijón, Spain, 2013.

23. Gómez Redondo, C.; Fontal Merillas, O.; Ibáñez-Exeberría, A. Procesos de patrimonialización en el arte contemporáneo. EARI Educ. Artística Rev. Investig. 2016, 2, 108–112.

24. Rose, G. Visual Methodologies: An Introduction to Researching with Visual Materials, 5th ed.; SAGE Publications Ltd.: Thousand Oaks, CA, USA, 2023.

25. Pink, S. (Ed.) Advances in Visual Methodology; SAGE Publications Ltd.: Thousand Oaks, CA, USA, 2012.

26. Mitchell, C.; Lange, N.; Moletsane, R. Participatory Visual Methodologies; SAGE Publications Ltd.: Thousand Oaks, CA, USA, 2018.

27. Wee, B.; DePierre, A.; Anthamatten, P.; Barbour, J. Visual methodology as a pedagogical research tool in geography education. J. Geogr. High. Educ. 2013, 2, 164–173.

28. Talsi, R.; Laitila, A.; Joensuu, T.; Saarinen, E. The Clip Approach: A Visual Methodology to Support the (Re)Construction of Life Narratives. Qual. Health Res. 2021, 31, 789–803.

29. Martins, H. For a visual anthropology of tourism: The critical use of methodologies and visual materials. PASOS Rev. Tur. Patrim. Cult. 2016, 14, 527–541.

30. Bradley, J. Building a visual vocabulary: The methodology of ‘reading’ images in context. In Approaching Methodology; Latvala, F.P., Leslie, H.F., Eds.; Annales Academiæ Scientiarum Fennicæmia: Helsinki, Finland, 2013; Volume 368, pp. 83–98.

31. Graziano, T. The insiders’ gaze: Fieldworks, social media and visual methodologies in urban tourism. AIMS Geosci. 2022, 8, 366–384.

32. Bell, V. Visual Methodologies and Photographic Practices: Encounters with Hadrian’s Wall World Heritage Site. In Tourism and Visual Culture; Burns, P.M., Lester, J.A., Bibbings, L., Eds.; CABI: Oxfordshire, UK, 2010; Volume 2, pp. 120–134.

33. Savin, P.; Trandabat, D. Ethnolinguistic Audio-visual Atlas of the Cultural Food Heritage of Bacău County—Elements of methodology. BRAIN Broad Res. Artif. Intell. Neurosci. 2018, 9, 125–131.

34. Huebner, E.J. TikTok and museum education: A visual content analysis. Int. J. Educ. Through Art 2022, 18, 209–225.

35. Pietroni, E.; Ferdani, D. Virtual Restoration and Virtual Reconstruction in Cultural Heritage: Terminology, Methodologies, Visual Representation Techniques and Cognitive Models. Information 2021, 12, 167.

36. Wang, M.; Zhao, M.T.; Lin, M.L.; Cao, W.; Zhu, H.L.; An, N. Seeking lost memories: Application of a new visual methodology for heritage protection. Geogr. Rev. 2020, 110, 41–55.

37. Gattiglia, G. Managing artificial intelligence in archeology. an overview. J. Cult. Herit. 2025, 71, 225–233.

38. Fontal, O. La Educación Patrimonial: Teoría y Práctica en el Aula, el Museo e Internet; Trea: Gijón, Spain, 2003.

39. Fontal Merillas, O.; De Castro Martín, P. El patrimonio cultural en la educación artística: Del análisis del currículum a la mejora de la formación inicial del profesorado en Educación Primaria. Arte Individuo Soc. 2023, 35, 461–481.

40. Falcon, R.; Fontal Merillas, O.; Torregrosa, A. Le patrimoine comme don du temps. Sociétés 2015, 129, 115–124.

41. Clough, P.; Goodley, D.; Lawthom, R.; Moore, M. Researching Life Stories. Method, Theory and Analysis in a Biographical Age; Routledge: Oxfordshire, UK, 2004.

42. Kress, G. Literacy in the New Media; Routledge: Oxfordshire, UK, 2003.

43. Kress, G.; Van Leeuwen, T. Reading Images. The Grammar of Visual Design; Routledge: Oxfordshire, UK, 2006.

44. Camgöz, N.; Yener, C.; Güvenç, D. Effects of hue, saturation, and brightness on preference. Color Res. Appl. 2002, 27, 199–207.

45. Mather, G. Aesthetic Image Statistics Vary with Artistic Genre. Vision 2020, 4, 10.

46. R Core Team. R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing; R Core Team: Vienna, Austria, 2023; Available online: https://www.R-project.org/ (accessed on 19 December 2024).

47. Wickham, H.; Averick, M.; Bryan, J.; Chang, W.; McGowan, L.D.; François, R.; Grolemund, G.; Hayes, A.; Henry, L.; Hester, J.; et al. Welcome to the tidyverse. J. Open Source Softw. 2019, 4, 1686.

48. Wickham, H. ggplot2: Elegant Graphics for Data Analysis; Springer: Cham, Switzerland, 2016.

49. Wickham, H. Reshaping Data with the reshape Package. J. Stat. Softw. 2007, 21, 1–20.

50. Barthelme, S. Imager: Image Processing Library Based on ‘CImg’, R Package Version 0.45.2; CRAN: Vienna, Austria, 2023; Available online: https://CRAN.R-project.org/package=imager (accessed on 19 December 2024).

51. Ooms, J. Magick: Advanced Graphics and Image-Processing in R, R Package Version 2.8.1; CRAN: Vienna, Austria, 2023; Available online: https://CRAN.R-project.org/package=magick (accessed on 19 December 2024).

52. Zeileis, A.; Fisher, J.C.; Hornik, K.; Ihaka, R.; McWhite, C.D.; Murrell, P.; Stauffer, R.; Wilke, C.O. Colorspace: A Toolbox for Manipulating and Assessing Colors and Palettes. J. Stat. Softw. 2020, 96, 1–49.

53. Zenodo. The Pandas Development Team. Pandas-Dev/Pandas: Pandas (v2.2.0rc0). 2023. Available online: https://doi.org/10.5281/zenodo.10426137 (accessed on 20 December 2024).

54. Harris, C.R.; Millman, K.J.; van der Walt, S.J.; Gommers, R.; Virtanen, P.; Cournapeau, D.; Wieser, E.; Taylor, J.; Berg, S.; Smith, N.J.; et al. Array programming with NumPy. Nature 2020, 585, 357–362.

55. Caswell, T.A.; Sales de Andrade, E.; Lee, A.; Droettboom, M.; Hoffmann, T.; Klymak, J.; Hunter, J.; Firing, E.; Stansby, D.; Varoquaux, N.; et al. Matplotlib/Matplotlib: REL v3.7.4; Zenodo: Geneva, Switzerland, 2023.

56. Clark, A. Pillow (PIL Fork) Documentation; Readthedocs: Portland, OR, USA, 2015; Available online: https://buildmedia.readthedocs.org/media/pdf/pillow/latest/pillow.pdf (accessed on 15 December 2024).

57. Du, Y.; Liu, Z.; Li, J.; Zhao, W.X. A survey of vision-language pre-trained models. arXiv 2022, arXiv:2202.10936.

58. Li, D.; Li, J.; Le, H.; Wang, G.; Savarese, S.; Hoi, S.C.H. LAVIS: A Library for Language-Vision Intelligence. arXiv 2022, arXiv:2209.09019.

59. Kong, A.; Zhao, S.; Chen, H.; Li, Q.; Qin, Y.; Sun, R.; Zhou, X.; Wang, E.; Dong, X. Better zero-shot reasoning with role-play prompting. arXiv 2023, arXiv:2308.07702.

60. Chang, W.; Cheng, J.; Allaire, J.; Sievert, C.; Schloerke, B.; Xie, Y.; Allen, J.; McPherson, J.; Dipert, A.; Borges, B. Shiny: Web Application Framework for R; R Package Version 1.8.0; CRAN: Vienna, Austria, 2023; Available online: https://CRAN.R-project.org/package=shiny (accessed on 11 November 2024).

61. Hill, B.; Roger, T.; Vorhagen, F.W. Comparative analysis of the quantization of color spaces on the basis of the CIELAB color-difference formula. ACM Trans. Graph. 1997, 16, 109–154.

62. Jain, A.K. Fundamentals of Digital Image Processing; Prentice-Hall, Inc.: Hoboken, NJ, USA, 1989.

63. Hasler, D.; Süsstrunk, S.E. Measuring colorfulness in natural images. In Human Vision and Electronic Imaging VIII; SPIE: Bellingham, WA, USA, 2003; Volume 5007, pp. 87–95.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).