A Comparative Study of Vision Language Models for Italian Cultural Heritage

Abstract

1. Introduction

2. Literature Review

3. Materials and Methods

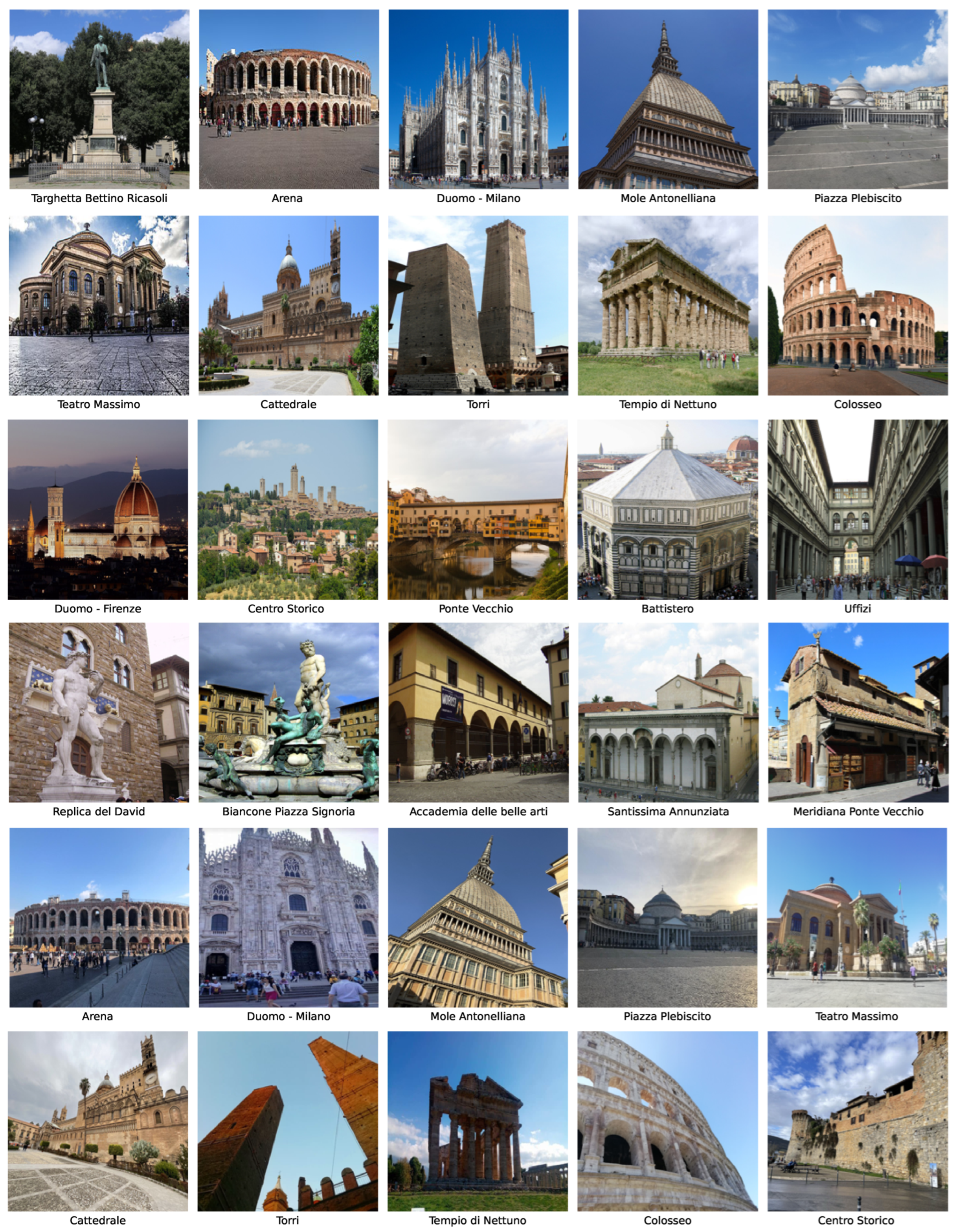

3.1. Dataset Curation

- 20 photographs from Wikimedia: These images were sourced from the widely recognized Wikimedia platform, which provides indexed and searchable content. They served as the reference standard for both national and Florentine landmarks due to their high accessibility and consistent quality;

- 10 photographs from FloreView [28]: This subset originates from a specialized dataset dedicated to Florence’s historic center. While available to the scientific community, these images are not indexed by search engines, offering a unique and controlled dataset for research purposes;

- 10 photographs classified as “Others”: This category includes personal photographs captured exclusively for private use. These images have not been indexed or published online, ensuring their novelty and exclusivity within the dataset.
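The composition described above (40 photographs from three sources, only one of which is indexed by search engines) can be captured in a small manifest. The following is a minimal sketch rather than the authors' tooling: the `ImageRecord` class, the `check_composition` helper, and the file names are hypothetical and serve only to illustrate the split.

```python
from collections import Counter
from dataclasses import dataclass

# Source categories and sizes as listed above.
EXPECTED_COUNTS = {"Wikimedia": 20, "FloreView": 10, "Others": 10}
# Whether each source is indexed by search engines, per the descriptions above.
INDEXED_ONLINE = {"Wikimedia": True, "FloreView": False, "Others": False}


@dataclass(frozen=True)
class ImageRecord:
    filename: str  # hypothetical file name
    source: str    # one of the keys of EXPECTED_COUNTS

    @property
    def indexed_online(self) -> bool:
        return INDEXED_ONLINE[self.source]


def check_composition(records) -> bool:
    """Return True if the per-source counts match the curated split."""
    counts = Counter(record.source for record in records)
    return dict(counts) == EXPECTED_COUNTS


# Placeholder manifest with made-up file names, only to exercise the check.
manifest = [
    ImageRecord(f"{source.lower()}_{i:02d}.jpg", source)
    for source, n in EXPECTED_COUNTS.items()
    for i in range(n)
]
```

Keeping the indexing status next to each record makes it straightforward to compare results on images a model may have encountered online against images it is unlikely to have seen.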

3.2. Visual Large Language Models Selection

3.3. Evaluation Protocol

3.4. Results Analysis Protocol

4. Results and Discussion

- Q1: Which model performs best in subject and city identification?

- Q2: To what extent does providing the city where the picture was taken enhance accuracy?

- Q3: Does the choice of language, between Italian and English, significantly affect the model’s performance?

- Q4: Which subjects are most and least easily recognized by the models?
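Q2 and Q3 compare the same images under two conditions (with or without the city, Italian or English), which yields paired binary outcomes. The reference list includes McNemar's test [41] and Holm's procedure [42], both of which suit this setting. The sketch below shows one way such a comparison could be computed; it uses the exact binomial form of McNemar's test, which is an assumption since the variant is not stated here, and the counts in the usage lines are hypothetical, not results from the study.

```python
from math import comb


def mcnemar_exact(b: int, c: int) -> float:
    """Two-sided exact McNemar p-value from the discordant pair counts.

    b: images answered correctly only under condition A.
    c: images answered correctly only under condition B.
    """
    n = b + c
    if n == 0:
        return 1.0  # no discordant pairs, no evidence of a difference
    k = min(b, c)
    # Binomial tail probability under H0 (p = 0.5), doubled for two sides.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


def holm_adjust(p_values):
    """Holm step-down adjusted p-values, returned in the input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # Multiply the rank-th smallest p-value by (m - rank), keep monotone.
        running_max = max(running_max, (m - rank) * p_values[idx])
        adjusted[idx] = min(1.0, running_max)
    return adjusted


# Hypothetical discordant counts for three comparisons (illustrative only).
raw = [mcnemar_exact(b, c) for b, c in [(1, 9), (4, 6), (0, 5)]]
adjusted = holm_adjust(raw)
```

Only the discordant pairs enter the test: images that are answered correctly (or incorrectly) under both conditions carry no information about the difference between them.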

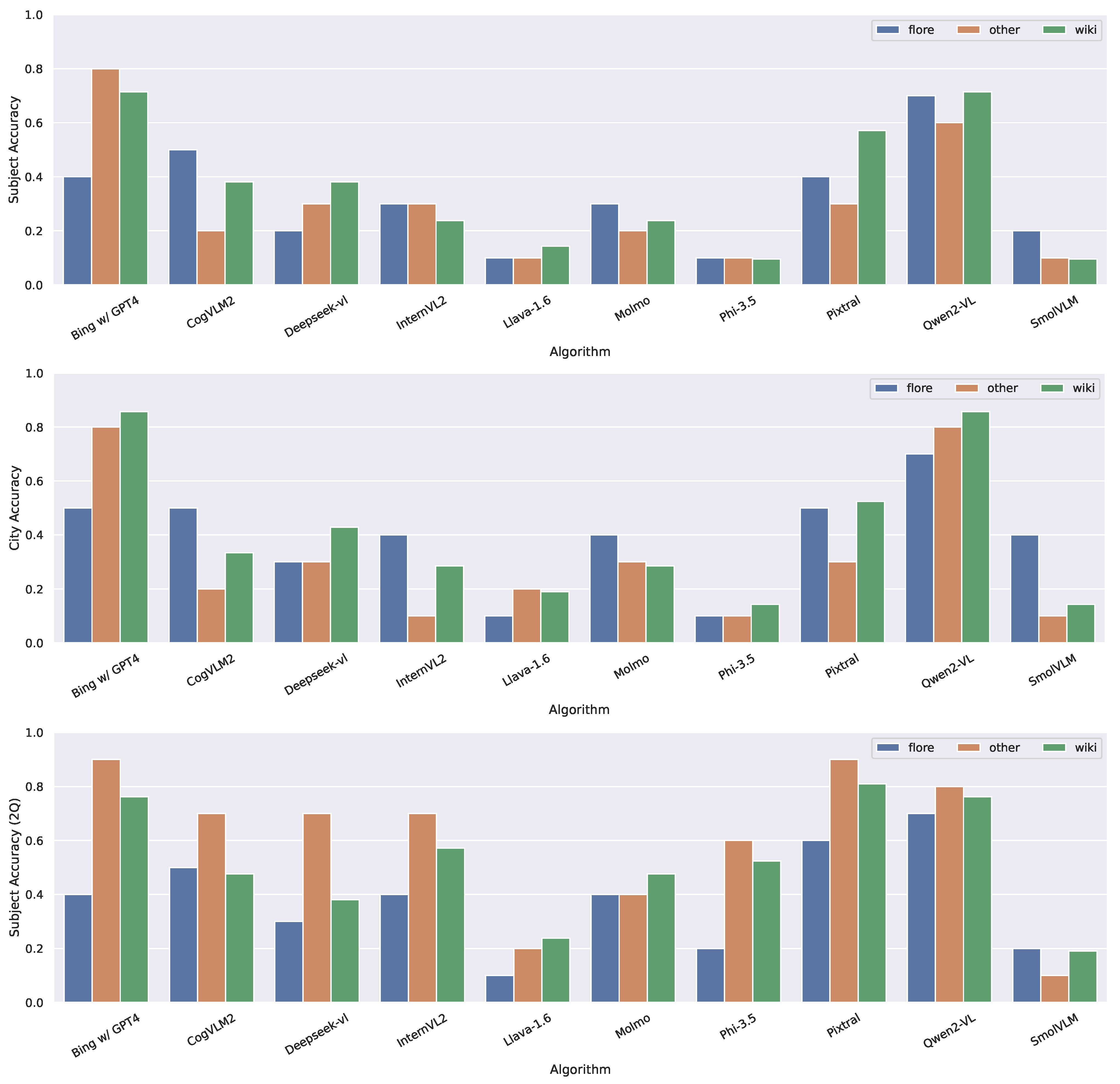

4.1. City and Subject Identification

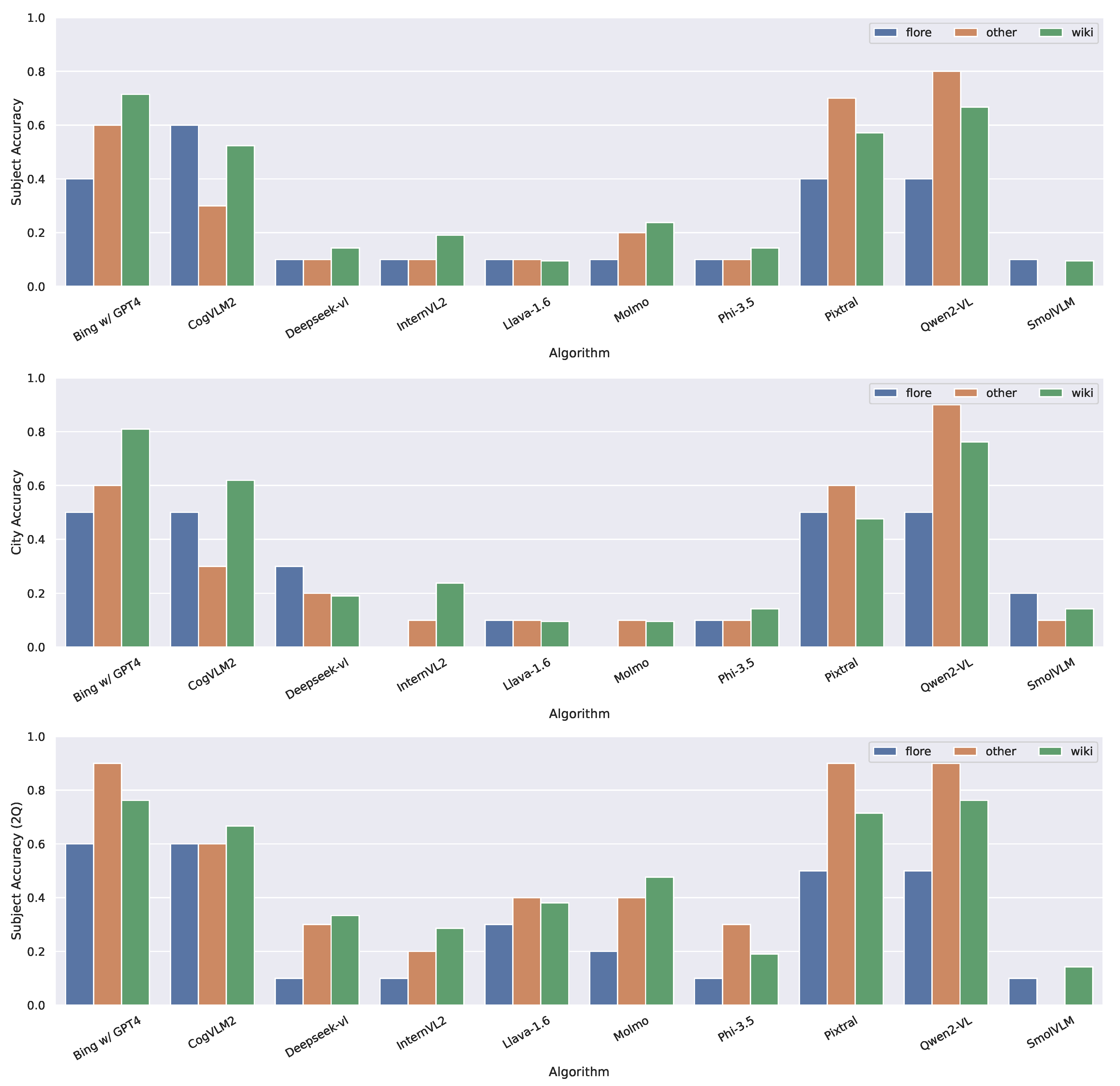

4.2. Impact of Providing Additional Context

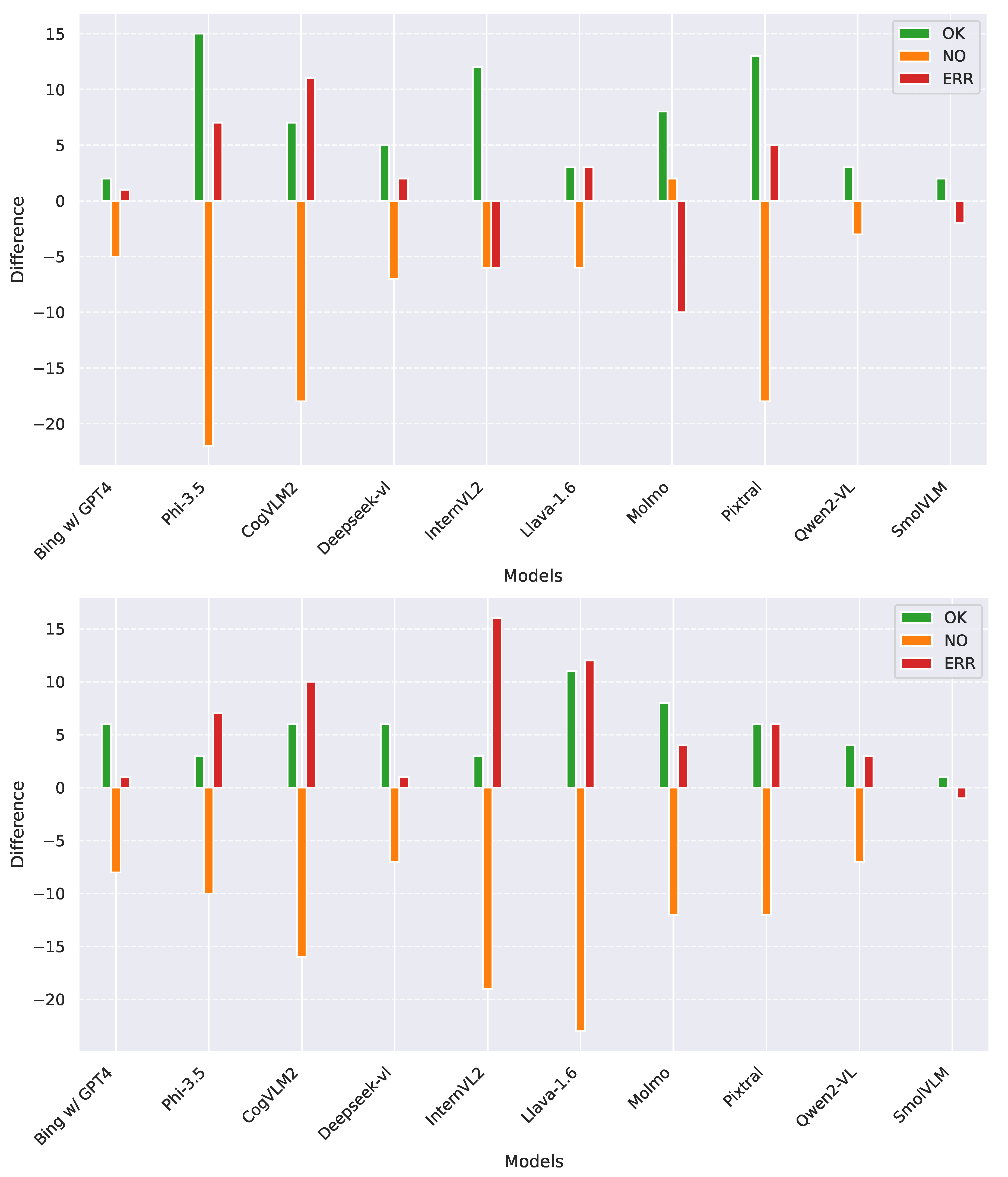

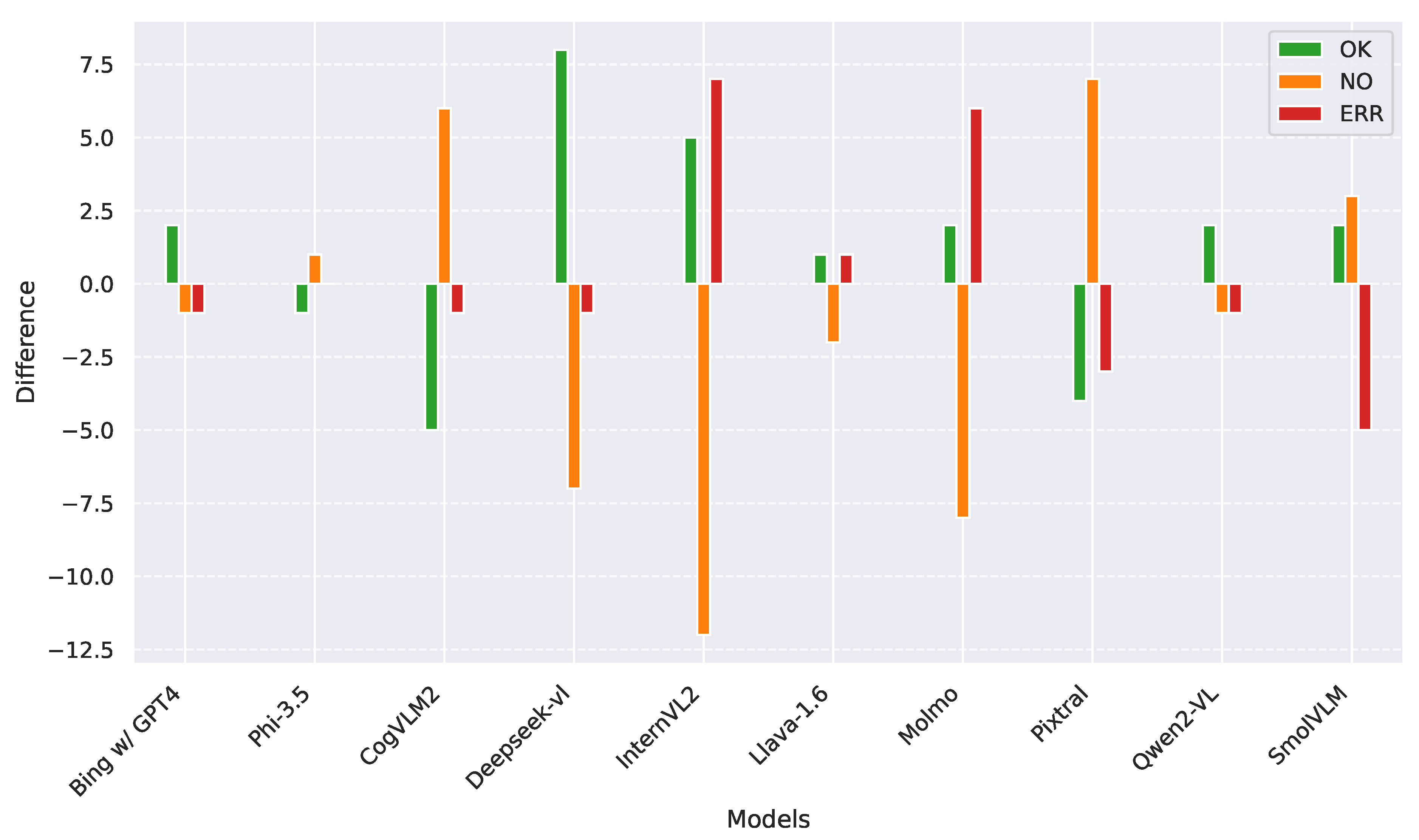

4.3. Language Performance

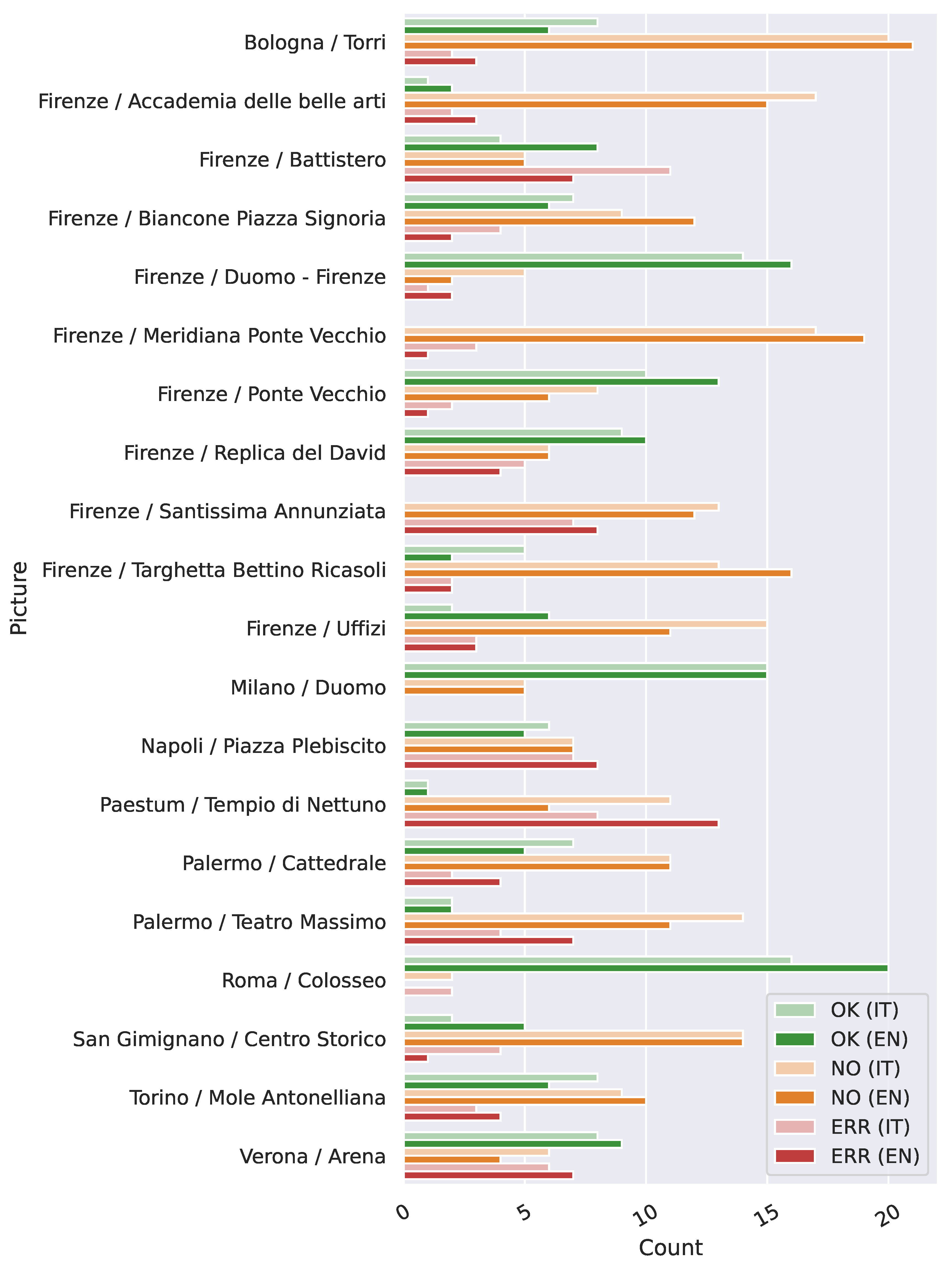

4.4. Subjects Most and Least Recognized

4.5. Hallucinations, Errors, and Peculiarities in Responses

5. Limitations

6. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| LLM | Large Language Model |
| VQA | Visual Question Answering |
| VLLM | Visual Large Language Model |
| Bing+ChatGPT-4 | Bing’s search engine with GPT-4 |

1. https://whc.unesco.org/en/list/ (accessed on 9 February 2025).

2. https://huggingface.co/collections/allenai/pixmo-674746ea613028006285687b (accessed on 9 February 2025).

References

1. Francioni, F.; Lenzerini, F. The 1972 World Heritage Convention: A Commentary; Oxford University Press: Oxford, UK, 2023.

2. Tosco, C. I beni culturali. In Storia, Tutela e Valorizzazione; Il Mulino: Bologna, Italy, 2014.

3. Hayhoe, S.; Carrizosa, H.G.; Rix, J.; Sheehy, K.; Seale, J. Accessible resources for cultural heritage ecosystems (arches): Initial observations from the fieldwork. In Proceedings of the Educational Research Association of Singapore (ERAS) Asia-Pacific Educational Research Association (APERA) International Conference 2018: Joy of Learning in a Complex World, Singapore, 12–14 November 2018.

4. De Groote, I. D8. 2 Dissemination Plan (DIGIART-The Internet of Historical Things and Building New 3D Cultural Worlds). 2015. Available online: http://researchonline.ljmu.ac.uk/id/eprint/3023/1/8%202%20Dissemination%20Plan%201%205.pdf (accessed on 9 February 2025).

5. Frangakis, N. Social Platform for Heritage Awareness and Participation. In Proceedings of the EuroVR Conference 2016, Athens, Greece, 22–24 November 2016.

6. Sala, T.M.; Bruzzo, M. I-Media-Cities. Innovative e-Environment for Research on Cities and the Media; Edicions Universitat Barcelona: Barcelona, Spain, 2019.

7. Katifori, A.; Roussou, M.; Perry, S.; Drettakis, G.; Vizcay, S.; Philip, J. The EMOTIVE Project-Emotive Virtual Cultural Experiences through Personalized Storytelling. In Proceedings of the Cira@EuroMed, Nicosia, Cyprus, 29 October–3 November 2018; pp. 11–20.

8. Lim, V.; Frangakis, N.; Tanco, L.M.; Picinali, L. PLUGGY: A pluggable social platform for cultural heritage awareness and participation. In Proceedings of the Advances in Digital Cultural Heritage: International Workshop, Funchal, Portugal, 28 June 2017; Revised Selected Papers. Springer: Berlin/Heidelberg, Germany, 2018; pp. 117–129.

9. Tóth, Z. (Ed.) Heritage at Risk: EU Research and Innovation for a More Resilient Cultural Heritage; Working paper; European Commission, Publications Office: Luxembourg, 2018.

10. Maier, A.; Fernández, G.; Kestemont, M.; Fornes, A.; Eskofier, B.; Vallet, B.; van Noort, E.; Vitali, F.; Albertin, F.; Niebling, F.; et al. Time Machine: Big Data of the Past for the Future of Europe Deliverable D2.1 Science and Technology (Pillar 1) Roadmap—Draft. 2019. Available online: https://pure.knaw.nl/portal/en/publications/time-machine-big-data-of-the-past-for-the-future-of-europe-delive (accessed on 9 February 2025).

11. Anichini, F.; Dershowitz, N.; Dubbini, N.; Gattiglia, G.; Itkin, B.; Wolf, L. The automatic recognition of ceramics from only one photo: The ArchAIDE app. J. Archaeol. Sci. Rep. 2021, 36, 102788.

12. Spallone, R.; Palma, V. Intelligenza artificiale e realtà aumentata per la condivisione del patrimonio culturale. Boll. Della Soc. Ital. Fotogramm. Topogr. 2020, 1, 19–26.

13. Trichopoulos, G.; Konstantakis, M.; Caridakis, G.; Katifori, A.; Koukouli, M. Crafting a Museum Guide Using ChatGPT4. Big Data Cogn. Comput. 2023, 7, 148.

14. Vadicamo, L.; Amato, G.; Bolettieri, P.; Falchi, F.; Gennaro, C.; Rabitti, F. Intelligenza artificiale, retrieval e beni culturali. In Proceedings of the Ital-IA 2019, Rome, Italy, 18–19 March 2019.

15. Ishmam, M.F.; Shovon, M.S.H.; Mridha, M.F.; Dey, N. From image to language: A critical analysis of visual question answering (vqa) approaches, challenges, and opportunities. Inf. Fusion 2024, 106, 102270.

16. Bai, Z.; Nakashima, Y.; Garcia, N. Explain me the painting: Multi-topic knowledgeable art description generation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 5422–5432.

17. Zhang, J.; Zheng, L.; Wang, M.; Guo, D. Training a small emotional vision language model for visual art comprehension. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 397–413.

18. Balauca, A.A.; Paudel, D.P.; Toutanova, K.; Van Gool, L. Taming CLIP for Fine-Grained and Structured Visual Understanding of Museum Exhibits. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 377–394.

19. Rachabatuni, P.K.; Principi, F.; Mazzanti, P.; Bertini, M. Context-aware chatbot using MLLMs for Cultural Heritage. In Proceedings of the 15th ACM Multimedia Systems Conference, Bari, Italy, 15–18 April 2024; pp. 459–463.

20. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All you Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: New York, NY, USA, 2017; Volume 30.

21. Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training. OpenAI 2018. Available online: https://cdn.openai.com/research-covers/language-unsupervised/language_understanding_paper.pdf (accessed on 9 February 2025).

22. Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language Models are Unsupervised Multitask Learners. OpenAI 2019. Available online: https://cdn.openai.com/better-language-models/language_models_are_unsupervised_multitask_learners.pdf (accessed on 9 February 2025).

23. Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. arXiv 2020, arXiv:2005.14165.

24. Martellozzo, N. Quando cadono le statue: Memorie contestate e counter-heritage nelle proteste di Black Lives Matter. Dialoghi Mediterr. 2020, 45, 1–10.

25. di Firenze, C. The Management Plan of the Historic Centre of Florence. Firenze: Ufficio Centro Storico UNESCO del Comune di Firenze. 2016. Available online: http://www.firenzepatrimoniomondiale.it/wp-content/uploads/2015/12/Piano-gestione-enweb1.pdf (accessed on 9 February 2025).

26. Dondero, M.G. Scenari del sé e monumenti in posa nella fotografia turistica. In Espressione e Contenuto: Rivista dell’Associazione Italiana di Studi Semiotici; Associazione Italiana di Studi Semiotici: Palermo, Italy, 2005.

27. Verhoeven, G. Basics of photography for cultural heritage imaging. In 3D Recording, Documentation and Management of Cultural Heritage; Whittles Publishing: Dunbeath, UK, 2016; pp. 127–251.

28. Baracchi, D.; Shullani, D.; Iuliani, M.; Piva, A. FloreView: An image and video dataset for forensic analysis. IEEE Access 2023, 11, 109267–109282.

29. Wang, P.; Bai, S.; Tan, S.; Wang, S.; Fan, Z.; Bai, J.; Chen, K.; Liu, X.; Wang, J.; Ge, W.; et al. Qwen2-VL: Enhancing Vision-Language Model’s Perception of the World at Any Resolution. arXiv 2024, arXiv:2409.12191.

30. Wang, W.; Lv, Q.; Yu, W.; Hong, W.; Qi, J.; Wang, Y.; Ji, J.; Yang, Z.; Zhao, L.; Song, X.; et al. CogVLM: Visual Expert for Pretrained Language Models. arXiv 2023, arXiv:2311.03079.

31. Hong, W.; Wang, W.; Ding, M.; Yu, W.; Lv, Q.; Wang, Y.; Cheng, Y.; Huang, S.; Ji, J.; Xue, Z.; et al. CogVLM2: Visual Language Models for Image and Video Understanding. arXiv 2024, arXiv:2408.16500.

32. Lu, H.; Liu, W.; Zhang, B.; Wang, B.; Dong, K.; Liu, B.; Sun, J.; Ren, T.; Li, Z.; Yang, H.; et al. DeepSeek-VL: Towards Real-World Vision-Language Understanding. arXiv 2024, arXiv:2403.05525.

33. Abdin, M.; Aneja, J.; Awadalla, H.; Awadallah, A.; Awan, A.A.; Bach, N.; Bahree, A.; Bakhtiari, A.; Bao, J.; Behl, H.; et al. Phi-3 Technical Report: A Highly Capable Language Model Locally on Your Phone. arXiv 2024, arXiv:2404.14219.

34. Chen, Z.; Wu, J.; Wang, W.; Su, W.; Chen, G.; Xing, S.; Zhong, M.; Zhang, Q.; Zhu, X.; Lu, L.; et al. InternVL: Scaling up Vision Foundation Models and Aligning for Generic Visual-Linguistic Tasks. arXiv 2023, arXiv:2312.14238.

35. Chen, Z.; Wang, W.; Tian, H.; Ye, S.; Gao, Z.; Cui, E.; Tong, W.; Hu, K.; Luo, J.; Ma, Z.; et al. How Far Are We to GPT-4V? Closing the Gap to Commercial Multimodal Models with Open-Source Suites. arXiv 2024, arXiv:2404.16821.

36. Agrawal, P.; Antoniak, S.; Hanna, E.B.; Bout, B.; Chaplot, D.; Chudnovsky, J.; Costa, D.; Monicault, B.D.; Garg, S.; Gervet, T.; et al. Pixtral 12B. arXiv 2024, arXiv:2410.07073.

37. Liu, H.; Li, C.; Wu, Q.; Lee, Y.J. Visual Instruction Tuning. Adv. Neural Inf. Process. Syst. 2023, 36, 34892–34916.

38. Liu, H.; Li, C.; Li, Y.; Lee, Y.J. Improved Baselines with Visual Instruction Tuning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024.

39. Deitke, M.; Clark, C.; Lee, S.; Tripathi, R.; Yang, Y.; Park, J.S.; Salehi, M.; Muennighoff, N.; Lo, K.; Soldaini, L.; et al. Molmo and PixMo: Open Weights and Open Data for State-of-the-Art Multimodal Models. arXiv 2024, arXiv:2409.17146.

40. Allal, L.B.; Lozhkov, A.; Bakouch, E.; Blázquez, G.M.; Tunstall, L.; Piqueres, A.; Marafioti, A.; Zakka, C.; von Werra, L.; Wolf, T. SmolLM2—With Great Data, Comes Great Performance. 2024. Available online: https://arxiv.org/pdf/2502.02737 (accessed on 9 February 2025).

41. McNemar, Q. Note on the Sampling Error of the Difference Between Correlated Proportions or Percentages. Psychometrika 1947, 12, 153–157.

42. Holm, S. A Simple Sequentially Rejective Multiple Test Procedure. Scand. J. Stat. 1979, 6, 65–70.

43. Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. Bleu: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 6–12 July 2002; pp. 311–318.

| Model | Variant | Parameters | Quantization | Cutoff Date |
|---|---|---|---|---|
| Qwen2-VL [29] | Instruct | 7 B | – | June 2023 † |
| CogVLM2 [30,31] | llama3-Chat | 19 B | int4 | March 2023 ‡ |
| Deepseek-vl [32] | base | 7 B | – | May 2023 † |
| Phi-3.5 [33] | Vision-Instruct | 4.2 B | – | March 2024 ‡ |
| Pixtral [36] | – | 12 B | float16 | September 2024 ⋆ |
| InternVL2 [34,35] | – | 8 B | – | July 2024 ⋆ |
| Llava 1.6 [37,38] | mistral-7b-hf | 7 B | – | December 2023 † |
| Molmo [39] | O-0924 | 7 B | bfloat16 | October 2024 ⋆ |
| SmolVLM [40] | – | 1.7 B | bfloat16 | December 2024 ‡ |
| Bing+ChatGPT-4 | ? | ? | ? | ? |

| Language | City | Question |
|---|---|---|
| ITA | – | Qual è il soggetto rappresentato in questa immagine? |
| ITA | ✓ | Sei a CITY, qual è il soggetto rappresentato in questa immagine? |
| ENG | – | Which is the subject captured in this picture? |
| ENG | ✓ | You are in CITY, which is the subject captured in this picture? |
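The four question variants above differ only in language and in whether the CITY placeholder is present, so they can be generated from a small lookup. The sketch below is illustrative: the `PROMPTS` mapping and the `build_prompt` helper are hypothetical names, and the caller is assumed to pass the city name already written in the language of the prompt.

```python
# Templates copied from the table above; CITY is the placeholder to fill.
PROMPTS = {
    ("ITA", False): "Qual è il soggetto rappresentato in questa immagine?",
    ("ITA", True): "Sei a CITY, qual è il soggetto rappresentato in questa immagine?",
    ("ENG", False): "Which is the subject captured in this picture?",
    ("ENG", True): "You are in CITY, which is the subject captured in this picture?",
}


def build_prompt(language, city=None):
    """Return the question for a language, with the city filled in if given."""
    template = PROMPTS[(language, city is not None)]
    if city is None:
        return template
    return template.replace("CITY", city)


# The four conditions for one image, using Florence as an example city.
conditions = [
    build_prompt("ITA"),
    build_prompt("ITA", "Firenze"),
    build_prompt("ENG"),
    build_prompt("ENG", "Florence"),
]
```

Generating every condition from the same templates keeps the wording identical across images, so that differences in the answers can be attributed to the language or to the added context rather than to the phrasing.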

| Algorithm | Answer 1 | City | Subject | Description |
|---|---|---|---|---|
| SmolVLM [40] | The Colosseum in Rome. | OK | OK | NO |
| Qwen2-VL [29] | The subject captured in this picture is the Colosseum, an ancient amphitheater located in Rome, Italy. The Colosseum is one of the most iconic and well-preserved structures of ancient Roman architecture. | OK | OK | OK |
| InternVL2 [35] | The subject captured in this picture is the Colosseum. | NO | OK | NO |
| Llava 1.6 [37,38] | The subject captured in this picture is the Colosseum, an iconic landmark located in Rome, Italy. It’s a large, oval amphitheater that was used for gladiatorial contests and other public spectacles during the Roman Empire. | OK | OK | OK |
| Pixtral [36] | The image depicts the Colosseum, an iconic ancient amphitheater located in Rome, Italy. The Colosseum is known for its distinctive elliptical shape and multiple levels of arches. The structure is made of stone and concrete, showcasing the architectural ingenuity of the Roman Empire. The Colosseum was used for gladiatorial games, public spectacles, and other events, and it remains one of the most famous landmarks in the world. The clear blue sky in the background highlights the grandeur and historical significance of this ancient structure. | OK | OK | OK |
| Phi-3.5 [33] | The Colosseum in Rome | OK | OK | NO |
| Bing+ChatGPT-4 | The subject captured in the picture you sent is the Colosseum in Rome, Italy. The Colosseum is an ancient amphitheater, built of concrete and stone. | OK | OK | OK |
| Molmo [39] | The subject captured in this picture is the Colosseum in Rome, Italy. This iconic ancient amphitheater dominates the frame, showcasing its impressive architecture with multiple levels of arched windows and columns. The image focuses on the upper portion of the Colosseum, highlighting its grandeur and historical significance against a clear blue sky. | OK | OK | OK |
| Deepseek-vl [32] | The subject captured in this picture is the Colosseum, a famous landmark in Rome, Italy. | OK | OK | NO |
| CogVLM2 [31] | The subject captured in this picture is the Colosseum, an iconic ancient Roman amphitheater located in Rome, Italy. | OK | OK | OK |
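The OK/NO labels in the table above can be aggregated into a per-criterion rate. The sketch below tallies the labels for this single Colosseum example only; the `LABELS` mapping transcribes the table, while the `ok_rate` helper is a hypothetical name and not part of the study's tooling.

```python
# (city, subject, description) labels transcribed from the table above.
CRITERIA = ("city", "subject", "description")
LABELS = {
    "SmolVLM": ("OK", "OK", "NO"),
    "Qwen2-VL": ("OK", "OK", "OK"),
    "InternVL2": ("NO", "OK", "NO"),
    "Llava 1.6": ("OK", "OK", "OK"),
    "Pixtral": ("OK", "OK", "OK"),
    "Phi-3.5": ("OK", "OK", "NO"),
    "Bing+ChatGPT-4": ("OK", "OK", "OK"),
    "Molmo": ("OK", "OK", "OK"),
    "Deepseek-vl": ("OK", "OK", "NO"),
    "CogVLM2": ("OK", "OK", "OK"),
}


def ok_rate(labels, criterion):
    """Fraction of models labelled OK for the given criterion."""
    idx = CRITERIA.index(criterion)
    marks = [row[idx] for row in labels.values()]
    return sum(mark == "OK" for mark in marks) / len(marks)


rates = {criterion: ok_rate(LABELS, criterion) for criterion in CRITERIA}
```

For this example, all ten models name the subject, nine also name the city, and six add a description, which shows how the three criteria separate answers that are correct but terse from answers that are both correct and informative.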

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Vitaloni, C.; Shullani, D.; Baracchi, D. A Comparative Study of Vision Language Models for Italian Cultural Heritage. Heritage 2025, 8, 95. https://doi.org/10.3390/heritage8030095
