ML Approaches for the Study of Significant Heritage Contexts: An Application on Coastal Landscapes in Sardinia

Abstract

1. Introduction

- Is it possible to provide efficient, automatic data structuring pipelines for existing regional low-scale datasets, even though they were not acquired for heritage documentation and detection purposes?

- Is it possible to semantically enrich unstructured 3D datasets through ML techniques in the context of widespread heritage?

1.1. Research Background and Related Works

1.1.1. Heritage Research Framework

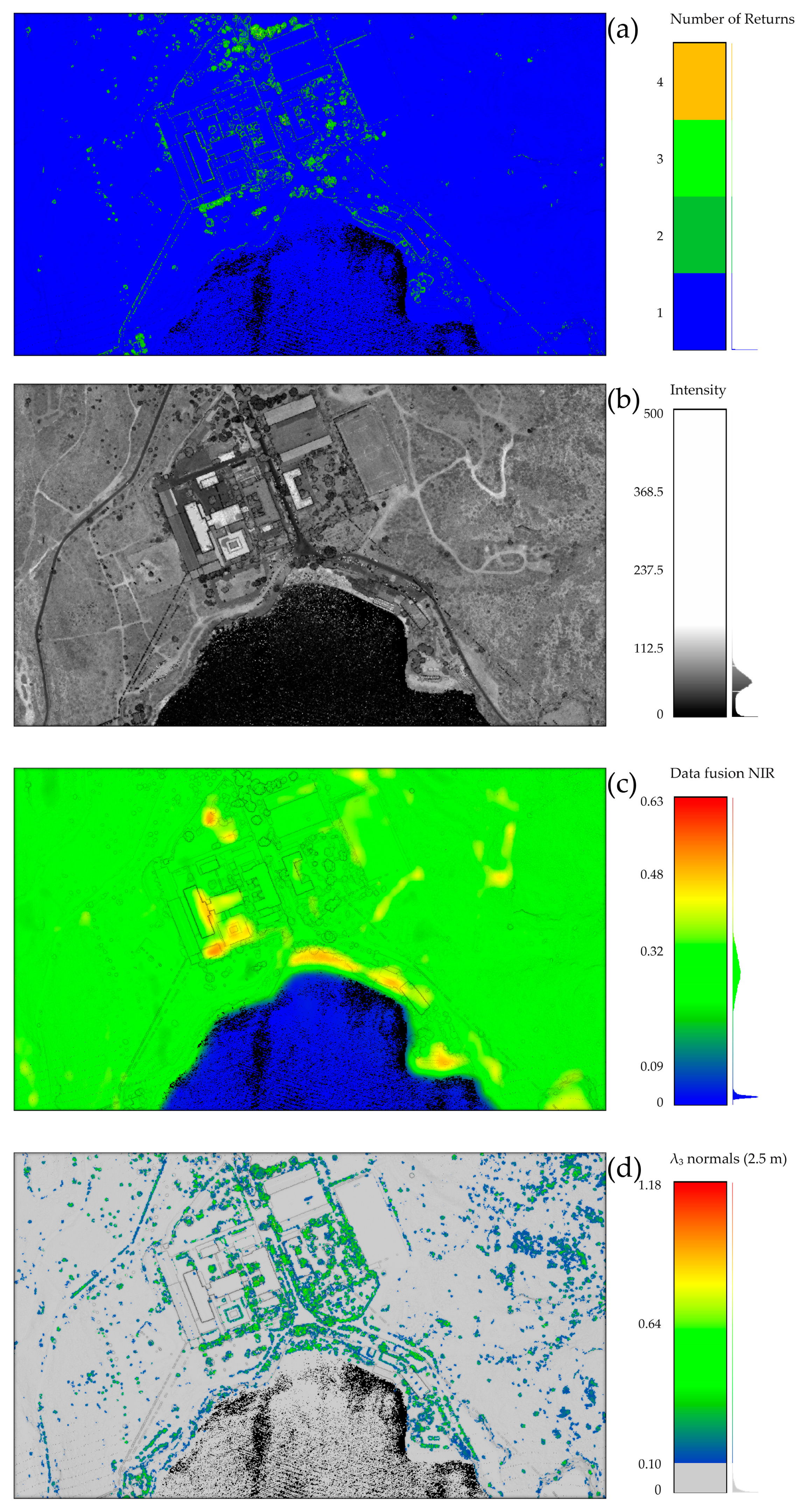

1.1.2. Integration of Passive and Active Sensors for Landscape Context Mapping and Documentation

1.1.3. Semantic Enrichment and Structuring: Data Fusion and ML Approaches for Point Cloud Segmentation and Object Detection

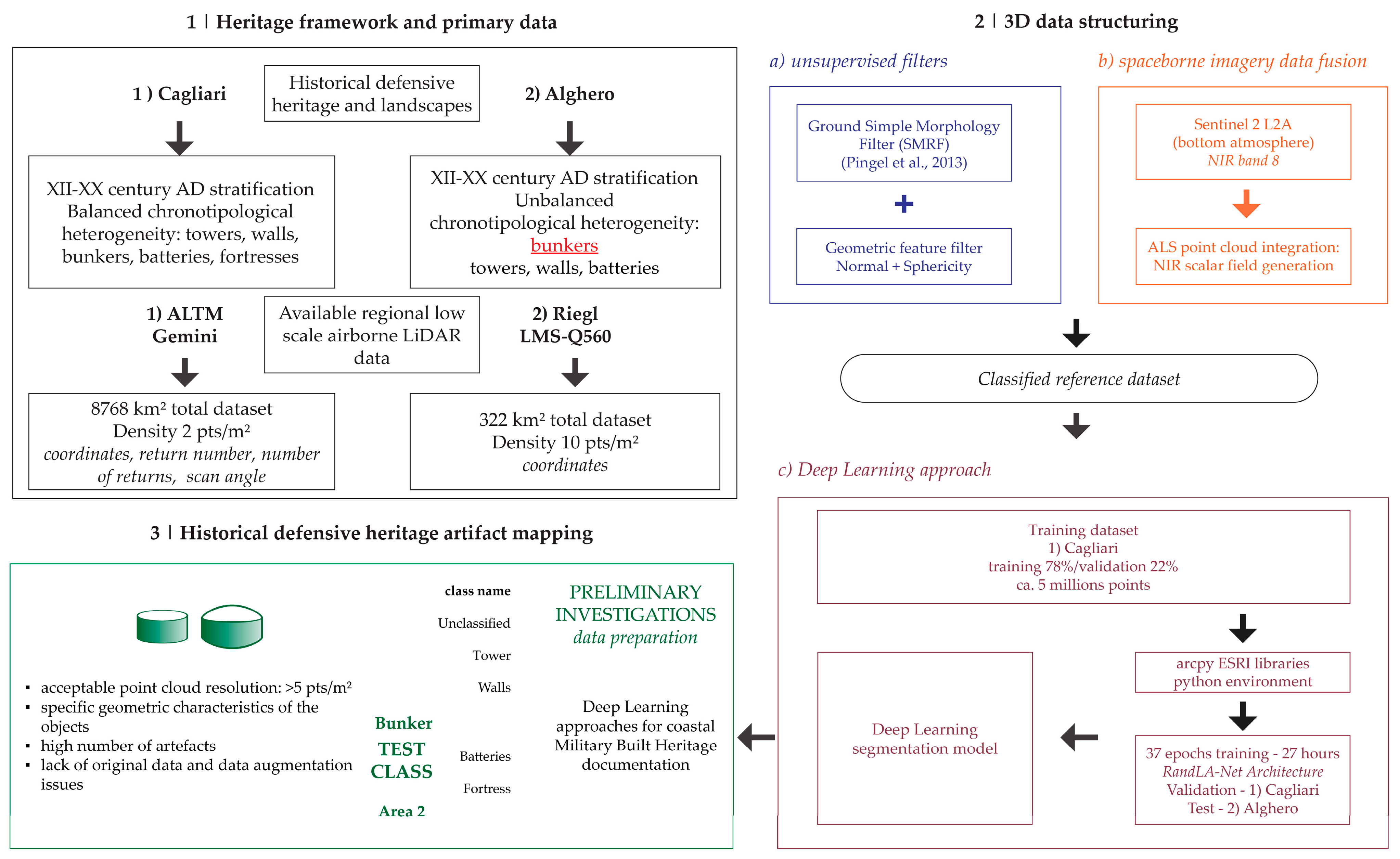

2. Materials and Methods

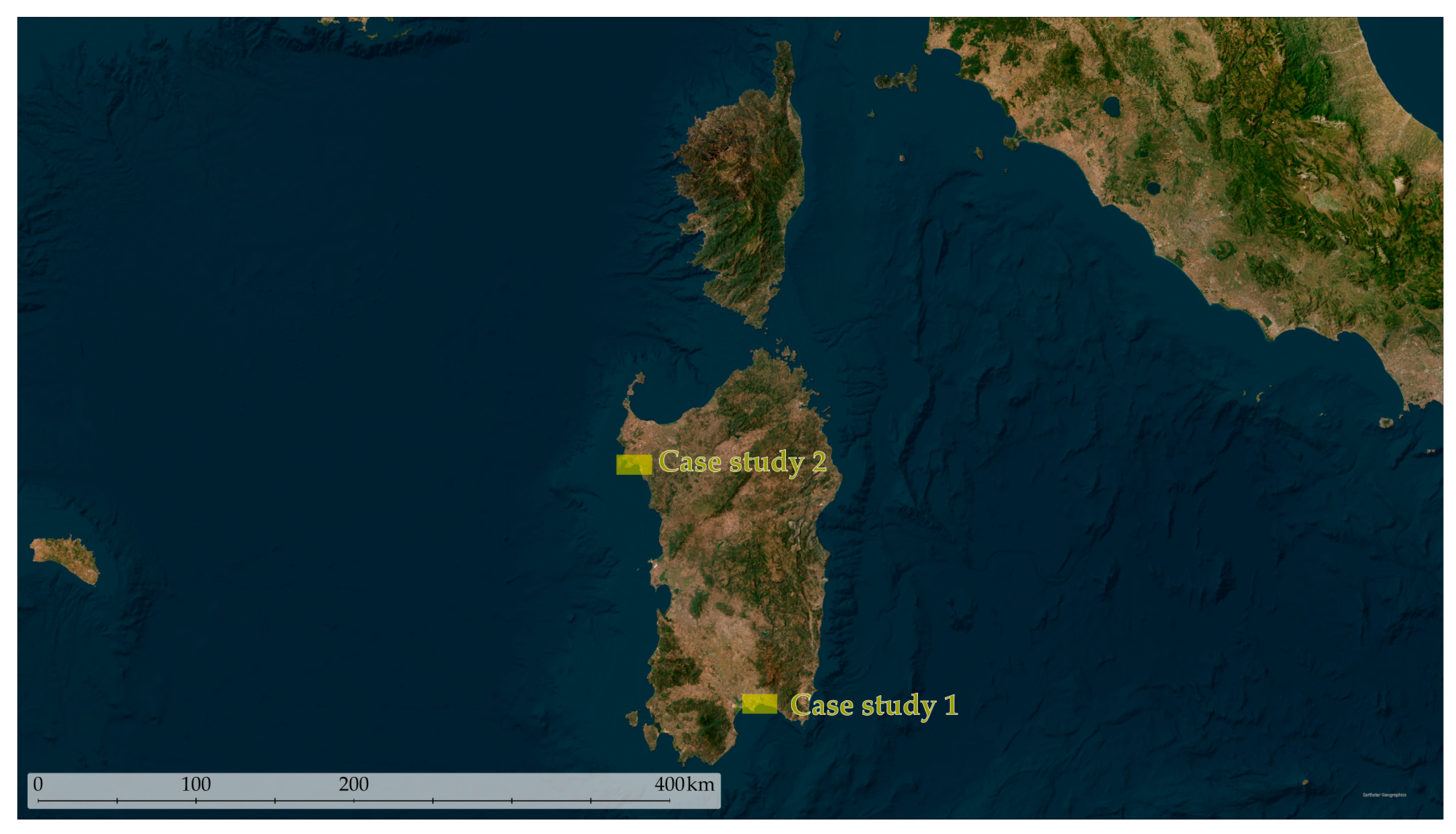

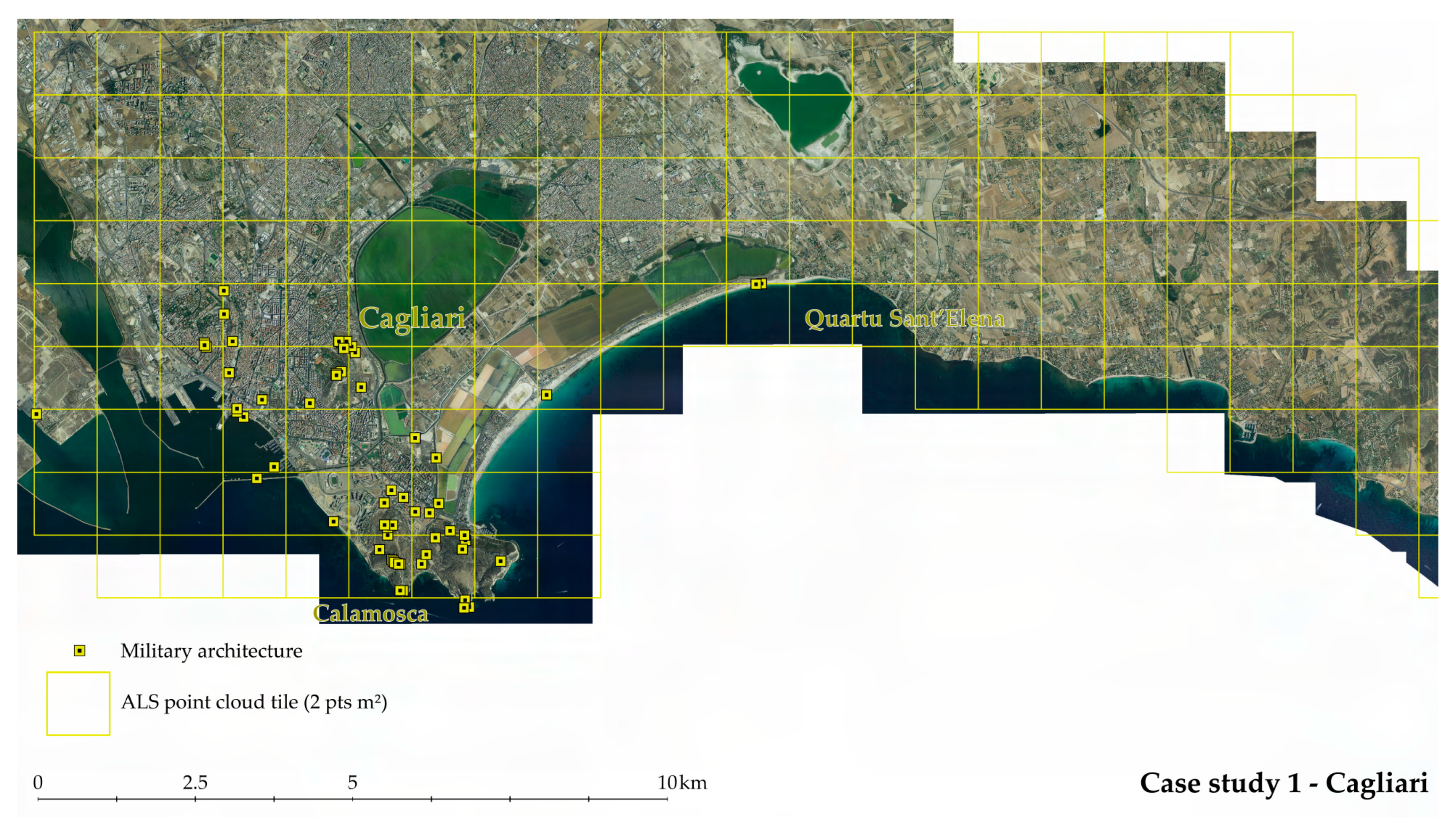

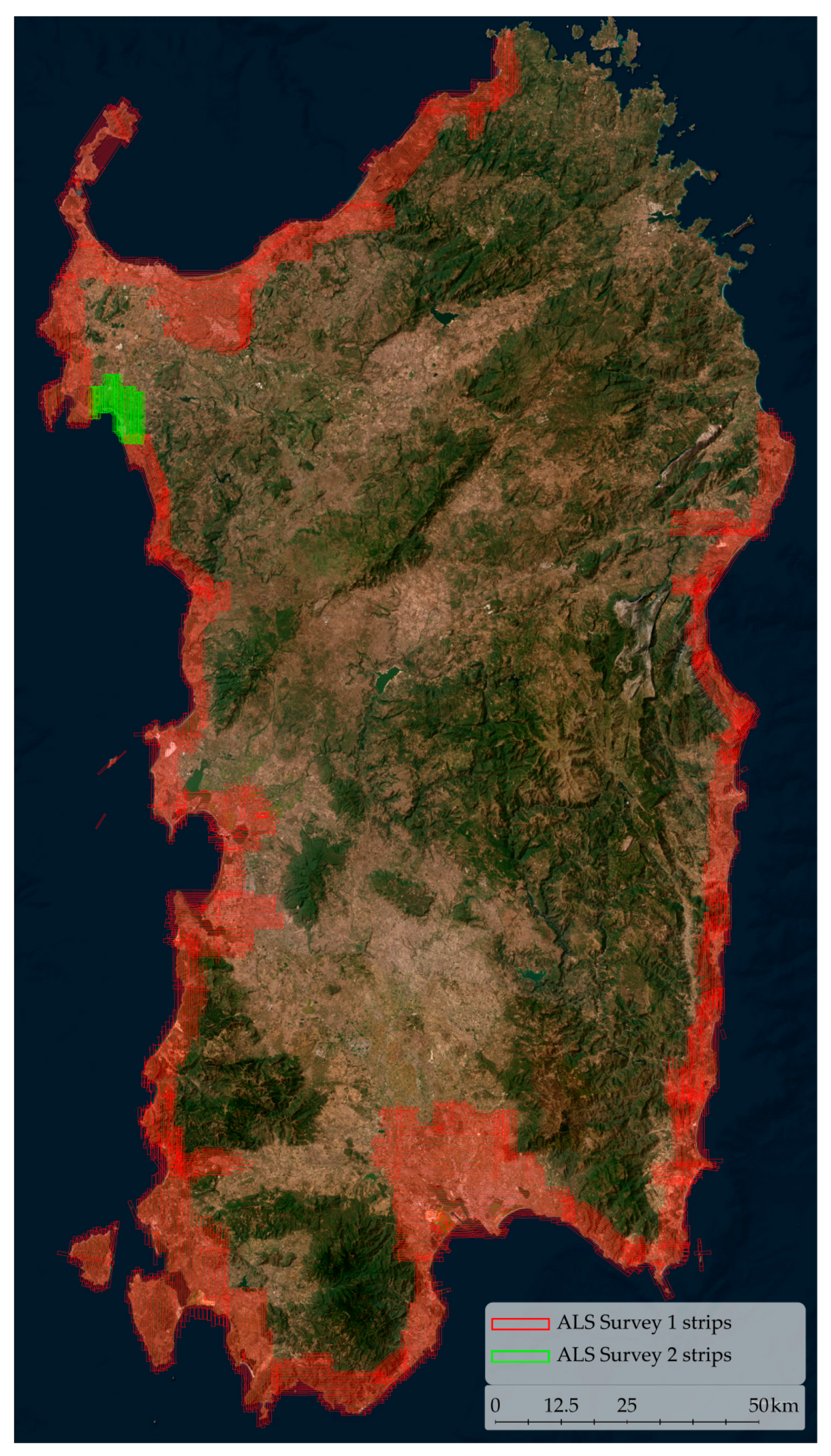

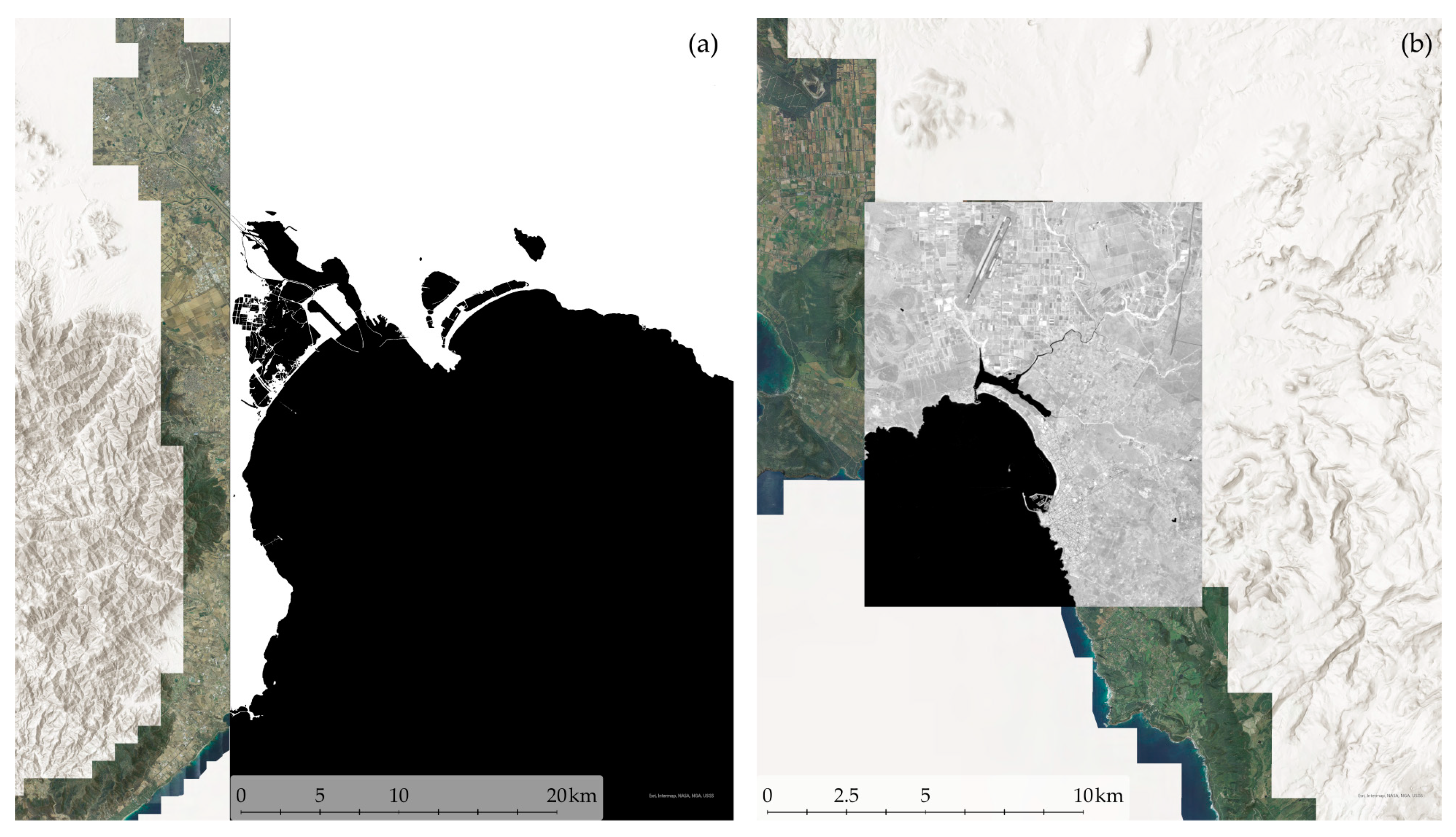

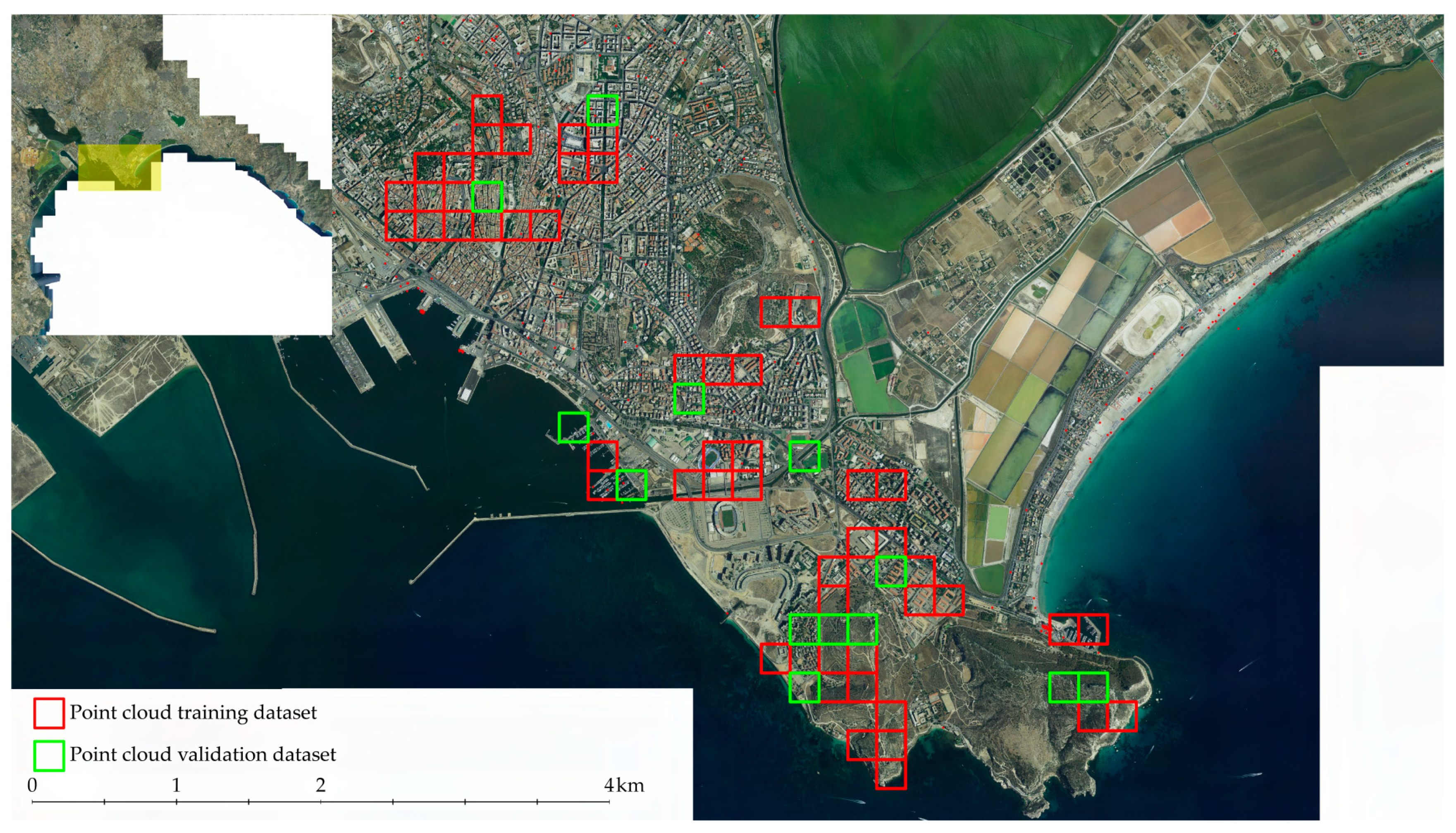

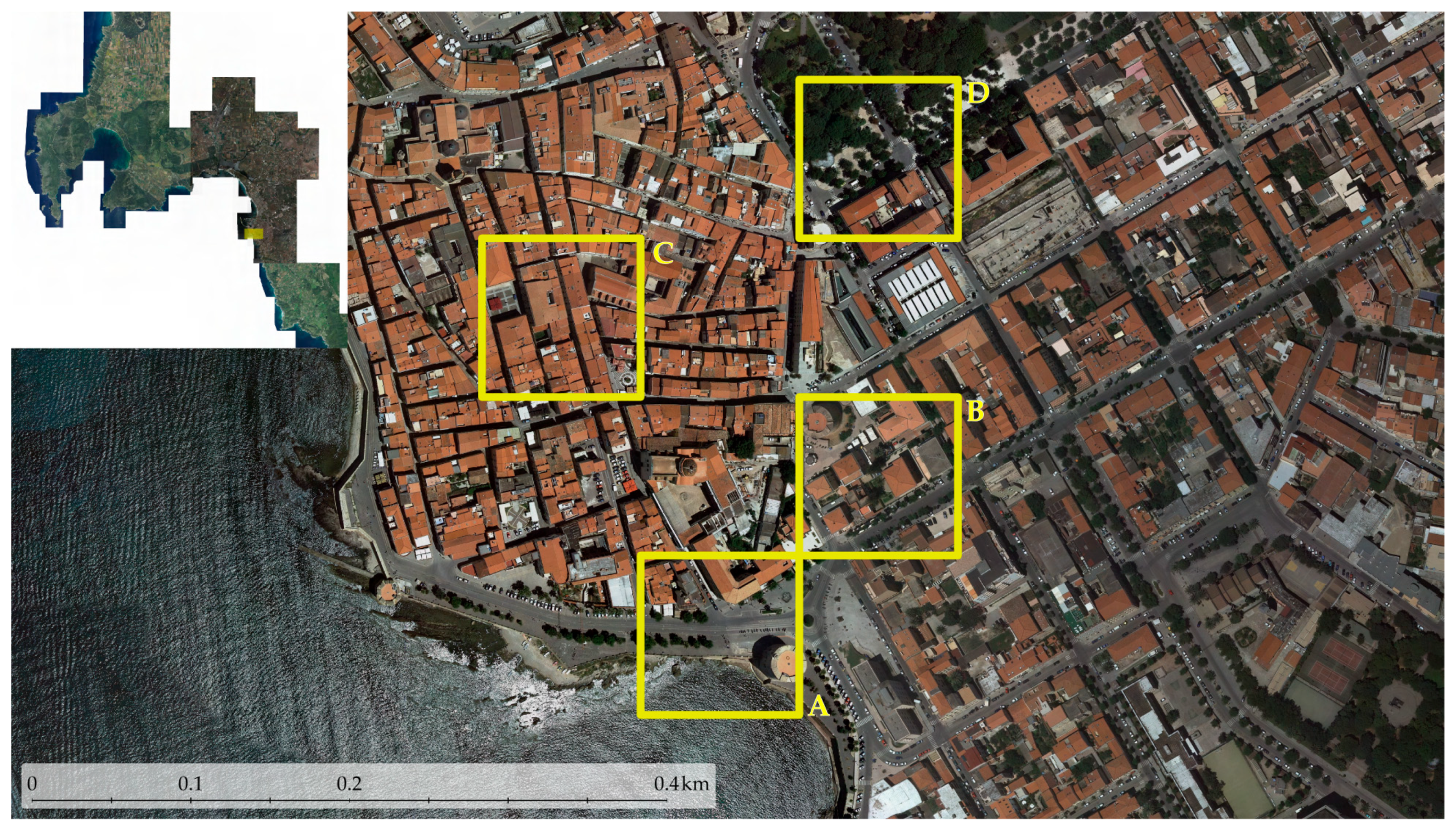

2.1. Case Studies and Airborne LiDAR Sardinia Datasets

2.2. Methodological Approaches for Semi-Automatic Data Structuring

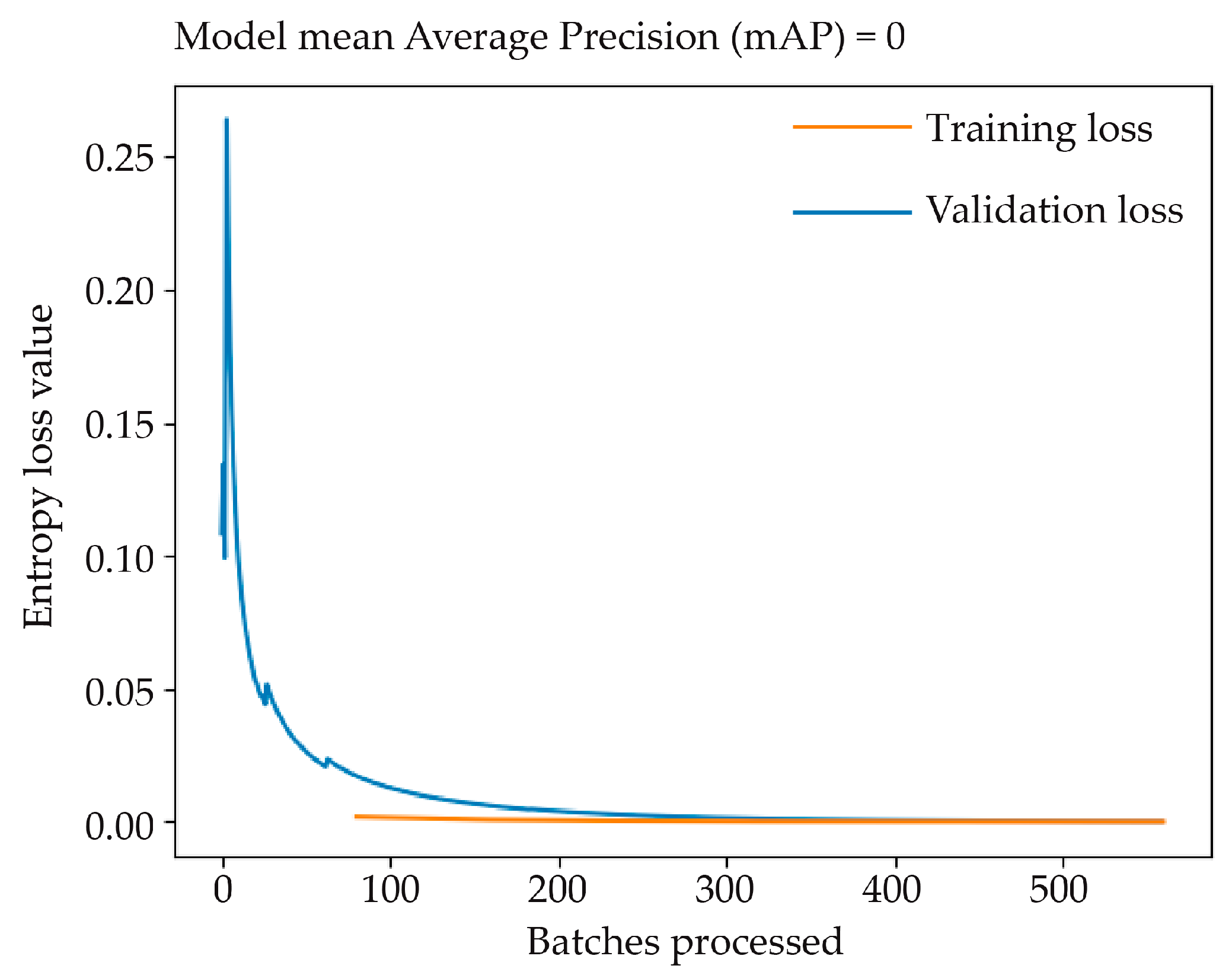

2.2.1. Data Preparation for Semantic Segmentation DLM Training

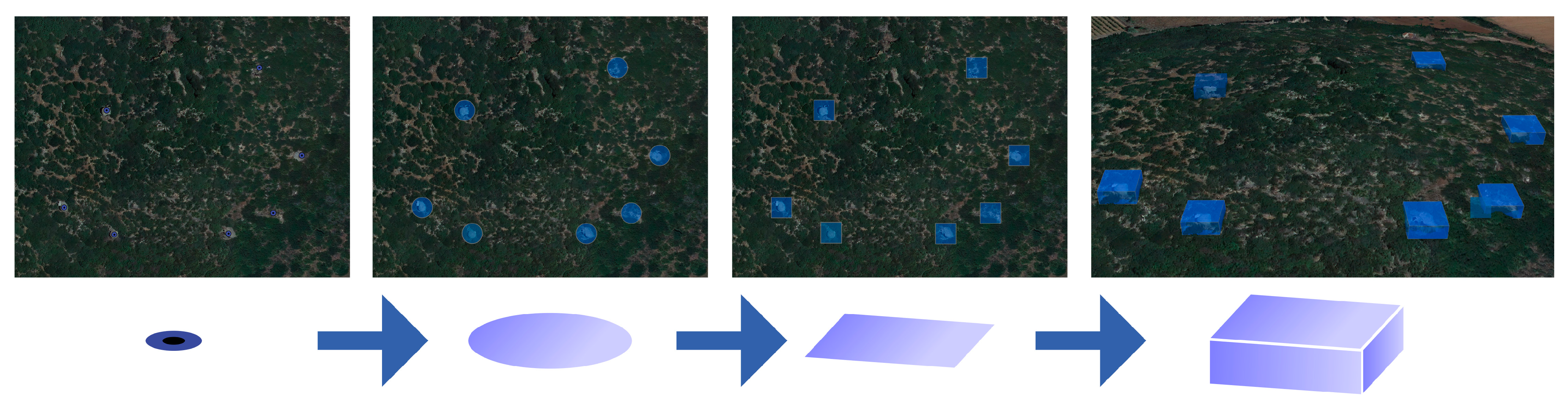

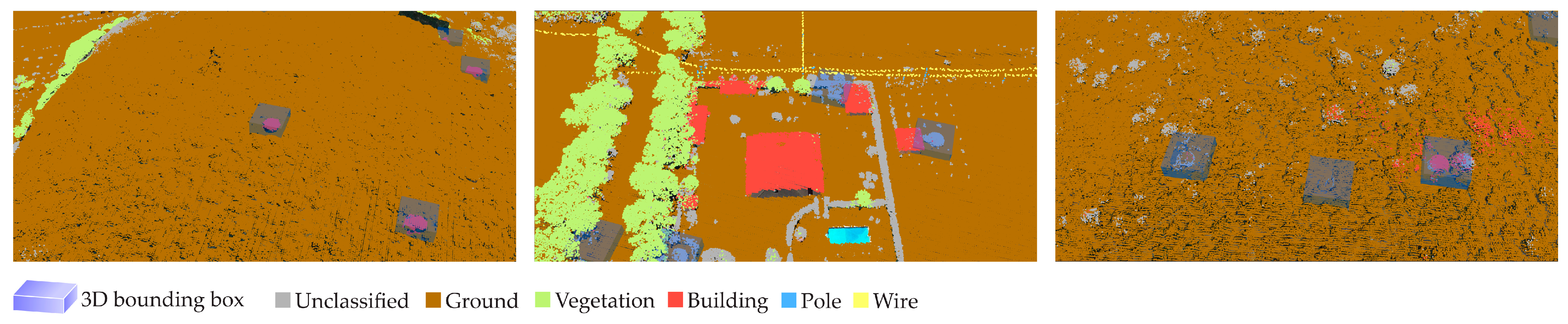

2.2.2. Historical Defensive Heritage Artifact Label Generation and NN Investigations

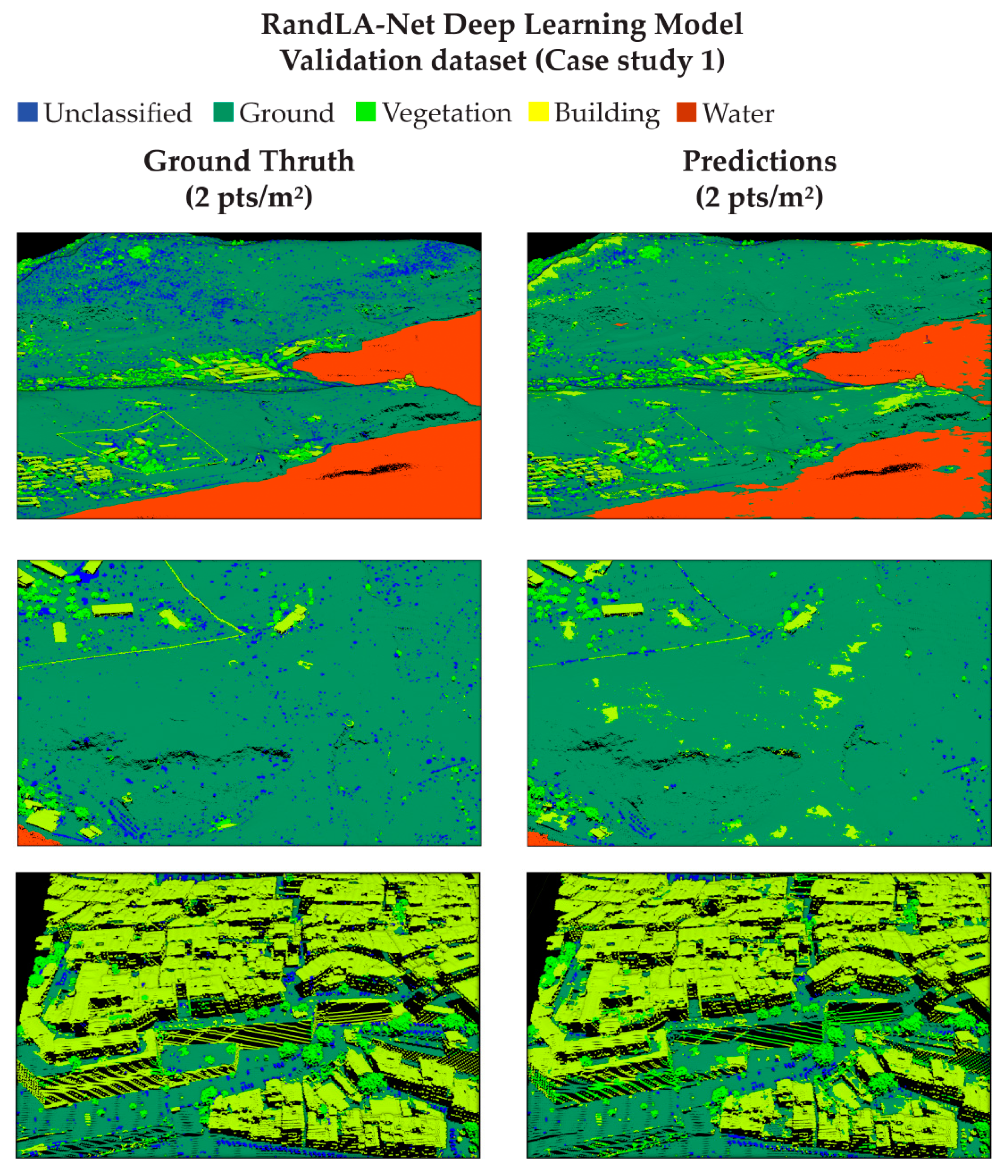

3. Results and Discussion

- Overprediction of building class over flat ground areas;

- Underprediction of building class over oblique occluded surfaces;

- Overprediction of unclassified class on small wall objects;

- Underprediction of unclassified class (small evergreen shrubs that are difficult to distinguish from ground);

- Underprediction of water class.
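
The over- and underprediction patterns listed above correspond to false positives and false negatives in a per-class confusion matrix. The following is a minimal sketch, assuming a hypothetical three-class confusion matrix with invented counts (not data from this study) and a hypothetical helper named `prediction_bias`, of how the two error types can be read off for each class:

```python
# Illustrative only: class subset and counts are invented, not taken from the study.
CLASSES = ["ground", "building", "water"]

# confusion[i][j] = number of points with reference class i and predicted class j
confusion = [
    [90, 10, 0],  # reference: ground (10 points wrongly predicted as building)
    [5, 45, 0],   # reference: building
    [8, 0, 2],    # reference: water (8 points wrongly predicted as ground)
]


def prediction_bias(confusion, classes):
    """Return false positives, false negatives, and the dominant error type per class."""
    report = {}
    for k, name in enumerate(classes):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp                 # reference k, predicted as another class
        fp = sum(row[k] for row in confusion) - tp  # predicted k, reference is another class
        if fp > fn:
            bias = "overpredicted"
        elif fn > fp:
            bias = "underpredicted"
        else:
            bias = "balanced"
        report[name] = {"fp": fp, "fn": fn, "bias": bias}
    return report


report = prediction_bias(confusion, CLASSES)
for name, stats in report.items():
    print(name, stats)
```

In this toy matrix, building collects more false positives than false negatives (overprediction, which lowers precision), while water is mostly missed (underprediction, which lowers recall).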

4. Conclusions and Future Perspectives

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Stubbs, J.H. Time Honored: A Global View of Architectural Conservation; Wiley: Hoboken, NJ, USA, 2009; ISBN 978-0-470-26049-4.

- Moullou, D.; Vital, R.; Sylaiou, S.; Ragia, L. Digital Tools for Data Acquisition and Heritage Management in Archaeology and Their Impact on Archaeological Practices. Heritage 2024, 7, 107–121.

- Fiorino, D.R. Sinergies: Interinstitutional Experiences for the Rehabilitation of Military Areas; UNICApress: Cagliari, Italy, 2021; Volume 1, ISBN 9788833120485.

- Fiorino, D.R. Military Landscapes: A Future for Military Heritage. In Proceedings of the International Conference, La Maddalena, Italia, 21–24 June 2017; Fiorino, D.R., Ed.; Skirà: Milano, Italy, 2017.

- Virilio, P. Bunker Archaeology, 2nd ed.; Princeton Architectural Press: New York, NY, USA, 1994; ISBN 9781568980157.

- Bassier, M.; Vincke, S.; Hernandez, R.d.L.; Vergauwen, M. An Overview of Innovative Heritage Deliverables Based on Remote Sensing Techniques. Remote Sens. 2018, 10, 1607.

- Rabbia, A.; Sammartano, G.; Spanò, A. Fostering Etruscan Heritage with Effective Integration of UAV, TLS and SLAM-Based Methods. In Proceedings of the 2020 IMEKO TC-4 International Conference on Metrology for Archaeology and Cultural Heritage, Trento, Italy, 22–24 October 2020; pp. 322–327.

- Petras, V.; Petrasova, A.; McCarter, J.B.; Mitasova, H.; Meentemeyer, R.K. Point Density Variations in Airborne Lidar Point Clouds. Sensors 2023, 23, 1593.

- Kölle, M.; Laupheimer, D.; Schmohl, S.; Haala, N.; Rottensteiner, F.; Wegner, J.D.; Ledoux, H. The Hessigheim 3D (H3D) Benchmark on Semantic Segmentation of High-Resolution 3D Point Clouds and Textured Meshes from UAV LiDAR and Multi-View-Stereo. ISPRS Open J. Photogramm. Remote Sens. 2021, 1, 100001.

- Brovelli, M.A.; Cina, A.; Crespi, M.; Lingua, A.; Manzino, A.; Garretti, L. Ortoimmagini e Modelli Altimetrici a Grande Scala-Linee Guida; CISIS, Centro Interregionale per I Sistemi Informatici Geografici e Statistici In Liquidazione: Rome, Italy, 2009.

- Argyrou, A.; Agapiou, A. A Review of Artificial Intelligence and Remote Sensing for Archaeological Research. Remote Sens. 2022, 14, 6000.

- Cappellazzo, M.; Baldo, M.; Sammartano, G.; Spanò, A. Integrated Airborne LiDAR-UAV Methods for Archaeological Mapping in Vegetation-Covered Areas. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2023, 48, 357–364.

- Cappellazzo, M.; Patrucco, G.; Sammartano, G.; Baldo, M.; Spanò, A. Semantic Mapping of Landscape Morphologies: Tuning ML/DL Classification Approaches for Airborne LiDAR Data. Remote Sens. 2024, 16, 3572.

- Cherchi, G.; Fiorino, D.R.; Pais, M.R.; Pirisino, M.S. Bunker Landscapes: From Traces of a Traumatic Past to Key Elements in the Citizen Identity. In Defensive Architecture of the Mediterranean: Vol. XV; Pisa University Press: Pisa, Italy, 2023; pp. 1195–1201.

- Cappellazzo, M. Layered Landscape and Archeology of Military Heritage: Valorization Strategies for Porto Conte Park Territories (Alghero, SS) with GIS Technologies and Low-Cost Survey Contributions. Master’s Thesis, Politecnico di Torino, Torino, Italy, 2019.

- Bassier, M.; Vergauwen, M.; Van Genechten, B. Automated Classification of Heritage Buildings for As-Built BIM Using Machine Learning Techniques. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 4, 25–30.

- Yang, S.; Hou, M.; Li, S. Three-Dimensional Point Cloud Semantic Segmentation for Cultural Heritage: A Comprehensive Review. Remote Sens. 2023, 15, 548.

- Luo, L.; Wang, X.; Guo, H.; Lasaponara, R.; Zong, X.; Masini, N.; Wang, G.; Shi, P.; Khatteli, H.; Chen, F.; et al. Airborne and Spaceborne Remote Sensing for Archaeological and Cultural Heritage Applications: A Review of the Century (1907–2017). Remote Sens. Environ. 2019, 232, 111280.

- Jensen, J.R. Introductory Digital Image Processing: A Remote Sensing Perspective, 2nd ed.; Prentice Hall, Inc.: Upper Saddle River, NJ, USA, 1996; ISBN 0132058405.

- Abate, N.; Frisetti, A.; Marazzi, F.; Masini, N.; Lasaponara, R. Multitemporal–Multispectral UAS Surveys for Archaeological Research: The Case Study of San Vincenzo Al Volturno (Molise, Italy). Remote Sens. 2021, 13, 2719.

- Santoro, V.; Patrucco, G.; Lingua, A.; Spanò, A. Multispectral UAV Data Enhancing the Knowledge of Landscape Heritage. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2023, 48, 1419–1426.

- Martino, A.; Gerla, F.; Balletti, C. Multi-Scale and Multi-Sensor Approaches for the Protection of Cultural Natural Heritage: The Island of Santo Spirito in Venice. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci.—ISPRS Arch. 2023, 48, 1027–1034.

- Wieser, M.; Hollaus, M.; Mandlburger, G.; Glira, P.; Pfeifer, N. ULS LiDAR Supported Analyses of Laser Beam Penetration from Different ALS Systems into Vegetation. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 3, 233–239.

- Masini, N.; Coluzzi, R.; Lasaponara, R. On the Airborne Lidar Contribution in Archaeology: From Site Identification to Landscape Investigation. In Laser Scanning, Theory and Applications; IntechOpen: London, UK, 2011.

- Golden, C.; Scherer, A.K.; Schroder, W.; Murtha, T.; Morell-Hart, S.; Fernandez Diaz, J.C.; Del Pilar Jiménez Álvarez, S.; Firpi, O.A.; Agostini, M.; Bazarsky, A.; et al. Airborne Lidar Survey, Density-Based Clustering, and Ancient Maya Settlement in the Upper Usumacinta River Region of Mexico and Guatemala. Remote Sens. 2021, 13, 4109.

- Kalacska, M.; Arroyo-Mora, J.P.; Lucanus, O. Comparing UAS LiDAR and Structure-from-Motion Photogrammetry for Peatland Mapping and Virtual Reality (VR) Visualization. Drones 2021, 5, 36.

- Mazzacca, G.; Grilli, E.; Cirigliano, G.P.; Remondino, F.; Campana, S. Seeing among Foliage with LiDaR and Machine Learning: Towards a Transferable Archaeological Pipeline. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, 46, 365–372.

- Adedapo, S.M.; Zurqani, H.A. Evaluating the Performance of Various Interpolation Techniques on Digital Elevation Models in Highly Dense Forest Vegetation Environment. Ecol. Inform. 2024, 81, 102646.

- Albrecht, C.M.; Fisher, C.; Freitag, M.; Hamann, H.F.; Pankanti, S.; Pezzutti, F.; Rossi, F. Learning and Recognizing Archeological Features from LiDAR Data. In Proceedings of the 2019 IEEE International Conference on Big Data, Big Data 2019, Los Angeles, CA, USA, 9–12 December 2019; pp. 5630–5636.

- Mallet, C.; Bretar, F.; Roux, M.; Soergel, U.; Heipke, C. Relevance Assessment of Full-Waveform Lidar Data for Urban Area Classification. ISPRS J. Photogramm. Remote Sens. 2011, 66, S71–S84.

- Mancini, F.; Dubbini, M.; Gattelli, M.; Stecchi, F.; Fabbri, S.; Gabbianelli, G. Using Unmanned Aerial Vehicles (UAV) for High-Resolution Reconstruction of Topography: The Structure from Motion Approach on Coastal Environments. Remote Sens. 2013, 5, 6880–6898.

- Adamopoulos, E.; Rinaudo, F. Close-Range Sensing and Data Fusion for Built Heritage Inspection and Monitoring—A Review. Remote Sens. 2021, 13, 3936.

- Agapiou, A.; Lysandrou, V.; Hadjimitsis, D.G. Optical Remote Sensing Potentials for Looting Detection. Geosciences 2017, 7, 98.

- Maxwell, A.E.; Warner, T.A.; Fang, F. Implementation of Machine-Learning Classification in Remote Sensing: An Applied Review. Int. J. Remote Sens. 2018, 39, 2784–2817.

- Matrone, F.; Grilli, E.; Martini, M.; Paolanti, M.; Pierdicca, R.; Remondino, F. Comparing Machine and Deep Learning Methods for Large 3D Heritage Semantic Segmentation. ISPRS Int. J. Geo-Inf. 2020, 9, 535.

- Poux, F.; Billen, R. Voxel-Based 3D Point Cloud Semantic Segmentation: Unsupervised Geometric and Relationship Featuring vs Deep Learning Methods. ISPRS Int. J. Geo-Inf. 2019, 8, 213.

- Guo, Y.; Wang, H.; Hu, Q.; Liu, H.; Liu, L.; Bennamoun, M. Deep Learning for 3D Point Clouds: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 4338–4364.

- Laupheimer, D.; Haala, N. Multi-modal semantic mesh segmentation in urban scenes. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, 5, 267–274.

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.Y.; et al. Segment Anything. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 3992–4003.

- Xiong, X.; Adan, A.; Akinci, B.; Huber, D. Automatic Creation of Semantically Rich 3D Building Models from Laser Scanner Data. Autom. Constr. 2013, 31, 325–337.

- Li, Y.; Ma, L.; Zhong, Z.; Liu, F.; Chapman, M.A.; Cao, D.; Li, J. Deep Learning for LiDAR Point Clouds in Autonomous Driving: A Review. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 3412–3432.

- Geiger, A.; Lenz, P.; Urtasun, R. Are We Ready for Autonomous Driving? The KITTI Vision Benchmark Suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

- Patil, A.; Malla, S.; Gang, H.; Chen, Y.T. The H3D Dataset for Full-Surround 3D Multi-Object Detection and Tracking in Crowded Urban Scenes. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 9552–9557.

- Hahner, M.; Dai, D.; Liniger, A.; Van Gool, L. Quantifying Data Augmentation for LiDAR Based 3D Object Detection. arXiv 2020, arXiv:2004.01643.

- Chen, X.; Ma, H.; Wan, J.; Li, B.; Xia, T. Multi-View 3D Object Detection Network for Autonomous Driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–27 July 2017; pp. 6526–6534.

- Lu, H.; Chen, X.; Zhang, G.; Zhou, Q.; Ma, Y.; Zhao, Y. Scanet: Spatial-Channel Attention Network for 3D Object Detection. In Proceedings of the ICASSP 2019—2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 1992–1996.

- Yan, Y.; Mao, Y.; Li, B. SECOND: Sparsely Embedded Convolutional Detection. Sensors 2018, 18, 3337.

- Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. Pointpillars: Fast Encoders for Object Detection from Point Clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 12689–12697.

- Yang, Z.; Sun, Y.; Liu, S.; Jia, J. 3DSSD: Point-Based 3D Single Stage Object Detector. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11037–11045.

- Yang, Z.; Sun, Y.; Liu, S.; Shen, X.; Jia, J. STD: Sparse-to-Dense 3D Object Detector for Point Cloud. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1951–1960.

- Colucci, E.; Xing, X.; Kokla, M.; Mostafavi, M.A.; Noardo, F.; Spanò, A. Ontology-Based Semantic Conceptualisation of Historical Built Heritage to Generate Parametric Structured Models from Point Clouds. Appl. Sci. 2021, 11, 2813.

- Mallet, C.; Bretar, F. Full-Waveform Topographic Lidar: State-of-the-Art. ISPRS J. Photogramm. Remote Sens. 2009, 64, 1–16.

- Graham, L. LAS 1.4 Specification. Photogramm. Eng. Remote Sens. 2012, 78(2), 93–102.

- Niemeyer, J.; Rottensteiner, F.; Soergel, U. Contextual Classification of Lidar Data and Building Object Detection in Urban Areas. ISPRS J. Photogramm. Remote Sens. 2014, 87, 152–165.

- Matrone, F.; Lingua, A.; Pierdicca, R.; Malinverni, E.S.; Paolanti, M.; Grilli, E.; Remondino, F.; Murtiyoso, A.; Landes, T. A Benchmark for Large-Scale Heritage Point Cloud Semantic Segmentation. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 43, 1419–1426.

- Pingel, T.J.; Clarke, K.C.; McBride, W.A. An Improved Simple Morphological Filter for the Terrain Classification of Airborne LIDAR Data. ISPRS J. Photogramm. Remote Sens. 2013, 77, 21–30.

- Zhang, W.; Qi, J.; Wan, P.; Wang, H.; Xie, D.; Wang, X.; Yan, G. An Easy-to-Use Airborne LiDAR Data Filtering Method Based on Cloth Simulation. Remote Sens. 2016, 8, 501.

- Batar, A.K.; Watanabe, T.; Kumar, A. Assessment of Land-Use/Land-Cover Change and Forest Fragmentation in the Garhwal Himalayan Region of India. Environments 2017, 4, 34.

- Iglhaut, J.; Cabo, C.; Puliti, S.; Piermattei, L.; O’Connor, J.; Rosette, J. Structure from Motion Photogrammetry in Forestry: A Review. Curr. For. Rep. 2019, 5, 155–168.

- Candiago, S.; Remondino, F.; De Giglio, M.; Dubbini, M.; Gattelli, M. Evaluating Multispectral Images and Vegetation Indices for Precision Farming Applications from UAV Images. Remote Sens. 2015, 7, 4026–4047.

- Picollo, M.; Cucci, C.; Casini, A.; Stefani, L. Hyper-Spectral Imaging Technique in the Cultural Heritage Field: New Possible Scenarios. Sensors 2020, 20, 2843.

- Drusch, M.; Del Bello, U.; Carlier, S.; Colin, O.; Fernandez, V.; Gascon, F.; Hoersch, B.; Isola, C.; Laberinti, P.; Martimort, P.; et al. Sentinel-2: ESA’s Optical High-Resolution Mission for GMES Operational Services. Remote Sens. Environ. 2012, 120, 25–36.

- Roy, D.P.; Li, J.; Zhang, H.K.; Yan, L. Best Practices for the Reprojection and Resampling of Sentinel-2 Multi Spectral Instrument Level 1C Data. Remote Sens. Lett. 2016, 7, 1023–1032.

- McFeeters, S.K. The Use of the Normalized Difference Water Index (NDWI) in the Delineation of Open Water Features. Int. J. Remote Sens. 1996, 17, 1425–1432.

- Hu, Q.; Yang, B.; Xie, L.; Rosa, S.; Guo, Y.; Wang, Z.; Trigoni, N.; Markham, A. RandLA-Net: Efficient Semantic Segmentation of Large-Scale Point Clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11105–11114.

- Wu, Y.; Wang, Y.; Zhang, S.; Ogai, H. Deep 3D Object Detection Networks Using LiDAR Data: A Review. IEEE Sens. J. 2021, 21, 1152–1171.

- Huang, X.; Wang, P.; Cheng, X.; Zhou, D.; Geng, Q.; Yang, R. The ApolloScape Open Dataset for Autonomous Driving and Its Application. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 42, 2702–2719.

- Sun, P.; Kretzschmar, H.; Dotiwalla, X.; Chouard, A.; Patnaik, V.; Tsui, P.; Guo, J.; Zhou, Y.; Chai, Y.; Caine, B.; et al. Scalability in Perception for Autonomous Driving: Waymo Open Dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 2443–2451.

- Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. NuScenes: A Multimodal Dataset for Autonomous Driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11618–11628.

- Good, I.J. Rational Decisions. J. R. Stat. Soc. Ser. B 1952, 14, 107–114.

- Sardinia Geoportal—Autonomous Region of Sardinia. Available online: https://www.sardegnageoportale.it/index.html (accessed on 30 July 2024).

- Collins, C.B.; Beck, J.M.; Bridges, S.M.; Rushing, J.A.; Graves, S.J. Deep Learning for Multisensor Image Resolution Enhancement. In Proceedings of the 1st Workshop on GeoAI: AI and Deep Learning for Geographic Knowledge Discovery, GeoAI 2017, Los Angeles, CA, USA, 7–10 November 2017; pp. 37–44.

- Zhu, Q.; Fan, L.; Weng, N. Advancements in Point Cloud Data Augmentation for Deep Learning: A Survey. Pattern Recognit. 2024, 153, 110532.

| Survey | Sensor | LPRR [kHz] | Target Echoes | Overlap | Vertical Accuracy [m] | Ground Speed [kn] | AGL [m] | Average Density [pts/m²] |
|---|---|---|---|---|---|---|---|---|
| 1 * | ALTM Gemini | 125 | 4 | 30% | 25 | 140 | 1400 | 2 |
| 2 * | Riegl LMS-Q560 | 240 | unlimited ** | 60% | 10 | 110 | 500 | 10 |

| Class Value | Class Name | Area 1 Objects | Area 2 Objects | Description |
|---|---|---|---|---|
| 0 | Not classified or in use | 28 of 62 (45%) | 28 of 113 (25%) | This class contains all the points not assigned to the other classes. |
| 1 | Tower | 2 of 62 (3%) | 6 of 113 (5%) | This class includes all kinds of objects related to military observation towers and outposts. |
| 2 | Walls | - | 1 of 113 (1%) | This class covers objects pertaining to fortification walls and bastions. |
| 3 | Bunker | 14 of 62 (23%) | 66 of 113 (58%) | This class contains objects related to pillbox-fortified structures, understood as a special type of camouflaged concrete guard post. |
| 4 | Batteries | 15 of 62 (24%) | 12 of 113 (11%) | This class contains objects related to military batteries, whether anti-aircraft or anti-ship. |
| 5 | Fortress | 3 of 62 (5%) | - | This class contains objects pertaining to fortresses and strongholds. |

| Class Value | Class Name | Description |
|---|---|---|
| 0 | Unclassified | This class contains all the points remaining from the other classes. It particularly refers to low vegetation (e.g., bushes), cars, etc. |
| 2 | Ground | This class contains all the points pertaining to the ground surface. |
| 5 | High vegetation | This class contains objects recognized as trees. |
| 6 | Buildings | This class contains points related to human-made artifacts, such as buildings, ruins, etc. |
| 9 | Water | This class contains water surface points. |
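
The class values in the table above are not contiguous (0, 2, 5, 6, 9); they match the standard ASPRS LAS point classification codes for these categories. Many training frameworks expect labels in the range 0..N-1, so a remapping step is commonly needed before training. The sketch below is one possible way to do this, not the procedure used in this work: the names `CODE_TO_INDEX`, `encode`, and `decode` are hypothetical, and sending unknown codes to class 0 is an assumption based on the description of the Unclassified class.

```python
# Class codes and names as listed in the table above.
LAS_CLASSES = {
    0: "Unclassified",
    2: "Ground",
    5: "High vegetation",
    6: "Buildings",
    9: "Water",
}

# Hypothetical contiguous training indices: 0->0, 2->1, 5->2, 6->3, 9->4.
CODE_TO_INDEX = {code: i for i, code in enumerate(sorted(LAS_CLASSES))}
INDEX_TO_CODE = {i: code for code, i in CODE_TO_INDEX.items()}


def encode(codes):
    """Map class codes to training indices.

    Assumption: any code outside the table is treated as Unclassified (code 0).
    """
    fallback = CODE_TO_INDEX[0]
    return [CODE_TO_INDEX.get(code, fallback) for code in codes]


def decode(indices):
    """Map predicted training indices back to the original class codes."""
    return [INDEX_TO_CODE[i] for i in indices]


labels = [2, 6, 9, 0, 5, 3]  # 3 is not in the table
print(encode(labels))
print(decode([1, 3, 4]))
```

Decoding predictions back to the original codes keeps the classified output consistent with the class values used in the source point clouds.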

| Dataset | Data Percentage | Class | Class Distribution |
|---|---|---|---|
| Training | 78% | Unclassified | 5% |
| | | Ground | 58% |
| | | High Vegetation | 10% |
| | | Building | 26% |
| | | Water | 2% |
| Validation | 22% | Unclassified | 6% |
| | | Ground | 67% |
| | | High Vegetation | 8% |
| | | Building | 18% |
| | | Water | 1% |

| Area | Unclassified | Ground | High Vegetation | Building | Water |
|---|---|---|---|---|---|
| A | 2% | 52% | 2% | 38% | 6% |
| B | 4% | 43% | 9% | 44% | - |
| C | 1% | 19% | 1% | 80% | - |
| D | 3% | 41% | 36% | 20% | - |

| Dataset | Reference | Year | Data | Type | Object Classes | Annotated 3D Boxes |
|---|---|---|---|---|---|---|
| KITTI | [42] | 2012 * | RGB + LiDAR | Autonomous driving | 8 | 200K |
| ApolloScape | [67] | 2018 | RGB + LiDAR | Autonomous driving | 6 | 70K |
| H3D | [43] | 2019 | RGB + LiDAR | Autonomous driving | 8 | 1.1M |
| Waymo Open | [68] | 2020 | RGB + LiDAR | Autonomous driving | 4 | 12M |
| nuScenes | [69] | 2020 | RGB + LiDAR | Autonomous driving | 23 | 1.4M |

| Class | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| 0—Unclassified | 0.95 | 0.78 | 0.29 | 0.42 |
| 2—Ground | 0.92 | 0.93 | 0.94 | 0.94 |
| 5—High Vegetation | 0.97 | 0.81 | 0.90 | 0.85 |
| 6—Building | 0.94 | 0.78 | 0.89 | 0.84 |
| 9—Water | 0.99 | 0.71 | 0.64 | 0.67 |
| Macro Average | 0.95 | 0.80 | 0.73 | 0.74 |
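
As a reading aid for the metrics table above, the F1-score is the harmonic mean of precision and recall, and a macro average is the unweighted mean over classes. The sketch below recomputes both from the reported per-class values; the variable and function names are illustrative, and small differences from the table are expected because the inputs are already rounded to two decimals.

```python
# (precision, recall, reported F1) per class, copied from the table above.
REPORTED = {
    "Unclassified":    (0.78, 0.29, 0.42),
    "Ground":          (0.93, 0.94, 0.94),
    "High Vegetation": (0.81, 0.90, 0.85),
    "Building":        (0.78, 0.89, 0.84),
    "Water":           (0.71, 0.64, 0.67),
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


def mean(values):
    values = list(values)
    return sum(values) / len(values)


# Per-class F1 recomputed from the rounded precision and recall.
recomputed_f1 = {name: f1_score(p, r) for name, (p, r, _) in REPORTED.items()}

# Macro averages: unweighted means over the five classes.
macro_precision = mean(p for p, _, _ in REPORTED.values())
macro_recall = mean(r for _, r, _ in REPORTED.values())
macro_f1 = mean(f for _, _, f in REPORTED.values())

for name, value in recomputed_f1.items():
    print(f"{name}: recomputed F1 = {value:.3f}, reported = {REPORTED[name][2]:.2f}")
print(f"macro precision = {macro_precision:.2f}, macro recall = {macro_recall:.2f}, "
      f"macro F1 = {macro_f1:.2f}")
```

The reported macro F1 is consistent with averaging the per-class F1 values rather than taking the harmonic mean of the macro precision and macro recall. The low recall of the Unclassified class is what pulls its F1-score down despite a precision comparable to that of the Building class.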

| Area | Class | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|
| A | 0—Unclassified | 0.99 | 0.71 | 0.58 | 0.64 |
| | 2—Ground | 0.84 | 0.80 | 0.93 | 0.86 |
| | 5—High Vegetation | 0.98 | 0.57 | 0.66 | 0.61 |
| | 6—Building | 0.89 | 0.89 | 0.81 | 0.85 |
| | 9—Water | 0.95 | 0.97 | 0.21 | 0.35 |
| | Macro Average | 0.93 | 0.79 | 0.64 | 0.66 |
| B | 0—Unclassified | 0.97 | 0.78 | 0.53 | 0.64 |
| | 2—Ground | 0.94 | 0.87 | 1.00 | 0.93 |
| | 5—High Vegetation | 0.97 | 0.86 | 0.80 | 0.83 |
| | 6—Building | 0.89 | 0.92 | 0.83 | 0.87 |
| | Macro Average | 0.96 | 0.86 | 0.79 | 0.82 |
| C | 0—Unclassified | 0.99 | 0.98 | 0.30 | 0.46 |
| | 2—Ground | 0.99 | 0.94 | 1.00 | 0.97 |
| | 5—High Vegetation | 1.00 | 0.87 | 0.92 | 0.89 |
| | 6—Building | 0.98 | 0.99 | 0.99 | 0.99 |
| | Macro Average | 0.99 | 0.95 | 0.80 | 0.83 |
| D | 0—Unclassified | 0.99 | 0.97 | 0.58 | 0.73 |
| | 2—Ground | 0.96 | 0.91 | 1.00 | 0.96 |
| | 5—High Vegetation | 0.99 | 0.99 | 0.98 | 0.99 |
| | 6—Building | 0.96 | 0.95 | 0.83 | 0.89 |
| | Macro Average | 0.97 | 0.96 | 0.85 | 0.89 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cappellazzo, M.; Patrucco, G.; Spanò, A. ML Approaches for the Study of Significant Heritage Contexts: An Application on Coastal Landscapes in Sardinia. Heritage 2024, 7, 5521-5546. https://doi.org/10.3390/heritage7100261
