Novel Paradigms in the Cultural Heritage Digitization with Self and Custom-Built Equipment

Abstract

1. Introduction

2. Materials and Methods

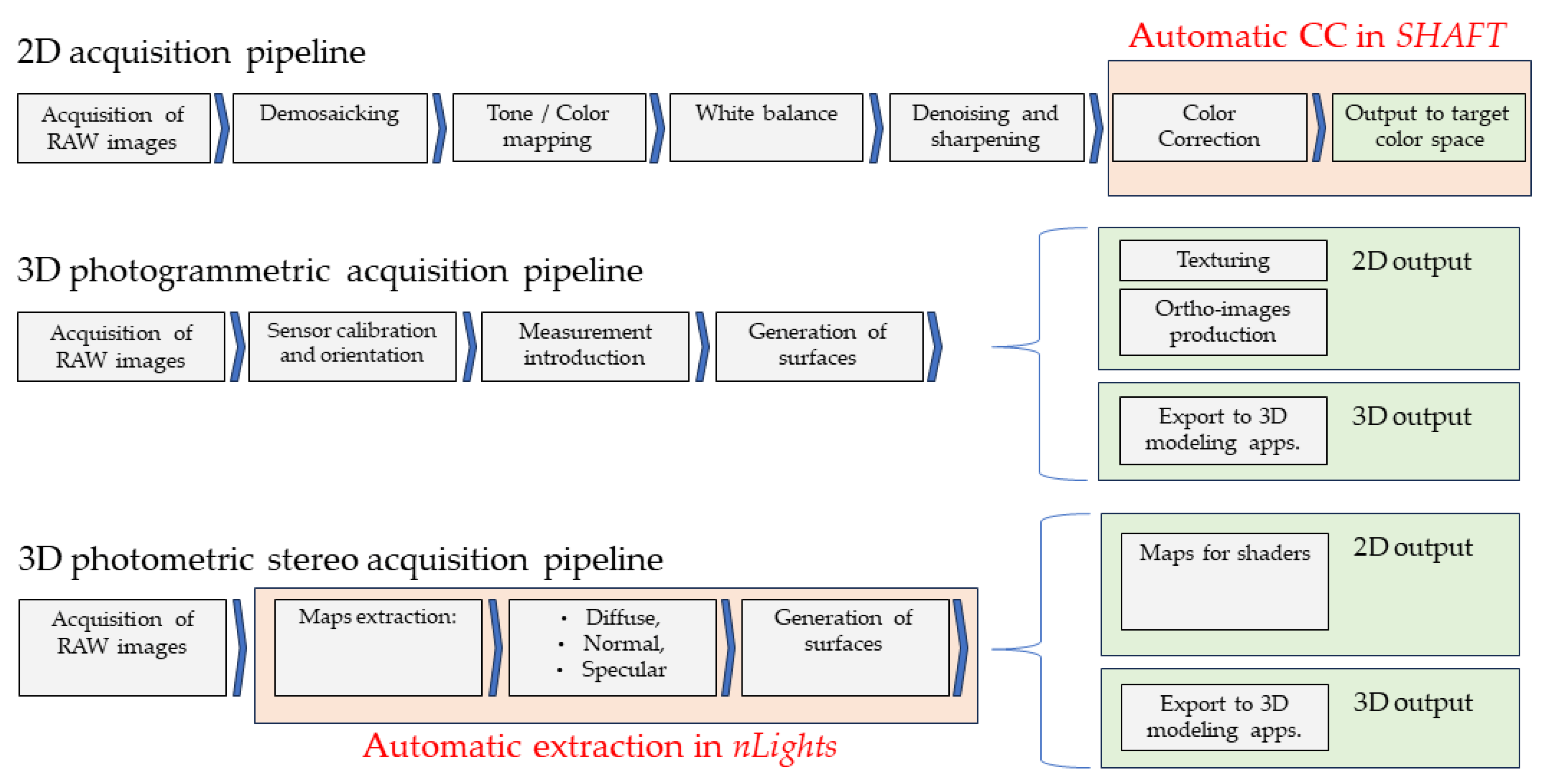

2.1. Pipelines

- Sensor resolution and color calibration,

- Image acquisition,

- Analog-to-digital conversion,

- Demosaicking,

- White balance,

- Color correction,

- Denoising and sharpening,

- 2D output to target color space and gamma encoding.

- Sensor radiometric calibration,

- Image acquisition,

- Analog-to-digital conversion,

- Demosaicking,

- White balance,

- Color correction,

- Denoising and sharpening,

- 2D output to target color space and gamma encoding,

- Sensor calibration and orientation through self-calibration,

- Measurement introduction,

- Surfaces generation,

- 2D output:

  - Texturing,

  - Ortho-images production,

- 3D output:

  - Export of models towards 3D modeling applications.

- Sensor resolution and color calibration,

- Image acquisition,

- Analog-to-digital conversion,

- Demosaicking,

- White balance,

- Color correction,

- Denoising and sharpening,

- 2D output to target color space and gamma encoding,

- Maps extraction:

  - Diffusion map,

  - Normal map,

  - Specular map,

- Generation of mesh surfaces,

- 2D output:

  - Maps for shaders,

- 3D output:

  - Export of models towards 3D applications.
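The white balance and gamma-encoding steps shared by the pipelines above can be illustrated with a minimal sketch. It assumes that the target color space is sRGB and that white balance is derived from a single neutral patch; neither choice is stated in the lists above, and the function names are hypothetical.

```python
def white_balance(rgb, neutral_rgb):
    """Scale linear RGB so that the measured neutral patch becomes gray.

    Assumption: gains are normalized to the green channel of the patch.
    """
    gains = [neutral_rgb[1] / channel for channel in neutral_rgb]
    return [value * gain for value, gain in zip(rgb, gains)]


def srgb_encode(linear):
    """Apply the sRGB transfer function to one linear value in [0, 1]."""
    linear = min(max(linear, 0.0), 1.0)  # clip out-of-range values
    if linear <= 0.0031308:
        return 12.92 * linear
    return 1.055 * linear ** (1.0 / 2.4) - 0.055


def develop_pixel(rgb, neutral_rgb):
    """White-balance a linear RGB pixel, then gamma-encode it."""
    balanced = white_balance(rgb, neutral_rgb)
    return [srgb_encode(value) for value in balanced]


# A pixel with the same values as the neutral patch comes out gray.
print(develop_pixel([0.4, 0.5, 0.6], [0.4, 0.5, 0.6]))
```

Demosaicking, color correction, denoising, and sharpening are left out on purpose: they depend on the sensor and on the calibration target, which this sketch does not model.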

2.2. An Overview of the Companion Software

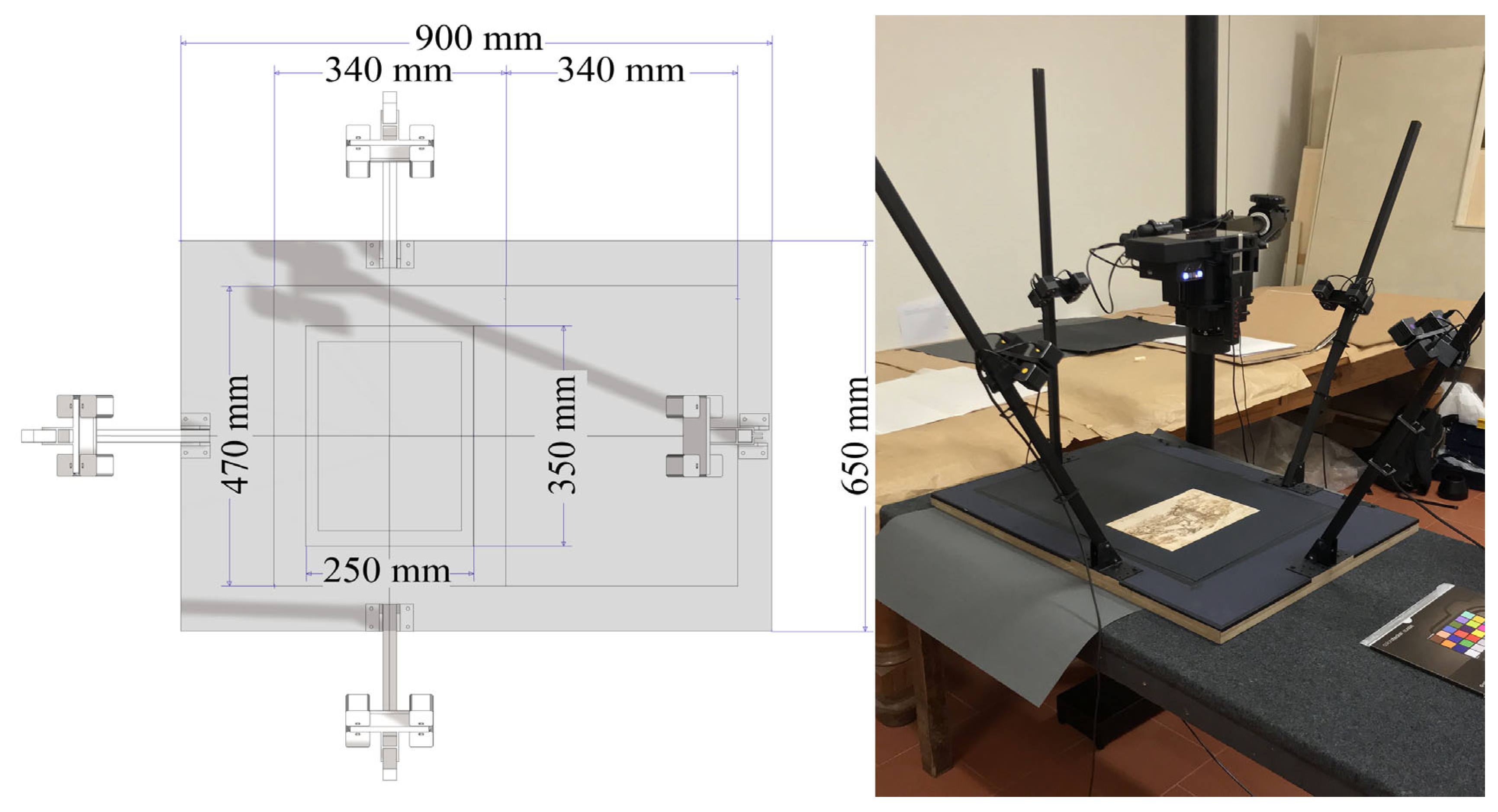

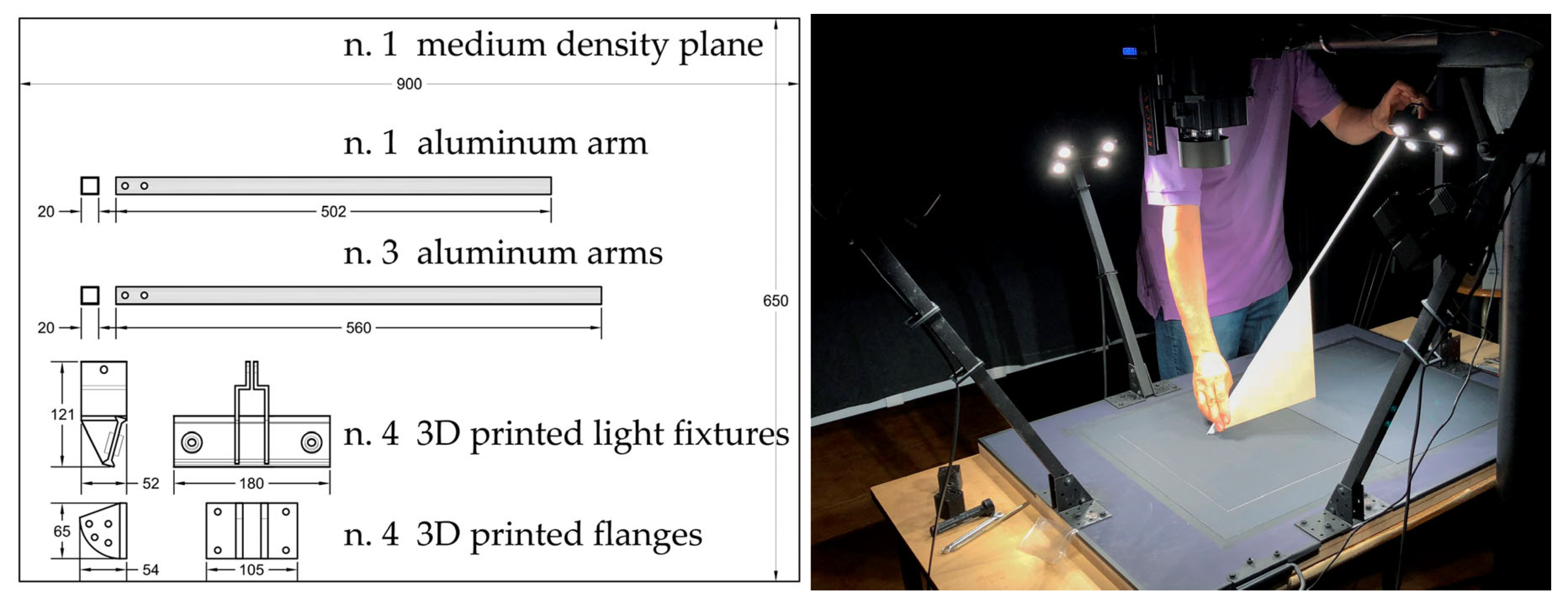

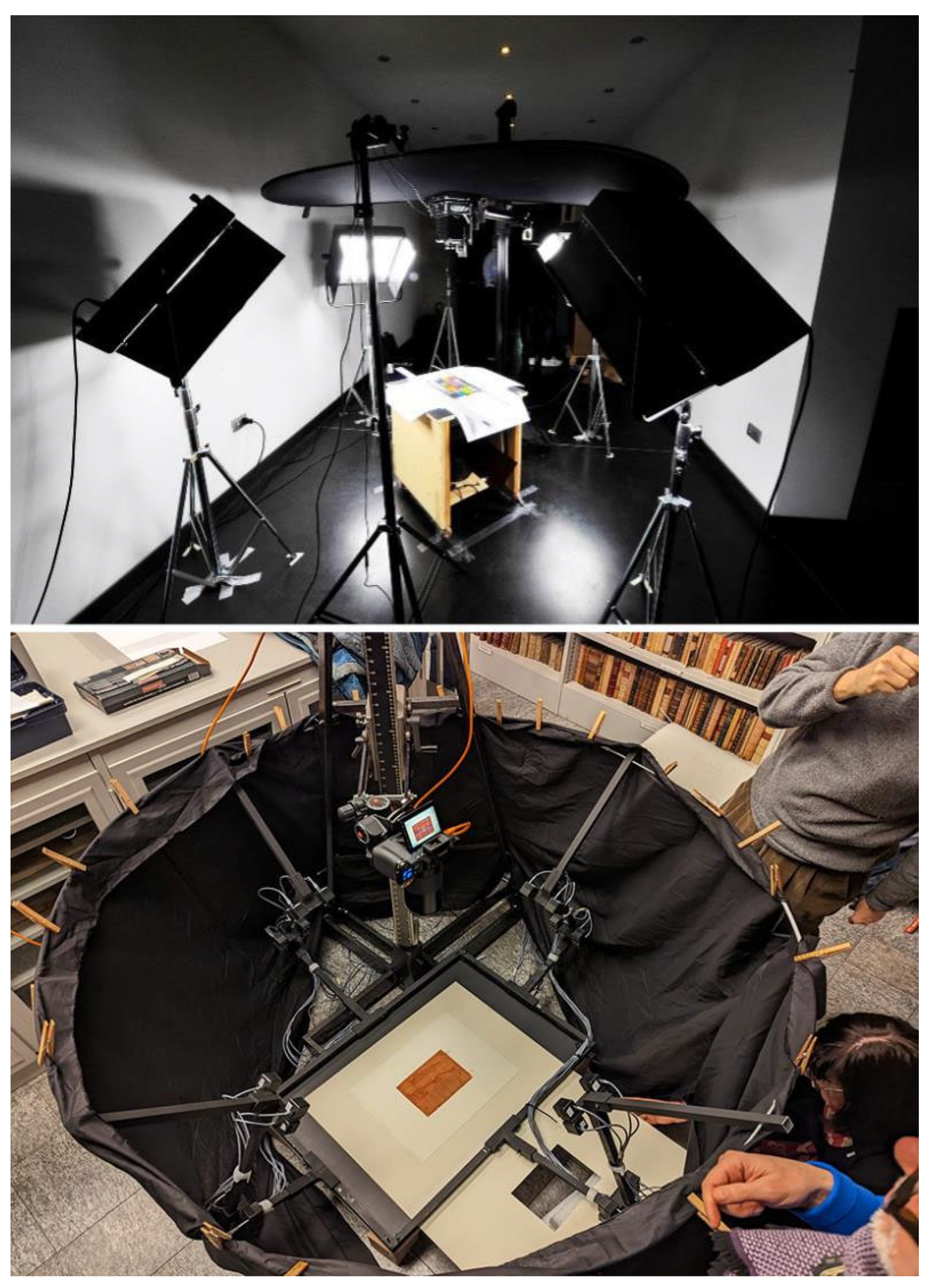

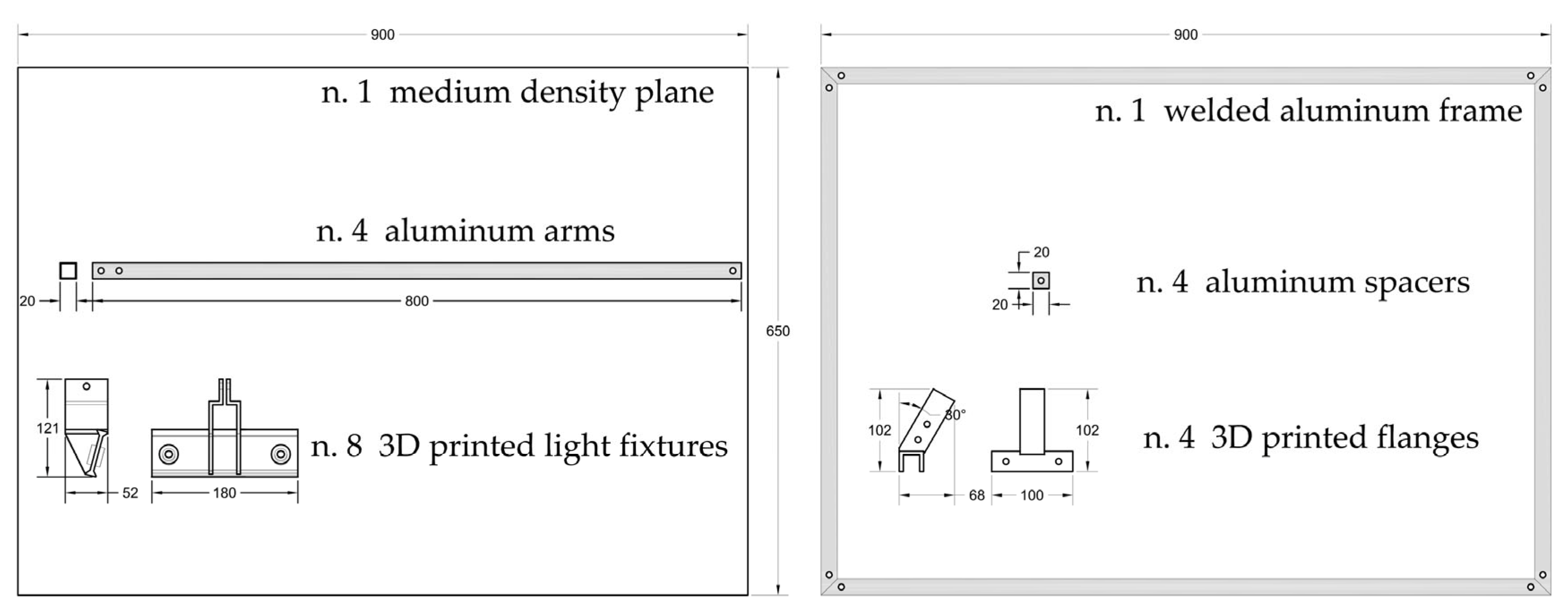

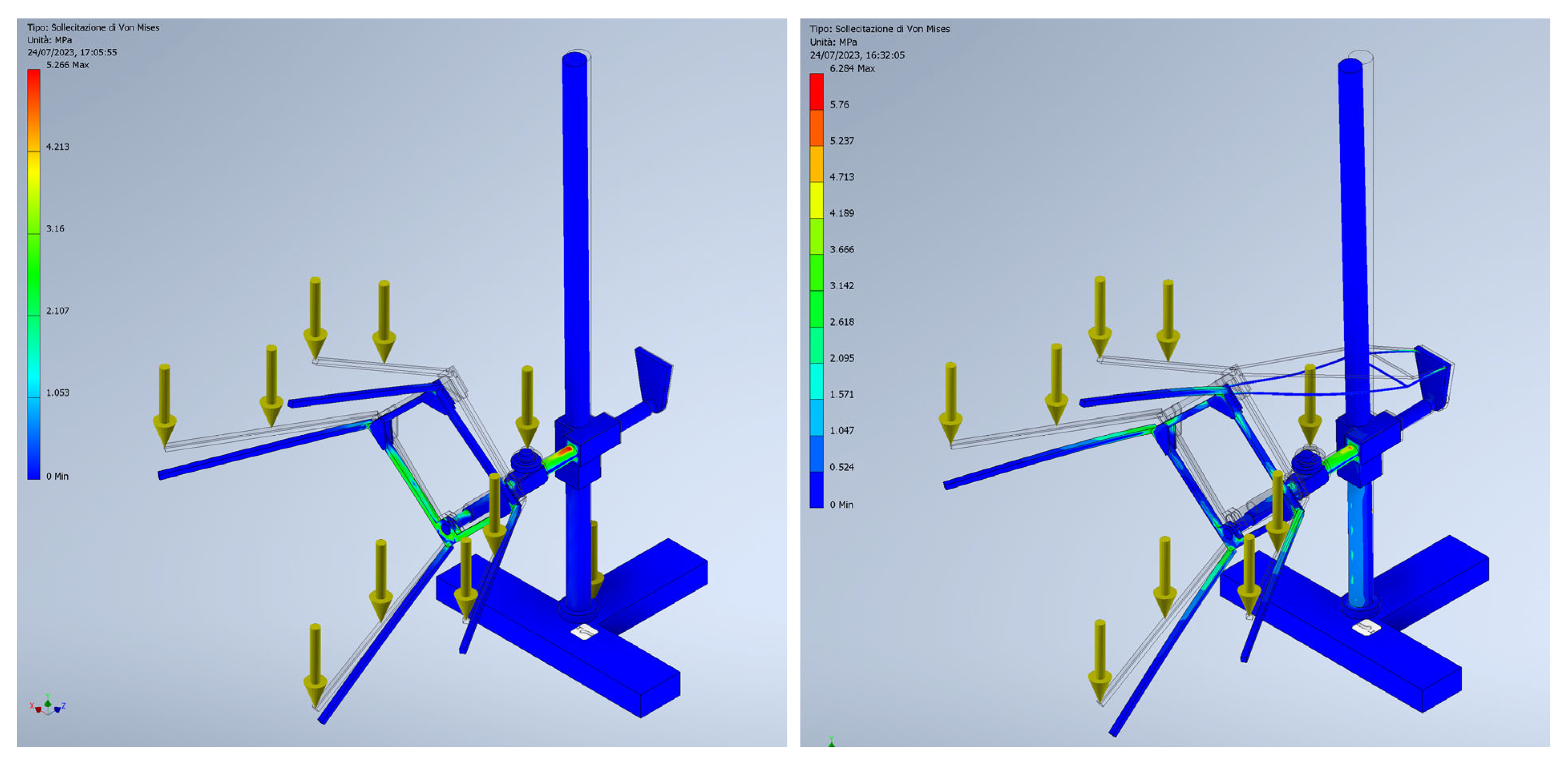

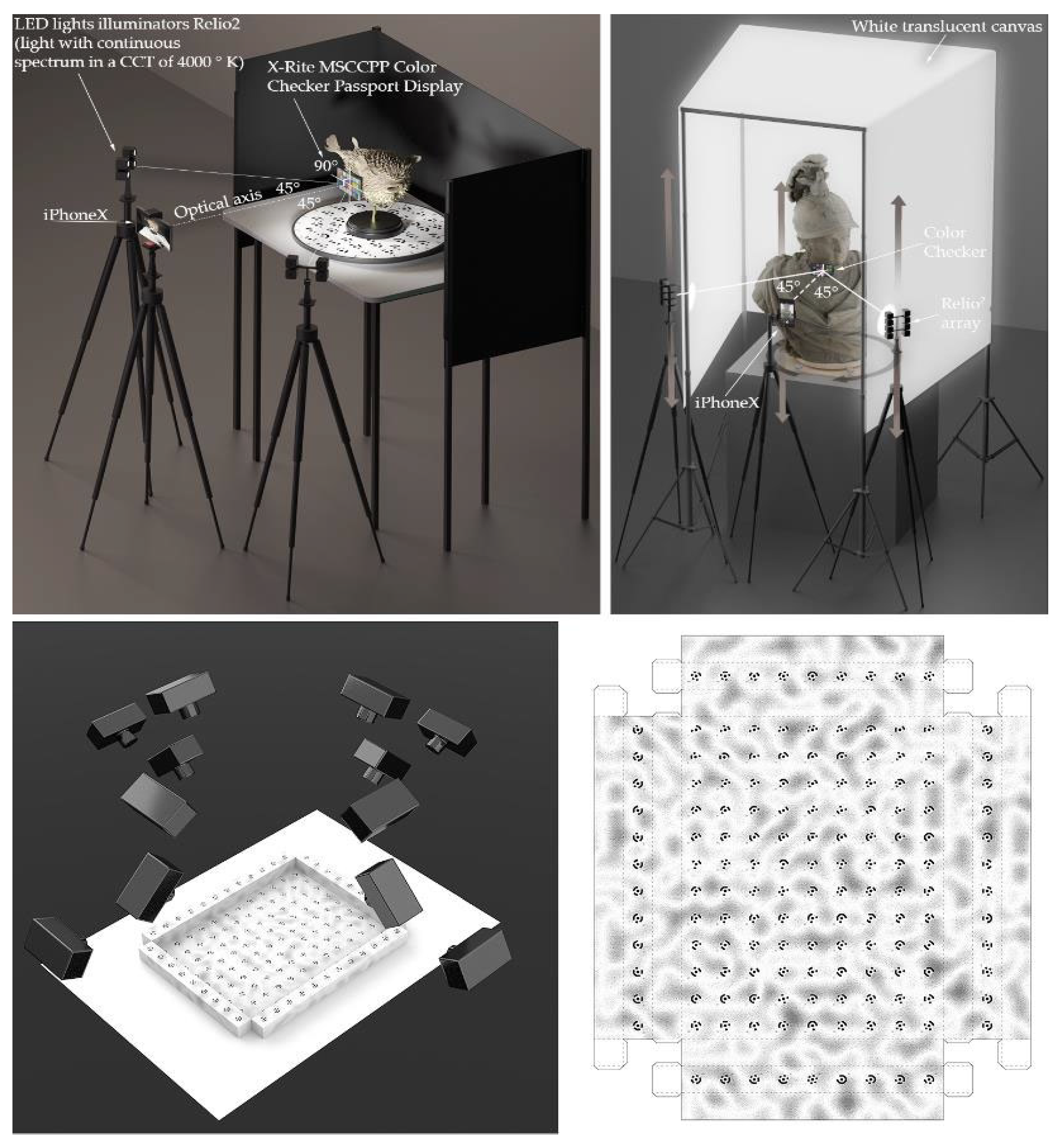

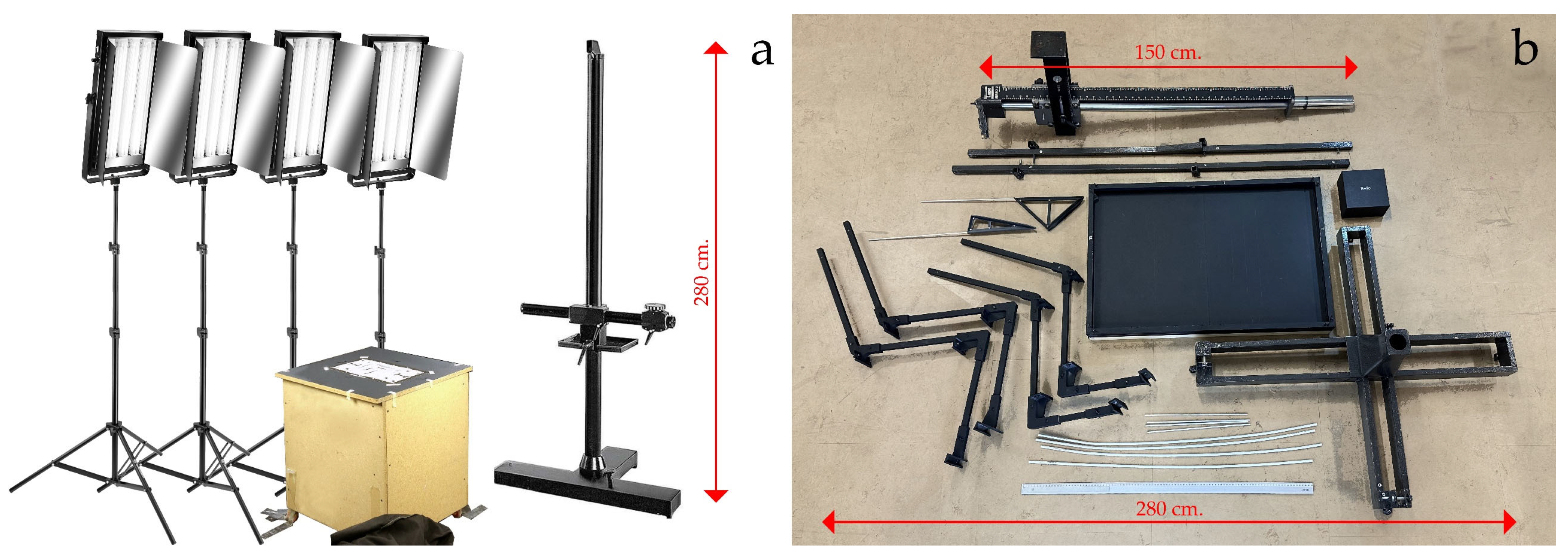

2.3. Developed Equipment Solutions

- Inefficient illuminants, with inconsistent or harmful lighting conditions leading to variations in image quality and accuracy;

- Erratic reflections and stray light coming from outdoors;

- Lack of parallelism between the camera and the surface to be captured: when the sensor plane is not parallel to the object’s surface, image resolution varies across the frame, affecting the clarity and detail of the captured images;

- Significant time required to set up the shooting stage: positioning lighting equipment, light-blocking screens, and cameras can be difficult and time-consuming, increasing the overall time required for capturing images;

- Overly complex tools that require specific expertise or many operators, which may pose challenges for average users;

- Costly and hard-to-find tools and spares: some hardware tools or setups are expensive and not easily accessible, making them challenging to acquire, as, e.g., in the Operation Night Watch project by the Rijksmuseum in Amsterdam [39].

- A set of highly portable lights with known emission and efficiency, easy to transport and quick to set up on the stage;

- A repro stand for artworks to be captured on a horizontal plane, such as ancient drawings;

- A repro stand for artworks to be captured on a vertical plane, such as paintings or frescoes;

- A calibrated roundtable and 3D test-field plate to capture small museum objects.

- A.

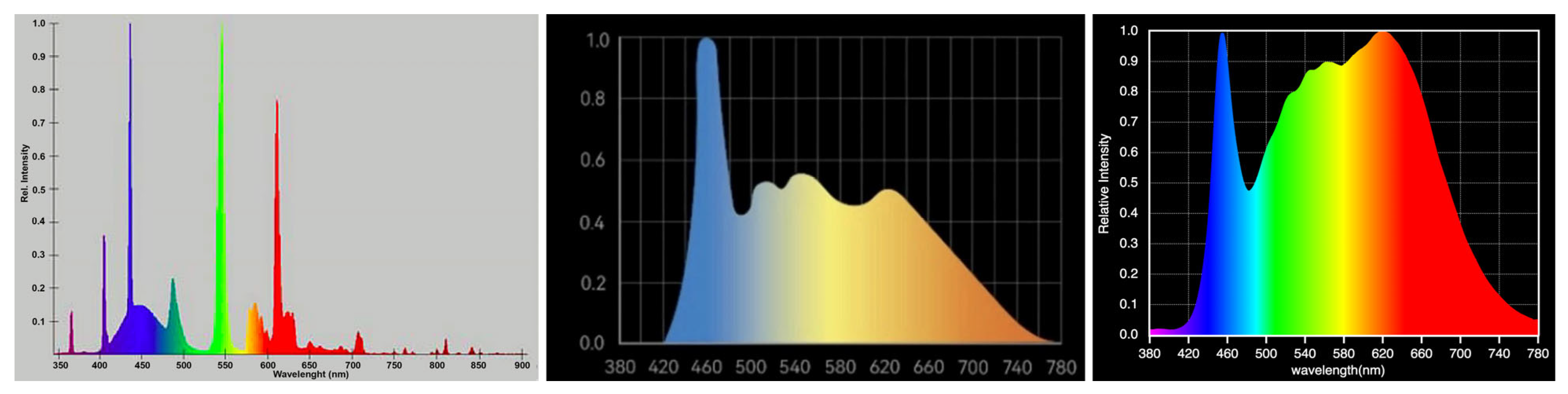

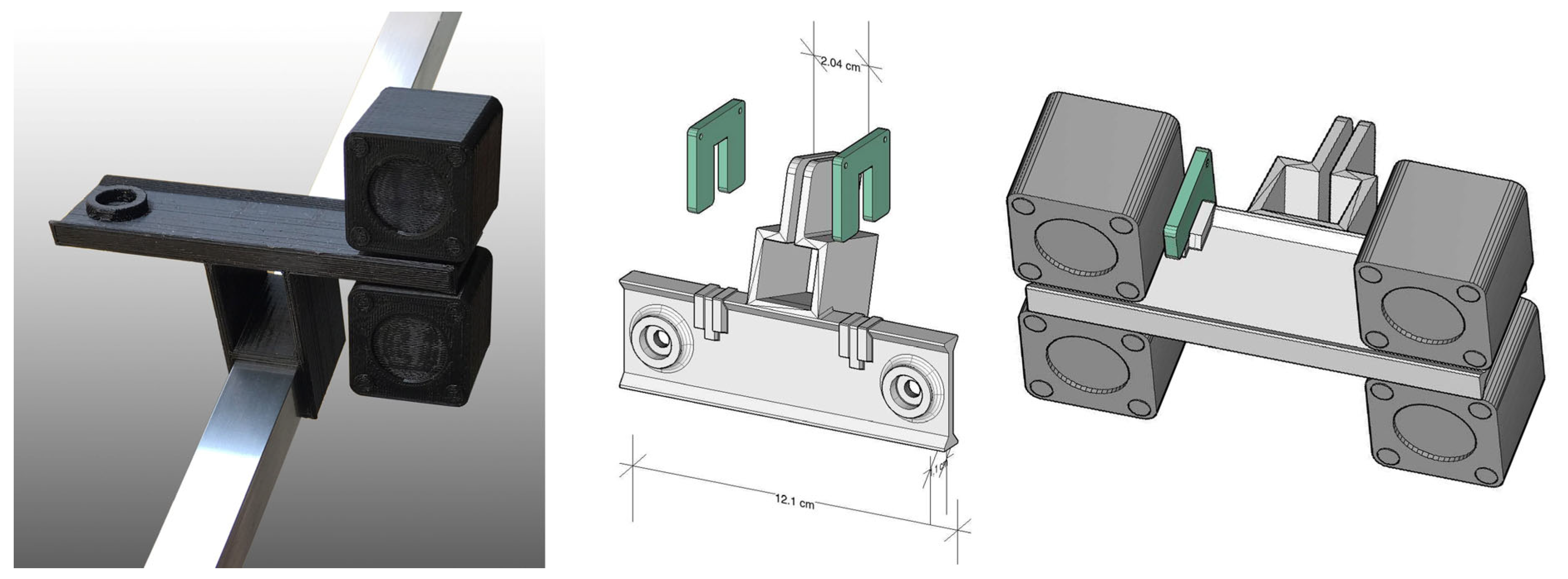

- Portable lights—To avoid the lack of illuminants efficiency and too complex lighting systems to illuminate the scenes, a new lighting system based on a series of single LED lights, characterized by limited dimensions and good portability was chosen. A custom prototype was crafted, including either sixteen or thirty-two Relio2 LED lights thoughtfully distributed on the repro stand, with four or eight lights placed on each side. These lights boast a 4000 K Correlated Color Temperature (CCT), and an illuminance of 40,000 lux at 0.25 m. Their IES (Illuminating Engineering Society) TM-30–15 Color Fidelity Index is Rf = 91 [40]. The illuminators’ Spectral Power Distribution (SPD) shows high chromatic consistency across the entire wavelength spectrum (Figure 2) and excellent color rendition even during the rendering phase. Besides, they do not generate any potentially harmful UV or IR emissions. Finally, they present a setup in which the time in which the lamps warm up to the nominal color temperature and light intensity stabilization is minimized. Relio2 illuminants were positioned and grouped with specifically designed 3D printed supports to place lights at desired directions and inclinations (Figure 3). These supports were crafted in ABS (acrylonitrile-butadiene-styrene) using a XYZprinting Da Vinci Pro 1.0 3D printer and 1.75 mm diameter black matte filament. The choice of ABS as preferred material, rather than other easier-to-print filaments, was driven by the need to prevent the supports from being damaged or deformed by the heat emitted by the illuminators (approximately 60 °C each). This solution is also economically affordable, since it solves the problem of finding costly spare parts in case of damages during the working operations (as they can be reprinted).

- B. Repro stand for artworks to be captured on a horizontal plane: The stand, intended to host ancient drawings or manuscripts during digital acquisition, was designed to have the following features:

- A stable structure to minimize blurring caused by oscillations and vibrations, as well as small lamp movements that could make the lighting non-uniform,

- A wide reproduction area capable of accommodating the open passe-partout containing the drawings to be captured, ensuring its safe handling and planarity,

- The lighting system positioned on all four sides, equidistant from the center of the drawing to guarantee homogeneous illumination for the whole acquisition area,

- No interference between the light sources and the camera,

- Easy portability within the locations where the drawings are usually stored.

- C.

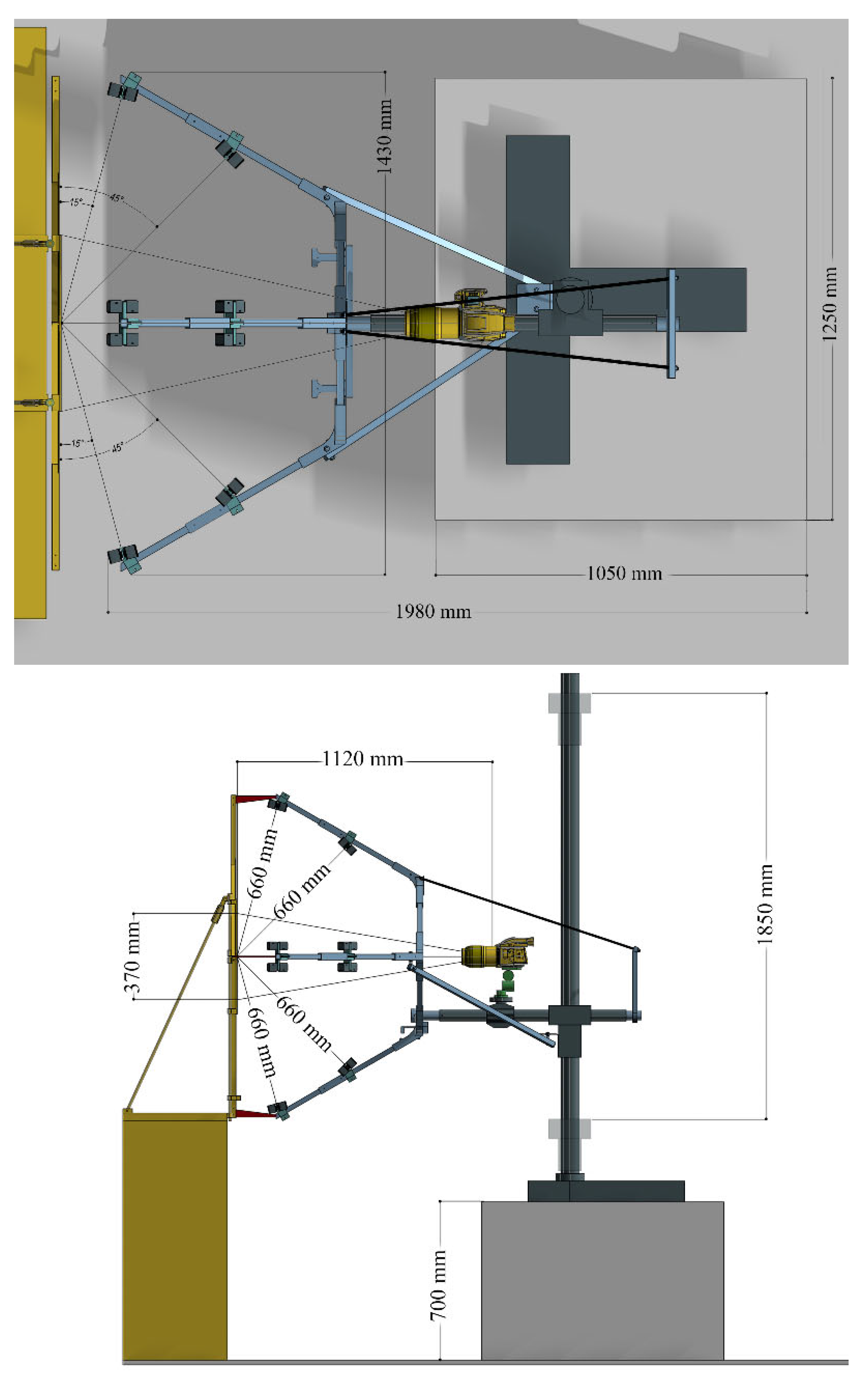

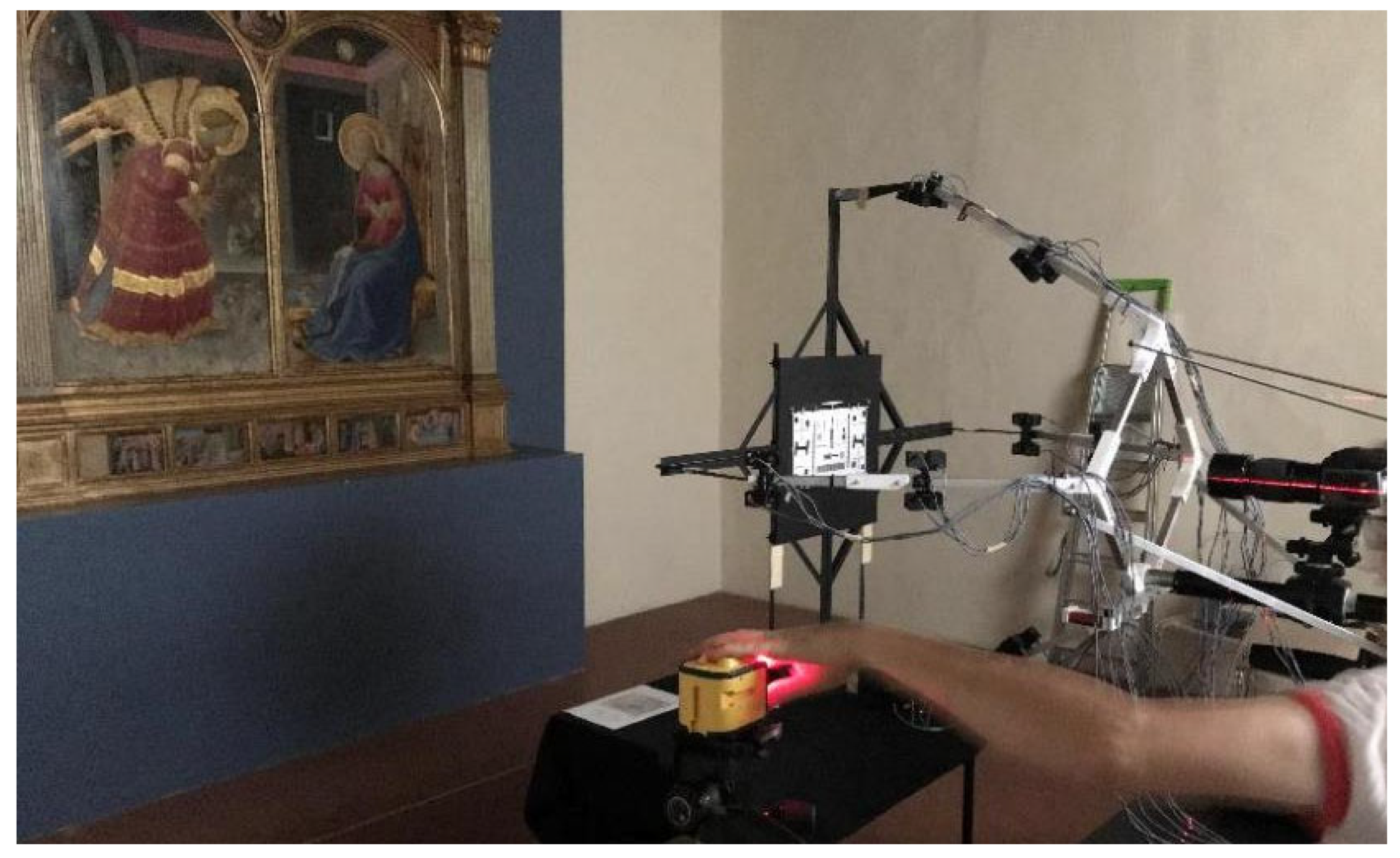

- Repro stand for artworks to be captured on a vertical plane—The stand, was designed to offer the following features:

- A stable structure to minimize blurring caused by oscillations and vibrations, as well as small lamp movements that could make the lighting non-uniform;

- An acquisition system movable both vertically and horizontally: paintings are larger than drawings, and each frame covers only a limited area at the required resolution, so multiple shots must be taken and then mosaicked together;

- Safe handling and parallelism to the vertical plane of the painting to be acquired;

- The lighting system positioned on all four sides, equidistant from the center of the captured area to guarantee homogeneous illumination for the whole acquisition area;

- No interference between the light sources and the camera;

- A system for camera calibration, since calibration panels or color checkers cannot be placed over the painting for safety reasons.

- D. Calibrated roundtable and 3D test-field plate: The developed solution addresses the challenges that stem from overly intricate tools demanding specialized expertise, even for technical procedures such as camera calibration [41]. This stands in contrast to sophisticated approaches such as the one outlined in [14], which features complex setups involving stepper motors and controllers to drive the table’s movement. In place of complex rigs, which are difficult for operators to fabricate, a more user-friendly approach has been adopted: users receive comprehensive usage guidelines and the tools themselves, which they can build into their own acquisition set by folding basic cardboard.

2.4. Acquisition Requirements and Calibration Procedures

- Color: color accuracy, expressed as CIE ΔE00, must fall between 0 and 1.5 [42].

- Resolution: details of at least 0.1 mm must be resolved on the acquired artwork, which means sampling digital information at a 0.05 mm pitch, according to the Nyquist-Shannon sampling theorem: the sampling rate must be at least twice the signal’s bandwidth to effectively avoid aliasing distortion.

- Time: it must be as short as possible, without affecting the quality of the acquisition.

- Costs: they must be low compared to commercial solutions, to improve the economic sustainability of the acquisitions.

- Usability: it must be broad, so that users without specific training can be involved.
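The resolution requirement can be checked with a few lines of arithmetic. The sketch below only restates the Nyquist-Shannon condition quoted above (two samples per smallest detail) and converts the resulting pitch into pixels per inch; the function names are illustrative.

```python
MM_PER_INCH = 25.4


def sampling_pitch_mm(smallest_detail_mm):
    """Largest sampling pitch that still gives two samples per detail."""
    return smallest_detail_mm / 2.0


def required_ppi(smallest_detail_mm):
    """Pixel density on the artwork surface needed to resolve the detail."""
    return MM_PER_INCH / sampling_pitch_mm(smallest_detail_mm)


pitch = sampling_pitch_mm(0.1)
print(f"pitch: {pitch:.2f} mm, density: {required_ppi(0.1):.0f} ppi")
```

For the 0.1 mm requirement this gives a 0.05 mm pitch, that is, roughly 508 ppi on the artwork surface.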

2.5. Camera Geometric Calibration Procedure

- Number of oriented images.

- Bundle adjustment (BA) (re-projection error).

- Number of points collected in the dense point cloud.

- Comparison of the dense point cloud to the ground truth of the object. The photogrammetric models were compared to a reference SLR camera model, using CloudCompare.

- Original drawings by Leonardo da Vinci (mostly dating to the end of the XV century, with dimensions roughly similar to an A4 sheet), hosted and digitized in several locations such as Gallerie dell’Accademia in Venice, Le Gallerie degli Uffizi in Florence, Civico Gabinetto dei Disegni al Castello Sforzesco and Veneranda Biblioteca Ambrosiana, both in Milan.

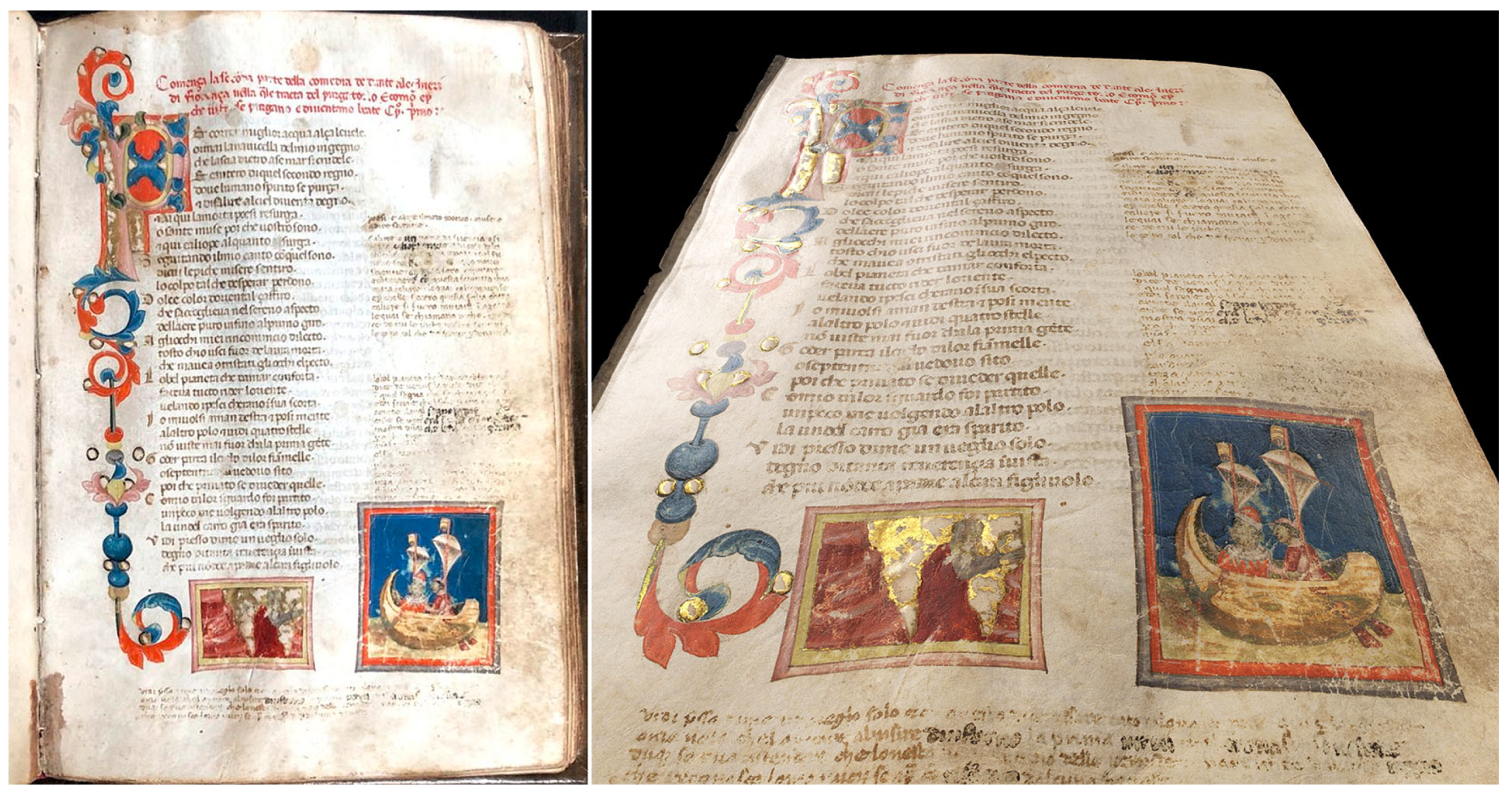

- Manuscript no. 589 (XIV century, 273 mm × 187 mm) titled Dante Alighieri, Commedia con rubriche volgari brevi e glosse dal Lana per le prime due cantiche, hosted and digitized at the Biblioteca Universitaria di Bologna (BUB).

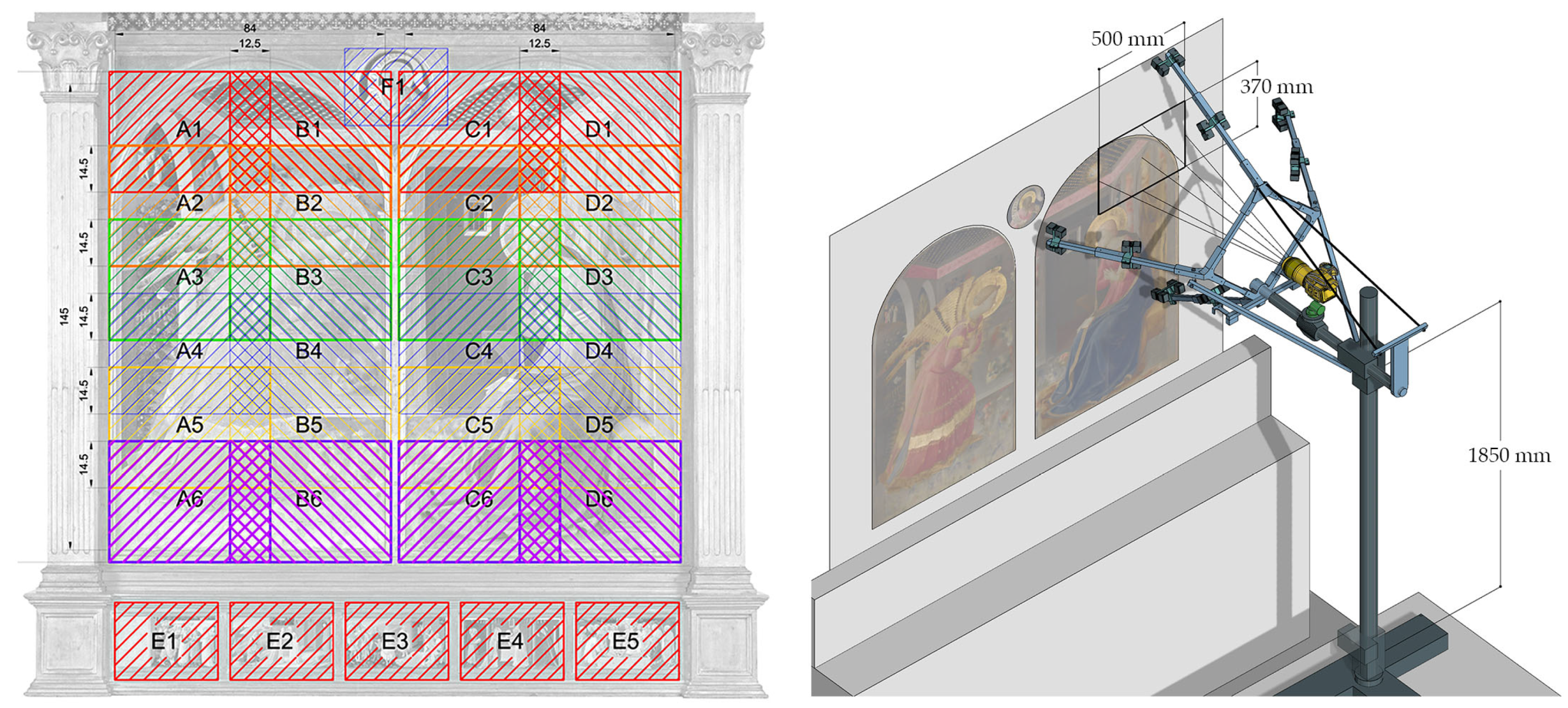

- Annunciation by Beato Angelico (c. 1430-32, 2380 mm × 2340 mm) hosted and digitized at the Museum of the Basilica of Santa Maria delle Grazie in San Giovanni Valdarno.

- An embalmed Porcupinefish (Diodon Antennatus, 350 mm × 190 mm × 250 mm) and a Globe by astronomer Horn d’Arturo (310 mm × 310 mm × 460 mm), both hosted and digitized at the Sistema Museale di Ateneo (SMA) in Bologna.
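The comparison of a dense point cloud against a ground-truth model, listed among the evaluation parameters of this section, can be approximated by a nearest-neighbor cloud-to-cloud distance. The following is a brute-force sketch in plain Python with made-up coordinates. It is not CloudCompare's implementation, and it assumes the two clouds are already registered in the same reference system and units.

```python
import math


def cloud_to_cloud_distances(test_cloud, reference_cloud):
    """Distance from each test point to its nearest reference point.

    Brute force, O(N * M): fine for a demonstration, too slow for the
    million-point clouds produced by dense matching.
    """
    return [
        min(math.dist(point, ref) for ref in reference_cloud)
        for point in test_cloud
    ]


def summarize(distances):
    """Mean, standard deviation, and maximum of the distances."""
    count = len(distances)
    mean = sum(distances) / count
    variance = sum((d - mean) ** 2 for d in distances) / count
    return {"mean": mean, "std": math.sqrt(variance), "max": max(distances)}


# Hypothetical coordinates in millimetres, for illustration only.
reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]
test = [(0.0, 0.0, 0.1), (1.0, 0.0, -0.1), (0.5, 0.5, 0.0)]

distances = cloud_to_cloud_distances(test, reference)
print(summarize(distances))
```

On real data a spatial index (octree or k-d tree) replaces the inner loop, and the mean and standard deviation of the distances serve as the dimensional-quality figures.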

3. Results

- Footprint of the acquisition area and on-field setup time for the acquisition set.

- Color accuracy.

- Quality of the normal maps for the 3D surface mesostructure reconstruction.

- Dimensional quality achieved using general-purpose devices (i.e., smartphone cameras) on 3D CH objects.

- Visual outcomes from the developed pipeline on different types of CH objects.

- Costs.

- Public and scientific successes of the outputs.

3.1. Footprint and On-Field Setup Time

3.2. Color Accuracy

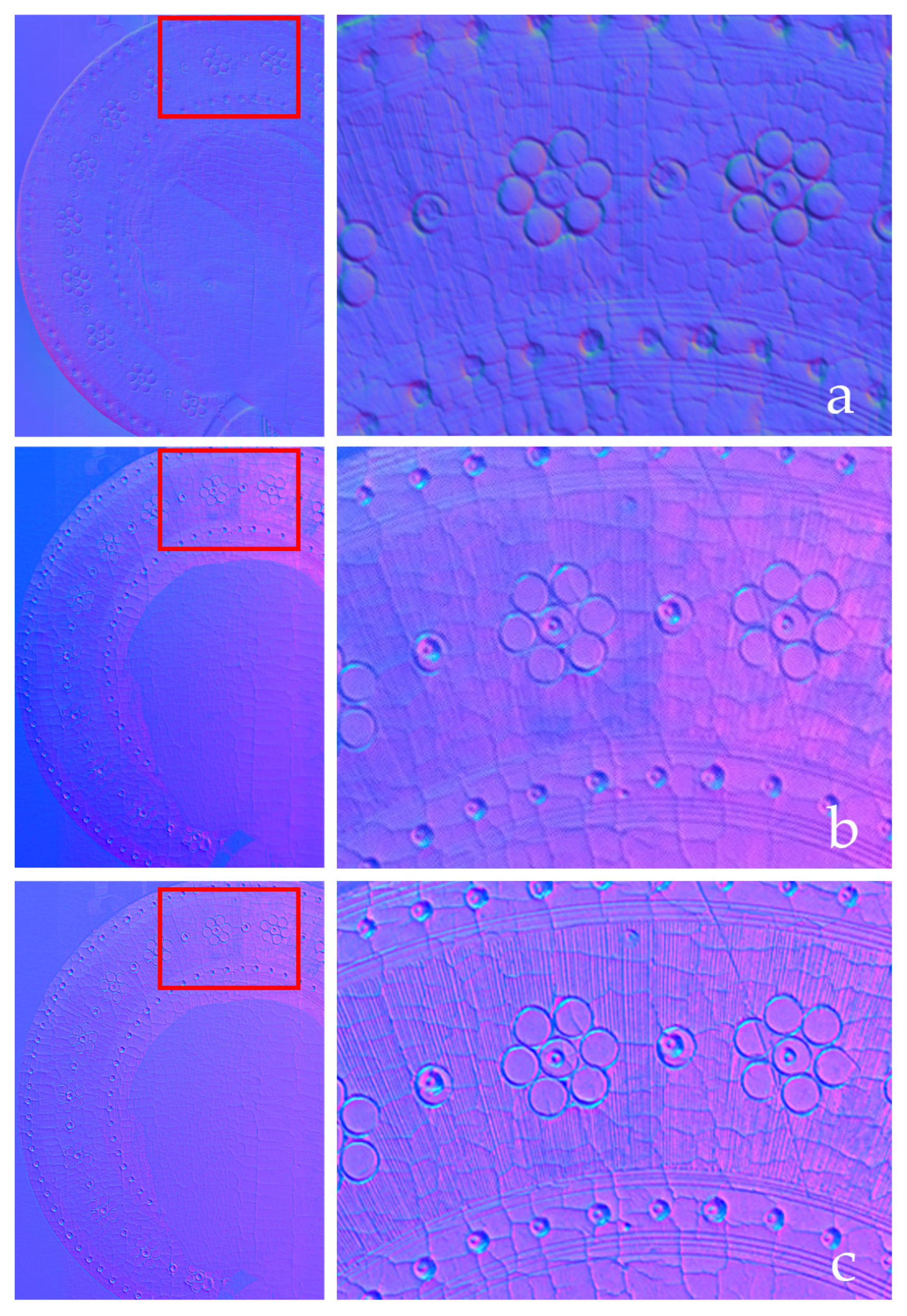

- (a) Lighting system design and construction.

- (b) Lighting system use on the field.

- (c) To determine the best performance in terms of color rendition, three different sets of illuminators were tested using the same camera (Canon EOS 5D Mark III equipped with an EF 100 mm f/2.8 lens). Table 6 summarizes the results of tests conducted at the Dept. of Architecture in Bologna to compare Relio2 lights with the previously adopted solutions. The maximum ΔE00 values are also documented, as they report the highest deviation observed on a single color patch of the chart.

- (d) Table 7 presents the evolution of the statistical measures of ∆E00 and ∆L (the difference in lightness between measured and expected values) as the stands improved in features and build quality. Starting from 2014, when custom prototypes did not yet exist, ∆E00 and ∆L progressively improved, following a linear progression that confirmed the quality of the enhancements introduced in the prototypes; all values were estimated using the same SHAFT version to keep results comparable. Several cameras were used over the years: the Rencay DiRECT Camera System 24k3 (image resolution 13,000 px × 8000 px and sensor size 72 mm × 118 mm, exploiting a trilinear RGB architecture), the Hasselblad H6D-400C (image resolution 11,600 px × 8700 px and sensor size 53.4 mm × 40.0 mm) and the Hasselblad X2D-100C (image resolution 11,656 px × 8742 px and sensor size 43.8 mm × 32.9 mm). Since the quality of the various sensors is very similar (over the years single-pixel accuracy improved but pixel size decreased, giving very close results), the measurements mainly reflect the difference in quality of the light source.
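The ΔE00 mean and maximum statistics discussed in this section can be computed from pairs of CIELAB values (measured versus expected patch colors). The sketch below implements the standard CIEDE2000 formula [42] with the parametric factors kL = kC = kH = 1. It is not the SHAFT code, and the patch values in the example are hypothetical.

```python
import math


def ciede2000(lab1, lab2):
    """CIEDE2000 color difference between two CIELAB triples (kL=kC=kH=1)."""
    L1, a1, b1 = lab1
    L2, a2, b2 = lab2
    # Rescale a* to improve uniformity near the neutral axis.
    c_bar = (math.hypot(a1, b1) + math.hypot(a2, b2)) / 2.0
    g = 0.5 * (1.0 - math.sqrt(c_bar**7 / (c_bar**7 + 25.0**7)))
    a1p, a2p = (1.0 + g) * a1, (1.0 + g) * a2
    c1p, c2p = math.hypot(a1p, b1), math.hypot(a2p, b2)
    h1p = math.degrees(math.atan2(b1, a1p)) % 360.0
    h2p = math.degrees(math.atan2(b2, a2p)) % 360.0

    d_l = L2 - L1
    d_c = c2p - c1p
    if c1p * c2p == 0.0:
        d_h_deg = 0.0
        h_bar = h1p + h2p
    else:
        d_h_deg = h2p - h1p
        if d_h_deg > 180.0:
            d_h_deg -= 360.0
        elif d_h_deg < -180.0:
            d_h_deg += 360.0
        if abs(h1p - h2p) <= 180.0:
            h_bar = (h1p + h2p) / 2.0
        elif h1p + h2p < 360.0:
            h_bar = (h1p + h2p + 360.0) / 2.0
        else:
            h_bar = (h1p + h2p - 360.0) / 2.0
    d_h = 2.0 * math.sqrt(c1p * c2p) * math.sin(math.radians(d_h_deg / 2.0))

    l_bar = (L1 + L2) / 2.0
    c_bar_p = (c1p + c2p) / 2.0
    t = (1.0
         - 0.17 * math.cos(math.radians(h_bar - 30.0))
         + 0.24 * math.cos(math.radians(2.0 * h_bar))
         + 0.32 * math.cos(math.radians(3.0 * h_bar + 6.0))
         - 0.20 * math.cos(math.radians(4.0 * h_bar - 63.0)))
    d_theta = 30.0 * math.exp(-(((h_bar - 275.0) / 25.0) ** 2))
    r_c = 2.0 * math.sqrt(c_bar_p**7 / (c_bar_p**7 + 25.0**7))
    s_l = 1.0 + 0.015 * (l_bar - 50.0) ** 2 / math.sqrt(20.0 + (l_bar - 50.0) ** 2)
    s_c = 1.0 + 0.045 * c_bar_p
    s_h = 1.0 + 0.015 * c_bar_p * t
    r_t = -math.sin(math.radians(2.0 * d_theta)) * r_c
    return math.sqrt((d_l / s_l) ** 2 + (d_c / s_c) ** 2 + (d_h / s_h) ** 2
                     + r_t * (d_c / s_c) * (d_h / s_h))


def delta_e_stats(measured, expected):
    """Mean and maximum ΔE00 over paired lists of CIELAB patches."""
    values = [ciede2000(m, e) for m, e in zip(measured, expected)]
    return sum(values) / len(values), max(values)


# Hypothetical patch values, for illustration only.
measured = [(51.0, 2.0, -3.0), (70.2, 10.5, 20.1)]
expected = [(50.0, 2.5, -2.0), (70.0, 10.0, 20.0)]
mean_de, max_de = delta_e_stats(measured, expected)
print(f"mean = {mean_de:.2f}, max = {max_de:.2f}")
```

Reporting the maximum next to the mean, as the tables here do, exposes single patches that deviate strongly even when the average stays within the 1.5 target.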

3.3. Normal Maps Improvements

- PD = Pixel density (in ppi)

- Sr = Sensor width resolution (in pixel): 23,200

- FL = Focal length of the lens system (in mm): 120

- Uf = Unit conversion factor: 25.4 for PD in inch (ppi)

- D = Camera distance to the painting (mm): 1120

- Sw = Camera sensor width (mm): 53.4
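The quantities listed above can be combined under a simple pinhole approximation, in which the image scale equals FL / D, to give PD = (Sr × FL × Uf) / (D × Sw). This form is an assumption reconstructed from the symbol definitions rather than a quotation of the source equation. A minimal sketch of the calculation follows.

```python
def pixel_density_ppi(sr_px, fl_mm, d_mm, sw_mm, uf=25.4):
    """Pixel density on the painting surface, in pixels per inch.

    Assumes image scale = FL / D (pinhole model, D much larger than FL).
    """
    return (sr_px * fl_mm * uf) / (d_mm * sw_mm)


# Values listed above: Sr = 23,200 px, FL = 120 mm, D = 1120 mm, Sw = 53.4 mm.
pd = pixel_density_ppi(sr_px=23_200, fl_mm=120, d_mm=1120, sw_mm=53.4)
pixel_size_mm = 25.4 / pd  # size of one pixel on the painting surface
print(f"{pd:.0f} ppi, {pixel_size_mm:.4f} mm per pixel")
```

With these inputs the result is about 1182 ppi, that is, one pixel covers about 0.021 mm of the painting surface.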

3.4. Dimensional Quality

3.5. Visual Outcomes

3.6. Costs

3.7. Public and Scientific Successes

4. Conclusions

- The full elimination of commercial components,

- A modular design using a limited number of distinct parts with the goal to enhance the production standardization, and to reduce costs and design time,

- The construction of stands tailored to specific cameras, with the aim to mitigate common issues related to parts that unintentionally shift the camera or fail to ensure precise positioning and orientation, challenges frequently encountered using conventional stands.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Addison, A.C.; Gaiani, M. Virtualized architectural heritage: New tools and techniques. IEEE MultiMedia 2000, 7, 26–31.

2. Masciotta, M.G.; Sanchez-Aparicio, L.J.; Oliveira, D.V.; Gonzalez-Aguilera, D. Integration of Laser Scanning Technologies and 360° Photography for the Digital Documentation and Management of Cultural Heritage Buildings. Int. J. Archit. Herit. 2023, 17, 56–75.

3. Chong, H.T.; Lim, C.K.; Rafi, A.; Tan, K.L.; Mokhtar, M. Comprehensive systematic review on virtual reality for cultural heritage practices: Coherent taxonomy and motivations. Multimed. Syst. 2022, 28, 711–726.

4. Phase One. Available online: https://www.phaseone.com/ (accessed on 25 July 2023).

5. Nocerino, E.; Stathopoulou, E.K.; Rigon, S.; Remondino, F. Surface Reconstruction Assessment in Photogrammetric Applications. Sensors 2020, 20, 5863.

6. Matrone, F.; Grilli, E.; Martini, M.; Paolanti, M.; Pierdicca, R.; Remondino, F. Comparing Machine and Deep Learning Methods for Large 3D Heritage Semantic Segmentation. ISPRS Int. J. Geo-Inf. 2020, 9, 535.

7. Toschi, I.; Capra, A.; De Luca, L.; Beraldin, J.-A.; Cournoyer, L. On the evaluation of photogrammetric methods for dense 3D surface reconstruction in a metrological context. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, 2–5, 371–378.

8. Remondino, F.; Spera, M.G.; Nocerino, E.; Menna, F.; Nex, F.; Gonizzi-Barsanti, S. Dense image matching: Comparisons and analysis. In Proceedings of the IEEE Conference Digital Heritage 2013, Marseille, France, 1 November 2013; Volume 1, pp. 47–54.

9. Farella, E.M.; Morelli, L.; Grilli, E.; Rigon, S.; Remondino, F. Handling critical aspects in massive photogrammetric digitization of museum assets. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, XLVI-2/W1-2022, 215–222.

10. Porter, S.; Roussel, M.; Soressi, M. A Simple Photogrammetry Rig for the Reliable Creation of 3D Artifact Models in the Field: Lithic Examples from the Early Upper Paleolithic Sequence of Les Cottés (France). Adv. Archaeol. Pract. 2016, 4, 71–86.

11. Sun, T.; Xu, Z.; Zhang, X.; Fanello, S.; Rhemann, C.; Debevec, P.; Tsai, Y.T.; Barron, J.T.; Ramamoorthi, R. Light stage super-resolution: Continuous high-frequency relighting. ACM Trans. Graph. 2020, 39, 260.

12. Santos, P.; Ritz, M.; Tausch, R.; Schmedt, H.; Monroy, R.; De Stefano, A.; Posniak, O.; Fuhrmann, C.; Fellner, D.W. CultLab3D: On the verge of 3D mass digitization. In Proceedings of the Eurographics Workshop on Graphics and Cultural Heritage (GCH ’14), Darmstadt, Germany, 6–8 October 2014; Eurographics Association: Goslar, Germany; pp. 65–73, ISBN 978-3-905674-63-7.

13. Menna, F.; Nocerino, E.; Morabito, D.; Farella, E.M.; Perini, M.; Remondino, F. An open-source low-cost automatic system for image-based 3D digitization. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, XLII-2/W8, 155–162.

14. Gattet, E.; Devogelaere, J.; Raffin, R.; Bergerot, L.; Daniel, M.; Jockey, P.; De Luca, L. A versatile and low-cost 3D acquisition and processing pipeline for collecting mass of archaeological findings on the field. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, XL-5/W4, 299–305.

15. DXO Mark. Available online: https://www.dxomark.com/disruptive-technologies-mobile-imaging-taking-smartphone-cameras-next-level (accessed on 25 July 2023).

16. Han, W.; Shin, J.; Ho Shin, J. Low-cost, open-source contact angle analyzer using a mobile phone, commercial tripods and 3D printed parts. HardwareX 2022, 12, 1175–1185.

17. Sylwan, S.; MacDonald, D.; Walter, J. Stereoscopic CG camera rigs and associated metadata for cinematic production. In Proceedings of the SPIE 7237, Stereoscopic Displays and Applications XX, 72370C, San Jose, CA, USA, 14 February 2009.

18. Lee, S.H.; Lee, S.J. Development of remote automatic panorama VR imaging rig systems using smartphones. Clust. Comput. 2018, 21, 1175–1185.

19. Apollonio, F.I.; Gaiani, M.; Garagnani, S. Visualization and Fruition of Cultural Heritage in the Knowledge-Intensive Society: New Paradigms of Interaction with Digital Replicas of Museum Objects, Drawings, and Manuscripts. In Handbook of Research on Implementing Digital Reality and Interactive Technologies to Achieve Society 5.0; Ugliotti, F.M., Osello, A., Eds.; IGI Global: Hershey, PA, USA, 2022; pp. 471–495.

20. Apollonio, F.I.; Fantini, F.; Garagnani, S.; Gaiani, M. A Photogrammetry-Based Workflow for the Accurate 3D Construction and Visualization of Museums Assets. Remote Sens. 2021, 13, 486.

21. Apollonio, F.I.; Foschi, R.; Gaiani, M.; Garagnani, S. How to Analyze, Preserve, and Communicate Leonardo’s Drawing? A Solution to Visualize in RTR Fine Art Graphics Established from “the Best Sense”. ACM J. Comput. Cult. Herit. 2021, 14, 1–30.

22. Ackermann, J.; Goesele, M. A Survey of Photometric Stereo Techniques. Found. Trends Comput. Graph. Vis. 2015, 9, 149–254.

23. Horn, B.K.P. Obtaining shape from shading information. In The Psychology of Computer Vision; Winston, P.H., Ed.; McGraw-Hill: New York, NY, USA, 1975; pp. 115–155. ISBN 978-0070710481.

24. Horn, B.K.P.; Sjoberg, R.W. Calculating the reflectance map. Appl. Opt. 1979, 18, 1770–1779.

25. Ikeuchi, K.; Horn, B.K.P. An application of the photometric stereo method. In Proceedings of the 6th International Joint Conference on Artificial Intelligence, Tokyo, Japan, 20 August 1979; ISBN 978-0-934613-47-7.

26. Silver, W.M. Determining Shape and Reflectance Using Multiple Images. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 1980.

27. Woodham, R.J. Photometric method for determining surface orientation from multiple images. Opt. Eng. 1980, 19, 139–144.

28. Mildenhall, B.; Srinivasan, P.P.; Tancik, M.; Barron, J.T.; Ramamoorthi, R.; Ng, R. Nerf: Representing scenes as neural radiance fields for view synthesis. Commun. ACM 2021, 65, 99–106.

29. Martin-Brualla, R.; Radwan, N.; Sajjadi, M.S.; Barron, J.T.; Dosovitskiy, A.; Duckworth, D. Nerf in the wild: Neural radiance fields for unconstrained photo collections. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7210–7219.

30. Müller, T.; Evans, A.; Schied, C.; Keller, A. Instant neural graphics primitives with a multiresolution hash encoding. ACM Trans. Graph. ToG 2022, 41, 1–15.

31. Remondino, F.; Karami, A.; Yan, Z.; Mazzacca, G.; Rigon, S.; Qin, R. A Critical Analysis of NeRF-Based 3D Reconstruction. Remote Sens. 2023, 15, 3585.

32. Gaiani, M.; Ballabeni, A. SHAFT (SAT & HUE Adaptive Fine Tuning), a new automated solution for target-based color correction. Colour Color. Multidiscip. Contrib. 2018, 14, 69–80.

33. Fantini, F.; Gaiani, M.; Garagnani, S. Knowledge and documentation of renaissance works of art: The replica of the “Annunciation” by Beato Angelico. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2023, XLVIII-M-2-2023, 527–534.

34. Scuello, M.; Abramov, I.; Gordon, J.; Weintraub, S. Museum lighting: Why are some illuminants preferred? J. Opt. Soc. Am. A. Opt. Image Sci. Vis. 2004, 21, 306–311.

35. Hong, G.; Ronnier, M.L.; Rhodes, P.A. A Study of Digital Camera Colorimetric Characterization Based on Polynomial Modeling. Color Res. Appl. 2001, 26, 76–84.

36. ISO 17321-1; Graphic Technology and Photography—Colour Characterisation of Digital Still Cameras (DSCs). ISO (International Organization for Standardization), ISO Central Secretariat: Geneva, Switzerland, 2012.

37. Jiang, J.; Liu, D.; Gu, J.; Süsstrunk, S. What is the space of spectral sensitivity functions for digital color cameras? In Proceedings of the 2013 IEEE Workshop on Applications of Computer Vision (WACV), Los Alamitos, CA, USA, 15–17 January 2013.

38. Lyon, R.F. A Brief History of “Pixel”. In Proceedings of the SPIE Electronic Imaging 2006—Digital Photography II, San Jose, CA, USA, 15–19 January 2006.

39. Operation Night Watch. Available online: https://www.rijksmuseum.nl/en/whats-on/exhibitions/operation-night-watch (accessed on 25 July 2023).

40. Relio2. Available online: https://www.relio.it/ (accessed on 25 July 2023).

41. Beraldin, J.A.; Gaiani, M. Evaluating the performance of close-range 3D active vision systems for industrial design applications. In Proceedings of the SPIE Electronic Imaging 2005—Videometrics VIII, San Jose, CA, USA, 17 January 2005.

42. ISO/CIE 11664–6; Colorimetry–Part 6: CIEDE2000 Colour-Difference Formula. ISO (International Organization for Standardization), ISO Central Secretariat: Geneva, Switzerland, 2014.

43. Beretta, G.; Gaiani, M.; Rizzi, A. La riproduzione digitale del colore: Una storia da quattro bit. Kritike 2021, 2, 263–304.

44. Parulski, K.; Wueller, D.; Burns, P.; Yoshida, H. Creation and evolution of ISO 12233, the international standard for measuring digital camera resolution. In Proceedings of the IS&T International Symposium on Electronic Imaging: Image Quality and System Performance 2022, New York, NY, USA, 17–26 January 2022; Society for Imaging Science and Technology (IS&T): Springfield, VA, USA; pp. 347-1–347-7.

45. Williams, D.R. Benchmarking of the ISO 12233 Slanted-Edge Spatial Frequency Response (SFR) Plug-In. In Proceedings of the IS&T/PICS Image Processing, Image Quality, Image Capture, Systems Conference (PICS-98), Portland, OR, USA, 17–20 May 1998; pp. 133–136, ISBN 0-89208-211-9.

46. Burns, P.D. Slanted Edge MTF for Digital Camera and Scanner Analysis. In Proceedings of the IS&T/PICS Image Processing, Image Quality, Image Capture, Systems Conference (PICS-00), Portland, OR, USA, 27–31 March 2000; pp. 135–138, ISBN 0-89208-227-5.

47. Brown, D.C. Close-range camera calibration. Photogramm. Eng. 1971, 37, 855–866.

48. Fryer, J.G. Camera calibration. In Close Range Photogrammetry and Machine Vision; Atkinson, K.B., Ed.; Whittles Publishing: Dunbeath, UK, 2001; Chapter 6; pp. 156–179. ISBN 978-1870325-73-8.

49. Remondino, F.; Fraser, C.S. Digital camera calibration methods: Considerations and comparisons. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2006, 36, 266–272.

50. Kirkpatrick, J.D.; Kirkpatrick, W.K. Kirkpatrick’s Four Levels of Training Evaluation; Association for Talent Development: Alexandria, VA, USA, 2016; ISBN 9781607280088.

51. Cabezos-Bernal, P.M.; Rodriguez-Navarro, P.; Gil-Piqueras, T. Documenting Paintings with Gigapixel Photography. J. Imaging 2021, 7, 156.

52. Berns, R.; Tongbo, C. Practical total appearance imaging of paintings. In Proceedings of the IS&T Archiving 2012, Copenhagen, Denmark, 12–15 June 2012; pp. 162–167.

53. Apollonio, F.I.; Gaiani, M.; Garagnani, S.; Martini, M.; Strehlke, C.B. Misurare e restituire l’Annunciazione di San Giovanni Valdarno del Beato Angelico. Disegnare Idee Immagini 2023, in press.

54. Apollonio, F.I.; Clini, P.; Gaiani, M.; Perissa Torrini, A. The third dimension of Leonardo’s Vitruvian Man. Disegnare Idee Immagini 2015, 50, 48–59.

55. Marani, P.C.; Barsanti, R.; Apollonio, F.I.; Gaiani, M. Leonardo, Anatomia Dei Disegni [Reloaded]; Bologna University Press: Bologna, Italy, 2023; p. 112. ISBN 979-12-547-7263-8.

56. Marani, P.C.; Barsanti, R.; Gaiani, M. Il Disegno Anatomico di Leonardo al Tempo Del Salvator Mundi; Bologna University Press: Bologna, Italy, 2023; p. 136. ISBN 979-12-547-7289-8.

57. Strehlke, C.B. (Ed.) Masaccio e Angelico: Dialogo Sulla Verità Nella Pittura; Magonza: Arezzo, Italy, 2022; p. 172. ISBN 88-31280-71-6.

| Component | Commercial | Custom Made |
|---|---|---|
| Camera system | Rencay DiRECT Camera System 24k3 camera equipped with a Rodenstock Apo Macro Sironar Digital 120 mm, f/5.6 lens | - |
| Column stand | Manfrotto 809 Salon 230 | - |
| Light system | 16 Relio2 single LED lamps (gathered in 4 groups consisting of 4 lights each) | - |
| Light support | - | Custom 3D printed joints placed on four detachable arms using 20 mm × 20 mm × 1 mm hollow aluminum extrusions |
| Flat acquisition surface | - | 900 mm × 650 mm × 32 mm medium-density panels, with laser engraved reference system |

| Component | Commercial | Custom Made |
|---|---|---|
| Camera system | Hasselblad H6D-400C multi-shot camera | - |
| Column stand | Lupo Repro 3, equipped with a Manfrotto 410 geared head | Modified with reinforced welded steel sheet base and made sturdier with additional bracing elements attached to the structure |
| Light system | 16 Relio2 single LED lamps (gathered in 4 groups consisting of 4 lights each) | - |
| Light support | - | Custom 3D printed joints placed on four detachable arms using 20 mm × 20 mm × 1 mm hollow aluminum extrusions |
| Flat acquisition surface | - | 900 mm × 650 mm × 32 mm medium-density panels, with laser engraved reference system |
| Darkening system | - | Introduced with a guide at the top of the arms to hold a black drape |

| Component | Commercial | Custom Made |
|---|---|---|
| Camera system | Hasselblad H6D-400C multi-shot camera | - |
| Column stand | Manfrotto 809 Salon 230 | - |
| Light system | 32 Relio2 single LED lamps (gathered in 8 groups consisting of 4 lights each) | - |
| Light support | - | Custom 3D printed joints placed on four arms made of 12 hollow aluminum extrusions (20 mm × 20 mm × 1 mm) |
| Flat calibration surface | - | Medium-density panel, with laser engraved reference system, hosted on a vertical aluminum frame |

| Value | Prototype for Horizontal Acquisitions | Prototype for Vertical Acquisitions |
|---|---|---|
| MTF50 | 0.1300 (Hasselblad H6D-400C) | 0.165 (Hasselblad H6D-400C) |
| MTF50 | 0.119 (Hasselblad X2D-100C) | 0.308 (Hasselblad X2D-100C) |
| MTF10 | 0.228 (Hasselblad H6D-400C) | 0.277 (Hasselblad H6D-400C) |
| MTF10 | 0.119 (Hasselblad X2D-100C) | 0.556 (Hasselblad X2D-100C) |
| Efficiency | 0.314 (Hasselblad H6D-400C) | 0.662 (Hasselblad H6D-400C) |
| Efficiency | 0.475 (Hasselblad X2D-100C) | 0.754 (Hasselblad X2D-100C) |

| | 2014 | 2018 | 2019 | 2021 | 2022 |
|---|---|---|---|---|---|
| Usability area (in m) | ~4 × 4 × 3 | ~2.5 × 3 × 2.8 | ~2.5 × 3 × 2.8 | ~1.5 × 1.5 × 2.8 | ~1.5 × 1.2 × 1.6 |
| Setup time for the stand | 2 h | 1 h | 30 min | 30 min | 30 min |
| Setup time for lights | 1 h | 1 h | Embedded | Embedded | Embedded |
| Setup time for camera | 5 h | 30 min | Embedded | Embedded | Embedded |
| MTF + Color checker | 50 min | 30 min | 15 min | 10 min | 5 min |
| White image (flat fielding) | 30 min | 15 min | 8 min | 8 min | 5 min |
| Acquisition time (per shot) | 20 min | 8 min | 2 min | 2 min | <1 min |
| Dismantling time | 3 h | 1 h | 20 min | 15 min | 15 min |
| Total time (all shots) | 14 h | 7 h | 6 h | 4.5 h | 3 h |

| Osram Fluorescent Lamps 1 | Godox LED Lamps 2 | Relio2 LED Lamps 3 |
|---|---|---|
| ΔE00 mean = 1.47 | ΔE00 mean = 1.17 | ΔE00 mean = 1.05 |
| ΔE00 max = 3.5 | ΔE00 max = 3.3 | ΔE00 max = 2.5 |

| Year | Prototype | General Results 1 |
|---|---|---|
| 2014 | First prototype for ancient drawings (horizontal) | ∆E00 mean = 1.34; ∆L mean = 0.14 |
| 2018 | Second prototype for ancient drawings (horizontal) | ∆E00 mean = 1.31; ∆L mean = 0.19 |
| 2019 | Second prototype for ancient drawings (horizontal, darkened) | ∆E00 mean = 1.33; ∆L mean = 0.21 |
| 2021 | Second prototype for ancient drawings (horizontal, darkened) | ∆E00 mean = 0.95; ∆L mean = 0.19 |
| 2022 | Second prototype for ancient drawings (horizontal, darkened) | ∆E00 mean = 0.94; ∆L mean = 0.19 |
| 2022 | Prototype for vertical paintings | ∆E00 mean = 0.85; ∆L mean = 0.18 |

| Object | iPhone X: ΔE00 Mean | iPhone X: ΔE00 Max | iPhone X: ΔL | iPhone X: Exposure Error (f-Stops) | Nikon D5200: ΔE00 Mean | Nikon D5200: ΔE00 Max | Nikon D5200: ΔL | Nikon D5200: Exposure Error (f-Stops) |
|---|---|---|---|---|---|---|---|---|
| Porcupinefish | 3.67 | 8.11 | 2.52 | −0.04 | 2.79 | 6.79 | 1.72 | −0.03 |
| Horn d’Arturo’s Globe | 3.05 | 7.38 | 2.02 | −0.10 | 2.47 | 6.43 | 1.43 | −0.01 |

| Parameter | Porcupinefish: iPhone X | Porcupinefish: Nikon D5200 | Horn d’Arturo’s Globe: iPhone X | Horn d’Arturo’s Globe: Nikon D5200 |
|---|---|---|---|---|
| Mean BA reprojection error (px) | 0.6608 | 0.44330 | 0.5703 | 0.4929 |
| Number of oriented images | 141/141 | 141/141 | 76/76 | 76/76 |
| Observations | 220,568 | 352,664 | 222,170 | 344,729 |
| Points | 50,390 | 90,115 | 42,881 | 60,341 |
| Number of 3D points (dense matching) | 1,815,027 | 3,216,005 | 1,541,992 | 1,645,099 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bacci, G.; Bozzola, M.; Gaiani, M.; Garagnani, S. Novel Paradigms in the Cultural Heritage Digitization with Self and Custom-Built Equipment. Heritage 2023, 6, 6422-6450. https://doi.org/10.3390/heritage6090336
