Abstract

Nowadays, the great interest of international museological studies in historical installations such as museographic devices (capable of giving form to space while communicating with users) is largely attributable to new techniques of immediate communication. Tools and libraries for virtual, augmented, and mixed reality content have recently become increasingly available. Despite their cost and the investment they require, these technologies offer new ways of increasing visitor presence in museums. The purpose of this paper is to describe the potential of a tourist/archaeological application that we developed and implemented. The application was developed in Unity3D and allows the user to view cultural heritage in a virtual environment, making information, multimedia content, and metrically precise 3D models (obtained after a mesh reduction phase) available and accessible; these models are also suitable for good-quality 3D printing. The strengths of our application, compared with the many already described in the literature (which also served as sources of research ideas), stem from the possibility of using and easily integrating different techniques (3D models, building information models, virtual and augmented reality). This allows different 3D models to be chosen depending on the user's needs; on some of these models, depending on the needs of the application, simplification and size reduction processes were tested and performed to make loading faster and the user experience smoother. A further aim was to build an application that relates the elements of the museum to archaeological elements of the territory according to historical period and element type.

1. Introduction

The role of virtual reality (VR) in the museum context is growing in importance because it helps museums address two main problems: authenticity and the new museology. In other words, today's museums are expected to offer an authentic experience and to enrich the visitor experience with both educational and entertaining content. VR helps address these concerns because, in immersive VR environments, visitors experience virtual images of the artefacts as original and obtain information about the different collections. VR technology therefore represents an ideal means of disseminating cultural heritage (CH) in museums and other cultural institutions.

Indeed, a considerable number of museums, such as the British Museum, the Prado Museum, and the Vatican Museums, have long used VR devices to offer their visitors spectacular immersive experiences.

VR has therefore been adopted in cultural tourism because it helps the tourism sector achieve its goal of generating unique experiences for tourists [1]. VR improves tourists' experiences by facilitating their interaction with tourist/exhibition routes [2], helping them overcome barriers and distances, and providing immediate, more understandable information even before the on-site visit [3]. In addition, VR offers a complete environment in which users can be fully "immersed" in an educational and entertaining experience [4,5].

In contrast to these advantages, some scholars have regarded VR as a substitute for, or even a threat to, tourist sites [5,6], arguing that although VR reduces certain inconveniences of a real tour, it may lead tourists to think that visiting the real sites is no longer necessary and thus to replace visits entirely with advanced, realistic VR simulations [6]. However, it has been shown that most people do not believe that a virtual visit can completely replace a real one, but rather perceive it as an effective tool for preparing the in-person visit [7,8].

Therefore, since museums are currently a destination for many visitors, many researchers and experts are working on new technologies to make the visitor experience even more attractive and accessible. In recent years, guidelines have also been drawn up on how to represent CH through virtual instruments, such as the London Charter [9] and the Principles of Seville [10,11].

The augmented reality (AR)/VR revolution will almost certainly rely on 3D digital models, replacing the traditional graphic documentation of CH. These models will be used to monitor the state of conservation, for reconstruction and restoration activities, for virtual use, etc. [12].

Furthermore, the 3D models created in this way are suitable for AR applications promoting archaeological, architectural, and cultural heritage [13].

However, 3D models built with photogrammetry or laser scanning contain a considerable amount of data and a very large number of "faces", which make loading scenes within applications very difficult. It is therefore necessary to simplify the models by reducing the number of faces that make up the mesh. This operation, however, risks losing both graphic information (details) and metric information (dimensions), so it must be carried out carefully to avoid an unreliable representation of the reproduced reality.

In this regard, a possible alternative to simplifying the models is to reconstruct them in a building information modelling (BIM) environment, which yields simpler models while producing excellent results that are easily exploitable for the proposed purposes.

Therefore, these different models created by different methodologies can be inserted, integrated, and connected within a single application.

As part of a broader effort to preserve and disseminate archaeological heritage, the Geomatics laboratory of the Mediterranean University of Reggio Calabria (UniRC) is creating a tourism-related educational application and carrying out a first experiment with mixed reality (MR).

Our purpose is to present the processes that led to the realization of the application: the creation of the 3D models, the assignment of AR and VR content, the virtual user tracking system, and the collection, storage, and distribution of archaeological data using 3D modelling, BIM, VR, AR, and MR. The application is designed to maximize and facilitate the user experience, helping users choose what to visit [14].

The proposed methodology therefore has the advantage of making the virtual images of the artefacts feel authentic to users, who also obtain information on the collections [15].

As a case study, the proposed application was designed to let the user record, study, and obtain information on the archaeological finds preserved in the city and in the National Archaeological Museum of Reggio Calabria (MArRC), from both a metric and a graphic point of view. The authors' idea was to develop an application that relates the museum's elements to certain archaeological features of the area according to historical period and type of element.

The MArRC is a rare example of a building designed and built to house museum collections. It is one of the most representative archaeological museums for the period of Magna Graecia and ancient Calabria, known worldwide for the permanent exhibition of the famous Riace Bronzes (very ancient masterpieces of bronze statuary) and for its rich collection of artifacts from all over Calabria, from prehistoric times to the late Roman age.

The Museum was constructed between 1932 and 1941, and on its main façade is carved a series of large decorations reproducing the coins of the cities of Magna Graecia. From 2009 to 2013 it was closed for renovation and expansion works [16], and on 21 December 2013 the room housing the Riace Bronzes was temporarily reopened. The museum was definitively reopened on 30 April 2016.

From 2009 until 2016, the building underwent an important restoration (about 11,000 square metres) consisting of architectural redevelopment of the historic building and the realization of a new, enlarged, and better organized structure, in order to create a better equipped museum itinerary.

The new museum itinerary starts on the second floor, with a section dedicated to Protohistory, and extends to the ground floor through the exhibition of the great architecture of the temples of the territories of Locri, Kaulonia, and Punta Alice, guaranteeing temporal, spatial, and logical continuity with the display of materials. This path is also important for links to other historical periods. Captions, panels with explanatory texts, and dedicated support help to “narrate” the history of Calabria to the visitor.

Nowadays, the function of a museum should be to help non-specialists understand information and context through brief interactions. Ideally, museums should also increase visitor interest. In accordance with their educational mission, museums must consistently present and represent complex themes in ways that are both informative and entertaining, thus providing access to a wide audience.

Within this scientific panorama, and to improve the usability of the MArRC, the UniRC Geomatics laboratory, as part of a broader project for the conservation and enhancement of CH, developed an application that enables users to take a virtual CH tour of the Museum and of the parts of the city connected to it. The authors' project, developed for the conservation of the city's CH, thus offers virtual connections (e.g., by era or by type) even to tourists with little experience in the field, making each visit more fluid, practical, and instructive, and transforming it into something unique.

This is a mobile tourist application that allows the user to follow a real-time virtual tour inside the museum or to view the media associated with the various artefacts (AR), connecting them to documentation, historical information, and the best-known discoveries and CH of the same period, in or out of the city [17].

With this application, users can take virtual tours as spectators (simply on the device screen) or as active participants “accompanied” by a virtual guide who interacts with the surroundings [18]. The application, still in the definition and development phase, is currently used exclusively for educational purposes.

In its tours and in the presentation of the elements represented, the application uses 3D models made with both fast techniques (photogrammetry from Google Earth imagery) and precision techniques (laser scanning and photogrammetry), which users can associate directly with the element observed inside the museum [19]. The type of each model was chosen according to its role within the application: purely graphic elements are reproduced with simplified models, while the models from which more information must be extracted are created with purely geomatic techniques and subsequently simplified in their less important parts.

In summary, the proposed application shows the visitor's position within the museum, the names of the exhibition rooms, and the multimedia content of the artefacts on display. It directly provides easily understandable artefact details, along with a virtual guide (video) who tells the artefact's history, or the reconstruction of possibly broken artefacts in a virtual environment [20].

The application also connects to virtual representations of CH (concerning the same historical period or historical links) in order to allow further information on the displayed themes.

Finally, starting from the 3D components and the VR construction methodology, we carried out a first MR experiment using Microsoft HoloLens for interaction, where the real world meets VR.

2. Materials and Methods

The application proposed in this paper (still being defined) allows users to choose the services they need to enrich their exploration of CH and to find any marketing-oriented offers.

This application is developed to:

- identify the minimum path to reach the museum from the user's location coordinates, displaying it in 3D on the map,

- provide the user with a virtual tour of the museum and the surrounding area (pointing out other artefacts),

- allow the user to tour the museum as a passive observer or as an active participant interacting via their device's screen (the base scenarios were built partly from photogrammetric models of the environments and partly from BIM models),

- display the visitor's position on the museum floor plan, with the names of the rooms, using a red dot that marks the user's real-time position in the various sections of the museum,

- create references and links to 3D models of artefacts from the same historical period (made in advance using fast or precision techniques), showing information on the history of the artefacts that the smartphone camera is capturing,

- view multimedia content (video or audio) associated with the user’s framed object,

- draw attention to “details of interest” on the artifact being framed,

- view the 3D model of the artefact with the possibility of transforming it (disassembly and assembly),

- virtually reassemble three-dimensional models, and

- pre-order a 3D print collectible.
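The first function in the list, finding the minimum path to the museum, is a classic shortest-path problem. As a minimal sketch, the example below applies Dijkstra's algorithm to a small, hypothetical directed street graph whose node names and edge lengths (in metres) are invented for illustration; the paper does not state which routing algorithm or map service the application actually relies on.

```python
import heapq
from typing import Dict, List, Tuple

# Hypothetical directed street graph: node -> list of (neighbour, length in metres).
Graph = Dict[str, List[Tuple[str, float]]]


def shortest_route(graph: Graph, start: str, goal: str) -> Tuple[float, List[str]]:
    """Dijkstra's algorithm: return (total length in metres, nodes along the route)."""
    dist: Dict[str, float] = {start: 0.0}
    previous: Dict[str, str] = {}
    queue: List[Tuple[float, str]] = [(0.0, start)]
    visited = set()
    while queue:
        d, node = heapq.heappop(queue)
        if node in visited:
            continue  # stale queue entry, a shorter path was already settled
        visited.add(node)
        if node == goal:
            break
        for neighbour, length in graph.get(node, []):
            candidate = d + length
            if candidate < dist.get(neighbour, float("inf")):
                dist[neighbour] = candidate
                previous[neighbour] = node
                heapq.heappush(queue, (candidate, neighbour))
    if goal not in dist:
        raise ValueError("goal is not reachable from start")
    # Walk back from the goal to rebuild the route.
    route = [goal]
    while route[-1] != start:
        route.append(previous[route[-1]])
    route.reverse()
    return dist[goal], route


if __name__ == "__main__":
    streets: Graph = {
        "user": [("A", 120.0), ("B", 200.0)],
        "A": [("B", 50.0), ("museum", 300.0)],
        "B": [("museum", 180.0)],
    }
    print(shortest_route(streets, "user", "museum"))  # (350.0, ['user', 'A', 'B', 'museum'])
```

Listing alternative routes, as the application does, would require a k-shortest-paths variant; this sketch only returns the single shortest one.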

Specifically, once the application is launched, the device's coordinates are compared with those of the artefacts found in the city and with the museum's location, and the application calculates the minimum route. The user can then decide whether to go to the museum or continue the tour in the city. In the first case, the three-dimensional model of the museum is shown on the map, and whenever the user's position enters the surroundings of a monument of interest, a warning icon appears on the screen. The application therefore acts as a navigator to the museum, indicating places of interest near the user [21].
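The proximity warning described above can be sketched as a geofence check on GPS coordinates. This minimal example assumes latitude/longitude pairs in degrees, a spherical Earth (haversine formula), and a 100 m radius like the range the application uses to highlight nearby places of interest; the function names and sample coordinates are hypothetical.

```python
import math
from typing import List, Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, spherical approximation


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two latitude/longitude points (degrees)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))


def nearby_sites(
    user: Tuple[float, float],
    sites: List[Tuple[str, float, float]],
    radius_m: float = 100.0,
) -> List[Tuple[str, float]]:
    """Return (name, distance) for every site within radius_m of the user, nearest first."""
    hits = []
    for name, lat, lon in sites:
        distance = haversine_m(user[0], user[1], lat, lon)
        if distance <= radius_m:
            hits.append((name, distance))
    return sorted(hits, key=lambda hit: hit[1])


if __name__ == "__main__":
    # Hypothetical coordinates, for illustration only.
    user_position = (38.1000, 15.6500)
    sites = [("site_a", 38.1005, 15.6500), ("site_b", 38.1100, 15.6500)]
    for name, distance in nearby_sites(user_position, sites):
        print(f"warning icon: {name} at {distance:.0f} m")
```

In a real app the check would run on every GPS update, and some hysteresis would help avoid the icon flickering at the edge of the radius.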

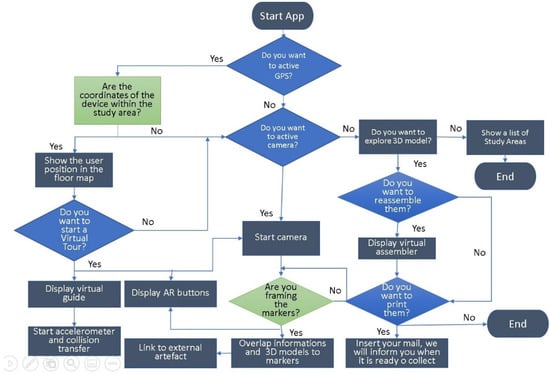

Once the place of interest has been reached, the user can activate the device's camera and frame the object of study, so that multimedia content, VR content, and links to artefacts of the same type can be associated with it. In particular, once at the museum, the user can decide whether to take the tour from outside or, once inside, be assisted by a virtual guide who explains the various content associated with the object framed by the camera. Once the object of study is framed, the application allows the various parts of the artefact to be reassembled and even printed. The case studies are listed, and further ones can be added within the application in a section dedicated to integration. The flow chart in Figure 1 summarises the main capabilities of the application.

Figure 1. Application flow chart.

The distinctive feature of the application therefore lies in the integration of several simplified models, produced with different methodologies, allowing fluid navigation without freezes or long loading times [22,23].

Ultimately, modern technology helps make learning attractive by presenting it in new and often amusing ways, through interactive activities that can inform visitors about more than what is physically present in the museum. The museum thus moves away from the concept of a mere container towards a livelier, richer reality capable of attracting the public through modern technology. Using 3D models in AR environments is another important application: they can show past events in a contemporary context to inform and entertain the public.

3D models used within the application were created using different methodologies, based on the precision of the details deemed necessary and the practicality inherent in their use.

There are various methodologies for creating 3D models, and each offers a different level of detail and accuracy (depending on the instrumentation and methodology used), related to the final use for which the work is intended. In some parts of this case study, the metric precision of the 3D model is of little importance, given the didactic and tourist purpose of the application [24]. For example, in the app's initial view, a metrically accurate model of the museum building is not of primary importance to the tourist, so we decided to survey the building in a relatively "fast" manner, directly using a dataset obtained from Google Earth and subsequently processed with classical photogrammetric techniques. In Google Earth Pro, we set the view of the building at a 45° angle and then created a tour completing a 360° turn around the building while maintaining the same viewing angle, thus simulating a drone flight.
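The Google Earth Pro tour described above amounts to placing virtual camera stations on a circle around the building while keeping a constant viewing angle. The sketch below computes such stations in a local east-north-up frame centred on the building; the slant range, the number of stops, and the convention that the angle is measured from the horizon (at 45° this coincides with a tilt measured from the vertical) are assumptions for illustration, not values taken from the survey.

```python
import math
from typing import List, Tuple

Station = Tuple[float, float, float, float]  # (east, north, up, heading_deg)


def orbit_stations(slant_range_m: float, elevation_deg: float = 45.0, stops: int = 36) -> List[Station]:
    """Camera stations on a 360-degree orbit around a target at the local origin.

    Every station sees the target under the same elevation angle, so the
    viewing geometry stays constant along the orbit, as in a circular drone flight.
    """
    horizontal = slant_range_m * math.cos(math.radians(elevation_deg))
    up = slant_range_m * math.sin(math.radians(elevation_deg))
    stations: List[Station] = []
    for k in range(stops):
        azimuth = 360.0 * k / stops  # degrees clockwise from north
        east = horizontal * math.sin(math.radians(azimuth))
        north = horizontal * math.cos(math.radians(azimuth))
        heading = (azimuth + 180.0) % 360.0  # look back towards the target
        stations.append((east, north, up, heading))
    return stations


if __name__ == "__main__":
    for station in orbit_stations(slant_range_m=150.0, stops=8):
        print("E={:7.1f} N={:7.1f} U={:6.1f} heading={:5.1f}".format(*station))
```

Denser stops give more overlap between consecutive frames, which generally helps the later photogrammetric alignment.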

The artefacts and the interiors of the museum were instead surveyed using classic photogrammetric techniques (computer vision and BIM modelling) [18] and with a laser scanner, using classic reconstruction methods.

Photographs were acquired with a Canon EOS 7D using a "convergent axis" scheme, to obtain both geometrically accurate models and photorealistic renderings.

The captured frames were processed with Agisoft Metashape, in which image orientation, model generation, and final reconstruction are fully automated. This automation reduced processing times and improved the performance of the hardware/software system [25,26].

The processing steps were as follows:

- Alignment: the software aligns the photos, computing their spatial positions and reconstructing the so-called grip geometry; it then computes the spatial position of the photographed elements by geometric triangulation. The quality of the alignment clearly determines the quality of the final 3D model. This phase produces a sparse point cloud. To register the block in real-world coordinates, control points were measured after the alignment.

- Dense cloud: a dense point cloud is created using dense image matching algorithms, which find correspondences across multiple images using stereo pairs.

- Mesh: a polygonal model is built from the dense cloud; the mesh is a subdivision of the surface into polyhedral faces.

- Texture: colorimetric values are associated with the mesh to obtain a correctly textured 3D model.
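The geometric triangulation mentioned in the alignment step can be illustrated with the simplest two-view case: given two camera centres and the viewing rays towards the same feature, the 3D point is estimated as the midpoint of the shortest segment between the two rays. This is a generic textbook sketch with hypothetical inputs, not Metashape's internal procedure.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3) -> Vec3:
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2."""
    w0 = [p - q for p, q in zip(c1, c2)]
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel: no stable intersection")
    s = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p1 = [ci + s * di for ci, di in zip(c1, d1)]
    p2 = [ci + t * di for ci, di in zip(c2, d2)]
    return ((p1[0] + p2[0]) / 2.0, (p1[1] + p2[1]) / 2.0, (p1[2] + p2[2]) / 2.0)


if __name__ == "__main__":
    # Two hypothetical camera centres looking at the same feature at (1, 2, 5).
    print(triangulate_midpoint((0.0, 0.0, 0.0), (1.0, 2.0, 5.0), (4.0, 0.0, 0.0), (-3.0, 2.0, 5.0)))
```

With real, noisy observations the rays do not intersect exactly, and the length of the segment between p1 and p2 gives a rough indication of the triangulation error.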

The final step is scaling, which assigns the model its correct metric size for precision measurements [27,28].
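In its simplest form, scaling can be sketched as follows. The example assumes a single known distance between two points identifiable on the model (for instance, two control points or the ends of a scale bar). The function names are illustrative, and real workflows typically combine several control points.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def scale_factor(model_a: Vec3, model_b: Vec3, measured_distance_m: float) -> float:
    """Ratio between a distance measured on site and the same distance on the model."""
    model_distance = math.dist(model_a, model_b)
    if model_distance == 0.0:
        raise ValueError("reference points coincide on the model")
    return measured_distance_m / model_distance


def apply_scale(vertices: Sequence[Vec3], factor: float, origin: Vec3 = (0.0, 0.0, 0.0)) -> List[Vec3]:
    """Scale vertices uniformly about `origin`, preserving the model's shape."""
    return [
        tuple(o + factor * (v - o) for v, o in zip(vertex, origin))
        for vertex in vertices
    ]


if __name__ == "__main__":
    # Hypothetical: two control points 2.0 model units apart, measured 0.85 m apart on site.
    k = scale_factor((0.0, 0.0, 0.0), (2.0, 0.0, 0.0), 0.85)
    print(k, apply_scale([(2.0, 0.0, 0.0), (0.0, 1.0, 0.0)], k))
```

A uniform factor preserves angles and proportions, so only absolute dimensions change.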

As mentioned, one function of the application is to connect the exhibited and framed objects with other artefacts external to the museum and connected to them (same historical period, same types, same area of discovery, etc.).

For the laser scanner survey in particular, a FARO Focus 3D and a FARO ScanArm were used, with the classic reconstruction methods offered by the proprietary software.

The laser scanner data were reprocessed with a 0.2 mm grid and 1.0 mm line spacing.

To allow the scans to be aligned, each object was scanned on both sides. After scanning, the point clouds were cleaned to remove the surface of the table on which the objects were placed and any other unnecessary data.
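For a horizontal support surface, the table-removal step can be approximated by discarding points within a small tolerance of the table plane. This sketch assumes that the table is the lowest surface in each scan and that coordinates are in millimetres with the z axis pointing up; both are assumptions, and the actual cleaning was done in the proprietary software.

```python
from typing import Iterable, List, Optional, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in millimetres, z pointing up


def remove_table(points: Iterable[Point], tolerance_mm: float = 1.0, table_z: Optional[float] = None) -> List[Point]:
    """Drop points lying on (or just above) a horizontal table plane z = table_z.

    If table_z is not given, the lowest z in the scan is taken as the table height,
    which assumes the table is the lowest surface captured.
    """
    pts = list(points)
    if not pts:
        return []
    if table_z is None:
        table_z = min(p[2] for p in pts)
    return [p for p in pts if p[2] > table_z + tolerance_mm]


if __name__ == "__main__":
    scan = [(0.0, 0.0, 0.0), (5.0, 5.0, 0.4), (1.0, 1.0, 12.0), (2.0, 2.0, 1.5)]
    print(remove_table(scan))
```

A tilted table would instead require fitting a plane (for example with RANSAC) before filtering by point-to-plane distance.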

To create a single point cloud representing all sides of an object, the point clouds of the two sides were aligned with each other.

After the point clouds were merged, the photogrammetric model and the laser-scanned model were both imported into Geomagic Wrap. To replace the photogrammetric mesh with the laser-scanned one, the two first had to be aligned.

We used realistic and metrically accurate three-dimensional models obtained from the laser scanner and photogrammetry to create an application that allows the end user to obtain an overview of built environments.

This last aspect, thanks to the integration with virtual and mixed realities, allows for new and more modern methods of interaction with the building and, therefore, a better and broader understanding of its historical heritage.

The result was a photogrammetric 3D model with laser scanner precision [29]. Because the underlying mesh is more accurate, measurements taken from visual photographic texture cues are more accurate [30].

On the other hand, it should be remembered that, because of the high level of detail 3D models can offer, their size makes them difficult to manage. It is therefore desirable to minimise their size, making the models more usable, by acting exclusively on the minimum entities that compose the model mesh (vertices, edges, and faces).

Polygonal models use simple, regular rendering algorithms that map well onto hardware, so polygon rendering accelerators are available for every platform. However, the number of polygons in these models grows faster than our ability to render them interactively. Polygonal simplification techniques simplify small, distant, or otherwise unimportant portions of the model, reducing rendering cost without a significant loss in the scene's visual content (from 1976).
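A common way to decide which portions to simplify is to estimate how large an object appears on screen and to switch to a coarser level of detail (LOD) as it shrinks. The sketch below uses a pinhole-camera estimate with a hypothetical field of view and hypothetical thresholds. For reference, Unity's LOD Group component also switches levels according to the object's relative screen height.

```python
import math


def screen_fraction(object_height_m: float, distance_m: float, vertical_fov_deg: float = 60.0) -> float:
    """Approximate fraction of the screen height covered by an object (pinhole camera)."""
    visible_height = 2.0 * distance_m * math.tan(math.radians(vertical_fov_deg) / 2.0)
    return object_height_m / visible_height


def select_lod(fraction: float, thresholds=(0.5, 0.2, 0.05)) -> int:
    """Return 0 for full detail; higher numbers select coarser meshes."""
    for level, limit in enumerate(thresholds):
        if fraction >= limit:
            return level
    return len(thresholds)  # smaller than every threshold: coarsest level (or culled)


if __name__ == "__main__":
    # A hypothetical 2 m statue seen from increasing distances.
    for distance in (2.0, 4.0, 10.0, 50.0):
        print(distance, select_lod(screen_fraction(2.0, distance)))
```

Basing the choice on screen coverage rather than raw distance treats "small" and "distant" objects uniformly, which matches the rationale given above.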

There are numerous traditional automatic methods for mesh reduction that operate only on the minimum entities of the mesh (vertices, edges, and faces) [31]. However, in most cases, manually reorganising the mesh remains the most popular approach; we therefore used Blender and Maya.

Depending on the objective (preserving geometric accuracy by removing redundancies, preserving visual fidelity, minimising pre-processing time, achieving drastic simplification, relying on a fully automatic process, or keeping the code simple), one can choose among the many available algorithms. However, we chose to operate manually, also using BIM, and still obtained results that met our expectations. The 3D models were reduced to 500,000 faces because this is the size we found usable in Unity, whereas problems can occur with higher values. Therefore, each 3D model, regardless of its original number of faces, was either reduced to 500,000 faces or split into distinct blocks of at most 500,000 faces so that Unity could manage it. In other words, a complex 3D model was broken down into several blocks, each containing up to 500,000 faces.
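Splitting a model into blocks of at most 500,000 faces can be sketched as follows. Each block receives its own compact vertex list and re-indexed triangles. This naive version splits in face order, whereas a spatially coherent split (by region of the model) would usually be preferable. The function and constant names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Vertex = Tuple[float, float, float]
Face = Tuple[int, int, int]
MAX_FACES_PER_BLOCK = 500_000  # threshold reported in the text for Unity


def split_mesh(
    vertices: Sequence[Vertex],
    faces: Sequence[Face],
    max_faces: int = MAX_FACES_PER_BLOCK,
) -> List[Tuple[List[Vertex], List[Face]]]:
    """Split a triangle mesh into blocks of at most max_faces faces.

    Each block gets a compact vertex list and re-indexed faces; vertices on the
    seams between blocks are duplicated so that every block is self-contained.
    """
    if max_faces <= 0:
        raise ValueError("max_faces must be positive")
    blocks = []
    for start in range(0, len(faces), max_faces):
        remap: Dict[int, int] = {}  # original vertex index -> index within this block
        block_vertices: List[Vertex] = []
        block_faces: List[Face] = []
        for face in faces[start:start + max_faces]:
            new_face = []
            for index in face:
                if index not in remap:
                    remap[index] = len(block_vertices)
                    block_vertices.append(vertices[index])
                new_face.append(remap[index])
            block_faces.append((new_face[0], new_face[1], new_face[2]))
        blocks.append((block_vertices, block_faces))
    return blocks


if __name__ == "__main__":
    quad_vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]
    quad_faces = [(0, 1, 2), (1, 3, 2)]
    for block in split_mesh(quad_vertices, quad_faces, max_faces=1):
        print(block)
```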

In terms of vertices, it must be borne in mind that the meshes are triangular, so vertex and face counts decrease together: a typical simplification step (an edge collapse) removes one vertex together with the two faces that shared the collapsed edge, and in a closed triangle mesh the number of faces is roughly twice the number of vertices. Furthermore, in our case this elimination of faces and vertices was carried out first with the software mentioned above and then manually.
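For a closed, genus-0 triangle mesh, Euler's formula gives a useful rule of thumb for relating face and vertex counts (scanned meshes with holes or open borders satisfy it only approximately):

```latex
\begin{aligned}
3F &= 2E && \text{(each triangle has 3 edges; each edge is shared by 2 triangles)}\\
V - E + F &= 2 && \text{(Euler's formula for a closed genus-0 surface)}\\
\Rightarrow\quad F &= 2V - 4, \qquad V = \frac{F + 4}{2}
\end{aligned}
```

For example, a block of F = 500,000 faces corresponds to roughly V = 250,002 vertices. An interior edge collapse removes one vertex, three edges, and two faces, so it leaves V − E + F unchanged.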

In the future, we plan to work with some of the simplification algorithms.

We will also work to improve this application, trying to automate the reduction according to the functions required and to the use the tourist must make of the 3D model.

In any case, an average reduction value must be set somehow, bearing in mind the considerations above, namely that, for Unity to work well, the application must use model portions of at most 500,000 faces.

As for the artefacts inside the museum, given their complexity, different manual techniques were adopted and applied simultaneously, even on a single artefact.

Among the various manual techniques, the curve-based approach (creating new vertices at the intersections of new curves drawn on the surface) proved more convenient in the more geometric areas of the models (such as the pedestal and the rear column) and less so in the organic parts (such as the face, hands, and feet). Since this approach does not facilitate mesh reduction in the organic parts, there the vertices (the minimum entities of the mesh) were positioned manually, based on a preliminary topological study carried out in 2D. In the more geometric areas, by contrast, the edge flow was dictated by the shape itself, without resorting to singularities; the curves were simply placed along the edges.

As for the scenarios to be explored in the VR application, the 3D models obtained, once simplified and reduced, are associated with markers to recreate scenes within which users can navigate [32]. For simple scenes (with few interior elements), 3D models of the environment were used directly; these models replace the alternative of reconstructing the scenario as a box whose walls display default background images. Alternatively, for some scenarios, environments reconstructed directly in BIM can be used as navigable scenes (Figure 2). Figure 2 shows an example of the 3D reproduction of a medieval church in a BIM environment.

Figure 2. 3D BIM scenarios.

Technical Development: The Developed Application

Today, developing AR and VR applications is made easier by tools such as Vuforia and Unity, which make application creation quick and accessible even to non-professionals. The application developed by the laboratory mainly integrates content created by students during various teaching activities; each iteration highlights weaknesses and strengths that guide the next implementation, progressively improving the main aspects [33].

Unity is a cross-platform tool for creating 3D content, such as architectural visualisations or real-time 3D animations; its engine is written in C++, while scripts are written in C# and run on .NET. Programming is based on so-called GameObjects, to which scripts can be attached as components, graphical or not; these scripts extend the MonoBehaviour base class. In our application, a script attached to a client-server GameObject has the main task of checking the buffer for new data and updating the representation on both the client and the server [34].

This effect is achieved by using the remote procedure call (RPC) functions.

The action an object performs is chosen according to the buffer's content and is executed on both the server and the client versions of the object.
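The buffer-driven RPC mechanism described above can be simulated in a few lines. Events are queued in a buffer, each event name maps to an action, and polling the buffer applies the same action to both the client and the server replica of an object. This is an in-process Python sketch with hypothetical class and event names, not Unity's networking API, which would be used from C#.

```python
from collections import deque
from typing import Callable, Deque, Dict, List, Tuple


class Replica:
    """One copy (client or server) of a shared object and its displayed state."""

    def __init__(self, role: str) -> None:
        self.role = role
        self.state: Dict[str, object] = {}


Action = Callable[[Replica, object], None]


class RpcDispatcher:
    """Buffer of pending events; polling runs each event's action on every replica."""

    def __init__(self, replicas: List[Replica]) -> None:
        self.replicas = replicas
        self.buffer: Deque[Tuple[str, object]] = deque()
        self.actions: Dict[str, Action] = {}

    def register(self, event: str, action: Action) -> None:
        self.actions[event] = action

    def push(self, event: str, payload: object) -> None:
        self.buffer.append((event, payload))

    def poll(self) -> int:
        """Drain the buffer; return how many events triggered an action."""
        handled = 0
        while self.buffer:
            event, payload = self.buffer.popleft()
            action = self.actions.get(event)
            if action is None:
                continue  # unknown events are ignored in this sketch
            for replica in self.replicas:
                action(replica, payload)
            handled += 1
        return handled


def set_rotation(replica: Replica, degrees: object) -> None:
    """Example action: rotate the displayed model."""
    replica.state["rotation"] = degrees


if __name__ == "__main__":
    client, server = Replica("client"), Replica("server")
    dispatcher = RpcDispatcher([client, server])
    dispatcher.register("rotate", set_rotation)
    dispatcher.push("rotate", 90)
    dispatcher.poll()
    print(client.state, server.state)
```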

Vuforia is one of the most widely used platforms for developing AR applications on the main mobile devices. It simplifies the work by solving complex problems in AR development.

From a practical standpoint, the Vuforia software development kit (SDK) performs real-time image and object tracking. Virtual elements (e.g., 3D models) can be anchored to these "markers" and displayed on screen from the observer's viewpoint. The freedom to choose markers is important: the framework can recognise objects previously scanned with dedicated tools, images, and words, even several at the same time, and it is developer-friendly [35].
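Anchoring a virtual element to a tracked marker and drawing it from the observer's viewpoint amounts to composing the marker pose reported by the tracker with the model's local coordinates, then projecting the result with a pinhole camera model. The sketch assumes a camera frame with z pointing forward and y pointing down, and hypothetical intrinsics. In practice the marker pose would come from the AR SDK rather than being hard-coded.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]


def rotation_y(angle_deg: float) -> Mat3:
    """Rotation matrix about the y axis."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    return ((c, 0.0, s), (0.0, 1.0, 0.0), (-s, 0.0, c))


def marker_to_camera(rotation: Mat3, translation: Vec3, p_marker: Vec3) -> Vec3:
    """Apply the marker pose (R, t) to a point given in the marker's frame."""
    return tuple(
        sum(rotation[i][j] * p_marker[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


def project(p_camera: Vec3, fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """Pinhole projection to pixel coordinates (z forward, y down)."""
    x, y, z = p_camera
    if z <= 0.0:
        raise ValueError("point is behind the camera")
    return (fx * x / z + cx, fy * y / z + cy)


if __name__ == "__main__":
    # Hypothetical marker pose: 2 m in front of the camera, rotated 30 degrees about y.
    R, t = rotation_y(30.0), (0.0, 0.0, 2.0)
    tip = (0.0, -0.4, 0.0)  # a point 40 cm above the marker centre (y points down)
    print(project(marker_to_camera(R, t, tip), fx=800.0, fy=800.0, cx=320.0, cy=240.0))
```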

The Unity 3D application is divided into scenes: opening, loading, and exploration scenes, whose order is determined by the user's choices. The scene manager script performs initialisation operations, while the scene loader script loads the desired scene and controls transitions between scenes. The application is designed as a timeline of scenes [36]. The first part of the work focused on creating the main scene, corresponding to the virtual tour and containing all the information to be presented. The virtual tour is thus conceived as a series of scenes presented sequentially over time, creating the illusion of movement. Besides triggering animations or videos based on the device's position and orientation, motion tracking allows people to move around objects and interact with them as if they were part of the environment. Concurrent odometry and mapping (COM) is the process by which the smartphone determines its own location, so that the three-dimensional model is rendered correctly and appears realistic in AR; it autonomously detects planes and feature points to establish the constraints for positioning the object and the camera in space. Essentially, it allows the smartphone to understand its position relative to the world around it and therefore to interact realistically with the environment [37]. In addition, the application can estimate the surrounding light and illuminate virtual objects as if they were part of the environment.
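The scene flow described above (opening, loading, exploration) can be modelled as a small state machine in which the loader only allows valid transitions. The transition table and class names below are a hypothetical example, not the application's actual configuration.

```python
from enum import Enum
from typing import Dict, List, Set


class Scene(Enum):
    OPENING = "opening"
    LOADING = "loading"
    EXPLORATION = "exploration"


# Hypothetical transition table: which scenes the user may choose next.
TRANSITIONS: Dict[Scene, Set[Scene]] = {
    Scene.OPENING: {Scene.LOADING},
    Scene.LOADING: {Scene.EXPLORATION},
    Scene.EXPLORATION: {Scene.LOADING, Scene.OPENING},
}


class SceneLoader:
    """Keeps track of the active scene and enforces valid transitions."""

    def __init__(self) -> None:
        # Initialisation, as performed by the scene manager: start at the opening scene.
        self.current = Scene.OPENING
        self.history: List[Scene] = [self.current]

    def load(self, target: Scene) -> None:
        if target not in TRANSITIONS[self.current]:
            raise ValueError(f"cannot go from {self.current.value} to {target.value}")
        self.current = target
        self.history.append(target)


if __name__ == "__main__":
    loader = SceneLoader()
    for choice in (Scene.LOADING, Scene.EXPLORATION, Scene.LOADING, Scene.EXPLORATION):
        loader.load(choice)
    print([scene.value for scene in loader.history])
```

Routing every exploration scene through a loading scene keeps the heavy model loading in one place, which fits the emphasis on short loading times.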

We ran the test in Unity with the ARCore SDK, importing it into the Unity environment and enabling it under Player Settings > XR Settings > ARCore, and disabling Auto Graphics API (application programming interface) under Player Settings > Other Settings > Rendering. Next, the three-dimensional model was imported into the scene and the pose position (Center Eye) was defined. To achieve the desired effect, the Tracked Pose Driver and ARCore Session components were added to the MainCamera GameObject and configured, and finally the background was set to ARBackground.

To make the experience as realistic as possible while increasing user involvement, we allowed users to pause the experience and resume it later without having to start over, since restarting could considerably reduce their interest. When paused, the application shows a full-screen image, and the user can resume exactly from the point where the experience was interrupted. We chose this approach rather than ARCore's native pause because, after a medium-to-long pause such as a phone call, the latter forces the user to replay the entire scene from the beginning. Using a remote procedure call (RPC) function, it was possible, based on the contents of the buffer, to make the object in question perform an action defined for a predetermined event, with the action performed on both the client and the server object.
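The custom pause/resume behaviour can be sketched as follows. When the user pauses, a checkpoint (the current scene and position on the scene timeline) is saved; after an interruption that would otherwise restart the scene from the beginning, the checkpoint is restored. The class and method names are hypothetical, and persistence to disk is omitted.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Checkpoint:
    scene: str          # hypothetical scene identifier
    timeline_s: float   # position on the scene timeline, in seconds


class TourSession:
    def __init__(self, scene: str) -> None:
        self.scene = scene
        self.timeline_s = 0.0
        self.paused = False
        self.saved: Optional[Checkpoint] = None

    def advance(self, dt_s: float) -> None:
        """Move the timeline forward unless the tour is paused."""
        if not self.paused:
            self.timeline_s += dt_s

    def pause(self) -> Checkpoint:
        """Freeze the tour (a full-screen image would be shown here) and save a checkpoint."""
        self.paused = True
        self.saved = Checkpoint(self.scene, self.timeline_s)
        return self.saved

    def on_session_reset(self) -> None:
        """Simulate an interruption that restarts the scene from the beginning."""
        self.timeline_s = 0.0

    def resume(self) -> None:
        """Restore the saved checkpoint instead of replaying the scene."""
        if self.saved is None:
            raise RuntimeError("no checkpoint to resume from")
        self.scene = self.saved.scene
        self.timeline_s = self.saved.timeline_s
        self.paused = False


if __name__ == "__main__":
    session = TourSession("riace_bronzes")
    session.advance(42.5)
    session.pause()
    session.on_session_reset()  # e.g. a phone call interrupts the AR session
    session.resume()
    print(session.scene, session.timeline_s)
```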

The proposed application in this paper (currently under definition):

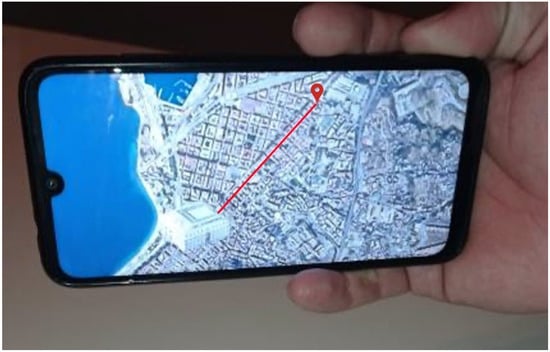

- Allows users to identify the minimum path to reach the museum from their location coordinates, displaying it in 3D on the map (Figure 3). The application uses the Global Positioning System (GPS) position received from the device to identify a series of alternative routes to the place of interest, listing the shortest route first. Figure 3 shows an example of the minimum route to the museum (which appears in 3D on the map) from the user's position (red dot).

Figure 3. Example of 3D visualisation in application of the minimum path to reach the MArRC.

- Offers the user a virtual guide that accompanies them to the museum and connects them with the surrounding environment (pointing out other artefacts) (Figure 4). This feature aims to ensure that less experienced tourists, who may lack information, do not miss the places of interest around them. Figure 4 shows a cartoonized "Bronze" used as a virtual guide.

Figure 4. Example of a cartoonized “Bronze,” used as a virtual guide.

- The other places of interest in the city within 100 m of the user are highlighted on the map, and the tourist can mark a place as interesting or already visited, or delete it from the map. Figure 5 shows an example in the application, where the user is represented by a red dot.

Figure 5. Example displaying the minimum path to the Museum and surrounding places of interest.

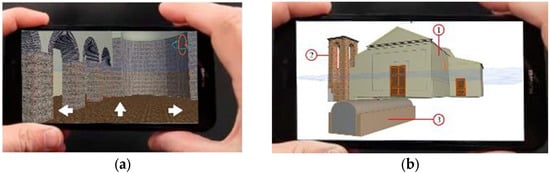

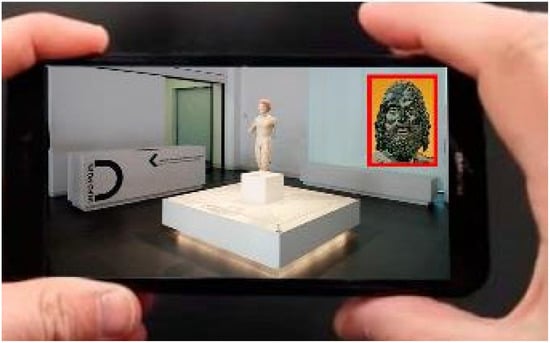

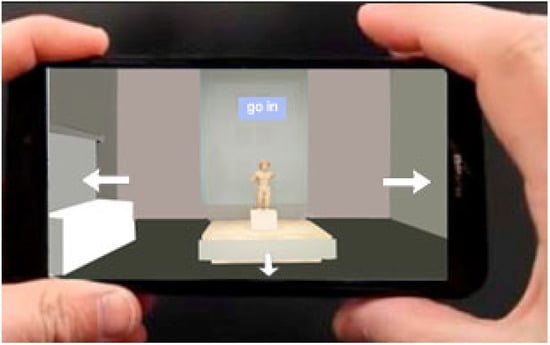

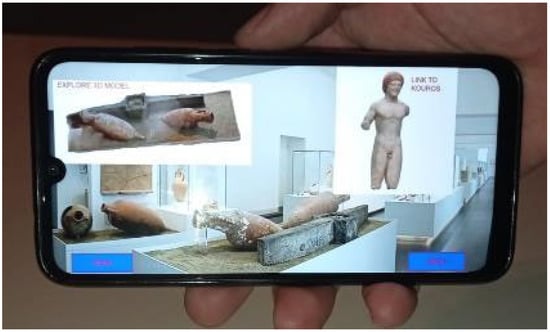

- The user can also take a virtual tour of the museum as a spectator or as an active participant by interacting via their device's screen (the base scenarios were built partly from photogrammetric models of the environments and partly from BIM models) (Figure 6). Figure 6 shows a complete 3D reconstruction, in a BIM environment, of the setting in which the 3D model obtained with photogrammetric techniques is located. Users can move between scenes using the arrows.

Figure 6. Virtual scenery and a 3D statue of Kouros.

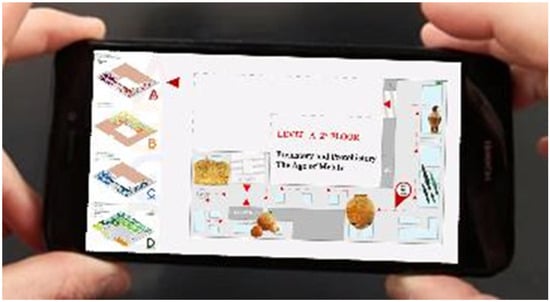

- The application displays the visitor's location on the museum floor plans, with the names of the rooms, where a red dot represents the user's real-time position in the various sections of the museum (Figure 7). Figure 7 shows the visitor's position in red on the floor plans, the room's name, and the main attraction.

Figure 7. User position.

- This feature aims to make tourists self-sufficient in choosing and customizing their route during the visit. The ability to mark areas already visited gives visitors a “free” choice of what to see.

- The application creates references and links to 3D models of artefacts from the same historical period, produced in advance with fast or precise techniques, and displays information about the history of the artefact that the smartphone camera is capturing. Here too, links between scenarios make the tourist’s browsing experience much more fluid and simple; more than 50 connections have been made for each element represented. To make the application more intuitive, the objects have been cataloged into areas, sections, and sub-sections. In Figure 8, the red dot marks the user’s position in the room, alongside information on the closest artefacts.

Figure 8. User position and information of the artefact closest to him.

- The application can play multimedia content, such as audio or video, on the user’s device (Figure 9). In Figure 9, the application starts playing a video associated with the framed statue “Bronze A”.

Figure 9. Multimedia content associated with the framed artefact.

- The application draws attention to “details of interest” on the framed artefact, so that the user can view the details of the most important works of art without having to approach them [38]. Figure 10 displays a zoom on a 3D model or a real image, highlighting some of the most interesting details.

Figure 10. Detail of the “Bronze”.

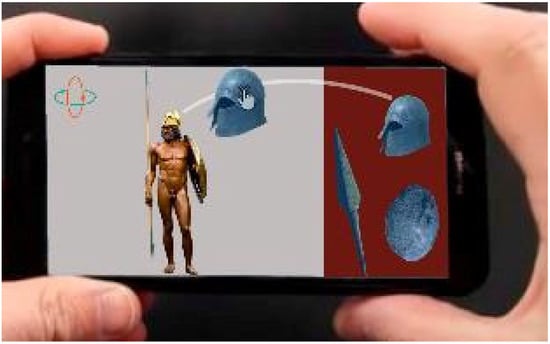

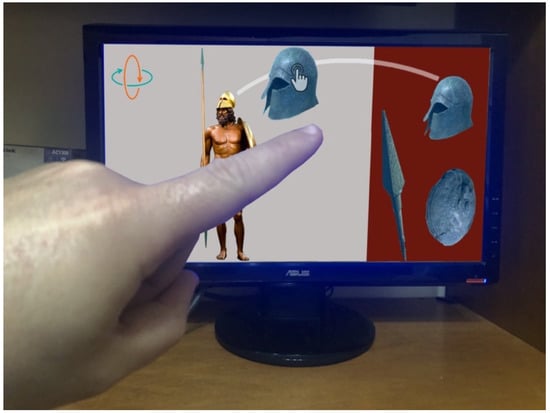

- The user can view the 3D model of the artefact and transform it (disassembly and assembly). Figure 11 illustrates an example on a bronze: users can add shields or spears to the statue and change its color.

Figure 11. Assembling 3D models.

- Users can reconstruct and assemble (even imaginatively) one or more 3D models (Figure 12). Figure 12 shows how the user can reconstruct a broken climbing mask.

Figure 12. Reconstruction of a 3D Model of a climbing mask.

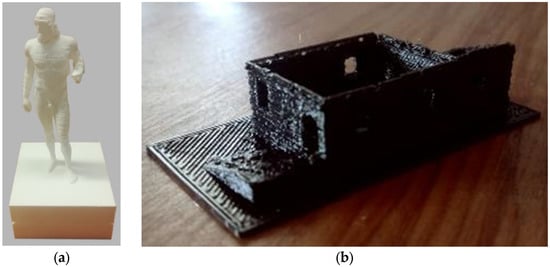

- Users can book a 3D print for later collection (Figure 13). Figure 13a displays a 3D print of the “Bronzo di Riace” reconstructed by photogrammetry; Figure 13b displays the print of a Saracen tower.

Figure 13. Print of a 3D model of: (a) a bronze and (b) an historical building.

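The navigation features listed above, namely the minimum path to the MArRC and the highlighting of places of interest within 100 m of the user, can be illustrated with a short sketch. It is written in Python rather than reproducing the application’s Unity code, and it rests on stated assumptions: the street network is a hypothetical graph with edge lengths in metres, the minimum path is computed with Dijkstra’s algorithm, and the 100 m test uses the haversine great-circle distance. All node names, place names, and coordinates are invented for illustration.

```python
import heapq
import math

# Hypothetical walking graph: node -> [(neighbour, length in metres)].
GRAPH = {
    "user": [("square", 120.0), ("promenade", 200.0)],
    "square": [("museum", 150.0), ("promenade", 60.0)],
    "promenade": [("museum", 40.0)],
    "museum": [],
}


def shortest_path(graph, source, target):
    """Dijkstra's algorithm: return (length in metres, node list), or (inf, [])."""
    dist = {source: 0.0}
    prev = {}
    queue = [(0.0, source)]
    settled = set()
    while queue:
        d, node = heapq.heappop(queue)
        if node in settled:
            continue  # stale queue entry
        settled.add(node)
        if node == target:
            break
        for neighbour, length in graph.get(node, []):
            candidate = d + length
            if candidate < dist.get(neighbour, math.inf):
                dist[neighbour] = candidate
                prev[neighbour] = node
                heapq.heappush(queue, (candidate, neighbour))
    if target not in settled:
        return math.inf, []
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return dist[target], path[::-1]


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres (mean Earth radius 6,371 km)."""
    r = 6_371_000.0
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def nearby_places(user, places, radius_m=100.0, hidden=frozenset()):
    """Names of places within radius_m of user (lat, lon), skipping deleted ones."""
    return sorted(
        name
        for name, (lat, lon) in places.items()
        if name not in hidden and haversine_m(user[0], user[1], lat, lon) <= radius_m
    )
```

For this toy graph, `shortest_path(GRAPH, "user", "museum")` returns a 220 m route via the square and the promenade, shorter than the 240 m route that heads straight for the promenade. Places the user deletes from the map are passed in `hidden` and are no longer highlighted.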

Having described the main functions, we stress that the distinctive feature of the application is its ability to identify the connection between an artefact detected inside the museum and related elements outside it. These connections were established mainly on the basis of historical period, area of origin, and symbolic importance for the city.
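The linking criteria above can be made explicit with a small scoring sketch in Python. The record fields, the rule of counting one point per shared criterion (period, area, symbolic role), and the attribute values used in any example are our own assumptions for illustration; the paper does not state how the connections are stored or ranked.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Element:
    """A heritage element; fields are hypothetical, chosen to mirror the criteria."""
    name: str
    inside_museum: bool
    period: str
    area: str
    symbols: frozenset = field(default_factory=frozenset)


def link_score(a, b):
    """Count the criteria two elements share: period, area, symbolic role."""
    score = 0
    if a.period == b.period:
        score += 1
    if a.area == b.area:
        score += 1
    if a.symbols & b.symbols:
        score += 1
    return score


def external_links(artefact, catalogue, min_score=1):
    """Names of external elements sharing >= min_score criteria, best first."""
    candidates = [
        (link_score(artefact, e), e.name)
        for e in catalogue
        if not e.inside_museum and e is not artefact
    ]
    ranked = sorted(candidates, key=lambda t: (-t[0], t[1]))
    return [name for score, name in ranked if score >= min_score]
```

With a Bronzes record and an Aragonese Castle record that share only a hypothetical “city symbol” tag, `link_score` returns 1, so the castle is proposed as a link at the default threshold but not with `min_score=2`.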

As examples, we report the connections between Athena and a clay mask, between the Kouros and a Dressel amphora, and between the Aragonese Castle and the Bronzes.

In particular:

- The clay mask is linked to the Athena Vest on the basis of studies dating the manufacture of both artefacts to the same period (Figure 14).

Figure 14. 3D Model of Athena and a clay mask in virtual scenario.


Indeed, both the Robe of Athena (made of marble, in excellent condition, and particularly appreciated by tourists for its harmonious and detailed workmanship) and the clay mask (reflecting the presence of the cult of Dionysus in art) date back to the period of Magna Graecia. The mask belongs to a large collection of theatrical masks and statuettes found in Magna Graecia funerary equipment, preserved in the classical section of the Museo Eoliano di Lipari and dating back to the 4th and 3rd centuries BC.

- The Kouros statue has been linked to the Dressel amphora on the basis of studies relating the manufacture of these artefacts to the area where they were found (Figure 15).

Figure 15. 3D Model of Kouros and the Dressel amphora in a virtual scenario.


Indeed, the statue of Kouros (c. 500 BC) is an important example of Calabrian archaic sculpture. With a thick head of hair styled in four rows of curls on the brow, long vertical curls, and braids knotted at the nape of the neck, it depicts a young man aged 18–20 in all his spiritual and physical beauty. Dressel amphorae (the most common amphorae of the late Roman Republican era, produced between 140/130 BC and the middle of the 1st century BC) were found close to the same study area, as documented in the archives.

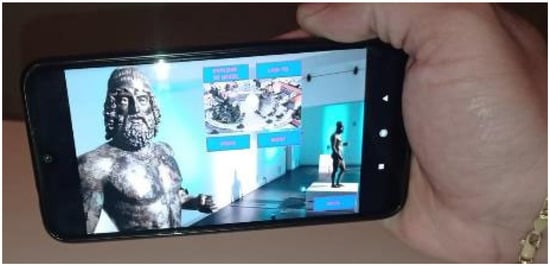

- The Bronzes were linked to the Aragonese Castle because both are considered symbols of the city (Figure 16).

Figure 16. 3D Model of Aragonese Castle and Bronze A in a virtual scenario.


In fact, the Bronzes and the Aragonese Castle are both important symbols of the city, to which its citizens are very attached. The “Riace Bronzes” are two magnificent bronze statues, known all over the world and considered among the most significant sculptural masterpieces of Greek art; they represent one of the greatest moments of sculptural production of all time and are the most important archaeological find of the last century. Although the current structure of the Aragonese Castle is typical of Aragonese defensive fortresses, the castle of Reggio actually has much older origins: traces of a pre-existing fortification have been found throughout the area adjacent to the castle itself.

3. Creating MxR Interactivity and Visualization

Mixed reality (MR, or MxR) combines the real and the virtual, allowing users to immerse themselves in the virtual world and interact with it [39].

Many head-mounted displays (HMDs) are available; Magic Leap, HoloLens, and Meta 2 are just a few popular MxR displays. Less expensive options, such as Holoboard and Mira, use a smartphone to process and display data (these are still in development).

Microsoft HoloLens (Figure 17) is a see-through, head-mounted optical display (HMD) designed for AR/MR interactions. It can be controlled by gaze, gestures, and voice. Head tracking, for example, lets the application focus on whatever the user is looking at. Interactions with virtual objects or the interface are supported through the bloom, air tap, and pinch gestures: an air tap selects any virtual object or button, much like a mouse click; tapping and pinching can also drag and move virtual objects; and the “bloom” gesture opens the shell (Start menu). Actions can also be triggered by voice commands.

Figure 17. Microsoft HoloLens.
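To make the gaze-and-air-tap interaction concrete: gaze casts a ray from the user’s head along the viewing direction, the first virtual object hit by the ray becomes the target, and an air tap acts on that target like a mouse click. The Python sketch below models this with bounding spheres. It is a geometric illustration under our own assumptions, not the HoloLens or Mixed Reality Toolkit API, and all object names are hypothetical.

```python
import math


def ray_sphere_distance(origin, direction, center, radius):
    """Distance along a unit-length ray to a sphere, or None if it is missed."""
    oc = [c - o for o, c in zip(origin, center)]
    t_closest = sum(a * b for a, b in zip(oc, direction))
    if t_closest < 0:
        return None  # sphere centre is behind the viewer
    d2 = sum(a * a for a in oc) - t_closest ** 2
    if d2 > radius ** 2:
        return None  # ray passes beside the sphere
    # 0.0 if the viewer is already inside the sphere
    return max(t_closest - math.sqrt(radius ** 2 - d2), 0.0)


def gaze_target(head, gaze_dir, objects):
    """Name of the nearest object hit by the gaze ray, or None."""
    norm = math.sqrt(sum(c * c for c in gaze_dir))
    unit = [c / norm for c in gaze_dir]
    hits = []
    for name, (center, radius) in objects.items():
        t = ray_sphere_distance(head, unit, center, radius)
        if t is not None:
            hits.append((t, name))
    return min(hits)[1] if hits else None


def on_air_tap(head, gaze_dir, objects, selected):
    """An air tap selects whatever is currently gazed at, like a mouse click."""
    target = gaze_target(head, gaze_dir, objects)
    if target is not None:
        selected.add(target)
    return target
```

Choosing the nearest hit mirrors how a pointer picks the front-most object when several overlap along the line of sight.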

HoloLens is used in many application areas; however, it is still used infrequently in the field of CH.

Below, we outline the main steps required to deploy 3D models on HoloLens for an MR experience.

Unity 3D (or Unity) is a popular cross-platform game engine. Because of its popularity, most AR/VR headsets support it as a development platform, and HoloLens AR/MR experiences are likewise created with Unity.

The first step is to set up the Unity development environment. This can be done in two ways: with Unity’s standard configuration, or with the Mixed Reality Toolkit, which is the option we used.

The Mixed Reality Toolkit is a Unity package containing a collection of custom tools developed by the Microsoft HoloLens team to help develop and deploy AR/MxR experiences on HoloLens.

The Mixed Reality Toolkit was downloaded from GitHub and imported into a Unity project as an asset package. After importing the Toolkit, the environment was configured at two levels. The first level changes the “Project Settings” through the Toolkit’s “Apply Mixed Reality Project Settings” option, which configures the Unity project as a whole. When applying it, “Universal Windows Platform Settings” must be checked, as well as “Virtual Reality Supported” in the XR Settings list, and the scripting backend, rendering quality, and player options should be included. These project-wide settings then apply to the scene created for the application.

Unity can build projects for various platforms; in this case, the project was created for the Universal Windows Platform (UWP). UWP is a Microsoft platform introduced with Windows 10 that helps developers create universal applications running on Windows 10, Windows 10 Mobile, Xbox One, and HoloLens without having to write code for each device, so a single build can target multiple devices. Before building the UWP project, add the scene in the “Build Settings”, enable C# debugging, and select HoloLens as the target device.

The second configuration level applies to each scene created within the project. This was done with the Toolkit’s “Apply Mixed Reality Scene Settings” option, which was used to set the camera position at scene level, add the custom HoloLens camera, and configure the render settings. The generated 3D model was then imported into the Unity project using Unity’s asset import option. Finally, gesture interaction was enabled on the model, so that users can manipulate it with gestures recognized by HoloLens. The Mixed Reality Toolkit includes scripts and tools that enhance the MxR experience; in our case, it provided gesture- and gaze-based interaction.
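The two configuration levels described above can be summarized as a checklist. The Python sketch below encodes the project-level and scene-level items named in the text as plain data and reports which ones are still missing. The keys are our own labels for the menu options mentioned above, not identifiers used by Unity or the Mixed Reality Toolkit.

```python
# Hypothetical checklist; keys paraphrase the settings named in the text.
REQUIRED = {
    "project": [
        "universal_windows_platform_settings",
        "virtual_reality_supported",
        "scripting_backend",
        "rendering_quality",
        "player_options",
    ],
    "scene": [
        "camera_position",
        "hololens_camera",
        "render_settings",
        "gesture_and_gaze_input",
    ],
}


def missing_settings(applied):
    """Return {level: [missing items]} for items not marked True in `applied`."""
    report = {}
    for level, items in REQUIRED.items():
        done = applied.get(level, {})
        missing = [item for item in items if not done.get(item, False)]
        if missing:
            report[level] = missing
    return report
```

An empty report means both levels are complete; keeping the checklist as data makes it easy to extend if further settings turn out to be required.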

Figure 9 depicts the build environment and the build phases for UWP. First, select “Build Settings” from the File menu, then “Add Open Scenes”, and select the scenes to deploy. Select “Universal Windows Platform” as the platform and set “Target Device” to HoloLens. Then check “Unity C# Projects” to enable C# debugging and click “Build”. At this stage, all the files required for HoloLens deployment (including the *.sln solution file) are created and stored in the user-specified location.
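Because the Visual Studio step that follows starts from the generated solution file, a small helper can locate it in the build folder. This Python sketch is a hypothetical convenience, not part of Unity; it assumes the build output folder is known and that exactly one *.sln file sits at its top level.

```python
from pathlib import Path


def find_solution(build_dir):
    """Return the single *.sln file at the top of build_dir, or raise an error."""
    solutions = sorted(Path(build_dir).glob("*.sln"))
    if not solutions:
        raise FileNotFoundError(f"no .sln file in {build_dir}; did the UWP build run?")
    if len(solutions) > 1:
        names = [s.name for s in solutions]
        raise RuntimeError(f"several .sln files in {build_dir}: {names}")
    return solutions[0]
```

Failing loudly when zero or several solutions are found avoids silently opening a stale project from an earlier build.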

The *.sln file produced in the previous “Build with UWP” step is opened in Microsoft Visual Studio for debugging and deployment to HoloLens. Deployment is started from the Debug menu, with the HoloLens connected via Wi-Fi or a USB cable.

With the HoloLens connected to the computer (here via USB) and the *.sln file open, select “Start Without Debugging” from the “Debug” menu. The build and deployment details are then displayed in the Output window; check that the deployment reports 1 succeeded and 0 failed. Once deployment completes, the application launches on HoloLens.
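The success check described above (one project deployed, zero failures) can be automated by parsing the summary line that Visual Studio writes to its Output window. The Python sketch assumes the usual summary format, for example `========== Deploy: 1 succeeded, 0 failed, 0 skipped ==========`; the exact wording may vary between Visual Studio versions, so the pattern should be checked against real output.

```python
import re

# Assumed summary format; verify against the Visual Studio version in use.
SUMMARY = re.compile(r"Deploy:\s*(\d+)\s+succeeded,\s*(\d+)\s+failed(?:,\s*(\d+)\s+skipped)?")


def deployment_ok(output_text, expected=1):
    """True if the last Deploy summary reports `expected` successes and 0 failures."""
    matches = SUMMARY.findall(output_text)
    if not matches:
        return False  # no deploy summary found (e.g. the build failed first)
    succeeded, failed, _skipped = matches[-1]
    return int(succeeded) == expected and int(failed) == 0
```

Only the last summary is inspected, so repeated deployments within one Output log are judged by the most recent attempt.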

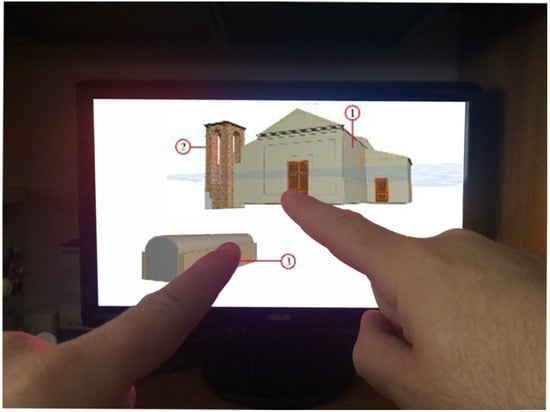

After deploying an application on HoloLens, the user can share the experience with others by streaming it over Wi-Fi to a larger screen; we used this to stream the HoloLens user experience. What the wearer sees and the content streamed to the other screen differ slightly. In our application, Figure 18 shows how the sensors in Microsoft HoloLens recognize hand movements and let the user interact with the virtual world we created: the user can virtually reconstruct the Riace Bronzes by moving missing elements such as the helmet, spear, and shield with their fingers. People who are not wearing the headset can follow the experience on a monitor connected to the same Wi-Fi network.

Figure 18. A HoloLens user experience shared on an external monitor.
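The reconstruction interaction described above (moving the helmet, spear, or shield onto the statue) is often implemented with a snap tolerance: when a released piece lies close enough to its target slot, it is moved exactly into place. The Python sketch below illustrates that idea. The slot positions and the 5 cm tolerance are hypothetical values, and the paper does not state which rule the application uses.

```python
import math

# Hypothetical target slots on the statue (metres, statue-local coordinates).
SLOTS = {
    "helmet": (0.0, 1.95, 0.0),
    "shield": (-0.35, 1.20, 0.10),
    "spear": (0.40, 1.30, 0.05),
}
SNAP_TOLERANCE_M = 0.05  # assumed snap radius


def release_piece(piece, position, placed):
    """Snap `piece` to its slot if released within tolerance; return its final position."""
    target = SLOTS[piece]
    if math.dist(position, target) <= SNAP_TOLERANCE_M:
        placed.add(piece)
        return target
    placed.discard(piece)  # left floating where the hand released it
    return position


def is_complete(placed):
    """The reconstruction is complete once every slot holds its piece."""
    return placed == set(SLOTS)
```

A tolerance of a few centimetres keeps fine finger placement from becoming frustrating, while still requiring the piece to be brought to the right part of the statue.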

Figure 19 shows the user exploring and interacting with the various architectural parts of the BIM model with their fingers, while sharing the experience with other viewers through an external monitor.

Figure 19. The user experience of HoloLens.

4. Discussion

The use of 3D models combined with AR/VR/MR therefore aims to provide knowledge of CH that would otherwise be closed to the public or difficult to access, without being invasive for such an important, ancient, and, in most cases, delicate heritage [41].

While many museums remain conservative in how they present their exhibits, a great number of them understand that the modern generation expects more modern forms of engagement, and they continually seek new ways to interact with their audiences. Today, that increasingly means embedding AR experiences.

AR overlays a layer of digital content on a real-life scene; in this case, it offers visitors a richer view of history, bringing it back to life.

An AR system of this kind combines the user’s view with a pre-recorded digital scene that responds strongly to the user’s actions: the user “explores” a real environment on which virtual elements are overlaid. Through labels and tags, knowledge can be viewed and shared beyond verbal communication; information that was once confined to individual linguistic communities becomes visual, and therefore understandable to everyone at a global level.

Unlike VR, AR does not replace physical reality. It superimposes computer-generated data on the real environment, so the user retains the sensation of being physically present in the landscape they see.

The core components of any AR system are tracking, real-time rendering, and visualization technologies [40,41,42,43,44,45].

Tracking is especially important because it records the position of the observer and of both real and virtual objects. Tracking accuracy and speed are essential for correctly displaying the virtual components of the scene [46,47].
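To see why tracking speed matters, consider latency alone: if the head rotates at angular velocity ω and the pose used for rendering is Δt seconds old, a virtual object at distance d appears displaced by roughly d·tan(ω·Δt). The Python sketch below evaluates this simple rotational-latency model, which ignores head translation and tracking noise; the numbers in the example are illustrative, not measurements from our application.

```python
import math


def latency_misregistration(omega_deg_s, latency_s, distance_m):
    """Apparent lateral offset (m) of a virtual object caused by tracking latency.

    Simple model: the head turns by omega * latency during the delay, so an
    object at distance_m drifts by distance_m * tan(angle). Translation is ignored.
    """
    angle = math.radians(omega_deg_s * latency_s)
    return distance_m * math.tan(angle)
```

With ω = 50°/s, Δt = 50 ms, and d = 2 m, the offset is about 8.7 cm, which is clearly visible when labels are overlaid on a statue; halving the latency roughly halves the error.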

The use of MR allows users to interact with the virtual world using their hands as tools, immersing them in a completely new playful, emotional, and educational experience. However, this methodology is limited by the small number of available hardware devices and by the limited software experimentation in this field [48,49]. Recent literature reflects the effort to apply these technologies to CH, including in museums; in particular, eye-tracking support is a promising approach closely connected with the perception of CH, which, although not considered in this study, we may investigate and implement in future work [50,51,52].

5. Conclusions

The enhancement of CH using 3D acquisition and simulation tools is a rapidly growing field of study. New technologies such as AR/VR/MR allow us to enhance our CH while completely changing the user experience. They make it possible to live an experience on multiple levels, including a virtual level rich in information (video, audio, or three-dimensional reconstructions) that would otherwise be impossible to provide.

Today, we are experiencing a revaluation of CH, which is no longer viewed only as the domain of specialized scholars but also as a resource for the economic development of local communities and regions.

3D modelling systems can favour the dissemination and enhancement of CH.

All the examples of technology use in CH described here aim to overcome the tradition/innovation dichotomy; we attempt to pave the way towards sharing tradition through new technologies such as AR/VR/MR.

In this setting, the observer uses more than just sight to experience something real and memorable. Thanks to 3D digital models and new technologies that allow interactive exploration, the “passive” viewer becomes an “active user” who decides what to see, which paths to take, and how to interact with objects or virtual environments, sharing their sensations and opinions; in this way, the experience remains in the visitor’s memory.

Author Contributions

Conceptualization, V.B., E.B. and G.B.; methodology, V.B. and E.B.; software, V.B., A.F. and G.B.; validation, V.B. and G.B.; formal analysis, E.B. and G.B.; investigation, E.B. and G.B.; resources, V.B., A.F., E.B. and G.B.; data curation, E.B. and G.B.; writing—original draft preparation, V.B. and E.B.; writing—review and editing, E.B. and G.B.; visualization, E.B. and G.B.; supervision, V.B. and G.B.; project administration, V.B., E.B. and G.B.; funding acquisition, V.B., A.F., E.B. and G.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bruno, F.; Bruno, S.; De Sensi, G.; Luchi, M.L.; Mancuso, S.; Muzzupappa, M. From 3D reconstruction to virtual reality: A complete methodology for digital archaeological exhibition. J. Cult. Herit. 2010, 11, 42–49.

- Kang, M.; Gretzel, U. Effects of podcast tours on tourist experience in a national park. Tour. Manag. 2012, 33, 440–455.

- Kim, M.J.; Hall, C.M. A hedonic motivation model in virtual reality tourism: Comparing visitors and non-visitors. Int. J. Inf. Manag. 2019, 46, 236–249.

- Jung, T.; Tom Dieck, M.C.; Lee, H.; Chung, N. Effects of virtual reality and augmented reality on visitor experiences in Museum. Inf. Commun. Technol. Tour. 2016, 621–635.

- Guttentag, D.A. Virtual reality: Applications and implications for tourism. Tour. Manag. 2010, 31, 637–651.

- Cheong, R. The virtual threat to travel and tourism. Tour. Manag. 1995, 16, 417–422.

- Dewailly, J.M. Sustainable tourist space: From reality to virtual reality? Tour. Geogr. 1999, 1, 41–55.

- Spicer, J.I.; Stratford, J. Student perceptions of a virtual field trip to replace a real field trip. J. Comput. Assist. Learn. 2001, 17, 345–354.

- Denard, H. The London Charter for the Computer-Based Visualisation of Cultural Heritage. 2009. Available online: https://www.vi-mm.eu/2016/10/28/the-london-charter-for-the-computer-based-visualisation-of-cultural-heritage/ (accessed on 1 April 2022).

- In Proceedings of the International Forum of Virtual Archaeology, Principles of Seville. International Principles of Virtual Archeology. 2011, p. 20. Available online: https://link.springer.com/chapter/10.1007/978-3-319-01784-6_16 (accessed on 1 April 2022).

- John, B.; Wickramasinghe, N. A review of mixed reality in Health Care. In Delivering Superior Health and Wellness Management with IoT and Analytics; Wickramasinghe, N., Bodendorf, F., Eds.; Springer: Berlin/Heidelberg, Germany, 2019; pp. 375–382. Available online: https://link.springer.com/book/10.1007/978-3-030-17347-0 (accessed on 1 April 2022).

- Baik, A.; Yaagoubi, R.; Boehm, J. Integration of Jeddah historical BIM and 3D GIS for documentation and restoration of the historical monument. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, XL-5/W7, 29–34. Available online: https://docplayer.net/14396855-Integration-of-jeddah-historical-bim-and-3d-gis-for-documentation-and-restoration-of-historical-monument.html (accessed on 1 April 2022).

- Muñoz, A.; Martí, A. New storytelling for archaeological museums based on augmented reality glasses. In Communicating the Past in the Digital Age; Hageneuer, S., Ed.; Ubiquity Press: London, UK, 2020.

- Alizadehsalehi, S.; Hadavi, A.; Huang, J.C. From BIM to extended reality in AEC industry. Autom. Constr. 2020, 116, 103254. Available online: https://www.sciencedirect.com/science/article/abs/pii/S0926580519315146 (accessed on 1 April 2022).

- Alizadehsalehi, S.; Hadavi, A.; Huang, J.C. Virtual Reality for Design and Construction Education Environment, AEI 2019; American Society of Civil Engineers: Reston, VA, USA, 2019; pp. 193–203.

- Museo Archeologico Nazionale Reggio Calabria. Available online: https://www.museoarcheologicoreggiocalabria.it/ (accessed on 18 March 2022).

- Fazio, L.; Lo Brutto, M. 3D survey for the archaeological study and virtual reconstruction of the sanctuary of Isis in the ancient Lilybaeum (Italy). Virtual Archaeol. Rev. 2020, 11, 1–14.

- Bae, H.; Golparvar-Fard, M.; White, J. High-precision vision-based mobile augmented reality system for context-aware architectural, engineering, construction and facility management (AEC/FM) applications. Vis. Eng. 2013, 1, 1–13.

- Ferrari, I.; Quarta, A. The Roman pier of San Cataldo: From archaeological data to 3D reconstruction. Virtual Archaeol. Rev. 2018, 10, 28–39.

- Barrile, V.; Fotia, A.; Candela, G.; Bernardo, E. Geomatics techniques for cultural heritage dissemination in augmented reality: Bronzi di Riace case study. Heritage 2019, 2, 136.

- Antlej, K.; Horan, B.; Mortimer, M.; Leen, R.; Allaman, M.; Vickers-Rich, P.; Rich, T. Mixed reality for museum experiences: A co-creative tactile-immersive virtual coloring serious game. In Proceedings of the 2018 3rd Digital Heritage International Congress (DigitalHERITAGE) Held Jointly with 2018 24th International Conference on Virtual Systems and Multimedia (VSMM 2018), San Francisco, CA, USA, 26–30 October 2018; Available online: https://research.monash.edu/en/publications/mixed-reality-for-museum-experiences-a-co-creative-tactile-immers (accessed on 18 March 2022).

- Antón, C.; Camarero, C.; Garrido, M.-J. Exploring the experience value of museum visitors as a co-creation process. Curr. Issues Tour. 2018, 21, 1406–1425.

- Rinaudo, F.; Bornaz, L.; Ardissone, P. 3D high accuracy survey and modelling for Cultural Heritage Documentation and Restoration. Vast 2007–future technologies to empower heritage professionals. In Proceedings of the 8th International Symposium on Virtual Reality, Archaeology and Intelligent Cultural Heritage, Brighton, UK, 26–29 November 2007; pp. 19–23.

- Barazzetti, L.; Remondino, F.; Scaioni, M. Orientation and 3D modelling from markerless terrestrial images: Combining accuracy with automation. Photogramm. Rec. 2010, 25, 356–381.

- Balletti, C.; Adami, A.; Guerra, F.; Vernier, P. Dal Rilievo Alla Maquette. Il Caso di san Michele in Isola. Archeomatica 2012, 24–30. Available online: https://mediageo.it/ojs/index.php/archeomatica/article/view/122 (accessed on 18 March 2022).

- Canciani, M.; Gambogi, P.; Romano, F.G.; Cannata, G.; Drap, P. Low cost digital photogrammetry for underwater archaeological site survey and artifact insertion. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2003, 34, 95–100.

- Bonora, V.; Tucci, G. Strumenti e metodi di rilievo integrato. In Nuove Ricerche Su Sant’Antimo; Peroni, A., Tucci, G., Eds.; Alinea: Firenze, Italy, 2008; Available online: https://books.google.com.au/books?hl=en&lr=&id=ZbtTTIwFeOAC&oi=fnd&pg=PA2&dq=Bonora,+V.and+Tucci,+G. (accessed on 18 March 2022).

- Brůha, L.; Laštovička, J.; Palatý, T.; Štefanová, E.; Štych, P. Reconstruction of lost cultural heritage sites and landscapes: Context of ancient objects in time and space. ISPRS Int. J. Geo-Inf. 2020, 9, 604.

- Micheletti, N.; Chandler, J.H.; Lane, S.N. Structure from motion (SFM) photogrammetry. Geomorphol. Tech. 2015. Available online: https://repository.lboro.ac.uk/articles/journal_contribution/Structure_from_motion_SFM_photogrammetry/9457355 (accessed on 18 March 2022).

- Green, S.; Bevan, A.; Shapland, M. A comparative assessment of structure from motion methods for archaeological research. J. Archaeol. Sci. 2014, 46, 173–181.

- Luebke, D.P. A developer’s survey of polygonal simplification algorithms. IEEE Comput. Graph. Appl. 2001, 21, 24–35.

- Bonetti, F.; Warnaby, G.; Quinn, L. Augmented Reality and Virtual Reality in Physical and Online Retailing: A Review, Synthesis and Research Agenda. In Augmented Reality and Virtual Reality; Jung, T., Tom Dieck, M., Eds.; Progress in IS; Springer: Cham, Switzerland, 2018.

- Han, D.I.; Tom Dieck, M.C.; Jung, T. User experience model for augmented reality applications in urban heritage tourism. J. Herit. Tour. 2018, 13, 46–61.

- Pietroszek, K.; Tyson, A.; Magalhaes, F.S.; Barcenas, C.E.M.; Wand, P. Museum in Your Living Room: Recreating the Peace Corps Experience in Mixed Reality. In Proceedings of the 2019 IEEE Games, Entertainment, Media Conference (GEM), New Haven, CT, USA, 18–21 June 2019; Available online: https://ieeexplore.ieee.org/abstract/document/8811547/ (accessed on 18 March 2022).

- Flavián, C.; Ibáñez-Sánchez, S.; Orús, C. The impact of virtual, augmented and mixed reality technologies on the customer experience. J. Bus. Res. 2019, 100, 547–560.

- Lee, H.; Jung, T.H.; Tom Dieck, M.C.; Chung, N. Experiencing immersive virtual reality in museums. Inf. Manag. 2020, 57, 103229.

- Aparicio Resco, A.; Figueiredo, C. El grado de evidencia histórico-arqueológica de las reconstrucciones virtuales: Hacia una escala de representación gráfica. Rev. Otarq Otras. Arqueol. 2017, 1, 235–247.

- Angheluta, L.M.; Radvan, R. Macro photogrammetry for the damage assessment of artwork painted surfaces. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W15, 101–107.

- Papagiannakis, G.; Lydatakis, N.; Kateros, S.; Georgiou, S.; Zikas, P. Transforming Medical Education and Training with VR Using Images. In SIGGRAPH Asia 2018 Posters; ACM Press: Tokyo, Japan, 2018; pp. 1–2. Available online: https://dl.acm.org/doi/10.1145/3283289.3283291 (accessed on 18 March 2022).

- Chung, N.; Lee, H.; Kim, J.-Y.; Koo, C. The Role of Augmented Reality for Experience-Influenced Environments: The Case of Cultural Heritage Tourism in Korea. J. Travel Res. 2018, 57, 627–643.

- Cuca, B.; Brumana, R.; Scaioni, M.; Oreni, D. Spatial data management of temporal map series for cultural and environmental heritage. Int. J. Spat. Data Infrastruct. Res. 2011, 6, 1–31.

- English Heritage, 3D Laser Scanning for Heritage, English Heritage Publishing. 2011. Available online: https://www.wessexarch.co.uk/news/3d-laser-scanning-heritage-2nd-edition (accessed on 18 March 2022).

- English Heritage, Photogrammetric Applications for Cultural Heritage, English Heritage Publishing. 2017. Available online: https://historicengland.org.uk/images-books/publications/photogrammetric-applications-for-cultural-heritage/ (accessed on 18 March 2022).

- Mollica Nardo, V.; Aliotta, F.; Mastelloni, M.A.; Ponterio, R.C.; Saija, F.; Trusso, S.; Vasi, C.S. A spectroscopic approach to the study of organic pigments in the field of cultural heritage. Atti Della Accad. Peloritana Dei Pericolanti-Cl. Di Sci. Fis. Mat. E Nat. 2017, 95. Available online: https://cab.unime.it/journals/index.php/AAPP/article/view/AAPP.951A5 (accessed on 18 March 2022).

- Barrile, V.; Bernardo, E.; Bilotta, G. An experimental HBIM processing: Innovative tool for 3D model reconstruction of morpho-typological phases for the cultural heritage. Remote Sens. 2022, 14, 1288.

- Barrile, V.; Fotia, A.; Ponterio, R.; Mollica Nardo, V.; Giuffrida, D.; Mastelloni, M.A. A combined study of art works preserved in the archaeological museums: 3D survey, spectroscopic approach and augmented reality. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2019, XLII-2/W11, 201–207.

- Barrile, V.; Bernardo, E.; Bilotta, G.; Fotia, A. “Bronzi di Riace” Geomatics Techniques in Augmented Reality for Cultural Heritage Dissemination. Geomat. Geospat. Technol. 2022, 1507, 195–215.

- Skublewska-Paszkowska, M.; Milosz, M.; Powroznik, P.; Lukasik, E. 3D technologies for intangible cultural heritage preservation—literature review for selected databases. Herit. Sci. 2022, 10, 3.

- Li, H.; Ito, H. Comparative analysis of information tendency and application features for projection mapping technologies at cultural heritage sites. Herit. Sci. 2021, 9, 126.

- Rusnak, M. Eye-tracking support for architects, conservators, and museologists. Anastylosis as pretext for research and discussion. Herit. Sci. 2021, 9, 81.

- Rusnak, M.A.; Ramus, E. With an eye tracker at the Warsaw Rising Museum: Valorization of adaptation of historical interiors. J. Herit. Conserv. 2019, 58, 78–90.

- Reitstätter, L.; Brinkmann, H.; Santini, T.; Specker, E.; Dare, Z.; Bakondi, F.; Miscená, A.; Kasneci, E.; Leder, H.; Rosenberg, R. The Display Makes a Difference: A Mobile Eye Tracking Study on the Perception of Art Before and After a Museum’s Rearrangement. J. Eye Mov. Res. 2020, 13.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).