1. Introduction

Crafts that are transmitted as Cultural Heritage (CH) are called Traditional Crafts (TCs) and exhibit both tangible and intangible dimensions. Traditional Crafts are presented in ethnographic museums and collections around the world and have recently received the attention of the scientific community and the public. Nevertheless, important barriers detach the public from such crafts, mainly due to the lack of practitioners and of connections with the community and the values of the society that practices or practiced them. Tools, materials, traditional machines, and artifacts lose part of their interest when presented outside the context of their use or production. With this motivation, reviving or reenacting the processes, locations, places, and traditions connected with crafts may provide new dimensions and enhance the museum visiting experience. Furthermore, this approach can strengthen the collaboration of the museum with its geographic context by connecting the ethnographic exhibits with their immediate urban and rural surroundings, where living elements of the craft and its traditions persist.

In this work, we explore an indigenous craft practiced in the south of the island of Chios in Greece, where a single type of tree has shaped the local trade, culture, and built environment. The resin harvested from the mastic tree is used for a wide range of products, mainly culinary, but also skincare and medicinal. The solidified resin is called mastic, and its liquid drops are metaphorically called ‘tears.’ This practice takes place in a cluster of nearby villages called Mastichochoria (Mastic Villages), where related traditions were formed. The know-how of cultivating mastic on the island of Chios is inscribed on the UNESCO Representative List of the Intangible Cultural Heritage of Humanity [1]. Traditional Crafts are recognized by the Convention for the Safeguarding of the Intangible Cultural Heritage (ICH) [2] as an independent category of ICH. The inscription presents social and traditional elements, such as the familial nature of cultivation, in which men, women, children, and elders participate on equal terms but take on different roles. The culture of mastic represents a comprehensive social event for the community, which considers mastic part of its identity. Less represented are the practical processes involved in mastic cultivation, as well as the post-processing of mastic for the creation of traditional mastic chicle.

The main challenge encountered in this research work concerns the representation and presentation of a craft that exhibits several peculiarities. First, the craft has shaped the traditions and culture of an entire community for at least six centuries, contributing to the identity of the community. Second, the craft affects the daily life, activities, symbols, myths, and stories of the community, as it is practiced all year long. Third, the craft and its past practice have affected the organization and architectural structure of villages and houses. Fourth, the commercial activities connected to the craft have affected the organization of cultivators. Fifth, the industrialization of the production of mastic products involved people from the same community working in the industrial facilities. Given these peculiarities, the representation of the craft requires historical, archival, ethnographic, and anthropological research, which is a challenge in itself. The truly challenging aspect of this research work, though, is how to transmit, within the narrow frame of a museum visit, the multitude of tangible and intangible dimensions of a craft that has affected the life of an entire community for centuries.

To address this challenge, this work combines historical research, research on archival material, and fieldwork on social dimensions with new anthropological and ethnographic research on mastic cultivation and production processes. Based on the outcomes, this work creates a craft representation that captures tangible and intangible craft dimensions. This representation includes audiovisual and 3D recordings of craft practices, as well as semantic descriptions that explain them. In addition, these descriptions extend to social and historical dimensions through the inclusion of narratives that contextualize physical assets and places.

The articulated form of this representation, and the structured way in which it encodes digital assets and knowledge, enable a multiplicity of ways in which this content can be used to enhance visits to an ethnographic museum presenting a craft.

2. Background and Related Work

The plethora of dimensions in the representation and presentation of Heritage Crafts inevitably leads to the need to integrate a multitude of research and application domains into a systematic methodology. Among these, this work focuses on the domains related to the digital representation of TCs and to advances in their presentation. The following sections summarize past research and outcomes relevant to this work.

2.1. Representation of Traditional Crafts

2.1.1. Digital Documentation

In the last two decades, extensive research on the digitization of artifacts and archives has taken place [3,4,5,6], leading to the formulation of sophisticated digitization techniques, including guidelines and best practice guides, e.g., [7]. In the same context, such efforts included the production of standardized digitization formats that enabled the creation of open knowledge bases such as Europeana [8]. More recently, interest has risen in capturing, modeling, and digitally preserving the intangible aspects of CH.

TCs have both tangible and intangible elements [2]. Furthermore, TCs exhibit a wide thematic range of CH topics of historical, societal, anthropological, and ethnographic interest.

Despite its importance, the scientific literature on the preservation and curation of TCs has only recently started to emerge, and only a few studies treat the topic in an integrated manner, given its multifaceted nature. This multifaceted nature has given rise to a range of definitions, which are explored in seminal attempts to theoretically define the notions and contexts of TCs [8,9]. Though a crisp definition is elusive, it is generally accepted that TCs are characterized, at a minimum, by (a) traditional materials and the technologies for manipulating them, (b) a certain type of product, (c) the dexterous use of tools and/or hand-operated machinery to make or repair useful things, and (d) a type of making that involves the knowledge and application of traditional designs [10,11].

In the above-defined context, the digital documentation of TCs includes the collection of data from digital recordings of concrete structures and dynamic scenes, which are called endurant and perdurant entities, respectively. Endurants are entities that exist in time, such as materials, tools, machines, workplaces, and craft products. Perdurants are entities that occur in time, such as events, actions, processes, practitioner postures, and gestures, as well as natural phenomena. Thus, for endurants, documentation includes the digitization of both structure and appearance. For perdurants, documentation includes the digitization of motion as well.
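The distinction above determines what has to be recorded for each entity. The following Python sketch encodes it as a small lookup. The names `EntityKind`, `ASPECT_MODALITIES`, and `documentation_plan` are hypothetical and do not belong to any Mingei tool. The modality assigned to each aspect is also a simplification of the options discussed in the next paragraphs.

```python
from enum import Enum


class EntityKind(Enum):
    ENDURANT = "endurant"    # exists in time: materials, tools, machines, workplaces, products
    PERDURANT = "perdurant"  # occurs in time: events, actions, processes, postures, gestures


# Hypothetical mapping from a documented aspect to a typical recording modality.
ASPECT_MODALITIES = {
    "structure": "3D documentation",
    "appearance": "photographic documentation",
    "motion": "MoCap or markerless computer-vision capture",
}


def documentation_plan(kind: EntityKind) -> dict[str, str]:
    """Return the aspects to digitize for an entity, with one modality per aspect."""
    aspects = ["structure", "appearance"]  # required for every entity
    if kind is EntityKind.PERDURANT:
        aspects.append("motion")  # perdurants additionally require motion
    return {aspect: ASPECT_MODALITIES[aspect] for aspect in aspects}


if __name__ == "__main__":
    for kind in EntityKind:
        print(kind.value, documentation_plan(kind))
```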

The digitization of endurant entities requires a variety of digitization modalities. The principal modalities for the documentation of the structure and appearance of endurant entities, whether small objects or wide-area environments, are photographic and 3D documentation.

Perdurant entities are captured by Motion Capture (MoCap) and Computer Vision (CV) methods, which record articulated human motion in 3D. These methods have been used to document body motion in dance [12,13,14] and theatre [15]. The applicability of each recording modality varies depending on the type of motion and the environment of practice. MoCap and video are employed in the recording of human motion. Optical MoCap is achieved by the visual tracking of wearable markers [16], while inertial MoCap is based on Inertial Measurement Units (IMUs) embedded in clothing [17]. CV methods infer articulated 3D human posture and motion from images and video without the use of markers. They are less invasive, but also less reliable and more sensitive to noise [18,19]. On the other hand, MoCap requires special equipment and is obtrusive, but provides direct and accurate 3D measurements.

2.1.2. Semantic Representation

Research on Semantic Web technologies for CH has been active for at least the past two decades, and such technologies are starting to be considered standard tools [20]. Pertinent approaches have a significant history, dating back to the pioneering work of Europeana [21]. During 2000–2010, project research targeted knowledge classification, stemming from library and archival science. Thus, the work focused on catalogs, collections, and artifact descriptions (MINERVA [22], Europeana Rhine [23], etc.). The main innovation was semantic search. During 2010–2015, the focus shifted towards richer, event-centric representations. The class “Event” is one of the basic classes that the Europeana Data Model [21] inherited from the CIDOC-CRM [24]. However, events were largely absent from institutional repositories, which made the outcomes of past research on knowledge classification difficult to reuse. For example, the class “Event” was not populated in the Danube release of Europeana in 2011 [25]. Since 2015, significant progress has been made, with breakthroughs in knowledge extraction from texts (e.g., [26,27]), scalable semantic systems based on solid implementations of Semantic Web standards (e.g., [25]), and the consolidation of existing ontologies, notably the CIDOC-CRM, to provide higher expressivity and domain coverage. Furthermore, this progress was supported by the development of new representations of CH artifacts, based on new digitization techniques, that are able to exploit the above-mentioned technological advances [28].

This work can be considered part of the third phase of the adoption of Semantic Web technologies, and it contributes to expanding the possibilities these technologies provide through narrative- and process-centric representations.

2.2. Presentation of Traditional Crafts

2.2.1. Virtual Humans

For the visualization of perdurant entities such as human motion, Virtual Humans (VHs) have been studied because they are able to simulate verbal and nonverbal communication. This type of interface is made possible by multimodal dialogue systems, which extend common speech dialogue systems with additional modalities, just as in human–human interactions [29]. Employing VHs as personal and believable dialogue partners in multimodal dialogues entails several challenges, as this requires not only reliable and consistent motion and dialogue behavior, but also nonverbal communication and affective components. Besides modeling the “mind” and creating intelligent communication behavior on the encoding side, which is an active field of research in artificial intelligence [30], the visual representation of a character from a decoding perspective, including its perceivable behavior, such as facial expressions and gestures [31], belongs to the domain of computer graphics and likewise raises many open issues concerning natural communication [32,33]. Building realistic VHs has traditionally been a great challenge, requiring a professional motion capture studio and heavy resources in 3D animation and design. Lately, workflows for the implementation of VHs have been proposed, based on current technological trends in wearable MoCap systems and advancements in software technology for their implementation, animation, and visualization [34]. The inclusion of VHs [35] in augmented content as demonstrators and narrators is also relevant to this work.

2.2.2. Augmented Reality for CH Applications

Augmented Reality (AR) technologies have the potential to provide novel experiences to museum visitors. The conventional use of AR is to interactively visualize digital assets in conjunction with physical exhibits [36,37]. A survey [38] highlighted that AR has a significant influence on the visitor experience.

Recently, AR has been reinforced by advances in mobile computing and display technologies [39,40]. Today, commercial applications are developed in a wide range of disciplines, including physical, industrial, and handicraft interaction [41,42]. AR systems use the display of the device to implement a transparency metaphor, in which the live feed from the camera is shown as if the device were transparent and the user were looking through the screen’s glass. In this illusion, the system realistically augments the feed with objects and events that are not present in the physical environment.

In this task, estimating the location and orientation of the device is crucial, and this estimation is largely based on the visual input from the camera. Robustness is supported by markers, which provide spatial references [43]. The proliferation of distinctive keypoint features has largely alleviated the need for markers, or has increased the flexibility in the appearance of markers to match the aesthetic needs of the installations [44]. More immersive experiences are provided by untethered AR headsets.

A common denominator in the degree of immersion is the compatibility of the appearance of the displayed content with the physical environment. True AR describes the condition of an AR experience that is indistinguishable from reality due to its high level of realism [45,46]. Several True AR examples can be found in [47,48,49,50]. In True AR, visual realism requires, at a minimum, treatment for geometric and photometric consistency. Geometric consistency requires that virtual objects and VHs be spatially registered in the environment to give the impression that they are part of it. Occlusions must therefore be handled when augmenting the video feed with virtual objects, and a 3D model of the environment facilitates the solution to this problem [32,51]. Photometric consistency dictates that the virtual content must be lit as if immersed in the light of the real environment. To achieve this, highlights, shadows, and brightness are rendered consistently with the physical environment [52].
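Occlusion handling for geometric consistency can be illustrated with a per-pixel depth test. The NumPy sketch below rests on two assumptions. First, a depth map of the physical scene has already been rendered from a registered 3D model of the environment for the current camera pose. Second, the virtual content (e.g., a VH) comes with its own depth buffer. The function name is hypothetical, and photometric consistency is not addressed here.

```python
import numpy as np


def composite_with_occlusion(camera_rgb, real_depth, virtual_rgb, virtual_depth):
    """Overlay virtual content on a camera frame, hiding it where real geometry is closer.

    camera_rgb, virtual_rgb: (H, W, 3) images.
    real_depth: (H, W) depth of the physical scene, assumed to be rendered from a
        registered 3D model of the environment for the current camera pose.
    virtual_depth: (H, W) depth of the virtual content, np.inf where nothing is drawn.
    """
    visible = virtual_depth < real_depth  # per-pixel depth test
    # Broadcast the (H, W) mask over the colour channels and select per pixel.
    return np.where(visible[..., None], virtual_rgb, camera_rgb)


camera = np.full((1, 3, 3), 100, dtype=np.uint8)   # grey camera frame
virtual = np.full((1, 3, 3), 200, dtype=np.uint8)  # bright virtual object
real_depth = np.array([[2.0, 2.0, 2.0]])           # a wall 2 m away
virtual_depth = np.array([[1.0, 3.0, np.inf]])     # in front, behind, absent

out = composite_with_occlusion(camera, real_depth, virtual, virtual_depth)
print(out[0, :, 0])  # [200 100 100]
```

In the toy frame, the virtual object is shown only in the first pixel, where it lies in front of the wall. It is hidden in the second pixel, and the third pixel contains no virtual content.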

AR systems facilitate the implementation of virtual museums [53]. Many AR approaches have emerged, each focusing on individual aspects of augmenting physical exhibits with digital content [54]. In [55,56], the importance of immersion for CH applications is underscored. In [57], a pipeline for highly realistic digital assets suitable for AR applications is proposed. In [58], antiquities were showcased through tours of an archaeological site using Meta AR glasses. Components for the development of AR applications for CH are proposed in [59].

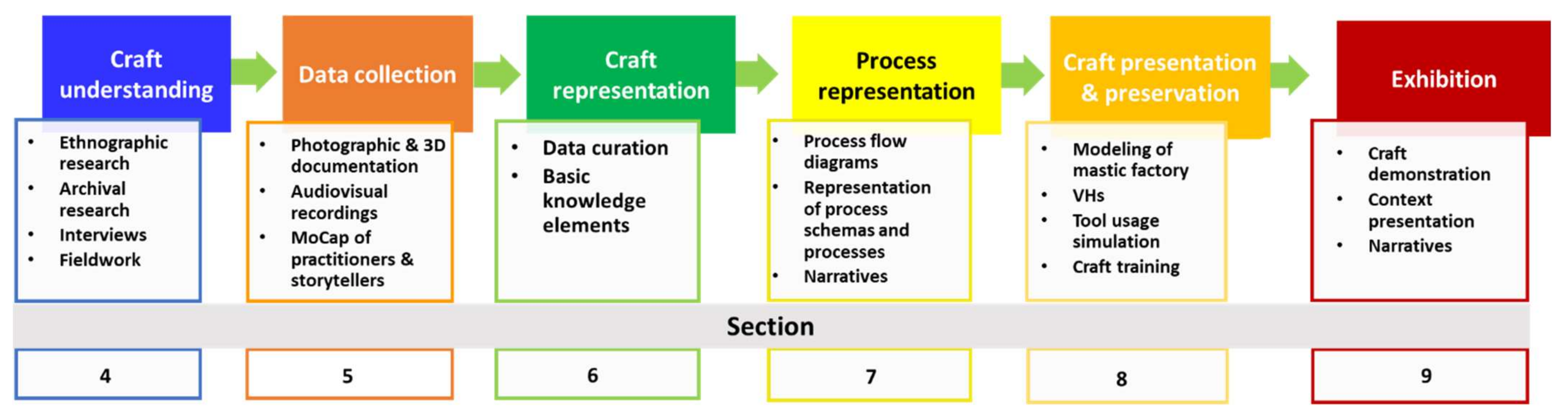

3. Methodology

The methodology proposed by this research work is inspired by the articulated approach to the documentation and representation of crafts proposed by the EU-funded project Mingei [60,61]. The main contribution of this methodology is that it provides a series of steps that, when applied according to the provided instructions, can lead to the representation of a TC. In turn, this representation is used to implement presentations for a plethora of dissemination channels. In this work, we report on the application and step-by-step adaptation of the proposed methodology to address the needs of the targeted TC. The technical implementation of the formal and digital representation of the crafting process is supported by the Mingei Online Platform (MOP) [62,63], where digital assets and semantic metadata are organized in a formal representation compatible with contemporary digital preservation standards. The proposed approach, as applied in the studied use case, is illustrated in Figure 1 and described as follows:

Craft understanding identifies the workflow of the crafting processes, the location of practice, the involved objects, and its actors.

Data collection is the recording of physical objects and of the human actions leading to the transformation of materials into articles of craft. The collected data are acquired as media objects, an abstraction of digital recordings such as images, video, 3D scans, and Motion Capture (MoCap) data, together with their technical metadata.

Craft representation refers to the definition of semantic metadata, or basic knowledge elements. These are the tools and affordances used in the crafting process, as well as the related objects, places, events, and materials.

Process representation refers to the representation of the crafting process in terms of the steps, and the interrelating conditions between them, that define the crafting process. Process execution representation refers to the semantic representation of an executed crafting process. The executed process steps are linked with recordings of the activity.

Craft presentation and preservation refers to the experiences built on top of this rich semantic representation.

The exhibition refers to the integration of experiences in a physical space where visitors can experience the represented craft instance.

Figure 1 also notes the sections and subsections that elaborate on each phase.

Considering that the presented methodology involves six steps, and that each of these steps requires specialized expertise and technologies, the rest of this paper is structured as follows. Craft understanding is presented in Section 4, followed by data collection (Section 5) and craft representation (Section 6). Based on the acquired knowledge, process representation is discussed in Section 7. Different presentations are elaborated in Section 8, while the final exhibition is analyzed in Section 9.

4. Craft Understanding

The first step of the proposed methodology is craft understanding, which identifies the workflow of the crafting processes, the location of practice, the involved objects, and its actors. The rich tradition and culture of mastic are transferred from one generation to another through training, social traditions, and stories. In this research work, it was decided that stories, given their value as a means of transferring knowledge in the museum context, should have a primary role in the provided experiences. To achieve this objective, the research included archives, photographic and audiovisual documentation, and interviews. Archival research focused on the archive of the Piraeus Bank Group Cultural Foundation (PIOP), which holds information on mastic and its cultivation, the production processes of mastic products, historical and social material, and financial data. Furthermore, literature on the mastic tree, mastic production, and historical and social facts was drawn from essays written by the curators of the Chios Mastic Museum and from the bibliography available at the Korais Central Public Library of Chios.

The photographic material studied included: (1) photographs from the Chios Mastic Growers Association, (2) photographs of mastic shops and merchants, (3) geographical and geological maps and diagrams, (4) photographs of women at work, (5) historical depictions of Chios and emblems of Genoese rule, as well as photographs of a Genoese galley from the Genoa Maritime Museum, (6) photographs and diagrams of houses from the mastic villages, (7) photographs and sketches of the mastic tree, (8) pharmacological announcements related to mastic, (9) portraits, (10) photographs and sketches of the mastic villages, (11) photographs depicting mastic processing (i.e., embroidering, collecting, sifting and cleaning, cleaning with water, and pinching), (12) product packages and advertisements, (13) photographs of machines, objects, and tools, (14) religious depictions, (15) photographs and diagrams of the settlements, and (16) photographs related to customs and traditions.

Audiovisual material studied included: (a) documentaries produced by television channels and cinematographers, (b) documentation videos by history researchers and ethnographers who cooperated with PIOP in the production and collection of material for the Chios Mastic Museum (the videos include interviews with mastic producers and with men and women who used to work at the Chios Mastic Growers Association), and (c) advertisement clips of popular mastic products.

The audio material included: (a) interviews with former employees of the Chios Mastic Growers Association on topics including chewing gum production, distillation, and descriptions of the factory and its machines, (b) radio advertisements of popular mastic products, (c) women singing traditional songs about mastic cultivation, and (d) recordings from the traditional feast of Agha. Part of the ethnographic work concerned an analysis of the mastic chicle production line and its machines.

The outcomes of this research were used to create narratives that the museum is intended to tell its visitors, in order to transmit the rich tradition of mastic and thus make visitors part of the social establishment of the craft. Narratives can be digitally transmitted in multiple ways, including through virtual narrators. In this work, narrators were initially represented as personas whose profiles and stories were inspired by the ethnographic research on the island’s population of mastic producers. As such, the profiles and stories of the factory workers are composites of material from the oral testimonies. Eight personas were created in total. The stories describe what life in the villages was like, how the workers grew up in the village (i.e., education, agricultural life, leisure time, adolescence, and married life), what led them to seek work at the Association in Chora of Chios, what their working life in the Association was like, and in which process(es) they worked. This information is divided into sections according to (a) family background and early and adult years of life, (b) working life in the Association, and (c) the explanation of the processes.

5. Data Collection

The acquired craft understanding is supported by the documentation of endurant and perdurant entities. For their digitization, various scientific disciplines and technical tools were employed. Context representations were acquired through 3D reconstruction and 3D modeling. Craft actions and narrations were captured using MoCap technologies. The following subsections summarize the key assets acquired.

5.1. Large and Medium Scale Representation of Geographical Context

In this research work, the relevant context included the mastic villages, which were shaped to support the production and economy of mastic cultivation, and the industrial processes supporting the creation of mastic products. As such, 3D context representations were acquired and implemented. For the reconstruction of the mastic villages of Chios, aerial images of the villages of Pyrgi, Mesta, Olympoi, and Elata were acquired via a drone. The subjects were large building complexes that pose several challenges due to their complex, medieval city-planning style, as well as their fortifications (see Figure 2).

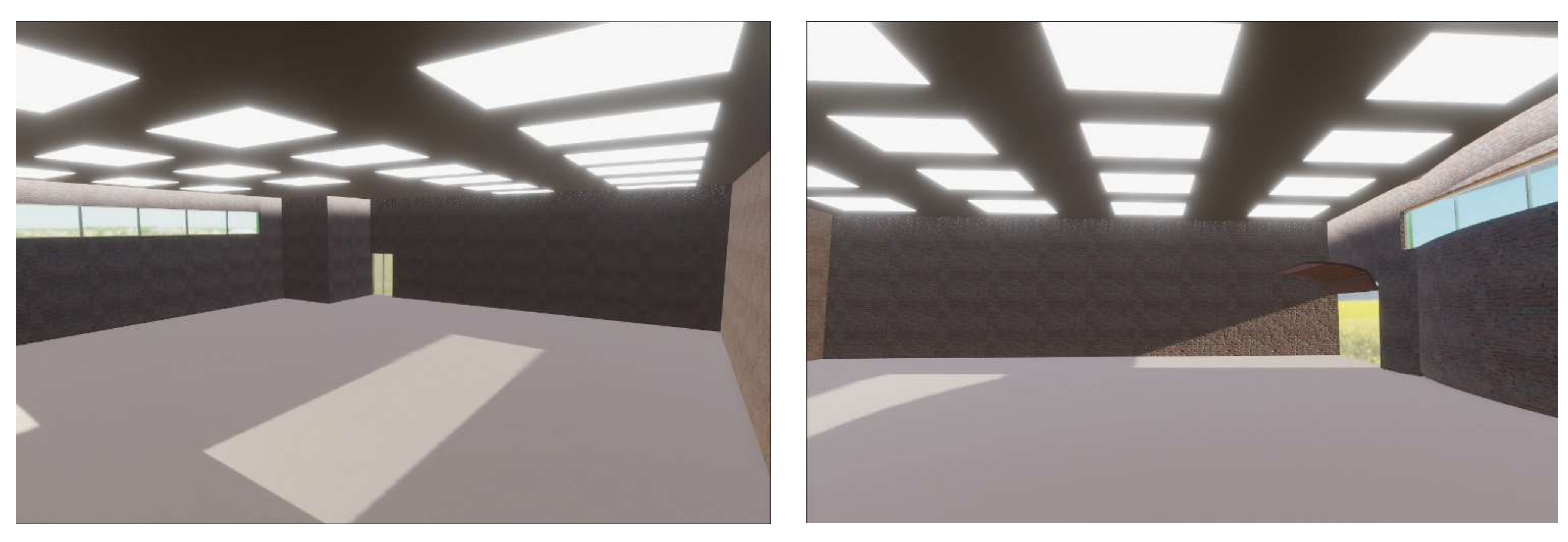

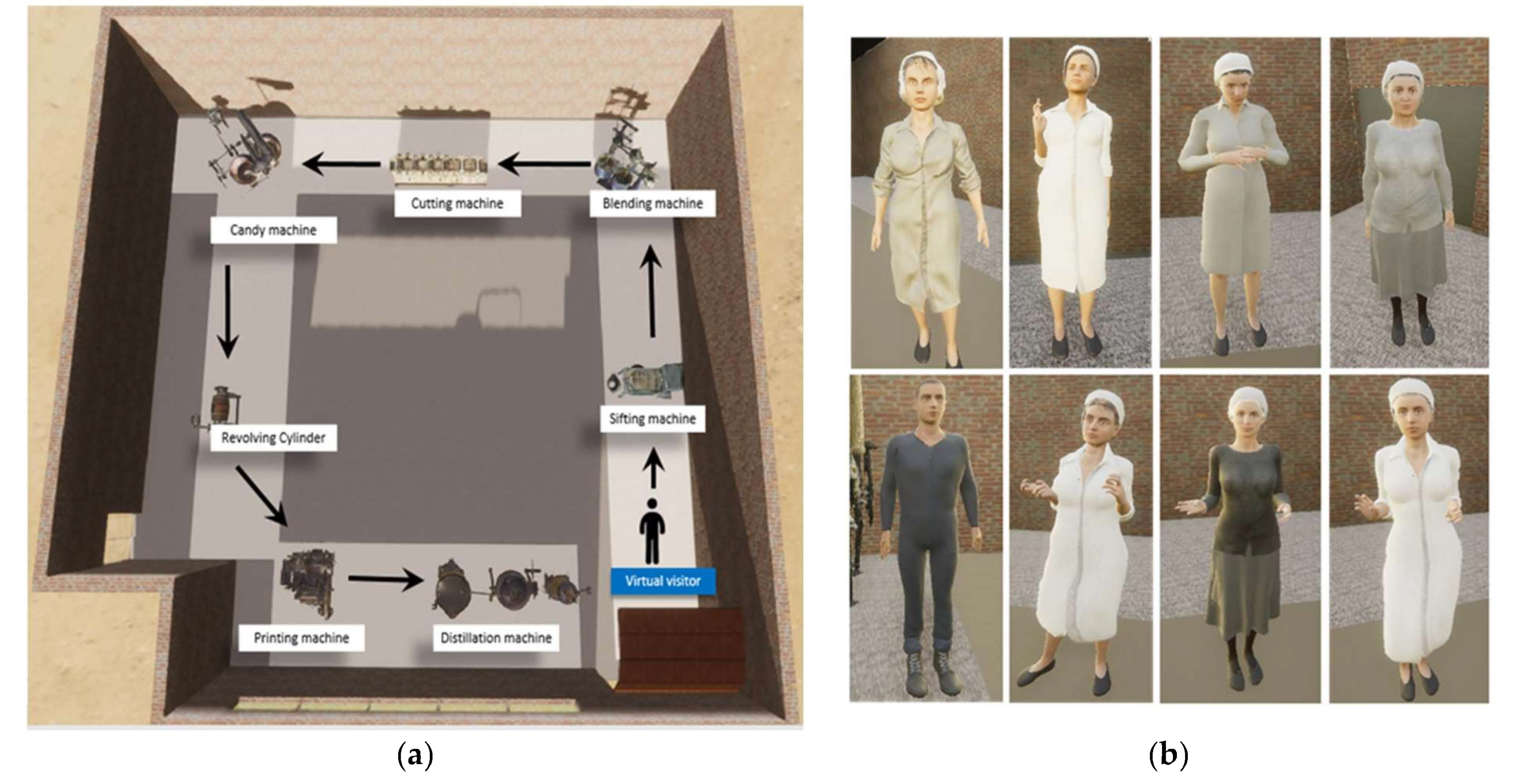

The virtual space for the industrial production of mastic chicle was created in the Unity 3D game engine [64] using the High-Definition Render Pipeline (HDRP) [65], and is shown in Figure 3.

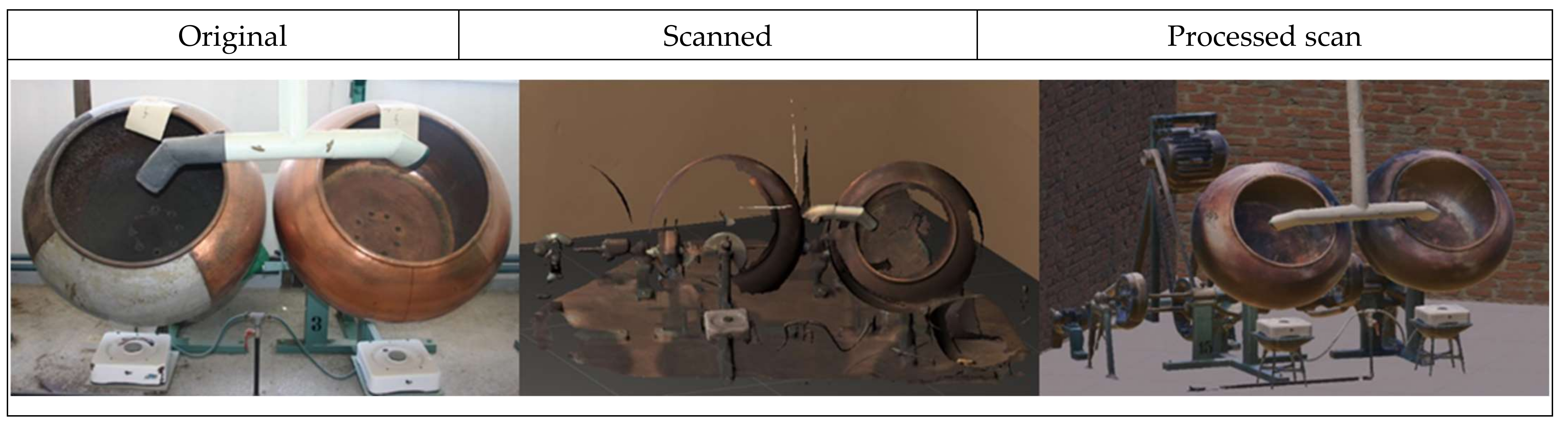

To build the 3D models of the machines that populate the implemented virtual space, the actual machines exhibited at the Chios Mastic Museum were scanned using a handheld tri-optical scanner, and photographic documentation was acquired. Using the Blender 3D software [66], the scanned models were post-processed to deal with known scanning issues related to reflective metallic materials, glass parts, etc. Indicative examples of the photographic documentation (Original), reconstruction results (Scanned), and post-processing outcomes (Processed scan) are shown in Figure 4.

5.2. Modeling of Mastic Cultivation Tools

For craft presentation, the perdurant entities described in the following subsections capture human motion in mastic cultivation activities. Since this motion involves the manipulation of tools, mastic cultivation tools were added to the modeled endurant entities. These are plain agricultural hand tools, modeled from scratch in 3D using Blender 3D [66] (see Figure 5).

5.3. MoCap of Mastic Cultivation Activities

The cultivation of mastic was recorded over three days, from 11 to 13 September 2019, in Chios, Greece. Due to the nature of the cultivation process, each motion was split into different recordings. The tasks related to the cultivation of mastic are illustrated in Table 1. This resulted in separate motion files for each part of the process. In general, the cultivation of mastic was recorded realistically. However, in the actual cultivation and harvesting process, the tasks are days or weeks apart and usually take hours to complete. As such, experts had to perform brief, yet still realistic, examples of the gestures. Recordings that required kneeling or sitting on the ground contained errors in the joint angles of the legs, probably because the sensors interfered with each other. The motion files were corrected offline.

5.4. MoCap of Narrations

Using the Rokoko equipment and software, a unique animation was recorded for each narration. To make the narration movements more realistic, the voice of the narrator was captured synchronously. This synchronization of voice and movement resulted in a more natural narration (see Figure 6). Once the narration animations and audio were recorded, we segmented them, removing redundant sequences and fixing recording errors in both streams (e.g., the same phrase being repeated more than once). Then, the segments were joined again and exported as a single motion sequence and a single audio file.
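The key constraint in this editing step is that every cut must remove the same time interval from both streams, so that voice and movement stay synchronized. The Python sketch below shows one way to do this. The frame rate, sample rate, and function name are assumptions chosen for illustration and are not properties of the Rokoko pipeline.

```python
MOCAP_FPS = 60        # assumed motion-capture frame rate (frames per second)
AUDIO_RATE = 48_000   # assumed audio sample rate (samples per second)


def keep_segments(frames, samples, keep):
    """Cut the same time intervals from both streams and join them.

    frames: per-frame poses from the motion recording.
    samples: audio samples recorded synchronously with the motion.
    keep: list of (start_s, end_s) intervals, in seconds, to retain in order.
    """
    out_frames, out_samples = [], []
    for start, end in keep:
        # Convert seconds to indices separately for each stream's rate.
        out_frames.extend(frames[round(start * MOCAP_FPS):round(end * MOCAP_FPS)])
        out_samples.extend(samples[round(start * AUDIO_RATE):round(end * AUDIO_RATE)])
    return out_frames, out_samples


# A 10 s take in which the phrase spoken between 4 s and 6 s is a repetition.
frames = list(range(10 * MOCAP_FPS))
samples = list(range(10 * AUDIO_RATE))
f, s = keep_segments(frames, samples, [(0.0, 4.0), (6.0, 10.0)])
print(len(f) / MOCAP_FPS, len(s) / AUDIO_RATE)  # 8.0 8.0
```

Both edited streams last 8 s, so they remain aligned. Cut points that do not fall on frame boundaries would introduce small rounding drift. Choosing cuts at whole frames avoids this.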

6. Craft Representation

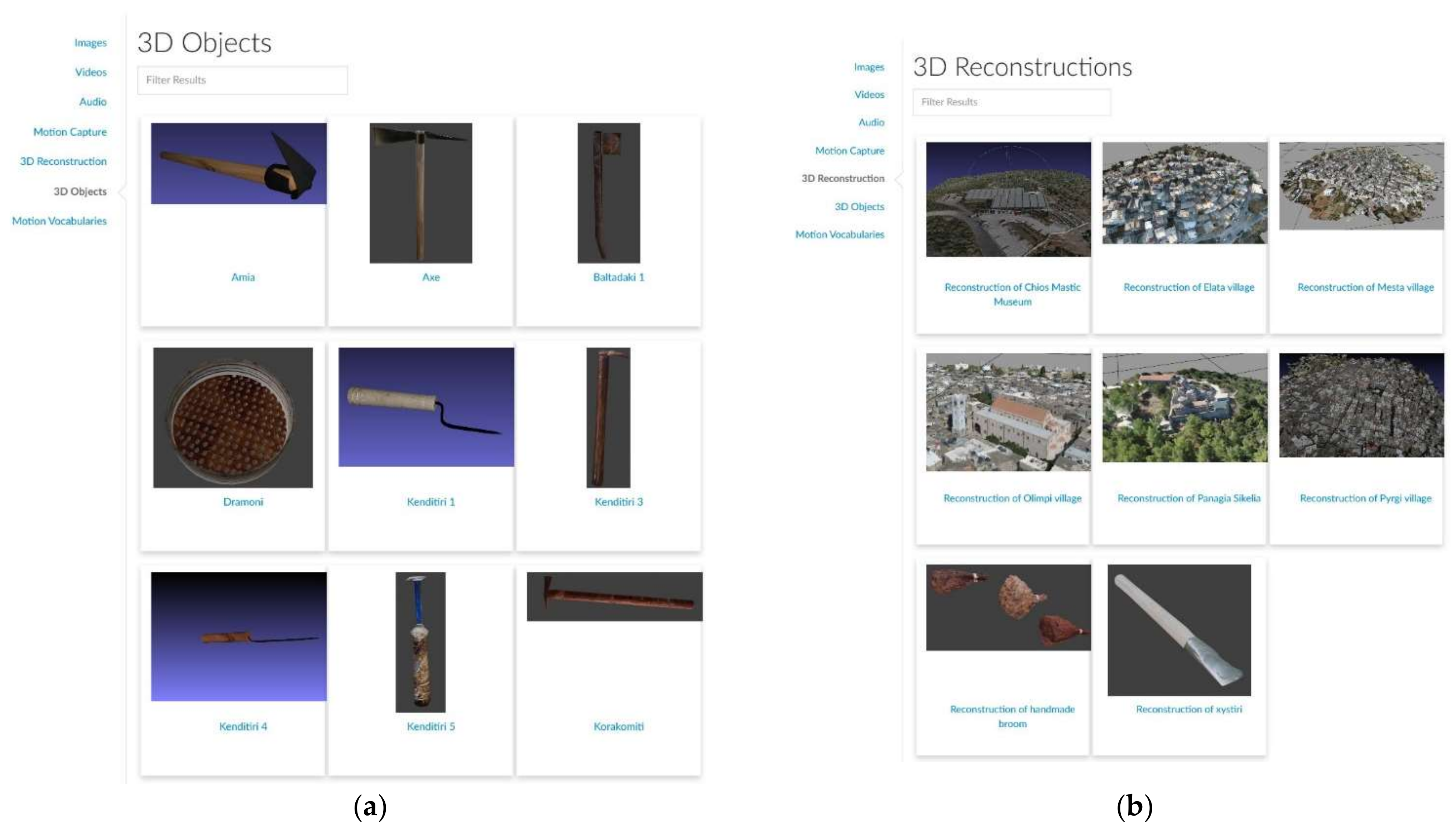

In this step, the acquired documentation of objects and actions is stored in a repository and semantically represented. The representation employs a few classes from the Mingei Crafts Ontology, called basic knowledge elements, which contain links to the semantic metadata provided by the user and to the digital assets formed in the previous step. In motion recordings, reference postures and gestures are identified using the AnimIO annotation editor [61], which facilitates the body-member-specific annotation of motion recordings. The recording segments are combined in the context of the Event knowledge entity, which contains links to the representations of the Location, Participants, Tools, Materials, and (intermediate) Products pertinent to the Event. Conceptually, Events align with the steps of the process, and both can be hierarchically analyzed into sub-events and sub-steps.
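To make the hierarchical Event structure concrete, the following Python sketch models an Event with its links and sub-events. It also collects the tools used across the hierarchy. The class is a hypothetical, simplified stand-in and does not reproduce the actual schema of the Mingei Crafts Ontology or the MOP. The tool labels and segment identifiers in the example are illustrative placeholders.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Iterator, Optional


@dataclass
class Event:
    """Hypothetical, simplified stand-in for an Event knowledge element."""
    name: str
    location: Optional[str] = None
    participants: list[str] = field(default_factory=list)
    tools: list[str] = field(default_factory=list)
    materials: list[str] = field(default_factory=list)
    products: list[str] = field(default_factory=list)
    segments: list[str] = field(default_factory=list)  # ids of annotated motion segments
    sub_events: list[Event] = field(default_factory=list)

    def walk(self, depth: int = 0) -> Iterator[tuple[int, Event]]:
        """Yield (depth, event) pairs in depth-first order."""
        yield depth, self
        for sub in self.sub_events:
            yield from sub.walk(depth + 1)

    def all_tools(self) -> set[str]:
        """Collect the tools used anywhere in this event hierarchy."""
        return {tool for _, event in self.walk() for tool in event.tools}


harvest = Event(
    "Mastic harvest",
    location="Mastichochoria, Chios",
    participants=["cultivator"],
    sub_events=[
        Event("Embroidering", tools=["incision tool"], segments=["mocap-seg-01"]),
        Event("Sifting", tools=["sieve"], materials=["collected mastic"],
              products=["sifted mastic"]),
    ],
)
for depth, event in harvest.walk():
    print("  " * depth + event.name)
print(sorted(harvest.all_tools()))  # ['incision tool', 'sieve']
```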

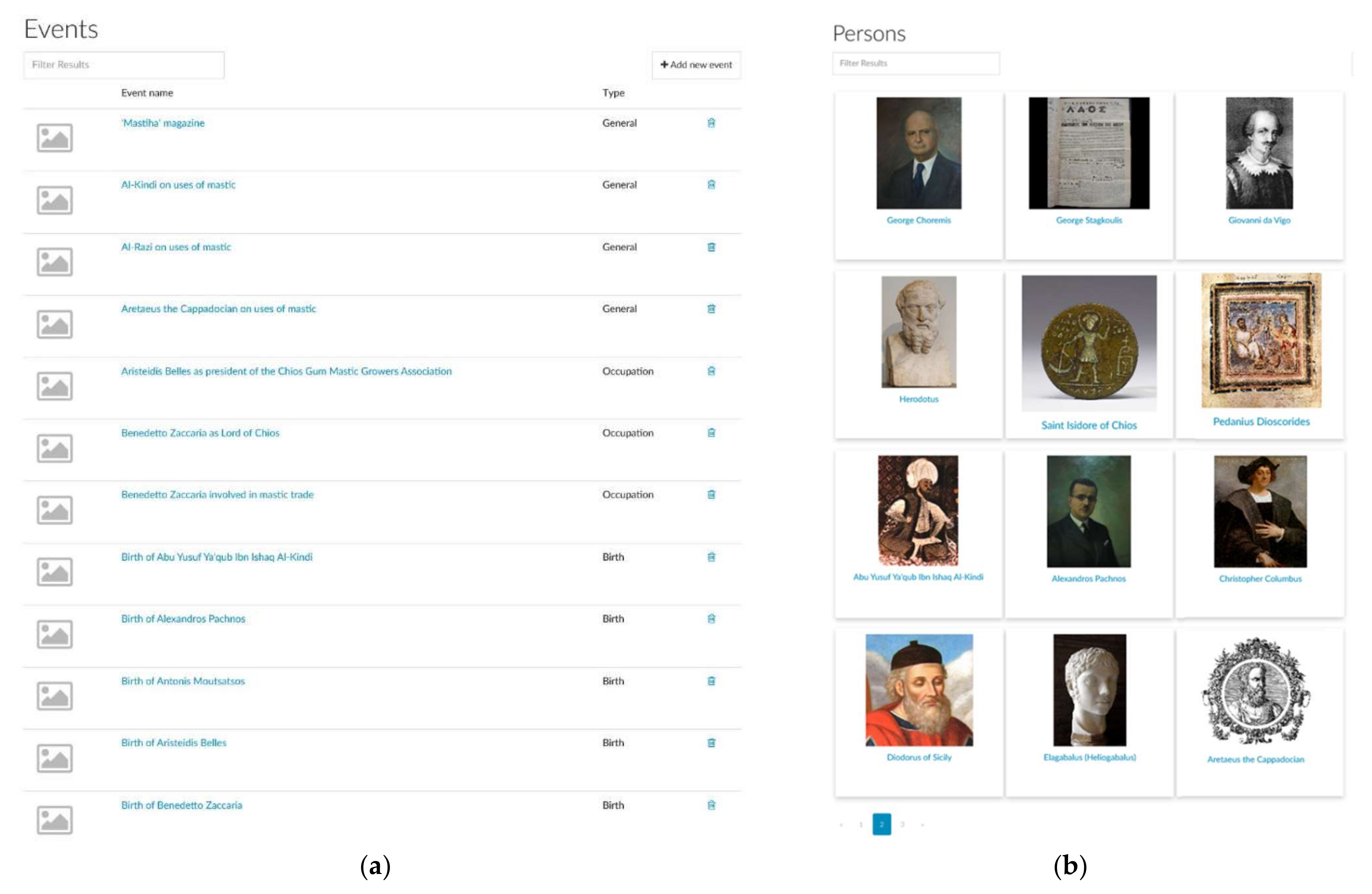

Using the MOP, all knowledge elements are created through form-filling operations, as shown in Figure 7 and Figure 8. Each type of element has a dedicated Web form, where its metadata are edited. Furthermore, facilities to create links with other knowledge elements are provided. Links are provided in the form of URIs for external resources, or in the form of semantic links for digital items curated in the MOP. Moreover, knowledge elements are linked to relevant media objects.

7. Process Representation

For the representation of craft processes, we adopt the approach proposed by Mingei [67]. In this approach, to encode craft understanding, activity diagrams are borrowed from the Unified Modeling Language (UML) [68] and used in the following sense. While in UML they represent computational actions that transform data, in this work they represent physical actions that transform materials. The transition types Transition, Fork, Merge, Join, and Branch are adopted and denoted as in UML. Activity diagrams can be defined hierarchically, allowing the level of representation detail to be increased at later stages. Moreover, their visual nature was found to support collaboration with practitioners. The progression of sequential steps is modeled by a Transition link. Forks are used to represent the initiation of two or more parallel tasks. In a Merge transition, two or more control paths unite, while a Join connects steps that must all be completed before the transition to the next step. The Merge and Join transitions are structurally similar, but a Join is a synchronization across a set of parallel flows, while in a Merge only a single flow is active. Finally, Branch transitions connect a step with a decision step that accepts tokens on one incoming edge and selects one outgoing alternative. Branch nodes thus control the flow of a process by selecting one of several alternatives based on the outcome of a condition evaluation.

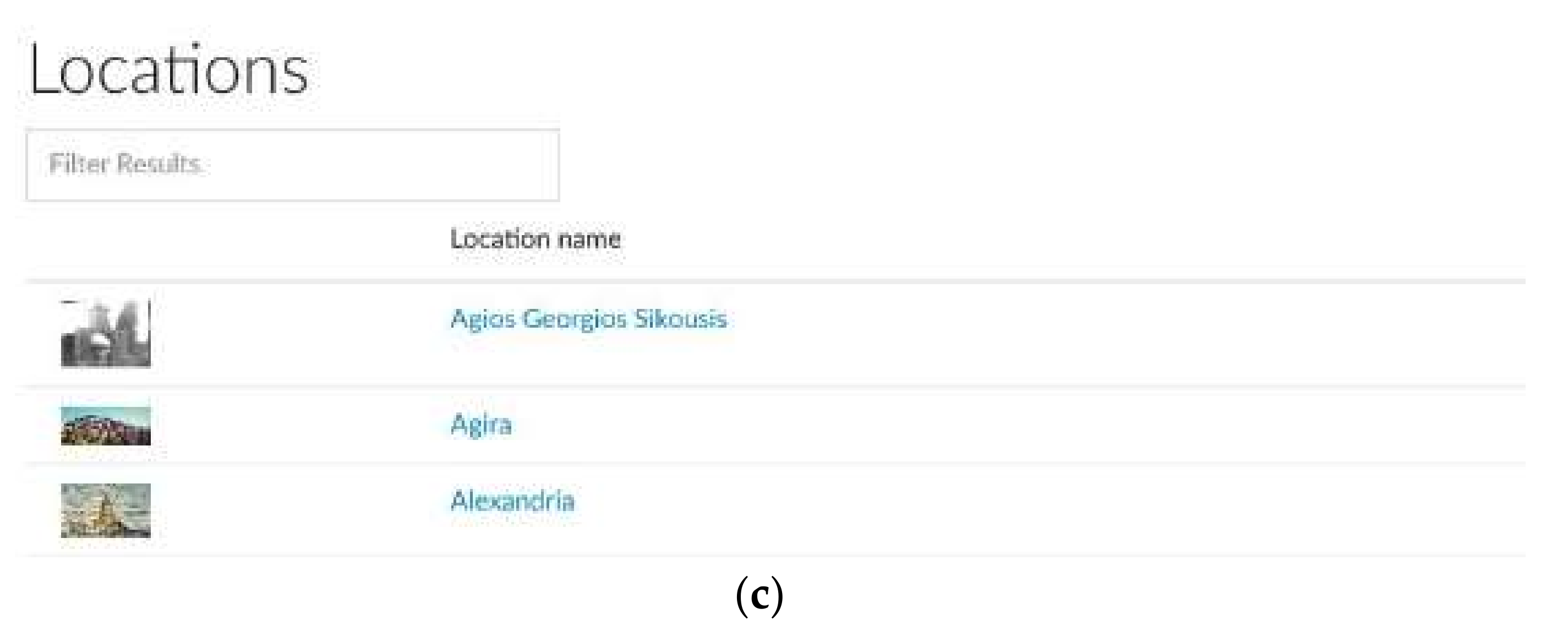

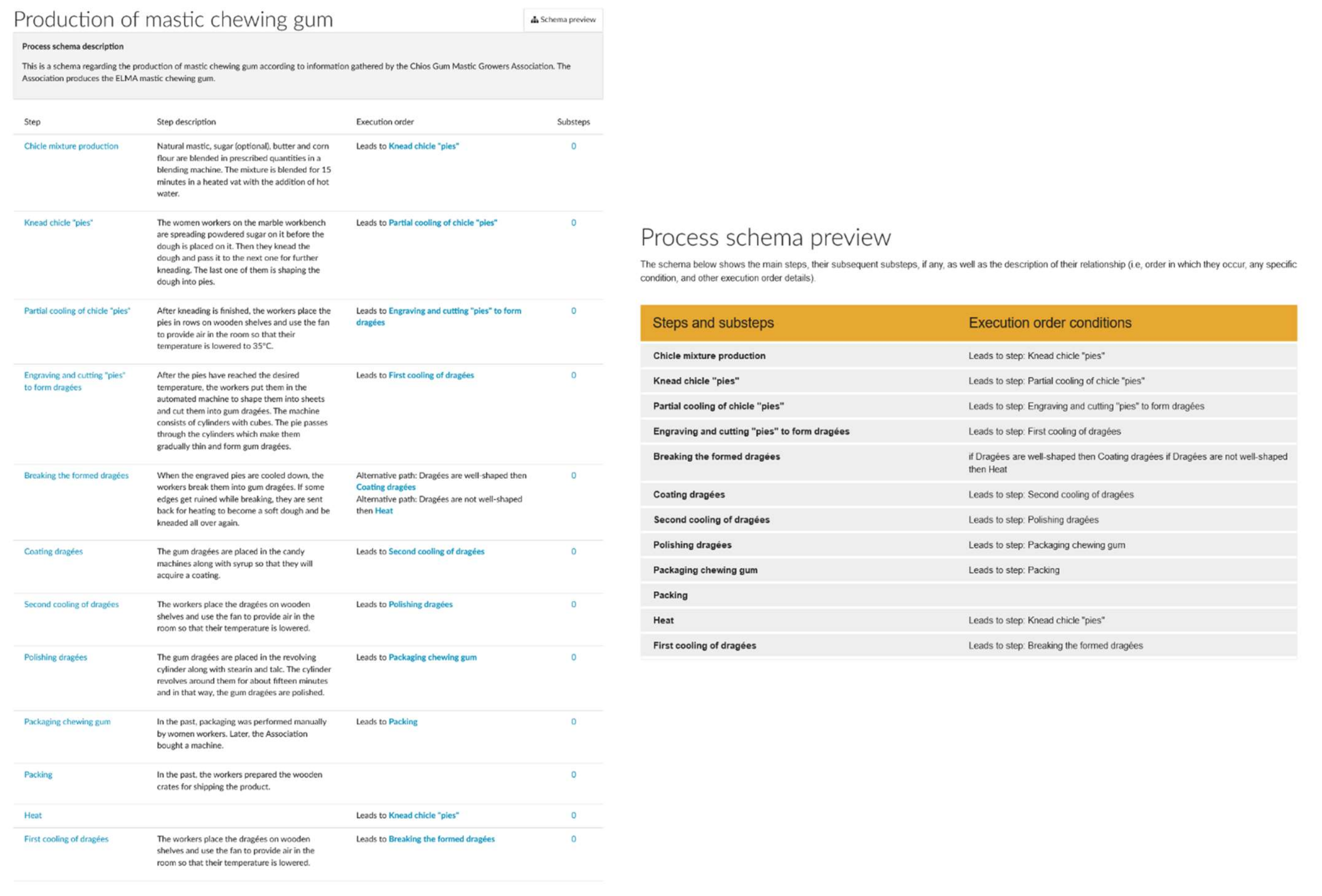

To formally represent the annual process of mastic cultivation and the production of mastic chewing gum, activity diagrams were created and then encoded with the process schema in the MOP.

The annual process is carried out by cultivators and includes typical cultivation activities such as pruning, cleaning, fertilization, and irrigation. Cleaning the soil is an important activity that takes place from the end of June until the beginning of July, when producers clean, level, and press the soil under the trees. Then, the soil is covered with white clay so that the mastic resin that falls will stay clean. Embroidering, i.e., making small incisions in the bark so that the resin exudes, takes place in July and August. Once the resin has dried, it is collected from mid-August until mid-October. Usually, large pieces of mastic resin fall on the soil, while mastic “tears” remain and dry on the bark and the branches. Sifting helps separate the mastic gum from gathered dirt and leaves. After sifting, the producers clean the gum with soap and plenty of water. The producers then make a first classification of the collected mastic gum. After the classification, the mastic gum is sent to the Chios Mastic Growers Association in November. The activity diagram is shown in Figure 9.
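Several of these annual activities overlap, which is what a calendar view of events must convey. The Python sketch below encodes the dated activities as periods and lists those active on a given day. The exact boundary dates are hypothetical approximations of “end of June”, “beginning of July”, and “mid-August”. Sifting, washing, and classification are omitted because the text gives no dates for them. Periods that span the turn of the year are not handled.

```python
# Hypothetical calendar boundaries: approximate periods are mapped to fixed
# (month, day) dates for illustration only.
SEASON = [
    ("cleaning the soil", (6, 20), (7, 10)),
    ("embroidering", (7, 1), (8, 31)),
    ("collection", (8, 15), (10, 15)),
    ("delivery to the Association", (11, 1), (11, 30)),
]


def activities_on(month: int, day: int) -> list[str]:
    """Return the activities whose (approximate) period includes the given date."""
    date = (month, day)
    # Tuples compare lexicographically, so (month, day) pairs order by date.
    return [name for name, start, end in SEASON if start <= date <= end]


print(activities_on(7, 5))   # ['cleaning the soil', 'embroidering']
print(activities_on(8, 20))  # ['embroidering', 'collection']
```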

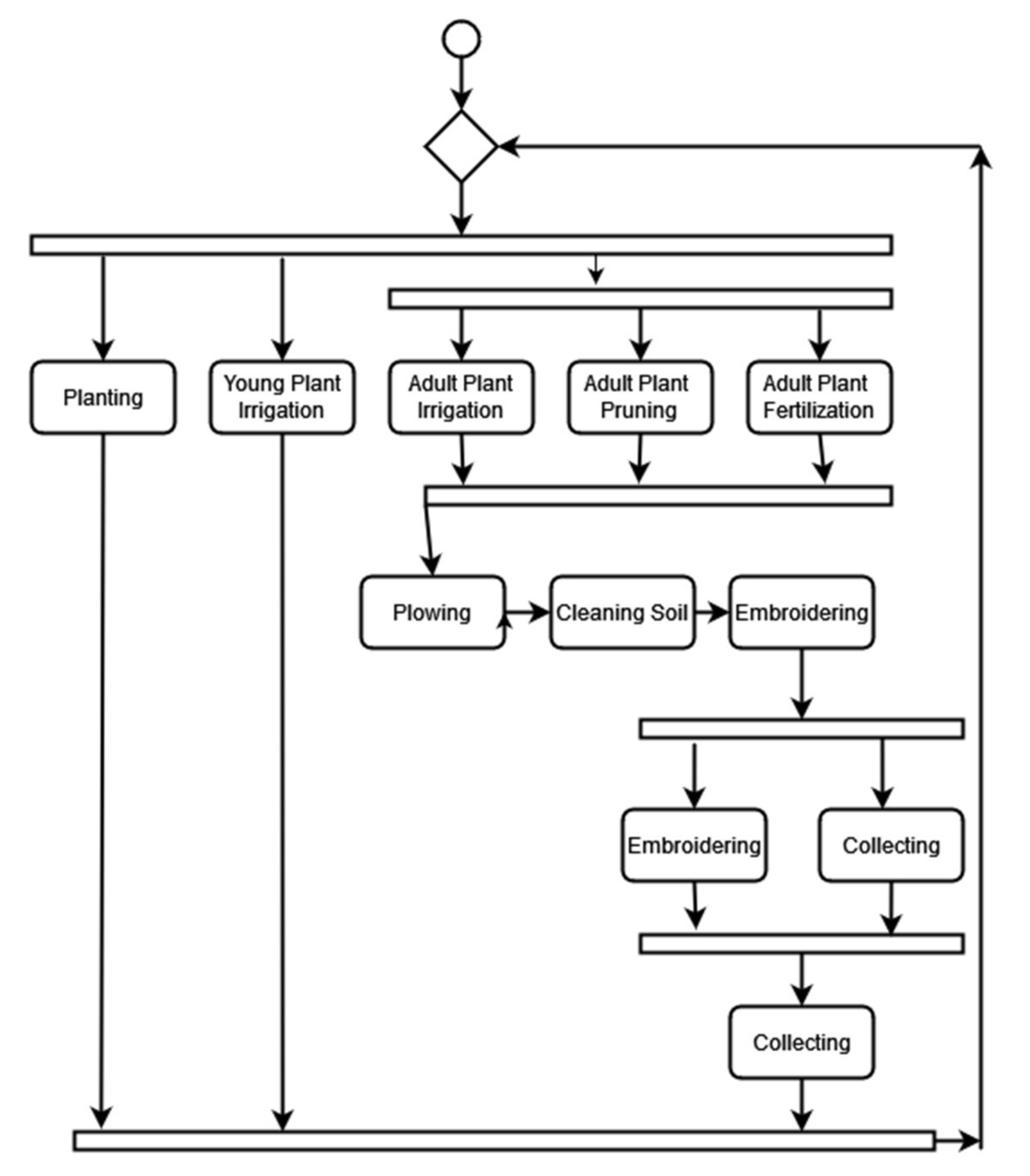

For the production of mastic chewing gum, the mixture is first prepared using mastic, sugar, butter, corn flour, and water. The ingredients are placed in the blending machine to produce the mixture. After 15 min, the mixture is taken out of the blending machine and placed on a marble counter. Then, it is formed into pieces with a maximum height of 3 cm and left to cool. After cooling, the pieces are transferred to the press and engraving machine, where they are pressed and gum pieces are formed. Finally, the pieces are cut and put in the candy machine, where they are coated with syrup. The activity diagram is shown in Figure 10.

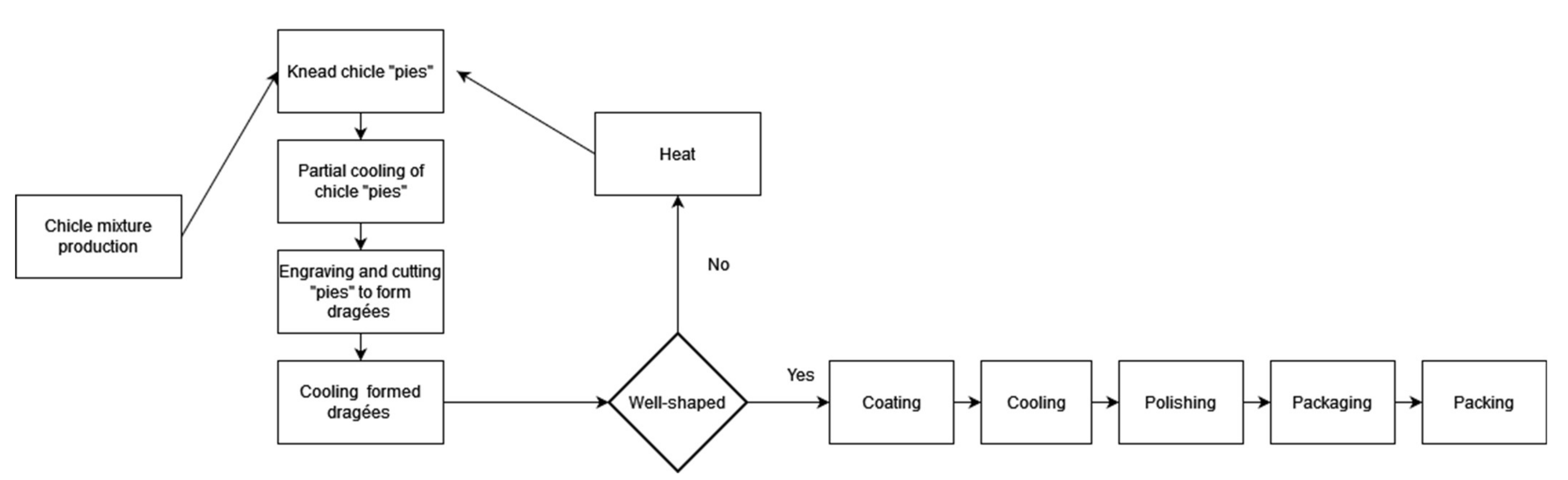

The activity diagrams are transcribed into transition graphs using the MOP UI. Data fields are used to enter appellations, informal descriptions, and the step order. Transitions are instantiated via dynamic UI components that adapt to the transition type. An example of a represented process schema in the MOP for mastic chewing gum production is presented in Figure 11.
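For a purely sequential process such as chewing gum production, the entered step order is enough to derive the Transition links. The Python sketch below illustrates this with the steps described above. The `Step` fields mirror the data fields mentioned in the text (appellation, informal description, step order). The class and function names, however, are hypothetical and do not reflect the MOP's internal data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    order: int          # position of the step in the process
    appellation: str    # short name of the step
    description: str    # informal description entered by the curator


def sequential_transitions(steps):
    """Derive Transition links between consecutive steps, ordered by `order`."""
    ordered = sorted(steps, key=lambda step: step.order)
    return [("Transition", a.appellation, b.appellation)
            for a, b in zip(ordered, ordered[1:])]


# Steps of mastic chewing gum production, as described above (entered out of order).
steps = [
    Step(3, "Press and engrave", "Cooled pieces are pressed and gum pieces are formed."),
    Step(1, "Prepare mixture", "Mastic, sugar, butter, corn flour, and water are blended for 15 min."),
    Step(5, "Coat", "Pieces are coated with syrup in the candy machine."),
    Step(2, "Shape and cool", "The mixture is formed on a marble counter into pieces up to 3 cm high and left to cool."),
    Step(4, "Cut", "The pressed pieces are cut."),
]

for link in sequential_transitions(steps):
    print(link)
```

Processes with parallel or alternative paths would instead need explicit Fork, Join, Merge, or Branch links, which cannot be inferred from a single ordering.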

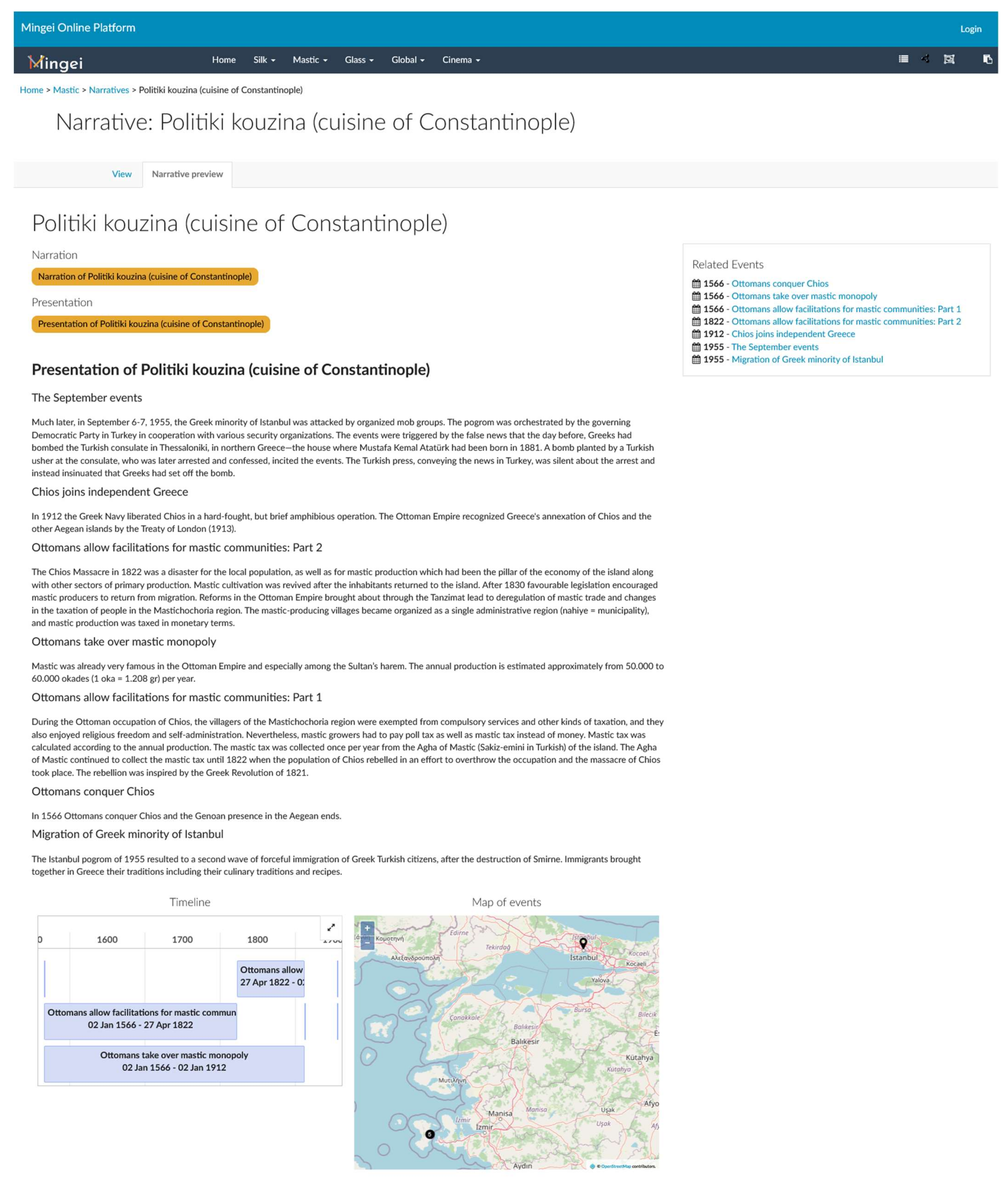

Representation of Narratives

In this work, a narrative is presented by a narrator, in a form provided by a narration, and in a format identified as a presentation. For example, a VH (the narrator) may orally present (the presentation) a narration that encodes a narrative presented in the museum. These entities are encoded with the help of the MOP. The narrative authoring component allows a heritage professional (e.g., a data curator or a craft researcher) to synthesize a narrative using semantically linked data (e.g., basic data entries and events). Furthermore, recognizing the diversity of target audiences and technologies used in the project, this component supports the creation of different narration styles. Different presentations are intended for different presentation media, as shown in the following section.
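The separation between narrative, narration, and presentation lets one story be reused across audiences and media. The following Python sketch illustrates this separation with plain data classes. All class names, field names, the persona label, and the example IRI are hypothetical illustrations and do not describe the MOP's narrative authoring component.

```python
from dataclasses import dataclass, field


@dataclass
class Narrative:
    title: str
    linked_entities: list[str] = field(default_factory=list)  # IRIs of events, places, objects


@dataclass
class Narration:
    narrative: Narrative
    style: str  # audience-specific style, e.g., "children"
    text: str


@dataclass
class Presentation:
    narration: Narration
    narrator: str  # e.g., a VH persona
    medium: str    # e.g., "VH oral", "web page"


def presentations_for(presentations, medium):
    """Select the presentations intended for one presentation medium."""
    return [p for p in presentations if p.medium == medium]


story = Narrative("Working at the Association", ["https://example.org/event/gum-production"])
adult = Narration(story, "general public", "I started working at the factory ...")
child = Narration(story, "children", "Let me tell you how chewing gum was made ...")
shows = [
    Presentation(adult, "factory worker persona", "VH oral"),
    Presentation(child, "factory worker persona", "VH oral"),
    Presentation(adult, "text", "web page"),
]
print([p.narration.style for p in presentations_for(shows, "VH oral")])
# ['general public', 'children']
```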

8. Craft Presentation and Preservation

Craft presentations built on top of the aforementioned semantic representation are delivered through multiple dissemination channels. In this section, we present (a) the digital preservation of knowledge and its dissemination in open data formats, (b) web access to craft knowledge and narratives, (c) craft demonstrations via 3D visualizations, (d) virtual narrations, (e) craft training, and (f) immersive context presentations.

8.1. Digital Preservation and Open Data Dissemination

The digital assets hosted in the MOP repository are provided online in conventional and open formats. Each asset has a unique Internationalized Resource Identifier (IRI), so that third parties can integrate it directly. Our knowledge is available on the Semantic Web through the SPARQL endpoint provided by the MOP. Furthermore, to ensure compatibility with online knowledge sources, definitions of terms are imported into the MOP by linking to terms from the Getty Art & Architecture Thesaurus [69] and the UNESCO Thesaurus [70]. For further exploitation of the semantic knowledge encoded in the MOP, a Europeana Data Model (EDM) [21] export facility has also been implemented, allowing (a) the export of data in a semantic format compatible with EDM and (b) the formulation of SPARQL queries [71] to the MOP SPARQL endpoint that return EDM-formatted results.
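A query to such an endpoint follows the standard SPARQL 1.1 Protocol: the query text is sent URL-encoded in the `query` parameter, and the desired RDF serialization is requested through the HTTP `Accept` header. The sketch below only builds the request. The endpoint URL is a placeholder, and the query assumes that the endpoint exposes records directly as `edm:ProvidedCHO` resources with `dc:title`, which may not match the MOP’s actual export.

```python
from urllib.parse import parse_qs, urlencode, urlparse
from urllib.request import Request

# Placeholder endpoint; substitute the actual MOP SPARQL endpoint.
ENDPOINT = "https://mop.example.org/sparql"

# CONSTRUCT returns an RDF graph, here restricted to EDM classes and properties.
EDM_QUERY = """\
PREFIX edm: <http://www.europeana.eu/schemas/edm/>
PREFIX dc:  <http://purl.org/dc/elements/1.1/>
CONSTRUCT { ?cho a edm:ProvidedCHO ; dc:title ?title . }
WHERE     { ?cho a edm:ProvidedCHO ; dc:title ?title . }
LIMIT 10
"""


def build_sparql_request(endpoint: str, query: str, accept: str = "text/turtle") -> Request:
    """Build (but do not send) a SPARQL 1.1 Protocol query-via-GET request."""
    url = f"{endpoint}?{urlencode({'query': query})}"
    return Request(url, headers={"Accept": accept}, method="GET")


req = build_sparql_request(ENDPOINT, EDM_QUERY)
sent_query = parse_qs(urlparse(req.full_url).query)["query"][0]
```

Sending it would be a matter of `urllib.request.urlopen(req)`; requesting `application/rdf+xml` instead of Turtle would yield the RDF/XML serialization commonly used for EDM exchange, if the endpoint supports it.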

8.2. Web-Based Access to Knowledge and Narratives

The represented knowledge network is available on the Web through the MOP [63] in hypertext format. Semantic links are implemented as hyperlinks that lead to the pages of the cited entities. Contents are also organized and presented thematically, per class type. Documentation pages contain links to digital assets, a textual presentation of metadata, and previews of the associated digital assets. Specific UI modules are provided for locations and events: locations are shown on embedded, dynamic maps provided through OpenStreetMap [72], while timeline and calendar views are available for events. An example of narrative presentation through the MOP is presented in Figure 12.
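As an illustration of an embedded map on a location page, the sketch below builds an OpenStreetMap embed URL of the kind produced by the openstreetmap.org “Share” dialog and wraps it in an `iframe`. This is an assumption about how such a module could work, not a description of the MOP implementation; the function names, default bounding-box size, and coordinates in the test are placeholders.

```python
from html import escape
from urllib.parse import parse_qs, urlencode, urlparse


def osm_embed_url(lat: float, lon: float, delta: float = 0.01) -> str:
    """OpenStreetMap embed URL centred on (lat, lon), with a marker.

    Note the differing axis orders: bbox is min_lon,min_lat,max_lon,max_lat,
    whereas marker is lat,lon.
    """
    bbox = f"{lon - delta},{lat - delta},{lon + delta},{lat + delta}"
    query = urlencode({"bbox": bbox, "layer": "mapnik", "marker": f"{lat},{lon}"})
    return f"https://www.openstreetmap.org/export/embed.html?{query}"


def map_iframe(lat: float, lon: float) -> str:
    """HTML snippet for a location page; the URL is escaped for use in an attribute."""
    return f'<iframe width="600" height="400" src="{escape(osm_embed_url(lat, lon))}"></iframe>'


params = parse_qs(urlparse(osm_embed_url(10.0, 20.0)).query)
```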

Process presentations contain links to the recordings and to the knowledge elements for the tools and materials involved, along with the participating practitioners, the date, the tools employed, and the location of the recording. If the process follows a process schema, a link to that schema and a preview of it are also provided. The hierarchy of process steps is presented using insets, each presenting textual information and previews of the available digital assets. To present the organization of steps, insets can be unfolded dynamically to any depth of the process hierarchy and are accompanied by image previews and embedded videos. Variations include images and textual descriptions.
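Insets that unfold to any depth map naturally onto a recursive rendering of the step hierarchy. A minimal sketch, assuming an HTML front end: the use of HTML’s native `<details>`/`<summary>` collapsible elements and the `StepNode` type are our illustrative choices, not necessarily what the MOP uses.

```python
from dataclasses import dataclass, field
from html import escape


@dataclass
class StepNode:
    title: str
    text: str = ""
    children: list["StepNode"] = field(default_factory=list)


def render_insets(node: StepNode) -> str:
    """Render a step hierarchy of any depth as nested, collapsible insets."""
    body = f"<p>{escape(node.text)}</p>" if node.text else ""
    inner = "".join(render_insets(child) for child in node.children)
    return f"<details><summary>{escape(node.title)}</summary>{body}{inner}</details>"


tree = StepNode("Chewing gum production", children=[
    StepNode("Prepare mixture", "Blend for 15 min.", [StepNode("Add ingredients")]),
    StepNode("Coat with syrup"),
])
html_out = render_insets(tree)
```

Previews of images and embedded videos would be added to `body` in the same way as the text paragraph.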

8.3. Craft Presentations by Virtual Humans

8.3.1. Virtual Human Models of Craftspersons and Narrators

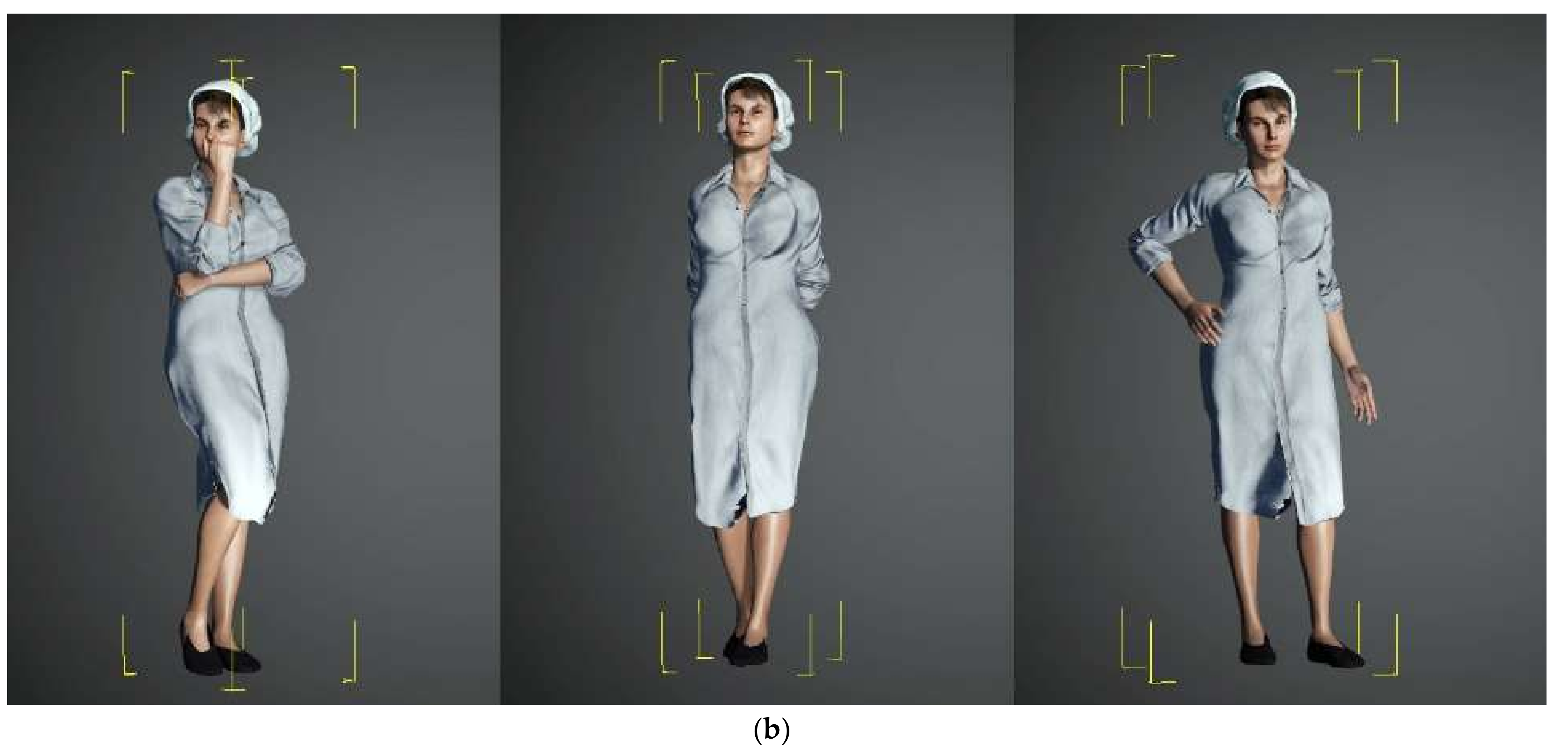

The 3D virtual humans, with their corresponding garments and accessories, were designed based on cultural and historical information and sources. Eight VHs were created using references from the ethnographic research. For the definition of the 3D meshes and the design of the skin surfaces, a customized toolchain [73,74,75,76] was employed that combines automatic generation methods with manual editing and refinement. The approach uses VH creation software, together with 3D modeling software for clothes and accessories. The final model inherits and encapsulates the required structural components, such as bone and skin attachment data, which allow the VH to be animated dynamically in real time.
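The paper does not state which skinning method the toolchain or the rendering engine applies to the bone and skin attachment data. For orientation only, linear blend skinning (LBS), the most widely used real-time technique, deforms each vertex as a weighted blend of the transforms of the bones that influence it:

$$
\mathbf{v}' = \sum_{i=1}^{n} w_i \,\mathbf{M}_i\,\mathbf{B}_i^{-1}\,\mathbf{v},
\qquad w_i \ge 0,\quad \sum_{i=1}^{n} w_i = 1,
$$

where $\mathbf{v}$ is the rest-pose vertex position in homogeneous coordinates, $\mathbf{B}_i$ is the bind-pose world transform of bone $i$, $\mathbf{M}_i$ is its current animated world transform, and $w_i$ is the skin weight of bone $i$ for that vertex. As a check, in the bind pose ($\mathbf{M}_i = \mathbf{B}_i$) every term reduces to $w_i\mathbf{v}$, so $\mathbf{v}' = \mathbf{v}$ because the weights sum to 1. Weights that vary smoothly over the mesh avoid visible discontinuities, although LBS is known to lose volume at strongly twisted joints (the “candy-wrapper” artifact).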

Another important aspect of attaining a high level of realism is the motion, behavior, and natural interaction of the VH with users, which help avoid the “uncanny valley” effect [77,78]. The VH must have verbal and nonverbal communication skills, be intelligent, communicate naturally with users, perceive information from them, and physically interact with them. The deformation of the skinned characters during their movements must be realistic and smooth, without any discontinuities. A VH that supports all these capabilities can act as an intuitive craft master and as an effective companion and assistant for elderly people.

Garment design was also considered during the implementation of the avatars. The generated models were rigged automatically, either by Mixamo [76] for the VHs that do not require facial animation or by CC3 [74] for the VHs that do (the storytellers’ avatars). To ensure appropriate rigging, an additional check was performed to verify that the rig was applied correctly and that the bones were well adjusted to the 3D body (see Figure 13).
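Part of such a rig check can be automated. The following hypothetical sketch (the function name and data layout are ours) assumes that skin weights have been exported per vertex as a mapping from bone name to weight. It flags vertices that standard weighted skinning would deform incorrectly: vertices with no influences, influences from bones missing in the skeleton, negative weights, or weights that do not sum to 1. It does not replace the visual inspection of bone placement shown in Figure 13.

```python
import math


def check_skin_weights(weights: list[dict[str, float]], skeleton_bones: set[str],
                       tol: float = 1e-4) -> list[str]:
    """Return human-readable problems found in per-vertex skin weights."""
    problems = []
    for idx, vertex_weights in enumerate(weights):
        if not vertex_weights:
            problems.append(f"vertex {idx}: no bone influences")
            continue
        unknown = set(vertex_weights) - skeleton_bones
        if unknown:
            problems.append(f"vertex {idx}: unknown bones {sorted(unknown)}")
        if any(w < 0 for w in vertex_weights.values()):
            problems.append(f"vertex {idx}: negative weight")
        total = sum(vertex_weights.values())
        if not math.isclose(total, 1.0, abs_tol=tol):
            problems.append(f"vertex {idx}: weights sum to {total:.3f}")
    return problems


BONES = {"Hips", "Spine"}
print(check_skin_weights([{"Hips": 1.0}, {"Hips": 0.5, "Spine": 0.5}], BONES))  # []
```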

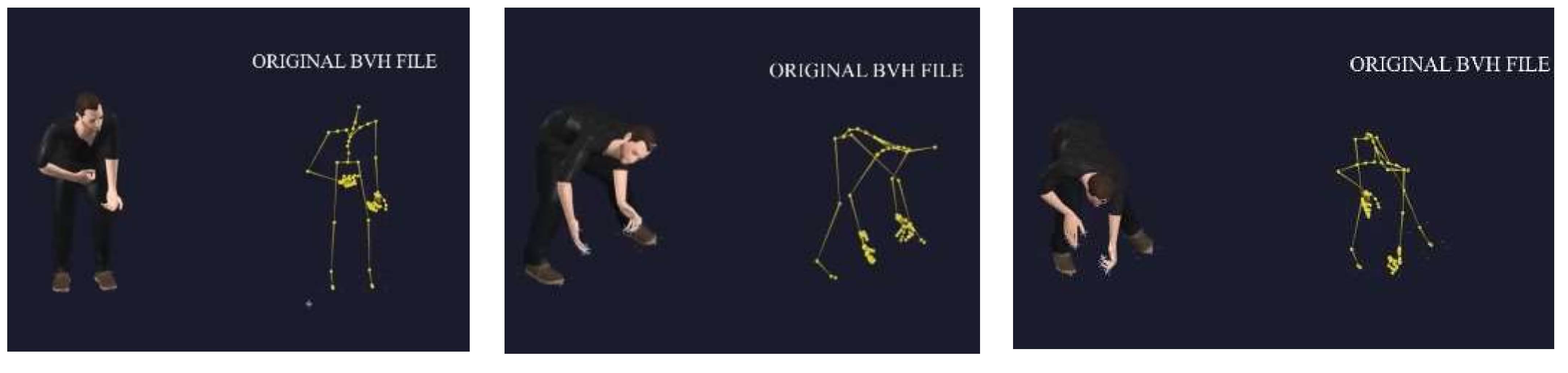

8.3.2. Processing Craft Actions and Retargeting to VHs

The motion data were processed in Autodesk MotionBuilder [79], software dedicated to animation and the direct integration of motion capture technologies. The process comprises three steps: (a) the creation of an “actor” in MotionBuilder with a skeleton definition corresponding to the BVH hierarchy, (b) the mapping of the received animations onto the actor, and (c) the synchronization of the VH with the actor, by adjusting the two models so that their measurements match and the animations are correctly reproduced (retargeting; see Figure 14).
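MotionBuilder performs the actor definition and retargeting internally. To illustrate what step (a) reads from a BVH file and why step (c) must reconcile body measurements, the following hypothetical sketch parses the joint hierarchy of a BVH file and derives a uniform scale factor from the length of a matching bone chain on the source and target skeletons. Uniformly scaling the root trajectory is our simplification; full retargeting also adapts joint rotations and limb proportions.

```python
import math


def parse_bvh_hierarchy(text: str) -> dict:
    """Parse the HIERARCHY section of a BVH file.

    Returns {joint: (parent or None, (x, y, z) offset)}. End Sites are stored
    as '<parent>_End'. Assumes braces stand on their own lines, as is common.
    """
    joints: dict = {}
    stack: list[str] = []  # joints whose '{' is currently open
    pending = None         # joint declared but not yet opened
    for raw in text.splitlines():
        tokens = raw.split()
        if not tokens:
            continue
        key = tokens[0]
        if key == "MOTION":  # the hierarchy ends where motion data begins
            break
        if key in ("ROOT", "JOINT"):
            pending = tokens[1]
        elif key == "End":   # "End Site"
            pending = f"{stack[-1]}_End"
        elif key == "{":
            joints[pending] = (stack[-1] if stack else None, (0.0, 0.0, 0.0))
            stack.append(pending)
        elif key == "}":
            stack.pop()
        elif key == "OFFSET":
            parent, _ = joints[stack[-1]]
            joints[stack[-1]] = (parent, tuple(float(v) for v in tokens[1:4]))
        # CHANNELS lines are ignored; they only matter for reading MOTION frames.
    return joints


def chain_length(joints: dict, leaf: str) -> float:
    """Sum of bone (offset) lengths from `leaf` up to the root."""
    total, name = 0.0, leaf
    while joints[name][0] is not None:
        total += math.hypot(*joints[name][1])
        name = joints[name][0]
    return total


def root_scale(source: dict, target: dict, leaf: str) -> float:
    """Uniform factor for scaling root translations from source to target."""
    return chain_length(target, leaf) / chain_length(source, leaf)


SAMPLE = """HIERARCHY
ROOT Hips
{
  OFFSET 0 0 0
  CHANNELS 6 Xposition Yposition Zposition Zrotation Xrotation Yrotation
  JOINT Spine
  {
    OFFSET 0 10 0
    CHANNELS 3 Zrotation Xrotation Yrotation
    End Site
    {
      OFFSET 0 5 0
    }
  }
}
MOTION
"""
source = parse_bvh_hierarchy(SAMPLE)
print(chain_length(source, "Spine_End"))  # 15.0
```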

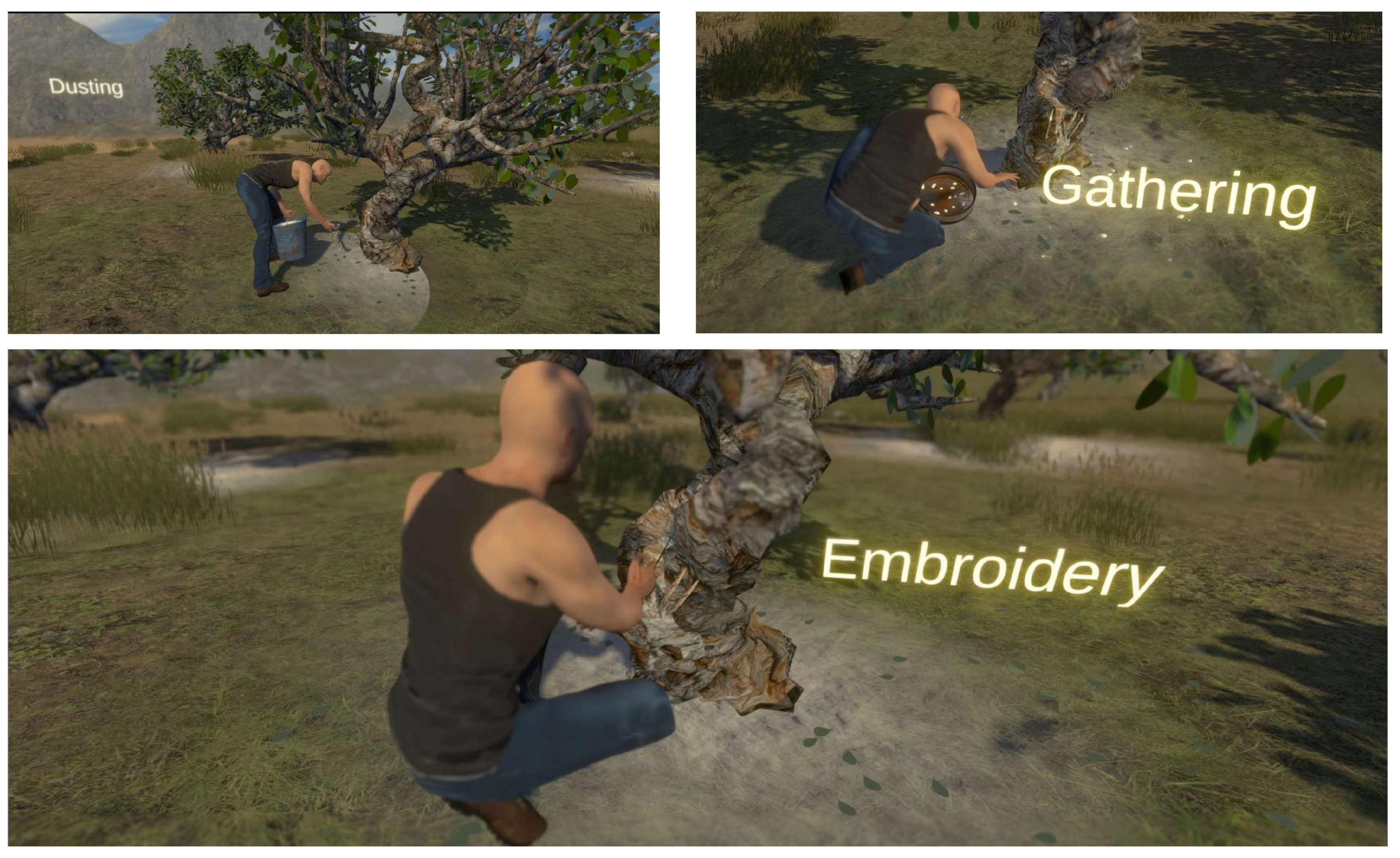

The processed animations were used to implement the 3D representation of the mastic cultivation activities in a virtual environment depicting a mastic tree field, as shown in Figure 15. In this example, the processed gestural know-how of the captured craft practitioner is used not only to replicate the practitioner’s movements but also to set digitized craft tools in motion, by attaching tools to VHs [80] and by inferring tool motion from human motion [81].
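Attaching a tool to a VH is commonly done by parenting the tool to a hand bone, so that the tool inherits the bone’s animated transform through matrix composition. The following is a generic sketch of that composition, not the specific method of [80]: the grip offset is a hypothetical per-tool calibration, and the example restricts itself to rotations about the z-axis to stay short.

```python
import math

Matrix = list[list[float]]  # 4x4 homogeneous transform, row-major


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def translation(x: float, y: float, z: float) -> Matrix:
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y], [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


def rotation_z(angle: float) -> Matrix:
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0, 0.0], [s, c, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]


def attached_tool_transform(hand_world: Matrix, grip_offset: Matrix) -> Matrix:
    """World transform of a tool rigidly parented to a hand bone."""
    return matmul(hand_world, grip_offset)


def position(m: Matrix) -> tuple[float, float, float]:
    return m[0][3], m[1][3], m[2][3]


# Per frame, the hand bone's world transform comes from the retargeted motion;
# the grip offset stays fixed for a given tool.
hand = matmul(translation(1.0, 2.0, 3.0), rotation_z(math.pi / 2))
grip = translation(0.5, 0.0, 0.0)
tool = attached_tool_transform(hand, grip)
print(position(tool))  # approximately (1.0, 2.5, 3.0)
```

Because the hand is rotated by 90°, the grip offset along the hand’s local x-axis ends up along the world y-axis.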

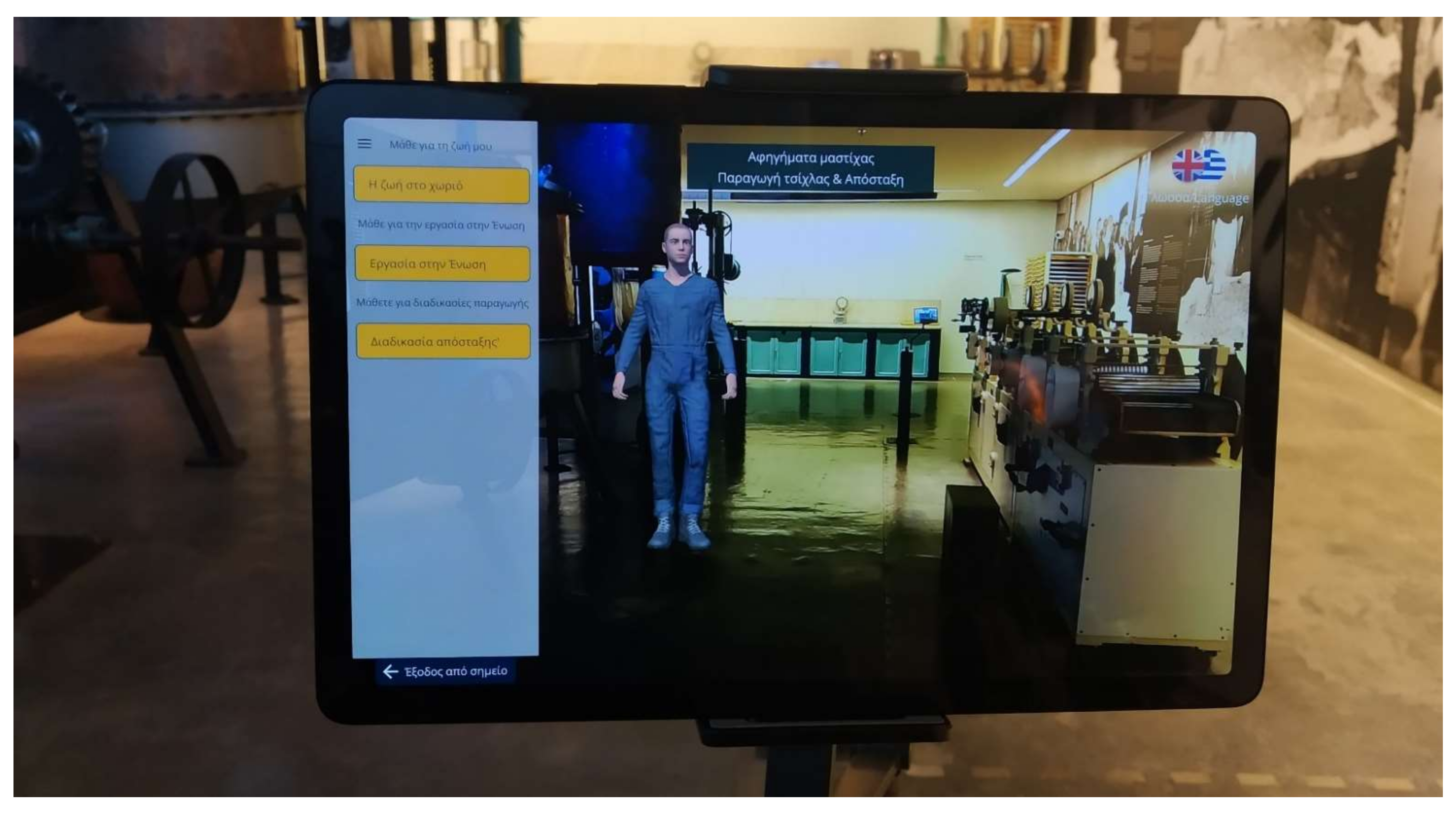

8.3.3. Virtual Narrations

Virtual narrations were supported both in 3D and in AR. In the AR context, exhibits of the Chios Mastic Museum are augmented with VHs: viewing the machines through the museum’s tablets, visitors see VHs standing next to them, ready to share their stories and explain the functionality of the respective machines. A similar experience can be provided offline, through a virtual tour that travels back in time to the mastic factory and allows users to interact with its workers (see Figure 16).

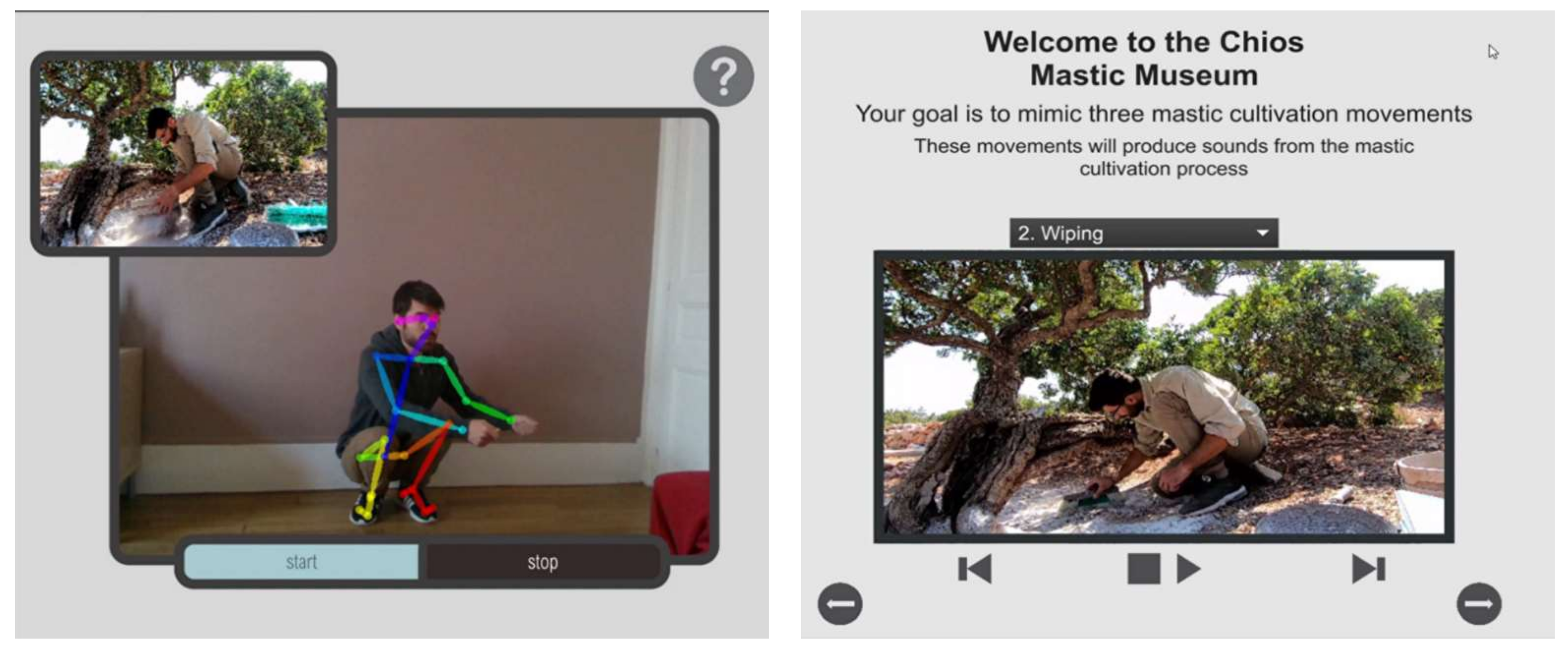

8.4. Craft Training Employing Gestural Know-How on Mastic Cultivation

A craft training application for mastic cultivation was created using Hidden Markov Models (HMMs) for gesture recognition. For feedback, each gesture was mapped to an abstract sound that was modulated by how successfully the user performed the gesture. An instance of the application is shown in Figure 17. Since the captured motion of the expert lacks detail on the dexterous movements of the fingers, the video of the expert mastic cultivator is placed at the top left of the screen, while a larger panel in the middle of the screen shows the real-time video of the user with the skeleton extracted by the OpenPose framework.
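The text does not detail the HMM configuration. As a hedged illustration of the general technique, the sketch below assumes one discrete-observation HMM per gesture with hand-set parameters (no training), scores an observation sequence with the forward algorithm in log space, selects the best-scoring gesture, and converts the posterior probability of the expected gesture (under a uniform prior) into a success factor in [0, 1]. The quantization of skeleton frames into discrete symbols, the toy parameters, and the posterior-based success factor are our own assumptions.

```python
import math


def _log(p: float) -> float:
    return math.log(p) if p > 0 else -math.inf


def _logsumexp(values: list[float]) -> float:
    m = max(values)
    if m == -math.inf:
        return -math.inf
    return m + math.log(sum(math.exp(v - m) for v in values))


def log_likelihood(obs, start, trans, emit) -> float:
    """log P(obs | HMM) for a discrete-observation HMM, via the forward algorithm.

    start[i]: initial probability of state i; trans[i][j]: P(j | i);
    emit[i][o]: P(symbol o | state i). obs is a non-empty list of symbol indices.
    """
    n = len(start)
    alpha = [_log(start[i]) + _log(emit[i][obs[0]]) for i in range(n)]
    for o in obs[1:]:
        alpha = [_logsumexp([alpha[i] + _log(trans[i][j]) for i in range(n)]) + _log(emit[j][o])
                 for j in range(n)]
    return _logsumexp(alpha)


def recognize(obs, models: dict) -> str:
    """Name of the gesture model that best explains the observations."""
    return max(models, key=lambda name: log_likelihood(obs, *models[name]))


def success_factor(obs, models: dict, expected: str) -> float:
    """Posterior of the expected gesture under a uniform prior, in [0, 1]."""
    scores = {name: log_likelihood(obs, *m) for name, m in models.items()}
    best = max(scores.values())
    if best == -math.inf:
        return 0.0
    weights = {name: math.exp(s - best) for name, s in scores.items()}
    return weights[expected] / sum(weights.values())


# Two toy gestures over two quantized pose symbols (0, 1); parameters are illustrative.
TRANS = [[0.9, 0.1], [0.1, 0.9]]
MODELS = {
    "gesture_a": ([0.5, 0.5], TRANS, [[0.9, 0.1], [0.8, 0.2]]),
    "gesture_b": ([0.5, 0.5], TRANS, [[0.1, 0.9], [0.2, 0.8]]),
}
observed = [0, 0, 0]
print(recognize(observed, MODELS))                              # gesture_a
print(round(success_factor(observed, MODELS, "gesture_a"), 3))  # 0.993
```

The success factor could then modulate a property of the feedback sound, such as its pitch or volume; the text does not specify which mapping the application uses.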

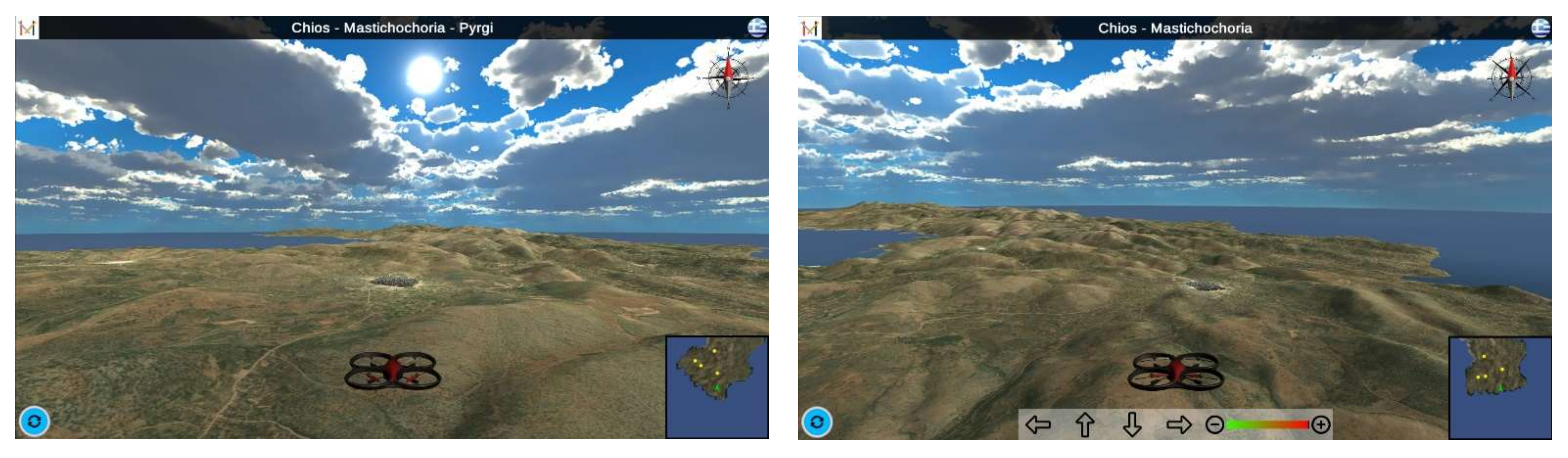

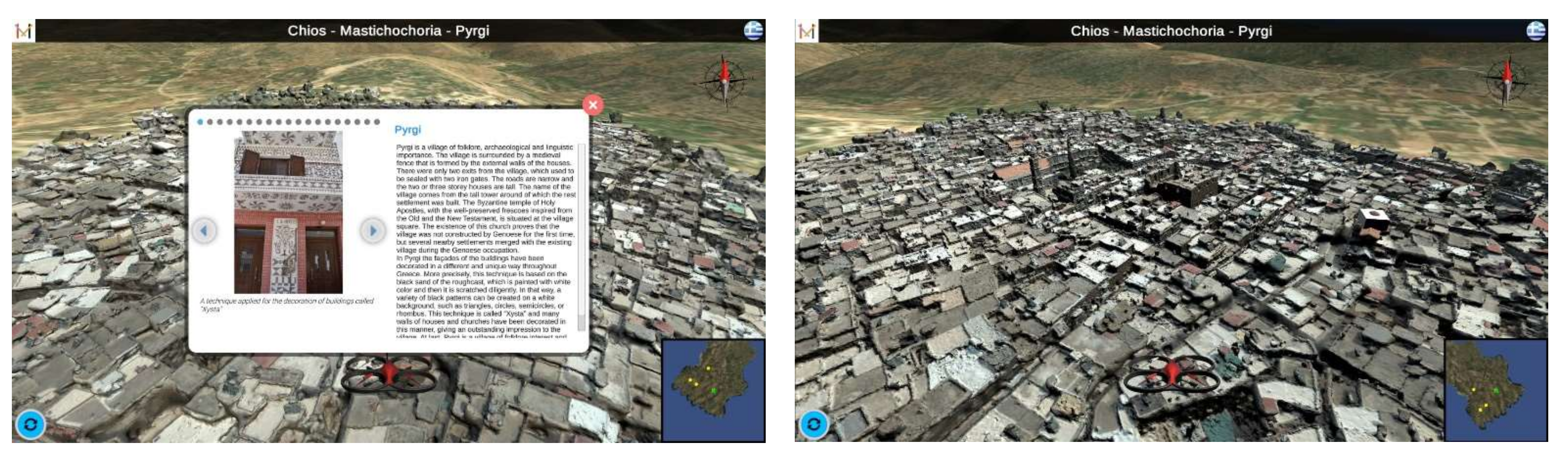

8.5. Geographical Context Presentation

Information on geographical location and context shows the environmental aspects affecting craft practice and development. We developed Airborne, an immersive flight simulator that allows users to fly over various mastic villages in Chios. During the flyover, users can stop at each village and retrieve multimedia and textual information related to it, as shown in Figure 18.
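The paper does not specify how village stops are triggered. Assuming that villages are stored as geographic points of interest and that the position of the virtual aircraft is known in latitude and longitude, a simple proximity test based on great-circle (haversine) distance would suffice; a game engine working in local coordinates would use a plain Euclidean distance instead. The function names, radius, and coordinates below are placeholders.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two (lat, lon) points given in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearby_villages(lat: float, lon: float, villages: dict, radius_km: float = 1.0) -> list[str]:
    """Names of points of interest within radius_km of the aircraft, nearest first."""
    hits = [(haversine_km(lat, lon, v_lat, v_lon), name)
            for name, (v_lat, v_lon) in villages.items()]
    return [name for dist, name in sorted(hits) if dist <= radius_km]


# Placeholder points of interest, not real village coordinates.
VILLAGES = {"A": (0.0, 0.0), "B": (0.0, 0.005), "C": (0.0, 1.0)}
print(nearby_villages(0.0, 0.0, VILLAGES))  # ['A', 'B']
```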

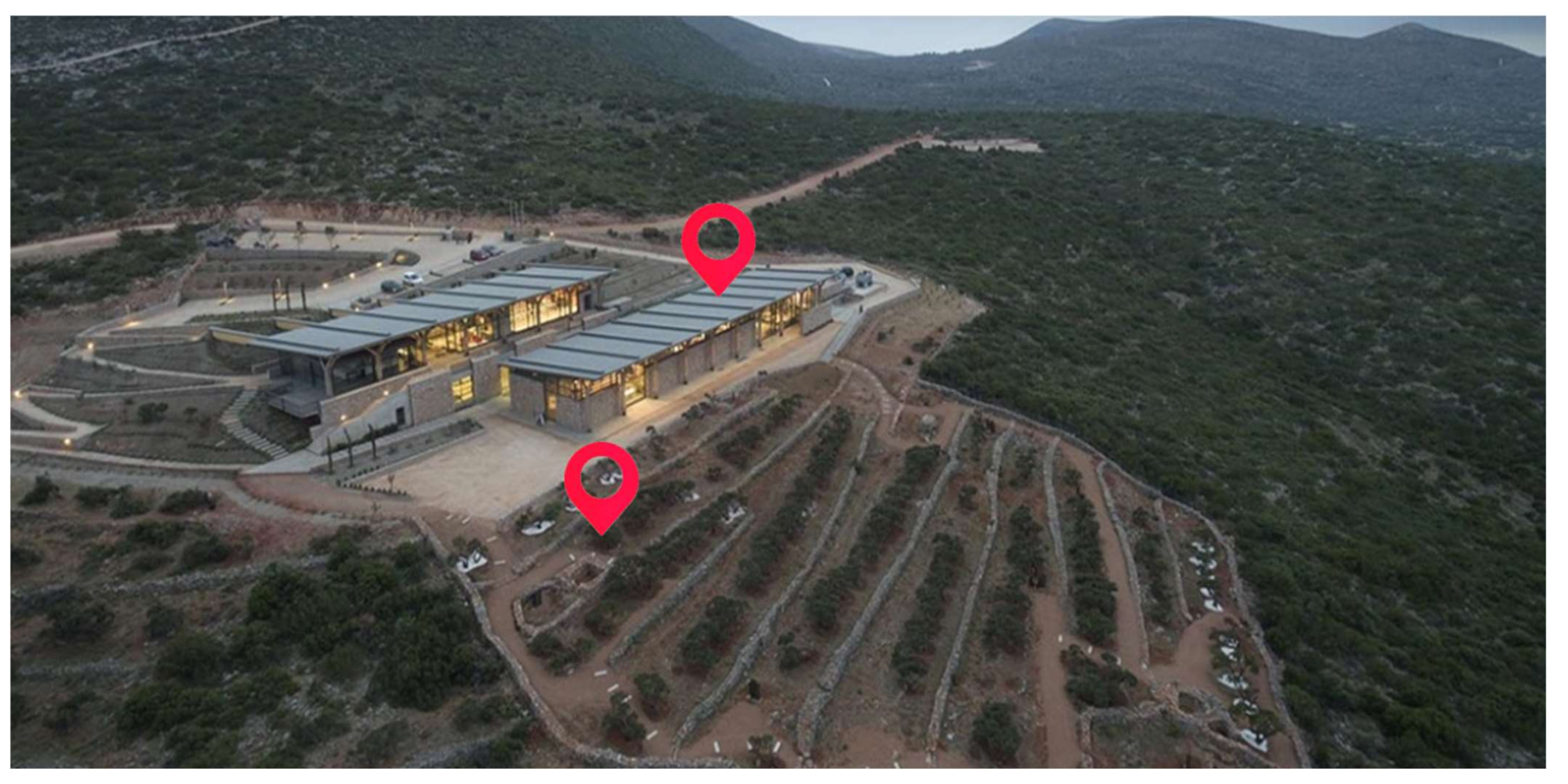

9. Exhibition

A subset of the presentation technologies described in the previous section was used to implement an interactive exhibition at the Chios Mastic Museum. The objective was to enhance the presentation of the intangible and tangible dimensions of the TC through targeted interactive presentations. The museum can be seen in Figure 19.

As shown in this figure, the main areas of intervention were the main exhibition space of the museum and the rural space outside it. In the main space, this work targeted the mastic factory area, which is less noticed by visitors since it is located in a space below the main exhibition. Furthermore, educational applications, such as craft training and context presentation, were installed in the multimedia space of the museum, which is dedicated to children, school visits, and learning. The rural space of the museum contains a mastic field with metal sculptures that represent everyday people in the mastic field. The exterior interventions used AR to present craft activities in the mastic field. Each of these interventions is presented in detail in the following sections.

9.1. Craft Presentations

9.1.1. Narrations in the Mastic Factory Exhibition

The installation in the mastic factory exhibition room comprises four tablets mounted on floor stands at four main spots of the museum. Each tablet covers a specific area of the museum, which is augmented with hot spots through the tablet camera, as shown in Figure 20. Each hot spot holds one or more stories to be told. Selecting a hot spot brings up a VH, the visual twin of a persona who used to work in the factory operating one of the machines, placed close to that machine. Through the tablet camera, the VH appears in the factory to narrate his life story and his daily life and work at the factory (see Figure 21).
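The relation between tablets, hot spots, narrators, and stories can be summarized as a small registry. This hypothetical sketch only models the selection logic described above (one VH per hot spot, one or more stories each); all class names, identifiers, and story keys are placeholders, and AR tracking and rendering are outside its scope.

```python
from dataclasses import dataclass, field


@dataclass
class Hotspot:
    machine: str      # factory machine the hot spot is anchored next to
    narrator_vh: str  # VH model shown when the hot spot is selected
    stories: list[str] = field(default_factory=list)  # story identifiers


class TabletScene:
    """Hot spots visible from one tablet, keyed by a hot spot id."""

    def __init__(self) -> None:
        self.hotspots: dict[str, Hotspot] = {}

    def add(self, hotspot_id: str, hotspot: Hotspot) -> None:
        self.hotspots[hotspot_id] = hotspot

    def select(self, hotspot_id: str, story: int = 0) -> tuple[str, str]:
        """Return (VH to place next to the machine, story it should narrate)."""
        spot = self.hotspots[hotspot_id]
        if not spot.stories:
            raise ValueError(f"hot spot {hotspot_id!r} has no stories")
        return spot.narrator_vh, spot.stories[story]


scene = TabletScene()
scene.add("press", Hotspot("press machine", "vh-press-operator", ["life-story", "daily-work"]))
print(scene.select("press", story=1))  # ('vh-press-operator', 'daily-work')
```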

9.1.2. Craft Presentations in the Mastic Field

One of the requirements of presenting the craft was to display its seasonality, since visitors often do not understand the complete process of mastic cultivation. Another need of the museum was to exploit its external spaces and the beautiful mastic tree field, which is often overlooked by visitors. It also became evident that a “guided” tour, following a simple, straight path through the phases of mastic cultivation, harvesting, and cleaning, is an ideal way to present the yearlong process. Visitors to the rural space outside the museum can experience mastic cultivation in the field through their mobile devices. The application enables an AR-capable device to recognize the metal sculptures in the rural space of the museum, which, viewed through the camera, come alive to present typical cultivation activities.

Figure 22 presents example screens of the app; the middle screen shows the AR augmentation of the sculptures in the mastic field with animations of the cultivation process.

9.2. Craft Training

Craft training is intended to demonstrate the cultivation activities to museum visitors, thus providing a more immersive experience. This application was installed in the multimedia space on the ground floor of the museum. The installation comprises a personal computer and a monitor, together with a depth sensor for tracking the user’s actions. The user stands in front of the installation and follows the instructions provided on the screen to mimic craft actions. An example of this process is presented in Figure 23.

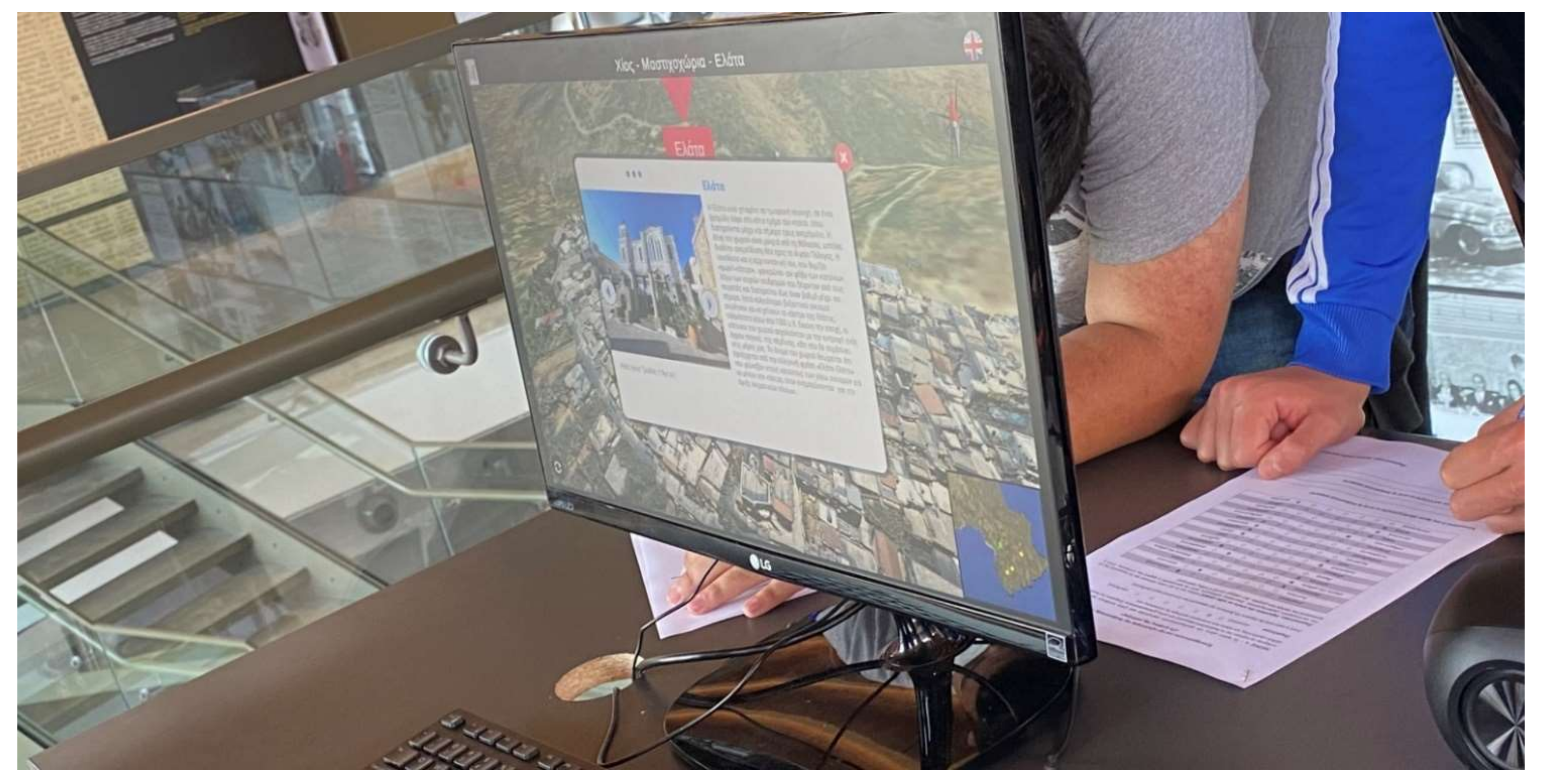

9.3. Presentation of Geographic Context

Airborne was installed in the multimedia room of the museum (see Figure 24). The setup was simple and straightforward, requiring only a desktop computer. Two modes are available: (a) an automated tour and (b) a flight simulator. The automated tour targets users who wish to explore the mastic villages in a movie-like way, while the flight simulator is more gameplay-oriented, since users control a virtual drone flying in the Chios sky and are free to explore the information in any way they like. In the future, the setup will be upgraded with a large touch-enabled screen to enhance the gameplay of the installation.
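In the automated tour, the camera follows a predefined route rather than user input. A minimal sketch, assuming that the route is given as 3D waypoints over the villages and traversed at constant speed along straight segments; this is our assumption, and the installation may well use smoother spline paths.

```python
import math


def sample_path(waypoints: list[tuple[float, float, float]], t: float) -> tuple[float, ...]:
    """Point at fraction t (0..1) of the total length of a piecewise-linear path."""
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must be in [0, 1]")
    segments = list(zip(waypoints, waypoints[1:]))
    lengths = [math.dist(a, b) for a, b in segments]
    remaining = t * sum(lengths)
    for (a, b), length in zip(segments, lengths):
        if length > 0 and remaining <= length:
            u = remaining / length  # fraction along this segment
            return tuple(pa + u * (pb - pa) for pa, pb in zip(a, b))
        remaining -= length
    return tuple(waypoints[-1])  # t == 1 (or rounding past the last segment)


ROUTE = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (10.0, 10.0, 0.0)]
print(sample_path(ROUTE, 0.75))  # (10.0, 5.0, 0.0)
```

Advancing `t` in proportion to elapsed time gives the constant-speed, movie-like flight; the free flight mode would instead integrate the user’s control input.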

9.4. Preliminary Evaluation

After the installation, a small preliminary evaluation was conducted through user observation. A user experience evaluator observed the interaction of users with the various installations at the museum and recorded any usability issues encountered during the interaction. The identified issues were documented in an evaluation report to be used for improving the interactive applications. This will be followed by a formal user-based evaluation in which museum visitors are recruited, with their consent, and asked to perform specific tasks. Observation will be employed during the interaction, and after completing the tasks, users will be asked to fill in a user experience questionnaire. The preliminary evaluation aimed at fine-tuning the installations, so that basic issues are identified and resolved before the formal evaluation and more targeted feedback can be received.

10. Discussion and Lessons Learned

The processes presented in this paper are the result of applying the discussed methodology over a three-year period, with the objective of representing and presenting the TC of mastic cultivation. The lessons learned in this period, drawn from the collaboration of multiple researchers and scientific disciplines, apply to a wider scientific context.

10.1. Methodology Structure

As presented in Section 3, a systematic methodology for the representation and presentation of a TC instance is applied in this work. This methodology, summarized in a series of steps, was adapted to address the needs of the specific TC. A strictly linear execution of these steps would require all digital assets to be acquired a priori. However, knowledge acquired in the second step in some cases referred to non-digitized items, which were only then identified and had to be digitized as new digital assets in the context of the first step. Moreover, more sophisticated methods of asset digitization, as judged by CH professionals, were adopted later in the timeline of this work. Thus, the main lesson learned was that the linearity of these steps can be disrupted by refining iterations when needed. The methodology proved very adaptable to such iterations, since new needs only required re-applying the previous steps to the newly acquired assets and their context.

10.2. Collaboration

One of the main challenges in applying this methodology was the need for several scientific disciplines to work together under a single methodological framework. This was indeed challenging, since different scientific approaches, technical tools, and research methods were applied. We learned that the MOP, as a single point of representation of research data, greatly enhanced the collaboration of the team, as it allowed the different disciplines to report and document results under a uniform semantic representation. Nevertheless, several adaptations in terminology were needed for the entire team to have a common understanding of the represented data.

10.3. Replicability

Although the evidence comes from a single use case, replicability can be assessed by considering the genericity of the approach. In this use case, we were able to apply several scientific approaches for each step and combine the results into a single representation. This became evident both in the craft understanding step, where several approaches to studying the social and historical context were applied, and in the data collection step, where several scientific methods were used for data acquisition. Despite the heterogeneity of the results, the MOP proved sufficient as a representation, and no surprises were encountered in the representation of processes and narratives. Another finding supporting the replicability of this methodology was that, by detaching the representation from its presentation, multiple presentation instances were created using different technologies. This was achieved by applying the export functionality of the MOP to the representation and leaving it to the developers of the presentation layers to judge the most appropriate way of using the representation in their presentation context. Finally, the web-based presentation modalities included in the MOP allowed the direct preview and dissemination of the represented knowledge through the Web.

10.4. Potential Improvements

Regarding future improvements of the presented methodology, several directions can be followed. First, we acknowledge that the presented process is time- and resource-demanding, considering that the objective is a valid representation that could lead to multiple presentations of a craft. Optimizing the process could therefore significantly improve the uptake of the proposed methodology. In this respect, the data curation platform could be optimized by simplifying and automating parts of the process, minimizing the time spent on data curation as well as on the scientific exploitation of the data. Simplification could also target the additional actions required for the post-processing of input data, by integrating more automated tools and data processing wizards. Another direction is the further validation of the methodology through new craft instances; the study of new instances and the creation of new presentations and visualizations will enhance the value of the representation. Finally, further research could concern computer-aided facilities for the semi-automated creation of craft representations.

Author Contributions

Conceptualization, C.R., E.T., X.Z., N.P. (Nikolaos Partarakis), A.G., and S.M.; methodology, X.Z. and N.P. (Nikolaos Partarakis); software, N.P. (Nikolaos Patsiouras), E.Z., A.P., E.K., D.M., N.C., M.F. and V.N.; validation, I.A., C.R., E.T. and X.Z.; resources, D.K., C.R., and E.T.; data curation, D.K.; writing—original draft preparation, N.P. (Nikolaos Partarakis); writing—review and editing, X.Z. and N.P. (Nikolaos Partarakis); visualization, N.C., E.B., A.P., N.P. (Nikolaos Partarakis), N.P. (Nikolaos Patsiouras) and E.K.; supervision, X.Z., N.P. (Nikolaos Partarakis), N.M.T., S.M., A.G. and L.P.; project administration, N.P. (Nikolaos Partarakis) and X.Z.; funding acquisition, X.Z. and N.P. (Nikolaos Partarakis). All authors have read and agreed to the published version of the manuscript.

Funding

This work has been conducted in the context of the Mingei project, which has received funding from the European Union’s Horizon 2020 research and innovation program under grant agreement No. 822336.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data available upon request.

Acknowledgments

The authors would like to thank the Chios Mastic Museum of the Piraeus Bank Group Cultural Foundation for its contribution to the preparation of the exhibition.

Conflicts of Interest

The authors declare no conflict of interest.

References

- UNESCO. Know-How of Cultivating Mastic on the Island of Chios; Inscription: 9.COM 10.18; UNESCO: Paris, France, 2014.

- UNESCO. Text of the Convention for the Safeguarding of the Intangible Cultural Heritage; UNESCO: Paris, France, 2003.

- European Commission. Report on Digitisation, Online Accessibility and Digital Preservation of Cultural Material 2011/711/EU 2011-2013 and 2013-2015; European Commission: Brussels, Belgium, 2016.

- Boston Consulting Group. Digitizing Europe; Survey Commissioned by Google; Boston Consulting Group: Boston, MA, USA, 2016.

- Europeana. Survey Report on Digitisation in European Cultural Heritage Institutions; ENUMERATE EU Project, Deliverable 1.2; Europeana: 2015. Available online: https://www.egmus.eu/fileadmin/ENUMERATE/documents/ENUMERATE-Digitisation-Survey-2014.pdf (accessed on 27 January 2022).

- European Commission. Cultural Heritage Research, Survey and Outcomes of Projects within the Environment Theme From 5th to 7th FP; European Commission: Brussels, Belgium, 2012.

- ETH-Bibliothek. Best Practices Digitization (Version 1.1, 2016). Available online: https://moam.info/no-title_5b07bf9f8ead0ea46f8b45c3.html (accessed on 27 January 2022).

- Europeana. Available online: https://www.europeana.eu/el (accessed on 24 January 2022).

- Metcalf, B. Contemporary Craft: A Brief Overview, in Exploring Contemporary Craft: History, Theory and Critical Writing; Coach House Books: Toronto, ON, Canada, 1998.

- Donkin, L. Crafts and Conservation: Synthesis Report for ICCROM; ICCROM: Rome, Italy, 2001.

- Zabulis, X.; Meghini, C.; Partarakis, N.; Kaplanidi, D.; Doulgeraki, P.; Karuzaki, E.; Stefanidi, E.; Evdemon, T.; Metilli, D.; Bartalesi, V. What Is Needed to Digitise Knowledge on Heritage Crafts? Memoriamedia Review. 2019. Available online: http://memoriamedia.net/pdfarticles/EN_MEMORIAMEDIA_REVIEW_Heritage_Crafts.pdf (accessed on 27 January 2022).

- Dimitropoulos, K.; Manitsaris, S.; Tsalakanidou, F.; Nikolopoulos, S.; Denby, B.; Kork, S.A.; Crevier-Buchman, L.; Pillot-Loiseau, C.; Adda-Decker, M.; Dupont, S.; et al. Capturing the intangible: An introduction to the i-Treasures project. In Proceedings of the International Conference on Computer Vision Theory and Applications, Lisbon, Portugal, 5–8 January 2014; Volume 2, pp. 773–781.

- Doulamis, A.; Voulodimos, A.; Doulamis, N.; Soile, S.; Lampropoulos, A. Transforming intangible folkloric performing arts into tangible choreographic digital objects: The terpsichore approach. In Proceedings of the 12th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, INSTICC 2017, Porto, Portugal, 27 February–1 March 2017; SciTePress: Setúbal, Portugal, 2017; Volume 5, pp. 451–460.

- Syu, Y.; Chen, L.; Tu, Y.-F. A case study of digital preservation of motion capture for ba Jia Jiang performance, Taiwan religious performing arts. In Digital Heritage. Progress in Cultural Heritage: Documentation, Preservation, and Protection; Springer International Publishing: Cham, Switzerland, 2018; pp. 103–110.

- Mokhov, S.A.; Kaur, A.; Talwar, M.; Gudavalli, K.; Song, M.; Mudur, S.P. Real-time motion capture for performing arts and stage. In ACM SIGGRAPH 2018 Educator’s Forum; Association for Computing Machinery: New York, NY, USA, 2018; pp. 1–2.

- Field, M.; Stirling, D.; Naghdy, F.; Pan, Z. Motion capture in robotics review. In Proceedings of the IEEE International Conference on Control and Automation, Christchurch, New Zealand, 9–11 December 2009; pp. 1697–1702.

- Shi, G.; Wang, Y.; Li, S. Human motion capture system and its sensor analysis. Sens. Transducers 2014, 172, 206–212.

- Sarafianos, N.; Boteanu, B.; Ionescu, B.; Kakadiaris, I. 3D human pose estimation: A review of the literature and analysis of covariates. Comput. Vis. Image Underst. 2016, 152, 1–20.

- Ceseracciu, E.; Sawacha, Z.; Cobelli, C. Comparison of markerless and marker-based motion capture technologies through simultaneous data collection during gait: Proof of concept. PLoS ONE 2014, 9, e87640.

- Vavliakis, K.N.; Karagiannis, G.T.; Mitkas, P.A. Semantic Web in cultural heritage after 2020. In Proceedings of the International Semantic Web Conference, Boston, MA, USA, 11–15 November 2012; pp. 11–15.

- Doerr, M.; Gradmann, S.; Hennicke, S.; Isaac, A.; Meghini, C.; van de Sompel, H. The Europeana Data Model (EDM). In Proceedings of the World Library and Information Congress, Gothenburg, Sweden, 10–15 August 2010; Volume 10, p. 15.

- Zimmer, C.; Tryfonopoulos, C.; Weikum, G. European Conference on Research and Advanced Technology for Digital Libraries, ECDL 2007. Budapest, Hungary, 16–21 September 2007.

- Bloomberg, R.; Dekkers, M.; Gradmann, S.; Lindquist, M.; Lupovici, C.; Meghini, C.; Verleyen, J. Functional Specification for Europeana Rhine Release, D3.1 of Europeana v1.0 Project (public deliverable). 2009. Available online: https://pro.europeana.eu/files/Europeana_Professional/Projects/Project_list/Europeana_Version1/Deliverables/D3.2FunctionalspecificationfortheEuropeanaDanuberelease_370704.pdf (accessed on 27 January 2022).

- Doerr, M. The CIDOC Conceptual Reference Model: An ontological approach to semantic interoperability of metadata. AI Mag. 2003, 24, 75–92.

- Bloomberg, R. Functional Specification for the Europeana Danube release. Europeana v 1.0. 2010. Available online: http://hdl.handle.net/10421/6082 (accessed on 27 January 2022).

- Zhang, X.; LeCun, Y. Text understanding from scratch. arXiv 2015, arXiv:1502.01710.

- Bordes, A.; Weston, J.; Collobert, R.; Bengio, Y. Learning structured embeddings of knowledge bases. In Proceedings of the AAAI Conference on Artificial Intelligence 2011, San Francisco, CA, USA, 1 December 2010–1 February 2011.

- D’Andrea, A.; Niccolucci, F.; Bassett, S.; Fernie, K. 3D-ICONS: World Heritage sites for Europeana: Making complex 3D models available to everyone. In Proceedings of the IEEE International Conference on Virtual Systems and Multimedia, Milan, Italy, 2–5 September 2012; pp. 517–520.

- Jung, Y.; Kuijper, A.; Fellner, D.; Kipp, M.; Miksatko, J.; Gratch, J.; Thalmann, D. Believable virtual characters in human-computer dialogs. In Proceedings of the Eurographics 2011—State of The Art Report, Llandudno, UK, 11–15 April 2011; pp. 75–100.

- Kasap, Z.; Magnenat-Thalmann, N. Intelligent Virtual Humans with Autonomy and Personality: State-of-the-Art. Intell. Decis. Technol. 2007, 1, 3–15.

- Shapiro, A. Building a Character Animation System; Springer: Berlin/Heidelberg, Germany, 2011.

- Egges, A.; Papagiannakis, G.; Magnenat-Thalmann, N. Presence and interaction in mixed reality environments. Vis. Comput. 2007, 23, 317–333.

- Papanikolaou, P.; Papagiannakis, G. Real-time separable subsurface scattering for animated virtual characters. In GPU Computing and Applications; Lecture Notes in Computer Science; Springer: Singapore, 2013; pp. 1–16.

- Karuzaki, E.; Partarakis, N.; Patsiouras, N.; Zidianakis, E.; Katzourakis, A.; Pattakos, A.; Kaplanidi, D.; Baka, E.; Cadi, N.; Magnenat-Thalmann, N. Realistic Virtual Humans for Cultural Heritage Applications. Heritage 2021, 4, 228.

- Magnenat-Thalmann, N.; Thalmann, D. Virtual humans: Thirty years of research, what next? Vis. Comput. 2005, 21, 997–1015.

- Partarakis, N.; Grammenos, D.; Margetis, G.; Zidianakis, E.; Drossis, G.; Leonidis, A.; Metaxakis, G.; Antona, M.; Stephanidis, C. Digital cultural heritage experience in Ambient Intelligence. In Mixed Reality and Gamification for Cultural Heritage; Springer: Cham, Switzerland, 2017; pp. 473–505.

- Partarakis, N.; Antona, M.; Stephanidis, C. Adaptable, personalizable and multi user museum exhibits. In Curating the Digital; Springer: Cham, Switzerland, 2016; pp. 167–179.

- Jung, T.; Tom Dieck, M.C.; Lee, H.; Chung, N. Effects of Virtual Reality and Augmented Reality on Visitor Experiences in the Museum; Springer: Cham, Switzerland, 2016.

- Billinghurst, M.; Kato, H.; Myojin, S. Advanced Interaction Techniques for Augmented Reality Applications. In Virtual and Mixed Reality; Shumaker, R., Ed.; VMR 2009, Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2009; Volume 5622.

- Merino, L.; Schwarzl, M.; Kraus, M.; Sedlmair, M.; Schmalstieg, D.; Weiskopf, D. Evaluating Mixed and Augmented Reality: A Systematic Literature Review (2009–2019). In Proceedings of the 2020 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Porto de Galinhas, Brazil, 9–13 November 2020; pp. 438–451.

- Bottani, E.; Vignali, G. Augmented reality technology in the manufacturing industry: A review of the last decade. IISE Trans. 2019, 51, 284–310.

- Rankohi, S.; Waugh, L. Review and analysis of augmented reality literature for the construction industry. Vis. Eng. 2013, 1, 9. [Google Scholar] [CrossRef] [Green Version]

- Bang, J.; Lee, D.; Kim, Y.; Lee, H. Camera pose estimation using optical flow and ORB descriptor in SLAM-based mobile AR game. In Proceedings of the 2017 International Conference on Platform Technology and Service (PlatCon), Busan, Korea, 13–15 February 2017; pp. 1–4. [Google Scholar]

- Aldoma, A.; Tombari, F.; Rusu, R.B.; Vincze, M. OUR-CVFH–oriented, unique and repeatable clustered viewpoint feature histogram for object recognition and 6DOF pose estimation. In Joint DAGM (German Association for Pattern Recognition) and OAGM Symposium; Springer: Berlin/Heidelberg, Germany, 2012; pp. 113–122. [Google Scholar]

- Sandor, C.; Fuchs, M.; Cassinelli, A.; Li, H.; Newcombe, R.; Yamamoto, G.; Feiner, S. Breaking the barriers to true augmented reality. arXiv 2015, arXiv:1512.05471. [Google Scholar]

- Geronikolakis, E.; Zikas, P.; Kateros, S.; Lydatakis, N.; Georgiou, S.; Kentros, M.; Papagiannakis, G. A True AR Authoring Tool for Interactive Virtual Museums. arXiv 2020, arXiv:1909.09429. [Google Scholar]

- Magnenat-Thalmann, N.; Foni, A.; Papagiannakis, G.; Cadi-Yazli, N. Real-Time Animation and Illumination in Ancient Roman Sites. Int. J. Virtual Real. 2007, 6, 11–24. [Google Scholar]

- Pilet, J.; Lepetit, V.; Fua, P. Fast Non-Rigid Surface Detection, Registration and Realistic Augmentation. Int. J. Comput. Vis. 2008, 76, 109–122. [Google Scholar] [CrossRef] [Green Version]

- Nowrouzezahrai, D.; Geiger, S.; Mitchell, K.; Sumner, R.; Jarosz, W.; Gross, M. Light Factorization for Mixed-Frequency Shadows in Augmented Reality. In Proceedings of the IEEE International Symposium on Mixed and Augmented Reality, Bari, Italy, 4–8 October 2021. [Google Scholar]

- Papaefthymiou, M.; Feng, A.; Shapiro, A.; Papagiannakis, G. A fast and robust pipeline for populating mobile AR scenes with gamified virtual characters. In Proceedings of the ACM SIGGRAPH-ASIA 2015, Symposium on Mobile Graphics and Interactive Applications, Kobe, Japan, 2–6 November 2015; Association for Computing Machinery: New York, NY, USA, 2015. [Google Scholar]

- Zhou, F.; Duh, H.B.-L.; Billinghurst, M. Trends in augmented reality tracking, interaction and display: A review of ten years of ISMAR. In Proceedings of the IEEE and ACM International Symposium on Mixed and Augmented Reality, Cambridge, UK, 15–18 September 2008. [Google Scholar]

- Feng, Y. Estimation of light source environment for illumination consistency of augmented reality. In Proceedings of the 2008 Congress on Image and Signal Processing, Sanya, China, 27–30 May 2008; Volume 3, pp. 771–775. [Google Scholar]

- Liarokapis, F.; Sylaiou, S.; Basu, A.; Mourkoussis, N.; White, M.; Lister, P.F. An interactive visualisation interface for virtual museums. In Proceedings of the 5th International Symposium on Virtual Reality, Archaeology and Cultural Heritage, Brussels, Belgium, 6–10 December 2004; pp. 47–56. [Google Scholar]

- Pedersen, I.; Gale, N.; Mirza-Babaei, P.; Reid, S. More than meets the eye: The benefits of augmented reality and holographic displays for digital cultural heritage. J. Comput. Cult. Herit. 2017, 10, 1–15. [Google Scholar] [CrossRef]

- Papagiannakis, G.; Partarakis, N.; Stephanidis, C.; Vassiliadi, M.; Huebner, N.; Grammalidis, N.; Drossis, G.; Chalmers, A.; Magnenat-Thalmann, N.; Margetis, G.; et al. Mixed Reality, Gamified Presence, and Storytelling for Virtual Museums. In Encyclopedia of Computer Graphics and Games; Lee, N., Ed.; Springer: Cham, Switzerland, 2018.

- Ioannides, M.; Fink, E.; Moropoulou, A.; Hagedorn-Saupe, M.; Fresa, A.; Liestøl, G.; Rajcic, V.; Grussenmeyer, P. Digital heritage. Progress in cultural heritage: Documentation, preservation, and protection. In Proceedings of the 7th International Conference, EuroMed 2018, Nicosia, Cyprus, 29 October–3 November 2018; Volume 11196.

- Papaefthymiou, M.; Kanakis, E.M.; Geronikolakis, E.; Nochos, A.; Zikas, P.; Papagiannakis, G. Rapid reconstruction and simulation of real characters in mixed reality environments. In Digital Cultural Heritage; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2018; Volume 10605, pp. 267–276.

- Zikas, P.; Bachlitzanakis, V.; Papaefthymiou, M.; Kateros, S.; Georgiou, S.; Lydatakis, N.; Papagiannakis, G. Mixed reality serious games for smart education. In Proceedings of the European Conference on Games-Based Learning 2016, ECGBL’16, Paisley, UK, 6–7 October 2016.

- Mixed Reality and Gamification for Cultural Heritage; Ioannides, M.; Magnenat-Thalmann, N.; Papagiannakis, G. (Eds.) Springer Nature: Cham, Switzerland, 2017.

- Zabulis, X.; Meghini, C.; Partarakis, N.; Beisswenger, C.; Dubois, A.; Fasoula, M.; Nitti, V.; Ntoa, S.; Adami, I.; Chatziantoniou, A.; et al. Representation and preservation of Heritage Crafts. Sustainability 2020, 12, 1461.

- Partarakis, N.; Zabulis, X.; Chatziantoniou, A.; Patsiouras, N.; Adami, I. An approach to the creation and presentation of reference gesture datasets, for the preservation of traditional crafts. Appl. Sci. 2020, 10, 7325.

- Partarakis, N.; Doulgeraki, P.; Karuzaki, E.; Adami, I.; Ntoa, S.; Metilli, D.; Bartalesi, V.; Meghini, C.; Marketakis, Y.; Theodoridou, M.; et al. Representation of socio-historical context to support the authoring and presentation of multimodal narratives: The Mingei Online Platform. J. Comput. Cult. Herit. 2022, 15, 1–22.

- Mingei Online Platform. Available online: http://mop.mingei-project.eu (accessed on 10 November 2021).

- Unity 3D. Available online: https://unity.com/ (accessed on 10 November 2021).

- High Definition Render Pipeline. Available online: https://docs.unity3d.com/Packages/com.unity.render-pipelines.high-definition@10.8/manual/index.html (accessed on 10 November 2021).

- Blender 3D. Available online: https://www.blender.org/ (accessed on 10 November 2021).

- Zabulis, X.; Meghini, C.; Dubois, A.; Doulgeraki, P.; Partarakis, N.; Adami, I.; Karuzaki, E.; Carre, A.; Patsiouras, N.; Kaplanidi, D.; et al. Digitisation of traditional craft processes. ACM J. Comput. Cult. Herit. 2022, in press.

- Fowler, M. UML Distilled: A Brief Guide to the Standard Object Modeling Language; Addison-Wesley Professional: Boston, MA, USA, 2004.

- Getty Arts and Architecture Thesaurus. Available online: https://www.getty.edu/research/tools/vocabularies/aat/ (accessed on 10 November 2021).

- UNESCO Thesaurus. Available online: http://vocabularies.unesco.org/browser/thesaurus/en/ (accessed on 10 November 2021).

- Hartig, O.; Bizer, C.; Freytag, J.C. Executing SPARQL queries over the web of linked data. In International Semantic Web Conference; Springer: Berlin/Heidelberg, Germany, 2009; pp. 293–309.

- OpenStreetMap. Available online: https://www.openstreetmap.org (accessed on 10 November 2021).

- Fuse. Available online: https://store.steampowered.com/app/257400/Fuse/ (accessed on 10 November 2021).

- Character Creator 4. Available online: https://www.reallusion.com/ (accessed on 10 November 2021).

- 3ds Max. Available online: https://www.autodesk.com/products/3ds-max (accessed on 10 November 2021).

- Mixamo. Available online: https://www.mixamo.com/ (accessed on 10 November 2021).

- MacDorman, K.F.; Chattopadhyay, D. Reducing consistency in human realism increases the uncanny valley effect, increasing category uncertainty does not. Cognition 2016, 146, 190–205.

- MacDorman, K.F.; Ishiguro, H. The uncanny advantage of using androids in social and cognitive science research. Interact. Stud. 2006, 7, 297–337.

- MotionBuilder. Available online: https://www.autodesk.com/products/motionbuilder/overview (accessed on 10 November 2021).

- Stefanidi, E.; Partarakis, N.; Zabulis, X.; Zikas, P.; Papagiannakis, G.; Thalmann, N.M. TooltY: An approach for the combination of motion capture and 3D reconstruction to present tool usage in 3D environments. In Intelligent Scene Modeling and Human-Computer Interaction; Springer: Cham, Switzerland, 2021; pp. 165–180.

- Stefanidi, E.; Partarakis, N.; Zabulis, X.; Papagiannakis, G. An approach for the visualization of crafts and machine usage in virtual environments. In Proceedings of the 13th International Conference on Advances in Computer-Human Interactions, Valencia, Spain, 21–25 November 2020; pp. 21–25.

Figure 1.

Proposed methodology and structure of this paper.

Figure 2.

Village reconstruction using aerial images acquired by a drone.

Figure 3.

Mastic factory using HDRP in Unity.

Figure 4.

Photographic documentation of chicle production machines, together with photogrammetric reconstruction results and post-processing in Blender 3D [66].

Figure 5.

Renderings of 3D models of mastic cultivation tools in Blender 3D [66].

Figure 6.

Recording narrations with the help of a narrator.

Figure 7.

Presentation of (a) 3D objects and (b) 3D reconstructions in MOP.

Figure 8.

Representation in MOP of (a) Events, (b) Persons, and (c) Locations.

Figure 9.

UML diagram of the mastic cultivation process.

Figure 10.

UML diagram of the production of mastic chewing gum.

Figure 11.

Process schema representations in MOP.

Figure 12.

A presentation of a narrative in MOP.

Figure 13.

(a) Checking bone positions. (b) Rigged model assuming poses.

Figure 14.

Examples of animating a VH using BVH input from MoCap recordings.

Figure 15.

Demonstration of the mastic cultivation activities by a VH.

Figure 16.

(a) Virtual factory with machines; (b) examples of narrator VHs in the virtual factory.

Figure 17.

An instance of the main screen of the interactive installation.

Figure 18.

Airborne indicative screens.

Figure 19.

The Chios Mastic Museum.

Figure 20.

A view of the available hot spots for a specific area of the museum.

Figure 21.

A VH appears through AR on the screen showing the available narrations.

Figure 22.

Mobile app navigation example.

Figure 23.

Craft training through replication of the gestural know-how of the mastic cultivator.

Figure 24.

An instance of the main screen of the interactive installation.

Table 1.

Recordings of mastic cultivation activities with a MoCap suit.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).