Abstract

A random variable X that is base b Benford will not in general be base c Benford when $c \neq b$. This paper builds on two of my earlier papers and is an attempt to cast some light on the issue of base dependence. Following some introductory material, the “Benford spectrum” of a positive random variable is introduced and known analytic results about Benford spectra are summarized. Some standard machinery for a “Benford analysis” is introduced and combined with my method of “seed functions” to yield tools to analyze the base c Benford properties of a base b Benford random variable. Examples are generated by applying these general methods to several families of Benford random variables. Berger and Hill’s concept of “base-invariant significant digits” is discussed. Some potential extensions are sketched.

1. Introduction

My grandfather, the physicist Frank Benford for whom Benford’s Law is named, considered his “law of anomalous numbers” as evidence of a “real world” phenomenon. He realized that geometric sequences and exponential functions are generally base 10 Benford, and on this basis he wrote [1]:

“If the view is accepted that phenomena fall into geometric series, then it follows that the observed logarithmic relationship is not a result of the particular numerical system, with its base, 10, that we have elected to use. Any other base, such as 8, or 12, or 20, to select some of the numbers that have been suggested at various times, would lead to similar relationships; for the logarithmic scales of the new numerical system would be covered by equally spaced steps by the march of natural events. As has been pointed out before, the theory of anomalous numbers is really the theory of phenomena and events, and the numbers but play the poor part of lifeless symbols for living things.”

This argument seems compelling, and it might seem to apply to Benford random variables as well as to geometric sequences and exponential functions. It is therefore somewhat surprising to observe that a random variable that is base b Benford is not generally base c Benford when $c \neq b$. We’ll see some examples shortly.

This paper builds on two of my earlier papers [2,3] and is an attempt to cast some light on the issue of base dependence. It’s organized as follows. Section 2 introduces the significand function and the fractional part notation and gives several logically equivalent definitions of “Benford random variable.” The base b first digit law is introduced, and several examples of random variables are presented that are Benford relative to one base but not to another. Section 3 introduces the “Benford spectrum” $\mathcal{B}(X)$ of a positive random variable X and summarizes some of the known analytical results that involve $\mathcal{B}(X)$. Section 4 is a brief digression listing some facts about Fourier transforms that are needed in subsequent sections. Section 5 introduces some fundamental notation and results that provide a framework for the “Benford analysis” of a positive random variable. Section 6 combines the framework of Section 5 with my method of “seed functions” to develop the theory of the base c Benford properties of random variables X that are known to be Benford relative to base b, and Section 7 gives several examples of such random variables. Section 8 discusses Berger and Hill’s concept of “base-invariant significant digits.” Section 9 is a summary and a look ahead.

2. Benford Random Variables

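The best way to define Benford random variables is via the significand function. Let $b > 1$ be a fixed “base.” Any $x > 0$ may be written uniquely in the form

$$x = s\,b^{k}, \qquad 1 \le s < b, \quad k \in \mathbb{Z}, \tag{1}$$

and the base b significand of x, written $S_b(x)$, is defined as this s. Hence,

$$S_b(x) = x\,b^{-k} \in [1, b).$$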

(Berger and Hill [4] define the significand of x for all $x \in \mathbb{R}$, but we don’t require this generality.)

Now let X be a positive random variable; that is, $P(X > 0) = 1$. Assume that X is continuous with a probability density function (pdf).

Definition 1.

X is base b Benford (or X is b-Benford) if and only if the distribution function of $S_b(X)$ is given by

$$P\big(S_b(X) \le s\big) = \log_b s, \qquad 1 \le s < b.$$

Nothing written above requires that b be an integer. For this paragraph alone, we assume that b is an integer greater than or equal to 3. Let $D_b(X)$ denote the first (i.e., leftmost or most significant) digit of X in the base b representation of X, so $D_b(X) \in \{1, 2, \ldots, b-1\}$. (Leading zeros, if there are any, are ignored.)

Proposition 1.

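If X is b-Benford, then

$$P\big(D_b(X) = d\big) = \log_b\!\left(1 + \frac{1}{d}\right)$$

for all $d \in \{1, 2, \ldots, b-1\}$. This is the “base b First Digit Law.” To prove it, it is sufficient to observe that $D_b(X) = d$ if and only if $d \le S_b(X) < d + 1$, so that $P(D_b(X) = d) = \log_b(d+1) - \log_b d$.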

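It’s useful at this point to introduce some non-standard notation. Let $y \in \mathbb{R}$ and recall that the “floor” of y, written $\lfloor y \rfloor$, is defined as the largest integer that is less than or equal to y. Define $\langle y \rangle$ as:

$$\langle y \rangle = y - \lfloor y \rfloor,$$

and note that $0 \le \langle y \rangle < 1$ for every $y \in \mathbb{R}$. We’ll call $\langle y \rangle$ the fractional part of y, though if $y < 0$ this description is misleading.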

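If we take the logarithm base b of Equation (1) we obtain

$$\log_b x = \log_b s + k, \qquad 0 \le \log_b s < 1. \tag{5}$$

On the other hand,

$$\log_b x = \langle \log_b x \rangle + \lfloor \log_b x \rfloor, \qquad 0 \le \langle \log_b x \rangle < 1. \tag{6}$$

As k is necessarily an integer and $0 \le \log_b s < 1$, comparison of Equations (5) and (6) shows that $k = \lfloor \log_b x \rfloor$ and

$$\log_b S_b(x) = \langle \log_b x \rangle \tag{7}$$

for any $x > 0$.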
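The identity $\log_b S_b(x) = \langle \log_b x \rangle$ of Equation (7) is easy to spot-check numerically. The following Python sketch is only an illustration: the helper names `significand` and `frac` are hypothetical, and the small correction step guards against floating-point rounding in `math.log` near exact powers of b.

```python
import math

def significand(x, b):
    """Base-b significand S_b(x) = x * b**(-floor(log_b x)) for x > 0 (hypothetical helper)."""
    if x <= 0 or b <= 1:
        raise ValueError("need x > 0 and b > 1")
    k = math.floor(math.log(x, b))
    s = x / b ** k
    # Floating-point log can land just on the wrong side of an integer
    # (e.g. log_10(1000) may evaluate to 2.9999999999999996); move s back into [1, b).
    if s >= b:
        s /= b
    elif s < 1:
        s *= b
    return s

def frac(y):
    """Fractional part <y> = y - floor(y), which lies in [0, 1) even for y < 0."""
    return y - math.floor(y)

# Compare log_b S_b(x) with <log_b x> for a few values and bases (illustrative choices).
checks = [(math.log(significand(x, b), b), frac(math.log(x, b)))
          for x in (0.37, 5.0, 123.4, 9.9e5) for b in (2, 8, 10, 2.5)]
```

Non-integer bases such as 2.5 are included on purpose, since nothing in Equation (7) requires b to be an integer.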

Using Equation (7), we may rephrase Definition 1 in several logically equivalent ways.

Proposition 2.

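X is b-Benford if and only if any one of the following four conditions is met.

(a) $\log_b S_b(X) \sim U[0,1)$;
(b) $\langle \log_b X \rangle \sim U[0,1)$;
(c) $S_b(X) \sim b^{W}$, where $W \sim U[0,1)$;
(d) $X = b^{Y}$ for some random variable Y with $\langle Y \rangle \sim U[0,1)$.

Here the notation “$W \sim U[0,1)$” means that W is uniformly distributed on the half open interval $[0,1)$. (More generally, I use the symbol “∼” to mean “is distributed as.” Hence, for example, “$X \sim f$” means that X is distributed with pdf f, and “$X_1 \sim X_2$” means that $X_1$ and $X_2$ have the same distribution.)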

For any random variable Y, if $\langle Y \rangle \sim U[0,1)$ we sometimes say that Y is “uniformly distributed modulo one,” abbreviated “u.d. mod 1.” Hence X is b-Benford if and only if $\log_b X$ is u.d. mod 1.
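As an informal, sample-based version of this criterion (an illustration, not a proof), one can estimate how far $\langle \log_b X \rangle$ is from $U[0,1)$ with a Kolmogorov-Smirnov distance. In this Python sketch the helper names, the sample size, and the seed are arbitrary choices; the sample $X = 10^{Y}$ with $Y \sim U[0,1)$ anticipates Example 1.

```python
import math
import random

def frac(y):
    """Fractional part <y> = y - floor(y)."""
    return y - math.floor(y)

def ks_uniform(us):
    """Kolmogorov-Smirnov distance between the empirical cdf of `us` and U[0,1)."""
    xs = sorted(us)
    n = len(xs)
    return max(max((i + 1) / n - x, x - i / n) for i, x in enumerate(xs))

def benford_distance(samples, b):
    """KS distance of <log_b X> from U[0,1); values near 0 suggest X is b-Benford."""
    return ks_uniform([frac(math.log(x, b)) for x in samples])

rng = random.Random(2021)                           # arbitrary seed
xs = [10 ** rng.random() for _ in range(20000)]     # X = 10**Y with Y ~ U[0,1)
d10 = benford_distance(xs, 10)                      # should be small (sampling noise only)
d8 = benford_distance(xs, 8)                        # should be clearly larger
```

A small distance is only evidence, of course; a finite sample can never establish that X is b-Benford.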

With this background we can now give a couple of examples of random variables that are Benford with respect to one base but not to another. Let $Y \sim U[0,1)$.

Example 1.

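Let $X = 10^{Y}$, so X is 10-Benford. But it’s not 8-Benford, as it fails to satisfy the base 8 First Digit Law. To see this, note that the support of X is $[1, 10)$, and let $D_8(X)$ denote the first digit in the base 8 representation of X. Then

$$P\big(D_8(X) = 1\big) = P(1 \le X < 2) + P(8 \le X < 10) = \log_{10} 2 + (1 - \log_{10} 8) \approx 0.3979,$$

whereas

$$\log_8\!\left(1 + \tfrac{1}{1}\right) = \log_8 2 = \tfrac{1}{3}.$$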

Example 2.

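Let Y be as above, but now let $X = 8^{Y}$, so X is 8-Benford. Note that the support of X is $[1, 8)$. Let $D_{10}(X)$ denote the first digit in the base 10 representation of X. Hence

$$P\big(D_{10}(X) = 1\big) = P(1 \le X < 2) = \log_8 2 = \tfrac{1}{3},$$

whereas

$$\log_{10} 2 \approx 0.3010.$$

Hence, X fails to satisfy the base 10 First Digit Law.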
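Both first-digit computations can be reproduced exactly for any pair of bases. The Python sketch below (the function name `first_digit_probs` is hypothetical) assumes $X = a^{Y}$ with $Y \sim U[0,1)$, so that X has support $[1, a)$ and $P(\mathrm{lo} \le X < \mathrm{hi}) = \log_a \mathrm{hi} - \log_a \mathrm{lo}$ for $1 \le \mathrm{lo} \le \mathrm{hi} \le a$; it simply adds up the log-lengths of the pieces of $[1, a)$ whose base-c first digit is d.

```python
import math

def first_digit_probs(a, c):
    """P(D_c(X) = d) for d = 1..c-1, where X = a**Y, Y ~ U[0,1), and c >= 2 is an integer base."""
    probs = {}
    for d in range(1, c):
        p = 0.0
        k = 0
        while c ** k < a:                      # blocks [c**k, c**(k+1)) that meet [1, a)
            lo = max(1.0, d * c ** k)          # first digit d means d*c**k <= X < (d+1)*c**k
            hi = min(a, (d + 1) * c ** k)
            if hi > lo:
                p += math.log(hi, a) - math.log(lo, a)
            k += 1
        probs[d] = p
    return probs

ex1 = first_digit_probs(10, 8)    # Example 1: X = 10**Y, first digit in base 8
ex2 = first_digit_probs(8, 10)    # Example 2: X = 8**Y, first digit in base 10
```

Calling `first_digit_probs(b, b)` recovers the base b First Digit Law, which is a convenient sanity check.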

3. The Benford Spectrum

Let X be a positive random variable.

Definition 2.

Following Wójcik [5], the “Benford spectrum” of X, denoted $\mathcal{B}(X)$, is defined as

$$\mathcal{B}(X) = \{\, b > 1 : X \text{ is } b\text{-Benford} \,\}.$$

The Benford spectrum of X may be empty. In fact, the Benford spectra of essentially all the standard random variables used in statistics are empty.

This section summarizes some of the known facts about Benford spectra. While proofs are provided for Propositions 4 and 6, I’m just going to provide citations for proofs of the other propositions.

Proposition 3

(Berger and Hill [4], page 44, Proposition 4.3 (iii)). A random variable Y is u.d. mod 1 if and only if $nY + a$ is u.d. mod 1 for every integer $n \neq 0$ and every $a \in \mathbb{R}$.

Proposition 4

(Whittaker [6]). If $b \in \mathcal{B}(X)$, then $b^{1/n} \in \mathcal{B}(X)$ for all $n \in \mathbb{N}$. In other words, if X is b-Benford, then X is $b^{1/n}$-Benford for all $n \in \mathbb{N}$.

Proof.

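Suppose that X is b-Benford, so $X = b^{Y}$ where Y is u.d. mod 1. Hence, for any $n \in \mathbb{N}$,

$$X = \big(b^{1/n}\big)^{nY}.$$

As $\log_{b^{1/n}} X = nY$ and $nY$ is u.d. mod 1 by Proposition 3, it follows that $b^{1/n} \in \mathcal{B}(X)$. □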

Proposition 5.

If is non-empty, then it is bounded above. In other words, no random variable can be b-Benford for arbitrarily large b. Citations: Refs. [3,5,6].

Proposition 6.

If X is b-Benford and $a > 0$, then $aX$ is b-Benford.

Proof.

As X is b-Benford, $\log_b X$ is u.d. mod 1. As $\log_b(aX) = \log_b X + \log_b a$ is u.d. mod 1 from Proposition 3, it follows that $aX$ is b-Benford. □

We say of this result that the Benford property of a random variable is “scale-invariant.”

Proposition 7.

Suppose that X and W are independent positive random variables and that X is b-Benford. Then the product $XW$ is also b-Benford. Citations: Refs. [3,4,5].

Proposition 8

(a corollary of Proposition 7). If X and W are independent positive random variables, then

$$\mathcal{B}(X) \cup \mathcal{B}(W) \subseteq \mathcal{B}(XW).$$
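Proposition 7 is easy to illustrate, though of course not to prove, by simulation. In the Python sketch below, $X = 10^{Y}$ with $Y \sim U[0,1)$ is 10-Benford, and W is an arbitrarily chosen lognormal variable concentrated near 1, which is far from 10-Benford on its own; the reported numbers are Kolmogorov-Smirnov distances of $\langle \log_{10} \cdot \rangle$ from $U[0,1)$. The seed, sample size, and the distribution of W are illustrative assumptions.

```python
import math
import random

def frac(y):
    """Fractional part <y> = y - floor(y)."""
    return y - math.floor(y)

def ks_uniform(us):
    """Kolmogorov-Smirnov distance between the empirical cdf of `us` and U[0,1)."""
    xs = sorted(us)
    n = len(xs)
    return max(max((i + 1) / n - x, x - i / n) for i, x in enumerate(xs))

rng = random.Random(7)
N = 20000
X = [10 ** rng.random() for _ in range(N)]              # 10-Benford
W = [math.exp(rng.gauss(0.0, 0.2)) for _ in range(N)]   # assumed lognormal W, clustered near 1
d_W = ks_uniform([frac(math.log10(w)) for w in W])      # W alone: far from uniform
d_XW = ks_uniform([frac(math.log10(x * w)) for x, w in zip(X, W)])  # product: close to uniform
```

The mechanism is visible in the code: $\log_{10}(XW) = Y + \log_{10} W$, and adding an independent quantity to a u.d. mod 1 variable leaves it u.d. mod 1.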

So far, the spectra we’ve seen are at most countably infinite. One may wonder if there exists a random variable with an uncountable spectrum. Whittaker showed by an example that such a random variable exists. Let $b > 1$ be given. Define $g: \mathbb{R} \to [0, \infty)$ by

It may be shown that g is a legitimate pdf, and Y is u.d. mod 1 if . Hence $X = b^{Y}$ is b-Benford. (This is what I’ve called Whittaker’s random variable.) For any $c > 1$, define $Y_c = \log_c X$. It may then be shown that $Y_c$ is u.d. mod 1 (and hence that X is c-Benford) if and only if . In summary, . Citations: Refs. [3,5,6].

4. Digression: Fourier Transforms

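Before going much further, we need to list some facts about Fourier transforms. Let g denote the pdf of a real valued random variable $Y$. The Fourier transform of g is the function $\hat g: \mathbb{R} \to \mathbb{C}$ defined as

$$\hat g(t) = \int_{-\infty}^{\infty} g(y)\,e^{-2\pi i t y}\,dy = u(t) - i\,v(t)$$

for all $t \in \mathbb{R}$, where

$$u(t) = \int_{-\infty}^{\infty} g(y)\cos(2\pi t y)\,dy, \qquad v(t) = \int_{-\infty}^{\infty} g(y)\sin(2\pi t y)\,dy.$$

Note that u is an even function and v is an odd function, and hence that $\hat g(-t) = \overline{\hat g(t)}$, where the overbar denotes complex conjugation. Though the Fourier transform is generally complex valued, it is real valued if g is an even function, i.e., if Y is symmetrically distributed around the origin. Hence, if g is an even function, then $\hat g$ is an even function. Finally, note that $\hat g(0) = 1$.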
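The sign and $2\pi$ conventions matter throughout the later sections, so a quick numerical check may help. The Python sketch below assumes the convention $\hat g(t) = \int g(y)\,e^{-2\pi i t y}\,dy$, approximates the integral with the midpoint rule (the function names and the integration windows are illustrative choices), and compares it with the closed forms for $U[0,1)$ and $N(0,1)$ as well as with the symmetry $\hat g(-t) = \overline{\hat g(t)}$.

```python
import cmath
import math

def fourier_transform(pdf, t, lo, hi, steps=20000):
    """Midpoint-rule approximation to g_hat(t) = integral of g(y) exp(-2 pi i t y) dy.

    Assumes the pdf is negligible outside [lo, hi].
    """
    width = (hi - lo) / steps
    total = 0j
    for k in range(steps):
        y = lo + (k + 0.5) * width
        total += pdf(y) * cmath.exp(-2j * math.pi * t * y)
    return total * width

def uniform_pdf(y):
    """Density of U[0,1)."""
    return 1.0 if 0.0 <= y < 1.0 else 0.0

def normal_pdf(y):
    """Density of N(0, 1)."""
    return math.exp(-0.5 * y * y) / math.sqrt(2.0 * math.pi)
```

Under this convention the $U[0,1)$ transform is $e^{-\pi i t}\sin(\pi t)/(\pi t)$ and the $N(0,1)$ transform is $e^{-2\pi^2 t^2}$; with the other common convention ($e^{i t y}$ in the integrand) the same densities would give different-looking formulas.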

The following fact is very useful.

Proposition 9

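(shift and scale with random variables). Suppose that $W = \alpha Y + \beta$, where $\alpha \neq 0$. Suppose that $Y \sim g$ and let h denote the pdf of W. We may obtain h from g and $\hat h$ from $\hat g$ as follows:

$$h(w) = \frac{1}{|\alpha|}\,g\!\left(\frac{w - \beta}{\alpha}\right)$$

(proof left to reader) and

$$\hat h(t) = e^{-2\pi i \beta t}\,\hat g(\alpha t). \tag{13}$$

If $\beta = 0$, Equation (13) becomes $\hat h(t) = \hat g(\alpha t)$.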

Appendix A of this paper contains a table of selected Fourier transforms.

5. A Framework for Benford Analysis

Suppose that X is a positive random variable and that $b > 1$. We may wish to know whether X is b-Benford and, if it’s not, how far it differs from “Benfordness.” I call an attempt to answer these and related questions a “Benford analysis.” In this section I establish some notation I’ll use for a Benford analysis, and give some fundamental results that allow us to proceed.

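First, define $Y = \log_b X$ and let g denote the pdf of Y. Next, let $\tilde g$ denote the pdf of $\langle Y \rangle$, so that $\langle Y \rangle \sim \tilde g$.

Given $\tilde g$ we may answer the two questions given above. (1) X is b-Benford if and only if $\tilde g(s) = 1$ for almost all $s \in [0,1)$. (2) If X is not b-Benford, we may measure its deviation from Benfordness by any measure of the deviation of $\tilde g$ from a uniform distribution. For example, if $\tilde g$ is continuous, or if its only discontinuities are “jumps,” we could use the infinity norm:

$$\|\tilde g - 1\|_\infty = \sup_{0 \le s < 1} \big|\tilde g(s) - 1\big|.$$

We need a way to find $\tilde g$ from g. Under a reasonable assumption, it may be shown that

$$\tilde g(s) = \sum_{k=-\infty}^{\infty} g(s + k) \tag{16}$$

for all $s \in [0,1)$. The “reasonable assumption” is described in [2]. In this paper we’ll just accept Equation (16) as given.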
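Equation (16) also suggests a direct numerical check. The Python sketch below truncates the sum at $|k| \le k_{\max}$ and approximates the infinity norm on a grid; both truncations, and the choice $Y \sim N(0, \sigma^2)$ (so that $X = b^{Y}$ is lognormal), are assumptions made only for illustration.

```python
import math

def normal_pdf(y, mu=0.0, sigma=1.0):
    """N(mu, sigma^2) density, used here as an assumed example of the pdf g of Y."""
    z = (y - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def wrapped_pdf(pdf, s, kmax=60):
    """Equation (16) truncated to |k| <= kmax: the sum of pdf(s + k)."""
    return sum(pdf(s + k) for k in range(-kmax, kmax + 1))

def sup_deviation(pdf, grid=500, kmax=60):
    """Grid approximation of the infinity-norm distance of the wrapped pdf from 1."""
    return max(abs(wrapped_pdf(pdf, i / grid, kmax) - 1.0) for i in range(grid))

wide = lambda y: normal_pdf(y, 0.0, 1.0)     # Y ~ N(0, 1): wrapped pdf is nearly flat
narrow = lambda y: normal_pdf(y, 0.0, 0.1)   # Y ~ N(0, 0.01): wrapped pdf is sharply peaked
```

For the wide case the deviation from 1 is tiny but not zero, which is the sense in which a lognormal X can be “effectively” but not exactly Benford.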

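Although Equation (16) is fundamental for a Benford analysis of X, it is not very useful for finding the answers to some analytical questions one may ask. Fourier analysis provides the tools needed to continue the analysis. It may be shown [3] that the Fourier series representation of $\tilde g$ is

$$\tilde g(s) = \sum_{n=-\infty}^{\infty} \hat g(n)\,e^{2\pi i n s}. \tag{17}$$

At first sight this expression may not seem very useful; the series of real valued functions in Equation (16) has been replaced by a series of complex valued functions multiplied by complex coefficients. But $\hat g(0) = 1$, and Equation (17) may be written as

$$\tilde g(s) = 1 + \sum_{n=1}^{\infty}\Big[\hat g(n)\,e^{2\pi i n s} + \hat g(-n)\,e^{-2\pi i n s}\Big]. \tag{18}$$

As $\hat g(-n)\,e^{-2\pi i n s}$ is the complex conjugate of $\hat g(n)\,e^{2\pi i n s}$, it follows that each term in brackets in Equation (18) is real valued. In fact,

$$\hat g(n)\,e^{2\pi i n s} + \hat g(-n)\,e^{-2\pi i n s} = 2\big[\alpha_n\cos(2\pi n s) + \beta_n\sin(2\pi n s)\big], \tag{19}$$

where the real numbers $\alpha_n$ and $\beta_n$ are defined by

$$\hat g(n) = \alpha_n - i\,\beta_n, \qquad \hat g(-n) = \alpha_n + i\,\beta_n. \tag{20}$$

Combining Equations (18) and (19) yields

$$\tilde g(s) = 1 + 2\sum_{n=1}^{\infty}\big[\alpha_n\cos(2\pi n s) + \beta_n\sin(2\pi n s)\big]. \tag{21}$$

In practice, it is often convenient to go one step further and rewrite Equation (21) as

$$\tilde g(s) = 1 + 2\sum_{n=1}^{\infty} A_n\cos\!\big[2\pi n(s - \phi_n)\big], \tag{22}$$

where $A_n$ satisfies

$$A_n^2 = \alpha_n^2 + \beta_n^2 \tag{23}$$

and $\phi_n$ is any solution to

$$A_n\cos(2\pi n\phi_n) = \alpha_n, \qquad A_n\sin(2\pi n\phi_n) = \beta_n. \tag{24}$$

The parameters $A_n$ and $\phi_n$ are not uniquely determined by Equations (23) and (24), but in practice natural candidates for $A_n$ and $\phi_n$ often present themselves. I’ll call $A_n$ an “amplitude” (though this term generally refers to $|A_n|$) and $\phi_n$ a “phase.”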
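Once $\hat g(n)$ is known, one convenient choice in Equations (23) and (24) is $A_n = |\hat g(n)| \ge 0$ and $\phi_n = -\arg \hat g(n)/(2\pi n)$, which gives $\hat g(n) = A_n e^{-2\pi i n \phi_n}$. The Python sketch below uses that choice (the function names are hypothetical) and compares the partial sum $1 + 2\sum_{n \le N} A_n\cos[2\pi n(s - \phi_n)]$ with the wrapped sum of Equation (16) for an assumed normal pdf, whose transform under the convention $\hat g(t) = \int g(y)e^{-2\pi i t y}dy$ is $e^{-2\pi i \mu t - 2\pi^2\sigma^2 t^2}$.

```python
import cmath
import math

def amplitude_phase(ghat_n, n):
    """One admissible choice: A_n = |g_hat(n)| and phi_n = -arg(g_hat(n)) / (2 pi n)."""
    return abs(ghat_n), -cmath.phase(ghat_n) / (2.0 * math.pi * n)

def series_pdf(ghat, s, nmax=20):
    """Partial sum 1 + 2 * sum_{n=1}^{nmax} A_n cos(2 pi n (s - phi_n))."""
    total = 1.0
    for n in range(1, nmax + 1):
        amp, phi = amplitude_phase(ghat(n), n)
        total += 2.0 * amp * math.cos(2.0 * math.pi * n * (s - phi))
    return total

# Assumed example: Y ~ N(mu, sigma^2).
mu, sigma = 0.3, 0.25

def ghat(t):
    """Fourier transform of the N(mu, sigma^2) density under the 2*pi convention."""
    return cmath.exp(-2j * math.pi * mu * t - 2.0 * math.pi ** 2 * sigma ** 2 * t ** 2)

def wrapped_direct(s, kmax=40):
    """Equation (16) evaluated directly for the same normal pdf."""
    return sum(math.exp(-0.5 * ((s + k - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))
               for k in range(-kmax, kmax + 1))
```

Since the phase is only determined modulo $1/n$, the printed $\phi_n$ for $n \ge 2$ may differ from μ by a multiple of $1/n$ without changing any term of the series.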

Proposition 10.

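The pdf $\tilde g$ is that of a $U[0,1)$ random variable if and only if $\hat g(n) = 0$ for all $n \in \mathbb{N}$. Equivalently, the pdf $\tilde g$ is that of a $U[0,1)$ random variable if and only if $A_n = 0$ for all $n \in \mathbb{N}$.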

Proof.

The first assertion follows from Equation (18) combined with $\hat g(-n) = \overline{\hat g(n)}$ for any $n \in \mathbb{N}$. The second assertion follows from Equation (22). □

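Proposition 11.

For every $n \in \mathbb{N}$, $|A_n| = |\hat g(n)|$.

Proof.

Solving Equation (20) for $\alpha_n$ and $\beta_n$, we find

$$\alpha_n = \frac{\hat g(n) + \hat g(-n)}{2}, \qquad \beta_n = \frac{i\,\big[\hat g(n) - \hat g(-n)\big]}{2}.$$

It follows that

$$A_n^2 = \alpha_n^2 + \beta_n^2 = \hat g(n)\,\hat g(-n) = |\hat g(n)|^2.$$

□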

6. Base Dependence: Theory

Suppose we’re given a u.d. mod 1 random variable Y with pdf g and $b > 1$. Then $X = b^{Y}$ is b-Benford. Now let $c > 1$ be another possible base and define $Y_c = \log_c X$. Let $g_c$ and $\tilde g_c$ denote the pdfs of $Y_c$ and $\langle Y_c \rangle$, respectively, and let $\hat g_c$ denote the Fourier transform of $g_c$. My aim in this section is to present tools that allow one to study how $\tilde g_c$ varies as a function of c.

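The first thing to observe is that $Y_c$ is proportional to Y:

$$Y_c = \log_c X = Y \log_c b = \rho\,Y, \qquad \rho = \log_c b.$$

It then follows from Proposition 9 that

$$\hat g_c(t) = \hat g(\rho t) \tag{28}$$

for any $t \in \mathbb{R}$.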

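To use Equation (28) we first need to say something about g. I introduced “seed functions” in [2] and showed that every pdf g of a u.d. mod 1 random variable may be written

$$g(y) = H(y) - H(y - 1) \tag{29}$$

for every $y \in \mathbb{R}$, where H is a seed function. Hence

$$\hat g(t) = \int_{-\infty}^{\infty}\big[H(y) - H(y - 1)\big]\,e^{-2\pi i t y}\,dy. \tag{30}$$

Under various assumptions about H, we may combine Equations (28) and (30) to compute $\hat g_c(n)$ for all $n \in \mathbb{N}$, and given $\hat g_c(n)$ we may compute $A_n$ and $\phi_n$ in the expression

$$\tilde g_c(s) = 1 + 2\sum_{n=1}^{\infty} A_n\cos\!\big[2\pi n(s - \phi_n)\big]$$

for all $s \in [0,1)$, and thereby derive $\tilde g_c$. In this section I’ll partially carry out this program for two broad classes of seed functions: (1) H is a step function, and (2) H is increasing and absolutely continuous.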

Example 3.

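Suppose that H is the following step function:

$$H(y) = \begin{cases} 0, & y < 0, \\ 1, & y \ge 0. \end{cases}$$

This seed function implies that

$$g(y) = \begin{cases} 1, & 0 \le y < 1, \\ 0, & \text{otherwise}, \end{cases} \tag{31}$$

that is, $Y \sim U[0,1)$. Hence, from the table of Fourier transforms in Appendix A,

$$\hat g(t) = e^{-\pi i t}\,\frac{\sin(\pi t)}{\pi t} \tag{32}$$

(where it is understood that $\hat g(0) = 1$). Combining this with Equation (28) yields

$$\hat g_c(n) = e^{-\pi i \rho n}\,\frac{\sin(\pi\rho n)}{\pi\rho n} \tag{33}$$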

for any $n \in \mathbb{N}$. From Proposition 10 we know that X will be c-Benford if and only if $\hat g_c(n) = 0$ for every $n \in \mathbb{N}$, and from Equation (33) it’s clear that this happens if and only if ρ is an integer. But

$$\rho = m \iff c = b^{1/m}$$

for every $m \in \mathbb{N}$. Hence, X is c-Benford if and only if c is an integral root of b. This result agrees with Proposition 4.
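The conclusion of Example 3 can be checked numerically. Assuming the closed form $\hat g_c(n) = e^{-\pi i\rho n}\sin(\pi\rho n)/(\pi\rho n)$ with $\rho = \log_c b$, the Python sketch below (hypothetical helper names) reports the largest $|\hat g_c(n)|$ over $1 \le n \le 100$; it should vanish up to rounding when c is an integral root of b and not otherwise.

```python
import cmath
import math

def sinc_pi(x):
    """sin(pi x) / (pi x), with the removable singularity at x = 0 set to 1."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)

def ghat_c_uniform(n, b, c):
    """g_hat_c(n) = exp(-pi i rho n) sinc(rho n), rho = log_c b, for Y ~ U[0,1) and X = b**Y."""
    rho = math.log(b) / math.log(c)
    return cmath.exp(-1j * math.pi * rho * n) * sinc_pi(rho * n)

def max_coefficient(b, c, nmax=100):
    """Largest |g_hat_c(n)| for 1 <= n <= nmax; zero up to rounding exactly when X is c-Benford."""
    return max(abs(ghat_c_uniform(n, b, c)) for n in range(1, nmax + 1))
```

With b = 10, the bases $10^{1/2}$ and $10^{1/3}$ give coefficients at the level of floating-point noise, while c = 8 (as in Example 1) does not.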

Certain features of this result are repeated with every seed function H we consider. In particular, we always find that $\hat g_c(n) = 0$ for all $n \in \mathbb{N}$ whenever c is an integral root of b. Also, note that $\hat g_c(n)$ depends on c entirely through the parameter $\rho$.

Equation (33) implies that

$$A_n = \frac{\sin(\pi\rho n)}{\pi\rho n}, \qquad \phi_n = \frac{\rho}{2}$$

for this example.

Example 4.

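To generalize Example 3 slightly, suppose that H jumps from 0 to 1 at $y = \mu$ for some $\mu \in \mathbb{R}$. The pdf g implied by this seed function is just that given by Equation (31) shifted right by μ. From Proposition 9 and Equation (32) we obtain

$$\hat g(t) = e^{-\pi i (2\mu + 1) t}\,\frac{\sin(\pi t)}{\pi t}$$

and hence

$$\hat g_c(n) = e^{-\pi i (2\mu + 1)\rho n}\,\frac{\sin(\pi\rho n)}{\pi\rho n} \tag{36}$$

for any $n \in \mathbb{N}$. Note that $\hat g_c(n) = 0$ if and only if $\rho n = k$ for some $k \in \mathbb{N}$. Equation (36) implies that

$$A_n = \frac{\sin(\pi\rho n)}{\pi\rho n}, \qquad \phi_n = \left(\mu + \tfrac{1}{2}\right)\rho$$

for this example. The only effect of including μ is to change the phase. Note that the phase does not depend on n.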

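Now assume that H is increasing and absolutely continuous. This assumption makes H mathematically equivalent to the distribution function of an absolutely continuous random variable. Under this assumption H is differentiable almost everywhere and $H'(y) = h(y)$ for almost all y, where h is a pdf. We want to evaluate the integral

$$\hat g(t) = \int_{-\infty}^{\infty}\big[H(y) - H(y - 1)\big]\,e^{-2\pi i t y}\,dy = \int_{-\infty}^{\infty}\left[\int_{y-1}^{y} h(x)\,dx\right]e^{-2\pi i t y}\,dy. \tag{37}$$

It’s clear from the rightmost expression in this equation that $\hat g(0) = 1$. When $t \neq 0$, an initial integration by parts yields

$$\hat g(t) = \frac{1}{2\pi i t}\int_{-\infty}^{\infty}\big[h(y) - h(y - 1)\big]\,e^{-2\pi i t y}\,dy.$$

Evaluating this integral,

$$\hat g(t) = \frac{1 - e^{-2\pi i t}}{2\pi i t}\,\hat h(t) = \hat h(t)\,e^{-\pi i t}\,\frac{\sin(\pi t)}{\pi t}.$$

Hence, by Equation (28),

$$\hat g_c(n) = \hat h(\rho n)\,e^{-\pi i \rho n}\,\frac{\sin(\pi\rho n)}{\pi\rho n} \tag{38}$$

for any $n \in \mathbb{N}$. We see once again that $\hat g_c(n) = 0$ for all $n \in \mathbb{N}$ whenever c is an integral root of b. In addition, there’s another possibility; $\hat g_c(n) = 0$ for all $n \in \mathbb{N}$ if $\hat h(\rho n) = 0$ for all $n \in \mathbb{N}$. This is essentially the possibility that was exploited in the construction of Whittaker’s random variable. We’ll return to this point in a moment.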
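Because Equation (38) carries most of the weight in what follows, a numerical cross-check may be reassuring. The Python sketch below assumes a normal seed function H with mean μ and standard deviation σ (values chosen arbitrarily), and compares the closed form $\hat h(t)\,e^{-\pi i t}\sin(\pi t)/(\pi t)$ with a midpoint-rule transform of $g(y) = H(y) - H(y-1)$ at the points $t = \rho n$, using b = 10 and c = 8 as in Example 1. The helper names are hypothetical.

```python
import cmath
import math

def normal_cdf(x, mu, sigma):
    """N(mu, sigma^2) distribution function, used as an assumed seed function H."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))

def ghat_closed_form(hhat, t):
    """h_hat(t) * exp(-pi i t) * sin(pi t) / (pi t), with g_hat(0) = 1."""
    if t == 0:
        return 1.0 + 0j
    return hhat(t) * cmath.exp(-1j * math.pi * t) * math.sin(math.pi * t) / (math.pi * t)

def ghat_numeric(H, t, lo=-10.0, hi=11.0, steps=40000):
    """Midpoint-rule transform of g(y) = H(y) - H(y - 1); g is assumed negligible off [lo, hi]."""
    width = (hi - lo) / steps
    total = 0j
    for k in range(steps):
        y = lo + (k + 0.5) * width
        total += (H(y) - H(y - 1.0)) * cmath.exp(-2j * math.pi * t * y)
    return total * width

mu, sigma = 0.2, 0.3                       # illustrative seed parameters
H = lambda y: normal_cdf(y, mu, sigma)
hhat = lambda t: cmath.exp(-2j * math.pi * mu * t - 2.0 * math.pi ** 2 * sigma ** 2 * t ** 2)
rho = math.log(10) / math.log(8)           # b = 10, c = 8
```

The agreement reflects the fact that g is the convolution of h with the $U[0,1)$ density, so its transform is the product of the two transforms.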

Example 5.

Still working with the assumption that H is increasing and absolutely continuous, we now make the additional assumption that h is an even function, which implies that $\hat h$ is a real-valued even function. Under these assumptions, Equation (38) implies that

$$A_n = \hat h(\rho n)\,\frac{\sin(\pi\rho n)}{\pi\rho n}, \qquad \phi_n = \frac{\rho}{2}.$$

Example 6.

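In Example 5 we assume that h is even, so that it’s symmetrical around the point $0$. Now assume that h is symmetrical around the point μ for some $\mu \in \mathbb{R}$. Define $h_0(x) = h(x + \mu)$, so $h_0$ is an even function. It is easy to show that $\hat h(t) = e^{-2\pi i \mu t}\,\hat h_0(t)$. Combining this fact with Equation (38) yields

$$\hat g_c(n) = \hat h_0(\rho n)\,e^{-\pi i (2\mu + 1)\rho n}\,\frac{\sin(\pi\rho n)}{\pi\rho n}. \tag{40}$$

Equation (40) implies that

$$A_n = \hat h_0(\rho n)\,\frac{\sin(\pi\rho n)}{\pi\rho n}, \qquad \phi_n = \left(\mu + \tfrac{1}{2}\right)\rho \tag{41}$$

for Example 6. We observe that the phase depends on μ and ρ, but not on n.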

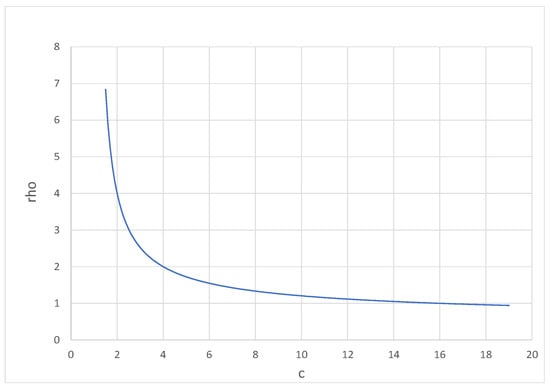

Note that $A_n$ and $\phi_n$ depend on c only through $\rho$ in all of these examples. It’s useful to keep in mind that $\rho = \log_c b$ depends on c as shown in Figure 1 (where I’ve let ). In words, ρ increases from 1 to ∞ as c decreases from b towards 1.

Figure 1.

$\rho = \log_c b$ as a function of c.

7. Base Dependence: Examples

Equations (38) and (41) provide the scaffolding for the construction of $\tilde g_c$, but require insertion of an actual formula for $\hat h$ in Equation (38) or for $\hat h_0$ in Equation (41) for completion. This section completes this construction using the table of Fourier transforms in Appendix A.

Every distribution function is a legitimate seed function. Hence every Fourier transform given in Appendix A is a legitimate candidate for $\hat h$. Moreover, four of the distributions in Appendix A (the normal, Laplace, Cauchy, and logistic) have even densities, and their Fourier transforms are therefore legitimate candidates for $\hat h_0$. All four of these densities have a fixed spread, however (the normal, Laplace, and logistic have fixed variances, and the Cauchy has no finite variance at all), and it is desirable to append a “scale” parameter $\sigma > 0$ that allows this spread to be adjusted. Proposition 9 justifies the following expanded table of Fourier transforms.

Example 7.

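Gauss-Benford random variables. Suppose that H is the distribution function of a $N(\mu, \sigma^2)$ random variable, i.e., a $N(0, \sigma^2)$ random variable shifted μ to the right. I’ll call the random variable X implied by this seed function a “Gauss-Benford” random variable. Combining Equation (41) with the appropriate entry from Table 1, we obtain

$$A_n = e^{-2\pi^2\sigma^2\rho^2 n^2}\,\frac{\sin(\pi\rho n)}{\pi\rho n}, \qquad \phi_n = \left(\mu + \tfrac{1}{2}\right)\rho.$$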

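Table 1.

Fourier transforms of selected even density functions with a scale parameter $\sigma > 0$.

| Name | Density $h_0(x)$ | Fourier transform $\hat h_0(t)$ |
|---|---|---|
| Normal | $\frac{1}{\sigma\sqrt{2\pi}}\,e^{-x^2/(2\sigma^2)}$ | $e^{-2\pi^2\sigma^2 t^2}$ |
| Laplace | $\frac{1}{2\sigma}\,e^{-\lvert x\rvert/\sigma}$ | $\frac{1}{1 + 4\pi^2\sigma^2 t^2}$ |
| Cauchy | $\frac{\sigma}{\pi(\sigma^2 + x^2)}$ | $e^{-2\pi\sigma\lvert t\rvert}$ |
| Logistic | $\frac{e^{-x/\sigma}}{\sigma\,(1 + e^{-x/\sigma})^2}$ | $\frac{2\pi^2\sigma t}{\sinh(2\pi^2\sigma t)}$ |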

As , it follows that . Let

so . Viewed as a function of n or ρ, oscillates within an envelope , and for all and ρ. Asymptotically, letting any of the parameters n, ρ, or implies that . Equation (43) implies that . The descent of towards zero with increases in n, ρ, or σ is extremely rapid, and can be small with even low values of ρ and σ. For example, letting implies that . In this case, the graph of is visually indistinguishable from that of a uniform distribution on and we would have to conclude that X is “effectively” c-Benford for all .

Example 8.

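Laplace-Benford random variables. Now suppose that H is the distribution function of a Laplace random variable with location μ and scale σ. I’ll call the random variable X implied by this seed function a “Laplace-Benford” random variable. Combining Equation (41) with the appropriate entry from Table 1, we obtain

$$A_n = \frac{1}{1 + 4\pi^2\sigma^2\rho^2 n^2}\,\frac{\sin(\pi\rho n)}{\pi\rho n}, \qquad \phi_n = \left(\mu + \tfrac{1}{2}\right)\rho.$$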

As , it follows that . Let

so and for all and ρ. Asymptotically, letting any of the parameters n, ρ, or implies that . Though the asymptotic limits of Equations (43) and (45) are identical, the approach of to zero (as n, ρ, or σ increases) is very much slower for Equation (45) than it is for Equation (43).

Example 9.

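Cauchy-Benford random variables. Now suppose that H is the distribution function of a Cauchy random variable with location μ and scale σ. I’ll call the random variable X implied by this seed function a “Cauchy-Benford” random variable. Combining Equation (41) with the appropriate entry from Table 1, we obtain

$$A_n = e^{-2\pi\sigma\rho n}\,\frac{\sin(\pi\rho n)}{\pi\rho n}, \qquad \phi_n = \left(\mu + \tfrac{1}{2}\right)\rho.$$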

As , it follows that . Let

so . The asymptotic behavior for this is identical to that of Equations (43) or (45). The rate of descent of towards zero is intermediate between that of a Gauss-Benford random variable and that of a Laplace-Benford random variable.

Example 10.

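Logistic-Benford random variables. For our final example of a symmetric seed function, let H be the distribution function of a logistic random variable with location μ and scale σ. I’ll call the random variable X implied by this seed function a “Logistic-Benford” random variable. Combining Equation (41) with the appropriate entry from Table 1, we obtain

$$A_n = \hat h_0(\rho n)\,\frac{\sin(\pi\rho n)}{\pi\rho n}, \qquad \phi_n = \left(\mu + \tfrac{1}{2}\right)\rho,$$

where

$$\hat h_0(\rho n) = \frac{2\pi^2\sigma\rho n}{\sinh(2\pi^2\sigma\rho n)}.$$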

The asymptotic behavior for this is identical to that of the previous three random variables. The rate of convergence of to zero is comparable to that of a Cauchy-Benford random variable.
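The four symmetric cases can be compared side by side. Since Equation (22) gives $\|\tilde g_c - 1\|_\infty \le 2\sum_{n \ge 1}|A_n|$, the Python sketch below evaluates a truncated version of that bound using $A_n = \hat h_0(\rho n)\sin(\pi\rho n)/(\pi\rho n)$ and the scaled transforms of Table 1. The truncation at `nmax` terms, the sample values of ρ and σ, and the helper names are illustrative assumptions; the phase plays no role in this bound.

```python
import math

def x_over_sinh(x):
    """x / sinh(x), evaluated stably for large |x| (equals 1 at x = 0)."""
    x = abs(x)
    if x == 0.0:
        return 1.0
    return 2.0 * x * math.exp(-x) / -math.expm1(-2.0 * x)

# h0_hat(t) with scale sigma for the four symmetric seed densities (2*pi convention assumed).
H0_HAT = {
    "gauss": lambda t, s: math.exp(-2.0 * math.pi ** 2 * s ** 2 * t ** 2),
    "laplace": lambda t, s: 1.0 / (1.0 + 4.0 * math.pi ** 2 * s ** 2 * t ** 2),
    "cauchy": lambda t, s: math.exp(-2.0 * math.pi * s * abs(t)),
    "logistic": lambda t, s: x_over_sinh(2.0 * math.pi ** 2 * s * t),
}

def amplitudes(family, rho, sigma, nmax=200):
    """A_n = h0_hat(rho n) sin(pi rho n) / (pi rho n) for n = 1..nmax."""
    return [H0_HAT[family](rho * n, sigma) * math.sin(math.pi * rho * n) / (math.pi * rho * n)
            for n in range(1, nmax + 1)]

def sup_norm_bound(family, rho, sigma, nmax=200):
    """Truncated bound 2 * sum |A_n| on the sup-norm distance of the pdf of <Y_c> from 1."""
    return 2.0 * sum(abs(a) for a in amplitudes(family, rho, sigma, nmax))
```

For ρ = 1.1 and σ = 0.5, the bound is smallest for the Gauss-Benford case and largest for the Laplace-Benford case, with the Cauchy-Benford case in between, consistent with the comparisons made in Examples 7 to 9.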

Example 11.

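Gamma-Benford random variables. Suppose that the seed function H is the distribution function of a $\Gamma(\alpha, \lambda)$ random variable, with shape $\alpha > 0$ and rate $\lambda > 0$. I’ll call the random variable X implied by this seed function a “Gamma-Benford” random variable. This seed function is increasing and absolutely continuous, but h is not symmetrically distributed around any point μ, so Equation (41) does not apply. Combining Equation (38) with the appropriate entry from the table of Fourier transforms found in Appendix A, we obtain

$$\hat g_c(n) = \left(\frac{\lambda}{\lambda + 2\pi i \rho n}\right)^{\alpha} e^{-\pi i \rho n}\,\frac{\sin(\pi\rho n)}{\pi\rho n}$$

for every integer $n \ge 1$. To make headway, define

$$\theta_n = \arctan\!\left(\frac{2\pi\rho n}{\lambda}\right)$$

and rewrite $\lambda + 2\pi i \rho n$ in polar form, so

$$\lambda + 2\pi i \rho n = \sqrt{\lambda^2 + 4\pi^2\rho^2 n^2}\; e^{i\theta_n}.$$

Hence,

$$\hat g_c(n) = \left(\frac{\lambda^2}{\lambda^2 + 4\pi^2\rho^2 n^2}\right)^{\alpha/2} \frac{\sin(\pi\rho n)}{\pi\rho n}\, e^{-2\pi i n \phi_n},$$

where

$$\phi_n = \frac{\rho}{2} + \frac{\alpha\,\theta_n}{2\pi n}. \tag{54}$$

Hence,

$$\tilde g_c(s) = 1 + 2\sum_{n=1}^{\infty} A_n\cos\!\big[2\pi n(s - \phi_n)\big],$$

where

$$A_n = \left(\frac{\lambda^2}{\lambda^2 + 4\pi^2\rho^2 n^2}\right)^{\alpha/2} \frac{\sin(\pi\rho n)}{\pi\rho n}. \tag{56}$$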

To “compare and contrast” these results with those for symmetric distributions, we make the following observations. (1) The presence of $\sin(\pi\rho n)$ in the numerator of Equation (56), combined with $\lambda^2/(\lambda^2 + 4\pi^2\rho^2 n^2) > 0$, implies that $\hat g_c(n) = 0$ for all $n \in \mathbb{N}$ if and only if ρ is an integer, i.e., if and only if c is an integral root of b. (2) Unlike our earlier results, where the phase is given by Equation (41) and does not depend on n, for a Gamma-Benford random variable the phase is given by Equation (54). It’s easy to show that $\theta_n \to \pi/2$ as $n \to \infty$, and hence that $\phi_n \to \rho/2$. (3) It’s easy to show that
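The polar-form manipulation for the Gamma-Benford case can be verified numerically. The Python sketch below (hypothetical function name; shape α, rate λ, and ρ chosen arbitrarily) evaluates $\hat g_c(n) = (\lambda/(\lambda + 2\pi i\rho n))^{\alpha} e^{-\pi i\rho n}\sin(\pi\rho n)/(\pi\rho n)$ directly, using Python’s principal-branch complex power, and compares it with $A_n e^{-2\pi i n\phi_n}$, where $A_n$ and $\phi_n = \rho/2 + \alpha\theta_n/(2\pi n)$, $\theta_n = \arctan(2\pi\rho n/\lambda)$, are taken from Equations (56) and (54).

```python
import cmath
import math

def gamma_benford_terms(n, rho, alpha, lam):
    """Return (g_hat_c(n), A_n, phi_n) for a Gamma(alpha, rate lam) seed function.

    Illustrative helper: g_hat_c(n) is evaluated directly, while A_n and phi_n
    use the polar-form expressions.
    """
    t = rho * n
    sinc = math.sin(math.pi * t) / (math.pi * t)
    # Python's complex ** float uses the principal branch of the power.
    ghat = (lam / (lam + 2j * math.pi * t)) ** alpha * cmath.exp(-1j * math.pi * t) * sinc
    theta = math.atan(2.0 * math.pi * t / lam)     # argument of lam + 2*pi*i*rho*n
    amp = (lam ** 2 / (lam ** 2 + 4.0 * math.pi ** 2 * t ** 2)) ** (alpha / 2.0) * sinc
    phi = rho / 2.0 + alpha * theta / (2.0 * math.pi * n)
    return ghat, amp, phi

rho, alpha, lam = 1.1, 2.5, 3.0      # illustrative parameter values
```

A non-integer α is used deliberately, since that is where a branch mismatch would show up.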

Example 12.

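Whittaker-Benford random variables: For our final example, we return to Equation (38),

$$\hat g_c(n) = \hat h(\rho n)\,e^{-\pi i \rho n}\,\frac{\sin(\pi\rho n)}{\pi\rho n},$$

which holds for all increasing and absolutely continuous seed functions. All of our previous examples have made use of the fact that $\hat g_c(n) = 0$ for all $n \in \mathbb{N}$ whenever ρ is an integer. We now consider another possibility: $\hat g_c(n) = 0$ for all $n \in \mathbb{N}$ if $\hat h(\rho n) = 0$ for all $n \in \mathbb{N}$. I’ll say that a b-Benford random variable X satisfying this condition is a “Whittaker-Benford” random variable. The key here is to find $\hat h$ with bounded support, and the simplest such $\hat h$ is triangular:

$$\hat h(t) = \max\!\left(0,\; 1 - \frac{|t|}{a}\right), \tag{57}$$

where $a > 0$. With this it’s clear that $\hat h(\rho n) = 0$ for all $n \in \mathbb{N}$ if $\rho \ge a$. Note that $\rho \ge a$ if and only if $c \le b^{1/a}$. Therefore, the Benford spectrum of a Whittaker-Benford random variable X with $\hat h$ given by Equation (57) has two (overlapping) components: $\mathcal{B}(X) = \mathcal{B}^d \cup \mathcal{B}^c$, where

$$\mathcal{B}^d = \{\, b^{1/m} : m \in \mathbb{N} \,\}, \qquad \mathcal{B}^c = \big(1, b^{1/a}\big].$$

(The superscript d stands for “discrete,” and the superscript c stands for “continuous.”) If $a \le 1$, then $\mathcal{B}^d \subseteq \mathcal{B}^c$, so $\mathcal{B}(X) = \mathcal{B}^c$. For example, if then . On the other hand, if $a > 1$, then $b^{1/a} < b$, so $\mathcal{B}(X)$ equals the disjoint union of the discrete set $\{\, b^{1/m} : m \in \mathbb{N},\ m < a \,\}$ and the continuous set $\mathcal{B}^c$.

The function h that yields $\hat h$ given by Equation (57) is

$$h(x) = \frac{1 - \cos(2\pi a x)}{2\pi^2 a x^2}.$$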
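Membership in the spectrum of a Whittaker-Benford variable therefore reduces to a condition on ρ: either ρ is a positive integer or $\rho \ge a$. The Python sketch below (hypothetical names) compares that prediction with a direct scan of $|\hat g_c(n)| = \hat h(\rho n)\,|\sin(\pi\rho n)|/(\pi\rho n)$ for the triangular $\hat h(t) = \max(0, 1 - |t|/a)$. The scan stops at `nmax`, which is harmless here: if ρ is neither an integer nor at least a, the n = 1 term is already nonzero. The values b = 10, a = 2.5, a = 0.5, and the test bases are illustrative choices.

```python
import math

def tri_hhat(t, a):
    """Triangular Fourier transform max(0, 1 - |t|/a), supported on [-a, a]."""
    return max(0.0, 1.0 - abs(t) / a)

def max_coefficient(c, b, a, nmax=200):
    """max over 1 <= n <= nmax of |g_hat_c(n)| = h_hat(rho n) |sin(pi rho n)| / (pi rho n)."""
    rho = math.log(b) / math.log(c)
    return max(tri_hhat(rho * n, a) * abs(math.sin(math.pi * rho * n)) / (math.pi * rho * n)
               for n in range(1, nmax + 1))

def in_spectrum(c, b, a, tol=1e-9):
    """Predicted membership: c = b**(1/m) for some m in N, or c <= b**(1/a)."""
    rho = math.log(b) / math.log(c)
    return rho >= a - tol or (round(rho) >= 1 and abs(rho - round(rho)) < tol)

test_bases = (1.5, 10 ** (1 / 2.5), 10 ** 0.5, 10.0, 10 ** (1 / 1.5), 5.0, 20.0)
```

With a = 2.5 the continuous part is $(1, 10^{0.4}]$ and the discrete part contributes 10 and $10^{1/2}$; with a = 0.5 the continuous part $(1, 100]$ swallows every integral root of 10.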

8. On “Base-Invariant Significant Digits”

I wish to acknowledge that I first encountered many of the ideas discussed in this section in Michal Wójcik’s admirable paper [5]. All citations to Berger and Hill in this section are to their text, reference [4].

Proposition 12.

If X is b-Benford, then $X^n$ is b-Benford for any $n \in \mathbb{N}$.

Proof.

As X is b-Benford, $X = b^{Y}$ where Y is u.d. mod 1. Hence $X^n = b^{nY}$. But $nY$ is u.d. mod 1 by Proposition 3. Therefore $X^n$ is b-Benford. □

Corollary 1.

As , it follows that is -Benford if X is b-Benford.

Corollary 2.

If X is b-Benford, then $S_b(X^n) \sim S_b(X)$ for any $n \in \mathbb{N}$. This follows from Definition 1.

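One may wonder if the converse of Corollary 2, namely

$$S_b(X^n) \sim S_b(X) \ \text{ for all } n \in \mathbb{N} \;\implies\; X \text{ is } b\text{-Benford},$$

is true. The answer is “no.” Here’s a counterexample. If $X = 1$ with probability one, then $S_b(X^n) = S_b(X) = 1$ for all $n \in \mathbb{N}$, but X is not b-Benford. In fact, any X of the form $X = b^{K}$, where K is an integer-valued random variable, is a counterexample, as $S_b(X^n) = S_b(X) = 1$. However, we may show the following: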

Proposition 13.

If $S_b(X^n) \sim S_b(X)$ for all $n \in \mathbb{N}$, then either X is b-Benford, or $P\big(S_b(X) = 1\big) > 0$. We’ll provide a proof in a moment.

Definition 3.

Let

$$S_b(X^n) \sim S_b(X) \quad \text{for all } n \in \mathbb{N}$$

be called Wójcik’s condition.

Here’s another way to state Proposition 13. (This is Wójcik’s Theorem 19.)

Proposition 14.

X satisfies Wójcik’s condition if and only if the distribution function of $S_b(X)$ is given by

$$P\big(S_b(X) \le s\big) = q + (1 - q)\log_b s$$

for some $q \in [0, 1]$ and for all $s \in [1, b)$.

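To prove Proposition 13 or 14, we first massage Wójcik’s condition into an alternative form. Let X be a positive random variable and define $Y = \log_b X$. For all $n \in \mathbb{N}$ and $s \in [1, b)$,

$$P\big(S_b(X^n) \le s\big) = P\big(\log_b S_b(X^n) \le \log_b s\big) = P\big(\langle nY \rangle \le \log_b s\big),$$

where the last equality follows from the identity $\log_b S_b(x) = \langle \log_b x \rangle$ for any $x > 0$. Hence Wójcik’s condition holds if and only if $\langle nY \rangle \sim \langle Y \rangle$ for all $n \in \mathbb{N}$.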

Berger and Hill ([4], Lemma 5.15, page 77) show the following.

Proposition 15.

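For any random variable Y, the relation $\langle nY \rangle \sim \langle Y \rangle$ for all $n \in \mathbb{N}$ holds if and only if

$$P\big(\langle Y \rangle \le t\big) = q + (1 - q)\,t \quad \text{for all } t \in [0, 1)$$

for some $q \in [0, 1]$.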
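Proposition 15 can be illustrated by simulation. In the Python sketch below, Y is drawn so that $\langle Y \rangle$ has the distribution $q\,\delta_0 + (1-q)\,U[0,1)$ (the sampler, q = 0.3, the seed, and the sample sizes are arbitrary choices, and the helper names are hypothetical); the empirical cdfs of $\langle Y \rangle$ and $\langle nY \rangle$ should then nearly coincide. By contrast, for $Y \sim U[0, 1/2)$, which is not of that form, $\langle Y \rangle$ and $\langle 2Y \rangle$ differ visibly.

```python
import bisect
import math
import random

def frac(y):
    """Fractional part <y> = y - floor(y)."""
    return y - math.floor(y)

def ecdf_gap(xs, ys, grid=100):
    """Largest gap between the empirical cdfs of xs and ys on t = 0, 1/grid, ..., 1 - 1/grid."""
    xs, ys = sorted(xs), sorted(ys)
    gap = 0.0
    for i in range(grid):
        t = i / grid
        fx = bisect.bisect_right(xs, t) / len(xs)
        fy = bisect.bisect_right(ys, t) / len(ys)
        gap = max(gap, abs(fx - fy))
    return gap

def sample_mixture(q, size, rng):
    """Hypothetical sampler: <Y> is 0 with probability q, otherwise U[0,1)."""
    ys = []
    for _ in range(size):
        k = rng.randint(-3, 3)                 # the integer part does not affect <Y>
        ys.append(float(k) if rng.random() < q else k + rng.random())
    return ys

rng = random.Random(13)
Y = sample_mixture(0.3, 40000, rng)
frac_Y = [frac(y) for y in Y]
Y_half = [0.5 * rng.random() for _ in range(40000)]   # <Y> ~ U[0, 1/2): not of the mixture form
```

The atom at 0 survives multiplication by n because integers stay integers, and the uniform part stays uniform by Proposition 3.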

Propositions 13 and 14 are straightforward corollaries of Proposition 15.

I bring these facts to the reader’s attention because Wójcik’s condition is effectively equivalent to Berger and Hill’s notion of “base-invariant significant digits” and sheds some light on this notion. (I say “effectively equivalent” as Berger and Hill’s concept applies to a probability measure P, whereas Wójcik’s condition applies to a random variable X.)

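Here’s Berger and Hill’s definition (Definition 5.10, page 75). Let $\mathcal{S}$ be a σ-algebra on $\mathbb{R}^+$. A probability measure P on $(\mathbb{R}^+, \mathcal{S})$ has base-invariant significant digits if $P(A) = P(A^{1/n})$ for all $A \in \mathcal{S}$ and all $n \in \mathbb{N}$.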

Here’s a guide to the symbols used in this definition. (1) $\mathcal{S}$ is the σ-algebra generated by the significand function $S_b$. (2) $\mathbb{R}^+ = \{x \in \mathbb{R} : x > 0\}$, the set of strictly positive real numbers. (3) For any $A \subset \mathbb{R}^+$ and $n \in \mathbb{N}$, $A^{1/n} = \{x > 0 : x^n \in A\}$. Also, it’s useful at this point to introduce one more bit of non-standard notation used by Berger and Hill: for every and every set , let .

The following proposition (showing the effective equivalence of Wójcik’s condition and Berger and Hill’s base-invariant significant digits) is the major result of this section.

Proposition 16.

Suppose that $X \sim P$. Then $S_b(X^n) \sim S_b(X)$ for all $n \in \mathbb{N}$ if and only if P has base-invariant significant digits.

Proof.

We begin by proving that Wójcik’s condition holds whenever P has base-invariant significant digits. Suppose that . From the definition of , we have . Hence,

If P has base-invariant significant digits, then

Combining Equations (64) and (65), we see that

whenever P has base-invariant significant digits. As there exists a set such that

In fact, . Hence

Combining Equations (66) and (68), we conclude that

As this equation holds for every , we conclude that whenever and P has base-invariant significant digits.

To prove that Wójcik’s condition implies that P has base-invariant significant digits, we essentially reverse this chain of logic. Wójcik’s condition implies Equation (69) for any , which in turn implies Equation (66) for A given by Equation (67). But and , so Equation (66) implies that . As was an arbitrary element of , the equation holds for A, an arbitrary element of , and the proof is complete. □

Berger and Hill state the following theorem (Theorem 5.13, page 76). A probability measure P on with has base-invariant significant digits if and only if, for some

(The meaning of the “Dirac measure” is given on page 22 of their book, and the meaning of the “Benford measure” is given on page 32.)

In the light of Proposition 16, it can be seen that Berger and Hill’s Theorem 5.13 is equivalent to Proposition 14 given above.

I conclude this section with a personal opinion about Berger and Hill’s exposition. I think that the terminology “base-invariant” they chose for their concept is a misnomer. There is only one base (b) in the definition, and their concept of “base-invariant” significant digits tells us nothing about the Benford properties of alternative bases for a b-Benford random variable. Hence, the label “base-invariant” they chose for their concept seems misleading and I believe they really should give it a different name.

9. Conclusions and Prospect

Let Y be a u.d. mod 1 random variable with pdf g, let $b > 1$, and define $X = b^{Y}$, so X is b-Benford. Without loss of generality we may assume that

$$g(y) = H(y) - H(y - 1),$$

where H is a seed function. Let $c > 1$ and $Y_c = \log_c X$. In principle, the machinery introduced in Section 6 allows one to investigate the dependence of the distribution of $\langle Y_c \rangle$ on c. In practice, I’ve carried out this investigation only for seed functions of the first two types in the following list of classes of seed functions.

- (1)

- Step functions that jump from 0 to 1 in a single step.

- (2)

- Increasing functions that are absolutely continuous.

- (3)

- Step functions that increase from 0 to 1 at a finite or countably infinite number of “points of jump.”

- (4)

- Convex combinations of seed functions in classes (2) and (3).

- (5)

- “Singular” distribution functions. These functions are increasing and continuous, but not absolutely continuous. The Cantor function is the best known example.

- (6)

- Seed functions that satisfy a condition I call “unit interval increasing.” Every increasing function is unit interval increasing, but not conversely. That is, a function H may be unit interval increasing, but not everywhere increasing. Several examples of such seed functions are given in [2].

My intuition suggests that seed functions of types (3) and (4) will offer no additional conceptual difficulties, though they will certainly complicate the algebra. I’ll leave the investigation of seed functions of classes (5) and (6) to the reader.

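With X and c defined as above, let $\tilde g_c$ denote the pdf of $\langle Y_c \rangle$. If X is c-Benford, and if $\tilde g_c$ is continuous or has only “jump” discontinuities, then

$$\|\tilde g_c - 1\|_\infty = 0. \tag{70}$$

Hence, c is in the Benford spectrum if and only if Equation (70) is satisfied. For almost all random variables X, the Benford spectrum is empty. We might want to say that X is “effectively” c-Benford if

$$\|\tilde g_c - 1\|_\infty < \epsilon \tag{71}$$

for some small number $\epsilon > 0$. If we define the “effective” Benford spectrum of X to be the set

$$\mathcal{B}_\epsilon(X) = \{\, c > 1 : \|\tilde g_c - 1\|_\infty < \epsilon \,\},$$

then $\mathcal{B}(X) \subseteq \mathcal{B}_\epsilon(X)$. In general, I suggest, the effective spectrum will be a much larger set than the spectrum.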
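The effective spectrum can be explored on a grid of bases. The Python sketch below does this for a Gauss-Benford variable, using the truncated bound $2\sum_{n \le N}|A_n| \ge \|\tilde g_c - 1\|_\infty$ (up to truncation) with $A_n = e^{-2\pi^2\sigma^2\rho^2 n^2}\sin(\pi\rho n)/(\pi\rho n)$ and $\rho = \log_c b$. Because a bound is used, the returned grid points should lie in $\mathcal{B}_\epsilon(X)$, but some members may be missed. The values b = 10, σ = 0.3, ε = 0.01, the grid, and the function names are illustrative assumptions.

```python
import math

def gauss_sup_bound(b, c, sigma, nmax=200):
    """Truncated 2 * sum |A_n| for a Gauss-Benford X, with
    A_n = exp(-2 pi^2 sigma^2 rho^2 n^2) sin(pi rho n) / (pi rho n) and rho = log_c b."""
    rho = math.log(b) / math.log(c)
    total = 0.0
    for n in range(1, nmax + 1):
        x = rho * n
        total += math.exp(-2.0 * math.pi ** 2 * sigma ** 2 * x * x) * abs(math.sin(math.pi * x)) / (math.pi * x)
    return 2.0 * total

def effective_spectrum(b, sigma, eps, grid):
    """Grid points c > 1 whose bound is below eps (a subset of B_eps(X), up to truncation)."""
    return [c for c in grid if gauss_sup_bound(b, c, sigma) < eps]

# Illustrative choices (assumptions): b = 10, sigma = 0.3, eps = 0.01.
grid = [1.0 + 0.05 * k for k in range(1, 381)]      # c from 1.05 to 20.0
eff = effective_spectrum(10.0, 0.3, 0.01, grid)
```

In this setting every base close to 1 qualifies (ρ is large), c = 10 qualifies exactly, and bases well above b are excluded, so the effective spectrum is indeed much larger than the discrete set of integral roots of b.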

The machinery described in Section 5 to carry out a “Benford analysis” helps us determine whether or not the criterion of Equation (71) is satisfied. In Section 7 I suggested that a Gauss-Benford random variable should be regarded as effectively c-Benford if the product ρσ is large enough. In [3] I suggested that a lognormal random variable, which is not b-Benford for any b, should be regarded as effectively c-Benford if is large enough.

I leave further investigation of effectively Benford random variables to the reader.

Funding

This research received no external funding.

Acknowledgments

I’d like to thank Kenneth Ross for thoughtful comments on earlier drafts of this paper, and three anonymous referees for useful suggestions. I’d also like to thank William Davis and Don Lemons for their heroic efforts to convert my EXP document into an acceptable LaTeX form.

Conflicts of Interest

The author declares no conflict of interest.

Appendix A. A Small Table of Fourier Transforms

Feller [7] gives a table of characteristic functions of selected probability density functions. I’ve adapted his table to give the Fourier transforms of 8 of his 10 densities, and added a row for an additional pdf (the logistic).

Table A1.

Fourier transforms of selected probability density functions.


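| No. | Name | Density | Interval | Fourier Transform $\hat f(t) = \int f(x)\,e^{-2\pi i t x}\,dx$ |
|---|---|---|---|---|
| 1 | Normal | $\frac{1}{\sqrt{2\pi}}\,e^{-x^2/2}$ | $-\infty < x < \infty$ | $e^{-2\pi^2 t^2}$ |
| 2 | Uniform | $\frac{1}{a}$ | $0 < x < a$ | $e^{-\pi i a t}\,\frac{\sin(\pi a t)}{\pi a t}$ |
| 3 | Uniform | $\frac{1}{2a}$ | $-a < x < a$ | $\frac{\sin(2\pi a t)}{2\pi a t}$ |
| 4 | Triangular | $\frac{1}{a}\left(1 - \frac{\lvert x\rvert}{a}\right)$ | $\lvert x\rvert < a$ | $\left(\frac{\sin(\pi a t)}{\pi a t}\right)^2$ |
| 5 | Dual of 4 | $\frac{1 - \cos(a x)}{\pi a x^2}$ | $-\infty < x < \infty$ | $\max\left(0,\ 1 - \frac{2\pi\lvert t\rvert}{a}\right)$ |
| 6 | Gamma | $\frac{\lambda^{\alpha}}{\Gamma(\alpha)}\,x^{\alpha-1}e^{-\lambda x}$ | $x > 0$ | $\left(\frac{\lambda}{\lambda + 2\pi i t}\right)^{\alpha}$ |
| 7 | Laplace | $\frac{1}{2}\,e^{-\lvert x\rvert}$ | $-\infty < x < \infty$ | $\frac{1}{1 + 4\pi^2 t^2}$ |
| 8 | Cauchy | $\frac{1}{\pi(1 + x^2)}$ | $-\infty < x < \infty$ | $e^{-2\pi\lvert t\rvert}$ |
| 9 | Logistic | $\frac{e^{-x}}{(1 + e^{-x})^2}$ | $-\infty < x < \infty$ | $\frac{2\pi^2 t}{\sinh(2\pi^2 t)}$ |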

References

- Benford, F. The Law of Anomalous Numbers. Proc. Am. Philos. Soc. 1938, 78, 551–572.

- Benford, F.A. Construction of Benford Random Variables: Generators and Seed Functions. arXiv 2020, arXiv:1609.04852.

- Benford, F.A. Fourier Analysis and Benford Random Variables. arXiv 2020, arXiv:2006.07136.

- Berger, A.; Hill, T.P. An Introduction to Benford’s Law; Princeton University Press: Princeton, NJ, USA, 2015.

- Wójcik, M. Notes on Scale-Invariance and Base-Invariance for Benford’s Law. arXiv 2013, arXiv:1307.3620.

- Whittaker, J. On Scale-Invariant Distributions. SIAM J. Appl. Math. 1983, 43, 257–267.

- Feller, W. An Introduction to Probability Theory and Its Applications, 2nd ed.; John Wiley & Sons: New York, NY, USA, 1971; Volume II.


© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).