1. Introduction

The Global Navigation Satellite System (GNSS) provides positioning, velocity and timing (PVT) services to users equipped with appropriate hardware (i.e., an antenna and a receiver) [

1]. There are four GNSS constellations that allow users to compute their PVT on a global basis (i.e., worldwide), two of which have already declared their full operational capability (FOC). Namely, the Global Positioning System (GPS, US Air Force), completed in 1994; the Global Navigation Satellite System (GLONASS, Russian Federal Space Agency) completed in 1995 (and restored in 2011). Two additional constellations are being completed and thus have not reached FOC: the BeiDou Navigation Satellite System (BDS, China National Space Administration) and Galileo (European Union). The Multi-GNSS Experiment (MGEX) [

2] of the International GNSS Service (IGS) [

3] monitors the rapid development of GNSS constellations. The processing of GNSS data is an essential part of PVT determination [

4]. Once the receiver has acquired and demodulated the radio frequency GNSS signals from the antenna, it generates the code and carrier-phase measurements (the so-called observables). Then, these observables undergo a series of processing steps so that they can be used to estimate the PVT of the GNSS receiver by means of least squares (LS) or the Kalman filter [

5], among other methods. Several software packages exist that are capable of processing GNSS data automatically.

The GNSS LABoratory tool (gLAB) is an advanced educational multi-purpose software used for processing and analyzing GNSS data [

6]. The research group of Astronomy and Geomatics (gAGE) started developing gLAB in 2009, in the context of the European Space Agency (ESA) educational program on satellite navigation (EDUNAV). After one decade of evolution, gLAB capabilities include the most common GNSS navigation modes: stand-alone positioning, differential-mode, and augmented positioning with integrity. gLAB is open source and allows to fully control its internal processing through its many configuration options. This is a great advantage with respect to proprietary GNSS data processing programs produced by receiver manufacturers, which do not allow any modification and hence, from the user/scientific point of view, are black boxes. The processing core of gLAB is structured in five highly configurable modules. These modules mimic the aforementioned processing steps, being quite standard in commercial software receiver packages. For completeness, those modules are briefly summarized as follows:

The INPUT module: interfaces with the standard input files and the rest of the program. It implements all the reading capabilities and stores the data in the memory structures of gLAB, that contain, as minimum, the raw measurements and the pseudo-Keplerian elements that allow computing the GNSS satellite position and its clock offset.

The PREPROCESSING module: checks and selects the read data to be further processed. It detects cycle-slips (i.e., discontinuities) in the carrier-phase measurements, decimates the input data to a lower processing rate (if required) and selects which satellites and which constellations are used in the following modules, among other functions.

The MODELLING module: provides an accurate model of the measurements from the receiver to each tracked satellite. In this regard, the pseudorange measurements

can be written according to [

4] as

where

is the geometric distance between the receiver and the satellite,

c stands for the velocity of light,

and

are the receiver and satellite clock offsets,

and

are the propagation delays occurring at the troposphere and ionosphere respectively,

is the satellite inter-frequency biases (IFB), and

accounts for the measurement noise. The output of the MODELLING module are the pre-fit residuals, i.e., the difference between the preprocessed measurements and the sum of the model terms:

Considering the model terms described in the standard point positioning (SPP) [

7], the pre-fit residuals result in:

where Equation (

3) contains four unknowns: three receiver coordinates in the geometric distance

and the time offset of the receiver clock offset

, with respect to GNSS time.

The FILTER module: implements an extended Kalman filter to obtain the estimations of the receiver PVT from the pre-fit residuals. The filter outputs the values of the estimated unknowns together with its co-variance, as a measure of the uncertainty in the estimation process.

The OUTPUT module: is in charge of printing intermediate and final results in a structured manner. This allows extracting useful and complete information at any point in the GNSS data processing chain.

Both in gLAB and in commercial software packages, PVT estimation can be deteriorated by outliers. Outliers can be intuitively defined as elements of a sample qualitatively distinct as ‘bad’ compared to the majority of the sample considered as ‘good’. Note that it is not said that outliers are ‘wrong’ data; they are data needing a ‘careful look’, since their ‘being distinct’ from the majority could lead to unreliable estimates. Outlier detection, by a comparative or quantitative evaluation of their quality of ‘being distinct’, is a central component of PVT estimation.

The aim of the present work was to improve the outlier detection capabilities of gLAB by working at the intermediate step between the MODELLING and the FILTER modules. To this aim, we considered as basic dataset the

pre-fit residuals as in Equation (

2) above. In this GNSS context, outliers can be more precisely defined as pre-fit residual values from a particular satellite that are too different from the pre-fit residuals median (computed including all satellites). Note that by

pre-fit residuals we mean something distinct from

residuals in regression theory; the different use of the word

residuals should be clear from the context. The most common reason for the presence of outliers in the pre-fit residuals is the multipath effect, when GNSS signals from low-elevation satellites reach the antenna along multiple paths, following a reflection in the ground or surrounding structure. Other outlier sources are the malfunctioning of the receiver or satellite hardware. For the latter, the ground segment (GS) of each constellation constantly monitors the status of its satellites using a worldwide distribution of permanent stations. Thus, the GS can inform users of satellite anomalies by means of the healthy flag broadcast in the navigation message that is typically refreshed every two hours. In contrast, anomalies at one particular isolated receiver are more difficult to detect, as there is no redundancy of measurements. In this case, receiver autonomous integrity monitoring (RAIM) must be applied, following [

8]. In the simplest form of position computation, as described in standard point positioning (SPP) [

7], the pre-fit residuals of all satellites in view are fit by ordinary least squares, OLS, to three coordinates and one time-offset unknowns in an over-determined system of equations. The severity of outlier mis-detection depends on the magnitude of the outlier, on satellite geometry and on the user application. Safety of life (SoL) applications such as civil aviation or autonomous driving require specialized techniques to ensure positioning integrity (i.e., the measure of trust that can be placed in the correctness of the information supplied by a navigation system).

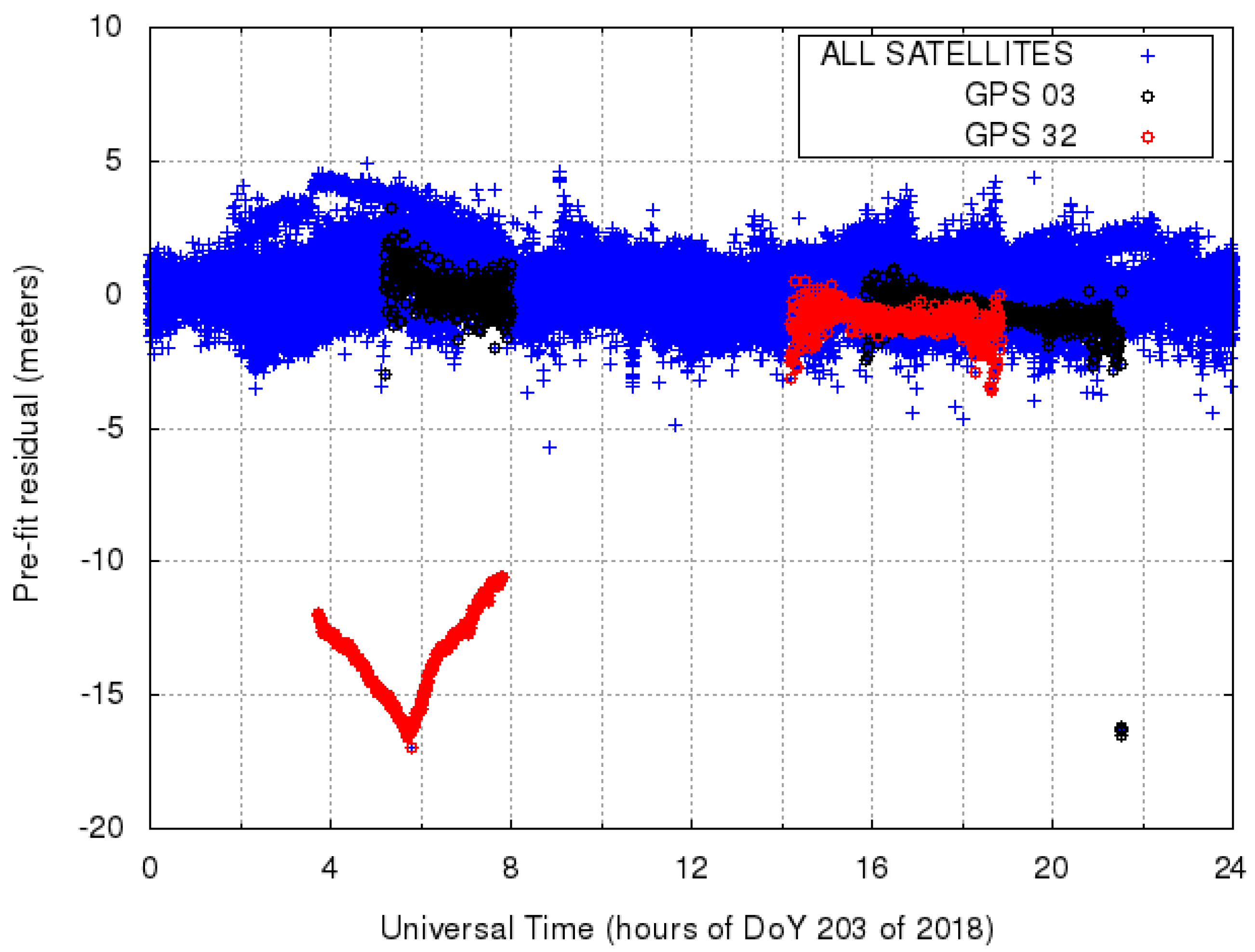

The qualitative distinction of outliers from the majority of the sample is immediately remarked when values are graphically represented in an appropriate way. For example, with reference to

Figure 1, the ‘V-shaped’ set of points in the lower-left part is promptly perceived as ‘distinct from the majority of the sample’; the appropriate graphical representation of data remains today, when possible, an instrument for

outlier detection by visual screening. When visual screening is not possible, or a fine-drawn decision has to be taken, automated techniques must be used. George Edward Pelham Box, in 1953, coined the term

robust in relation to this need, but this (certainly older) concept was formalized in a proper discipline only later, starting from the mid-1960s. Roughly speaking,

robust statistics can now be seen as an operational approach to the treatment of outliers; treatment that can range from the detection and elimination of outliers from the sample to some degree of mitigation of their effects on the choice and computation of estimators. The standard example of

resistance to outliers of an estimator is given by comparing the effect of a single diverging value

on the sample mean and the sample median: the mean being immediately influenced by this value of

y, while the median is

resistant. The

degree of resistance to the outliers of an estimator is represented by a parameter called

breakdown point. The breakdown point of the sample mean is

while the breakdown point for the median is

since it can tolerate up to

of ‘gross errors’ in data before diverging.

The use of robust statistics techniques for GNSS positioning is a growing field both in applications and in methodological aspects. Examples of the applications of robust statistics to positioning problems can be found in artificial intelligence [

9] and geodesy [

10]. Concerning the methodological aspects, an example of improvement of the SPP algorithm by using Huber M-estimators can be found in [

11] while in [

12] a form of robust Kalman filtering is used in the kinematic positioning component. These two methodological aspects can be referred to the MODELLING and FILTER modules of gLAB. To our knowledge, there are no other attempts in the literature to improve the position solution with robust statistics by exploiting the pre-fit residuals, i.e., by working at the connection between the MODELLING and the FILTER modules, as in the gLAB architecture.

The Flexible Statistics and Data Analysis (FSDA) toolbox [

13] is a MATLAB module gathering a comprehensive set of statistical functions for the analysis of data. The original aim of this library of functions was to extend the MATLAB Statistics Toolbox with

forward search analysis [

14]. FSDA was subsequently extended and now includes all the main

robust multivariate and regression tools, complemented by instruments for robust transformations and the interactive graphical exploration of data; it is available for Windows, Unix and MacOS platforms.

(FSDA is copyright of the European Commission and the University of Parma. It is protected under European Union Public Licence (EUPL) [15], which is a free software licence granting recipients rights to modify and redistribute the code. FSDA is distributed through GitHub https://github.com/UniprJRC/FSDA—accessed on 15 May 2021— and the MATLAB community https://www.mathworks.com/matlabcentral/fileexchange/72999-fsda (accessed on 15 May 2021). A complete documentation with examples, datasets and tutorials is available at http://rosa.unipr.it/FSDA (accessed on 15 May 2021). A version of the suite with selected functionalities is also available in R at https://cran.r-project.org/web/packages/fsdaR/index.html (accessed on 15 May 2021) and in SAS, upon request to the JRC co-authors.) The analysis of pre-fit datasets reported in this article can be considered as our first attempt to apply robust statistical techniques as provided by the FSDA toolbox to PVT estimation.

After a description of the typical data and the problems to be faced in

Section 2, with a first essay employing the traditional robust estimator

least median of squares (LMS), we proceeded with the use of other FSDA functions for outlier detection still applied to relatively large samples of data. We did not use the full potential of FSDA, however, with the aim of comparing the results obtained at the beginning of

Section 2 with LMS, we exploited

least trimmed squares (LTS) and

forward search (FS). In

Section 3, a more precise analysis is done by considering samples whose cardinality is coherent with practical use: relatively short

time windows of ten samples. In

Section 4, the results of our analysis are summarized together with an indication of the directions of future work.

2. Structure of the Dataset and Preliminary Analysis

In this section, our study uses actual and publicly available data collected by a Javad ‘TRE_G3TH’ receiver, installed at a permanent station named KIR0 in Sweden. This is a standard geodetic-grade receiver as those usually found in the IGS network.

(IGS data can be downloaded from the URL https://www.igs.org/data/—accessed on 15 May 2021.) The day of the experiment is the day of the year (DoY) 203 of 2018 (i.e., November 4th). Code pseudorange C1C measurements are logged with a period of 30 s, i.e., the

sampling frequency is

Hz, so we have 2880

epochs for the entire day. Each epoch contains a record, as in

Table 1, for each satellite ‘visible’ and ‘tracked’ by the receiver.

Figure 1 illustrates the dataset which contains 24 h of pre-fit residuals, the output of the gLAB MODELLING module. This dataset contains both the

pseudorange data corrected for some inconsistencies by the PREPROCESS module as briefly explained above, and the pseudorange data as predicted by the model. The computation of the pseudorange takes into account a number of model corrections following the SPP [

7]. Note that, since we are using a fixed station with known coordinates and the satellite positions can be obtained from the broadcast navigation message of the satellites, we can compute the geometric range, the

measured range in

Table 1, for each of the tracked satellites. Then, the pre-fit residuals in Equation (

3) turn into:

Therefore, we should expect all pre-fit residuals from all satellites around the receiver clock offset value

to be only distinguishable by the noise of the measurement,

. Under normal conditions, this

pseudorange noise reaches one meter at the high elevation of the satellite and few to several meters at low elevations.

In

Table 1, an excerpt of two epochs from the complete data table of about 32,000 rows was reported; this table contains the satellite identifier and the pre-fit value, the plain difference between

modeled and

measured range. Note that at the first epoch,

, the order of magnitude of the pre-fit is about 1 meter for all satellites while at epoch 77,520, corresponding to hours

in

Figure 1, satellite 3 shows a pre-fit of about 16 m, probably caused by its low elevation. It is remarked that it is general practice not to consider in the solution of the positioning problem the satellites with ‘low’ elevation. gLAB, while operating in SPP mode, excludes by default satellites with an elevation below five degrees already in the PREPROCESSING module.

As a first essay with the dataset, we apply a LMS regression. The least median of squares estimator [

16], described in more detail below, is historically among the first improvements of OLS and is implemented in FSDA by the

LXS function. For

LXS, we used a very conservative estimate, which was a

Bonferroni-corrected [

17] confidence level of

where

N is the number of observations—

in the present case. The quantity

can be considered as the probability to falsely declare a dataset as contaminated by outliers, when one is testing a large number of genuine datasets.

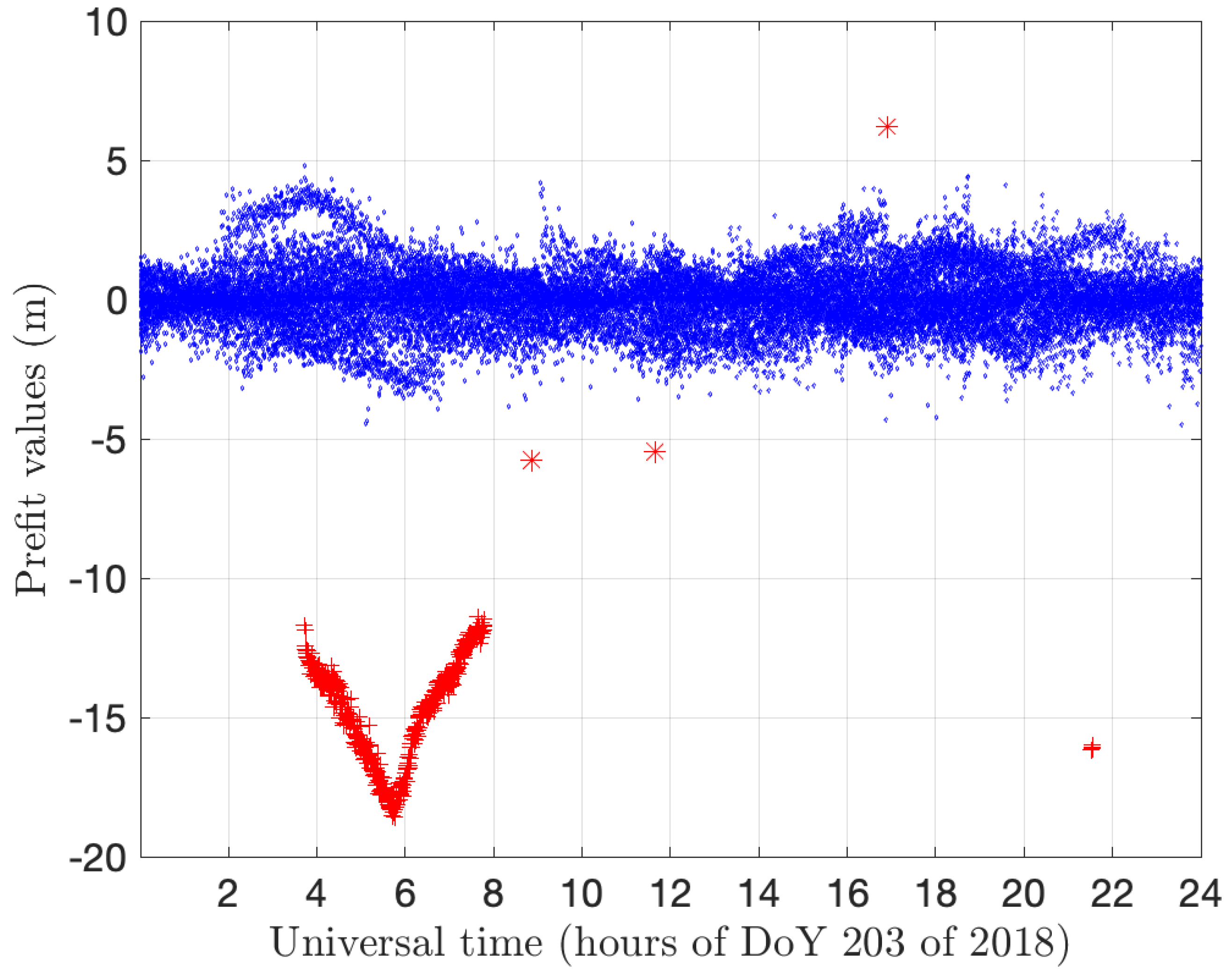

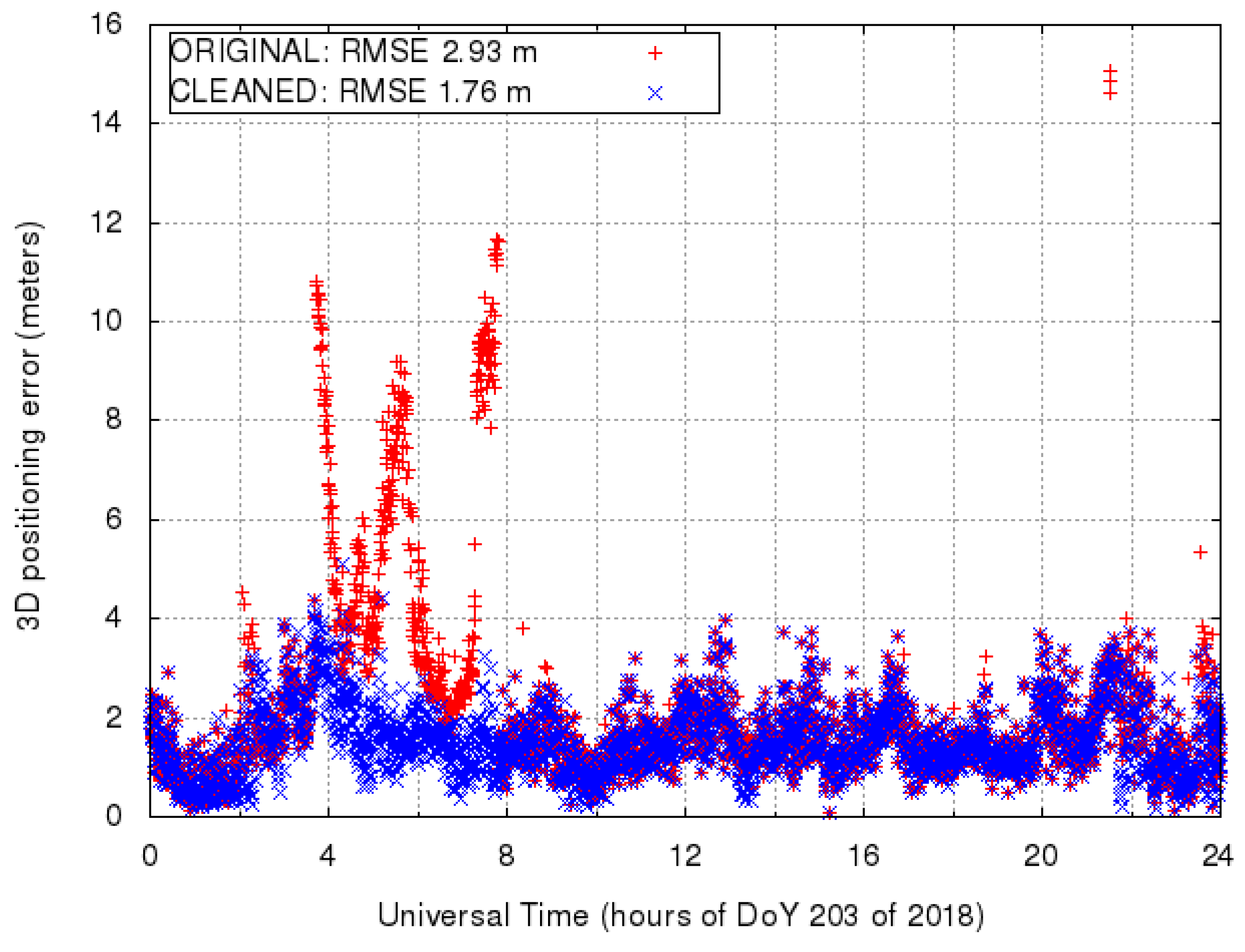

Figure 2 represents the outlier detection against LMS regression on 24 h of samples. The result with respect to other estimators, compared to LMS below in this section, will also be referred to in this Figure. With reference to

Figure 2, the median pre-fit value for the whole dataset is

m. This rather small value indicates that the receiver is steering its clock to the GPS time, hence the receiver clock offset value

is close to 0 in Equation (

4). The confidence interval found corresponds to about

m. The two main groups of outliers were already evident by visual inspection in

Figure 1. The “V-shaped” subset between hours 4 and 8 and the smaller group at about hour 22 are correctly identified by LMS. It is remarked that three distinct points at about hours 9, 12 and 17, very close to the limits of the confidence interval, are marked as outliers as well.

After this first essay on the data by LMS regression, three remarks can be made:

- 1

The outlier detection by visual screening and LMS regression with Bonferroni correction give comparable results;

- 2

Many robust methods (including those implemented in FSDA) are based on the assumption that the error component of the data analyzed is normally distributed. As the actual distribution can of course deviate from this common assumption, the confidence intervals are just ‘nominal’ in that the actual proportion of outliers detected can differ from the expected one.

- 3

The outlier detection algorithm presently used by gLAB, based on an heuristic threshold of 40 m:

epochs := set of considered epochs;

median := median of all pre-fit values in all epochs;

for each epoch in epochs

for each satellite s in epoch

if | prefit(s) - median | > treshold

then discard satellite s in current epoch.

would have not detected the obvious outliers.

We now exploit, on the same dataset, two other estimators in addition to LMS above: least trimmed squares (LTS) and forward search (FS) as implemented in the FSDA toolkit. A detailed description of these methods with their actual implementation in FSDA can be found in [

18]. The three robust regression methods build a

linear model upon a set of data points composed of a vector of

independent variables and one

dependent variable. In our case, the data points would be structured, as shown in

Table 1, as a vector of independent variables containing at least

and with the pre-fit as the dependent variable. This would lead to a multi-variate distribution. We adopted a simplification, by considering the dataset as a sample from a uni-variate distribution allowing, in each epoch, for the repetition of the observations. While computing the

linear model, we will not make distinctions among the individual satellites and we will not consider the elevation and other information included in the complete satellite’s messages.

The formal expression of the

linear model (with intercept) is:

, where

represents a normally distributed error and

x represents now the pairs

. Given

n as the cardinality of the dataset, the set of data points will be

with

the pre-fit and

representing a distinct pair

. The model is then computed by estimating with

the parameters

with respect to an appropriate objective function of the

residuals:

. The objective functions to minimize are, respectively, the sum of the squares of the

residuals for OLS, the median of the squares of the residuals for LMS and the sum of the squares of a selected subset of the residuals for LTS [

16]:

where

is the ordered set of the squared residuals

and

h decides the appropriate subset of the points considered. Typically, for LTS,

h is chosen with a

breakdown point of 50% (

) but it can be automatically increased according to the estimate of the residuals variance [

16]. This

re-weighting step allows keeping the desired robustness while increasing the efficiency of the estimator

.

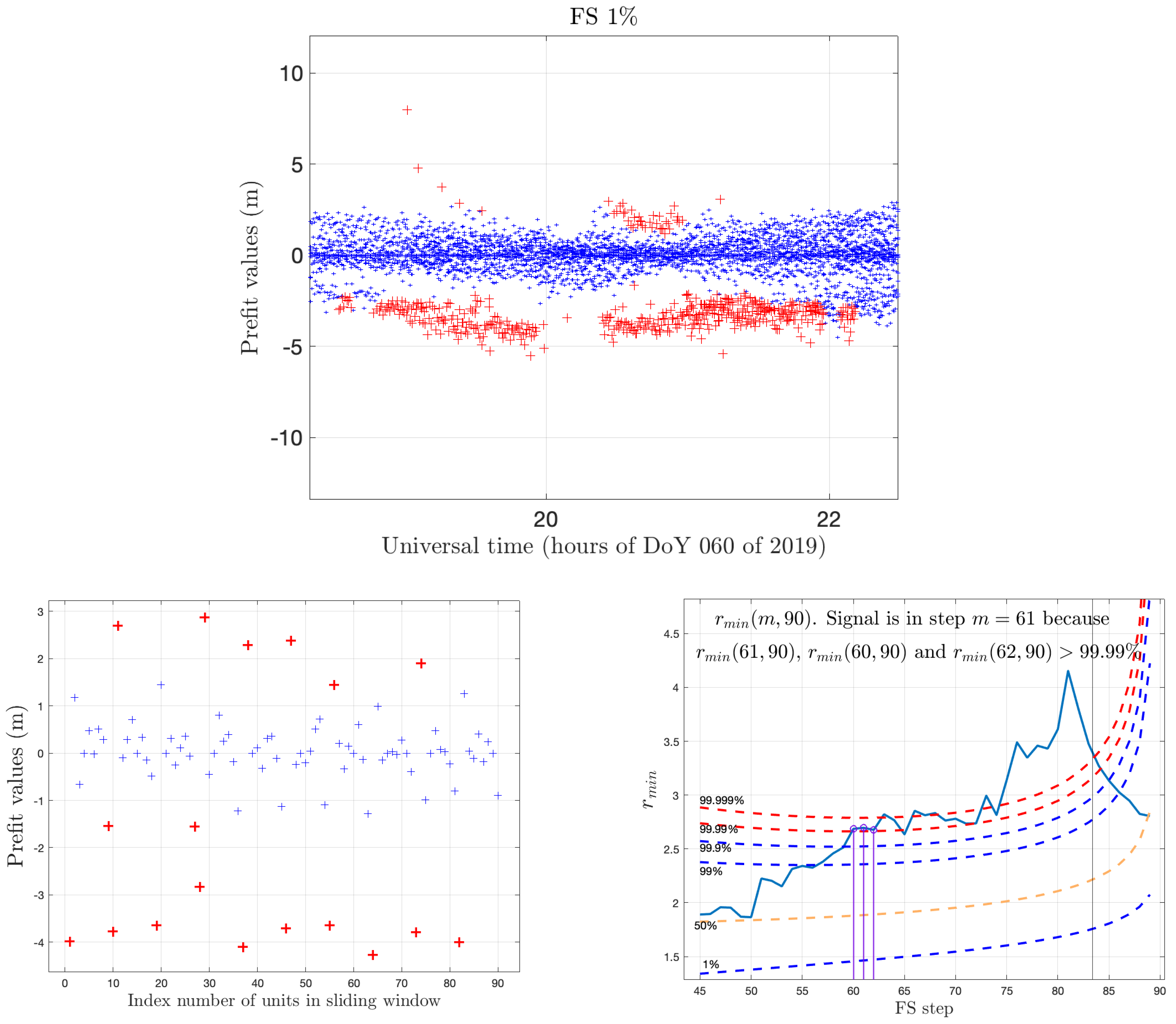

The forward search further exploits the re-weighting idea by building a sequence of data subsets of increasing size, and fitting a sequence of estimators on them. More precisely, the forward process starts with a first small subset of data points, where is estimated with LMS or LTS. Note that can just be equal to the number of parameters, so the computational burden required by the initial LMS or LTS application is very limited. Then, the process continues by repeatedly computing with the OLS on the subsequent subsets of cardinality . Building the subset with the units with smallest squared residuals requires the computation of the order statistic in a vector of n numbers, which is known to have linear computational complexity (full or partial sorting of the residuals is not necessary). The process is iterated with OLS in steps until the cardinality of the subsets reaches the cardinality n of the dataset. Figure 10 illustrates the signal detection mechanism of the FS using data in a window of 10 epochs.

Figure 2 reports the comprehensive result of the application of the estimators of LMS as above, LTS with re-weighting and Bonferroni correction and FS to the whole dataset. The outcomes of LTS and FS are identical and can be compared with the outcome of LMS. As in

Figure 2, the difference between LMS on one side and LTS and FS on the other are in the three outliers close to the limits of the confidence interval of about 5 m. Overall, the three estimators give the same results while LTS and FS yield a somewhat different performance compared to LMS, in dependence of the judgment (outliers or main population) for the points at the border of the confidence interval.

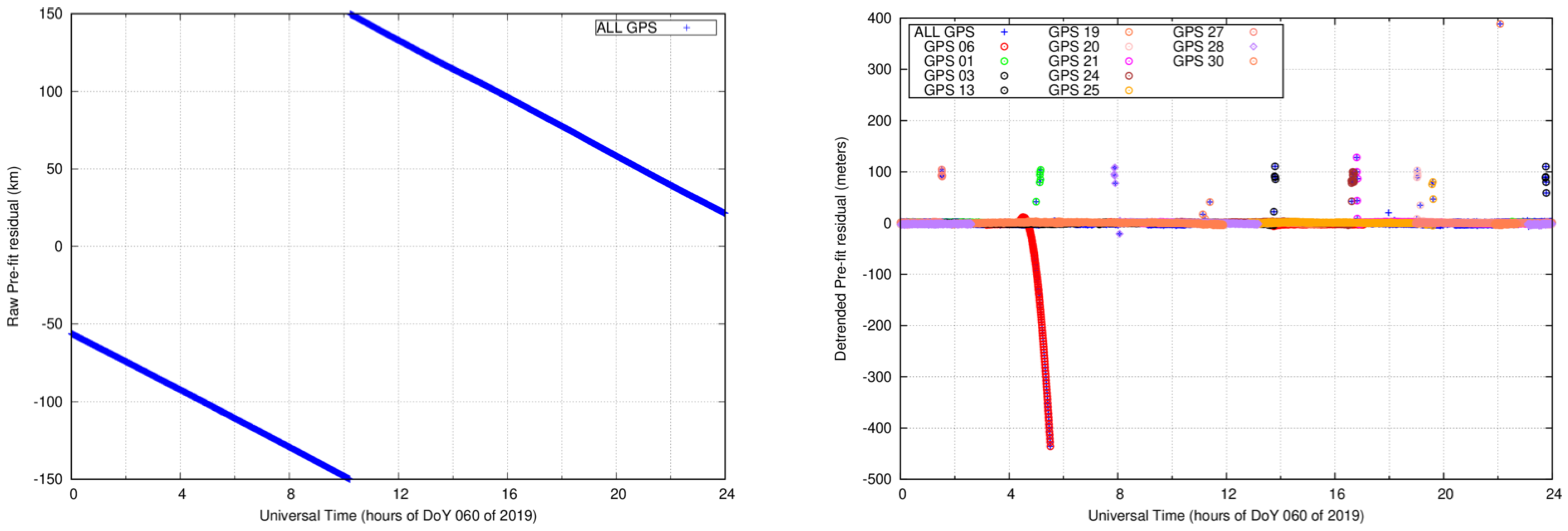

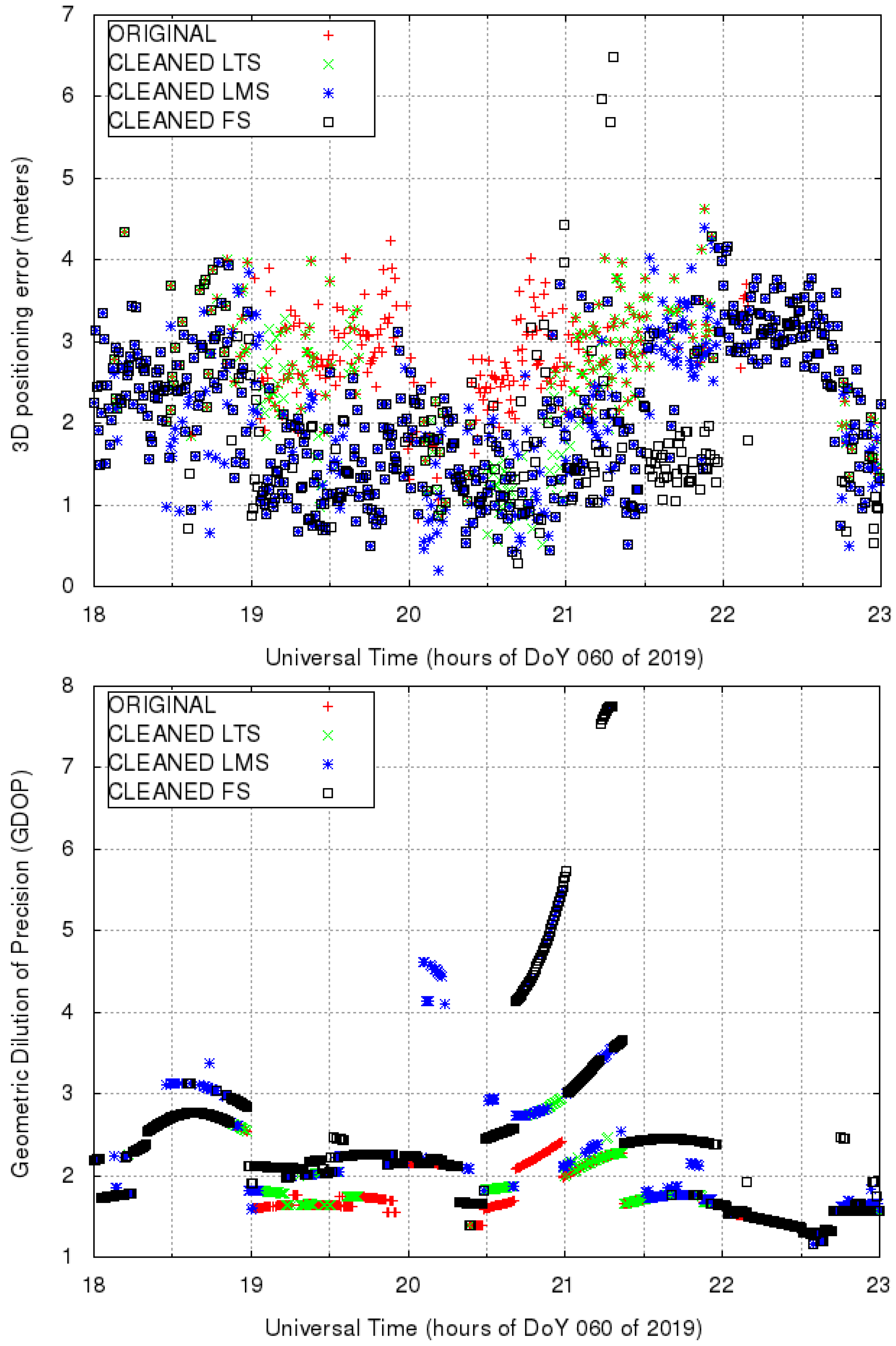

3. Analysis with Time Windows

In this section, we further deepened our investigation by applying the previous methods to a sliding time window of ten epochs. The dataset used in this case belongs to the permanent station named HUEG, located in Germany, for DoY 060 of 2019 (i.e., March 1st). Although the receiver is a Javad ‘TRE_G3TH’, the same model as the one used in the preliminary analysis of

Section 2 above, the clock of the receiver (i.e.,

in Equation (

4)) is not steered to GPS time, presenting a rapid drift as illustrated in the left plot of

Figure 3. According to the interface control document (ICD) [

19], the receiver clock offset can have any value within a

ms (i.e.,

km). When it reaches the minimum (or maximum) limit, it must reset to remain within the prescribed range of

ms Therefore, in order to analyze the pre-fit residuals containing such clock behaviors, a

detrending procedure must be applied. Moreover, as a step towards real-time applications, in this section, we use a sliding window rather than analyzing the complete

time window of 24 h at once.

Thus, in order to eliminate the clock drift prior to outlier detection, we performed a linear detrending to all pre-fit residuals. This detrending is obtained by subtracting the instantaneous median value at each epoch. The right plot of

Figure 3 illustrates the result of such detrending. We can observe few outliers at some particular satellites that were hidden by the clock drift in the original ‘raw’ pre-fit residuals depicted in the left plot of

Figure 3. While most of the outliers range within 100 m, GPS satellite 6 presents an outlier that reaches more than 400 m. This outlier is simultaneously observed in other permanent stations, thus it is attributable to some satellite anomaly. Indeed, the Notice Advisory to Navstar Users (NANU) number 2019029 [

20] was issued, reporting that the GPS06 satellite was unusable that day.

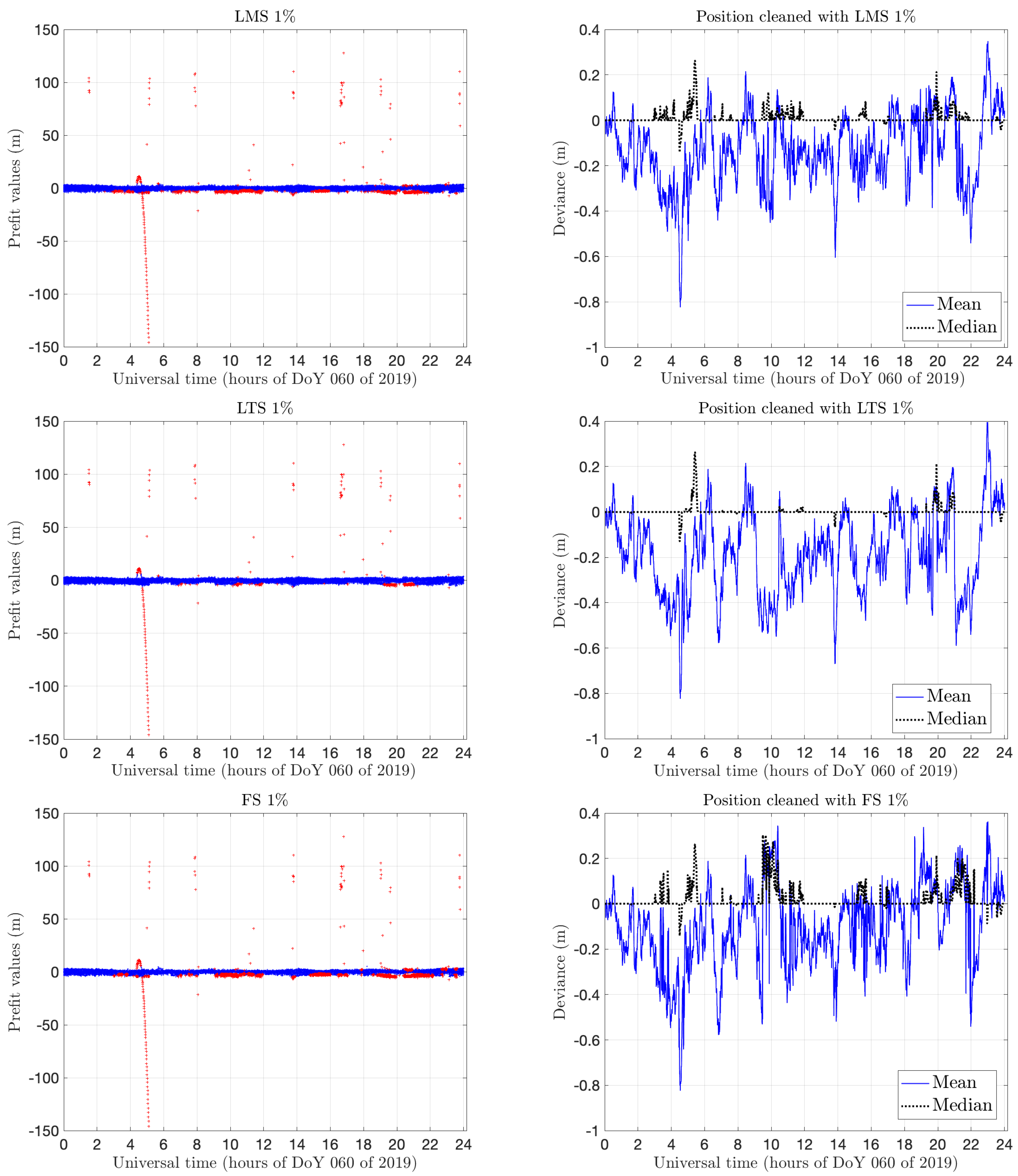

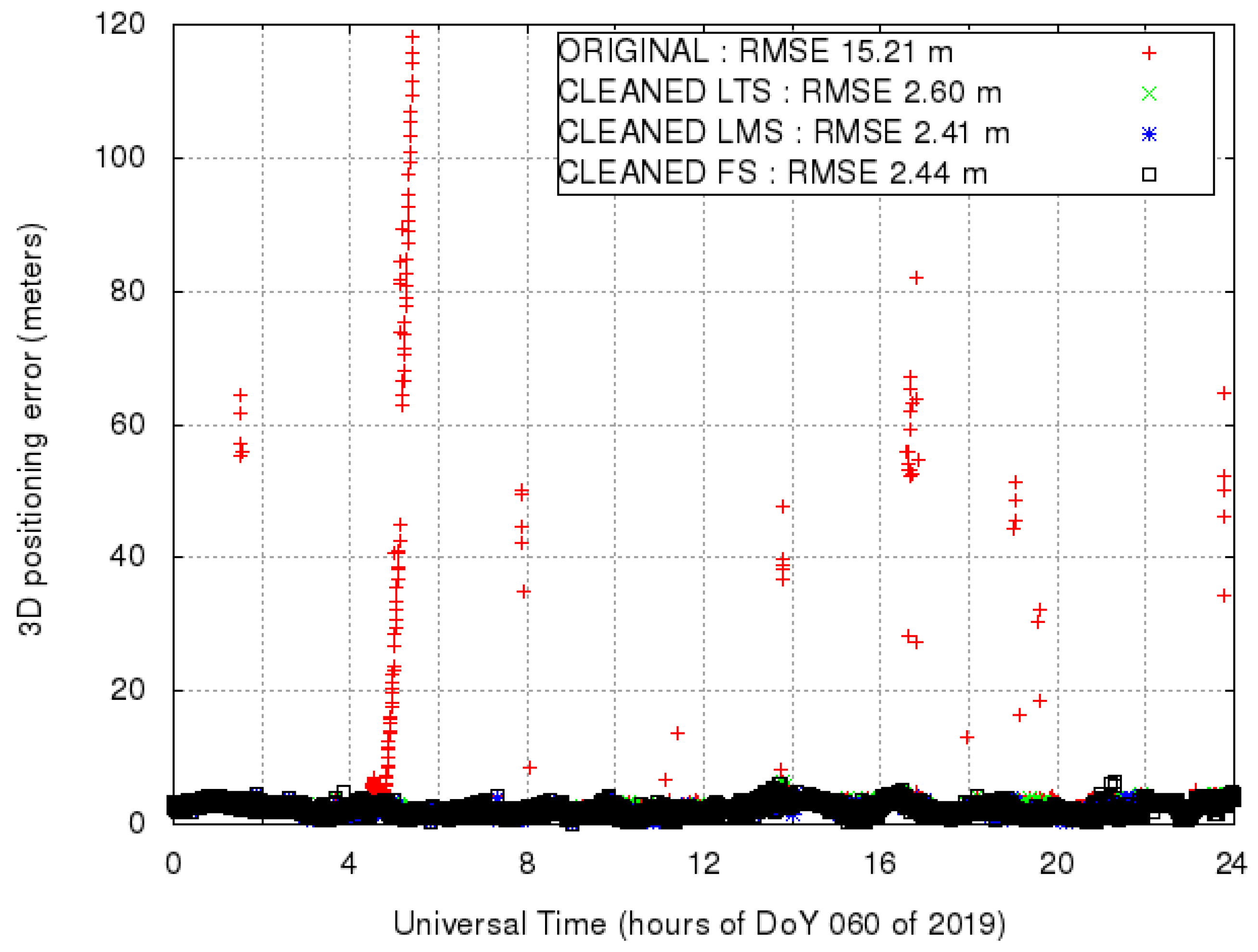

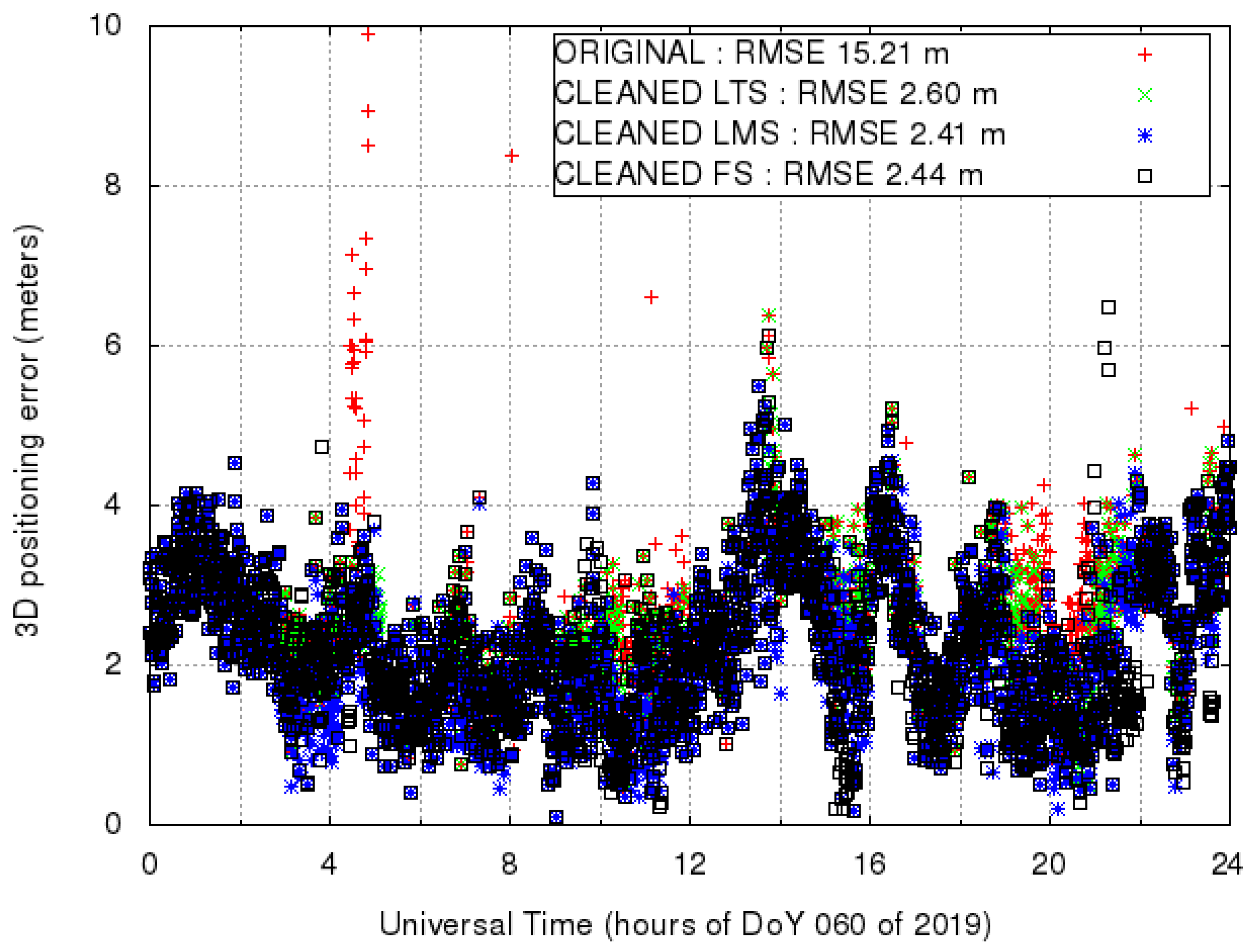

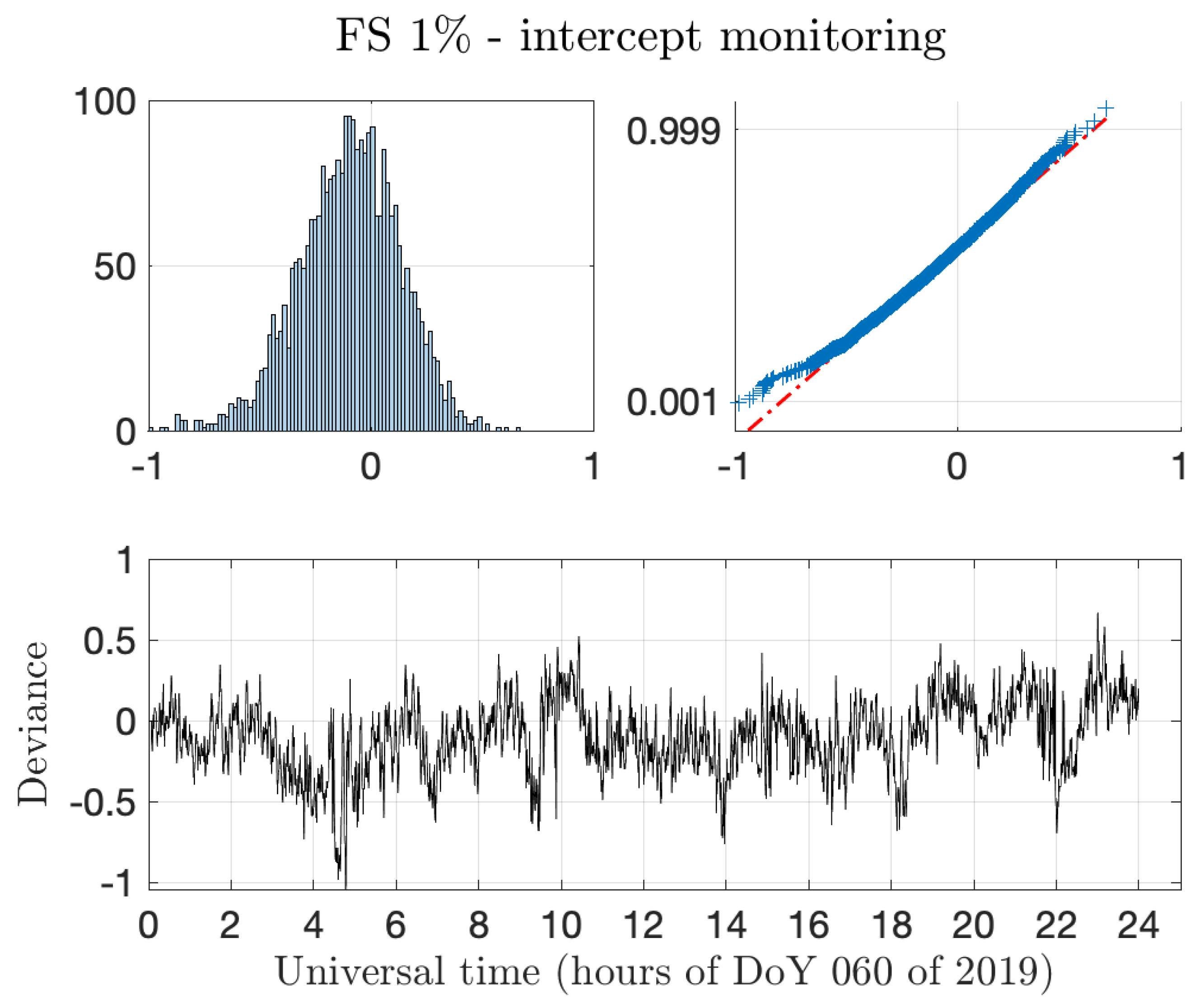

We now apply the outlier detection algorithms based on LMS, LTS and FS to the HUEG dataset. With the aim of developing an efficient mechanism for outlier detection of pre-fit values, we chose a relatively small time window of ten epochs. That is, the window considers five minutes of data for each computation, sliding along the whole 24 h of observations. This choice is arbitrary and is done only as a kind of compromise between the need for a decision about unreliable satellites within ‘few’ minutes and having a meaningful set of data. The outliers, computed by using data of the whole window, are assigned to the epoch ‘entering’ the window itself.

Figure 4 shows the comprehensive results of outlier detection for the defined time window with LMS, LTS and FS and the evolution of mean and median in each case. The plots of mean and median monitoring do not show big differences. This is not surprising since the outliers are similar in the three cases. FS shows ‘more centered’ means and medians, meaning a better selection of outliers, and possibly, a cleaner output. Moreover, the mean shows, in the three cases, a ‘displacement’ towards negative values; a comment on this will be given in

Section 4.2 below.

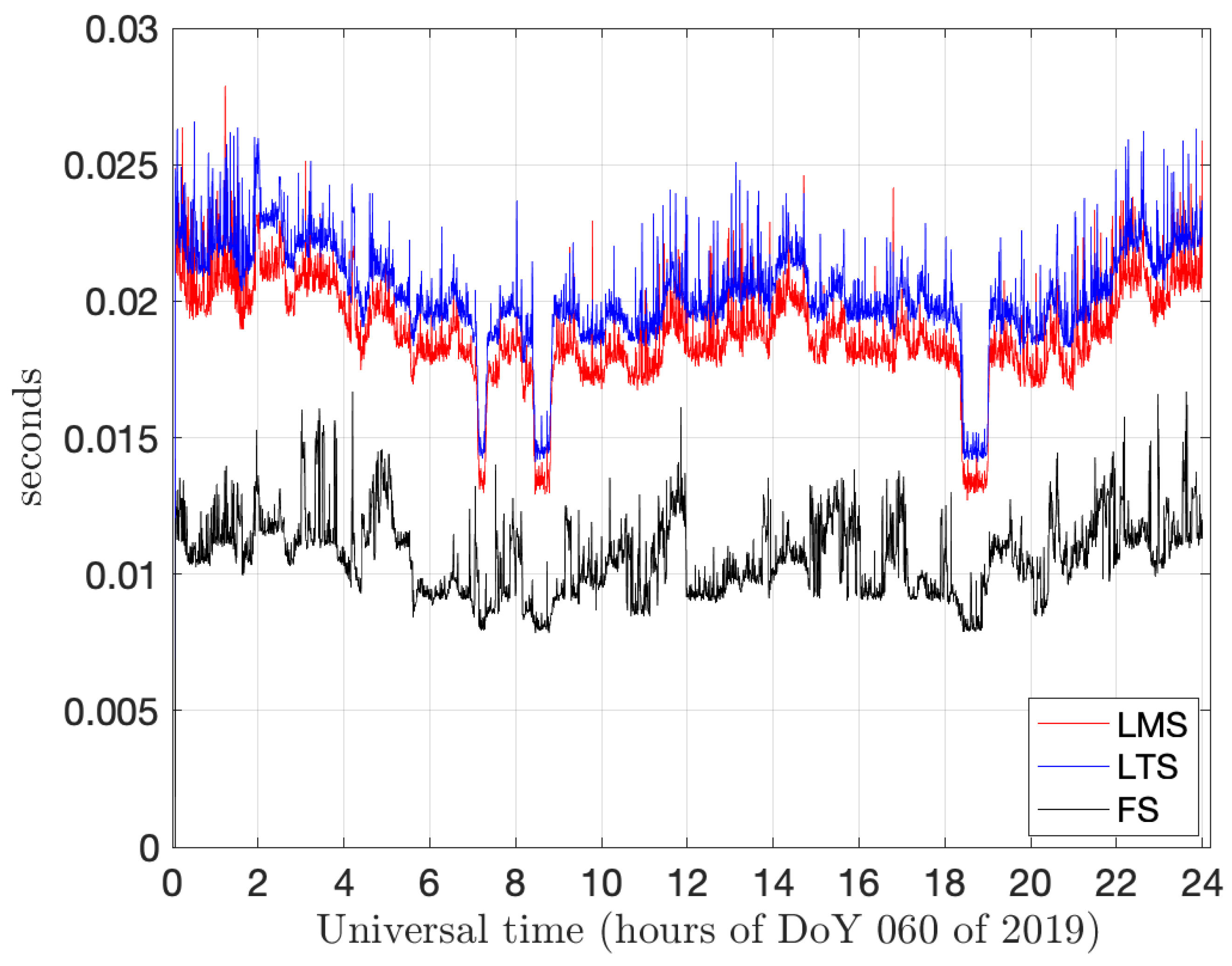

Figure 5 shows the respective computing times for LMS, LTS and FS for each time window lasting 10 epochs on a Quad Core Intel i7,

GHz machine.

Table 2 reports the respective computing times—cumulative and median—for all 10 epochs windows, and shows a clear computational advantage in using FS in spite of its greater theoretical complexity over LMS and LTS. This computational advantage is due to the efficient implementation of the FS algorithm in FSDA. The three drops of LTS and LMS in computing time are coincident with consecutive epochs containing the lowest number of satellites (i.e., 7) included in the computation. The difference in performance between LMS and LTS on one side and FS in these specific intervals, with a time drop on one hand and almost no change on the other hand, is due to the different computational complexity of the methods, as explained below.

Table 2 and

Figure 5 show the respective computing times for the three methods on the HUEG dataset (24 h of measurements sampled every 30 s) and the same time window length (of 10 epochs). The clear advantage of the FS might be surprising, given that the method relies on a process that can iterate as many times as the size of the data sample to be analyzed, say

n. Several factors determine the advantage over LMS and LTS. The first one is that LMS and LTS need a minimum number of random samples of size

selected with computationally intensive ‘sampling without replacement’ methods. This explains why

Figure 5 shows a visible drop in computing corresponding to the three intervals where data come from only 7 satellites instead of 9–10 as in the majority of cases.

Instead, the FS is initialized with an LMS/LTS regression estimated on very few data points (those sufficient to fit the regression line, therefore two points as a minimum, in our case) and this is computationally trivial. Then, it progresses with a sequence of order statistics and OLS computations, which are also computationally simple. Finally, FS can start monitoring for potential signals only in the final part of the search, and this additionally reduces its computational burden. As a reference, on the same machine, the computation of the SPP by gLAB with only the default outliers (satellites with a pre-fit residual greater than forty meters and with elevation lower than five degrees) is done, resulting in a median over eleven trials of 1.078 s on the same dataset. In this setting, i.e., the MATLAB-interpreted version of FS, the time overhead for the FS computation in the case of a moving window results in s. However, it is noted that although in its interpreted version (i.e., not compiled), FS processes 24 h of data in half a minute, which enables real-time operations.