1. Introduction

Circular data arise commonly in many different fields such as earth sciences, meteorology, biology, physics, and protein bioinformatics. Examples include the analysis of wind directions [1,2], animal movements [3], handwriting recognition [4], and people orientation [5]. The reader is referred to [6,7] for an introduction to circular data and for several stimulating examples and areas of application.

In this paper, we deal with the robust fitting of multivariate circular data according to a wrapped normal model. Robust estimation is meant to mitigate the adverse effects of outliers on estimation and inference. Outliers are unexpected anomalous values that exhibit a different pattern from the rest of the data, as in the case of data oriented towards certain rare directions [3,8,9]. In circular data modeling, in the univariate case, the data can be represented as points on the circumference of the unit circle. The idea can be extended to the multivariate setting, where observations are supposed to lie on a $p$-dimensional torus, obtained by revolving the unit circle in a $p$-dimensional manifold, with $p \geq 2$. Therefore, the main aspect of circular data is periodicity, which is reflected in the boundedness of the sample space and often of the parameter space.

The purpose of robust estimation is twofold: on the one hand, we aim to fit a model to the circular data at hand; on the other hand, an effective outlier detection rule can be derived from the robust estimation technique. The latter often gives important insight into the data generation scheme and the statistical analysis. Looking for outliers and investigating their source and nature could unveil unknown random mechanisms that are worth studying and may not have been considered otherwise. It is also important to keep in mind that outliers are model dependent, since they are defined with respect to the specified model. Hence, an effective detection of outliers could be a strategy to improve the model [10]. We further remark that a reliable outlier detection rule cannot be derived from a non-robust method that is sensitive to contamination in the data, such as maximum likelihood estimation.

There have been several attempts to deal with outliers in circular data analysis, mainly focused on the von Mises distribution and univariate problems [2,11,12,13]. A first general attempt to develop a robust parametric technique in the multivariate case can be found in [9]: the authors focused on weighted likelihood estimation and considered outliers from a probabilistic point of view, as points that are unlikely to occur under the assumed model. A different approach, based on a local measure of outlyingness for a circular observation computed from its distances to its $k$ nearest neighbors, has been suggested in [14].

In this paper, we propose a novel robust estimation scheme. The key idea is that outlyingness is not measured directly on the torus as in [9], but only after unwrapping the multivariate circular data from the $p$-dimensional torus onto a hyperplane. This approach allows one to search for outliers based on their geometric distance from the robust fit. In other words, the main difference between the proposed technique and that discussed in [9] lies in the downweighting strategy: here, weights are evaluated over the data unwrapped onto $\mathbb{R}^p$, whereas in [9] the weights are computed directly on the circular data over the torus and the fitted wrapped model.

In particular, we focus on the multivariate wrapped normal distribution which, despite its apparent complexity, allows us to develop a general robust estimation algorithm. Alternative robust estimation techniques are considered, such as those stemming from M-estimation, weighted likelihood, and hard trimming. It is worth noting that, to the best of our knowledge, M-estimation and hard trimming procedures have never been considered in robust estimation for circular data, either in univariate problems or in the multivariate case. In addition, the weighted likelihood approach adopted here differs from that employed in [9], according to the comments above.

The proposed robust estimation techniques also lead to outlier detection strategies based on formal rules and the fitted model, paralleling the classical results under a multivariate normal model [15]. It is also worth remarking that the methodology can be extended to the family of wrapped elliptically symmetric distributions.

The rest of the paper is structured as follows. In Section 2, we give some necessary background on the multivariate wrapped normal distribution and on the maximum likelihood estimation approach. This represents the starting point for the new robust algorithms introduced in Section 3. Outlier detection is discussed in Section 4. The finite sample behavior of the proposed methodology is investigated through some illustrative synthetic examples in Section 5 and through numerical studies in Section 6. Real data analyses are discussed in Section 7. Concluding remarks are given in Section 8.

2. Fitting a Multivariate Wrapped Normal Model

The multivariate wrapped normal distribution is obtained by component-wise wrapping a $p$-variate normal distribution $N_p(\mu, \Sigma)$ on a $p$-dimensional torus [16,17,18] according to $y = x \bmod 2\pi$, $x \sim N_p(\mu, \Sigma)$. Formally, $y$ is multivariate wrapped normal, and the modulus operator $\bmod\ 2\pi$ is performed component-wise. Then, we can write $y \sim WN_p(\mu, \Sigma)$.

The density function of $y$ takes the form of an infinite sum over $j \in \mathbb{Z}^p$, that is:

$$ m(y; \mu, \Sigma) = \sum_{j \in \mathbb{Z}^p} \phi(y + 2\pi j; \mu, \Sigma), \tag{1} $$

where $\phi(\cdot\,; \mu, \Sigma)$ denotes the density function of $N_p(\mu, \Sigma)$. The support of $y$ is bounded and given by $[0, 2\pi)^p$. Without loss of generality, we let $\mu \in [0, 2\pi)^p$ to ensure identifiability. The $p$-dimensional vector $j$ represents the wrapping coefficients vector, that is, it indicates how many times each component of the toroidal data point has been wrapped. Hence, if we observed the vector $j$, we would obtain the unwrapped (unobserved and hence not available) observation $x = y + 2\pi j$.
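As a concrete illustration of (1), the following is a minimal sketch, assuming NumPy and SciPy are available, that evaluates the density by truncating the sum over $\mathbb{Z}^p$ to $\{-J, \ldots, J\}^p$. The function name `wrapped_normal_density` and the default `J=3` are illustrative assumptions, not taken from the text.

```python
import itertools

import numpy as np
from scipy.stats import multivariate_normal


def wrapped_normal_density(y, mu, sigma, J=3):
    """Approximate the WN_p(mu, sigma) density at torus points y of shape (n, p).

    The infinite sum over j in Z^p is truncated to {-J, ..., J}^p
    (J=3 is an illustrative default, not a value from the text).
    """
    y = np.atleast_2d(np.asarray(y, dtype=float))
    p = y.shape[1]
    mvn = multivariate_normal(mean=mu, cov=sigma)
    dens = np.zeros(y.shape[0])
    for j in itertools.product(range(-J, J + 1), repeat=p):
        # add phi(y + 2*pi*j; mu, Sigma) for one wrapping coefficients vector j
        dens += mvn.pdf(y + 2.0 * np.pi * np.asarray(j))
    return dens
```

Integrating the result over a fine grid on $[0, 2\pi)^p$ should give a value close to one whenever $J$ is large relative to the marginal standard deviations.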

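Given a sample $(y_1, \ldots, y_n)$, the log-likelihood function is given by:

$$ \ell(\mu, \Sigma) = \sum_{i=1}^{n} \log \sum_{j \in \mathbb{Z}^p} \phi(y_i + 2\pi j; \mu, \Sigma). \tag{2} $$

Direct maximization of the log-likelihood function in (2) appears unfeasible, since it involves an infinite sum over $\mathbb{Z}^p$. A first simplification stems from approximating the density function (1) with only a few terms [6], so that $\mathbb{Z}^p$ is replaced by the Cartesian product $\mathcal{C}_J = \times_{s=1}^{p} \mathcal{J}$, where $\mathcal{J} = \{-J, -J+1, \ldots, 0, \ldots, J-1, J\}$ for some $J$ providing a good approximation. Therefore, maximum likelihood estimation can be performed through the Expectation-Maximization (EM) or Classification-Expectation-Maximization (CEM) algorithm based on the (approximated) classification log-likelihood:

$$ \ell_C(\mu, \Sigma) = \sum_{i=1}^{n} \sum_{j \in \mathcal{C}_J} v_{ij} \log \phi(y_i + 2\pi j; \mu, \Sigma), \tag{3} $$

where $v_{ij} = 1$ or $v_{ij} = 0$ according to whether or not $y_i$ has $j$ as its wrapping coefficients vector.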

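The CEM algorithm turns out to be a particularly appealing way to perform maximum likelihood estimation, both in terms of accuracy and computational time [8]. The CEM algorithm alternates between the CE-step:

$$ \hat{j}_i = \arg\max_{j \in \mathcal{C}_J} \phi(y_i + 2\pi j; \hat\mu, \hat\Sigma), \qquad \hat{x}_i = y_i + 2\pi \hat{j}_i, \qquad i = 1, \ldots, n, \tag{4} $$

and the M-step:

$$ \hat\mu = \frac{1}{n} \sum_{i=1}^{n} \hat{x}_i, \qquad \hat\Sigma = \frac{1}{n} \sum_{i=1}^{n} (\hat{x}_i - \hat\mu)(\hat{x}_i - \hat\mu)^\top. \tag{5} $$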

If the wrapping coefficients were known, we would obtain that $x_i = y_i + 2\pi j_i$, $i = 1, \ldots, n$, are realizations from a multivariate normal distribution. Notice that, under such circumstances, the estimation process in (5) reduces to the estimation of the parameters of a multivariate normal distribution. Hence, the wrapping coefficients can be considered as latent variables and the observed circular data $y_i$ as being incomplete [8,19,20].

We stress that, at each step, the algorithm allows us to deal with multivariate normal data $\hat{x}_i = y_i + 2\pi \hat{j}_i$ obtained as the result of the CE-step in (4). Then, the M-step in (5) involves the computation of the classical maximum likelihood estimates of the parameters of a multivariate normal distribution.
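The CE-step (4) and M-step (5) can be sketched as follows, assuming NumPy. Since $\Sigma$ is held fixed within a CE-step, maximizing $\phi(y_i + 2\pi j; \hat\mu, \hat\Sigma)$ over $j$ is the same as minimizing the Mahalanobis distance of the candidate unwrapped point. The function name, the default `J=3`, the convergence tolerance and the final reduction of $\hat\mu$ to $[0, 2\pi)^p$ are illustrative choices, not prescriptions of the text.

```python
import itertools

import numpy as np


def cem_wrapped_normal(y, mu0, sigma0, J=3, max_iter=200, tol=1e-10):
    """Classical CEM for WN_p: CE-step as in (4), normal-MLE M-step as in (5).

    y has shape (n, p) with entries in [0, 2*pi); J truncates Z^p to C_J.
    """
    y = np.asarray(y, dtype=float)
    n, p = y.shape
    grid = np.array(list(itertools.product(range(-J, J + 1), repeat=p)))  # C_J
    cand = y[:, None, :] + 2.0 * np.pi * grid[None, :, :]  # all unwrappings, (n, |C_J|, p)
    mu, sigma = np.asarray(mu0, dtype=float), np.asarray(sigma0, dtype=float)
    for _ in range(max_iter):
        # CE-step: the most likely wrapping vector minimizes the Mahalanobis distance
        diff = cand - mu
        d2 = np.einsum("ika,ab,ikb->ik", diff, np.linalg.inv(sigma), diff)
        x_hat = cand[np.arange(n), d2.argmin(axis=1)]
        # M-step: classical maximum likelihood estimates on the completed data
        mu_new = x_hat.mean(axis=0)
        r = x_hat - mu_new
        sigma_new = r.T @ r / n
        converged = np.allclose(mu_new, mu, atol=tol) and np.allclose(sigma_new, sigma, atol=tol)
        mu, sigma = mu_new, sigma_new
        if converged:
            break
    # report the mean on [0, 2*pi)^p, as required for identifiability
    return mu % (2.0 * np.pi), sigma, x_hat
```

Starting the algorithm from the circular sample mean and a diagonal scatter matrix is usually enough on clean data; the robust variants discussed next only change the M-step.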

3. A Robust CEM Algorithm

A robust CEM algorithm can be obtained by a suitable modification of the M-step (5), while leaving the CE-step unchanged. Indeed, robustness is achieved by solving a different set of complete-data estimating equations given by:

$$ \sum_{i=1}^{n} \Psi(\hat{x}_i; \mu, \Sigma) = 0, \tag{6} $$

where the estimating function $\Psi(\cdot\,; \mu, \Sigma)$ is supposed to define a bounded influence and/or high breakdown point estimator of multivariate location and scatter [10,21,22]. The resulting algorithm is a special case of the general proposal developed in [23], which gives very general conditions for consistency and asymptotic normality of the estimator defined by the roots of (6). The main requirements are unbiasedness of the estimating equation, the existence of a positive definite variance-covariance matrix $\mathrm{E}[\Psi \Psi^\top]$, and of a negative definite partial derivatives matrix $\mathrm{E}[\partial \Psi / \partial \theta^\top]$, with $\theta = (\mu, \Sigma)$.

In this paper, we suggest a very general strategy that parallels the classical approaches to robust estimation of multivariate location and scatter under the common multivariate normal assumption, and that can be easily extended to the more general setting of elliptically symmetric distributions. In this respect, it is possible to use estimating equations as in (6) that satisfy the above requirements. In particular, we will consider estimating equations characterized by a set of data-dependent weights that are meant to downweight those data points that exhibit large Mahalanobis distances from the robust fit. The Mahalanobis distance is defined over the complete unwrapped data as:

$$ d_i = d(\hat{x}_i; \mu, \Sigma) = \sqrt{(\hat{x}_i - \mu)^\top \Sigma^{-1} (\hat{x}_i - \mu)}, $$

and it is used to assess outlyingness. In the following, we illustrate some well-established techniques for robust estimation of multivariate location and scatter that define estimating equations as in (6) to be used in the M-step.

3.1. M-Estimation

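The M-step can be modified in order to perform M-estimation as follows:

$$ \hat\mu = \frac{\sum_{i=1}^{n} w_i \hat{x}_i}{\sum_{i=1}^{n} w_i}, \qquad \hat\Sigma = \frac{\sum_{i=1}^{n} w_i (\hat{x}_i - \hat\mu)(\hat{x}_i - \hat\mu)^\top}{\sum_{i=1}^{n} w_i}, $$

with:

$$ w_i = w\big(d(\hat{x}_i; \mu, \Sigma)\big), $$

for a certain weight function $w(\cdot)$. The weights are supposed to be close to zero for those data points exhibiting large distances from the robust fit. Well-known weight functions involved in M-type estimation are the classical Huber weight $w_H(d) = \min(1, c/d)$ and the Tukey bisquare weight $w_T(d) = [1 - (d/c)^2]^2\, \mathbb{1}(d \leq c)$. The constant $c$ regulates the trade-off between robustness and efficiency.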
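A minimal iteratively reweighted sketch of this M-step on the completed data $\hat{x}_i$ is given below, assuming NumPy. The function names, the iteration controls, and the decision to iterate the weighted updates to convergence within a single M-step are illustrative assumptions; no consistency correction of the scatter matrix is applied here.

```python
import numpy as np


def huber_weight(d, c):
    """Huber weights min(1, c/d)."""
    d = np.asarray(d, dtype=float)
    return np.minimum(1.0, c / np.maximum(d, 1e-12))


def tukey_weight(d, c):
    """Tukey bisquare weights (1 - (d/c)^2)^2 for d <= c, and 0 otherwise."""
    u = np.asarray(d, dtype=float) / c
    return np.where(u <= 1.0, (1.0 - u**2) ** 2, 0.0)


def m_step(x_hat, mu, sigma, weight, c, max_iter=100, tol=1e-8):
    """Iteratively reweighted M-type update of (mu, Sigma) on completed data."""
    x_hat = np.asarray(x_hat, dtype=float)
    mu, sigma = np.asarray(mu, dtype=float), np.asarray(sigma, dtype=float)
    for _ in range(max_iter):
        diff = x_hat - mu
        d = np.sqrt(np.einsum("ia,ab,ib->i", diff, np.linalg.inv(sigma), diff))
        w = weight(d, c)  # data-dependent weights from the current fit
        mu_new = (w[:, None] * x_hat).sum(axis=0) / w.sum()
        r = x_hat - mu_new
        sigma_new = (w[:, None] * r).T @ r / w.sum()
        done = np.allclose(mu_new, mu, atol=tol) and np.allclose(sigma_new, sigma, atol=tol)
        mu, sigma = mu_new, sigma_new
        if done:
            break
    return mu, sigma, w
```

With the redescending Tukey weights, points far from the current fit receive exactly zero weight, whereas Huber weights only shrink their contribution.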

3.2. S-Estimation

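In S-estimation, the objective is to minimize a measure of dispersion of the distances. Let $\Sigma = \sigma^2 \Gamma$, where $\sigma^2$ denotes the size and $\Gamma$, with $|\Gamma| = 1$, the shape of the variance-covariance matrix. Then, in the M-step one could update $(\mu, \Gamma)$ by minimizing some robust measure of scale of the distances, that is:

$$ (\hat\mu, \hat\Gamma) = \arg\min_{\mu, \Gamma} \sigma\big(d(\hat{x}; \mu, \Gamma)\big), $$

with $d(\hat{x}; \mu, \Gamma) = \big(d(\hat{x}_1; \mu, \Gamma), \ldots, d(\hat{x}_n; \mu, \Gamma)\big)$, where $\sigma(\cdot)$ is an M-scale estimate that satisfies:

$$ \frac{1}{n} \sum_{i=1}^{n} \rho_c\left(\frac{d(\hat{x}_i; \mu, \Gamma)}{\sigma}\right) = \delta, $$

where $\delta$ is a fixed constant and $\rho_c$ is the Tukey bisquare function, $\rho_c(d) = 1 - [1 - (d/c)^2]^3$ for $|d| \leq c$ and $\rho_c(d) = 1$ otherwise, with associated estimating function $\psi_c(d) = \rho_c'(d)$. It can be shown that the solution to the minimization problem satisfies M-type estimating equations [24]. The S-estimate for $\Sigma$ is updated as $\hat\Sigma = \hat\sigma^2 \hat\Gamma$. The consistency factor $c$ determines the robustness-efficiency trade-off of the estimator. The reader is referred to [25] for details about its selection.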
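The M-scale that S-estimation minimizes can be computed for a given vector of distances by solving the defining equation numerically. The sketch below assumes NumPy and SciPy's `brentq` root finder; it covers only the scale $\sigma(\cdot)$ for fixed $(\mu, \Gamma)$, not the outer minimization over $(\mu, \Gamma)$, and the function names and bracketing interval are illustrative choices.

```python
import numpy as np
from scipy.optimize import brentq


def rho_bisquare(t, c):
    """Tukey bisquare rho_c, normalized so that rho_c(t) = 1 for |t| >= c."""
    u = np.minimum(np.abs(np.asarray(t, dtype=float)) / c, 1.0)
    return 1.0 - (1.0 - u**2) ** 3


def m_scale(d, c, delta):
    """M-scale sigma solving (1/n) * sum(rho_c(d_i / sigma)) = delta.

    The left-hand side decreases in sigma, so a bracketing root finder is used.
    Assumes 0 < delta < 1 and that more than a fraction delta of d is nonzero.
    """
    d = np.abs(np.asarray(d, dtype=float))

    def excess(s):
        return rho_bisquare(d / s, c).mean() - delta

    dmax = d.max()
    return brentq(excess, 1e-8 * dmax, 1e4 * dmax)
```

Because the defining equation only involves $d_i / \sigma$, the resulting scale is equivariant: multiplying all distances by a constant multiplies $\sigma$ by the same constant.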

3.3. MM-Estimation

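S-estimation can be improved in terms of efficiency if the consistency factor used in the estimation of $\Gamma$ is larger than that used in the computation of $\sigma$. Then, one could update $(\mu, \Gamma)$ by minimizing:

$$ \sum_{i=1}^{n} \rho_{c_1}\left(\frac{d(\hat{x}_i; \mu, \Gamma)}{\hat\sigma}\right), $$

with $c_1 > c_0$ and $\hat\sigma$ the S-estimate of scale obtained with $\rho_{c_0}$. The updated MM-estimate of $\Sigma$ is $\hat\Sigma = \hat\sigma^2 \hat\Gamma$. A small value of $c_0$ in the first step leads to a high breakdown point, whereas a larger value $c_1$ in the second step corresponds to a larger efficiency [22,25,26].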
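The second MM stage can be sketched by iterating the reweighting fixed-point equations with $\hat\sigma$ held fixed: the stationarity conditions give a weighted mean for $\mu$ and a weighted scatter proportional to $\Gamma$, which is then rescaled to unit determinant. This is a minimal sketch assuming NumPy; the S-scale `sigma_s` and the starting $(\mu, \Gamma)$ are assumed to come from a previous S-step, and the function names and iteration controls are hypothetical.

```python
import numpy as np


def bisquare_irls_weight(t, c):
    """psi_c(t) / t for the bisquare rho_c, up to the constant factor 6 / c**2."""
    u = np.abs(np.asarray(t, dtype=float)) / c
    return np.where(u < 1.0, (1.0 - u**2) ** 2, 0.0)


def mm_update(x_hat, mu, gamma, sigma_s, c1, max_iter=100, tol=1e-9):
    """Second MM stage: with the S-scale sigma_s fixed, iterate the reweighting
    equations for sum_i rho_{c1}(d(x_i; mu, Gamma) / sigma_s), keeping |Gamma| = 1."""
    x_hat = np.asarray(x_hat, dtype=float)
    p = x_hat.shape[1]
    mu, gamma = np.asarray(mu, dtype=float), np.asarray(gamma, dtype=float)
    for _ in range(max_iter):
        diff = x_hat - mu
        d = np.sqrt(np.einsum("ia,ab,ib->i", diff, np.linalg.inv(gamma), diff))
        w = bisquare_irls_weight(d / sigma_s, c1)
        mu_new = (w[:, None] * x_hat).sum(axis=0) / w.sum()
        r = x_hat - mu_new
        scatter = (w[:, None] * r).T @ r
        gamma_new = scatter / np.linalg.det(scatter) ** (1.0 / p)  # unit determinant
        done = np.allclose(mu_new, mu, atol=tol) and np.allclose(gamma_new, gamma, atol=tol)
        mu, gamma = mu_new, gamma_new
        if done:
            break
    return mu, gamma, sigma_s**2 * gamma
```

The constant factor in the weights cancels in both updates, which is why it can be dropped.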

3.4. Weighted Likelihood Estimation

The weighted likelihood estimating equations share the same structure as M-type estimating equations, but with weights:

$$ w_i = \frac{[A(\delta_i) + 1]^+}{\delta_i + 1}, $$

where $\delta_i$ is the Pearson residual and $A(\cdot)$ is the Residual Adjustment Function (RAF) [27,28,29,30,31], with $[\cdot]^+$ denoting the positive part. Following [32], Pearson residuals can be computed by comparing the vector of squared distances with their underlying $\chi^2_p$ distribution at the assumed multivariate normal model, as:

$$ \delta_i = \frac{\hat{f}(d_i^2)}{m_{\chi^2_p}(d_i^2)} - 1, $$

where $\hat{f}$ is an unbiased-at-the-boundary kernel density estimate based on the set of squared distances $\{d_1^2, \ldots, d_n^2\}$, evaluated at the current parameter values obtained from weighted likelihood estimation, and $m_{\chi^2_p}$ denotes the density function of a $\chi^2_p$ variate. The residual adjustment function can be derived from the class of power divergence measures (including maximum likelihood, Hellinger distance, Kullback–Leibler divergence, and Neyman's chi-square), from the symmetric chi-square divergence, or from the family of generalized Kullback–Leibler divergences. It plays the same role as the Huber or Tukey function. The method requires the evaluation of a kernel density estimate at each step over the set $\{d_1^2, \ldots, d_n^2\}$. The kernel bandwidth allows control of the robustness-efficiency trade-off. Methods to obtain a kernel density estimate unbiased at the boundary have been discussed and compared in [32].
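The weights above can be sketched for the symmetric chi-square RAF, for which $[A(\delta) + 1]^+/(\delta + 1)$ simplifies to $1 - \delta^2/(\delta + 2)^2$. The sketch assumes NumPy and SciPy and, as a stand-in for an unbiased-at-the-boundary estimator, uses a Gaussian kernel with reflection at zero; this is only one simple boundary correction and not necessarily any of the methods compared in [32]. The bandwidth `h` and all function names are hypothetical. For $p > 2$ the $\chi^2_p$ density vanishes at zero, so very small distances may be poorly handled by this raw ratio; a kernel-smoothed model density is often used in the denominator instead.

```python
import numpy as np
from scipy.stats import chi2, norm


def scs_weight(delta):
    """WLE weight [A(delta) + 1]^+ / (delta + 1) for the symmetric chi-square RAF.

    For this RAF it equals 4 (delta + 1) / (delta + 2)^2 = 1 - delta^2 / (delta + 2)^2,
    which lies in [0, 1] for delta >= -1 (written in product form to avoid overflow).
    """
    delta = np.asarray(delta, dtype=float)
    return (4.0 / (delta + 2.0)) * ((delta + 1.0) / (delta + 2.0))


def reflected_kde(t, data, h):
    """Gaussian kernel density estimate on [0, inf) with reflection at 0."""
    t = np.asarray(t, dtype=float)[:, None]
    k = norm.pdf((t - data) / h) + norm.pdf((t + data) / h)
    return k.sum(axis=1) / (len(data) * h)


def wle_weights(d2, p, h):
    """Pearson residuals of squared distances d2 against chi^2_p, and WLE weights."""
    d2 = np.asarray(d2, dtype=float)
    f_hat = reflected_kde(d2, d2, h)
    m = np.maximum(chi2.pdf(d2, df=p), 1e-300)  # guard against underflow
    delta = f_hat / m - 1.0
    return np.clip(scs_weight(delta), 0.0, 1.0), delta
```

Points whose squared distances fall where the model density is negligible obtain large Pearson residuals and hence weights close to zero.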

It is worth remarking that Pearson residuals could also have been computed in a different fashion, through a multivariate non-parametric density estimate evaluated over the unwrapped data $\hat{x}_i \in \mathbb{R}^p$ or over the original torus data $y_i$. In the former case, a multivariate kernel density estimate would have been compared with a multivariate normal density. In the latter case, a suitable technique is needed to obtain a multivariate kernel density estimate for torus data, to be compared with the wrapped normal density. This approach has been developed in [9]. In a very general framework, the limitations related to the use of multivariate kernels have been investigated in depth in [32]. The reader is also referred to [33,34], who developed weighted likelihood-based EM and CEM algorithms in the framework of robust fitting of mixture models.

3.5. Impartial Trimming Robust Estimation

In the M-step, we consider a 0–1 weight function, that is:

$$ w_i = \mathbb{1}\big(d_i^2 \leq q\big), \tag{8} $$

for a certain threshold $q$. This is also known as a hard trimming strategy. The cut-off $q$ can be fixed in advance or determined in an adaptive fashion; prominent examples are the Minimum Covariance Determinant (MCD) estimator [35] and the Forward Search [36], respectively. The computation of weights obeys an impartial trimming strategy: based on the current parameter values at each step, distances are sorted in non-decreasing order, that is, $d_{(1)} \leq d_{(2)} \leq \cdots \leq d_{(n)}$, and then maximum likelihood estimates of location and scatter are computed over the non-trimmed set. In other words, a null weight is assigned to those data points exhibiting the largest $\lfloor n\epsilon \rfloor$ distances, where $\epsilon$ is the trimming level: in this case, we have $q = d^2_{(n - \lfloor n\epsilon \rfloor)}$. The variance-covariance estimate evaluated over the non-trimmed set is commonly inflated to ensure consistency at the normal model by a factor $(1-\epsilon)/F_{\chi^2_{p+2}}(\chi^2_{p;1-\epsilon})$, where $F_{\chi^2_{p+2}}$ denotes the $\chi^2_{p+2}$ distribution function and $\chi^2_{p;1-\epsilon}$ is the $(1-\epsilon)$-level quantile of the $\chi^2_p$ distribution. A reweighting step can also be performed after convergence has been reached, with weights computed as in (8) on the final fitted distances, with $q$ set to a high quantile of the $\chi^2_p$ distribution (the numerical studies in Section 6 use the 0.975-level quantile). The final estimates should be inflated as well, by the factor $(1-\epsilon)/F_{\chi^2_{p+2}}(\chi^2_{p;1-\epsilon})$, where now $\epsilon$ is the rate of actual trimming in the reweighting step.
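One impartial-trimming M-step, including the consistency factor above, can be sketched as follows, assuming NumPy and SciPy. The function name is hypothetical, ties among distances are broken arbitrarily by the sort, and repeated application of the step (a concentration-type iteration) is an illustrative usage rather than a prescription of the text.

```python
import numpy as np
from scipy.stats import chi2


def trimming_step(x_hat, mu, sigma, eps):
    """One impartial-trimming M-step with trimming level eps.

    The floor(n * eps) points with the largest squared distances get a null
    weight; the normal MLE is computed on the rest and the scatter is
    inflated by (1 - eps) / F_{chi2_{p+2}}(chi2_{p; 1 - eps}).
    """
    x_hat = np.asarray(x_hat, dtype=float)
    n, p = x_hat.shape
    diff = x_hat - mu
    d2 = np.einsum("ia,ab,ib->i", diff, np.linalg.inv(sigma), diff)
    h = n - int(np.floor(n * eps))  # size of the non-trimmed set
    keep = np.argsort(d2)[:h]  # indices of the h smallest squared distances
    w = np.zeros(n)
    w[keep] = 1.0
    mu_new = x_hat[keep].mean(axis=0)
    r = x_hat[keep] - mu_new
    factor = (1.0 - eps) / chi2.cdf(chi2.ppf(1.0 - eps, df=p), df=p + 2)
    return mu_new, factor * (r.T @ r) / h, w
```

For $p = 2$ and $\epsilon = 0.25$ the factor has the closed form $0.75 / [1 - 0.25(1 + \log 4)] \approx 1.86$, which is a convenient check of the implementation.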

3.6. Initialization

A crucial issue in the development of EM and CEM algorithms is the choice of the initial parameter values $(\mu^{(0)}, \Sigma^{(0)})$. Moreover, to avoid dependence of the algorithm on the starting point and to avoid being trapped in local or spurious solutions, it is suggested to run the algorithm from different initial values and then choose the solution that best satisfies some criterion. Here, starting values are obtained by subsampling. The mean vector $\mu^{(0)}$ is initialized with the circular sample mean. Initial diagonal elements of $\Sigma^{(0)}$ are given by $\sigma^{(0)}_{rr} = -2 \log \bar{R}_r$, where $\bar{R}_r$ is the sample mean resultant length of the $r$-th component; the off-diagonal elements of $\Sigma^{(0)}$ are given by $\sigma^{(0)}_{rs} = \varrho(y_r, y_s)\sqrt{\sigma^{(0)}_{rr}\sigma^{(0)}_{ss}}$ ($r \neq s$), where $\varrho(y_r, y_s)$ is the circular correlation coefficient between $y_r = (y_{1r}, \ldots, y_{nr})^\top$ and $y_s = (y_{1s}, \ldots, y_{ns})^\top$ [18]. The subsample size is expected to be as small as possible, in order to increase the probability of obtaining an outlier-free initial subset, but large enough to guarantee estimation of the unknown parameters. Several strategies may be adopted to select the best solution at convergence, depending on the robust methodology applied to update the parameter values. For instance, after MM-estimation, one could consider the solution leading to the smallest robust scale estimate among squared distances; when applying impartial trimming, one could consider the solution with the lowest determinant $|\hat\Sigma|$; the solution stemming from weighted likelihood estimating equations can be selected according to a minimum disparity criterion [32,33], or by minimizing the probability of observing a small Pearson residual over multivariate normal data ([9,32] and references therein).
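The moment-type starting values just described can be sketched as follows, assuming NumPy. The text does not spell out the circular correlation coefficient, so the sketch assumes the sine-based form $\sum_i \sin(y_{ir} - \bar{y}_r)\sin(y_{is} - \bar{y}_s) / \sqrt{\sum_i \sin^2(y_{ir} - \bar{y}_r)\sum_i \sin^2(y_{is} - \bar{y}_s)}$, with $\bar{y}_r$ the circular sample means; the function name is hypothetical. In practice the function would be applied to each random subsample.

```python
import numpy as np


def circular_start(y):
    """Starting values (mu0, Sigma0) for WN_p from torus data y of shape (n, p)."""
    y = np.asarray(y, dtype=float)
    z = np.exp(1j * y).mean(axis=0)  # mean resultant vector of each column
    mu0 = np.angle(z) % (2.0 * np.pi)  # circular sample means
    rbar = np.abs(z)  # sample mean resultant lengths
    var0 = -2.0 * np.log(rbar)  # diagonal elements: -2 log(Rbar_r)
    s = np.sin(y - mu0)  # sin(y_ir - ybar_r)
    cross = s.T @ s
    # assumed sine-based circular correlation coefficient between columns r and s
    corr = cross / np.sqrt(np.outer(np.diag(cross), np.diag(cross)))
    sigma0 = corr * np.sqrt(np.outer(var0, var0))
    return mu0, sigma0
```

The diagonal choice is natural for the wrapped normal, whose marginal mean resultant length equals $\exp(-\sigma_{rr}/2)$.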

3.7. Extension to Mixed-Type Data

It may happen that we are interested in the joint distribution of some toroidal and (multivariate) linear data. Let us denote the mixed-type data matrix as $(Y, Z)$, where $Y$ is composed of $p_1$-dimensional circular data and $Z$ of $p_2$-dimensional linear data. Such mixed-type data are commonly referred to as cylindrical [7]. Under the wrapped normal model, $y = x \bmod 2\pi$ in a component-wise fashion and $(x^\top, z^\top)^\top$ has a $(p_1 + p_2)$-variate normal distribution. If we knew the wrapping coefficients vectors, we could deal with a sample of size $n$ from a multivariate normal distribution. The unknown wrapping coefficients vectors are estimated in the CE-step (4), so that one can work with the complete data $(\hat{x}_i^\top, z_i^\top)^\top$ at each step.
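For cylindrical data only the circular block needs to be unwrapped in the CE-step, while the linear block enters the distance unchanged. The sketch below assumes NumPy, that the first $p_1$ entries of $\mu$ and rows/columns of $\Sigma$ refer to the circular components, and a truncation `J=3`; the function name is hypothetical.

```python
import itertools

import numpy as np


def ce_step_mixed(y, z, mu, sigma, J=3):
    """CE-step for cylindrical data (y circular, shape (n, p1); z linear, (n, p2)).

    For each i, the wrapping vector j in {-J, ..., J}^p1 minimizing the
    Mahalanobis distance of (y_i + 2*pi*j, z_i) from mu under sigma is
    selected, and the completed rows (x_hat_i, z_i) are returned.
    """
    y, z = np.asarray(y, dtype=float), np.asarray(z, dtype=float)
    n, p1 = y.shape
    grid = np.array(list(itertools.product(range(-J, J + 1), repeat=p1)))
    unwrapped = y[:, None, :] + 2.0 * np.pi * grid[None, :, :]  # (n, K, p1)
    linear = np.broadcast_to(z[:, None, :], (n, len(grid), z.shape[1]))  # unchanged
    cand = np.concatenate([unwrapped, linear], axis=2)  # (n, K, p1 + p2)
    diff = cand - np.asarray(mu, dtype=float)
    d2 = np.einsum("ika,ab,ikb->ik", diff, np.linalg.inv(sigma), diff)
    return cand[np.arange(n), d2.argmin(axis=1)]
```

Any of the robust M-steps above can then be applied to the returned $(p_1 + p_2)$-dimensional rows.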

4. Outlier Detection

Outlier detection is a task strongly connected to the problem of robust fitting; its main aim is to identify those data showing anomalous patterns, or even no pattern at all, that deviate from the model assumptions. For linear data, the classical approach to outlier detection relies on Mahalanobis distances [37,38]. Here, the same approach can be pursued on the inferred unwrapped data $\hat{x}_i$ at convergence. Formally, an observation is flagged as an outlier when:

$$ d^2(\hat{x}_i; \hat\mu, \hat\Sigma) > \chi^2_{p;1-\alpha}, \tag{9} $$

where $\chi^2_{p;1-\alpha}$ is the $(1-\alpha)$-level quantile of the $\chi^2_p$ distribution, for a small significance level $\alpha$.

Outlier detection is the result of a testing strategy. For a fixed significance level, the process of outlier detection may result in type-I and type-II errors. In the former case, a genuine observation is wrongly flagged as an outlier (swamping); in the latter case, a true outlier is not identified (masking). Therefore, it is important to control both the level, given by the rate of swamped genuine observations, and the power of the test, given by the rate of outliers correctly detected. The outlier detection rule can be improved by proper adjustments that correct for multiple testing and avoid excessive swamping [39].
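Rule (9) can be sketched as follows on the unwrapped data at convergence, assuming NumPy and SciPy. The optional `bonferroni` flag, which replaces $\alpha$ by $\alpha/n$, is one simple multiple-testing adjustment chosen for illustration; it is not necessarily the adjustment of [39]. The function name and the default `alpha=0.01` are assumptions.

```python
import numpy as np
from scipy.stats import chi2


def flag_outliers(x_hat, mu, sigma, alpha=0.01, bonferroni=False):
    """Rule (9): flag x_hat_i when d^2(x_hat_i; mu, Sigma) > chi2_{p; 1 - alpha}.

    bonferroni=True uses alpha / n instead of alpha (illustrative adjustment).
    """
    x_hat = np.asarray(x_hat, dtype=float)
    n, p = x_hat.shape
    diff = x_hat - mu
    d2 = np.einsum("ia,ab,ib->i", diff, np.linalg.inv(sigma), diff)
    level = alpha / n if bonferroni else alpha
    cutoff = chi2.ppf(1.0 - level, df=p)
    return d2 > cutoff, d2, cutoff
```

On clean normal data the unadjusted rule flags roughly a fraction $\alpha$ of the genuine points, which is exactly the swamping that the adjustment is meant to reduce.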

5. Illustrative Synthetic Examples

In this section, in order to illustrate the main aspects and benefits of the proposed robust estimation methodology, we consider two examples with synthetic data. Here, the examples only concern bivariate torus data. The sample size is

with

contamination. We compare the results of the robust CEM described in Section 3 with those of Maximum Likelihood Estimation (MLE), performed according to the classical CEM algorithm described in Section 2, with

. In particular, we consider MM-estimation with

breakdown point and

shape efficiency, the WLE with a symmetric chi-square RAF, and impartial trimming with

trimming and reweighting based on the

-level quantile of the $\chi^2_2$ distribution. It is worth stressing that the breakdown point and the efficiency of the robust methods are tuned under the assumption of multivariate normality for the unwrapped data $\hat{x}_i$. The robust CEM algorithm has been initialized from 20 different initial values evaluated over subsamples of size 10. Data and outliers have been plotted with different symbols and colors (available in the online version of the paper).

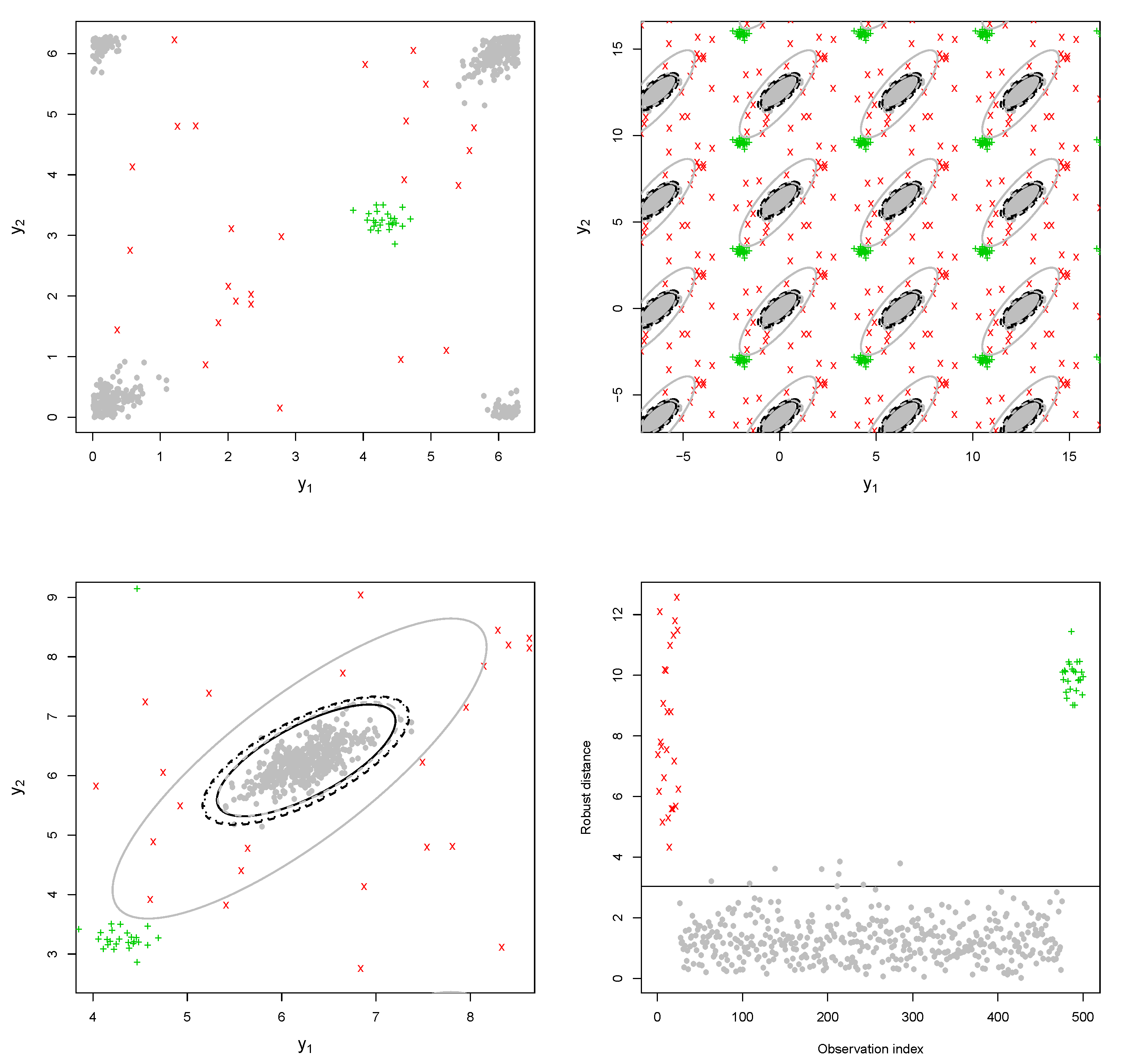

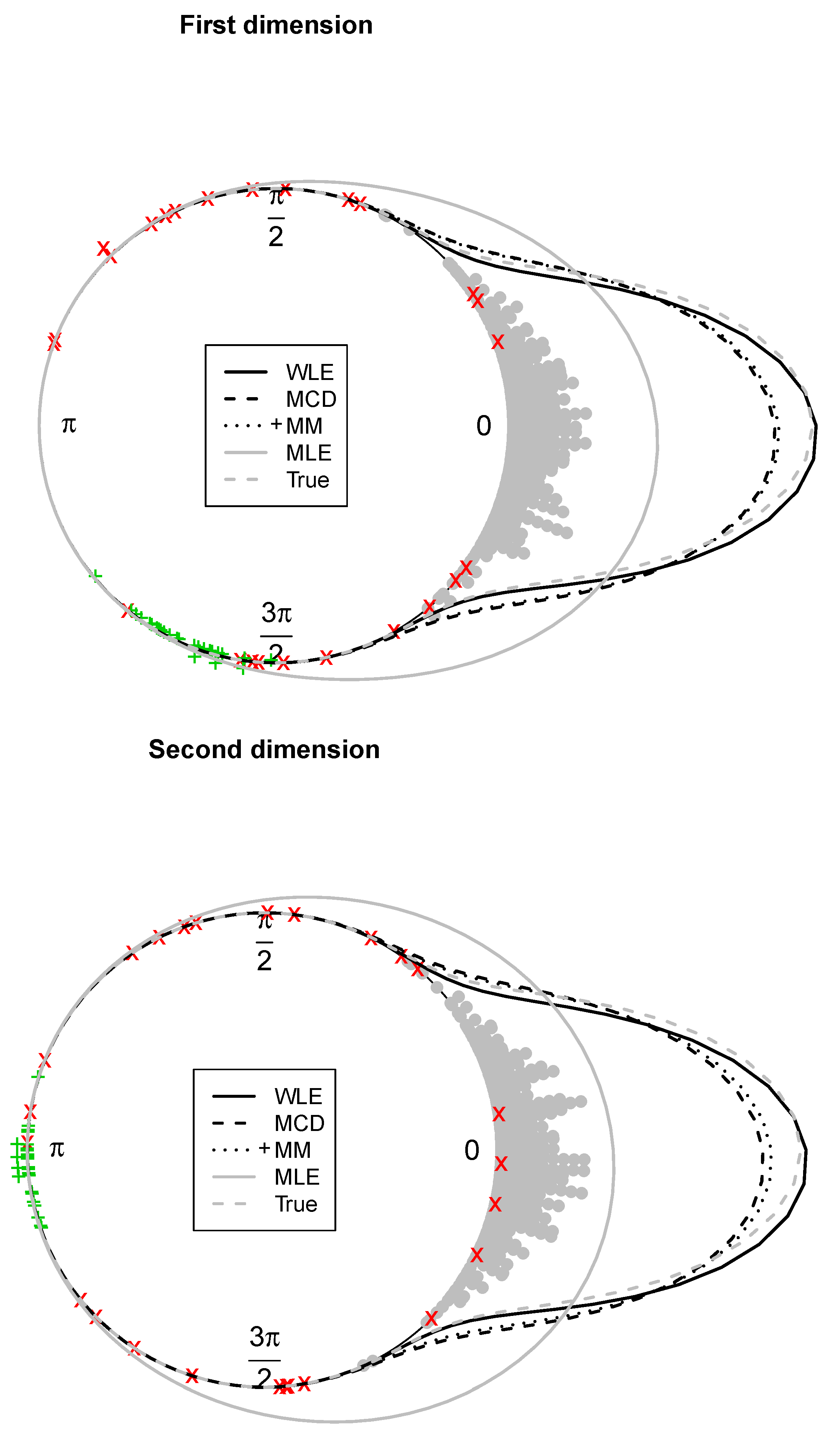

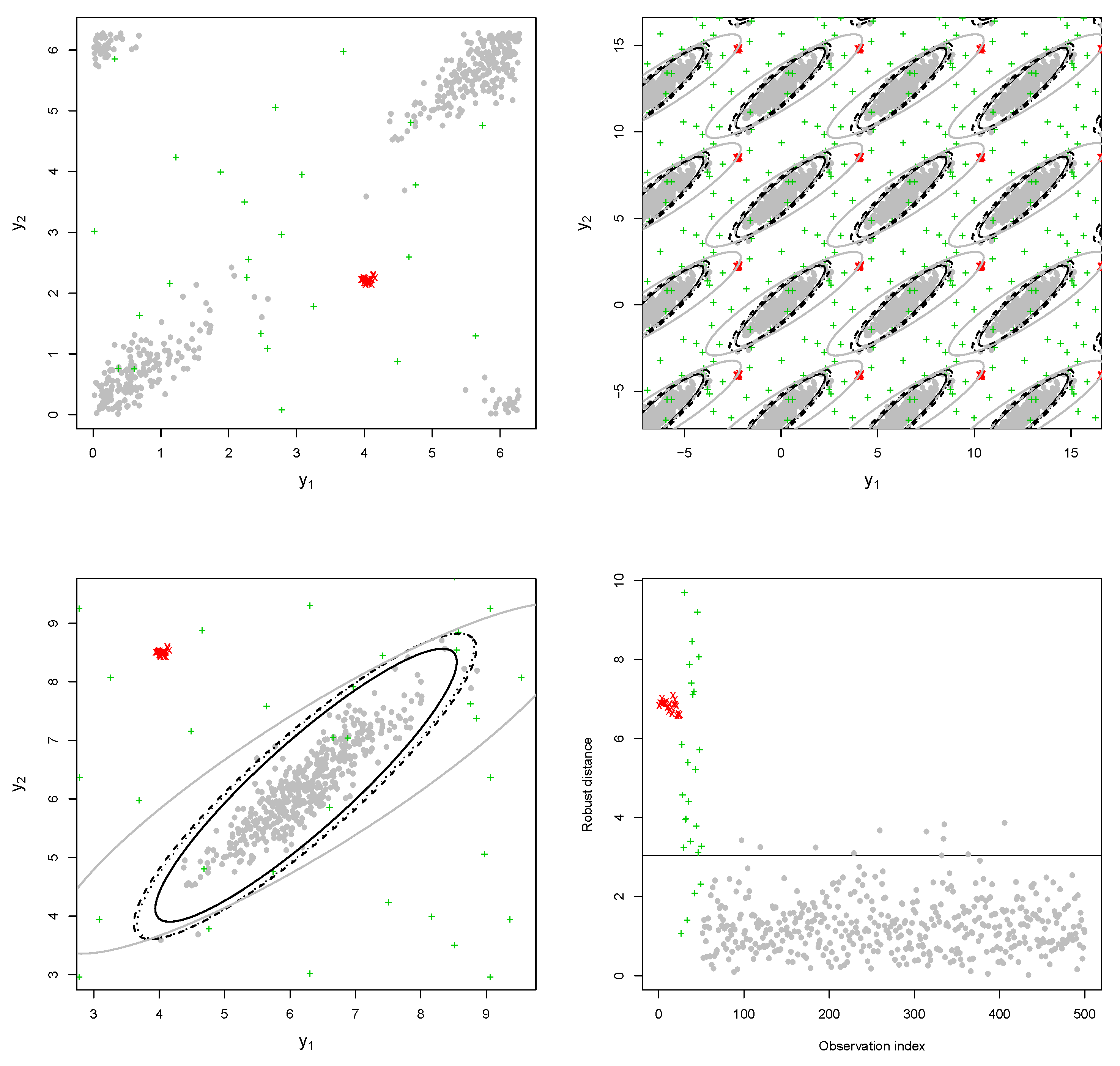

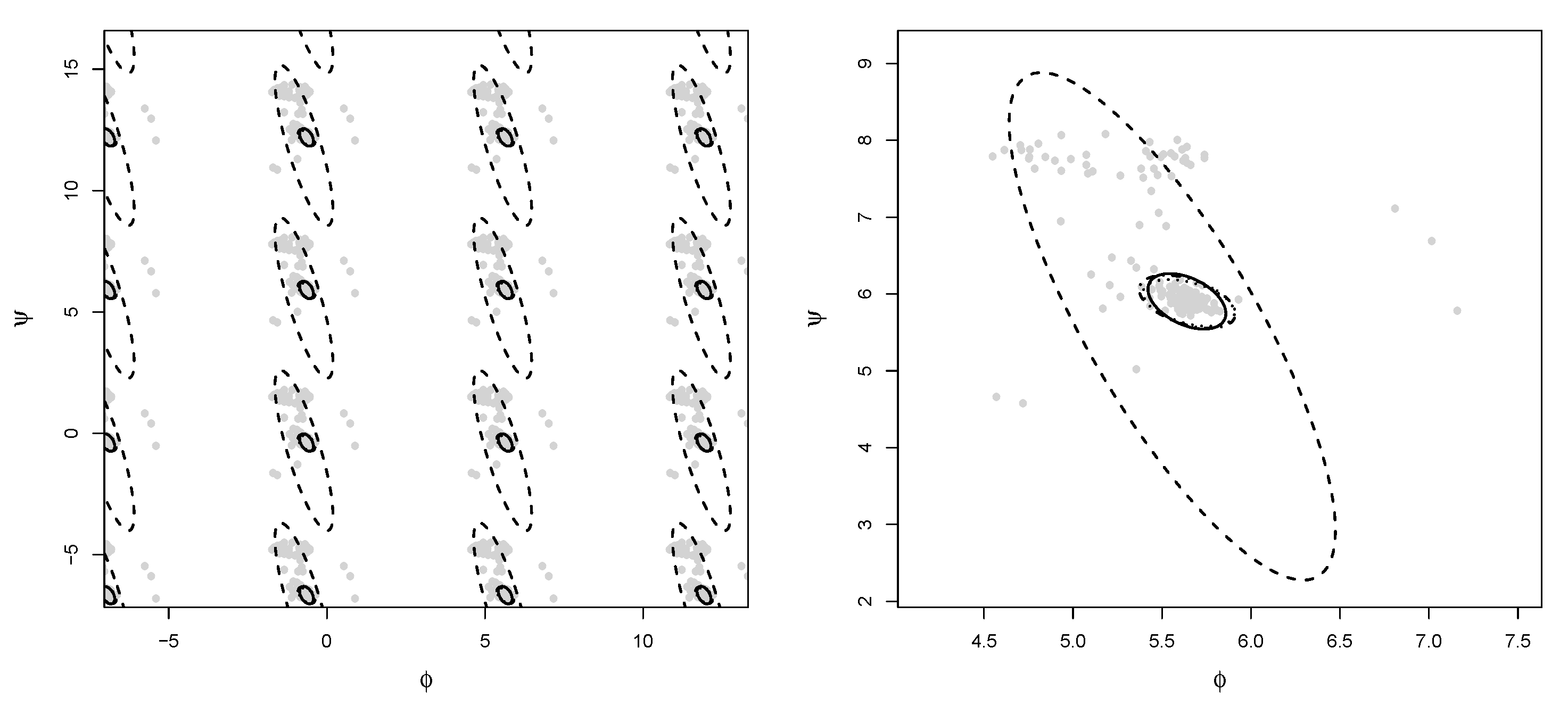

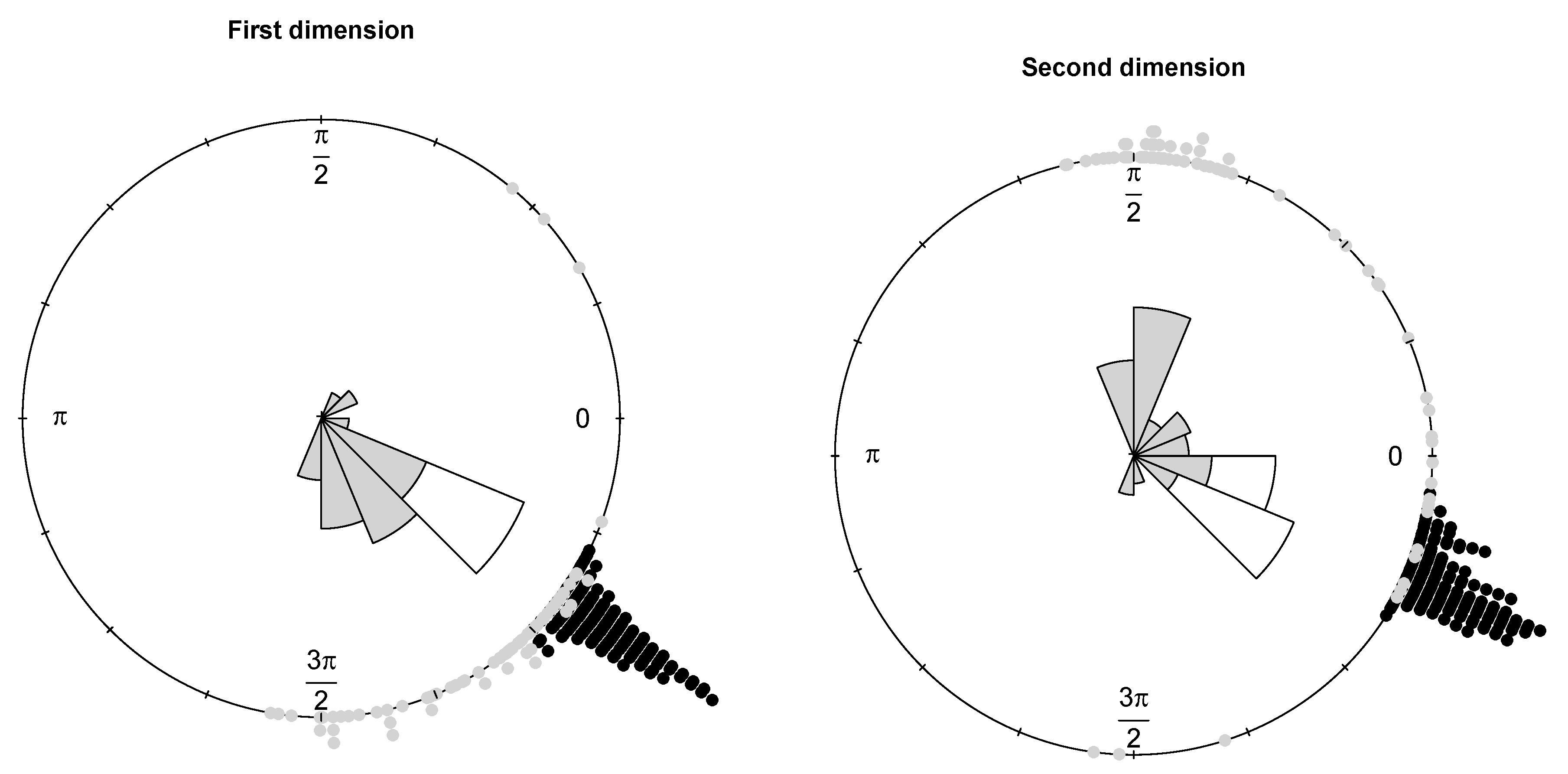

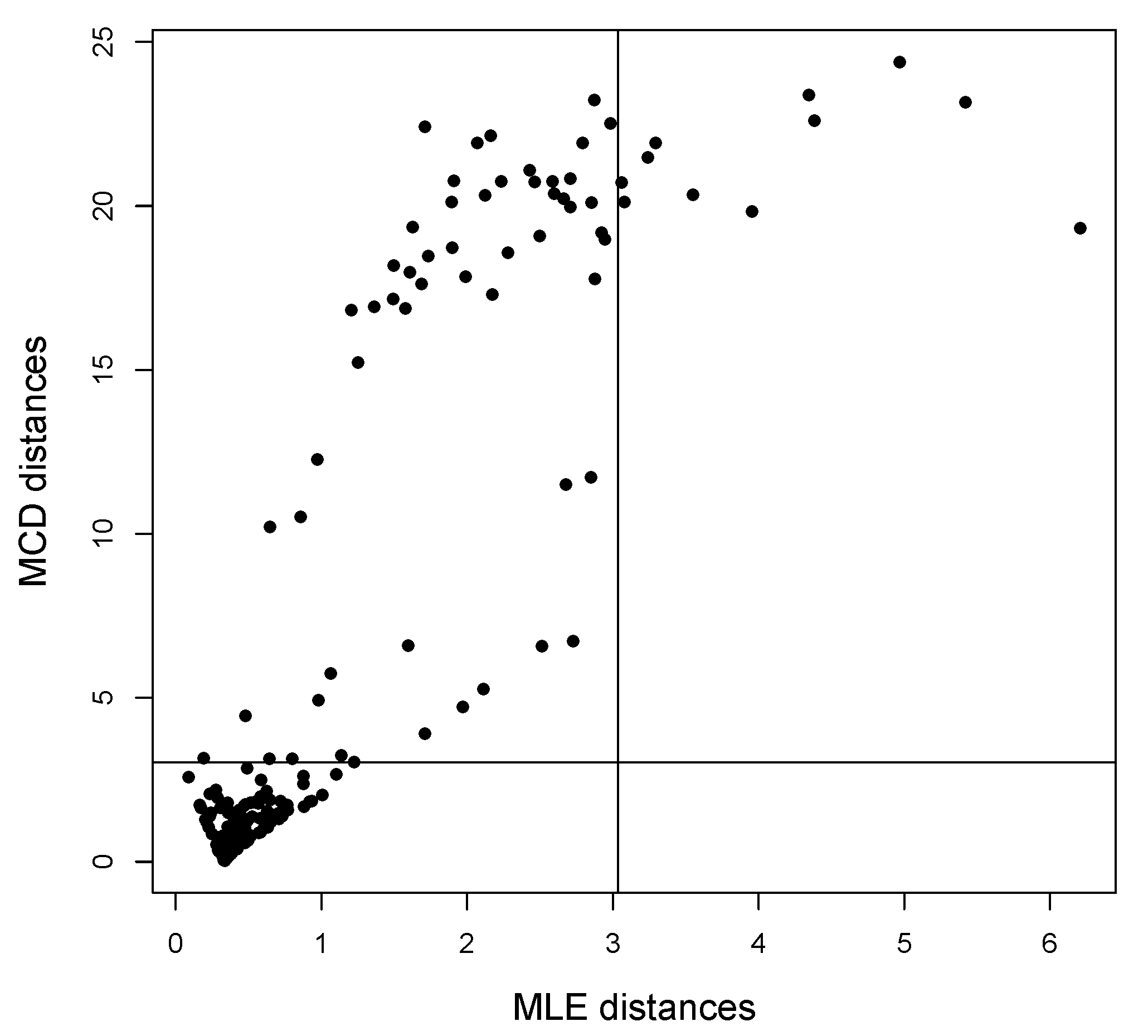

Example 1. The bulk of the data (gray dots) has been drawn from a bivariate wrapped normal distribution with , , where R is a correlation matrix with off-diagonal elements equal to 0.7. Two types of contamination are considered. The first fraction of atypical data is composed by 25 scattered outliers along the circumference of the unit circle (denoted with a red cross). They have been selected so that their distances from the true model on the flat torus are larger than the 0.99-level quantile of the distribution. The remaining part is given by clustered outliers (green plus). The data are plotted in the left-top panel of Figure 1. Due to the intrinsic periodic nature of the data, one should think that the top margin joins the bottom margin, the left margin joins the right margin, and opposite corners join as well. Then, it is suggested to represent the circular data points after they have been unwrapped on a flat torus in the form for , respecting the cyclic topology of the data. Therefore, the same data structure is replicated according to the intrinsic periodicity of the data, as in the top-right panel of Figure 1. The bivariate fitted models are given in the form of tolerance ellipses based on the 0.99-level quantile of a distribution. A single data structure is given in the bottom-left panel with ellipses over-imposed. The bottom-right panel is a distance plot stemming from the WLE. The solid line correspond to the cut-off . Points above the threshold line are detected as outliers. All the considered outliers are effectively spotted after the robust CEM based on the WLE. In particular, the group of points corresponding to the clustered outliers is well above the cut-off. Similar results stem from the use of MM-estimation and impartial trimming. 
Figure 2 gives the fitted marginals on the circumference: The robust fits are able to recover the true marginal density on the circle, whereas the maximum likelihood fitted density has been flattened and attracted by outliers. Example 2. The data have been generated according to the procedure described in [8]: For a fixed condition number , we obtained a random correlation matrix R. Then, R has been converted into the covariance matrix , with , , and denotes a p-dimensional vector of ones. Then, 25 outliers (red cross) have been added in the direction of the smallest eigenvalue of the covariance matrix [9], whereas the remaining 25 outliers have been sampled from a uniform distribution on (green plus). The data and the fitted models are given in

Figure 3. The distance plot stemming from WLE is given in the bottom-right panel of

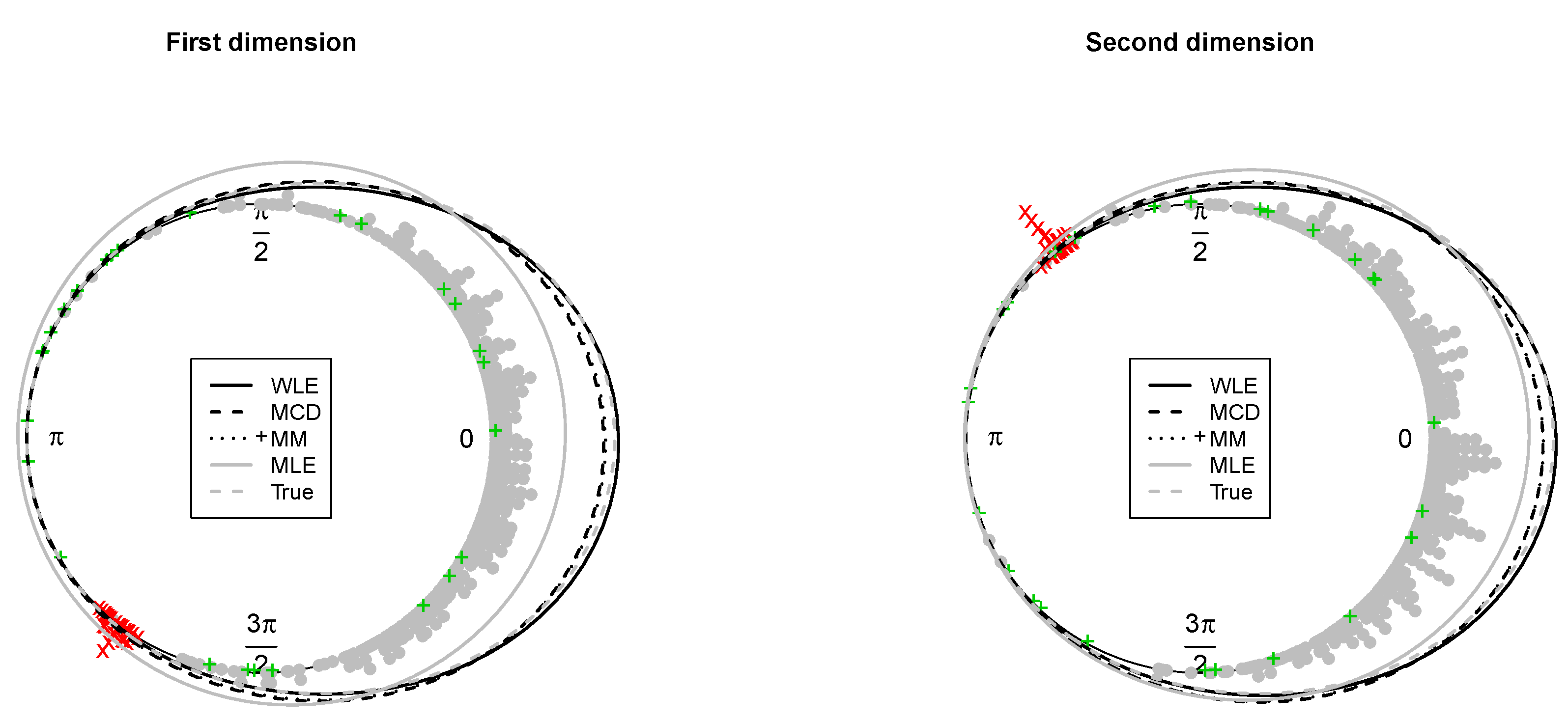

Figure 3. The fitted and true marginals are given in

Figure 4. As before, maximum likelihood does not lead to a reliable fitted model and does not allow to detect outliers because of an inflated fitted variance-covariance matrix. In contrast, the occurrence of outliers does not affect robust estimation. The robust methods lead to detect the point mass contamination since all corresponding points are well above the cut-off line, as are most of the noisy points added in the direction of the smallest eigenvalue of the true variance-covariance matrix.

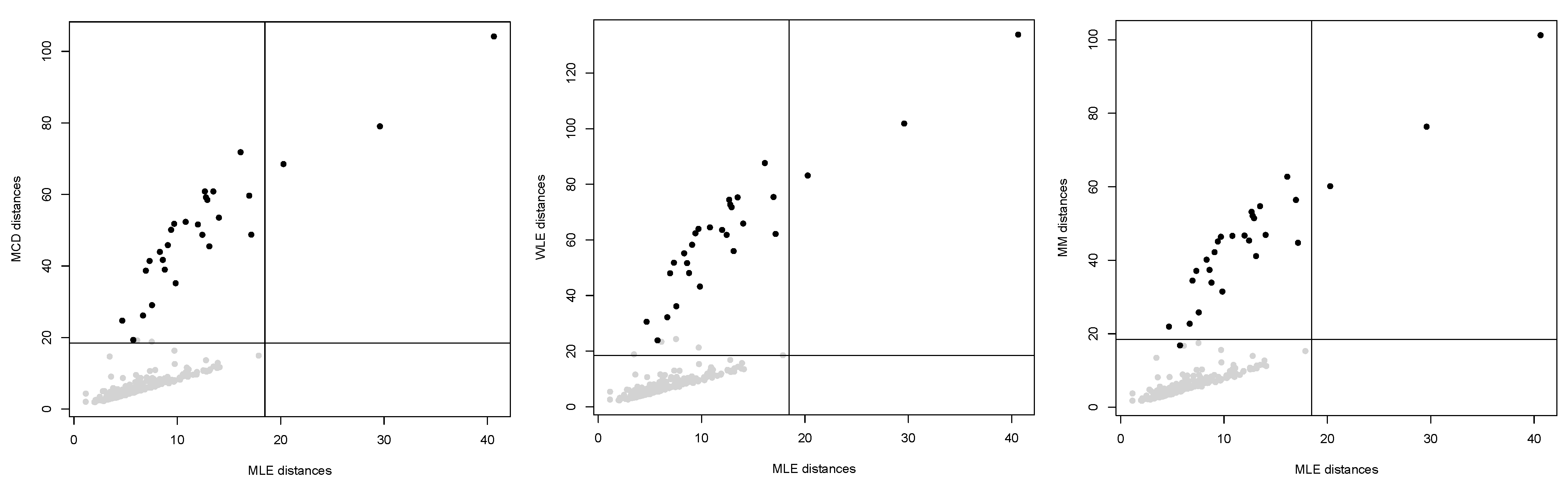

6. Numerical Studies

In this section, we investigate the finite sample behavior of the proposed robust CEM algorithms through a simulation study with replicates. In particular, we consider MM-estimation with a 50% breakdown point and 95% shape efficiency, the WLE with a symmetric chi-square RAF, and impartial trimming with 25% trimming and reweighting based on the 0.975-level quantile of the $\chi^2_p$ distribution, denoted as MCD. These methods have been compared to the MLE, evaluated according to the classical CEM algorithm. In all cases we set . The data generation scheme follows the lines already outlined in the second synthetic example: data are sampled from a $WN_p(\mu, \Sigma)$ distribution, with and , where R is a random correlation matrix and . Contamination has been added by replacing a proportion of randomly chosen data points. Two outlier configurations have been taken into account:

Scattered: outlying observations are generated from a uniform distribution on $[0, 2\pi)^p$.

Point-mass: observations are shifted by an amount in the direction of the eigenvector of $\Sigma$ associated with its smallest eigenvalue.

We considered dimensions

, sample sizes

$n = 100, 500$,

, contamination level

, and contamination size

. Initial values are obtained by subsampling based on 20 starting values. The best solution at convergence is selected according to the criteria outlined in Section 3.6.

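The accuracy of the fitted models is evaluated according to:

- (i) the average angle separation, for the mean vector:

$$ \mathrm{AS}(\hat\mu) = \frac{1}{p} \sum_{r=1}^{p} \big[1 - \cos(\hat\mu_r - \mu_r)\big], $$

which ranges in $[0, 2]$;

- (ii) the divergence, for the variance-covariance matrix:

$$ \Delta(\hat\Sigma, \Sigma) = \mathrm{tr}(\hat\Sigma \Sigma^{-1}) - \log \det(\hat\Sigma \Sigma^{-1}) - p. $$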
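Both accuracy measures are straightforward to compute. The following sketch assumes NumPy; the function names are hypothetical, and `slogdet` is used for the log-determinant because $\hat\Sigma\Sigma^{-1}$ has a positive determinant whenever both matrices are positive definite.

```python
import numpy as np


def angle_separation(mu_hat, mu):
    """Average angle separation (1/p) * sum_r [1 - cos(mu_hat_r - mu_r)], in [0, 2]."""
    diff = np.asarray(mu_hat, dtype=float) - np.asarray(mu, dtype=float)
    return float(np.mean(1.0 - np.cos(diff)))


def divergence(sigma_hat, sigma):
    """tr(S_hat S^{-1}) - log det(S_hat S^{-1}) - p, zero iff sigma_hat equals sigma."""
    m = np.asarray(sigma_hat, dtype=float) @ np.linalg.inv(np.asarray(sigma, dtype=float))
    _, logdet = np.linalg.slogdet(m)
    return float(np.trace(m) - logdet - m.shape[0])
```

For instance, doubling an identity covariance matrix in two dimensions gives a divergence of $2 - 2\log 2 \approx 0.61$, while any antipodal error in a mean component contributes the maximal value 2 to the corresponding term of the angle separation.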

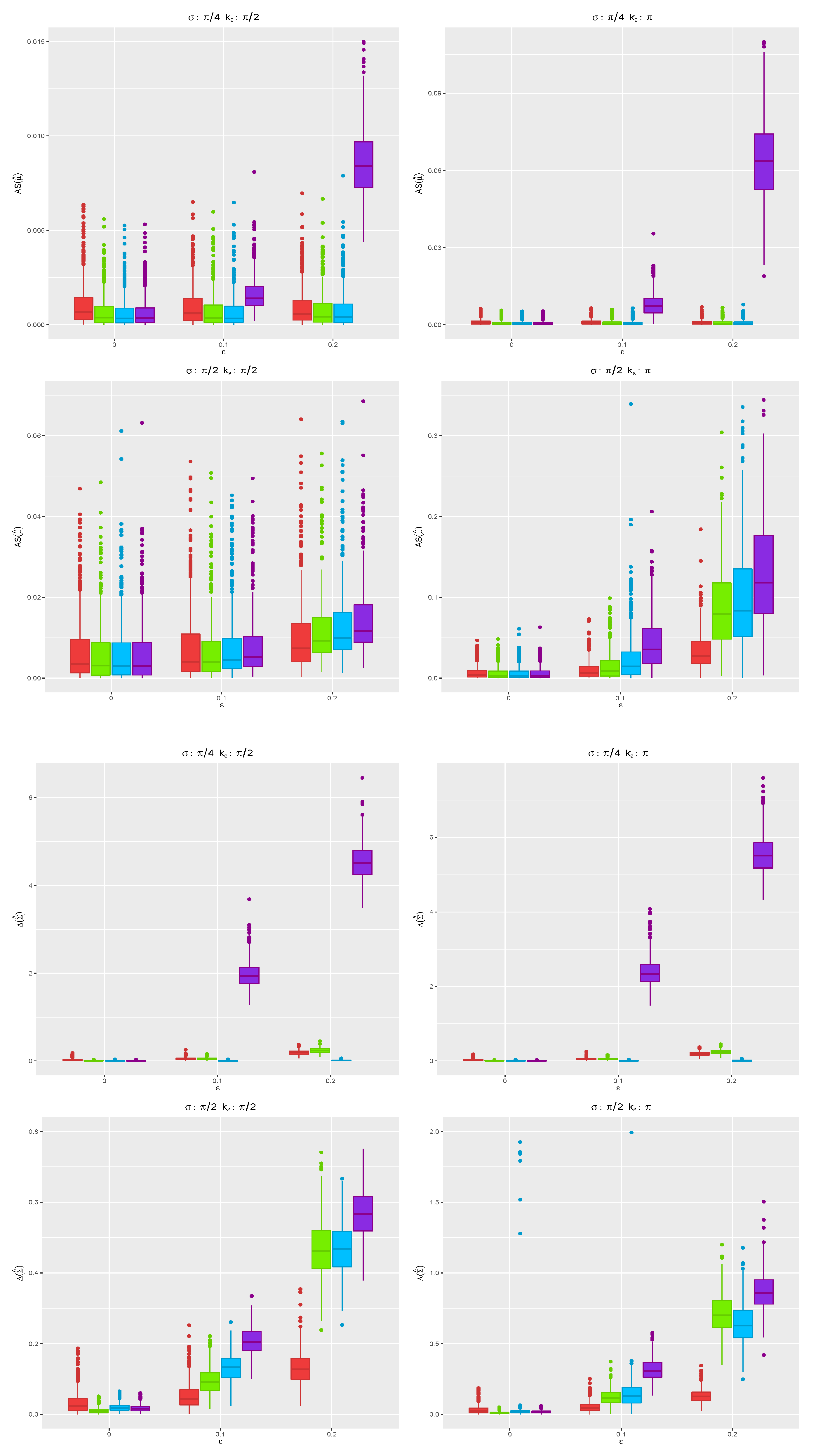

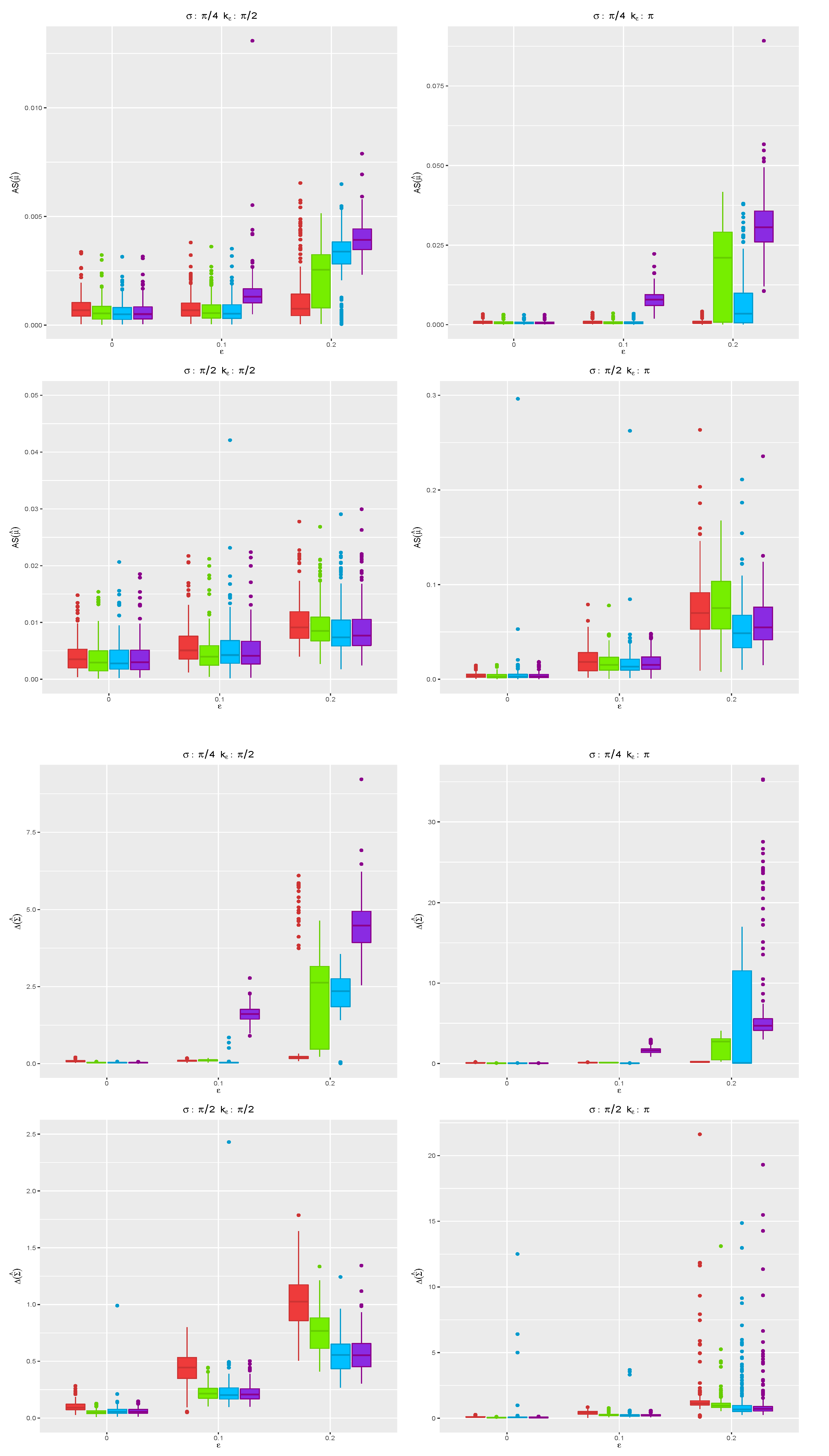

Here, we display the results for the scenario with

.

Figure 5 shows the boxplots for the angle separation and divergence computed using the MCD (red), MM (green), WLE (blue), and MLE (violet) for all the replicates when

, while the case

is given in Figure 6. Under the true model, the robust CEM gives results close to those of the MLE. In contrast, in the presence of contamination, the proposed robust CEM algorithm achieves satisfactory accuracy, while the MLE deteriorates, especially as the contamination level increases. When

, the MCD shows the best performance among the robust proposals for the more challenging cases with

, when outliers are not well separated from the bulk of genuine data points. The same is not true for

: the presence of outliers over all the $p$ dimensions requires a different tuning of the robust estimators.

We also investigated the reliability of the proposed outlier detection rule (9) in terms of swamping and masking. The entries in Table 1 give the median percentage of type-I errors, that is, of genuine observations wrongly declared outliers, whereas Table 2 gives the median percentage of type-II errors, that is, of undetected outliers. The testing rule has been applied for a significance level

. We only report the results for

. The swamping produced by the robust methods is always reasonable. Their masking error is satisfactory, except for the scenario with

and

. Indeed, in this case the outliers are not well separated from the group of genuine points. In contrast, the type-II error of the MLE is always unacceptably high. In summary, MM, MCD, and WLE perform equally satisfactorily for the task of outlier detection, as long as the outliers exhibit their own pattern, different from that of the rest of the data.