Advanced Particle Classification in Space Calorimetry Using Transformer-Based and Gradient Boosting Models

Abstract

1. Introduction

- Section 2 provides a brief overview of the HERD experiment,

- Section 3 details the AI-driven analysis pipeline for the calorimeter including data processing and algorithms implementation considered in this paper,

- Section 4 presents the preliminary results obtained from the two machine learning techniques.

2. HERD Experiment as Test Bench

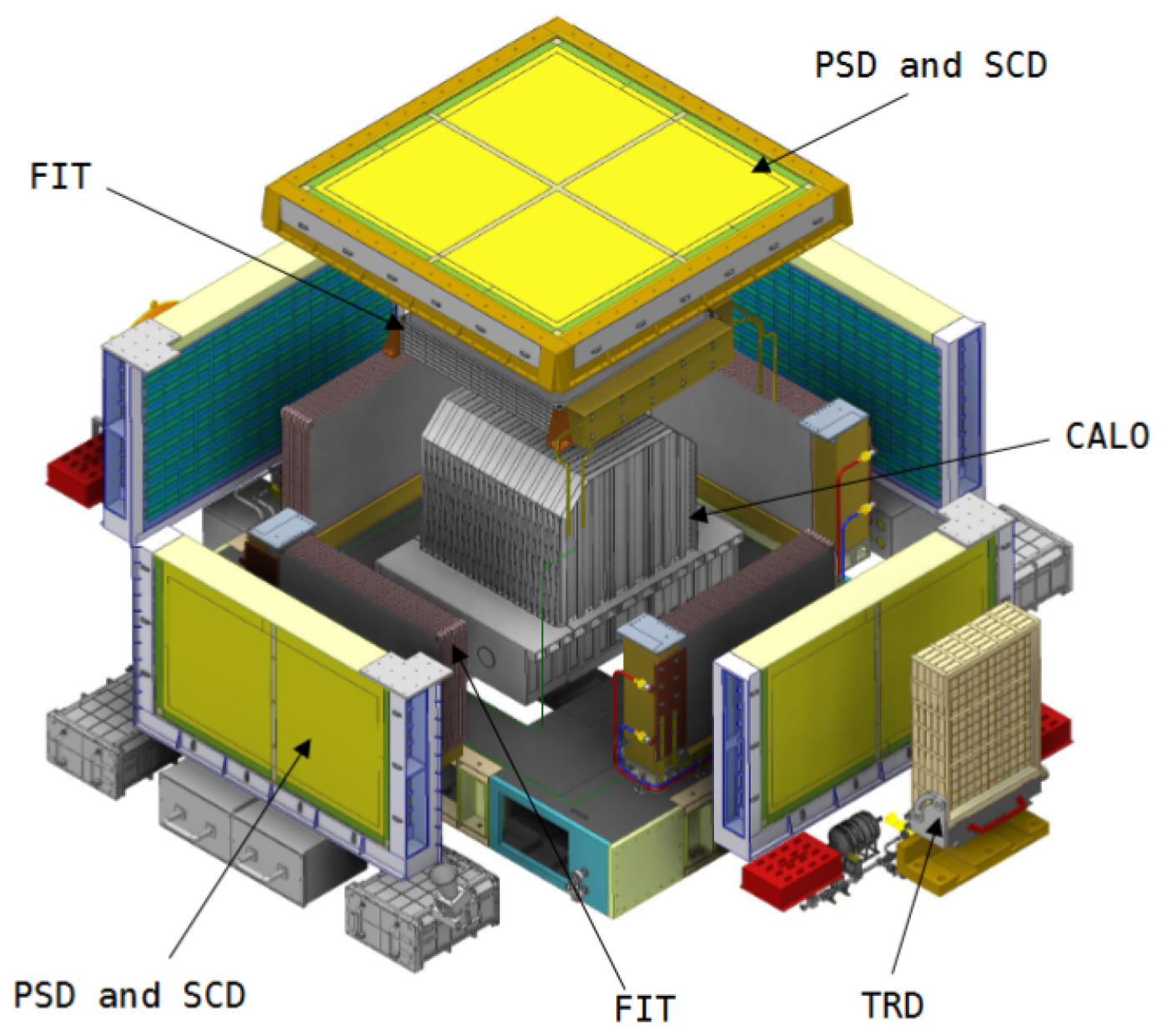

- CALO: the central calorimeter is the core of the experiment, used to measure particle energies and to distinguish electrons from protons and heavier nuclei. It consists of approximately 7500 cubic LYSO scintillating crystals, each 3 cm on a side [7];

- FIT: the FIber Tracker surrounds the CALO on the top and four lateral sides. It is designed for particle tracking and charge measurement and consists of five tracking sectors, each with seven X-Y double layers of closely packed scintillating fibers, allowing for seven independent position measurements of traversing charged particles [8];

- PSD: the Plastic Scintillator Detector is made of plastic scintillator bars; it measures particle charges and also serves as a trigger for low-energy gamma rays [9];

- SCD: the Silicon Charge Detector is a micro-strip silicon detector for precise particle tracking and charge measurement [10];

- TRD: the Transition Radiation Detector is positioned on one side of the detector and consists of THick Gaseous Electron Multipliers (THGEM), primarily used for calibration of TeV nuclei [11].

The HERD Simulation Dataset

3. Pipeline for AI Electron and Proton Discrimination Algorithm

- Lateral moments up to the fourth order: quantify the spread of energy deposition perpendicular to the shower axis, providing insight into the transverse size of the shower;

- Longitudinal moments up to the fourth order: characterize the distribution of energy along the depth of the calorimeter, reflecting the shower development along its axis;

- Longitudinal energy profile: encodes the energy deposition pattern across successive calorimeter layers.
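
As an illustration of the last feature in the list above, a longitudinal energy profile can be built by summing the deposited energy layer by layer. The sketch below is a minimal example, not the pipeline used in this work: the function name `longitudinal_profile`, the integer layer index per hit, and the optional normalization to the total deposited energy are all assumptions introduced here.

```python
from typing import List, Sequence


def longitudinal_profile(
    layer_ids: Sequence[int],
    energies: Sequence[float],
    n_layers: int,
    normalize: bool = True,
) -> List[float]:
    """Sum the deposited energy in each calorimeter layer.

    layer_ids[i] is the (hypothetical) layer index of hit i and
    energies[i] its deposited energy. Hits outside [0, n_layers) are ignored.
    """
    profile = [0.0] * n_layers
    for layer, energy in zip(layer_ids, energies):
        if 0 <= layer < n_layers:
            profile[layer] += energy

    total = sum(profile)
    if normalize and total > 0:
        # Assumption: express each layer as a fraction of the total energy.
        profile = [value / total for value in profile]
    return profile


# Toy event with four hits spread over four layers.
example_profile = longitudinal_profile([0, 0, 1, 3], [1.0, 1.0, 2.0, 4.0], n_layers=4)
```

Normalizing makes the profile describe the shape of the shower independently of its total energy; passing `normalize=False` keeps the absolute energy per layer.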

3.1. The Transformer Classification Algorithm Definition

- Embedding Layer: Input features are first projected into a higher-dimensional space through a linear embedding layer. This step expands the representational capacity of the model, enabling the subsequent layers to better capture non-trivial correlations in the data.

- Positional Encoding: Since the Transformer framework does not inherently encode sequential or spatial relationships, positional encodings are added to the embedded vectors to convey information about the relative position of each element in the input sequence. In this work, we implemented a custom function that generates fixed sine–cosine positional encodings and adds them to the input embeddings. These encodings use sinusoidal functions at different frequencies, enabling the model to infer distances and relative ordering between elements from their phase relationships. In practice, this allows the Transformer to distinguish, for instance, between energy deposits occurring in different pixels of the calorimeter, even though all inputs are processed in parallel.

- Transformer Encoder: The central component is a stack of five Transformer encoder layers. Each layer contains two sub-blocks: a multi-head self-attention mechanism, which learns long-range dependencies between sequence elements, and a position-wise feed-forward network, which enhances the model’s ability to capture complex high-dimensional structures. Residual connections and layer normalization are automatically handled by the PyTorch (version 2.6.0) nn.TransformerEncoderLayer implementation [12].

- Classification Layer: The encoder outputs are aggregated by computing their mean along the sequence dimension, yielding a fixed-size feature vector. This vector is passed to a fully connected layer that produces the final binary classification output.
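
The four components listed above can be sketched in PyTorch as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the class name `ShowerTransformer`, the helper `sinusoidal_encoding`, the input layout `(batch, sequence, features)`, and the single-logit output are introduced here for the example. The default embedding dimension (256), number of heads (8), and number of layers (5) follow the optimal values reported in the hyperparameter table; all other settings are PyTorch defaults.

```python
import math

import torch
from torch import nn


def sinusoidal_encoding(seq_len: int, d_model: int) -> torch.Tensor:
    """Fixed sine-cosine positional encodings, shape (seq_len, d_model).

    Assumes d_model is even.
    """
    position = torch.arange(seq_len, dtype=torch.float32).unsqueeze(1)
    div_term = torch.exp(
        torch.arange(0, d_model, 2, dtype=torch.float32)
        * (-math.log(10000.0) / d_model)
    )
    pe = torch.zeros(seq_len, d_model)
    pe[:, 0::2] = torch.sin(position * div_term)  # even channels
    pe[:, 1::2] = torch.cos(position * div_term)  # odd channels
    return pe


class ShowerTransformer(nn.Module):
    """Embedding -> positional encoding -> encoder stack -> mean pool -> linear."""

    def __init__(self, n_features: int, d_model: int = 256,
                 n_heads: int = 8, n_layers: int = 5) -> None:
        super().__init__()
        self.embed = nn.Linear(n_features, d_model)
        layer = nn.TransformerEncoderLayer(
            d_model=d_model, nhead=n_heads, batch_first=True
        )
        self.encoder = nn.TransformerEncoder(layer, num_layers=n_layers)
        self.head = nn.Linear(d_model, 1)  # one logit for binary classification

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # x: (batch, seq_len, n_features)
        h = self.embed(x)
        h = h + sinusoidal_encoding(h.size(1), h.size(2)).to(h.device)
        h = self.encoder(h)
        pooled = h.mean(dim=1)  # average over the sequence dimension
        return self.head(pooled).squeeze(-1)
```

A forward pass on a batch of shape `(batch, seq_len, n_features)` returns one logit per event; applying a sigmoid and a threshold would turn it into a class label, which is one possible choice for the binary output.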

3.2. The XGBoost Classification Algorithm

- For the first order, the longitudinal moment corresponds to the average longitudinal displacement of the deposited energy along the shower axis.

- For the second order, it is related to the longitudinal spread (or variance) of the energy deposition.

- Higher orders (beyond the second) capture more detailed features of the shower profile.

- For the first order, the lateral moment represents the mean lateral displacement of the deposited energy with respect to the shower axis.

- For the second order, it corresponds to the lateral spread (variance) of the shower profile.

- Higher orders (beyond the second) capture finer details of the transverse distribution.
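
To make the moment definitions above concrete, the sketch below computes energy-weighted moments of the hit coordinates. It assumes raw (non-central) moments of the form sum(E_i * x_i^n) / sum(E_i), where x_i is either the coordinate along the shower axis (longitudinal) or the perpendicular distance from it (lateral). The exact definition and normalization used in this work may differ, and the function name `shower_moments` and its arguments are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def shower_moments(
    positions: Sequence[Vec3],
    energies: Sequence[float],
    origin: Vec3,
    axis: Vec3,
    max_order: int = 4,
) -> Tuple[List[float], List[float]]:
    """Return (longitudinal, lateral) moments for orders 1..max_order."""
    norm = math.sqrt(sum(a * a for a in axis))
    unit = tuple(a / norm for a in axis)  # unit vector along the shower axis
    e_tot = sum(energies)

    longitudinal = [0.0] * max_order
    lateral = [0.0] * max_order
    for pos, energy in zip(positions, energies):
        d = tuple(p - o for p, o in zip(pos, origin))
        t = sum(di * ui for di, ui in zip(d, unit))  # coordinate along the axis
        perp_sq = sum(di * di for di in d) - t * t
        r = math.sqrt(max(perp_sq, 0.0))  # distance from the axis
        for n in range(1, max_order + 1):
            longitudinal[n - 1] += energy * t ** n
            lateral[n - 1] += energy * r ** n

    return ([m / e_tot for m in longitudinal], [m / e_tot for m in lateral])


# Toy event: two hits of equal energy, shower axis along z.
long_m, lat_m = shower_moments(
    positions=[(0.0, 0.0, 1.0), (1.0, 0.0, 3.0)],
    energies=[1.0, 1.0],
    origin=(0.0, 0.0, 0.0),
    axis=(0.0, 0.0, 2.0),
)
```

With raw moments, the variance follows from the first two orders as `m2 - m1**2`, which is one reading of why the second order is described as "related to" the spread rather than equal to it.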

4. Results

4.1. Transformer Results

4.2. XGBoost Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762.

2. Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, ACM, KDD ’16, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

3. Wang, A.; Gandrakota, A.; Ngadiuba, J.; Sahu, V.; Bhatnagar, P.; Khoda, E.E.; Duarte, J. Interpreting Transformers for Jet Tagging. arXiv 2024, arXiv:2412.03673.

4. Zhang, S.N.; Adriani, O.; Albergo, S.; Ambrosi, G.; An, Q.; Bao, T.W.; Battiston, R.; Bi, X.J.; Cao, Z.; Chai, J.Y.; et al. The high energy cosmic-radiation detection (HERD) facility onboard China’s Space Station. In Proceedings of the Space Telescopes and Instrumentation 2014: Ultraviolet to Gamma Ray, Montréal, QC, Canada, 22–26 June 2014; Takahashi, T., den Herder, J.W.A., Bautz, M., Eds.; SPIE: Bellingham, WA, USA, 2014; Volume 9144, p. 91440X.

5. Mori, N. GGS: A Generic GEANT4 Simulation package for small- and medium-sized particle detection experiments. Nucl. Instruments Methods Phys. Res. Sect. A Accel. Spectrometers Detect. Assoc. Equip. 2021, 1002, 165298.

6. Gu, Y. China space station: New opportunity for space science. Natl. Sci. Rev. 2021, 9, nwab219.

7. Betti, P. The HERD experiment: New frontiers in detection of high energy cosmic rays. PoS 2024, TAUP2023, 142.

8. Perrina, C.; Frieden, J.M.; Azzarello, P.; Sukhonos, D.; Wu, X.; Gascón, D.; Gómez, S.; Mauricioc, J. The scintillating-fiber tracker (FIT) of the HERD space mission from design to performance. PoS 2024, ICRC2023, 147.

9. Serini, D.; Altomare, C.; Alemanno, F.; Ximenes, N.A.; Barbato, F.; Bernardini, P.; Cagnoli, I.; Casilli, E.; Cattaneo, P.; Comerma, A.; et al. Characterization of the nuclei identification performances of the plastic scintillator detector prototype for the future HERD satellite experiment. In Proceedings of the 2023 9th International Workshop on Advances in Sensors and Interfaces (IWASI), Bari, Italy, 8–9 June 2023; pp. 184–189.

10. Silvestre, G. The Silicon Charge Detector of the High Energy Cosmic Radiation Detection experiment. J. Inst. 2024, 19, C03042.

11. Liu, X.; Adriani, O.; Bai, X.h.; Bai, Y.l.; Bao, T.W.; Berti, E.; Betti, P.; Bottai, S.; Cao, W.W.; Casaus, J. Double read-out system for the calorimeter of the HERD experiment. In Proceedings of the 38th International Cosmic Ray Conference (ICRC2023), Nagoya, Japan, 26 July–3 August 2023; p. 097.

12. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. arXiv 2019, arXiv:1912.01703.

13. Akiba, T.; Sano, S.; Yanase, T.; Ohta, T.; Koyama, M. Optuna: A Next-generation Hyperparameter Optimization Framework. arXiv 2019, arXiv:1907.10902.

14. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2017, arXiv:1412.6980.

| Hyperparameter | Values |
|---|---|
| Embedding input dimension | 128, 256, 512 |
| Number of attention heads | 4, 8 |
| num_layers | 1, 2, 3, 4, 5, 6, 7, 8 |
| learning rate (lr) | between |
| patience | 3, 4, 5, 6, 7, 8, 9, 10 |
| max_epochs | 20, 21, …, 50 |
| batch_size | 16, 32, 64 |
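
The search space in the table above can be written down as a small sampling function. The sketch below is a plain random-search stand-in using only the Python standard library; it is not the Optuna API and not the authors' tuning code. The learning-rate bounds are not recoverable from the table, so they are left as caller-supplied arguments, and the values used in the usage line (`1e-5`, `1e-3`) are placeholders. Sampling the learning rate log-uniformly is likewise an assumption.

```python
import math
import random
from typing import Any, Dict


def sample_config(rng: random.Random, lr_low: float, lr_high: float) -> Dict[str, Any]:
    """Draw one hyperparameter configuration from the tabulated search space."""
    return {
        "d_model": rng.choice([128, 256, 512]),   # embedding input dimension
        "n_heads": rng.choice([4, 8]),            # number of attention heads
        "num_layers": rng.randint(1, 8),          # inclusive on both ends
        # Assumption: log-uniform sampling between caller-supplied bounds.
        "lr": math.exp(rng.uniform(math.log(lr_low), math.log(lr_high))),
        "patience": rng.randint(3, 10),
        "max_epochs": rng.randint(20, 50),
        "batch_size": rng.choice([16, 32, 64]),
    }


# Placeholder learning-rate bounds, for illustration only.
rng = random.Random(0)
trials = [sample_config(rng, lr_low=1e-5, lr_high=1e-3) for _ in range(5)]
```

Each sampled dictionary would then be used to train and validate one model; a dedicated optimizer replaces the independent random draws with a guided search over the same space.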

| Hyperparameter | Optimal Value |
|---|---|
| embedding dimension | 256 |
| number of heads | 8 |
| number of layers (num_layers) | 5 |
| learning rate (lr) | |
| patience | 6 |
| max epochs | 74 |

| Metric | Value (%) |
|---|---|
| Accuracy | 94.55 |
| Precision | 92.58 |
| Recall | 96.81 |
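
For reference, the three metrics in the table above follow from the confusion-matrix counts of a binary classifier. The sketch below uses the standard definitions; the function name `binary_metrics` and the choice of label `1` as the positive class are assumptions made for the example, since which particle species is treated as positive is not stated here.

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int],
                   positive: int = 1) -> Dict[str, float]:
    """Accuracy, precision, and recall for a binary classification task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        # Fraction of predicted positives that are truly positive.
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        # Fraction of true positives that are recovered.
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


# Toy labels: 3 true positives, 1 false negative, 1 false positive, 3 true negatives.
metrics = binary_metrics(
    y_true=[1, 1, 1, 0, 0, 0, 0, 1],
    y_pred=[1, 1, 0, 0, 0, 1, 0, 1],
)
```

A recall higher than the precision, as in the table, means the classifier misses few positives at the cost of accepting somewhat more false positives.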

| Metric | Low Energy (%) | High Energy (%) |
|---|---|---|
| Accuracy | 99.19 | 99.50 |
| Precision | 99.16 | 99.53 |
| Recall | 99.21 | 99.47 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bossa, M.; Cuna, F.; Gargano, F. Advanced Particle Classification in Space Calorimetry Using Transformer-Based and Gradient Boosting Models. Particles 2025, 8, 87. https://doi.org/10.3390/particles8040087
