1. Introduction

Rolling bearings are vital components of rotating equipment, and their failure can bring down the entire mechanical system. In actual production, such failures pose a significant safety risk and can lead to unpredictable consequences [1,2]. Technologies for predicting the remaining useful life (RUL) of bearings therefore provide strong assurance for the reliable operation of equipment [3]: they help engineers carry out timely maintenance and replacement, reduce economic losses, and improve the economic benefits of enterprises.

In recent years, data-driven methods based on supervised learning have become very popular for equipment failure prediction. They mainly involve two steps: constructing health indicators (HIs) and predicting the RUL. The constructed HIs and the corresponding target labels are used to train the prediction model, which is then verified on the test set. The construction of appropriate HIs therefore plays a crucial part in RUL prediction. Depending on how the HIs are constructed, existing methods fall into two main categories: direct HIs, also called physical HIs (PHIs), and indirect HIs, also called virtual HIs (VHIs). PHIs are typically obtained by applying analysis or signal processing techniques to the original signal. For example, the spectral entropy based on multi-scale morphological decomposition used by Wang Bing et al. [4] and the binary multi-scale entropy adopted by Li Hongru et al. [5] are HIs obtained from the original signals by statistical methods, and Gebraeel et al. [6] extracted average amplitudes and harmonics to serve as PHIs. VHIs are generally constructed by fusing features or reducing their dimensionality, and therefore have no direct physical meaning. Wang et al. [7] fused 12 time-domain features into a new health index using the Mahalanobis distance. Xia et al. [8] used spectral regression to reduce the dimensionality of time-domain, frequency-domain, and time–frequency-domain fault features extracted from rolling bearing vibration signals and thereby derived new features.

However, only a limited number of the time-domain features (PHIs) computed from the original bearing signals are relevant to the degradation trend. In theory, a rolling bearing degrades relatively smoothly in the initial stage and sharply near the end of its life, and its time-domain features fluctuate strongly, so predicting bearing RUL directly from them is not very accurate. Data augmentation addresses this problem by generating additional data with commonly used methods such as noise injection and dataset expansion or shrinking [9], so that there are enough data for training and verifying the prediction model.
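As an illustration of how a VHI such as the Mahalanobis-distance index of Wang et al. [7] can be built, the sketch below measures how far each time step's feature vector lies from the healthy-stage feature distribution. It assumes that the first `n_healthy` samples come from the healthy stage; the function name `mahalanobis_hi` and the synthetic data are hypothetical and are not taken from [7].

```python
import numpy as np


def mahalanobis_hi(features: np.ndarray, n_healthy: int) -> np.ndarray:
    """Fuse a (T, F) matrix of time-domain features into a 1-D health indicator.

    The first `n_healthy` rows are assumed to be healthy and define the
    reference mean and covariance.
    """
    baseline = features[:n_healthy]
    mean = baseline.mean(axis=0)
    # pinv guards against a singular covariance when features are correlated
    cov_inv = np.linalg.pinv(np.cov(baseline, rowvar=False))
    diff = features - mean
    # Row-wise quadratic form diff_t^T @ cov_inv @ diff_t
    d2 = np.einsum("ij,jk,ik->i", diff, cov_inv, diff)
    return np.sqrt(np.maximum(d2, 0.0))


# Hypothetical example: 12 noisy features with a drift that starts after t = 150
rng = np.random.default_rng(0)
t = np.arange(200)
drift = np.where(t > 150, (t - 150) / 10.0, 0.0)[:, None]
feats = rng.normal(size=(200, 12)) + drift
hi = mahalanobis_hi(feats, n_healthy=100)
```

Because the index is a distance from the healthy state, it grows as the bearing degrades, which is what makes such a fused VHI usable as a degradation trend even though it has no direct physical unit.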

The existing machine-learning-based generative models mainly include the variational autoencoder (VAE) [10] and the generative adversarial network (GAN) [11]. GANs in particular have attracted wide attention and, owing to their excellent performance and powerful data generation capacity, have been applied in stock prediction, image generation, and other fields. They currently also play a major role in generating high-quality, diverse time series. In the domain of prognostics and health management (PHM), Liu et al. [12] proposed an optimized GAN with stable gradient changes and used it effectively for machine fault diagnosis. Lu et al. [13] introduced a bearing fault prediction model that combines GAN and LSTM. Lei et al. [14] used time-domain features as the original data for a GAN and applied support vector regression (SVR) and a radial basis function neural network (RBFNN) to predict the RUL from the augmented data. Numerous studies have shown that data augmentation with GANs outperforms traditional methods and notably improves model performance. For instance, Frid-Adar et al. [15] showed that synthetic data generated by a GAN improved classification accuracy from 78.6% to 85.7% compared with affine augmentation. In practical applications, however, GANs suffer from gradient explosion, vanishing gradients, and similar problems that degrade the quality of the generated samples; compensating for poor sample quality requires generating a very large number of samples, which makes the expected goal difficult to achieve [16]. To build a more comprehensive generative model that combines the generative learning of VAE and GAN, Larsen et al. [17] integrated the two models into VAE-GAN and applied it to face images. Wang et al. [18] proposed a data enhancement method named PVAEGAN, which achieved good fault diagnosis results by generating a limited quantity of failure data. Considering the advantages of VAE-GAN in these fields, this paper applies VAE-GAN to time series sample generation to obtain a high-quality time series dataset.

In supervised training, the training set and corresponding target labels are fed into the prediction model, with the goal for the model to learn the mapping relationship between them. To evaluate the model’s training effectiveness, test set data are fed into the trained predictive model to derive the forecasted outcome. The training and test sets originate from distinct degradation processes, resulting in lower model prediction accuracy. Typically, we consider the correlation between training sets, and leverage the intrinsic correlation within the training set to assign weights to its data, thereby constructing a novel time series feature for RUL prediction. Nie et al. [

19] constructed similarity features by calculating Pearson’s correlation coefficients between time series of features in time-domain and frequency-domain and corresponding time vectors. Hou et al. [

20] applied weights to the training set’s RUL, contingent upon the similarity between the training and test sets during the degradation phase, thereby acquiring a similar RUL for the test set to facilitate network training. The DTW algorithm is employed to measure the similarity between time series. It can accurately describe the similarity and difference of time series by stretching, aligning, and warping the time series. Nguyen et al. [

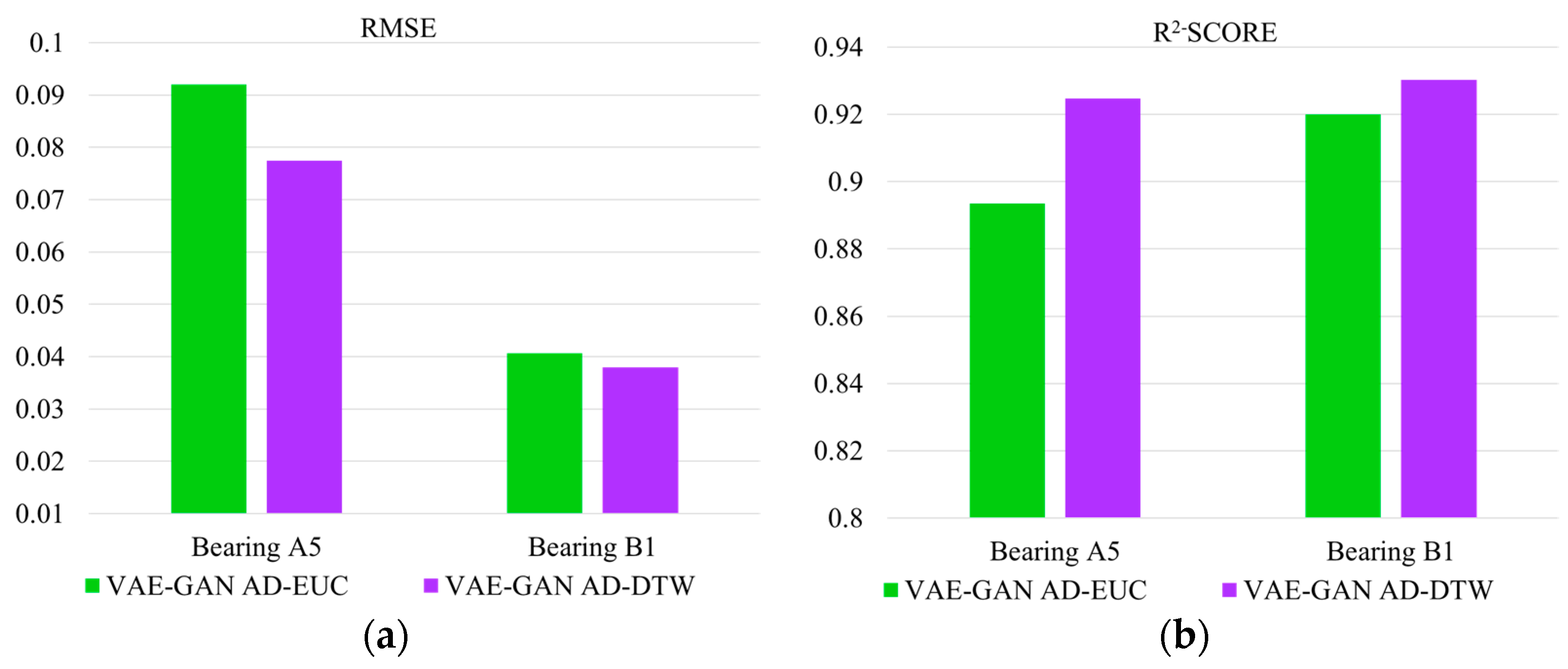

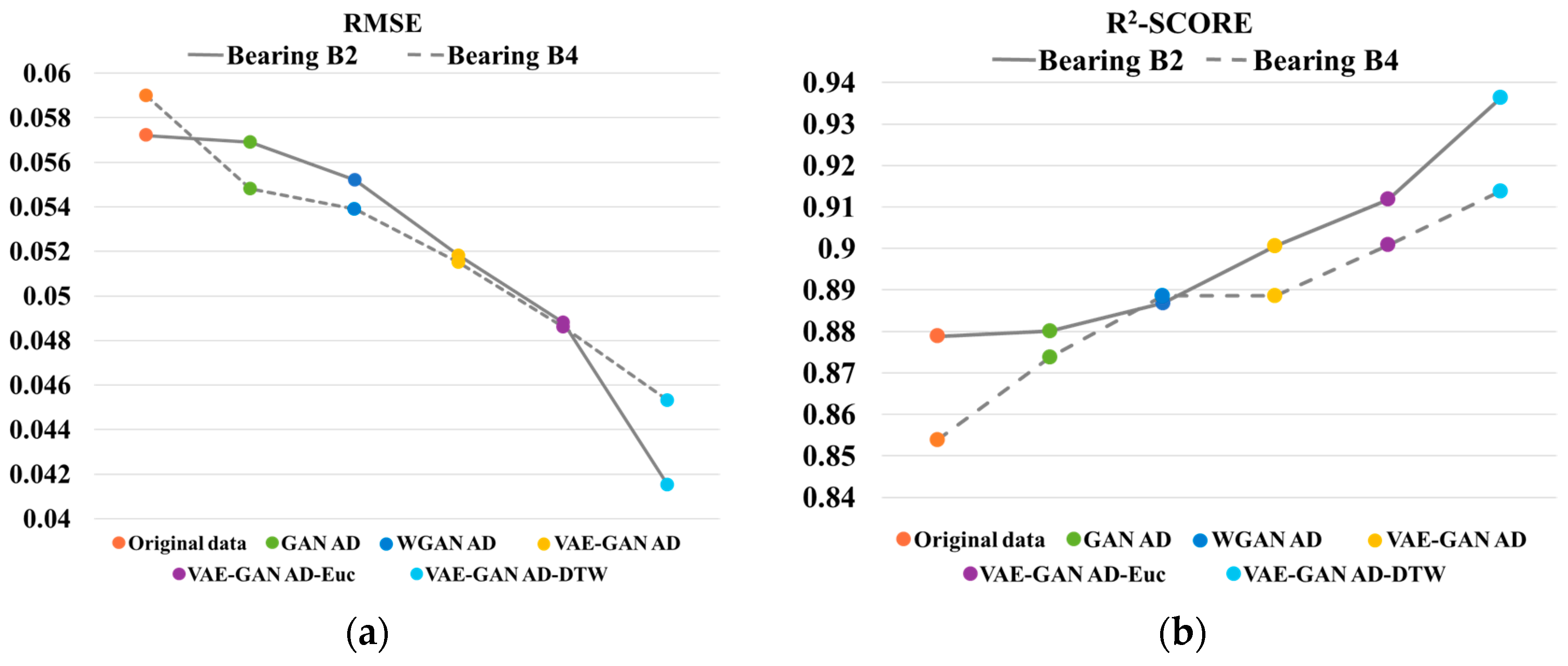

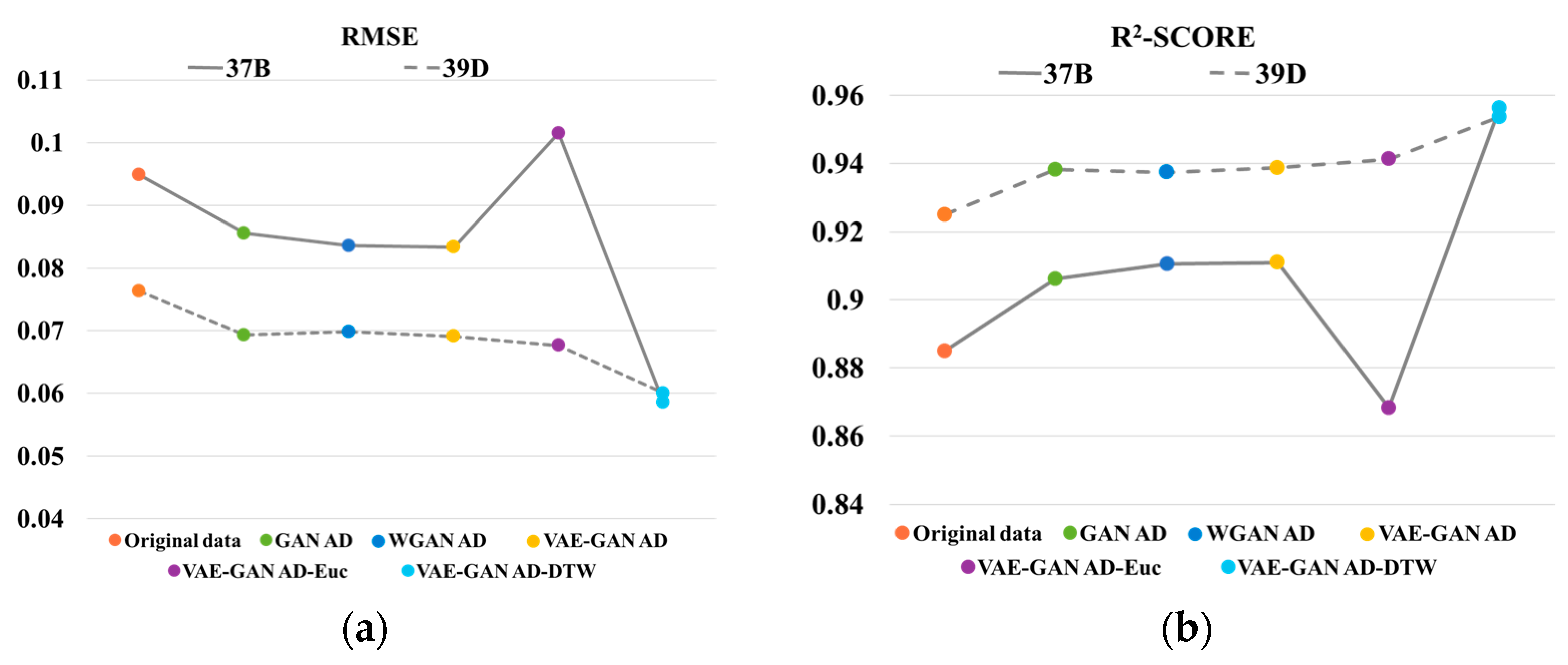

21] used the DTW algorithm to calculate the similarity between time series and carry out state matching. Compared with Euclidean distance, it calculated time series more accurately. Experimental findings indicate that utilizing similarity to enhance the predictive accuracy of the model is effective. Therefore, this paper proposes a time series data generation method based on VAE-GAN. By combining the advantages of VAE and GAN in sample generation, the function of data augmentation and data expansion is realized while retaining the original data features and distribution. Moreover, an adaptive time series feature construction method is proposed, and the DTW distance of the training set and target sequence is calculated for similarity evaluation. According to the similarity between the two sets, the enhanced training set data are weighted and fused to construct a DTW weighted feature to enhance the predictive accuracy of supervised learning models.
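The text leaves the exact weighting rule open at this point. The following is a minimal sketch of DTW-based weighted fusion, assuming inverse-distance weights normalized to sum to one and training feature series already resampled to a common length T. Both the rule and the function names (`dtw_weights`, `weighted_fusion`) are hypothetical illustrations, not the paper's formulation.

```python
import numpy as np


def dtw_weights(distances, eps=1e-12):
    """Turn DTW distances (smaller = more similar) into weights summing to 1.

    Inverse-distance weighting is an assumption made for this sketch.
    """
    inv = 1.0 / (np.asarray(distances, dtype=float) + eps)
    return inv / inv.sum()


def weighted_fusion(train_series, distances):
    """Fuse equal-length training feature series into one weighted series."""
    series = np.asarray(train_series, dtype=float)  # shape (n_bearings, T)
    w = dtw_weights(distances)
    return w @ series  # shape (T,)


# Two hypothetical training bearings; the first is closer to the target sequence
train = [[1.0, 2.0, 3.0], [2.0, 4.0, 6.0]]
fused = weighted_fusion(train, distances=[1.0, 3.0])
print(fused)  # weights ~0.75 and ~0.25, so approximately [1.25, 2.5, 3.75]
```

The design choice here is simply that a training bearing whose degradation sequence is closer to the target (smaller DTW distance) contributes more to the fused feature; other monotone mappings from distance to weight would serve the same purpose.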

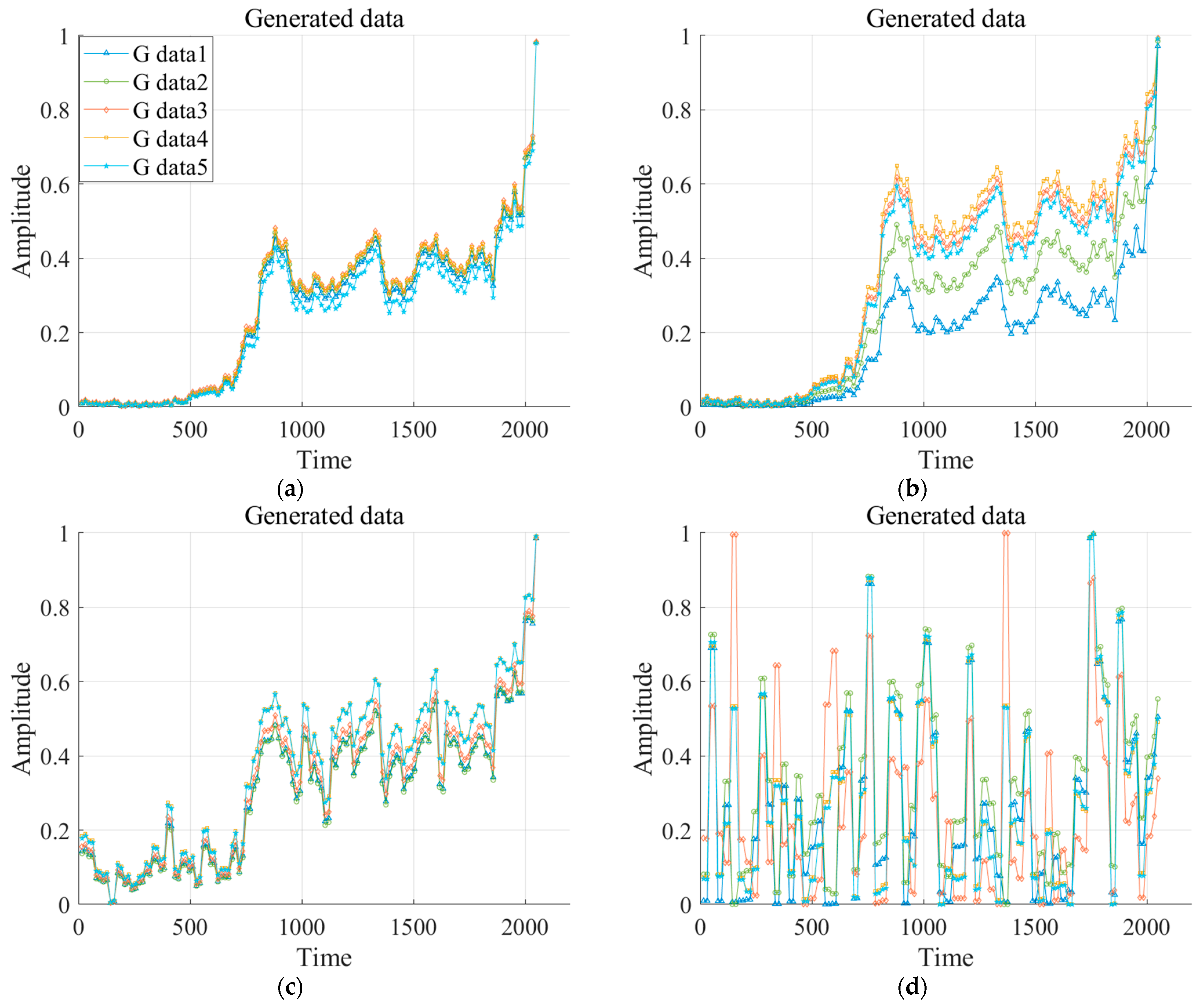

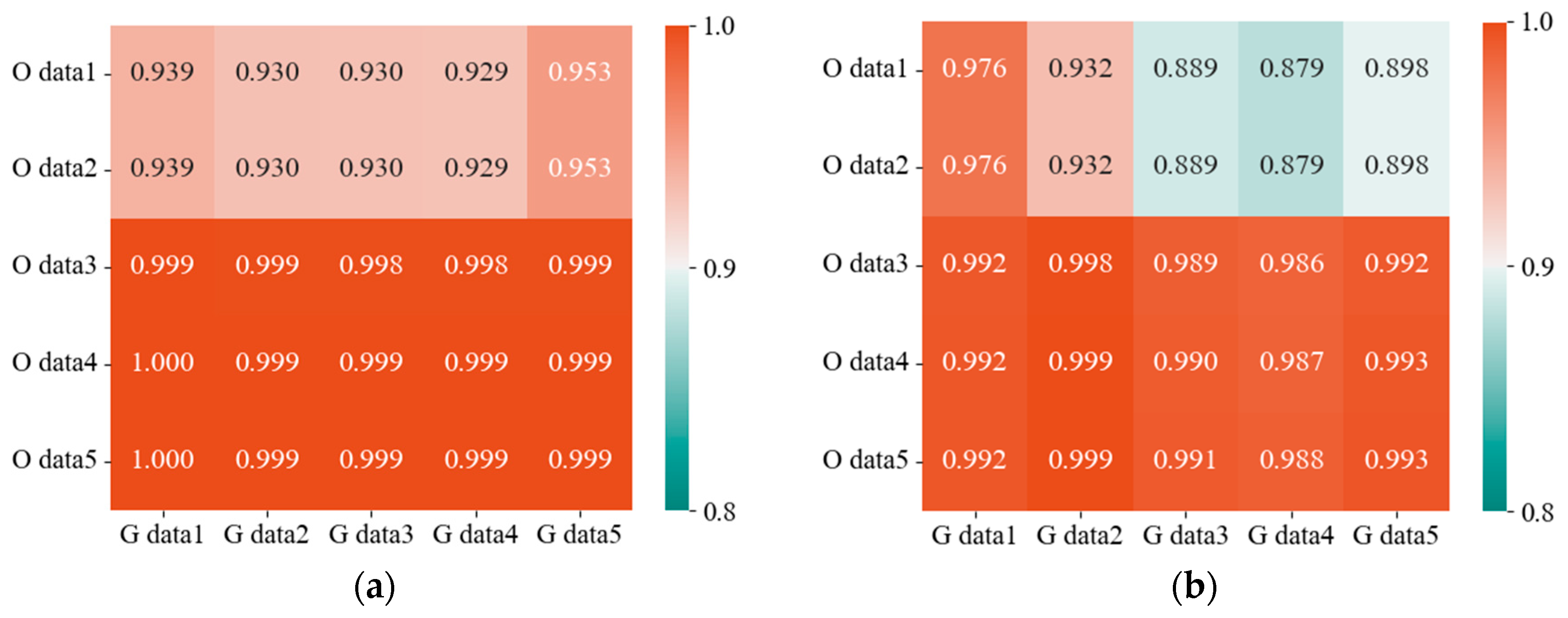

This paper aims to predict the RUL of rolling bearings, utilizing a similarity weighting approach that combines VAE-GAN and DTW, which is depicted in

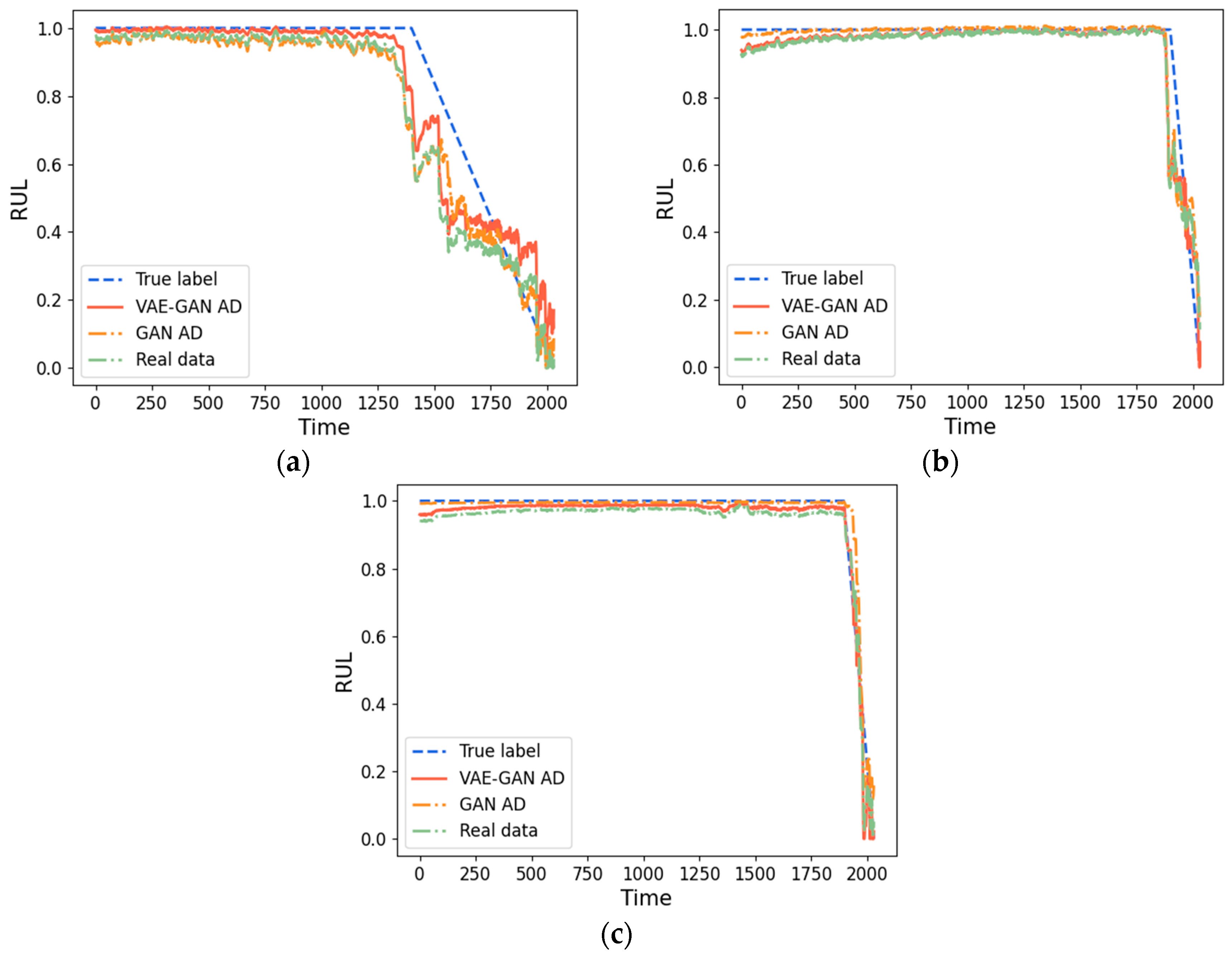

Figure 1. Firstly, time-domain features are extracted from the bearings’ horizontal vibration signals. Subsequently, the characteristic time-domain features are manually selected and input into the VAE-GAN network. Combining the advantages of VAE and GAN in sample generation, high-quality generated data are obtained. The augmented training set is obtained by mixing generated data and the original data, and then the augmented training set is matched with the target sequence data by DTW similarity to obtain the weighted fusion data. The CNN-LSTM network is trained by the DTW weighted fusion augmented data, and finally the trained prediction model is utilized to obtain RUL predictions using the test set as input. In this paper, the efficacy of the approach is validated via experimental analysis employing the XJTU-SY rolling element bearing accelerated life test dataset. The following outlines the primary contributions of this paper:

- (1)

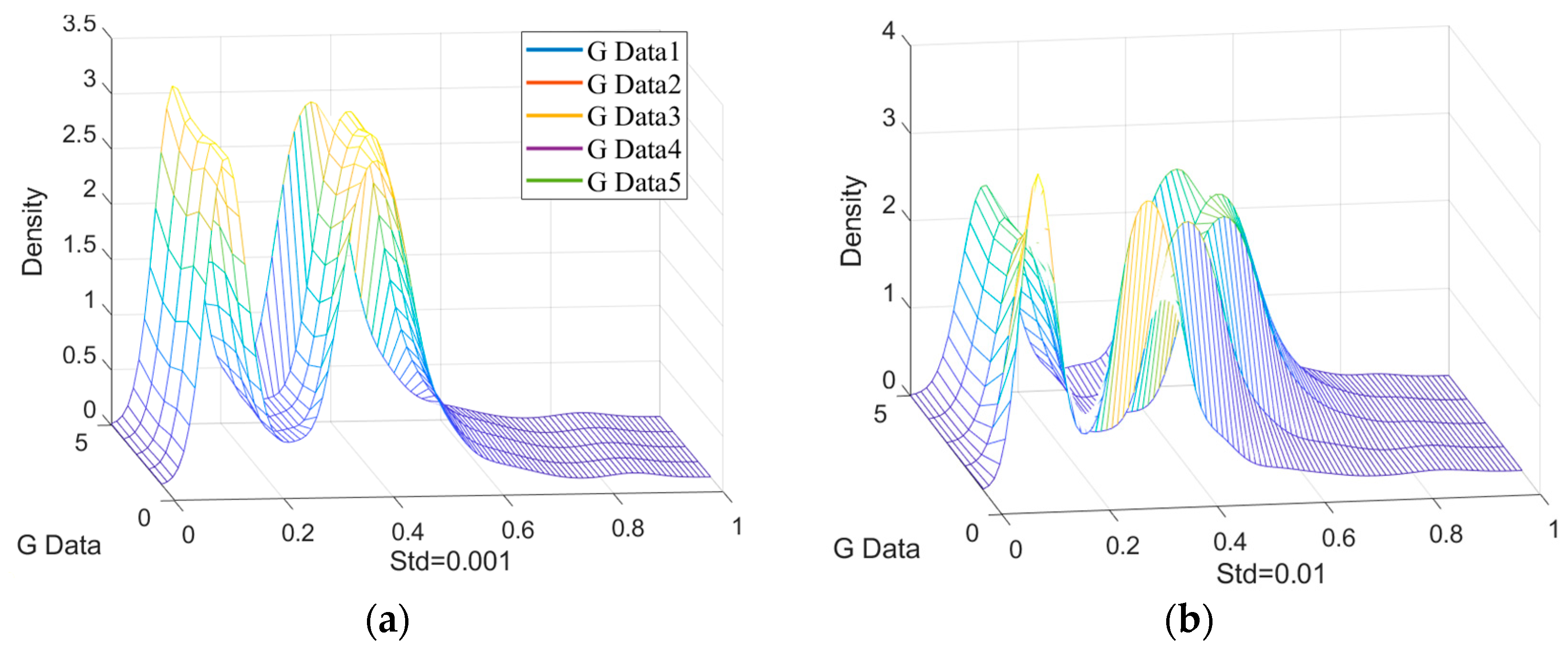

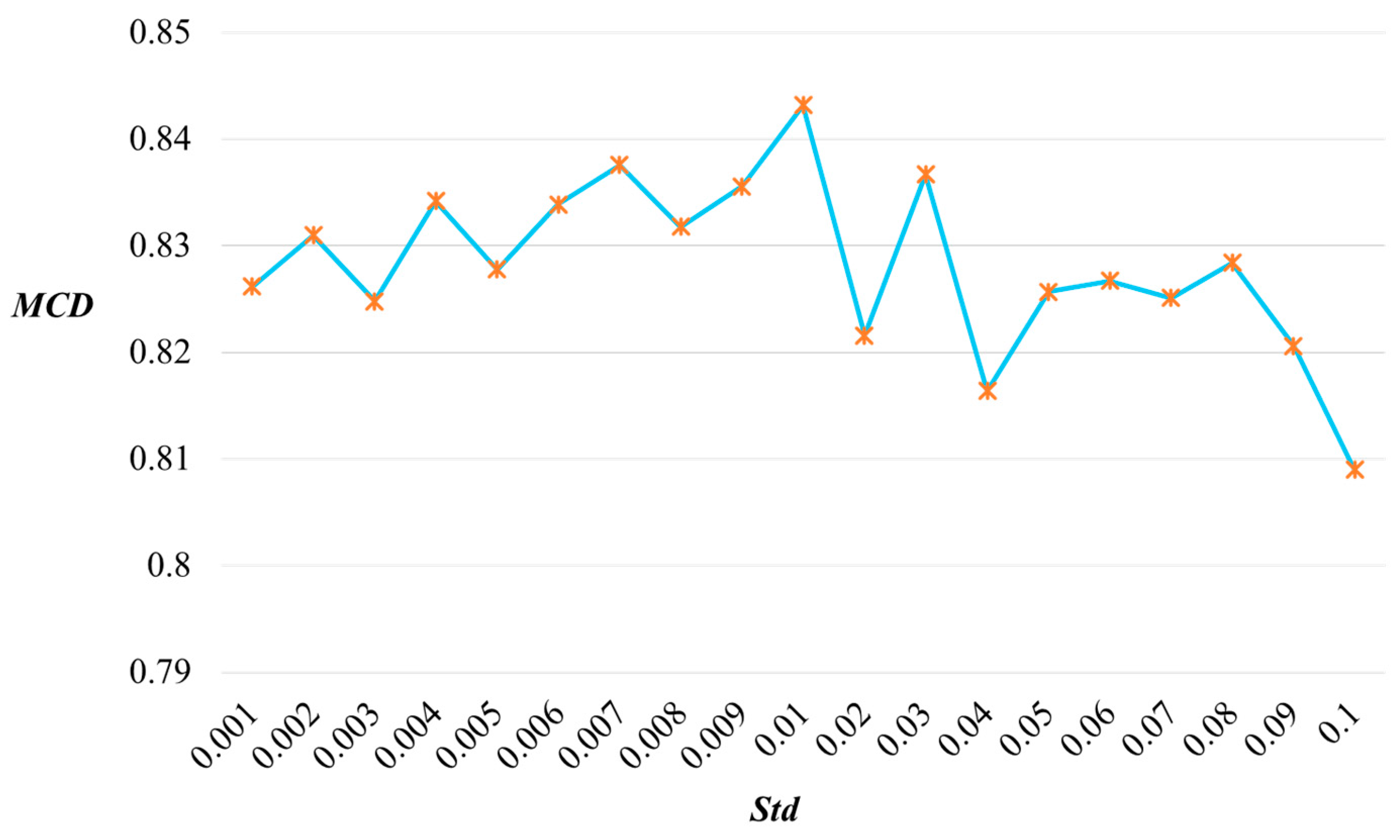

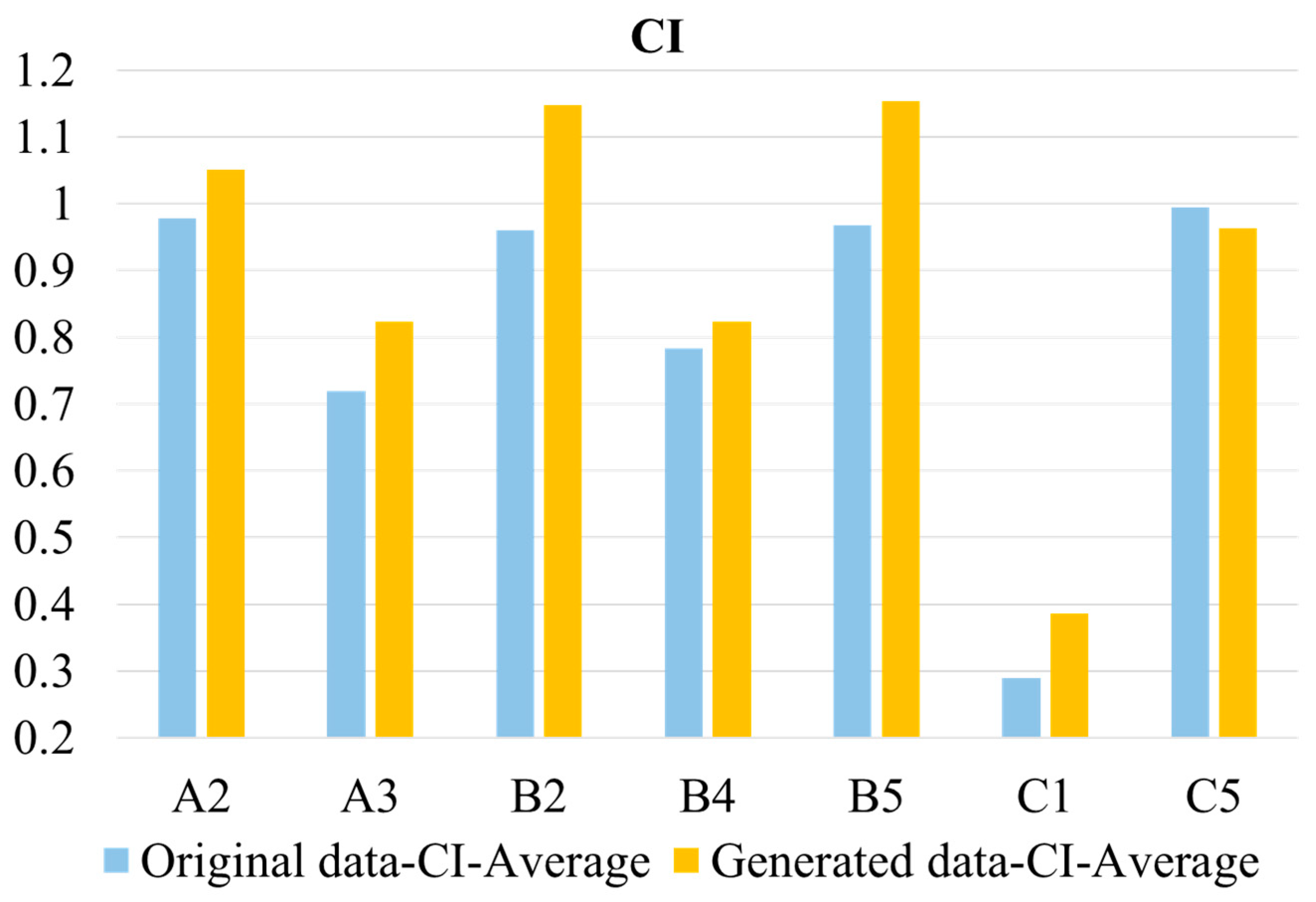

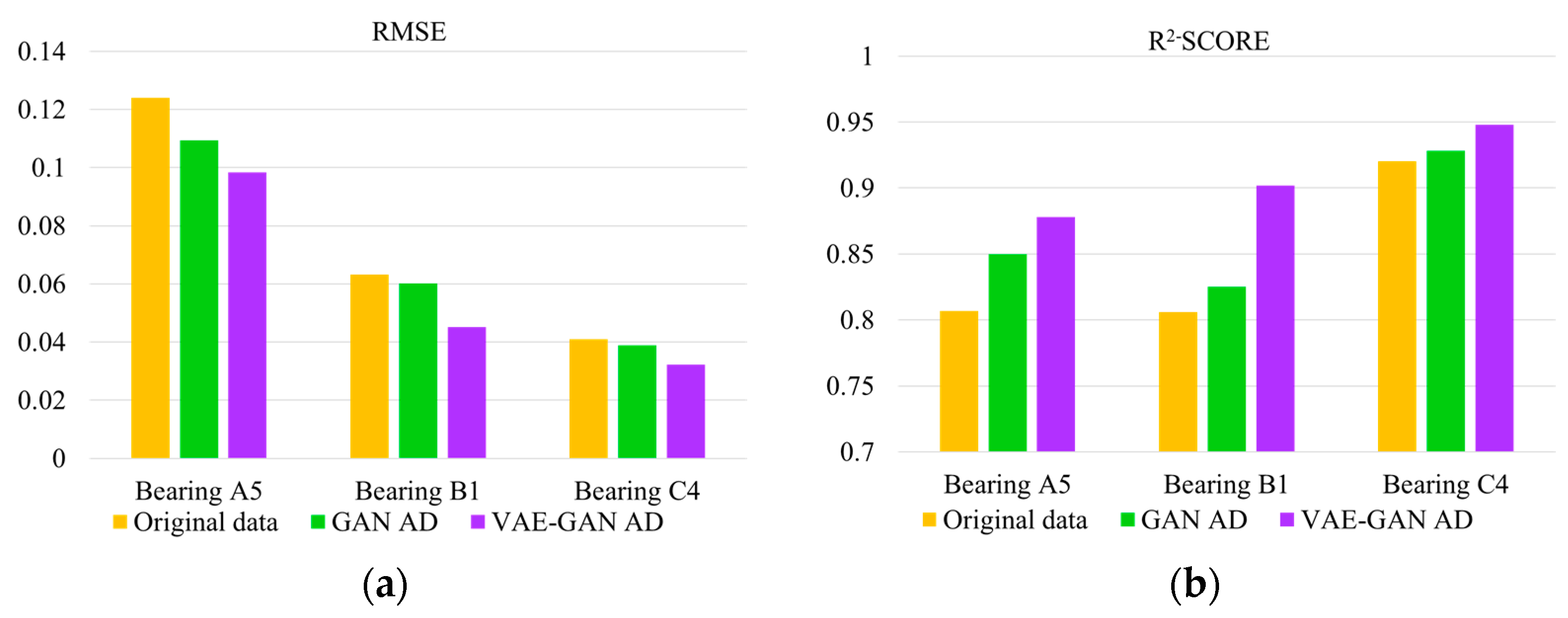

A feature generation method based on VAE-GAN is proposed, which effectively solves the issue of limited capability of original bearing signal to represent degraded state and insufficient effective time-domain features. The generated features are of higher quality and capture more adequate degradation information than the real data.

- (2)

In supervised learning, the mapping between the training set and the test set is often unknown. To address this challenge, this study introduces DTW similarity weighting, which matches the training data to the target sequence according to their similarity and thereby improves the accuracy of bearing RUL prediction.

- (3)

Experiments on public datasets confirm the efficacy of the proposed method. Combining data augmentation with similarity weighting captures degradation patterns more comprehensively, which improves prediction performance and accuracy.
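The first step of the pipeline described above extracts time-domain features from the horizontal vibration signals. The exact feature list is not given here, so the sketch below computes a set of time-domain features commonly used for bearing vibration signals; the selection and the function name `time_domain_features` are assumptions for illustration. The input is one vibration snapshot as a 1-D array.

```python
import numpy as np


def time_domain_features(x: np.ndarray) -> dict:
    """Common time-domain features of one vibration snapshot (1-D array)."""
    x = np.asarray(x, dtype=float)
    mean = x.mean()
    std = x.std()  # population standard deviation
    rms = np.sqrt(np.mean(x ** 2))
    peak = np.max(np.abs(x))
    abs_mean = np.mean(np.abs(x))
    centered = x - mean
    return {
        "mean": mean,
        "std": std,
        "rms": rms,
        "peak": peak,
        "kurtosis": np.mean(centered ** 4) / std ** 4,  # non-excess (Gaussian = 3)
        "skewness": np.mean(centered ** 3) / std ** 3,
        "crest_factor": peak / rms,
        "impulse_factor": peak / abs_mean,
        "shape_factor": rms / abs_mean,
    }


# Sanity check on a pure sine (10 full periods, 1000 samples)
t = np.arange(1000) / 1000.0
feats = time_domain_features(np.sin(2 * np.pi * 10 * t))
print(round(feats["crest_factor"], 4), round(feats["kurtosis"], 4))  # 1.4142 1.5
```

A pure sine gives a crest factor of √2 and a kurtosis of 1.5; impulsive fault signatures push both upward, which is why such features are typical candidates for the manual selection step.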

The remainder of this paper is organized as follows. Section 2 briefly reviews the foundational theory. Section 3 introduces the basic theory of VAE-GAN data augmentation and of similarity fusion with the DTW distance. Section 4 evaluates the quality of the generated data, confirms the effectiveness of the proposed method on the XJTU-SY rolling element bearing accelerated life test dataset, and presents the relevant comparative tests. Section 5 gives the conclusion.

2. Foundational Theory

2.1. Variational Autoencoder (VAE)

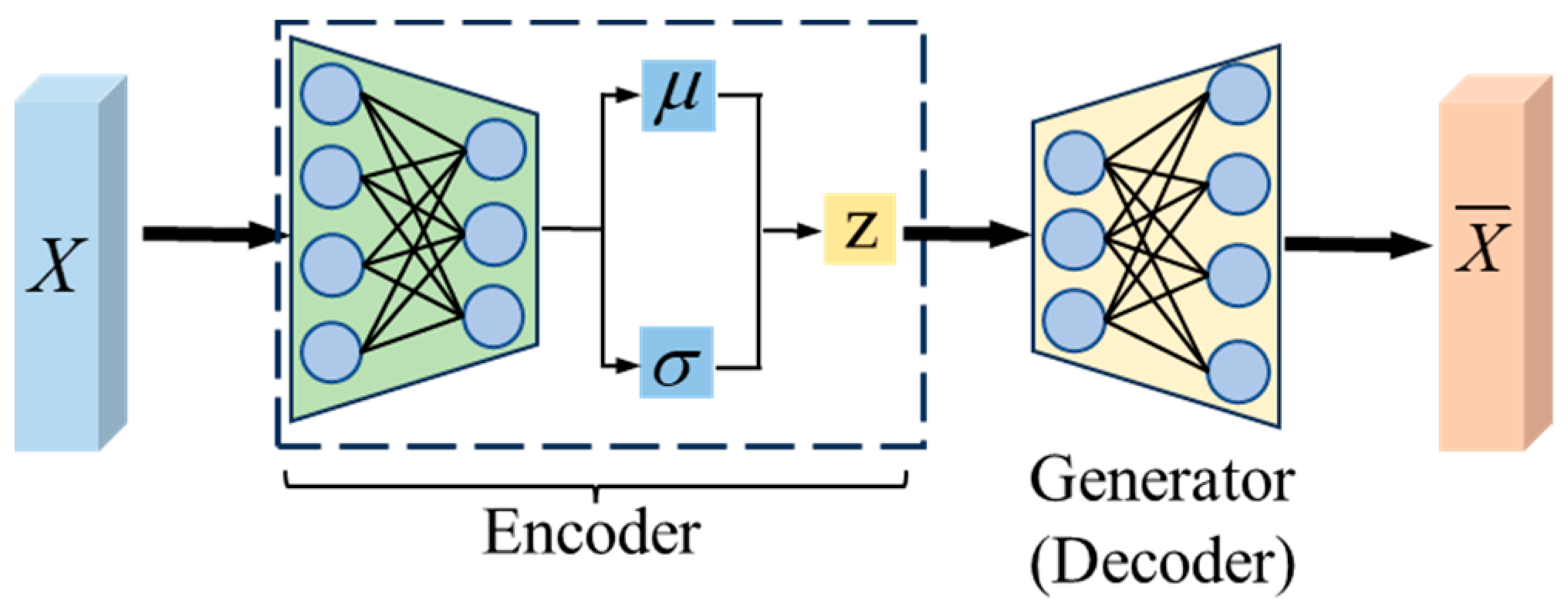

VAE, a variant of the autoencoder, is a neural network that combines probability statistics and deep learning. Its structure, shown in Figure 2, consists of an encoder and a decoder. The encoder learns the distribution of the raw data and converts the original input X into two vectors: the mean vector μ and the standard deviation vector σ of the distribution. A sample Z, obtained as Z = E(X), is then drawn from the distribution defined by these two vectors and used as the input to the generator (the decoder). Because sampling is intrinsically random, the two vectors are difficult to train directly, so the reparameterization trick is used to define Z as in Equation (1), which transfers the randomness of the sample to ε:

$$Z = \mu + \sigma \odot \varepsilon \tag{1}$$

where ε is an auxiliary noise variable drawn from the standard normal distribution N(0, I) and ⊙ denotes element-wise multiplication. The decoder network then maps the latent variable Z back to approximately reconstructed data.
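Equation (1) can be sketched in a few lines. This NumPy version only shows the sampling step; in an autodiff framework the same expression lets gradients flow through μ and σ because the randomness is carried entirely by ε. Predicting log σ² instead of σ is a common convention assumed here, and `reparameterize` is a hypothetical helper name.

```python
import numpy as np


def reparameterize(mu, log_var, rng):
    """Sample Z = mu + sigma * eps with eps ~ N(0, I), as in Equation (1).

    The encoder is assumed to output log-variance; sigma = exp(0.5 * log_var)
    is then always positive.
    """
    sigma = np.exp(0.5 * log_var)
    eps = rng.standard_normal(size=np.shape(mu))
    return mu + sigma * eps


# Hypothetical 2-D latent space: mu = [2, -1], sigma = [0.5, 2]
rng = np.random.default_rng(42)
mu = np.tile([2.0, -1.0], (100_000, 1))
log_var = np.tile(np.log([0.25, 4.0]), (100_000, 1))
z = reparameterize(mu, log_var, rng)
print(z.mean(axis=0), z.std(axis=0))  # close to [2, -1] and [0.5, 2]
```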

Given a set of real samples, the principal objective of a generative model is to learn the underlying data distribution P(X) from them and then sample from the learned distribution, so as to obtain new data consistent with the distribution of this dataset.

Because a VAE allows potentially complex priors to be specified, it can learn powerful latent representations of the data.
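The training objective of the VAE is not spelled out above. In the standard formulation, assumed here with a standard normal prior, the encoder and decoder minimize a reconstruction error plus the Kullback–Leibler (KL) divergence between the approximate posterior N(μ, σ²) and the prior, and for Gaussians the KL term has a closed form. The squared-error reconstruction term and the names `gaussian_kl` and `vae_loss` are assumptions of this sketch.

```python
import numpy as np


def gaussian_kl(mu, log_var):
    """KL( N(mu, sigma^2) || N(0, I) ), summed over latent dimensions."""
    return -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var), axis=-1)


def vae_loss(x, x_recon, mu, log_var):
    """Negative ELBO with a squared-error reconstruction term, averaged over the batch."""
    recon = np.sum((x - x_recon) ** 2, axis=-1)
    return np.mean(recon + gaussian_kl(mu, log_var))


x = np.array([[1.0, 2.0]])
# Perfect reconstruction and a posterior equal to the prior give zero loss
print(vae_loss(x, x, np.zeros((1, 3)), np.zeros((1, 3))))  # 0.0
# Shifting one latent mean by 1 costs 0.5 nats of KL
print(gaussian_kl(np.array([[1.0, 0.0, 0.0]]), np.zeros((1, 3))))  # [0.5]
```

The KL term pulls the encoder's distribution toward the prior so that sampling Z from the prior later yields meaningful decoder outputs, which is what makes the VAE usable as a generator.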

2.2. Generative Adversarial Network (GAN)

GAN is a well-known generative model consisting of two main components: a generator and a discriminator. Its core idea is to train the generator to produce realistic data through an adversarial game between the generator and the discriminator, which defines an adversarial loss. The generator tries to make the distribution of the generated samples match that of the training samples, while the discriminator judges whether a sample is real or a fake produced by the generator. Specifically, the generator network takes random noise z as input and is trained so that its samples closely resemble real samples, and the discriminator network outputs the probability that its input comes from the real samples. The framework of the GAN is illustrated in Figure 3.
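In the standard GAN formulation (not written out in this paper), the adversarial game is the minimax objective min_G max_D E_x[log D(x)] + E_z[log(1 − D(G(z)))]. The sketch below computes the corresponding losses from discriminator output probabilities. The generator uses the non-saturating variant, −log D(G(z)), a common practical substitute for minimizing log(1 − D(G(z))); this choice and the helper names are assumptions.

```python
import numpy as np


def bce(p, target, eps=1e-12):
    """Binary cross-entropy between probabilities p and a constant target (0 or 1)."""
    p = np.clip(p, eps, 1.0 - eps)
    return -np.mean(target * np.log(p) + (1 - target) * np.log(1 - p))


def discriminator_loss(d_real, d_fake):
    """D is pushed toward D(x) = 1 on real samples and D(G(z)) = 0 on fakes."""
    return bce(d_real, 1.0) + bce(d_fake, 0.0)


def generator_loss(d_fake):
    """Non-saturating generator loss: G tries to make D(G(z)) close to 1."""
    return bce(d_fake, 1.0)


d_real = np.array([0.9, 0.8])  # D is fairly sure these are real
d_fake = np.array([0.1, 0.2])  # ... and that these are fake
print(round(discriminator_loss(d_real, d_fake), 4))  # 0.3285
print(round(generator_loss(d_fake), 4))  # 1.956
```

When the discriminator separates real and fake samples well, its loss is small and the generator's loss is large; training alternates between the two so that each player improves against the other.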

2.3. Dynamic Time Warping (DTW) Algorithm

The DTW algorithm [22] reflects the fluctuation trends of bearing vibration signal sequences and is highly sensitive to differences in these trends between sequences. Its basic idea is to warp the time axis according to the numerical similarity of the time series and then find the optimal correspondence between the two sequences. In this paper, the DTW distance is therefore used to quantify the similarity of vibration signal sequences: the smaller the DTW distance between two vibration signals, the more similar they are, and a certain mapping relation exists between the two sequences.

The DTW distance uses the idea of dynamic warping to adjust the correspondence between the elements of two vibration signal sequences at different times, finding an optimal warping path along which the distance between the two sequences is minimized.

Let $X = [x_1, x_2, \ldots, x_r, \ldots, x_R]$ $(1 \le r \le R)$ and $Y = [y_1, y_2, \ldots, y_s, \ldots, y_S]$ $(1 \le s \le S)$ be two one-dimensional signal sequences, where R and S are the lengths of X and Y, respectively. An R × S matrix grid is constructed in which element (r, s) denotes the distance $d(x_r, y_s)$ between the points $x_r$ and $y_s$.

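A warping path $A = \{a_1, a_2, \ldots, a_K\}$, with $a_k = (r, s)_k$, matches the two sequences, and the distance of this match is the sum of the element distances $d_k(r, s)$ along the path:

$$D(A) = \sum_{k=1}^{K} d_k(r, s) \tag{2}$$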

To ensure that the resulting path A is a globally optimal warping path, three constraints must be satisfied: (1) Boundary conditions: the path must start at (1,1) and end at (R,S). (2) Monotonicity: the path must be monotonically non-decreasing in time order, and its slope should be neither too small nor too large; it can be limited to the range 0.5~2. (3) Continuity: r and s can each increase only by 0 or 1 at each step, i.e., the point after (r,s) must be (r + 1,s), (r,s + 1), or (r + 1,s + 1).
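Equation (2) together with these constraints leads to the standard dynamic-programming computation of the DTW distance. The sketch below assumes the absolute difference as the local distance d(x_r, y_s) and the usual recursion in which each cell adds its local distance to the smallest of its three admissible predecessors; the 0.5~2 slope limit is not enforced, and the function name `dtw` is hypothetical. It also backtracks one optimal path and compares the result with the Euclidean distance on two time-shifted sequences.

```python
import numpy as np


def dtw(x, y):
    """DTW distance and one optimal warping path between two 1-D sequences.

    Recursion: D(r, s) = |x_r - y_s| + min(D(r-1, s), D(r, s-1), D(r-1, s-1)),
    which enforces the boundary, monotonicity and continuity constraints.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    R, S = len(x), len(y)
    D = np.full((R + 1, S + 1), np.inf)
    D[0, 0] = 0.0  # padding row/column of inf forces the path to start at (1, 1)
    for r in range(1, R + 1):
        for s in range(1, S + 1):
            cost = abs(x[r - 1] - y[s - 1])
            D[r, s] = cost + min(D[r - 1, s], D[r, s - 1], D[r - 1, s - 1])
    # Backtrack from (R, S) to (1, 1) through minimal predecessors (1-based indices)
    path = [(R, S)]
    r, s = R, S
    while (r, s) != (1, 1):
        steps = [(r - 1, s - 1), (r - 1, s), (r, s - 1)]
        r, s = min(steps, key=lambda rs: D[rs])
        path.append((r, s))
    return D[R, S], path[::-1]


x = [0, 1, 2, 3, 2, 1, 0, 0]
y = [0, 0, 1, 2, 3, 2, 1, 0]  # same shape, shifted by one step
dist, path = dtw(x, y)
euclid = np.linalg.norm(np.subtract(x, y))
print(dist, round(euclid, 3))  # 0.0 2.449
```

The shifted sequences have identical shapes, so DTW aligns them at zero cost, while the point-by-point Euclidean distance is penalized by the one-step offset; this is the property that motivates using DTW for matching degradation sequences.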

The path with the minimum cumulative distance is the optimal warping path; this minimum distance is unique, although more than one path may attain it. According to Equation (2) and the constraints, the DTW distance can be computed with the following recursive formula: