Impact of Virtual Reality on Decision-Making and Risk Assessment During Simulated Residential Fire Scenarios

Abstract

1. Introduction

1.1. Connecting Fire Characteristics to Human Behavior

1.2. Approaches for Collecting Occupant Behavior

1.3. Virtual Environments and HBIF

1.4. Simulated Fires

1.5. Present Study

2. Materials and Methods

2.1. Participants

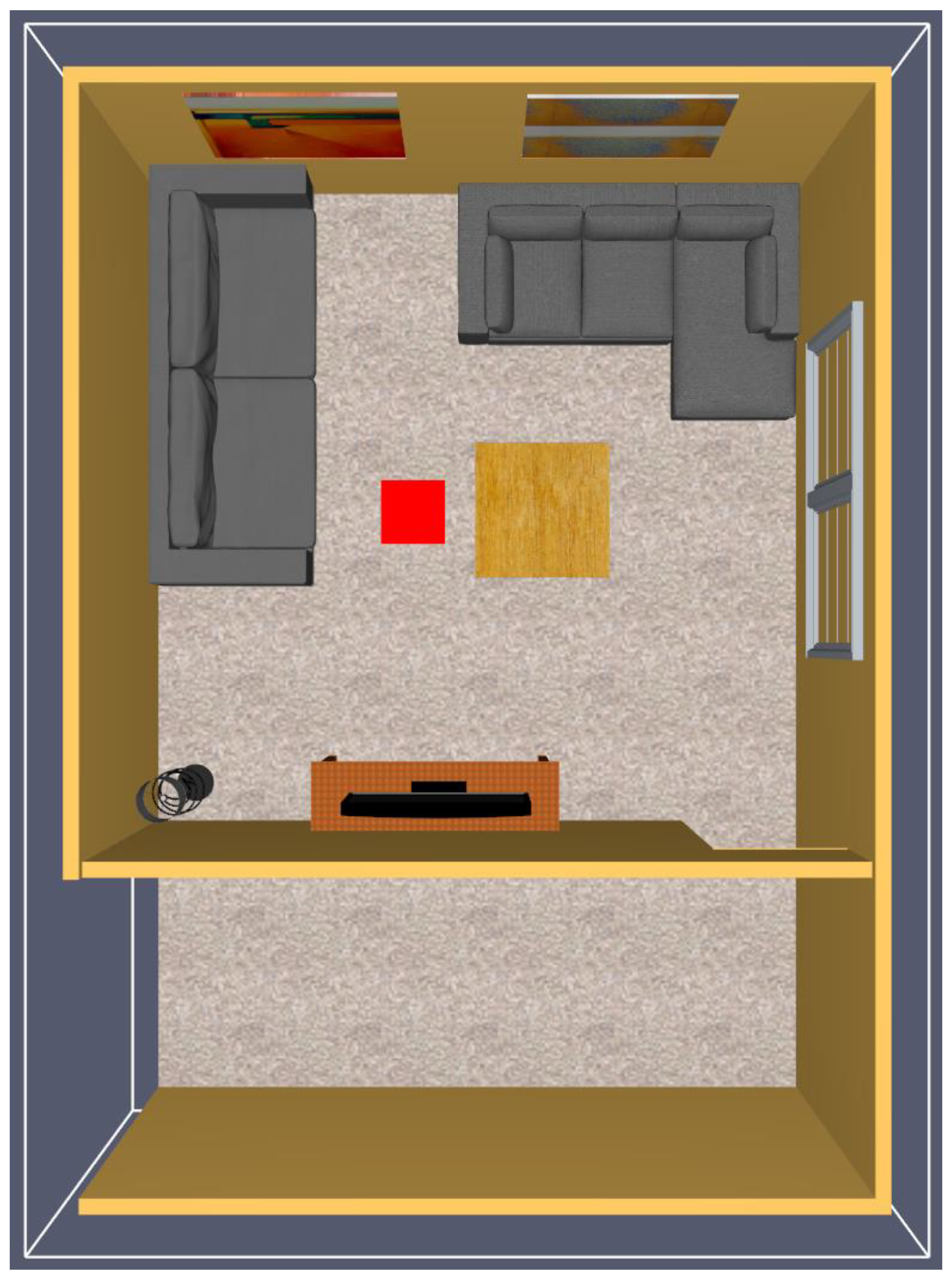

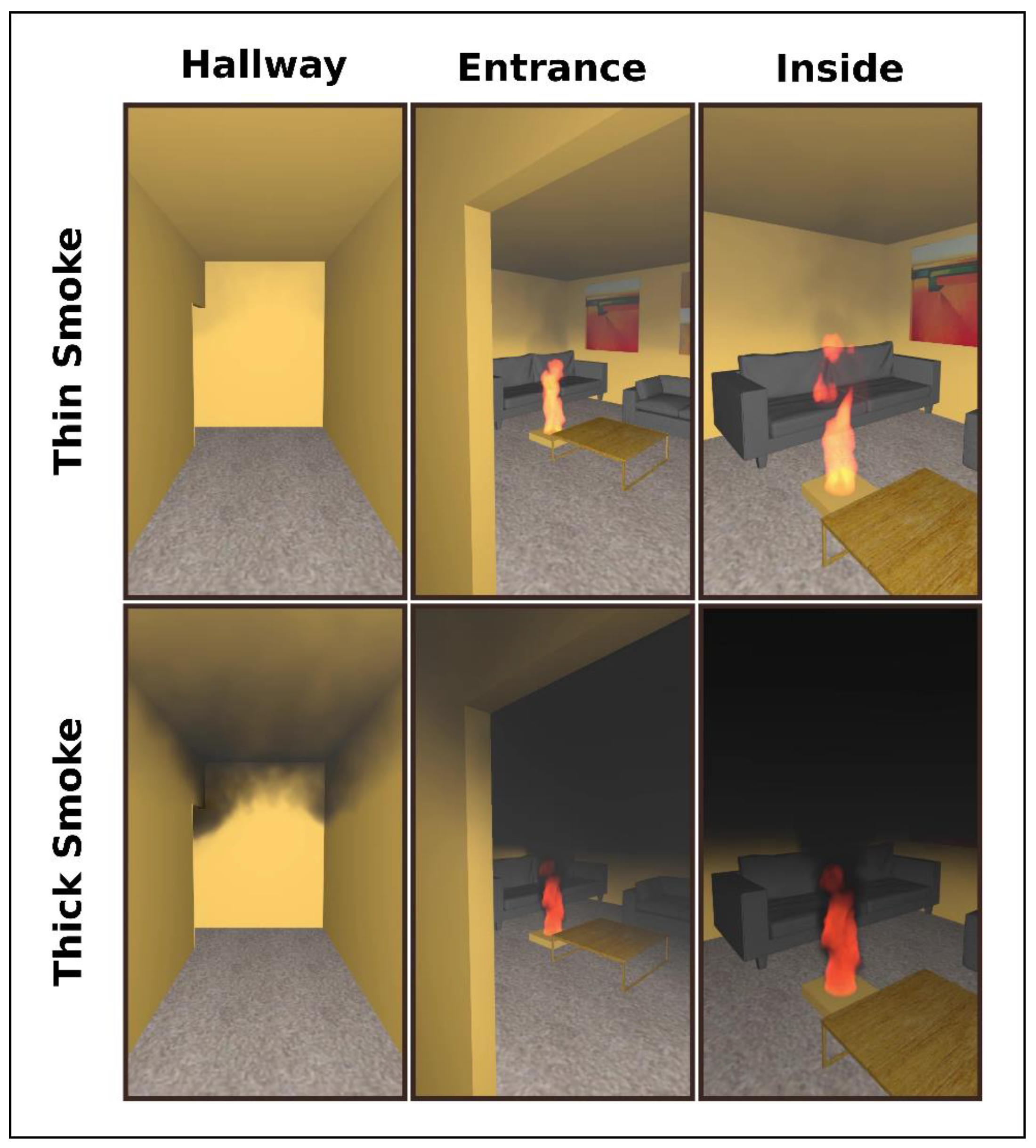

2.2. Materials

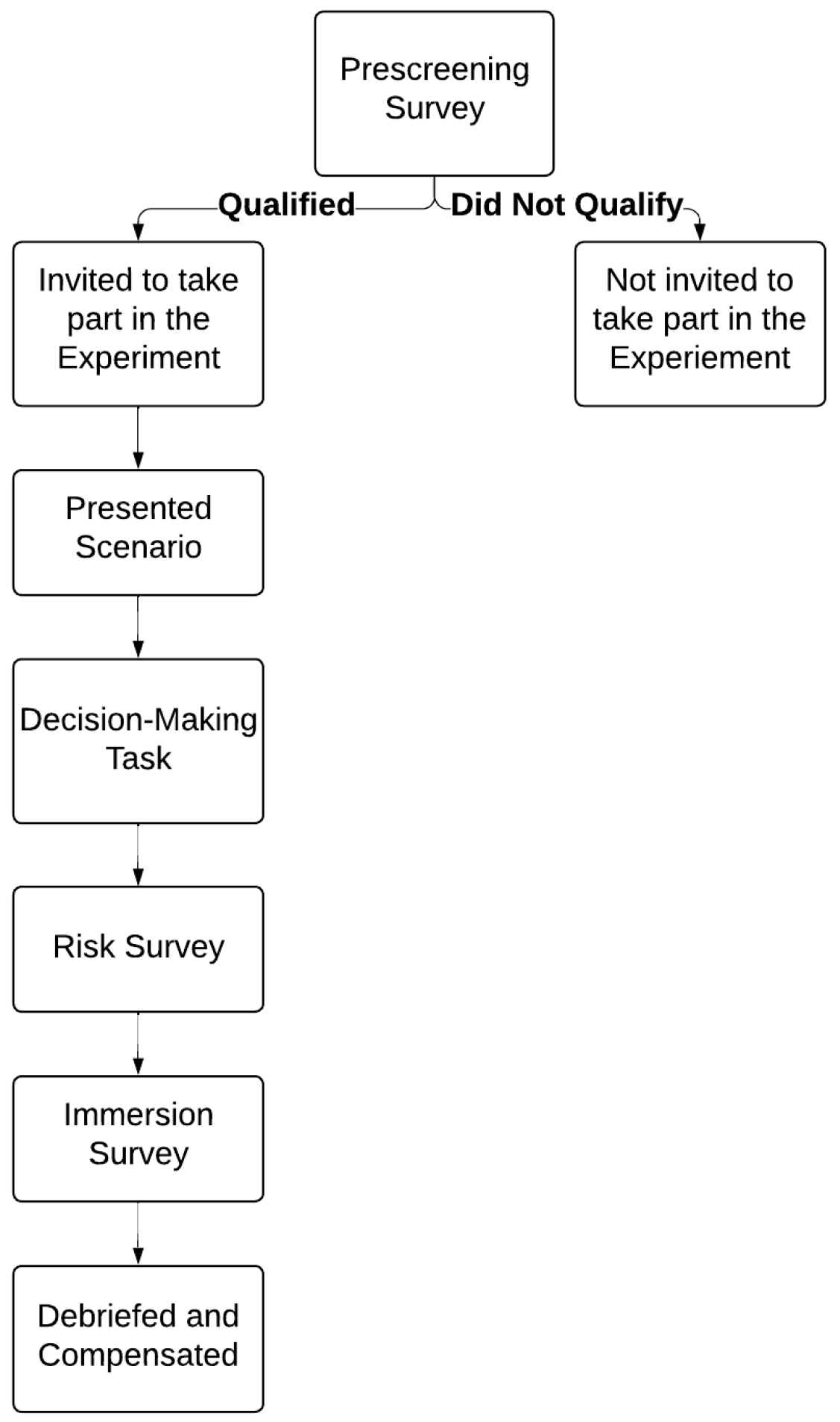

2.3. Procedures

3. Results

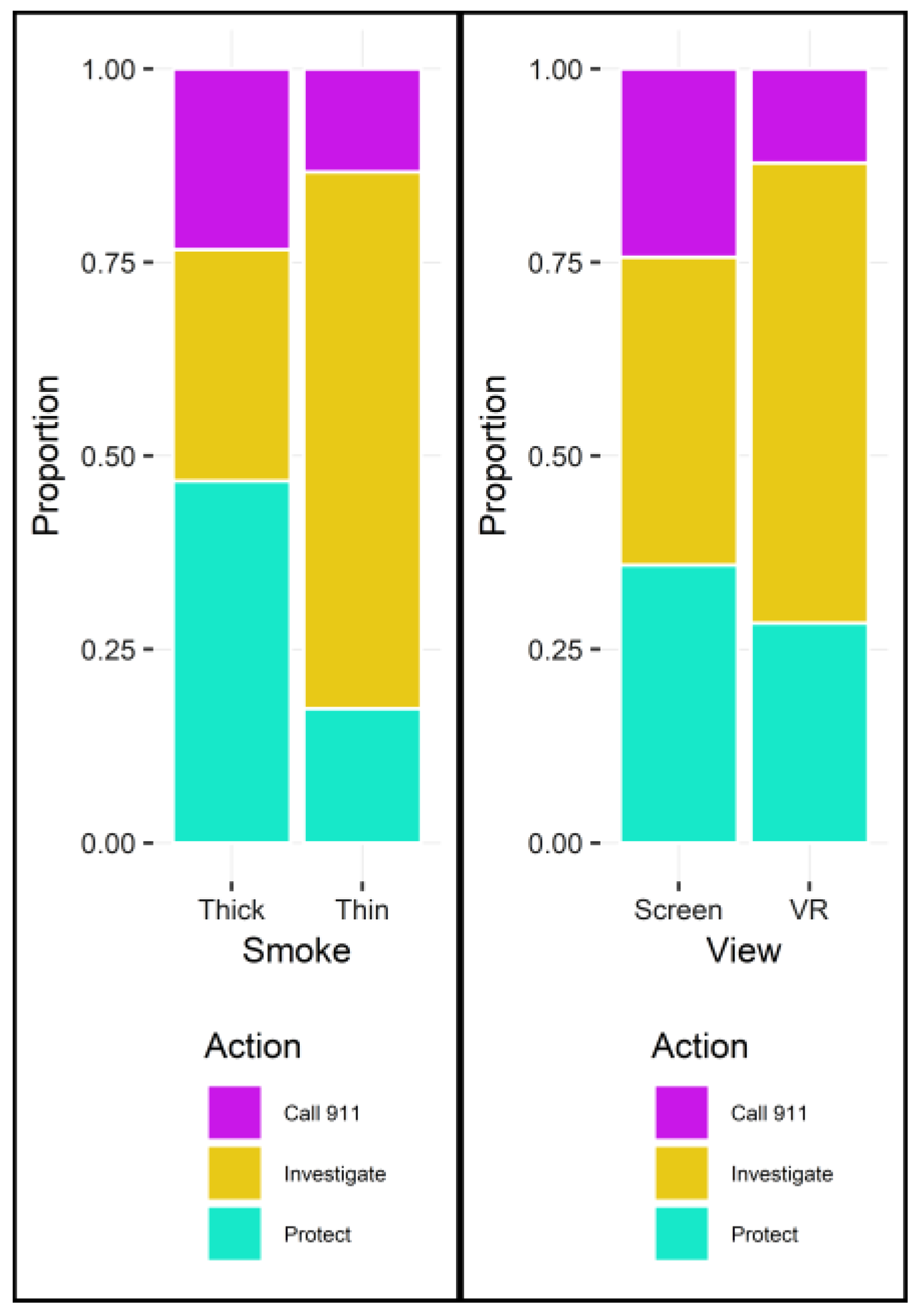

3.1. Action Responses

3.2. Risk Ratings

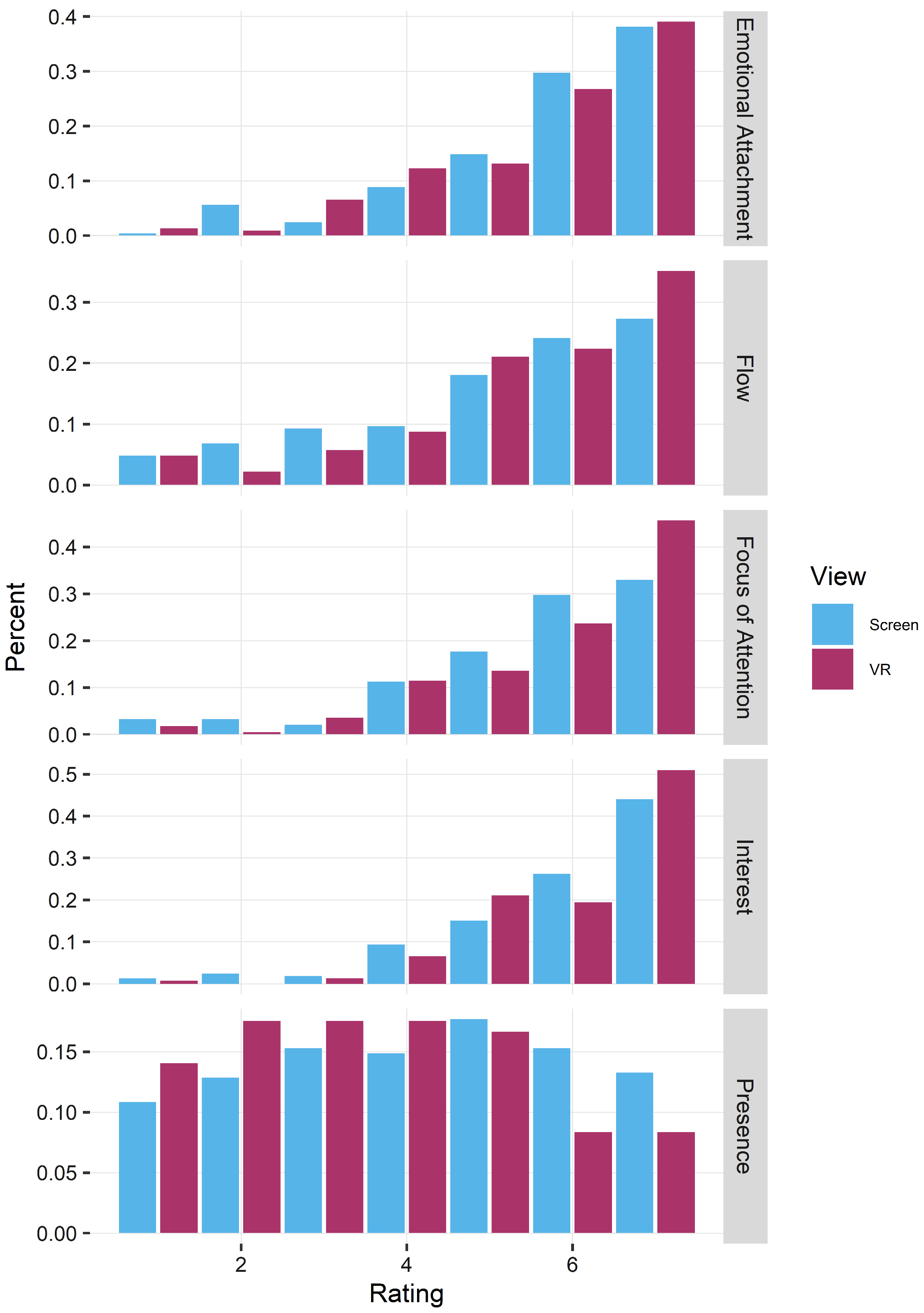

3.3. Immersion Ratings

3.4. Cross-Measure Analysis

4. Discussion

4.1. Fire Cues Influenced Perceived Risk and Selected Actions

4.2. The Impact of View Condition on Actions

4.3. Immersion Across View Conditions

4.4. Impact of Risk and Immersion on Actions

4.5. Limitations and Future Directions

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Option | Description | Action Category |
|---|---|---|
| Investigate | You move forward to take a closer look. | Investigate |
| Evacuate | You immediately run towards the exit and leave the building. | Protect |
| Barricade | You return to your room, close the door, and stuff a wet towel under the door. | Protect |
| Continue watching TV | You return to your room and continue watching the television. | Delay |
| Gather belongings | You look for your stuff and repack your luggage. It takes some time. | Delay |
| Call 911 | You immediately dial 911 using your cellphone. You wait for instructions. | Call 911 |
| Engage | You locate a fire extinguisher and move towards the fire to try to put it out. | Engage |
| Wait | You stay in your current position, waiting. | Delay |
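The option table assigns each of the eight response options to one of five action categories. As a minimal sketch, assuming responses are recorded as the option labels shown in the table, the Python below applies that grouping to a sequence of choices. The helper `to_categories` and the example sequence are hypothetical and are not the study's own analysis code.

```python
# Mapping from response option to action category, copied from the table above.
OPTION_TO_CATEGORY = {
    "Investigate": "Investigate",
    "Evacuate": "Protect",
    "Barricade": "Protect",
    "Continue watching TV": "Delay",
    "Gather belongings": "Delay",
    "Call 911": "Call 911",
    "Engage": "Engage",
    "Wait": "Delay",
}


def to_categories(choices):
    """Convert a list of selected options into action categories.

    Hypothetical helper for illustration; raises KeyError for any
    option label that the table does not define.
    """
    return [OPTION_TO_CATEGORY[choice] for choice in choices]


if __name__ == "__main__":
    # Hypothetical choice sequence for one participant across scenario steps.
    choices = ["Investigate", "Wait", "Evacuate"]
    print(to_categories(choices))  # prints ['Investigate', 'Delay', 'Protect']
```

Keeping the mapping in a single dictionary makes the grouping explicit and fails loudly on any label the table does not list.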

| From / To | Call 911 | Delay | Engage | Investigate | Protect |
|---|---|---|---|---|---|
| Call 911 | 0 | 0.19 | 0.21 | 0.08 | 0.52 |
| Delay | 0.1 | 0 | 0.05 | 0.1 | 0.75 |
| Engage | 0.12 | 0.02 | 0.73 | 0 | 0.14 |
| Investigate | 0.15 | 0.04 | 0.51 | 0 | 0.31 |
| Protect | 1 | 0 | 0 | 0 | 0 |
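The transition table gives, for each action category in a row ("from"), a probability for each category in a column ("to"). Reading it as a first-order Markov transition matrix is an assumption made here, not something the table states. Under that assumption, the Python sketch below checks the row sums (the Engage and Investigate rows total 1.01, presumably from rounding) and squares the matrix to obtain two-step transition probabilities. The names `P`, `row_sums`, `matmul`, and `two_step` are illustrative only.

```python
# Transition probabilities copied from the table above
# (rows = "from" category, columns = "to" category).
CATEGORIES = ["Call 911", "Delay", "Engage", "Investigate", "Protect"]
P = [
    [0.00, 0.19, 0.21, 0.08, 0.52],  # from Call 911
    [0.10, 0.00, 0.05, 0.10, 0.75],  # from Delay
    [0.12, 0.02, 0.73, 0.00, 0.14],  # from Engage
    [0.15, 0.04, 0.51, 0.00, 0.31],  # from Investigate
    [1.00, 0.00, 0.00, 0.00, 0.00],  # from Protect
]


def row_sums(matrix):
    """Total probability leaving each row; should be close to 1."""
    return [sum(row) for row in matrix]


def matmul(a, b):
    """Plain-Python matrix product of a (n x m) and b (m x p)."""
    n, m, p = len(a), len(b), len(b[0])
    return [
        [sum(a[i][k] * b[k][j] for k in range(m)) for j in range(p)]
        for i in range(n)
    ]


def two_step(matrix, start, end):
    """Probability of reaching `end` two steps after `start`,
    assuming (hypothetically) a first-order Markov chain."""
    idx = {name: i for i, name in enumerate(CATEGORIES)}
    return matmul(matrix, matrix)[idx[start]][idx[end]]


if __name__ == "__main__":
    for name, total in zip(CATEGORIES, row_sums(P)):
        print(f"{name}: row sum = {total:.2f}")
    # prints "Investigate -> ? -> Protect: 0.1794"
    print(f"Investigate -> ? -> Protect: {two_step(P, 'Investigate', 'Protect'):.4f}")
```

Plain lists keep the sketch dependency-free; with NumPy, `P @ P` or `numpy.linalg.matrix_power(P, 2)` yields the same two-step matrix. Because two rows do not sum exactly to 1, the two-step values are approximate.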

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Russell, M.D.; Bonny, J.W.; Reed, R. Impact of Virtual Reality on Decision-Making and Risk Assessment During Simulated Residential Fire Scenarios. Fire 2024, 7, 427. https://doi.org/10.3390/fire7120427
