Enhancing the Effectiveness of Juvenile Protection: Deep Learning-Based Facial Age Estimation via JPSD Dataset Construction and YOLO-ResNet50

Abstract

1. Introduction

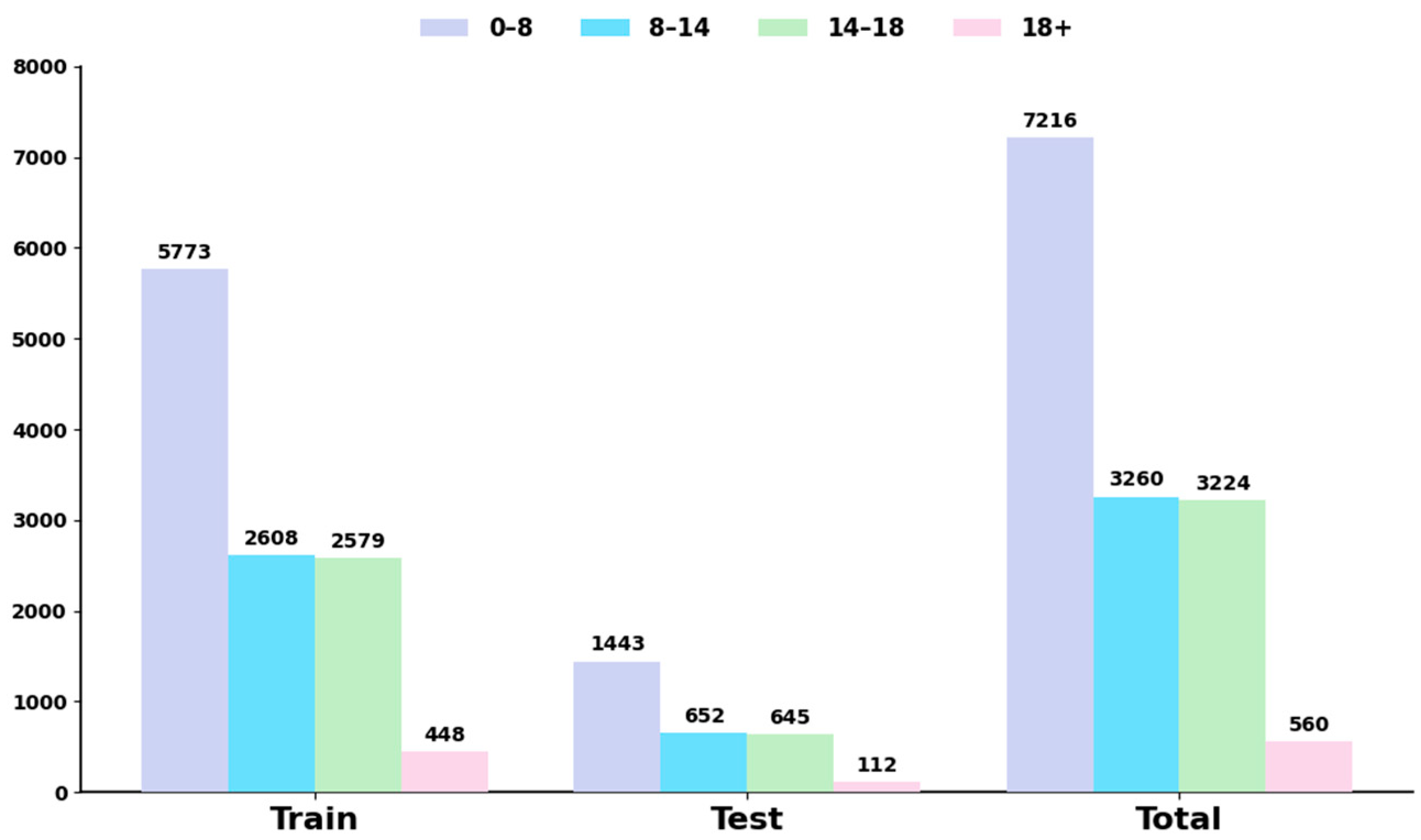

- Specialised dataset for law enforcement scenarios: We have constructed the Juvenile Protection Surveillance and Detection Dataset (JPSDD), which covers all growth stages from birth to 18 years of age. This dataset incorporates real-world data, including surveillance footage and location-specific images, to provide a standardised benchmark for training and evaluating algorithms in practical policing applications.

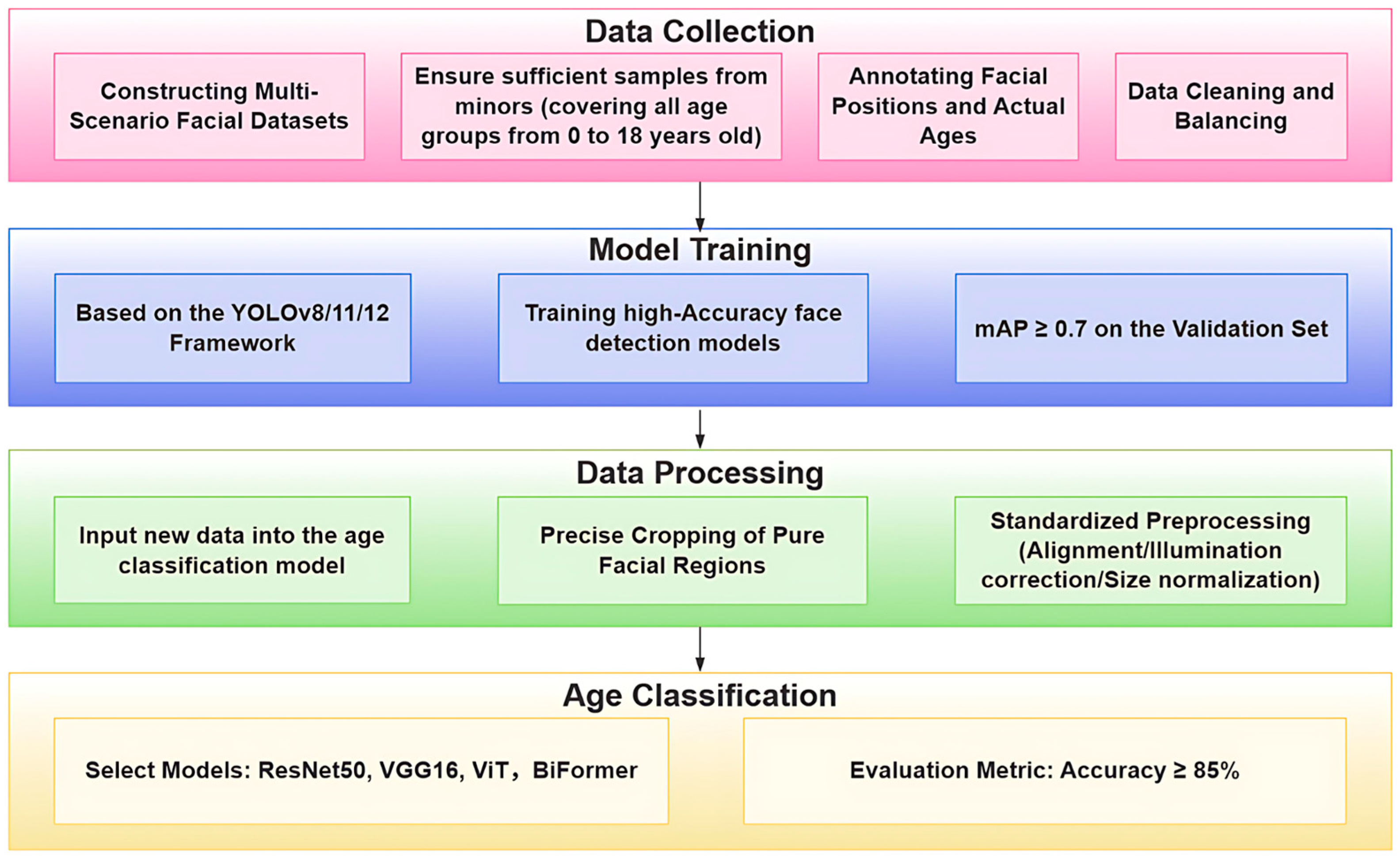

- Cascaded ‘Detection–Cropping–Classification’ Framework: We propose a novel three-stage framework: (1) accurate localisation of facial regions via object detection; (2) elimination of background interference through adaptive cropping; and (3) fine-grained age classification based on deep features. To optimise performance, we systematically compared three detection models (YOLOv8, YOLOv11 and YOLOv12) and four classification models (VGG16, ResNet50, ViT and BiFormer), and used the experimental results to select the combination that gives the most accurate and robust cross-age estimation.

- Grad-CAM-Enhanced Interpretability: We use Gradient-weighted Class Activation Mapping (Grad-CAM) to generate visual heatmaps that highlight the facial regions on which the model focuses. These heatmaps reveal critical age-distinguishing features, allow the plausibility of model predictions to be checked, and support a comparison of how different network architectures extract features. In particular, they illustrate the distinct decision-making mechanisms of CNN- and ViT-based models.
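
The cascaded framework described in the contributions above can be sketched as plain control flow. This is a minimal illustration under stated assumptions, not the authors' implementation: the detector and classifier are passed in as callables (stand-ins for a YOLO detector and a ResNet50-style classifier), the image is a nested list rather than a real array, and the `margin` padding rule is a hypothetical placeholder because the exact adaptive cropping rule is not specified here.

```python
from typing import Callable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]    # (x1, y1, x2, y2) in pixel coordinates
Image = Sequence[Sequence[int]]    # rows of pixels; stand-in for a real image array


def expand_and_clamp(box: Box, width: int, height: int, margin: float = 0.1) -> Box:
    """Pad a detected box by `margin` of its size and clamp it to the image bounds.

    The margin rule is an assumption made for illustration only.
    """
    x1, y1, x2, y2 = box
    pad_x = int(round((x2 - x1) * margin))
    pad_y = int(round((y2 - y1) * margin))
    return (max(0, x1 - pad_x), max(0, y1 - pad_y),
            min(width, x2 + pad_x), min(height, y2 + pad_y))


def crop(image: Image, box: Box) -> List[List[int]]:
    """Cut the region of interest out of the image, discarding the background."""
    x1, y1, x2, y2 = box
    return [list(row[x1:x2]) for row in image[y1:y2]]


def estimate_ages(image: Image,
                  detect: Callable[[Image], List[Box]],
                  classify: Callable[[List[List[int]]], str],
                  margin: float = 0.1) -> List[Tuple[Box, str]]:
    """Stage 1: detect faces. Stage 2: crop each face. Stage 3: classify its age group."""
    height, width = len(image), len(image[0])
    results = []
    for box in detect(image):
        roi = expand_and_clamp(box, width, height, margin)
        results.append((roi, classify(crop(image, roi))))
    return results


# Toy usage with stub models (hypothetical stand-ins for the trained networks).
image = [[0] * 10 for _ in range(10)]
fake_detector = lambda img: [(2, 2, 6, 6)]
fake_classifier = lambda face: "18+"
predictions = estimate_ages(image, fake_detector, fake_classifier, margin=0.25)
```

Passing the two models in as callables keeps the cropping logic independent of any particular detection or classification library, which mirrors how the detectors and classifiers are compared interchangeably in the experiments.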

2. Related Works

2.1. Research into Algorithms for Facial Age Estimation

2.2. Fairness Research in Age Estimation

2.3. Privacy Protection Technologies in Biometric Applications

2.4. Technical Applications in Juvenile Protection

2.5. Summary of Research Gaps

3. Materials and Methods

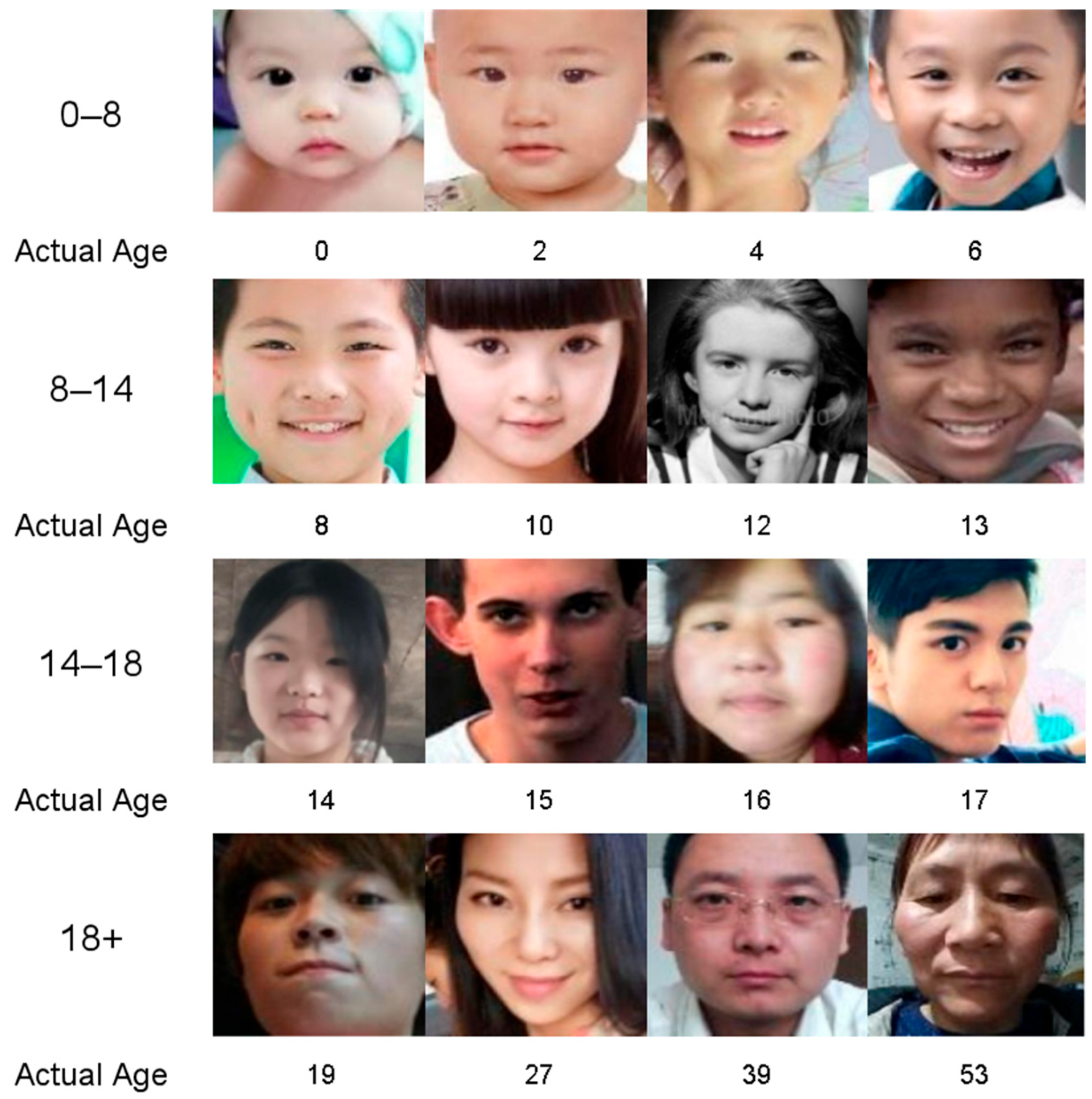

3.1. Dataset Collection and Preprocessing

3.2. Age Classification Criteria

3.3. Related Technology Overview

3.3.1. Introduction to Face Image Detection Models

3.3.2. Introduction to Facial Age Estimation Models

3.4. Evaluation Metrics

4. Results and Discussions

4.1. Experimental Environment Setup

4.2. Comparison and Analysis of Experimental Results

4.2.1. Comparative Performance of Object Detection Methods

4.2.2. Performance Comparison in Age Classification

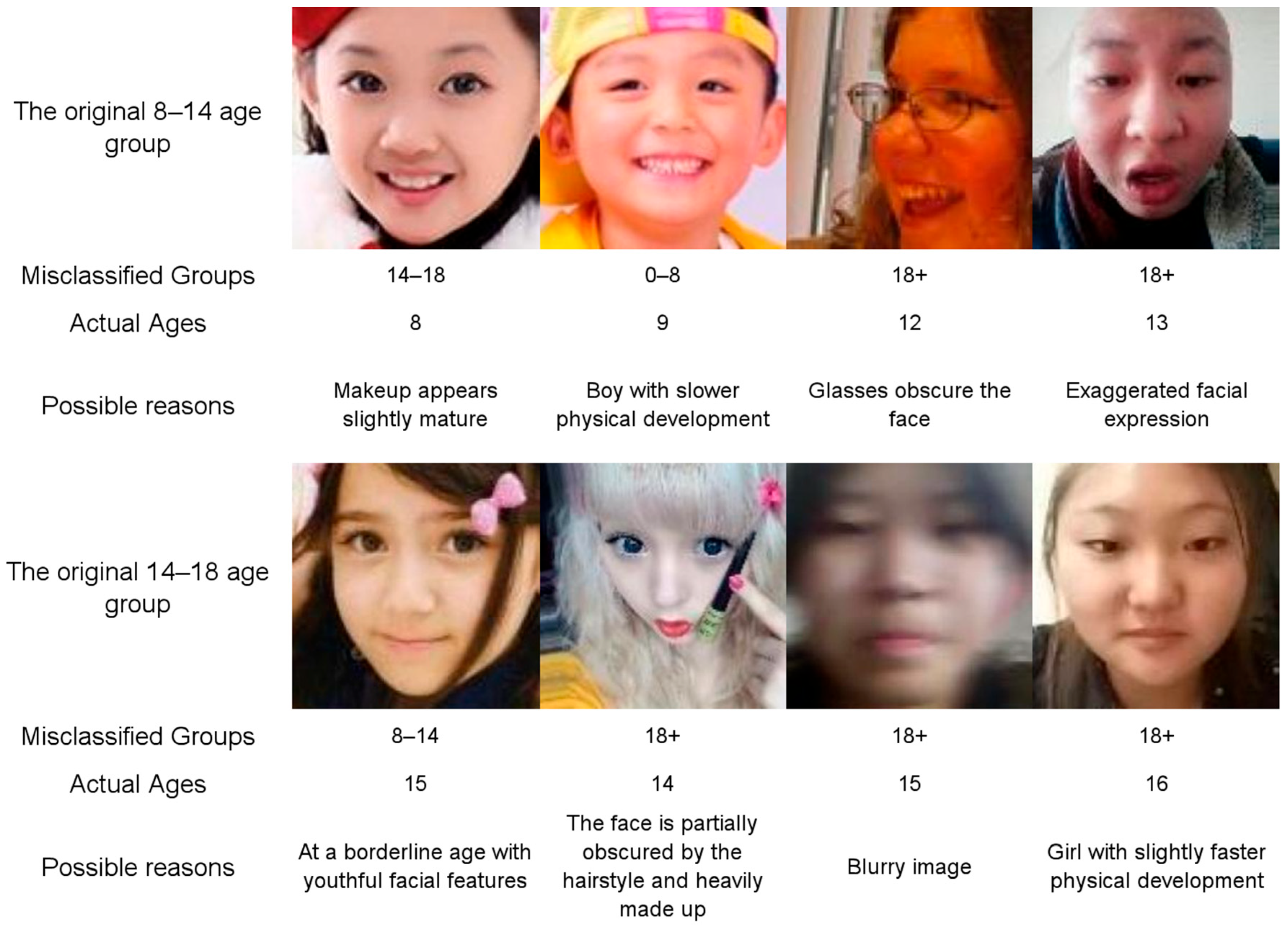

Poor Performance in the 8–14 and 14–18 Age Groups

In Traditional CNN Models, the ResNet50 Model Outperforms the VGG16 Model

The ViT Model Performs Worse than Traditional CNNs

BiFormer’s Performance Is Intermediate Between ResNet50 and VGG16

4.2.3. Model Visualization
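
Grad-CAM, the visualisation method named in the contributions, weights each feature map of a chosen convolutional layer by the spatial average of the class-score gradient, sums the weighted maps and applies a ReLU. The sketch below follows that standard formulation in plain Python, with nested lists standing in for tensors. It assumes the activations and gradients have already been extracted from the network; `grad_cam` and its argument names are illustrative and not taken from the authors' code.

```python
from typing import List

FeatureMap = List[List[float]]  # one H x W channel


def grad_cam(activations: List[FeatureMap], gradients: List[FeatureMap]) -> FeatureMap:
    """Return a heatmap in [0, 1] from K activation maps and their gradients."""
    channels = len(activations)
    height = len(activations[0])
    width = len(activations[0][0])

    # Channel weights: global average pooling of the gradients.
    weights = [sum(sum(row) for row in grad) / (height * width) for grad in gradients]

    # Weighted sum over channels, followed by ReLU to keep positive evidence only.
    cam = [[max(0.0, sum(weights[c] * activations[c][i][j] for c in range(channels)))
            for j in range(width)]
           for i in range(height)]

    # Normalise to [0, 1] so the map can be overlaid on the face crop.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[value / peak for value in row] for row in cam]
    return cam


# Toy example: channel 0 supports the class, channel 1 opposes it.
acts = [[[1.0, 0.0], [0.0, 0.0]],
        [[0.0, 0.0], [0.0, 1.0]]]
grads = [[[1.0, 1.0], [1.0, 1.0]],
         [[-1.0, -1.0], [-1.0, -1.0]]]
heatmap = grad_cam(acts, grads)
```

In the toy example only the top-left location stays active, because the second channel receives a negative weight and its contribution is removed by the ReLU.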

4.2.4. Optimization of Classification Model

5. Conclusions and Future Work

5.1. Research Conclusions

5.2. Improvements and Future Work

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Dataset | Total Number of Images | Number of Juvenile Images |
|---|---|---|
| UTKFace | 20,000+ | 4352 |
| AgeDB | 16,488 | 303 |
| APPA-REAL | 7591 | 1417 |
| MegaAge | 41,941 | 7009 |
| FG-NET | 1002 | 619 |

| Parameter | Value |
|---|---|
| Epochs | 100 |
| Batch size | 32 |
| Initial learning rate | 0.01 |
| Input image size (pixels) | 640 × 640 |

| Parameter | Value |
|---|---|
| Epochs | 100 |
| Batch size | 64 |
| Initial learning rate | 0.001 |
| Input image size (pixels) | 224 × 224 |

| Model | Precision (%) | Recall (%) | F1 Score (%) | mAP@0.5 (%) |
|---|---|---|---|---|
| YOLOv8 | 82.7 | 79.1 | 80.9 | 84.0 |
| YOLOv11 | 84.7 | 81.0 | 82.8 | 87.5 |
| YOLOv12 | 84.1 | 80.2 | 82.1 | 87.2 |
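
As a consistency check on the detection results above, the F1 column can be recomputed from the precision and recall columns. The sketch assumes the standard definition of F1 as the harmonic mean of precision and recall; `f1_score` is an illustrative helper name, and the input values are copied from the table.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both given in percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs taken from the detection results table.
detection_results = {
    "YOLOv8": (82.7, 79.1),
    "YOLOv11": (84.7, 81.0),
    "YOLOv12": (84.1, 80.2),
}

recomputed = {model: round(f1_score(p, r), 1)
              for model, (p, r) in detection_results.items()}
# recomputed == {"YOLOv8": 80.9, "YOLOv11": 82.8, "YOLOv12": 82.1}
```

The recomputed values match the reported F1 scores to one decimal place.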

| Model | Group | Precision (%) | Recall (%) | F1 Score (%) | Accuracy (%) |
|---|---|---|---|---|---|
| VGG16 | 0–8 | 83.04 | 88.02 | 85.45 | 62.61 |
| VGG16 | 8–14 | 56.01 | 33.14 | 41.64 | |
| VGG16 | 14–18 | 57.28 | 34.69 | 43.21 | |
| VGG16 | 18+ | 90.34 | 94.59 | 92.42 | |
| ResNet50 | 0–8 | 84.77 | 89.17 | 86.91 | 64.37 |
| ResNet50 | 8–14 | 55.19 | 35.95 | 43.54 | |
| ResNet50 | 14–18 | 59.51 | 37.19 | 45.78 | |
| ResNet50 | 18+ | 93.80 | 95.17 | 94.48 | |
| ViT | 0–8 | 81.81 | 83.31 | 82.55 | 51.83 |
| ViT | 8–14 | 34.31 | 15.70 | 21.54 | |
| ViT | 14–18 | 39.62 | 16.31 | 23.11 | |
| ViT | 18+ | 84.42 | 92.00 | 88.05 | |
| BiFormer | 0–8 | 82.69 | 93.63 | 87.82 | 59.12 |
| BiFormer | 8–14 | 48.07 | 23.08 | 31.18 | |
| BiFormer | 14–18 | 48.47 | 24.96 | 32.95 | |
| BiFormer | 18+ | 86.55 | 94.82 | 90.50 | |

| Model | Group | Precision (%) | Recall (%) | F1 Score (%) | Accuracy (%) |
|---|---|---|---|---|---|
| ResNet50 | 0–8 | 84.77 | 89.17 | 86.91 | 64.37 |
| ResNet50 | 8–14 | 55.19 | 35.95 | 43.54 | |
| ResNet50 | 14–18 | 59.51 | 37.19 | 45.78 | |
| ResNet50 | 18+ | 93.80 | 95.17 | 94.48 | |
| ResNet50 | 14− | 85.48 | 84.23 | 84.85 | 85.95 |
| ResNet50 | 14+ | 90.94 | 91.71 | 91.32 | |
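
The table above contrasts a four-group and a two-group labelling for ResNet50. One way to see why a coarser labelling can raise accuracy is to map the four groups onto two and re-score the same predictions. The mapping below (0–8 and 8–14 to 14−, 14–18 and 18+ to 14+) is an assumption inferred from the group names, the labels and predictions are toy data, and the reported two-group model may well have been retrained rather than re-scored in this way.

```python
from typing import Dict, List

# Assumed mapping from the four age groups to the two coarser groups.
FOUR_TO_TWO: Dict[str, str] = {
    "0-8": "14-",
    "8-14": "14-",
    "14-18": "14+",
    "18+": "14+",
}


def merge_labels(labels: List[str]) -> List[str]:
    """Map four-group labels onto the two-group scheme."""
    return [FOUR_TO_TWO[label] for label in labels]


def accuracy(y_true: List[str], y_pred: List[str]) -> float:
    """Fraction of samples whose predicted label equals the true label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Toy data: both errors confuse neighbouring groups on the same side of 14.
y_true = ["0-8", "8-14", "14-18", "18+"]
y_pred = ["8-14", "8-14", "18+", "18+"]

four_group_accuracy = accuracy(y_true, y_pred)
two_group_accuracy = accuracy(merge_labels(y_true), merge_labels(y_pred))
```

In the toy data the four-group accuracy is 0.5 and the two-group accuracy is 1.0, because confusions between adjacent groups on the same side of the 14-year boundary no longer count as errors.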

| Model | Precision (%) | Recall (%) | F1 Score (%) | Accuracy (%) |
|---|---|---|---|---|
| ResNet50 | 89.72 | 88.11 | 88.90 | 64.37 |
| CBAM-ResNet50 | 84.15 | 86.40 | 85.26 | 61.71 |
| CA-ResNet50 | 92.64 | 88.92 | 90.74 | 65.66 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the International Institute of Knowledge Innovation and Invention. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wu, Y.; Gao, Q.; Lin, Y.; Yang, Z.; Wang, X. Enhancing the Effectiveness of Juvenile Protection: Deep Learning-Based Facial Age Estimation via JPSD Dataset Construction and YOLO-ResNet50. Appl. Syst. Innov. 2025, 8, 185. https://doi.org/10.3390/asi8060185
