LCxNet: An Explainable CNN Framework for Lung Cancer Detection in CT Images Using Multi-Optimizer and Visual Interpretability

Abstract

1. Introduction

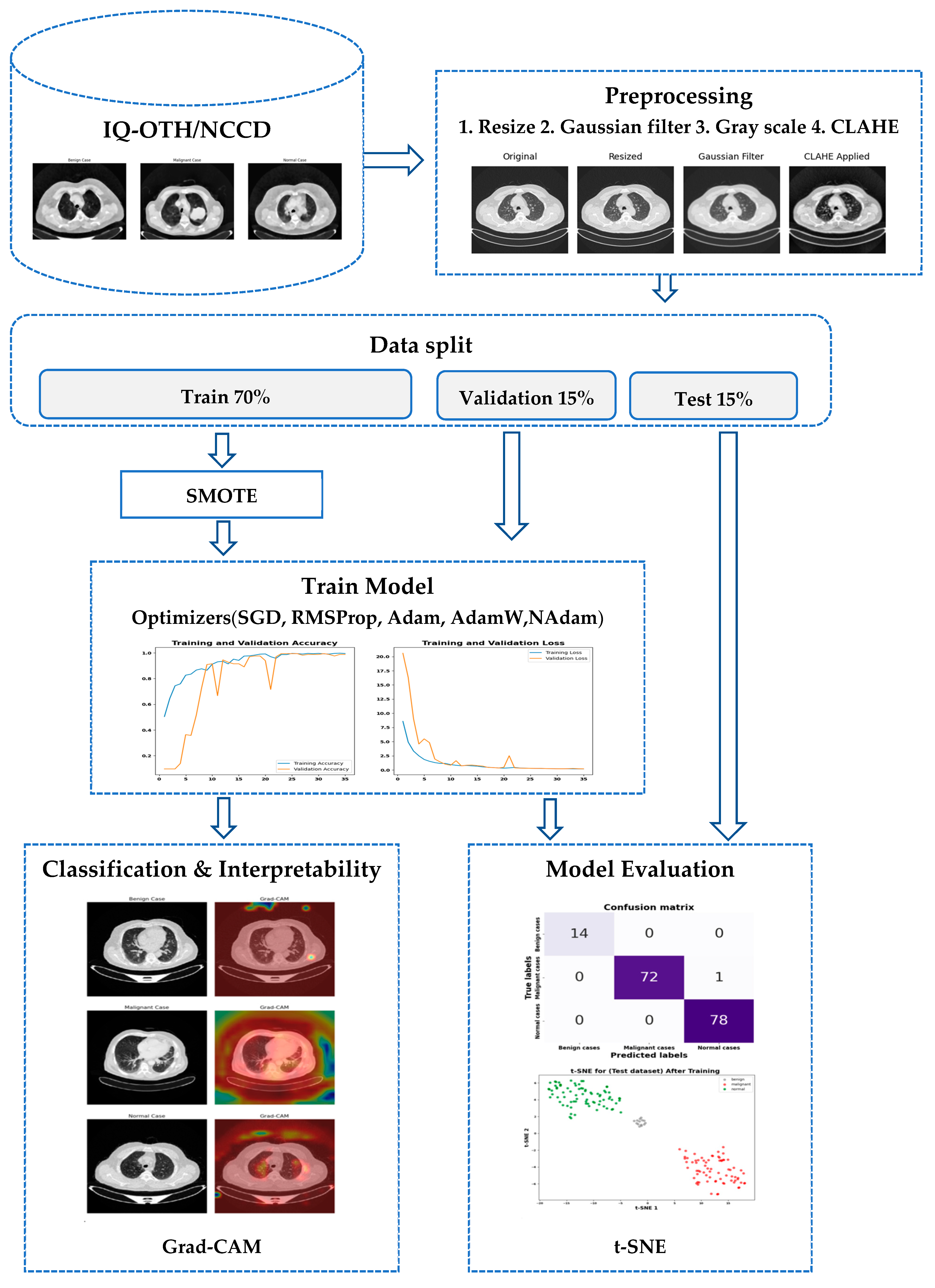

- A lightweight and effective CNN model (LCxNet) is developed to classify CT scans into normal, benign, and malignant categories with high precision.

- The LCxNet model is trained and evaluated using five widely adopted optimization algorithms, SGD, RMSProp, Adam, AdamW, and NAdam, providing comparative insights into training stability and performance.

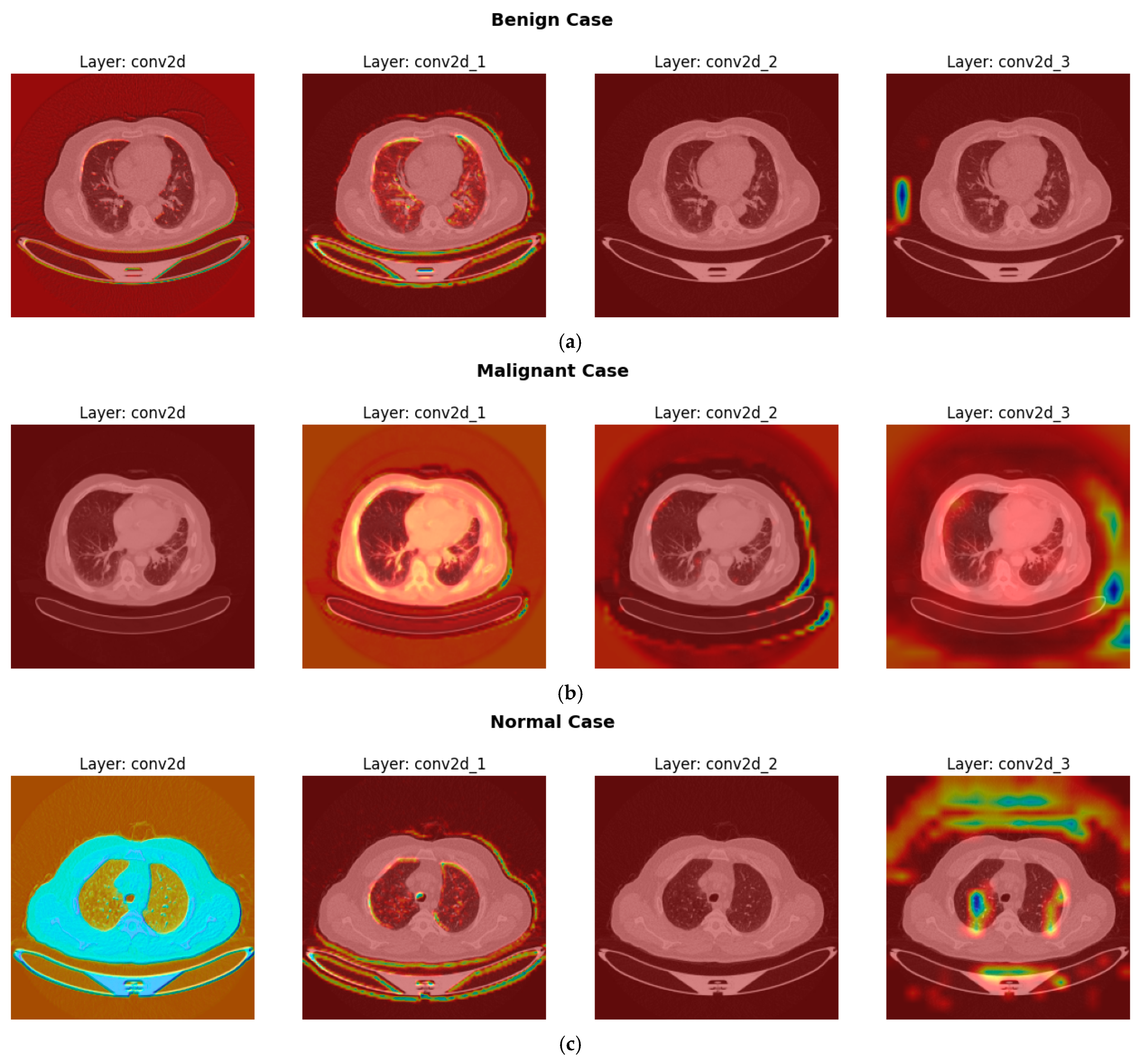

- To enhance interpretability and clinical trust, explainability techniques such as Gradient-weighted Class Activation Mapping (Grad-CAM) are utilized to visualize critical diagnostic regions within CT images that influence the model’s predictions.

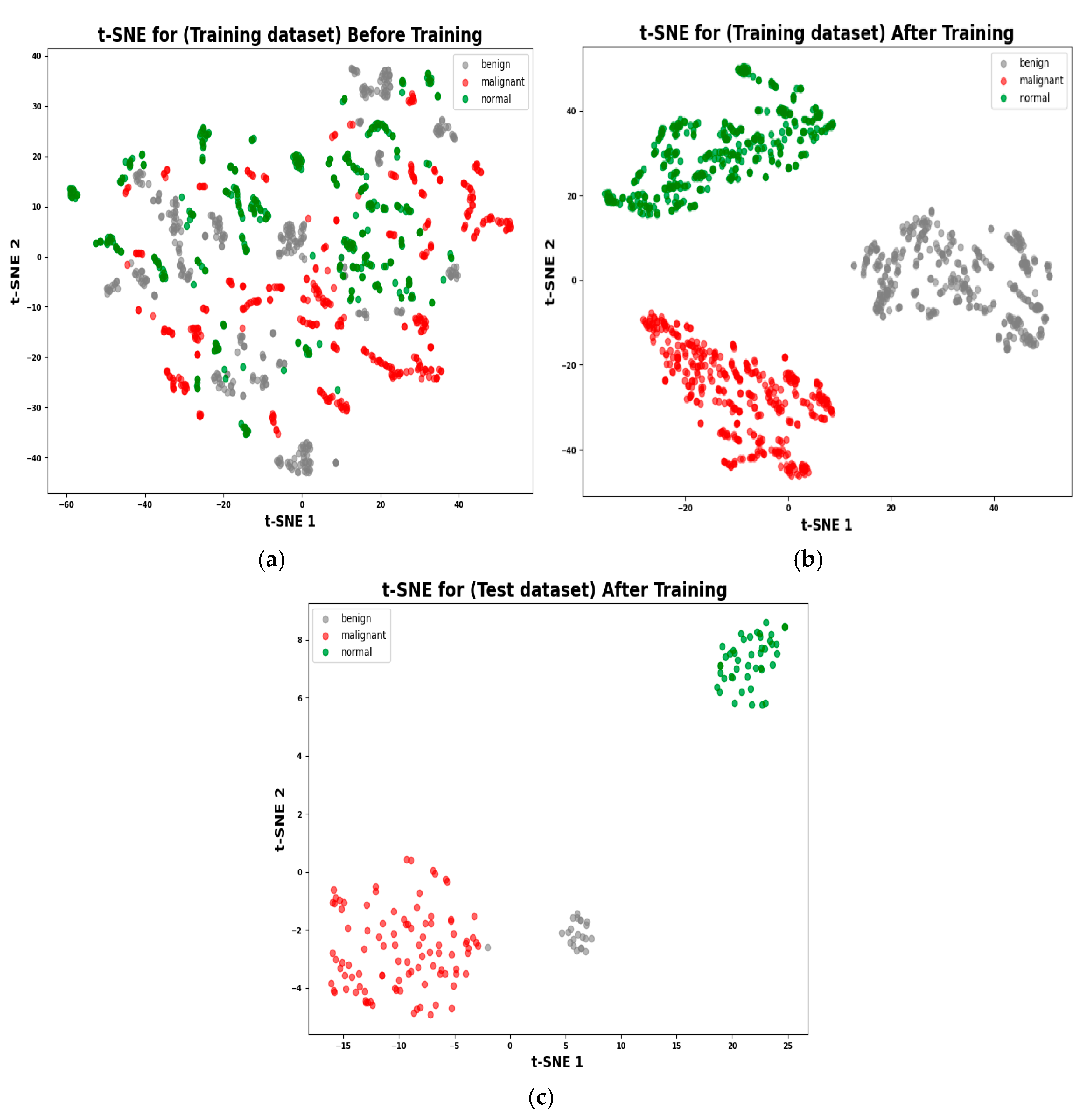

- The study investigates the learned feature representations using t-distributed Stochastic Neighbor Embedding (t-SNE) and histogram visualizations, facilitating a deeper understanding of feature separability and class distribution, thereby shedding light on the model’s internal decision-making process.

2. Related Work

3. Materials and Methods

3.1. Data Acquisition and Preparation

3.1.1. Dataset

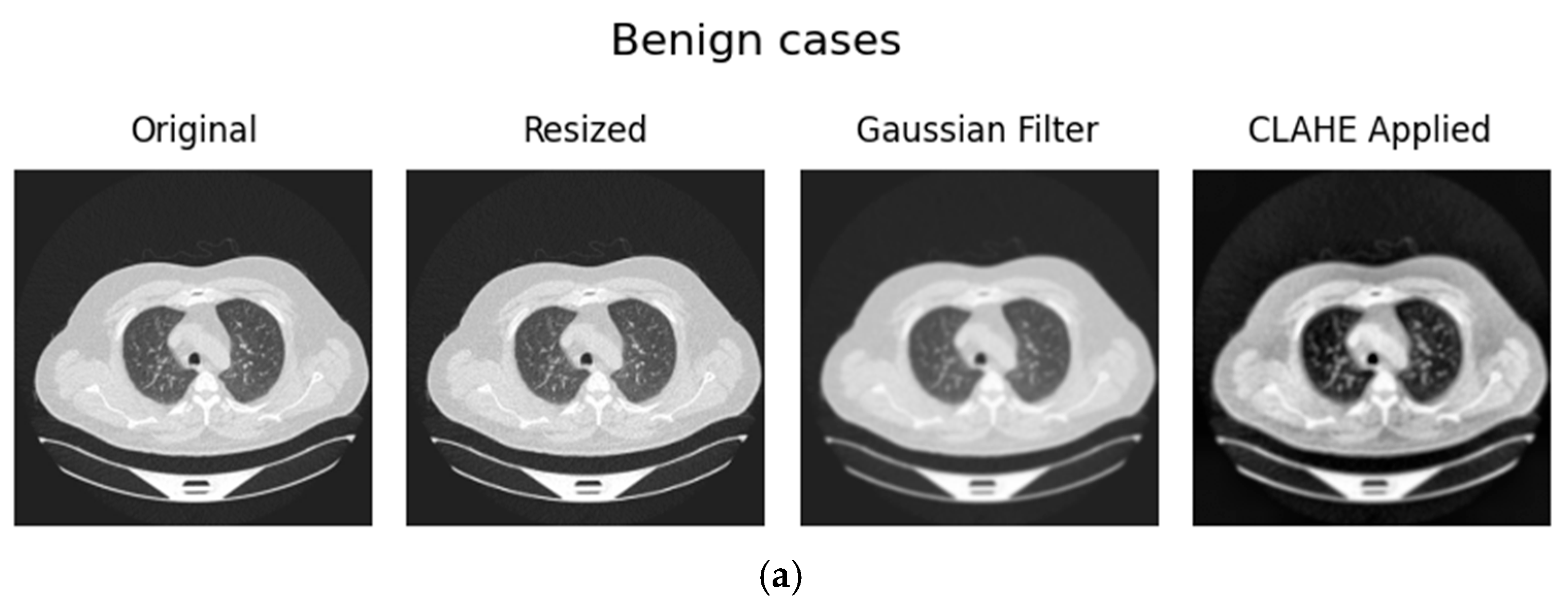

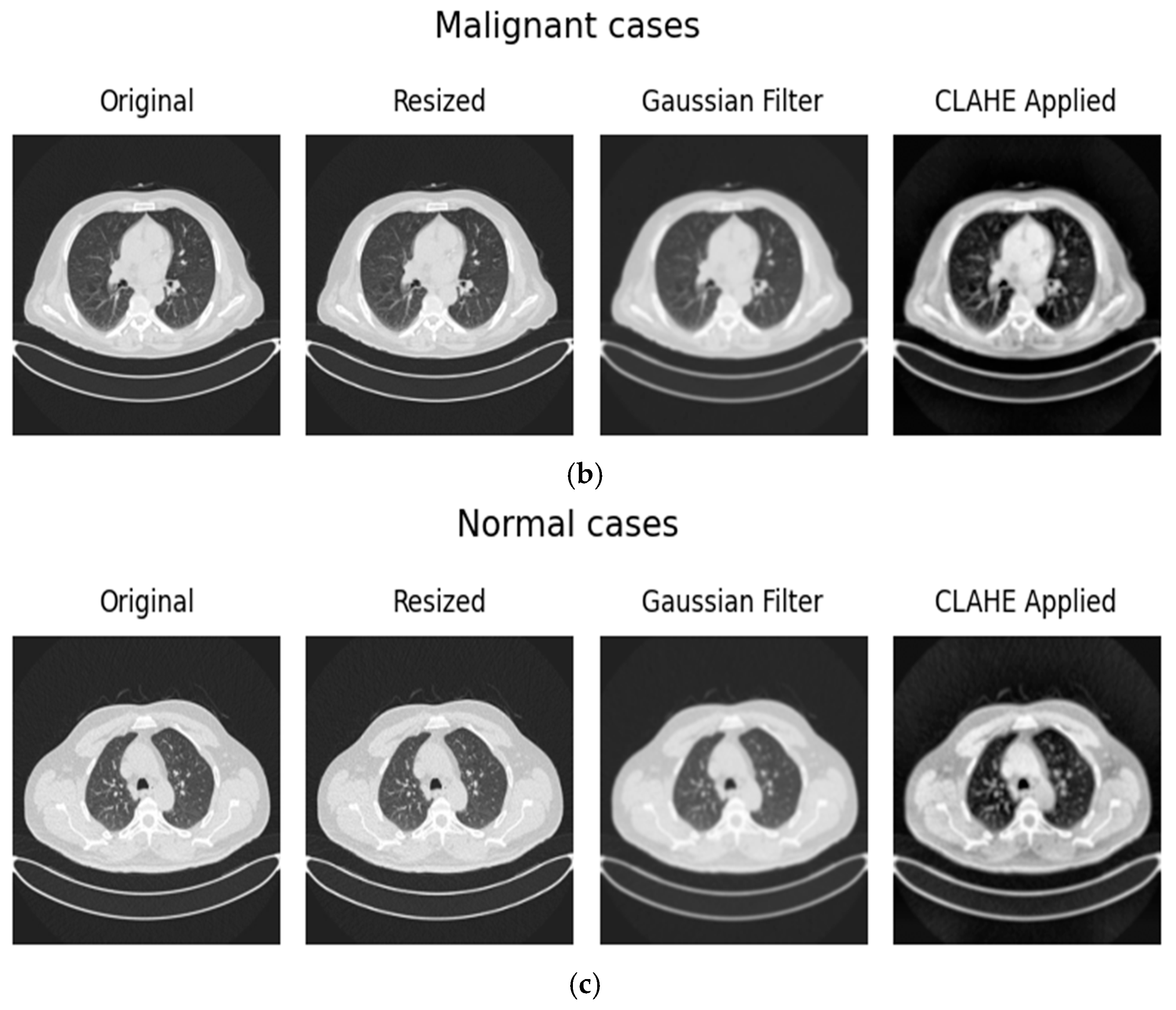

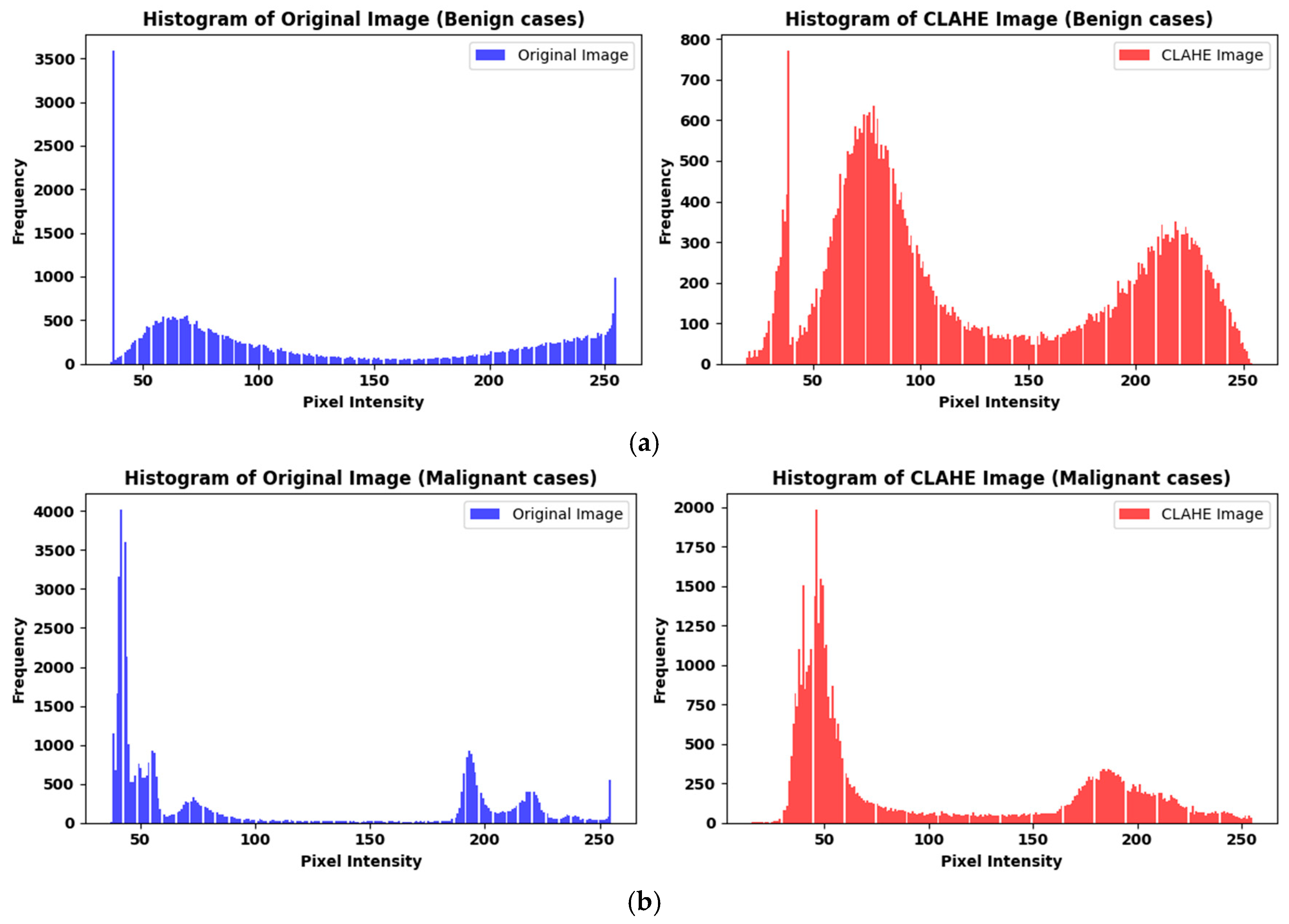

3.1.2. Preprocessing

3.1.3. Dataset Split

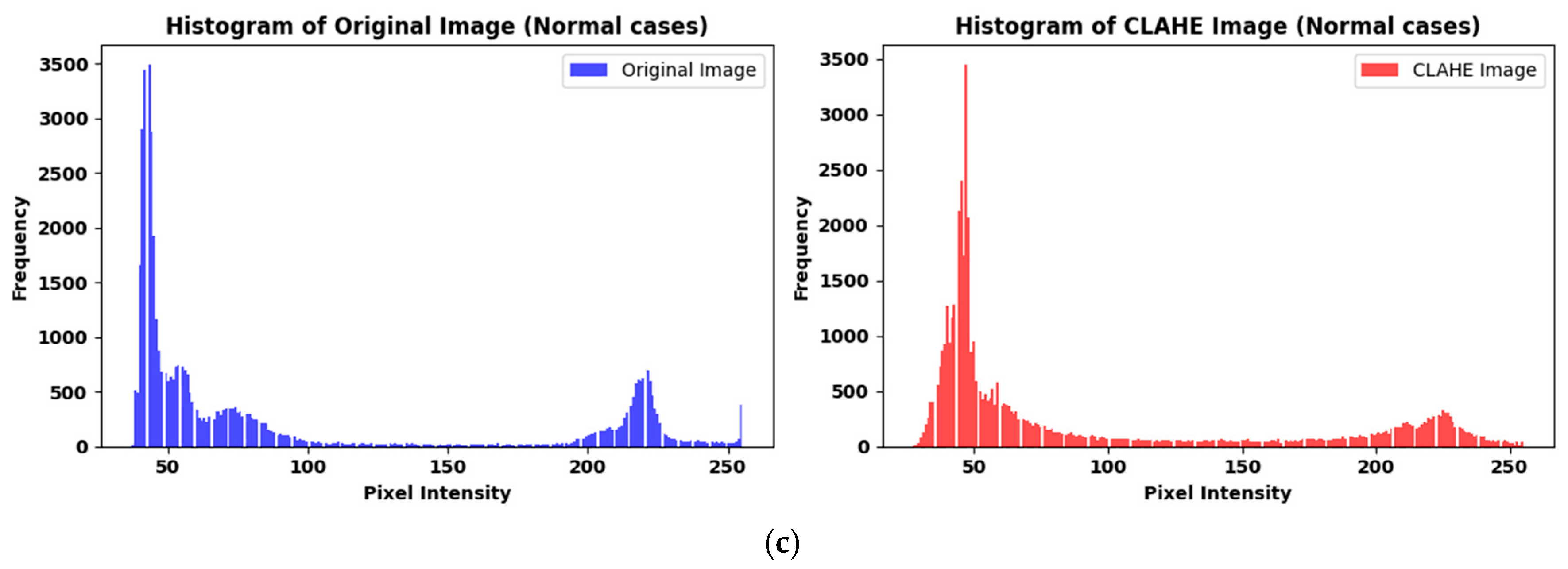

3.1.4. Strategy to Handle Data Imbalance

3.2. Model Architecture and Training Approach

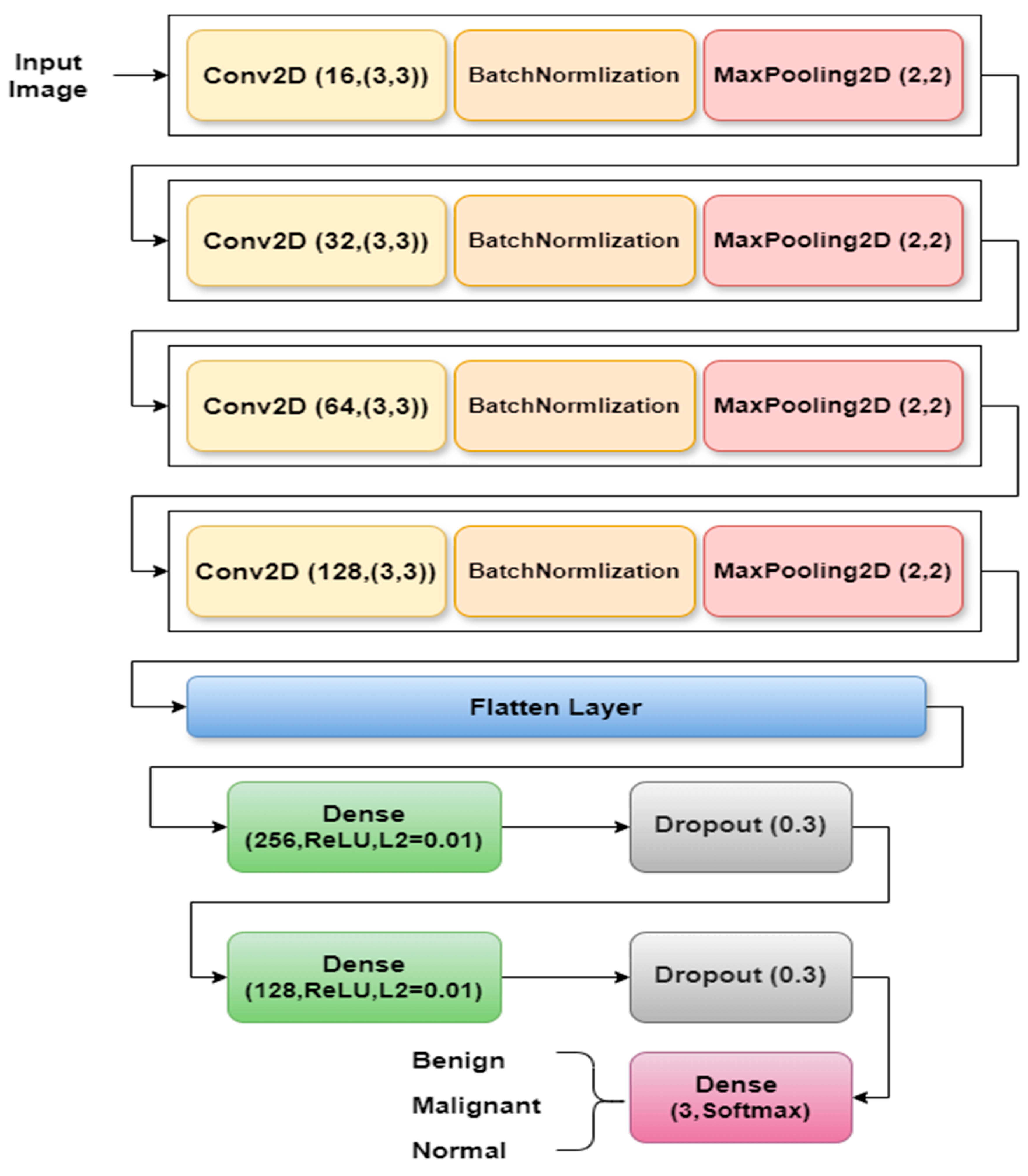

3.2.1. LCxNet Model Implementation

- Block 1 consists of a 3 × 3 Conv2D layer with 16 filters, producing an output of 218 × 218 × 16 with 160 parameters. Next comes BatchNormalization, which stabilizes activations with 64 parameters, and a 2 × 2 MaxPooling2D layer that reduces the output to 109 × 109 × 16.

- Block 2 comprises a 3 × 3 Conv2D layer with 32 filters, resulting in an output of 107 × 107 × 32 with 4640 parameters. It is followed by a BatchNormalization layer with 128 parameters and a MaxPooling2D layer, which reduces the output to 53 × 53 × 32.

- Block 3 features a 3 × 3 Conv2D layer with 64 filters, resulting in an output of 51 × 51 × 64 with 18,496 parameters. BatchNormalization follows this with 256 parameters and a MaxPooling2D layer, which reduces the output to 25 × 25 × 64.

- Block 4 consists of a 3 × 3 Conv2D layer with 128 filters, generating an output of 23 × 23 × 128 with 73,856 parameters. It also includes BatchNormalization with 512 parameters and a MaxPooling2D layer, which reduces the output to 11 × 11 × 128.
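
The output shapes and parameter counts quoted for the four blocks follow from the usual formulas for unpadded convolutions, batch normalization, and 2 × 2 pooling. The sketch below recomputes them in plain Python as a sanity check. It assumes a 220 × 220 single-channel input, "valid" padding with stride 1, and four parameters per BatchNormalization channel; these assumptions are inferred from the reported numbers, and the helper names `conv_block` and `summarize` are purely illustrative.

```python
def conv_block(size, in_ch, out_ch, kernel=3, pool=2):
    """Shapes and parameter counts for Conv2D -> BatchNormalization -> MaxPooling2D."""
    conv_size = size - kernel + 1                          # assumed 'valid' padding, stride 1
    conv_params = (kernel * kernel * in_ch + 1) * out_ch   # kernel weights plus one bias per filter
    bn_params = 4 * out_ch                                 # gamma, beta, moving mean, moving variance
    pooled_size = conv_size // pool                        # 2x2 pooling floors odd sizes
    return conv_size, conv_params, bn_params, pooled_size


def summarize(input_size=220, input_channels=1, filters=(16, 32, 64, 128)):
    """Walk through the four blocks and collect their shapes and parameter counts."""
    rows = []
    size, channels = input_size, input_channels
    for out_ch in filters:
        conv_size, conv_params, bn_params, pooled = conv_block(size, channels, out_ch)
        rows.append({
            "conv_shape": (conv_size, conv_size, out_ch),
            "conv_params": conv_params,
            "bn_params": bn_params,
            "pooled_shape": (pooled, pooled, out_ch),
        })
        size, channels = pooled, out_ch
    return rows


rows = summarize()
for index, row in enumerate(rows, start=1):
    print(f"Block {index}: {row}")
```

Under these assumptions the printed shapes and counts can be compared line by line against the four bullets above.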

3.2.2. Optimization Strategy

- SGD (Stochastic Gradient Descent) with momentum (0.9): By damping oscillations during training, momentum helps stabilize and speed up convergence, though further fine-tuning may be required for best results. The update is given in Equations (1) and (2), where $\theta_t$ are the parameters at iteration $t$, $\beta$ is the momentum decay rate (typically 0.9), $\alpha$ is the learning rate, and $\nabla_\theta J(\theta_t)$ is the gradient of the loss function with respect to the parameters $\theta$ at time step $t$.

- RMSprop (Root Mean Square Propagation): It handles sequential tasks and models prone to noisy gradients well because it dynamically adjusts learning rates based on recent gradients, which promotes steady updates during training. The update is shown in Equations (3) and (4), where $\epsilon$ is a small constant (e.g., $1 \times 10^{-8}$) that prevents division by zero.

- Adam (Adaptive Moment Estimation): This optimizer is flexible and effective across a variety of tasks because it combines the advantages of adaptive learning rates and momentum. It maintains a first-moment estimate (momentum), given in Equation (5), and a second-moment estimate (a running average of squared gradients, as in RMSprop), given in Equation (6).

- AdamW (Adaptive Moment Estimation with Weight Decay): By incorporating decoupled weight decay regularization, this variant of Adam improves generalization and reduces overfitting [41]. Equations (5)–(8) are used, and the parameters are updated by subtracting the adaptive gradient step and a scaled weight decay term, as seen in Equation (10).

- NAdam (Nesterov-accelerated Adaptive Moment Estimation): NAdam combines the adaptive moment estimation of Adam with Nesterov accelerated gradients (NAG), which introduce a “lookahead” gradient step to improve convergence speed and stability [42]. Unlike Adam, which uses the current gradient to update parameters, NAdam incorporates the gradient at the anticipated next position, providing a more responsive momentum update. The moment estimates remain the same as in Adam (Equations (6) and (8)); however, the parameter update uses a lookahead gradient estimate, as seen in Equation (11).
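
To make the differences between the five update rules concrete, here is a minimal sketch of one scalar step per optimizer, written in plain Python. It uses common textbook forms, which may differ in notation or detail from Equations (1)–(11); in particular, the NAdam step is the simplified form without a momentum-decay schedule. The toy objective f(θ) = θ², the step size of 0.01, the weight-decay value, and all function names are assumptions chosen for illustration only.

```python
import math


def sgd_momentum(theta, grad, state, lr=0.01, beta=0.9):
    # Velocity accumulates past gradients; parameters move against the velocity.
    state["v"] = beta * state.get("v", 0.0) + grad
    return theta - lr * state["v"]


def rmsprop(theta, grad, state, lr=0.01, rho=0.9, eps=1e-8):
    # Running average of squared gradients rescales the step per parameter.
    state["s"] = rho * state.get("s", 0.0) + (1 - rho) * grad * grad
    return theta - lr * grad / (math.sqrt(state["s"]) + eps)


def _adam_moments(grad, state, b1, b2):
    # Shared by Adam, AdamW, and NAdam: bias-corrected first and second moments.
    t = state["t"] = state.get("t", 0) + 1
    m = state["m"] = b1 * state.get("m", 0.0) + (1 - b1) * grad
    v = state["v"] = b2 * state.get("v", 0.0) + (1 - b2) * grad * grad
    return m / (1 - b1 ** t), v / (1 - b2 ** t), t


def adam(theta, grad, state, lr=0.01, b1=0.9, b2=0.999, eps=1e-8):
    m_hat, v_hat, _ = _adam_moments(grad, state, b1, b2)
    return theta - lr * m_hat / (math.sqrt(v_hat) + eps)


def adamw(theta, grad, state, lr=0.01, b1=0.9, b2=0.999, eps=1e-8, weight_decay=0.01):
    # Decoupled weight decay: the decay term is not passed through the moments.
    m_hat, v_hat, _ = _adam_moments(grad, state, b1, b2)
    return theta - lr * (m_hat / (math.sqrt(v_hat) + eps) + weight_decay * theta)


def nadam(theta, grad, state, lr=0.01, b1=0.9, b2=0.999, eps=1e-8):
    # Simplified NAdam: blend the corrected momentum with the current gradient.
    m_hat, v_hat, t = _adam_moments(grad, state, b1, b2)
    lookahead = b1 * m_hat + (1 - b1) * grad / (1 - b1 ** t)
    return theta - lr * lookahead / (math.sqrt(v_hat) + eps)


def minimize(step, theta=1.0, steps=1000):
    """Run one optimizer on the toy objective f(theta) = theta**2."""
    state = {}
    for _ in range(steps):
        grad = 2.0 * theta          # derivative of theta**2
        theta = step(theta, grad, state)
    return theta


results = {fn.__name__: minimize(fn) for fn in (sgd_momentum, rmsprop, adam, adamw, nadam)}
for name, value in results.items():
    print(f"{name:>12}: theta = {value:+.6f}")
```

All five rules drive θ toward the minimum at zero on this toy problem; the interesting differences (step normalization, decoupled decay, lookahead) are visible in the bodies of the step functions rather than in the final values.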

3.2.3. The t-SNE Visualization

3.2.4. Explainable Artificial Intelligence (XAI) Techniques

- Compute Gradients: Calculate the gradients of the target class score with respect to the feature maps of a specific convolutional layer, as shown in Equation (13), where $A^{k}_{ij}$ is the activation at spatial location $(i, j)$ in the $k$-th feature map.

- Global Average Pooling (GAP) for Weights: Compute the neuron importance weight $\alpha^{c}_{k}$ for each feature map $k$, as shown in Equation (14), by global-average-pooling the gradients calculated in step 1. Here $Z$ is the total number of spatial locations in the feature map (i.e., its width times its height). These weights represent the “importance” of feature map $k$ for the target class $c$.

- Weighted Sum of Feature Maps: Compute the ReLU-activated weighted sum of the feature maps using the calculated weights $\alpha^{c}_{k}$. This produces the raw Grad-CAM heatmap, as illustrated in Equation (15). The ReLU is applied because we are typically interested in features that positively influence the class score.

- Upsampling and Superimposition: The resulting heatmap is typically low-resolution (same as the feature map). It is then upsampled to the original input image size and superimposed onto the input image to visualize the regions of interest that most influenced the CNN’s decision for the target class. These visualizations appear as “heatmaps.”
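
The four steps above can be sketched on a toy example. The code below is a minimal pure-Python illustration, not the authors' implementation: the gradients are supplied as fixed numbers rather than obtained by automatic differentiation, the upsampling is nearest-neighbour instead of the smoother interpolation normally used, and the 2 × 2 feature maps and all function names are made up for the example.

```python
def grad_cam(activations, gradients):
    """activations[k][i][j] is A^k_ij; gradients[k][i][j] is d(score_c)/dA^k_ij."""
    n_maps = len(activations)
    height, width = len(activations[0]), len(activations[0][0])
    z = height * width
    # Step 2: global-average-pool the gradients, one weight per feature map.
    weights = [sum(map(sum, gradients[k])) / z for k in range(n_maps)]
    # Step 3: ReLU of the weighted sum of the feature maps.
    heatmap = [
        [max(0.0, sum(weights[k] * activations[k][i][j] for k in range(n_maps)))
         for j in range(width)]
        for i in range(height)
    ]
    return weights, heatmap


def upsample_nearest(heatmap, factor):
    """Step 4 (simplified): enlarge the heatmap by an integer factor."""
    return [
        [heatmap[i // factor][j // factor] for j in range(len(heatmap[0]) * factor)]
        for i in range(len(heatmap) * factor)
    ]


def normalize(heatmap):
    """Scale to [0, 1] so the map can be blended over the input image."""
    peak = max(max(row) for row in heatmap)
    return [[value / peak if peak > 0 else 0.0 for value in row] for row in heatmap]


# Toy inputs: two 2x2 feature maps and their (hypothetical) gradients.
activations = [[[1.0, 2.0], [3.0, 4.0]], [[1.0, 1.0], [1.0, 1.0]]]
gradients = [[[1.0, 1.0], [1.0, 1.0]], [[-2.0, -2.0], [-2.0, -2.0]]]

weights, cam = grad_cam(activations, gradients)
overlay_mask = normalize(upsample_nearest(cam, 2))
print("weights:", weights)
print("raw heatmap:", cam)
```

In this example the second feature map receives a negative weight, so it suppresses the regions where the first map is weak, and the ReLU clips those regions to zero.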

4. Experimental Results

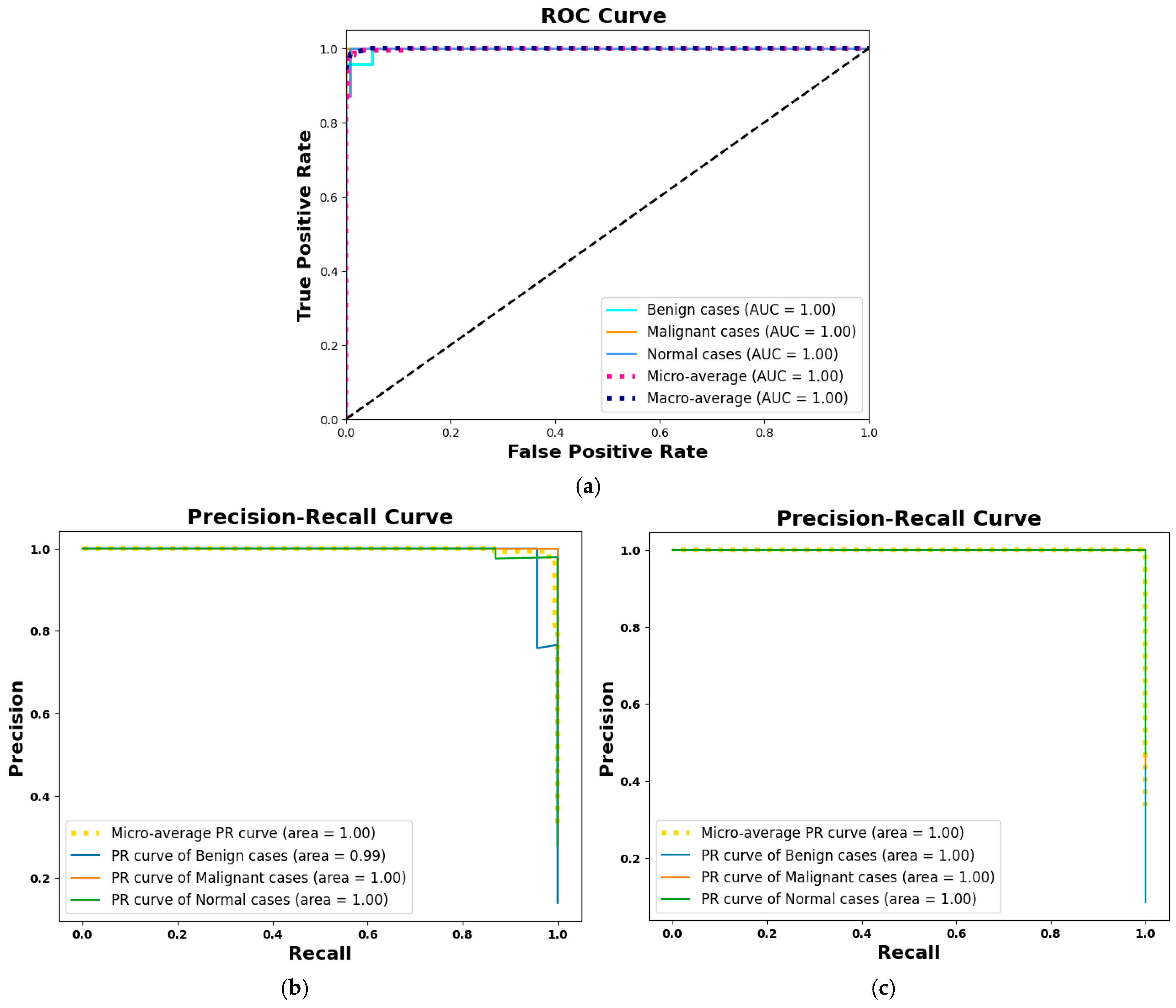

4.1. Performance Metrics

4.2. Hyperparameters Configuration

4.3. Experimental Setup

4.4. Optimization Analysis

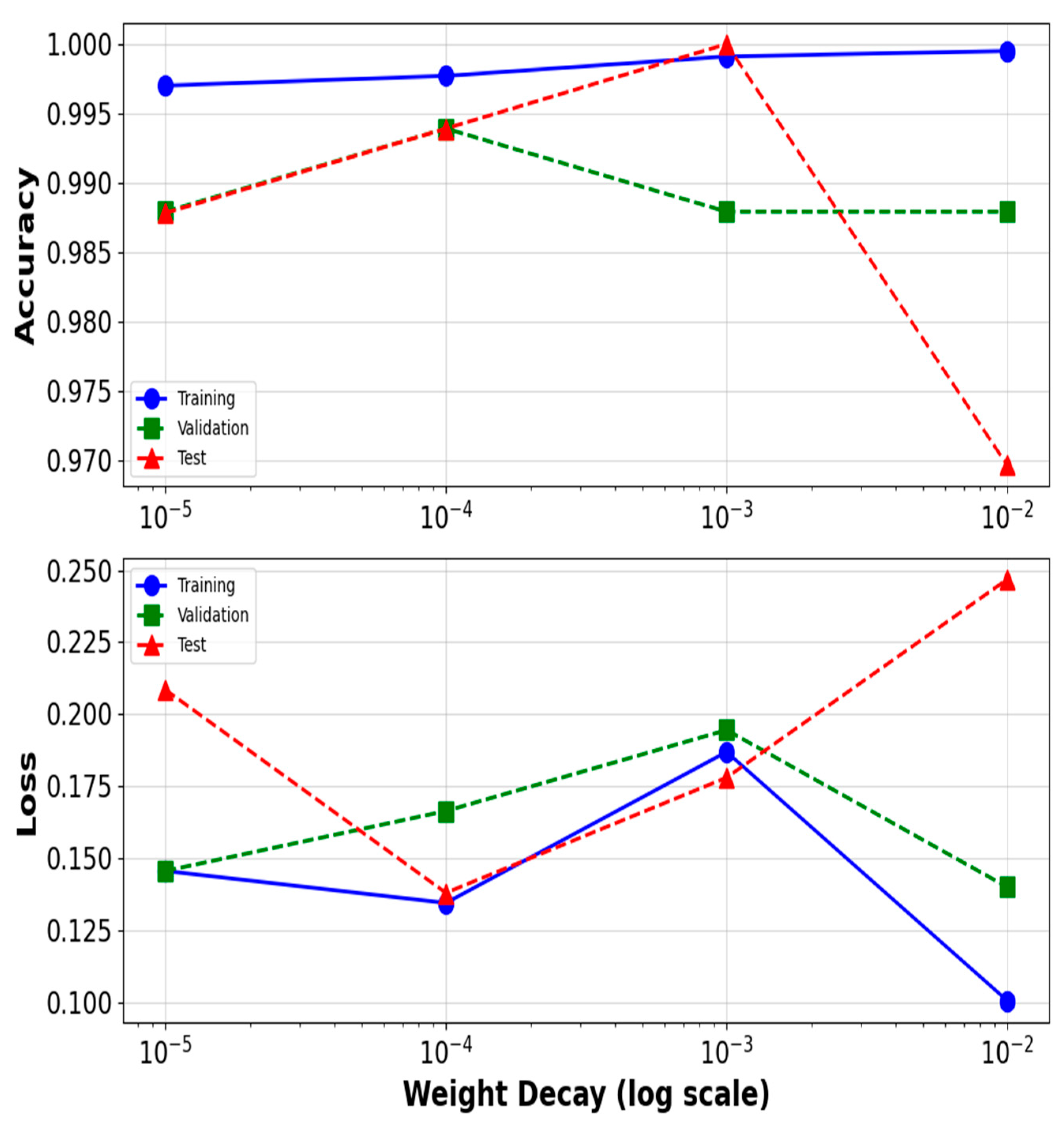

4.4.1. The Weight Decay’s Effect on AdamW

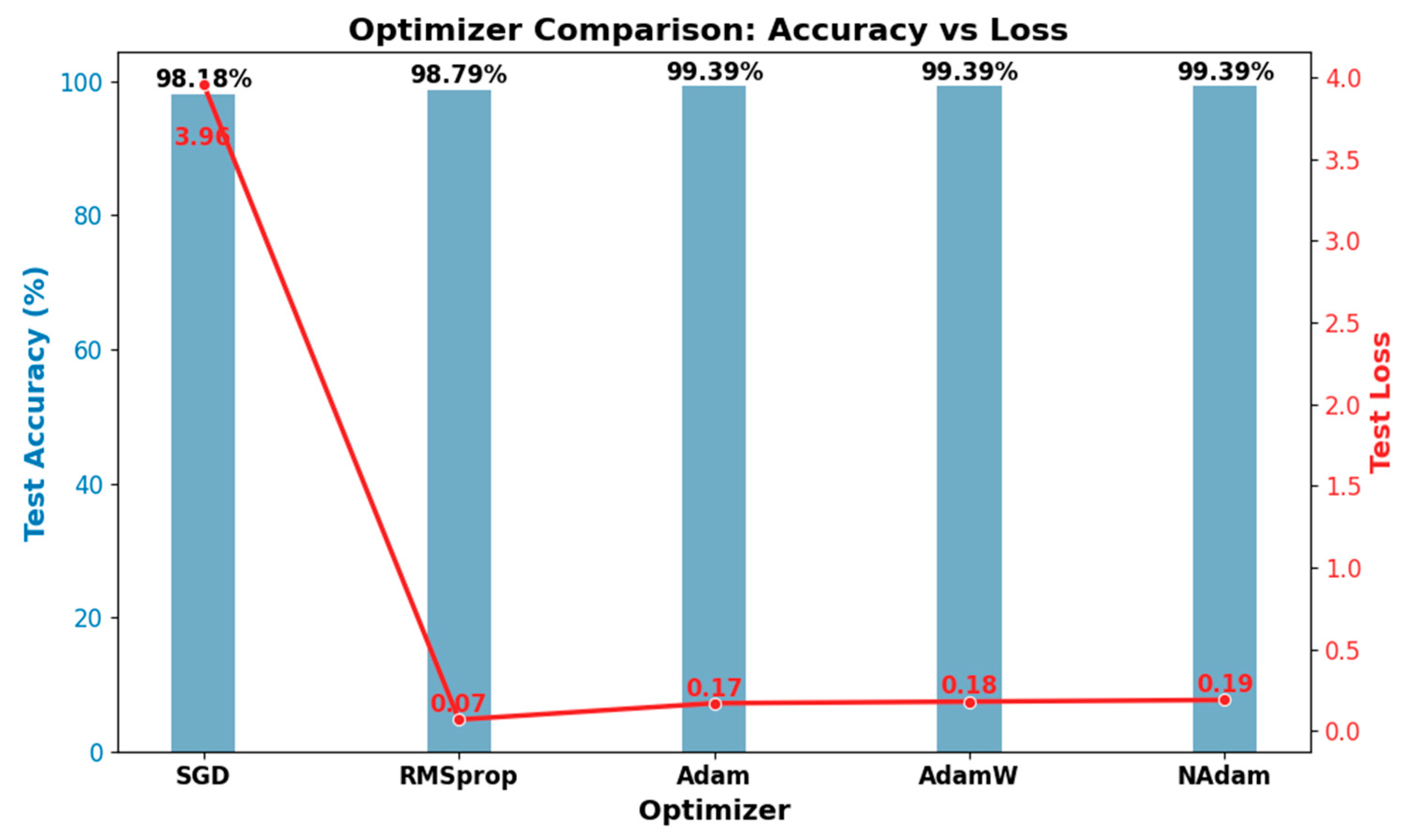

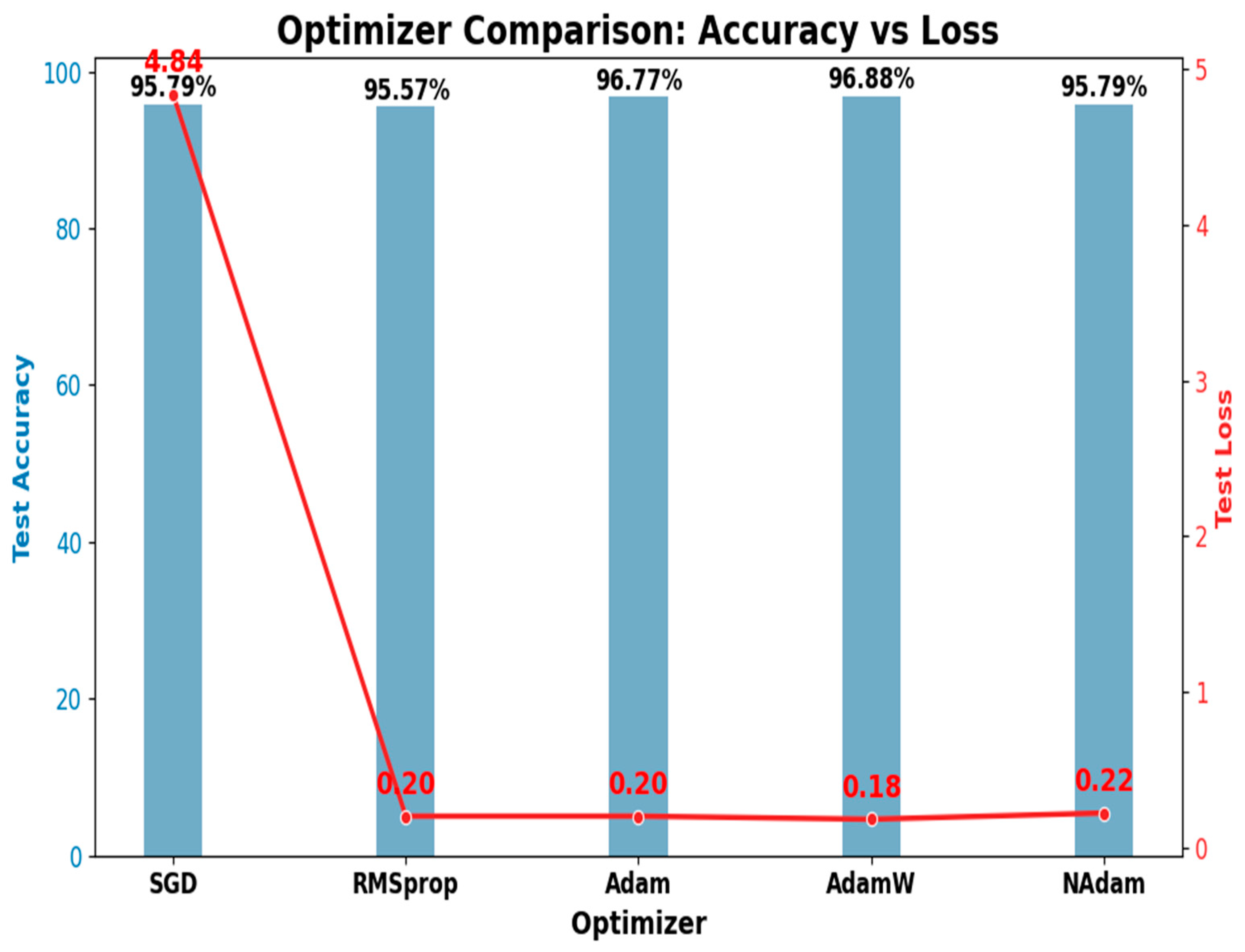

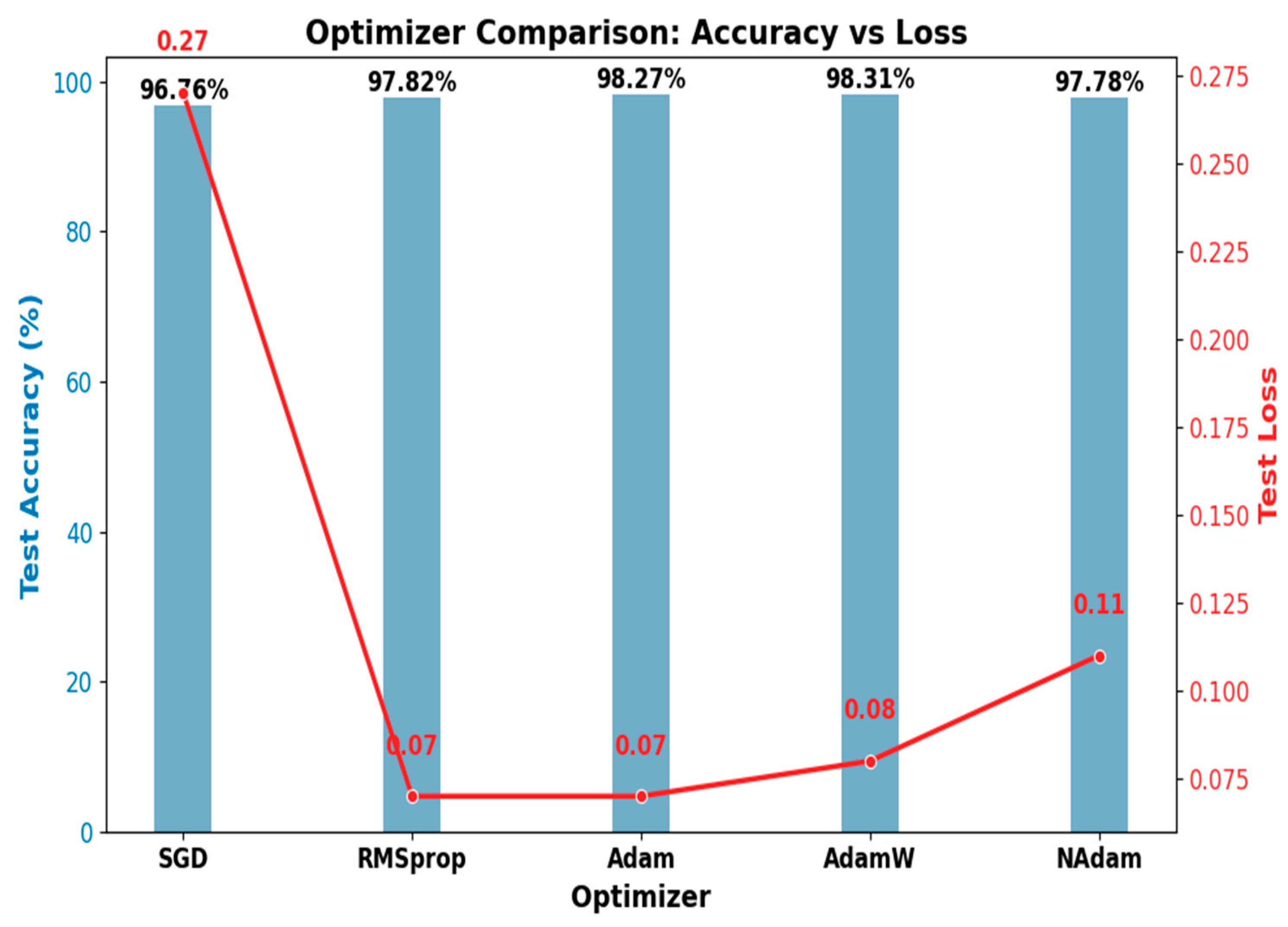

4.4.2. The Optimizer’s Effect on Model Performance

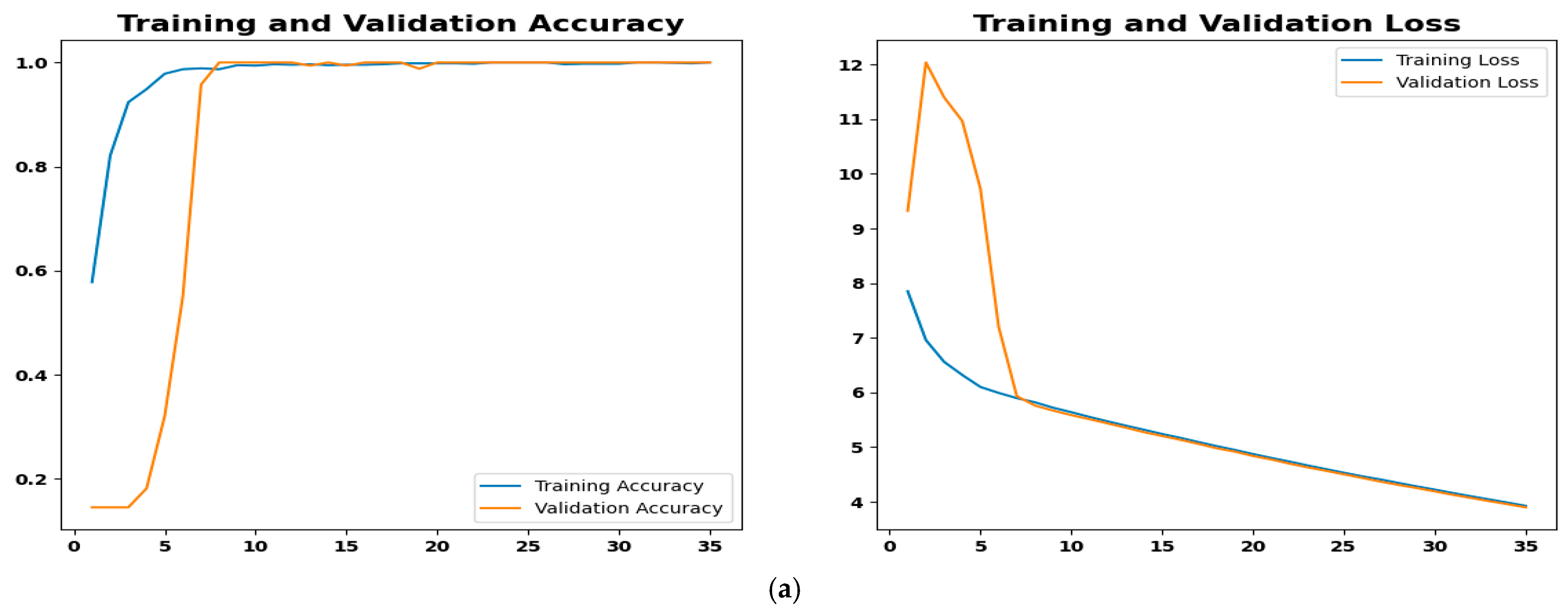

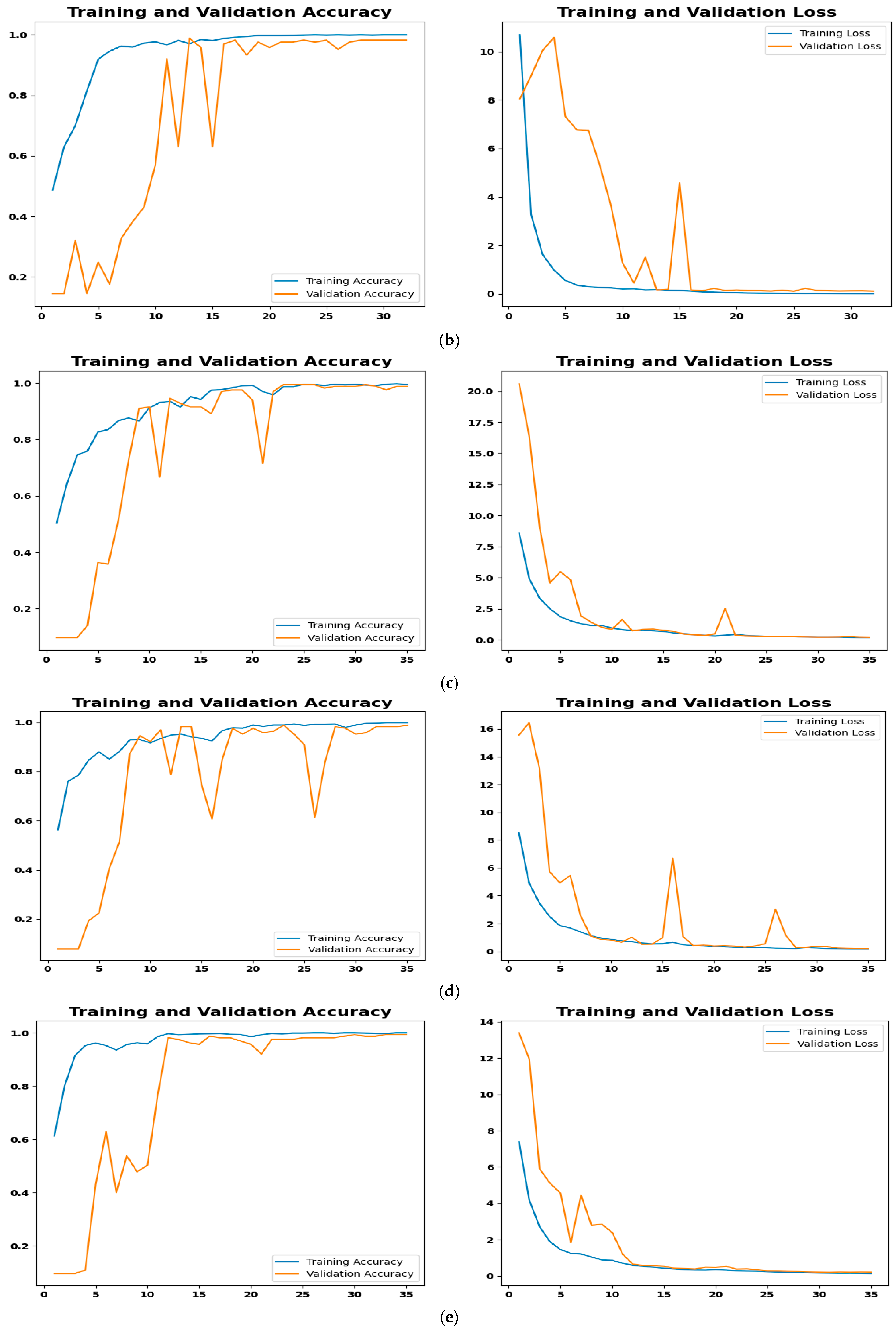

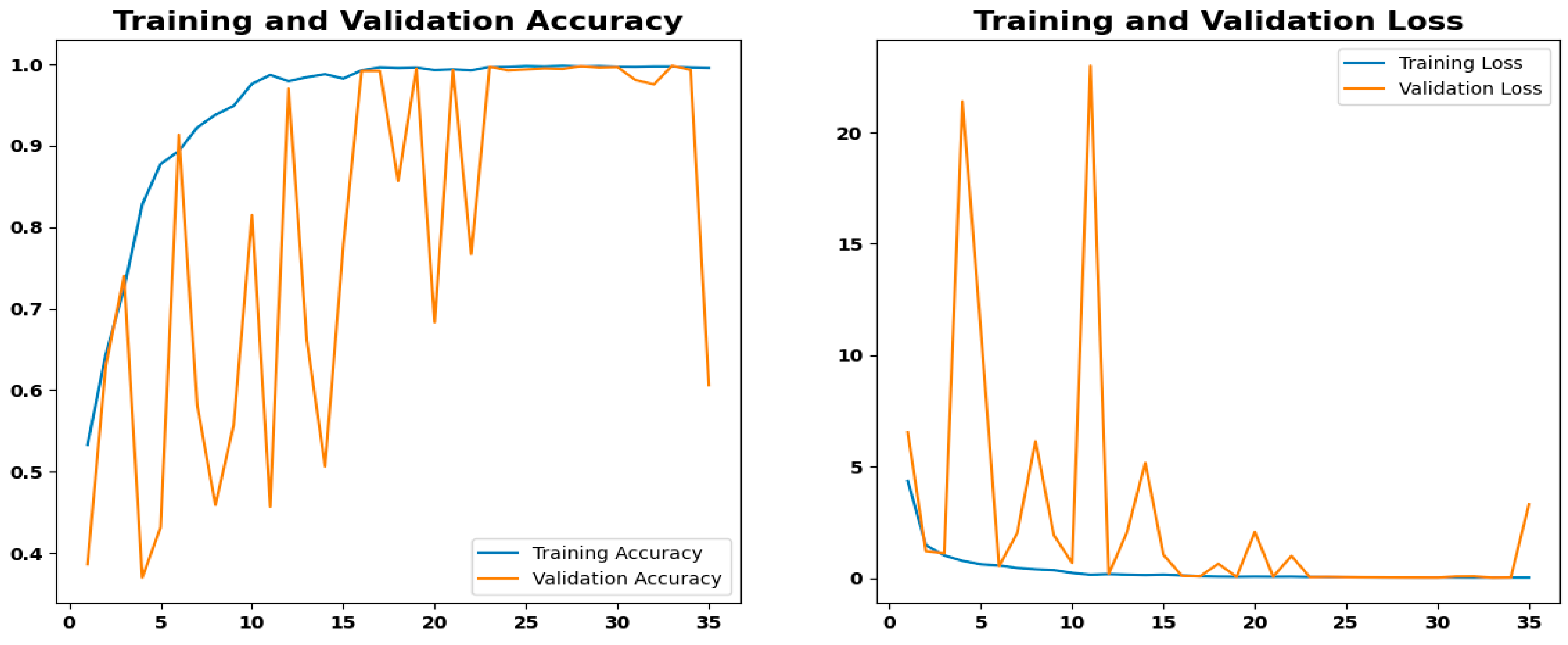

4.4.3. Training Performance and Convergence Analysis

4.4.4. Model Evaluation Metrics

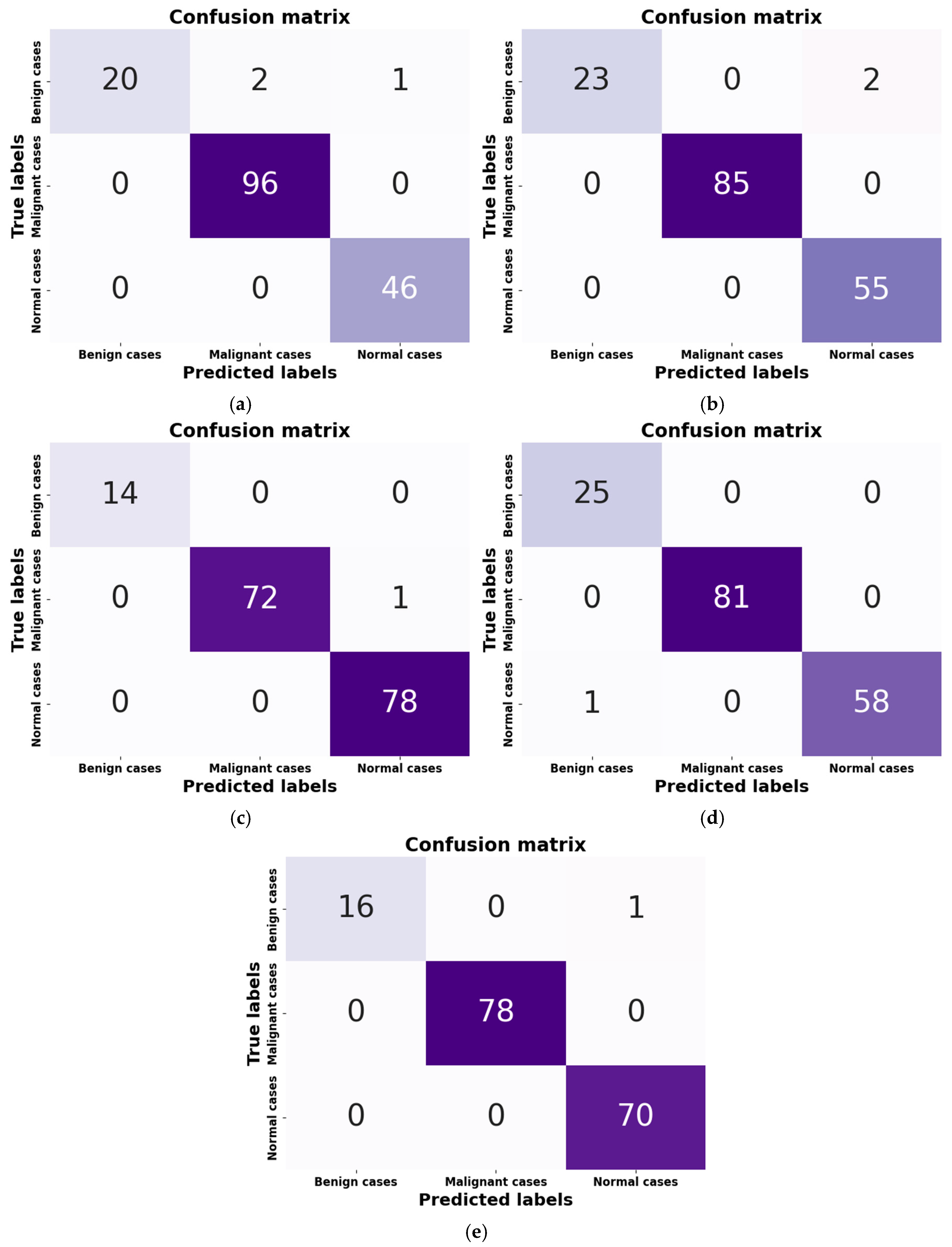

- Figure 9a: Although it has a minor flaw in handling benign cases, the SGD optimizer’s confusion matrix shows 162 of 165 cases correctly identified. The misclassification rate was only 1.82%: three benign cases were misclassified, two as malignant and one as normal. A benign case flagged as malignant is less serious than missing a real cancer, although it may make patients anxious, necessitate invasive diagnostic procedures, and result in needless medical expenses.

- Figure 9b: The model performs marginally better with RMSprop: only two cases were misclassified, both benign cases labeled as normal. Unlike SGD, RMSprop therefore does not produce false positives for malignancy from benign cases. This is a positive result in the clinical setting of lung cancer, since it lessens the possibility of needless procedures based on a benign finding. The model also distinguishes malignant from normal cases without any confusion between the two. The misclassification rate is 1.21%.

- Figure 9c: With only 1 of 165 cases misclassified, the Adam optimizer achieves high overall accuracy, suggesting a strong model. However, the single error is a malignant case identified as normal, which raises concerns in medical diagnostics: a missed diagnosis could lead to delayed treatment, disease progression, and a poorer prognosis. From a patient-safety standpoint, this false negative outweighs the numerical advantage of the 0.61% misclassification rate.

- Figure 9d: AdamW also misclassifies just one case, a normal case incorrectly classified as benign, and therefore achieves high overall accuracy. Because this error does not miss a cancerous condition, it is less severe than Adam’s misclassification, making AdamW a safer option where false negatives for malignancy must be minimized.

- Figure 9e: NAdam, an optimizer closely related to Adam and AdamW, achieves high accuracy with only one misclassification. It achieves 100% recall for malignant cases, a significant advantage over Adam, which had one critical false negative. The single misclassification under NAdam is a benign case labeled as normal, which is less critical in lung cancer diagnosis.
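
The misclassification rates quoted above are simply the error counts divided by the 165 test cases. The sketch below reproduces that arithmetic and shows how per-class recall and specificity are read off a confusion matrix. The matrix used here is hypothetical: only the total of 165 and the single malignant-to-normal error mirror the Adam case, while the per-class counts are invented for illustration, as are the function names.

```python
def misclassification_rate(errors, total):
    """Percentage of misclassified cases, rounded to two decimals."""
    return round(100.0 * errors / total, 2)


def recall(cm, cls):
    """True positives of class `cls` divided by all true members of that class."""
    return cm[cls][cls] / sum(cm[cls])


def specificity(cm, cls):
    """True negatives divided by all cases that do not belong to class `cls`."""
    total = sum(sum(row) for row in cm)
    tp = cm[cls][cls]
    fn = sum(cm[cls]) - tp
    fp = sum(row[cls] for row in cm) - tp
    tn = total - tp - fn - fp
    return tn / (tn + fp)


# Error counts taken from the text: 3 (SGD), 2 (RMSprop), 1 (Adam, AdamW, NAdam).
rates = {name: misclassification_rate(errors, 165)
         for name, errors in {"SGD": 3, "RMSprop": 2, "Adam": 1}.items()}
print(rates)

# HYPOTHETICAL matrix (rows = true class, columns = predicted class),
# ordered benign, malignant, normal; class sizes are made up.
cm = [
    [20, 0, 0],
    [0, 84, 1],   # one malignant case predicted as normal
    [0, 0, 60],
]
BENIGN, MALIGNANT, NORMAL = 0, 1, 2
print("malignant recall:", recall(cm, MALIGNANT))
print("normal specificity:", specificity(cm, NORMAL))
```

A single false negative barely moves overall accuracy, but it is the only error type that lowers malignant recall, which is why the bullets above weigh it more heavily than the benign and normal confusions.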

4.5. Visualization of High-Dimensional Data Structure Using t-SNE

4.6. Interpretation of CNN Decisions Using Grad-CAM Heatmaps

4.7. Ablation Study

4.7.1. Impact of Data Split Ratios on Model Performance

- The 70:15:15 split offers a well-balanced training and evaluation strategy, making it ideal for achieving high accuracy and strong generalization capabilities.

- The 80:10:10 split enhances sensitivity and improves the detection of positive cases by increasing the size of the training set. However, because there is less validation data available for hyperparameter tuning, overall performance is somewhat decreased.

- The 80:20 split reduces the amount of validation and testing data, potentially limiting the model’s generalization capabilities. In this case, validation constitutes 20% of the training set, which means 16% of the original dataset.
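
The 16% figure for the 80:20 configuration comes from carving the validation set out of the training portion. A short sketch of that arithmetic follows; the function name and its argument layout are illustrative assumptions.

```python
def effective_fractions(train_part, test_part, val_share_of_train):
    """Return (train, validation, test) as fractions of the whole dataset."""
    total = train_part + test_part
    train_block = train_part / total           # portion set aside for training + validation
    validation = train_block * val_share_of_train
    return train_block - validation, validation, test_part / total


train, validation, test = effective_fractions(80, 20, 0.20)
print(f"train={train:.2f}, validation={validation:.2f}, test={test:.2f}")
```

With an 80:20 split and 20% of the training portion held out, the effective proportions are 64% training, 16% validation, and 20% testing.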

4.7.2. Impact of Dataset Size on Model Performance

4.7.3. Impact of Modality Type: CT Scans vs. Histopathological Images

5. Discussion

5.1. Performance Comparison with TL Models

5.2. Performance Comparison with Other Studies on the IQ-OTH/NCCD

| Ref. | Classes | Method | Handling Imbalance | Explainability | Accuracy (%) | Sensitivity (%) | Specificity (%) |
|---|---|---|---|---|---|---|---|
| Al-Yasriy et al. [37] 2020 | 2 | AlexNet | _ | _ | 93.54 | 95.71 | 95 |
| AL-Huseiny & Sajit [57] 2021 | 2 | GoogLeNet | _ | _ | 94.38 | 95.08 | 93.7 |
| Abdollahi [53] 2023 | 3 | LeNet | Data Augmentation | _ | 97.88 | 93.14 | 95.91 |
| Abunajm et al. [54] 2023 | 3 | CNN | Data Augmentation | _ | 99.45 | 99 | 99.21 |
| Raza et al. [56] 2023 | 3 | EfficientNetB1 | Data Augmentation | Grad-CAM | 99.1 | _ | _ |
| Musthafa et al. [38] 2024 | 3 | CNN | SMOTE | _ | 99.64 | _ | _ |
| Ganguly & Chakraborty [55] 2024 | 3 | CNN | Oversampling Technique | _ | 99.00 | 99.16 | 98.66 |
| Klangbunrueang et al. [48] 2025 | 3 | 4 TL models (VGG16 superior) | Data Augmentation | Grad-CAM | 98.18 | _ | _ |
| Proposed LCxNet | 3 | CNN | SMOTE | Grad-CAM | 99.39 | 99.39 | 99.45 |

6. Conclusions and Perspectives

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| Adam | Adaptive Moment Estimation |
| AdamW | Adaptive Moment Estimation with Weight Decay |
| AUC | Area Under Curve |
| CAD | Computer-Aided Diagnosis |
| CNN | Convolutional Neural Network |
| CXR | Chest X-Ray |
| DL | Deep Learning |
| FN | False Negative |
| FP | False Positive |
| FPR | False Positive Rate |
| GAN | Generative Adversarial Network |
| Grad-CAM | Gradient-weighted Class Activation Mapping |
| LIME | Local Interpretable Model-agnostic Explanations |
| ML | Machine Learning |
| MRI | Magnetic Resonance Imaging |
| NAdam | Nesterov-accelerated Adaptive Moment Estimation |
| NSCLC | Non-small Cell Lung Cancer |
| PR | Precision-Recall |
| ResNet50 | Residual Network 50 |
| RMSProp | Root Mean Square Propagation |
| ROC | Receiver Operating Characteristic |
| SCLC | Small Cell Lung Cancer |
| SGD | Stochastic Gradient Descent |
| SMOTE | Synthetic Minority Over-sampling Technique |
| SVM | Support Vector Machine |
| t-SNE | t-Distributed Stochastic Neighbor Embedding |
| TN | True Negative |
| TP | True Positive |
| TPR | True Positive Rate |
| VGG | Visual Geometry Group |
| ViT | Vision Transformer |
| XAI | Explainable Artificial Intelligence |
| YOLOv8 | You Only Look Once (version 8) |

References

1. Lung Cancer. Available online: https://www.who.int/news-room/fact-sheets/detail/lung-cancer (accessed on 24 August 2025).

2. Lancaster, H.L.; Heuvelmans, M.A.; Oudkerk, M. Low-dose Computed Tomography Lung Cancer Screening: Clinical Evidence and Implementation Research. J. Intern. Med. 2022, 292, 68–80.

3. Hendrix, W.; Hendrix, N.; Scholten, E.T.; Mourits, M.; Trap-de Jong, J.; Schalekamp, S.; Korst, M.; Van Leuken, M.; Van Ginneken, B.; Prokop, M.; et al. Deep Learning for the Detection of Benign and Malignant Pulmonary Nodules in Non-Screening Chest CT Scans. Commun. Med. 2023, 3, 156.

4. What Is Lung Cancer? Available online: https://www.cancer.org/cancer/types/lung-cancer/about/what-is.html (accessed on 10 July 2025).

5. Lung Cancer Risk Factors. Available online: https://www.cancer.org/cancer/types/lung-cancer/causes-risks-prevention/risk-factors.html (accessed on 10 July 2025).

6. Kaur, N.; Hans, R. Transfer Learning for Cancer Diagnosis in Medical Images: A Compendious Study. Int. J. Comput. Intell. Syst. 2025, 18, 62.

7. Hussain, D.; Abbas, N.; Khan, J. Recent Breakthroughs in PET-CT Multimodality Imaging: Innovations and Clinical Impact. Bioengineering 2024, 11, 1213.

8. Liz-López, H.; De Sojo-Hernández, Á.A.; D’Antonio-Maceiras, S.; Díaz-Martínez, M.A.; Camacho, D. Deep Learning Innovations in the Detection of Lung Cancer: Advances, Trends, and Open Challenges. Cogn. Comput. 2025, 17, 67.

9. Taye, M.M. Understanding of Machine Learning with Deep Learning: Architectures, Workflow, Applications and Future Directions. Computers 2023, 12, 91.

10. Kourounis, G.; Elmahmudi, A.A.; Thomson, B.; Hunter, J.; Ugail, H.; Wilson, C. Computer Image Analysis with Artificial Intelligence: A Practical Introduction to Convolutional Neural Networks for Medical Professionals. Postgrad. Med. J. 2023, 99, 1287–1294.

11. Nair, S.S.; Meena Devi, V.N.; Bhasi, S. Lung Cancer Detection from CT Images: Modified Adaptive Threshold Segmentation with Support Vector Machines and Artificial Neural Network Classifier. Curr. Med. Imaging 2024, 20, e140723218727.

12. Kousiga, T.; Nithya, P. An Improving Lung Disease Detection by Combining Ensemble Deep Learning and Maximum Mean Discrepancy Transfer Learning. Int. J. Intell. Eng. Syst. 2024, 17, 294–306.

13. Abe, A.A.; Nyathi, M.; Okunade, A.A.; Pilloy, W.; Kgole, B.; Nyakale, N. A Robust Deep Learning Algorithm for Lung Cancer Detection from Computed Tomography Images. Intell.-Based Med. 2025, 11, 100203.

14. Elhassan, S.M.; Darwish, S.M.; Elkaffas, S.M. An Enhanced Lung Cancer Detection Approach Using Dual-Model Deep Learning Technique. Comput. Model. Eng. Sci. 2025, 142, 835–867.

15. Ozdemir, B.; Aslan, E.; Pacal, I. Attention Enhanced InceptionNeXt-Based Hybrid Deep Learning Model for Lung Cancer Detection. IEEE Access 2025, 13, 27050–27069.

16. Bouamrane, A.; Derdour, M.; Alksas, A.; El-Baz, A. Evaluating Explainability in Transfer Learning Models for Pulmonary Nodules Classification: A Comparative Analysis of Generalizability and Interpretability. Int. J. Pattern Recognit. Artif. Intell. 2025, 2540001.

17. Abe, A.; Nyathi, M.; Okunade, A. Lung Cancer Diagnosis from Computed Tomography Scans Using Convolutional Neural Network Architecture with Mavage Pooling Technique. AIMSMEDS 2025, 12, 13–27.

18. Jozi, N.S.; Al-Suhail, G.A. Lung Cancer Detection in Radiological Imaging Using Deep Learning: A Review. In Proceedings of the 2024 5th International Conference on Communications, Information, Electronic and Energy Systems (CIEES), Veliko Tarnovo, Bulgaria, 20–22 November 2024; pp. 1–8.

19. Bushara, A.R.; Kumar, R.S.V. Deep Learning-Based Lung Cancer Classification of CT Images Using Augmented Convolutional Neural Networks. Electron. Lett. Comput. Vis. Image Anal. 2022, 21, 130–142.

20. Bangare, S.L.; Sharma, L.; Varade, A.N.; Lokhande, Y.M.; Kuchangi, I.S.; Chaudhari, N.J. Computer-Aided Lung Cancer Detection and Classification of CT Images Using Convolutional Neural Network. In Computer Vision and Internet of Things: Technologies and Applications; CRC Press: Boca Raton, FL, USA, 2022; pp. 247–262.

21. Gopinath, A.; Gowthaman, P.; Venkatachalam, M.; Saroja, M. Computer Aided Model for Lung Cancer Classification Using Cat Optimized Convolutional Neural Networks. Meas. Sens. 2023, 30, 100932.

22. Deepa, V.; Fathimal, P.M. Deep-ShrimpNet Fostered Lung Cancer Classification from CT Images. Int. J. Image Graph. Signal Process. 2023, 15, 59–68.

23. Yan, C.; Razmjooy, N. Optimal Lung Cancer Detection Based on CNN Optimized and Improved Snake Optimization Algorithm. Biomed. Signal Process. Control 2023, 86, 105319.

24. Ravindranathan, M.K.; Vadivu, D.S.; Rajagopalan, N. Pulmonary Prognosis: Predictive Analytics for Lung Cancer Detection. In Proceedings of the 2024 2nd International Conference on Recent Advances in Information Technology for Sustainable Development (ICRAIS), Manipal, India, 6–7 November 2024; pp. 261–265.

25. Nayak, C.; Tripathy, A.; Parhi, M. Deep Hybrid Neural Network: Unveiling Lung Cancer with Deep Hybrid Intelligence from CT Scans. Cureus J. Comput. Sci. 2025, 2, es44389-024-02008-2.

26. Shafi, I.; Din, S.; Khan, A.; Díez, I.D.L.T.; Casanova, R.D.J.P.; Pifarre, K.T.; Ashraf, I. An Effective Method for Lung Cancer Diagnosis from CT Scan Using Deep Learning-Based Support Vector Network. Cancers 2022, 14, 5457.

27. Shaziya, H.; Kattula, S. LungNodNet-The CNN Architecture for Detection and Classification of Lung Nodules in Pulmonary CT Images. In Proceedings of the 2022 IEEE 19th India Council International Conference (INDICON), Kochi, India, 24–26 November 2022; pp. 1–6.

28. Mohamed, T.I.A.; Oyelade, O.N.; Ezugwu, A.E. Automatic Detection and Classification of Lung Cancer CT Scans Based on Deep Learning and Ebola Optimization Search Algorithm. PLoS ONE 2023, 18, e0285796.

29. Rajasekar, V.; Vaishnnave, M.P.; Premkumar, S.; Sarveshwaran, V.; Rangaraaj, V. Lung Cancer Disease Prediction with CT Scan and Histopathological Images Feature Analysis Using Deep Learning Techniques. Results Eng. 2023, 18, 101111.

30. Damayanti, N.P.; Ananda, M.N.D.; Nugraha, F.W. Lung Cancer Classification Using Convolutional Neural Network and DenseNet. J. Soft Comput. Explor. 2023, 4, 133–141.

31. UrRehman, Z.; Qiang, Y.; Wang, L.; Shi, Y.; Yang, Q.; Khattak, S.U.; Aftab, R.; Zhao, J. Effective Lung Nodule Detection Using Deep CNN with Dual Attention Mechanisms. Sci. Rep. 2024, 14, 3934.

32. Pathan, S.; Ali, T.; Sudheesh, P.G.; Kumar, P.V.; Rao, D. An Optimized Convolutional Neural Network Architecture for Lung Cancer Detection. APL Bioeng. 2024, 8, 026121.

33. Saxena, S.; Prasad, S.N.; Polnaya, A.M.; Agarwala, S. Hybrid Deep Convolution Model for Lung Cancer Detection with Transfer Learning. arXiv 2025, arXiv:2501.02785.

34. Alsallal, M.; Ahmed, H.H.; Kareem, R.A.; Yadav, A.; Ganesan, S.; Shankhyan, A.; Gupta, S.; Joshi, K.K.; Sameer, H.N.; Yaseen, A.; et al. Enhanced Lung Cancer Subtype Classification Using Attention-Integrated DeepCNN and Radiomic Features from CT Images: A Focus on Feature Reproducibility. Discov. Oncol. 2025, 16, 336.

35. Mohammed Qadir, A.; Ahmed Abdalla, P.; Faiq Abd, D. A Hybrid Lung Cancer Model for Diagnosis and Stage Classification from Computed Tomography Images. IJEEE 2024, 20, 266–274.

36. Al-Yasriy, H.F. The IQ-OTHNCCD Lung Cancer Dataset. 2020. Available online: https://www.kaggle.com/datasets/hamdallak/the-iqothnccd-lung-cancer-dataset (accessed on 1 September 2024).

37. Al-Yasriy, H.F.; AL-Husieny, M.S.; Mohsen, F.Y.; Khalil, E.A.; Hassan, Z.S. Diagnosis of Lung Cancer Based on CT Scans Using CNN. IOP Conf. Ser. Mater. Sci. Eng. 2020, 928, 022035.

38. Musthafa, M.M.; Manimozhi, I.; Mahesh, T.R.; Guluwadi, S. Optimizing Double-Layered Convolutional Neural Networks for Efficient Lung Cancer Classification through Hyperparameter Optimization and Advanced Image Pre-Processing Techniques. BMC Med. Inf. Decis. Mak. 2024, 24, 142.

39. Hammad, M.; ElAffendi, M.; El-Latif, A.A.A.; Ateya, A.A.; Ali, G.; Plawiak, P. Explainable AI for Lung Cancer Detection via a Custom CNN on CT Images. Sci. Rep. 2025, 15, 12707.

40. Alahmed, H.A.; Al-Suhail, G.A. AlzONet: A Deep Learning Optimized Framework for Multiclass Alzheimer’s Disease Diagnosis Using MRI Brain Imaging. J. Supercomput. 2025, 81, 423.

41. Loshchilov, I.; Hutter, F. Decoupled Weight Decay Regularization. arXiv 2019, arXiv:1711.05101.

42. Dozat, T. Incorporating Nesterov Momentum into Adam. In Proceedings of the 4th International Conference on Learning Representations, San Juan, Puerto Rico, 2–4 May 2016.

43. Roy, A.; Saha, P.; Gautam, N.; Schwenker, F.; Sarkar, R. Adaptive Genetic Algorithm Based Deep Feature Selector for Cancer Detection in Lung Histopathological Images. Sci. Rep. 2025, 15, 4803.

44. Toumaj, S.; Heidari, A.; Jafari Navimipour, N. Leveraging Explainable Artificial Intelligence for Transparent and Trustworthy Cancer Detection Systems. Artif. Intell. Med. 2025, 169, 103243.

45. Mercaldo, F.; Tibaldi, M.G.; Lombardi, L.; Brunese, L.; Santone, A.; Cesarelli, M. An Explainable Method for Lung Cancer Detection and Localisation from Tissue Images through Convolutional Neural Networks. Electronics 2024, 13, 1393.

46. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. Int. J. Comput. Vis. 2020, 128, 336–359.

47. Shariff, V.; Paritala, C.; Ankala, K.M. Optimizing Non Small Cell Lung Cancer Detection with Convolutional Neural Networks and Differential Augmentation. Sci. Rep. 2025, 15, 15640.

48. Klangbunrueang, R.; Pookduang, P.; Chansanam, W.; Lunrasri, T. AI-Powered Lung Cancer Detection: Assessing VGG16 and CNN Architectures for CT Scan Image Classification. Informatics 2025, 12, 18.

49. Jain, R.; Singh, P.; Kaur, A. An Ensemble Reinforcement Learning-Assisted Deep Learning Framework for Enhanced Lung Cancer Diagnosis. Swarm Evol. Comput. 2024, 91, 101767.

50. Güraksın, G.E.; Kayadibi, I. A Hybrid LECNN Architecture: A Computer-Assisted Early Diagnosis System for Lung Cancer Using CT Images. Int. J. Comput. Intell. Syst. 2025, 18, 35.

51. Borkowski, A.A.; Bui, M.M.; Thomas, L.B.; Wilson, C.P.; DeLand, L.A.; Mastorides, S.M. Lung and Colon Cancer Histopathological Image Dataset (LC25000). arXiv 2019, arXiv:1912.12142.

52. Jozi, N.S.; Al-Suhail, G.A. Lung Cancer Detection: The Role of Transfer Learning in Medical Imaging. In Proceedings of the 2024 International Conference on Future Telecommunications and Artificial Intelligence (IC-FTAI), Alexandria, Egypt, 31 December–2 January 2024; pp. 1–6.

53. Abdollahi, J. Evaluating LeNet Algorithms in Classification Lung Cancer from Iraq-Oncology Teaching Hospital/National Center for Cancer Diseases. arXiv 2023, arXiv:2305.13333.

54. Abunajm, S.; Elsayed, N.; ElSayed, Z.; Ozer, M. Deep Learning Approach for Early Stage Lung Cancer Detection. arXiv 2023, arXiv:2302.02456.

55. Ganguly, K.; Chakraborty, N. Identification of Lung Cancer Affected CT-Scan Images Using a Light-Weight Deep Learning Architecture. In Proceedings of the International Conference on Data, Electronics and Computing, ICDEC 2023, Aizawl, India, 15–16 December 2023; Das, N., Khan, A.K., Mandal, S., Krejcar, O., Bhattacharjee, D., Eds.; Lecture Notes in Networks and Systems. Springer: Singapore, 2024; Volume 1103.

56. Raza, R.; Zulfiqar, F.; Khan, M.O.; Arif, M.; Alvi, A.; Iftikhar, M.A.; Alam, T. Lung-EffNet: Lung Cancer Classification Using EfficientNet from CT-Scan Images. Eng. Appl. Artif. Intell. 2023, 126, 106902.

57. AL-Huseiny, M.S.; Sajit, A.S. Transfer Learning with GoogLeNet for Detection of Lung Cancer. Indones. J. Electr. Eng. Comput. Sci. 2021, 22, 1078.

| Ref. | Dataset | Methods | Classes | Advantages | Limitations | Metrics (%) |
|---|---|---|---|---|---|---|
| Shafi et al. 2022 [26] | LUNA 16 (888 images) | CNN + SVM | 2 | Hybrid model enhances results | Small dataset; poor scalability | Acc = 94, Pre = 95, Recall = 94.5, F1-score = 94.5 |
| Shaziya & Kattula 2023 [27] | LIDC-IDRI (6691 images) | CNN | 2 | Specialized architecture for nodules | CT image size reduction impact | Acc = 93.58, Sen = 95.61, Spe = 90.14 |
| Mohamed et al. 2023 [28] | IQ-OTH/NCCD | EOSA-CNN | 3 | Combines Ebola optimization for feature selection | Limited data; data imbalances; time complexity | Acc = 93.21, Pre = 100, Sen = 90.7, Spe = 100, F1-score = 92.7 |
| Rajasekar et al. 2023 [29] | CT scan; histopathological (15,000 images) | CNN, CNN GD, Inception V3, Resnet-50, VGG-16, and VGG-19 | a-2 b-3 | CNN GD enables continuous parameter learning | Computational cost (400 epochs) | For CNN GD: Acc = 97.86, Pre = 96.39, Sen = 96.7, Spe = 97.4, F1-score = 97.9 |
| Damayanti et al. 2023 [30] | CT scan | CNN + DenseNet | 3 | Hybrid architecture improves feature extraction | Unknown no. of data | Acc = 99.49 |
| UrRehman et al. 2024 [31] | LUNA16 | CNN with a dual attention mechanism | 2 | Dual attention mechanism focuses on relevant node features | Increased training time; computational cost | Acc = 95.4, Pre = 95.8, Sen = 94.6, Spe = 93.1 |
| Pathan et al. 2024 [32] | IQ-OTH/NCCD | SCA-CNN | 3 | Optimized architecture for high-precision classification; heatmap highlights anatomy | Limited data; high computational costs | Acc = 99, Pre = 92.4, Sen = 92, Spe = 99.1, F1-score = 93 |
| Saxena et al. 2025 [33] | CT scan (434 images) | CNN | 2 | Introduced Maximum Sensitivity Neural Network | Small dataset; poor scalability | Acc = 98, Sen = 97 |
| Alsallal et al. 2025 [34] | CT scan (2725 images) | Radiomic features with DL and attention mechanisms | 5 | Hybrid improves accuracy | Complex model handling | Acc = 92.47, Sen = 92.11, AUC = 93.99 |

| Class Type | Original Samples | SMOTE Samples |
|---|---|---|
| Benign | 78 | 385 |
| Malignant | 385 | 385 |
| Normal | 304 | 385 |
| Total | 767 | 1155 |
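
The table shows each class being raised to the size of the largest class (385 samples). The sketch below works out how many synthetic samples that implies per class and illustrates the core SMOTE idea of interpolating between a minority sample and one of its nearest same-class neighbours. It is a simplified pure-Python illustration, not the pipeline used in the study: the 2-D toy points, the neighbour count, and the function names are assumptions, and a maintained library implementation would normally be used in practice.

```python
import random


def synthetic_needed(class_counts):
    """Number of synthetic samples per class to match the largest class."""
    target = max(class_counts.values())
    return {label: target - count for label, count in class_counts.items()}


def smote_like(samples, n_new, k=5, seed=0):
    """Create n_new points on line segments between samples and their neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(samples)
        # k nearest neighbours of `base` among the other samples of the same class
        neighbours = sorted(
            (s for s in samples if s is not base),
            key=lambda s: sum((a - b) ** 2 for a, b in zip(base, s)),
        )[:k]
        neighbour = rng.choice(neighbours)
        lam = rng.random()  # interpolation factor in [0, 1)
        synthetic.append(tuple(a + lam * (b - a) for a, b in zip(base, neighbour)))
    return synthetic


counts = {"Benign": 78, "Malignant": 385, "Normal": 304}
needed = synthetic_needed(counts)
print(needed)

# Toy 2-D "feature vectors" standing in for a minority class (hypothetical data).
toy_minority = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
new_points = smote_like(toy_minority, n_new=3, k=2)
print(new_points)
```

Adding the 307 benign and 81 normal synthetic samples to the 767 originals gives the 1155 samples in the table's total row.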

| Layer (Type) | Output Shape | Parameters |
|---|---|---|
| Input | (220, 220, 1) | 0 |
| Conv2d | (218, 218, 16) | 160 |
| Batch_normalization | (218, 218, 16) | 64 |
| Max_pooling2d | (109, 109, 16) | 0 |
| Conv2d_1 | (107, 107, 32) | 4640 |
| Batch_normalization_1 | (107, 107, 32) | 128 |
| Max_pooling2d_1 | (53, 53, 32) | 0 |
| Conv2d_2 | (51, 51, 64) | 18,496 |
| Batch_normalization_2 | (51, 51, 64) | 256 |
| Max_pooling2d_2 | (25, 25, 64) | 0 |
| Conv2d_3 | (23, 23, 128) | 73,856 |
| Batch_normalization_3 | (23, 23, 128) | 512 |
| Max_pooling2d_3 | (11, 11, 128) | 0 |
| Flatten | (15,488) | 0 |
| Dense | (256) | 3,965,184 |
| Dropout | (256) | 0 |
| Dense_1 | (128) | 32,896 |
| Dropout_1 | (128) | 0 |
| Dense_2 | (3) | 387 |
| Total Parameters | 4,096,579 (15.63 MB) | |
| Trainable Parameters | 4,096,099 (15.63 MB) | |
| Non-trainable Parameters | 480 (1.88 KB) | |

| Hyper-Parameter | Value |
|---|---|
| Image size | (220, 220, 1) |
| Batch size | 16 |
| Learning rate | 0.001 |
| Optimizer | SGD, RMSProp, Adam, AdamW, NAdam |
| Epochs | 35 |
| Loss function | categorical_crossentropy |
| Early-stopping | monitor = val_loss, patience = 7 |
| ReduceLROnPlateau | monitor = val_loss, patience = 3, factor = 0.5 |
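
The last two rows interact: the learning rate is halved after three epochs without improvement in validation loss, and training stops after seven. The sketch below simulates that interplay in plain Python using the values from the table. It is a simplified approximation of the behaviour of early-stopping and reduce-on-plateau callbacks rather than a reimplementation of any library (it ignores options such as minimum improvement thresholds, cooldown periods, and a lower bound on the learning rate), and the validation-loss sequence is invented for illustration.

```python
def simulate_schedule(val_losses, lr=0.001, es_patience=7, lr_patience=3, factor=0.5):
    """Return (stopping epoch or None, list of (epoch, learning rate))."""
    best = float("inf")
    es_wait = 0   # epochs since the last improvement, for early stopping
    lr_wait = 0   # epochs since the last improvement or reduction, for the LR schedule
    history = []
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best = loss
            es_wait = 0
            lr_wait = 0
        else:
            es_wait += 1
            lr_wait += 1
            if lr_wait >= lr_patience:
                lr *= factor      # halve the learning rate on a plateau
                lr_wait = 0
        history.append((epoch, lr))
        if es_wait >= es_patience:
            return epoch, history
    return None, history


# Made-up validation losses: improvement for two epochs, then a plateau.
losses = [1.0, 0.9] + [0.95] * 8
stopped_at, history = simulate_schedule(losses)
print("stopped at epoch:", stopped_at)
print("learning rates:", history)
```

With these settings a plateau triggers two learning-rate reductions before early stopping ends the run, so the optimizer gets two chances at a smaller step size before training is abandoned.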

| Optimizer | Accuracy % | Weighted Precision % | Weighted F1-Score % | Weighted Sensitivity % | Weighted Specificity % | Cohen’s Kappa % | Loss | MR % |
|---|---|---|---|---|---|---|---|---|
| SGD | 98.18 | 98.22 | 98.13 | 98.18 | 98.08 | 96.74 | 3.96 | 1.82 |
| RMSprop | 98.79 | 98.83 | 98.77 | 98.79 | 99.39 | 97.97 | 0.07 | 1.21 |
| Adam | 99.39 | 99.40 | 99.39 | 99.39 | 99.46 | 98.94 | 0.17 | 0.61 |
| AdamW | 99.39 | 99.42 | 99.40 | 99.39 | 99.89 | 99.01 | 0.18 | 0.61 |
| NAdam | 99.39 | 99.40 | 99.39 | 99.39 | 99.55 | 98.96 | 0.19 | 0.61 |

| Split-Ratio | Accuracy % | Weighted Precision % | Weighted F1-Score % | Weighted Sensitivity % | Weighted Specificity % | Cohen’s Kappa % | Loss | MR % |
|---|---|---|---|---|---|---|---|---|
| 70:15:15 | 99.39 | 99.40 | 99.39 | 99.39 | 99.46 | 98.94 | 0.18 | 0.61 |
| 80:10:10 | 99.09 | 99.11 | 99.09 | 99.09 | 99.30 | 98.53 | 0.14 | 0.91 |
| 80:20 | 98.64 | 98.79 | 98.67 | 98.64 | 99.83 | 97.71 | 0.22 | 1.36 |

| Optimizer | Accuracy % | Weighted Precision % | Weighted F1-Score % | Weighted Sensitivity % | Weighted Specificity % | Cohen’s Kappa % | Loss | MR % |
|---|---|---|---|---|---|---|---|---|
| SGD | 95.79 | 95.94 | 95.77 | 95.79 | 97.72 | 93.58 | 4.84 | 4.21 |
| RMSprop | 95.57 | 95.78 | 95.53 | 95.57 | 97.62 | 93.25 | 0.20 | 4.43 |
| Adam | 96.77 | 96.86 | 95.99 | 96.77 | 98.25 | 95.08 | 0.20 | 3.23 |
| AdamW | 96.88 | 97.04 | 96.86 | 96.88 | 98.32 | 95.24 | 0.18 | 3.12 |
| NAdam | 95.79 | 95.97 | 95.75 | 95.79 | 97.73 | 93.58 | 0.22 | 4.21 |

| Optimizer | Accuracy % | Weighted Precision % | Weighted F1-Score % | Weighted Sensitivity % | Weighted Specificity % | Cohen’s Kappa % | Loss | MR % |
|---|---|---|---|---|---|---|---|---|
| SGD | 96.76 | 96.80 | 96.75 | 96.76 | 98.40 | 95.13 | 0.27 | 3.24 |
| RMSprop | 97.82 | 97.82 | 97.82 | 97.82 | 98.92 | 96.73 | 0.07 | 2.18 |
| Adam | 98.27 | 98.27 | 98.26 | 98.27 | 99.14 | 97.40 | 0.07 | 1.73 |
| AdamW | 98.31 | 98.31 | 98.31 | 98.31 | 99.14 | 97.47 | 0.08 | 1.69 |
| NAdam | 97.78 | 97.82 | 97.78 | 97.78 | 98.90 | 96.67 | 0.11 | 2.22 |

| Model | Accuracy % | Weighted Precision % | Weighted F1-Score % | Weighted Sensitivity % | Weighted Specificity % | Cohen’s Kappa % | Loss | MR % | Total Parameters |
|---|---|---|---|---|---|---|---|---|---|
| VGG16 | 95.76 | 95.67 | 95.65 | 95.76 | 97.50 | 92.34 | 0.19 | 4.24 | 14,718,275 |
| ResNet50 | 93.94 | 93.93 | 93.93 | 93.94 | 96.52 | 89.16 | 0.24 | 6.06 | 23,602,051 |
| MobileNetV2 | 96.97 | 97.44 | 97.12 | 96.97 | 98.78 | 94.65 | 0.08 | 3.03 | 2,266,947 |
| InceptionV3 | 92.73 | 93.70 | 93.10 | 92.73 | 96.76 | 87.22 | 0.21 | 7.27 | 21,817,123 |
| DenseNet121 | 96.36 | 96.31 | 96.28 | 96.36 | 97.99 | 93.45 | 0.11 | 3.64 | 7,044,675 |
| Xception | 95.76 | 96.47 | 95.93 | 95.76 | 98.15 | 92.51 | 0.14 | 4.24 | 20,875,819 |
| Proposed LCxNet | 99.39 | 99.40 | 99.39 | 99.39 | 99.46 | 98.94 | 0.18 | 0.61 | 4,096,579 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the International Institute of Knowledge Innovation and Invention. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jozi, N.S.; Al-Suhail, G.A. LCxNet: An Explainable CNN Framework for Lung Cancer Detection in CT Images Using Multi-Optimizer and Visual Interpretability. Appl. Syst. Innov. 2025, 8, 153. https://doi.org/10.3390/asi8050153