Fault Diagnosis in Internal Combustion Engines Using Artificial Intelligence Predictive Models

Abstract

1. Introduction

2. Theoretical Background

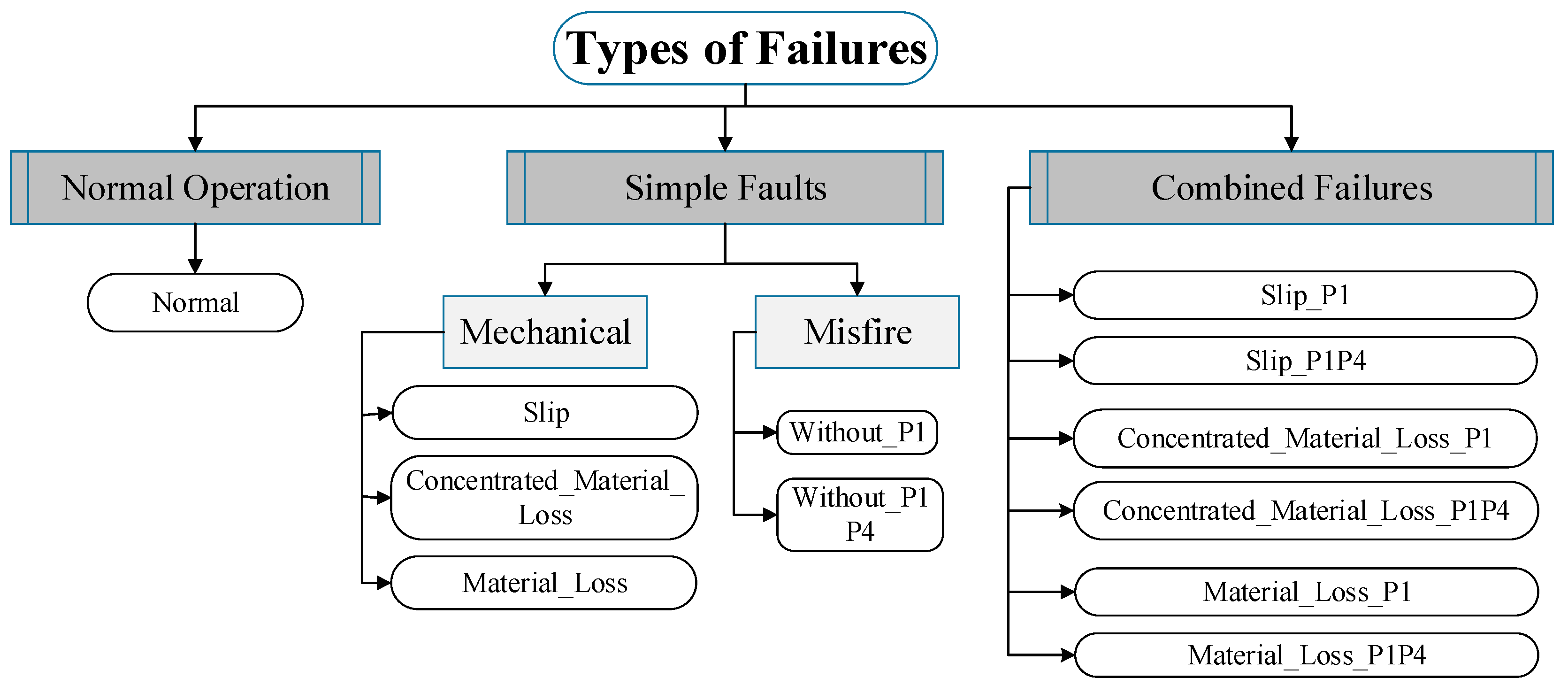

2.1. Faults in Internal Combustion Engines

2.2. Machine Learning Predictive Models

2.2.1. Decision Tree
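The decision tree in the hyperparameter table is configured with the entropy criterion. The sketch below illustrates what that criterion measures and is not the authors' code. It computes the Shannon entropy of a node and the information gain of a candidate split. The class labels are hypothetical.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy, in bits, of a sequence of class labels."""
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(parent, children):
    """Entropy of the parent node minus the size-weighted entropy of its children."""
    n = len(parent)
    weighted = sum(len(child) / n * entropy(child) for child in children)
    return entropy(parent) - weighted


# Hypothetical labels: a split that separates two classes perfectly gains 1 bit.
parent = ["Normal", "Normal", "Slip", "Slip"]
children = [["Normal", "Normal"], ["Slip", "Slip"]]
print(entropy(parent), information_gain(parent, children))  # 1.0 1.0
```

At each node, a tree grown with this criterion keeps the split with the largest information gain.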

2.2.2. Gradient Boosting

2.2.3. Random Forest

2.2.4. K-Nearest Neighbors
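The k-NN configuration in the hyperparameter table uses five neighbours, p = 1 (Manhattan distance) and distance weighting. The plain-Python sketch below implements that voting rule. The toy feature vectors and the labels `Normal` and `Misfire` are hypothetical. Returning an exact match outright is a simplification of how libraries handle zero distances.

```python
from collections import defaultdict


def manhattan(a, b):
    """Minkowski distance with p = 1: the sum of absolute coordinate differences."""
    return sum(abs(x - y) for x, y in zip(a, b))


def knn_predict(train_X, train_y, query, k=5):
    """Distance-weighted k-NN: each of the k nearest neighbours votes with weight 1/distance."""
    ranked = sorted((manhattan(x, query), label) for x, label in zip(train_X, train_y))
    votes = defaultdict(float)
    for dist, label in ranked[:k]:
        if dist == 0:
            return label  # simplification: an exact match decides the prediction outright
        votes[label] += 1.0 / dist
    return max(votes, key=votes.get)


# Hypothetical two-feature training set.
train_X = [(0, 0), (0, 1), (1, 0), (5, 5), (6, 5), (5, 6)]
train_y = ["Normal"] * 3 + ["Misfire"] * 3
print(knn_predict(train_X, train_y, (1, 1)))  # Normal
print(knn_predict(train_X, train_y, (4, 4)))  # Misfire
```

Distance weighting lets close neighbours outvote distant ones, even when the distant neighbours are in the majority among the k.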

2.2.5. ANN-MLP

2.3. Deep Learning Predictive Models

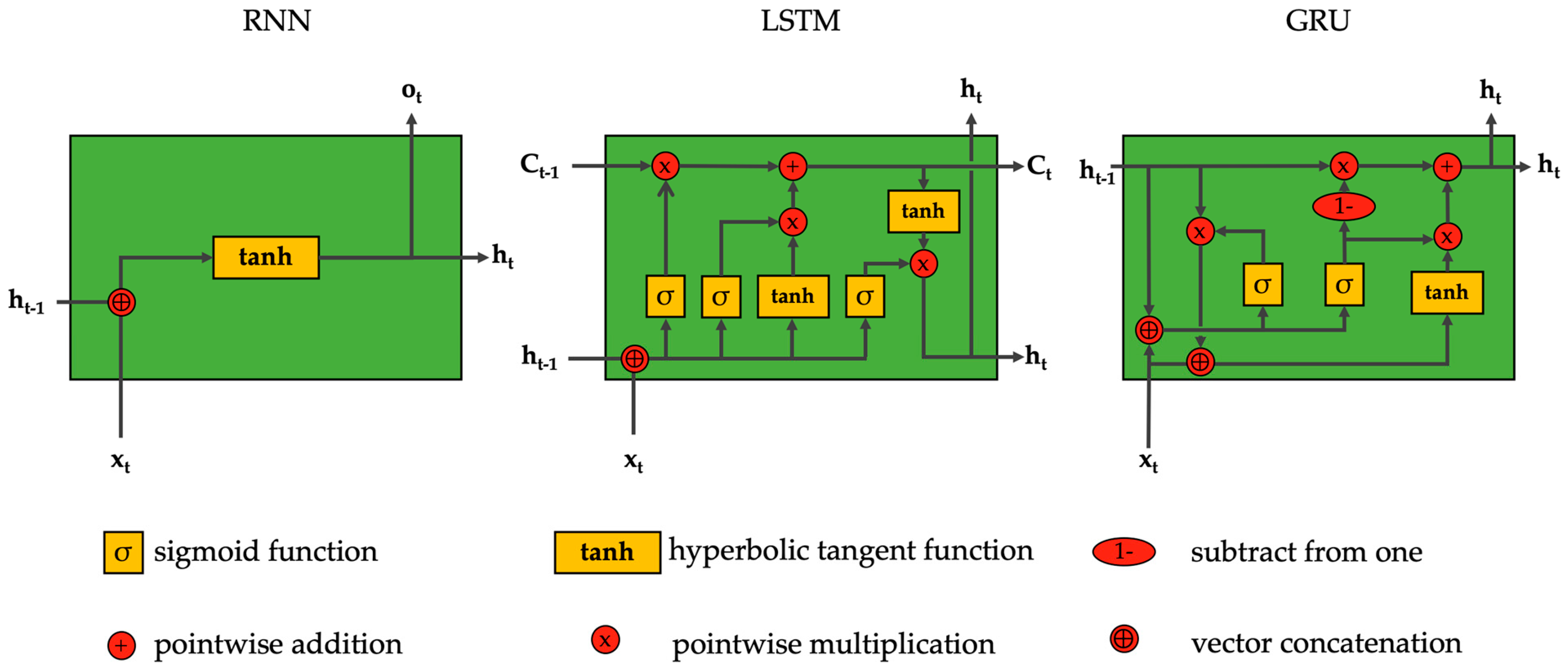

2.3.1. Recurrent Neural Networks

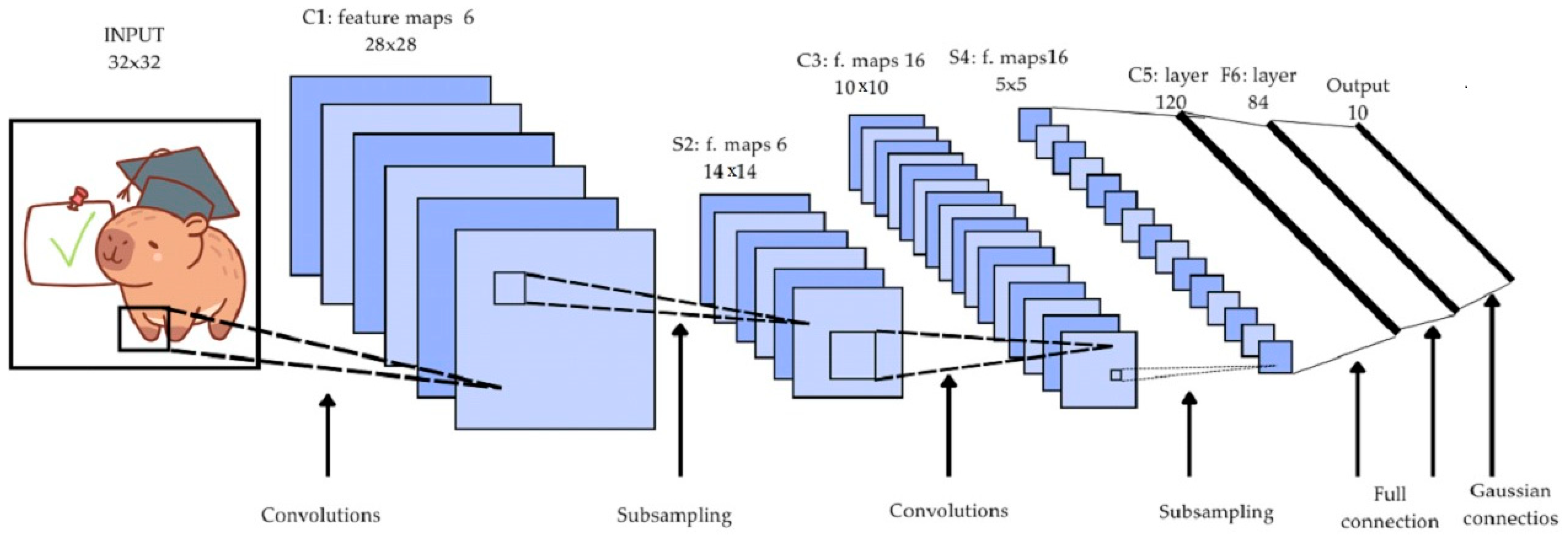

2.3.2. Convolutional Neural Networks

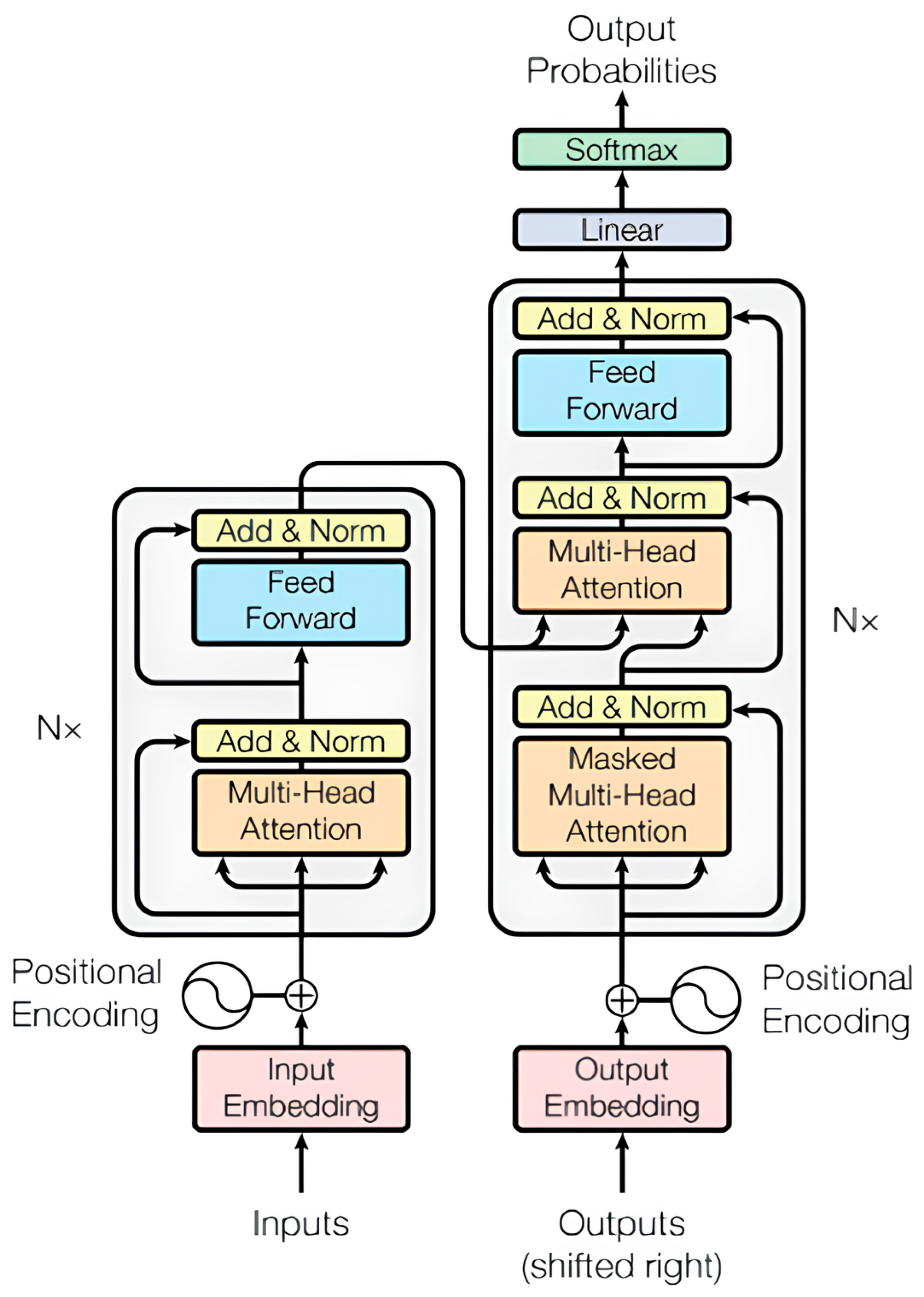

2.3.3. Transformers
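The Transformer and BiGRUT entries in the hyperparameter table use 4 attention heads, a width of 128 and a sequence length of 2. The NumPy sketch below shows scaled dot-product attention, softmax(QKᵀ/√d_k)V, split across heads. The random projection matrices are placeholders for learned weights. The feed-forward sublayer, residual connections and layer normalisation of a full Transformer block are omitted.

```python
import numpy as np


def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V for arrays of shape (seq_len, d_k)."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerically stable softmax
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ V, weights


def multi_head_self_attention(x, num_heads, rng):
    """Self-attention split across heads; random matrices stand in for learned projections."""
    _, d_model = x.shape
    assert d_model % num_heads == 0, "width must be divisible by the number of heads"
    d_head = d_model // num_heads
    Wq, Wk, Wv, Wo = (rng.standard_normal((d_model, d_model)) / np.sqrt(d_model) for _ in range(4))
    heads = []
    for h in range(num_heads):
        cols = slice(h * d_head, (h + 1) * d_head)  # columns belonging to head h
        out, _ = scaled_dot_product_attention(x @ Wq[:, cols], x @ Wk[:, cols], x @ Wv[:, cols])
        heads.append(out)
    return np.concatenate(heads, axis=-1) @ Wo  # (seq_len, d_model)


rng = np.random.default_rng(0)
x = rng.standard_normal((2, 128))  # sequence length 2, width 128
y = multi_head_self_attention(x, num_heads=4, rng=rng)
print(y.shape)  # (2, 128)
```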

2.4. Classification Measures
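All five measures in the results tables (accuracy, precision, recall, F1-score and MCC) can be derived from a multiclass confusion matrix. The sketch below assumes macro averaging for precision, recall and F1, because the averaging scheme behind the tables is not stated. For MCC it uses the multiclass form (Gorodkin's R_K statistic), which reduces to the usual binary MCC when there are two classes.

```python
import math


def classification_measures(cm):
    """Accuracy, macro precision/recall/F1 and multiclass MCC.

    cm[i][j] is the number of samples of true class i predicted as class j.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[c][c] for c in range(k))
    true_totals = [sum(cm[c]) for c in range(k)]                      # row sums
    pred_totals = [sum(cm[i][c] for i in range(k)) for c in range(k)]  # column sums

    precision = [cm[c][c] / pred_totals[c] if pred_totals[c] else 0.0 for c in range(k)]
    recall = [cm[c][c] / true_totals[c] if true_totals[c] else 0.0 for c in range(k)]
    f1 = [2 * p * r / (p + r) if p + r else 0.0 for p, r in zip(precision, recall)]

    # Multiclass MCC (Gorodkin's R_K); identical to the binary MCC when k = 2.
    cov_tp = correct * total - sum(t * p for t, p in zip(true_totals, pred_totals))
    cov_pp = total ** 2 - sum(p * p for p in pred_totals)
    cov_tt = total ** 2 - sum(t * t for t in true_totals)
    mcc = cov_tp / math.sqrt(cov_pp * cov_tt) if cov_pp and cov_tt else 0.0

    return {
        "accuracy": correct / total,
        "precision": sum(precision) / k,
        "recall": sum(recall) / k,
        "f1": sum(f1) / k,
        "mcc": mcc,
    }


# Toy two-class matrix: rows are true classes, columns are predicted classes.
m = classification_measures([[5, 1], [2, 2]])
print(round(m["accuracy"], 3), round(m["mcc"], 3))  # 0.7 0.356
```

MCC uses every cell of the confusion matrix, so it stays informative when one class dominates the predictions.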

2.5. Key Studies in the Scientific Literature

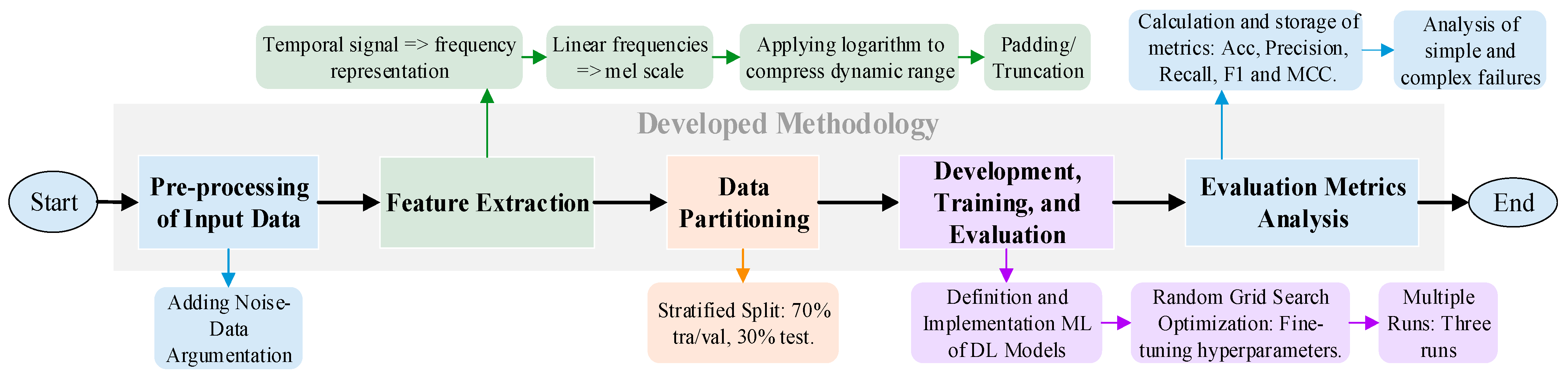

3. Materials and Methods

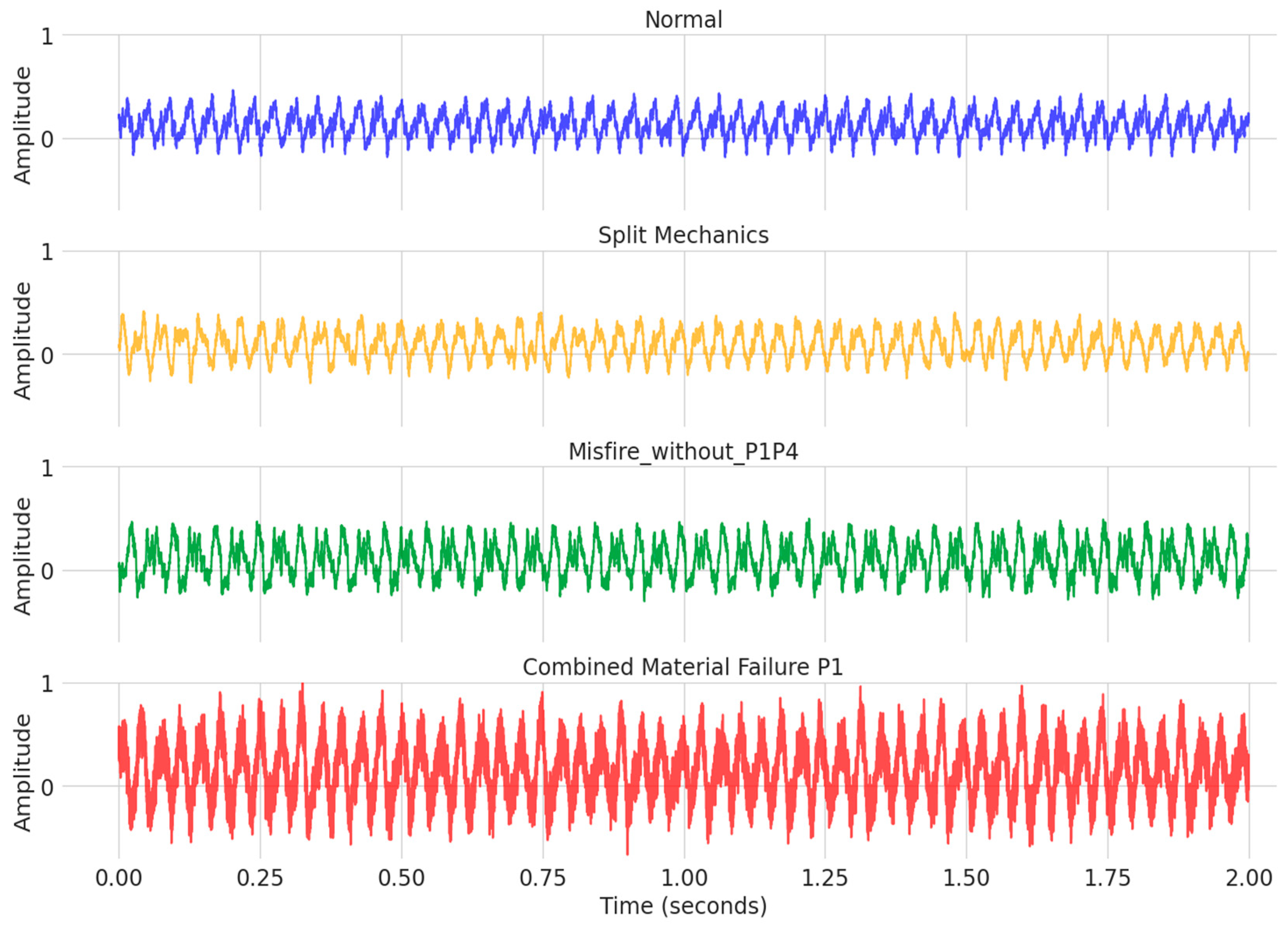

3.1. Dataset and ICE Failures

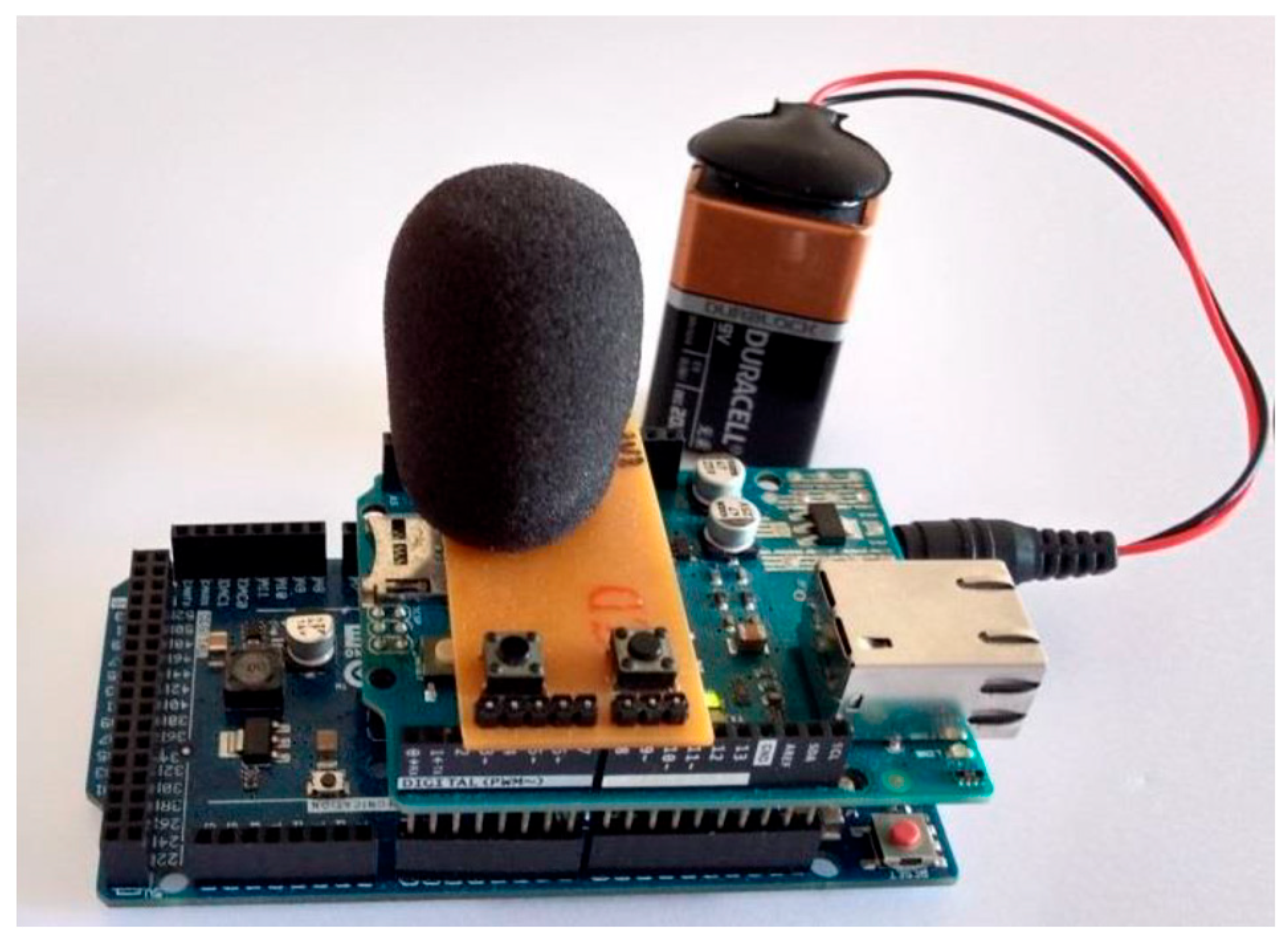

3.2. Experimental Setup and Instrumentation

3.2.1. Preprocessing of Input Data

3.2.2. Feature Extraction with Log-Mel Spectrogram
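The NumPy sketch below computes a log-Mel spectrogram in five steps. It frames the signal, applies a Hann window, takes the FFT power spectrum, projects it onto triangular filters spaced on the mel scale (HTK formula m = 2595 log10(1 + f/700)) and converts the result to decibels. The sample rate, FFT size, hop length and number of mel bands are illustrative assumptions, not the values used in this study. The signal must be at least `n_fft` samples long.

```python
import numpy as np


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + np.asarray(f) / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (np.asarray(m) / 2595.0) - 1.0)


def mel_filterbank(sr, n_fft, n_mels):
    """Triangular filters whose edges are evenly spaced on the mel scale."""
    n_bins = n_fft // 2 + 1
    fft_freqs = np.linspace(0.0, sr / 2.0, n_bins)  # centre frequency of each rFFT bin
    hz_points = mel_to_hz(np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_mels + 2))
    fb = np.zeros((n_mels, n_bins))
    for m in range(n_mels):
        left, centre, right = hz_points[m], hz_points[m + 1], hz_points[m + 2]
        rising = (fft_freqs - left) / (centre - left)
        falling = (right - fft_freqs) / (right - centre)
        fb[m] = np.maximum(0.0, np.minimum(rising, falling))
    return fb


def log_mel_spectrogram(signal, sr, n_fft=1024, hop=512, n_mels=64, eps=1e-10):
    """Return an (n_mels, n_frames) log-Mel spectrogram in dB."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    frames = np.stack([signal[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    power = np.abs(np.fft.rfft(frames, n=n_fft, axis=-1)) ** 2  # (n_frames, n_fft // 2 + 1)
    mel = power @ mel_filterbank(sr, n_fft, n_mels).T             # (n_frames, n_mels)
    return 10.0 * np.log10(mel + eps).T


sr = 22050
t = np.arange(sr) / sr  # one second of a 440 Hz test tone
S = log_mel_spectrogram(np.sin(2 * np.pi * 440.0 * t), sr)
print(S.shape)  # (64, 42)
```

In practice, a library routine such as librosa's `melspectrogram` followed by `power_to_db` would usually replace this hand-written version. Note that librosa's default mel scale follows the Slaney convention rather than the HTK formula used here.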

3.2.3. Data Partitioning

3.2.4. Development, Training, and Evaluation
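The Deep Learning rows of the hyperparameter table give candidate values in parentheses next to each hyperparameter, but they do not state how those candidates were searched. The sketch below assumes a seeded random search that draws the learning rate log-uniformly between 1 × 10⁻⁴ and 5 × 10⁻³. `evaluate` stands for a hypothetical callback that would train a model with a given configuration and return a validation score. Architecture-specific rows such as kernel size or attention heads are left out for brevity.

```python
import math
import random

# Candidate values copied from the parenthesised ranges in the Deep Learning rows
# of the hyperparameter table (architecture-specific rows omitted).
SEARCH_SPACE = {
    "layers": [1, 2, 3],
    "units": [32, 64, 128, 256],
    "batch_size": [64, 128],
    "dropout": [0.0, 0.1, 0.2, 0.3],
    "epochs": [20, 30],
    "optimizer": ["Adam", "SGD", "RMSprop"],
    "sequence_length": [2, 5],
    "activation": ["ReLU", "tanh"],
}
LEARNING_RATE_BOUNDS = (1e-4, 5e-3)


def sample_config(rng):
    """Draw one configuration: uniform over each list, log-uniform for the learning rate."""
    config = {name: rng.choice(values) for name, values in SEARCH_SPACE.items()}
    low, high = (math.log10(bound) for bound in LEARNING_RATE_BOUNDS)
    config["learning_rate"] = 10 ** rng.uniform(low, high)
    return config


def random_search(evaluate, n_trials, seed=42):
    """Return (best_score, best_config); `evaluate` is a hypothetical train-and-validate callback."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        config = sample_config(rng)
        score = evaluate(config)
        if best is None or score > best[0]:
            best = (score, config)
    return best


def toy_score(config):
    # Hypothetical stand-in for validation accuracy: prefers learning rates near 1e-3.
    return -abs(math.log10(config["learning_rate"]) + 3)


best_score, best_config = random_search(toy_score, n_trials=20)
print(best_config)
```

Because the generator is seeded, repeating the search with the same seed returns the same configuration. This matters when results are compared across the seeds listed in Appendix A.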

3.2.5. Evaluation Metrics Analysis

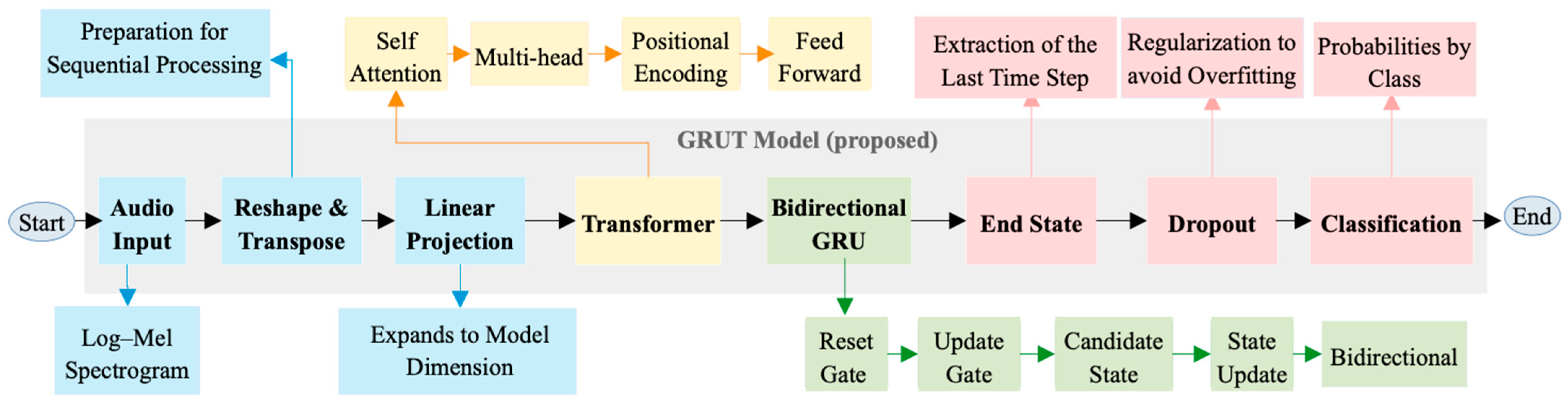

3.3. Proposed BiGRUT Hybrid Model

4. Results and Discussion

4.1. New Dataset for Benchmarking

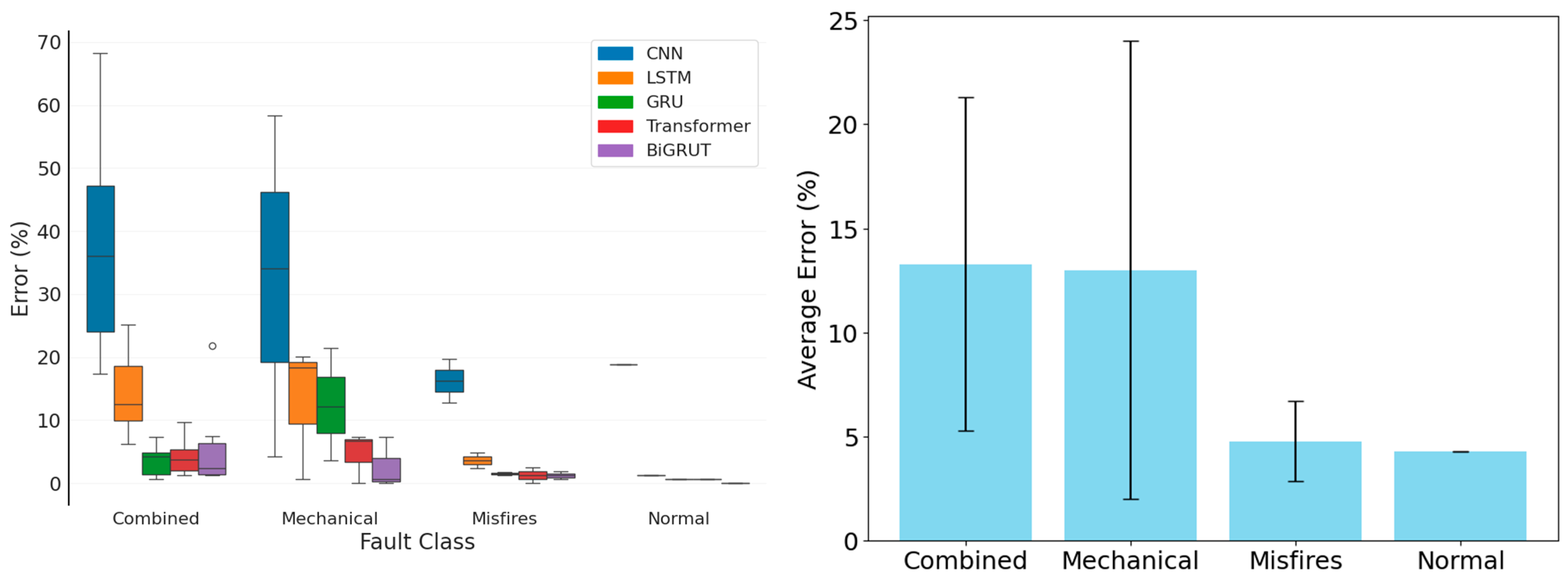

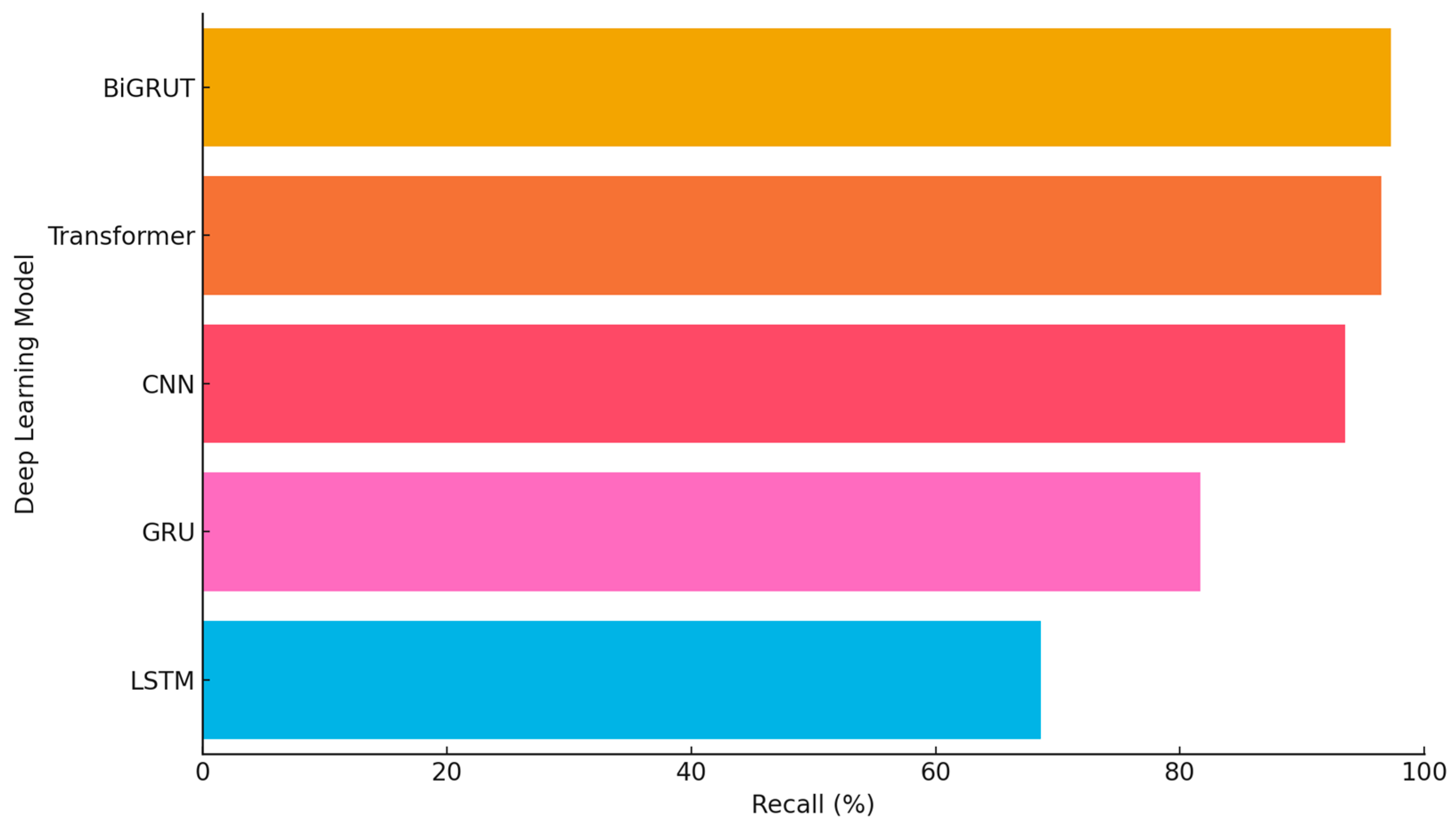

4.2. Classification Performance by Metrics

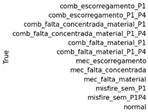

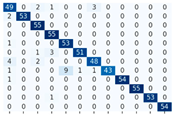

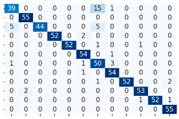

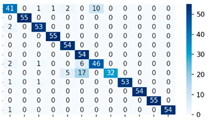

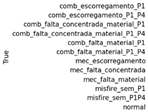

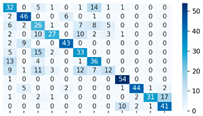

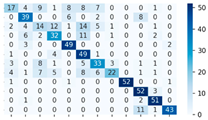

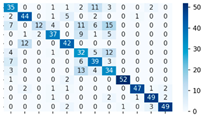

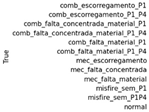

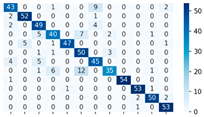

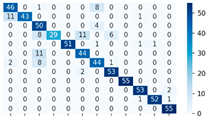

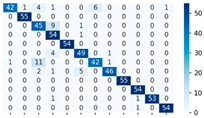

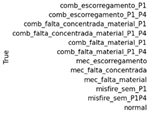

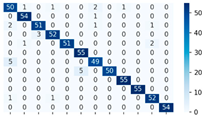

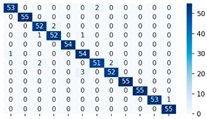

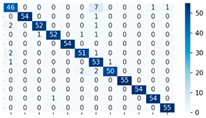

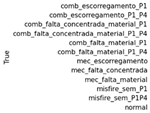

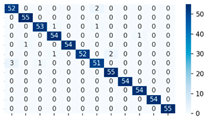

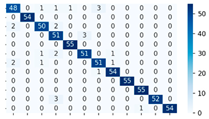

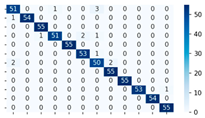

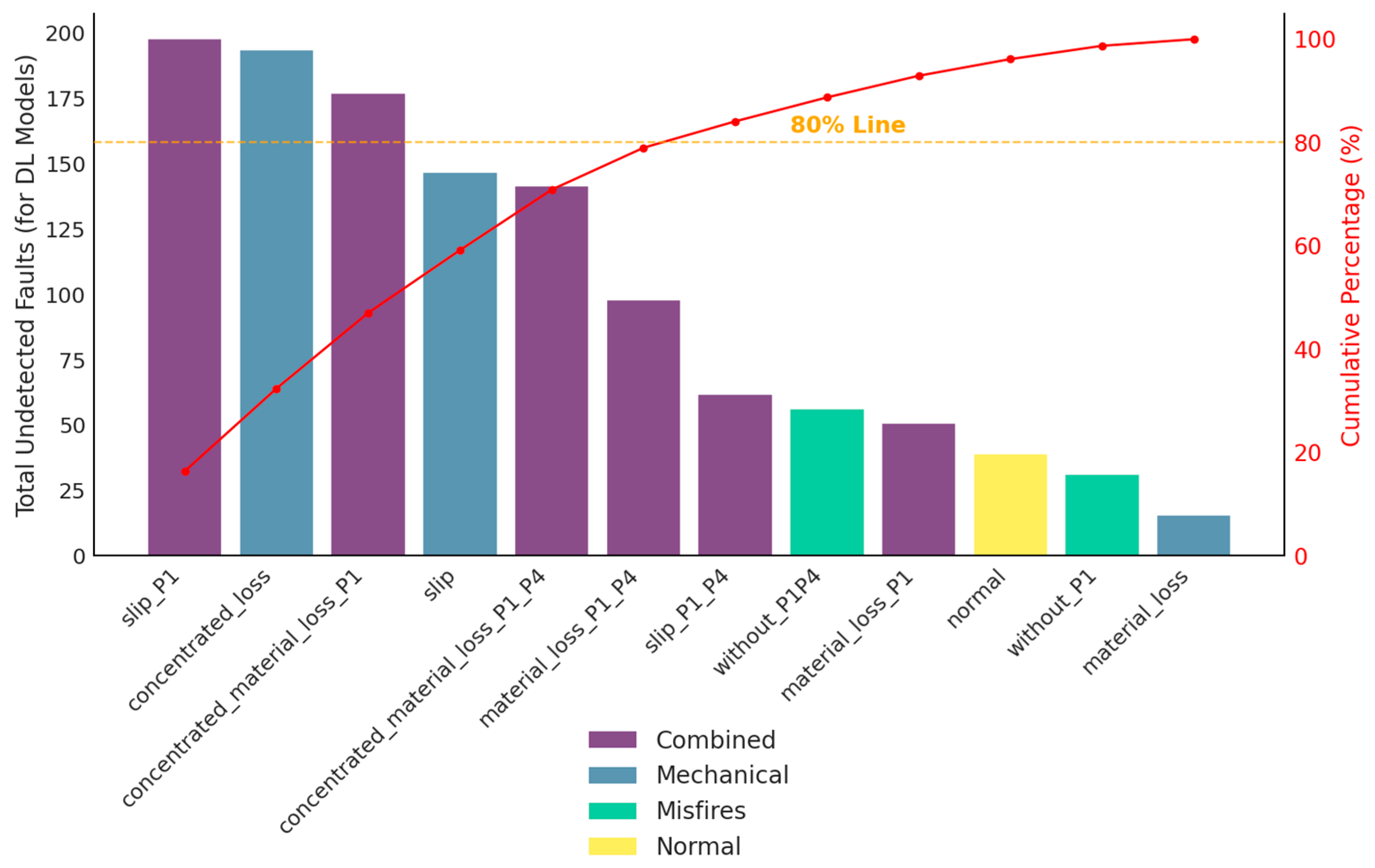

4.3. Performance Analysis by Fault Type
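For each model and fault subclass, the per-fault-type table reports the detected and undetected samples and the error percentage. It also gives the mean ± standard deviation of that error within each fault class. The values are consistent with 182 test samples per subclass (Faults Detected + Undetected Faults = 182 in every row shown) and with the sample (n − 1) standard deviation. Under those inferred assumptions, the sketch below reproduces the CNN entry for the Mechanical class.

```python
import statistics

# Inferred from the table: Faults Detected + Undetected Faults = 182 in every row shown.
SAMPLES_PER_SUBCLASS = 182


def error_percent(undetected, n=SAMPLES_PER_SUBCLASS):
    """Percentage of a subclass's test samples that were not recognised."""
    return 100.0 * undetected / n


def class_summary(undetected_by_subclass):
    """Mean and sample standard deviation of the subclass errors within one fault class."""
    errors = [error_percent(u) for u in undetected_by_subclass.values()]
    return statistics.mean(errors), statistics.stdev(errors)


# CNN, Mechanical class: undetected counts copied from the per-fault-type table.
cnn_mechanical = {"Slip": 22.180, "Concentrated_loss": 39.057, "Material_loss": 6.619}
mean, sd = class_summary(cnn_mechanical)
print(f"{mean:.2f} ± {sd:.2f}")  # 12.43 ± 8.91
```

The Normal class has a single subclass, so `statistics.stdev` would raise `StatisticsError` for it. This is consistent with the table reporting only the error itself for that class.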

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| Model | Seed 42 | Seed 456 | Seed 789 |
|---|---|---|---|
| CNN |  |  |  |
| LSTM |  |  |  |
| GRU |  |  |  |
| Transformer |  |  |  |
| BiGRUT |  |  |  |


Machine Learning

| Hyperparameters | SVC | RF | GB | DT | k-NN | ANN-MLP |
|---|---|---|---|---|---|---|
| Scaler | Robust Scaler | Standard Scaler | Robust Scaler | - | - | Robust Scaler |
| C/N Estimators/Neighbors | C = 2.40 | 64 | 64 | - | 5 | (64, 32) |
| Kernel/Criterion | linear | log2 (max_features) | learning_rate = 0.001 | entropy | p = 1 | logistic |
| Max Depth | - | 10 | 8 | None | - | - |
| Min Samples Split | - | 5 | 5 | 5 | - | - |
| Min Samples Leaf | - | 5 | 5 | 2 | - | - |
| Bootstrap/Subsample | - | False | 0.9 | - | - | - |
| Weights/Solver | - | - | - | - | distance | lbfgs |
| Learning Rate/Alpha | - | - | - | - | - | alpha = 0.001 |
| Max Iter | - | - | - | - | - | 1000 |

Deep Learning

| Hyperparameters (candidate values) | CNN | LSTM | GRU | Transformer | BiGRUT |
|---|---|---|---|---|---|
| Layers (1–3) | 3 (Conv2D) | 1 | 1 | 1 (Transformer blocks) | 1 (Transformer)/1 (LSTM) |
| Units/Layer (32, 64, 128, 256) | 32 | 128 | 128 | 128 | 128/128 |
| Batch Size (64, 128) | 128 | 128 | 128 | 128 | 128 |
| Dropout (0.0, 0.1, 0.2, 0.3) | 0.2 | 0.1 | 0.2 | 0.2 | 0.2 |
| Epochs (20, 30) | 30 | 30 | 30 | 30 | 30 |
| Optimizer (Adam, SGD, RMSprop) | AdamW | AdamW | AdamW | AdamW | AdamW |
| Sequence Length (2, 5) | 2 | 2 | 2 | 2 | 2 |
| Learning Rate (1 × 10⁻⁴ to 5 × 10⁻³) | 0.001 | 0.0032 | 0.001 | 0.001 | 0.001 |
| Activation Function (ReLU, tanh) | ReLU | tanh | tanh | ReLU | ReLU + tanh |
| Convolutional Layers (1, 2, 3) | 3 | - | - | - | - |
| Kernel (3 × 3, 5 × 5) | 3 × 3 | - | - | - | - |
| Pooling (Max, Average) | Max + adaptive average | - | - | - | - |
| Attention Heads (2, 4) | - | - | - | 4 | 4 |
| Feed-Forward Layer Dimension (32, 64, 128, 256) | - | - | - | 128 | 128 |
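Several names in the table suggest scikit-learn: Robust Scaler, the `lbfgs` solver and the `log2` setting for `max_features`. The library itself is not named, however. Assuming scikit-learn, the configurations in the Machine Learning part of the table map onto its estimators roughly as shown below. The row-to-parameter mapping is an interpretation, for example reading `learning_rate = 0.001` as the gradient-boosting learning rate and `(64, 32)` as the MLP hidden-layer sizes. The `random_state` argument is an added assumption.

```python
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.neighbors import KNeighborsClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import RobustScaler, StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier


def build_models(random_state=42):
    """Estimators configured with the values in the Machine Learning rows (scikit-learn assumed)."""
    return {
        "SVC": make_pipeline(RobustScaler(), SVC(C=2.40, kernel="linear")),
        "RF": make_pipeline(
            StandardScaler(),
            RandomForestClassifier(
                n_estimators=64, max_features="log2", max_depth=10,
                min_samples_split=5, min_samples_leaf=5, bootstrap=False,
                random_state=random_state,
            ),
        ),
        "GB": make_pipeline(
            RobustScaler(),
            GradientBoostingClassifier(
                n_estimators=64, learning_rate=0.001, max_depth=8,
                min_samples_split=5, min_samples_leaf=5, subsample=0.9,
                random_state=random_state,
            ),
        ),
        # DT and k-NN have no scaler in the table ("-").
        "DT": DecisionTreeClassifier(
            criterion="entropy", max_depth=None, min_samples_split=5,
            min_samples_leaf=2, random_state=random_state,
        ),
        "k-NN": KNeighborsClassifier(n_neighbors=5, p=1, weights="distance"),
        "ANN-MLP": make_pipeline(
            RobustScaler(),
            MLPClassifier(
                hidden_layer_sizes=(64, 32), activation="logistic", solver="lbfgs",
                alpha=0.001, max_iter=1000, random_state=random_state,
            ),
        ),
    }


models = build_models()
```

Every entry exposes the usual `fit`/`predict` interface, so one training and evaluation loop over `models.items()` can be repeated for each seed.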

| Model | Seed | Accuracy | Precision | Recall | F1-Score | MCC |
|---|---|---|---|---|---|---|
| Decision Tree | 1 | 0.515 | 0.522 | 0.515 | 0.516 | 0.472 |
|  | 2 | 0.521 | 0.527 | 0.521 | 0.521 | 0.478 |
|  | 3 | 0.550 | 0.549 | 0.544 | 0.544 | 0.509 |
|  | Mean | 0.529 | 0.533 | 0.527 | 0.527 | 0.486 |
|  | Standard Deviation | 0.019 | 0.014 | 0.015 | 0.015 | 0.020 |
| Gradient Boosting | 1 | 0.759 | 0.765 | 0.759 | 0.753 | 0.739 |
|  | 2 | 0.735 | 0.747 | 0.735 | 0.734 | 0.712 |
|  | 3 | 0.736 | 0.688 | 0.697 | 0.687 | 0.713 |
|  | Mean | 0.743 | 0.733 | 0.730 | 0.725 | 0.721 |
|  | Standard Deviation | 0.014 | 0.040 | 0.031 | 0.034 | 0.015 |
| k-NN | 1 | 0.866 | 0.871 | 0.866 | 0.867 | 0.854 |
|  | 2 | 0.851 | 0.858 | 0.850 | 0.849 | 0.838 |
|  | 3 | 0.856 | 0.864 | 0.848 | 0.852 | 0.843 |
|  | Mean | 0.858 | 0.864 | 0.855 | 0.856 | 0.845 |
|  | Standard Deviation | 0.008 | 0.007 | 0.010 | 0.010 | 0.008 |
| ANN-MLP | 1 | 0.880 | 0.884 | 0.880 | 0.880 | 0.869 |
|  | 2 | 0.858 | 0.858 | 0.858 | 0.857 | 0.846 |
|  | 3 | 0.883 | 0.879 | 0.877 | 0.876 | 0.873 |
|  | Mean | 0.874 | 0.874 | 0.872 | 0.871 | 0.863 |
|  | Standard Deviation | 0.014 | 0.014 | 0.012 | 0.012 | 0.015 |
| Random Forest | 1 | 0.809 | 0.811 | 0.809 | 0.801 | 0.794 |
|  | 2 | 0.828 | 0.832 | 0.827 | 0.821 | 0.813 |
|  | 3 | 0.838 | 0.845 | 0.817 | 0.819 | 0.824 |
|  | Mean | 0.825 | 0.829 | 0.818 | 0.814 | 0.810 |
|  | Standard Deviation | 0.015 | 0.017 | 0.009 | 0.011 | 0.015 |
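The Mean and Standard Deviation rows aggregate each metric over the three seeds. The reported deviations match the sample (n − 1) definition. For the Decision Tree accuracies 0.515, 0.521 and 0.550, the population formula would give about 0.015 rather than the reported 0.019. A minimal sketch with Python's `statistics` module:

```python
import statistics


def summarise(per_seed):
    """Mean and sample (n - 1) standard deviation of one metric across seeds."""
    return statistics.mean(per_seed), statistics.stdev(per_seed)


# Decision Tree accuracy for seeds 1, 2 and 3, copied from the table above.
mean, sd = summarise([0.515, 0.521, 0.550])
print(f"{mean:.3f} ± {sd:.3f}")  # 0.529 ± 0.019
```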

| Model | Seed | Accuracy | Precision | Recall | F1-Score | MCC |
|---|---|---|---|---|---|---|
| CNN | 1 | 0.950 | 0.953 | 0.950 | 0.949 | 0.945 |
|  | 2 | 0.933 | 0.940 | 0.933 | 0.934 | 0.927 |
|  | 3 | 0.924 | 0.934 | 0.924 | 0.922 | 0.918 |
|  | Mean | 0.935 | 0.942 | 0.935 | 0.935 | 0.930 |
|  | Standard Deviation | 0.013 | 0.010 | 0.013 | 0.014 | 0.014 |
| LSTM | 1 | 0.648 | 0.675 | 0.648 | 0.645 | 0.618 |
|  | 2 | 0.691 | 0.698 | 0.690 | 0.676 | 0.666 |
|  | 3 | 0.720 | 0.752 | 0.720 | 0.716 | 0.696 |
|  | Mean | 0.686 | 0.708 | 0.686 | 0.679 | 0.660 |
|  | Standard Deviation | 0.036 | 0.039 | 0.036 | 0.036 | 0.039 |
| GRU | 1 | 0.870 | 0.875 | 0.871 | 0.870 | 0.859 |
|  | 2 | 0.877 | 0.894 | 0.876 | 0.875 | 0.867 |
|  | 3 | 0.919 | 0.926 | 0.919 | 0.920 | 0.912 |
|  | Mean | 0.889 | 0.898 | 0.889 | 0.888 | 0.880 |
|  | Standard Deviation | 0.027 | 0.026 | 0.027 | 0.027 | 0.029 |
| Transformer | 1 | 0.957 | 0.959 | 0.957 | 0.957 | 0.954 |
|  | 2 | 0.977 | 0.977 | 0.977 | 0.977 | 0.975 |
|  | 3 | 0.960 | 0.963 | 0.960 | 0.961 | 0.957 |
|  | Mean | 0.965 | 0.966 | 0.965 | 0.965 | 0.962 |
|  | Standard Deviation | 0.011 | 0.010 | 0.011 | 0.011 | 0.012 |
| BiGRUT (proposed) | 1 | 0.980 | 0.980 | 0.980 | 0.980 | 0.978 |
|  | 2 | 0.960 | 0.961 | 0.960 | 0.960 | 0.957 |
|  | 3 | 0.977 | 0.977 | 0.977 | 0.977 | 0.975 |
|  | Mean | 0.973 | 0.973 | 0.973 | 0.973 | 0.970 |
|  | Standard Deviation | 0.011 | 0.010 | 0.011 | 0.011 | 0.012 |

| Model | Fault Class | Fault Subclass | Average Recall | Faults Detected | Undetected Faults | Error (%) | Average Error (%) per Class (Mean ± SD) |
|---|---|---|---|---|---|---|---|
| CNN | Combined | Slip_P1 | 0.782 | 142.294 | 39.706 | 21.817 | 6.09 ± [8.05] |
| | | Slip_P1_P4 | 0.988 | 179.792 | 2.208 | 1.213 | |
| | | Concentrated_material_loss_P1 | 0.926 | 168.556 | 13.444 | 7.387 | |
| | | Concentrated_material_loss_P1_P4 | 0.988 | 179.755 | 2.245 | 1.233 | |
| | | Material_loss_P1 | 0.982 | 178.633 | 3.367 | 1.850 | |
| | | Material_loss_P1_P4 | 0.970 | 176.485 | 5.515 | 3.030 | |
| | Mechanical | Slip | 0.878 | 159.820 | 22.180 | 12.187 | 12.43 ± [8.91] |
| | | Concentrated_loss | 0.785 | 142.943 | 39.057 | 21.460 | |
| | | Material_loss | 0.964 | 175.381 | 6.619 | 3.637 | |
| | Misfires | Without_P1 | 0.988 | 179.792 | 2.208 | 1.213 | 1.53 ± [0.45] |
| | | Without_P1P4 | 0.982 | 178.633 | 3.367 | 1.850 | |
| | Normal | Normal | 0.994 | 180.896 | 1.104 | 0.607 | 0.607 |
| LSTM | Combined | Slip_P1 | 0.509 | 92.656 | 89.344 | 49.090 | 38.09 ± [18.98] |
| | | Slip_P1_P4 | 0.782 | 142.294 | 39.706 | 21.817 | |
| | | Concentrated_material_loss_P1 | 0.317 | 57.645 | 124.355 | 68.327 | |
| | | Concentrated_material_loss_P1_P4 | 0.585 | 106.543 | 75.457 | 41.460 | |
| | | Material_loss_P1 | 0.827 | 150.544 | 31.456 | 17.283 | |
| | | Material_loss_P1_P4 | 0.695 | 126.399 | 55.601 | 30.550 | |
| | Mechanical | Slip | 0.659 | 119.865 | 62.135 | 34.140 | 32.26 ± [27.13] |
| | | Concentrated_loss | 0.416 | 75.700 | 106.300 | 58.407 | |
| | | Material_loss | 0.958 | 174.283 | 7.717 | 4.240 | |
| | Misfires | Without_P1 | 0.872 | 158.698 | 23.302 | 12.803 | 16.25 ± [4.87] |
| | | Without_P1P4 | 0.803 | 146.170 | 35.830 | 19.687 | |
| | Normal | Normal | 0.811 | 147.541 | 34.459 | 18.933 | 18.933 |
| GRU | Combined | Slip_P1 | 0.794 | 144.496 | 37.504 | 20.607 | 14.33 ± [7.17] |
| | | Slip_P1_P4 | 0.909 | 165.456 | 16.544 | 9.090 | |
| | | Concentrated_material_loss_P1 | 0.878 | 159.857 | 22.143 | 12.167 | |
| | | Concentrated_material_loss_P1_P4 | 0.749 | 136.263 | 45.737 | 25.130 | |
| | | Material_loss_P1 | 0.938 | 170.765 | 11.235 | 6.173 | |
| | | Material_loss_P1_P4 | 0.872 | 158.734 | 23.266 | 12.783 | |
| | Mechanical | Slip | 0.799 | 145.412 | 36.588 | 20.103 | 12.99 ± [10.77] |
| | | Concentrated_loss | 0.817 | 148.749 | 33.251 | 18.270 | |
| | | Material_loss | 0.994 | 180.896 | 1.104 | 0.607 | |
| | Misfires | Without_P1 | 0.976 | 177.583 | 4.417 | 2.427 | 3.67 ± [1.76] |
| | | Without_P1P4 | 0.951 | 173.052 | 8.948 | 4.917 | |
| | Normal | Normal | 0.988 | 179.774 | 2.226 | 1.223 | 1.223 |
| Transformer | Combined | Slip_P1 | 0.903 | 164.352 | 17.648 | 9.697 | 4.26 ± [3.15] |
| | | Slip_P1_P4 | 0.988 | 179.792 | 2.208 | 1.213 | |
| | | Concentrated_material_loss_P1 | 0.945 | 172.039 | 9.961 | 5.473 | |
| | | Concentrated_material_loss_P1_P4 | 0.951 | 173.143 | 8.857 | 4.867 | |
| | | Material_loss_P1 | 0.981 | 178.627 | 3.373 | 1.853 | |
| | | Material_loss_P1_P4 | 0.975 | 177.523 | 4.477 | 2.460 | |
| | Mechanical | Slip | 0.933 | 169.764 | 12.236 | 6.723 | 4.68 ± [4.06] |
| | | Concentrated_loss | 0.927 | 168.684 | 13.316 | 7.317 | |
| | | Material_loss | 1.000 | 182.000 | 0.000 | 0.000 | |
| | Misfires | Without_P1 | 1.000 | 182.000 | 0.000 | 0.000 | 1.23 ± [1.74] |
| | | Without_P1P4 | 0.975 | 177.529 | 4.471 | 2.457 | |
| | Normal | Normal | 1.000 | 182.000 | 0.000 | 0.000 | 0.000 |
| BiGRUT (proposed) | Combined | Slip_P1 | 0.926 | 168.605 | 13.395 | 7.360 | 3.67 ± [2.66] |
| | | Slip_P1_P4 | 0.994 | 180.896 | 1.104 | 0.607 | |
| | | Concentrated_material_loss_P1 | 0.963 | 175.296 | 6.704 | 3.683 | |
| | | Concentrated_material_loss_P1_P4 | 0.951 | 173.112 | 8.888 | 4.883 | |
| | | Material_loss_P1 | 0.994 | 180.896 | 1.104 | 0.607 | |
| | | Material_loss_P1_P4 | 0.951 | 173.161 | 8.839 | 4.857 | |
| | Mechanical | Slip | 0.927 | 168.684 | 13.316 | 7.317 | 2.64 ± [4.06] |
| | | Concentrated_loss | 0.994 | 180.896 | 1.104 | 0.607 | |
| | | Material_loss | 1.000 | 182.000 | 0.000 | 0.000 | |
| | Misfires | Without_P1 | 0.994 | 180.878 | 1.122 | 0.617 | 1.22 ± [0.85] |
| | | Without_P1P4 | 0.982 | 178.694 | 3.306 | 1.817 | |
| | Normal | Normal | 0.994 | 180.896 | 1.104 | 0.607 | 0.607 |
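
In each row, Error (%) is the share of a subclass's test samples that went undetected. Faults Detected plus Undetected Faults equals 182 in every row, which suggests 182 test samples per subclass (our inference; the table does not state it). The per-class figure is then the mean ± sample standard deviation of the subclass errors within that class. A minimal Python sketch reproducing the CNN Mechanical group:

```python
from statistics import mean, stdev

# CNN rows of the "Mechanical" class: (subclass, faults detected, undetected faults).
# detected + undetected is taken as the per-subclass test-set size (assumed 182).
cnn_mechanical = [
    ("Slip", 159.820, 22.180),
    ("Concentrated_loss", 142.943, 39.057),
    ("Material_loss", 175.381, 6.619),
]

errors = []
for subclass, detected, undetected in cnn_mechanical:
    total = detected + undetected
    error_pct = 100.0 * undetected / total  # Error (%) = share of samples missed
    errors.append(error_pct)
    print(f"{subclass}: recall={detected / total:.3f}, error={error_pct:.3f}%")

# Per-class summary: mean ± sample (n - 1) standard deviation of the subclass errors.
print(f"Mechanical: {mean(errors):.2f} ± [{stdev(errors):.2f}]")
```

The printed recalls (0.878, 0.785, 0.964) and errors (12.187%, 21.460%, 3.637%) match the CNN Mechanical rows, and the last line matches the reported 12.43 ± [8.91]. A single-subclass class such as Normal has no spread to report, which is why that column holds a single value for it.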

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the International Institute of Knowledge Innovation and Invention. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Torres, N.N.S.; Maciel, J.N.; Lima, T.L.d.V.; Gazziro, M.; Filho, A.C.L.; Carmo, J.P.P.d.; Ando Junior, O.H. Fault Diagnosis in Internal Combustion Engines Using Artificial Intelligence Predictive Models. Appl. Syst. Innov. 2025, 8, 147. https://doi.org/10.3390/asi8050147

Torres NNS, Maciel JN, Lima TLdV, Gazziro M, Filho ACL, Carmo JPPd, Ando Junior OH. Fault Diagnosis in Internal Combustion Engines Using Artificial Intelligence Predictive Models. Applied System Innovation. 2025; 8(5):147. https://doi.org/10.3390/asi8050147

Chicago/Turabian Style: Torres, Norah Nadia Sánchez, Joylan Nunes Maciel, Thyago Leite de Vasconcelos Lima, Mario Gazziro, Abel Cavalcante Lima Filho, João Paulo Pereira do Carmo, and Oswaldo Hideo Ando Junior. 2025. "Fault Diagnosis in Internal Combustion Engines Using Artificial Intelligence Predictive Models" Applied System Innovation 8, no. 5: 147. https://doi.org/10.3390/asi8050147

APA Style: Torres, N. N. S., Maciel, J. N., Lima, T. L. d. V., Gazziro, M., Filho, A. C. L., Carmo, J. P. P. d., & Ando Junior, O. H. (2025). Fault Diagnosis in Internal Combustion Engines Using Artificial Intelligence Predictive Models. Applied System Innovation, 8(5), 147. https://doi.org/10.3390/asi8050147