A Four-Dimensional Analysis of Explainable AI in Energy Forecasting: A Domain-Specific Systematic Review

Abstract

1. Introduction

The Observed Gaps, Work Contribution, and Research Questions

- No systematic cross-domain analysis of explanation quality: Existing reviews focus on isolated domains (e.g., load or price forecasting), but none systematically compare how “trust” and “fidelity” are conceptualized and evaluated across load, price, and renewable generation forecasting.

- Lack of domain-specific trust constructs: While trust is often mentioned, prior work rarely distinguishes between context-dependent interpretations such as “reliability under volatility” in markets, “physical plausibility” in generation, or user alignment in demand-side management.

- Insufficient alignment with user roles: Explanations are frequently evaluated in technical terms without considering the needs of real-world stakeholders (e.g., grid operators, market analysts, building managers), limiting operational relevance.

- Limited assessment of robustness and feasibility: There is minimal reporting on the computational cost, stability, or practical deployability of XAI methods in real-time or safety-critical energy systems.

2. Core Concepts and Taxonomy of Explainable AI

2.1. Interpretability vs. Explainability

- Explainability: The ability to generate post hoc explanations for complex models (e.g., DNNs, ensembles) using external techniques such as SHAP or LIME, which quantify the contribution of input features to individual or aggregate predictions. These methods are essential for diagnosing and validating black-box forecasts in energy markets and power systems [1,2,5,6,11,18].

2.2. The Role of Trust in XAI for Energy Forecasting

- Faithful: Accurately reflect the model’s internal logic.

- Consistent: Exhibit stable behavior across time and perturbations.

- Actionable: Enable users to make informed operational decisions.

2.3. A Multi-Axis Taxonomy of XAI Methods

- Ante-hoc (inherently interpretable): Models designed with transparent structure (e.g., linear regression, decision trees) [21].

- Post hoc (external explanation): Techniques applied to complex ‘black box’ models after they have been trained, typically to explain their predictions. This includes external methods (e.g., SHAP, LIME) and the analysis of internal mechanisms like attention weights [22].

2.4. Time-Series Context and Understandability

2.5. Contextualizing XAI in Energy Systems

3. Review Methodology

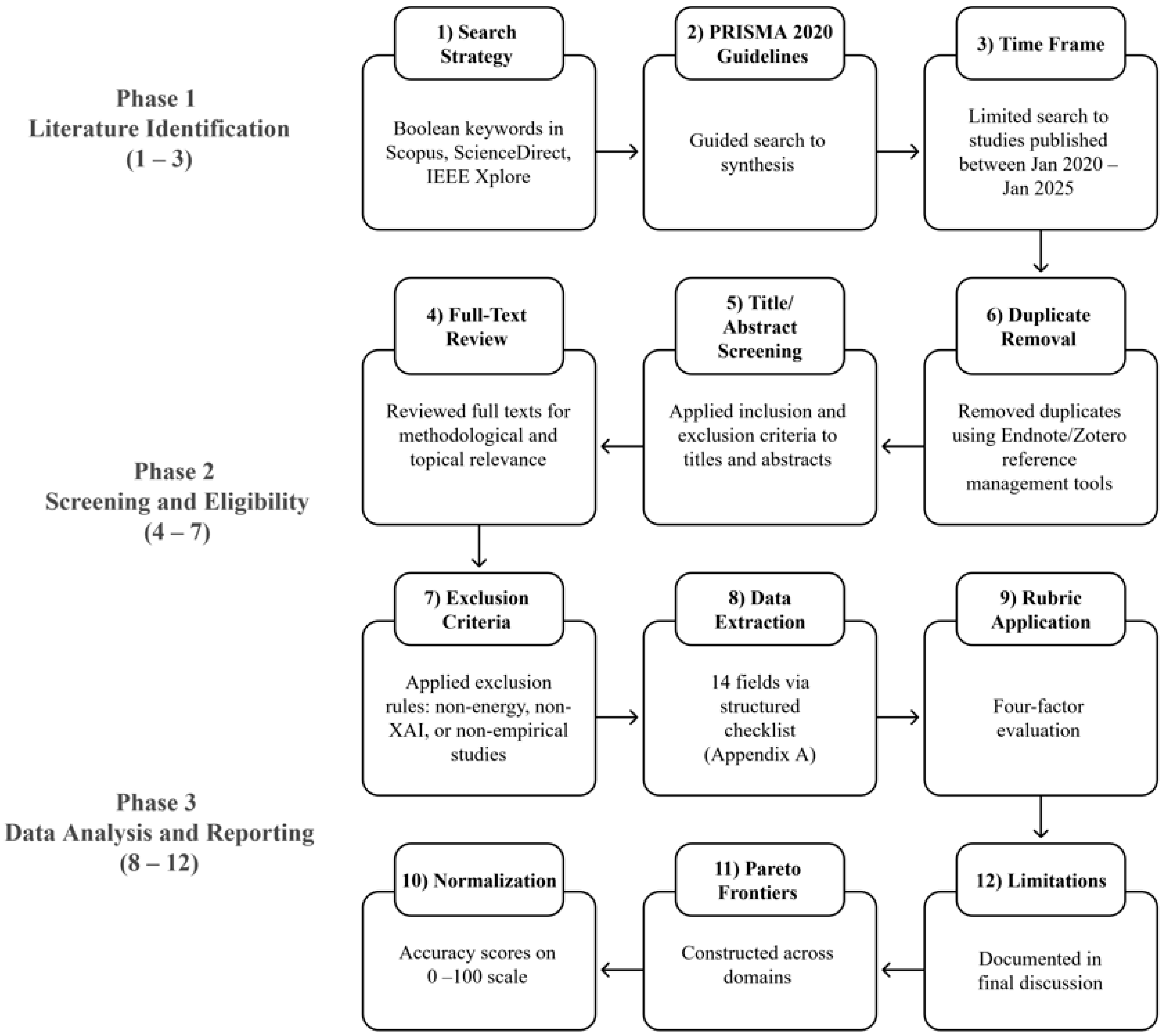

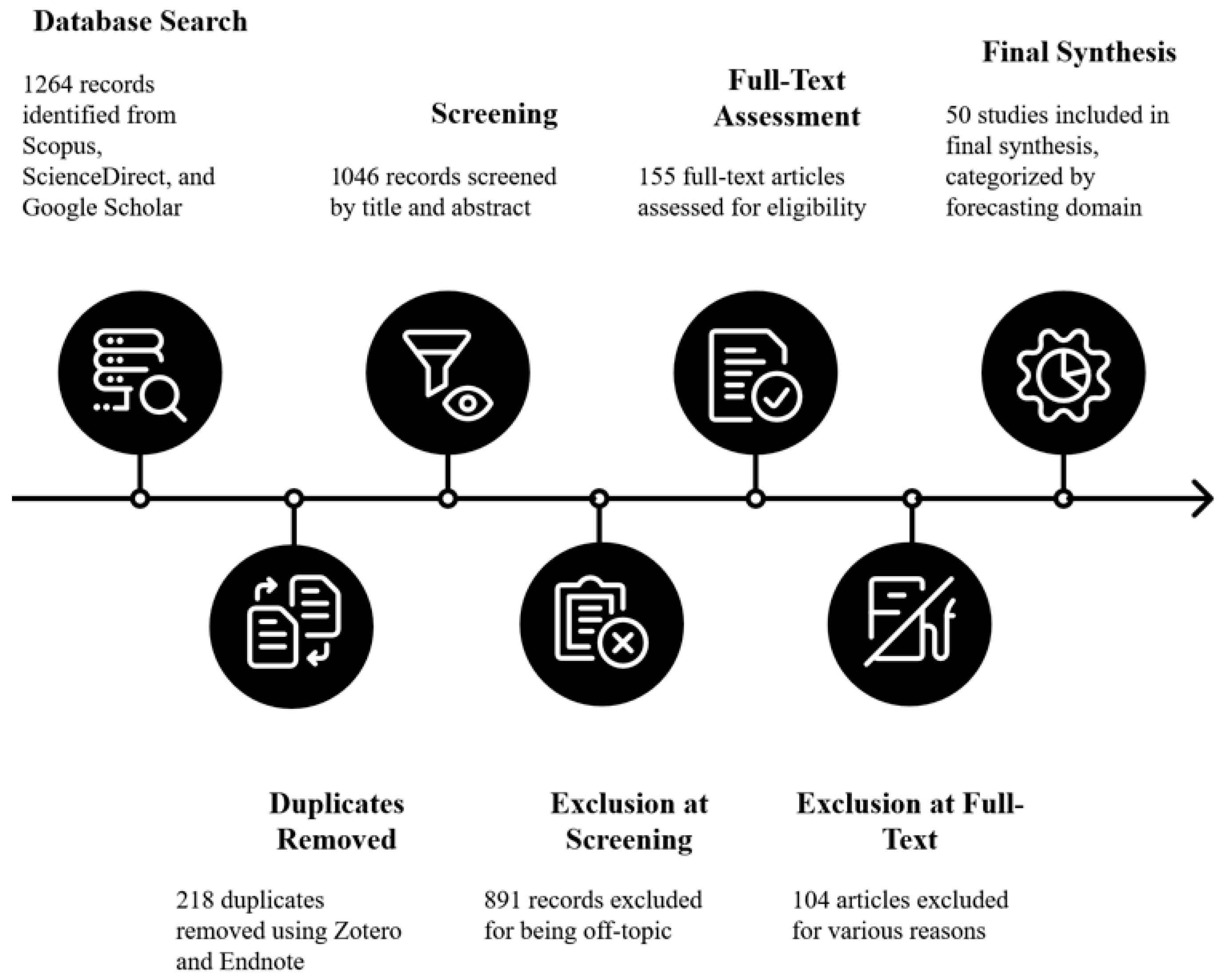

- Phase 1 (Literature Identification): A structured search was conducted in three major databases: Scopus, ScienceDirect, and IEEE Xplore. A Boolean search string combined keywords related to energy forecasting (e.g., “load forecasting”, “price forecasting”, “renewable generation forecasting”) and explainable AI (e.g., “XAI”, “SHAP”, “LIME”, “interpretability”, “post-hoc explanation”). The search was limited to studies published between January 2020 and June 2025 to focus on recent methodological advances. The final search was conducted on 15 May 2025, yielding an initial pool of 1264 records. To supplement the database search, a secondary query was performed in Google Scholar. Since this search engine does not support complex Boolean strings, we used targeted keyword combinations derived from our main search (e.g., “interpretable load forecasting”) to identify additional relevant studies.

- Phase 2 (Screening and Eligibility): After removing duplicates using reference management software, the sole author screened all titles, abstracts, and full texts against predefined inclusion and exclusion criteria.

- Inclusion criteria: peer-reviewed journal or conference papers; published in English with accessible full text; focused on XAI techniques applied to energy time-series forecasting.

- Exclusion criteria: non-forecasting tasks (e.g., fault detection, clustering); no explicit use or evaluation of explainability; editorials, book chapters, or non-peer-reviewed sources.

- Phase 3 (Data Analysis and Reporting): After the multi-stage screening process detailed in Phase 2 reduced the initial 1264 records to a final set of 50 eligible studies (see Figure 2 for a full breakdown), we proceeded with data extraction and analysis. We then applied our four-dimension evaluation rubric (Section 3.4) to assess explanation quality across global transparency, local fidelity and robustness, user relevance, and operational viability. To minimize subjectivity, a detailed coding guide was developed a priori, defining clear criteria for low, medium, and high scores on each dimension (see Appendix A, Table A2). For example, for User Relevance, a ‘High’ score was given only if a study explicitly named a stakeholder (e.g., ‘grid operator’) and linked the explanation to a specific decision task, whereas a ‘Low’ score was given if only technical feature importance was presented. Similarly, for Operational Viability, a ‘High’ score required explicit reporting of computational overhead or a clear justification for the XAI method choice in a deployment context, while a ‘Low’ score was given when computational cost was not mentioned at all. All scoring decisions were documented to support transparency and future replication.
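The scoring step can be sketched in a few lines of Python. This is a minimal illustration, assuming the numeric mapping High = 1, Medium = 0.5, Low = 0 listed in the coding guide (Appendix A, Table A2) and an unweighted mean across the four dimensions; the aggregation rule, the `StudyScores` type, and the study identifiers are hypothetical and are not taken from the review's actual coding sheet.

```python
from dataclasses import dataclass
from statistics import mean

# Numeric value for each rubric level, as listed in the coding guide.
LEVEL_VALUE = {"high": 1.0, "medium": 0.5, "low": 0.0}

DIMENSIONS = (
    "global_transparency",
    "local_fidelity_robustness",
    "user_relevance",
    "operational_viability",
)


@dataclass
class StudyScores:
    """Hypothetical record of one study's coded levels on the four dimensions."""

    study_id: str
    levels: dict  # dimension name -> "high" | "medium" | "low"

    def numeric(self) -> dict:
        # Map each coded level to its numeric score; fail loudly on gaps.
        missing = set(DIMENSIONS) - set(self.levels)
        if missing:
            raise ValueError(f"{self.study_id}: missing dimensions {sorted(missing)}")
        return {d: LEVEL_VALUE[self.levels[d].lower()] for d in DIMENSIONS}

    def total(self) -> float:
        # Assumed aggregation: unweighted mean over the four dimensions (0 to 1).
        return mean(self.numeric().values())


def dimension_averages(studies):
    """Average score per dimension across a set of studies (e.g., one domain)."""
    return {d: mean(s.numeric()[d] for s in studies) for d in DIMENSIONS}


example = StudyScores(
    "study_A",  # hypothetical study identifier
    {
        "global_transparency": "high",
        "local_fidelity_robustness": "medium",
        "user_relevance": "low",
        "operational_viability": "low",
    },
)
print(example.total())  # 0.375
```

Keeping the per-dimension values, rather than only the total, is what allows domain-level comparisons such as average User Relevance in load versus price forecasting.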

3.1. Search Strategy and Time Frame

3.2. PRISMA-Inspired Framework and Study Selection Procedure

- Inclusion criteria: peer-reviewed journal or conference paper; published between January 2020 and June 2025; focused on XAI techniques applied to energy forecasting; written in English with accessible full text.

- Exclusion criteria: studies that addressed only non-forecasting tasks (e.g., fault detection), did not apply or mention any form of explainability, or were book chapters, editorials, or other non-peer-reviewed sources.

3.3. Data Extraction Process

3.4. A Four-Dimensional Explanation Framework

- Global Transparency: This dimension evaluates the extent to which the model’s overall behavior, learned strategies, and underlying logic are made comprehensible to a human analyst.

- Local Fidelity & Robustness: This dimension evaluates not just the claimed accuracy of local explanations (fidelity), but also their stability and consistency when tested under perturbation, distribution shift, or over time.

- User Relevance: This dimension assesses whether the explanation is explicitly tailored to a specific user role (e.g., grid operator, market analyst) and supports a concrete decision-making task.

- Operational Viability: This dimension evaluates the practical feasibility of deploying the XAI system in a real-world setting. It considers crucial aspects like the computational overhead of generating explanations and the explicit justification for selecting a particular XAI method.

3.5. Rationale for Selected Dimensions

4. Results

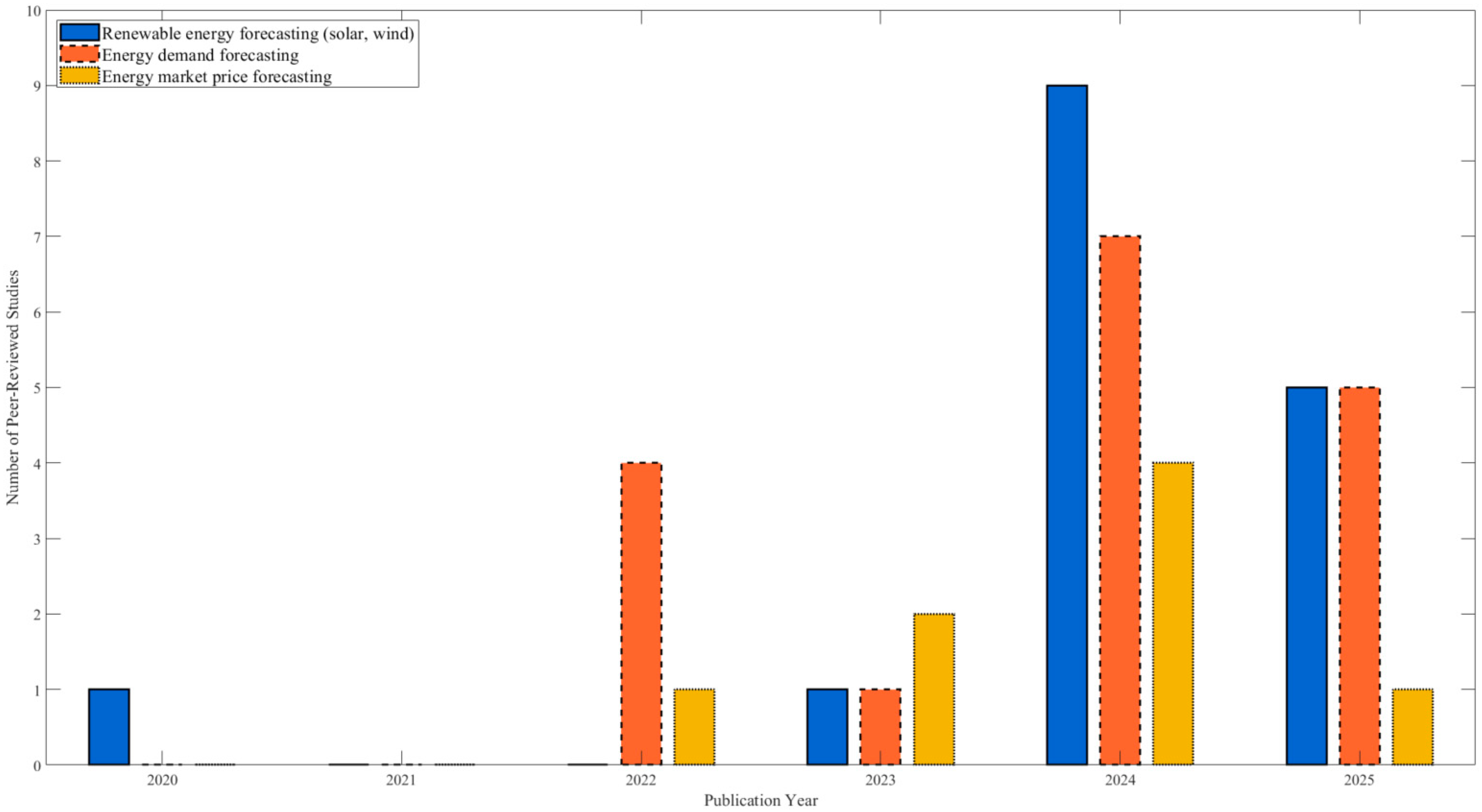

4.1. Publication Landscape Analysis

4.2. Load Forecasting

4.2.1. Forecasting Performance

- Latent representations: Autoencoders are used to compress high-dimensional sensor data into compact feature sets [33].

4.2.2. Explainable AI Methodologies in Load Forecasting

- Global Transparency: Global Transparency in load forecasting reveals the model’s overall strategy, such as how it weighs weather patterns against historical consumption cycles. This is achieved through interpretable-by-design architectures like Temporal Fusion Transformers (TFTs) and Hierarchical Neural Additive Models (HNAMs) that embed transparency directly into their structure [15,29]. For “black-box” models, global insights are derived by aggregating post hoc explanations from methods like SHAP to validate that the model learns expected relationships, such as outdoor temperature driving air conditioning load [25,35]. While these global insights are crucial for strategic planning, their value is limited without the ability to trust and diagnose forecasts for critical peak demand hours.

- Local Fidelity & Robustness: This need to trust individual predictions leads to the second dimension, Local Fidelity and Robustness, which validates the explanations for specific forecasts. To verify fidelity, novel metrics like the Contribution Monotonicity Coefficient (CMC) have been developed to evaluate explainers like DeepLIFT [12]. Robustness is also explicitly tested; for instance, HNAMs were subjected to robustness checks confirming their explanations remained consistent across different forecast horizons in over 99% of instances [15].

- User Relevance: User Relevance bridges the gap between a technically valid explanation and an actionable insight for a specific stakeholder. For grid operators, SHAP explanations are used to identify the drivers of peak consumption hours, supporting the design of time-of-use tariffs [28]. For homeowners, the ForecastExplainer framework visualizes which specific appliances will contribute most to future energy use, empowering them to manage demand [12].

- Operational Viability: This brings us to the final dimension, Operational Viability, which addresses the practical challenges of deploying these XAI systems. Intrinsic (ante-hoc) models like HNAMs are highly efficient, as the cost of explanation is integrated into the model’s inference time, making them suitable for real-time dispatch systems [15]. In contrast, post hoc methods like SHAP add computational overhead that may be too slow for generating explanations at the sub-hourly frequency required for operational control [12]. Evidence from [15] indicates that modern interpretable models can achieve accuracy competitive with their black-box counterparts, suggesting that transparency need not come at a major cost to performance.
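To make the step from local SHAP explanations to global insight concrete, the sketch below computes exact Shapley values for a small, hypothetical load model by enumerating every feature coalition, with absent features set to a fixed baseline (one common simplification among several baseline choices). Averaging absolute values over many instances gives a global importance ranking. Counting model calls also illustrates the Operational Viability concern: exact enumeration needs 2^M model evaluations per instance for M features, which is why libraries such as SHAP rely on approximations or model-specific algorithms. The toy model, feature names, and baseline are assumptions for illustration only.

```python
import itertools
import math


def shapley_values(predict, x, baseline):
    """Exact Shapley values of predict at x, with absent features set to baseline.

    Returns (phi, n_calls): one attribution per feature and the number of distinct
    model evaluations used, which grows as 2**M for M features.
    """
    m = len(x)
    cache = {}

    def value(coalition):
        # v(S): model output when only features in S take their actual values.
        key = frozenset(coalition)
        if key not in cache:
            z = [x[i] if i in key else baseline[i] for i in range(m)]
            cache[key] = predict(z)
        return cache[key]

    phi = []
    for i in range(m):
        others = [j for j in range(m) if j != i]
        total = 0.0
        for size in range(m):
            # Shapley weight |S|! (M - |S| - 1)! / M! for coalitions S without i.
            weight = math.factorial(size) * math.factorial(m - size - 1) / math.factorial(m)
            for s in itertools.combinations(others, size):
                total += weight * (value(s + (i,)) - value(s))
        phi.append(total)
    return phi, len(cache)


def global_importance(predict, X, baseline):
    """Mean absolute Shapley value per feature over a set of instances."""
    sums = [0.0] * len(baseline)
    for x in X:
        phi, _ = shapley_values(predict, x, baseline)
        sums = [a + abs(p) for a, p in zip(sums, phi)]
    return [a / len(X) for a in sums]


# Hypothetical toy load model: (temperature [deg C], hour of day, previous-day load [MW]).
def toy_load_model(z):
    temp, hour, lag_load = z
    cooling = 2.0 * max(temp - 18.0, 0.0)            # air-conditioning load above 18 deg C
    evening_peak = 5.0 if 17 <= hour <= 21 else 0.0  # crude evening-peak indicator
    return 0.8 * lag_load + cooling + evening_peak


baseline = [18.0, 12, 100.0]  # hypothetical reference conditions
x = [30.0, 19, 110.0]         # a hot evening hour
phi, calls = shapley_values(toy_load_model, x, baseline)
print([round(p, 6) for p in phi], calls)  # [24.0, 5.0, 8.0] 8
```

The attributions sum to the difference between the forecast and the baseline forecast (117 − 80 = 37 MW here), and temperature emerging as the dominant driver is the kind of expected relationship that global aggregation is meant to confirm.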

4.3. Price Forecasting

4.3.1. Forecasting Performance

- Unstructured Data: To capture public sentiment and emerging trends, some models successfully incorporate unstructured data, such as keyword search volumes from Baidu [43].

| Manuscript (Author, Year) | Application | Forecasting Horizon | Models | Inputs and Features |
|---|---|---|---|---|
| [43] | Carbon trading price | Multi-step (2, 4, 6 steps) | Proposed: CEEMDAN-WT-SVR. Benchmarks: ELM, SVR, LightGBM, XGBoost. | Structured (historical prices, market indices) and Unstructured (Baidu search index keywords). |
| [3] | Spanish electricity price | Real-time, multi-step (h = 1, 3, 24 h) | Proposed: ENSWNN. Benchmarks: LSTM, CNN, TCN, RF, XGBoost, River library models. | Univariate (historical electricity prices). |
| [42] | US clean energy stock indices | Short- to medium-term (daily data) | Proposed: Facebook’s Prophet, NeuralProphet. Benchmarks: TBATS, ARFIMA, SARIMA. | Macroeconomic variables (DJIA, CBOEVIX, oil price) and 21 technical indicators. |
| [7] | Electricity price (Italian & ERCOT markets) | Short-term (hourly) | Analyzed: CNN, LSTM. Proposed: Trust Algorithm for explaining models. | Zonal prices, ancillary market prices, demand, neighboring market prices, historical prices. |
| [40] | Half-hourly electricity price (Australia) | Half-hourly | Proposed: D3Net (STL-VMD with MLP, RFR, TabNet). Benchmarks: 14 standalone and STL-based models. | Univariate (lagged series of electricity prices identified by PACF). |
| [41] | Crude oil spot prices | Multi-horizon (multiple months ahead) | Compared: MLP, RNN, LSTM, GRU, CNN, TCN. | Futures prices with different maturities (1, 2, 3, 6, 12 months). |
| [44] | Intraday electricity price difference | Intraday (15 min intervals) | Proposed: Normalizing Flows. Benchmarks: Gaussian copula, Gaussian regression. | Previous price differences, day-ahead price increments, renewable/load forecast errors. |
| [9] | Day-ahead electricity price (Spain) | Day-ahead (24 h) | Proposed: Stacked ensemble of 15+ ML models. Benchmarks: AutoML platforms (H2O, TPOT). | Extensive exogenous data (generation, demand, market prices, fuel prices), STL components, time series features. |

4.3.2. Explainable AI Methodologies in Price and Market Forecasting

- Global Transparency: Global Transparency is used by market analysts to ensure models learn rational economic relationships rather than spurious correlations. SHAP summary plots are commonly used to identify the most influential market drivers, such as demand forecasts or the price of natural gas [42,44]. More advanced frameworks combine SHAP with Morris’s sensitivity screening and surrogate decision tree models to provide a multi-faceted view of a complex ensemble’s logic [9].

- Local Fidelity & Robustness: Given that a single erroneous forecast can lead to significant financial loss, Local Fidelity and Robustness is paramount. While LIME and instance-specific SHAP values are used to explain individual predictions [42], a standout innovation is the “Trust Algorithm.” This tool generates a numerical trust score for each forecast by correlating its local SHAP explanation with the model’s global behavior. It thereby measures the robustness of individual predictions and acts as a real-time risk assessment tool, and it is reported to be highly effective at identifying unreliable forecasts during market regime shifts [7].

- User Relevance: User Relevance in this domain is uniquely focused on the needs of financial actors like traders, asset managers, and policymakers. A clear trend, different from the often-technical explanations in load forecasting, is the move toward simplified, actionable outputs. For example, score-based explanations like the trust score are proposed as more direct and user-friendly tools than complex plots, as they can be integrated directly into bidding software to trigger risk-averse strategies [7]. The goal is to foster human–machine collaboration where XAI provides a “data story” that augments a trader’s domain knowledge [9].
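The idea of scoring a forecast by how closely its local explanation agrees with the model's global behavior can be illustrated with a simplified proxy. The sketch below is not the Trust Algorithm of [7]. It is a hypothetical stand-in that uses the Pearson correlation between one forecast's attribution vector and the mean attribution vector of a reference period, with an assumed flagging threshold of 0.5. The feature order and all attribution values are invented for illustration.

```python
import math
from statistics import fmean


def pearson(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    ma, mb = fmean(a), fmean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / math.sqrt(var_a * var_b)


def agreement_scores(local_attributions, reference_attributions, threshold=0.5):
    """Score each forecast by how well its local attributions match the global pattern.

    local_attributions: per-forecast attribution vectors (e.g., SHAP values).
    reference_attributions: attribution vectors from a reference (training) period.
    Returns (score, flagged) pairs; flagged=True means "treat this forecast with caution".
    """
    n_features = len(reference_attributions[0])
    global_pattern = [fmean(row[j] for row in reference_attributions) for j in range(n_features)]
    results = []
    for phi in local_attributions:
        score = pearson(phi, global_pattern)
        results.append((score, score < threshold))
    return results


# Hypothetical attributions for (demand forecast, gas price, wind forecast, hour of day).
reference = [
    [4.0, 3.0, -2.0, 0.5],
    [5.0, 2.5, -1.5, 0.4],
    [4.5, 3.5, -2.5, 0.6],
]
typical = [4.2, 2.8, -1.8, 0.5]  # drivers behave as in the reference period
unusual = [-3.0, 0.2, 4.0, 0.1]  # demand and wind contributions flip sign
for score, flagged in agreement_scores([typical, unusual], reference):
    print(f"score={score:.2f} flagged={flagged}")
```

A single scalar per forecast is what makes this kind of output easy to wire into bidding software, which is the user-relevance argument made above; a production scheme would need a validated threshold rather than the assumed 0.5.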

4.4. Renewable Generation Forecasting

4.4.1. Forecasting Performance

4.4.2. Explainable AI Methodologies in Renewable Generation Forecasting

- Global Transparency: This is used to verify that a model’s overall strategy aligns with domain knowledge. A key trend is the use of inherently interpretable “glass-box” models like the Explainable Boosting Machine (EBM), which learns explicit “shape functions” for each physical input, and Concept Bottleneck Models (CBMs), which base predictions on human-understandable concepts like “high turbulence” [45,46]. A standout innovation is the development of frameworks that validate a black-box model by quantifying its alignment with known physics, resulting in a “physical reasonableness” score [51].

- Local Fidelity & Robustness: This dimension is crucial for trusting individual forecasts, especially under the extreme or unusual weather conditions that affect renewable generation. While post hoc methods like LIME are used for local explanations [55], their trustworthiness is a significant concern. This has led to research that quantitatively measures the fidelity of XAI techniques like SHAP and LIME against a ground truth derived from perturbation analysis [46]. Furthermore, models that are interpretable by design, like the Direct Explainable Neural Network (DXNN), offer perfect local fidelity by providing a clear mathematical input-output mapping for every prediction [52].

- User Relevance: This is tailored to the needs of technical experts who manage physical assets. Unlike the financial traders in price forecasting or the diverse consumers in load forecasting, the users are often engineers, wind/solar farm operators, and grid planners. Explanations provide actionable insights for tasks such as root-cause analysis of wind turbine underperformance [51], predicting extreme wind events for operational safety [45], and providing public health information on UV radiation based on solar forecasts [56].

- Operational Viability: This dimension considers the practical feasibility of deploying XAI systems. Inherently interpretable models like EBMs are highly viable as they are computationally efficient and do not require a separate, costly explanation step [46]. While post hoc methods like SHAP can be computationally intensive [56], their use is justified for deep, offline analysis. The development of automated pipelines for complex validation, such as checking for physical reasonableness, also enhances the operational viability of advanced XAI frameworks [51].
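A perturbation-based fidelity check of the kind described above can be sketched as follows. Each feature is replaced in turn by a baseline value, the resulting change in the forecast serves as a reference importance, and the explainer's ranking is compared with that reference using Spearman's rank correlation (ρ). This is a simplified illustration under assumed choices (single-feature occlusion and a fixed baseline); it is not the protocol of [46], and the toy wind-power model, feature names, and attribution values are hypothetical.

```python
import math


def average_ranks(values):
    """Ranks starting at 1, with tied values receiving the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j share their average rank
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(a, b):
    """Spearman's rho computed as the Pearson correlation of average ranks."""
    ra, rb = average_ranks(a), average_ranks(b)
    n = len(ra)
    ma, mb = sum(ra) / n, sum(rb) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(ra, rb))
    sa = math.sqrt(sum((x - ma) ** 2 for x in ra))
    sb = math.sqrt(sum((y - mb) ** 2 for y in rb))
    return cov / (sa * sb) if sa and sb else 0.0


def occlusion_importance(predict, x, baseline):
    """Absolute change in the forecast when each feature alone is set to its baseline."""
    reference = predict(x)
    scores = []
    for i in range(len(x)):
        z = list(x)
        z[i] = baseline[i]
        scores.append(abs(reference - predict(z)))
    return scores


def fidelity_score(predict, x, baseline, attributions):
    """Rank agreement between |attributions| and occlusion-based importance."""
    return spearman([abs(a) for a in attributions], occlusion_importance(predict, x, baseline))


# Hypothetical toy wind-farm model: (wind speed [m/s], air density [kg/m^3], yaw error [deg]).
def toy_wind_model(z):
    speed, density, yaw_error = z
    power = 0.5 * density * min(speed, 12.0) ** 3 / 100.0  # crude cubic law, capped at 12 m/s
    return power * math.cos(math.radians(yaw_error))


x = [10.0, 1.2, 20.0]          # current conditions
baseline = [6.0, 1.225, 0.0]   # hypothetical reference conditions
consistent = [4.1, 0.1, -0.4]  # ranks speed > yaw error > density, like occlusion
inconsistent = [0.2, 3.0, -0.5]
print(fidelity_score(toy_wind_model, x, baseline, consistent))    # close to 1.0
print(fidelity_score(toy_wind_model, x, baseline, inconsistent))  # close to -1.0
```

Single-feature occlusion ignores interactions, so in practice it is only one possible reference; the rank comparison itself carries over unchanged to other perturbation schemes.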

5. Discussion

5.1. Three Paradigms of Explainable Energy Forecasting

5.2. Answering the Research Questions

5.3. Limitations of This Review

5.4. Future Research Directions

- Standardized Evaluation of Explanation Robustness: Future work must move beyond assuming explanation fidelity. We call for standardized benchmarks and metrics to evaluate robustness under data distribution shifts, input perturbations, and against physical or expert-verified ground truths.

- Focus on Operational Viability: We recommend rigorous reporting of computational costs and latency. This must include analysis of how different architectures scale. For example, while standard Transformers are highly parallelizable, their core self-attention mechanism is known to be computationally intensive and scales poorly with very long input sequences. This can make them prohibitive for very high-resolution (e.g., second-level) data without specialized sparse-attention mechanisms. In contrast, recurrent models like LSTMs/GRUs have different operational trade-offs, often being less computationally demanding per time-step but suffering from slow sequential processing that hinders training. Future work must benchmark these practical trade-offs.

- Human-Centered XAI Design: The field must advance beyond technical explainability toward genuine user utility. We strongly encourage human-subject studies involving domain experts (e.g., grid operators, market analysts) to determine which explanation formats are truly useful, actionable, and trust-building.

- Advance Ante-hoc and Physics-Informed XAI: Future research should explore underdeveloped areas of the XAI taxonomy, including more sophisticated inherently interpretable models and physics-informed XAI methods that validate model logic against scientific principles, especially in renewable generation forecasting.

- Report Statistical Significance: Our review found that methodological rigor was inconsistent, as primary studies rarely reported formal statistical significance testing (e.g., Diebold-Mariano tests). Future primary studies proposing novel models should include such testing to rigorously validate their claimed performance gains over established baselines.

- Adopt Robust Evaluation Metrics: Future work should also adopt more robust evaluation metrics.

- MAPE (Mean Absolute Percentage Error), while common, is not recommended for energy series that contain zero or near-zero values: it is undefined when the true value is zero (e.g., zero solar generation at night) and becomes arbitrarily large as the true value approaches zero.

- MAE (Mean Absolute Error) is robust and directly interpretable in the units of the forecast (e.g., ‘MWh’), making it excellent for understanding the average error magnitude.

- RMSE (Root Mean Squared Error) is also critical as it squares errors, thus heavily penalizing large deviations. This is often desirable in high-stakes energy applications where missing a single large peak has outsized financial or operational consequences.
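The metrics above, together with the Diebold-Mariano test recommended earlier in this list, can be computed with a few standard-library functions. This is a minimal sketch under stated assumptions: MAPE returns `None` when any actual value is zero instead of silently skipping those points, and the DM test uses squared-error loss, autocovariances up to lag h − 1 for h-step-ahead forecasts, and a two-sided standard normal approximation without small-sample corrections. The function names are illustrative; established statistical packages may make different choices.

```python
import math


def mae(actual, forecast):
    """Mean absolute error, in the units of the series (e.g., MWh)."""
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)


def rmse(actual, forecast):
    """Root mean squared error; squaring penalizes large deviations heavily."""
    return math.sqrt(sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual))


def mape(actual, forecast):
    """Mean absolute percentage error in %; None if any actual is zero (undefined)."""
    if any(a == 0 for a in actual):
        return None
    return 100.0 * sum(abs((a - f) / a) for a, f in zip(actual, forecast)) / len(actual)


def diebold_mariano(actual, forecast_1, forecast_2, h=1):
    """DM test of equal squared-error accuracy; returns (statistic, two-sided p-value).

    A negative statistic means forecast_1 has the lower average squared error.
    """
    d = [(a - f1) ** 2 - (a - f2) ** 2 for a, f1, f2 in zip(actual, forecast_1, forecast_2)]
    n = len(d)
    d_bar = sum(d) / n

    def autocov(k):
        # Sample autocovariance of the loss differential at lag k.
        return sum((d[t] - d_bar) * (d[t - k] - d_bar) for t in range(k, n)) / n

    long_run_var = autocov(0) + 2 * sum(autocov(k) for k in range(1, h))
    if long_run_var <= 0:
        raise ValueError("non-positive variance estimate; DM statistic undefined")
    stat = d_bar / math.sqrt(long_run_var / n)
    # Two-sided p-value from the standard normal CDF, written with math.erf.
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(stat) / math.sqrt(2))))
    return stat, p_value


# Hypothetical hourly solar output [MWh] including night-time zeros.
actual = [0.0, 0.0, 12.0, 30.0, 25.0, 8.0, 0.0]
forecast = [0.0, 0.5, 11.0, 28.0, 26.0, 7.0, 0.0]
print(mape(actual, forecast))  # None: MAPE is undefined at the zero-valued hours
print(mae(actual, forecast), rmse(actual, forecast))
```

Returning `None` rather than dropping zero-valued hours keeps the failure visible; silently excluding night-time points would bias MAPE toward daytime performance.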

6. Conclusions

- A user-centric approach in load forecasting, a risk management approach in price forecasting, and a physics-informed approach in renewable generation forecasting.

- Our findings show that while post hoc methods, particularly SHAP, dominate the literature (appearing in 62% of studies), critical gaps persist. Only 23% of papers report on computational overhead, and fewer than 15% provide evidence of explanation robustness, severely hindering the development of truly trustworthy and deployable AI systems.

- Standardized robustness testing to move beyond assumed fidelity and ensure reliability.

- Rigorous reporting of computational costs and latency to assess operational feasibility in time-sensitive environments.

- Human-centered design, including studies with domain experts to evaluate the actionability and trust-building potential of XAI outputs.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| ANN | Artificial Neural Network |
| CART | Classification and Regression Tree |
| CBM | Concept Bottleneck Model |
| CEEMDAN | Complete Ensemble Empirical Mode Decomposition with Adaptive Noise |
| CMC | Contribution Monotonicity Coefficient |
| CNN | Convolutional Neural Network |
| DA | Day-Ahead |
| D3Net | Dual-path Dynamic Dense Network |
| DL | Deep Learning |
| DNN | Deep Neural Networks |
| DT | Decision Tree |
| DXNN | Direct Explainable Neural Network |
| EBM | Explainable Boosting Machine |
| ENSWNN | Ensemble of Weighted Nearest Neighbors |
| ETS | Emissions Trading Scheme |
| EWS | Extreme Wind Speed |
| GBDT | Gradient Boosting Decision Trees |
| GBoost | Gradient Boosting |
| GDPR | General Data Protection Regulation |
| GRU | Gated Recurrent Unit |
| GT | Global Transparency |
| HNAMs | Hierarchical Neural Additive Models |
| ICE | Individual Conditional Expectation |
| ISTR | Interpretable Spatio-Temporal Representation |
| k-NN | k-Nearest Neighbors |
| KRC | Kendall’s Rank Correlation |
| LF | Local Fidelity |
| LIME | Local Interpretable Model-Agnostic Explanations |
| LR | Linear Regression |
| LSTM | Long Short-Term Memory |
| MAE | Mean Absolute Error |
| MAPE | Mean Absolute Percentage Error |
| MASE | Mean Absolute Scaled Error |
| ML | Machine Learning |
| MLP | Multi-Layer Perceptron |
| MSE | Mean Squared Error |
| MV-LSTM | Interpretable Multi-Variable LSTM |
| NAMs | Neural Additive Models |
| NF | Normalizing Flow |
| NWP | Numerical Weather Prediction |
| OHLC | Open-High-Low-Close |
| OV | Operational Viability |
| ρ | Spearman’s rank correlation coefficient |
| PDP | Partial Dependence Plots |
| PFI | Permutation Feature Importance |
| QLattice | Symbolic regression framework by Abzu |
| RF | Random Forest |
| RNN | Recurrent Neural Network |
| RQ | Research Question |
| SHAP | Shapley Additive Explanations |
| SRC | Spearman Rank Correlation |
| STGNNs | Spatio-Temporal Graph Neural Networks |
| STL | Seasonal-Trend decomposition based on Loess |
| SVR | Support Vector Regression |
| TabNet | Tabular Network |
| TCN | Temporal Convolutional Network |
| TFT | Temporal Fusion Transformer |
| THI | Temperature-Humidity Index |
| TS | Time Series |
| UR | User Relevance |
| VMD | Variational Mode Decomposition |
| WT | Wavelet Transform |
| XAI | Explainable Artificial Intelligence |
| X-CGNN | Explainable Causal Graph Neural Network |
| XGBoost | Extreme Gradient Boosting |

Appendix A

| Field | Description | Example |
|---|---|---|
| 1. Author, Year | First author and publication year | Li et al., 2025 [30] |
| 2. Title | Full paper title | “Explainable AI for Short-Term Load Forecasting in Smart Grids” |
| 3. DOI | Digital Object Identifier | 10.1016/j.apenergy.2025.123456 |
| 4. Domain | Forecasting domain | Load |
| 5. Forecasting Horizon | Time horizon (short/medium/long) | Short-term (1–24 h) |
| 6. Input Features | Features used (e.g., temperature, load history) | Temperature, humidity, hour-of-day |
| 7. Primary Model | Main forecasting model | LSTM |
| 8. XAI Method | Explanation technique used | SHAP |
| 9. Explanation Scope | Local or global | Local |
| 10. Model Dependency | Model-agnostic or model-specific | Model-agnostic |
| 11. Temporal Integration | Ante-hoc, in-hoc, post hoc | Post hoc |
| 12. Performance Metrics | Accuracy metrics reported | MAPE, R² |
| 13. Accuracy | Reported accuracy values (MSE, RMSE, R², etc.) | 76.8 |
| 14. Four Dimensions | Scores on the four evaluation dimensions, as explained in the text | --- |

| Dimension | High Score (Score = 1) | Medium Score (Score = 0.5) | Low Score (Score = 0) |
|---|---|---|---|
| 1. Global Transparency | Study provides clear, global explanations of the model’s overall logic (e.g., full SHAP analysis, EBM shape functions). | Study provides basic global feature importance plots without deeper analysis of model strategy. | Study provides no global-level explanation of the model’s behavior. |
| 2. Local Fidelity & Robustness | Study explicitly tests the robustness or fidelity of its local explanations (e.g., perturbation tests, “reasonableness” scores, or uses inherently faithful models). | Study provides local explanations (e.g., LIME, local SHAP) but does not provide any evidence or testing of their robustness or fidelity. | Study provides no local explanations or evidence of robustness. |
| 3. User Relevance | Study explicitly names a stakeholder (e.g., ‘grid operator,’ ‘trader’) AND links the explanation to a specific decision-making task (e.g., ‘designing tariffs,’ ‘managing risk’). | Study mentions a general user (e.g., ‘experts’) but does not connect the explanation to a specific, actionable decision. | Study only presents technical feature importance without any mention of a user or decision task. |
| 4. Operational Viability | Study provides explicit reporting of computational overhead (e.g., time in seconds, memory cost) OR provides a clear justification for the XAI method choice in a deployment context. | Study makes a general claim about feasibility (e.g., ‘model is fast’) but provides no quantitative metrics or specific evidence. | Study provides no mention of computational cost or other deployment considerations. |

References

- Baur, L.; Ditschuneit, K.; Schambach, M.; Kaymakci, C.; Wollmann, T.; Sauer, A. Explainability and Interpretability in Electric Load Forecasting Using Machine Learning Techniques—A Review. Energy AI 2024, 16, 100358.

- Shadi, M.R.; Mirshekali, H.; Shaker, H.R. Explainable artificial intelligence for energy systems maintenance: A review on concepts, current techniques, challenges, and prospects. Renew. Sustain. Energy Rev. 2025, 216, 115668.

- Melgar-García, L.; Troncoso, A. A novel incremental ensemble learning for real-time explainable forecasting of electricity price. Knowl. Based Syst. 2024, 305, 112574.

- Tian, C.; Niu, T.; Li, T. Developing an interpretable wind power forecasting system using a transformer network and transfer learning. Energy Convers. Manag. 2025, 323, 119155.

- Chen, Z.; Xiao, F.; Guo, F.; Yan, J. Interpretable machine learning for building energy management: A state-of-the-art review. Adv. Appl. Energy 2023, 9, 100123.

- Machlev, R.; Heistrene, L.; Perl, M.; Levy, K.Y.; Belikov, J.; Mannor, S.; Levron, Y. Explainable Artificial Intelligence (XAI) techniques for energy and power systems: Review, challenges and opportunities. Energy AI 2022, 9, 100169.

- Heistrene, L.; Machlev, R.; Perl, M.; Belikov, J.; Baimel, D.; Levy, K.; Mannor, S.; Levron, Y. Explainability-based Trust Algorithm for electricity price forecasting models. Energy AI 2023, 14, 100259.

- Ozdemir, G.; Kuzlu, M.; Catak, F.O. Machine learning insights into forecasting solar power generation with explainable AI. Electr. Eng. 2024, 107, 7329–7350.

- Beltrán, S.; Castro, A.; Irizar, I.; Naveran, G.; Yeregui, I. Framework for collaborative intelligence in forecasting day-ahead electricity price. Appl. Energy 2022, 306, 118049.

- Serra, A.; Ortiz, A.; Cortés, P.J.; Canals, V. Explainable district heating load forecasting by means of a reservoir computing deep learning architecture. Energy 2025, 318, 134641.

- Haghighat, M.; MohammadiSavadkoohi, E.; Shafiabady, N. Applications of Explainable Artificial Intelligence (XAI) and interpretable Artificial Intelligence (AI) in smart buildings and energy savings in buildings: A systematic review. J. Build. Eng. 2025, 107, 112542.

- Shajalal, M.; Boden, A.; Stevens, G. ForecastExplainer: Explainable household energy demand forecasting by approximating shapley values using DeepLIFT. Technol. Forecast. Soc. Change 2024, 206, 123588.

- Grzeszczyk, T.A.; Grzeszczyk, M.K. Justifying Short-Term Load Forecasts Obtained with the Use of Neural Models. Energies 2022, 15, 1852.

- Gürses-Tran, G.; Körner, T.A.; Monti, A. Introducing explainability in sequence-to-sequence learning for short-term load forecasting. Electr. Power Syst. Res. 2022, 212, 108366.

- Feddersen, L.; Cleophas, C. Hierarchical neural additive models for interpretable demand forecasts. Int. J. Forecast. 2025; in press.

- Joseph, L.P.; Deo, R.C.; Casillas-Pérez, D.; Prasad, R.; Raj, N.; Salcedo-Sanz, S. Short-term wind speed forecasting using an optimized three-phase convolutional neural network fused with bidirectional long short-term memory network model. Appl. Energy 2024, 359, 122624.

- Liao, W.; Fang, J.; Ye, L.; Bak-Jensen, B.; Yang, Z.; Porte-Agel, F. Can we trust explainable artificial intelligence in wind power forecasting? Appl. Energy 2024, 376, 124273.

- Darvishvand, L.; Kamkari, B.; Huang, M.J.; Hewitt, N.J. A systematic review of explainable artificial intelligence in urban building energy modeling: Methods, applications, and future directions. Sustain. Cities Soc. 2025, 128, 106492.

- Barredo Arrieta, A.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; Garcia, S.; Gil-Lopez, S.; Molina, D.; Benjamins, R.; et al. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 2020, 58, 82–115.

- Rudin, C. Stop Explaining Black Box Machine Learning Models for High Stakes Decisions and Use Interpretable Models Instead. Nat. Mach. Intell. 2019, 1, 206–215.

- Molnar, C. Interpretable Machine Learning: A Guide for Making Black Box Models Explainable. 2019. Available online: https://christophm.github.io/interpretable-ml-book/ (accessed on 14 November 2025).

- Belle, V.; Papantonis, I. Principles and Practice of Explainable Machine Learning. Front. Big Data 2021, 4, 688969.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- Elsevier. ScienceDirect. Available online: https://www.sciencedirect.com (accessed on 5 August 2025).

- Białek, J.; Bujalski, W.; Wojdan, K.; Guzek, M.; Kurek, T. Dataset level explanation of heat demand forecasting ANN with SHAP. Energy 2022, 261, 125075.

- Wenninger, S.; Kaymakci, C.; Wiethe, C. Explainable long-term building energy consumption prediction using QLattice. Appl. Energy 2022, 308, 118300.

- Choi, W.; Lee, S. Interpretable deep learning model for load and temperature forecasting: Depending on encoding length, models may be cheating on wrong answers. Energy Build. 2023, 297, 113410.

- Mubarak, H.; Stegen, S.; Bai, F.; Abdellatif, A.; Sanjari, M.J. Enhancing interpretability in power management: A time-encoded household energy forecasting using hybrid deep learning model. Energy Convers. Manag. 2024, 315, 118795.

- Ferreira, A.B.A.; Leite, J.B.; Salvadeo, D.H.P. Power substation load forecasting using interpretable transformer-based temporal fusion neural networks. Electr. Power Syst. Res. 2025, 238, 111169.

- Li, H.; Tang, Y.; Liu, D. A CrossInformer model based on dual-layer decomposition and interpretability for short-term electricity load forecasting. Alex. Eng. J. 2025, 129, 117–127.

- Fan, C.; Chen, H. Research on eXplainable artificial intelligence in the CNN-LSTM hybrid model for energy forecasting. J. Build. Eng. 2025, 111, 113150.

- Moon, J.; Rho, S.; Baik, S.W. Toward explainable electrical load forecasting of buildings: A comparative study of tree-based ensemble methods with Shapley values. Sustain. Energy Technol. Assess. 2022, 54, 102888.

- Allal, Z.; Noura, H.N.; Salman, O.; Chahine, K. Power consumption prediction in warehouses using variational autoencoders and tree-based regression models. Energy Built Environ. 2024; in press.

- Xu, C.; Li, C.; Zhou, X. Interpretable LSTM Based on Mixture Attention Mechanism for Multi-Step Residential Load Forecasting. Electronics 2022, 11, 2189.

- Lu, Y.; Vijayananth, V.; Perumal, T. Smart home energy prediction framework using temporal Kolmogorov-Arnold transformer. Energy Build. 2025, 335, 115529.

- Eskandari, H.; Saadatmand, H.; Ramzan, M.; Mousapour, M. Innovative framework for accurate and transparent forecasting of energy consumption: A fusion of feature selection and interpretable machine learning. Appl. Energy 2024, 366, 123314.

- Miraki, A.; Parviainen, P.; Arghandeh, R. Electricity demand forecasting at distribution and household levels using explainable causal graph neural network. Energy AI 2024, 16, 100368.

- Jiang, Y.; Li, Y.; Chen, Y. Interpretable short-term load forecasting via multi-scale temporal decomposition. Electr. Power Syst. Res. 2024, 235, 110781.

- Mathew, A.; Chikte, R.; Sadanandan, S.K.; Abdelaziz, S.; Ijaz, S.; Ghaoud, T. Medium-term feeder load forecasting and boosting peak accuracy prediction using the PWP-XGBoost model. Electr. Power Syst. Res. 2024, 237, 111051.

- Ghimire, S.; Deo, R.C.; Hopf, K.; Liu, H.; Casillas-Pérez, D.; Helwig, A.; Prasad, S.S.; Pérez-Aracil, J.; Barua, P.D.; Salcedo-Sanz, S. Half-hourly electricity price prediction model with explainable-decomposition hybrid deep learning approach. Energy AI 2025, 20, 100492.

- Lee, J.; Xia, B. Analyzing the dynamics between crude oil spot prices and futures prices by maturity terms: Deep learning approaches to futures-based forecasting. Results Eng. 2024, 24, 103086. [Google Scholar] [CrossRef]

- Ghosh, I.; Jana, R.K. Clean energy stock price forecasting and response to macroeconomic variables: A novel framework using Facebook’s Prophet, NeuralProphet and explainable AI. Technol. Forecast. Soc. Change 2024, 200, 123148. [Google Scholar] [CrossRef]

- Jiang, M.; Che, J.; Li, S.; Hu, K.; Xu, Y. Incorporating key features from structured and unstructured data for enhanced carbon trading price forecasting with interpretability analysis. Appl. Energy 2025, 382, 125301. [Google Scholar] [CrossRef]

- Cramer, E.; Witthaut, D.; Mitsos, A.; Dahmen, M. Multivariate probabilistic forecasting of intraday electricity prices using normalizing flows. Appl. Energy 2023, 346, 121370. [Google Scholar] [CrossRef]

- Álvarez-Rodríguez, C.; Parrado-Hernández, E.; Pérez-Aracil, J.; Prieto-Godino, L.; Salcedo-Sanz, S. Interpretable extreme wind speed prediction with concept bottleneck models. Renew. Energy 2024, 231, 120935. [Google Scholar] [CrossRef]

- Liao, W.; Fang, J.; Bak-Jensen, B.; Ruan, G.; Yang, Z.; Porté-Agel, F. Explainable modeling for wind power forecasting: A Glass-Box model with high accuracy. Int. J. Electr. Power Energy Syst. 2025, 167, 110643. [Google Scholar] [CrossRef]

- Verdone, A.; Scardapane, S.; Panella, M. Explainable Spatio-Temporal Graph Neural Networks for multi-site photovoltaic energy production. Appl. Energy 2024, 353, 122151. [Google Scholar] [CrossRef]

- Wu, Z.; Zeng, S.; Jiang, R.; Zhang, H.; Yang, Z. Explainable temporal dependence in multi-step wind power forecast via decomposition based chain echo state networks. Energy 2023, 270, 126906. [Google Scholar] [CrossRef]

- Niu, Z.; Han, X.; Zhang, D.; Wu, Y.; Lan, S. Interpretable wind power forecasting combining seasonal-trend representations learning with temporal fusion transformers architecture. Energy 2024, 306, 132482. [Google Scholar] [CrossRef]

- Zhao, Y.; Liao, H.; Zhao, Y.; Pan, S. Data-augmented trend-fluctuation representations by interpretable contrastive learning for wind power forecasting. Appl. Energy 2025, 380, 125052. [Google Scholar] [CrossRef]

- Letzgus, S.; Müller, K.-R. An explainable AI framework for robust and transparent data-driven wind turbine power curve models. Energy AI 2024, 15, 100328. [Google Scholar] [CrossRef]

- Wang, X.; Hao, Y.; Yang, W. Novel wind power ensemble forecasting system based on mixed-frequency modeling and interpretable base model selection strategy. Energy 2024, 297, 131142. [Google Scholar] [CrossRef]

- Nallakaruppan, M.K.; Shankar, N.; Bhuvanagiri, P.B.; Padmanaban, S.; Bhatia Khan, S. Advancing solar energy integration: Unveiling XAI insights for enhanced power system management and sustainable future. Ain Shams Eng. J. 2024, 15, 102740. [Google Scholar] [CrossRef]

- Sarp, S.; Kuzlu, M.; Cali, U.; Elma, O.; Guler, O. An Interpretable Solar Photovoltaic Power Generation Forecasting Approach Using An Explainable Artificial Intelligence Tool. In Proceedings of the 2021 IEEE Power & Energy Society Innovative Smart Grid Technologies Conference (ISGT), Washington, DC, USA, 25–29 July 2021; pp. 1–5. [Google Scholar]

- Talaat, F.M.; Kabeel, A.E.; Shaban, W.M. Towards sustainable energy management: Leveraging explainable Artificial Intelligence for transparent and efficient decision-making. Sustain. Energy Technol. Assess. 2025, 78, 104348. [Google Scholar] [CrossRef]

- Prasad, S.S.; Joseph, L.P.; Ghimire, S.; Deo, R.C.; Downs, N.J.; Acharya, R.; Yaseen, Z.M. Explainable hybrid deep learning framework for enhancing multi-step solar ultraviolet-B radiation predictions. Atmos. Environ. 2025, 343, 120951. [Google Scholar] [CrossRef]

- Wang, H.; Cai, R.; Zhou, B.; Aziz, S.; Qin, B.; Voropai, N.; Gan, L.; Barakhtenko, E. Solar irradiance forecasting based on direct explainable neural network. Energy Convers. Manag. 2020, 226, 113487. [Google Scholar] [CrossRef]

- Ukwuoma, C.C.; Cai, D.; Ukwuoma, C.D.; Chukwuemeka, M.P.; Ayeni, B.O.; Ukwuoma, C.O.; Adeyi, O.V.; Huang, Q. Sequential gated recurrent and self attention explainable deep learning model for predicting hydrogen production: Implications and applicability. Appl. Energy 2025, 378, 124851. [Google Scholar] [CrossRef]

- Zhao, Y.; Zhao, Y.; Liao, H.; Pan, S.; Zheng, Y. Interpreting LASSO regression model by feature space matching analysis for spatio-temporal correlation based wind power forecasting. Appl. Energy 2025, 380, 124954. [Google Scholar] [CrossRef]

- Kuzlu, M.; Cali, U.; Sharma, V.; Guler, O. Gaining Insight Into Solar Photovoltaic Power Generation Forecasting Utilizing Explainable Artificial Intelligence Tools. IEEE Access 2020, 8, 187814–187823. [Google Scholar] [CrossRef]

| Era | Energy Systems Milestone | AI/ML Development | XAI/Interpretability Progress |
|---|---|---|---|
| 1880s | Invention of AC transmission (Tesla) | – | – |
| 1958 | First commercial solar cell (Bell Labs) | Perceptron | – |
| 1988 | Grid-connected renewables begin scaling | Temporal-Difference RL | Rule-based expert systems (inherent transparency) |
| 1997 | European RES integration begins | LSTM | Sensitivity analysis in neural networks |
| 2012 | Rise of smart grids, digital meters | Deep Learning: AlexNet | Early visualization techniques |
| 2015 | Tesla Powerwall, home battery revolution | ML in load/price forecasting (e.g., XGBoost) | Interpretable tree ensembles (e.g., SHAP precursors) |
| 2020s | Hybrid AI-energy systems, net-zero push | Transformers, DRL, ensemble DL | Widespread adoption of SHAP, LIME, surrogate models |

| Ref. (Year) | Domain | Forecasting Focus | Time-Series Specific | Trust-Centric | Domain-Specific Trust | User-Aligned | Synthesis Depth |
|---|---|---|---|---|---|---|---|
| [1] | Power load forecasting | ✓ | ✓ | ✗ | ✗ | ✗ | Moderate |
| [6] | General energy systems | Partial | ✗ | ✗ | ✗ | ✗ | Moderate |
| [5] | Building energy | ✓ | ✓ | ✗ | ✗ | ✓ (partial) | High |
| [11] | Smart buildings | ✗ | ✗ | ✗ | ✗ | ✓ | High |
| [18] | Urban emissions | ✗ | ✗ | ✗ | ✗ | ✗ | Low |
| [2] | Energy maintenance | ✗ | ✗ | ✓ (partial) | ✗ | ✓ | High |
| This paper * | Load, price, generation | ✓ | ✓ | ✓ | ✓ | ✓ | High |

| Model Type | Predictive Power | XAI Strategy & Temporal Scope | Why It Is Useful |
|---|---|---|---|
| Linear Regression | Low (baseline performance) | Ante-hoc; Global. Coefficients reveal fixed temporal feature influence (e.g., lagged variables). | Simple baseline; directly shows lagged influence and seasonal shifts. |
| Decision Tree | Medium | Ante-hoc; Local/Global. Splits reflect temporal thresholds; tree depth captures recursive logic. | Rule-based clarity helps explain threshold behaviors and diurnal patterns in forecasts. |
| K-NN (with windowing) | Medium | Post hoc; Local. LIME or local surrogates explain the nearest historical patterns used for prediction. | Supports analog-based forecasting; useful for short-term demand anomalies. |
| Random Forest | High | Post hoc; Primarily Local. TreeSHAP¹ for lag feature importance per prediction. | Captures non-linear interactions and helps explain recurring temporal cycles. |
| CNN (e.g., 1D for time series) | High | Post hoc; Local. Grad-CAM-style² saliency maps on the input time window highlight key subsequences. | Shows which time segments influence decisions; good for interpretable filters on sensor sequences. |
| RNN/LSTM (with attention) | Very High (sequence-aware) | Post hoc; Local. Attention weights, feature occlusion, or LIME explain the influence of each time step. | Ideal for continuous forecasting; attention reveals influential lagged periods. |
| Transformer | Very High (multi-step, attention) | Post hoc; Local/Global. Self-attention reveals intra-sequence dependencies; SHAP for input features. | Powerful for recursive or hybrid forecasting; aligns well with explainable temporal dynamics. |
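
To make the first row of the table above concrete, the following minimal sketch (an illustration, not a method from any reviewed study) fits ordinary least squares on a lag matrix with NumPy and reads the fitted coefficients as a global, ante-hoc explanation of how strongly each lag drives the forecast. The synthetic AR(2) series, its coefficients (0.6 and 0.3), and the helper names `make_lag_matrix` and `fit_lag_coefficients` are assumptions chosen so that the recovered weights can be checked against known values:

```python
import numpy as np


def make_lag_matrix(series, n_lags):
    """Stack lagged copies of a 1-D series: column k holds y[t-(k+1)]."""
    series = np.asarray(series, dtype=float)
    # series[t-n_lags:t] is oldest-to-newest; reverse it so lag 1 comes first.
    rows = [series[t - n_lags:t][::-1] for t in range(n_lags, len(series))]
    return np.array(rows), series[n_lags:]


def fit_lag_coefficients(series, n_lags):
    """Ordinary least squares with an intercept; returns (intercept, lag weights)."""
    X, y = make_lag_matrix(series, n_lags)
    X1 = np.column_stack([np.ones(len(X)), X])  # prepend an intercept column
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    return beta[0], beta[1:]


# Synthetic AR(2) series (illustrative assumption, not real load data):
# y_t = 0.6 * y_{t-1} + 0.3 * y_{t-2} + noise
rng = np.random.default_rng(0)
y = np.zeros(2000)
for t in range(2, len(y)):
    y[t] = 0.6 * y[t - 1] + 0.3 * y[t - 2] + rng.normal(scale=0.1)

intercept, lag_weights = fit_lag_coefficients(y, n_lags=3)
for k, w in enumerate(lag_weights, start=1):
    print(f"lag {k}: {w:+.3f}")
```

Because three lags are supplied while only two drive the data, the third weight should come out near zero, which is the kind of direct reading of "fixed temporal feature influence" the table attributes to linear regression. In real load data, collinearity among lags and calendar variables can make individual coefficients unstable, so they are best read together with their uncertainty.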

| Journal | Number of Publications | Impact Factor (as of 27 June 2025) |
|---|---|---|
| Applied Energy | 10 | 11 |
| Energy | 5 | 9.4 |
| Electric Power Systems Research | 4 | 4.2 |
| Energy and AI | 4 | 9.6 |
| Energy Conversion and Management | 3 | 10.9 |

| Country | Load | Generation | Price |
|---|---|---|---|
| China | 1 | 10 | 1 |
| Germany | 4 | 1 | 1 |
| India | 0 | 1 | 3 |
| South Korea | 2 | 1 | 1 |
| Spain | 1 | 2 | 2 |

| Study | Application | Forecasting Model(s) | Key Input Features | Forecasting Horizon |
|---|---|---|---|---|
| [27] | Building load and temperature | Attention-GRU | Weather, setpoints, historical load/temp | 24 h ahead |
| [14] | National electric load (Germany) | Sequence-to-Sequence RNN (GRU) | Weather, time (dummy variables), historical load | Day-ahead (24 h) |
| [35] | Smart home energy consumption | TKAT, LSTM, CNN-LSTM, CNN-GRU | Weather, historical appliance usage | Short-term |
| [36] | National energy consumption (UK) | MLR, SVM, GPR, LSBoost | Weather, socioeconomic, energy prices | Next month (monthly) |
| [26] | Annual building energy performance | QLattice, XGB, ANN, SVR, MLR | Building characteristics, insulation, heating | Annual |
| [25] | District heating demand (Warsaw) | Artificial Neural Network (ANN) | Weather, time (cosine), historical demand | Up to 120 h ahead |
| [10] | District heating & cooling load | Reservoir Computing (RC), LSTM, CNN, etc. | Weather, historical energy load, time (sine) | 6 h ahead (15 min intervals) |
| [28] | Household active and reactive power forecasting | LSTM-Attention with SHAP | Historical load/power, lag values, weather, explicit numerical time encoding | 1 h and 1 day ahead |
| [37] | Household and distribution-level electricity demand forecasting | Explainable Causal Graph Neural Network (X-CGNN) | Historical demand, weather variables (temp, humidity, wind) | 30, 60, 90 min; 1, 2, 3 h |
| [30] | Short-term electricity load forecasting | CrossInformer with ICEEMDAN-RLMD decomposition and SHAP | Historical load, temperature, electricity price, humidity | Short-term (24 steps ahead) |
| [34] | Multi-step residential load forecasting (probabilistic) | Interpretable Multi-Variable LSTM (MV-LSTM) with mixture attention | Historical load, time-related variables (hour, day, holiday), weather | Next day (48 half-hour steps) |
| [13] | Justifying short-term load forecasts from neural models | RNN with LSTM layers, explained using LIME | Historical load, weather from multiple cities, holiday/school indicators | 72 h ahead |
| [12] | Explainable household energy demand forecasting | ForecastExplainer (LSTM explained by DeepLIFT approximating SHAP) | Aggregate and appliance-level consumption, seasonality features | Hourly, daily, weekly |
| [38] | Interpretable short-term load forecasting for the central grid | Interpretable model with multi-scale temporal decomposition (Transformers & CNNs) | Historical load, auxiliary features (temperature, calendar, humidity) | Short-term |
| [15] | Interpretable retail demand forecasting | Hierarchical Neural Additive Models (HNAMs) | Sales data, promotions, price, holidays, product/store attributes | Daily, up to 2 weeks |
| [31] | Building air-conditioning energy forecasting | CNN-LSTM | Previous energy use, water temperatures, system flow, weather | 1 h ahead (short-term) |
| [29] | Power substation load forecasting | Temporal Fusion Transformer (TFT) | Historical load, temporal data (hour, day, holiday), temperature | 24 and 48 h ahead (short-term) |
| [33] | Warehouse power consumption prediction | VAE + Tree-based Regressors (ExtraTree, LightGBM) | VAE-generated latent features from all building sensors | 10 min, 20 min, and 1 h ahead (short-term) |
| [39] | Feeder load forecasting | PWP-XGBoost | Temperature, humidity, customer count, KVA, temporal data | 1 year ahead (medium-term) |
| [32] | Educational building load forecasting | Tree-based Ensembles (LightGBM, RF, XGBoost, etc.) | Historical load, weather (THI, WCT), temporal data | Day-ahead hourly (short-term) |
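
Several load studies above encode time cyclically (cosine in [25], sine in [10], explicit numerical time encoding in [28]). A common way to do this, sketched here under the assumption of a 24-hour period and with a hypothetical helper name `encode_cyclical`, maps each hour onto the unit circle as a sine/cosine pair, so that 23:00 and 00:00 become neighbors in feature space instead of being 23 units apart:

```python
import math


def encode_cyclical(value, period):
    """Map a periodic quantity (e.g., hour of day) to a (sin, cos) point on the unit circle."""
    angle = 2.0 * math.pi * (value % period) / period
    return math.sin(angle), math.cos(angle)


def circle_distance(a, b):
    """Euclidean distance between two encoded points."""
    return math.dist(a, b)


# Assumption: hour of day with a 24-hour period.
midnight = encode_cyclical(0, 24)
late_evening = encode_cyclical(23, 24)
noon = encode_cyclical(12, 24)

print(f"23:00 vs 00:00: {circle_distance(late_evening, midnight):.3f}")  # 0.261
print(f"12:00 vs 00:00: {circle_distance(noon, midnight):.3f}")  # 2.000
```

The same helper applies to day of week (`period=7`) or month (`period=12`). Because each cyclical variable becomes two columns, attribution methods such as SHAP report separate sine and cosine contributions, which can be summed when a single "time of day" effect is to be presented.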

| Study | Applied Explainability Method | Global or Local | User Relevance | Method of Visualizing Explainability |
|---|---|---|---|---|
| [27] | Attention Mechanism | Both | Model developers and researchers, for understanding model behavior | 2D color map of attention weights over time |
| [14] | Saliency Maps (Perturbation) | Local | Domain experts (e.g., energy traders), for causal understanding | 2D color map (saliency map) of feature importance over time |
| [35] | SHAP | Both | Model developers and energy managers, for feature impact analysis | SHAP summary plot (beeswarm plot) and feature importance bars |
| [36] | SHAP & Feature Selection | Both | Policymakers and energy analysts, for identifying key drivers | SHAP summary plots and bar charts |
| [26] | QLattice (inherent), Permutation Importance | Global | Experts and non-experts (homeowners), for transparent calculation | Simple mathematical formula, variable importance bar plots |
| [25] | SHAP (DeepSHAP) | Both (focus on Global) | Experts and model developers, for model validation | Feature importance bars, dependency plots, scatter plots of SHAP values |
| [10] | LIME | Local | Power plant/network operators, for operational decision-making | Heatmap of feature importance over the forecast horizon |
| [28] | SHAP (post hoc) | Both | Power managers: designing time-of-use tariffs and optimizing energy storage sizing. | Feature importance bar charts, SHAP summary (beeswarm) plots, dependency plots. |
| [37] | Intrinsic Causal Graph; Post hoc Feature Ablation | Both | Model developers: optimizing input sequence length to create lighter, more efficient models for real-time use. | Causal graphs (network diagrams), heatmaps of feature/time-lag importance. |
| [30] | SHAP (post hoc) | Global | System analysts: quantifying feature contributions to validate modeling assumptions and understand drivers of load. | SHAP beeswarm plot. |
| [34] | Mixture Attention Mechanism (intrinsic) | Both | Utility analysts: understanding relationships between load, weather, and time to analyze demand changes. | Bar chart for global variable importance, heatmaps for temporal importance. |
| [13] | LIME (post hoc) | Local | Forecasters/practitioners: justifying individual forecasts to build trust and improve neural models. | LIME explanation plot (horizontal bar chart). |
| [12] | ForecastExplainer (DeepLIFT approximating SHAP) | Primarily Local (can be aggregated) | Smart home users: understanding appliance-level consumption to become more aware and optimize energy use. | Area plots, box plots, and histograms showing feature contributions over time. |
| [38] | Linear combination of specialized NNs (intrinsic) | Global | Model developers/analysts: understanding the significance of different temporal patterns (trend, seasonality) and features for model tuning. | Heatmaps of learned significance scores for features and decomposed components. |
| [15] | Hierarchical Neural Additive Models (HNAMs) (intrinsic) | Both | Retail managers/forecasters: aligning the model with their mental models to reduce algorithm aversion and improve judgmental adjustments. | Violin plots, time series plots of aggregated effects, and instance-level forecast decomposition plots. |
| [31] | SHAP, LIME, Grad-CAM, Grad-Absolute-CAM | Both | Building professionals seeking model trust and practical utility. | Feature importance bar charts, feature activation heatmaps. |
| [29] | Attention Mechanism, Variable Importance Networks (inherent in TFT) | Both | Grid operators, for decisions on fault analysis and resource planning. | Attention weight plots over time, feature importance bar charts for encoder/decoder. |
| [32] | SHAP (TreeSHAP) | Both | EMS managers, for persuading customers to act on peak alerts. | SHAP summary plot, Partial Dependence Plot (PDP), heatmap plot. |
| [27] | SHAP (TreeSHAP) | Both (focus on improving global insight from local explanations) | Not explicitly stated; geared toward data scientists improving feature analysis. | SHAP Partial Dependence Plot (PDP). |
| [33] | SHAP, Tree-based Feature Importance | Global | Warehouse managers and operators who may lack technical knowledge. | SHAP summary and bar plots. |
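
Permutation importance, listed alongside QLattice for [26], is model-agnostic: it shuffles one input column at a time and records how much the forecast error grows. The stdlib sketch below illustrates the general technique only; the toy predictor `toy_load_model`, its feature names, the synthetic data, and the choice of mean absolute error as the metric are all hypothetical stand-ins for a fitted load model:

```python
import random


def mean_absolute_error(y_true, y_pred):
    return sum(abs(a - b) for a, b in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Average increase in MAE when each feature column is shuffled (higher = more important)."""
    rng = random.Random(seed)
    baseline = mean_absolute_error(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        deltas = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            score = mean_absolute_error(y, [predict(row) for row in X_perm])
            deltas.append(score - baseline)
        importances.append(sum(deltas) / n_repeats)
    return importances


# Hypothetical "load model": strong temperature effect, weak hour effect, ignores noise.
def toy_load_model(row):
    temperature, hour, noise = row
    return 2.0 * temperature + 0.2 * hour


data_rng = random.Random(42)
X = [[data_rng.uniform(-5, 30), data_rng.randint(0, 23), data_rng.random()] for _ in range(500)]
y = [toy_load_model(row) for row in X]

for name, score in zip(["temperature", "hour", "noise"], permutation_importance(toy_load_model, X, y)):
    print(f"{name:>12}: {score:.3f}")
```

Because the toy target is generated by the model itself, the baseline error is zero and the unused `noise` column scores exactly zero. With real lagged load features, which are strongly correlated, shuffling one column produces unrealistic input combinations, so permutation scores should be interpreted with that caveat in mind.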

| Study | Applied Explainability Method | Global or Local | User Relevance (Target Audience & Goal) | Method of Visualization |
|---|---|---|---|---|
| [7] | Trust Algorithm (combining SHAP, PFI, and LR scores) | Both | High: EPF users and bidding agents, for deciding when to trust a prediction. | Score-based (numerical trust score); waterfall/force plots. |
| [44] | SHAP values | Global | Moderate: Model developers, for input feature selection. | Bar charts of average absolute SHAP values. |
| [3] | Inherent model explainability (k-NN based) | Local | High: End-users, for understanding the historical basis of a forecast. | Line plots showing the query sequence and its nearest neighbors. |
| [41] | Extensive hyperparameter tuning analysis | Global | Moderate: AI researchers, for understanding model architecture behavior. | Primarily through analysis of performance tables. |
| [9] | SHAP, Morris screening, ICE/PD plots, surrogate decision tree | Both | High: Market participants, for human-in-the-loop decision-making. | SHAP plots, ICE/PD curves, decision tree diagrams. |
| [43] | Random permutation with Monte Carlo simulation, prediction intervals | Both | High: Analysts, for understanding feature impact and prediction uncertainty. | Line plots showing prediction intervals under uncertainty. |
| [42] | SHAP, LIME, Partial Dependence Plots (PDP) | Both | High: Policymakers and traders, for strategic decision-making. | SHAP summary plots, LIME explanation plots, 3D PDPs. |
| [40] | SHAP, LIME | Both | High: Energy experts and grid operators, for decision-making. | SHAP summary/bar plots, LIME bar plots. |
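
The inherently explainable k-NN approach listed for [3] justifies a forecast by pointing to the historical windows it most resembles. The sketch below illustrates only the general analog idea; the window length, `k`, Euclidean distance, averaging of the following values, and the hypothetical helper `knn_analog_forecast` are assumptions, not the cited study's configuration. It returns the forecast together with the neighboring windows, which is what a "query sequence and its nearest neighbors" line plot would display:

```python
import math


def knn_analog_forecast(history, window, k):
    """Forecast the next value from the k past windows most similar to the latest one.

    Returns (forecast, neighbors); each neighbor is (start_index, distance, next_value),
    and the neighbor list serves as the explanation of the forecast.
    """
    query = history[-window:]
    candidates = []
    # Only windows whose following value is already known can act as analogs.
    for start in range(len(history) - window):
        past = history[start:start + window]
        candidates.append((start, math.dist(past, query), history[start + window]))
    candidates.sort(key=lambda c: c[1])  # stable sort: ties keep chronological order
    neighbors = candidates[:k]
    forecast = sum(c[2] for c in neighbors) / len(neighbors)
    return forecast, neighbors


# Toy repeating daily price profile (hypothetical data).
prices = [10, 20, 50, 20] * 5 + [10, 20, 50]
forecast, neighbors = knn_analog_forecast(prices, window=3, k=3)
print(forecast)  # 20.0
for start, dist, nxt in neighbors:
    print(f"window starting at t={start}: distance={dist:.2f}, next value={nxt}")
```

Each entry in `neighbors` names a past episode, its distance to the current situation, and the value that followed it, so the forecast can be read as "prices behaved like this the last three times the pattern occurred".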

| Study | Model(s) | Input Features | Forecasting Horizon |
|---|---|---|---|
| [54] | XGBoost | Irradiance, Temp, Humidity, Wind Speed/Direction, Pressure, Time Index | 15 min intervals |
| [8] | LightGBM, RF, GB, XGBoost, DT | SSRD, Temp, Humidity, Cloud Cover, Wind, Pressure, Precipitation, etc. | Hourly |
| [47] | GCN1D (STGNN) | Multi-site Power, Wind Speed, Temp, Month, Hour, Plant Location | Multi-step (24–72 h) |
| [55] | XGBoost, Linear Regression, RF, etc. | DC/AC Power, Daily/Total Yield, Irradiance, Ambient/Module Temp | Not specified |
| [53] | Random Forest Regressor (best of 4) | DC Power, Irradiance, Ambient/Module Temp, Daily/Total Yield | Not specified |
| [56] | TabNet (DL) | Satellite data (Ozone, Aerosols), Sky Images (Clouds), SZA, Lagged UV-B | Multi-step hourly (1–4 h) |
| [57] | Direct Explainable Neural Network (DXNN) | Solar Irradiance, Temp, Humidity, Wind, Sun Altitude/Azimuth, etc. | 1 min ahead |
| [16] | 3P-CBILSTM: A three-phase hybrid model combining CNN and BiLSTM, optimized with TMGWO and BOHB. | Historical hourly wind speed and multiple ground-level and satellite-based meteorological variables. | 1 h ahead |
| [58] | Sequential GRU & Self-Attention: A deep learning framework with a final XGBoost regressor layer. | Experimental data: Temperature, RSS Particle Size, HDPE Particle Size, and % of Plastics in Mixture. Data were augmented. | N/A (regression task) |
| [52] | Ensemble System: An ensemble of mixed-frequency (AR-MIDAS) and machine learning models with an interpretable base model selection strategy (elastic net + SHAP). | Mixed-frequency (15 min and 1 h) wind speed and wind power data, preprocessed with ICEEMDAN. | 1 h ahead |
| [45] | Concept Bottleneck Model (CBM): An interpretable model with automatically generated concepts from decision trees and a logistic regressor output layer. | Historical hourly wind speed, transformed into 14 interpretable OHLC-inspired features. | 1 h ahead |
| [50] | Hybrid (Contrastive Learning + Ridge Regression) | Historical wind power from multiple farms (spatio-temporal); learned trend & fluctuation representations. | Ultra-short-term |
| [51] | Evaluation Framework (for ANN, RF, SVR, etc.) | Wind speed, air density, turbulence intensity. | Point-in-time (power curve) |
| [46] | Interpretable (Glass-Box GAM with trees) | NWP data (wind speed/direction at 10 m & 100 m) and/or historical wind power. | Short-term (hours to days) |
| [48] | Hybrid (Decomposition + ESN) | Historical wind power decomposed by VMD into sub-series. | Multi-step |
| [49] | Hybrid (Representation Learning + Transformer) | Historical wind power, NWP data, static variables; learned seasonal & trend representations. | Multi-horizon (short-term) |
| [4] | Interpretable Deep Learning (Transformer) | Multivariate historical data (wind power, speed, direction). | Single-step |
| [59] | Interpretable (LASSO) & Evaluation Framework | Historical wind speed and power from multiple farms (spatio-temporal). | Ultra-short-term |
| [17] | XAI Trustworthiness Evaluation Framework | NWP data. | Short-term |

| Study | Applied Explainability Method | Global or Local Scope | User Relevance & Application | Method of Visualizing Explainability |
|---|---|---|---|---|
| [46] | Explainable Boosting Machine (WindEBM) | Both | High: Direct decision support, feature engineering, and root-cause analysis. | Bar charts (feature importance), 1D and 2D plots (shape functions). |
| [49] | ISTR-TFT | Both | Understanding feature importance, disentangling seasonal-trend patterns, visualizing time dependencies. | Bar charts (variable importance), t-SNE plots (representations), line plots (attention weights). |
| [51] | Physics-Informed XAI using Shapley Values | Both | Model validation, robustness assessment, understanding out-of-distribution performance, root-cause analysis. | Conditional attribution plots (global strategy), bar plots (local deviations). |
| [50] | ICOTF with Optimal Transport | Both | Understanding interactions and the contribution of specific historical time steps. | Heat maps of the transportation matrix. |
| [59] | LASSO Coefficients and Derived Indices | Global | Extracting domain knowledge about spatio-temporal correlations and feature importance. | Heat maps of correlation coefficients and causal discrimination matrices. |
| [17] | Post hoc XAI Evaluation (SHAP, LIME, PFI, PDP) | Both | Assessing the trustworthiness and reliability of different XAI techniques themselves. | Bar charts (feature importance), violin and box plots (distribution of trust metrics). |
| [48] | DCESN Temporal Dependence Coefficient | Global | Understanding the internal temporal dynamics and the influence of different model parts on future steps. | Heat maps of dependence coefficients. |
| [16] | LIME (Local Interpretable Model-Agnostic Explanations) and SHAP (SHapley Additive exPlanations) | Both: Global (SHAP for the overall model); Local (LIME/SHAP for individual predictions) | High: Explanations designed to help wind farm operators make well-founded decisions on grid integration. | Local: LIME bar plots showing feature contributions. Global: SHAP visualizations, including feature importance plots, beeswarm summary plots, and dependence plots. |
| [58] | SHAP, LIME, Partial Dependence Plots (PDPs), and a modified Williams plot | Both: Global (SHAP and PDPs provide overall insights); Local (LIME and SHAP explain individual predictions) | High: Targeted at energy engineers, to help them understand factors influencing hydrogen production for process optimization. | Local: LIME and SHAP explainer plots. Global: Partial Dependence Plots and a modified Williams plot for overall analysis. |
| [52] | Novel strategy combining the Elastic Net algorithm with SHAP | Global | High: Used to select the base models forming the ensemble in an interpretable way, explaining the model’s overall structure and logic; increases trust among grid operators for energy planning. | SHAP feature importance and summary plots visualizing the contribution of each base model (not of individual data features). |
| [45] | Concept Bottleneck Model (CBM), an intrinsically interpretable model | Both: Global (transparent architecture); Local (each prediction explained by activated concepts) | Very High: Explicitly designed for a “human-in-the-loop” paradigm, with concepts representing clear rules useful to wind farm managers predicting extreme wind events. | Histograms of activated concepts. Bar charts showing the accuracy of each “decision” (combination of concepts). Diagrams illustrating the logical rules of individual concepts. |
| [54] | ELI5 | Both | Understanding feature importance for decision-makers. | Tables of feature weights and contributions. |
| [8] | SHAP, LIME, PFI | Both (SHAP/PFI-Global, LIME/SHAP-Local) | Support grid operators and energy managers in decision-making. | Feature importance bar plots, SHAP beeswarm plots. |
| [47] | GNNExplainer | Local (aggregated for global insights) | Inform energy network management and PV system design. | Heatmaps of feature/edge masks, plots of aggregated importance. |
| [55] | LIME | Local | Provide stakeholders with transparent tools for resource management. | Not specified in text (describes process). |
| [53] | LIME, PDP | Both (LIME-Local, PDP-Global) | Optimize solar generation systems by identifying key feature impacts. | PDP plots, bar charts of positive/negative feature contributions. |
| [56] | LIME, SHAP | Both (SHAP-Global, LIME-Local) | Aid UV experts in providing public health recommendations. | LIME bar plots, SHAP beeswarm plots and dependence plots. |
| [57] | Inherently Explainable Model (DXNN) | Global (via mathematical formula) | Understand the direct input-output relationship of the model. | Explicit formula (“quadratic explainable function” or “interpretable quadratic neuron”). |
| [60] | LIME, SHAP, ELI5 | Both (LIME/SHAP-ELI5-Global) | Enable adoption of AI in smart grids by increasing transparency. | Bar charts for feature importance and contributions, SHAP summary plots. |
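
SHAP appears in most rows of the tables above. For a handful of features, Shapley values can be computed exactly by enumerating feature coalitions, which makes the additivity property behind SHAP summary, waterfall, and force plots easy to check. This sketch assumes a single baseline (reference) input to stand in for "absent" features, which is only one of several possible value functions; the toy photovoltaic model `toy_pv_model` and the chosen inputs are hypothetical, and libraries such as SHAP rely on faster approximations or model-specific algorithms (e.g., TreeSHAP) rather than this exponential enumeration:

```python
from itertools import combinations
from math import factorial


def exact_shapley(predict, x, baseline):
    """Exact Shapley values with a single-baseline value function.

    v(S) = predict(z), where z takes x's values for features in S and baseline values elsewhere.
    The cost grows as 2^n, so this is only practical for a few features.
    """
    n = len(x)

    def value(subset):
        z = [x[i] if i in subset else baseline[i] for i in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S not containing i
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical PV model with an interaction: output scales with irradiance, derated by temperature.
def toy_pv_model(features):
    irradiance, temperature = features
    return 0.2 * irradiance * (1.0 - 0.004 * (temperature - 25.0))


x = [800.0, 45.0]         # instance to explain (irradiance W/m^2, module temperature degC)
baseline = [400.0, 25.0]  # assumed reference condition
phi = exact_shapley(toy_pv_model, x, baseline)
print(phi, sum(phi), toy_pv_model(x) - toy_pv_model(baseline))
```

For these inputs, irradiance receives 76.8 and temperature -9.6, and the two sum to the 67.2 gap between the prediction (147.2) and the baseline prediction (80.0). This additivity (efficiency) property is what allows SHAP plots to decompose a forecast into per-feature contributions.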

| Characteristic | Load Forecasting (User-Centric Paradigm) | Price Forecasting (Risk-Centric Paradigm) | Generation Forecasting (Physics-Centric Paradigm) |
|---|---|---|---|
| Core Challenge | Capturing cyclical and behavioral patterns across diverse scales (e.g., residential, grid). | Managing extreme volatility, sharp price spikes, and high-stakes financial risk. | Taming intermittency and validating models against complex physical/meteorological processes. |
| Dominant Models | Sequential (LSTM, GRU), Transformers (TFT), and Hierarchical models (HNAMs). | Robust Ensembles (Stacked, Online), Hybrids (e.g., D3Net), and Decomposition-based models. | Interpretable-by-design (EBM, CBM), Spatio-temporal GNNs, and Physics-Informed models. |
| Key Input Data | Meteorological data, Calendar/Time features (holiday, day-of-week), and Building/System data. | Market data (interconnects, demand forecasts), Economic indicators (futures, VIX), and Unstructured data. | NWP data, Satellite/Sky-imagery, and specific Physical variables (e.g., turbine air density, cloud properties). |
| Primary XAI Goal | Provide actionable insights tailored to diverse users (e.g., grid operators, homeowners). | Perform risk management, validate economic logic, and provide actionable trading signals. | Ensure physical plausibility, perform root-cause analysis, and align model logic with science. |
| “Trust” Defined As | User Alignment: An explanation is relevant and useful for a specific human-in-the-loop task. | Financial Reliability: An explanation assesses real-time risk and prevents monetary loss (e.g., “Trust Algorithm”). | Physical Plausibility: An explanation confirms the model adheres to known physical principles (e.g., “reasonableness” score). |
| Key Representative Studies | [12,15,28,29] | [7,9,40,42] | [45,46,47,51] |
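
The physics-centric notion of trust in the last column can be operationalized in many ways. One simple, hypothetical check (not the "reasonableness" score of any reviewed study) computes a partial dependence curve for wind speed and tests whether predicted power is non-decreasing between an assumed cut-in speed of 3 m/s and an assumed rated speed of 12 m/s, as a physical power curve should be. The model `toy_wind_model`, the sample inputs, and the speed grid are illustrative assumptions:

```python
def partial_dependence(predict, X, feature, grid):
    """Average prediction when `feature` is forced to each grid value (classic PD curve)."""
    curve = []
    for value in grid:
        preds = []
        for row in X:
            z = list(row)
            z[feature] = value
            preds.append(predict(z))
        curve.append(sum(preds) / len(preds))
    return curve


def is_non_decreasing(values, tol=1e-9):
    return all(b >= a - tol for a, b in zip(values, values[1:]))


# Hypothetical learned model: cubic rise above 3 m/s (assumed cut-in), capped at 2000 kW from
# 12 m/s (assumed rated speed), scaled by relative air density (second feature).
def toy_wind_model(features):
    speed, rel_density = features
    if speed < 3.0:
        return 0.0
    return min(2000.0, 2000.0 * ((speed - 3.0) / 9.0) ** 3) * rel_density


X = [[s, d] for s in (4.0, 8.0, 11.0) for d in (0.95, 1.0, 1.05)]
grid = [3.0 + 0.5 * k for k in range(19)]  # 3.0 to 12.0 m/s in 0.5 m/s steps
pd_curve = partial_dependence(toy_wind_model, X, feature=0, grid=grid)
print("plausible (monotone up to rated speed):", is_non_decreasing(pd_curve))
```

A model whose curve dipped at mid-range wind speeds would fail this check, flagging an explanation (and a model) that conflicts with physical expectations. Because a PD curve averages over samples, inspecting individual ICE curves can reveal violations that the average hides.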

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Arabzadeh, V.; Frank, R. A Four-Dimensional Analysis of Explainable AI in Energy Forecasting: A Domain-Specific Systematic Review. Mach. Learn. Knowl. Extr. 2025, 7, 153. https://doi.org/10.3390/make7040153

Arabzadeh V, Frank R. A Four-Dimensional Analysis of Explainable AI in Energy Forecasting: A Domain-Specific Systematic Review. Machine Learning and Knowledge Extraction. 2025; 7(4):153. https://doi.org/10.3390/make7040153

Chicago/Turabian Style: Arabzadeh, Vahid, and Raphael Frank. 2025. "A Four-Dimensional Analysis of Explainable AI in Energy Forecasting: A Domain-Specific Systematic Review" Machine Learning and Knowledge Extraction 7, no. 4: 153. https://doi.org/10.3390/make7040153

APA Style: Arabzadeh, V., & Frank, R. (2025). A Four-Dimensional Analysis of Explainable AI in Energy Forecasting: A Domain-Specific Systematic Review. Machine Learning and Knowledge Extraction, 7(4), 153. https://doi.org/10.3390/make7040153