These findings emphasize the growing importance of IoT security and the scientific community’s continuous effort to address emerging threats in complex technological ecosystems. The following sections provide a detailed analysis of the identified trends, methodologies, and technologies shaping modern IDSs for IoT.

3.1. IDS Types

To answer RQ1, it is crucial to understand the different IDS approaches present in the literature. IDSs can be broadly categorized into five main types. Network-based IDSs (NIDSs) monitor network traffic in real time, analyzing data to identify malicious behavior across the network. These systems provide a comprehensive view of network activity, making them particularly effective in highly dynamic and distributed IoT environments. Recent developments in Edge AI IDSs extend this concept by performing traffic analysis closer to the data source, reducing latency and enhancing privacy protection [33].

Host-based IDSs (HIDSs), on the other hand, are installed directly on devices, where they monitor device activity and detect unauthorized changes. While valuable for safeguarding critical devices, HIDSs are less commonly used because their scope is limited to the individual device, making them less effective for broader threat detection [34].

Anomaly-based IDSs (AIDSs) operate by creating profiles of normal behavior and generating alerts when significant deviations occur. This approach is especially beneficial for identifying new and unknown threats, which is crucial in IoT settings characterized by continuously evolving attack vectors. However, their implementation can be complex and is often accompanied by challenges such as higher FP rates.
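To make the profile-and-deviation idea concrete, the following minimal Python sketch learns a per-feature mean and standard deviation from benign traffic only and flags any sample whose z-score exceeds a threshold. The features (packets per second, mean packet size), the function names `build_profile` and `is_anomalous`, and the default threshold of 3 are illustrative assumptions rather than details of any reviewed system; practical AIDSs use considerably richer behavioral models.

```python
from statistics import mean, stdev


def build_profile(normal_samples):
    """Learn a per-feature baseline (mean, sample std) from benign traffic only."""
    columns = list(zip(*normal_samples))
    return [(mean(col), stdev(col)) for col in columns]


def is_anomalous(sample, profile, threshold=3.0):
    """Flag the sample if any feature lies more than `threshold` std devs from its mean."""
    for value, (mu, sigma) in zip(sample, profile):
        if sigma == 0:
            # Constant feature in the baseline: any change counts as a deviation.
            if value != mu:
                return True
            continue
        if abs(value - mu) / sigma > threshold:
            return True
    return False


# Hypothetical benign observations: (packets per second, mean packet size in bytes)
normal = [(100, 512), (110, 500), (95, 530), (105, 520), (98, 505)]
profile = build_profile(normal)

benign_flag = is_anomalous((102, 515), profile)  # -> False, close to the baseline
flood_flag = is_anomalous((900, 60), profile)    # -> True, flood of small packets
```

Lowering `threshold` catches subtler deviations but also raises the FP rate, which is the trade-off noted above.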

Behavior-based IDSs (BIDSs) analyze patterns of behavior to detect anomalies, but their configuration and maintenance can be challenging due to the inherent noise in behavioral data. Recent proposals, such as the one by J. Tang et al. [35], aim to mitigate this issue by incorporating social context into behavior modeling. Their system uses behavior comparison and correlation across IoT nodes to improve detection accuracy while minimizing the influence of irrelevant behavioral fluctuations.
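One simple way to exploit peer context is to compare each node's behavior with that of its neighbors rather than with a fixed baseline. The sketch below flags nodes whose metric deviates from the peer median by more than k median absolute deviations (MAD). The metric (packet-forwarding ratio), the node IDs, and `k = 3` are hypothetical, and this robust-statistics rule is a generic stand-in, not the model of Tang et al. [35].

```python
from statistics import median


def flag_outlier_nodes(behavior, k=3.0):
    """Return nodes whose metric deviates from the peer median by more than k * MAD.

    `behavior` maps a node ID to one behavioral metric (hypothetical here:
    the fraction of received packets that the node forwards).
    """
    values = list(behavior.values())
    med = median(values)
    # Median absolute deviation: a noise-tolerant measure of typical peer spread.
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return {node for node, v in behavior.items() if v != med}
    return {node for node, v in behavior.items() if abs(v - med) / mad > k}


forwarding_ratio = {"n1": 0.97, "n2": 0.95, "n3": 0.96, "n4": 0.98, "n5": 0.41}
suspects = flag_outlier_nodes(forwarding_ratio)  # -> {"n5"}, a likely packet dropper
```

Using the median and MAD instead of the mean and standard deviation keeps a single misbehaving node from distorting the peer baseline it is judged against.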

Lastly, Signature-based IDSs (FIDSs) rely on comparing network traffic to a database of known attack signatures. While efficient against previously documented threats, FIDSs are less effective at addressing emerging or unknown attacks, limiting their applicability in rapidly changing environments. Blockchain-based IDSs enhance signature-based detection by ensuring tamper-proof logging of security events and alerts. By using a distributed ledger, these IDSs prevent log manipulation and improve trust in data integrity, making them suitable for critical infrastructures and collaborative IoT systems [36].
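The signature-matching principle, and its blind spot for novel attacks, can be illustrated with a toy rule matcher. In this sketch, each hypothetical `Signature` lists header fields that must match exactly plus an optional payload substring; the rules are invented for illustration and are not drawn from any real signature database.

```python
from dataclasses import dataclass, field


@dataclass
class Signature:
    """Hypothetical rule: all header fields must match and the payload must contain a byte pattern."""
    name: str
    fields: dict = field(default_factory=dict)
    payload_contains: bytes = b""


def match_signatures(packet, signatures):
    """Return the names of every signature the packet matches."""
    hits = []
    for sig in signatures:
        headers_match = all(packet.get(k) == v for k, v in sig.fields.items())
        payload_match = sig.payload_contains in packet.get("payload", b"")
        if headers_match and payload_match:
            hits.append(sig.name)
    return hits


SIGNATURES = [
    Signature("telnet-default-creds", {"proto": "tcp", "dst_port": 23}, b"admin:admin"),
    Signature("udp-to-port-0", {"proto": "udp", "dst_port": 0}),
]

known = match_signatures({"proto": "tcp", "dst_port": 23, "payload": b"login admin:admin"}, SIGNATURES)
novel = match_signatures({"proto": "tcp", "dst_port": 443, "payload": b"unseen exploit"}, SIGNATURES)
# known -> ["telnet-default-creds"]; novel -> [] because no rule describes the new attack
```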

A review of the literature indicates that all IDS types are being used in IoT security, though their application varies by context.

Figure 6 shows that NIDSs are the most prevalent due to their effectiveness in real-time network monitoring in distributed environments. In contrast, while AIDSs can adapt to new attack vectors, they often suffer from high false positive rates, and HIDSs, though less frequent, are crucial for protecting critical devices. FIDSs and BIDSs are less common due to their limitations in detecting emerging threats and managing noisy behavioral data, respectively. This diversity underscores the need to tailor IDS solutions to the specific challenges of IoT. Hybrid approaches, grouped as “others” in the literature, merge several detection techniques to address specific IoT challenges. By integrating multiple methodologies, these solutions can achieve higher accuracy and lower false positive rates than individual traditional IDS types.

The architecture of IDSs, whether centralized or decentralized, also plays a critical role in their application. Centralized IDSs consolidate data analysis at a single point, offering a unified global security view and facilitating advanced monitoring through comprehensive data aggregation. However, they require significant computational resources and may suffer scalability issues, e.g., larger networks can lead to bottlenecks, increased latency, and reduced responsiveness. Additionally, a centralized design creates a single point of failure that may compromise overall security. In contrast, decentralized IDSs distribute detection tasks across multiple nodes, enabling parallel processing that reduces the latency of transmitting data to a central server and improves real-time responsiveness. This approach also enhances privacy by localizing processing on edge devices. Nevertheless, the inherent coordination among heterogeneous nodes increases system complexity, requiring robust protocols to maintain data coherence and effective synchronization.

Edge IDSs exemplify decentralized approaches by performing security analysis near the data source, further minimizing latency and preserving user privacy, which is critical in time-sensitive environments such as healthcare IoT and industrial control systems. Energy efficiency and maintenance involve additional trade-offs: centralized systems benefit from high-performance servers and simpler maintenance, but they incur higher energy costs and remain exposed to single points of failure. Decentralized models, although offering improved fault tolerance and scalability, demand complex integration and interoperability solutions.

In summary, centralized IDSs provide comprehensive data visibility and simplified management but may struggle in large-scale, dynamic IoT environments due to latency and resource bottlenecks. Conversely, decentralized architectures offer better scalability and responsiveness with enhanced privacy, although they introduce challenges in system complexity, coordination, and energy management.

Table 3 provides a comparative analysis of the main IDS types used in IoT environments, detailing their detection approaches, advantages, limitations, and practical use cases. It reveals that no single IDS type is optimal for all contexts, as the choice depends on the threat model and specific constraints. For instance, NIDSs are highly effective for detecting broad, real-time threats such as DDoS attacks in smart cities, but may overlook device-level issues like firmware tampering in medical devices, which HIDSs better address.

Similarly, AIDSs can identify zero-day attacks by spotting deviations from normal behavior; however, they tend to produce high false positive rates. FIDSs, while dependable for known threats, struggle with zero-day attacks due to their reliance on fixed signatures. Additionally, blockchain-based IDSs ensure tamper-proof logging, which makes them well suited to critical financial systems, whereas Edge IDSs reduce latency by analyzing data near the source, proving advantageous for real-time detection in industrial IoT. Hybrid IDSs combine multiple methods to address both known and emerging threats, enhancing overall accuracy and efficiency.

3.2. Types of Proposal Evaluation

In the reviewed articles, approximately 70% include some form of evaluation, which is crucial for assessing IDS performance, yet the absence of standardized metrics often hinders direct comparisons across studies. The majority of the articles employ two main evaluation methods: simulation or practical experimentation, as depicted in Figure 7.

Simulations are particularly popular for evaluating IDS performance, as they allow the creation of controlled environments that replicate real IoT conditions. Specialized tools such as NS-3 [37] or IoT Simulator [38] are frequently used in these studies.

For example, M. Amiri-Zarandi et al. [39] presented an IDS based on FL called the Social Intrusion Detection System (SIDS). The SIDS addresses the limitations of traditional centralized methods, which collect all data on a central server and therefore increase computational load and privacy risks, by training models locally on IoT devices and then aggregating them on a central server. Their study demonstrated that the SIDS improves detection accuracy, optimizes resource usage, and preserves privacy compared to centralized and individual approaches.

Recent work by Kipongo et al. [40] and Sharadqh et al. [41] employs NS-3.26 to validate IDSs in IoT settings. Kipongo et al. simulate an AI-based IDS in edge-assisted software-defined wireless sensor networks (SDWSNs), achieving over 90% detection accuracy and packet-delivery ratio while balancing energy consumption and latency. Sharadqh et al. assess HybridChain-IDS, a blockchain-anchored, bi-level framework, reporting high precision, recall, and F1-scores without degrading throughput or latency. These studies underscore NS-3’s utility for capturing IoT complexity and heterogeneity.

Other articles opt for practical experiments conducted in real or controlled environments, which, although more costly, provide robust validation by capturing the inherent complexities of IoT. A. Verma et al. [42] carried out an experimental evaluation of seven supervised ML-based IDS classifiers, tuning them on the CIDDS-001, UNSW-NB15, and NSL-KDD datasets, and then deployed each model on a Raspberry Pi 3 Model B+ to measure average response times and determine the best trade-off between detection performance and real-time latency. These tests demonstrated the effectiveness of the approach for real-time intrusion detection while addressing the complexity and variability of IoT environments. Similarly, M. Osman et al. [43] propose ELG-IDS, a hybrid intrusion detection system for RPL-based IoT networks that combines genetic-algorithm feature selection with a stacking ensemble of classifiers. In experiments on RPL attack datasets, ELG-IDS achieves up to 99.66% detection accuracy, demonstrating the effectiveness of GA-driven feature reduction and ensemble learning. Shalabi et al. [44], in their systematic literature review of blockchain-based IDS/IPS for IoT networks, highlight how distributed ledgers can ensure tamper-proof logging of security events and bolster trust in collaborative IoT environments, though they do not conduct new experiments themselves.

Building on this, S. Li et al. [45] introduce HDA-IDS, which fuses signature-based and anomaly-based detection using a CL-GAN model. When evaluated on benchmark datasets such as NSL-KDD and CICIDS2018, HDA-IDS shows around a 5% improvement in detection accuracy and significant reductions in both training and testing times, underscoring the value of controlled experiments for validating IDS performance in heterogeneous IoT scenarios. These findings emphasize that a combination of simulations and real-world experiments is essential to comprehensively assess IDS scalability, accuracy, and practical applicability in IoT.

It is also essential to highlight the importance of datasets in the evaluation of proposals. A dataset is an organized collection of data used to train and evaluate intrusion detection models. However, many publicly available datasets for IDS evaluation lack diversity, often failing to cover the full spectrum of IoT threats, such as low-rate DoS attacks or adversarial examples. As a result, models may perform well under controlled conditions yet fail in real-world scenarios. Using diverse datasets improves detection accuracy, model robustness, and generalization capacity, as shown in Figure 8.

A common limitation is the reliance on synthetically generated data, which simplifies dataset creation but often fails to capture the dynamic nature of IoT traffic. For example, datasets like UNSW-NB15 and NSL-KDD rely heavily on simulated attacks, lacking more sophisticated threats such as polymorphic malware or encrypted traffic often found in real environments. This results in models that can detect straightforward threats but struggle with more complex patterns like zero-day attacks, which exploit previously unknown vulnerabilities.

Dataset imbalance is another frequent issue. Many datasets are dominated by either attack traffic or benign data, which can skew the model’s learning process. For example, NSL-KDD contains a disproportionately high number of attack samples, leading models to over-prioritize attack detection while overlooking subtle variations in normal traffic. Conversely, datasets with few attack samples may cause models to miss rare threats such as low-rate DoS attacks. Although techniques like Synthetic Minority Over-sampling Technique (SMOTE) can help balance the data, they may introduce synthetic patterns that do not fully represent actual traffic behavior, potentially distorting detection metrics.
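For reference, the core SMOTE idea is to create a synthetic minority sample on the line segment between an existing minority sample and one of its k nearest minority-class neighbors. The sketch below is a simplified variant (it draws the base sample at random instead of iterating over every minority sample), and the two-feature "low-rate DoS" flows are hypothetical. Because every synthetic point interpolates existing ones, oversampling cannot introduce genuinely new traffic behavior, which is one reason it may distort detection metrics.

```python
import math
import random


def smote(minority, n_synthetic, k=3, seed=0):
    """Simplified SMOTE: interpolate between a minority sample and a random one of its k nearest minority neighbors."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_synthetic):
        i = rng.randrange(len(minority))
        base = minority[i]
        others = [s for j, s in enumerate(minority) if j != i]
        # k nearest minority-class neighbors of `base` (Euclidean distance)
        neighbours = sorted(others, key=lambda s: math.dist(base, s))[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # position along the segment base -> neighbour, in [0, 1)
        synthetic.append(tuple(b + gap * (n - b) for b, n in zip(base, neighbour)))
    return synthetic


# Hypothetical minority-class flows: (packets per second, mean inter-arrival time in s)
low_rate_dos = [(0.5, 2.0), (0.6, 1.8), (0.4, 2.2), (0.7, 1.6)]
new_samples = smote(low_rate_dos, n_synthetic=6)
```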

Lastly, the lack of realism in many datasets is a major concern. Real IoT networks often include multiple device types, variable traffic loads, encrypted communications, and sporadic interactions (factors that synthetic datasets struggle to replicate). For example, although datasets such as BoT-IoT and N-BaIoT are specifically designed for IoT environments, they are generated under controlled conditions and frequently lack the variability of genuine network traffic.

Figure 9 illustrates a side-by-side performance benchmark of five representative IDS models on the CICIDS 2017 dataset, comparing Precision, Recall, and F1-score for each approach [46].
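Because Figure 9 compares models by Precision, Recall, and F1-score, it may help to recall how these are derived from confusion-matrix counts: Precision = TP / (TP + FP), Recall = TP / (TP + FN), and F1 is their harmonic mean. The helper below also reports the false-positive rate FP / (FP + TN) discussed throughout this section; its name and the label strings "attack" and "benign" are illustrative assumptions.

```python
def detection_metrics(y_true, y_pred, positive="attack"):
    """Precision, recall, F1 and false-positive rate, treating `positive` as the attack class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    fpr = fp / (fp + tn) if fp + tn else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "fpr": fpr}


y_true = ["attack"] * 4 + ["benign"] * 6
y_pred = ["attack", "attack", "attack", "benign",  # 3 TP, 1 FN
          "attack", "benign", "benign", "benign", "benign", "benign"]  # 1 FP, 5 TN
m = detection_metrics(y_true, y_pred)
# precision = 0.75, recall = 0.75, f1 = 0.75, fpr = 1/6
```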

Several studies have attempted to address these limitations by combining datasets to improve coverage. F. Nie et al. [47] introduce M2VT-IDS, a multi-task, multi-view learning framework that represents IoT traffic from three perspectives (spatio-temporal series, header-field patterns, and payload semantics) and processes them through a shared network followed by task-specific attention modules. Evaluated on the BoT-IoT, MQTT-IoT-IDS2020, and IoT-Network-Intrusion datasets, M2VT-IDS achieves over 99.8% accuracy in anomaly detection and similarly high precision, recall, and F1-scores for attack identification and device identification, all while avoiding redundant feature engineering. Similarly, S. Racherla et al. [48] evaluated Deep-IDS using the CIC-IDS2017 dataset, reaching a detection rate of 96.8%. While these results are promising, the datasets primarily focus on a limited set of threats, such as DDoS attacks, and fail to capture the full range of IoT-specific vulnerabilities.

Several studies have compared IDS performance against established benchmarks. For instance, R. Ahmad et al. [46] evaluated the effectiveness of hybrid classifiers using datasets such as BoT-IoT, N-BaIoT, CICIDS2017, and UNSW-NB15. However, these datasets often exhibit repetitive attack patterns, typically limited to basic scans and floods, and therefore tend to omit more complex, multi-stage attacks and encrypted payloads.

To select the most suitable dataset for IDS evaluation, it is essential to prioritize those that encompass a diverse range of attack types, device behaviors, and traffic patterns to prevent overfitting to a limited threat landscape. Datasets should reflect realistic network conditions, including encrypted traffic, dynamic variations in load, and sporadic device activity, as commonly observed in IoT environments. Additionally, the balance between benign and malicious traffic must be carefully considered, as datasets skewed towards a majority class can distort model evaluation. Finally, the dataset size should be sufficient to capture network complexity without overwhelming computational resources, particularly in resource-constrained IoT devices, ensuring a comprehensive and reliable assessment of IDS performance.

Table 4 summarizes datasets used in IDS evaluations, revealing disparities in data diversity, feature count, and class balance. Historical datasets like NSL-KDD and UNSW-NB15 are synthetic and imbalanced, limiting their suitability for modern IoT scenarios. BoT-IoT and N-BaIoT better represent IoT environments but lack coverage of complex attack vectors, such as multi-stage attacks or encrypted traffic. The varying feature counts and imbalance ratios highlight the challenge of standardizing IDS evaluations and underscore the need for more diverse, realistic, and representative datasets for consistent performance comparisons.

While our review highlights these significant limitations, we recognize the need to bridge the gap between controlled experimental evaluations and the dynamic conditions of real-world networks. To address this, future studies should incorporate datasets collected directly from operational IoT networks to capture the authentic diversity of traffic, including encrypted communications and the heterogeneous behaviors of various devices, and pursue collaborations with industry partners to conduct pilot deployments and field experiments. These initiatives would enable IDSs to be tested under realistic conditions of network load, device variability, and genuine attack scenarios.

Hybrid evaluation frameworks that combine synthetic and real-world datasets bolster model robustness by ensuring that performance metrics, such as detection accuracy and false-positive rates, accurately reflect both controlled test conditions and dynamic operational environments. Collectively, these methods establish a more comprehensive assessment paradigm that drives IDS research toward practical, scalable solutions capable of meeting the stringent demands of real-world IoT deployments.

3.3. Optimized Aspects

To address the third research question, it is important to examine the aspects that are commonly optimized in IDS proposals for IoT environments, as shown in Figure 10. These include challenges such as resource constraints, data privacy, adaptability to dynamic threats, and scalability. Recent approaches highlight the importance of multi-objective optimization to balance competing priorities, such as detection accuracy and energy efficiency, especially in resource-constrained IoT devices [62,63].

In IoT environments, security is paramount due to the vast number of interconnected devices handling sensitive data. Recent advancements include analyzing network traffic and device behavior through DL and signature-based IDSs. For instance, F. Sadikin et al. [64] proposed a hybrid IDS for ZigBee networks, ZigBee being a wireless technology designed for low-power, short-range applications. Their approach combined rule-based detection with ML-based anomaly detection, addressing both known attacks like DoS and emerging threats such as device hijacking, in which attackers take control of devices and leave legitimate users powerless.

Additionally, Tasmanian Devil Optimization, a bio-inspired metaheuristic, has been combined with a deep autoencoder for intrusion detection in UAV networks, achieving up to 99.36% accuracy and high precision in comparative experiments [65]. More broadly, hybrid optimization schemes that integrate multi-objective algorithms for feature selection and hyperparameter tuning are used to balance detection performance against false-positive rates.

In a related direction, A. Abusitta et al. [66] leveraged anomaly detection using denoising autoencoders, a DL method capable of robustly representing data in noisy environments. Their solution achieved 94.6% accuracy in intrusion detection, highlighting the efficiency of autoencoders in identifying anomalous patterns in network traffic. Furthermore, IoT-PRIDS [67] introduces a packet-representation-based IDS that builds lightweight profiles solely from benign traffic, eschewing labeled attack data altogether, and demonstrates strong detection performance with minimal false alarms in practical IoT scenarios.

Computational efficiency is a critical factor in IoT systems due to the resource constraints of devices, such as limited processing power and energy availability. Researchers have focused on optimizing IDSs for high detection accuracy with minimal resource consumption. For instance, S. Bakhsh et al. [68] evaluate three deep learning models, namely a Feed-Forward Neural Network (FFNN), Long Short-Term Memory (LSTM), and a Random Neural Network (RandNN), on the CIC-IoT22 dataset, achieving detection accuracies of 99.93%, 99.85%, and 96.42%, respectively, which highlights their potential suitability for deployment in resource-constrained IoT environments.

Edge AI involves deploying artificial intelligence algorithms directly on IoT devices or edge servers, enabling localized data processing and analysis without relying heavily on cloud resources. This decentralized approach reduces latency, enhances privacy, and minimizes bandwidth usage, making it particularly suitable for resource-constrained IoT environments. When integrated with IDS, Edge AI allows real-time anomaly detection by processing traffic and behavioral data at the network’s edge, ensuring quicker responses to threats while preserving sensitive information locally.

Nguyen et al. [69] introduce TS-IDS, a GNN-based framework that fuses node- and edge-level features for intrusion detection. On the NF-ToN-IoT benchmark, TS-IDS improves weighted F1 by 183.49% over an EGraphSAGE baseline, achieves additional F1 gains of 6.18% on NF-BoT-IoT and 0.73% on NF-UNSW-NB15-v2, and reaches an AUC of 0.9992. Moreover, its O(N) computational complexity supports scalable deployment in large-scale IoT networks.

Similarly, Javanmardi et al. [70] introduced the M-RL model, a mobility-aware IDS for IoT-Fog networks, which combined Rate Limiting (RL) and Received Signal Strength (RSS) analysis to optimize resource usage. This lightweight solution achieved over 99% detection accuracy in dynamic environments while mitigating spoofing attacks. Additionally, hybrid Deep Autoencoder models have demonstrated high performance in anomaly detection by reducing dimensionality and enabling both binary and multiclass classifications, as evidenced by experiments with the BoT-IoT dataset. Together, these innovations highlight the potential of Edge AI to deliver scalable, precise, and resource-efficient IDS solutions in diverse IoT contexts.

Similarly, Y. Saheed et al. [71] address threat detection in the industrial IoT environment using a model that combines a genetic algorithm for feature selection with an attention mechanism and an adapted Adam optimizer in LSTM networks. This lightweight solution reduces computational complexity and optimizes detection on resource-constrained devices, achieving exceptionally high performance metrics on real datasets (SWaT and WADI). The integration of SHAP also reinforces the transparency of the process, facilitating the interpretation of the results by experts.

By incorporating Edge AI, IDS solutions can adapt dynamically to network variations, handle large-scale IoT deployments, and ensure reliable detection with minimal computational overhead. These innovations underscore the growing relevance of Edge AI in building robust, efficient, and scalable IDSs for modern IoT ecosystems.

FL is a decentralized ML approach where devices collaboratively train a global model without sharing raw data. Instead, only model updates are exchanged, ensuring data privacy and reducing bandwidth usage. This makes FL particularly suitable for IoT environments, where devices are resource-constrained and often handle sensitive information. In IDS, FL enables localized detection by training models on-device, capturing unique network behaviors while aggregating insights to improve global model robustness [72,73].
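The aggregation step of FL can be sketched with Federated Averaging (FedAvg), a widely used rule in which the server averages client parameters weighted by local sample counts. This minimal sketch assumes model parameters are flattened into plain float lists; it is not necessarily the aggregation used by the works cited here, and practical deployments add client selection, secure aggregation, and several local training epochs per round.

```python
def fed_avg(client_updates):
    """FedAvg: average client parameter vectors, weighted by local dataset size.

    `client_updates` is a list of (parameters, n_samples) pairs. Only these
    parameters reach the server; the raw traffic stays on each device.
    """
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    global_params = [0.0] * dim
    for params, n in client_updates:
        for i, w in enumerate(params):
            global_params[i] += w * n / total
    return global_params


# Two hypothetical IoT gateways: one trained on 100 local flows, one on 300
global_model = fed_avg([([1.0, 2.0], 100), ([3.0, 4.0], 300)])  # -> [2.5, 3.5]
```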

Building on this, V. Rey et al. [74] applied FL to IDSs using the N-BaIoT dataset. Their approach reduced communication overhead by transmitting model updates instead of raw data. Additionally, techniques like trimmed mean aggregation excluded outliers, and s-resampling minimized model heterogeneity, enhancing system integrity and resilience against adversarial attacks.
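A coordinate-wise trimmed mean, one common form of trimmed mean aggregation, discards the most extreme client values for each parameter before averaging. The sketch below assumes flattened parameter lists and a hypothetical `trim` count per side; the exact trimming configuration used by Rey et al. [74] is not reproduced here.

```python
def trimmed_mean_aggregate(client_params, trim=1):
    """Coordinate-wise trimmed mean: per parameter, drop the `trim` largest and
    `trim` smallest client values, then average the rest."""
    n = len(client_params)
    if n <= 2 * trim:
        raise ValueError("need more than 2 * trim clients")
    aggregated = []
    for values in zip(*client_params):  # iterate over one parameter at a time
        kept = sorted(values)[trim:n - trim]
        aggregated.append(sum(kept) / len(kept))
    return aggregated


honest = [[1.0, 1.0], [1.2, 0.8], [0.9, 1.1]]
poisoned = [[50.0, -50.0]]  # a hypothetical malicious client update
robust = trimmed_mean_aggregate(honest + poisoned, trim=1)  # approximately [1.1, 0.9]
```

With one poisoned client among four, the plain mean of the first coordinate would be pulled to about 13.3, whereas the trimmed mean stays close to the honest values.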

Mansi H. Bhavasar et al. [6] present FL-IDS, a federated learning-based intrusion detection framework deployed on Raspberry Pi and Jetson Xavier edge devices that reaches up to 99% accuracy and a model loss of 0.009 when compared with a centralized baseline, all without sharing raw data. Tabassum et al. [2] enhance this paradigm with EDGAN-IDS, integrating a GAN at each client to generate synthetic samples that correct class imbalance and accelerate convergence, achieving over 97% accuracy across multiple IoT datasets. Finally, Ma et al. [75] propose ADCL, a similarity-based collaborative learning scheme that selectively combines models trained on related networks, boosting F-score by up to 80% in adaptability and 42% in learning integrity without exchanging user data.

Adaptability is vital in IoT due to the dynamic nature of networks and evolving threats, and advanced ML techniques have been explored to improve IDS adaptability. G. Thamilarasu et al. [76] developed an IDS for the Internet of Medical Things (IoMT) using ML algorithms and regression techniques. This hierarchical system employed mobile agents for distributed attack detection, demonstrating high adaptability and low resource consumption in hospital networks. J. Jeon et al. [77] propose Mal3S, a static IoT malware-detection framework that first extracts five types of features from each binary (raw bytes, opcode sequences, API-call patterns, DLL imports, and embedded strings), then encodes each feature set as a grayscale image and feeds these images into a multi-SPP-net CNN for classification. Evaluated on a diverse IoT malware corpus, Mal3S substantially outperforms conventional static detectors and demonstrates a strong ability to identify novel malware families.

Emerging methodologies, such as Variational Autoencoders (VAEs) and XAI, are further enhancing adaptability in IDSs. VAEs, a type of generative model, address data imbalance by generating realistic synthetic samples of rare or unseen attack patterns. For example, Li et al. [78] propose VAE-WGAN, a hybrid generative framework that combines a variational autoencoder with a Wasserstein GAN to synthesize labeled attack samples and rebalance the training set. When used to augment data for an LSTM+MSCNN classifier, it achieves 83.45% accuracy and an F1-score of 83.69% on NSL-KDD, and surpasses 98.9% in both metrics on AWID. XAI complements this by making decision processes interpretable and transparent, so that analysts can see why certain patterns are flagged and adjust detection strategies accordingly. Han et al. [79] introduce XA-GANomaly, an adaptive semi-supervised GAN-based IDS that incrementally retrains on incoming data batches and integrates SHAP, reconstruction-error visualization, and t-SNE to interpret and refine detection decisions dynamically.
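For readers less familiar with VAEs, the standard training objective clarifies how they generate new samples. Assuming a Gaussian encoder $q_\phi(z\mid x)=\mathcal{N}(\mu_\phi(x),\operatorname{diag}\sigma_\phi^2(x))$ with latent dimension $d$ and a standard normal prior $p(z)=\mathcal{N}(0,I)$, the VAE maximizes the evidence lower bound (ELBO). Once trained (for example, on attack records only), new synthetic records are produced by sampling $z$ from the prior and decoding it. This is the generic VAE formulation; the adversarial (Wasserstein) component of VAE-WGAN [78] is not shown.

```latex
\begin{aligned}
\mathcal{L}(\theta,\phi;x) &= \mathbb{E}_{q_\phi(z\mid x)}\big[\log p_\theta(x\mid z)\big]
  - D_{\mathrm{KL}}\big(q_\phi(z\mid x)\,\|\,p(z)\big),\\
z &= \mu_\phi(x) + \sigma_\phi(x)\odot\varepsilon, \qquad \varepsilon\sim\mathcal{N}(0,I),\\
D_{\mathrm{KL}}\big(q_\phi(z\mid x)\,\|\,\mathcal{N}(0,I)\big)
  &= \tfrac{1}{2}\sum_{j=1}^{d}\big(\mu_j^2 + \sigma_j^2 - \log\sigma_j^2 - 1\big),\\
\tilde{x} &\sim p_\theta(x\mid z), \qquad z\sim\mathcal{N}(0,I).
\end{aligned}
```

The second line is the reparameterization that makes the sampling step differentiable during training; the last line is the generation step used to augment rare attack classes.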

Reliability is another critical parameter for ensuring accurate detection in IoT environments. Several studies have utilized advanced architectures to improve IDS reliability while minimizing FP. For example, S. Soliman et al. [80] implemented models based on LSTM, Bidirectional LSTM (Bi-LSTM), and Gated Recurrent Units (GRU), achieving nearly 100% accuracy and a classification error below 0.01%. S. Khan et al. [81] propose SB-BR-STM, a novel CNN block that combines dilated split-transform-merge operations with squeezed-boosted channels, and demonstrate its effectiveness against standard architectures (SqueezeNet, ShuffleNet, DenseNet-201, etc.) on the IoT_Malware dataset, achieving a top accuracy of 97.18%. Santosh K. Smmarwar et al. [82] combined DWT-based feature extraction, GAN-driven data augmentation, and CNN classification to achieve near-perfect accuracy, demonstrating strong resilience in smart-agriculture IoT scenarios. Similarly, S. Racherla et al. [48] proposed Deep-IDS, an LSTM-based edge system validated on the CIC-IDS2017 dataset, which attains 97.67% detection accuracy with a low false-alarm rate. This system demonstrated high reliability in identifying threats while minimizing FP, ensuring consistent performance in diverse IoT environments.

VAEs and XAI play crucial roles in improving IDS reliability by addressing key challenges in detection accuracy and interpretability. VAEs enhance robustness by generating diverse synthetic attack data, as demonstrated by Li et al. [78], whose VAE-WGAN framework synthesizes attack samples to rebalance training data, yielding 83.45% accuracy and an 83.69% F1-score on NSL-KDD, and over 98.9% in both metrics on AWID. Meanwhile, Han et al. [79] showed how SHAP, reconstruction-error visualization, and t-SNE can be integrated into XA-GANomaly to explain and refine its outputs, yielding an 8% improvement in F1-score and an 11.51% increase in accuracy on UNSW-NB15 while maintaining stable detection performance.

Scalability is essential in IoT, given the large number of devices and data volumes. M. Osman et al. [43] proposed an ensemble learning approach combined with feature selection via genetic algorithms, achieving 97.9% accuracy in detecting attacks in Routing Protocol for Low-Power and Lossy Networks (RPL). This method reduces computational complexity and memory footprint by selecting a compact subset of predictive features, which enhances scalability in resource-constrained RPL deployments. M. Amiri-Zarandi et al. [39] demonstrated that FL can effectively address scalability challenges in IDSs by distributing model training across devices, reducing central server dependency. This approach not only lowers communication overhead but also enhances privacy by ensuring sensitive data remains on local nodes.
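The genetic-algorithm feature selection attributed to Osman et al. [43] can be illustrated generically: candidate feature subsets are encoded as bit masks and evolved through tournament selection, one-point crossover, bit-flip mutation, and elitism. In this sketch the fitness function is a hypothetical stand-in (per-feature relevance scores minus a size penalty); in a real IDS it would typically be a validation metric of the classifier trained on the selected features, and all hyperparameters shown are assumptions.

```python
import random


def ga_feature_selection(fitness, n_features, pop_size=30, generations=40,
                         mutation_rate=None, seed=0):
    """Evolve binary feature masks (1 = keep the feature) that maximize fitness(mask)."""
    rng = random.Random(seed)
    if mutation_rate is None:
        mutation_rate = 1.0 / n_features
    population = [[rng.randint(0, 1) for _ in range(n_features)]
                  for _ in range(pop_size)]
    best = max(population, key=fitness)

    def tournament():
        # Binary tournament: the fitter of two random masks becomes a parent.
        a, b = rng.sample(population, 2)
        return a if fitness(a) >= fitness(b) else b

    for _ in range(generations):
        offspring = [best[:]]  # elitism: always carry the best mask forward
        while len(offspring) < pop_size:
            p1, p2 = tournament(), tournament()
            cut = rng.randrange(1, n_features)  # one-point crossover
            child = p1[:cut] + p2[cut:]
            # Bit-flip mutation keeps exploring other feature subsets.
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            offspring.append(child)
        population = offspring
        best = max(population, key=fitness)
    return best


# Hypothetical relevance of six traffic features (three useful, three near-useless)
RELEVANCE = [0.9, 0.05, 0.8, 0.02, 0.7, 0.01]


def toy_fitness(mask, penalty=0.1):
    """Reward relevant features and penalize subset size (a proxy for model cost)."""
    return sum(r for r, g in zip(RELEVANCE, mask) if g) - penalty * sum(mask)


best_mask = ga_feature_selection(toy_fitness, n_features=len(RELEVANCE))
```

The size penalty is what makes the search favor compact subsets, mirroring the reduced memory footprint the cited work reports for RPL deployments.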

Blockchain technology offers a decentralized, immutable framework that strengthens IDS functionality by securely logging intrusion events and ensuring the integrity of threat intelligence. In this context, blockchain creates a distributed ledger where each detected event, such as anomalous traffic patterns or security alerts, is recorded with timestamps and cryptographic validation (e.g., via secure hash functions like SHA-256), which prevents any alteration or deletion of logs. In addition, smart contracts are programmed with predefined conditions that automatically trigger responses (like alerting administrators or isolating compromised nodes) when specific attack patterns are detected. The blockchain’s consensus mechanism, such as Proof-of-Authority or similar lightweight protocols, ensures that the validation process is distributed across multiple nodes, thereby eliminating single points of failure, fostering trust in collaborative environments, and enabling scalable intrusion detection, especially in critical scenarios like industrial IoT or smart city infrastructures.
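The tamper-evidence property described above comes from hash chaining: each logged event stores the SHA-256 digest of its predecessor, so altering or deleting an earlier entry invalidates every later link. The following sketch uses Python's standard `hashlib` and `json` modules; the event fields, timestamps, and IP addresses are hypothetical, and it deliberately omits the consensus, replication, and smart-contract layers. A single node could simply recompute the whole chain after tampering, so the actual guarantee comes from distributing the ledger across validating nodes.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder predecessor hash for the first entry


def _digest(event, prev_hash):
    # Canonical JSON (sorted keys) so identical content always yields the same hash.
    payload = json.dumps({"event": event, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_event(chain, event):
    """Append an IDS event; its hash commits to the event and to the previous entry."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"event": event, "prev_hash": prev_hash,
                  "hash": _digest(event, prev_hash)})


def verify_chain(chain):
    """Recompute every link; an edited or removed entry breaks verification."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


log = []
append_event(log, {"ts": "2024-05-01T10:00:00Z", "src_ip": "203.0.113.7", "type": "port_scan"})
append_event(log, {"ts": "2024-05-01T10:00:42Z", "src_ip": "203.0.113.7", "type": "brute_force"})
intact = verify_chain(log)          # True
log[0]["event"]["type"] = "benign"  # an attacker rewrites the first record
tampered = verify_chain(log)        # False
```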

Furthermore, Saveetha et al. [83] introduce a deep-learning-based IDS and outline how integrating blockchain could anchor detection logs immutably (reinforcing tamper resistance) without yet implementing the full ledger. Y. Sunil Raj et al. [84] present a real-time adaptive IoT IDS that combines federated learning with blockchain to immutably record and verify model updates, strengthening the integrity of the collaborative training process. B. Hafid et al. [85] explore IDS deployment on resource-constrained edge devices, suggesting that blockchain can secure detection logs and enable safe information sharing among nodes, thus mitigating vulnerabilities inherent to centralized architectures.

Y. Loari et al. [86] further enhance the IDS landscape by developing a collaborative system that combines anomaly-based and signature-based methods, using blockchain to securely store and distribute critical threat data through smart contracts. S. Alharbi et al. [87] build on this idea with a framework that shares a blacklist of malicious IP addresses across multiple IDS nodes via blockchain, reducing data redundancy and boosting scalability. Finally, while Liu et al. [88] develop an AI-, IoT-, and blockchain-based framework for food authenticity and traceability in smart agriculture, they do not propose an IDS. Nevertheless, their discussion of immutable logging and transparent record-keeping illustrates how similar ledger mechanisms could be leveraged to secure IDS detection logs and bolster resilience across IoT networks.

Blockchain-based IDS solutions are increasingly proposed in the literature. As an illustrative scenario, consider a smart factory where an IDS continuously monitors network traffic from critical IoT sensors and controllers. When the system detects a sudden surge of failed login attempts from a previously unrecognized IP address (potentially indicating a brute-force attack), the IDS computes a SHA-256 hash of the event details (timestamp, source IP, and anomaly type) and appends the hashed record to the blockchain ledger, making any later tampering evident. Simultaneously, a smart contract pre-programmed with thresholds for suspicious activity automatically alerts network administrators, isolates the affected device by updating firewall rules, and disseminates the intrusion alert to other nodes via a lightweight consensus protocol such as Proof-of-Authority, thus curtailing any lateral movement by the attacker.
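The threshold logic of such a smart contract can be sketched in plain Python. This is not contract code for any specific blockchain platform; the class `BruteForceRule`, the threshold of five failures, the 60 s sliding window, and the action names are all hypothetical choices for illustration.

```python
from collections import defaultdict, deque

FAILED_LOGIN_THRESHOLD = 5   # hypothetical: failures tolerated per window
WINDOW_SECONDS = 60          # hypothetical sliding-window length


class BruteForceRule:
    """Contract-style rule: fires once when a source IP exceeds the failure threshold."""

    def __init__(self, threshold=FAILED_LOGIN_THRESHOLD, window=WINDOW_SECONDS):
        self.threshold = threshold
        self.window = window
        self.failures = defaultdict(deque)  # src_ip -> timestamps of recent failures
        self.blocked = set()

    def on_failed_login(self, src_ip, ts):
        q = self.failures[src_ip]
        q.append(ts)
        # Forget failures that have fallen out of the sliding window.
        while q and ts - q[0] > self.window:
            q.popleft()
        if len(q) > self.threshold and src_ip not in self.blocked:
            self.blocked.add(src_ip)
            # Hypothetical response actions mirroring the scenario in the text.
            return [("alert_admins", src_ip),
                    ("add_firewall_block", src_ip),
                    ("broadcast_alert", src_ip)]
        return []


rule = BruteForceRule()
for t in range(7):
    actions = rule.on_failed_login("198.51.100.7", ts=float(t))
    if actions:
        print(t, actions)  # fires once, at t = 5
```

Recording each fired action in a hash-chained ledger would then give the audit trail described above.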

Despite these strengths, current limitations include scalability challenges in high-volume networks, latency introduced by consensus protocols, and integration difficulties with resource-constrained IoT devices.

R. Alghamdi et al. [89] propose a cascaded federated deep learning framework that pushes CNN and LSTM training to individual IoT nodes (preserving privacy), while a lightweight trust-based pre-filter minimizes latency and computational overhead, thus demonstrating the scalability and efficiency needed for real-time IDS deployment in resource-constrained IoT networks.

3.4. Main AI Approaches

This section focuses exclusively on AI-based intrusion-detection techniques for IoT, presenting supervised, tree-based, deep-learning, federated-learning, and generative approaches in a self-contained block to improve clarity and avoid cross-sectional repetition.

AI has gained popularity in the field of cybersecurity due to its ability to dynamically adapt to new threats, improve detection accuracy, and reduce FP. AI-based IDSs continuously learn from data to identify anomalous patterns, allowing them to detect unknown threats more effectively. However, they face significant challenges.

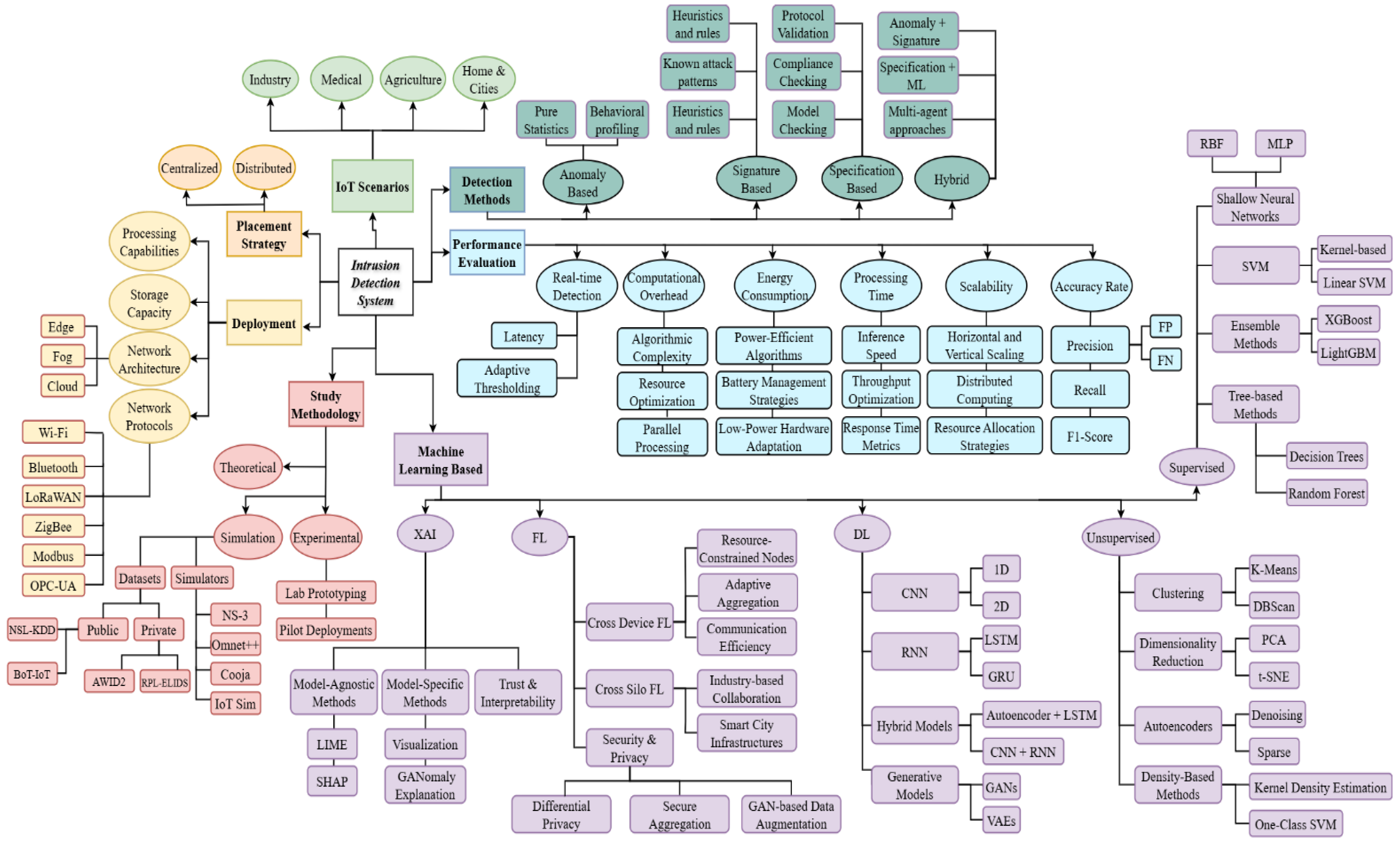

Figure 11 presents a classification of the AI techniques most commonly used in IDSs. The classification is divided into five main categories: supervised ML, tree-based methods, DL, FL, and GANs, and each category lists the specific algorithms and methods most frequently reported in the literature. The remainder of this section reviews studies that have used these techniques to enhance intrusion detection, describing how they were implemented in various environments and the results obtained.

The use of AI in IDSs has grown because models trained on network traffic can learn anomalous patterns, improving detection accuracy and reducing false positives as more data becomes available. However, AI solutions typically require large datasets and substantial computational resources, which limits their applicability on IoT devices, and they are vulnerable to adversarial attacks, which calls for robust designs. Traditional non-AI models, which are simpler and effective against known threats, therefore remain valuable in resource-constrained settings.

Several studies highlight the application of advanced techniques in IDSs. R. Ahmad et al. [46] present a comprehensive benchmark showing that pure convolutional networks train in roughly 10–20 min, significantly faster than hybrid autoencoder + BRNN or autoencoder + LSTM models (which may require several hours), while still exceeding 98% accuracy on datasets such as CICIDS2017 and NSL-KDD. Similarly, A. Kumar et al. [90] introduce EDIMA, a two-stage edge-AI IDS that first uses a lightweight ML detector to flag scanning sessions and then applies an autocorrelation-based test to pinpoint infected devices; in two 15-min replay scenarios with real IoT malware traces, it achieves a 100% detection rate (no missed detections) with very low false positives.

Distributed IDS solutions leveraging FL have emerged as a promising approach to address scalability, data privacy, and resource constraints in IoT. M. H. Bhavsar et al. [6] developed FL-IDS, which trains logistic regression and CNN models locally on Raspberry Pi and Jetson Xavier devices (never sharing raw traffic) and aggregates only model updates on a central server. Deployed on transportation IoT systems, it attains 94% accuracy on NSL-KDD and 99% on the Car-Hacking dataset. Their method employs an iterative federated training process in which a central server initializes a global model, distributes it to edge devices for local training, and then aggregates the model updates to refine the global model continuously. A simplified pseudocode outlining this process is shown in Algorithm 1.

Algorithm 1 lays out our federated training workflow in plain steps. First, the server creates an initial IDS model with random weights. In each training round, it selects a group of edge devices based on their availability scores. Each selected device that has collected enough local data trains the received model for a few epochs on its own dataset (with a FedProx proximal term that keeps the local model close to the global one) and then computes the difference between its updated model and the one it received. It sends that “delta”, together with its dataset size, back to the server; devices without enough data sit out that round. The server then fuses all incoming updates by taking a weighted average (so that larger datasets have more influence) to produce the next global model. Periodically, the server evaluates the new model on a held-out test set and stops early if the loss has plateaued. Because raw data never leaves the devices, this loop limits privacy exposure; it also accommodates devices of varying capacity and converges efficiently to a robust IDS model for heterogeneous IoT scenarios.

In addition to the main training loop, Algorithm 1 depends on three helper functions. SelectDevices(n, K, availability_scores) returns a subset S_t of K devices (out of the total n) chosen based on their availability scores. LocalUpdate(G_{t−1}, D_i, η, α) carries out FedProx-regularized local training of the global model G_{t−1} on device i’s dataset D_i using learning rate η and proximal term α, and returns the updated weights L_i^t. Finally, Evaluate(G_t, D_test) computes and returns the loss of the newly aggregated global model G_t on the held-out test set D_test.

| Algorithm 1: Federated IDS Training Process |
|---|
| Inputs: Total rounds (T), number of devices (n), local dataset of each device i (D_i), devices selected per round (K), learning rate (η), convergence threshold (τ), FedProx parameter (α), minimum samples per device (m_min), evaluation frequency (eval_freq), global test set (D_test), availability scores of all devices (availability_scores) |
| Output: Final global model G_T |
| 1. Initialize global model G_0 with random weights and last_loss ← ∞ |
| 2. for each round t = 1 to T do |
| 3. S_t ← SelectDevices(n, K, availability_scores) |
| 4. for each device i ∈ S_t in parallel do |
| 5. if \|D_i\| ≥ m_min then |
| 6. L_i^t ← LocalUpdate(G_{t−1}, D_i, η, α) |
| 7. Δw_i^t ← L_i^t − G_{t−1} |
| 8. Send(Δw_i^t, \|D_i\|) |
| 9. else |
| 10. Δw_i^t ← 0, \|D_i\| ← 0 |
| 11. end if |
| 12. end for |
| 13. total_samples ← Σ_{i∈S_t} \|D_i\| |
| 14. if total_samples > 0 then G_t ← G_{t−1} + Σ_{i∈S_t} (\|D_i\| / total_samples) × Δw_i^t, else G_t ← G_{t−1} |
| 15. if t mod eval_freq = 0 then |
| 16. loss_t ← Evaluate(G_t, D_test) |
| 17. if \|loss_t − last_loss\| < τ then |
| 18. return G_t |
| 19. end if |
| 20. last_loss ← loss_t |
| 21. end if |
| 22. end for |
| 23. return G_T |
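To make Algorithm 1 concrete, the following is a small, runnable Python sketch of the same loop: FedProx-regularized local training, size-weighted aggregation of deltas, sit-out of data-poor devices, and loss-plateau early stopping. It is an illustration under stated assumptions, not the FL-IDS implementation of [6]: the model is a two-feature logistic regression, the data are synthetic, SelectDevices is assumed to pick the K most available devices, devices train sequentially rather than in parallel, and the model starts from zeros instead of random weights so the run is reproducible.

```python
import math
import random


def predict(w, x):
    # Logistic regression: w[:-1] are feature weights, w[-1] is the bias.
    z = sum(wj * xj for wj, xj in zip(w, x)) + w[-1]
    z = max(-30.0, min(30.0, z))  # clamp to avoid overflow in exp
    return 1.0 / (1.0 + math.exp(-z))


def log_loss(w, data):
    eps = 1e-12
    return -sum(y * math.log(predict(w, x) + eps) + (1 - y) * math.log(1 - predict(w, x) + eps)
                for x, y in data) / len(data)


def local_update(w_global, data, lr, alpha, epochs=5):
    """LocalUpdate: gradient descent on mean log-loss + (alpha / 2) * ||w - w_global||^2."""
    w = list(w_global)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = predict(w, x) - y
            for j, xj in enumerate(x):
                grad[j] += err * xj
            grad[-1] += err
        # The FedProx proximal term pulls the local model back towards the global one.
        w = [wj - lr * (gr / len(data) + alpha * (wj - gj))
             for wj, gr, gj in zip(w, grad, w_global)]
    return w


def select_devices(n, k, availability):
    # Hypothetical policy: pick the k devices with the highest availability scores.
    return sorted(range(n), key=lambda i: availability[i], reverse=True)[:k]


def federated_training(datasets, availability, test_set, rounds=30, k=3,
                       lr=0.5, alpha=0.01, tau=1e-4, m_min=10, eval_freq=1):
    dim = len(datasets[0][0][0])
    g = [0.0] * (dim + 1)  # zero init instead of random weights, for reproducibility
    last_loss = float("inf")
    for t in range(1, rounds + 1):
        updates = []  # (delta, dataset size) pairs from devices that trained this round
        for i in select_devices(len(datasets), k, availability):
            if len(datasets[i]) >= m_min:  # devices with too little data sit out
                local = local_update(g, datasets[i], lr, alpha)
                updates.append(([lj - gj for lj, gj in zip(local, g)], len(datasets[i])))
        total = sum(size for _, size in updates)
        if total > 0:  # if every selected device sat out, keep the previous model
            g = [gj + sum(size / total * delta[j] for delta, size in updates)
                 for j, gj in enumerate(g)]
        if t % eval_freq == 0:
            loss = log_loss(g, test_set)
            if abs(loss - last_loss) < tau:  # loss has plateaued: stop early
                return g, t
            last_loss = loss
    return g, rounds


def make_device_data(rng, n, shift):
    # Hypothetical 2-feature "traffic" samples; label 1 (attack) when x0 + x1 > shift.
    data = []
    for _ in range(n):
        x = [rng.gauss(0, 1), rng.gauss(0, 1)]
        data.append((x, 1 if x[0] + x[1] > shift else 0))
    return data


rng = random.Random(0)
datasets = [make_device_data(rng, n, s) for n, s in [(200, 0.0), (80, 0.2), (5, 0.0), (150, -0.2)]]
availability = [0.9, 0.7, 0.95, 0.6]  # device 2 is selected but has too few samples
test_set = make_device_data(rng, 300, 0.0)
model, rounds_used = federated_training(datasets, availability, test_set)
print(rounds_used, round(log_loss(model, test_set), 3))
```

The slightly shifted decision boundaries across devices loosely mimic non-IID data; the proximal weight alpha trades local fit against drift from the global model.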

R. Kumar et al. [91] propose a fog-based IDS that runs Random Forest (RF) and XGBoost (XGB) locally on BoT-IoT nodes. In binary detection, RF achieves 99.81% accuracy (F1 99.90%) and XGB 99.999% (F1 99.999%); in multi-class tests, RF maintains ~99.99% accuracy (F1 99.997%), while XGB’s accuracy is similar but its F1 drops to 87.9%. As the number of fog nodes scales from 1 to 20, per-node training time falls from 340 s to 155 s for RF (≈54% reduction) and from 1480 s to 920 s for XGB (≈38% reduction), demonstrating improved scalability and efficiency. S. Khanday et al. [92] propose a SMOTE-balanced, feature-selected DDoS detector for constrained IoT devices, reaching over 98% accuracy with near-zero false positives on BoT-IoT and TON-IoT while keeping model complexity minimal.
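SMOTE balances a dataset by synthesizing new minority-class samples on line segments between a minority sample and one of its nearest minority-class neighbours. The sketch below implements that core idea in pure Python for illustration only; it does not reproduce the feature selection or exact configuration of [92], and in practice a library implementation such as the `SMOTE` class in imbalanced-learn would normally be used.

```python
import math
import random


def smote(minority, n_new, k=5, seed=0):
    """Generate n_new synthetic minority samples by SMOTE-style interpolation.

    Each synthetic point lies on the segment between a randomly chosen minority
    sample and one of its k nearest minority-class neighbours (Euclidean distance).
    Requires at least two minority samples.
    """
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        neighbours = sorted((p for p in minority if p is not base),
                            key=lambda p: math.dist(base, p))[:k]
        nb = rng.choice(neighbours)
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append([b + gap * (q - b) for b, q in zip(base, nb)])
    return synthetic


# Hypothetical 2-feature DDoS samples (minority class), e.g. scaled packet rate and size.
minority = [[1.0, 2.0], [1.5, 1.8], [2.0, 2.5], [1.2, 2.2], [1.8, 2.0], [1.4, 2.6]]
synthetic = smote(minority, n_new=20, k=3)
print(len(synthetic), synthetic[0])
```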

Expanding on these approaches, B. Gupta et al. [93] proposed a distributed optimization system for IoT attack detection using FL combined with the Siberian Tiger Optimization (STO) algorithm. In their framework, the server first optimizes the CNN model’s hyperparameters using STO, balancing exploration and exploitation to minimize a global loss function, and then distributes these optimized parameters to clients for local training. Finally, the server aggregates the local updates to refine the global model iteratively. Moreover, F. Hendaoui et al. [94] introduced FLADEN, a comprehensive FL framework for anomaly detection that constructs a diverse, real-world threat intelligence dataset, updates the federated learning library for efficient resource allocation, and achieves detection accuracies exceeding 99.9% while preserving data privacy.

The choice of algorithms in IDSs depends on the specific environment and constraints. Neural networks excel at handling large data volumes and detecting complex patterns, while Random Forest is robust in noisy data scenarios. Support Vector Machines (SVMs) are particularly effective for binary classification and high-dimensional problems, and genetic algorithms optimize feature selection, improving detection efficiency. Hybrid systems often combine these methods with traditional techniques, such as signature-based approaches, or with advanced methods such as the discrete wavelet transform (DWT) for multiresolution analysis, as in several of the studies reviewed in this section.

Traditional AI techniques in IDSs primarily depend on supervised learning models, which require extensive labeled datasets and often fail to generalize to new threats. As highlighted in Section III.B, many IDS datasets suffer from imbalances and lack diversity. To overcome these challenges, Generative AI methods such as VAEs and GANs have been developed to generate synthetic data that simulates a wider range of attack scenarios and compensates for dataset imbalances.

The synthetic data generation algorithm using GANs for IoT IDSs employs an adversarial training paradigm in which two neural networks compete to improve the realism of generated samples. First, both a generator (G) and a discriminator (D) are initialized with random weights. During each training iteration, the discriminator is updated k times on batches of real IoT traffic and generator-produced samples, while a gradient-penalty term (WGAN-GP) is enforced to stabilize learning. Next, the generator is trained to “fool” the discriminator by producing increasingly realistic data. Periodically, quality is assessed using the Fréchet Inception Distance (FID), which measures the distance between the feature distributions of real and generated samples, together with a coverage metric that checks whether all attack classes are represented. Training stops once pre-specified quality thresholds or the maximum iteration count are reached, yielding a generator capable of producing high-quality synthetic data that improves the diversity and balance of IDS training sets. Algorithm 2 outlines the general WGAN-GP training procedure for generating synthetic IoT intrusion data.

Recent advances in IoT security demonstrate the application of Large Language Model architectures to radio frequency fingerprinting (RFFI) for device identification and authentication. Gao et al. [95] developed a BERT-LightRFFI framework that uses knowledge distillation to transfer RFF feature extraction capabilities from a pre-trained BERT model to lightweight networks suitable for 6G edge devices, achieving 97.52% accuracy in LoRa networks under multipath fading and Doppler shift conditions. Similarly, Zheng et al. [96] proposed a dynamic knowledge distillation approach using a modified GPT-2 architecture (RFF-LLM) with proximal policy optimization for UAV individual identification, achieving 98.38% accuracy with only 0.15 million parameters in complex outdoor environments. These works demonstrate how Transformer-based architectures can be effectively adapted for wireless security applications through specialized training on I/Q signal data rather than natural language. In parallel, diffusion models have emerged as a new generative paradigm for synthesizing highly realistic data distributions, offering potential for IDS training and augmentation beyond the capabilities of GANs and VAEs.

In addition to the core training loop, Algorithm 2 relies on several helper functions. SampleBatch(D, m) draws a minibatch of m real samples from dataset D uniformly at random. Sample(p(z), m) returns m independent noise vectors drawn from the distribution p(z). Uniform(0, 1, m) generates a vector of m scalars sampled uniformly in [0, 1], which are used to interpolate between real and fake samples. CalculateFID(x_eval, D_real) computes the Fréchet Inception Distance between the generated sample set x_eval and the real dataset D_real, providing a measure of distributional similarity. Finally, CalculateCoverage(x_eval, D_real) evaluates what fraction of the attack classes present in D_real also appear at least once in x_eval, ensuring diverse class coverage.
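The two quality checks can be sketched as follows. The FID here is a simplified variant computed directly on feature vectors, assuming diagonal covariances: the standard FID fits full-covariance Gaussians to Inception-network embeddings, and for tabular traffic data some other feature extractor would have to play that role. Under the diagonal assumption, the trace term Tr(Σ₁ + Σ₂ − 2(Σ₁Σ₂)^½) reduces to the sum of squared differences of per-feature standard deviations. For coverage, the sketch assumes generated samples carry attack-class labels (for instance, from a conditional generator); the function names are hypothetical.

```python
from statistics import fmean, pstdev


def frechet_distance_diag(real, fake):
    """Fréchet distance between Gaussians fitted to two sample sets (diagonal covariances).

    ||mu_r - mu_f||^2 + sum_j (sigma_r_j - sigma_f_j)^2
    """
    dims = range(len(real[0]))
    mean_term = sum((fmean(r[j] for r in real) - fmean(f[j] for f in fake)) ** 2
                    for j in dims)
    std_term = sum((pstdev([r[j] for r in real]) - pstdev([f[j] for f in fake])) ** 2
                   for j in dims)
    return mean_term + std_term


def coverage(fake_labels, real_labels):
    """Fraction of attack classes in the real data that appear at least once in the synthetic data."""
    real_classes = set(real_labels)
    return len(real_classes & set(fake_labels)) / len(real_classes)


# Hypothetical flow features: (duration in s, bytes per packet).
real = [[0.1, 200.0], [0.3, 180.0], [0.2, 220.0]]
fake = [[0.2, 210.0], [0.25, 190.0], [0.15, 205.0]]
print(frechet_distance_diag(real, fake))
print(coverage(["dos", "scan"], ["dos", "scan", "mitm", "dos"]))  # 2 of 3 classes covered
```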

For instance, Rahman et al. [97] developed the SYN-GAN framework, which generated synthetic datasets for training IDS models, achieving 90% accuracy on the UNSW-NB15 dataset and 100% on the BoT-IoT dataset. This approach not only addresses issues like false data and outliers but also enriches the training data, thereby enhancing the robustness of IDSs against previously unseen threats.

| Algorithm 2: WGAN-GP Synthetic Data Generation for IDS |
|---|
| Inputs: Iterations (N), minibatch size (m), real dataset (D_real), noise distribution (p(z)), learning rates (η_G, η_D), discriminator steps per iteration (k), gradient-penalty weight (λ), quality-evaluation frequency (eval_freq), number of samples for quality evaluation (eval_size), FID threshold for early stopping (threshold_FID), coverage threshold for early stopping (threshold_coverage), size of the final synthetic dataset (target_size) |
| Output: Trained generator network G* and final synthetic dataset D_syn |
| 1. Initialize generator G with random weights |
| 2. Initialize discriminator D with random weights |
| 3. for t = 1 to N do |
| 4. for j = 1 to k do |
| 5. x_real ← SampleBatch(D_real, m) |
| 6. z ← Sample(p(z), m) |
| 7. x_fake ← G(z) |
| 8. ε ← Uniform(0, 1, m) |
| 9. x̂ ← ε × x_real + (1 − ε) × x_fake |
| 10. L_D ← Mean(D(x_fake)) − Mean(D(x_real)) + λ × Mean((‖∇_x̂ D(x̂)‖₂ − 1)²) |
| 11. D ← D − η_D × ∇_D L_D |
| 12. end for |
| 13. z′ ← Sample(p(z), m) |
| 14. L_G ← −Mean(D(G(z′))) |
| 15. G ← G − η_G × ∇_G L_G |
| 16. if t mod eval_freq = 0 then |
| 17. z_eval ← Sample(p(z), eval_size) |
| 18. x_eval ← G(z_eval) |
| 19. FID_t ← CalculateFID(x_eval, D_real) |
| 20. coverage_t ← CalculateCoverage(x_eval, D_real) |
| 21. if FID_t < threshold_FID and coverage_t > threshold_coverage then |
| 22. break |
| 23. end if |
| 24. end if |
| 25. end for |
| 26. z_final ← Sample(p(z), target_size) |
| 27. D_syn ← G(z_final) |
| 28. return G, D_syn |
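Steps 8–10 of Algorithm 2 (random interpolation between real and fake samples, followed by the gradient-penalized critic loss) can be illustrated without a deep-learning framework by using a toy critic whose input gradient is known in closed form. The quadratic critic D(x) = Σⱼ aⱼxⱼ² + bⱼxⱼ is a hypothetical stand-in for a neural network. The sketch computes only the loss L_D; the parameter updates in steps 11 and 15 would normally rely on automatic differentiation.

```python
import math
import random


def critic(params, x):
    # Toy critic D(x) = sum_j a_j x_j^2 + b_j x_j (hypothetical, chosen for its closed-form gradient).
    a, b = params
    return sum(aj * xj * xj + bj * xj for aj, bj, xj in zip(a, b, x))


def critic_input_grad(params, x):
    # Gradient of D with respect to its input x: 2 a_j x_j + b_j.
    a, b = params
    return [2 * aj * xj + bj for aj, bj, xj in zip(a, b, x)]


def wgan_gp_critic_loss(params, x_real, x_fake, lam=10.0, rng=random):
    """L_D = Mean(D(x_fake)) - Mean(D(x_real)) + lam * Mean((||grad D(x_hat)||_2 - 1)^2)."""
    m = len(x_real)
    penalty = 0.0
    for xr, xf in zip(x_real, x_fake):
        eps = rng.random()  # one interpolation coefficient per (real, fake) pair
        x_hat = [eps * r + (1 - eps) * f for r, f in zip(xr, xf)]
        g_norm = math.sqrt(sum(g * g for g in critic_input_grad(params, x_hat)))
        penalty += (g_norm - 1.0) ** 2
    wasserstein = (sum(critic(params, xf) for xf in x_fake) / m
                   - sum(critic(params, xr) for xr in x_real) / m)
    return wasserstein + lam * penalty / m


params = ([0.1, -0.2], [0.5, 0.3])          # hypothetical critic parameters (a, b)
x_real = [[1.0, 0.5], [0.8, 0.7]]            # hypothetical normalized traffic features
x_fake = [[0.2, 0.1], [0.4, 0.3]]
print(wgan_gp_critic_loss(params, x_real, x_fake, rng=random.Random(0)))
```

A critic whose input gradient has unit norm everywhere incurs no penalty, which is exactly the 1-Lipschitz behaviour the penalty term encourages.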

Recent studies have further advanced this line of work by proposing deep generative architectures tailored to IoT environments. C. Qian et al. [98] propose RGAnomaly, a reconstruction-based GAN that combines an autoencoder and a variational autoencoder within a dual-transformer architecture, specifically designed for multivariate time series anomaly detection in IoT systems. Their model effectively extracts and fuses temporal and metric features, outperforming prior approaches such as MAD-GAN and OmniAnomaly across four benchmark datasets.

Similarly, D. Hamouda et al. [99] introduce FedGenID, a federated IDS architecture based on conditional GANs (cGANs) that performs data augmentation across distributed industrial IoT nodes. Their system enhances detection accuracy, particularly under non-IID data distributions and zero-day attacks, surpassing traditional federated methods by 10% in high-privacy scenarios. In a complementary domain, Y. Jin et al. [100] present HSGAN-IoT, a semi-supervised GAN framework for hierarchical IoT device classification. Although not an IDS per se, its ability to identify unseen devices based on traffic patterns contributes to the early detection of anomalous or spoofed behaviors in networked environments.

While these models show promising results, they share several limitations. First, the use of deep generative models often entails a significant computational cost, making real-time deployment in constrained IoT devices challenging. Second, most approaches require careful alignment between synthetic and real data distributions to avoid overfitting or detection bias. Finally, the stability of GAN training remains a concern, particularly in unsupervised or federated settings where data heterogeneity is high.

Moreover, incorporating XAI techniques not only clarifies the decision-making process of AI-based IDSs in IoT but also enhances system reliability by providing interpretable insights into which features drive the model’s predictions. For instance, Han and Chang [79] introduced the XA-GANomaly model, which fuses adaptive semi-supervised learning with SHAP-based explanations to improve anomaly detection in dynamic network environments. On the generative side, Li et al. [78] demonstrated that a hybrid VAE-WGAN model could generate synthetic samples tailored to rare attack types, significantly boosting detection performance on imbalanced datasets.

In addition, Arafah et al. [101] improved feature representation by combining a denoising autoencoder with a Wasserstein GAN to generate realistic synthetic attacks across datasets like NSL-KDD and CIC-IDS2017, while Sharma et al. [10] highlighted the integration of XAI techniques such as SHAP and Local Interpretable Model-Agnostic Explanations (LIME) to provide interpretable results in IDS models, fostering trust and reliability in AI-driven cybersecurity systems.

Building on these approaches, recent studies have further advanced the application of XAI in IDSs for IoT. S.B. Hulayyil et al. [102] presented an explainable AI-based intrusion detection framework explicitly designed for IoT systems. Their framework integrates ML classifiers with XAI techniques to offer real-time, interpretable explanations for detected vulnerabilities (e.g., those related to Ripple20), thereby enabling security analysts to understand and fine-tune model behavior more effectively.

While SHAP-based explanations (see Sections III-B, III-C, and III-D) significantly improve transparency, they impose non-trivial computational overhead that may limit real-time applicability on resource-constrained IoT devices, highlighting a key challenge for practical deployments.
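The source of this overhead is visible in the exact Shapley-value definition that SHAP approximates: for n features, each attribution averages the model's marginal change over all 2ⁿ⁻¹ coalitions of the other features. The brute-force sketch below follows that definition, assuming the common baseline convention in which features outside a coalition take a reference value; the scoring function `toy_ids_score` is hypothetical. Practical SHAP explainers use sampling or model-specific shortcuts precisely to avoid this enumeration.

```python
from itertools import combinations
from math import factorial


def exact_shapley(model, x, baseline):
    """Exact Shapley values for one prediction by enumerating every feature coalition.

    Features outside a coalition are set to their baseline value. Cost grows as
    n * 2^(n-1) pairs of model evaluations, which is why exact computation is rare.
    """
    n = len(x)

    def value(coalition):
        return model([x[j] if j in coalition else baseline[j] for j in range(n)])

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


calls = 0


def toy_ids_score(z):
    # Hypothetical anomaly score over 4 traffic features, with one interaction term.
    global calls
    calls += 1
    return 0.8 * z[0] + 0.5 * z[1] * z[2] - 0.3 * z[3]


phi = exact_shapley(toy_ids_score, [1.0, 2.0, 0.5, 3.0], [0.0] * 4)
print([round(p, 3) for p in phi], calls)  # [0.8, 0.25, 0.25, -0.9] 64
```

Even this four-feature example needs 64 model calls; a flow-level model with dozens of features would make exact attribution infeasible on an IoT device.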

Complementarily, A. Gummadi et al. [103] conducted a systematic evaluation of white-box XAI methods, such as Integrated Gradients (IG), Layer-wise Relevance Propagation (LRP), and Deep-SHAP, using comprehensive metrics (descriptive accuracy, sparsity, stability, efficiency, robustness, and completeness). Their results underscore the importance of these metrics to quantify the quality of explanations in IoT anomaly detection contexts.

By grounding these advanced techniques in the specific deficiencies identified in current IDS datasets, namely imbalance, limited representation of rare threats, and inadequate simulation of real-world traffic, Generative AI and XAI not only improve detection accuracy and reduce false positives but also offer practical solutions to these concrete data-related challenges. This reinforces the applicability of these technologies in addressing the critical gaps detailed in Section III.B.

3.5. IDS Application Fields

The main fields of IDS implementation identified in the evaluated articles include Industry 4.0, medical and healthcare services, smart cities and homes, and agriculture, as illustrated in Figure 12. These sectors are pivotal to economic growth, public health, and food security, making the protection of their infrastructure and data from cyber threats imperative. The deployment of IDSs in these fields ensures not only operational continuity but also compliance with regulatory standards, protection of sensitive data, and resilience against evolving threats.

In industrial manufacturing environments, IDSs play a crucial role in protecting Industrial Control Systems (ICS), which form the backbone of power grids, water treatment facilities, and production lines. Past cyberattacks targeting industrial systems, such as the notorious Stuxnet malware, have demonstrated the potentially catastrophic consequences of compromised industrial infrastructure. The implementation of IDSs in these environments ensures operational continuity, reduces downtime caused by cyberattacks, and minimizes the economic impact of disruptions.

Several researchers have made significant contributions to IDS solutions for industrial applications. Y. Saheed et al. [104] propose a dimensionality reduction mechanism using an autoencoder that integrates a DCNN and an LSTM for network data analysis in ICS. This methodology enables an IDS that does not require detailed prior information about the system topology, facilitating its deployment in critical infrastructures, and it demonstrates high accuracy and robustness against attacks in real environments.

X. Yu et al. [4] introduced the Edge Computing-based anomaly detection algorithm (ECADA), which efficiently detects anomalies from both single-source and multi-source time series in industrial environments, enhancing detection accuracy while simultaneously reducing the computational load on cloud data centers. This improvement not only strengthens detection capabilities but also promotes industrial sustainability through more efficient resource utilization.

Alireza Zohourian et al. [67] proposed IoT-PRIDS, a lightweight, packet-representation-based intrusion detection framework designed for general IoT deployments. IoT-PRIDS trains exclusively on benign network traffic, without resorting to complex machine-learning models, and operates in a host-based configuration, delivering near-real-time detection of complex attack patterns with minimal false alarms. Its low computational footprint and reliable performance make it especially well suited to resource-constrained or safety-critical environments, including industrial control systems. K. Ramana et al. [105] presented an intelligent IDS for IoT-assisted wireless sensor networks that employs a whale optimization algorithm for hyperparameter optimization, substantially improving detection accuracy while reducing false positives in industrial environments.
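The whale optimization algorithm (WOA) mentioned for [105] is a population-based metaheuristic: candidate solutions either encircle the best one found so far, explore around a random peer, or spiral towards the best ("bubble-net" move), with a coefficient a that shrinks from 2 to 0 to shift from exploration to exploitation. The sketch below follows those standard update rules with scalar A and C per whale, a common simplification; it is not the configuration used in [105], and the objective is a hypothetical surrogate standing in for validation error as a function of two continuous hyperparameters.

```python
import math
import random


def whale_optimization(objective, bounds, n_whales=20, n_iter=100, b=1.0, seed=0):
    """Minimise `objective` over a box using the Whale Optimization Algorithm (WOA)."""
    rng = random.Random(seed)

    def clip(pos):
        return [min(max(p, lo), hi) for p, (lo, hi) in zip(pos, bounds)]

    whales = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_whales)]
    best = list(min(whales, key=objective))
    best_f = objective(best)

    for t in range(n_iter):
        a = 2.0 - 2.0 * t / n_iter  # decreases linearly from 2 towards 0
        for k, x in enumerate(whales):
            r1, r2 = rng.random(), rng.random()
            A, C = 2 * a * r1 - a, 2 * r2
            p, l = rng.random(), rng.uniform(-1, 1)
            if p < 0.5:
                # |A| < 1: encircle the best whale (exploit); otherwise follow a random whale (explore).
                target = best if abs(A) < 1 else whales[rng.randrange(n_whales)]
                new = [tj - A * abs(C * tj - xj) for tj, xj in zip(target, x)]
            else:
                # Logarithmic spiral ("bubble-net") move around the best whale.
                new = [abs(bj - xj) * math.exp(b * l) * math.cos(2 * math.pi * l) + bj
                       for bj, xj in zip(best, x)]
            whales[k] = clip(new)
            f = objective(whales[k])
            if f < best_f:
                best, best_f = list(whales[k]), f
    return best, best_f


def surrogate_error(h):
    # Hypothetical stand-in for validation error over (log10 learning rate, log10 C).
    return (h[0] + 2.0) ** 2 + (h[1] - 1.0) ** 2


best_h, best_err = whale_optimization(surrogate_error, bounds=[(-5, 0), (-3, 3)])
print([round(v, 2) for v in best_h], best_err)
```

In a real IDS pipeline each objective evaluation would train and validate a model, so the population size and iteration count directly set the tuning cost.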

Further advancing the field, Mirdula S. et al. [106] present a deep-learning-based anomaly detection framework for IoT-integrated smart buildings, leveraging Manufacturer Usage Description (MUD) profiles to dynamically monitor device behavior and detect network-level anomalies. Validated on real smart-building traffic, it achieves high detection accuracy (>99%) with a lightweight design suitable for heterogeneous IoT deployments. Fengyuan Nie et al. [47] contributed their innovative M2VT-IDS architecture, which adapts seamlessly to dynamic and distributed industrial networks, significantly enhancing anomaly detection capabilities and operational efficiency.
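A MUD profile (standardized in RFC 8520) declares which communications an IoT device is expected to use, so traffic outside that envelope is a natural anomaly signal. The sketch below shows only that profile-conformance check, using a heavily simplified in-memory stand-in for a MUD file; real MUD files are JSON documents built on YANG access-control lists, and the deep-learning component of [106] is not represented. The device name, hosts, and ports are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    device: str
    direction: str   # "from-device" or "to-device"
    remote: str      # remote host name or address
    protocol: str    # "tcp" or "udp"
    port: int


# Hypothetical, heavily simplified stand-in for MUD profiles: for each device, the
# set of (direction, remote, protocol, port) communications it is expected to use.
PROFILES = {
    "thermostat-01": {
        ("from-device", "api.vendor.example", "tcp", 443),
        ("from-device", "pool.ntp.org", "udp", 123),
    },
}


def check_flow(flow):
    """Return True if the flow conforms to the device's profile, False if anomalous."""
    allowed = PROFILES.get(flow.device)
    if allowed is None:
        return False  # device without a profile: treat its traffic as anomalous
    return (flow.direction, flow.remote, flow.protocol, flow.port) in allowed


print(check_flow(Flow("thermostat-01", "from-device", "api.vendor.example", "tcp", 443)))  # True
print(check_flow(Flow("thermostat-01", "from-device", "198.51.100.9", "tcp", 23)))        # False
```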

K. Shalabi et al. [44] conducted a systematic review of blockchain-based IDSs/IPSs for IoT networks, covering work published between 2017 and 2022 in industrial and healthcare settings. Their analysis highlights how blockchain strengthens data integrity, decentralization and scalability of threat response, and points out open challenges in resource-constrained deployments.

In the healthcare sector, the integration of IDSs is vital for safeguarding the confidentiality and security of sensitive medical data while ensuring the integrity of connected medical devices [76]. H. Alamro et al. [107] developed the BHS-ALOHDL system, which leverages blockchain technology to enhance data security and facilitate intrusion detection in healthcare environments. In evaluations on ToN-IoT and CICIDS-2017 datasets, it achieved up to 99.31% detection accuracy (with comparably high precision, recall, F1-score and AUC) while maintaining lower execution and transaction-mining times than competing PoW-based approaches. By preventing unauthorized access and safeguarding patient information, these IDS solutions ensure the reliability and trustworthiness of medical services.

For smart homes and cities, IDS technologies protect critical infrastructure and user privacy from an ever-growing range of cyber threats. For example, E. Anthi et al. [108] designed a three-layer supervised IDS for smart home IoT devices, achieving F-measure scores of 96.2%, 90.0% and 98.0% on profiling, anomaly detection and attack classification tasks. Sarwar et al. [109] presented an anomaly-detection framework for smart homes using classifiers such as Random Forest, Decision Tree and AdaBoost, reporting perfect precision, recall and F1 on the UNSW-BoT-IoT benchmark.

In the agricultural sector, IDS technologies are optimizing productivity and securing systems against cyber threats that could disrupt food supply chains. S. K. Smmarwar et al. [82] propose a novel three-phase Deep Malware Detection framework, DMD-DWT-GAN, specifically designed for IoT-based smart agriculture systems. First, extracted features such as opcodes, bytecode, call logs, executable files, and strings are converted into binary form and then concatenated into 8-bit sequences that are mapped directly to pixel intensities, producing grayscale “visualizations” of each malware sample (which are subsequently normalized, colorized, and resized to 32 × 32 pixels to standardize input). Next, a Discrete Wavelet Transform decomposes each image into Approximation (Ac) and Detail (Dc) coefficients, which are fused with a GAN (the generator processes Dc while the discriminator processes Ac) to enhance discriminative malware patterns. Finally, a lightweight Convolutional Neural Network classifies the malware family in real time. Evaluated on both the IoT-malware and Malimg benchmark datasets, this IDS achieves 99.99% accuracy, precision, recall, and F1 score, outperforming existing state-of-the-art methods while maintaining a low prediction time of 9 s.
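The first two phases of this pipeline (bytes mapped to grayscale pixels, then a wavelet split into approximation and detail coefficients) can be sketched in pure Python. The sketch uses a single-level orthonormal 2-D Haar transform as one concrete choice of DWT; the wavelet family used in [82] is not stated here, and the normalization, colorization, resizing, and GAN fusion steps are omitted. The input bytes are a hypothetical stand-in for extracted malware features.

```python
def bytes_to_image(data, width):
    """Map each byte (0-255) to one grayscale pixel, row by row, zero-padding the last row."""
    padded = data + bytes((-len(data)) % width)
    return [list(padded[r:r + width]) for r in range(0, len(padded), width)]


def haar_dwt2(img):
    """Single-level 2-D orthonormal Haar DWT of an image with even height and width.

    Returns the approximation band and a tuple of the three detail bands
    (band naming conventions such as LH/HL differ between libraries).
    """
    approx, vert, horiz, diag = [], [], [], []
    for r in range(0, len(img), 2):
        rows = ([], [], [], [])
        for c in range(0, len(img[0]), 2):
            a, b = img[r][c], img[r][c + 1]
            d, e = img[r + 1][c], img[r + 1][c + 1]
            rows[0].append((a + b + d + e) / 2)  # local average: approximation
            rows[1].append((a + b - d - e) / 2)  # top row minus bottom row
            rows[2].append((a - b + d - e) / 2)  # left column minus right column
            rows[3].append((a - b - d + e) / 2)  # diagonal difference
        for band, row in zip((approx, vert, horiz, diag), rows):
            band.append(row)
    return approx, (vert, horiz, diag)


sample = bytes(range(256)) * 4            # hypothetical stand-in for extracted malware bytes
image = bytes_to_image(sample, width=32)  # 1024 bytes -> 32 x 32 grayscale image
approx, details = haar_dwt2(image)
print(len(approx), len(approx[0]))        # 16 16
```

Because the transform is orthonormal, the total energy of the four bands equals that of the image, so the split reorganizes the information rather than discarding it.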

Despite major technological strides, IDSs across these sectors, including industrial intrusion detection systems (IIDSs), still face critical hurdles. The lack of unified standards limits interoperability and complicates integration, while many solutions demand heavy computational power and energy, making them ill-suited for constrained environments. As networks and data volumes expand, scalability becomes more difficult to maintain; high false-positive rates can overwhelm operators, whereas false negatives may leave dangerous threats undetected, jeopardizing production, patient safety, or infrastructure. Overcoming these issues will require lightweight, adaptive, and highly accurate IDS solutions tailored to each sector’s needs, fully compatible with existing systems, and optimized for efficient resource use.

Deployment Case Studies

To illustrate practical applications of IDSs in IoT, we summarize three real-world pilot implementations across industrial, healthcare, and smart-city/smart-home domains.

Table 5 presents each deployment’s context, the core IDS technology used, key performance metrics, and the main lessons learned.

In the smart manufacturing pilot, the choice of algorithms reflected both the diversity of data sources and the operational requirements. CNNs analyzed thermal and visual imagery to detect subtle mechanical anomalies, while HMMs modeled temporal dynamics in sensor readings. VAEs compressed high-dimensional signals, with SVMs providing robust classification on the reduced feature space. This multi-algorithm strategy shows that industrial environments require complementary techniques for different data modalities and that sustained performance relies on continuous retraining as plant conditions evolve.
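The HMM component can be illustrated with the scaled forward algorithm, which computes how likely an observed sensor sequence is under a model of normal operation; unusually low likelihood per step then serves as an anomaly score. The two-state model, its probabilities, and the discretized vibration levels below are hypothetical and are not taken from the pilot.

```python
import math


def hmm_log_likelihood(obs, start, trans, emit):
    """Scaled forward algorithm: log P(obs | HMM) for a discrete-observation HMM.

    start[i]    = P(first state = i)
    trans[i][j] = P(next state = j | current state = i)
    emit[i][o]  = P(observation o | state i)
    """
    n = len(start)
    alpha = [start[i] * emit[i][obs[0]] for i in range(n)]
    log_lik = 0.0
    for t in range(len(obs)):
        if t > 0:
            alpha = [sum(alpha[i] * trans[i][j] for i in range(n)) * emit[j][obs[t]]
                     for j in range(n)]
        scale = sum(alpha)
        if scale == 0.0:
            return float("-inf")  # sequence impossible under this model
        log_lik += math.log(scale)
        alpha = [a / scale for a in alpha]  # rescale to avoid numerical underflow
    return log_lik


# Hypothetical 2-state model of a machine's vibration sensor
# (states: steady, loaded; observations: 0 = low, 1 = medium, 2 = high).
START = [0.8, 0.2]
TRANS = [[0.9, 0.1], [0.2, 0.8]]
EMIT = [[0.7, 0.25, 0.05], [0.2, 0.6, 0.2]]


def anomaly_score(obs):
    # Average negative log-likelihood per step: higher means less typical.
    return -hmm_log_likelihood(obs, START, TRANS, EMIT) / len(obs)


normal = [0, 0, 1, 0, 0, 1, 1, 0]
suspicious = [2, 2, 2, 2, 2, 2, 2, 2]
print(round(anomaly_score(normal), 2), round(anomaly_score(suspicious), 2))
```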

In the cloud-based healthcare case, the IDS design had to balance classification accuracy with stringent data security requirements in a medical IoT context. Neural networks were employed to model the nonlinear patterns in ECG signals and device traffic, offering the flexibility needed for both arrhythmia detection and intrusion identification. Their performance was enhanced through a Hybrid Tempest optimization process, which integrated multiple search strategies to fine-tune network parameters and avoid local minima, a key factor when working with heterogeneous hospital data. Complementary feature extraction techniques were introduced to ensure efficiency: statistical descriptors captured variability across patients, while spectral features reduced computational load during real-time monitoring. This layered strategy reflects the dual challenge of healthcare IoT—delivering precise diagnostics while protecting sensitive information—and demonstrates that robust anomaly detection in medical environments depends on careful algorithmic integration rather than reliance on a single model.

In the smart city case, the IDS was designed to manage the massive flow of data generated by connected infrastructures such as traffic systems, energy grids, and home devices. Rather than relying solely on centralized monitoring, detection was pushed to fog and edge nodes so that anomalies could be identified close to their source, reducing response latency and network overhead. Random Forest models provided fast, reliable classification of diverse traffic patterns, while edge analytics ensured scalability across heterogeneous devices. This deployment illustrates how IDSs in smart environments act as a distributed nervous system—filtering malicious activity in real time, preserving service continuity, and protecting both public infrastructure and private households from evolving cyber threats.

Taken together, these pilots illustrate how different IDS approaches align with context-specific needs. In industrial plants with strict uptime requirements, hybrid combinations of VAEs, HMMs, and CNNs, supported by edge AI, prove effective for handling diverse signals and ensuring real-time response, while explainable AI methods are needed to foster operator trust. In healthcare, neural IDSs optimized with metaheuristics and, prospectively, federated learning frameworks provide accuracy while respecting privacy constraints. In municipal or smart-home networks, rule-based IDS engines like Suricata remain valuable when augmented with anomaly detection for broader coverage. Looking forward, large language model architectures applied to radio-frequency fingerprinting represent a promising option for wireless IoT and UAV identification, while generative models such as GANs, VAEs, and diffusion models can create realistic attack scenarios to support training where data scarcity persists.

Beyond these documented deployments, several emerging application scenarios demonstrate the expanding scope of IoT-IDS implementations: in healthcare, hospitals could adopt federated IDSs so that multiple institutions collaboratively train models without sharing raw patient data, while blockchain ensures integrity of alerts. In industrial contexts, edge-based IDSs can be placed close to PLCs to deliver sub-100 ms responses that prevent cascading failures, with cloud coordination for long-term adaptation. In agriculture, lightweight IDSs tailored to sensor networks could intercept denial-of-service attempts that risk disrupting irrigation or food supply chains. In smart cities, hybrid blockchain–ML IDSs may secure video surveillance and transport systems, providing tamper-evident alerts and ensuring scalable policy enforcement across distributed fog nodes.

3.6. Open Research Questions

The development of sophisticated and adaptive ML and DL algorithms is crucial for addressing rapidly evolving cyber threats in IoT environments. IDSs must move beyond static models by incorporating continuous learning frameworks, such as reinforcement and online learning, and emerging approaches like meta-learning and few-shot learning, which enable adaptation to novel threats with minimal labeled data. For example, a smart-home IDS must adapt rapidly when new IoT appliances are introduced, while an industrial IoT deployment may require online learning to handle unforeseen attacks without interrupting operations.
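Online learning, in its simplest form, means updating the model one labeled sample at a time instead of retraining from scratch. The sketch below uses per-sample stochastic gradient descent on a logistic-regression detector and simulates the smart-home case: after a new appliance appears, its attack pattern is initially missed and then learned from a stream of labeled samples. The class name, the two binary features, and the learning rate are hypothetical.

```python
import math


class OnlineLogisticIDS:
    """Per-sample SGD logistic regression that keeps learning after deployment."""

    def __init__(self, n_features, lr=0.1, l2=1e-4):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = lr
        self.l2 = l2

    def predict_proba(self, x):
        z = sum(wi * xi for wi, xi in zip(self.w, x)) + self.b
        z = max(-30.0, min(30.0, z))  # clamp to avoid overflow in exp
        return 1.0 / (1.0 + math.exp(-z))

    def learn_one(self, x, y):
        """One SGD step on the log-loss for a single labeled sample (y in {0, 1})."""
        err = self.predict_proba(x) - y
        self.w = [wi - self.lr * (err * xi + self.l2 * wi) for wi, xi in zip(self.w, x)]
        self.b -= self.lr * err
        return err


ids = OnlineLogisticIDS(n_features=2, lr=0.5)
# Phase 1 (hypothetical): known attacks show up in feature 0 only.
for _ in range(200):
    ids.learn_one([1.0, 0.0], 1)
    ids.learn_one([0.0, 0.0], 0)
p_before = ids.predict_proba([0.0, 1.0])  # attack pattern of a newly added appliance
# Phase 2: labeled samples of the new pattern arrive and the model keeps learning.
for _ in range(200):
    ids.learn_one([0.0, 1.0], 1)
    ids.learn_one([0.0, 0.0], 0)
p_after = ids.predict_proba([0.0, 1.0])
print(round(p_before, 3), round(p_after, 3))
```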

Efficient management of large data volumes remains a critical challenge. Distributed and edge computing techniques can enhance real-time processing by bringing data analysis closer to source devices, thus reducing latency and network overhead. Deploying edge AI models and combining DL methods with dimensionality reduction (e.g., PCA or autoencoders) can help optimize resource usage in environments with limited computational power. In vehicular IoT networks, for instance, low-latency IDS decisions are required to prevent cascading failures across connected cars, highlighting the importance of lightweight edge-based detection.
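As a dependency-free illustration of dimensionality reduction for lightweight detection, the sketch below fits the top principal components of benign feature vectors by power iteration and scores new vectors by their reconstruction error. The feature choice, `k`, and iteration count are assumptions; in practice a library implementation (e.g., scikit-learn's `PCA`) would normally replace the hand-written linear algebra:

```python
import math
import random


def _mean(rows):
    d = len(rows[0])
    return [sum(r[j] for r in rows) / len(rows) for j in range(d)]


def _covariance(rows, mu):
    """Sample covariance matrix of equal-length feature vectors."""
    d, n = len(mu), len(rows)
    cov = [[0.0] * d for _ in range(d)]
    for r in rows:
        c = [r[j] - mu[j] for j in range(d)]
        for i in range(d):
            for j in range(d):
                cov[i][j] += c[i] * c[j] / (n - 1)
    return cov


def _top_eigenvectors(cov, k, iters=200, seed=0):
    """Leading k eigenvectors of a symmetric matrix via power iteration with deflation."""
    rng = random.Random(seed)
    d = len(cov)
    a = [row[:] for row in cov]
    vecs = []
    for _ in range(k):
        v = [rng.random() + 0.1 for _ in range(d)]
        for _ in range(iters):
            w = [sum(a[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in w)) or 1.0
            v = [x / norm for x in w]
        lam = sum(v[i] * sum(a[i][j] * v[j] for j in range(d)) for i in range(d))
        # Deflation: remove the found component so the next pass finds the next one.
        a = [[a[i][j] - lam * v[i] * v[j] for j in range(d)] for i in range(d)]
        vecs.append(v)
    return vecs


class PCADetector:
    """Scores samples by how poorly the top-k principal components reconstruct them."""

    def __init__(self, k=1):
        self.k = k

    def fit(self, benign_rows):
        self.mu = _mean(benign_rows)
        self.components = _top_eigenvectors(_covariance(benign_rows, self.mu), self.k)
        return self

    def reconstruction_error(self, x):
        c = [xi - mi for xi, mi in zip(x, self.mu)]
        recon = [0.0] * len(c)
        for v in self.components:
            proj = sum(ci * vi for ci, vi in zip(c, v))
            recon = [ri + proj * vi for ri, vi in zip(recon, v)]
        return math.sqrt(sum((ci - ri) ** 2 for ci, ri in zip(c, recon)))
```

Only the mean and `k` component vectors need to be stored on the edge device. If benign traffic shows, say, byte counts scaling with packet counts, a sample that breaks that correlation receives a large error even when each feature is individually within range.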

Standardization is vital to overcoming current IDS limitations. The lack of consistent, validated datasets and the diversity of IDS implementations hamper comparability and replicability. Establishing global repositories and universal communication protocols would improve interoperability across diverse IoT devices and facilitate the development of robust evaluation frameworks. A concrete example is the disparity between public datasets (e.g., CICIDS, UNSW-NB15) and the traffic observed in real smart city deployments, where encrypted protocols and heterogeneous devices predominate, which underscores the urgency of standardized benchmarks.
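One small, concrete piece of the comparability problem is that public datasets use different label vocabularies, so cross-dataset results cannot be compared class by class. A minimal sketch of mapping dataset-specific labels onto a shared taxonomy; the taxonomy itself (`benign`, `dos`, `recon`, and so on) is hypothetical, and the label strings listed are a partial subset (CIC-IDS2017 is used as the CICIDS example) that should be checked against the exact releases in use:

```python
from typing import Dict, Iterable, List, Set

# Hypothetical shared taxonomy. Only some labels of each dataset are listed;
# verify the exact strings against the dataset release being used.
COMMON_TAXONOMY: Dict[str, Dict[str, str]] = {
    "cicids2017": {
        "BENIGN": "benign",
        "DoS Hulk": "dos",
        "DDoS": "dos",
        "PortScan": "recon",
        "FTP-Patator": "brute_force",
        "SSH-Patator": "brute_force",
        "Bot": "botnet",
    },
    "unsw_nb15": {
        "Normal": "benign",
        "DoS": "dos",
        "Reconnaissance": "recon",
        "Exploits": "exploit",
        "Shellcode": "exploit",
    },
}


def harmonize(dataset: str, labels: Iterable[str]) -> List[str]:
    """Map raw dataset labels to the shared taxonomy."""
    mapping = COMMON_TAXONOMY[dataset]
    out = []
    for raw in labels:
        label = raw.strip()  # CSV exports often carry stray whitespace
        # Keep unknown labels visible instead of silently dropping or merging them.
        out.append(mapping.get(label, f"unmapped:{label}"))
    return out


def shared_classes(a: str, b: str) -> Set[str]:
    """Classes on which models evaluated on the two datasets can be compared directly."""
    return set(COMMON_TAXONOMY[a].values()) & set(COMMON_TAXONOMY[b].values())
```

Surfacing unmapped labels explicitly is a design choice: a benchmark that quietly folds unknown classes into "benign" or drops them would reintroduce exactly the inconsistency that standardization is meant to remove.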

The integration of blockchain technology offers a decentralized, immutable framework for securing IDS operations. By ensuring tamper-proof logging through distributed ledgers and automating response mechanisms via smart contracts, blockchain enhances data integrity and resilience, particularly in critical sectors such as industrial automation and healthcare. In parallel, FL, especially when combined with transfer learning (TL) [113] and optimized for resource-constrained devices, provides promising avenues for decentralized training and improved scalability. Techniques such as adaptive compression and edge computing further support these decentralized models. For example, in healthcare IoT, FL could enable hospitals to collaboratively train IDS models without exposing sensitive patient data, while blockchain ensures the integrity and auditability of alerts across institutions.
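The federated setting described above can be made concrete with the FedAvg aggregation rule: each client trains locally and shares only model weights, which the server averages in proportion to each client's sample count. A minimal sketch, assuming a logistic-regression detector and two hypothetical hospitals; it omits secure aggregation, adaptive compression, and transfer learning:

```python
import math
from typing import List, Sequence, Tuple


def local_update(weights: Sequence[float], data: Sequence[Tuple[Sequence[float], int]],
                 lr: float = 0.1, epochs: int = 5) -> List[float]:
    """Client-side training: SGD on logistic regression over the client's private data.

    Only the updated weights are returned; the raw records never leave the client.
    """
    w = list(weights)
    for _ in range(epochs):
        for x, y in data:
            z = sum(wi * xi for wi, xi in zip(w, x))
            p = 1.0 / (1.0 + math.exp(-z))  # predicted attack probability
            for i in range(len(w)):
                w[i] -= lr * (p - y) * x[i]  # gradient step on the log-loss
    return w


def fedavg(client_weights: Sequence[Sequence[float]], client_sizes: Sequence[int]) -> List[float]:
    """Server-side FedAvg aggregation: average client weights, weighted by local sample counts."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("expected one weight vector per client")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    global_w = [0.0] * dim
    for w, n in zip(client_weights, client_sizes):
        if len(w) != dim:
            raise ValueError("all clients must share the same model shape")
        for i in range(dim):
            global_w[i] += w[i] * n / total
    return global_w


if __name__ == "__main__":
    # Two hypothetical hospitals, each holding private (features, label) records.
    hospital_a = [([1.0, 0.2], 1), ([0.1, 0.9], 0)]
    hospital_b = [([0.9, 0.1], 1), ([0.2, 1.0], 0), ([1.1, 0.3], 1)]
    global_w = [0.0, 0.0]
    for _ in range(3):  # three federated rounds
        updates = [local_update(global_w, d) for d in (hospital_a, hospital_b)]
        global_w = fedavg(updates, [len(hospital_a), len(hospital_b)])
    print(global_w)
```

Weighting by sample count keeps a small client from pulling the global model as strongly as a large one; on highly non-IID IoT data this weighting is itself a tuning decision rather than a given.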

The urgency and complexity of these challenges vary significantly across deployment contexts, directly informing our temporal roadmap. Short-term priorities (2024–2026) focus on enhancing detection accuracy in constrained environments. For instance, agricultural IoT networks require sub-50 ms anomaly detection with false-positive rates below 1% to prevent irrigation system disruptions, while smart manufacturing demands 99.99% uptime during adaptive learning phases. Medium-term objectives (2027–2029) center on scalable interoperability, where standardized APIs must accommodate the projected 75 billion IoT devices by 2030. Long-term goals (2030–2035) emphasize autonomous security ecosystems capable of self-evolution, exemplified by smart city infrastructures that can autonomously detect, classify, and mitigate novel attack vectors without human intervention while maintaining citizen privacy through zero-knowledge proof mechanisms.
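Short-term targets such as "sub-50 ms detection with false-positive rates below 1%" can be turned into an explicit acceptance check for a pilot. The sketch below assumes the latency target applies to the 99th-percentile per-sample detection latency and that labels are binary (0 = benign, 1 = attack); both are interpretive assumptions, and the function names are hypothetical:

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Budget:
    max_latency_ms: float = 50.0  # "sub-50 ms" detection target
    max_fpr: float = 0.01         # "below 1%" false-positive target
    percentile: float = 99.0      # assumption: the latency target applies to p99


def nearest_rank_percentile(values: Sequence[float], q: float) -> float:
    """Nearest-rank percentile, q in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q * len(ordered) / 100))
    return ordered[rank - 1]


def false_positive_rate(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """FP / (FP + TN), computed over benign samples only (label 0)."""
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return fp / (fp + tn) if fp + tn else 0.0


def meets_budget(latencies_ms: Sequence[float], y_true: Sequence[int],
                 y_pred: Sequence[int], budget: Optional[Budget] = None) -> dict:
    """Report the percentile latency, the FPR, and whether both targets are met."""
    budget = budget or Budget()
    lat = nearest_rank_percentile(latencies_ms, budget.percentile)
    fpr = false_positive_rate(y_true, y_pred)
    return {
        "latency_ms": lat,
        "fpr": fpr,
        "ok": lat < budget.max_latency_ms and fpr < budget.max_fpr,
    }
```

Checking a tail percentile rather than the mean matters for the irrigation example: an average of 10 ms says little if one decision in a hundred takes 80 ms.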

Explainable AI (XAI) is essential for IDS transparency: methods such as SHAP or LIME deliver real-time, feature-level explanations that help administrators validate decisions and fine-tune configurations. Equally critical are extensive real-world trials in smart cities, industrial networks, and similar settings, which expose deployment challenges beyond the lab and foster industry–academia collaboration on robust, reliable systems. For instance, deploying IDS pilots in municipal IoT infrastructures could reveal practical challenges such as bandwidth constraints in surveillance cameras or interoperability issues in heterogeneous sensor gateways. Finally, achieving scalability requires hybrid signature- and anomaly-based detection, augmented by generative models (VAEs, GANs) that synthesize diverse attack scenarios. Looking ahead, the integration of generative AI and large language models (LLMs) promises to make IoT security more adaptive and intelligent: LLMs can parse unstructured device logs and network traffic to flag novel threats, optimize lightweight encryption and authentication protocols for constrained sensors, automatically generate and verify smart contracts in blockchain-backed IDS frameworks, and enable intuitive natural-language access-control interfaces, laying the groundwork for a secure, self-evolving IDS ecosystem [114]. Data balancing and cleaning techniques, such as SMOTE for class imbalance and denoising autoencoders for missing or noisy values, remain vital for handling these issues without degrading performance.
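SMOTE balances classes by creating synthetic minority samples on the line segments between a minority sample and one of its nearest minority neighbours. A minimal, simplified sketch (base samples are drawn at random rather than iterated in order), assuming numeric feature vectors for a rare attack class; in practice the `SMOTE` class from imbalanced-learn would normally be used, and the function below is illustrative only:

```python
import math
import random
from typing import List, Optional, Sequence


def smote(minority: Sequence[Sequence[float]], n_synthetic: int, k: int = 5,
          seed: Optional[int] = None) -> List[List[float]]:
    """Generate n_synthetic samples by interpolating between minority samples
    and randomly chosen members of their k nearest minority neighbours."""
    if len(minority) < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)
    synthetic = []
    for _ in range(n_synthetic):
        i = rng.randrange(len(minority))
        x = minority[i]
        # k nearest minority neighbours of x (Euclidean distance), excluding x itself.
        neighbours = sorted(
            (j for j in range(len(minority)) if j != i),
            key=lambda j: math.dist(x, minority[j]),
        )[:k]
        nb = minority[rng.choice(neighbours)]
        gap = rng.random()  # uniform in [0, 1): position along the segment x -> nb
        synthetic.append([xi + gap * (ni - xi) for xi, ni in zip(x, nb)])
    return synthetic


if __name__ == "__main__":
    # Hypothetical two-feature vectors for a rare attack class.
    rare_attacks = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    for row in smote(rare_attacks, n_synthetic=3, k=2, seed=0):
        print(row)
```

Because new points always lie between existing minority samples, SMOTE cannot invent genuinely novel attack behaviour; it should be applied to the training split only, so that synthetic points never leak into evaluation data.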

Future developments will hinge on seamlessly integrating AI with traditional methods. Transfer learning can reduce reliance on large training datasets, while AI-driven hyperparameter optimization and architecture search can deliver real-time adaptability with minimal computational overhead, together enabling deployment in resource-constrained IoT environments. Equally important will be interdisciplinary collaboration among cybersecurity experts, software engineers, and data scientists to create holistic IDS solutions capable of evolving alongside new threats; the long-term success of such solutions will depend on this synergy.

Figure 13 illustrates our research roadmap across three time horizons, linking each open question to targeted implementation, optimization, and scale-up activities. These time horizons were determined through a comprehensive analysis of the papers reviewed in this survey, systematically evaluating the current maturity level, reported limitations, and implementation challenges of each technique. Short-term horizons (2024–2026) encompass technologies with demonstrated proof-of-concept implementations that still require optimization for IoT constraints, such as federated learning approaches that show promise but face communication overhead challenges. Medium-term horizons (2027–2029) include techniques showing theoretical feasibility with limited practical validation, like blockchain-based IDS frameworks that require scalability improvements. Long-term horizons (2030–2035) represent emerging paradigms with significant research gaps, such as quantum-resistant protocols and fully autonomous security ecosystems. This timeline reflects the consistent patterns observed across the reviewed literature regarding development cycles from research prototype to practical deployment in resource-constrained IoT environments.

Another promising but still underexplored direction is intrusion detection at the physical layer of wireless IoT systems. Since most IoT devices communicate over broadcast wireless channels (e.g., Wi-Fi, ZigBee, LoRa, NB-IoT, Bluetooth), they are inherently vulnerable to spoofing, jamming, and signal injection attacks. IDS solutions at this layer can exploit radio-frequency (RF) features such as signal strength, phase, and device-specific fingerprints to detect anomalies and impersonation attempts. However, research in this area remains limited compared to network- and application-layer IDS. Expanding future work to incorporate physical-layer intrusion detection would strengthen IoT security holistically by addressing threats that arise before higher-layer protocols can provide protection.
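As a deliberately crude illustration of physical-layer detection, the sketch below learns a per-device received-signal-strength (RSSI) profile and flags frames whose RSSI is implausible for the claimed sender, a common symptom of spoofing from a different location. RSSI is only one of the RF features mentioned above (phase and device fingerprints are not modelled), it fluctuates with mobility and multipath, and the class name, `z_threshold`, and `min_samples` values are assumptions:

```python
import statistics
from collections import defaultdict


class RssiProfiler:
    """Flags frames whose RSSI is inconsistent with the claimed sender's learned profile."""

    def __init__(self, z_threshold: float = 3.0, min_samples: int = 20):
        self.z_threshold = z_threshold  # deviation (in standard deviations) treated as suspicious
        self.min_samples = min_samples  # enrollment readings needed before judging a device
        self.history = defaultdict(list)

    def enroll(self, device_id: str, rssi_dbm: float) -> None:
        """Record an RSSI reading (dBm) known to come from the legitimate device."""
        self.history[device_id].append(rssi_dbm)

    def is_suspicious(self, device_id: str, rssi_dbm: float) -> bool:
        samples = self.history.get(device_id, [])  # .get avoids creating empty entries
        if len(samples) < self.min_samples:
            return False  # not enough enrollment data; defer to other checks
        mu = statistics.fmean(samples)
        sigma = statistics.stdev(samples)
        if sigma == 0:
            return rssi_dbm != mu
        return abs(rssi_dbm - mu) / sigma > self.z_threshold


if __name__ == "__main__":
    profiler = RssiProfiler()
    for reading in [-60, -61, -59, -60, -62, -58] * 4:
        profiler.enroll("sensor-7", reading)
    print(profiler.is_suspicious("sensor-7", -61))  # consistent with the profile
    print(profiler.is_suspicious("sensor-7", -35))  # much stronger than usual
```

A fixed receiver sees a fairly stable RSSI from a stationary sensor, so a frame claiming that sensor's identity but arriving tens of dB stronger is worth a second look; this only raises a flag and would need to be combined with higher-layer evidence before any response.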