Abstract

While neural networks can solve complex geometric problems, as demonstrated by systems like AlphaGeometry, we have limited understanding of how they internally represent and reason about spatial relationships. In this work, we investigate how neural networks develop internal spatial understanding by training Graph Neural Networks and Transformers to predict point positions on a discrete 2D grid from geometric constraints that describe hidden figures. We show that both models develop interpretable internal representations that mirror the geometric structure of the problems they solve. Specifically, we observe that point embeddings self-organize into 2D grid structures during training, and during inference, the models iteratively construct the hidden geometric figures within their embedding spaces. Our analysis reveals how reasoning complexity correlates with prediction accuracy, and shows that models solve constraints through an iterative refinement process, which might resemble continuous optimization. We also find that Graph Neural Networks prove more suitable than Transformers for this type of structured constraint reasoning and scale more effectively to larger problems. These findings provide initial insights into how neural networks can develop structured understanding and contribute to their interpretability.

1. Introduction

While neural networks can solve complex spatial reasoning problems, we have limited understanding of the internal mechanisms they use to represent and manipulate geometric relationships. Several studies have demonstrated that autoregressive Transformers (e.g., Refs. [1,2,3]) and Graph Neural Networks (GNNs; e.g., Refs. [4,5]) are able to learn to solve such problems, but it is not clear what mechanisms they discover.

A notable example is the work by [6] who developed a system named AlphaGeometry, which was able to solve problems that appeared on the International Mathematical Olympiad. The system contains an autoregressive Transformer that takes as input a sequence of tokens describing the problem in the language of geometric relations and is trained to predict auxiliary points useful for finding a proof for the given statement. AlphaGeometry achieves impressive performance, but it operates as a black box, providing no insights into how the model internally represents geometric relationships or constructs spatial understanding.

A human dealing with such a problem would try to form a mental image of the construction used in the problem (most likely by first drawing it). This raises the natural question of whether neural networks (NNs) could also form such a “mental image”, one that reflects the spatial configuration of points described in the problem. We can also ask whether an autoregressive Transformer is a suitable model for such a problem, and whether a GNN, which by construction removes many symmetries of the input (variable renaming and constraint reordering), could be easier to train and more scalable.

In this contribution, we take a closer look at these questions using a simplified approach. We focus on purpose-built geometric constraint satisfaction problems (CSPs). This setting allows for a detailed analysis of neural geometric reasoning that is difficult to achieve in complex systems like AlphaGeometry. We create a simple CSP language with several geometric constraints (relations) whose domain is the set of points in a discrete 2D grid of a given size. Each instance of this CSP uniquely describes a hidden figure whose points are the solution of the instance. As the discrete grid is finite, we can assign a token (when using a Transformer) or a class (when using a GNN) to each grid point and train the model to predict the points of the hidden figure with a cross-entropy loss.

Our analysis shows that both models develop interpretable internal representations that mirror the geometric structure of the problems they solve. A low-dimensional projection of the embeddings corresponding to individual grid points shows that they organize themselves into the 2D grid that they represent. We also show that, during inference, the embeddings of unknown variables evolve into a configuration that reflects the hidden figure described by the problem. Additionally, we find that GNNs are more suitable than Transformers for structured constraint reasoning tasks and scale more effectively to larger problems.

This controlled approach provides solid mechanistic insights into how neural networks develop spatial understanding and contributes to a broader understanding of structured reasoning in neural systems.

The rest of the paper is structured as follows. In Section 2, we review related work. Section 3 describes our experimental setup, including the two types of architectures and the process for generating problems. In Section 4, we present the results of our experiments for both models, analyze the emergence of embedding structure, examine the iterative solution process, and analyze failure modes for different problem complexities. We discuss the results and limitations of our work in Section 5. Additional supporting results can be found in Appendices A–J.

2. Related Work

2.1. Reasoning About Geometry

Geometric reasoning has been a focus of research for decades [7], with Wu’s method [8] considered state-of-the-art until recently. In 2024, AlphaGeometry [6] surpassed Wu’s method on International Mathematical Olympiad problems by combining symbolic deduction with a neural language model that predicts auxiliary constructions from a problem statement expressed in geometric relations. Our work is partially inspired by AlphaGeometry, but we study a much simpler setup in which the model predicts points in a 2D grid that satisfy the relations used to describe the construction.

Other neural approaches include visual reasoning methods that extract geometric primitives from diagrams using deep learning for construction problems [9]. Our work differs by focusing on abstract constraint satisfaction rather than visual diagram processing, enabling detailed analysis of internal spatial representations.

We were also partially inspired by the work of [10], which explains how GNNs can learn to solve Boolean CSPs, specifically deciding the satisfiability of Boolean formulas. We adapt their setup to the domain of geometric CSPs. In their work, they show empirical evidence that the GNN learns to act as a first-order solver of a semidefinite programming (SDP) relaxation of MAX-SAT. MAX-SAT is an optimization version of SAT, and in the SDP relaxation, vectors assigned to variables are optimized in order to minimize an objective that captures how many constraints are satisfied.

In the domain of geometric CSPs, ref. [11] developed an algorithm for the automatic construction of diagrams for geometry problems. The algorithm works by optimizing coordinates of points by gradient descent to maximize an objective function which captures how well the geometric constraints of the problem are satisfied. We emphasize that their algorithm was manually constructed and not learned.

2.2. Spatial Reasoning in Neural Networks

Another related area of research is focused on the ability of NNs to conduct spatial reasoning in a broader sense, such as through spatial navigation, planning and interaction with physical objects.

Several papers explore spatial reasoning in the context of Large Language Models (LLMs) such as ChatGPT (gpt-4-turbo-2024-04-09) or Llama (Llama 3-8B) [12]. In [1,3], the authors point out various failure modes in the planning and navigation capabilities of a large pool of LLMs. In [2], the authors developed a prompting technique called Visualization of Thought (VoT), which significantly improved LLM performance in visual navigation tasks. The study most similar to our work is [13], in which the authors probe small Transformer models trained for maze navigation in order to understand the representations they use to solve the task. Unlike the previously mentioned works, ref. [14] uses reinforcement learning to train a language model based on a recurrent NN (RNN) for text-based spatial reasoning.

Apart from language models, other papers also explore spatial reasoning in the context of GNNs. In [4], the authors developed a GNN for spatial navigation, which utilizes a memory mechanism for long-range information storage, while ref. [5] introduced the SpatialSim benchmark together with a GNN that is trained to recognize spatial configurations. Our work contributes to this area by partially elucidating the mechanism by which NNs are able to reason about geometric relations and by showing that GNNs which use a graph of relations/constraints and variables are much easier to train and scale.

3. Problems and Methods

To test the ability to reason about geometric relations, we develop a simple procedure that generates problems together with their solutions and allows us to control the difficulty and size of each problem. We test two types of models, GNNs and Transformers, on data generated with various generator settings.

3.1. Geometric Problem Generation

Our goal was to create a simple synthetic dataset that would enable us to study the mechanism that NNs use to reason about geometric relations. Specifically, we wanted to investigate whether neural networks develop internal representations that reflect the geometric structure of the problems they solve. In the auxiliary point prediction task, for which AlphaGeometry uses a language model, it is not clear, for example, whether the model takes the geometric nature of the constraints into account or treats the language of the problem abstractly, without any metric interpretation or grounding.

Therefore, we designed a generator for CSPs whose solutions can be interpreted as geometric figures described by the input constraints. The task of the model is to predict the positions of the points contained in the constraints. We simplify the problem so that the geometric figures lie on a discrete grid, and we can therefore treat the prediction of the positions as a classification task. We note that one can also treat the task as a regression and train the model to process figures with arbitrary 2D coordinates.

Our CSP uses four types of constraints: M, R, S, and T. The midpoint constraint M, applied to points A, B, C, says that the point B is the midpoint of the line segment given by A and C, and the reflection constraint R, applied to points A, B, C, D, says that points A, B give an axis of symmetry across which C and D are reflections of each other. The square constraint S says that points A, B, C, D form a square, and the translation constraint T says that the vector between one pair of its points is a translation of the vector between the other pair. In order to have unique solutions, we also need to fix several points to concrete positions. We achieve this with the additional constraint P, which states that a point A lies at a given grid position. For each constraint, a certain number of variables must be known so that the constraint can be uniquely resolved; we call them determining variables. Once these variables are fixed, the remaining variables, which we call dependent variables, are uniquely determined. For the constraint M, two variables are determining and one is dependent, i.e., if we know the positions of two variables, the last one can be resolved. For the constraint R, three variables are determining and one is dependent. For the constraint S, two variables are determining and two are dependent (i.e., we assume a fixed spatial ordering of the square vertices). For the constraint T, three variables are determining and one is dependent. Section 4.5 shows a visualization of the following geometric CSP instance:

where are known points and are unknown.
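To make the distinction between determining and dependent variables concrete, the following Python sketch computes the dependent point(s) of each constraint type from its determining points. It is only an illustration: the function names, the argument order, the choice of which variables are treated as determining, the pairing of vectors in the translation, and the counterclockwise vertex order of the square are all assumptions of this sketch and not necessarily the conventions of the generator.

```python
from fractions import Fraction

def resolve_midpoint(a, b):
    """M: B is the midpoint of segment AC. Given A and B, return C = 2B - A."""
    return (2 * b[0] - a[0], 2 * b[1] - a[1])

def resolve_translation(a, b, c):
    """T (assumed pairing): vector CD equals vector AB. Given A, B, C, return D."""
    return (c[0] + b[0] - a[0], c[1] + b[1] - a[1])

def resolve_square(a, b):
    """S: A, B, C, D are consecutive vertices (assumed counterclockwise). Return (C, D)."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    rx, ry = -dy, dx  # side AB rotated by 90 degrees counterclockwise
    return (b[0] + rx, b[1] + ry), (a[0] + rx, a[1] + ry)

def resolve_reflection(a, b, c):
    """R: D is the mirror image of C across the line through A and B (A != B)."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    # Exact projection parameter of C onto the axis, kept as a fraction.
    t = Fraction((c[0] - a[0]) * dx + (c[1] - a[1]) * dy, dx * dx + dy * dy)
    px, py = a[0] + t * dx, a[1] + t * dy  # foot of the perpendicular from C
    return (2 * px - c[0], 2 * py - c[1])
```

For example, under these assumptions `resolve_square((0, 0), (1, 0))` yields the two remaining vertices `(1, 1)` and `(0, 1)`. The reflected point is computed exactly and may have non-integer coordinates, in which case the configuration would not lie on the grid.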

Our generator creates problems that require deductive reasoning by ensuring the constraints form a dependency structure where some must be resolved before others. Attempting to solve all constraints simultaneously is not feasible because the dependent variables would be incorrectly determined. For example, a network might successfully create a square from four unknown points, but if some of those points are actually determined by previous translation or reflection constraints, the resulting square would be geometrically valid but positioned incorrectly within the overall figure. This dependency structure forces models to discover the proper reasoning sequence rather than solving constraints in isolation.

To create a single problem, we sample a random directed acyclic graph (DAG) with nodes corresponding to types of constraints and edges corresponding to dependencies. Then we add variables to the constraints so that each constraint within the graph can be uniquely resolved given that the parent constraints are already resolved (the parent constraints therefore need to contain the determining variables). To produce a problem with a unique solution, we fix the required number of variables appearing in each of the root constraints, which have no parents (i.e., an arbitrary two variables in the S constraint, three variables in the T constraint, etc.). We use the Z3 solver [15] to obtain the assignment for all other points. If the resulting figure is larger than the grid, we reject the problem; otherwise, we place it at a random position within the grid. The constraints with the fixed points are given as input to the model, and the positions of the remaining variables are given as labels.
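The two mechanical steps of this procedure, resolving constraints in dependency order and rejecting or randomly placing the figure, can be sketched in Python as follows. This is a simplified stand-in and not the actual generator: it replaces the Z3 solver with direct forward evaluation, and the dictionary layout (`rule`, `needs`, `gives`), the helper names, and the two example rules are hypothetical.

```python
import random

def resolve_in_dependency_order(constraints, known):
    """Repeatedly resolve every constraint whose determining variables are known."""
    values = dict(known)
    pending = list(constraints)
    while pending:
        still_pending, progressed = [], False
        for c in pending:
            if all(name in values for name in c["needs"]):
                values[c["gives"]] = c["rule"](*(values[n] for n in c["needs"]))
                progressed = True
            else:
                still_pending.append(c)
        if not progressed:
            raise ValueError("remaining constraints cannot be resolved")
        pending = still_pending
    return values

def place_on_grid(points, grid_size, rng):
    """Reject figures that do not fit; otherwise shift to a random grid position."""
    xs = [x for x, _ in points.values()]
    ys = [y for _, y in points.values()]
    width, height = max(xs) - min(xs) + 1, max(ys) - min(ys) + 1
    if width > grid_size or height > grid_size:
        return None  # figure is larger than the grid
    ox = rng.randint(0, grid_size - width) - min(xs)
    oy = rng.randint(0, grid_size - height) - min(ys)
    return {name: (x + ox, y + oy) for name, (x, y) in points.items()}

def translate(a, b, c):  # hypothetical rule: D = C + (B - A)
    return (c[0] + b[0] - a[0], c[1] + b[1] - a[1])

def extend(a, d):  # hypothetical rule: D is the midpoint of A and the result
    return (2 * d[0] - a[0], 2 * d[1] - a[1])

# The first constraint depends on D, so it can only be resolved after the second.
constraints = [
    {"rule": extend, "needs": ("A", "D"), "gives": "E"},
    {"rule": translate, "needs": ("A", "B", "C"), "gives": "D"},
]
figure = resolve_in_dependency_order(constraints, {"A": (0, 0), "B": (1, 0), "C": (0, 2)})
placed = place_on_grid(figure, grid_size=20, rng=random.Random(0))
```

The usage at the bottom shows why the order matters: the constraint listed first cannot be evaluated until the translation has produced D.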

By varying the number and types of constraints, the generator produces problems with different complexity levels and reasoning depths. This allows us to investigate whether models can solve problems harder than those seen during training by leveraging test-time scaling approaches such as increased iterations or resampling.

3.2. Models

In our experiments, we tested two types of architectures: GNNs and autoregressive Transformers.

3.2.1. Graph Neural Network

The architecture of the GNN is inspired by the model presented in [10] and originates in [16]. Similarly to works about Boolean satisfiability, it applies the same update rules repeatedly. It is a GNN operating on a bipartite graph with n variables and m constraints defined by Equations (1) and (2) below which are applied recurrently for several rounds. Equation (2) updates the embeddings of variables which are, after the last update, used to classify a given variable to a point within the grid. This is achieved by applying a linear layer on each variable embedding. As mentioned in Section 3.1, some variables need to be fixed to concrete points so that the problem has a unique solution.

The embeddings of known variables are initialized using an embedding layer that shares its weights with the final classification layer, i.e., both use the same trainable N × d weight matrix, where N is the number of grid positions and d is the embedding dimension. This design ensures a consistent representation of grid positions throughout the network (similarly to token embeddings sharing weights with the classification head in language models). The embeddings of known variables are not updated during message passing. The embeddings of unknown variables are initialized randomly, i.e., they are sampled uniformly from a unit hypercube, with each coordinate drawn independently. This random initialization enables resampling during inference, since each attempt can start from a different initial embedding.
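The weight sharing and the two initialization modes can be illustrated with a small pure-Python sketch. The helper names, the Gaussian initialization of the shared matrix, and the use of the interval [0, 1) for the hypercube are assumptions made for illustration only; a real implementation would use a deep learning framework with a trainable parameter.

```python
import random

def make_embedding(num_positions, dim, rng):
    """Shared N x d matrix used both for lookup and for classification."""
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_positions)]

def init_variable(embedding, known_index, rng):
    """Known variables copy a row of the matrix; unknown ones start at random."""
    if known_index is not None:
        return list(embedding[known_index])
    dim = len(embedding[0])
    return [rng.random() for _ in range(dim)]  # uniform in the unit hypercube

def classify(embedding, hidden):
    """Bias-free linear classifier tied to the embedding: one logit per grid position."""
    return [sum(w * h for w, h in zip(row, hidden)) for row in embedding]

rng = random.Random(0)
E = make_embedding(num_positions=16, dim=8, rng=rng)
known = init_variable(E, 5, rng)            # fixed point at grid index 5
attempt_1 = init_variable(E, None, rng)     # one random start for an unknown point
attempt_2 = init_variable(E, None, rng)     # a resample gives a different start
logits = classify(E, attempt_1)
```

Because the classifier reuses the rows of the same matrix, the logit for a grid position is the inner product between the current variable embedding and that position's embedding.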

The embeddings are updated after each message-passing iteration. Let n be the number of variables and m be the number of constraints. The update equations have the following form:

where is the matrix of stacked variable embeddings, is the matrix of stacked constraint embeddings, is the adjacency matrix that matches constraints to variables, is the adjacency matrix that selects variables for each constraint ( will have rows because there are four variables in each constraint; for constraints that have only three variables, we append a zero vector to preserve the shape), taking the constraint type and argument position into account, and denotes the operation that extracts and concatenates the embeddings of the four variables involved in each constraint in the correct order. is the set of constraint types. Each problem instance is represented by a bipartite graph containing the denotation of the constraint types, which is fully encoded by the matrix , from which the matrix can be easily determined.

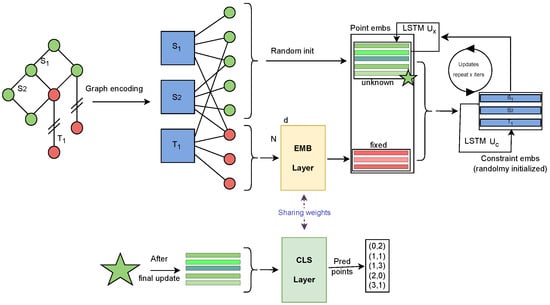

Equation (2) updates the embeddings of variables and Equation (1) updates the embeddings of the constraints. The latter equation is further indexed by c, reflecting the fact that we use multiple types of constraints, each of which requires a distinct update function. The update functions and () are in our case realized as LSTMs [17] parameterized by and , respectively. Technically, each LSTM also updates a cell state, which is omitted from the equations. In principle, these LSTMs could be replaced by simple RNNs, but we found LSTMs easier to optimize, and simple RNNs did not work well on our data; see Appendix A.4. A simplified schema of the GNN processing can be found in Figure 1.

Figure 1.

Solution process of the GNN model. The geometric problem is encoded as a bipartite graph with variable nodes (points) and constraint nodes. Red points represent fixed/known variables that maintain their initial embeddings from the embedding layer throughout the process. Green points represent unknown variables and blue nodes represent constraint embeddings, both initialized randomly. The embedding layer is represented as a matrix with dimensions N (corresponding to grid points, i.e., classes) by d (embedding dimension, ). During inference, unknown point embeddings and constraint embeddings are iteratively updated using the respective LSTMs: updates variable embeddings (Equation (2)) and updates constraint embeddings (Equation (1)) for a specified number of iterations. After the final update, unknown points are classified using a linear classification layer without bias that shares weights with the embedding layer. The classifier produces logits that are converted to probabilities via softmax to predict the final point positions.

The update of each embedding happens independently of the other embeddings, i.e., in Equation (2), each embedding of a variable (row in the matrix ) is updated independently by the same LSTM which takes as input the aggregated message from the constraints containing this particular variable. The aggregated message is simply the sum of the relevant constraint embeddings and it is realized by multiplying the constraint embedding matrix by the matrix . The LSTM which updates the constraint embeddings differs by the fact that the aggregated message is not obtained as a sum but as a concatenation of variable embeddings. The embeddings are concatenated in the order in which the variables appeared within the constraints. For example, for the constraint , we concatenate the embeddings of variables in that order. One can intuitively view the embeddings of variables as if they are representing the values of these variables (points) and embeddings of constraints as if they represent the information of what is needed to satisfy the constraint. For this reason, the function which updates the constraint embeddings cannot be permutation-invariant because different orders of the determining variables result in different values for the dependent variables.
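The difference between the two aggregation schemes can be illustrated with a short Python sketch. It covers only how the inputs to the update functions are formed; the LSTM updates themselves are not reproduced, and the function names are hypothetical. The zero padding for three-variable constraints follows the description given with the update equations.

```python
def aggregate_for_variable(constraint_embeddings):
    """Sum the embeddings of all constraints that contain the variable."""
    return [sum(values) for values in zip(*constraint_embeddings)]

def message_for_constraint(variable_embeddings, arity=4):
    """Concatenate variable embeddings in argument order, zero-padded to `arity` slots."""
    dim = len(variable_embeddings[0])
    padding = [[0.0] * dim for _ in range(arity - len(variable_embeddings))]
    return [x for emb in list(variable_embeddings) + padding for x in emb]

a, b, c = [1.0, 2.0], [3.0, 4.0], [5.0, 6.0]

# Summation ignores the order of its inputs.
sum_ab = aggregate_for_variable([a, b])
sum_ba = aggregate_for_variable([b, a])

# Concatenation keeps the argument order, so swapping arguments changes the message.
msg_abc = message_for_constraint([a, b, c])
msg_bac = message_for_constraint([b, a, c])
```

Here `sum_ab` and `sum_ba` are identical, whereas `msg_abc` and `msg_bac` differ, which is what allows the constraint update to distinguish the roles of its arguments.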

The embedding dimension d and the number of iterations are configurable parameters that we adjust based on problem complexity. Importantly, since the same update rules are applied recurrently, the number of iterations can be changed at test time without changing the model parameters. This architectural property makes it possible to allocate more computation to harder problems during inference. Other hyperparameters for training the GNN can be found in Table A1.

For clarity, Section 3.3 presents an illustrative example of how the GNN processes and solves a specific problem.

3.2.2. Autoregressive Transformer

The autoregressive Transformer model is based on the GPT-2 architecture developed by [18] with rotary embeddings [19]. It takes as input a sequence of tokens representing the problem together with a query for the variable we want to predict. For example, an input with just one constraint could have the following form: S ([0, 0] [0, 1] C D) ? D. This input queries the position of variable D within a square with two known points. The model reads this sequence of tokens (where the position of a point, such as [0, 0], corresponds to one token) and is trained to predict the token that corresponds to the correct position of variable D ( in this case). This means that from each CSP produced by the generator described in Section 3.1, we extract one sequence for each unknown point. After experimenting with the hyperparameters of the model, we set the number of layers to 6, the number of heads to 6, and the embedding dimension to 256. We also experimented with a recurrent application of one layer, as in [20], to more closely mimic the GNN, but having separate weights for each layer produced better results. Other hyperparameters of the model and of the training can be found in Table A1.
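The extraction of one query sequence per unknown point can be sketched in Python as follows. The textual layout mirrors the single-constraint example above; how several constraints are separated and the helper names are assumptions of this sketch, and the actual tokenizer is not reproduced.

```python
def point_token(value):
    """Render a known position as one bracketed token; keep variable names as-is."""
    return f"[{value[0]}, {value[1]}]" if isinstance(value, tuple) else value

def serialize(constraints, known):
    """Write each constraint as `TYPE (arg arg ...)`, substituting known positions."""
    parts = []
    for ctype, args in constraints:
        rendered = " ".join(point_token(known.get(name, name)) for name in args)
        parts.append(f"{ctype} ({rendered})")
    return " ".join(parts)

def build_queries(constraints, known):
    """Return one input sequence per unknown variable, each ending in `? <variable>`."""
    prefix = serialize(constraints, known)
    unknown = []
    for _, args in constraints:
        for name in args:
            if name not in known and name not in unknown:
                unknown.append(name)
    return [f"{prefix} ? {name}" for name in unknown]

queries = build_queries(
    constraints=[("S", ("A", "B", "C", "D"))],
    known={"A": (0, 0), "B": (0, 1)},
)
```

For this single square with two known points, the sketch produces two sequences, one querying C and one querying D, and the latter matches the example given above.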

3.3. GNN: Illustrative Example

To illustrate how the GNN works, we present a concrete example showing how it processes geometric constraints on a grid with an embedding dimension and iteration count . Consider the following constraint satisfaction problem:

where are known points fixed by position constraints P, and are unknown points to be predicted.

3.3.1. Problem Encoding and Dependencies

Each grid position maps to a unique index using . Therefore, the known points correspond to indices: , , . The problem forms a bipartite graph with eight point nodes and three constraint nodes, where the dependency structure requires sequential resolution: constraint determines point D, which enables constraint to determine points E and F, which finally allows constraint to determine points G and H.

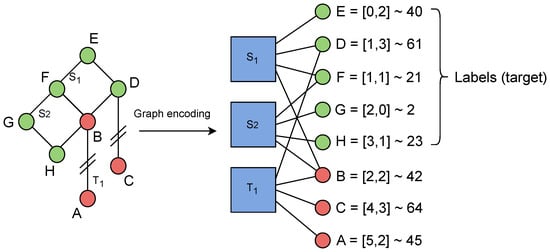

The point constraints P are not realized as separate constraint nodes in the bipartite graph, but rather as fixed embeddings taken directly from the embedding layer. Figure 2 illustrates this encoding and the target solution.

Figure 2.

Graph encoding of the illustrative example problem showing the bipartite structure with point nodes and constraint nodes, along with the point-to-index mapping for the 20 × 20 grid. Red points are fixed (known), while green points need to be determined by the model.

3.3.2. Embedding Initialization

The model uses a shared embedding matrix for both the embedding layer and final classifier. Known points are initialized with the fixed embeddings , , and corresponding to points A, B, and C, respectively, where denotes the i-th row of matrix . These embeddings remain unchanged throughout inference:

Unknown points are initialized with random vectors , sampled uniformly from the unit hypercube, and constraint embeddings are initialized as random vectors . These random vectors are used as the initial hidden states for the LSTM-based message passing. Specifically, for each unknown point i, we set the initial embedding as the hidden state , and similarly for each constraint c, we set . The LSTM cell states that and are initialized to zero. During inference, the hidden states and evolve over time according to the update equations.

3.3.3. Message Passing Dynamics

The update equations are repeated for 15 iterations. Our LSTM-based architecture maintains both hidden states and cell states, where the hidden states serve as the embeddings that flow through the message-passing network, while cell states maintain internal memory. The LSTM also produces a cell output at each iteration, but this output is discarded and only the hidden and cell states are retained.

For constraint updates (Equation (1)), each constraint type uses a specialized LSTM update function. The constraint embedding (LSTM hidden state) and cell state are updated as:

where is the concatenated message from variables participating in constraint c, and each constraint type has its own LSTM parameters: for Translation, for Square, for Reflection, and for Midpoint constraints. The LSTM returns both an output (which is discarded) and a tuple containing the new hidden state and cell state .

For the above example, the input message for constraint is the concatenated vector , where , , and are the fixed embeddings of the known points A, B, and C, respectively, and is the current hidden state of point D. Similarly, the messages and for constraints and are given by and , respectively. Each of these message vectors is passed to the corresponding constraint-specific LSTM update function .

For variable updates (Equation (2)), unknown point embeddings are updated using messages aggregated from all connected constraints. Each unknown variable i aggregates the sum of constraint embeddings from all connected constraints and passes this sum to the shared LSTM update function :

Here, denotes the set of constraint nodes connected to variable i, is the hidden state (embedding) of point i at time step t, and is its corresponding LSTM cell state.

In the illustrative example, point D receives messages from constraints and , resulting in an update input of . Point F receives messages from and . Points E, G, and H each receive a single constraint message: from for E, and from for G and H.

These aggregated messages are passed into the LSTM, which outputs the updated hidden state that becomes the new embedding of variable i.

3.3.4. Solution Recovery

After t iterations, the model predicts the final grid position of each unknown variable using a standard classification head. The final embedding is projected using the shared embedding matrix (no bias term) to produce logits:

The predicted index is obtained as , which is then mapped back to coordinates via , .

During training, we apply a cross-entropy loss over the logits , comparing predicted and ground truth indices for all unknown variables. The embedding matrix is shared with the embedding layer used for known variables, ensuring consistency between input encoding and prediction.
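The readout and loss for a single unknown variable can be sketched in plain Python as follows. The softmax, cross-entropy, and argmax are standard; the row-major index-to-coordinate convention in `index_to_xy` is an assumption of this sketch and may differ from the mapping used in the paper.

```python
import math

def softmax(logits):
    """Numerically stable softmax over the grid-position logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, target_index):
    """Negative log-probability assigned to the ground-truth grid index."""
    return -math.log(softmax(logits)[target_index])

def predict_index(logits):
    """Index of the largest logit (argmax)."""
    return max(range(len(logits)), key=lambda k: logits[k])

def index_to_xy(index, grid_width):
    """Assumed row-major layout: index = y * grid_width + x."""
    y, x = divmod(index, grid_width)
    return x, y

logits = [0.0, 2.0, 1.0, -1.0]
predicted = predict_index(logits)
loss_if_correct = cross_entropy(logits, 1)
loss_if_wrong = cross_entropy(logits, 0)
```

In this toy case the loss is smaller when the label coincides with the argmax, which is the behavior that training on the cross-entropy objective encourages.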

In our example, the correct predictions for points D–H are , , , , and , satisfying all constraints.

4. Experiments and Results

We evaluate both architectures on geometric constraint problems. We examine prediction accuracy, dataset complexity effects, embedding structure emergence, initialization strategies, solution dynamics, and failure modes.

4.1. Comparison of the Two Architectures

To compare the two architectures, we measure the accuracy of individual point prediction (point accuracy). In later experiments, we also report the accuracy in terms of correctly assigning all variables within the problem (complete accuracy).
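The two metrics can be written down as a short Python sketch. It assumes that predictions and labels are given per problem as dictionaries from variable names to grid indices, and that point accuracy is pooled over all unknown points in the dataset; both are assumptions made for illustration.

```python
def point_accuracy(predictions, labels):
    """Fraction of unknown points predicted correctly, pooled over all problems."""
    correct = sum(
        pred[name] == label[name]
        for pred, label in zip(predictions, labels)
        for name in label
    )
    total = sum(len(label) for label in labels)
    return correct / total

def complete_accuracy(predictions, labels):
    """Fraction of problems in which every unknown point is predicted correctly."""
    solved = sum(
        all(pred[name] == label[name] for name in label)
        for pred, label in zip(predictions, labels)
    )
    return solved / len(labels)

labels = [{"D": 5, "E": 7}, {"C": 1}]
predictions = [{"D": 5, "E": 8}, {"C": 1}]
```

With these toy values, two of the three points are correct but only one of the two problems is fully solved, so complete accuracy is the stricter of the two metrics.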

The experiments on the synthetically generated data show that the GNN performs significantly better than the Transformer, as expected. In Appendix G, we show that we were able to train the GNN on grid sizes up to points to a validation accuracy larger than . In comparison, the Transformer achieved an accuracy of only if we trained it on a grid size and limited the number of constraints to 2. If we trained the model on a more complex setting with the grid size and up to 6 constraints, it reached an accuracy of approximately .

For completeness, we also trained the Transformer model to find the assignments to variables using Chain-of-Thought (CoT) [21] by imitating a log from a simple solver. The solver resolves the constraints one by one in the topological order of the DAG of constraints mentioned in Section 3.1. Using this method of training, the Transformer learns to predict the positions of variables within a grid with approximately 50% point accuracy. We did not explore this direction further as reasoning with a CoT is orthogonal to reasoning in the embedding space on which we focus in this work. More details about the CoT experiment can be found in Appendix E.

Given the substantially better results achieved by the GNN on this problem setting, we conduct the main analysis of the embeddings with the GNN. Appendix D contains similar analysis and visualization for the Transformer (see Figure A8).

We also note that model accuracy can be further improved by leveraging multiple prediction attempts. Incorrect predictions often fall close to their true positions (as shown in Appendix C), and according to preliminary tests, sampling multiple different initial embeddings increases the chance of predicting the correct assignment.

4.2. GNN Performance Analysis

Given the GNN’s clear advantage over the Transformer for our CSPs, representable by bipartite graphs, we now analyze the GNN’s behavior in detail across multiple dimensions. We examine how the model performs under distribution shift, visualize the internal representations it develops, and identify failure modes related to problem complexity. The analysis uses our best-performing GNN configuration with an embedding dimension of 128, 15 message-passing iterations, and training procedures detailed in Appendix A. This systematic evaluation reveals both the capabilities and limitations of our geometric constraint solving approach on discrete grids.

4.2.1. Dataset Design and Complexity Distribution

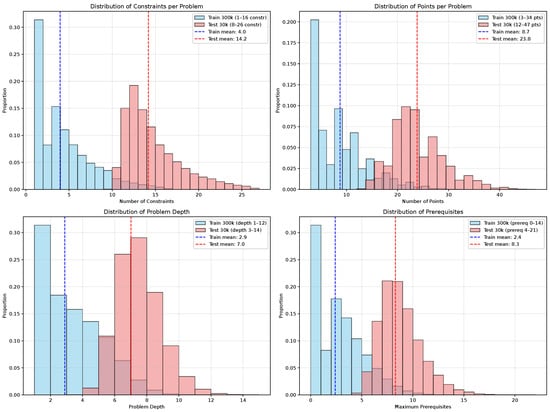

The training dataset contains problems with 1–16 geometric constraints (mean: ), 3–34 points (mean: ), and reasoning depths of 1–12 (mean: ). The test dataset was specifically designed to be more challenging, containing problems with 8–26 geometric constraints (mean: ), 12–47 points (mean: ), and reasoning depths of 3–14 (mean: ). Figure 3 illustrates these distribution differences across key complexity metrics.

Figure 3.

Deliberate distribution shift between training and test sets across complexity metrics. The harder test distribution enables analysis of model behavior under increased problem complexity, failure mode identification, and evaluation of test-time scaling approaches.

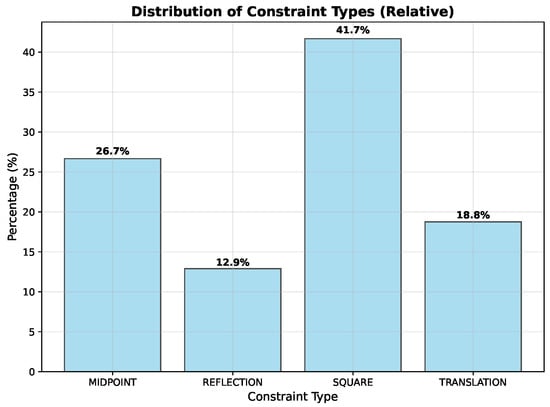

Our generator creates problems for specific grid sizes, and we focus on a grid as it provides a good balance. It is large enough to accommodate interesting geometric figures while remaining computationally tractable for detailed analysis, which required multiple re-runs. Our constraint set consists of four types, Square (S), Reflection (R), Midpoint (M), and Translation (T), with their relative distribution in the training dataset shown in Figure 4.

Figure 4.

Distribution of constraint types in the training dataset. Square constraints are most frequent (41.7%), followed by Midpoint (26.7%), Translation (18.8%), and Reflection (12.9%). The test dataset follows a very similar distribution, ensuring consistent constraint type representation across training and evaluation.

This choice of difficulty distributions allows us to study model behavior when problem complexity is increased beyond what the model encountered during training. The high validation accuracy achieved on the training distribution (% point accuracy, % complete problem accuracy using 10% of training data as validation) demonstrates that the GNN can effectively solve geometric constraint problems when sufficient training data is available. We used ( without validation) training examples. The size was determined through the scaling analysis outlined in Appendix G, which shows the sample complexity requirements for different grid sizes.

The deliberately harder test distribution provides a challenging evaluation setting, where the model achieves lower baseline accuracy, creating opportunities to study failure modes, reasoning depth effects, and test-time scaling approaches.

4.2.2. Performance Under Distribution Shift

Table 1 presents comprehensive performance results on our datasets. The model maintains good performance even under distribution shift, achieving % point accuracy and % complete accuracy on the harder test set with standard inference (15 iterations, 1 resample).

Table 1.

Performance of the model. The first row reports validation accuracy (on a separate dataset, using 1 resample). The remaining rows show results on a harder test set drawn from a different distribution, across different numbers of inference iterations (15, 23, Best) and resample counts (1 and 10). We report point-wise accuracy and complete accuracy.

The results demonstrate clear benefits from test-time scaling approaches. Increasing inference iterations from 15 to 23 improves complete accuracy from % to %. Using 10 resamples with different random initializations further boosts performance to % complete accuracy. We determined the optimal iteration count of 23 through the analysis shown in Figure A10, which also revealed a slight performance increase with multiple resamples.

The “Best” configuration can be understood as an oracle that knows, for each individual problem, the number of iterations (up to a maximum of 50) that yields the most solved points in the fewest iterations. This approach achieves a complete accuracy of % with single resampling and % with 10 resamples. With such an oracle, the model could in theory get very close to its performance on the validation set.
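The oracle selection can be sketched as follows. This is a minimal illustration, assuming a hypothetical per-problem record `correct_per_iter` that stores how many unknown points are correctly predicted after each iteration; the function name and the input format are illustrative and not taken from the released code.

```python
def best_iteration(correct_per_iter, max_iters=50):
    """Return (iteration, solved) for the earliest iteration with the most solved points.

    correct_per_iter[i] is the number of correctly predicted unknown points
    after iteration i + 1 (hypothetical input format).
    """
    window = correct_per_iter[:max_iters]
    best_solved = max(window)
    # list.index returns the first occurrence, i.e. the fewest iterations.
    best_iter = window.index(best_solved) + 1
    return best_iter, best_solved


trace = [0, 1, 3, 4, 4, 3, 4]
print(best_iteration(trace))  # (4, 4)
```

Because the selection uses the ground truth, it gives an upper bound on what a stopping rule could achieve rather than a deployable inference procedure.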

For the resampling strategy, we tested various counts but found the most significant improvement occurred when going from 1 to 5 resamples. We chose 10 resamples as a conservative buffer, since additional resamples beyond this point showed diminishing returns while increasing computational cost.

These findings suggest several important properties of the learned model. The improvement from additional iterations indicates that the GNN employs an iterative refinement process similar to continuous optimization methods. The benefits of resampling show that different random initializations of unknown variables can lead to different solution paths, with some being more effective for particular problem instances.

The model’s ability to generalize to problems with increased complexity beyond the training distribution is consistent with previous work that applied a very similar GNN architecture to SAT problems [22].

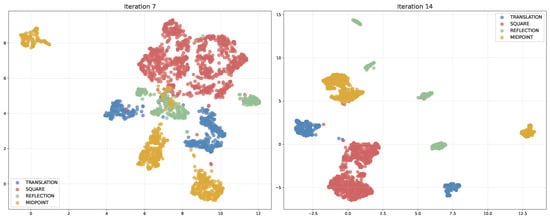

4.3. Visualizing the Embeddings of Individual Points

In the following text, we use the terms static embeddings and dynamic embeddings. By static embeddings we mean the embeddings of points of the grid which are used for initialization of known points and are shared with the classification head of both models. By dynamic embeddings we mean the embeddings of the unknown variables which are updated throughout the forward process.

Both types of models are trained to predict positions of unknown points within the instance. This is achieved by a linear layer which computes the logits for each point. As already mentioned, the weights of this layer are shared with the embedding layer, representing the position of known points.
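The weight sharing between the embedding layer and the classification head can be illustrated with a short NumPy sketch. It assumes a bias-free linear head and uses randomly filled embeddings for a 20 × 20 grid; the names `static_emb`, `init_known`, and `classify` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
num_points, dim = 400, 128  # 20 x 20 grid, 128-dimensional embeddings

# Static embeddings: one row per grid position (randomly filled here).
static_emb = rng.standard_normal((num_points, dim))


def init_known(point_ids):
    """Known points start from the static embedding of their grid position."""
    return static_emb[point_ids]


def classify(dynamic_emb):
    """Logits over grid positions: inner products with the same matrix (no bias assumed)."""
    return dynamic_emb @ static_emb.T


known = init_known(np.array([5, 42]))
logits = classify(known)
print(logits.shape)  # (2, 400)
print(logits.argmax(axis=1))
```

With tied weights, an embedding that coincides with a static row scores highest on its own grid position, so moving a dynamic embedding toward a static embedding directly raises the corresponding logit.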

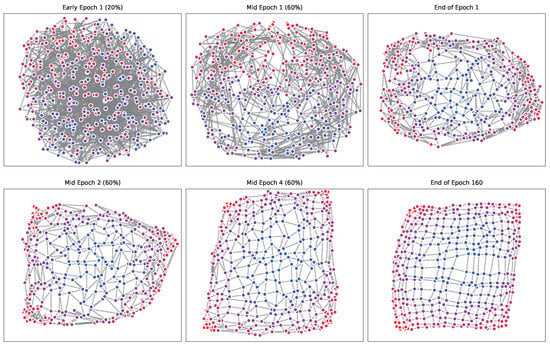

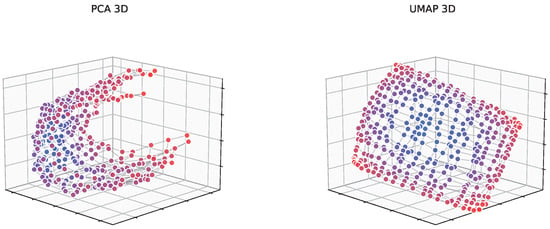

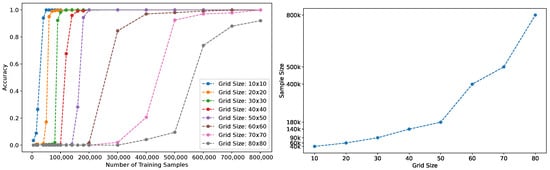

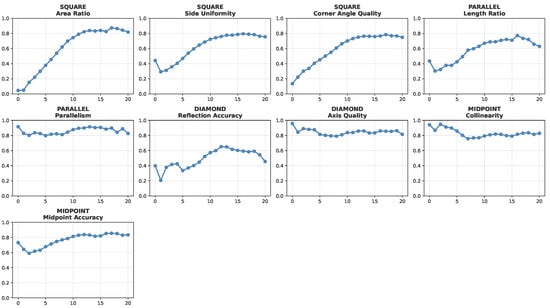

When visualizing a low-dimensional projection of the static embeddings corresponding to individual points, we found that they organize themselves into the 2D grid they represent. In Figure 5, we show how this organization emerges during training and how it is connected to the precision of the prediction (a three-dimensional projection is depicted in Appendix B).

Figure 5.

Emergence of spatial structure in static point embeddings during training on 20 × 20 grid. Colors indicate distance from the grid center, revealing how the model learns geometric relationships without explicit spatial supervision. UMAP projection of 128-dimensional embeddings clearly shows the progression from random initialization to an organized 2D grid-like structure, while PCA (Appendix B) points to the underlying curved manifold geometry. This self-organization demonstrates that geometric inductive biases emerge naturally from constraint satisfaction training.

We stress that the existence of the grid structure of the data domain and the existence of geometric figures related to constraints is given to a model only indirectly through the constraints and their solutions. The whole training dataset can be thought of as a set of constraints written in an unknown language, and the goal of training is to find a model for this language which will enable correct prediction. As the prediction is based on the similarity (given by the inner product) of the dynamic embeddings of variables and static embeddings of points, it is not that unexpected that the discovered model reflects the geometry behind this language.

Here we focus on point embeddings; for completeness, we provide some results about constraint embeddings in Appendix I. We show that constraint embeddings encode constraint types, geometric properties, satisfaction status, and temporal information to some extent.

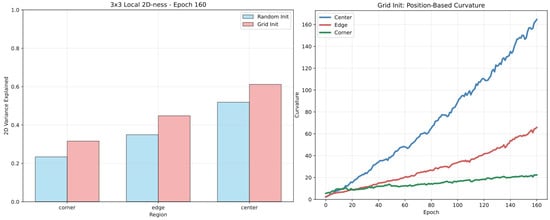

4.4. Network Initialization with Grid Structure

We investigated whether providing the model with an initial understanding of grid structure accelerates training convergence. Rather than initializing the shared embedding and classifier weights randomly, we can initialize them to reflect the geometric structure of the grid.

To provide the model with an initial geometric inductive bias, we initialize the embedding matrix (used for both known point embeddings and the final classification layer) using a rotated grid construction. We begin by assigning each of the N grid positions ( for a grid) its exact 2D coordinates and construct a matrix where , , and all other dimensions are zero. We then generate a random orthogonal matrix via QR decomposition of a matrix with entries sampled from a standard normal distribution, and define the initial embedding matrix as:

This rotation spreads the spatial coordinate information across all d dimensions while avoiding alignment with any particular coordinate axis. Following this initialization, training proceeds identically to the random case, with the weights in remaining fully trainable.
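The rotated grid construction can be sketched in NumPy as follows. The sketch assumes row-major ordering of grid positions and unscaled integer coordinates; the function name `rotated_grid_init` and the variable names are hypothetical, and any centering or scaling used in the actual experiments is not reproduced here.

```python
import numpy as np


def rotated_grid_init(grid_size, dim, seed=0):
    """Place exact 2D coordinates in the first two dimensions, then rotate."""
    rng = np.random.default_rng(seed)
    # Coordinates of every grid position, row-major order (an assumption).
    ys, xs = np.divmod(np.arange(grid_size * grid_size), grid_size)
    grid = np.zeros((grid_size * grid_size, dim))
    grid[:, 0] = xs
    grid[:, 1] = ys
    # Random orthogonal matrix from the QR decomposition of a Gaussian matrix.
    q, _ = np.linalg.qr(rng.standard_normal((dim, dim)))
    return grid @ q


emb = rotated_grid_init(grid_size=20, dim=128)
print(emb.shape)  # (400, 128)
```

Since the rotation is orthogonal, pairwise distances between grid positions are preserved exactly, while the coordinate information is no longer confined to two axes.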

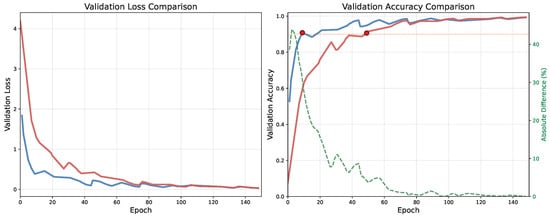

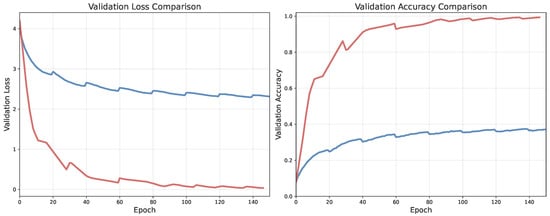

Figure 6 demonstrates that grid-structured initialization provides substantial training acceleration compared to random initialization. The model with grid initialization converges to high validation accuracy within the first few epochs, while random initialization requires significantly more training time. This suggests that providing geometric inductive bias through weight initialization helps the model quickly discover the spatial relationships it needs to solve geometric constraint problems.

Figure 6.

Comparison between random initialization of shared embedding and classification layer weights (red) and grid-structured initialization (blue) in terms of validation loss (left) and point accuracy (right). Red dots mark when each model first achieves 90% accuracy. The green dashed line shows the percentage difference (its values are on the right vertical axis). Grid initialization reaches 90% accuracy within 10 epochs, while random initialization requires almost 50 epochs. After 100 epochs, their performance equalizes.

The initialization method shares weights between the embedding layer (used for known points) and classifier head (used for predictions), ensuring consistent geometric representations throughout the network. This architectural choice proves beneficial as the model can leverage the geometric structure for both encoding known positions and predicting unknown ones.

4.5. Solution Process

In order to find the solution, the GNN model iteratively moves embeddings of unknown points in a high-dimensional space. During the forward pass, the same update rule is applied over and over again. Hence, it is possible to extract the embeddings in each message-passing iteration to obtain a better understanding of the underlying process. Similar examples are provided in Appendix H.

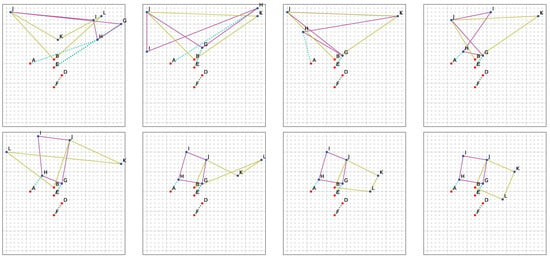

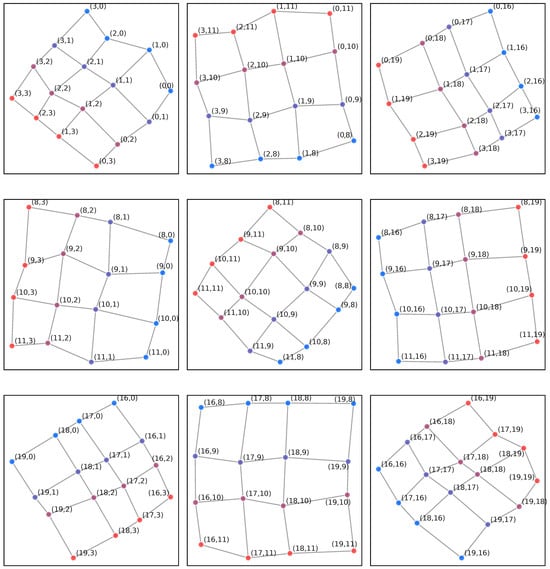

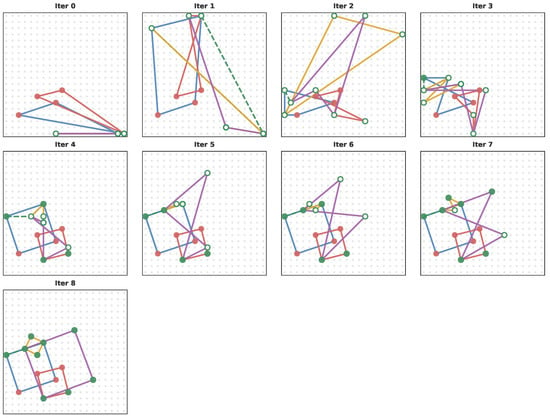

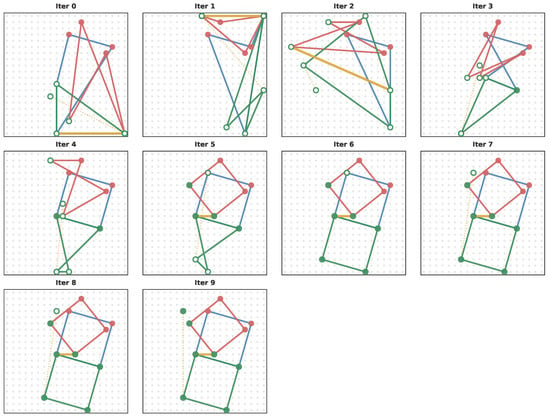

Figure 7 shows an example of the solution process for a random problem given to the GNN. After each update, the closest static point embedding is taken for each variable of a problem and is visualized as the corresponding grid position.
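The decoding step used for this visualization can be sketched as a nearest-neighbor lookup. The sketch assumes that "closest" means smallest Euclidean distance and that grid positions are stored in row-major order; both are assumptions, and `decode_positions` is a hypothetical helper.

```python
import numpy as np


def decode_positions(dynamic_emb, static_emb, grid_size):
    """Map each variable embedding to the (row, col) of its nearest static embedding."""
    # Squared Euclidean distances between every variable and every grid point.
    diff = dynamic_emb[:, None, :] - static_emb[None, :, :]
    nearest = (diff ** 2).sum(axis=-1).argmin(axis=1)
    rows, cols = np.divmod(nearest, grid_size)
    return np.stack([rows, cols], axis=1)


rng = np.random.default_rng(1)
static = rng.standard_normal((400, 16))
# Two variables whose embeddings lie near grid points 3 and 27.
dynamic = static[[3, 27]] + 0.01 * rng.standard_normal((2, 16))
print(decode_positions(dynamic, static, grid_size=20))
```

Applying the same lookup to the embeddings extracted after each message-passing iteration yields one grid snapshot per iteration.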

Figure 7.

Visualization of the solution process. The red points , and F () are known, and the blue points , and L need to be predicted. There are two Translation constraints: and , and two Square constraints: and . Translations are marked by a dotted line and Squares by a solid line. The network is trained to predict the result in 15 iterations, of which the initial state and results after iterations , and 13 were chosen for illustration. The network gradually improves the result over the iterations: the first Translation with only one unknown point G is solved, followed by finding the point H of the second Translation. After Translations, both Squares are solved. Note that this visualization was created with a model operating on a 30 × 30 grid. Line colors are used to differentiate between the constraints in the problem.

Note that in this example, the GNN first finds a close approximation to the hidden configuration and then refines it. Here, the squares are first “approximated” by quadrilaterals which then converge to exact squares. It can also be observed that one square constraint is approximately satisfied earlier than the other, which reflects the fact that the other constraint can be resolved only after the first constraint is resolved (as explained in the next section). For an extended discussion and visualization of the reasoning dynamics as revealed by UMAP, see Appendix J.

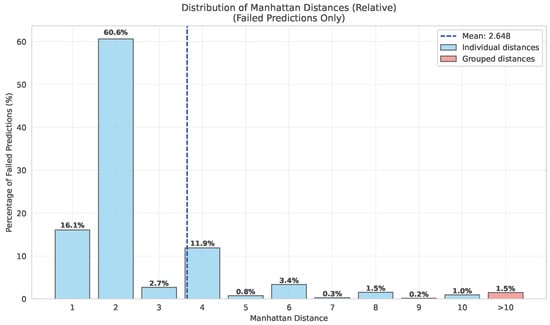

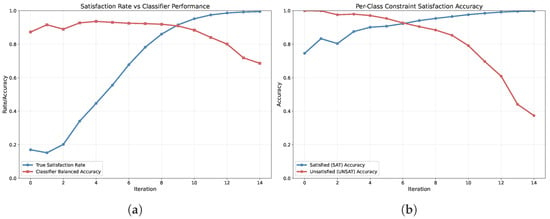

4.6. Analysis of Incorrectly Classified Points

To understand the failure modes of the GNN, we analyze predictions on our test instances using the basic model setup (15 iterations, 1 resample) from Table 1. We examine how prediction accuracy relates to problem complexity along multiple dimensions.

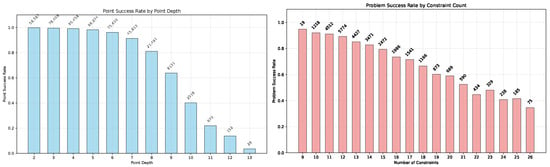

As explained in Section 3.1, constraints form a dependency structure where some must be resolved before others. For any given point, we can trace the dependency chain of constraints that must be resolved to determine its position. We define point depth as the number of constraint resolution steps required for a specific point. The problems themselves vary in complexity through both the total number of constraints and the maximum depth of dependency chains within the problem.
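One plausible reading of point depth is sketched below: known points have depth 0, and a point determined by a constraint has depth one more than the deepest point that constraint depends on. The representation of constraints as `(inputs, outputs)` pairs and the function `point_depths` are hypothetical; the example instance is taken from the solver log in Appendix E.

```python
def point_depths(known, constraints):
    """constraints: list of (inputs, outputs) tuples of point identifiers."""
    depth = {p: 0 for p in known}
    pending = list(constraints)
    while pending:
        progressed = False
        for con in list(pending):
            inputs, outputs = con
            if all(p in depth for p in inputs):
                # One more resolution step than the deepest prerequisite.
                step = 1 + max(depth[p] for p in inputs)
                for p in outputs:
                    depth.setdefault(p, step)
                pending.remove(con)
                progressed = True
        if not progressed:
            raise ValueError("constraints cannot be ordered")
    return depth


known = [0, 1, 2, 4, 5, 6]
constraints = [
    ((1, 0, 2), (3,)),     # TRANSLATION ( 1 0 2 3 )
    ((5, 4, 6), (7,)),     # TRANSLATION ( 5 4 7 6 )
    ((7, 3), (8, 9)),      # SQUARE ( 8 7 9 3 )
    ((8, 3), (11, 10)),    # SQUARE ( 11 8 10 3 )
    ((8, 7, 3), (12,)),    # TRANSLATION ( 8 7 12 3 )
]
depths = point_depths(known, constraints)
print({p: d for p, d in depths.items() if d > 0})
```

In this instance, points 3 and 7 have depth 1, points 8 and 9 have depth 2, and points 10, 11, and 12 have depth 3.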

Figure 8 (left) shows that prediction accuracy correlates negatively with point depth. Points requiring deeper reasoning chains have significantly higher failure rates. The relationship is nearly monotonic, with success rates declining from over for points at depths of 2–3 to below for points requiring 10 or more resolution steps. This pattern aligns with the iterative solution process observed in Section 4.5 where the model progressively constructs solutions by first resolving simpler constraints.

Figure 8.

Failure analysis on test dataset (15 iterations, 1 resample). (Left) individual point prediction accuracy degrades with reasoning depth, showing points requiring deeper dependency chains have higher failure rates. (Right) complete problem success decreases with constraint count. Numbers above bars show absolute sample sizes for each category.

Figure 8 (right) demonstrates that problems with more constraints also show lower complete success rates. This reflects both the increased likelihood of errors accumulating across multiple predictions and the tendency for larger problems to contain deeper dependency structures.

These findings suggest that the GNN’s reasoning capability is fundamentally limited by the length of dependency chains it can reliably process. Importantly, when predictions do fail, the incorrectly classified points tend to be positioned close to their correct target locations, as evidenced by the distance histograms in Appendix C.

5. Limitations and Discussion

We note that we study a much simpler setup than was studied in AlphaGeometry. Predicting positions of points satisfying a set of geometric constraints is straightforward in comparison with predicting useful auxiliary points. Also, in our case, the unknown points need to lie on a discrete 2D grid so that we can train the model with a classification loss. The simplified setup turned out to be sufficient to study how NNs can reason about geometric constraints. Training the model for predicting auxiliary points requires a large computational budget and, moreover, the authors did not release the training dataset, which was also expensive to create. We also mention that we use only four types of constraints, but the whole setup could be easily extended to different sets of constraints by adding more update functions in Equation (1). These simplifications enabled us to provide partial understanding of the process by which the tested models are able to solve individual problems and to demonstrate the benefits of using a GNN instead of a Transformer. We note that variable permutation invariance is not considered in our constraint definitions, as a fixed variable ordering is required to ensure unique solutions.

Compared to the GNN which Hula et al. [10] used for Boolean satisfiability, our GNN deals with fewer constraints and points. In their case, the GNN was able to handle hundreds of constraints, whereas our GNN deals with an order of magnitude fewer constraints and still needs more training samples than in the case of Boolean satisfiability. This could be caused by the fact that the domain of our constraints is much larger, but it could also be the case that a different GNN architecture (with different update functions) would scale better to larger problems.

It is also possible that a different training procedure might result in significantly easier training. The inference process depicted in Figure 7 and the findings of [10] suggest that the GNN could implicitly optimize an unknown objective during the iterative forward pass. Finding an expression for this objective could be interesting and used to train the network in an unsupervised way. This should be much easier than training it with the classification loss. Another obvious direction is to train the model as a diffusion model which “denoises” randomly assigned points to points of the solution.

Lastly, we mention that our experimental setup can be extended to more complex CSPs which could contain temporal relations and relations between various entities. We believe that such CSPs could be very useful for studying how NNs can generalize to unseen situations [23] and how they can discover models of the world without grounding [24]. Studying geometric CSPs has the advantage that the domain has an obvious geometric interpretation which is visible also in the embedding space.

6. Future Work

While we provided initial insights into geometric reasoning in neural networks, several directions remain for deeper understanding. Future work should investigate the expressiveness requirements of different update mechanisms, building on our finding that LSTMs significantly outperform RNNs for constraint updates. This includes determining the minimal architectural complexity needed to solve geometric constraints and whether these mechanisms can recover explicit mathematical formulas underlying constraints rather than learning approximations. The iterative refinement process that we observed suggests connections to continuous optimization methods. Future work should formalize this relationship and investigate whether training procedures based on explicit optimization objectives could improve efficiency. Additionally, extending beyond our four constraint types while addressing variable permutation invariance would broaden insights. Moving from discrete grid classification to continuous coordinate regression would enable analysis of geometric reasoning in unrestricted spatial domains.

7. Conclusions

We have shown that GNNs as well as Transformers can learn to solve geometric CSPs and provided several insights into the process by which they find the solution. The visualizations show that when processing the problem, models form the hidden spatial configurations described by the problem in the embedding space. During training, the static embeddings of individual points in the 2D grid organize themselves within a 2D subspace and reflect the neighborhood structure of the grid. We showed that the occurrence of errors depends on problem complexity, like the number of steps required to resolve all variables. The models solve constraints through an iterative refinement process and exhibit test-time scaling capabilities, where allocating additional computational resources during inference improves performance on harder (out of training distribution) problem instances. Lastly, we showed that GNNs are much easier to train and can be scaled to significantly larger grids than Transformers.

Author Contributions

Conceptualization, J.H. and D.M.; methodology, J.H. and D.M.; software, J.H., D.M., D.H. and M.J.; validation, D.M. and J.H.; formal analysis, D.M. and J.J.; investigation, D.M. and J.H.; resources, J.H. and M.J.; data curation, D.M.; writing—original draft preparation, D.M. and J.H.; writing—review and editing, J.J., D.M. and J.H.; visualization, D.M.; supervision, J.H. and M.J.; project administration, J.H. All authors have read and agreed to the published version of the manuscript.

Funding

This article has been produced with the financial support of the European Union under the REFRESH—Research Excellence For REgion Sustainability and High-tech Industries project number CZ.10.03.01/00/22_003/0000048 via the Operational Programme Just Transition. The research was supported by the Ministry of Education, Youth and Sports within the dedicated program ERC CZ under the project POSTMAN no. LL1902, by the Czech Science Foundation grant no. 25-17929X, and by the European Union under the project ROBOPROX (reg. no. CZ.02.01.01/00/22_008/0004590). This article is part of the RICAIP project that has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 857306.

Data Availability Statement

The synthetic datasets and problem generator code supporting the findings of this study are available on request from the corresponding author. The complete codebase including model implementations and training procedures will be made publicly available upon publication of this manuscript to ensure full reproducibility of the reported results.

Acknowledgments

During the preparation of this manuscript, the authors used Claude (Anthropic’s AI assistant) for code development assistance and English language editing suggestions. All conceptualization, experimental design, analysis, and interpretation of results were conducted by the authors. The AI tool was used primarily for programming assistance and language refinement suggestions. The authors have reviewed and edited all output and take full responsibility for the content of this publication.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| NN | Neural network |
| GNN | Graph neural network |
| CSP | Constraint satisfaction problem |
| SDP | Semidefinite programming |
| LLM | Large language model |
| RNN | Recurrent neural network |
| M | Midpoint constraint type |
| R | Reflection constraint type |
| S | Square constraint type |
| T | Translation constraint type |
| DAG | Directed acyclic graph |
| LSTM | Long short-term memory |
| CoT | Chain-of-thought |
| PCA | Principal component analysis |
| UMAP | Uniform manifold approximation and projection |

Appendix A. Training Procedures and Hyperparameters

This section details the training procedures and hyperparameter configurations used for the GNN models, which were the primary focus of experiments.

Appendix A.1. GNN Training Procedure

The GNN uses an AdamW optimizer with cosine annealing learning rate scheduling. The scheduler operates in 15-epoch cycles, where each cycle’s peak learning rate decreases by a factor of 0.9 from the previous cycle. Learning rate restarts cause temporary accuracy drops of 0.5–6% (larger in the initial stages of training) but enable continued optimization, with models regaining and exceeding their previous performance within a few epochs.
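The schedule can be sketched as a function of the epoch index. The sketch assumes epoch-level granularity and interprets the minimum learning rate factor of 0.1 from Table A1 as a fraction of the current cycle's peak; `base_lr` is a placeholder argument, and the function `learning_rate` is hypothetical.

```python
import math


def learning_rate(epoch, base_lr, cycle_len=15, peak_decay=0.9, min_factor=0.1):
    """Cosine annealing with restarts and a decaying peak (illustrative only)."""
    cycle, pos = divmod(epoch, cycle_len)
    peak = base_lr * peak_decay ** cycle
    floor = peak * min_factor  # assumption: floor is relative to the cycle peak
    cosine = 0.5 * (1.0 + math.cos(math.pi * pos / cycle_len))
    return floor + (peak - floor) * cosine


for epoch in (0, 14, 15, 30):
    print(epoch, learning_rate(epoch, base_lr=1.0))
```

The jump between epoch 14 and epoch 15 is the restart; restarts of this kind are where the temporary accuracy drops occur.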

Exponential Moving Average (EMA) weights with a decay of 0.99 provide stable evaluation metrics. EMA weights are used exclusively for validation and testing while training continues with primary parameters. Cross-entropy loss applies only to unknown points, with the known points remaining fixed.
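The EMA update itself is a one-line recurrence. The following sketch applies it to a flat list of scalar weights; in practice it would be applied to every parameter tensor after each optimizer step, and the helper name `ema_update` is hypothetical.

```python
def ema_update(ema_weights, weights, decay=0.99):
    """Blend the running average with the current training weights."""
    return [decay * e + (1.0 - decay) * w for e, w in zip(ema_weights, weights)]


ema = [1.0, 0.0]
for _ in range(3):
    # Training weights are held fixed here only to keep the example small.
    ema = ema_update(ema, [0.0, 1.0])
print(ema)
```

With a decay of 0.99, the averaged weights change slowly, which smooths out the step-to-step fluctuations of the training weights at evaluation time.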

The model supports training with either a fixed or a varying number of iterations (in the latter case, the number of message-passing repetitions differs between batches). The iteration count is sampled from a distribution centered at 15 iterations (±10 range) whose probability diminishes away from the center: 15 iterations occur 40% of the time, 16–17 iterations occur ∼15% of the time combined, and extreme values such as 25 iterations occur <1% of the time. This technique improves test-time scaling robustness. The iteration count does not affect the number of trainable parameters, since the same layers are applied recurrently.

Dropout (0.1) applies to both point and constraint embeddings during training.

Appendix A.2. Hyperparameter Selection for GNN

The hyperparameter search determined that 15 training iterations outperform higher values like 20 for convergence speed and final accuracy. Smaller batch sizes (32) consistently outperform larger ones, trading GPU utilization for model accuracy. With random initialization, larger batch sizes prevent 99%+ convergence, plateauing around 90% after 200 epochs.

Grid-structured initialization enables broader hyperparameter ranges while maintaining performance, though the final configuration uses conservative settings reliable across initialization methods.

All hyperparameters were optimized for geometric constraint problems on 20 × 20 grids. The GNN and Transformer configurations used in the initial comparison reported in Section 4.1 are summarized in Table A1.

Table A1.

Model hyperparameters and training settings for both architectures.

| Parameter | GNN | Transformer |
|---|---|---|
| Model Architecture | | |
| Embedding dimension | 128 | 256 |
| Model iterations/layers | 15 ± 10 (diminishing) | 6 |
| Number of heads | – | 6 |
| Dropout rate | 0.1 | – |
| Positional embeddings | – | RoPE |
| Training Configuration | | |
| Optimizer | AdamW | AdamW |
| Learning rate | | |
| Weight decay | – | |
| Batch size | 32 | 512 |
| Epochs | 200 | 200 |
| Warmup steps | – | 200 |
| Learning Rate Schedule | | |
| Scheduler | Cosine annealing | Linear |
| Cycle length | 15 epochs | – |
| Peak decay factor | 0.9 | – |
| Min LR factor | 0.1 | – |
| Regularization | | |
| EMA decay | 0.99 | – |
| Gradient clipping | 0.65 | 1.0 |
| Special Configuration | | |
| Special tokens | – | [SEP], [UNK], [PAD], [MASK] |

Appendix A.3. Model Complexity

For 20 × 20 grids with an embedding dimension of 128, the GNN contains 1,498,112 trainable parameters. Each constraint type contributes 328,704 parameters to the variable–constraint () message-passing layers. The shared embedding and classifier layers account for 51,200 parameters (400 grid positions × 128 dimensions), counted once since they share the same weight matrix. The constraint–variable () message-passing layer contributes 132,096 parameters. In comparison, the Transformer model for the same grid size contains 5,081,088 parameters, approximately 3.4 times larger than the GNN architecture.
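The reported totals can be checked with a few lines of arithmetic. The sketch assumes that the four constraint types each contribute one block of 328,704 parameters and that the listed components are exhaustive; the variable names are illustrative.

```python
per_constraint_type = 328_704
num_constraint_types = 4          # Square, Reflection, Midpoint, Translation
shared_embedding = 400 * 128      # grid positions x embedding dimension
constraint_to_variable = 132_096

gnn_total = (
    per_constraint_type * num_constraint_types
    + shared_embedding
    + constraint_to_variable
)
transformer_total = 5_081_088

print(gnn_total)                                 # 1498112
print(round(transformer_total / gnn_total, 2))   # 3.39
```

The components sum exactly to the stated GNN total, and the ratio rounds to the factor of approximately 3.4 quoted above.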

Appendix A.4. LSTM vs. RNN Constraint Update Ablation

We conducted an ablation experiment to evaluate whether our constraint update mechanism benefits from using an LSTM cell over a simpler RNN cell. While the main experiments use LSTM-based updates, we performed a basic hyperparameter search for the RNN variant, adjusting learning rate, embedding dimension, batch size, and number of iterations. Across all runs, the RNN remained unstable and failed to exceed 40% validation accuracy. When using the same hyperparameters as the LSTM model (for a direct comparison), the RNN plateaued at 38.4% and exhibited higher validation loss.

Figure A1 shows the validation accuracy and loss during training for both variants. These results confirm the advantage of using more expressive update mechanisms (such as LSTM) for modeling our geometric constraints.

Figure A1.

Comparison of LSTM- and RNN-based constraint update mechanisms. The LSTM achieves higher validation accuracy and lower loss (red). The RNN variant achieves only 38.4% accuracy and performs worse in terms of loss (blue).

Appendix B. Embedding Visualization for the GNN in 3D

As mentioned in Section 4.3, the static embeddings of individual points self-organize into a grid structure representing their spatial relationships. This section provides 3D visualizations to complement the 2D projections shown in the main text.

Figure A2 shows 3D projections of the learned embeddings using both UMAP and PCA methods. From the UMAP projections, we observed that during training, randomly initialized embeddings evolve from a single spherical cluster, which later unfolds into a U-shaped surface and finally converges to a flat 2D surface.

In contrast, the PCA 3D visualization remains a curved “cup” or “bell” shape rather than the flat plane observed with UMAP even after full training. Thus the embeddings lie on a curved surface in the high-dimensional space rather than in a flat plane.

Figure A2.

Three-dimensional projections of static point embeddings from 128-dimensional space using PCA (left) and UMAP (right). PCA reveals a curved “cup”- or “bell”-shaped structure, while UMAP projects them onto a flatter surface that more clearly shows the 2D grid organization. Colors indicate distance from grid center.

Figure A3.

Two-dimensional projections of static point embeddings from 128-dimensional space using PCA (left) and UMAP (right). PCA shows a curved, warped structure while UMAP better preserves the regular grid connectivity. Lines show spatial adjacency relationships from the original 20 × 20 grid. Colors indicate distance from grid center.

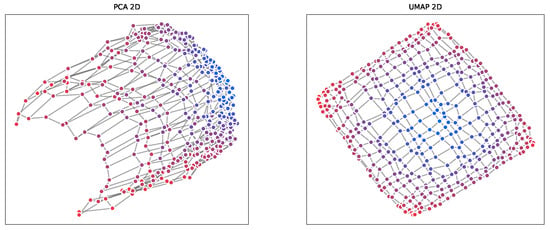

To further characterize the geometric evolution of the embedding space, we measure local curvature and local dimensionality throughout training (Figure A4).

Curvature is computed in the 3D PCA projection using an eigenvalue-based method that quantifies deviation from local planarity within small neighborhoods. Specifically, we compute the local covariance matrix $C$ of each neighborhood and define curvature as the ratio between the smallest and largest eigenvalues:

$$\kappa = \frac{\lambda_{\min}}{\lambda_{\max} + \epsilon},$$

where $\lambda_{\min}$ and $\lambda_{\max}$ are the smallest and largest eigenvalues of $C$, and $\epsilon$ is a small constant added for numerical stability.

In the case of random initialization, curvature starts relatively high and fluctuates. In contrast, curvature under grid initialization starts near zero but increases steadily over time, eventually reaching levels similar to the random case. This indicates that even when training begins from a perfectly planar manifold, the resulting structure develops nontrivial curvature as training progresses. In parallel, we evaluate local 2D-ness, defined as the variance explained by the top two principal components in spatial subgrids. This captures how locally planar the embedding remains. Together, these metrics provide complementary views on how spatial structure and geometric complexity evolve, and how these differ across initialization strategies.
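Both metrics can be sketched in NumPy. The sketch assumes k-nearest-neighbor neighborhoods of size `k` and places the stabilizing constant in the denominator of the eigenvalue ratio; the neighborhood definition, the value of `k`, and the function names are assumptions rather than details of the original implementation.

```python
import numpy as np


def local_curvature(points, k=8, eps=1e-8):
    """points: (n, 3) array, e.g. a 3D PCA projection of the embeddings."""
    dists = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    neighbors = np.argsort(dists, axis=1)[:, :k]  # includes the point itself
    curv = np.empty(len(points))
    for i, idx in enumerate(neighbors):
        eigvals = np.linalg.eigvalsh(np.cov(points[idx].T))  # ascending order
        curv[i] = eigvals[0] / (eigvals[-1] + eps)
    return curv


def two_d_ness(patch):
    """Fraction of variance captured by the top two principal components."""
    eigvals = np.linalg.eigvalsh(np.cov(patch.T))[::-1]
    return eigvals[:2].sum() / eigvals.sum()


xs, ys = np.meshgrid(np.linspace(-1, 1, 6), np.linspace(-1, 1, 6))
flat = np.stack([xs.ravel(), ys.ravel(), np.zeros(36)], axis=1)
bowl = np.stack([xs.ravel(), ys.ravel(), xs.ravel() ** 2 + ys.ravel() ** 2], axis=1)
print(local_curvature(flat).mean(), local_curvature(bowl).mean())
print(two_d_ness(flat))
```

A perfectly planar patch has a vanishing smallest eigenvalue and therefore a curvature near zero, while a bowl-shaped surface yields a strictly larger value.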

Figure A4.

Curvature and local 2D-ness during training for random vs. grid initialization. Right: Mean curvature over training time and by spatial region. Curvature is computed in the 3D PCA projection using a local neighborhood eigenvalue method, and it reflects how much local patches deviate from planarity. The rising curvature over time corresponds to the emergent cup-like shape seen in 3D PCA plots. Left: 2D-ness, measured as the fraction of variance explained by the top 2 PCA components in spatial subgrids.

Figure A5 demonstrates that when examining 4 × 4 subregions of the 20 × 20 grid, PCA projections reveal well-organized local grid structures. This visualization technique shows that the embeddings maintain locally linear relationships within smaller regions, even though the global structure exhibits curvature.

Figure A5.

PCA projections of representative 4 × 4 subregions from the 20 × 20 grid embeddings, selected from corners and center areas for complete coverage. Local grid structure is well-preserved across all regions. While global embedding organization exhibits curvature (Figure A2), these local neighborhoods maintain linear spatial relationships.

These visualizations confirm that the model successfully learns to embed spatial relationships in its high-dimensional representation, with the embeddings organized on a curved surface that preserves local neighborhood structure.

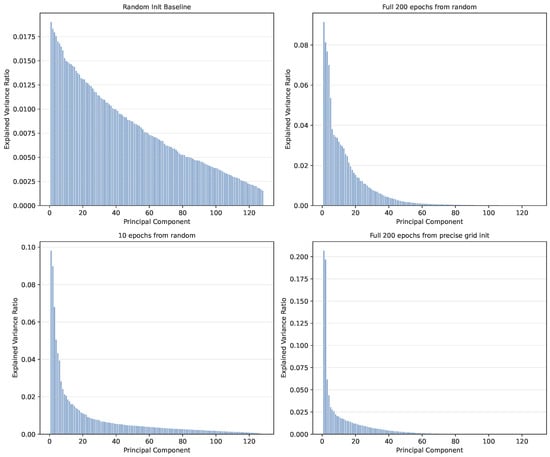

Figure A6 shows the distribution of variance across PCA components at different training stages and initialization methods. When training from random initialization, the first two components are most prominent at early stages (10 epochs). After full training (200 epochs), the variance spreads across approximately the first five components.

Training from precise grid initialization shows a different pattern. After 200 epochs, the first two components remain most prominent while the model utilizes additional dimensions, contrasting with the pure grid initialization baseline which concentrates variance primarily in two dimensions.

This analysis reveals how the usage of the embedding dimensions evolves during training and how initialization strategy affects the final embedding structure.

It also highlights the advantage of UMAP for our setup. UMAP offers a more accurate visualization of the learned grid structure than PCA. PCA is a global linear method and can distort local relationships when the embedding manifold becomes curved or non-planar, often placing distant points close together in projection. UMAP, by contrast, is a locally nonlinear method that better preserves neighborhood structure. This makes it more effective at representing the underlying grid organization when it becomes embedded in a non-planar, high-dimensional space.

Figure A6.

PCA component variance distribution across training conditions. (Top left) random initialization baseline. (Top right) after 200 epochs from random initialization, most variance spreads across approximately 5 components. (Bottom left) early training (10 epochs from random) shows the first 2 components are more prominent. (Bottom right) after 200 epochs from precise grid initialization, variance utilizes more dimensions than baseline but less prominently than random initialization training.

Appendix C. Distribution of the Error Magnitudes

The histogram in Figure A7 shows the distribution of Manhattan distances between incorrectly predicted point positions and the corresponding ground truth positions on the grid. As can be seen, most incorrectly predicted positions lie very close to the ground truth position.

Figure A7.

Distribution of prediction errors for incorrectly classified points, measured by Manhattan distance. Most failed predictions lie close to their correct positions.

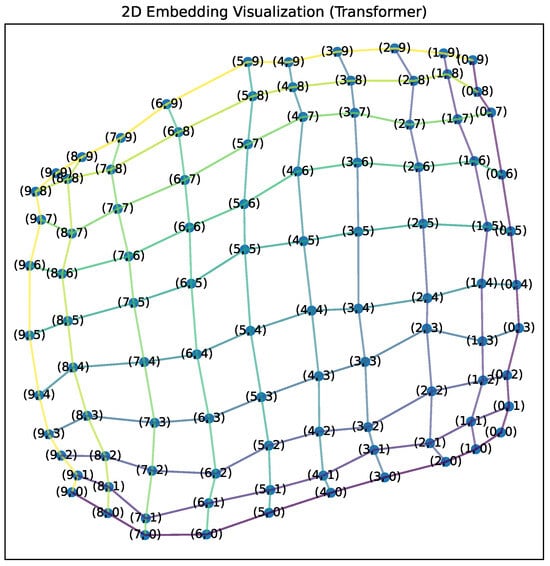

Appendix D. Embedding Visualization for the Transformer Model

In Figure A8, we show the grid-like structure of static point embeddings discovered by the Transformer. Compared with Figure 5, which shows the emergence of the corresponding structure for the GNN, the grid is smaller here, namely 10 × 10, since the Transformer was not able to scale to larger sizes with sufficient accuracy, as discussed in Section 4.1.

Figure A8.

A UMAP projection into 2D shows that the static token embeddings of the Transformer self-organize into a grid-like structure that matches the grid positions the tokens represent. The edges show the connectivity of the points in the original grid. Labels on points are coordinates in the original grid.

Appendix E. Chain-of-Thought Training

To train the Transformer to produce a chain-of-thought that assigns the variables incrementally, we implemented a simple solver that logs its steps; the resulting logs are used for imitation. The solver first orders the constraints according to the DAG mentioned in Section 3.1 and then resolves the constraints one by one, starting from the root constraints. As the solver traverses the DAG, it logs the constraint of a given node, the values of already assigned variables within the constraint, and lastly the computed values for the remaining variables. We also include a few keywords in the log that delimit the provided information. An example of the log for a random problem is shown below:

TRANSLATION ( 1 0 2 3 ) , TRANSLATION ( 5 4 7 6 ) , SQUARE ( 8 7 9 3 ) , SQUARE ( 11 8 10 3 ) , TRANSLATION ( 8 7 12 3 ) ; fixed 0 = #696 , 1 = #617 , 2 = #978 , 4 = #577 , 5 = #498 , 6 = #731 ; Solution begins ; Con TRANSLATION ( 5 4 7 6 ) ; Known 5 = #498 , 4 = #577 , 6 = #731 ; Impl 7 = #652 ; Con TRANSLATION ( 1 0 2 3 ) ; Known 1 = #617 , 0 = #696 , 2 = #978 ; Impl 3 = #1057 ; Con SQUARE ( 8 7 9 3 ) ; Known 7 = #652 , 3 = #1057 ; Impl 8 = #462 , 9 = #867 ; Con SQUARE ( 11 8 10 3 ) ; Known 8 = #462 , 3 = #1057 ; Impl 11 = #247 , 10 = #842 ; Con TRANSLATION ( 8 7 12 3 ) ; Known 8 = #462 , 7 = #652 , 3 = #1057 ; Impl 12 = #867 ; Solution ends

Variables are denoted by numbers (0, 1, 2, etc.), and individual points are denoted by a point ID preceded by a # symbol (#696, #617, etc.). The input has two parts: a problem statement and a solution. We train on the whole input, but exclude the problem statement from the computation of the loss. For validation, we include only the problem statement. The keyword Con marks the selected constraint, Known marks the already known variables that appear in the selected constraint together with their values, and Impl marks the newly deduced variables with their values.
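A minimal sketch of how such a log could be parsed and replayed is given below. The function names (`parse_assignments`, `parse_log`, `replay`) and the regular expressions are illustrative assumptions derived only from the example log above; they are not the code used for the experiments.

```python
import re

# The example log from this appendix, split across lines for readability.
LOG = (
    "TRANSLATION ( 1 0 2 3 ) , TRANSLATION ( 5 4 7 6 ) , SQUARE ( 8 7 9 3 ) , "
    "SQUARE ( 11 8 10 3 ) , TRANSLATION ( 8 7 12 3 ) ; "
    "fixed 0 = #696 , 1 = #617 , 2 = #978 , 4 = #577 , 5 = #498 , 6 = #731 ; "
    "Solution begins ; "
    "Con TRANSLATION ( 5 4 7 6 ) ; Known 5 = #498 , 4 = #577 , 6 = #731 ; Impl 7 = #652 ; "
    "Con TRANSLATION ( 1 0 2 3 ) ; Known 1 = #617 , 0 = #696 , 2 = #978 ; Impl 3 = #1057 ; "
    "Con SQUARE ( 8 7 9 3 ) ; Known 7 = #652 , 3 = #1057 ; Impl 8 = #462 , 9 = #867 ; "
    "Con SQUARE ( 11 8 10 3 ) ; Known 8 = #462 , 3 = #1057 ; Impl 11 = #247 , 10 = #842 ; "
    "Con TRANSLATION ( 8 7 12 3 ) ; Known 8 = #462 , 7 = #652 , 3 = #1057 ; Impl 12 = #867 ; "
    "Solution ends"
)


def parse_assignments(text):
    """Turn '5 = #498 , 4 = #577' into {5: 498, 4: 577}."""
    return {int(v): int(p) for v, p in re.findall(r"(\d+) = #(\d+)", text)}


def parse_log(log):
    """Split a log into problem constraints, fixed points, and solver steps."""
    statement, solution = log.split("; Solution begins ;")
    constraints_part, fixed_part = statement.split("; fixed")
    constraints = [
        (name, [int(v) for v in args.split()])
        for name, args in re.findall(r"([A-Z]+) \(([\d ]+)\)", constraints_part)
    ]
    fixed = parse_assignments(fixed_part)
    step_pattern = r"Con ([A-Z]+) \(([\d ]+)\) ; Known (.*?) ; Impl (.*?) ;"
    steps = [
        (name, [int(v) for v in args.split()],
         parse_assignments(known), parse_assignments(implied))
        for name, args, known, implied in re.findall(step_pattern, solution)
    ]
    return constraints, fixed, steps


def replay(fixed, steps):
    """Apply the steps in order, checking that every Known value was assigned earlier."""
    assignment = dict(fixed)
    for name, args, known, implied in steps:
        for var, point in known.items():
            if assignment.get(var) != point:
                raise ValueError(f"variable {var} is not known yet in {name} {args}")
        assignment.update(implied)
    return assignment


constraints, fixed, steps = parse_log(LOG)
assignment = replay(fixed, steps)
```

Replaying the steps makes the incremental structure explicit: each Known entry must already be present, either among the fixed points or among the Impl entries of an earlier step.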

Appendix F. Test-Time Scaling and Iteration Analysis

This section analyzes model behavior across different iteration counts and resampling strategies, supporting the test-time scaling results presented in Table 1.

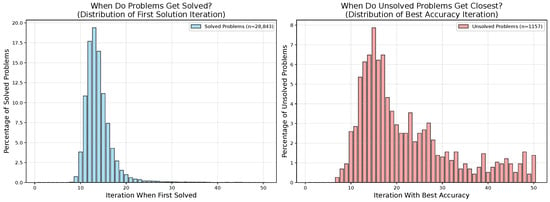

Figure A9 shows the distribution of iterations when variables are correctly assigned for the first time (left) and when unsolved problems achieve their best point accuracy (right) using single resampling. Most problems that can be solved are resolved within the first 15 iterations, with a long tail extending to 50 iterations. For unsolved problems, the peak occurs around iterations 10–15, though substantial numbers of problems achieve their best accuracy at later iterations, indicating that additional computation can still provide benefits.

Figure A10 demonstrates how accuracy evolves with iteration count for both single and multiple resampling strategies. Both point and complete problem accuracy peak around iterations 23–25, then decline with further iterations. This indicates that while some individual problems benefit from extended computation (as shown in Figure A9), increasing iterations beyond 25 breaks more already-solved instances than it helps, resulting in net performance degradation.

Multiple resamples provide consistent benefits across all iteration counts, with optimal performance occurring in the 23–25 iteration range for both strategies.

These results explain why increasing iterations from 15 to 23 improves complete accuracy and why the “Best” oracle configuration achieves higher performance by selecting optimal iteration counts per problem.
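The difference between stopping at a fixed iteration and the per-problem “Best” oracle can be made concrete with a small sketch. The boolean matrix `solved` (one row per problem, one column per iteration) and the function names are assumptions introduced for illustration; the toy values are not experimental results.

```python
def accuracy_at(solved, iteration):
    """Fraction of problems solved when stopping at a fixed iteration (1-based)."""
    return sum(row[iteration - 1] for row in solved) / len(solved)


def best_fixed_iteration(solved):
    """Iteration with the highest accuracy; ties go to the earliest iteration."""
    num_iterations = len(solved[0])
    return max(range(1, num_iterations + 1), key=lambda t: accuracy_at(solved, t))


def oracle_accuracy(solved):
    """Fraction of problems solved at some iteration (best choice per problem)."""
    return sum(any(row) for row in solved) / len(solved)


# Toy data: solved[p][t] is True if problem p is fully solved after iteration t + 1.
solved = [
    [False, True, True, True, True],      # solved early and stays solved
    [False, True, True, False, False],    # solved, then broken by later iterations
    [False, False, False, False, True],   # needs many iterations
    [False, False, False, False, False],  # never solved
]

best_t = best_fixed_iteration(solved)
fixed_acc = accuracy_at(solved, best_t)
oracle_acc = oracle_accuracy(solved)
```

In this toy example, no fixed iteration count solves both the second and the third problem, whereas the oracle counts both as solved, which mirrors the gap between fixed-iteration and “Best” results.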

Figure A9.

When problems reach solution during inference. Left: distribution of first solution iteration for successfully solved problems. Right: iteration when unsolved problems achieve their highest point accuracy.

Figure A10.

Model performance across iteration counts with single resampling (blue) and 10 resamples (red). Both point accuracy and complete problem accuracy peak around iterations 23–25, then decline. Multiple resampling provides consistent benefits across all iteration counts.

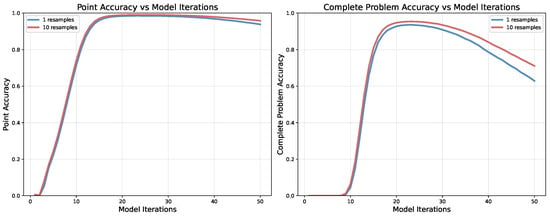

Appendix G. Scaling the Size of the Grid

To get a sense of how the sample complexity depends on the size of the grid, we trained several models on different grid sizes and different numbers of training samples. To achieve faster training, we conducted these experiments with problems that contained only two types of constraints (S and T).

The problem generator mentioned in Section 3.1 produces problems which have, on average, around four constraints. Both types of constraints (S and T) are sampled with equal probability. Therefore, we can assume that an average problem has two constraints of type S (each determined by two points) and two constraints of type T (each determined by three points). If we denote the number of points on the side of the grid by $n$, then we can estimate the number of unique problems in an $n \times n$-size grid to be $n^{20}$. There are $n^2$ possible point positions, and we independently sample six points for two constraints of type T and four points for two constraints of type S, together yielding $(n^2)^{10} = n^{20}$ possibilities, each of which determines one instance. This number should be viewed as an upper bound because it ignores the fact that constraints can share variables.

To test the dependence of the validation accuracy on the grid size and number of training samples, we use the following values of $n$: 10, 20, 30, 40, 50, 60, 70, and 80. This results in the following numbers (after rounding the exponent to the nearest integer) of possible problems for each $n$, respectively: $10^{20}$, $10^{26}$, $10^{30}$, $10^{32}$, $10^{34}$, $10^{36}$, $10^{37}$, and $10^{38}$.
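The order-of-magnitude estimate can be reproduced with a few lines of code. The sketch assumes the counting argument above, namely ten independently placed points with $n^2$ possible positions each, which gives the upper bound $(n^2)^{10} = n^{20}$; the function name and the parameter `num_points` are hypothetical.

```python
import math


def log10_problem_count(n, num_points=10):
    """log10 of the upper bound (n**2) ** num_points on the number of problems."""
    return 2 * num_points * math.log10(n)


GRID_SIDES = [10, 20, 30, 40, 50, 60, 70, 80]

# Exponent of 10 for each grid side, rounded to the nearest integer.
exponents = {n: round(log10_problem_count(n)) for n in GRID_SIDES}

for n, e in exponents.items():
    print(f"n = {n}: about 10^{e} possible problems")
```

Working with the base-10 logarithm keeps the comparison with the training set sizes (5k to 800k samples) easy to read.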

The sizes of the training set for each grid size range from 5k to 800k. The relationship between the validation accuracy, the grid size, and the size of the training set can be seen in Figure A11. In the right panel of the same figure, we plot the relationship between the grid size and the training set sizes for which the validation accuracy exceeded 90%.

Figure A11.

Scaling analysis for different grid sizes using simplified problems with only Square and Translation constraints. Left: validation accuracy versus training set size for grids from 10 × 10 to 80 × 80 points. Right: sample complexity required to achieve 90% accuracy across different grid sizes.

Appendix H. More Examples of the Solution Process

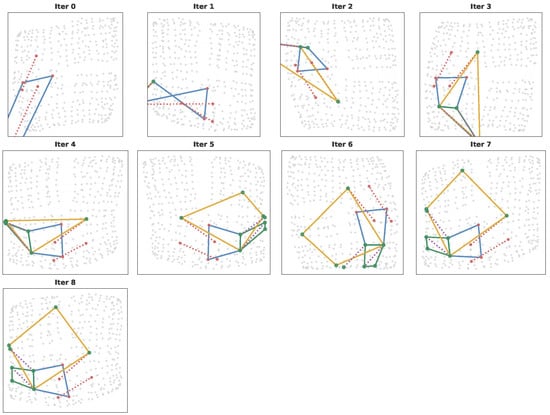

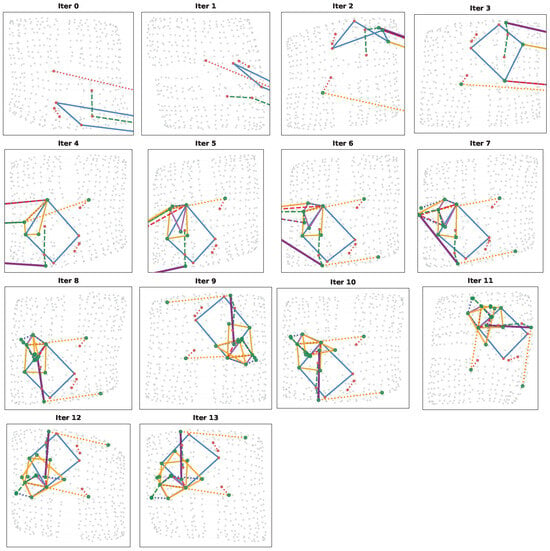

This section provides additional examples of the iterative solution process to support the findings presented in Section 4.5. While the main text focused on a single detailed example, these three instances demonstrate that the observed iterative refinement behavior is consistent across different problem configurations and constraint combinations.

These examples cover three of the four constraint types in our CSP language, Midpoint (M), Reflection (R), and Square (S), providing broader evidence for the model’s geometric reasoning capabilities. The instances shown in Figure A12, Figure A13 and Figure A14 were selected primarily for visual clarity. Some generated problems place points in close proximity, obscuring the iterative dynamics when visualized.

Unlike the prominent example in the main text, we omit point labels to avoid visual clutter while still clearly showing the constraint relationships through colored lines (for the same reason, only problems with few constraints and low depth are presented). Each figure shows how the model progressively constructs the hidden geometric configuration, with constraint satisfaction improving over iterations until convergence to the correct solution. These examples reinforce our core finding that the GNN employs a continuous optimization-like process to solve geometric constraint problems, moving point embeddings iteratively toward configurations that satisfy the given constraints.

In these visualizations, known points appear as fixed red markers throughout all iterations, while unknown points are shown as green markers that move as the model iterates. Unknown points appear as empty circles when incorrectly positioned and filled circles when they reach their correct locations. We display all iterations until final resolution. Different constraint types use distinct visual representations: Square constraints appear as full lines connecting all four vertices, Midpoint constraints use dashed lines forming a three-point chain, and Reflection constraints show full lines connecting the axis points with less visible dotted lines connecting the reflected point pairs.

Figure A12.

Problem with five constraints: four Squares and one Midpoint. The Midpoint constraint (dashed green line) coincides with the top side of the blue Square (two vertices of the smallest Square). Point colors denote known (red) and unknown (green) variables. Line colors are used to differentiate between the constraints in the problem. If a green point is empty, it is not in the right position yet.

Figure A13.

Problem with four constraints: three Squares and one Reflection. The two leftmost points are reflected across the axis formed by the yellow line segment. Point colors denote known (red) and unknown (green) variables. Line colors are used to differentiate between the constraints in the problem. If a green point is empty, it is not in the right position yet.

Figure A14.

Problem with six constraints: two Squares, three Midpoints, and one Reflection. One Midpoint is less visible in the final solution because it is a common vertex of both Squares and simultaneously the midpoint of two different Midpoint constraints. The orange line segment provides the Reflection axis. Point colors denote known (red) and unknown (green) variables. Line colors are used to differentiate between the constraints in the problem. If a green point is empty, it is not in the right position yet.

Appendix I. Constraint Embedding Analysis

We analyze the information encoded in constraint embeddings produced by the GNN during its iterative process. These embeddings are updated using information from both fixed and unknown points.

Appendix I.1. Constraint Type Classification

We tested whether constraint embeddings encode their constraint type by training a simple MLP classifier (two linear layers with ReLU) on the embeddings. The classifier achieved over accuracy predicting constraint types. Figure A15 shows the clear separation between constraint types in the projected embedding space using UMAP.

Figure A15.

Constraint embedding clusters after 8 and 15 iterations, projected using UMAP. Different constraint types form distinct clusters, with subclusters reflecting geometric properties and network biases.

Each constraint type exhibits subclustering patterns that reflect geometric properties, network processing biases, and some generator design choices. Square constraints form subclusters based on side orientation relative to the grid (parallel versus diagonal orientations). Midpoint constraints cluster according to which variable is unknown: the Midpoint itself or one of the endpoint variables. Reflection constraints show four subclusters corresponding to reflection axis orientation: two for axes parallel to grid edges and two for diagonal axes. Translation constraints exhibit order-dependent clustering based on which variables in the constraint are unknown—specifically whether variables or are unknown—revealing network bias toward variable ordering. Note that we identified fewer distinct subclusters than the maximum possible number, as some potential subclusters appeared very close in the embedding space.

These patterns indicate that constraint embeddings encode both geometric properties and structural biases from the network’s processing order. As discussed in Section 3.1, our generator creates problems requiring unique solutions through specific dependency structures, which may contribute to these ordering biases. Future work could explore making constraints invariant to variable permutations.

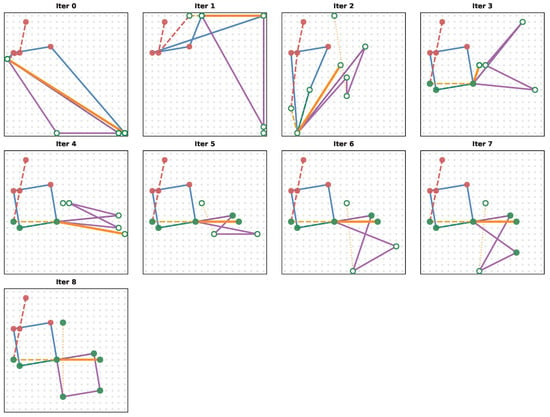

Appendix I.2. Constraint Satisfaction Prediction

We trained an MLP classifier to predict whether individual constraints are satisfied at each iteration. Using our 30k test dataset, we annotated constraint satisfaction status after each iteration up to 15 iterations and split the data: for training, for validation, and for testing. We made sure that the training data had balanced classes.

For early iterations, the classifier achieved over accuracy. However, performance degraded for higher iterations, as shown in Figure A16. The model increasingly predicted “satisfied” for most constraints as iteration count increased, regardless of actual satisfaction status.

Figure A16.

Constraint satisfaction prediction accuracy across iterations. (a) True satisfaction rate versus the balanced accuracy of the classifier. (b) Per-class accuracy showing the model’s bias toward predicting “satisfied” at higher iterations.
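The balanced and per-class accuracies shown in Figure A16 can be computed as in the following sketch. It uses the standard definition of balanced accuracy (the mean of per-class recalls); the function names and the toy labels are illustrative assumptions, with 1 standing for “satisfied” and 0 for “unsatisfied”.

```python
def per_class_accuracy(y_true, y_pred):
    """Return {label: fraction of that label's samples predicted correctly}."""
    result = {}
    for label in sorted(set(y_true)):
        indices = [i for i, y in enumerate(y_true) if y == label]
        result[label] = sum(y_pred[i] == label for i in indices) / len(indices)
    return result


def balanced_accuracy(y_true, y_pred):
    """Mean of the per-class accuracies, insensitive to class imbalance."""
    per_class = per_class_accuracy(y_true, y_pred)
    return sum(per_class.values()) / len(per_class)


def plain_accuracy(y_true, y_pred):
    """Ordinary accuracy, which is dominated by the majority class."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Toy late-iteration scenario: most constraints are satisfied, and the
# classifier predicts "satisfied" (1) for almost everything.
y_true = [1] * 8 + [0] * 2
y_pred = [1] * 8 + [1, 0]

per_class = per_class_accuracy(y_true, y_pred)
balanced = balanced_accuracy(y_true, y_pred)
plain = plain_accuracy(y_true, y_pred)
```

When nearly all constraints are satisfied, plain accuracy stays high even if the classifier ignores the minority class, which is why the balanced and per-class views are more informative at higher iterations.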

Appendix I.3. Temporal Information Encoding

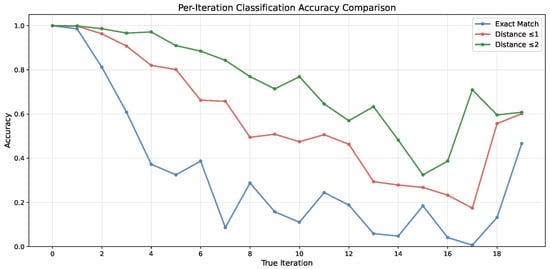

We investigated whether constraint embeddings encode iteration number by training an MLP to predict the current iteration from constraint embeddings. Results using 20 iterations are shown in Figure A17.

Figure A17.

Accuracy of predicting iteration number from constraint embeddings. Exact match accuracy (blue), and when allowing distance 1 (orange) and distance 2 (green) tolerance. The model accurately predicts early iterations (≤4) but becomes less reliable for higher iteration counts.

The classifier achieves high accuracy for early iterations (≤4) but becomes less reliable for higher iteration counts. When allowing tolerance (predicting within 1–2 iterations of the true value), accuracy clearly improves. This indicates that constraint embeddings do encode temporal information, though with decreasing precision for later iterations.
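The tolerance-based accuracies can be illustrated with a short sketch. The function name `tolerance_accuracy` and the toy predictions are assumptions made for illustration only.

```python
def tolerance_accuracy(true_iters, pred_iters, tolerance=0):
    """Fraction of predictions within `tolerance` iterations of the true value."""
    hits = sum(abs(t - p) <= tolerance for t, p in zip(true_iters, pred_iters))
    return hits / len(true_iters)


# Toy data (not experimental results): early iterations are predicted exactly,
# later ones only approximately.
true_iters = [1, 2, 3, 10, 15, 20]
pred_iters = [1, 2, 4, 12, 15, 17]

exact = tolerance_accuracy(true_iters, pred_iters)          # exact match
within_1 = tolerance_accuracy(true_iters, pred_iters, 1)    # distance 1 allowed
within_2 = tolerance_accuracy(true_iters, pred_iters, 2)    # distance 2 allowed
```

Setting `tolerance=0` recovers exact-match accuracy, so the three curves in Figure A17 correspond to tolerances 0, 1, and 2.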

When trained on higher maximum iteration counts, the predictor defaults to the final iteration class for later iterations, suggesting it learns a coarse ‘early’ vs ‘late’ distinction rather than precise temporal positioning. This may be due to the original GNN not being exposed to longer sequences during training.

These findings demonstrate that constraint embeddings encode rich information about constraint types, geometric relationships, satisfaction status, and temporal progression, providing more insight into the model’s internal reasoning process.

Appendix J. Evolution of Constraints Under UMAP Projection