A Comprehensive Summary of the Application of Machine Learning Techniques for CO2-Enhanced Oil Recovery Projects

Abstract

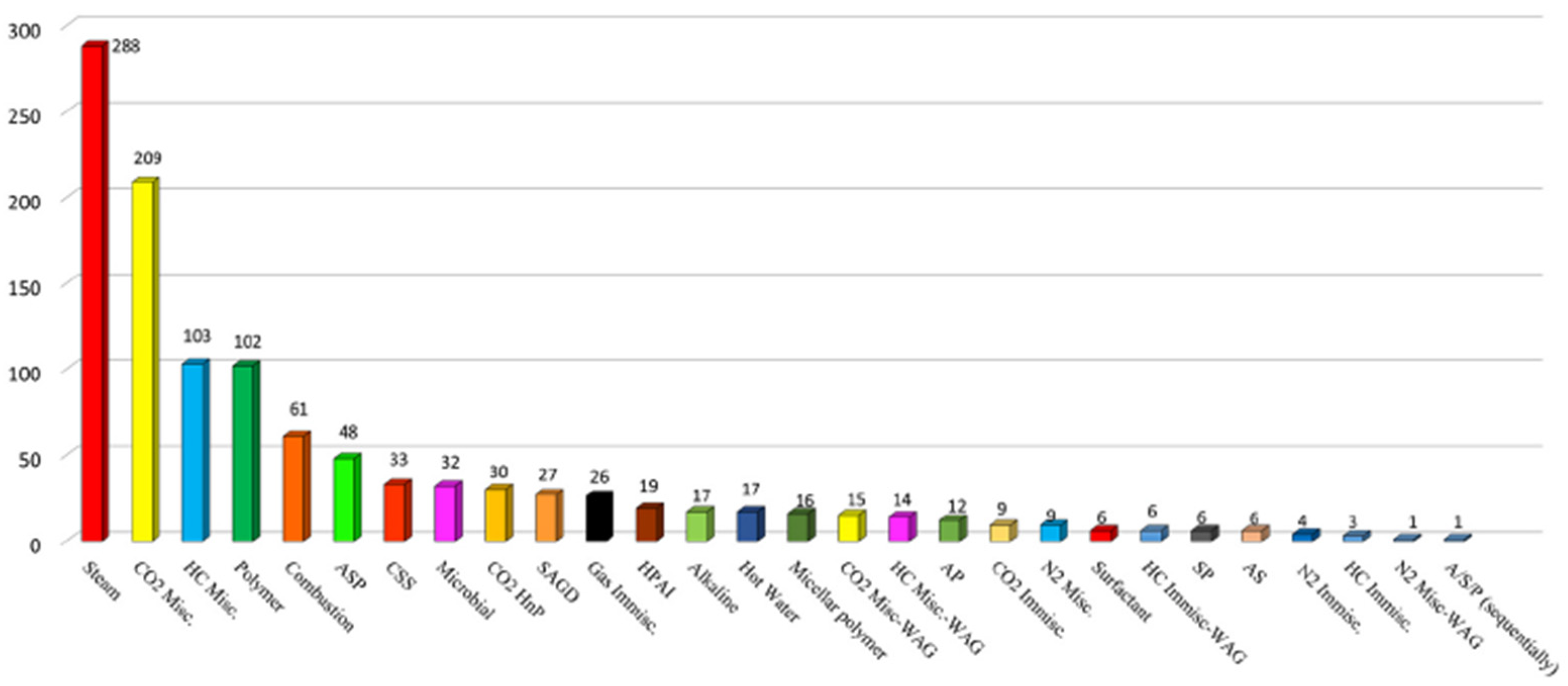

1. Introduction

2. Mechanisms and Processes of CO2-EOR

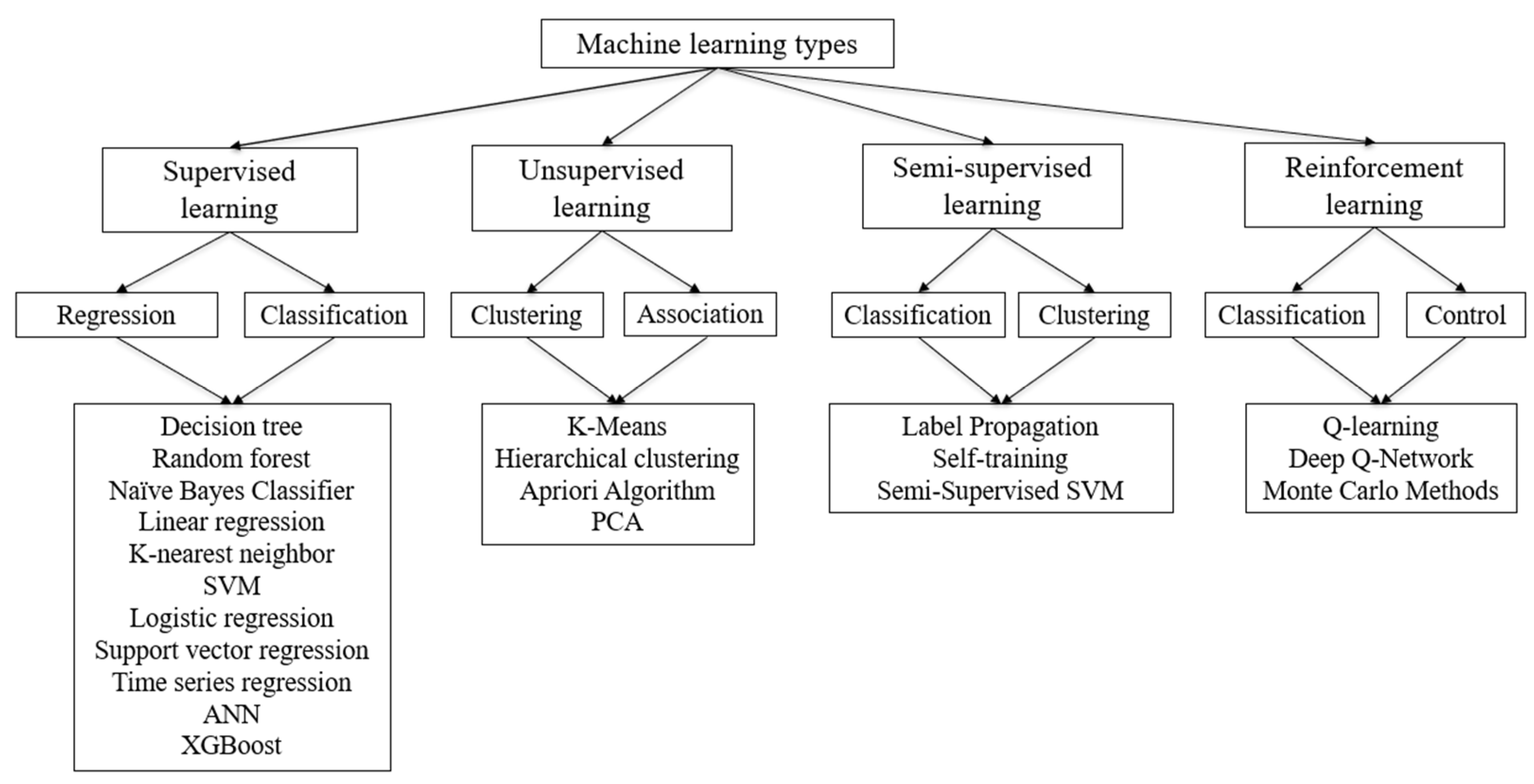

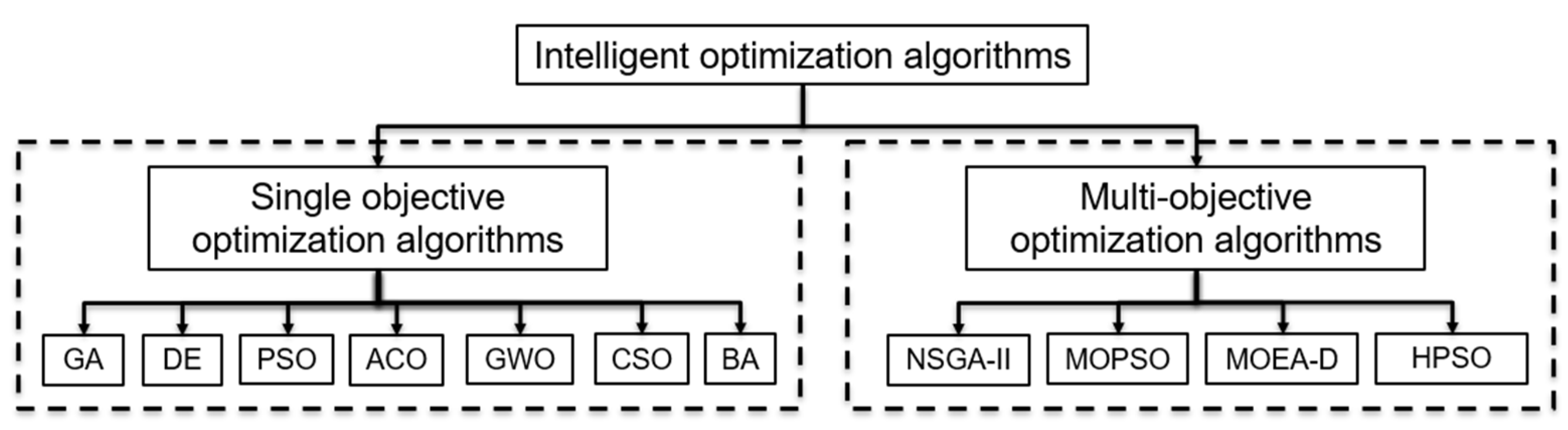

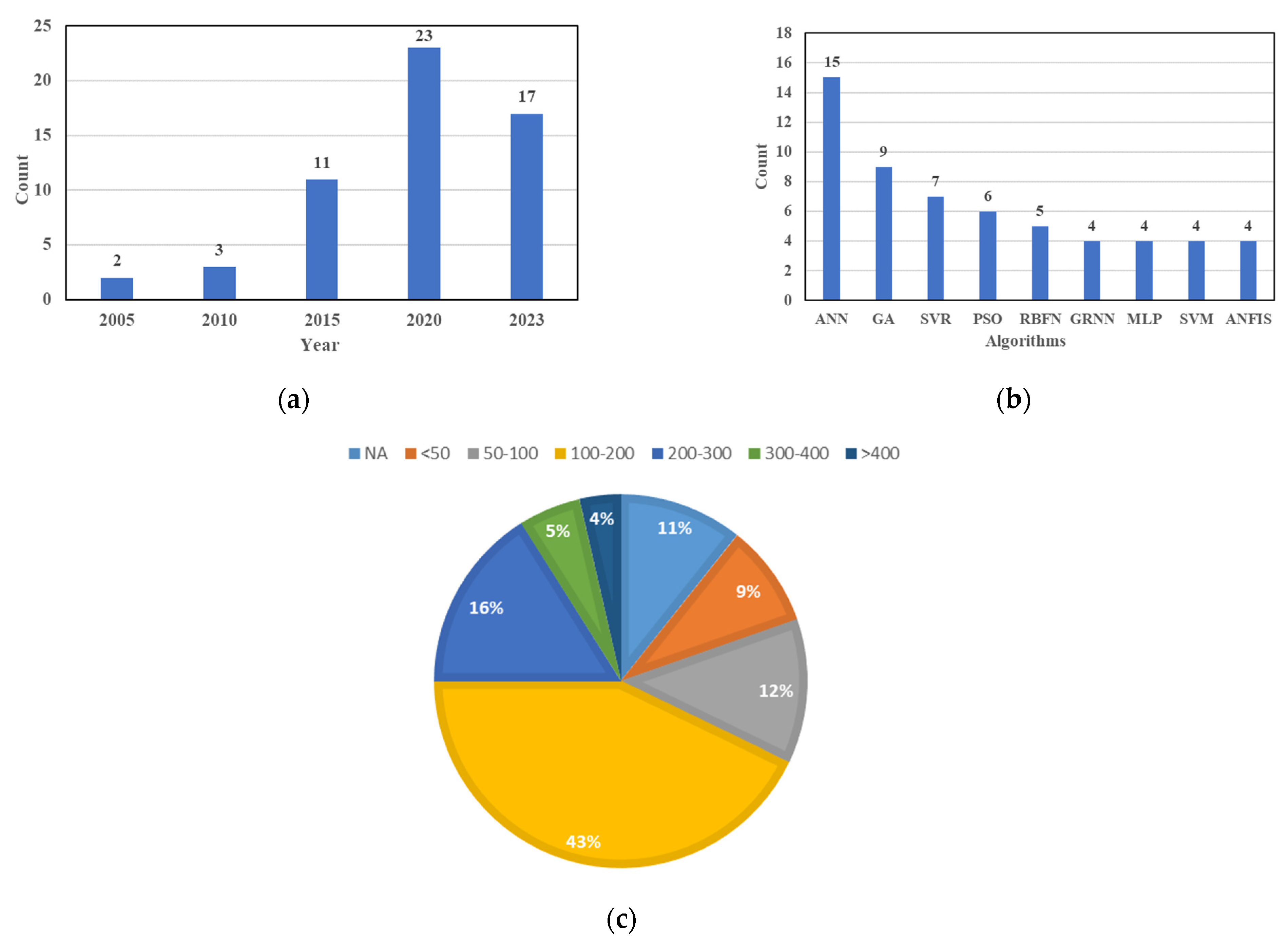

3. Summary of Machine Learning Approaches

4. Application of ML in CO2-EOR

4.1. Minimum Miscibility Pressure (MMP)


| Authors | Methods | Data points | Splitting | Inputs | Results | Evaluation | Limitations | Rating * |
|---|---|---|---|---|---|---|---|---|
| Huang et al. [25] | ANN | N/A | N/A | Pure CO2 (TR, xvol, MWC5+, xint), impure CO2 (yH2S, yN2, yCH4, ySO2, Fimp) | ANN can predict MMP. | First application of ANN; ANN outperforms other statistical models. | Pure and impure CO2 must be treated separately. | 7 |
| Emera and Sarma [26] | GA | N/A | N/A | TR, MWC5+, xvol/(yC1 + yH2S + yCO2 + yN2 + yC2-C4) | GA gives the best predictions of MMP and impurity factors. | First application of GA. Limited input parameters (only 3 variables). | Pure CO2 only; MWC7+ only up to 268. | 7 |
| Dehghani et al. [29] | GA | 55 | 80% train + 20% test | TR, TC, MWC5+, xvol/xint | GA outperforms conventional methods. | Can predict MMP for both pure and impure CO2, but input parameters and data points are limited. | Limited input parameters and data points. | 6 |
| Shokir [22] | ACE | 45 | 50% train + 50% test | TR, MWC5+, yCO2, yH2S, yN2, yC1, yC2-C4, xC1+N2, xint | Predicts MMP relatively accurately for pure and impure CO2. | Can predict MMP for pure and impure CO2, but data points are very limited and the model may overfit. | Valid only for C1, N2, H2S, and C2–C4 contents in the injected CO2 stream. | 6 |
| Dehghani et al. [30] | ANN-GA | 46 | N/A | TR, MWC5+, yCO2, yH2S, yN2, yC1, yC2-C4, xC1+N2, xint | ANN-GA outperforms Shokir [22] and Emera and Sarma [26]. | Can be applied to both CO2 and natural gas streams. | Limited data points; only the ANN architecture was tested. | 6 |
| Nezhad et al. [31] | ANN | 179 | N/A | TR, xvol, MWC5+, yCO2, yvolatile, yintermediate | ANN accuracy is acceptable. | Adequate number of data points, but explanations lack detail. | Possible local minima or overfitting. | 8 |
| Shokrollahi et al. [32] | LSSVM | 147 | 80% train + 10% test + 10% validate | TR, xvol, MWC5+, yCO2, yC1, yH2S, yN2, yC2-C5 | First application of LSSVM. | Can be used for both pure and impure CO2; outlier analysis was also applied. | Valid only for impurity contents of C1, N2, H2S, and C2–C5. | 8 |
| Tatar et al. [33] | RBFN | 147 | 80% train + 20% test | TR, MWC5+, yCO2, yH2S, yN2, yC1, yC2-C5, (xC1 + xN2)/(xC2-C4 + xH2S + xCO2) | Outperforms Emera and Sarma's model. | Compared with almost all available empirical correlations. | Limited data points. | 8 |
| Zendehboudi et al. [34] | ANN-PSO | 350 | 71% train + 29% test | TR, xvol, MWC5+, yCO2, yC1, yH2S, yN2, yC2-C4 | ANN-PSO performs best. | Although the dataset is large, the model is only suitable for fixed input parameters. | Valid only for specific conditions. | 8 |
| Chen et al. [35] | ANN | 83 | 70% train + 30% test | TR, MWC5+, xvol, xint, yCO2, yH2S, yC1, yN2 | ANN gives the lowest errors. | May overfit. | Small dataset. | 7 |
| Asoodeh et al. [36] | CM (NN-SVR) | 55 | N/A | TR, MWC5+, xvol/xint, yC2-C4, yCO2, yH2S, yC1, yN2 | CM outperforms NN and SVR. | Limited data points; may overfit. | Small dataset. | 6 |
| Rezaei et al. [37] | GP | 43 | N/A | TR, MWC5+, xvol/xint | GP gives the best estimates. | Limited data points; may overfit. | Small dataset; considers pure CO2 only. | 6 |
| Chen et al. [38] | GA-BPNN | 85 | 75% train + 25% test | TR, MWC7+, xvol, xC5-C6, yCO2, yH2S, yN2, yC1, yC2-C4, xint | Handles both pure and impure CO2 and outperforms other correlations. | Applicable to both pure and impure CO2 but may overfit. | Limited data points; GA is time-consuming. | 7 |
| Ahmadi and Ebadi [39] | FL | 59 | 93% train + 7% test | TR, MWC5+, xvol/xint, TC | The curve-shaped membership function gives the lowest error. | Limited data points and a high risk of overfitting. | Only four experimental results were used for testing. | 6 |
| Sayyad et al. [40] | ANN-PSO | 38 | N/A | TR, xvol, MWC5+, yCO2, yH2S, yC1, yN2, yC2-C5 | Outperforms the models of Emera and Sarma and of Shokir. | Valid only for fixed inputs. | Limited data points. | 6 |
| Zargar et al. [41] | GRNN | N/A | N/A | TR, MWC5+, xvol/xint, yC2-C4, yCO2, yH2S, yC1, yN2 | GRNN is an efficient computational structure; GA reduces the number of GRNN runs. | Compared with most known correlations, but the data source is unknown. | More information is needed on how the data were treated. | 6 |
| Bian et al. [42] | SVR-GA | 150 | 67% train + 23% test and 83% train + 17% test | TR, MWC5+, xvol, yCO2, yH2S, yC1, yN2 | Outperforms other empirical correlations. | Applicable to pure and impure CO2, with low AARD. | Pure and impure CO2 are modeled separately. | 9 |
| Hemmati-Sarapardeh et al. [43] | MLP | 147 | 70% train + 15% test + 15% validate | TR, TC, MWC5+, xvol/xint | Can predict MMP for both pure and impure CO2. | Simple and reliable. | Treatment of inputs may be too simple. | 8 |
| Zhong and Carr [44] | MKF-SVM | 147 | 90% train + 10% test | TR, TC, MWC5+, xvol/xint | The mixed kernel gives better performance. | Treatment of inputs may be too simple. | Does not consider the effect of N2 and H2S. | 8 |
| Fathinasab and Ayatollahi [45] | GP | 270 | 80% train + 20% test | TR, Tcm, MWC5+, xvol/xint | GP gives the best predictions. | Relatively large dataset, but the inputs may be oversimplified. | AARE is somewhat high (11.76%). | 7 |
| Alomair and Garrouch [46] | GRNN | 113 | 80% train + 20% test | TR, MWC5+, MWC7+, xC1, xC2, xC3, xC4, xC5, xC6, xC7+, xCO2, xH2S, xN2 | GRNN outperforms five empirical correlations. | Too many inputs, and no comparison between GRNN and other ML methods. | Does not consider CO2 purity. | 7 |
| Karkevandi-Talkhooncheh et al. [47] | ANFIS | 270 | 80% train + 20% test | TR, TC, MWC5+, xvol, xint | ANFIS-PSO is the best of the five optimization methods. | Very comprehensive comparison with available models and different optimization methods. | Further applicability testing may be needed. | 9 |
| Ahmadi et al. [48] | GEP | N/A | N/A | TR, Tcm, MWC5+, xvol/xint | GEP outperforms traditional correlations. | Dataset details are not reported. | Further validation may be needed. | 6 |
| Karkevandi-Talkhooncheh et al. [49] | RBF-GA/PSO/ICA/ACO/DE | 270 | 80% train + 20% test | TR, MWC5+, xvol, xC2-C4, yCO2, yH2S, yC1, yN2 | ICA-RBF performs best. | Comparatively large dataset; five optimization algorithms were applied. | Further applicability testing may be needed. | 9 |
| Tarybakhsh et al. [50] | SVR-GA, MLP, RBF, GRNN | 135 | 92.5% train + 7.5% test | TR, MWC2-C6 (oil), MWC7+, SGC7+, MWC2-C6 (gas), yCO2, yH2S, yC1, yN2 | SVR-GA performs best. | The large number of input parameters creates a high risk of overfitting. | An R² as high as 0.999 is too perfect to be reliable. | 6 |
| Dong et al. [51] | ANN | 122 | 82% train + 18% test | H2S, CO2, N2, C1, C2, …, C36+ | ANN can be used to predict MMP. | Too many inputs; no selection of dominant inputs. | Input variables were chosen based on data availability. | 7 |
| Hamdi and Chenxi [52] | ANFIS | 48 | 73% train + 27% test | TR, MWC5+, xvol, xint | The Gaussian MF is the best of the five MFs; ANFIS outperforms ANN. | Five MFs were tested, but data points are limited. | Limited data points; does not consider the presence of CO2. | 6 |
| Khan et al. [53] | ANN, FN, SVM | 51 | 70% train + 30% test | TR, MWC7+, xC1, xC2-C6, MWC2+, xC2 | ANN performs best. | Compares three methods, but the input parameters overlap. | Limited data points; does not consider the presence of CO2. | 6 |
| Choubineh et al. [54] | ANN | 251 | 75% train + 10% test + 15% validate | TR, MWC5+, xvol/xint, SG | ANN outperforms empirical correlations. | Relatively large dataset; uses gas SG instead of gas composition. | Further applicability testing may be needed. | 8 |
| Li et al. [55] | NNA, GFA, MLR, PLS | 136 | N/A | TR, TC, MWC5+, xvol/xint, yC2-C5, yCO2, yH2S, yC1, yN2 | ANN performs best among both empirical correlations and the other algorithms. | The data-splitting procedure is unclear. | Further applicability testing may be needed. | 8 |
| Hassan et al. [56] | ANN, RBF, GRNN, FL | 100 | 70% train + 30% test | TR, MWC7+, xC2-C6 | RBF gives the highest accuracy. | Using only three input parameters may oversimplify the model. | Does not consider CO2 purity; limited dataset. | 7 |
| Sinha et al. [57] | Linear SVM/KNN/RF/ANN | N/A | 67% train + 33% test | TR, MWC7+, MWOil, xC1, xC2, xC3, xC4, xC5, xC6, xC7+, xCO2, xH2S, xN2 | A modified correlation with linear SVR and a hybrid method with RF perform best. | Requires only oil composition and TR; does not consider CO2 purity. | MMP range 1000–4900 psi. | 7 |
| Nait Amar and Zeraibi [9] | SVR-ABC | 201 | 87% train + 13% test | TR, TC, MWC5+, xvol/xint, xC2-C4 | SVR-ABC outperforms SVR-TE. | The choice of inputs is limited. | Limited comparison. | 8 |
| Dargahi-Zarandi et al. [58] | AdaBoost SVR, GMDH, MLP | 270 | 67% train + 33% test | TR, TC, MWC5+, xvol, xC2-C4, yCO2, yH2S, yC1, yN2 | AdaBoost SVR performs best. | A 3-D plot is provided for better visualization. | Further applicability testing was limited. | 9 |
| Tian et al. [59] | BP-NN (GA, MEA, PSO, ABC, DA) | 152 | 80% train + 20% test | TR, MWC5+, xC1, xC2, xC3, xC4, xC5, xC6, xC7+, yCO2, yH2S, yN2 | DA-BP gives the highest accuracy. | Compared with empirical correlations and GA-SVR. | The large number of input parameters may cause overfitting. | 8 |
| Ekechukwu et al. [60] | GPR | 137 | 90% train + 10% test | TR, TC, MWC5+, xvol/xint | GPR is more accurate than the other models. | Very comprehensive comparison; a larger dataset would be better. | Further experimental validation may be needed. | 8 |
| Saeedi Dehaghani and Soleimani [61] | SGB, ANN, ANN-PSO, ANN-TLBO | 144 | 75% train + 25% test | TR, MWC5+, xvol, xint, yCO2, yC1, yint, yN2 | PSO and TLBO improve the accuracy of the ANN model; SGB outperforms ANN. | First application of SGB; comparison with other optimization methods would strengthen the work. | Further experimental validation may be needed. | 8 |
| Dong et al. [62] | FCNN | 122 | 82% train + 18% test | xCO2, xH2S, xN2, xC1, xC2, xC3, xC4, xC5, xC6, …, xC36+ | L2 regularization and dropout help reduce overfitting. | Alleviates overfitting, but the dataset is small. | Small dataset. | 7 |
| Chen et al. [63] | SVM | 147 | 80% train + 20% test | TR, MWC7+, xvol, xC2-C4, xC5-C6, yCO2, yHC, yC1, yN2 | The POLY kernel is more accurate; MWC7+ and xC5-C6 should not be considered. | Very complete and comprehensive, including optimization and evaluation. | Would be more persuasive with a larger dataset. | 9 |
| Ghiasi et al. [64] | ANFIS, AdaBoost-CART | N/A | 90% train + 10% test | TR, TC, MWC5+, xvol/xint, yCO2, yH2S, yC1-C5, yN2 | The novel AdaBoost-CART model is the most reliable. | The dataset size is unknown; first use of AdaBoost. | May overfit, and validation is weak. | 7 |
| Chemmakh et al. [65] | ANN, SVR-GA | 147 (pure CO2), 200 (impure CO2) | N/A | TR, TC, MWC5+, xvol/xint | ANN and SVR-GA are both reliable. | The novelty of the work is unclear. | Compared only with empirical correlations. | 7 |
| Pham et al. [66] | FCNN | 250 | 80% train + 20% test | TR, xvol/xint, MW, yC1, yC2+, yCO2, yH2S, yN2 | Multiple FCNNs combined with early stopping and k-fold cross-validation predict MMP accurately. | Applies deep learning (multiple FCNNs) to predict MMP, but comparisons and validation are limited. | Compared only with decision tree and random forest. | 7 |
| Haider et al. [67] | ANN | 201 | 70% train + 30% test | TR, MWC7+, xCO2, xC1, xC2, xC3, xC4, xC5, xC6, xC7, yCO2, yH2S, yC1, yN2 | An empirical correlation is developed from the ANN. | Too many inputs and a high risk of overfitting. | Needs further validation with other reservoir fluids and injected gases. | 7 |
| Huang et al. [68] | CGAN-BOA | 180 | 60% train + 20% test + 20% validate | TR, MWC7+, xCO2, xC1, xC2, xC3, xC4, xC5, xC6, xC7+, xN2, yCO2, yH2S, yN2, yC1, yC2, yC3, yC4, yC5, yC6, yC7+ | CGAN-BOA and ANN outperform SVR-RBF and SVR-POLY. | Shows that deep learning has potential for predicting MMP. | May overfit given 21 input parameters. | 8 |
| He et al. [69] | GBDT-PSO | 195 | 85% train + 15% test | TR, xCO2, xC1, xC2, xC3, xC4, xC5, xC6, xC7+, xN2 | GBDT outperforms LR, RR, RF, and MLP. | GBDT was improved with PSO, but the comparison is not comprehensive. | Only GBDT was optimized; the other algorithms could also be tuned and compared. | 7 |
| Hou et al. [70] | GPR-PSO | 365 | 80% train + 20% test | TR, TC, MWC5+, xvol/xint, yCO2, yH2S, yC1, yC2-C5, yN2 | GPR-PSO gives the highest accuracy. | Comprehensive comparison and large dataset. | Validated only with literature data. | 9 |
| Rayhani et al. [71] | SFS, SBS, SFFS, SBFS, LR, RFFI | 812 | 80% train + 20% test | TR, TC, MWC7+, MWgas, xC5, xC6, xC2-C6 | SBFS gives the highest accuracy. | Large dataset; comprehensive data selection and model comparison. | Further applicability to field data or commercial simulation was limited. | 9 |
| Shakeel et al. [72] | ANN, ANFIS | 105 | 70% train + 30% test | TR, MWC7+, xvol, xC2-C4, xC5-C6, yCO2, yH2S, yC1, yHC, yN2 | ANN outperforms ANFIS; the trainlm training function performs best. | Good accuracy, but no uncertainty analysis. | Limited dataset; only two ML algorithms were tested. | 7 |
| Shen et al. [73] | XGBoost, TabNet, KXGB, KTabNet | 421 | 80% train + 20% test | TR, MWC5+, xvol/xint, yCO2, yH2S, yC1, yC2-C5, yHC, yN2 | KXGB performs best; KTabNet can serve as an alternative. | Large dataset; comprehensive model comparison; new insights into deep learning. | Feature coverage needs to be more comprehensive. | 9 |
| Lv et al. [24] | XGBoost, CatBoost, LGBM, RF, deep MLN, DBN, CNN | 310 | 80% train + 20% test | TR, TC, MWC5+, xvol/xint | CatBoost outperforms the other AI techniques. | Comprehensive model comparison and evaluation; new insights into deep learning. | Accuracy depends on the databank; a larger dataset would be more robust. | 9 |
| Hamadi et al. [74] | MLP-Adam, SVR-RBF, XGBoost | 193 | 84% train + 16% test | TR, TC, MWC5+, xvol/xint | XGBoost gives the best predictions for both pure and impure CO2. | Comparison is not comprehensive; limited dataset. | Limited dataset; only a few ML algorithms were tested. | 7 |
| Huang et al. [27] | 1D-CNN, SHAP | 193 | N/A | TR, MWC7+, xCO2, xC1, xC2, xC3, xC4, xC5, xC6, xC7+, xN2, yCO2, yH2S, yN2, yC1, yC2, yC3, yC4, yC5, yC6, yC7+ | MMPs from the slim-tube and rising-bubble methods differ; 1D-CNN performs best. | Novel application of SHAP, but comparison with other ML models is limited. | Further applicability to field data or commercial simulation was limited. | 8 |
| Al-Khafaji et al. [28] | MLR, SVR, DT, RF, KNN | 147 (type 1), 197 (type 2), 28 (type 3) | 80% train + 20% test | Type 1: TR, MWC5+, xvol/xint; Type 2: MWC7+, xvol, xint, xC5-C6, xC7+, yCO2, yH2S, yN2, yC1, yC2-C6, yC7+; Type 3: TR, MWC6+, xvol, xint, xC6+, API, sp.gr, Pb | KNN has the highest accuracy and the lowest complexity. | Covers a broad range of data, including both experimental and field data; thorough comparisons. | Pure CO2 only. | 9 |
| Sinha et al. [75] | LightGBM | 205 | 80% train + 20% test | TR, MWC7+, MWOil, xC1, xC2, xC3, xC4, xC5, xC6, xC7+, xCO2, xN2, xH2S | A LightGBM model with an expanded range of applicability is developed. | Compared with empirical and EOS-based correlations; first use of LightGBM for MMP prediction. | Further applicability to field data or commercial simulation was limited. | 8 |
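Most studies in the table above follow the same workflow: split the data bank into training and test subsets (for example, 80% train + 20% test), fit a model on the training subset, and report test errors such as AARD, AARE, MSE, or R². The following minimal Python sketch illustrates that workflow with a k-nearest-neighbour (KNN) regressor, the method Al-Khafaji et al. [28] found to combine high accuracy with low complexity. It is not any author's implementation, and several choices are assumptions: the two-feature rows (labelled TR and MWC5+) and the linear trend used to generate them are synthetic placeholders rather than experimental MMP data, features are min-max scaled with training-set ranges, and k = 3 is arbitrary. AARE is often computed with the same formula as AARD, although individual papers may define the metrics slightly differently.

```python
import math
import random
from typing import List, Sequence, Tuple

# (feature vector, measured target); e.g. ([TR, MWC5+], MMP) in this illustration
Row = Tuple[List[float], float]


def aard_percent(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """AARD (%) = 100/n * sum(|predicted - measured| / measured)."""
    return 100.0 * sum(abs(p - t) / abs(t) for t, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean squared error."""
    return sum((p - t) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r_squared(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    mean_t = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def train_test_split(rows: Sequence[Row], test_fraction: float = 0.2, seed: int = 0):
    """Shuffle a copy of the rows and hold out test_fraction of them for testing."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(test_fraction * len(shuffled)))
    return shuffled[n_test:], shuffled[:n_test]


def knn_predict(train: Sequence[Row], x: Sequence[float], k: int = 3) -> float:
    """Average the targets of the k training rows closest to x (Euclidean distance
    on features min-max scaled with the training-set ranges)."""
    n_feat = len(x)
    lo = [min(r[0][j] for r in train) for j in range(n_feat)]
    hi = [max(r[0][j] for r in train) for j in range(n_feat)]

    def scale(v: Sequence[float]) -> List[float]:
        # A constant feature (zero range) is divided by 1.0 to avoid division by zero.
        return [(v[j] - lo[j]) / ((hi[j] - lo[j]) or 1.0) for j in range(n_feat)]

    xs = scale(x)
    nearest = sorted(train, key=lambda r: math.dist(scale(r[0]), xs))[:k]
    return sum(r[1] for r in nearest) / len(nearest)


if __name__ == "__main__":
    # Synthetic placeholder data: NOT experimental MMP measurements.
    rng = random.Random(42)
    rows: List[Row] = []
    for _ in range(60):
        tr, mw = rng.uniform(100, 250), rng.uniform(180, 300)
        rows.append(([tr, mw], 800 + 8 * tr + 4 * mw + rng.gauss(0, 50)))

    train, test = train_test_split(rows, test_fraction=0.2)
    y_true = [t for _, t in test]
    y_pred = [knn_predict(train, x, k=3) for x, _ in test]
    print(f"AARD = {aard_percent(y_true, y_pred):.2f} %")
    print(f"MSE  = {mse(y_true, y_pred):.1f}")
    print(f"R2   = {r_squared(y_true, y_pred):.3f}")
```

With only 12 test points out of 60 in this sketch, the reported AARD can shift noticeably with the random seed, which is one reason the small data banks flagged in the table make comparisons between papers difficult.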

4.2. Water-Alternating-Gas (WAG)

4.3. Well Placement Optimization (WPO)

4.4. Oil Production/Recovery Factor

4.5. Multi-Objective Optimization

4.6. PVT Properties

4.7. CO2-Foam Flooding

5. Benefits and Limitations of ML

6. Conclusions

- Most reported model performance indicators are constrained by the size of the data bank: 12% of the investigated papers used a database of fewer than 100 data points, which makes it difficult to assess model quality accurately over time or to track model drift as new data become available;

- Regarding validation and verification, the CO2-EOR field has many reliable and well-established techniques for verifying and validating ML models; nevertheless, this review highlights several issues with current ML models, including scalability, deficiencies in validation and verification, and a lack of published data on the cost of establishing ML models;

- Most CO2-EOR research has focused on MMP prediction and WAG design, with 56 of the 101 reviewed papers devoted to MMP prediction and 26 to WAG design; applications to recovery factor prediction, well placement optimization, and PVT properties remain limited;
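Where a data bank is small, a single hold-out split leaves very few test points (Ahmadi and Ebadi [39], for example, tested on only four experimental results), so the reported error depends heavily on which points were held out. k-fold cross-validation, which Pham et al. [66] combined with early stopping, rotates the test subset so that every point is tested exactly once and the spread of errors across folds can be reported. The sketch below is a minimal, standard-library-only illustration under stated assumptions: the single-input straight-line model is a hypothetical stand-in for any of the ML models reviewed in Section 4.1, the data are synthetic, and k = 5 is an arbitrary choice.

```python
import random
from statistics import mean, stdev
from typing import Callable, List, Sequence, Tuple

Row = Tuple[float, float]            # (single input, measured target); hypothetical layout
Predictor = Callable[[float], float]


def k_fold_indices(n: int, k: int, seed: int = 0) -> List[List[int]]:
    """Shuffle 0..n-1 and deal the indices into k folds whose sizes differ by at most one."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def fit_line(train: Sequence[Row]) -> Predictor:
    """Least-squares straight line y = a + b*x, a stand-in for any ML model."""
    n = len(train)
    mx = sum(x for x, _ in train) / n
    my = sum(y for _, y in train) / n
    sxx = sum((x - mx) ** 2 for x, _ in train)
    b = sum((x - mx) * (y - my) for x, y in train) / sxx if sxx else 0.0
    a = my - b * mx
    return lambda x: a + b * x


def aard_percent(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """AARD (%) = 100/n * sum(|predicted - measured| / measured)."""
    return 100.0 * sum(abs(p - t) / abs(t) for t, p in zip(y_true, y_pred)) / len(y_true)


def cross_validate(rows: Sequence[Row], fit: Callable[[Sequence[Row]], Predictor],
                   k: int = 5, seed: int = 0) -> List[float]:
    """Return the test AARD (%) of each fold; every row is held out exactly once."""
    scores = []
    for fold in k_fold_indices(len(rows), k, seed):
        held_out = set(fold)
        train = [r for i, r in enumerate(rows) if i not in held_out]
        test = [rows[i] for i in fold]
        model = fit(train)  # refit from scratch on the remaining k-1 folds
        scores.append(aard_percent([y for _, y in test], [model(x) for x, _ in test]))
    return scores


if __name__ == "__main__":
    rng = random.Random(7)
    # Synthetic placeholder data (NOT measured MMP values): 40 noisy points around a line.
    xs = [rng.uniform(100.0, 250.0) for _ in range(40)]
    rows = [(x, 1200.0 + 15.0 * x + rng.gauss(0.0, 80.0)) for x in xs]
    scores = cross_validate(rows, fit_line, k=5)
    print("AARD per fold (%):", [round(s, 2) for s in scores])
    print(f"mean = {mean(scores):.2f} %, std = {stdev(scores):.2f} %")
```

Reporting the per-fold spread alongside the mean shows how strongly the error estimate depends on the particular split, information that a single 80/20 or 93/7 split cannot provide.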

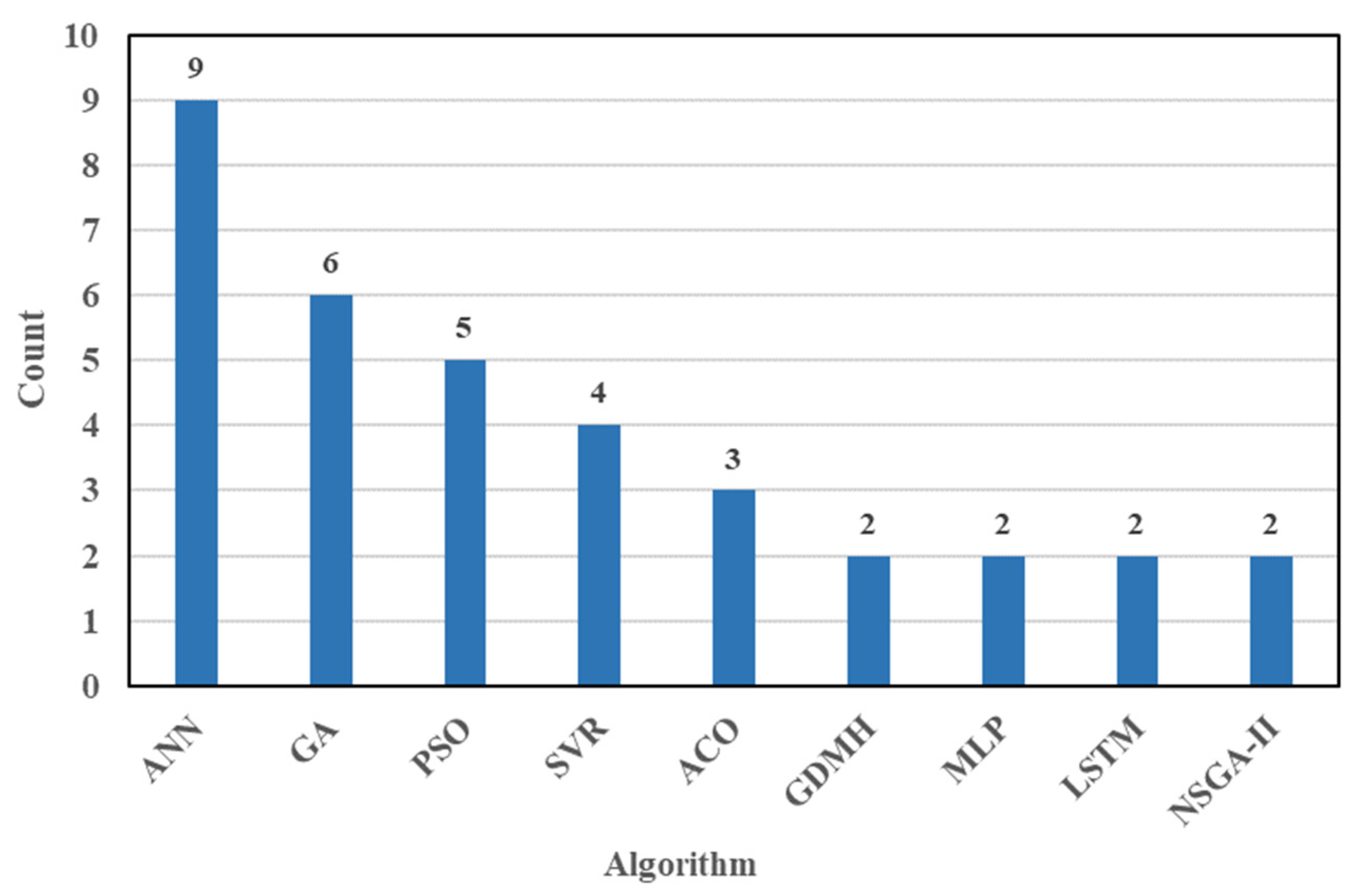

- ANN is the most widely employed ML algorithm, and GA is the most popular optimization algorithm, across the 101 reviewed papers. ANN has proven flexible enough to build intelligent proxies; although oil and gas data are frequently noisy, incomplete, heterogeneous, and nonlinear, ANNs handle such diverse data well and adapt readily to varying data distributions;

- ML algorithms can greatly reduce the computational cost and time of compositional simulation runs; however, ML applications to well placement and multi-objective optimization in CO2-EOR remain very limited because of the complexity of these problems. Furthermore, the reliability of coupled ML-metaheuristic paradigms built on reservoir simulation results needs further investigation;

- The application of ML in the oil and gas industry still requires further exploration and development. Future work could integrate knowledge from multiple disciplines, such as geology, reservoir engineering, and petrophysics, into ML models to enhance accuracy and interpretability; another focus area could be hybrid models that combine ML techniques with physics-based methods to provide robust and reliable support;

- In summary, this study provides a comprehensive overview of the application of ML and optimization techniques in CO2-EOR projects and contributes to the advancement of knowledge in the field by synthesizing the latest research. These methods have demonstrated their ability to improve the efficiency, production forecasting, and economic viability of CO2-EOR operations, and the insights gained from this study provide guidance for the future direction of ML applications in CO2-EOR research and development (R&D) and deployment.
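A coupled ML-metaheuristic workflow of the kind discussed above typically replaces expensive compositional simulation runs with a trained proxy inside the optimizer loop. The sketch below shows that loop with a minimal real-coded genetic algorithm (binary tournament selection, blend crossover, Gaussian mutation, and elitism). Everything problem-specific is hypothetical: `hypothetical_proxy` is a made-up smooth surface standing in for a trained ML proxy of an objective such as NPV, its two decision variables (a scaled CO2 injection rate and a scaled WAG ratio, both in [0, 1]) are illustrative, and the GA settings are arbitrary. In practice, the optimum returned by the proxy should be checked against the full simulator, which is the reliability question raised above.

```python
import random
from typing import Callable, List, Sequence, Tuple

Bounds = Sequence[Tuple[float, float]]


def hypothetical_proxy(x: Sequence[float]) -> float:
    """Stand-in for a trained ML proxy of, e.g., NPV or recovery factor.
    A made-up smooth surface with its maximum at (0.6, 0.3); NOT a real reservoir model."""
    rate, wag_ratio = x
    return 1.0 - (rate - 0.6) ** 2 - 2.0 * (wag_ratio - 0.3) ** 2


def genetic_algorithm(objective: Callable[[Sequence[float]], float], bounds: Bounds,
                      pop_size: int = 30, generations: int = 60,
                      mutation_sd: float = 0.05, seed: int = 0) -> Tuple[List[float], float]:
    """Maximize objective with a minimal real-coded GA; returns (best_x, best_value)."""
    rng = random.Random(seed)

    def clip(x: List[float]) -> List[float]:
        return [min(max(v, lo), hi) for v, (lo, hi) in zip(x, bounds)]

    def tournament(scored):
        a, b = rng.sample(scored, 2)  # binary tournament: fitter of two random individuals
        return a[1] if a[0] >= b[0] else b[1]

    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    best_x, best_f = None, float("-inf")
    for _ in range(generations):
        scored = [(objective(ind), ind) for ind in pop]  # each call = one proxy evaluation
        gen_best = max(scored, key=lambda s: s[0])
        if gen_best[0] > best_f:
            best_f, best_x = gen_best[0], list(gen_best[1])
        children = [list(best_x)]  # elitism: carry the best individual over unchanged
        while len(children) < pop_size:
            p1, p2 = tournament(scored), tournament(scored)
            w = rng.random()  # blend (arithmetic) crossover weight
            child = [w * a + (1 - w) * b for a, b in zip(p1, p2)]
            child = [v + rng.gauss(0.0, mutation_sd * (hi - lo))
                     for v, (lo, hi) in zip(child, bounds)]
            children.append(clip(child))
        pop = children
    return best_x, best_f


if __name__ == "__main__":
    x, f = genetic_algorithm(hypothetical_proxy, bounds=[(0.0, 1.0), (0.0, 1.0)])
    print(f"best decision variables = {[round(v, 3) for v in x]}, proxy value = {f:.4f}")
```

Elitism guarantees that the best proxy value never decreases between generations; it does not guarantee that the proxy's optimum matches the simulator's.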

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Nomenclature

| Abbreviation | Definition |
|---|---|
| AARD | Average absolute relative deviation |
| AARE | Average absolute relative error |
| ABC | Artificial bee colony |
| ACO | Ant colony optimization |
| ACE | Alternating conditional expectation |
| AR | Auto-regressive |
| ANN | Artificial neural network |
| ANFIS | Adaptive neuro-fuzzy inference system |
| BA | Bee algorithm |
| BOA | Bayesian optimization algorithm |
| BPNN | Backpropagation neural network |
| BR | Bayesian regularization |
| CatBoost | Categorical boosting |
| CCD | Central composite design |
| CFNN | Cascade forward neural network |
| CGAN | Conditional generative adversarial network |
| CM | Committee machine |
| CNN | Convolutional neural network |
| COA | Cuckoo optimization algorithm |
| CSO | Cuckoo search optimization |
| DA | Dragonfly algorithm |
| DBN | Deep belief network |
| DE | Differential evolution |
| DNN | Dense neural network |
| ERT | Extremely randomized trees |
| FCNN | Fully connected neural network |
| FGIR | Field gas injection rate |
| FL | Fuzzy logic |
| FN | Functional network |
| GA | Genetic algorithm |
| GB | Gradient boosting |
| GBDT | Gradient boosting decision tree |
| GBM | Gradient boosting method |
| GEP | Gene expression programming |
| GFA | Genetic function approximation |
| GIR | Gas injection rate |
| GMDH | Group method of data handling |
| GP | Genetic programming |
| GPR | Gaussian process regression |
| GRNN | Generalized regression neural network |
| GSA | Gravitational search algorithm |
| GWO | Grey wolf optimization |
| He | Hurst exponent |
| HPSO | Hybrid particle swarm optimization |
| ICA | Imperialist competitive algorithm |
| KXGB | Knowledge-based XGB |
| LGBM | Light gradient boosting machine |
| LM | Levenberg–Marquardt |
| LR | Lasso regression |
| LSSVM | Least-squares support vector machine |
| LSTM | Long short-term memory |
| MADS | Mesh adaptive direct search |
| MARS | Multivariate adaptive regression splines |
| MASRD | Mean absolute symmetric relative deviation |
| MEA | Mind evolutionary algorithm |
| MF | Membership function |
| MKF | Mixed kernel function |
| MLP | Multi-layer perceptron |
| MLR | Multiple linear regression |
| MLNN | Multi-layer neural network |
| MOPSO | Multi-objective particle swarm optimization |
| MSE | Mean squared error |
| NNA | Neural network analysis |
| NPV | Net present value |
| NSGA-II | Non-dominated sorting genetic algorithm II |
| PLS | Partial least squares |
| POLY | Polynomial function |
| PSO | Particle swarm optimization |
| RBFN | Radial basis function network |
| RFFI | Random forest feature importance |
| RR | Ridge regression |
| RSM | Response surface models |
| SBFS | Sequential backward floating selection |
| SBS | Sequential backward selection |
| SCG | Scaled conjugate gradient |
| SFS | Sequential forward selection |
| SFFS | Sequential forward floating selection |
| SGB | Stochastic gradient boosting |
| SGR | Solution gas ratio |
| SHAP | Shapley additive explanations |
| SVR | Support vector regression |
| SVM | Support vector machine |
| TLBO | Teaching-learning-based optimization |
| TPVT | Total pore volume tested |
| WIR | Water injection rate |
| WHFP | Well head flow pressure |
| XGBoost | Extreme gradient boosting |
| λ*Dx | Effective correlation length |

References

- Green, D.W.; Willhite, P.G. Enhanced Oil Recovery; Henry, L., Ed.; Doherty Memorial Fund of AIME, Society of Petroleum Engineers: Richardson, TX, USA, 1998; Volume 6.

- Yang, G.; Jiang, R.; Li, X.; Jiang, Y. Evaluation of Polymer Flooding Performance Using Water-Polymer Interference Factor for an Offshore Oil Field in Bohai Gulf: A Case Study. In Proceedings of the SPE Improved Oil Recovery Conference, Tulsa, OK, USA, 14–18 April 2018; SPE: Tulsa, OK, USA, 2018.

- Yongmao, H.; Zenggui, W.; Binshan, J.; Yueming, C.; Xiangjie, L.; Petro, X. Laboratory Investigation of CO2 Flooding. In Proceedings of the Nigeria Annual International Conference and Exhibition, Abuja, Nigeria, 2 August 2004; SPE: Abuja, Nigeria, 2004.

- Cheraghi, Y.; Kord, S.; Mashayekhizadeh, V. Application of Machine Learning Techniques for Selecting the Most Suitable Enhanced Oil Recovery Method; Challenges and Opportunities. J. Pet. Sci. Eng. 2021, 205, 108761.

- Gozalpour, F.; Ren, S.R.; Tohidi, B. CO2 EOR and Storage in Oil Reservoir. Oil Gas Sci. Technol. 2005, 60, 537–546.

- Hasan, M.M.F.; First, E.L.; Boukouvala, F.; Floudas, C.A. A Multi-Scale Framework for CO2 Capture, Utilization, and Sequestration: CCUS and CCU. Comput. Chem. Eng. 2015, 81, 2–21.

- Ghoraishy, S.M.; Liang, J.T.; Green, D.W.; Liang, H.C. Application of Bayesian Networks for Predicting the Performance of Gel-Treated Wells in the Arbuckle Formation, Kansas. In Proceedings of the SPE Symposium on Improved Oil Recovery, Tulsa, OK, USA, 19–23 April 2008; SPE: Tulsa, OK, USA, 2008.

- Liu, M.; Fu, X.; Meng, L.; Du, X.; Zhang, X.; Zhang, Y. Prediction of CO2 Storage Performance in Reservoirs Based on Optimized Neural Networks. Geoenergy Sci. Eng. 2023, 222, 211428.

- Nait Amar, M.; Zeraibi, N. Application of Hybrid Support Vector Regression Artificial Bee Colony for Prediction of MMP in CO2-EOR Process. Petroleum 2020, 6, 415–422.

- You, J.; Lee, K.J. Pore-Scale Numerical Investigations of the Impact of Mineral Dissolution and Transport on the Heterogeneity of Fracture Systems During CO2-Enriched Brine Injection. SPE J. 2022, 27, 1379–1395.

- Ng, C.S.W.; Nait Amar, M.; Jahanbani Ghahfarokhi, A.; Imsland, L.S. A Survey on the Application of Machine Learning and Metaheuristic Algorithms for Intelligent Proxy Modeling in Reservoir Simulation. Comput. Chem. Eng. 2023, 170, 108107.

- Kumar, N.; Augusto Sampaio, M.; Ojha, K.; Hoteit, H.; Mandal, A. Fundamental Aspects, Mechanisms and Emerging Possibilities of CO2 Miscible Flooding in Enhanced Oil Recovery: A Review. Fuel 2022, 330, 125633.

- Satter, A.; Thakur, G.C. Integrated Petroleum Reservoir Management: A Team Approach; PennWell Books: Tulsa, OK, USA, 1994.

- Yang, G.; Li, X. Modified Peng-Robinson Equation of State for CO2/Hydrocarbon Systems within Nanopores. J. Nat. Gas Sci. Eng. 2020, 84, 103700.

- Wang, L.; Yao, Y.; Luo, X.; Daniel Adenutsi, C.; Zhao, G.; Lai, F. A Critical Review on Intelligent Optimization Algorithms and Surrogate Models for Conventional and Unconventional Reservoir Production Optimization. Fuel 2023, 350, 128826.

- Holm, L.W.; Josendal, V.A. Mechanisms of Oil Displacement By Carbon Dioxide. J. Pet. Technol. 1974, 26, 1427–1438.

- Yellig, W.F.; Metcalfe, R.S. Determination and Prediction of CO2 Minimum Miscibility Pressures (Includes Associated Paper 8876). J. Pet. Technol. 1980, 32, 160–168.

- Christiansen, R.L.; Haines, H.K. Rapid Measurement of Minimum Miscibility Pressure with the Rising-Bubble Apparatus. SPE Reserv. Eng. 1987, 2, 523–527.

- Rao, D.N.; Lee, J.I. Application of the New Vanishing Interfacial Tension Technique to Evaluate Miscibility Conditions for the Terra Nova Offshore Project. J. Pet. Sci. Eng. 2002, 35, 247–262.

- Alston, R.B.; Kokolis, G.P.; James, C.F. CO2 Minimum Miscibility Pressure: A Correlation for Impure CO2 Streams and Live Oil Systems. Soc. Pet. Eng. J. 1985, 25, 268–274.

- Orr, F.M.; Jensen, C.M. Interpretation of Pressure-Composition Phase Diagrams for CO2/Crude-Oil Systems. Soc. Pet. Eng. J. 1984, 24, 485–497.

- Shokir, E.M.E.-M. CO2–Oil Minimum Miscibility Pressure Model for Impure and Pure CO2 Streams. J. Pet. Sci. Eng. 2007, 58, 173–185.

- Ahmadi, K.; Johns, R.T. Multiple-Mixing-Cell Method for MMP Calculations. SPE J. 2011, 16, 733–742.

- Lv, Q.; Zheng, R.; Guo, X.; Larestani, A.; Hadavimoghaddam, F.; Riazi, M.; Hemmati-Sarapardeh, A.; Wang, K.; Li, J. Modelling Minimum Miscibility Pressure of CO2-Crude Oil Systems Using Deep Learning, Tree-Based, and Thermodynamic Models: Application to CO2 Sequestration and Enhanced Oil Recovery. Sep. Purif. Technol. 2023, 310, 123086.

- Huang, Y.F.; Huang, G.H.; Dong, M.Z.; Feng, G.M. Development of an Artificial Neural Network Model for Predicting Minimum Miscibility Pressure in CO2 Flooding. J. Pet. Sci. Eng. 2003, 37, 83–95.

- Emera, M.K.; Sarma, H.K. Use of Genetic Algorithm to Estimate CO2–Oil Minimum Miscibility Pressure–A Key Parameter in Design of CO2 Miscible Flood. J. Pet. Sci. Eng. 2005, 46, 37–52.

- Huang, C.; Tian, L.; Wu, J.; Li, M.; Li, Z.; Li, J.; Wang, J.; Jiang, L.; Yang, D. Prediction of Minimum Miscibility Pressure (MMP) of the Crude Oil-CO2 Systems within a Unified and Consistent Machine Learning Framework. Fuel 2023, 337, 127194.

- Al-Khafaji, H.F.; Meng, Q.; Hussain, W.; Khudhair Mohammed, R.; Harash, F.; Alshareef AlFakey, S. Predicting Minimum Miscible Pressure in Pure CO2 Flooding Using Machine Learning: Method Comparison and Sensitivity Analysis. Fuel 2023, 354, 129263.

- Dehghani, S.A.M.; Vafaie Sefti, M.; Ameri, A.; Kaveh, N.S. A Hybrid Neural-Genetic Algorithm for Predicting Pure and Impure CO2 Minimum Miscibility Pressure. Iran. J. Chem. Eng. 2006, 3, 44–59.

- Dehghani, S.A.M.; Sefti, M.V.; Ameri, A.; Kaveh, N.S. Minimum Miscibility Pressure Prediction Based on a Hybrid Neural Genetic Algorithm. Chem. Eng. Res. Des. 2008, 86, 173–185.

- Nezhad, A.B.; Mousavi, S.M.; Aghahoseini, S. Development of an Artificial Neural Network Model to Predict CO2 Minimum Miscibility Pressure. Nafta 2011, 62, 105–108.

- Shokrollahi, A.; Arabloo, M.; Gharagheizi, F.; Mohammadi, A.H. Intelligent Model for Prediction of CO2–Reservoir Oil Minimum Miscibility Pressure. Fuel 2013, 112, 375–384.

- Tatar, A.; Shokrollahi, A.; Mesbah, M.; Rashid, S.; Arabloo, M.; Bahadori, A. Implementing Radial Basis Function Networks for Modeling CO2-Reservoir Oil Minimum Miscibility Pressure. J. Nat. Gas Sci. Eng. 2013, 15, 82–92.

- Zendehboudi, S.; Ahmadi, M.A.; Bahadori, A.; Shafiei, A.; Babadagli, T. A Developed Smart Technique to Predict Minimum Miscible Pressure–EOR Implications. Can. J. Chem. Eng. 2013, 91, 1325–1337.

- Chen, G.; Wang, X.; Liang, Z.; Gao, R.; Sema, T.; Luo, P.; Zeng, F.; Tontiwachwuthikul, P. Simulation of CO2-Oil Minimum Miscibility Pressure (MMP) for CO2 Enhanced Oil Recovery (EOR) Using Neural Networks. Energy Procedia 2013, 37, 6877–6884.

- Asoodeh, M.; Gholami, A.; Bagheripour, P. Oil-CO2 MMP Determination in Competition of Neural Network, Support Vector Regression, and Committee Machine. J. Dispers. Sci. Technol. 2014, 35, 564–571.

- Rezaei, M.; Eftekhari, M.; Schaffie, M.; Ranjbar, M. A CO2-Oil Minimum Miscibility Pressure Model Based on Multi-Gene Genetic Programming. Energy Explor. Exploit. 2013, 31, 607–622.

- Chen, G.; Fu, K.; Liang, Z.; Sema, T.; Li, C.; Tontiwachwuthikul, P.; Idem, R. The Genetic Algorithm Based Back Propagation Neural Network for MMP Prediction in CO2-EOR Process. Fuel 2014, 126, 202–212.

- Ahmadi, M.-A.; Ebadi, M. Fuzzy Modeling and Experimental Investigation of Minimum Miscible Pressure in Gas Injection Process. Fluid Phase Equilib. 2014, 378, 1–12.

- Sayyad, H.; Manshad, A.K.; Rostami, H. Application of Hybrid Neural Particle Swarm Optimization Algorithm for Prediction of MMP. Fuel 2014, 116, 625–633.

- Zargar, G.; Bagheripour, P.; Asoodeh, M.; Gholami, A. Oil-CO2 Minimum Miscible Pressure (MMP) Determination Using a Stimulated Smart Approach. Can. J. Chem. Eng. 2015, 93, 1730–1735.

- Bian, X.-Q.; Han, B.; Du, Z.-M.; Jaubert, J.-N.; Li, M.-J. Integrating Support Vector Regression with Genetic Algorithm for CO2-Oil Minimum Miscibility Pressure (MMP) in Pure and Impure CO2 Streams. Fuel 2016, 182, 550–557.

- Hemmati-Sarapardeh, A.; Ghazanfari, M.-H.; Ayatollahi, S.; Masihi, M. Accurate Determination of the CO2-Crude Oil Minimum Miscibility Pressure of Pure and Impure CO2 Streams: A Robust Modelling Approach. Can. J. Chem. Eng. 2016, 94, 253–261.

- Zhong, Z.; Carr, T.R. Application of Mixed Kernels Function (MKF) Based Support Vector Regression Model (SVR) for CO2–Reservoir Oil Minimum Miscibility Pressure Prediction. Fuel 2016, 184, 590–603.

- Fathinasab, M.; Ayatollahi, S. On the Determination of CO2–Crude Oil Minimum Miscibility Pressure Using Genetic Programming Combined with Constrained Multivariable Search Methods. Fuel 2016, 173, 180–188.

- Alomair, O.A.; Garrouch, A.A. A General Regression Neural Network Model Offers Reliable Prediction of CO2 Minimum Miscibility Pressure. J. Pet. Explor. Prod. Technol. 2016, 6, 351–365.

- Karkevandi-Talkhooncheh, A.; Hajirezaie, S.; Hemmati-Sarapardeh, A.; Husein, M.M.; Karan, K.; Sharifi, M. Application of Adaptive Neuro Fuzzy Interface System Optimized with Evolutionary Algorithms for Modeling CO2-Crude Oil Minimum Miscibility Pressure. Fuel 2017, 205, 34–45.

- Ahmadi, M.A.; Zendehboudi, S.; James, L.A. A Reliable Strategy to Calculate Minimum Miscibility Pressure of CO2-Oil System in Miscible Gas Flooding Processes. Fuel 2017, 208, 117–126.

- Karkevandi-Talkhooncheh, A.; Rostami, A.; Hemmati-Sarapardeh, A.; Ahmadi, M.; Husein, M.M.; Dabir, B. Modeling Minimum Miscibility Pressure during Pure and Impure CO2 Flooding Using Hybrid of Radial Basis Function Neural Network and Evolutionary Techniques. Fuel 2018, 220, 270–282.

- Tarybakhsh, M.R.; Assareh, M.; Sadeghi, M.T.; Ahmadi, A. Improved Minimum Miscibility Pressure Prediction for Gas Injection Process in Petroleum Reservoir. Nat. Resour. Res. 2018, 27, 517–529.

- Dong, P.; Liao, X.; Chen, Z.; Chu, H. An Improved Method for Predicting CO2 Minimum Miscibility Pressure Based on Artificial Neural Network. Adv. Geo-Energy Res. 2019, 3, 355–364.

- Hamdi, Z.; Chenxi, D. Accurate Prediction of CO2 Minimum Miscibility Pressure Using Adaptive Neuro-Fuzzy Inference Systems. In Proceedings of the SPE Gas & Oil Technology Showcase and Conference, Dubai, United Arab Emirates, 21–23 October 2019.

- Khan, M.R.; Kalam, S.; Khan, R.A.; Tariq, Z.; Abdulraheem, A. Comparative Analysis of Intelligent Algorithms to Predict the Minimum Miscibility Pressure for Hydrocarbon Gas Flooding. In Proceedings of the Abu Dhabi International Petroleum Exhibition & Conference, Abu Dhabi, United Arab Emirates, 11 November 2019; SPE: Abu Dhabi, United Arab Emirates, 2019.

- Choubineh, A.; Helalizadeh, A.; Wood, D.A. Estimation of Minimum Miscibility Pressure of Varied Gas Compositions and Reservoir Crude Oil over a Wide Range of Conditions Using an Artificial Neural Network Model. Adv. Geo-Energy Res. 2019, 3, 52–66. [Google Scholar] [CrossRef]

- Li, D.; Li, X.; Zhang, Y.; Sun, L.; Yuan, S. Four Methods to Estimate Minimum Miscibility Pressure of CO2-Oil Based on Machine Learning. Chin. J. Chem. 2019, 37, 1271–1278. [Google Scholar] [CrossRef]

- Hassan, A.; Elkatatny, S.; Abdulraheem, A. Intelligent Prediction of Minimum Miscibility Pressure (MMP) During CO2 Flooding Using Artificial Intelligence Techniques. Sustainability 2019, 11, 7020. [Google Scholar] [CrossRef]

- Sinha, U.; Dindoruk, B.; Soliman, M. Prediction of CO2 Minimum Miscibility Pressure MMP Using Machine Learning Techniques. In Proceedings of the SPE Improved Oil Recovery Conference, Tulsa, OK, USA, 31 August–4 September 2020; SPE: Tulsa, OK, USA, 2020. [Google Scholar]

- Dargahi-Zarandi, A.; Hemmati-Sarapardeh, A.; Shateri, M.; Menad, N.A.; Ahmadi, M. Modeling Minimum Miscibility Pressure of Pure/Impure CO2-Crude Oil Systems Using Adaptive Boosting Support Vector Regression: Application to Gas Injection Processes. J. Pet. Sci. Eng. 2020, 184, 106499. [Google Scholar] [CrossRef]

- Tian, Y.; Ju, B.; Yang, Y.; Wang, H.; Dong, Y.; Liu, N.; Ma, S.; Yu, J. Estimation of Minimum Miscibility Pressure during CO2 Flooding in Hydrocarbon Reservoirs Using an Optimized Neural Network. Energy Explor. Exploit. 2020, 38, 2485–2506. [Google Scholar] [CrossRef]

- Ekechukwu, G.K.; Falode, O.; Orodu, O.D. Improved Method for the Estimation of Minimum Miscibility Pressure for Pure and Impure CO2–Crude Oil Systems Using Gaussian Process Machine Learning Approach. J. Energy Resour. Technol. 2020, 142, 123003. [Google Scholar] [CrossRef]

- Saeedi Dehaghani, A.H.; Soleimani, R. Prediction of CO2-Oil Minimum Miscibility Pressure Using Soft Computing Methods. Chem. Eng. Technol. 2020, 43, 1361–1371. [Google Scholar] [CrossRef]

- Dong, P.; Liao, X.; Wu, J.; Zou, J.; Li, R.; Chu, H. A New Method for Predicting CO2 Minimum Miscibility Pressure MMP Based on Deep Learning. In Proceedings of the SPE/IATMI Asia Pacific Oil & Gas Conference and Exhibition, Bali, Indonesia, 25 October 2020; SPE: Bali, Indonesia, 2020. [Google Scholar]

- Chen, H.; Zhang, C.; Jia, N.; Duncan, I.; Yang, S.; Yang, Y. A Machine Learning Model for Predicting the Minimum Miscibility Pressure of CO2 and Crude Oil System Based on a Support Vector Machine Algorithm Approach. Fuel 2021, 290, 120048. [Google Scholar] [CrossRef]

- Ghiasi, M.M.; Mohammadi, A.H.; Zendehboudi, S. Use of Hybrid-ANFIS and Ensemble Methods to Calculate Minimum Miscibility Pressure of CO2—Reservoir Oil System in Miscible Flooding Process. J. Mol. Liq. 2021, 331, 115369.

- Chemmakh, A.; Merzoug, A.; Ouadi, H.; Ladmia, A.; Rasouli, V. Machine Learning Predictive Models to Estimate the Minimum Miscibility Pressure of CO2-Oil System. In Proceedings of the Abu Dhabi International Petroleum Exhibition & Conference, Abu Dhabi, United Arab Emirates, 15–18 December 2021; SPE: Abu Dhabi, United Arab Emirates, 2021.

- Pham, Q.C.; Trinh, T.Q.; James, L.A. Data Driven Prediction of the Minimum Miscibility Pressure (MMP) Between Mixtures of Oil and Gas Using Deep Learning. In Proceedings of the Volume 1: Offshore Technology; American Society of Mechanical Engineers: Cincinnati, OH, USA, 2021.

- Haider, G.; Khan, M.A.; Ali, F.; Nadeem, A.; Abbasi, F.A. An Intelligent Approach to Predict Minimum Miscibility Pressure of Injected CO2-Oil System in Miscible Gas Flooding. In Proceedings of the ADIPEC, Abu Dhabi, United Arab Emirates, 31 October–3 November 2022; SPE: Abu Dhabi, United Arab Emirates, 2022.

- Huang, C.; Tian, L.; Zhang, T.; Chen, J.; Wu, J.; Wang, H.; Wang, J.; Jiang, L.; Zhang, K. Globally Optimized Machine-Learning Framework for CO2-Hydrocarbon Minimum Miscibility Pressure Calculations. Fuel 2022, 329, 125312.

- He, Y.; Li, W.; Qian, S. Minimum Miscibility Pressure Prediction Method Based On PSO-GBDT Model. Improv. Oil Gas Recovery 2023, 6.

- Hou, Z.; Su, H.; Wang, G. Study on Minimum Miscibility Pressure of CO2–Oil System Based on Gaussian Process Regression and Particle Swarm Optimization Model. J. Energy Resour. Technol. 2022, 144, 103002.

- Rayhani, M.; Tatar, A.; Shokrollahi, A.; Zeinijahromi, A. Exploring the Power of Machine Learning in Analyzing the Gas Minimum Miscibility Pressure in Hydrocarbons. Geoenergy Sci. Eng. 2023, 226, 211778.

- Shakeel, M.; Khan, M.R.; Kalam, S.; Khan, R.A.; Patil, S.; Dar, U.A. Machine Learning for Prediction of CO2 Minimum Miscibility Pressure. In Proceedings of the SPE Middle East Oil and Gas Show and Conference, MEOS, Proceedings, Abu Dhabi, United Arab Emirates, 23–25 May 2023; Society of Petroleum Engineers (SPE): Abu Dhabi, United Arab Emirates, 2023.

- Shen, B.; Yang, S.; Gao, X.; Li, S.; Yang, K.; Hu, J.; Chen, H. Interpretable Knowledge-Guided Framework for Modeling Minimum Miscible Pressure of CO2-Oil System in CO2-EOR Projects. Eng. Appl. Artif. Intell. 2023, 118, 105687.

- Hamadi, M.; El Mehadji, T.; Laalam, A.; Zeraibi, N.; Tomomewo, O.S.; Ouadi, H.; Dehdouh, A. Prediction of Key Parameters in the Design of CO2 Miscible Injection via the Application of Machine Learning Algorithms. Eng 2023, 4, 1905–1932.

- Sinha, U.; Dindoruk, B.; Soliman, M. Physics Guided Data-Driven Model to Estimate Minimum Miscibility Pressure (MMP) for Hydrocarbon Gases. Geoenergy Sci. Eng. 2023, 224, 211389.

- Hosseinzadeh Helaleh, A.; Alizadeh, M. Performance Prediction Model of Miscible Surfactant-CO2 Displacement in Porous Media Using Support Vector Machine Regression with Parameters Selected by Ant Colony Optimization. J. Nat. Gas Sci. Eng. 2016, 30, 388–404.

- Nait Amar, M.; Zeraibi, N.; Redouane, K. Optimization of WAG Process Using Dynamic Proxy, Genetic Algorithm and Ant Colony Optimization. Arab. J. Sci. Eng. 2018, 43, 6399–6412.

- Amar, M.N.; Zeraibi, N.; Jahanbani Ghahfarokhi, A. Applying Hybrid Support Vector Regression and Genetic Algorithm to Water Alternating CO2 Gas EOR. Greenh. Gases Sci. Technol. 2020, 10, 613–630.

- Le Van, S.; Chon, B.H. Evaluating the Critical Performances of a CO2–Enhanced Oil Recovery Process Using Artificial Neural Network Models. J. Pet. Sci. Eng. 2017, 157, 207–222.

- Van, S.L.; Chon, B.H. Effective Prediction and Management of a CO2 Flooding Process for Enhancing Oil Recovery Using Artificial Neural Networks. J. Energy Resour. Technol. 2018, 140, 032906.

- Mohagheghian, E.; James, L.A.; Haynes, R.D. Optimization of Hydrocarbon Water Alternating Gas in the Norne Field: Application of Evolutionary Algorithms. Fuel 2018, 223, 86–98.

- Nwachukwu, A.; Jeong, H.; Sun, A.; Pyrcz, M.; Lake, L.W. Machine Learning-Based Optimization of Well Locations and WAG Parameters under Geologic Uncertainty. In Proceedings of the SPE Improved Oil Recovery Conference, Tulsa, OK, USA, 14–18 April 2018; SPE: Tulsa, OK, USA, 2018.

- Belazreg, L.; Mahmood, S.M.; Aulia, A. Novel Approach for Predicting Water Alternating Gas Injection Recovery Factor. J. Pet. Explor. Prod. Technol. 2019, 9, 2893–2910.

- Jaber, A.K.; Alhuraishawy, A.K.; AL-Bazzaz, W.H. A Data-Driven Model for Rapid Evaluation of Miscible CO2-WAG Flooding in Heterogeneous Clastic Reservoirs. In Proceedings of the SPE Kuwait Oil & Gas Show and Conference, Mishref, Kuwait, 13–16 October 2019; SPE: Mishref, Kuwait, 2019.

- Menad, N.A.; Noureddine, Z. An Efficient Methodology for Multi-Objective Optimization of Water Alternating CO2 EOR Process. J. Taiwan Inst. Chem. Eng. 2019, 99, 154–165.

- Yousef, A.M.; Kavousi, G.P.; Alnuaimi, M.; Alatrach, Y. Predictive Data Analytics Application for Enhanced Oil Recovery in a Mature Field in the Middle East. Pet. Explor. Dev. 2020, 47, 393–399.

- Belazreg, L.; Mahmood, S.M. Water Alternating Gas Incremental Recovery Factor Prediction and WAG Pilot Lessons Learned. J. Pet. Explor. Prod. Technol. 2020, 10, 249–269.

- You, J.; Ampomah, W.; Sun, Q. Development and Application of a Machine Learning Based Multi-Objective Optimization Workflow for CO2-EOR Projects. Fuel 2020, 264, 116758.

- You, J.; Ampomah, W.; Morgan, A.; Sun, Q.; Huang, X. A Comprehensive Techno-Eco-Assessment of CO2 Enhanced Oil Recovery Projects Using a Machine-Learning Assisted Workflow. Int. J. Greenh. Gas Control. 2021, 111, 103480.

- Enab, K.; Ertekin, T. Screening and Optimization of CO2-WAG Injection and Fish-Bone Well Structures in Low Permeability Reservoirs Using Artificial Neural Network. J. Pet. Sci. Eng. 2021, 200, 108268.

- Afzali, S.; Mohamadi-Baghmolaei, M.; Zendehboudi, S. Application of Gene Expression Programming (GEP) in Modeling Hydrocarbon Recovery in WAG Injection Process. Energies 2021, 14, 7131.

- Lv, W.; Tian, W.; Yang, Y.; Yang, J.; Dong, Z.; Zhou, Y.; Li, W. Method for Potential Evaluation and Parameter Optimization for CO2-WAG in Low Permeability Reservoirs Based on Machine Learning. IOP Conf. Ser. Earth Environ. Sci. 2021, 651, 032038.

- Nait Amar, M.; Jahanbani Ghahfarokhi, A.; Ng, C.S.W.; Zeraibi, N. Optimization of WAG in Real Geological Field Using Rigorous Soft Computing Techniques and Nature-Inspired Algorithms. J. Pet. Sci. Eng. 2021, 206, 109038.

- You, J.; Ampomah, W.; Sun, Q. Optimization of Water-Alternating-CO2 Injection Field Operations Using a Machine-Learning-Assisted Workflow. In Proceedings of the SPE Reservoir Simulation Conference, Online, 26 October–1 November 2021; SPE: Abu Dhabi, United Arab Emirates, 2021.

- Sun, Q.; Ampomah, W.; You, J.; Cather, M.; Balch, R. Practical CO2—WAG Field Operational Designs Using Hybrid Numerical-Machine-Learning Approaches. Energies 2021, 14, 1055.

- Huang, R.; Wei, C.; Li, B.; Yang, J.; Wu, S.; Xu, X.; Ou, Y.; Xiong, L.; Lou, Y.; Li, Z.; et al. Prediction and Optimization of WAG Flooding by Using LSTM Neural Network Model in Middle East Carbonate Reservoir. In Proceedings of the Abu Dhabi International Petroleum Exhibition & Conference, Abu Dhabi, United Arab Emirates, 15–18 November 2021; SPE: Abu Dhabi, United Arab Emirates, 2021.

- Li, H.; Gong, C.; Liu, S.; Xu, J.; Imani, G. Machine Learning-Assisted Prediction of Oil Production and CO2 Storage Effect in CO2-Water-Alternating-Gas Injection (CO2-WAG). Appl. Sci. 2022, 12, 10958.

- Andersen, P.Ø.; Nygård, J.I.; Kengessova, A. Prediction of Oil Recovery Factor in Stratified Reservoirs after Immiscible Water-Alternating Gas Injection Based on PSO-, GSA-, GWO-, and GA-LSSVM. Energies 2022, 15, 656.

- Singh, G.; Davudov, D.; Al-Shalabi, E.W.; Malkov, A.; Venkatraman, A.; Mansour, A.; Abdul-Rahman, R.; Das, B. A Hybrid Neural Workflow for Optimal Water-Alternating-Gas Flooding. In Proceedings of the SPE Reservoir Characterization and Simulation Conference and Exhibition, Abu Dhabi, United Arab Emirates, 24–26 January 2023; SPE: Abu Dhabi, United Arab Emirates, 2023.

- Asante, J.; Ampomah, W.; Carther, M. Forecasting Oil Recovery Using Long Short Term Memory Neural Machine Learning Technique. In Proceedings of the SPE Western Regional Meeting, Anchorage, AK, USA, 22–25 May 2023; SPE: Anchorage, AK, USA, 2023.

- Matthew, D.A.M.; Jahanbani Ghahfarokhi, A.; Ng, C.S.W.; Nait Amar, M. Proxy Model Development for the Optimization of Water Alternating CO2 Gas for Enhanced Oil Recovery. Energies 2023, 16, 3337.

- Xiong, X.; Lee, K.J. Data-Driven Modeling to Optimize the Injection Well Placement for Waterflooding in Heterogeneous Reservoirs Applying Artificial Neural Networks and Reducing Observation Cost. Energy Explor. Exploit. 2020, 38, 2413–2435.

- Nwachukwu, A.; Jeong, H.; Pyrcz, M.; Lake, L.W. Fast Evaluation of Well Placements in Heterogeneous Reservoir Models Using Machine Learning. J. Pet. Sci. Eng. 2018, 163, 463–475.

- Selveindran, A.; Zargar, Z.; Razavi, S.M.; Thakur, G. Fast Optimization of Injector Selection for Waterflood, CO2-EOR and Storage Using an Innovative Machine Learning Framework. Energies 2021, 14, 7628.

- Ding, M.; Yuan, F.; Wang, Y.; Xia, X.; Chen, W.; Liu, D. Oil Recovery from a CO2 Injection in Heterogeneous Reservoirs: The Influence of Permeability Heterogeneity, CO2-Oil Miscibility and Injection Pattern. J. Nat. Gas Sci. Eng. 2017, 44, 140–149.

- Ahmadi, M.A.; Zendehboudi, S.; James, L.A. Developing a Robust Proxy Model of CO2 Injection: Coupling Box–Behnken Design and a Connectionist Method. Fuel 2018, 215, 904–914.

- Karacan, C.Ö. A Fuzzy Logic Approach for Estimating Recovery Factors of Miscible CO2-EOR Projects in the United States. J. Pet. Sci. Eng. 2020, 184, 106533.

- Chen, B.; Pawar, R.J. Characterization of CO2 Storage and Enhanced Oil Recovery in Residual Oil Zones. Energy 2019, 183, 291–304.

- Iskandar, U.P.; Kurihara, M. Time-Series Forecasting of a CO2-EOR and CO2 Storage Project Using a Data-Driven Approach. Energies 2022, 15, 4768.

- You, J.; Ampomah, W.; Sun, Q.; Kutsienyo, E.J.; Balch, R.S.; Dai, Z.; Cather, M.; Zhang, X. Machine Learning Based Co-Optimization of Carbon Dioxide Sequestration and Oil Recovery in CO2-EOR Project. J. Clean. Prod. 2020, 260, 120866.

- Ampomah, W.; Balch, R.S.; Grigg, R.B.; McPherson, B.; Will, R.A.; Lee, S.; Dai, Z.; Pan, F. Co-optimization of CO2-EOR and Storage Processes in Mature Oil Reservoirs. Greenh. Gases Sci. Technol. 2017, 7, 128–142.

- You, J.; Ampomah, W.; Sun, Q.; Kutsienyo, E.J.; Balch, R.S.; Cather, M. Multi-Objective Optimization of CO2 Enhanced Oil Recovery Projects Using a Hybrid Artificial Intelligence Approach. In Proceedings of the SPE Annual Technical Conference and Exhibition, Calgary, AB, Canada, 30 September–2 October 2019; SPE: Calgary, AB, Canada, 2019.

- You, J.; Ampomah, W.; Kutsienyo, E.J.; Sun, Q.; Balch, R.S.; Aggrey, W.N.; Cather, M. Assessment of Enhanced Oil Recovery and CO2 Storage Capacity Using Machine Learning and Optimization Framework. In Proceedings of the SPE Europec featured at 81st EAGE Conference and Exhibition, London, UK, 3–6 June 2019; SPE: London, UK, 2019.

- Vo Thanh, H.; Sugai, Y.; Sasaki, K. Application of Artificial Neural Network for Predicting the Performance of CO2 Enhanced Oil Recovery and Storage in Residual Oil Zones. Sci. Rep. 2020, 10, 18204.

- Emera, M.K.; Sarma, H.K. A Genetic Algorithm-Based Model to Predict CO2-Oil Physical Properties for Dead and Live Oil. J. Can. Pet. Technol. 2008, 47, 52–61.

- Rostami, A.; Arabloo, M.; Kamari, A.; Mohammadi, A.H. Modeling of CO2 Solubility in Crude Oil during Carbon Dioxide Enhanced Oil Recovery Using Gene Expression Programming. Fuel 2017, 210, 768–782.

- Rostami, A.; Arabloo, M.; Lee, M.; Bahadori, A. Applying SVM Framework for Modeling of CO2 Solubility in Oil during CO2 Flooding. Fuel 2018, 214, 73–87.

- Mahdaviara, M.; Nait Amar, M.; Hemmati-Sarapardeh, A.; Dai, Z.; Zhang, C.; Xiao, T.; Zhang, X. Toward Smart Schemes for Modeling CO2 Solubility in Crude Oil: Application to Carbon Dioxide Enhanced Oil Recovery. Fuel 2021, 285, 119147.

- Iskandarov, J.; Fanourgakis, G.S.; Ahmed, S.; Alameri, W.; Froudakis, G.E.; Karanikolos, G.N. Data-Driven Prediction of in Situ CO2 Foam Strength for Enhanced Oil Recovery and Carbon Sequestration. RSC Adv. 2022, 12, 35703–35711.

- Moosavi, S.R.; Wood, D.A.; Ahmadi, M.A.; Choubineh, A. ANN-Based Prediction of Laboratory-Scale Performance of CO2-Foam Flooding for Improving Oil Recovery. Nat. Resour. Res. 2019, 28, 1619–1637.

- Raha Moosavi, S.; Wood, D.A.; Samadani, A. Modeling Performance of Foam-CO2 Reservoir Flooding with Hybrid Machine-Learning Models Combining a Radial Basis Function and Evolutionary Algorithms. Comput. Res. Prog. Appl. Sci. Eng. CRPASE 2020, 6, 1–8.

- Khan, M.R.; Kalam, S.; Abu-khamsin, S.A.; Asad, A. Machine Learning for Prediction of CO2 Foam Flooding Performance. In Proceedings of the ADIPEC, Abu Dhabi, United Arab Emirates, 31 October–3 November 2022; SPE: Abu Dhabi, United Arab Emirates, 2022.

- Vo Thanh, H.; Sheini Dashtgoli, D.; Zhang, H.; Min, B. Machine-Learning-Based Prediction of Oil Recovery Factor for Experimental CO2-Foam Chemical EOR: Implications for Carbon Utilization Projects. Energy 2023, 278, 127860.

| Authors | Methods | Dataset | Splitting | Objectives | Inputs | Results | Evaluations | Limitations | Rating * |

|---|---|---|---|---|---|---|---|---|---|

| Hosseinzadeh Helaleh and Alizadeh [76] | SVM (ACO, GA, PSO) | 200 | 80% train + 20% test | Fractional oil recovery | RLC, RLD, NgAO, NgGO, MSWAG, NC, SGR, NPe, NSCon, NB, Nα, Nσ, λ*Dx, Nn, He | ACO achieves high accuracy and low computational time compared with ANN, GA, and PSO. | Evaluated with both experiments and simulations. Limited to similar geological models. | Only the SVM model is considered. | 8 |

| Le Van and Chon [79] | ANN | 223 (simulation) | 45% train + 20% test + 35% validation | Oil recovery factor, oil rate, GOR, cumulative CO2 production, net CO2 storage | Swi, kv/kh, WAG ratio, duration of each cycle | ANN models can support numerical simulation of CO2-EOR projects. A WAG ratio below 1.5 is best. | Evaluated multiple objectives but limited to ANN. | Trained on simulation results only. | 8 |

| Van and Chon [80] | ANN | 263 (simulation) | 50% train + 20% test + 30% validation | Oil recovery + net CO2 storage + cumulative gaseous CO2 production | kv/kh, WAG ratio, Sw, spacing between injector wells, T | ANN can help estimate oil recovery and CO2 storage. Injection cycle 25 is best. | Evaluated different WAG ratios but limited to ANN models. | Trained on simulation results only. | 7 |

| Mohagheghian et al. [81] | GA, PSO | 2000 (simulation) | NA | NPV + incremental recovery factor | Water and gas injection rates, BHP of producers, cycle ratio, cycle time, injected gas composition, total WAG period | PSO is capable of optimizing WAG variables and projects at field scale. | First applied GA to WAG optimization at field scale. Evaluated with three case studies. Limited to specific geological models. | Only GA and PSO are evaluated. Specific to the E-segment of the Norne field. | 9 |

| Nwachukwu, Jeong, Sun et al. [82] | XGBoost, MADS | 1000 (simulation) | 50% train + 50% test | Oil/water/gas production rates, well locations, NPV | Well x-coordinates, well y-coordinates, water/gas injection rates, well block ϕ/k, well block Swi | The new model combining XGBoost and MADS provided high accuracy. | Demonstrated with a case study in which the underlying geology is uncertain. Limited to one model. | Only XGBoost is employed. | 8 |

| Nait Amar et al. [77] | ANN/GA, ACO | 85 | 88% train + 12% test | Field oil production total | Gas/water injection rates, gas/water injection half-cycle, WAG ratio, and slug size | Both GA and ACO are highly effective in optimizing the WAG process. | Demonstrated a time-dependent proxy model for the WAG process, but without further case-study application. | Restricted to specific geological models. Limited simulation runs. | 8 |

| Belazreg et al. [83] | Regression, GMDH | 4290 | 70% train + 30% test | Incremental recovery factor | kh, kv, API, gas gravity, water viscosity, solution GOR, WAG ratio, WAG cycle, Land coefficient, reservoir pressure, PV of injected water, PV of injected gas | GMDH performed better in selecting effective input parameters and optimizing the model structure. | Novel approach, but not validated against real field WAG pilot data. | Limited to two ML methods. | 8 |

| Jaber et al. [84] | CCD | 81 | NA | Oil recovery | k, ϕ, kv/kh, cycle length, BHP, WAG ratio, CO2 slug size | The new proxy model can predict oil recovery. The optimum WAG ratio is 1.5. | Developed a new proxy model based on CCD, but limited to one model. | Limited data points, all from simulation runs. | 7 |

| Menad and Noureddine [85] | MLP (LMA, BR, SCG) + NSGA-II | From 2010 to 2018 | NA | FOPR, FWPR | Time, FWIR, FGIR, the value of the needed parameter at the previous time step | MLP-LMA has the highest accuracy and lowest computation time. | Developed a dynamic proxy model for multiple objectives, but limited to one geological model. | The database was generated from multiple simulation runs. | 8 |

| Nait Amar and Zeraibi [9] | SVR, GA | 75 | NA | Field oil production total | Injection rates of water and gas, half-cycle injection time, WAG ratio, slug size, initialization time of the process | SVR-GA provides high accuracy and reasonable CPU time. | Established a dynamic proxy model based on SVR-GA, but no comparison with other algorithms. | Limited data points and only one model evaluated. | 7 |

| Yousef et al. [86] | ANN | 8 years × 37 wells | 85% train + 15% test | Oil/gas/water production rate, GOR, infill well location | Well trajectory data, well logs, seismic data, production and injection history, reservoir pressure, choke opening, and WHP history | Implementing ANN for top-down modeling can predict reservoir performance under WAG. | Can predict reservoir performance 3 months ahead, but simplifies the data gathering, modeling, and validation process. | Specific input data are not described. No comparison with other models or field case studies. | 6 |

| Belazreg and Mahmood [87] | GMDH | 177 | 70% train + 30% test | Incremental oil recovery factor | Rock type, WAG process type, reservoir horizontal permeability, API, oil viscosity, reservoir pressure and temperature, and hydrocarbon pore volume of injected gas | GMDH models can predict three WAG incremental recovery factors: sandstone immiscible gas injection, sandstone miscible gas injection, and carbonate miscible gas injection. | Showed that GMDH can model the WAG process and has good potential. More data and validation are needed to improve model robustness and applicability. | Limited published WAG pilot data. | 8 |

| You et al. [88] | ANN | 820 | 80% train + 10% test + 10% validation | Oil recovery, CO2 storage, and project NPV | Water injection time, CO2 injection time, producer BHP, water injection rate | The ANN proxy model can help improve prediction performance. | Handles two or three objectives well when the number of control parameters is limited. | Only suitable for a limited number of input parameters. | 8 |

| You et al. [89] | Gaussian SVR-PSO | 217 | NA | Hydrocarbon recovery + CO2 sequestration volume + NPV | FOPR × 2, gas cycle × 5, water cycle × 5 | The proposed method can optimize the WAG process with high accuracy. | Nice sensitivity studies of CO2 price and oil price on NPV. Limited comparison with other ML models. | Restricted to specific geological models. | 8 |

| Enab and Ertekin [90] | ANN | 2000 | 80% train + 10% test + 10% validation | Production prediction, production schemes design, history matching | 25 inputs including reservoir rock characteristics, initial conditions, oil composition, well design parameters, and injection strategy parameters | ANN provides faster prediction for fish-bone well structures in low-permeability reservoirs. | Nice project design and economic analysis, but limited to the ANN model. | Limitations were imposed by defining the range of each variable. | |

| Afzali et al. [91] | GEP | 96 | 67% train + 33% test | Recovery factor | Oil viscosity, gas/water injection rates, k, PVI, number of cycles | The developed model is successful when compared with experimental results. | Novel use of GEP. The dataset is derived from a mathematical correlation. | | 8 |

| Lv et al. [92] | ANN-PSO | 2100 | 70% train + 15% test + 15% validation | Oil production | So, Pi, k, ϕ, h, Pwf, water injection rate, water cut before gas flooding, gas injection rate, water injection volume, cycle time, water injection time, production rate, grid size | ANN-PSO provides a good model for parameter optimization of CO2 WAG-EOR. | Routine procedure; little novelty in applying ANN-PSO. | Limited and less supportive dataset. | 8 |

| Nait Amar et al. [93] | MLP-LM, RBFNN-ACO/GWO | 82 | 88% train + 12% test | Field oil production total | Water/gas injection rates, injection half-cycle, downtime, WAG ratio, gas slug size | MLP-LMA is best. The proxy model can significantly reduce simulation time while preserving high accuracy. | The application of GWO is novel. Limited runs and possible overfitting. | No comparison with other ML models. | 7 |

| You et al. [94] | Gaussian-SVR | 1400 | NA | Cumulative oil production and cumulative CO2 storage. | Water/gas cycle, producer BHP, water injection rate, etc. (91 variables in total) | Gaussian-SVR performs best. | Showed the possibility of designing a CO2-WAG project using as many inputs as possible. | Water cut is limited to 50%. Reservoir pressure must be higher than MMP. | 8 |

| Sun et al. [95] | SVR, MLNN, RSM | 600 | 83% train + 17% test | Oil production, CO2 storage, NPV. | Duration of CO2 and water injection cycles, water injection rate, production well specifications, oil price, CO2 price, etc. (62 parameters) | The MLNN model can handle problems with large input and output dimensions. | Compared three different methods, but only suitable for specific geological models. | Given the large number of input parameters, the dataset may not be large enough. | 7 |

| Huang et al. [96] | LSTM | 5404 | 90% train + 10% test | Oil production, GOR, water cut | Daily liquid rate, daily oil/gas/water rate, GIR, WIR, reservoir pressure, WHFP, choke size of producers | The calculation time of LSTM is 864% less than the simulation, while the prediction error of the LSTM method is 261% less than the simulation. | The model is based on 15 years of real reservoir data, but limited to one ML model. | The average reservoir pressure must be between 3700 and 5400 psi. | 8 |

| Li et al. [97] | RF | 216 | 70% train + 30% test | Cumulative oil production, CO2 storage amount, CO2 storage efficiency | CO2-WAG period, CO2 injection rate, water-gas ratio, reservoir properties, oil properties, depth, layer thickness, Soi, well operation | CO2-WAG cycle time has a slight influence on oil production. Random forest can predict oil production and CO2 storage. | Showed that RF has high computational efficiency and accuracy in CO2-WAG projects, but did not compare different ML models. | Only one ML model is considered. No comparison with other models. | 7 |

| Andersen et al. [98] | LSSVM-PSO/GA/GWO/GSA | 2500 | 70% train + 15% test + 15% validation | Oil recovery factor | Water-oil and gas-oil mobility ratios, water-oil and gas-oil gravity numbers, reservoir heterogeneity factor, two hysteresis parameters, and water fraction | LSSVM with GWO or PSO performed better than with GA or GSA. | Very detailed and thorough study. The dataset is relatively large. Some limitations on input parameters. | Small dataset and only one ML model is studied. | 7 |

| Singh et al. [99] | DNN-GA | 2200 | 70/80% train + 30/20% test | Maximize oil recovery | Water injection rates, gas-to-water ratio, slug size | The DNN-GA workflow can identify improved WAG parameters over the baseline recovery, with incremental increases of 0.5–2%. | Presents a novel workflow for WAG optimization using ML. Requires a large number of simulation runs (2200 here) to train the DNN initially. | Several important parameters were not varied much. | 9 |

| Asante et al. [100] | LSTM | 2345 × 3 | 80% train + 20% test | Oil production rate, oil recovery factor | Bottom-hole pressure at injector and producer, water and gas injection volumes, WAG cycle | LSTM can model complex time-series data without a geological model. | Shows the ability of LSTM to perform time-series analysis, but the input parameters are restricted. | Limited to optimizing WAG parameters. | 7 |

| Matthew et al. [101] | ANN-NSGA-II | 68 + 97 | NA | Maximize oil produced and CO2 storage | Water and gas injection rate, half-cycle length, time step | The developed proxy model can predict both simple and complex models. | Developed a dynamic proxy model for multiple objectives, but the dataset size is limited. | Requires large amounts of quality field data. | 7 |
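Several rows above (for example Nait Amar et al. [77], Lv et al. [92], and Singh et al. [99]) pair a trained proxy with an evolutionary optimizer to search WAG design variables. The Python sketch below shows only the GA half of that loop, under stated assumptions: `proxy_recovery` is a hypothetical analytic function standing in for a trained ANN or SVR, and its coefficients, the variable bounds, and the GA settings (tournament selection, blend crossover, Gaussian mutation, two elites) are illustrative choices, not the configuration of any cited study.

```python
import random

# HYPOTHETICAL stand-in for a trained proxy model (e.g., an ANN or SVR fitted
# to simulation runs). It is NOT taken from any cited study; it simply peaks
# at WAG ratio = 1.5 and slug size = 0.3 PV so the search has a known optimum.
def proxy_recovery(wag_ratio, slug_size):
    return 0.6 - 0.05 * (wag_ratio - 1.5) ** 2 - 0.5 * (slug_size - 0.3) ** 2

# Assumed search bounds: (WAG ratio), (CO2 slug size in PV).
BOUNDS = [(0.5, 3.0), (0.1, 1.0)]


def fitness(ind):
    return proxy_recovery(ind[0], ind[1])


def tournament(pop, rng, k=3):
    # Return the fittest of k randomly drawn individuals.
    return max(rng.sample(pop, k), key=fitness)


def crossover(a, b, rng):
    # Arithmetic (blend) crossover: the child lies between its two parents,
    # so it always stays inside the bounds.
    w = rng.random()
    return [w * x + (1.0 - w) * y for x, y in zip(a, b)]


def mutate(ind, rng, rate=0.2, scale=0.1):
    # Gaussian mutation scaled to each variable's range, clipped to bounds.
    out = []
    for value, (low, high) in zip(ind, BOUNDS):
        if rng.random() < rate:
            value += rng.gauss(0.0, scale * (high - low))
        out.append(min(high, max(low, value)))
    return out


def genetic_algorithm(pop_size=30, generations=60, elite=2, seed=0):
    rng = random.Random(seed)
    pop = [[rng.uniform(low, high) for low, high in BOUNDS] for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        nxt = [list(ind) for ind in pop[:elite]]  # elitism: keep the best as-is
        while len(nxt) < pop_size:
            child = crossover(tournament(pop, rng), tournament(pop, rng), rng)
            nxt.append(mutate(child, rng))
        pop = nxt
    best = max(pop, key=fitness)
    return best, fitness(best)


best, score = genetic_algorithm()
print(f"WAG ratio = {best[0]:.2f}, slug size = {best[1]:.2f} PV, proxy RF = {score:.3f}")
```

Because each proxy call is cheap compared with a full reservoir simulation, thousands of fitness evaluations are affordable; swapping the GA loop for PSO or ACO would change only the search step, not the proxy.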

| Authors | Methods | Dataset | Splitting | Objectives | Inputs | Results | Evaluations | Limitations | Rating * |
|---|---|---|---|---|---|---|---|---|---|
| Nwachukwu et al. [103] | XGBoost | 200, 500, 1000 | NA | Total profit, cumulative oil/gas produced, net CO2 stored | Well-to-well pairwise connectivity, injector block k and ϕ, initial injector block saturations | Quick evaluation of well placement using well-to-well connectivity was successful with 1000 simulation runs and R2 = 0.92. | The ML model is used only as a proxy; oil recovery and CO2 storage are not co-optimized. | The dataset comes from simulation runs, and the model is suitable for only one geological model. | 8 |
| Selveindran et al. [104] | AdaBoost, RF, ANN | 3000, 2000, 1000 | 70% train + 30% test | Incremental oil production | K, ϕ, PV, initial fluid saturation, pressure, time of flight, well-to-well distances, distance to the injector, injection rate, and injection depth | A stacked learner outperforms the individual learners. ML helps rapidly identify the areas that are optimal for injection. | Detailed and comprehensive analysis, including posterior sampling. | Relies heavily on the geological model. | 8 |
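Accuracy in these entries is usually reported as the coefficient of determination, written R2 (e.g., R2 = 0.92 for Nwachukwu et al. [103]), often alongside the mean squared error (MSE). The sketch below computes both from their textbook definitions, with R2 = 1 - SS_res/SS_tot; the recovery-factor values are hypothetical and only illustrate the arithmetic.

```python
from typing import Sequence


def _check(y_true: Sequence[float], y_pred: Sequence[float]) -> None:
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean squared error between observed and predicted values."""
    _check(y_true, y_pred)
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r2_score(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    _check(y_true, y_pred)
    mean_obs = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # residual sum of squares
    ss_tot = sum((t - mean_obs) ** 2 for t in y_true)            # total sum of squares
    if ss_tot == 0.0:
        raise ValueError("R2 is undefined when all observed values are equal")
    return 1.0 - ss_res / ss_tot


# Hypothetical simulated vs. proxy-predicted recovery factors
observed = [0.30, 0.35, 0.40, 0.45]
predicted = [0.31, 0.34, 0.41, 0.44]
print(f"MSE = {mse(observed, predicted):.6f}")       # MSE = 0.000100
print(f"R2  = {r2_score(observed, predicted):.3f}")  # R2  = 0.968
```

Because R2 is normalized by the spread of the observed data, the same absolute errors give a lower R2 on a narrow-range dataset, so R2 values from different studies are not directly comparable.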

| Authors | Methods | Dataset | Splitting | Objectives | Inputs | Results | Evaluations | Limitations | Rating * |
|---|---|---|---|---|---|---|---|---|---|
| Ahmadi et al. [106] | LSSVM | 46 | 80% train + 20% test | Oil recovery factor | BHP of injection well, CO2 injection rate, CO2 injection concentration, BHP of production well, oil production rate | The hybrid of LSSVM and BBD is statistically valid for predicting the recovery factor. | Demonstrated the feasibility of using ML and compared it with commercial software, but the dataset is limited. | Small dataset; only suitable for similar oil reservoirs and valid only within the same input parameter ranges. | 7 |
| Chen and Pawar [108] | MARS, SVR, RF | 500, 250, 100 | NA | Recovery factor | Thickness, depth, k, Sor, CO2 injection rate, BHP of production well | MARS has the best performance. | Applied to five fields in the Permian Basin with good matches. Relies heavily on a base model, which may not fully represent diverse ROZs. | Significant assumptions are made regarding uncertain parameters such as residual oil saturation. | 8 |
| Karacan [107] | FL | 24 | 83% train + 17% test | Recovery factor | Lithology, API, ϕ, k, HCPV, depth, net pay, Pi, well spacing, Sorw | FL provided reasonably accurate predictions. | Despite the small dataset, it demonstrates the feasibility of using ML for recovery factor prediction. | It is difficult to draw statistical conclusions from such a small dataset. | 7 |
| Iskandar and Kurihara [109] | AR, MLP, LSTM | 3653 × 8 wells | 40% train + 20% test + 40% validation | Oil, gas, and water production | ϕ, k, formation thickness, BHP, flow capacity, storage capacity | The AR model performs best, with long and consistent forecast horizons across wells. LSTM performs well but has shorter forecast horizons. MLP has high variability and short forecast horizons. | First time-series forecasting study; no model updating or retraining over time. Overall, a solid study. | Limited hyperparameter tuning was performed, and only three models were tested. | 9 |
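Iskandar and Kurihara [109] found that an autoregressive (AR) model gave the longest and most consistent forecast horizons. An AR model predicts the next value of a series from its own past values; the sketch below is the simplest first-order case, AR(1), fitted by ordinary least squares and forecast recursively. The model order, the univariate setup and the sample rates are assumptions for illustration; the study's actual configuration may differ.

```python
from typing import List, Sequence, Tuple


def fit_ar1(series: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y[t] = c + phi * y[t-1]; returns (c, phi)."""
    values = list(series)
    lagged, target = values[:-1], values[1:]
    n = len(lagged)
    if n < 2:
        raise ValueError("need at least three observations")
    mean_x = sum(lagged) / n
    mean_y = sum(target) / n
    sxx = sum((x - mean_x) ** 2 for x in lagged)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(lagged, target))
    if sxx == 0.0:
        raise ValueError("series is constant; phi is not identifiable")
    phi = sxy / sxx
    c = mean_y - phi * mean_x
    return c, phi


def forecast_ar1(last_value: float, c: float, phi: float, horizon: int) -> List[float]:
    """Recursive multi-step forecast: each prediction is fed back as the next input."""
    forecasts = []
    value = last_value
    for _ in range(horizon):
        value = c + phi * value
        forecasts.append(value)
    return forecasts


# Hypothetical monthly oil rates (arbitrary units) that follow y[t] = 2 + 0.5 * y[t-1]
rates = [10.0, 7.0, 5.5, 4.75, 4.375, 4.1875]
c, phi = fit_ar1(rates)
print(c, phi, forecast_ar1(rates[-1], c, phi, horizon=2))
```

On this noise-free series the fit recovers c ≈ 2 and phi ≈ 0.5. Because each step reuses the previous forecast, errors compound with horizon length, which is why the length of a reliable forecast horizon is a useful comparison criterion.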

| Authors | Methods | Dataset | Splitting | Objectives | Inputs | Results | Evaluations | Limitations | Rating * |
|---|---|---|---|---|---|---|---|---|---|
| Ampomah et al. [111] | GA | NA | NA | Oil recovery + CO2 storage | NA | Proxy models were used to determine the optimal operational parameters, including injection/production rates, pressure, and WAG cycles. | First used proxy models and GA to optimize oil recovery and CO2 storage simultaneously, but relies heavily on having an accurate reservoir model. | Optimal parameters are specific to this reservoir and not necessarily generalizable. | 7 |
| You et al. [112] | RBFNN | 160 | N/A | Cumulative oil production + CO2 storage + NPV | Water cycle, gas cycle, BHP of producer, water injection rate | A proxy model based on RBFNN is built for optimization. | The overall prediction is acceptable, but the predicted CO2 storage is much higher. | The optimized CO2 storage is 18% higher than the baseline. | 7 |
| You et al. [113] | ANN-PSO | 820 (numerical model) | 80% train + 10% test + 10% validation | Cumulative oil production + CO2 storage + NPV | Water cycle, gas cycle, BHP of producer, water injection rate | The optimization study showed promising results for multiple objectives. | Developed a novel hybrid optimization workflow for multiple objective functions, but it was validated only with a field case. | Only four input parameters are considered. | 7 |
| Vo Thanh et al. [114] | ANN-PSO | 351 (numerical model) | 80% train + 10% test + 10% validation | Cumulative oil production + cumulative CO2 storage + cumulative CO2 retained | ϕ, k, Sorg, Sorw, BHP of producer, CO2 injection rate | ANN can forecast the performance of CO2-EOR and storage in a residual oil zone. | The ANN achieves an R2 of 0.99 and an MSE of less than 2%, but its applicability to other reservoir types is questionable. | Case-specific. | 7 |
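The ANN-PSO entries ([113], [114]) pair a neural-network proxy with particle swarm optimization (PSO). A minimal global-best PSO sketch, assuming the trained proxy can be called as an ordinary Python function; `proxy_objective` is a hypothetical quadratic stand-in rather than a real reservoir proxy, and the coefficients (w = 0.7298, c1 = c2 = 1.49618) are common literature defaults, not values taken from the cited studies.

```python
import random
from typing import Callable, List, Sequence, Tuple


def pso_minimize(
    objective: Callable[[Sequence[float]], float],
    bounds: Sequence[Tuple[float, float]],
    n_particles: int = 30,
    n_iters: int = 200,
    w: float = 0.7298,     # inertia weight
    c1: float = 1.49618,   # cognitive coefficient: pull toward each particle's own best
    c2: float = 1.49618,   # social coefficient: pull toward the swarm's best
    seed: int = 0,
) -> Tuple[List[float], float]:
    """Global-best particle swarm optimization; returns (best position, best value)."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]

    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (
                    w * vel[i][d]
                    + c1 * r1 * (pbest[i][d] - pos[i][d])
                    + c2 * r2 * (gbest[d] - pos[i][d])
                )
                lo, hi = bounds[d]
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)  # keep inside bounds
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:  # swarm best is updated as soon as it improves
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


def proxy_objective(x: Sequence[float]) -> float:
    """Hypothetical stand-in for a trained ANN proxy, negated so minimizing maximizes it.

    x[0], x[1]: normalized decision variables, e.g. water injection rate and producer BHP.
    """
    return -(1.0 - (x[0] - 0.6) ** 2 - (x[1] - 0.3) ** 2)


best_x, best_val = pso_minimize(proxy_objective, bounds=[(0.0, 1.0), (0.0, 1.0)])
print(best_x, -best_val)
```

Objectives such as cumulative oil, CO2 storage and NPV could be folded into one weighted objective for a single-objective optimizer like this one, or handled with a Pareto method such as NSGA-II.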

| Authors | Methods | Dataset | Splitting | Objectives | Inputs | Results | Evaluations | Limitations | Rating * |
|---|---|---|---|---|---|---|---|---|---|
| Emera and Sarma [115] | GA | 106 (dead oil), 74 (live oil) | NA | CO2 solubility, oil swelling factor, CO2-oil density, and viscosity | API, Ps, T, MW | The GA-based correlations provided the highest accuracy. | First applied GA to CO2-oil property prediction. It would be more useful if the full dataset were provided. | Validated only over a specific data range; may be unreliable outside that range. | 8 |
| Rostami et al. [116] | ANN, GEP | 106 (dead oil), 74 (live oil) | 80% train + 20% test | CO2 solubility | Ps, T, MW, γ, Pb | GEP is more accurate than ANN for dead oil. | Compared with several empirical methods. More comparisons between ML models would be more persuasive. | Limited dataset on live oil. | 8 |
| Rostami et al. [117] | LSSVM | 106 (dead oil), 74 (live oil) | 70% train + 15% test + 15% validation | CO2 solubility | Ps, T, MW, γ | LSSVM showed higher accuracy than previous empirical correlations. | More rigorous validation against experimental data and equation-of-state models would be useful. | Only a few literature models were compared. | 7 |
| Mahdaviara et al. [118] | MLP, RBF (GA, DE, FA), GMDH | NA | NA | CO2 solubility | Ps, T, MW, γ, Pb | MLP-LM and MLP-SCG are better at predicting solubility. GMDH is better than LSSVM. | Compared various models and optimization methods, but the dataset is not described. | The dataset is not reported. | 8 |
| Hamadi et al. [74] | MLP-Adam, SVR-RBF, XGBoost | 105 (dead oil), 74 (live oil) | 80% train + 20% test | CO2 solubility, IFT | Ps, T, MW, γ, Pb | SVR-RBF provided the best accuracy. | Limited comparisons between different models. | Given the paper's publication year, the dataset is small. | 7 |
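Rostami et al. [117], like Andersen et al. [98] and Ahmadi et al. [106], use least-squares support vector machines (LSSVM). Unlike a standard SVM, an LSSVM is trained by solving one linear system. A minimal regression sketch, assuming an RBF kernel and fixed hyperparameters; in the cited works these are tuned (for example with PSO, GA, GWO or GSA in [98]), and the sample data are hypothetical scaled values rather than measured CO2 solubilities. The regularization constant is named `reg_gamma` to avoid a clash with the specific gravity γ used in the tables.

```python
import math
from typing import List, Sequence


def rbf_kernel(a: Sequence[float], b: Sequence[float], sigma: float) -> float:
    """Gaussian (RBF) kernel exp(-||a - b||^2 / (2 sigma^2))."""
    return math.exp(-sum((ai - bi) ** 2 for ai, bi in zip(a, b)) / (2.0 * sigma ** 2))


def solve_linear(a: List[List[float]], rhs: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting (adequate for small systems)."""
    n = len(a)
    m = [row[:] + [r] for row, r in zip(a, rhs)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x


class LSSVMRegressor:
    """LS-SVM regression; reg_gamma is the regularization constant (not specific gravity)."""

    def __init__(self, reg_gamma: float = 100.0, sigma: float = 1.0) -> None:
        self.reg_gamma = reg_gamma
        self.sigma = sigma

    def fit(self, x: Sequence[Sequence[float]], y: Sequence[float]) -> "LSSVMRegressor":
        n = len(x)
        # Dual system: [[0, 1^T], [1, K + I/reg_gamma]] @ [b, alpha] = [0, y]
        a = [[0.0] + [1.0] * n]
        for i in range(n):
            row = [1.0] + [rbf_kernel(x[i], x[j], self.sigma) for j in range(n)]
            row[i + 1] += 1.0 / self.reg_gamma
            a.append(row)
        sol = solve_linear(a, [0.0] + list(y))
        self.b, self.alpha = sol[0], sol[1:]
        self.support = [list(xi) for xi in x]  # every training point is a support vector
        return self

    def predict(self, x: Sequence[Sequence[float]]) -> List[float]:
        return [
            self.b + sum(al * rbf_kernel(xi, sv, self.sigma)
                         for al, sv in zip(self.alpha, self.support))
            for xi in x
        ]


# Hypothetical scaled inputs (e.g. saturation pressure, temperature) and solubility targets
x_train = [[0.0, 0.1], [0.25, 0.3], [0.5, 0.2], [0.75, 0.8], [1.0, 0.6]]
y_train = [0.10, 0.22, 0.35, 0.51, 0.64]
model = LSSVMRegressor(reg_gamma=1e4, sigma=0.5).fit(x_train, y_train)
print(model.predict([[0.6, 0.5]]))
```

Larger `reg_gamma` makes the model fit the training points more closely (the training residual equals alpha_i / reg_gamma), while `sigma` controls how smooth the fitted surface is; both trade off accuracy against overfitting.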

| Authors | Methods | Dataset | Splitting | Objectives | Inputs | Results | Evaluations | Limitations | Rating * |
|---|---|---|---|---|---|---|---|---|---|
| Moosavi et al. [120] | MLP, RBF (GA, COA) | 214 | 80% train + 20% test; 75% train + 25% test; 90% train + 10% test | Oil flow rate and recovery factor | Surfactant type, ϕ, K, PV of core, Soi, injected foam PV | Both MLP and RBF provide high accuracy, with R2 up to 0.99. | The earliest research on CO2-foam EOR, focusing only on laboratory data. | Only two methods were studied, with no comparison against other ML algorithms. | 8 |
| Raha Moosavi et al. [121] | RBF (TLBO, PSO, GA, ICA) | 214 | 80% train + 20% test | Oil flow rate and recovery factor | Surfactant type, ϕ, K, PV of core, Soi, injected foam PV | RBF-TLBO provides the highest accuracy. | Showed that ML can provide high accuracy (R2 up to 0.999) but is limited to coreflood data. | Limited to laboratory experiments. | 8 |
| Iskandarov et al. [119] | DT, RF, ERT, GB, XGBoost, ANN | 145 | 70% train + 30% test | Apparent viscosity of surfactant-stabilized CO2 foam | Shear rate, Darcy velocity, surfactant concentration, salinity, foam quality, T, and pressure | ML can provide reliable predictions, and ANN provides the highest accuracy. | Showed that ML can make predictions for both bulk foam and sandstone formations under various conditions. | The dataset is relatively small, and the models may overfit. | 8 |
| Khan et al. [122] | XGBoost | 200 | 70% train + 30% test | Oil recovery factor | Foam type, Soi, total PV tested, ϕ, K, injected foam PV | XGBoost can provide high accuracy. | Showed that XGBoost can be used for CO2-foam EOR, but is limited to laboratory data. | Only one ML model is applied, with no comparison to other models. | 7 |
| Vo Thanh et al. [123] | GRNN, CFNN-LM, CFNN-BR, XGBoost | 260 | 70% train + 30% test | Oil recovery factor | IOIP, TPVT, ϕ, K, injected foam PV | Porosity is the most significant parameter. GRNN has the highest accuracy. | Comprehensive and detailed description. | Limited to laboratory experiments. | 9 |
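Vo Thanh et al. [123] found that a general regression neural network (GRNN) gave the highest accuracy. A GRNN needs no iterative training: its output is a Gaussian-weighted average of the stored training targets, controlled by a single smoothing parameter sigma. A minimal sketch, assuming inputs already scaled to comparable ranges; the feature values and recovery factors are hypothetical, not data from the study, and in practice sigma would be tuned on held-out data.

```python
import math
from typing import Sequence


def grnn_predict(
    x_train: Sequence[Sequence[float]],
    y_train: Sequence[float],
    x: Sequence[float],
    sigma: float = 0.1,
) -> float:
    """General regression neural network output for one query point.

    Each training sample acts as a pattern unit whose Gaussian activation
    weights its target; the output is the normalized weighted sum.
    """
    weights = [
        math.exp(-sum((a - b) ** 2 for a, b in zip(xi, x)) / (2.0 * sigma ** 2))
        for xi in x_train
    ]
    total = sum(weights)
    if total == 0.0:
        raise ValueError("all pattern activations underflowed; increase sigma")
    return sum(w * yi for w, yi in zip(weights, y_train)) / total


# Hypothetical scaled inputs (porosity, permeability, injected foam PV) and recovery factors
x_train = [[0.2, 0.1, 0.3], [0.5, 0.4, 0.5], [0.8, 0.9, 0.7]]
y_train = [0.25, 0.40, 0.58]
print(grnn_predict(x_train, y_train, [0.45, 0.35, 0.5], sigma=0.2))
```

A small sigma makes the prediction follow the nearest training sample, while a large sigma pulls it toward the mean of all targets; the prediction always stays within the range of the training targets.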

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Du, X.; Salasakar, S.; Thakur, G. A Comprehensive Summary of the Application of Machine Learning Techniques for CO2-Enhanced Oil Recovery Projects. Mach. Learn. Knowl. Extr. 2024, 6, 917-943. https://doi.org/10.3390/make6020043

Du X, Salasakar S, Thakur G. A Comprehensive Summary of the Application of Machine Learning Techniques for CO2-Enhanced Oil Recovery Projects. Machine Learning and Knowledge Extraction. 2024; 6(2):917-943. https://doi.org/10.3390/make6020043

Chicago/Turabian Style: Du, Xuejia, Sameer Salasakar, and Ganesh Thakur. 2024. "A Comprehensive Summary of the Application of Machine Learning Techniques for CO2-Enhanced Oil Recovery Projects" Machine Learning and Knowledge Extraction 6, no. 2: 917-943. https://doi.org/10.3390/make6020043

APA Style: Du, X., Salasakar, S., & Thakur, G. (2024). A Comprehensive Summary of the Application of Machine Learning Techniques for CO2-Enhanced Oil Recovery Projects. Machine Learning and Knowledge Extraction, 6(2), 917-943. https://doi.org/10.3390/make6020043