Abstract

Alzheimer’s disease (AD) is a progressive disease of old age that develops in stages and affects different regions of the brain. Research on detecting AD and its stages has advanced with both single-modality and multimodality approaches; however, reliable techniques for detecting AD and its stages still require further research. In this study, a multimodal image-fusion method is first proposed for fusing two modalities, PET (Positron Emission Tomography) and MRI (Magnetic Resonance Imaging). The features obtained from the fused and non-fused biomarkers are then passed to an ensemble classifier with a Random Forest-based feature selection strategy. Three classes are used in this work: AD, MCI (Mild Cognitive Impairment), and CN (Cognitive Normal). In the binary classifications, AD vs. CN and MCI vs. CN each attained an accuracy (Acc) of 99%, and AD vs. MCI achieved an Acc of 91%. The multi-class classification (AD vs. MCI vs. CN) achieved an Acc of 96%.

1. Introduction

Alzheimer’s disease (AD) is a debilitating neurological disorder affecting millions of people worldwide. AD is now a leading cause of death in old age [1], and current trends indicate that the number of cases will rise in the coming years. Biologically, the condition is associated with the build-up of a protein called beta-amyloid in the brain, which leads to the loss of nerve cells [2]. Around 55 million people are affected by dementia, a severe neurological disorder, with more than 60% of cases occurring in low- and middle-income countries. Economic, social, and mental stress are among the leading factors contributing to the onset of AD. As a result, there is a growing need to better understand the disease and to identify effective treatments. Artificial intelligence (AI) techniques are now commonly used to detect AD and its sub-stages [3], using both single-modality and multimodality methods. However, although researchers have made many contributions in this field, the most appropriate and effective methods have yet to be identified. AD has various biological and other causes, and its primary causes cannot be pinpointed with a single-modality approach [4]. Other methods, such as clinical assessments, demographic information, and MMSE scores, have also been used, but none has proven to be a reliable approach for AD detection [5]. In their search for a reliable detection method, many experts are exploring multimodal approaches, which combine biomarkers such as MRI and PET scans to improve diagnostic accuracy [6].

In medical image fusion, combining different input images into a single fused image is a natural way to provide essential, complementary information for accurate diagnosis and treatment. Image fusion is generally performed through pixel-level intensity matching [7]. This approach, also known as multimodal fusion, is considered more efficient than feature-fusion techniques because it represents information more effectively and presents a broader range of features [8,9]. Fusing components from different brain regions allows a more precise visualization, which can help identify the various stages of the disease, i.e., AD, Mild Cognitive Impairment (MCI), and Cognitive Normal (CN). This study compares binary and multi-class analyses to characterize the differences between these stages. For classification, current machine learning (ML) and ensemble learning (EL) techniques are used. A multi-model, multi-slice 2D CNN ensemble learning architecture has been reported as a highly effective approach for classifying AD vs. CN and AD vs. MCI, with an accuracy (Acc) of 90.36% and 77.19%, respectively [10]. Another ensemble technique using the same architecture yielded Acc values of 90.36%, 77.19%, and 72.36% in comparisons among AD, MCI, and CN [11]. In another study, a new subset of three relevant features was used to build an ensemble model based on a weighted average of the top two classifiers (LR and linear SVM); this weighted-average voting classifier outperformed the base classifiers, with an Acc of 0.9799 ± 0.055 and an AUPR of 0.9108 ± 0.015 [12]. The CNN-EL ensemble classifier, using the MRI modality of the MCInc group, yielded Acc values of 0.84 ± 0.05, 0.79 ± 0.04, and 0.62 ± 0.06 for identifying subjects with MCI or AD [13]. A bidirectional GAN is an end-to-end network that uses image contexts and latent vectors to synthesize PET images from brain MR images [14].

MRI is commonly used to detect AD. It is a highly effective imaging tool that captures the anatomy of different brain regions [15] and reveals changes in brain structure before clinical symptoms appear. MRI reflects alterations in the cortex, white matter (WM), and subcortical regions, which can help detect abnormalities caused by AD [16]. Brain shrinkage, which is frequent in AD, has been observed together with reductions in the size of specific brain areas such as the hippocampus (Hp), entorhinal cortex (Ec), temporal lobe (Tl), parietal lobe (Pl), and prefrontal cortex (Pc) [17]. These areas are critical for cognitive skills such as memory, perception, and speech, which are impaired in AD [18]. The degree and pattern of atrophy in these areas provide important information for studying the prognosis of AD. MRI scans can also detect lesions or anomalies in the brain that signal AD, supporting early identification and therapy [19]. PET scans are also commonly used for the diagnosis of AD [20]. PET provides functional brain imaging by measuring glucose metabolism and detecting AD-associated changes in brain function [21]. Depending on the tracer, PET can detect amyloid plaques and Tau proteins, which are hallmarks of AD, or measure glucose metabolism in the brain. Various PET modalities, such as amyloid PET, FDG-PET, and Tau PET, have unique characteristics for identifying AD biomarkers: amyloid PET uses a radiotracer that binds amyloid plaques, FDG-PET measures glucose metabolism, and Tau PET uses a radiotracer that binds Tau proteins, visualizing their presence in the brain.

Structural MRI can reveal alterations in brain structure, while functional PET images capture the metabolic characteristics of the brain, enhancing the ability to detect lesions. FDG-PET distinctly shows quantitative hypometabolism, including a component in the precuneus, in MCI patients. A volume-of-interest analysis comparing global GM in AD patients with healthy controls revealed reduced CBF and FDG uptake [22]. Similarly, one study used MRI, FDG-PET, and CSF biomarkers with a kernel combination strategy to differentiate AD (or MCI) from healthy controls, and the combined method correctly identified 91.5% of MCI converters and 73.4% of non-converters [23]. Both classifier-level and deep learning-based feature-level LUPI algorithms can enhance the performance of single-modal neuroimaging-based CAD for AD [24]. CERF integrates feature creation, feature selection, and sample classification to identify the optimal combination of methods and provides a framework for AD diagnosis [25]. Although these methods offer innovative frameworks, they still fall short in detecting AD and its subtypes effectively. Consequently, multimodal approaches combining MRI and PET images have been suggested to improve the accuracy of AD classification. Fusing PET and MRI images for machine learning has many advantages for analyzing and diagnosing neurological disorders such as AD [26]. Combining functional information from PET with structural detail from MRI gives models a broader range of features, improving accuracy and robustness. This integration compensates for the limitations of each individual modality, allowing algorithms to exploit the strengths of both imaging techniques [27]. By capturing both functional and structural changes in the brain, machine learning models can better characterize disease progression, ultimately improving early diagnosis, prognosis, and treatment planning.
To validate this study, various performance metrics are compared across these classes. The main contributions of this article are as follows:

- This work proposes an image-fusion technique for PET and T1-weighted MRI scans, together with feature fusion of the fused and non-fused imaging modalities, for detecting AD.

- This work proposes ensemble classification methods: gradient boosting (GB) combined with an SVM with an RBF kernel (SVM_RBF) for multi-class classification, and SVM_RBF combined with AdaBoost (ADA), GB, and random forest (RF) for binary classification of AD.

- This work achieves adequate accuracy (Acc) in both multi-class and binary classification of AD and its subtypes: 91% for AD vs. MCI, 99% for both AD vs. CN and MCI vs. CN, and 96% for the multi-class AD vs. MCI vs. CN task.
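The paper does not state how the member classifiers' outputs are combined. As a minimal sketch, assuming soft voting (averaging predicted class probabilities) and keeping the features whose random-forest importance is at least the mean importance, the combination step could look as follows. The function names, the optional weights, and the class-index order (for example 0 = AD, 1 = MCI, 2 = CN) are hypothetical; in scikit-learn the same ideas correspond to `VotingClassifier(voting="soft")` and `SelectFromModel` applied to a fitted `RandomForestClassifier`.

```python
import numpy as np


def select_by_importance(importances, threshold=None):
    """Return indices of features whose importance is >= threshold.

    Assumption: like scikit-learn's SelectFromModel for tree ensembles,
    the threshold defaults to the mean importance.
    """
    importances = np.asarray(importances, dtype=float)
    if threshold is None:
        threshold = importances.mean()
    return np.flatnonzero(importances >= threshold)


def soft_vote(probas, weights=None):
    """Combine class-probability matrices from several classifiers.

    probas: list of arrays, each of shape (n_samples, n_classes), e.g. from
    GB and SVM_RBF in the multi-class setting (hypothetical inputs).
    Returns the index of the class with the highest (weighted) mean probability.
    """
    stacked = np.stack([np.asarray(p, dtype=float) for p in probas])  # (n_clf, n_samples, n_classes)
    avg = np.average(stacked, axis=0, weights=weights)
    return avg.argmax(axis=1)
```

Soft voting is only one option; hard (majority) voting over predicted labels would be an equally plausible reading of the text.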

In this article, Section 2 reviews previous research on AD detection and the importance of different methods in both single- and multimodal contexts, focusing on ML, DL, and EL methods. Section 3 describes the data set used in the experiments, and Section 4 outlines the methodology, including the preprocessing techniques and the proposed image-fusion method. Section 5 analyzes the results of the ML and ensemble (EL) models, including binary and multi-class results, and discusses the methods used. Finally, Section 6 presents the conclusions of the experimental work.

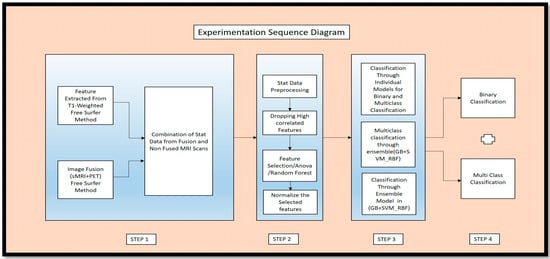

Figure 1 provides an overview of the key experiments in this study as an experimental sequence diagram, outlining the basic experimental procedures described in the article.

Figure 1.

Experimental Sequence Diagram of Proposed Work.

2. Recent Study

AD generally affects older people, and no preventive or curative treatment is currently available for these patients. The number of cases is increasing sharply, with no decline observed [28]. Several lines of research address this issue, such as neuroimaging analysis to detect AD in its early stages [29]. One of the most commonly examined regions is the Hp, whose volume can be measured in affected patients; a decrease in Hp volume is associated with AD [30]. To examine this, the semi-automatic segmentation tool ITK-SNAP has been used to record the age, gender, and left and right Hp volumes of AD patients [31], and these values serve as neuroimaging data. These data, together with factors such as age, visits, education, and MMSE score, are processed using ML algorithms to predict AD [32]. The Extended Probabilistic Neural Network (EANN) and the Dynamic EL Algorithm (DELA) are also increasingly used for AD detection, and Logistic Regression (LR) achieves competitive Acc compared with other methods.

Single-modality and multimodality approaches are two ways of using biomarkers, and neuroimaging provides one form of biomarker for detecting AD and its stages. Multimodality helps identify a crucial hybrid set of features that can support the diagnosis and detection of AD, and these integrations are achieved through various ML and DL techniques [33]. DL methods also show promising results in detecting AD and its stages, specifically MCI-to-AD conversion, by combining various data modalities to distinguish the cortical and subcortical regions affected in AD and its stages [34]. Multimodal fluorescence optical imaging has also been used for AD detection [35]. Fingerprints have been used to identify AD, and this approach shows clear differences between the stages of AD. Acoustic biomarkers play an essential role in detecting subtypes of AD; processed with ML techniques, these data provided 83.6% Acc in distinguishing AD from NC subjects [36]. The retina has also been considered for identifying AD and other neurological disorders [37]. Cognitive scores, medication history, and demographic data are another effective source of information for classifying AD and its subtypes using ML techniques [38]. Tri-modal approaches are multimodal techniques for identifying hybrid features in the cortical region. Furthermore, ADx is an integrated diagnostic system that combines acoustics, microfluidics, and orthogonal biosensors for clinical use [39]. ML and adversarial hypergraph fusion have also been applied to predict AD and its stages [40].

A novel EL technique can accurately diagnose AD, even in its early stages. It improves detection Acc by integrating various classifiers trained on neuroimaging features and outperforms several state-of-the-art methods [41]. In another study, diagnostic precision was improved by applying the Decision Tree algorithm and three EL techniques to OASIS clinical data, with bagging achieving remarkable Acc [42]. To obtain a more accurate and reliable model, researchers proposed an ensemble voting approach that combines the predictions of multiple classifiers, yielding strong results for older individuals in the OASIS data set [43]. Another investigation introduced a computer-assisted method for early AD diagnosis that uses MRI images and various 3D classification architectures for image processing and analysis, further improving the outcomes of EL techniques [44].

For detailed longitudinal studies of the cortical regions of the brain, various state-of-the-art methods are available, including SVM, DT, RF, and KNN, which have shown reliable classification of the different stages of AD [45]. These supervised learning approaches have classified AD and its stages effectively [46]. A DL-based framework has been used to identify individuals in the AD, CN, and MCI stages [47]. Combining MRI and PET scans with a wavelet transform-based multimodal fusion approach enables the prediction of AD [48]. A multimodal RNN has been used to identify progression from MCI to AD [49]. A comprehensive diagnostic tool detects AD and its stages using hybrid cross-dimension neuroimaging biomarkers, longitudinal CSF, and cognitive scores from the ADNI database [50]. A multimodal DL technique can also be used in clinical trials to identify individuals at high risk of developing AD [51].

These studies demonstrate the effectiveness of DL and ML methods in single-, dual-, and tri-modality contexts for AD detection. A major challenge when employing DL methods for AD detection is the difficulty of visualizing or validating the precise features that contribute to identifying AD and its stages, which makes it hard to provide evidence for the features responsible for a prediction. Moreover, DL methods require large amounts of labeled data to classify AD and its stages accurately. To assist in AD diagnosis, an image-fusion technique known as ‘GM-PET’ combines MRI and FDG-PET grey matter (GM) brain tissue sections through registration and mask encoding, and a 3D multi-scale CNN is used to assess its effectiveness [52]. In contrast, our research employs ensemble learning techniques to classify the various subtypes. By using ML methods, we perform in-depth analyses that pinpoint AD-related regions among cortical and subcortical features while also evaluating the effectiveness of the fusion technique.

Therefore, ML methods offer several advantages over DL for detecting AD and its stages. They are often more straightforward to implement and give more detailed insight into the factors underlying AD and its subtypes. ML methods using biomarker features such as molecular, audio, fingerprint, structural MRI, functional MRI, PET, and clinical data have shown good performance even with smaller data sets, and ML models are preferred because they provide a better understanding of the factors that contribute to the disease and its stages. However, challenges remain given the enormous volume of medical data. EL is favored over traditional ML models because, despite the challenges of vast medical data sets and the quest for optimal Acc, EL techniques help overcome these limitations and can significantly improve diagnostic performance compared with standard ML techniques. Although DL has an advantage when dealing with complex data such as fMRI and PET, ML and EL are practical approaches for detecting AD and its distinct stages.

3. Data Set

The data set was obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database (adni.loni.usc.edu; Laboratory of Neuro Imaging, University of Southern California, Los Angeles, CA) and is summarized in Table 1. All images are raw and were not preprocessed. The PET images were acquired in December, and the data set comprises subjects from 2010 to 2022; the PET data for all AD, MCI, and CN subjects were downloaded in November 2022 for preprocessing and feature extraction. Similarly, the sMRI data for AD, MCI, and CN subjects span 2006 to 2014 and were downloaded in April 2022. Rather than matching subjects across modalities, only the image quantities and structural mapping were matched for the analysis.

Table 1.

Data set used in this research.

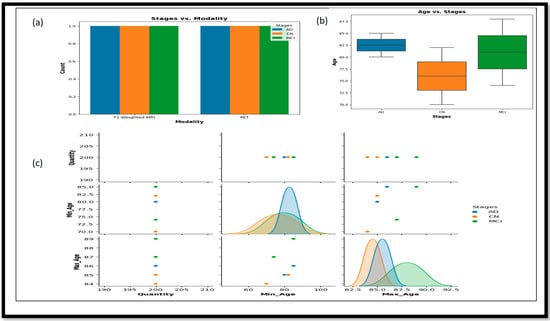

Table 1 describes the two modalities used, T1-weighted MRI and PET. The data include both male and female subjects, generally aged between 70 and 90 years, with an average age of 80. A total of 1200 images were used in this work, with 200 images for each stage (AD, MCI, and CN) in each modality (3 stages × 2 modalities × 200 images = 1200). The images are raw and unfiltered and had not been processed beforehand. Figure 2 shows the relationships between various attributes using different plots.

Figure 2.

(a) Relationship between stages and modalities. (b) Box plot of the relationship between age and stage in the data set. (c) Correlations and interactions between the attributes Max_Age, Min_Age, and Quantity.

In Figure 2a, the bar plot of Stages vs. Modality shows the distribution of the stages (AD, CN, and MCI) across the two imaging modalities, T1-weighted MRI and PET. In Figure 2b, the box plot of Age vs. Stages illustrates the age distribution for each stage, highlighting differences and similarities in age ranges among the AD, CN, and MCI groups. Lastly, the pair plot in Figure 2c shows pairwise relationships between the data set columns (Min_Age, Max_Age, and Quantity), with colors representing the stages.

4. Methods

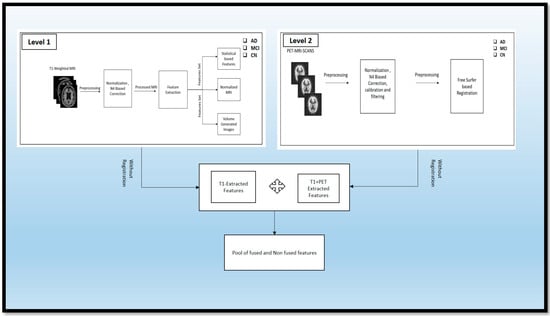

This section describes the preprocessing steps required to prepare the data for fusion and the methods used to perform the fusion. Section 4.1 describes the preprocessing of the T1-weighted images and the extraction of statistical and volume-based features. Section 4.2 describes the preprocessing of the PET scans. Section 4.3 describes the fusion of PET and MRI through registration. Section 4.4 describes the fusion of features from the fused and non-fused modalities. Section 4.5 describes the traditional ML methods used, and Section 4.6 describes the ensemble methods used to classify AD, MCI, and CN.

4.1. MRI SCANS

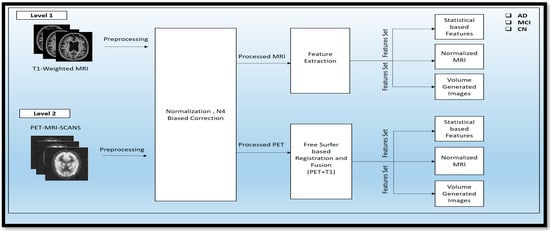

MRI scans were acquired for the three classes AD, MCI, and CN. These raw scans contain both informative and unwanted features for disease diagnosis. To reduce unwanted and noise-related elements, the preprocessing steps shown in Figure 3, including normalization and N4 bias correction, were applied. The preprocessed MRI scans were then passed to an automatic pipeline for feature extraction, which produces various statistical and volume-based features.

Figure 3.

Representation of the Two-Tier Process for Preprocessing and Feature Extraction in PET and sMRI Modalities.

4.1.1. Normalization

The raw images from the ADNI data set were unprocessed, so a normalization technique was applied to reduce unwanted variation. This technique adjusts the intensity range of each T1-weighted MRI image, which supports proper visualization and interpretation of the brain regions affected by AD and its stages and is necessary to identify the anatomical features of the cortical area correctly. Each pixel intensity is rescaled relative to the minimum and maximum intensities so that all intensities lie on a common scale (0 to 1, or 0 to 100 when expressed as a percentage). The normalized intensity is calculated as shown in Equation (1):

$$N_{pv} = \frac{P_v - M_v}{M_{av} - M_v} \quad (1)$$

In Equation (1), $N_{pv}$ is the normalized pixel intensity, $P_v$ is the original pixel intensity, $M_v$ is the minimum pixel intensity, and $M_{av}$ is the maximum pixel intensity.
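A minimal NumPy sketch of Equation (1) follows, assuming the minimum and maximum are taken over the whole image or volume (the text does not say whether this is done per slice or per volume). The `scale` argument is an illustrative addition that yields the 0 to 100 percentage range, and the guard for constant images is an assumption not described in the paper.

```python
import numpy as np


def minmax_normalize(image, scale=1.0):
    """Equation (1): N_pv = (P_v - M_v) / (M_av - M_v), optionally scaled (e.g. 100 for percent)."""
    image = np.asarray(image, dtype=float)
    m_v, m_av = image.min(), image.max()  # minimum and maximum pixel intensity
    if m_av == m_v:  # constant image: avoid division by zero (assumed behaviour)
        return np.zeros_like(image)
    return scale * (image - m_v) / (m_av - m_v)
```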

4.1.2. N4 Bias Correction

After normalization, the T1-weighted images were processed with the N4 bias correction method to remove intensity non-uniformities introduced by the MRI scanners. This essential preprocessing step removes artifacts that differ between scanners. It was applied to the MRI, PET, and DTI scans for better visualization. The correction is computed as shown in Equation (2):

$$N4_{v} = \frac{1}{1 + e^{-k\,(p_v - c)}} \quad (2)$$

In Equation (2), the corrected pixel intensity $N4_{v}$ is computed from the exponential of the pixel value $p_v$ obtained from the different AD imaging modalities. The parameter $c$ shifts the curve along the intensity axis, and $k$ controls the slope of the curve. After preprocessing, the processed T1-weighted MRI scans of the MCI, AD, and CN stages are fed to the feature extraction step, which uses an automatic pipeline.
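Equation (2), as written, is a logistic (sigmoid) intensity mapping with centre `c` and slope `k`; the sketch below implements exactly that formula. The paper does not report values for `k` and `c`, so any values passed in are hypothetical. Note that the N4 algorithm itself estimates a smooth multiplicative bias field (toolkits such as SimpleITK expose it as `N4BiasFieldCorrectionImageFilter`); this snippet covers only the mapping in Equation (2).

```python
import numpy as np


def sigmoid_intensity_map(image, k, c):
    """Equation (2): N4_v = 1 / (1 + exp(-k * (p_v - c))).

    k: slope of the curve; c: shift (centre) of the curve. Both are
    hypothetical tuning parameters, typically applied to intensities
    already normalized with Equation (1).
    """
    image = np.asarray(image, dtype=float)
    return 1.0 / (1.0 + np.exp(-k * (image - c)))
```

A pixel exactly at `c` maps to 0.5, and larger `k` gives a steeper contrast stretch around that point.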

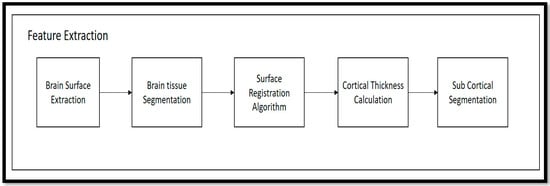

4.1.3. Feature Extraction

Following preprocessing, the T1-weighted images undergo feature extraction with FreeSurfer, which consists of several fundamental algorithms that identify features in the brain regions. The Brain Surface Extraction step combines surface modeling and deformable models to identify brain regions in the MRI scans; it identifies curves representing the boundaries of the brain regions, which are then refined using further deformable methods in the automatic pipeline. The Brain Tissue Segmentation step uses a deformable model and an MNI-template-based atlas to segment the brain into white matter (WM), grey matter (GM), and cerebrospinal fluid (CSF). The Surface Registration step registers each subject’s MRI to a standard brain template and to the other scans to examine regional brain volume and cortical thickness; this involves normalizing the intensities of the subject’s MRI scans to the standard template. Cortical thickness is estimated through surface modeling and linear registration of the tissue segmentation results. To segment subcortical structures such as the amygdala and Hp, atlas-based segmentation and intensity normalization are used.

After the pipeline shown in Figure 4 completes, the output is organized into folders of subject data, including the anatomical image, cortical surfaces, WM segmentation, and GM segmentation, and statistical data are generated from the analysis of the brain data.
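FreeSurfer writes its regional statistics as whitespace-separated tables in `.stats` files, with a `# ColHeaders` comment line naming the columns (as in `aseg.stats`). A minimal pure-Python sketch for turning such a table into per-structure feature dictionaries is shown below; the function name is hypothetical, and it assumes every column except `StructName` is numeric.

```python
def parse_freesurfer_stats(text):
    """Parse a FreeSurfer-style .stats table into {StructName: {column: value}}.

    Assumes a '# ColHeaders ...' comment precedes the data rows and that
    all columns other than StructName hold numbers.
    """
    headers = None
    rows = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if line.startswith("#"):
            parts = line.lstrip("#").split()
            if parts and parts[0] == "ColHeaders":
                headers = parts[1:]  # column names for the data rows
            continue  # other comment lines hold metadata, skipped here
        if headers is None:
            raise ValueError("data row found before '# ColHeaders' line")
        record = dict(zip(headers, line.split()))
        name = record.pop("StructName")
        rows[name] = {key: float(value) for key, value in record.items()}
    return rows
```

Each resulting dictionary can then be flattened into one feature row per subject for the classifiers.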

Figure 4.

FreeSurfer-based Feature Extraction from T1-weighted MRI Scans of AD, MCI, CN.

Algorithm 1 describes how the raw T1-weighted data are transformed into processed T1wMRIs through normalization and N4 bias correction, which remove artifacts and make the images uniform. Feature extraction then proceeds as a chain in which each step takes the previous step’s output: Brain Surface Extraction (on T1wMRI, producing T1BSE), Brain Tissue Segmentation (on T1BSE, producing T1BTS), Surface Registration (on T1BTS, producing T1SR), Cortical Thickness (on T1SR, producing T1CT), and Sub-Cortical Registration (on T1CT), which produces volumetric shapes, constructed regions, and the various cortical and subcortical brain regions in stat files. After the level 1 experiments are completed, similar preprocessing steps are applied to prepare the PET modality for fusion.

| Algorithm 1 Feature Extraction from the Single Modality (T1-weighted MRI Scans) | |

| 1: | Input: Raw T1-weighted Image |

| 2: | Output: Statistical-based features |

| 3: | For i = 1 to n (T1wMRIs) do |

| 4: | { |

| 5: | Normalization (T1wMRIs); |

| 6: | {Npv = (Pv − Mv)/(Mav − Mv)} |

| 7: | N4 Bias Correction(T1wMRIs); |

| 8: | {N4v = (1/(1 + exp(−k ∗ (pv − c))))} |

| 9: | return T1wMRI; |

| 10: | } |

| 11: | Then T1wMRI -> processed by the FreeSurfer method |

| 12: | { |

| 13: | Brain Surface Extraction (T1WMRI) |

| 14: | Brain Tissue Segmentation (T1BSE) |

| 15: | Surface Registration (T1BTS) |

| 16: | Cortical Thickness (T1SR) |

| 17: | Sub-Cortical Registration (T1CT) |

| 18: | } |

| 19: | End |

4.2. PET SCANS

Before fusion, preprocessing of the PET scans is one of the most important steps. Preprocessing removes noise, reduces artifacts, and improves the signal-to-noise ratio of the scans. The most common preprocessing techniques for PET scans include N4 bias correction, normalization, and registration. Artifacts can be reduced with techniques such as median filtering, which removes artifacts while retaining the original structure of the image. Here, artifacts are removed from the PET scans using an adaptive noise-filtering technique, in which the noise component of the input signal is estimated and subtracted while preserving the original form of the signal.

$$\eta_{new} = (1 - \alpha)\,\eta_{old} + \alpha\,x \quad (3)$$

In Equation (3), $\eta_{new}$ is the new noise level, $\eta_{old}$ is the old noise level, $\alpha$ is the adaptation rate, and $x$ is the current noise sample.
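Equation (3) is an exponential moving average of the noise level. A minimal sketch follows; how the noise samples `x` are obtained from a PET scan is not specified in the paper, so the input stream and the choice of `alpha` are assumptions, and the function names are hypothetical.

```python
def update_noise_level(old_level, sample, alpha):
    """Equation (3): eta_new = (1 - alpha) * eta_old + alpha * x."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return (1.0 - alpha) * old_level + alpha * sample


def track_noise(samples, alpha, initial=0.0):
    """Apply Equation (3) sequentially over a stream of noise samples."""
    level = initial
    history = []
    for x in samples:
        level = update_noise_level(level, x, alpha)
        history.append(level)
    return history
```

A larger `alpha` makes the estimate react faster to new samples; a smaller one smooths it more strongly.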

Filtering uses low-pass filters to remove high-frequency noise, or high-pass filters to remove slowly varying artifacts. Calibration ensures that pixel intensities are consistent across images, and normalization adjusts pixel intensities so that they lie within a fixed range. Finally, registration aligns the images across multiple PET scans. Noise is reduced with the adaptive noise-reduction technique, which suppresses noise without altering the original image content. The preprocessed PET scans are then passed to the FreeSurfer-based registration method.
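Median filtering, mentioned above as one option for artifact reduction, replaces each pixel with the median of its neighbourhood, which removes isolated spikes while keeping edges. A minimal 2D NumPy sketch follows; the 3 × 3 window and edge padding are assumptions (the paper gives no kernel size), and `scipy.ndimage.median_filter` is a library alternative that also handles 3D volumes.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def median_filter_2d(image, size=3):
    """Replace each pixel by the median of its size x size neighbourhood (edge-padded)."""
    if size % 2 == 0:
        raise ValueError("size must be odd")
    image = np.asarray(image, dtype=float)
    pad = size // 2
    padded = np.pad(image, pad, mode="edge")  # repeat border pixels
    windows = sliding_window_view(padded, (size, size))  # shape (H, W, size, size)
    return np.median(windows, axis=(-2, -1))
```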

Algorithm 2 summarizes the basic preprocessing approach for PET scans. PET scans carry rich functional features, but these features cannot be fully exploited from this modality alone; PET requires fusion with the T1-weighted image to give a clear view of the amyloid protein and the affected cortical regions of the brain. The PET scans underwent N4 bias correction, normalization, and adaptive noise filtering, which prepare them for fusion and subsequent feature extraction.

| Algorithm 2 Preprocessing approach in Single Modality (PETscans1) | |

| 1: | Input: Raw PETscans |

| 2: | Output: Preprocessed PETscans |

| 3: | For i = 1 to n (PETscans) do |

| 4: | { |

| 5: | N4 Bias Correction (PETscans1); |

| 6: | {N4v = (1/(1 + exp(−k ∗ (pv − c))))} |

| 7: | Normalization (PETscans2); |

| 8: | {Npv = (Pv − Mv)/(Mav − Mv)} |

| 9: | Adaptive noise filtering |

| 10: | PETscans = (1 − α) ∗ PETscans_old + α ∗ x |

| 11: | } |

4.3. Multimodality Image Fusion

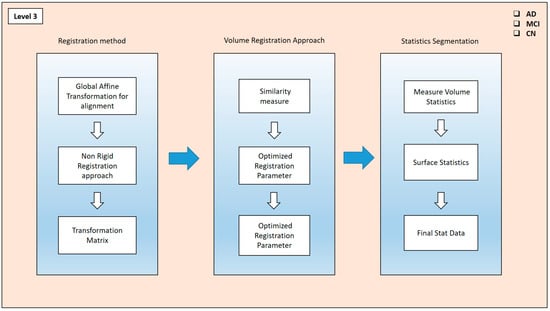

Image fusion combines different image modalities to provide a hybrid set of features, as shown in Figure 5. Pixel-level, feature-level, and decision-level fusion are the basic types of image fusion in practice. We chose pixel-level fusion to examine the actual differences in the cortical regions between AD and its stages in the fused modalities; this helps reduce noise and provides better resolution of the affected brain areas. The T1-weighted scans were processed with FreeSurfer’s recon-all command, which runs a pipeline of preprocessing steps and computes significant features, such as volume, area, and mean, for the different cortical regions of the brain. The T1 and PET scans were aligned using a registration method that applies a global affine transformation. This transformation enables a better comparison of the two modalities, which can be enriched with GM, WM, and cortical thickness information from the brain regions, and it was performed using three translations and an aligned adaptation approach. After registration, the similarity between the two scans had to be determined from their mutual characteristics. After fusion, the volume registration approach warps the PET and MRI images by mapping the source volume into the target volume’s space; to align these volumes in a unified space, the mri_vol2vol method applies their transformation matrix. The similarity between the two modalities was then analyzed.
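The paper does not specify the pixel-level fusion rule or the similarity measure. As a minimal sketch, assuming the PET and T1 images are already co-registered and intensity-normalized, a weighted average can serve as the pixel-level fusion rule, and histogram-based mutual information (a common choice for multimodal similarity) can score how well the two images agree. The weight `w_mri`, the bin count, and the function names are hypothetical.

```python
import numpy as np


def fuse_pixel_level(mri, pet, w_mri=0.5):
    """Weighted-average pixel-level fusion of two co-registered, normalized images."""
    mri = np.asarray(mri, dtype=float)
    pet = np.asarray(pet, dtype=float)
    if mri.shape != pet.shape:
        raise ValueError("images must be registered to the same grid")
    return w_mri * mri + (1.0 - w_mri) * pet


def mutual_information(a, b, bins=32):
    """Histogram-based mutual information (in nats) between two images."""
    joint, _, _ = np.histogram2d(np.ravel(a), np.ravel(b), bins=bins)
    pxy = joint / joint.sum()              # joint probability
    px = pxy.sum(axis=1, keepdims=True)    # marginal of a, shape (bins, 1)
    py = pxy.sum(axis=0, keepdims=True)    # marginal of b, shape (1, bins)
    nz = pxy > 0                           # skip empty cells (0 * log 0 = 0)
    return float(np.sum(pxy[nz] * np.log(pxy[nz] / (px * py)[nz])))
```

Higher mutual information indicates that intensities in one image are more predictable from the other, which is why it is often maximized when registering images from different modalities.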

Figure 5.

Fusion approach for PET and MRI scans.

The similarity measures of the fused (PET and MRI) images were optimized and fine-tuned over the registration parameters, and the resulting parameters were used to create the transformation matrix. Segmented features were then computed with the stat segmentation approach of FreeSurfer, and volume statistics were measured in the different regions. These statistics were calculated for specific ROIs in the brain, such as the frontal, temporal, and occipital lobes and the cerebellum, as well as subcortical structures such as the Hp, amygdala, thalamus, and basal ganglia. The generated statistics include cortical thickness, surface area, folding index, curvature, mean curvature, and cortical volume.
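Given a segmentation label map on the same grid as an intensity volume, per-ROI volume and mean intensity can be computed as in the minimal NumPy sketch below. The label IDs, voxel size, and function name are illustrative assumptions; FreeSurfer’s own tools (such as `mri_segstats`) compute these statistics, and this is not a reimplementation of them.

```python
import numpy as np


def roi_statistics(labels, image, voxel_volume_mm3=1.0):
    """Per-label volume (mm^3) and mean intensity of `image` within each ROI.

    labels: integer segmentation array (0 = background, skipped).
    image:  intensity array on the same grid (e.g. a fused PET/MRI volume).
    """
    labels = np.asarray(labels)
    image = np.asarray(image, dtype=float)
    if labels.shape != image.shape:
        raise ValueError("label map and image must share a grid")
    stats = {}
    for lab in np.unique(labels):
        if lab == 0:
            continue
        mask = labels == lab
        stats[int(lab)] = {
            "volume_mm3": float(mask.sum() * voxel_volume_mm3),
            "mean_intensity": float(image[mask].mean()),
        }
    return stats
```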

Algorithm 3 describes the fusion of the T1 scans and the PET modality. We first applied the bb-registration method, including a global affine transformation and a non-rigid transformation, and returned the registered modalities. The fused modalities then underwent volume-to-volume mapping, in which the similarity measure and correlation matrix were computed, and the resulting matrix was returned. From this matrix, the stat features, including volume and surface statistics, were extracted to capture the variation among the AD, MCI, and CN classes.

| Step | Algorithm 3: Fusion of Dual Modality (PETscans + T1scans) |
| --- | --- |
| 1 | Input: preprocessed PETscans and T1scans |
| 2 | Output: Stat_data_neuroregion |
| 3 | for i = 1 to n (each PETscans, T1scans pair) do |
| 4 | Register_method { |
| 5 | Global affine transformation (PETscans + T1scans); |
| 6 | Non-rigid registration (PT1rscans); |
| 7 | Return matrix (NRPETscans + NRT1scans); |
| 8 | } |
| 9 | Volume-to-volume mapping { |
| 10 | Similarity measure (NRPETscans + NRT1scans); |
| 11 | Correlation matrix (NRPETscans + NRT1scans) with optimized parameters; |
| 12 | Return matrix ((NRPETscans + NRT1scans) optimized parameters); |
| 13 | } |
| 14 | Stats extraction from the output of volume-to-volume mapping { |
| 15 | Volume statistics ((NRPETscans + NRT1scans) optimized parameters); |
| 16 | Surface statistics ((NRPETscans + NRT1scans) optimized parameters); |
| 17 | Return stat data ((NRPETscans + NRT1scans) optimized parameters); |
| 18 | } |
| 19 | end for |
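Algorithm 3 does not name the similarity measure used in steps 10 and 11. The sketch below shows two common candidates under that caveat: normalized cross-correlation, which assumes a linear intensity relation, and histogram-based mutual information, the usual choice across modalities such as PET and T1 because it only requires the intensities to be statistically dependent. Both functions assume co-registered arrays of equal shape; the bin count of 32 is an arbitrary assumption.

```python
import numpy as np


def normalized_cross_correlation(a: np.ndarray, b: np.ndarray) -> float:
    """Pearson correlation of voxel intensities; 1.0 means a perfect linear match."""
    a = a.ravel().astype(np.float64)  # astype copies, so in-place ops are safe
    b = b.ravel().astype(np.float64)
    a -= a.mean()
    b -= b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def mutual_information(a: np.ndarray, b: np.ndarray, bins: int = 32) -> float:
    """Mutual information (in nats) estimated from a joint intensity histogram."""
    joint, _, _ = np.histogram2d(a.ravel(), b.ravel(), bins=bins)
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)  # marginal distribution of a
    py = pxy.sum(axis=0, keepdims=True)  # marginal distribution of b
    outer = px @ py                      # product of marginals, shape (bins, bins)
    nz = pxy > 0                         # skip empty cells (0 * log 0 = 0)
    return float((pxy[nz] * np.log(pxy[nz] / outer[nz])).sum())


rng = np.random.default_rng(0)
t1 = rng.normal(100.0, 20.0, size=(16, 16, 16))
pet_like = 0.5 * t1 + 3.0                   # linear intensity relation
noise = rng.normal(0.0, 1.0, size=t1.shape)  # unrelated volume
ncc = normalized_cross_correlation(t1, pet_like)
print(ncc, mutual_information(t1, t1), mutual_information(t1, noise))
```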

4.4. Feature-Level Fusion

For feature-level fusion, we extracted the relevant features from the T1-MRI scans, including volume, area, white matter, curvature, folding, Gaussian curvature, and thickness for the different cortical regions of the brain. Likewise, after fusion (T1 + PET), we extracted the features from the combined modality and compiled them into one stat file for classification. Before feature selection, we applied correlation filtering and feature drop-out to remove unwanted features. We then performed Binary and Multi Class classification for the intended classes: AD, MCI, and CN. The whole process is shown in Figure 6.

$$\mathrm{CSR} = [\,F_{T1},\ F_{FNFR}\,]$$

Figure 6.

Feature-Level Fusion of PET MRI Scans of AD, MCI, CN.

Let $n_{t1}$ be the number of features extracted from the T1 modality ($F_{T1}$) and $n_{fnfr}$ the number of features extracted from the FNFR modality ($F_{FNFR}$). For $m$ samples, the CSR is an $m \times (n_{t1} + n_{fnfr})$ matrix, denoted $\mathrm{CSR}_{mat}$:

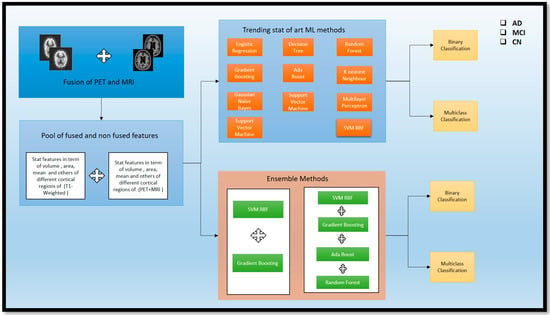
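Building CSR_mat amounts to a column-wise concatenation of the two feature blocks, which only works if row i of both blocks describes the same subject. A minimal NumPy sketch, with hypothetical array names and toy sizes:

```python
import numpy as np


def build_csr_matrix(f_t1: np.ndarray, f_fnfr: np.ndarray) -> np.ndarray:
    """Concatenate per-subject feature blocks column-wise: [F_T1, F_FNFR]."""
    if f_t1.shape[0] != f_fnfr.shape[0]:
        raise ValueError("both blocks need one row per subject (same m)")
    return np.hstack([f_t1, f_fnfr])


m, n_t1, n_fnfr = 5, 3, 4
f_t1 = np.arange(m * n_t1, dtype=float).reshape(m, n_t1)  # toy T1 features
f_fnfr = np.ones((m, n_fnfr))                              # toy FNFR features
csr_mat = build_csr_matrix(f_t1, f_fnfr)
print(csr_mat.shape)
```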

4.5. ML and EL Models

ML methods are now essential techniques for classifying the different stages of AD. However, ensemble techniques for validating results have not yet been widely explored among current ML methods for classifying AD, MCI, and CN individuals. We therefore used both approaches in this study, applying recent ML methods, as shown in Figure 6, and building ensembles from them to validate the classification accuracy (Acc) for AD, MCI, and CN. A feature-fusion approach produced a pool of fused and non-fused features: the area, maximum, mean, number of vertices, number of voxels, standard deviation, and volume from the fused (PET and MRI) scans, plus the segmented volume, mean, and area from the segmented regions of the non-fused scans. These features were passed to the ML and EL approaches for AD, MCI, and CN classification. The process is described in detail in Figure 7.

Figure 7.

Overview of standalone ML methods and ensemble methods for the classification of AD and subtypes.

4.6. ML Methods

Several ML methods are traditionally used to classify AD and its subtypes; we used the following. Logistic Regression (LR) is a statistical technique for binary classification. A Decision Tree (DT) is a flowchart-like model that splits the data on feature values. Random Forest (RF) is a collection of Decision Trees that reduces overfitting. Gradient Boosting (GB) iteratively combines weak learners to improve prediction. AdaBoost (AB) is a boosting algorithm that reweights samples to improve classifier performance. K-Nearest Neighbor (KNN) is an instance-based technique that assigns a sample the majority class of its nearest neighbors. Gaussian Naive Bayes (GNB) is a probabilistic classifier based on Bayes' theorem that assumes Gaussian-distributed features. A Support Vector Machine (SVM) finds the best decision boundary between classes. A Multi-layer Perceptron (MLP) is a feedforward neural network that learns non-linear relationships. Lastly, SVM-RBF is an SVM with a Radial Basis Function kernel, which allows non-linear decision boundaries. The hyperparameters were as follows: LR uses multi_class = ‘auto’ for automatic Multi Class strategy selection; DT uses default settings; SVM uses a linear kernel with C = 0.9 for regularization; RF, GB, and AB use default parameters; KNN uses three nearest neighbors; GNB uses default parameters; MLP uses two hidden layers, ReLU activation, and the Adam solver; and SVM-RBF uses an RBF kernel with gamma = 0.9 and C = 1. The same parameters were used for both the Binary and Multi Class AD classification.
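The standalone models and hyperparameters listed above map naturally onto scikit-learn estimators; that the authors used scikit-learn is an assumption here, suggested by parameter names such as `multi_class`. In this sketch, `multi_class` is left implicit because `'auto'` was its default in the versions that offered it (it has since been deprecated), the MLP layer sizes and the `max_iter` values are hypothetical, and synthetic data replace the real stat features.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import (AdaBoostClassifier, GradientBoostingClassifier,
                              RandomForestClassifier)
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier


def make_standalone_models(seed: int = 0) -> dict:
    """Standalone classifiers configured with the settings listed in Section 4.6."""
    return {
        # multi_class='auto' was the default where available, so it is implicit;
        # max_iter=1000 is an assumption to help convergence.
        "LR": LogisticRegression(max_iter=1000),
        "DT": DecisionTreeClassifier(random_state=seed),
        "SVM": SVC(kernel="linear", C=0.9),
        "RF": RandomForestClassifier(random_state=seed),
        "GB": GradientBoostingClassifier(random_state=seed),
        "AB": AdaBoostClassifier(random_state=seed),
        "KNN": KNeighborsClassifier(n_neighbors=3),
        "GNB": GaussianNB(),
        # Two hidden layers as stated; the sizes (64, 32) are hypothetical.
        "MLP": MLPClassifier(hidden_layer_sizes=(64, 32), activation="relu",
                             solver="adam", max_iter=1000, random_state=seed),
        "SVM_RBF": SVC(kernel="rbf", gamma=0.9, C=1),
    }


# Synthetic three-class data standing in for the selected stat features.
X, y = make_classification(n_samples=150, n_features=10, n_informative=5,
                           n_classes=3, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0)

scores = {name: model.fit(X_train, y_train).score(X_test, y_test)
          for name, model in make_standalone_models().items()}
for name, acc in scores.items():
    print(f"{name:8s} {acc:.3f}")
```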

4.7. EL Methods

To assess whether ensembling improves accuracy (Acc), we combined several traditional ML models. First, we combined Gradient Boosting (GB) and a Support Vector Machine with a Radial Basis Function kernel (SVM_RBF) into an ensemble (SVM_RBF + GB) to classify Alzheimer’s disease (AD) subtypes. The ensemble uses a voting classifier, an ensemble learning algorithm that improves accuracy and stability by exploiting the diversity among its base models. Here, the voting classifier combines the outputs of the GB classifier and the SVM with an RBF kernel using ‘hard’ voting, which selects the class that receives the most votes from the base models. The SVM used the same parameters as the standalone SVM_RBF: gamma = 0.9 for decision-boundary flexibility and C = 1 for regularization and generalization, and it performed Multi Class classification. GB improves prediction accuracy by iteratively refining weak learners, while SVM_RBF excels at separating the nonlinear patterns present in the statistical (stat) data. The combination of these models therefore provides better Multi Class classification of AD and its subtypes.
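The (SVM_RBF + GB) hard-voting ensemble can be sketched with scikit-learn's `VotingClassifier`, again assuming scikit-learn and using synthetic three-class data in place of the stat features. One design detail worth knowing: with only two voters, every disagreement is a tie, and scikit-learn's hard voting breaks ties in favor of the class that comes first in ascending label order, so on disputed samples the prediction is decided by label order rather than by either model.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, VotingClassifier
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Hard-voting ensemble of GB and an RBF-kernel SVM (gamma/C as in Section 4.6).
gb_svm_rbf = VotingClassifier(
    estimators=[
        ("gb", GradientBoostingClassifier(random_state=0)),
        ("svm_rbf", SVC(kernel="rbf", gamma=0.9, C=1)),
    ],
    voting="hard",  # each base model casts one vote for a class label
)

# Synthetic three-class data standing in for AD vs. MCI vs. CN stat features.
X, y = make_classification(n_samples=150, n_features=8, n_informative=5,
                           n_classes=3, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0)

gb_svm_rbf.fit(X_train, y_train)
acc = gb_svm_rbf.score(X_test, y_test)
print(f"held-out accuracy: {acc:.3f}")
```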

We adopted the same ensemble approach for Binary Class classification, this time combining four models, which performed better in the binary tasks, especially AD vs. MCI. The (SVM_RBF + AB + GB + RF) ensemble is used for the Binary classification of AD and its subtypes, with specific parameters for each of its four classifiers. The SVM uses an RBF kernel with gamma = 0.9 to control the shape of the decision boundary, a regularization parameter C = 1, and decision_function_shape = ‘ovr’ (one-vs-rest). The AB and GB classifiers use default parameters. The RF classifier uses criterion = ‘gini’ and n_estimators = 100, the number of trees in the forest. This combination improves performance in classifying AD and its subtypes, and after implementing these techniques we observed notable findings for identifying AD and its various stages. These are discussed in detail in Section 5.
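A corresponding sketch of the four-model (SVM_RBF + AB + GB + RF) ensemble, under the same scikit-learn assumption. The voting type for this ensemble is not stated, so hard voting is assumed to match the first ensemble; a two-class toy problem stands in for one binary task.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import (AdaBoostClassifier, GradientBoostingClassifier,
                              RandomForestClassifier, VotingClassifier)
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC


def make_four_model_ensemble(seed: int = 0) -> VotingClassifier:
    """SVM_RBF + AB + GB + RF combined by majority vote."""
    return VotingClassifier(
        estimators=[
            ("svm_rbf", SVC(kernel="rbf", gamma=0.9, C=1,
                            decision_function_shape="ovr")),
            ("ab", AdaBoostClassifier(random_state=seed)),
            ("gb", GradientBoostingClassifier(random_state=seed)),
            ("rf", RandomForestClassifier(criterion="gini", n_estimators=100,
                                          random_state=seed)),
        ],
        voting="hard",  # assumed: the paper does not state the voting type here
    )


# Synthetic two-class data standing in for one binary task (e.g. AD vs. MCI).
X, y = make_classification(n_samples=160, n_features=10, n_informative=5,
                           random_state=1)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=1)
ensemble = make_four_model_ensemble().fit(X_train, y_train)
y_pred = ensemble.predict(X_test)
print("held-out accuracy:", ensemble.score(X_test, y_test))
```

Two details follow from scikit-learn's documented behavior: `decision_function_shape` is ignored for binary problems, so ‘ovr’ has no effect in these binary tasks, and with four voters a 2-2 split is resolved in favor of the lower class label.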

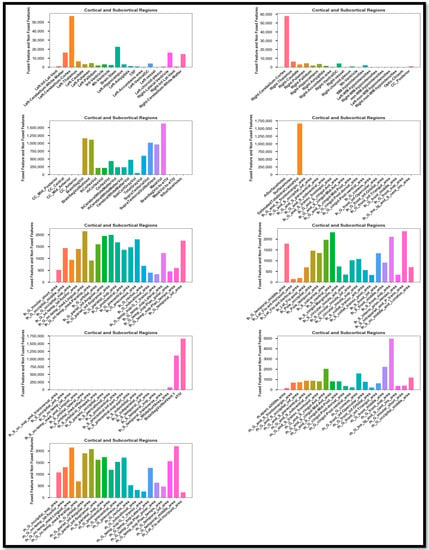

5. Result Analysis and Discussion

After the features extracted from the fused PET and T1-weighted modalities were combined with those from the non-fused modalities, the generated stat file contained high-intensity and noisy features that required rigorous preprocessing before feature selection. Normalization, correlation filtering, scaling, and feature drop-out were applied to the different features. First, a correlation filter removed features whose correlation exceeded 0.9, and the remaining features were normalized. ANOVA F-value and RF-based feature selection were then applied, yielding the selected features shown in Figure 8. This feature set contains different statistics computed over the cortical regions of the brain. The selected features were then passed to widely used learning techniques for classifying AD and its stages: LR, DT, SVM, RF, GB, AB, KNN, GNB, MLP, and SVM_RBF for the Binary and Multi Class classification of AD, MCI, and CN, together with the ensemble methods (SVM_RBF + GB) and (SVM_RBF + AB + GB + RF). Section 5.1 covers Experiment 1, Binary classification with the ML and EL techniques. Section 5.2 covers Experiment 2, Multi Class classification among the AD, MCI, and CN classes with the same ML and EL methods. All classifications are evaluated in terms of accuracy (Acc), precision (Prec), recall (Rrec), and F1 score (F1sco).
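The preprocessing and selection chain described above can be sketched as follows. The greedy |r| > 0.9 correlation filter, the min-max scaler, k = 10 for ANOVA, and the default `SelectFromModel` threshold are all assumptions, since the paper names the techniques but not their exact configuration; the two appended columns are exact linear copies so that the filter has something to remove.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import SelectFromModel, SelectKBest, f_classif
from sklearn.preprocessing import MinMaxScaler


def drop_correlated(X: np.ndarray, threshold: float = 0.9) -> np.ndarray:
    """Indices of columns kept after greedily dropping any column whose absolute
    Pearson correlation with an already-kept column exceeds `threshold`."""
    # Constant columns yield NaN correlations; treat them as uncorrelated.
    corr = np.nan_to_num(np.abs(np.corrcoef(X, rowvar=False)))
    keep = []
    for j in range(X.shape[1]):
        if all(corr[j, k] <= threshold for k in keep):
            keep.append(j)
    return np.array(keep)


X, y = make_classification(n_samples=200, n_features=20, n_informative=6,
                           n_redundant=0, random_state=0)
X = np.hstack([X, 2.0 * X[:, :2] + 0.01])  # two exact linear copies (r = 1)

kept = drop_correlated(X, threshold=0.9)
X_scaled = MinMaxScaler().fit_transform(X[:, kept])

anova = SelectKBest(f_classif, k=10).fit(X_scaled, y)  # k = 10 is hypothetical
rf_select = SelectFromModel(
    RandomForestClassifier(n_estimators=100, random_state=0)).fit(X_scaled, y)
print(len(kept), anova.get_support().sum(), rf_select.get_support().sum())
```

When accuracy is estimated by cross-validation, these steps should be fitted on the training folds only (for example inside a scikit-learn `Pipeline`); fitting them on the full data set leaks information from the test samples into feature selection.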

Figure 8.

Selected feature Set of the Feature-level fusion.

5.1. Experiment 1

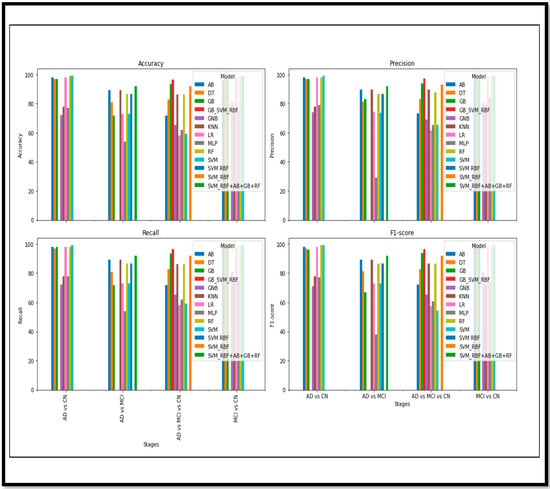

Experiment 1 performed Binary classification between pairs of the three classes in the Alzheimer’s data set (AD, MCI, and CN). The Binary results of the standalone learning and EL methods are given in Tables 2, 3, and 4.

Table 2.

Results of the different methods for the Binary classification (MCI vs. CN).

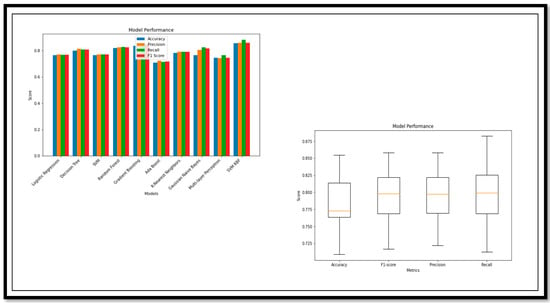

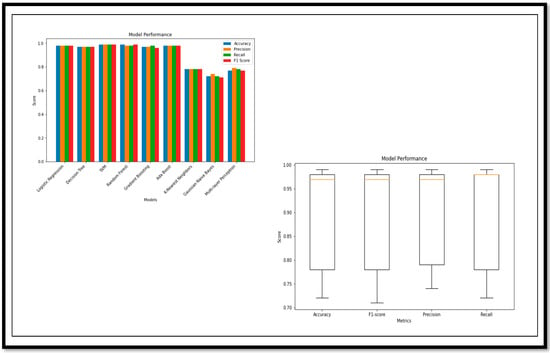

Among these models, the SVM has the highest Acc (98), Prec (99), Rrec (99), and F1sco (99), indicating the best overall performance. Most other models also perform well, with Acc, Prec, Rrec, and F1sco scores ranging from 98 to 99, although the KNN and MLP models score relatively lower. For MCI vs. CN, we did not use the ensemble methods, because the standalone ML methods already achieved adequate accuracy. Figure 9 presents a graph and a box plot of the performance of all models for this classification.

Figure 9.

Performance Metrics and Model Performance of the Binary Class (MCI vs. CN).

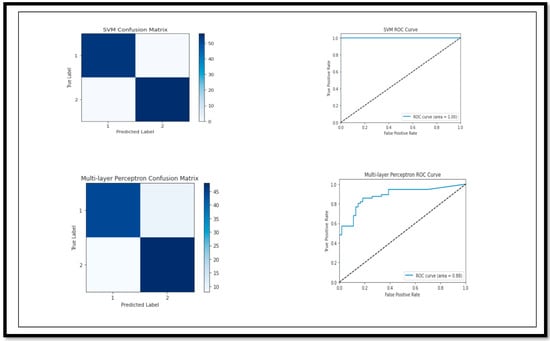

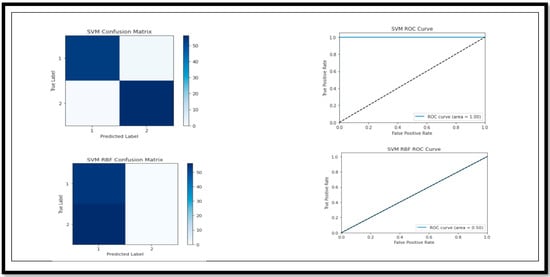

The breakdown of the models by performance metric for MCI vs. CN is shown below, and Figure 10 shows the ROC curves and confusion matrices of the models with the highest and lowest accuracy.

Figure 10.

Confusion Matrix and ROC curve of models for classification of Binary Class (MCI vs. CN).

Next, we performed AD vs. MCI classification. Distinguishing AD from MCI, which relates to MCI-to-AD conversion, is always challenging, because some features are similar across the two classes and the classifiers struggle to separate them. Here, we used both standalone and ensemble methods; Table 3 gives the detailed results of all models.

Table 3.

The results achieved by the different ML models for the Binary Class (AD vs. MCI).

The ensemble (SVM_RBF + AB + GB + RF) has the highest Acc (91.89), Prec (91.98), Rrec (91.89), and F1sco (91.87), giving the best performance of all models. AB, GB, and KNN reach an Acc of 89.19, with Prec, Rrec, and F1sco close to 89, which is close to the best. LR, DT, SVM, GB, and MLP show lower Acc, Prec, Rrec, and F1sco. Overall, the ensemble, RF, GB, and AB models achieve acceptable accuracy, while LR, DT, SVM, GB, and MLP fall short in separating MCI from AD. Figure 11 breaks down model performance by metric for AD vs. MCI.
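In Table 3 the reported Rrec equals Acc (91.89). That is exactly what support-weighted recall produces: summing per-class recall weighted by class frequency reduces to the fraction of correct predictions. The reported figures are therefore consistent with weighted averaging, although the averaging mode is not stated. A sketch of the four metrics under that assumption, using scikit-learn and toy labels:

```python
from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score


def summarize(y_true, y_pred) -> dict:
    """Acc, Prec, Rrec and F1sco in percent, support-weighted across classes."""
    return {
        "Acc": 100 * accuracy_score(y_true, y_pred),
        "Prec": 100 * precision_score(y_true, y_pred, average="weighted",
                                      zero_division=0),
        "Rrec": 100 * recall_score(y_true, y_pred, average="weighted",
                                   zero_division=0),
        "F1sco": 100 * f1_score(y_true, y_pred, average="weighted",
                                zero_division=0),
    }


# Toy AD vs. MCI labels (illustrative only).
y_true = ["AD", "AD", "AD", "MCI", "MCI", "MCI", "MCI", "AD"]
y_pred = ["AD", "MCI", "AD", "MCI", "MCI", "AD", "MCI", "AD"]
m = summarize(y_true, y_pred)
print(m)
```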

Figure 11.

Performance Metrics and Model Performance of the Binary Class (AD vs. MCI).

Figure 12 shows the ROC curves and confusion matrices of the models with the highest and lowest accuracy for AD vs. MCI.

Figure 12.

Confusion Matrix and ROC curve of models for classification of Binary Class (AD vs. MCI).

The previous classification showed that AD vs. MCI yields lower accuracy than MCI vs. CN, although the ensemble model still achieved an adequate accuracy of 91%. Next, we classified the AD class against the CN class; the results of the different models are given in Table 4.

Table 4.

Results achieved by the different ML models for the Binary Class (AD vs. CN).

The SVM model has the highest Acc (99), Prec (99), Rrec (99), and F1sco (99), indicating the best overall performance. The RF and AB models also score highly, at 99 and 98, respectively. The LR, DT, GB, KNN, GNB, and MLP models have lower performance metrics than the other models. Figure 13 presents a graph and a box plot of the performance of all models for this classification.

Figure 13.

Performance Metrics and Model Performance of the Binary Class (AD vs. CN).

The breakdown of the models by performance metric for AD vs. CN is shown below, and Figure 14 shows the ROC curves and confusion matrices of the models with the highest and lowest accuracy for this classification.

Figure 14.

Confusion Matrix and ROC curve of models for classification of Binary Class (AD vs. CN).

Across the Binary classifications of AD, MCI, and CN, the highest accuracy, 99%, was obtained with the SVM model. AD vs. MCI classification also achieves an accuracy that compares favorably with previous research. After the Binary experiments, we performed Multi Class classification to detect AD and its different stages.

5.2. Experiment 2

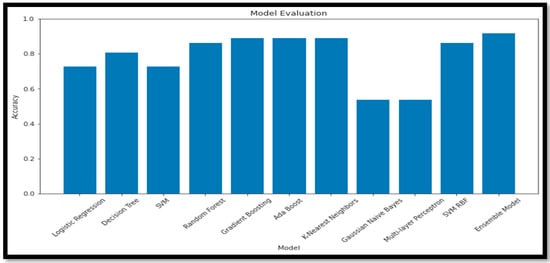

Having achieved adequate accuracy in the Binary classifications, we applied the same models to the Multi Class problem to assess their performance in this scenario. Table 5 gives the detailed results of all models for AD vs. MCI vs. CN.

Table 5.

Results achieved by the different ML models for the Multi Class (AD vs. MCI vs. CN).

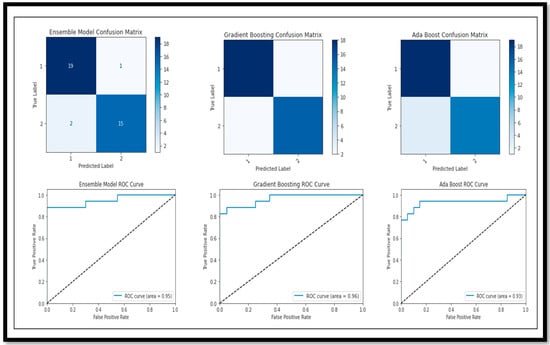

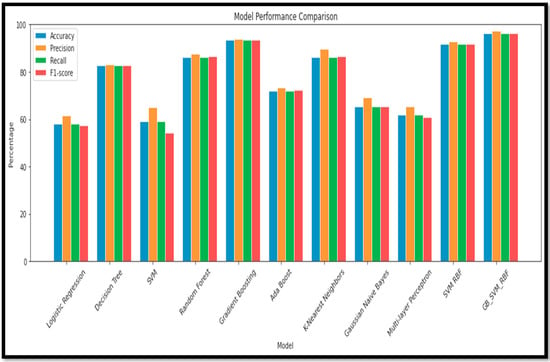

The results show that the ensemble model has considerable potential for the Multi Class classification of AD and its subtypes, achieving higher accuracy than the standalone learning methods. The ensemble model (GB_SVM_RBF) performs best, with an Acc of 96.36% and an F1sco of 96.36%. In contrast, the Logistic Regression model has the second-lowest performance in both Acc (58.18%) and F1sco (57.27%). RF, GB, and SVM_RBF also perform strongly, while GNB and MLP are less accurate and have lower F1sco. Figure 15 presents a graph and a box plot of the performance of all models for this classification.

Figure 15.

Performance Metrics and Model Performance of the Multi Class (AD vs. MCI vs. CN).

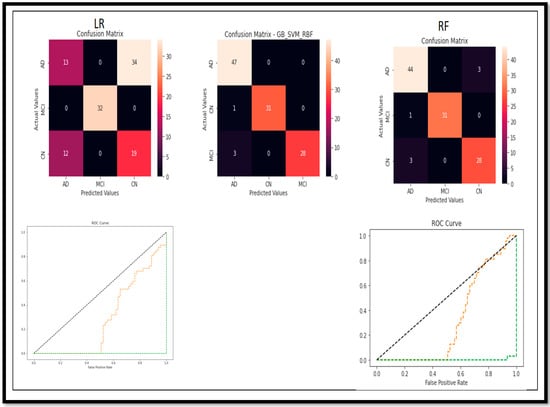

Following the evaluation of the performance metrics of all models, we also examined the confusion matrix and ROC curve of each model for the Multi Class task (AD vs. MCI vs. CN) (Figure 16).
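ROC curves such as those in Figures 10, 12, 14, and 16 require continuous scores rather than hard labels. The sketch below, assuming scikit-learn and synthetic data, computes a confusion matrix and a one-vs-rest multi-class AUC for a probabilistic model, and shows that a hard-voting ensemble exposes no `predict_proba`. How the ensemble's ROC curves were obtained is not stated; switching to `voting="soft"` with `SVC(probability=True)` would be one option.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, VotingClassifier
from sklearn.metrics import confusion_matrix, roc_auc_score
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

X, y = make_classification(n_samples=180, n_features=8, n_informative=5,
                           n_classes=3, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, stratify=y,
                                          random_state=0)

gb = GradientBoostingClassifier(random_state=0).fit(X_tr, y_tr)
cm = confusion_matrix(y_te, gb.predict(X_te))  # rows: true class, cols: predicted
auc = roc_auc_score(y_te, gb.predict_proba(X_te), multi_class="ovr")  # macro OvR AUC

hard = VotingClassifier(
    [("gb", GradientBoostingClassifier(random_state=0)),
     ("svm_rbf", SVC(kernel="rbf", gamma=0.9, C=1))],
    voting="hard",
).fit(X_tr, y_tr)
print(cm)
print(f"GB one-vs-rest AUC: {auc:.3f}")
print("hard voting has predict_proba:", hasattr(hard, "predict_proba"))
```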

Figure 16.

Confusion Matrix and ROC curve of models for classification of Multi Class (AD vs. MCI vs. CN).

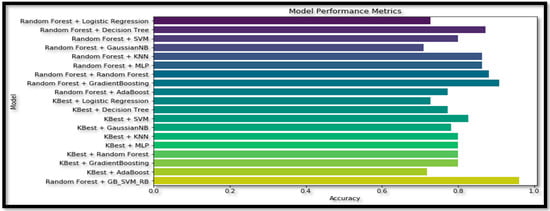

To further validate our study, we conducted an ablation study combining different feature selection methods (Random Forest and KBest) with different classification models. Across these combinations, the ensemble model consistently achieved higher accuracy than the other models (Table 6).

Table 6.

Ablation study of the different ML and Ensemble models for the Multi Class (AD vs. MCI vs. CN).

Table 6 presents the accuracy (Acc) of various combinations of feature selection methods and classification models. Among the non-ensemble combinations, ‘RF + GB’ achieves the highest accuracy (90.91%). The original ensemble model, ‘GB_SVM_RBF’, outperforms all other models, with an accuracy of 96%. Comparing the feature selection methods, models using ‘RF’ generally achieve higher accuracy than those using ‘KBest’. Among the classifiers, ‘DT’, ‘KNN’, and ‘MLP’ consistently yield relatively high accuracy across feature selection methods, whereas ‘GNB’ and ‘AB’ give lower accuracy in most cases, suggesting they may be less suitable for this data set. Overall, the ensemble model ‘GB_SVM_RBF’ performs best, and the ‘RF’ feature selection method yields better results than ‘KBest’. The results are plotted in Figure 17.
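The ablation grid in Table 6 pairs each feature selector with each classifier. A minimal sketch of such a grid, assuming scikit-learn, synthetic data, a subset of the classifiers, and a hypothetical k = 8 for KBest. Placing the scaler and selector inside the pipeline means they are refit on each training fold.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.feature_selection import SelectFromModel, SelectKBest, f_classif
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler

# Factories so every pipeline gets fresh, unfitted estimators.
selectors = {
    "RF": lambda: SelectFromModel(RandomForestClassifier(n_estimators=100,
                                                         random_state=0)),
    "KBest": lambda: SelectKBest(f_classif, k=8),  # k = 8 is hypothetical
}
classifiers = {
    "GB": lambda: GradientBoostingClassifier(random_state=0),
    "KNN": lambda: KNeighborsClassifier(n_neighbors=3),
    "GNB": lambda: GaussianNB(),
}

X, y = make_classification(n_samples=150, n_features=20, n_informative=6,
                           n_classes=3, random_state=0)
results = {}
for s_name, make_selector in selectors.items():
    for c_name, make_clf in classifiers.items():
        pipe = make_pipeline(MinMaxScaler(), make_selector(), make_clf())
        results[f"{s_name} + {c_name}"] = cross_val_score(pipe, X, y, cv=5).mean()

for combo, acc in sorted(results.items(), key=lambda kv: -kv[1]):
    print(f"{combo:12s} {acc:.3f}")
```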

Figure 17.

Plot for the ablation study of the different ML and Ensemble models for the Multi Class (AD vs. MCI vs. CN).

Figure 18 compares the cumulative accuracy (Acc) of the different models for the Binary and Multi Class classification of AD.

Figure 18.

Cumulative performance metrics comparison of Binary and Multi Class.

The fusion of features and images has clearly advanced the detection of AD and its stages, outperforming the non-fusion methods. Figure 18 shows the performance metrics of the various models for the Binary and Multi Class classification of AD. The Binary classification achieves acceptable accuracy, and many ML and ensemble models reach high accuracy. The Multi Class accuracy is also acceptable, but compared with the Binary results there is still room for improvement. Table 7 compares the results of this article with recent research in AD detection.

Table 7.

Comparison of the proposed method with other multimodal methods.

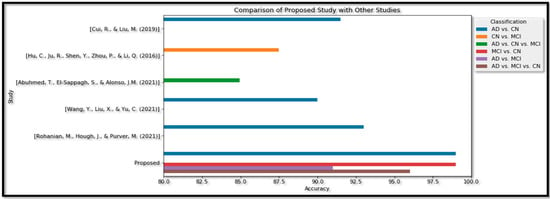

As Table 7 shows, the proposed study combines image fusion, feature-level fusion, and ensemble methods for classification. For the Binary classification of AD vs. CN, it achieved an accuracy of 99%, higher than the accuracies obtained in other studies. For MCI vs. CN, it obtained an accuracy of 99%, and for AD vs. MCI, 91%.

For the Multi Class task (AD vs. MCI vs. CN), an accuracy of 96% was obtained, exceeding the Multi Class accuracy of 84.95% reported in [32]. Figure 19 shows that the proposed model achieves competitive accuracy in both Binary and Multi Class classification compared with recent studies.

Figure 19.

Performance comparison of the proposed method with recent studies for Binary and Multi Class classification [30,31,32,33,34].

6. Conclusions

This article explores the prediction of AD and its stages using a multimodal approach. The automated FreeSurfer pipeline is used to combine two modalities (PET and MRI) through affine registration, with pixel-level fusion. The fused and non-fused outputs are then processed for feature extraction. The extracted features, including volume, area, curvature, folding, standard deviation, and mean, come from different cortical and subcortical brain regions, including the Hp, amygdala, and putamen. The features of both modalities are fused, and unnecessary features are removed, leaving only the most informative ones for classification. Techniques such as ANOVA, scaling, a Random Forest classifier, and correlation filtering are used to select the prominent features. These features are passed to various widely used ML methods (LR, DT, RF, GB, AB, KNN, GNB, SVM, MLP, and SVM-RBF) and to EL methods, namely SVM_RBF + AB + GB + RF and GB + SVM_RBF. In the Binary classification of AD vs. CN, the best models (SVM and RF) achieved an accuracy of 99%. For MCI vs. CN, an accuracy of 99% was obtained with the SVM, RF, GB, and AB models. The accuracy of AD vs. MCI detection was lower than for the other Binary classifications, but the ensemble model (SVM_RBF + AB + GB + RF) still achieved 91%. For AD vs. MCI vs. CN, the ensemble model (GB + SVM_RBF) had the highest accuracy, 96%. The image- and feature-level fusion techniques therefore produce useful features for ensemble classification models, which perform consistently in Binary and Multi Class classification. In future work, we will identify which brain regions provide the most prominent features for detecting AD, MCI, and CN.

Author Contributions

Conceptualization A.S., R.T. and S.T.; methodology, A.S.; software, A.S.; validation, A.S., R.T. and S.T.; formal analysis, A.S.; investigation, R.T.; resources, S.T.; data curation, A.S.; writing—original draft preparation, A.S.; writing—review and editing, A.S.; visualization, A.S.; supervision, S.T.; project administration, R.T.; funding acquisition, S.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Abdelaziz, M.; Wang, T.; Elazab, A. Alzheimer’s disease diagnosis framework from incomplete multimodal data using convolutional neural networks. J. Biomed. Inform. 2021, 121, 103863.

- Arora, H.; Ramesh, M.; Rajasekhar, K.; Govindaraju, T. Molecular tools to detect alloforms of Aβ and Tau: Implications for multiplexing and multimodal diagnosis of Alzheimer’s disease. Bull. Chem. Soc. Jpn. 2020, 93, 507–546.

- Ashraf, G.M.; Nigel, H.G.; Taqi, A.K.; Iftekhar, H.; Shams, T.; Shazi, S.; Ishfaq, A.S.; Syed, K.Z.; Mohammad, A.; Nasimudeen, R.J.; et al. Protein misfolding and aggregation in Alzheimer’s disease and type 2 diabetes mellitus. CNS Neurol. Disord.-Drug Targets (Former. Curr. Drug Targets-CNS Neurol. Disord.) 2014, 13, 1280–1293.

- Battineni, G.; Hossain, M.A.; Chintalapudi, N.; Traini, E.; Dhulipalla, V.R.; Ramasamy, M.; Amenta, F. Improved Alzheimer’s Disease Detection by MRI Using Multimodal Machine Learning Algorithms. Diagnostics 2021, 11, 2103.

- Dai, Z.; Yan, C.; Wang, Z.; Wang, J.; Xia, M.; Li, K.; He, Y. Discriminative analysis of early Alzheimer’s disease using multi-modal imaging and multi-level characterization with multi-classifier (M3). Neuroimage 2012, 59, 2187–2195.

- Fabrizio, C.; Termine, A.; Caltagirone, C.; Sancesario, G. Artificial intelligence for Alzheimer’s disease: Promise or challenge? Diagnostics 2021, 11, 1473.

- Goel, T.; Sharma, R.; Tanveer, M.; Suganthan, P.N.; Maji, K.; Pilli, R. Multimodal Neuroimaging based Alzheimer’s Disease Diagnosis using Evolutionary RVFL Classifier. IEEE J. Biomed. Health Inform. 2023; early access.

- Goenka, G.; Tiwari, S. AlzVNet: A volumetric convolutional neural network for multiclass classification of Alzheimer’s disease through multiple neuroimaging computational approaches. Biomed. Signal Process. Control 2022, 74, 103500.

- Goenka, N.; Tiwari, S. Multi-class classification of Alzheimer’s disease through distinct neuroimaging computational approaches using Florbetapir PET scans. Evol. Syst. 2022, 1–24.

- Kang, W.; Lin, L.; Zhang, B.; Shen, X.; Wu, S.; Alzheimer’s Disease Neuroimaging Initiative. Multi-model and multi-slice ensemble learning architecture based on 2D convolutional neural networks for Alzheimer’s disease diagnosis. Comput. Biol. Med. 2021, 136, 104678.

- Syed, A.H.; Khan, T.; Hassan, A.; Alromema, N.A.; Binsawad, M.; Alsayed, A.O. An Ensemble-Learning Based Application to Predict the Earlier Stages of Alzheimer’s Disease (AD). IEEE Access 2020, 8, 222126–222143.

- Lella, E.; Pazienza, A.; Lofù, D.; Anglani, R.; Vitulano, F. An Ensemble Learning Approach Based on Diffusion Tensor Imaging Measures for Alzheimer’s Disease Classification. Electronics 2021, 10, 249.

- Pan, D.; Zeng, A.; Jia, L.; Huang, Y.; Frizzell, T.; Song, X. Early detection of Alzheimer’s disease using magnetic resonance imaging: A novel approach combining convolutional neural networks and ensemble learning. Front. Neurosci. 2020, 14, 259.

- Hu, S.; Shen, Y.; Wang, S.; Lei, B. Brain MR to PET synthesis via bidirectional generative adversarial network. In Proceedings of the Medical Image Computing and Computer Assisted Intervention—MICCAI 2020: 23rd International Conference, Lima, Peru, 4–8 October 2020; Proceedings, Part II 23. Springer International Publishing: Cham, Switzerland, 2020; pp. 698–707.

- Zhu, W.; Sun, L.; Huang, J.; Han, L.; Zhang, D. Dual Attention Multi-Instance Deep Learning for Alzheimer’s Disease Diagnosis With Structural MRI. IEEE Trans. Med. Imaging 2021, 40, 2354–2366.

- Amlien, I.; Fjell, A. Diffusion tensor imaging of white matter degeneration in Alzheimer’s disease and mild cognitive impairment. Neuroscience 2014, 276, 206–215.

- Raji, C.A.; Lopez, O.L.; Kuller, L.H.; Carmichael, O.T.; Becker, J.T. Age, Alzheimer disease, and brain structure. Neurology 2009, 73, 1899–1905.

- Buckner, R.L. Memory and executive function in aging and AD: Multiple factors that cause decline and reserve factors that compensate. Neuron 2004, 44, 195–208.

- Davatzikos, C.; Fan, Y.; Wu, X.; Shen, D.; Resnick, S.M. Detection of prodromal Alzheimer’s disease via pattern classification of magnetic resonance imaging. Neurobiol. Aging 2008, 29, 514–523.

- Mosconi, L.; Berti, V.; Glodzik, L.; Pupi, A.; De Santi, S.; de Leon, M.J. Pre-clinical detection of Alzheimer’s disease using FDG-PET, with or without amyloid imaging. J. Alzheimer’s Dis. 2010, 20, 843–854.

- Wang, X.; LMichaelis, M.; Michaelis, K.E. Functional genomics of brain aging and Alzheimer’s disease: Focus on selective neuronal vulnerability. Curr. Genom. 2010, 11, 618–633.

- Riederer, I.; Bohn, K.P.; Preibisch, C.; Wiedemann, E.; Zimmer, C.; Alexopoulos, P.; Förster, S. Alzheimer Disease and Mild Cognitive Impairment: Integrated Pulsed Arterial Spin-Labeling MRI and 18F-FDG PET. Radiology 2018, 288, 198–206.

- Zhang, D.; Wang, Y.; Zhou, L.; Yuan, H.; Shen, D. The Alzheimer’s Disease Neuroimaging Initiative. Multimodal classification of Alzheimer’s disease and mild cognitive impairment. NeuroImage 2011, 55, 856–867.

- Li, Y.; Alzheimer’s Disease Neuroimaging Initiative; Meng, F.; Shi, J. Learning using privileged information improves neuroimaging-based CAD of Alzheimer’s disease: A comparative study. Med. Biol. Eng. Comput. 2019, 57, 1605–1616.

- Bi, X.-A.; Hu, X.; Wu, H.; Wang, Y. Multimodal Data Analysis of Alzheimer’s Disease Based on Clustering Evolutionary Random Forest. IEEE J. Biomed. Health Inform. 2020, 24, 2973–2983.

- Liu, M.; Zhang, J.; Yap, P.-T.; Shen, D. View-aligned hypergraph learning for Alzheimer’s disease diagnosis with incomplete multi-modality data. Med. Image Anal. 2016, 36, 123–134.

- Moradi, E.; Pepe, A.; Gaser, C.; Huttunen, H.; Tohka, J.; Alzheimer’s Disease Neuroimaging Initiative. Machine learning framework for early MRI-based Alzheimer’s conversion prediction in MCI subjects. Neuroimage 2015, 104, 398–412.

- Hao, N.; Wang, Z.; Liu, P.; Becker, R.; Yang, S.; Yang, K.; Pei, Z.; Zhang, P.; Xia, J.; Shen, L.; et al. Acoustofluidic multimodal diagnostic system for Alzheimer’s disease. Biosens. Bioelectron. 2021, 196, 113730.

- Krstev, I.; Pavikjevikj, M.; Toshevska, M.; Gievska, S. Multimodal Data Fusion for Automatic Detection of Alzheimer’s Disease. In Digital Human Modeling and Applications in Health, Safety, Ergonomics and Risk Management, Proceedings of the Health, Operations Management, and Design: 13th International Conference, DHM 2022, Held as Part of the 24th HCI International Conference, HCII 2022, Virtual Event, 26 June–1 July 2022; Proceedings, Part II; Springer International Publishing: Cham, Switzerland, 2022; pp. 79–94.

- Lee, G.; Nho, K.; Kang, B.; Sohn, K.-A.; Kim, D.; Weiner, M.W.; Aisen, P.; Petersen, R.; Jack, C.R.; Jagust, W.; et al. Predicting Alzheimer’s disease progression using multi-modal deep learning approach. Sci. Rep. 2019, 9, 1952.

- Liu, L.; Zhao, S.; Chen, H.; Wang, A. A new machine learning method for identifying Alzheimer’s disease. Simul. Model. Pract. Theory 2020, 99, 102023.

- Mirzaei, G.; Adeli, H. Machine learning techniques for diagnosis of alzheimer disease, mild cognitive disorder, and other types of dementia. Biomed. Signal Process. Control 2022, 72, 103293.

- Qiu, S.; Miller, M.I.; Joshi, P.S.; Lee, J.C.; Xue, C.; Ni, Y.; Wang, Y.; De Anda-Duran, I.; Hwang, P.H.; Cramer, J.A.; et al. Multimodal deep learning for Alzheimer’s disease dementia assessment. Nat. Commun. 2022, 13, 3404.

- Moons, L.; De Groef, L. Multimodal retinal imaging to detect and understand Alzheimer’s and Parkinson’s disease. Curr. Opin. Neurobiol. 2022, 72, 1–7.

- Neelaveni, J.; Devasana, M.G. Alzheimer disease prediction using machine learning algorithms. In Proceedings of the 2020 6th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 6–7 March 2020; pp. 101–104.

- Odusami, M.; Maskeliūnas, R.; Damaševi, R. Pixel-Level Fusion Approach with Vision Transformer for Early Detection of Alzheimer’s Disease. Electronics 2023, 12, 1218.

- El-Sappagh, S.; Abuhmed, T.; Islam, S.R.; Kwak, K.S. Multimodal multitask deep learning model for Alzheimer’s disease progression detection based on time series data. Neurocomputing 2020, 412, 197–215.

- Uysal, G.; Ozturk, M. Hippocampal atrophy based Alzheimer’s disease diagnosis via machine learning methods. J. Neurosci. Methods 2020, 337, 108669.

- Venugopalan, J.; Tong, L.; Hassanzadeh, H.R.; Wang, M.D. Multimodal deep learning models for early detection of Alzheimer’s disease stage. Sci. Rep. 2021, 11, 3254.

- Whitehouse, P.J.; Price, D.L.; Struble, R.G.; Clark, A.W.; Coyle, J.T.; Delon, M.R. Alzheimer’s disease and senile dementia: Loss of neurons in the basal forebrain. Science 1982, 215, 1237–1239.

- Liu, M.; Zhang, D.; Shen, D.; Alzheimer’s Disease Neuroimaging Initiative. Ensemble sparse classification of Alzheimer’s disease. NeuroImage 2012, 60, 1106–1116.

- Al-Hagery, M.A.; Al-Fairouz, E.I.; Al-Humaidan, N.A. Improvement of Alzheimer disease diagnosis accuracy using ensemble methods. Indones. J. Electr. Eng. Inform. (IJEEI) 2020, 8, 132–139.

- Chatterjee, S.; Byun, Y.C. Voting Ensemble Approach for Enhancing Alzheimer’s Disease Classification. Sensors 2022, 22, 7661.

- Gamal, A.; Elattar, M.; Selim, S. Automatic Early Diagnosis of Alzheimer’s Disease Using 3D Deep Ensemble Approach. IEEE Access 2022, 10, 115974–115987.

- El-Sappagh, S.; Alonso, J.M.; Islam, S.M.; Sultan, A.M.; Kwak, K.S. A multilayer multimodal detection and prediction model based on explainable artificial intelligence for Alzheimer’s disease. Sci. Rep. 2021, 11, 1–26.

- El-Sappagh, S.; Saleh, H.; Sahal, R.; Abuhmed, T.; Islam, S.R.; Ali, F.; Amer, E. Alzheimer’s disease progression detection model based on an early fusion of cost-effective multimodal data. Futur. Gener. Comput. Syst. 2020, 115, 680–699.

- Ying, Y.; Yang, T.; Zhou, H. Multimodal fusion for Alzheimer’s disease recognition. Appl. Intell. 2022, 1–12.

- Zargarbashi, S.; Babaali, B. A multi-modal feature embedding approach to diagnose Alzheimer disease from spoken language. arXiv 2019, arXiv:1910.00330.

- Zhang, F.; Li, Z.; Zhang, B.; Du, H.; Wang, B.; Zhang, X. Multi-modal deep learning model for auxiliary diagnosis of Alzheimer’s disease. Neurocomputing 2019, 361, 185–195.

- Zhang, J.; He, X.; Qing, L.; Gao, F.; Wang, B. BPGAN: Brain PET synthesis from MRI using generative adversarial network for multi-modal Alzheimer’s disease diagnosis. Comput. Methods Programs Biomed. 2022, 217, 106676.

- Zuo, Q.; Lei, B.; Shen, Y.; Liu, Y.; Feng, Z.; Wang, S. Multimodal Representations Learning and Adversarial Hypergraph Fusion for Early Alzheimer’s Disease Prediction. In Proceedings of the Pattern Recognition and Computer Vision: 4th Chinese Conference, PRCV 2021, Beijing, China, 29 October–1 November 2021; Proceedings, Part III 4. Springer International Publishing: Cham, Switzerland, 2021; pp. 479–490.

- Song, J.; Zheng, J.; Li, P.; Lu, X.; Zhu, G.; Shen, P. An effective multimodal image fusion method using MRI and PET for Alzheimer’s disease diagnosis. Front. Digit. Health 2021, 3, 637386.

- Cui, R.; Liu, M. RNN-based longitudinal analysis for diagnosis of Alzheimer’s disease. Comput. Med. Imaging Graph. 2019, 73, 1–10.

- Hu, C.; Ju, R.; Shen, Y.; Zhou, P.; Li, Q. Clinical decision support for Alzheimer’s disease based on deep learning and brain network. In Proceedings of the 2016 IEEE International Conference on Communications (ICC), Kuala Lumpur, Malaysia, 22–27 May 2016; pp. 1–6.

- Abuhmed, T.; El-Sappagh, S.; Alonso, J.M. Robust hybrid deep learning models for Alzheimer’s progression detection. Knowl.-Based Syst. 2021, 213, 106688.

- Wang, Y.; Liu, X.; Yu, C. Assisted Diagnosis of Alzheimer’s Disease Based on Deep Learning and Multimodal Feature Fusion. Complexity 2021, 2021, 6626728.

- Rohanian, M.; Hough, J.; Purver, M. Multi-modal fusion with gating using audio, lexical and disfluency features for Alzheimer’s dementia recognition from spontaneous speech. arXiv 2021, arXiv:2106.09668.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).