Abstract

Alzheimer’s and related diseases are significant health issues of this era. The interdisciplinary use of deep learning in this field has shown great promise and gathered considerable interest. This paper surveys deep learning literature related to Alzheimer’s disease, mild cognitive impairment, and related diseases from 2010 to early 2023. We identify the major types of unsupervised, supervised, and semi-supervised methods developed for various tasks in this field, including the most recent developments, such as the application of recurrent neural networks, graph neural networks, and generative models. We also provide a summary of data sources, data processing, training protocols, and evaluation methods as a guide for future deep learning research into Alzheimer’s disease. Although deep learning has shown promising performance across various studies and tasks, it is limited by interpretation and generalization challenges. The survey also provides a brief insight into these challenges and the possible pathways for future studies.

1. Introduction

Deep learning is a field of study that shows great promise for medical image analysis and knowledge discovery, approaching clinicians’ performance in a growing range of tasks [1,2,3]. The interdisciplinary study of Alzheimer’s disease (AD) and deep learning has been a focus of interest for the past 13 years. This paper aims to survey the current state of deep learning studies related to multiple aspects of Alzheimer’s disease research, ranging from current detection methods to pathways toward generalization and interpretation. This section first provides the current definition of AD and the clinical diagnostic methods that form the basis for deep learning. We then detail the main areas of interest and the current challenges of this interdisciplinary field.

1.1. Alzheimer’s Disease and Mild Cognitive Impairment

Alzheimer’s disease is the most common form of dementia and a significant health issue of this era [4]. Brookmeyer et al. [5] predicted that more than 1% of the world population would be affected by AD or related diseases by 2050, with a significant proportion of this cohort requiring a high level of care. AD is a chronic neurodegenerative disorder that usually begins in middle-to-old age, but rare cases of early-onset AD can affect individuals aged 45–64 years [6]. AD leads to cognitive decline symptoms: memory impairment [7], language dysfunction [8], and decline in cognition and judgment [9]. Depending on the stage of disease progression, an individual with symptoms may require moderate to constant assistance in day-to-day life. These symptoms severely affect the quality of life (QOL) of patients and their families. Cost-of-illness studies of dementia and AD reveal that the higher societal need for elderly care significantly increases overall socioeconomic pressure [10].

The biological process that leads to AD may begin more than 20 years before symptoms appear [11]. The current understanding of AD pathogenesis is based on the deposition of amyloid-β peptide around neurons and the accumulation of hyperphosphorylated tau protein within neurons [12,13,14], which lead to neurodegeneration and eventual brain atrophy. Factors associated with AD include age, genetic predisposition [15], Down’s syndrome [16], brain injuries [17], and cardiorespiratory fitness [18,19,20]. AD-related cognitive impairment can be broadly separated into three stages: (1) preclinical AD, where measurable changes in the brain, cerebrospinal fluid (CSF), and blood plasma can be detected; (2) mild cognitive impairment (MCI) due to AD, where biomarker evidence of AD-related brain change can be present; and (3) dementia due to AD, where changes in the brain are evident and noticeable memory, thinking, and behavioural changes appear and impair an individual’s daily function.

The condition most commonly associated with AD is MCI, the pre-dementia stage of cognitive impairment. However, not all cases of MCI develop into AD. Since no definite pathological description exists, MCI is currently regarded as a level of cognitive impairment beyond natural age-related cognitive decline [21,22]. Multiple studies have analyzed the demographics and progression of MCI and found the following: 15–20% of people aged 65 or older have MCI from a range of possible causes [23]; 15% of people aged 65 or older with MCI developed dementia at two-year follow-up [24]; and 32% developed AD and 38% developed dementia at five-year follow-up [25,26]. Early diagnosis of MCI and its subtypes enables early intervention, which can profoundly impact patient longevity and QOL [27]. Therefore, better understanding the condition and developing effective and accurate diagnostic methods are of great public interest.

1.2. Diagnostic Methods and Criteria

The current standard diagnosis of AD and MCI is based on a combination of various methods. These methods include cognitive assessments such as the Mini-Mental State Examination [28,29,30], Clinical Dementia Rating [31,32], and Cambridge Cognitive Examination [33,34]. These exams usually take the form of a series of questions and are often performed with physical and neurological examinations. Medical and family history, including psychiatric history and history of cognitive and behavioral changes, are also considered in the diagnosis. Genetic sequencing for particular biomarkers, such as the APOE-e4 allele [35], is used to determine genetic predisposition.

Neuroimaging is commonly used to inspect various signs of brain changes and exclude other potential causes. Structural magnetic resonance imaging (MRI) and diffusion tensor imaging are widely applied to check for evidence of brain atrophy. Various forms of computed tomography (CT) are also used in AD and MCI diagnosis. Regarding positron emission tomography (PET), FDG-PET [36] inspects brain glucose metabolism, while amyloid-PET is applied to measure beta-amyloid levels. Single-photon emission computed tomography (SPECT) [37] is prone to false-positive results and is considered inadequate for clinical use. However, SPECT variants can potentially be used in diagnosis, e.g., 99mTc-HMPAO SPECT [38,39], and FP-CIT SPECT can visualize deficits in nigrostriatal dopaminergic neurons [40]. In neuroimaging, multiple modalities are commonly combined to exploit the complementary information each provides.

CSF and blood plasma biomarkers have been reported in the literature as new diagnostic factors and have been deployed in clinical practice in recent years. There are three main CSF and blood plasma biomarkers: amyloid-β42, t-tau, and p-tau. Other biomarkers include neurofilament light protein (NFL), neuron-specific enolase (NSE), and heart-type fatty acid-binding protein (HFABP) [41,42]. CSF biomarkers are becoming a critical factor in AD diagnostic criteria in some practices. However, a definitive ‘ground truth’ diagnosis of AD can only be made via post-mortem autopsy.

Before this century, the established diagnostic criteria were the NINCDS-ADRDA criteria [43,44]. These criteria were updated by the International Working Group (IWG) in 2007 to require at least one supporting factor among MRI, PET, and CSF biomarkers [45]. A second revision was introduced in 2010 to include both pre-dementia and dementia phases [46]. This was followed by a third revision, IWG-2, which includes atypical prodromal Alzheimer’s disease presenting with cognitive deficits other than memory impairment [47]. Another independent set of criteria, the NIA-AA criteria, was introduced in 2011; these criteria include measures of brain amyloid, neuronal injury, and degeneration [48]. Separate criteria were introduced for each clinical stage, including preclinical [49,50], MCI [51,52], dementia [53,54], and post-mortem autopsy [55].

1.3. The Deep Learning Approach

Detailed preprocessing with refined extraction of biomarkers, combined with statistical analysis, is the accepted practice in current medical research. Risacher et al. [56] applied statistical analysis to biomarkers extracted using voxel-based morphometry and parcellation methods from T1-weighted MRI scans of AD, MCI, and healthy control (HC) subjects. The study revealed statistically significant differences in multiple measures, including hippocampal volume and entorhinal cortex thickness. Qiu et al. [57] further confirmed this significance by analyzing regional volumetric changes through large deformation diffeomorphic metric mapping (LDDMM). Guevremont et al. [58] focused on robustly detecting microRNAs in plasma and used standardized analysis to identify microRNA biomarkers in different phases of Alzheimer’s disease; their analysis yielded useful diagnostic markers reflecting the underlying disease pathology. In other work, the extracted biomarkers were fed into univariate and multivariate statistical analyses to detect biomarker changes during disease development [59]. Similar studies also employed other neuroimaging data, genetic data, and CSF biomarkers. These studies supported the use of MRI biomarkers in AD [60] and MCI diagnosis [61], laying the basis for developing automatic diagnostic algorithms.
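
To make this kind of group-level statistical analysis concrete, the following minimal sketch computes Welch’s t statistic (the unequal-variance t-test, cf. [67]) for a single extracted biomarker compared between two groups. The volumes are hypothetical illustrative numbers, not data from [56] or [57], and the function name `welch_t` is our own.

```python
import math
from statistics import mean, variance

def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for two independent groups."""
    se2_a = variance(a) / len(a)   # squared standard error of the mean, group a (n-1 variance)
    se2_b = variance(b) / len(b)   # squared standard error of the mean, group b
    t = (mean(a) - mean(b)) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1))
    return t, df

# Hypothetical hippocampal volumes in mL (illustrative values only)
hc = [7.4, 7.1, 7.8, 7.5, 7.2, 7.6]
ad = [6.1, 5.8, 6.4, 6.0, 5.7]
t, df = welch_t(hc, ad)
```

In practice, `scipy.stats.ttest_ind(a, b, equal_var=False)` returns the same statistic together with a p-value; a voxel-wise analysis repeats such a test at every voxel.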

Machine learning has gained great popularity among automated diagnostic algorithms due to its adaptivity to data and its ability to generalize knowledge with less reliance on expert experience. Klöppel et al. [62] demonstrated the validity of applying machine learning to dementia diagnosis by comparing Support Vector Machine (SVM) classification of local grey matter volumes with diagnoses made by professional radiologists. Janousova et al. [63] proposed penalized regression with resampling to search for discriminative regions to aid Gaussian kernel SVM classification; the regions found coincide with those reported in previous morphological studies. These breakthroughs led to the development of many machine learning algorithms for AD and MCI detection. Zhang et al. [64] proposed a kernel combination method for the fusion of heterogeneous biomarkers for classification with a linear SVM. Liu et al. [65] proposed the Multifold Bayesian Kernelization (MBK) algorithm, where a Bayesian framework derives kernel weights and synthesis analysis provides the diagnostic probabilities of each biomarker. Zhang et al. [66] proposed eigenbrain extraction using Welch’s t-test (WTT) [67], combined with a polynomial kernel SVM [68] and particle swarm optimization (PSO) [69].
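
The kernel combination idea of [64] can be sketched as a weighted sum of per-modality kernels, K = Σ_m β_m K_m with β_m ≥ 0 and Σ_m β_m = 1. The sketch below assumes linear kernels, fixed hypothetical weights, and randomly generated stand-ins for MRI, PET, and CSF features; it is not the authors’ implementation, and the feature dimensions are illustrative.

```python
import numpy as np

def zscore_columns(X, eps=1e-8):
    """Standardize each feature so that no single modality dominates by scale."""
    return (X - X.mean(axis=0)) / (X.std(axis=0) + eps)

def combined_linear_kernel(feature_blocks, weights):
    """K = sum_m beta_m * X_m X_m^T over modalities m (multi-kernel combination)."""
    weights = np.asarray(weights, dtype=float)
    if np.any(weights < 0) or not np.isclose(weights.sum(), 1.0):
        raise ValueError("kernel weights must be non-negative and sum to 1")
    n_subjects = feature_blocks[0].shape[0]
    K = np.zeros((n_subjects, n_subjects))
    for X, beta in zip(feature_blocks, weights):
        Xz = zscore_columns(X)
        K += beta * (Xz @ Xz.T)   # linear kernel of this modality
    return K

# Hypothetical stand-ins: 10 subjects, regional MRI and PET features plus 3 CSF measures
rng = np.random.default_rng(0)
mri, pet, csf = rng.normal(size=(10, 90)), rng.normal(size=(10, 90)), rng.normal(size=(10, 3))
K = combined_linear_kernel([mri, pet, csf], [0.4, 0.4, 0.2])
```

The combined Gram matrix can be passed to an SVM that accepts precomputed kernels, e.g., scikit-learn’s `SVC(kernel="precomputed")`. In a real pipeline, the standardization statistics and the weights β_m would be estimated on training folds only (for example, by cross-validated grid search) to avoid leakage.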

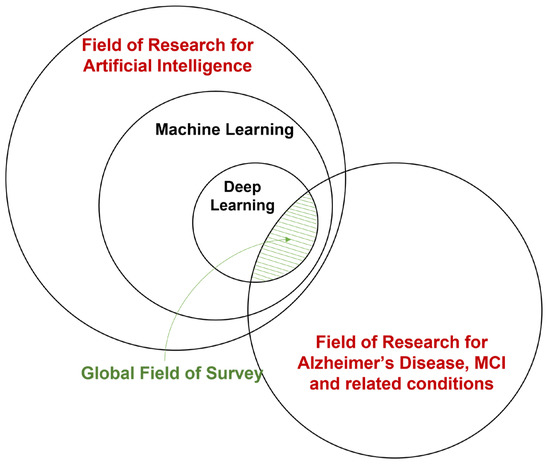

There has also been considerable interest in applying deep learning (DL), a branch of machine learning, to AD and related diseases. Deep learning integrates the two-step process of feature extraction and classification into neural networks, which are universal approximators whose parameters are trained by backpropagation [70]. Deep learning has made considerable advances in the domain of medical data, e.g., breast cancer [71], tuberculosis [72], and glioma [73]. Instead of relying on hand-crafted features, deep learning leverages the layered structure of neural networks to automatically abstract features at various levels. For example, Feng et al. [74] proposed a deep learning model to extract MRI biomarkers in neuroimaging and demonstrated that it outperformed other neuroimaging biomarkers of amyloid and tau pathology and neurodegeneration in prodromal AD. A visualization of the field of this survey is shown in Figure 1.

Figure 1.

A broad overview of the field of this survey.

1.4. Areas of Interest

The primary aim of the surveyed deep learning studies in Alzheimer’s and related diseases is detecting and predicting neurodegeneration to provide early detection and accurate prognosis to support treatment and intervention. The main interests of this interdisciplinary field can be roughly categorized into three areas:

- Classification of various stages of AD. This area targets diagnosis or efficient progression monitoring. Current studies mostly focus on AD, MCI subtypes, and cognitively normal controls (NC). A few studies also include the subjective cognitive decline (SCD) stage that precedes MCI.

- Predicting MCI conversion. This area is mainly approached by formulating prediction as a classification problem, which usually involves defining MCI converters and non-converters based on a time threshold from the initial diagnosis. Some studies also aim at the prediction of time-to-conversion for MCI to AD.

- Prediction of clinical measures. This area aims at producing surrogate biomarkers to reduce cost or invasiveness, e.g., using neuroimaging to replace lumbar puncture. Prediction of clinical measures, e.g., ADAS-Cog13 [75] and ventricular volume [76], is also used in longitudinal studies to achieve a more comprehensive evaluation of disease progression and to benchmark model performance.
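
The time-threshold formulation of MCI conversion can be sketched as a labelling rule over follow-up visits. In this minimal sketch, the 36-month horizon, the diagnosis strings, the subject IDs, and the `Visit` record are hypothetical choices for illustration; studies differ in the horizon they use and in how they treat reverters, late converters, and subjects with short follow-up.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Visit:
    subject_id: str
    months: float    # time since baseline MCI diagnosis
    diagnosis: str   # hypothetical label set: "MCI" or "AD"

def label_mci_conversion(visits, horizon_months=36):
    """Label baseline-MCI subjects as progressive (pMCI) or stable (sMCI) using a time threshold."""
    by_subject = defaultdict(list)
    for v in visits:
        by_subject[v.subject_id].append(v)
    labels = {}
    for sid, vs in by_subject.items():
        ad_times = [v.months for v in vs if v.diagnosis == "AD"]
        first_ad = min(ad_times) if ad_times else None
        last_visit = max(v.months for v in vs)
        if first_ad is not None and first_ad <= horizon_months:
            labels[sid] = "pMCI"   # converted to AD within the horizon
        elif last_visit >= horizon_months:
            labels[sid] = "sMCI"   # no conversion observed during the full horizon
        # otherwise follow-up is too short to decide, so the subject is excluded
    return labels

visits = [
    Visit("s1", 0, "MCI"), Visit("s1", 12, "MCI"), Visit("s1", 24, "AD"),
    Visit("s2", 0, "MCI"), Visit("s2", 36, "MCI"),
    Visit("s3", 0, "MCI"), Visit("s3", 12, "MCI"),
]
print(label_mci_conversion(visits))  # {'s1': 'pMCI', 's2': 'sMCI'}
```

Under this rule, subjects who convert only after the horizon count as stable, while some studies exclude them instead. The first conversion time (`first_ad`) can also serve as the target when predicting time-to-conversion.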

There are also other areas of interest, including knowledge discovery, where studies attempt to understand AD through data [77]. Another area of interest is phenotyping and sample enrichment for clinical trials of treatments [78], where DL models are used to select patients who are likely to respond to treatment and to prevent ineffective or unnecessary treatment [79]. Interest also lies in segmentation and preprocessing, where DL models are applied to achieve higher performance or efficiency than conventional pipelines [80].

1.5. Challenges in Research

Because diagnostic criteria and scientific understanding are still developing, the diagnosis and prognosis of AD and related diseases remain uncertain. DL-based approaches have already shown potential in the above areas of interest; however, there is room for improvement, and a range of challenges remain:

- Numerical representation of the differences between AD stages. Monfared et al. [81] calculated the range of Alzheimer’s disease composite scores to assess the severity of cognitive decline in patients. Sheng et al. [82] made multiple classifications and concluded that the gap between late mild cognitive impairment and early mild cognitive impairment was small, whereas a greater difference exists between early and late MCI patients. Studies comparing clinical and post-mortem diagnoses have shown misdiagnosis rates of 10–20% [83]. In addition, autopsy studies of individuals who were cognitively normal for their age found that ~30% had Alzheimer’s-related brain changes in the form of plaques and tangles [84,85]. Some signs that distinguish AD, for example, brain shrinkage [86], can also be found in the healthy brains of older people.

- Difficulty in preprocessing. Preprocessing medical data, especially neuroimaging data, often requires complex pipelines. There is no set standard for preprocessing, while a broad range of processing options and relevant parameters exist. Preprocessing quality also depends heavily on the subjective judgment of clinicians.

- Unavailability of a comprehensive dataset. Though the amount and variety of data available for AD and related diseases are abundant compared with many other conditions, the number of subjects is only moderate compared with large datasets such as ImageNet and falls below the optimal requirements for generalization.

- Differences in diagnostic criteria. The diagnostic criteria, or criteria for ground truth labels, can differ significantly between studies, especially in prior studies before new methods of diagnosis (e.g., CSF biomarkers [87] and genetic sequencing [88]) became accessible.

- Lack of reproducibility. Most frameworks and models are not publicly available. Without open-source code, implementation details such as specific data cohort selection, preprocessing procedures and parameters, evaluation procedures, and metrics are usually lacking. These are all factors that can significantly impact results. Additionally, few comprehensive frameworks are designed for benchmarking different models based on the same preprocessing/processing and testing standards [89,90].

- Lack of expert knowledge. Researchers adept at using DL often have no medical background, while medical data are significantly more complicated than natural images or language data. Therefore, these researchers lack expert knowledge, especially in preprocessing and identifying brain regions of interest (ROIs).

- Generalizability and interpretability. Many current DL studies are affected by information leakage and provide only limited measures of generalizability, i.e., the model’s performance in real-world populations. The inherent ‘black box’ nature of neural networks impedes the interpretation of model functions and the subsequent feedback of knowledge to clinicians [91].

- Other practical challenges include the subjectivity of cognitive assessments, the invasiveness of diagnostic techniques such as a lumbar puncture to measure CSF biomarkers and the high cost of neuroimaging such as MRI.
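
Information leakage often arises when several scans or visits of the same subject end up in both the training and test sets. A minimal sketch of a subject-level split, assuming each scan is tagged with a subject identifier (the IDs below are hypothetical), is shown here; scikit-learn’s `GroupShuffleSplit` and `GroupKFold` implement the same idea for cross-validation.

```python
import random

def subject_level_split(scan_subject_ids, test_fraction=0.2, seed=0):
    """Return (train_indices, test_indices) with every subject confined to one partition."""
    subjects = sorted(set(scan_subject_ids))
    random.Random(seed).shuffle(subjects)          # shuffle subjects, not individual scans
    n_test = max(1, round(test_fraction * len(subjects)))
    test_subjects = set(subjects[:n_test])
    train_idx = [i for i, s in enumerate(scan_subject_ids) if s not in test_subjects]
    test_idx = [i for i, s in enumerate(scan_subject_ids) if s in test_subjects]
    return train_idx, test_idx

# Hypothetical scan list: several subjects contribute longitudinal scans
scan_ids = ["s1", "s1", "s2", "s2", "s2", "s3", "s4", "s4", "s5", "s6"]
train_idx, test_idx = subject_level_split(scan_ids, test_fraction=0.3)
```

Splitting at the subject level also matters for preprocessing choices fitted to data (templates, normalization statistics, feature selection), which should be derived from the training partition only.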

By analyzing the frequency of occurrence, influencing factors, and potential impact on research results for each challenge based on evidence and observations in the literature, we assign weights to each challenge in Table 1.

Table 1.

Summary of challenges in applying DL to AD.

1.6. Survey Protocol

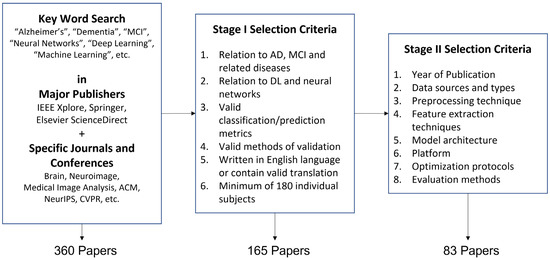

This survey covers DL studies related to AD or related diseases from 2010 to 2023. To identify relevant literature, we first queried the online libraries of IEEE (New York, NY, USA), Springer (Berlin/Heidelberg, Germany), and ScienceDirect (Amsterdam, The Netherlands) and then concentrated our search on:

- Recognized journals, including Brain, NeuroImage, Medical Image Analysis, Alzheimer’s & Dementia, Nature Communications, and Radiology.

- Conferences in computer vision and deep learning, including ACM conferences, NeurIPS, CVPR, MICCAI, and ICCV.

The full list of search keywords is as follows: “Alzheimer’s”, “AD”, “Dementia”, “Mild Cognitive Impairment”, “MCI”, “Neural Networks”, “Deep Learning”, “Machine Learning”, “Learning”, “Big Data”, “Autoencoders”, “Generative”, “Multi-Modal”, “Interpretable”, “Explainable”. These keywords were used independently or in combination during the search process, which yielded 360 papers from various sources. A two-stage selection was performed, where the following conditions were first used to select the papers:

- Related to Alzheimer’s disease, MCI, or other related diseases.

- Related to deep learning, with the use of neural networks.

- Contains valid classification/prediction metrics.

- Utilizes a reasonable form of validation.

- Written in English or contains a valid translation.

- Contains a minimum of 180 individual subjects.

An additional constraint on the number of subjects was applied in this survey: according to derivations from the Hoeffding inequality [92], 180 subjects correspond to a 0.01 probability that the accuracy fluctuates by approximately 10% or more in generalization. However, this is only a basic requirement, since the approximate generalization bound depends on the data available for evaluation and on the independence assumptions between the classifier parameters and the data. This condition is relaxed for studies using uncommon data types and functional MRI, where the available data are often limited compared with standard data types, e.g., MRI and PET. This selection stage yielded a total of 165 papers.
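
For reference, the two-sided Hoeffding bound for an accuracy estimated on N independent test subjects is P(|acc_test − acc_true| > ε) ≤ 2 exp(−2ε²N), so ε = √(ln(2/δ)/(2N)). The sketch below evaluates this relation under the assumption of a fixed classifier evaluated on data independent of its training; with N = 180 and δ = 0.01 it gives ε ≈ 0.12 (about 0.11 for the one-sided bound), i.e., roughly the 10% scale used for the subject-number threshold. The function names are our own.

```python
import math

def hoeffding_epsilon(n, delta, two_sided=True):
    """Accuracy deviation eps exceeded with probability at most delta on n i.i.d. test subjects."""
    k = 2.0 if two_sided else 1.0
    return math.sqrt(math.log(k / delta) / (2 * n))

def hoeffding_min_subjects(epsilon, delta, two_sided=True):
    """Smallest n for which the bound guarantees deviation <= epsilon with probability >= 1 - delta."""
    k = 2.0 if two_sided else 1.0
    return math.ceil(math.log(k / delta) / (2 * epsilon ** 2))

print(round(hoeffding_epsilon(180, 0.01), 3))                   # 0.121
print(round(hoeffding_epsilon(180, 0.01, two_sided=False), 3))  # 0.113
print(hoeffding_min_subjects(0.10, 0.01))                       # 265
```

The bound tightens only with the square root of N: halving ε requires roughly four times as many test subjects.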

After the first stage of selection, the papers were evaluated on the year of publication, data source and type, preprocessing technique, feature extraction techniques, model architecture, platform, optimization protocol, and evaluation details. Papers from unknown sources or studies with apparent errors, e.g., information leakage, were excluded from the selection. This selection stage yielded a total of 83 papers. Similar to previous surveys, this survey mainly focuses on neuroimaging data [93,94,95,96]. This review also extends the work of Wen et al. [89], which focuses on convolutional neural networks, to a broader range of supervised and unsupervised neural networks, including recent advances in graph and geometric neural networks. The survey protocol is visualized in Figure 2.

Figure 2.

Survey Protocol.

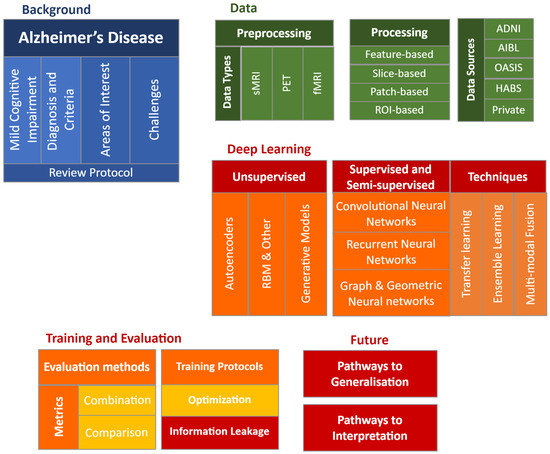

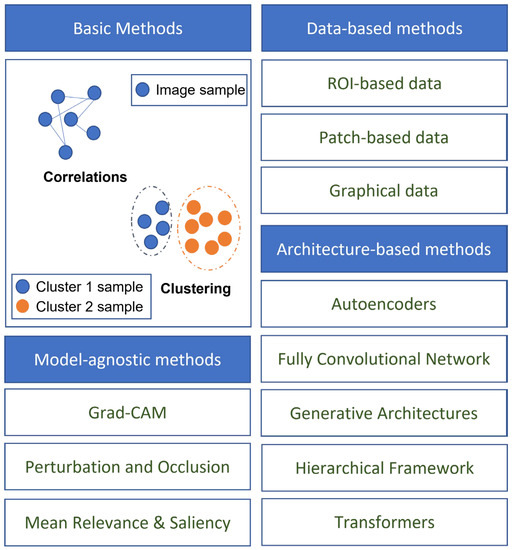

The paper is organized as follows: Section 2 introduces the data types implemented in deep learning research and potential data sources; Section 3 provides detailed summaries of data preprocessing methods for the main data types, followed by four categories of data processing for neural network input in Section 4. Section 5, Section 6 and Section 7 constitute the main body of deep learning architectures and methods included in this review, categorized into unsupervised, semi-supervised, and supervised learning methods; typical models and recent advances are included in each category, including recent developments in generative models and in recurrent and graph neural networks. Section 8 introduces various techniques, including transfer learning, ensemble learning, and multi-modal fusion. Section 9 details training and evaluation protocols, while Section 10 and Section 11 outline possible pathways for future research in interpretability and generalization. A taxonomy of the survey is shown in Figure 3.

Figure 3.

Overview of the survey content.

2. Data Types and Sources

Data issues are a core aspect of the deep learning approach, and the type and quantity of data directly impact model performance and potential generalizability. A large variety of data from numerous sources have been utilized in the reviewed studies. In this section, we summarize the main types of data available and the sources of these data.

2.1. Types of Data

The available data can be categorized into longitudinal data and cross-sectional data. Longitudinal data correspond to a subject’s disease progression data collected over time, while cross-sectional data are single-instance data that are time-independent. Longitudinal data can also be treated as independent data, time-series data, or comparative data. Demographics (Demo) is often a form of meta-data collected alongside other exams, primarily information regarding age, gender, and education. Neuroimaging data of various modalities are commonly collected. Common modalities include PET, MRI, and CT for diagnosis, and 3D-MRI [97], fMRI [98], and SPECT for research purposes. Various forms of cognitive assessments (CA) are also commonly available, including MMSE [29], CDR [99], ADAS-Cog [100,101], logical memory test [102,103], and postural kinematic analysis [104,105]. CSF, blood plasma biomarkers [106,107], and genetic data are available from several sources. Other less common data types include electroencephalography (EEG) for brain activity monitoring [106,107], mass spectra data collected through surface-enhanced laser desorption and ionization assay of saliva [108], and retinal imaging for abnormalities [109]. Electronic health records have also been studied to screen dementia and AD [110,111]. Alternative data types such as speech [112,113], activity pattern monitoring [114,115], and eye-tracking [116] have also been studied with a deep learning approach. Few deep-learning-related comparative studies have been performed between different data modalities and types, especially for the less common data types [117].
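
The three ways of treating longitudinal data can be illustrated on a toy table of visits. In this sketch, the records are hypothetical, and “comparative” data are interpreted as change relative to each subject’s baseline visit, which is one common choice among several.

```python
from collections import defaultdict

# Hypothetical longitudinal records: (subject_id, months_from_baseline, feature_value)
records = [("s1", 0, 7.2), ("s1", 12, 7.0), ("s1", 24, 6.7),
           ("s2", 0, 7.5), ("s2", 12, 7.4)]

# 1) Independent data: every visit is its own sample (the subject ID should still drive the data split).
independent = [(sid, value) for sid, _, value in records]

# 2) Time-series data: one time-ordered sequence per subject, e.g., as input to a recurrent network.
series = defaultdict(list)
for sid, month, value in sorted(records, key=lambda r: (r[0], r[1])):
    series[sid].append((month, value))

# 3) Comparative data: change of each follow-up visit relative to the subject's baseline visit.
comparative = {sid: [(m, v - seq[0][1]) for m, v in seq[1:]] for sid, seq in series.items()}
```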

2.2. Sources of Data

Several open libraries have been created in the past two decades, giving researchers easier access to data on subjects with AD or related diseases. One of the main libraries is the Alzheimer’s Disease Neuroimaging Initiative (ADNI) [118], a large longitudinal study aiming to develop novel biomarkers to detect AD and monitor disease progression. The original ADNI cohort (ADNI-1) was collected from 2004 to 2010 and contains T1-weighted MRI [119], FDG-PET, blood, and CSF biomarkers from 800 subjects [120]. Additional cohorts, ADNI-GO and ADNI-2, extended the longitudinal study of ADNI-1 while encompassing a broader range of AD stages, adding 200 new subjects with early MCI [121]. A fourth cohort, ADNI3, with additional modalities targeting tau protein tangles, started in 2016 and was scheduled for completion in 2022 [122].

ADNI is the most commonly used open library for neuroimaging data. Another commonly used open library is the Open Access Series of Imaging Studies (OASIS), which includes a cross-sectional cohort (OASIS-1), a longitudinal MRI cohort of demented and non-demented subjects (OASIS-2), and an additional longitudinal cohort (OASIS-3) that provides MRI and PET in various modalities for 1098 subjects with normal cognition or AD [123]. While ADNI contains genomic data, OASIS contains only neuroimaging and neuropsychology data, i.e., cognitive assessments.

Other open libraries include the Harvard Aging Brain Study (HABS) [124] and Minimal Interval Resonance Imaging in Alzheimer’s Disease (MIRIAD) [125]. These open libraries are essential for advancing machine learning and deep learning studies in AD research. There are also several local studies modeled on ADNI for data compatibility, including Japan ADNI (J-ADNI) [126], the Hong Kong Alzheimer’s Disease Study [127], and the Australian Imaging Biomarkers and Lifestyle Study of Ageing (AIBL) [128]. Various institutes have established platforms that provide information and efficient access to available databases and libraries, including NeuGRID [129,130] and the Global Alzheimer’s Association Interactive Network [131]: http://www.gaain.org/ (accessed on 17 April 2023). We provide a shortlist of selected data sources in Table 2.

Table 2.

Sources of AD and dementia data.

Challenges hosted by ADNI or other institutions provide an alternative source of data for ML practitioners and researchers alike, e.g., CADDementia [132], TADPOLE [133,134], DREAM [135], and the Kaggle international challenge for automated prediction of MCI from MRI data. These challenges may provide pre-selected or preprocessed data, reducing the need for expert knowledge. A few studies have proposed using brain age as a surrogate measure of cognitive decline and have utilized databases of cognitively normal individuals, including UK Biobank [136], NKI, IXI [137], LifespanCN [138], and the Cambridge dataset [139]. Other potentially available sources include data from the International Genomics of Alzheimer’s Project (IGAP) [140], the Korean Longitudinal Study on Cognitive Aging and Dementia (KLOSCAD) [141,142], the INSIGHT-preAD study [143,144], the Imaging Dementia-Evidence for Amyloid Scanning (IDEAS) study [145], and the European counterpart of ADNI, AddNeuroMed [146,147]. Institutes that hold private data collections of AD or related diseases include the National Alzheimer’s Coordinating Center, the Biobank of Beaumont Reference Laboratory, and IRCCS. Because AD is one of the most significant health crises of the era, many studies have collected data on AD and related diseases; the sources listed above therefore include only those most commonly used in the reviewed literature, along with examples of alternative sources.

3. Data Preprocessing

The deep learning approach can replace the feature-crafting step of machine learning and reduce the need for preprocessing. Data types such as clock-drawing test images [148], activity monitoring data [115], and speech audio files [112] can be processed similarly to natural images and time-series data. However, for the prevalent neuroimaging data, the complexity of the data and the variety of established pipelines make preprocessing a significant component of current DL studies. This survey focuses on imaging data, the most prevalent data category at the intersection of AD and deep learning. Differences in data organization add to the difficulty of preprocessing. Gorgolewski et al. [149] proposed the Brain Imaging Data Structure (BIDS) standard for organizing neuroimaging repositories. Conversion to a standard data structure such as BIDS is essential when using multiple modalities and data sources.
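
As an illustration of what conversion to BIDS involves, the sketch below builds BIDS-style file paths of the form `sub-<label>/ses-<label>/<datatype>/sub-<label>_ses-<label>_<suffix>.nii.gz`. The helper names are hypothetical, and the ADNI-style ID is an illustrative example; real conversions also involve JSON sidecar files and a `dataset_description.json`, which the BIDS validator checks.

```python
import re
from pathlib import PurePosixPath

def bids_label(raw_id):
    """BIDS labels must be alphanumeric, so strip separators such as '_' from source IDs."""
    label = re.sub(r"[^A-Za-z0-9]", "", raw_id)
    if not label:
        raise ValueError(f"cannot derive a BIDS label from {raw_id!r}")
    return label

def bids_path(raw_subject, raw_session, datatype="anat", suffix="T1w", ext=".nii.gz"):
    """Relative path of one image inside a BIDS dataset (hypothetical helper)."""
    sub = f"sub-{bids_label(raw_subject)}"
    ses = f"ses-{bids_label(raw_session)}"
    return PurePosixPath(sub, ses, datatype, f"{sub}_{ses}_{suffix}{ext}")

print(bids_path("002_S_0295", "bl"))
# sub-002S0295/ses-bl/anat/sub-002S0295_ses-bl_T1w.nii.gz
```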

3.1. Structural MRI Data

MRI is a safe, non-invasive medical imaging technique that uses a powerful magnetic field, radio waves, and a computer to generate high-quality images with good spatial resolution while minimizing patient harm. Structural MRI (sMRI) and functional MRI (fMRI) are different MRI techniques used to study the brain. sMRI can be used to investigate changes in brain structure [150]. Changes in brain structure due to worsening cognitive impairment may include atrophy of specific brain regions, loss of brain tissue, and changes in the shape and size of certain brain structures [151,152].

MRI machines are highly complex medical equipment whose characteristics vary between individual scanners. Inhomogeneity of the B1 field in MRI machines can cause an artifact signal known as the bias field. Bias field correction is often the first step in MRI data preprocessing [153,154], usually using B1 scans to correct for non-uniformity in the MR image. Similarly, gradient non-linearity can be corrected with displacement information and phase mapping, e.g., Gradwarp. These corrections are often built into MRI systems, and their outputs are often the raw data available from data sources. Intensity normalization is essential to mitigate differences between MRI machines, especially in large-scale multi-center studies or when combining data from multiple sources. The most common method found in AD-related papers is the N3 nonparametric non-uniform intensity normalization algorithm [155,156], a histogram peak-sharpening algorithm that corrects intensity non-uniformity without requiring a tissue model. In some studies, motion correction is used to correct for subject motion artifacts produced during scanning sessions [157].
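
N3 itself (and its successor N4, available, e.g., as `N4BiasFieldCorrectionImageFilter` in SimpleITK) models a smooth, spatially varying bias field and is too involved for a short example. The simpler step sketched below, z-scoring intensities within a brain mask, is a common complementary normalization and not N3: it removes global scale and offset differences between scanners but does not correct spatially varying bias. The synthetic volume and mask are assumptions for illustration.

```python
import numpy as np

def zscore_within_mask(volume, mask):
    """Shift and scale intensities so brain voxels (mask == True) have mean 0 and standard deviation 1."""
    brain = volume[mask]
    return (volume - brain.mean()) / brain.std()

# Synthetic volume and a cubic "brain" mask (illustrative only)
rng = np.random.default_rng(0)
scan_a = rng.normal(100.0, 20.0, size=(16, 16, 16))
mask = np.zeros(scan_a.shape, dtype=bool)
mask[4:12, 4:12, 4:12] = True
scan_b = 2.5 * scan_a + 100.0   # same anatomy seen by a scanner with different gain and offset

norm_a = zscore_within_mask(scan_a, mask)
norm_b = zscore_within_mask(scan_b, mask)
print(np.allclose(norm_a, norm_b))  # True: global linear differences are removed
```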

Brain extraction, the removal of non-brain components from the MRI scan, is a common MRI preprocessing step. Skull-stripping removes the skull, e.g., through bootstrapped histogram-based threshold estimation. Other similar procedures include cerebellum removal and neck removal. The extracted brain images are often registered to an anatomical brain template for spatial normalization, usually after brain extraction. Registration can be categorized by the deformation allowed into affine and non-linear registration. Affine registration applies a single global linear transformation plus translation (rigid registration being a special case), while non-linear registration allows for local deformations [158,159,160]. A standard template is MNI-152, based on 152 subjects [161], while some studies use alternative templates such as Colin27. A potential challenge in this process is that the age of the subjects used to build the template may not match the older age of AD subjects and their corresponding brain atrophy. Some studies resolve this issue by constructing a study-specific template space from the training data, which can also be aligned with standard templates. Other alignments include AC-PC correction, which aligns the anterior commissure (AC) and posterior commissure (PC) on the same geometric plane. AC-PC correction can be performed with resampling to 256 × 256 × 256 and intensity normalization using the N3 algorithm in MIPAV. Some studies have shown that linear or affine normalization is potentially sufficient for deep learning models [162,163], while others have shown that non-rigid registration can improve performance.
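
The difference between rigid and affine registration can be made concrete with 4 × 4 homogeneous transformation matrices. In this minimal sketch (rotation, anisotropic scaling, and translation; shear is omitted for brevity, so it covers 9 of the 12 affine degrees of freedom), a rigid transform preserves distances while a scaled affine transform does not; non-linear registration would instead estimate a dense, spatially varying displacement field. The parameter values are arbitrary.

```python
import numpy as np

def affine_matrix(rotation_deg=(0.0, 0.0, 0.0), scale=(1.0, 1.0, 1.0), translation=(0.0, 0.0, 0.0)):
    """4x4 homogeneous matrix: scale first, then rotate about x, y, z (in that order), then translate."""
    rx, ry, rz = np.deg2rad(rotation_deg)
    Rx = np.array([[1, 0, 0], [0, np.cos(rx), -np.sin(rx)], [0, np.sin(rx), np.cos(rx)]])
    Ry = np.array([[np.cos(ry), 0, np.sin(ry)], [0, 1, 0], [-np.sin(ry), 0, np.cos(ry)]])
    Rz = np.array([[np.cos(rz), -np.sin(rz), 0], [np.sin(rz), np.cos(rz), 0], [0, 0, 1]])
    A = np.eye(4)
    A[:3, :3] = Rz @ Ry @ Rx @ np.diag(scale)
    A[:3, 3] = translation
    return A

def apply_affine(A, points):
    """Map an (N, 3) array of coordinates through a 4x4 affine matrix."""
    homogeneous = np.c_[points, np.ones(len(points))]
    return (homogeneous @ A.T)[:, :3]

pts = np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0], [0.0, 20.0, 0.0]])
rigid = affine_matrix(rotation_deg=(10, -5, 30), translation=(3, -2, 1))   # 6 degrees of freedom
moved = apply_affine(rigid, pts)
print(round(float(np.linalg.norm(moved[1] - moved[0])), 6))  # 10.0: distances are preserved
```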

Another potential MRI preprocessing procedure is brain region segmentation, the division of the brain MRI into known anatomical regions. This step is usually performed to isolate brain regions related to AD, e.g., the grey matter of the medial temporal lobe and the hippocampal region. Segmentation can be performed manually by outlining bounding boxes or precise pixel boundaries. Good practice includes randomizing the samples and segmenting each sample multiple times, or having multiple expert radiologists perform the segmentation [164]. However, manual segmentation is time-intensive and unsuitable for large datasets. Automated algorithms such as FSL FIRST [165] and the FreeSurfer pipeline can perform segmentation by registering to brain atlases, e.g., AAL. Other methods include RAVENS maps produced by tissue-preserving image warping methods [166] and region-specific segmentation, e.g., hippocampus segmentation with MALPEM [167]. Multiple studies have also applied segmentation neural networks to hippocampus segmentation [168,169].
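
Once an atlas or segmentation label map is available in subject space, regional measures such as hippocampal volume reduce to counting voxels per label. The sketch below assumes a label map in which 0 is background; the label IDs and region names are hypothetical and do not follow the AAL or FreeSurfer numbering.

```python
import numpy as np

def regional_volumes(label_map, voxel_size_mm=(1.0, 1.0, 1.0), names=None):
    """Volume (mm^3) of each non-zero label: voxel count multiplied by the volume of one voxel."""
    voxel_volume = float(np.prod(voxel_size_mm))
    ids, counts = np.unique(label_map, return_counts=True)
    names = names or {}
    return {names.get(int(i), int(i)): float(c) * voxel_volume
            for i, c in zip(ids, counts) if i != 0}

# Toy label map with two hypothetical regions
labels = np.zeros((10, 10, 10), dtype=np.int16)
labels[0:2, 0:2, 0:2] = 1          # 8 voxels
labels[5:8, 5:8, 5:6] = 2          # 9 voxels
vols = regional_volumes(labels, voxel_size_mm=(1.0, 1.0, 1.2),
                        names={1: "left_hippocampus", 2: "right_hippocampus"})
```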

In AD-related studies, downsampling is often performed after preprocessing to reduce the dimensionality of the neural network input, which directly reduces the number of parameters and the computational cost, and to make input dimensions uniform across samples [170]. Smoothing is also often performed to further improve the signal-to-noise ratio [166], at the cost of reduced peak amplitude and increased peak width (i.e., spatial blurring). Age correction accounts for normal age-related brain atrophy, which resembles atrophy due to AD and can therefore confound the analysis. A potential correction method is a voxel-wise linear regression model fitted after registration, which benefits overall model performance [167].
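The voxel-wise age correction mentioned above can be illustrated as follows. This sketch assumes that the linear age model is fitted on healthy controls only (so that disease-related atrophy does not leak into the age slope) and then applied to every subject; `fit_age_model` and `age_correct` are hypothetical helpers, not functions from [167].

```python
import numpy as np


def fit_age_model(hc_images, hc_ages):
    """Fit intensity = b0 + b1 * age independently at every voxel using healthy controls only.

    hc_images: (n_subjects, n_voxels) registered, flattened images; hc_ages: (n_subjects,).
    Returns coef with shape (2, n_voxels): row 0 = intercepts, row 1 = age slopes.
    """
    X = np.column_stack([np.ones(len(hc_ages)), hc_ages])
    coef, *_ = np.linalg.lstsq(X, hc_images, rcond=None)
    return coef


def age_correct(images, ages, coef, reference_age):
    """Subtract the age effect predicted by the control model, expressing every image at reference_age."""
    slope = coef[1]
    return images - np.outer(np.asarray(ages) - reference_age, slope)
```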

3.2. PET Data

PET uses a radioactive tracer to study the activity of cells and tissues in the body [171]. When studying neurological disorders, the tracer binds to specific proteins associated with the disease, such as amyloid-β [172] and tau [173,174], both hallmarks of AD. PET can also help identify changes in glucose metabolism, which is altered in the brains of patients with AD. The preprocessing of PET images is similar to that of structural MRI images described in Section 3.1. In AD-related studies, PET data are often used together with MRI data because major studies, e.g., ADNI, collect both. Preprocessing up to image registration and segmentation is first performed on the MRI image, and the PET images are then registered to the corresponding MRI images through rigid alignment [166]. The post-segmentation steps of downsampling and smoothing are similar to those performed on MRI images. Studies that use PET without MRI follow either simplified preprocessing similar to MRI preprocessing [80,175,176] or only minimal preprocessing [177].

3.3. Functional MRI Data

Functional MRI (fMRI) is a type of magnetic resonance imaging that measures brain activity by monitoring blood flow within the brain. Unlike structural MRI, which captures a single static volume, fMRI is temporal and consists of a series of images. fMRI is used to study disease-related changes in brain function, including altered connectivity between distinct brain regions [178] and variations in how the brain responds to stimuli [179]. fMRI can also be used to investigate alterations in memory and attention associated with cognitive impairment in MCI and AD [180]. Both sMRI and fMRI can be used to monitor disease progression by detecting changes in specific brain regions over time [181,182].

Because fMRI is a time series, it requires preprocessing steps in addition to the structural MRI procedures described in Section 3.1. Slice-timing correction compensates for the temporal offset between the acquisition of different slices within each volume, so that each volume can be treated as a single time point. Longer scanning periods and the collection of multiple images in a single session increase the chance of head-motion artifacts; therefore, fMRI scans require additional motion filtering or correction. Head-motion correction is usually performed by spatially aligning every volume to the first volume, or a volume of choice, before spatial normalization. Temporal high-pass and low-pass filters can also be applied to restrict the fMRI signal to the frequency band of interest [183]. fMRI preprocessing can be automated using SPM, the REST toolkit, DPABI, or FreeSurfer.

Data redundancy reduction methods are often applied to fMRI data; these can be categorized as methods based on common spatial patterns (CSP) or brain functional networks (BFN). CSP-based methods produce spatial filters that maximize the variance of one group while minimizing that of the other [184]. BFN-based methods use ROI segmentation to construct a brain network in which the ROIs, described by their features, are the vertices and the functional connections are the edges; these connections are commonly obtained by calculating correlations between ROI signals after segmentation [185]. A recent study has also integrated weighted correlation kernels into a neural network architecture to extract dynamic functional connectivity networks [186].
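As an illustration of BFN construction, the sketch below averages the fMRI signal within each atlas ROI and uses Pearson correlations between ROI time series as edge weights, with a hard threshold to obtain a binary adjacency matrix. The threshold value, the label convention (integer ids with 0 as background), and the function names are illustrative assumptions; studies differ in how they weight, threshold, or sparsify such networks.

```python
import numpy as np


def roi_time_series(fmri, labels, n_rois):
    """Average the BOLD signal over the voxels of each ROI.

    fmri: (T, X, Y, Z) preprocessed series; labels: (X, Y, Z) atlas with ROI ids 1..n_rois (0 = background).
    Returns an array of shape (T, n_rois).
    """
    flat = fmri.reshape(fmri.shape[0], -1)
    lab = labels.ravel()
    return np.stack([flat[:, lab == r].mean(axis=1) for r in range(1, n_rois + 1)], axis=1)


def functional_network(ts, threshold=0.3):
    """BFN sketch: ROIs are nodes, Pearson correlations between ROI time series are edge weights."""
    corr = np.corrcoef(ts, rowvar=False)          # (n_rois, n_rois), symmetric
    np.fill_diagonal(corr, 0.0)                   # drop self-connections
    adjacency = (np.abs(corr) >= threshold).astype(int)
    return corr, adjacency
```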

4. Data Processing

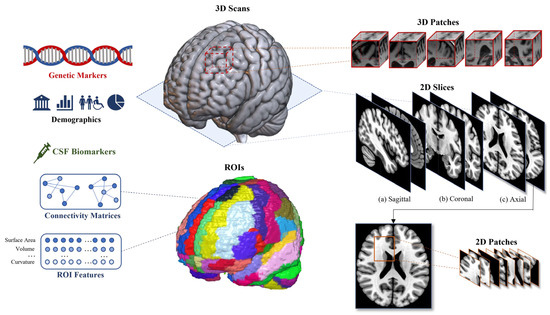

Data processing is an essential part of the deep learning approach and significantly influences model architecture and performance. Unlike feature extraction in traditional machine learning, which establishes quantified representations, data processing for deep learning focuses on preparing the input to the neural network. It aims to preserve and emphasize critical discriminatory information within the preprocessed or raw data while standardizing the input so that the model receives consistent inputs across samples and modalities. Data processing can be categorized by the type of model input it produces. The most commonly used input types are summarized in Figure 4.

Figure 4.

Summary of the most commonly used data types, where an sMRI is provided as an example of neuroimaging data. Feature-based data: demographics, CSF biomarkers, genetic markers, and 3D scans. Slice-based data: 2D slices from three views. Patch-based data: 2D and 3D patches. ROI-based data: ROI features and connectivity matrices.

4.1. Feature-Based

Feature-based approaches operate on individual features of the provided data. For neuroimaging, this is also known as the voxel-based approach [96], which is applied to individual voxels of spatially normalized images. Spatial co-alignment between images is essential to ensure that individual voxels are comparable across the dataset. To limit the amount of input information, tissue segmentation into grey matter probability maps is often performed. Texture, shape, or other features can also be extracted with machine learning to reduce dimensionality, forming a hybrid ML-DL approach [187]. Voxel-based methods for neuroimaging data retain global 2D or 3D information but ignore local information, as they treat the entire brain uniformly regardless of anatomical features. For 3D scans and high-dimensional genetic data, the large input dimension leads to high computational cost, so dimensionality reduction through either feature selection or transformation is common. Feature-based approaches are used for most other data types, such as cognitive assessments and CSF, serum, and genetic biomarkers. Longitudinal and time-series data, such as EEG, activity, and speech recordings, require more stringent processing for sample completeness, e.g., imputation of missing data and time-stamp alignment [188].
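A minimal sketch of a voxel-based feature pipeline: a (hypothetical) brain or grey-matter mask selects voxels from co-registered volumes, and principal component analysis, used here as one example of a dimensionality-reducing transformation, compresses the resulting high-dimensional vectors. The function names and the choice of PCA are our own assumptions.

```python
import numpy as np


def voxel_features(volumes, mask):
    """Flatten co-registered volumes into feature vectors, keeping only voxels inside the mask.

    volumes: (n_subjects, X, Y, Z); mask: boolean (X, Y, Z).
    """
    return volumes[:, mask]                       # (n_subjects, n_masked_voxels)


def pca_reduce(features, n_components):
    """Project centred features onto the leading principal components (computed with an SVD)."""
    mean = features.mean(axis=0)
    centred = features - mean
    _, _, vt = np.linalg.svd(centred, full_matrices=False)
    components = vt[:n_components]                # (n_components, n_features), orthonormal rows
    return centred @ components.T, components, mean
```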

4.2. Slice-Based

Slice-based approaches use 2D images or data. When applied to 3D data, slice-based approaches assume that 2D information sufficiently represents the required information; indeed, practical clinical diagnosis is often based on a limited number of 2D slices rather than a complete 3D image. Some studies extract single or multiple 2D slices along the sagittal, axial, and coronal planes of the 3D scan. Slices from the axial plane are most commonly extracted, although the coronal view might contain the most critical AD-related regions. Slice selection usually targets a particular section of the brain and the anatomical components it contains, e.g., sagittal slices through the hippocampus, a known region of interest. Some studies use sorting procedures to find the most informative slices, e.g., entropy sorting with greyscale histograms (Choi and Lee 2020). Because they use less information, slice-based approaches can be less computationally expensive than feature-based approaches. However, their drawback is the loss of global and 3D geometric structure. Studies attempt to compensate for this loss by using multiple slices from multiple views, e.g., slices from three projections that show the hippocampal region [189], and multiple modalities, e.g., combining slices from MRI and PET.
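Entropy-based slice selection can be sketched as follows: each slice is scored by the Shannon entropy of its grey-level histogram, and the highest-scoring slices are kept. The bin count, the slicing axis, and the function names are assumptions for illustration; the exact procedure of Choi and Lee (2020) may differ.

```python
import numpy as np


def slice_entropy(slice_2d, bins=256):
    """Shannon entropy (bits) of the grey-level histogram of one 2D slice."""
    hist, _ = np.histogram(slice_2d, bins=bins)
    p = hist[hist > 0] / hist.sum()
    return float(-(p * np.log2(p)).sum())


def select_slices(volume, k, axis=2, bins=256):
    """Return the indices of the k slices along `axis` with the highest entropy, plus all scores."""
    scores = np.array([slice_entropy(np.take(volume, i, axis=axis), bins)
                       for i in range(volume.shape[axis])])
    return np.argsort(scores)[::-1][:k], scores
```

Uniform background slices score near zero, so the ranking favours slices that contain varied brain tissue.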

4.3. Patch-Based

Instead of using all features or 2D slices, regions of predefined size can be used as model input, which is known as the patch-based approach. These regions can be 2D or 3D to suit model requirements [166]. Lin, Tong, Gao, Guo, Du, Yang, Guo, Xiao, Du and Qu [167] combined 2D greyscale patches of the hippocampal region into RGB patches. The patch-based approach provides a larger training sample size, equal to the number of patches. Individual patches have lower input dimensions and a smaller memory footprint, reducing the computational resources required for training. However, aggregating patch-level outputs into sample-level results adds cost during testing and application. The main challenge of patch-based approaches is capturing the most informative regions. Region selection is therefore a vital component of this category; it includes the size of the patches, the overlap between patches, and the choice of essential patches. Studies have used the statistical significance of voxels [56,190] to find patching regions, while landmark-based methods extract patches around anatomically significant landmarks [191]. The patch-based approach is thus an intermediate form between voxel-based and ROI-based methods. Various approaches use patch-level data, including a single or a small number of patches from each image for low-input-dimension models used to localize atrophy [192], patch-level sub-networks for hierarchical models [166], and ensemble learning with networks trained on defined regions.
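The patch-extraction and re-aggregation steps discussed above can be sketched as follows. The stride controls overlap (a stride smaller than the patch size yields overlapping patches), and the recorded corners allow patch outputs to be mapped back to the image. Averaging patch probabilities is only one simple aggregation rule, chosen here for illustration; the function names are our own.

```python
import numpy as np


def extract_patches_3d(volume, patch_size, stride):
    """Slide a cubic window over a 3D volume and return the patches and their corner coordinates."""
    p, s = patch_size, stride
    patches, corners = [], []
    X, Y, Z = volume.shape
    for x in range(0, X - p + 1, s):
        for y in range(0, Y - p + 1, s):
            for z in range(0, Z - p + 1, s):
                patches.append(volume[x:x + p, y:y + p, z:z + p])
                corners.append((x, y, z))
    return np.stack(patches), corners


def aggregate_votes(patch_probs):
    """Combine patch-level probabilities into one subject-level score by simple averaging."""
    return float(np.mean(patch_probs))
```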

4.4. ROI-Based

Patch-based methods extract regions of predefined and often rigid sizes, while ROI-based methods focus on anatomical regions of interest within the brain. These anatomically defined ROIs are usually obtained during preprocessing by registration to a brain atlas. The most common atlas among the reviewed studies is the Automated Anatomical Labeling (AAL) atlas, which contains 116 ROIs. Other atlases include the Kabani reference work [193] and the Harvard-Oxford cortical and subcortical structural atlases [194,195]. Elastic registration methods, such as HAMMER, achieve higher registration accuracy [196,197]. After ROI extraction, the reviewed studies commonly use the mean GM tissue intensity or the volume of each brain ROI as features from PET, MRI, fMRI, or other modalities [198]. Other measures include subcortical volumes [199,200], grey matter densities [201,202], cortical thickness [203,204], brain glucose metabolism [205,206], cerebral amyloid-β accumulation [207,208], and, for PET, the average regional cerebral metabolic rate of glucose (CMRGlc) [209]. The hippocampus is of particular interest in the reviewed papers; ROI-based methods have used its 3D data and morphological measurements of its thickness, curvature, surface area, and volume. Aderghal, Benois-Pineau and Afdel [189] proposed using both the left and right hippocampal regions by flipping one of them across the median plane. The relationships between ROIs are also common inputs; correlations between regions provide connectivity matrices that are often divided into cortical and subcortical regions [210].
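ROI feature extraction from an atlas-labelled grey-matter map can be sketched as below, together with the left-right flip used to pool both hippocampi. The voxel-volume argument, the integer ROI-id convention, and the choice of axis 0 as the left-right axis are assumptions; the function names are our own.

```python
import numpy as np


def roi_features(gm_map, atlas, roi_ids, voxel_volume_mm3=1.0):
    """Per-ROI mean GM intensity and GM volume (sum of GM probabilities times voxel volume)."""
    means, volumes = [], []
    for r in roi_ids:
        vals = gm_map[atlas == r]
        means.append(vals.mean())
        volumes.append(vals.sum() * voxel_volume_mm3)
    return np.array(means), np.array(volumes)


def mirror_left_right(region, lr_axis=0):
    """Flip a region across the median plane so left and right hippocampi share one orientation."""
    return np.flip(region, axis=lr_axis)
```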

ROI-based methods are closely linked to anatomical regions and therefore offer high interpretability and clinical implementability. However, their reliance on a priori knowledge limits their potential in exploratory studies. Their computational cost usually lies between those of the voxel-based and slice-based approaches, but unlike slice-based methods, they can preserve local 3D geometric information. Hierarchical neural network frameworks containing sub-networks at each representation level, combined with effective network pruning, have also been proposed to retain complete information [192].

4.5. Voxel-Based

Voxel-based approaches are feature-based approaches that analyze individual voxels, the three-dimensional pixels that make up a medical image [211]. These voxels represent discrete locations in the brain [212], and their size and number can be adjusted to balance computational efficiency and spatial resolution [213]. Compared with slice-based approaches, voxel-based methods can capture the three-dimensional structure of the brain and its changes, which may not be evident in two-dimensional slices. Because of the complexity of brain structure and the differences between subjects, spatial co-alignment (registration) is essential [214]. Registration is the process of spatially aligning image scans to an anatomical reference space [215]; it aligns MRI images of different patients, or of the same patient at different time points, to a standardized template representing a common anatomical space [216]. Many studies segment the aligned images into tissue types, such as grey matter, white matter, and cerebrospinal fluid, using the distinct signal characteristics of each tissue type before applying the model [217,218]. Comparing grey and white matter across groups or time points can be a sensitive method for detecting subtle changes in brain structure. However, voxel-based approaches also have limitations, chief among them the very high input dimensionality that results from high spatial resolution. To obtain a more compact representation, [219] used functional network topologies to depict neurodegeneration in a low-dimensional form, building on the observation that functional network topologies can be expressed on a low-dimensional manifold and that brain state configurations can be represented in a relatively low-dimensional space.

5. Introduction to Deep Learning

Deep learning (DL) is a branch of machine learning that uses neural networks as universal approximators [70,220]. Neural networks are a modern development of the original perceptron [221,222], trained with chain-rule-derived gradient computation [223,224] and backpropagation [225,226]. The fundamental formulation of the neural network can be described through the formulation of a classifier:

$$y = f(x),$$

where $x$ is the data. The function $f$ represents the ideal mapping between the input $x$ and the underlying solution $y$. A neural network defines a mapping $f_\theta$ that approximates $f$ by adjusting its parameters $\theta$, i.e., $\hat{y} = f_\theta(x) \approx f(x)$. This adjustment can be considered a form of learning. For learning, a loss function, $\mathcal{L}(y, \hat{y})$, is constructed from the relation between the ideal output $y$ and the current output $\hat{y}$ of the neural network. Backpropagating the derivatives of the loss function provides a means of updating the parameters,

$$\theta \leftarrow \theta - \eta \frac{\partial \mathcal{L}}{\partial \theta},$$

with a learning rate $\eta$. DL can abstract latent feature representations with minimal manual intervention. Features generated by DL cover a hierarchy from low- to high-level features, extending from lines, dots, or edges to objects or characteristic shapes [227].
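To make the learning rule described above concrete (parameters moved against the gradient of a loss, scaled by a learning rate), the following sketch trains the simplest possible parameterized mapping, a single sigmoid unit (logistic regression), by gradient descent on binary cross-entropy. With one layer, backpropagation reduces to a single application of the chain rule; the learning rate, epoch count, and function name are arbitrary illustrative choices.

```python
import numpy as np


def train_logistic(x, y, lr=0.5, epochs=500):
    """Fit sigmoid(x @ w + b) by gradient descent on the mean binary cross-entropy loss.

    The parameters are theta = (w, b); each step applies theta <- theta - lr * dL/dtheta.
    """
    w = np.zeros(x.shape[1])
    b = 0.0
    for _ in range(epochs):
        y_hat = 1.0 / (1.0 + np.exp(-(x @ w + b)))   # current network output
        # Chain rule: for sigmoid + cross-entropy, dL/dz = y_hat - y (averaged over samples).
        grad_z = (y_hat - y) / len(y)
        w -= lr * (x.T @ grad_z)                       # dL/dw = x^T dL/dz
        b -= lr * grad_z.sum()                         # dL/db = sum of dL/dz
    return w, b
```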

Advances in deep learning have achieved performance comparable to healthcare professionals in medical image classification [175,228]. Because neural networks are universal approximators that can serve as modular components, they can be formulated in multiple ways, including as feature extractors dependent on preprocessing and domain knowledge, classifiers that discriminate between groups, or regressors that predict outcomes. Neural networks can also be used for AD knowledge discovery, as the feature representations they extract might contain information that is counter-intuitive to human understanding. This review outlines the fundamental techniques of deep learning and the main categories of current approaches to various challenges. As a sub-branch of machine learning, deep learning approaches can be divided into two main categories: unsupervised learning and supervised learning.

6. Unsupervised Learning

Unsupervised learning draws inferences from data samples without ground-truth labels, while supervised approaches require pairs of data samples and labels. In deep learning, no architecture is strictly supervised or unsupervised if we decompose it into its base components, e.g., the feature extraction and classification components of a convolutional neural network. In this survey, the distinction is based on the relationship between the optimization target of the main neural network or framework and the ground-truth labels. Unsupervised learning methods are summarized in this section, and supervised learning methods in Section 7.

6.1. Autoencoder (AE)

Autoencoders are a type of artificial neural network designed to learn efficient data representations. In its classical form, the autoencoder is an unsupervised learning method with two main components: the encoder $g_\phi$ and the decoder $h_\psi$. The encoder is a neural network that maps the input $x$ to a latent feature representation, while the decoder, a mirror image of the encoder, reconstructs the original input from the compressed representation, i.e.,

$$\hat{x} = h_\psi(g_\phi(x)),$$

where $\hat{x}$ is the reconstructed input, and $z = g_\phi(x)$ is the latent representation. An AE can obtain efficient data representations in an alternative dimension by minimizing a reconstruction loss, e.g., the squared error:

$$\mathcal{L}(x, \hat{x}) = \lVert x - \hat{x} \rVert_2^2.$$

The original AE consists of fully connected layers, while a stacked autoencoder consists of multiple layers within the encoder and decoder, allowing the extraction of high-level representations. This structure can be applied directly to extracted features such as the ROI features detailed in Section 4.4. In a previous study, structural ROI features were combined with texture features extracted from fractal Brownian motion co-occurrence matrices [229]. Because the AE is unsupervised, a supervised neural network component is attached after training to enable classification or regression. This component commonly consists of fully connected layers (FCL) and activations. Fine-tuning, i.e., re-training the network together with the supervised component, is often applied to achieve better performance.

Greedy layer-wise training can be applied because the encoder and decoder have similar structures regardless of the number of stacked layers. In this training protocol, layers are progressively added to the encoder and decoder and retrained to build a hierarchical representation. Liu et al. [230] integrated this protocol with multi-modal fusion, improving multi-class classification including MCI sub-types to 66.47% ACC with 86.98% specificity. The same AE also achieved higher performance for the binary classification tasks of AD vs. HC and MCI vs. HC. Another common way to improve AE performance is to impose sparsity constraints. The constraint can be applied through $\ell_1$-regularization or Kullback–Leibler divergence [231,232,233] so that only a small number of neurons are active for each training sample, thereby reducing overfitting. For classification between HC and MCI, Ju et al. [234] applied a sparsity-constrained AE to functional connection matrices between ROIs in fMRI data. The sparsity-constrained AE achieved a classification ACC of 86.47% with an AUC of 0.9164, over 20% higher than its machine learning counterparts SVM, LDA, and LR. Apart from the training protocol and parameter constraints, some methods modify the input and output of the AE. A denoising AE reformulates the original reconstruction problem as a denoising problem: the input is corrupted with isotropic Gaussian noise, and the network is trained to reconstruct the clean input.
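The two modifications described above can be sketched in NumPy: the KL-divergence sparsity penalty, which pushes each hidden unit's average activation towards a small target, and the Gaussian corruption used by denoising AEs. The target activation `rho`, the noise level, and the helper names are illustrative assumptions; in training, the penalty would be added, with a weighting factor, to the reconstruction loss.

```python
import numpy as np


def kl_sparsity_penalty(hidden, rho=0.05, eps=1e-8):
    """KL-divergence sparsity term of a sparse autoencoder.

    hidden: (n_samples, n_units) sigmoid activations in (0, 1); rho: target mean activation.
    """
    rho_hat = np.clip(hidden.mean(axis=0), eps, 1 - eps)      # average activation of each unit
    kl = rho * np.log(rho / rho_hat) + (1 - rho) * np.log((1 - rho) / (1 - rho_hat))
    return float(kl.sum())


def corrupt(x, noise_std=0.1, rng=None):
    """Denoising-AE input: add isotropic Gaussian noise; the target remains the clean x."""
    rng = np.random.default_rng() if rng is None else rng
    return x + rng.normal(0.0, noise_std, size=x.shape)
```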

Ithapu et al. [235] used this AE variant for feature extraction to construct a quantitative marker for sample enrichment. Bhatkoti and Paul [236] applied a k-sparse autoencoder, in which only the k largest hidden-layer activations are retained and the rest are set to zero, so that only the corresponding neurons are updated during backpropagation. These studies are representative of innovations in the application and enhancement of the original autoencoder.
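The k-sparse selection step can be written as a masking operation on the hidden layer. This is a sketch of the selection only, assuming activations are stored as a (samples × units) array; it is not the full training procedure of [236], and the function name is our own.

```python
import numpy as np


def k_sparse(hidden, k):
    """Keep the k largest activations in each row (sample) of the hidden layer and zero the rest.

    During training, the same mask gates the gradients, so only these k units are updated.
    """
    out = np.zeros_like(hidden)
    idx = np.argpartition(hidden, -k, axis=1)[:, -k:]        # indices of the k largest per row
    rows = np.arange(hidden.shape[0])[:, None]
    out[rows, idx] = hidden[rows, idx]
    return out
```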

The encoder and decoder are not limited to MLPs; convolutional structures are also common in AD-related applications of AEs. One study applied a 1D convolutional AE to derive vector representations of longitudinal EHR data, where the 1D convolution operations act as temporal filters that capture information on patient history [110]. More sophisticated convolutional structures can also be used in the encoder and decoder. Oh et al. [237] applied a convolutional AE with Inception modules, which are groups of layers consisting of multiple parallel filters. Because the encoder and decoder can be configured freely, the AE can be adapted to any input dimension. Hosseini-Asl et al. [238] and Oh, Chung, Kim, Kim and Oh [237] applied 3D convolutional autoencoders to compress the representations of 3D MRI, while Er and Goularas [239] applied an AE as an unsupervised component of the feature extraction process. An AE can also be used for pre-training: after training, fully connected layers are added to the compressed layer of the encoder, which is then used for supervised learning [240].

Apart from structural adjustments to the encoder and decoder layers, a probabilistic variation of AE also exists. These AE are known as variational autoencoders (VAE). For VAE, a single sample of available data can be interpreted as a random sample from the true distribution of data , while the encoder can be represented as , an approximation to the true marginal distribution of . The loss function is, therefore,

where is the reconstruction loss and is the Kullback–Leibler divergence, which regularizes the VAE and enforces the Gaussian prior . Through this adjustment, AE learns latent variable distributions instead of representations [241]. A more intuitive formulation is as follows:

where and represent the mapping to two independent neural network layers representing and , the set of mean and variance of the latent distributions. The latent representation can be sampled through reparameterization,

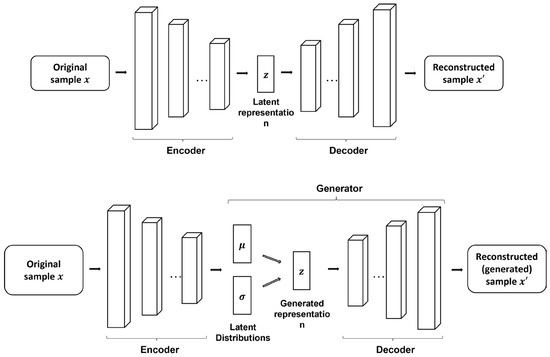

and decoded to reconstruct the input . Variational autoencoder has recently been applied to extract latent distributions of eMCI from high-dimensional brain functional networks [242] and provide risk analysis for AD progression [243]. Instead of a single set of latent distributions, a hierarchy of latent distributions can also be learned using ladder VAE. This variant of VAE was applied by Biffi et al. [244] to model HC and AD hippocampal segmentation populations, where latent distribution-generated segmentations for AD showed apparent atrophy compared with HC. By learning latent distributions, new data can be sampled from these distributions to generate new samples. From this perspective, VAE can be considered a generative model and is introduced in the following subsection. The fundamental autoencoder structures are shown in Figure 5.

Figure 5.

Fundamental autoencoder structures. The top figure represents a stacked 2D autoencoder, where each block represents a convolutional layer composed of a bank of convolutional filters (represented by the rectangular columns). The bottom figure represents a VAE, where, instead of a latent representation, the encoder generates latent distributions represented by the mean $\mu$ and variance $\sigma^2$, which are then used to generate representations. The convolution operations can be replaced by fully connected layers or complex modules, e.g., the Inception module.
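The reparameterization step and the closed-form KL term of a Gaussian VAE described above can be sketched as follows. We assume, as is common in practice, that the encoder outputs the log-variance rather than the standard deviation for numerical stability, and we use a squared-error reconstruction term; the function names are our own.

```python
import numpy as np


def reparameterize(mu, log_var, rng):
    """z = mu + sigma * eps with eps ~ N(0, I); randomness sits in eps so gradients reach mu and sigma."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps


def gaussian_kl(mu, log_var):
    """Closed-form KL(N(mu, diag(sigma^2)) || N(0, I)), summed over the last (latent) axis."""
    return 0.5 * np.sum(np.exp(log_var) + mu ** 2 - 1.0 - log_var, axis=-1)


def vae_loss(x, x_hat, mu, log_var):
    """Squared-error reconstruction term plus the KL regularizer, averaged over the batch."""
    recon = np.sum((x - x_hat) ** 2, axis=-1)
    return float(np.mean(recon + gaussian_kl(mu, log_var)))
```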

6.2. Generative Models

Generative methods are a form of unsupervised learning that requires the model to recreate new data to supplement an existing data distribution. Variational autoencoders and RBM mentioned in the previous sections are both generative models. Another popular generative method is the construction of generative adversarial networks (GANs), where two or more neural networks compete in a zero-sum game. Classical GAN includes a generative neural network used to generate dummy data and a discriminator neural network to determine whether a sample is generated. The generator generates fake images from noise . The generated sample belongs to the generated data distribution . The discriminator attempts to discriminate between generated images and real images, . The competition between the generator and discriminator can be formulated through their loss function

where the objective is to minimize and maximize [245]. GAN is widely used for medical image synthesis, reconstruction, segmentation, and classification [246]. Islam and Zhang [247] applied a convolutional GAN to generate synthetic PET images for AD, NC, and MCI. The GAN model generated images with a mean PSNR of 32.83 and a mean SSIM of 77.48. The generated data were then classified using a 2D CNN, which achieved 71.45% ACC. This performance drop illustrates the difficulty in synthesizing quality synthetic images for training. A similar framework was proposed with shared feature maps between the generator and discriminator. With transfer learning, the framework achieved 0.713 AUC for SCD-conversion prediction [248]. Roychowdhury and Roychowdhury [249] implemented a conditional GAN, where the discriminator and generator are conditioned by labels ,

The conditional GAN was applied to generate longitudinal MRI data by generating and overlaying cortical ribbon images. The generated data provide a potential disease progression model of MCI to AD conversion and brain atrophy. The study showed that the modeled fractal dimension of the cortical image decreases over time. Baumgartner et al. [250] applied an unsupervised Wasserstein GAN, where a K-Lipschitz constraint critic function replaces the supervised discriminator. The loss of this model can be formulated as:

where is a set of 1-Lipschitz functions, and is a map generator function that uses existing images to generate new images . An additional regularization component is also added to the overall loss function to constrain the map for minimum change to the original image . In the study, is modeled by a 3D U-Net segmentation model. The modified WGAN generated disease effect maps similar to human observations for MRI images of MCI-converted AD. An alternative application of Wasserstein GAN with additional boundary equilibrium constraints was applied by Kim et al. [251]. This study extracted latent representations from autoencoder structure discriminators for classification with FCL and SVM. For AD vs. HC, the model achieved an ACC of 95.14% with an AUC of 0.98. A subsequent study by Rachmadi et al. [252] built upon the Wasserstein GAN structure with an additional critic function . The loss function corresponding to this additional component is:

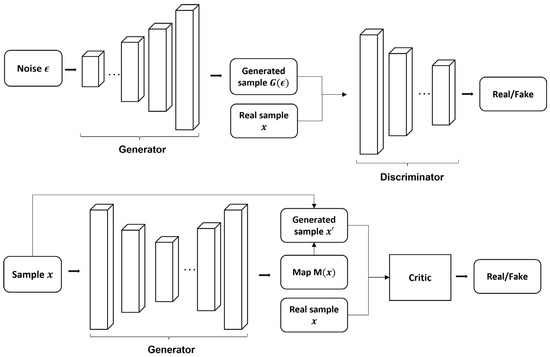

where and are baseline and follow-up images, respectively. Apart from using the original critic to discriminate between real and fake images, the new critic is also applied to discriminate between real disease evolution maps and generated maps . The inclusion of reformulates the generation of dummy scans to the generation of longitudinal evolution maps. Though this study was applied in monitoring the evolution of white matter hyperintensities in cerebral small vessel disease, the same concept and technique can be migrated to data on Alzheimer’s and related diseases [252]. Example GANs are illustrated in Figure 6. Apart from GAN, another type of innovative generative model is invertible neural networks (INN), which create invertible mappings with exact likelihood. Sun et al. [253] used two INN to extract the latent space of MRI and PET data and map them to each other for modality conversion. Conditional INNs, based on the conditional probability of latent space and combined with recurrent neural networks (RNN), were also used to generate longitudinal AD samples [176].

Figure 6.

Example generative adversarial networks. The top figure is an example vanilla 3D convolutional generative adversarial network. The bottom figure shows the basic schematics of the modified Wasserstein GAN [250,252]. The structure of each generator and discriminator component can be modified for different neural network architectures.
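Given discriminator (or critic) outputs on a batch, the adversarial objectives discussed in this subsection reduce to simple averages. The sketch below evaluates the minimax GAN value and the Wasserstein critic objective; it deliberately omits the networks themselves and the Lipschitz enforcement (e.g., weight clipping or a gradient penalty) that a WGAN critic requires. Function names are illustrative.

```python
import numpy as np


def gan_losses(d_real, d_fake, eps=1e-12):
    """Minimax GAN objective estimated on a batch.

    d_real = D(x) on real images, d_fake = D(G(z)) on generated images, both probabilities in (0, 1).
    """
    v = np.mean(np.log(d_real + eps)) + np.mean(np.log(1.0 - d_fake + eps))
    d_loss = -v                                    # D maximizes V, i.e. minimizes -V
    g_loss = np.mean(np.log(1.0 - d_fake + eps))    # G minimizes the term it can influence
    return float(d_loss), float(g_loss)


def wgan_losses(c_real, c_fake):
    """Wasserstein objective with unbounded critic scores instead of probabilities.

    The critic maximizes E[C(real)] - E[C(fake)]; the generator maximizes E[C(fake)].
    """
    critic_loss = -(np.mean(c_real) - np.mean(c_fake))
    gen_loss = -np.mean(c_fake)
    return float(critic_loss), float(gen_loss)
```

Many implementations replace the generator term with the non-saturating form, maximizing the log of D(G(z)), which gives stronger gradients early in training.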

6.3. Restricted Boltzmann Machine (RBM) and Other Unsupervised Methods

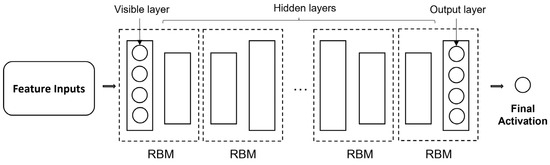

Apart from GANs and AEs, numerous unsupervised methods have been applied to AD and related diseases. A well-known category is the restricted Boltzmann machine (RBM), a generative network with a bipartite graph structure that models the probability distribution of the input data. An RBM consists of two symmetrically linked layers containing the visible and hidden units, respectively; units within the same layer are not connected. Similar to autoencoders, RBMs encode the input data through the forward pass and reconstruct it through the backward pass, aided by two separate sets of biases for the two passes. As an unsupervised method, the RBM can also be used for feature extraction. Li et al. [254] applied multiple RBMs to initialize multiple hidden layers one at a time, while Suk et al. [255] combined RBMs with an autoencoder learning module through greedy layer-wise learning. A conditional RBM has been applied as a statistical model for unsupervised progression forecasting of MCI, achieving ADAS-Cog13 prediction performance comparable to that of supervised methods [256]. A deep belief network (DBN) is a neural network architecture comprising stacked RBMs; its basic structure is shown in Figure 7. A DBN allows a backward pass of generative weights from the extracted features to the input, making it more robust to noise. However, the layer-by-layer learning procedure of a DBN can be computationally expensive. Suk, Lee, Shen and Initiative [166] applied a combination of an MLP and a deep Boltzmann machine (DBM) for feature extraction from multiple modalities.

Figure 7.

The basic structure of a deep belief network (DBN) consists of multiple restricted Boltzmann machines (RBM).
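A single contrastive-divergence (CD-1) update, the standard approximate training rule for RBMs, can be sketched as follows for binary visible and hidden units. It shows the forward (visible to hidden) and backward (hidden to visible) passes and the two bias vectors described above; the learning rate and variable names are illustrative, and this is not the specific training setup of the cited studies.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def cd1_update(v0, W, b_vis, b_hid, lr, rng):
    """One CD-1 step for a Bernoulli RBM; W, b_vis and b_hid are updated in place.

    v0: (n, n_visible) binary data; W: (n_visible, n_hidden); b_vis / b_hid: the two bias vectors.
    Returns the reconstruction error before the update, for monitoring.
    """
    p_h0 = sigmoid(v0 @ W + b_hid)                          # forward pass: P(h = 1 | v)
    h0 = (rng.random(p_h0.shape) < p_h0).astype(float)     # sample binary hidden states
    p_v1 = sigmoid(h0 @ W.T + b_vis)                        # backward pass: reconstruct visibles
    p_h1 = sigmoid(p_v1 @ W + b_hid)
    n = v0.shape[0]
    W += lr * (v0.T @ p_h0 - p_v1.T @ p_h1) / n             # data statistics minus model statistics
    b_vis += lr * (v0 - p_v1).mean(axis=0)
    b_hid += lr * (p_h0 - p_h1).mean(axis=0)
    return float(np.mean((v0 - p_v1) ** 2))
```

Stacking such RBMs, each trained on the hidden activations of the previous one, gives the layer-by-layer DBN pre-training discussed above.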

More recent applications of unsupervised learning show great diversity. Razavi et al. [257] applied sparse filtering as an unsupervised pre-training strategy for a 2D CNN. Sparse filtering is an easily applicable pre-training method in which a neural network is first trained to output features of a specified dimension. In this study, the classification cost function is replaced by a sparsity objective: the $\ell_1$ norm of the $\ell_2$-normalized features of the specified dimension is minimized. Bi et al. [258] combined a CNN with PCA-generated filters and k-means clustering in a fully unsupervised framework for clustering MRI of AD, MCI, and NC. Wang, Xin, Wang, Gu, Zhao and Qian [184] hierarchically applied extreme learning machines for unsupervised feature representation extraction. Extreme learning machines are a variant of feedforward neural networks that compute the output weights with the Moore–Penrose generalized inverse instead of gradient-based backpropagation. Majumdar and Singhal [259] applied deep dictionary learning with noisy inputs, in a manner similar to denoising autoencoders, for categorical classification, while Cheng et al. [260] used a U-Net-based CNN with rigid alignment for cortical surface registration of MRI images.
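Two of the techniques above can be sketched briefly. The first part is an ELM-style autoencoder layer: the hidden weights are random and fixed, and only the output weights are obtained with the Moore–Penrose pseudo-inverse; stacking such layers gives a hierarchical representation. Gaussian random weights, the tanh activation, and the `x @ beta.T` representation are assumptions following common ELM-autoencoder practice, not details of [184]. The second part evaluates the sparse-filtering cost on a feature matrix. All function names are our own.

```python
import numpy as np


def elm_ae_layer(x, n_hidden, rng):
    """One ELM-autoencoder layer: random, fixed input weights; output weights solved in closed form.

    Returns (representation, beta, H), where H @ beta approximates x in the least-squares sense.
    """
    W = rng.standard_normal((x.shape[1], n_hidden))
    b = rng.standard_normal(n_hidden)
    H = np.tanh(x @ W + b)                  # hidden-layer output; W and b are never trained
    beta = np.linalg.pinv(H) @ x            # Moore-Penrose solution of H @ beta = x
    return x @ beta.T, beta, H              # new representation: (n_samples, n_hidden)


def hierarchical_elm(x, layer_sizes, seed=0):
    """Stack ELM-AE layers, feeding each layer's representation to the next."""
    rng = np.random.default_rng(seed)
    for n_hidden in layer_sizes:
        x, _, _ = elm_ae_layer(x, n_hidden, rng)
    return x


def sparse_filtering_objective(F, eps=1e-8):
    """Sparse-filtering cost for a feature matrix F of shape (n_features, n_examples).

    Each feature (row) is l2-normalized across examples, then each example (column) is
    l2-normalized; the cost is the l1 norm of the result, which training minimizes.
    """
    F = np.sqrt(F ** 2 + eps)                                # soft absolute value
    F = F / np.linalg.norm(F, axis=1, keepdims=True)
    F = F / np.linalg.norm(F, axis=0, keepdims=True)
    return float(np.abs(F).sum())
```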

7. Supervised and Semi-Supervised Learning

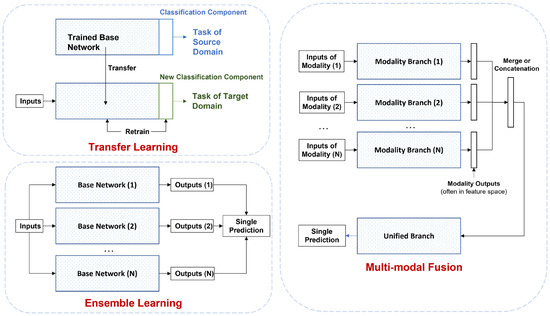

Supervised learning involves the use of known labels. In this study, we focus on the use of neural networks to map inputs to definite outputs. This section first introduces architecture classes, such as convolutional and recurrent neural networks, in Section 7.1 and Section 7.2. We then present recent advances in transfer learning, ensemble learning, and multimodal fusion in Section 8.1, Section 8.2 and Section 8.3. Finally, we introduce the most recent developments in graph and geometric neural networks.

7.1. Convolutional Neural Networks (CNN)

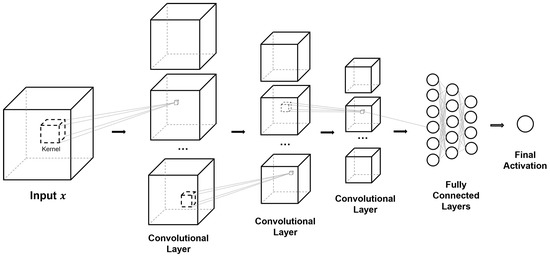

The innovation of convolutional neural networks (CNN), especially the development of the AlexNet [261,262] by Krizhevsky et al. [263], validated neural networks as practical universal approximators with layer-wise feature propagation. In CNN, the dense connections of MLPs are replaced with kernel convolutions:

where is the convolutional kernel; is a non-linear activation function; and and represent the dimensions for height, width, and channel of the input. CNN allows for parameter-efficient hierarchical feature extraction. Besides the reduced computational requirements, CNN has translational invariance and can retain spatial information, making it particularly suitable for neuroimaging data. The effectiveness of CNN is evident in their broad application, both as an independent model and as network components [264].
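The kernel convolution described above can be implemented directly (and slowly) with loops for a single output channel, which makes the role of the kernel explicit. Like most deep learning libraries, this sketch computes a cross-correlation with "valid" padding and no bias; the function name and the default ReLU activation are illustrative choices.

```python
import numpy as np


def conv2d_single(x, K, activation=lambda a: np.maximum(a, 0.0)):
    """Valid 2D convolution (cross-correlation) producing one output channel.

    x: (H_in, W_in, C) input; K: (h, w, C) kernel.
    Output[i, j] = activation(sum over m, n, c of K[m, n, c] * x[i + m, j + n, c]).
    """
    h, w, _ = K.shape
    H_out = x.shape[0] - h + 1
    W_out = x.shape[1] - w + 1
    y = np.empty((H_out, W_out))
    for i in range(H_out):
        for j in range(W_out):
            y[i, j] = np.sum(K * x[i:i + h, j:j + w, :])    # same kernel reused at every position
    return activation(y)
```

Because the same kernel weights are reused at every position, the parameter count depends only on the kernel size, not on the image size.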

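A typical CNN consists of several convolutional layers, each followed by a non-linear activation. The non-linearity allows stacked layers to represent non-linear functions (without it, a stack of convolutions collapses into a single linear map), and the parameters are learned through backpropagation. Commonly used activation functions include the rectified linear unit (ReLU), $f(x) = \max(0, x)$, the hyperbolic tangent, $f(x) = \tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$, and the sigmoid function, $f(x) = \frac{1}{1 + e^{-x}}$. Newer activation functions such as leaky ReLU and parametric ReLU are also seen in the reviewed literature [177,265].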
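For reference, the activation functions named above can be written as NumPy one-liners. The default leaky-ReLU slope of 0.01 is an assumption (a common default); in parametric ReLU the negative slope `a` is a learned parameter, here simply passed in.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def tanh(x):
    return np.tanh(x)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def leaky_relu(x, alpha=0.01):
    # Fixed small slope for negative inputs, so gradients never vanish completely.
    return np.where(x > 0, x, alpha * x)


def prelu(x, a):
    # Same shape as leaky ReLU, but the slope a is learned during training.
    return np.where(x > 0, x, a * x)
```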

Pooling, the downsampling of feature maps with an average or maximum filter, is also often applied. Batch normalization, which standardizes each channel's activations using the mean and variance of the current mini-batch before applying a learnable scale and shift, is also commonly applied after convolution. A combination of these operations forms a convolution block, and a typical CNN comprises multiple convolution blocks. These blocks are often followed by a few fully connected layers and a Softmax activation for classification or a linear activation for regression. The theoretical foundations of CNNs can be understood through tensor decomposition [266]; in this paper, we focus on practical applications of CNNs for AD-related tasks. The following subsections provide a brief introduction to 2D and 3D CNNs, focusing on recent applications; more detail can be found in previous reviews [89,96].
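The pooling and normalization steps of a convolution block can be sketched as follows, assuming a channel-last batch of shape `(N, H, W, C)` whose height and width are divisible by the pooling size. `batch_norm_train` shows training-time behavior only: statistics are computed per channel over the batch and spatial axes and followed by the learnable scale `gamma` and shift `beta`; at inference, frameworks use running averages instead, which this sketch omits. All function names are illustrative.

```python
import numpy as np


def max_pool2d(x, size=2):
    """Non-overlapping max pooling over H and W of an (N, H, W, C) batch."""
    N, H, W, C = x.shape
    blocks = x.reshape(N, H // size, size, W // size, size, C)
    return blocks.max(axis=(2, 4))


def avg_pool2d(x, size=2):
    """Non-overlapping average pooling over H and W of an (N, H, W, C) batch."""
    N, H, W, C = x.shape
    blocks = x.reshape(N, H // size, size, W // size, size, C)
    return blocks.mean(axis=(2, 4))


def batch_norm_train(x, gamma, beta, eps=1e-5):
    """Standardize each channel with mini-batch statistics, then scale and shift."""
    mean = x.mean(axis=(0, 1, 2), keepdims=True)  # per-channel mean over batch and space
    var = x.var(axis=(0, 1, 2), keepdims=True)    # per-channel variance
    x_hat = (x - mean) / np.sqrt(var + eps)
    return gamma * x_hat + beta                   # gamma, beta have shape (C,)
```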

7.1.1. 2D-CNN

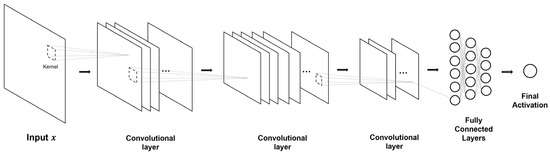

The original CNN was designed for pattern recognition in 2D images, which makes it straightforward to apply to 2D neuroimaging data. A basic 2D-CNN is shown in Figure 8. Aderghal, Benois-Pineau and Afdel [189] used a two-layer CNN with ReLU and max-pooling on 2D+ε images, which combine slices from the sagittal, coronal, and axial planes into a three-channel 2D image. Alternatively, when 2D slices are available from multiple planes of a 3D image, an individual 2D-CNN can be trained for each plane and the networks then ensembled. Increased network depth is associated with improved performance. Wang, Phillips, Sui, Liu, Yang and Cheng [265] proposed a deeper eight-layer CNN with leaky rectified linear units to classify single-slice MRI images, while a similar CNN was applied to the classification of ¹⁸F-florbetaben PET images [177]. Tang et al. [267] used a CNN model to identify amyloid plaques in AD histology slides. Similar to the aforementioned 2D-CNNs, the network consists of alternating layers of 2D convolution and max-pooling, followed by fully connected layers with ReLU activation and a Softmax activation to produce classification outputs. The model performed excellently in classifying amyloid plaques, with an AUC of 0.993. Current state-of-the-art 2D-CNN models are mostly developed for natural image classification, but they are readily applicable to 2D AD-related data. The availability of pre-trained state-of-the-art models provides the basis for transfer learning, as summarized in Section 8.1.

Figure 8.

Example 2D-CNN. This architecture provides the foundation for 2D convolutional architectures. Square slices in this figure represent channel-wise feature maps after convolution.
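The three-channel 2D composition described for [189] can be illustrated with a minimal sketch. It follows this survey's description only, not the original preprocessing code: it assumes a volume already resampled to a cube of shape `(S, S, S)`, with axis 0 running left to right, axis 1 posterior to anterior, and axis 2 inferior to superior, so that fixing an index on each axis yields a sagittal, coronal, and axial slice, respectively. The central slice of each plane is used by default and the three slices are stacked as channels. The function name `three_plane_image` and the axis convention are assumptions.

```python
import numpy as np


def three_plane_image(volume, index=None):
    """Stack one sagittal, one coronal, and one axial slice into an (S, S, 3) image."""
    S = volume.shape[0]
    assert volume.shape == (S, S, S), "this sketch assumes a cubic volume"
    k = S // 2 if index is None else index  # central slice by default
    sagittal = volume[k, :, :]
    coronal = volume[:, k, :]
    axial = volume[:, :, k]
    return np.stack([sagittal, coronal, axial], axis=-1)  # planes become channels
```

The result can be fed to any 2D-CNN that expects three-channel input, such as a network pre-trained on RGB images.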

Because a 2D-CNN is limited to two dimensions, the slices of a multi-slice volume are treated either as independent or as similar samples. 2D-CNNs can also be applied to 1D data, e.g., by using the Hilbert space-filling curve to transform 1D cognitive assessment data into 2D [268] or by treating the time series of multi-channel EEG as a 2D matrix [269]. Similarly, Alavi et al. [271] adapted the triplet architecture of face recognition and the Siamese one-shot learning model for automated live comparative analysis of RNA-seq data from GEO. Though limited by dimensionality, 2D-CNNs can be more practical for real-world application and deployment, as the data used in clinical practice are often 2D or lack enough slices to construct the high-dimensional 3D T1-weighted MRI predominantly used in medical research. The 3D neuroimaging data in open libraries such as ADNI and OASIS are often processed to obtain 2D slices or patches, as mentioned in Section 4. To retain 3D spatial information, 2D slices or patches from the sagittal, coronal, and axial views are often extracted for multi-view networks [270].
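The Hilbert-curve transform cited above [268] can be sketched with the standard iterative index-to-coordinate algorithm for a Hilbert curve. In this sketch, which is not the code of [268], consecutive entries of a 1D vector (e.g., item scores of a cognitive assessment) are placed on neighboring cells of the smallest 2^k × 2^k grid that fits them, so a 2D-CNN kernel sees entries that were close in the original order. Filling unused cells with zeros and all function names are assumptions.

```python
import numpy as np


def _rotate(s, x, y, rx, ry):
    # Rotate/flip a quadrant so that the sub-curves join up correctly.
    if ry == 0:
        if rx == 1:
            x, y = s - 1 - x, s - 1 - y
        x, y = y, x
    return x, y


def hilbert_d2xy(n, d):
    """Map distance d along a Hilbert curve to (x, y) on an n x n grid (n a power of 2)."""
    x = y = 0
    t = d
    s = 1
    while s < n:
        rx = 1 & (t // 2)
        ry = 1 & (t ^ rx)
        x, y = _rotate(s, x, y, rx, ry)
        x += s * rx
        y += s * ry
        t //= 4
        s *= 2
    return x, y


def vector_to_hilbert_image(v):
    """Lay a 1D vector along a Hilbert curve on the smallest 2^k x 2^k grid; unused cells stay 0."""
    v = np.asarray(v, dtype=float)
    n = 1
    while n * n < len(v):
        n *= 2
    img = np.zeros((n, n))
    for d, value in enumerate(v):
        x, y = hilbert_d2xy(n, d)
        img[y, x] = value
    return img
```

For example, `vector_to_hilbert_image([1, 2, 3, 4])` places the four values in a U-shaped path on a 2 × 2 grid.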

7.1.2. 3D-CNN

A 3D-CNN is inherently the same as a 2D-CNN apart from an additional dimension in all components, including the convolutional kernel. This additional dimension gives a 3D-CNN better spatial information than a 2D-CNN, since the latter is limited by its kernel dimensionality and therefore cannot efficiently capture spatial information across slices. A basic 3D-CNN is shown in Figure 9. Similar to fundamental 2D-CNN models, Islam and Zhang [272] used a 3D-CNN composed of four 3D convolutional layers followed by an FCL and Softmax on T1-weighted MRI, while Duc et al. [273] applied a similar CNN to rs-fMRI functional networks. A simple two-block 3D-CNN applied by Basaia et al. [274] showed either comparable or better performance than 2D-CNNs in binary classification among AD, NC, and various MCI subtypes.

Figure 9.

Basic 3D CNN architecture. 3D images and patches from Figure 4 can be used as inputs for this architecture. Individual blocks within a convolutional layer represent channel-wise feature maps after convolution. Modifications such as identity mapping and dense connectivity can be applied as in 2D, with the additional depth dimension. Fully connected layers can be replaced with global average pooling for a fully convolutional neural network, while the final activation can be modified for classification or regression, or additional structure can be added for alternative tasks such as semantic segmentation.
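Extending the 2D convolution to 3D only adds a depth index to the kernel and the loops. This NumPy sketch (hypothetical name `conv3d_single`, valid padding, stride 1, ReLU activation, channel-last layout) shows that a single 3D kernel spans several adjacent slices, which a 2D kernel applied slice by slice cannot do.

```python
import numpy as np


def conv3d_single(x, K, b=0.0):
    """Valid (no padding, stride 1) 3D convolution producing one feature map, then ReLU.

    x: input volume of shape (D, H, W, C); K: kernel of shape (kd, kh, kw, C).
    Like most deep learning libraries, this computes cross-correlation (no kernel flip).
    """
    D, H, W, C = x.shape
    kd, kh, kw, kc = K.shape
    assert kc == C, "kernel must span all input channels"
    out = np.empty((D - kd + 1, H - kh + 1, W - kw + 1))
    for d in range(out.shape[0]):
        for i in range(out.shape[1]):
            for j in range(out.shape[2]):
                # The kernel covers kd adjacent slices, so it mixes information across slices.
                out[d, i, j] = np.sum(K * x[d:d + kd, i:i + kh, j:j + kw, :]) + b
    return np.maximum(out, 0.0)
```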

Given the similarity between 2D and 3D CNNs, high-performing 2D architectures can easily be adapted to three dimensions. Basaia, Agosta, Wagner, Canu, Magnani, Santangelo, Filippi and Initiative [274] and Qiu et al. [275] both implemented 3D versions of an all convolutional neural network for the classification of AD and MCI, in which the FCL + Softmax classification component is replaced with a convolutional layer whose number of channels equals the number of categories, followed by global pooling of each channel. A similar all convolutional CNN was applied by Choi et al. [276] for MCI conversion prediction. Unsupervised pre-training with 3D convolutional autoencoders has also been tested by Hosseini-Asl, Keynton and El-Baz [238] and Martinez-Murcia et al. [277], while features extracted by 3D-CNNs have also been used as input for sparse autoencoders [278]. Ge et al. [279] combined a U-Net-structured 3D-CNN for multi-scale feature extraction with XGBoost feature selection. State-of-the-art 2D-CNN architectures have also been adapted to 3D, e.g., a 3D version of the Inception-v4 network [280]. Liu et al. [281] used 3D-AlexNet and 3D-ResNet as comparative models. Wang et al. [282] proposed a probability-based ensemble of densely connected neural networks with 3D kernels to maximize information flow through the network. This study also suggested ensemble learning as a potential route to higher performance, which is detailed in Section 8.2.
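The all convolutional classification head described above can be sketched as follows. The sketch assumes a 1 × 1 × 1 kernel for the class convolution (the cited studies may use other kernel sizes), global average pooling, and a final softmax; `all_conv_head` and its arguments are hypothetical names. With a 1 × 1 × 1 kernel and average pooling, this head is mathematically equivalent to global average pooling followed by a linear layer, because both operations are linear and therefore commute.

```python
import numpy as np


def all_conv_head(features, weights, bias):
    """Classification head of an all convolutional 3D CNN.

    features: (D, H, W, C) feature maps from the last convolutional block.
    weights: (C, K) weights of a 1x1x1 convolution mapping C channels to K class channels.
    bias: (K,) bias. Returns class probabilities of shape (K,).
    """
    class_maps = features @ weights + bias      # 1x1x1 conv: per-voxel linear map over channels
    logits = class_maps.mean(axis=(0, 1, 2))    # global average pooling of each class channel
    exp = np.exp(logits - logits.max())         # numerically stable softmax
    return exp / exp.sum()
```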

The additional dimension of a 3D-CNN does not have to be spatial. An example is dynamic functional connectivity networks (FCNs), which are 2D representations of how the blood oxygen level-dependent (BOLD) signals of brain ROIs co-vary, computed repeatedly over time. With FCNs as input, the third dimension of the 3D-CNN becomes a temporal dimension in addition to the 2D spatial representation. By convolving along the temporal dimension, the network combines temporal and spatial connectivity into more dynamic FCNs that can characterize time-dependent interactions and account for the different contributions of individual time points [186].
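One common way to build dynamic FCNs is sliding-window correlation: Pearson correlations between ROI BOLD time series are computed within overlapping windows, giving a stack of ROI × ROI matrices with time as the third axis, which a 3D-CNN can convolve along. This is a sketch under that assumption; the window length, step, and the function name `dynamic_fc` are illustrative, and [186] may construct its FCNs differently.

```python
import numpy as np


def dynamic_fc(bold, window, step):
    """Sliding-window functional connectivity.

    bold: (T, R) BOLD time series for R regions of interest.
    Returns an array of shape (num_windows, R, R): one Pearson correlation
    matrix per window, i.e. a 3D tensor whose first axis is time.
    """
    T, R = bold.shape
    starts = range(0, T - window + 1, step)
    # rowvar=False: columns (ROIs) are the variables, rows (time points) the observations.
    return np.stack([np.corrcoef(bold[s:s + window], rowvar=False) for s in starts])
```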

The additional dimension of a 3D-CNN entails a substantially larger number of model parameters and a higher computational cost. To reduce the computational cost, Spasov et al. [283] applied parameter-efficient 3D separable convolution, in which the standard 3D convolution is factorized into a depth-wise convolution and a 1 × 1 × 1 point-wise convolution. Liu, Yadav, Fernandez-Granda and Razavian [281] performed comparative and ablation experiments and found that instance normalization generalizes better than batch normalization. This study also found that early spatial downsampling harms model performance, indicating that a wider CNN architecture is more beneficial than additional layers and that small initial kernel sizes are preferable.
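The saving from separable convolution is simple arithmetic. The sketch below counts weights and biases for one standard 3D convolutional layer and for its separable counterpart, assuming one depth-wise filter per input channel (a depth multiplier of 1) and a bias in both stages; the helper names are hypothetical.

```python
def conv3d_params(c_in, c_out, k):
    """Standard 3D convolution: one k x k x k x c_in kernel plus a bias per output channel."""
    return k ** 3 * c_in * c_out + c_out


def separable_conv3d_params(c_in, c_out, k):
    """Depth-wise k x k x k convolution (one filter per input channel) + 1 x 1 x 1 point-wise convolution."""
    depthwise = k ** 3 * c_in + c_in
    pointwise = c_in * c_out + c_out
    return depthwise + pointwise
```

For a layer with 64 input channels, 128 output channels, and 3 × 3 × 3 kernels, this gives 221,312 parameters for the standard convolution and 10,112 for the separable one, roughly a 22-fold reduction.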

Liu, Cheng, Wang, Wang and Initiative [170] proposed combining an ensemble of 3D-CNNs and 2D-CNNs sequentially, where the 3D-CNNs capture spatial correlations in the 3D input and an ensemble of cascaded 3D-CNN-generated feature maps is used as input for the 2D-CNNs. While most of the studies above focus on categorical classification, disease progression prediction, and prediction of clinical measures, some deep learning studies serve other purposes, e.g., segmentation and image processing, which are potentially valuable for future studies of Alzheimer's and related diseases. Yang et al. [284] proposed a 3D-CNN with a residual learning architecture for hippocampal segmentation that is significantly more efficient than conventional algorithms. Pang et al. [285] combined a semi-supervised autoencoder with local linear mapping. With the development and availability of more powerful hardware in the past decade, 3D-CNNs have become increasingly popular within the reviewed literature.

7.2. Recurrent Neural Networks (RNN)

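Longitudinal AD data provide multiple data instances per subject, which supplies ground truth for MCI conversion and time-to-conversion. However, the temporal nature of a series of instances is often not exploited by DNN and CNN architectures. Recurrent neural networks incorporate the temporal domain by processing a sequence of inputs step by step, with a state that is updated at each time step by the same set of weights. The fundamental concept was formulated by Goodfellow et al. [227] as:

$$ h^{(t)} = f\left(h^{(t-1)}, x^{(t)}; \theta\right) $$

where $h^{(t)}$ and $x^{(t)}$ represent the state and input at time step $t$, and $\theta$ denotes the parameters shared across all time steps. The state can be unfolded with respect to the past sequence:

$$ h^{(t)} = g^{(t)}\left(x^{(t)}, x^{(t-1)}, \ldots, x^{(2)}, x^{(1)}\right) = f\left(h^{(t-1)}, x^{(t)}; \theta\right) $$

where $g^{(t)}$ is a function of the whole past sequence. This unfolding allows a vanilla RNN to learn a single model $f$ that operates on all time steps and sequence lengths. Gated RNNs use more complex units with memory components, such as long short-term memory (LSTM). An LSTM unit is composed of a memory cell and three gates. The gates can be formulated as:

$$ \gamma^{(t)} = \sigma\left(b^{\gamma} + U^{\gamma} x^{(t)} + W^{\gamma} h^{(t-1)}\right), \qquad \gamma \in \{f, g, q\} $$

where, for each gate $\gamma$, $\sigma$ represents the sigmoid activation, $b^{\gamma}$ is the bias, and $W^{\gamma}$ and $U^{\gamma}$ represent the recurrent weight and input weight matrices, respectively. The cell state and update protocol can be formulated as:

$$ s^{(t)} = f^{(t)} \odot s^{(t-1)} + g^{(t)} \odot \sigma\left(b + U x^{(t)} + W h^{(t-1)}\right), \qquad h^{(t)} = \tanh\left(s^{(t)}\right) \odot q^{(t)} $$

where $s^{(t)}$ is the cell state, $\odot$ denotes element-wise multiplication, $f^{(t)}$ is the forget gate, $g^{(t)}$ is the external input gate, and $q^{(t)}$ is the output gate. The recurrent weight and input weight of the memory cell are represented as $W$ and $U$, respectively.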
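The gate and cell equations above translate almost line by line into NumPy. In this sketch, the cell input uses $\sigma$ exactly as written in the equations; many library implementations use tanh at that point instead. Parameter names mirror the symbols ($U$ input weights, $W$ recurrent weights, $b$ biases), while `init_lstm` and its small random initialization are hypothetical. `lstm_forward` applies the same parameters at every time step, which is the parameter sharing expressed by $f(\cdot; \theta)$.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def init_lstm(n_in, n_hidden, seed=0):
    """Hypothetical random initialization for gates f, g, q and the cell input 'c'."""
    rng = np.random.default_rng(seed)
    p = {}
    for name in ("f", "g", "q", "c"):
        p["U" + name] = rng.normal(0.0, 0.1, (n_hidden, n_in))      # input weights U
        p["W" + name] = rng.normal(0.0, 0.1, (n_hidden, n_hidden))  # recurrent weights W
        p["b" + name] = np.zeros(n_hidden)                          # biases b
    return p


def lstm_step(p, x, h_prev, s_prev):
    def unit(name):
        # sigma(b + U x + W h_prev): shared form of every gate and of the cell input
        return sigmoid(p["b" + name] + p["U" + name] @ x + p["W" + name] @ h_prev)

    f, g, q = unit("f"), unit("g"), unit("q")  # forget, external input, and output gates
    s = f * s_prev + g * unit("c")             # cell state update
    h = np.tanh(s) * q                         # gated output, i.e. the new hidden state
    return h, s


def lstm_forward(p, xs, n_hidden):
    """Run the same cell (same parameters) over every time step of xs, shape (T, n_in)."""
    h = np.zeros(n_hidden)
    s = np.zeros(n_hidden)
    hs = []
    for x in xs:
        h, s = lstm_step(p, x, h, s)
        hs.append(h)
    return np.stack(hs)
```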

LSTM has been applied to brain network graph matrices to extract adjacent positional features from fMRI data; the combination of LSTM and an extreme learning machine (ELM) showed a slight improvement over a CNN-ELM model in classification tasks [185]. The gated recurrent unit (GRU) is another gated RNN with a structure similar to LSTM, but it merges the forget and input gates into a single update gate, adds a reset gate, and has no separate memory cell or output gate. It therefore has fewer parameters than LSTM while still capturing long-term temporal dependencies. GRU has been used for classification with temporal clustering of actigraphy time series obtained by monitoring the activity of NC, MCI, and AD subjects. In this application, features extracted with a CNN and with Toeplitz inverse covariance-based clustering were combined and fed into the recurrent neural network [286].
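For comparison, a GRU step can be sketched with the same $U$/$W$/$b$ notation used for the LSTM equations. This follows one common GRU formulation (conventions differ on whether $z$ or $1 - z$ weights the previous state); `init_gru` and `gated_rnn_params` are hypothetical helpers, and the parameter count assumes one bias vector per weight block (some libraries keep two).

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def init_gru(n_in, n_hidden, seed=0):
    rng = np.random.default_rng(seed)
    p = {}
    for name in ("z", "r", "h"):  # update gate, reset gate, candidate state
        p["U" + name] = rng.normal(0.0, 0.1, (n_hidden, n_in))      # input weights
        p["W" + name] = rng.normal(0.0, 0.1, (n_hidden, n_hidden))  # recurrent weights
        p["b" + name] = np.zeros(n_hidden)
    return p


def gru_step(p, x, h_prev):
    z = sigmoid(p["bz"] + p["Uz"] @ x + p["Wz"] @ h_prev)  # update gate (forget + input roles)
    r = sigmoid(p["br"] + p["Ur"] @ x + p["Wr"] @ h_prev)  # reset gate
    h_cand = np.tanh(p["bh"] + p["Uh"] @ x + p["Wh"] @ (r * h_prev))
    # No separate memory cell or output gate: the hidden state is interpolated directly.
    return (1.0 - z) * h_prev + z * h_cand


def gated_rnn_params(n_in, n_hidden, n_blocks):
    """Parameters of a gated RNN layer with n_blocks (U, W, b) sets: 4 for LSTM, 3 for GRU."""
    return n_blocks * (n_hidden * n_in + n_hidden * n_hidden + n_hidden)
```

With 10 inputs and 20 hidden units, this count gives 2,480 parameters for an LSTM layer and 1,860 for a GRU layer.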

Bidirectional GRU (BGRU) is a GRU variant that processes the input sequence both forwards and backwards. It has been applied in a similar manner to MLP- and CNN-extracted features in multiple studies [287,288]. Apart from their use as a classification component replacing traditional MLP or machine learning classifiers, RNNs can also be utilized for structural data. One study combined CNN and RNN by feeding in a series of 2D slices from 3D scans to capture spatial features: the CNN component captures features within single slices, while the BGRU treats the sequence of CNN-extracted features as a series to extract inter-slice features, which are then used as input for an MLP classifier [289]. Similarly, instead of using features extracted from slice-level data, LSTM variants have been modified to suit 3D structural data, e.g., a 3D convolutional LSTM that encodes representations extracted by a 3D-CNN [290].
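The bidirectional slice-sequence idea can be sketched with a plain tanh RNN standing in for the GRU cell to keep the example short; swapping in a GRU step would not change the bidirectional logic. The rows of `slice_features` stand in for per-slice features from a 2D-CNN (assumed, not computed here), and all function names and the initialization scale are hypothetical.

```python
import numpy as np


def init_rnn(n_in, n_hidden, seed=0, scale=0.5):
    rng = np.random.default_rng(seed)
    return {
        "U": rng.normal(0.0, scale, (n_hidden, n_in)),      # input weights
        "W": rng.normal(0.0, scale, (n_hidden, n_hidden)),  # recurrent weights
        "b": np.zeros(n_hidden),
    }


def run_rnn(p, xs):
    """Plain tanh RNN over a sequence xs of shape (T, n_in); a stand-in for a GRU."""
    h = np.zeros(p["b"].shape[0])
    states = []
    for x in xs:
        h = np.tanh(p["b"] + p["U"] @ x + p["W"] @ h)
        states.append(h)
    return np.stack(states)


def bidirectional(p_fwd, p_bwd, slice_features):
    """Concatenate forward and backward states for each slice: shape (T, 2 * n_hidden)."""
    fwd = run_rnn(p_fwd, slice_features)
    bwd = run_rnn(p_bwd, slice_features[::-1])[::-1]  # re-align backward states with slice order
    return np.concatenate([fwd, bwd], axis=1)
```

An inter-slice summary for an MLP classifier could, for example, concatenate the forward state at the last slice with the backward state at the first slice, since each of these has seen every slice.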

Across the applications of RNNs in deep learning, their key characteristic is the ability to model temporal data. One focus of interest is therefore the combination of spatial and temporal information. Wang et al. [291] combined the two types of information for fMRI data by running multiple LSTMs in parallel on features corresponding to multiple time series of ROI BOLD signals, together with convolutional components for time-series segments. While most studies formulate MCI prediction as a classification task [292], one study used features extracted from an LSTM-based autoencoder for prognosis modeling with a Cox regression model [293]. For time-series or sequential data, sample completeness is a significant practical challenge: because longitudinal data are difficult to collect, many datasets have missing or delayed time points. Beyond classical data imputation, Nguyen et al. [294] used their proposed minimal RNN model to impute missing data by filling them with model predictions. This study achieved exceptional results in the TADPOLE longitudinal challenge for the 6-year predictions of ADAS-Cog13 and ventricular volume. These studies show the effectiveness of RNNs for the temporal modeling of AD and related diseases. As the amount of longitudinal data collected across projects continues to grow, RNNs are likely to have an increasing influence on deep learning approaches in this field.
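The model-filling strategy can be illustrated independently of any particular RNN. In this sketch, `predict_next` is a hypothetical one-step predictor; in [294] the predictions come from the minimal RNN itself, whose hidden state also carries the subject's history, which this simplified version does not model. Missing visits are marked with NaN, the first visit is assumed to be observed, and only the missing features of a visit are replaced.

```python
import numpy as np


def model_fill(series, predict_next):
    """Replace missing values (NaN) with the model's own one-step predictions.

    series: (T, F) array of T visits and F features; row 0 must be fully observed.
    predict_next: callable mapping the previous (observed or filled) row to a prediction.
    """
    filled = np.array(series, dtype=float)  # copy, so the input is left untouched
    for t in range(1, len(filled)):
        missing = np.isnan(filled[t])
        if missing.any():
            pred = predict_next(filled[t - 1])
            filled[t, missing] = pred[missing]  # keep any features that were observed
    return filled
```

For example, with a trivial predictor `lambda prev: prev + 1.0`, a visit missing only its first feature receives the previous value plus one for that feature, while its observed second feature is kept.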

7.3. Graph and Geometric Neural Networks (GNNs)