Autonomous Underwater Vehicle Adaptive Altitude Control Framework to Improve Image Quality

Abstract

1. Introduction

2. Related Work

2.1. Dynamic Plan Generation

2.2. Underwater Image Quality

2.2.1. Traditional Techniques

2.2.2. AI Techniques

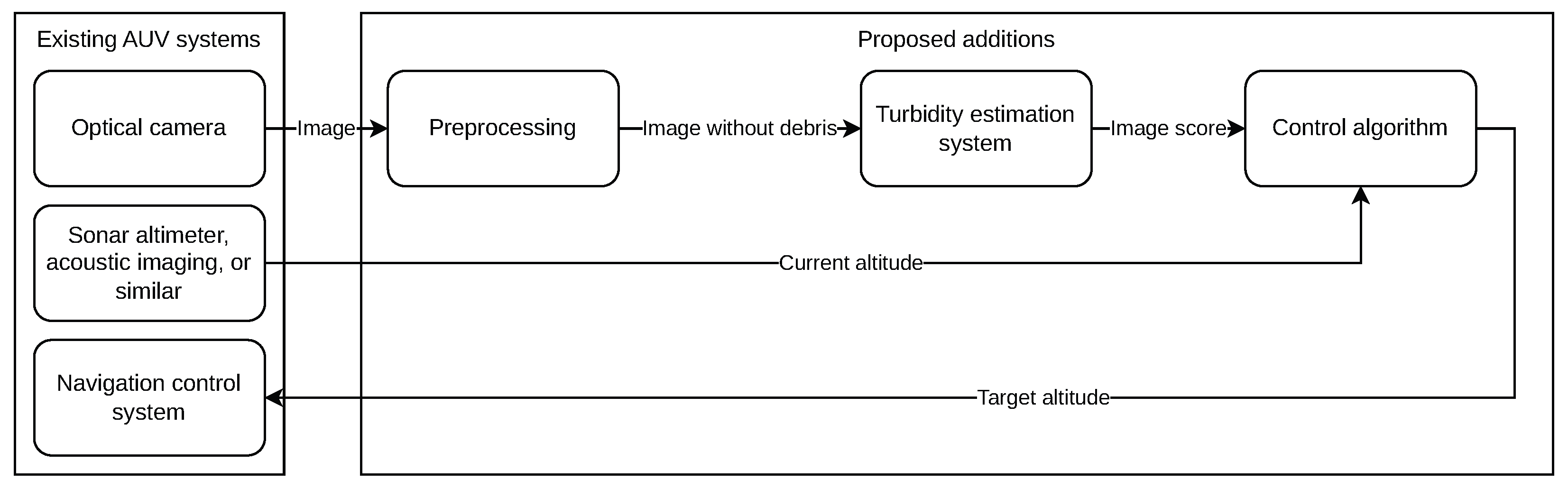

3. Problem Scenario and Proposed Methods

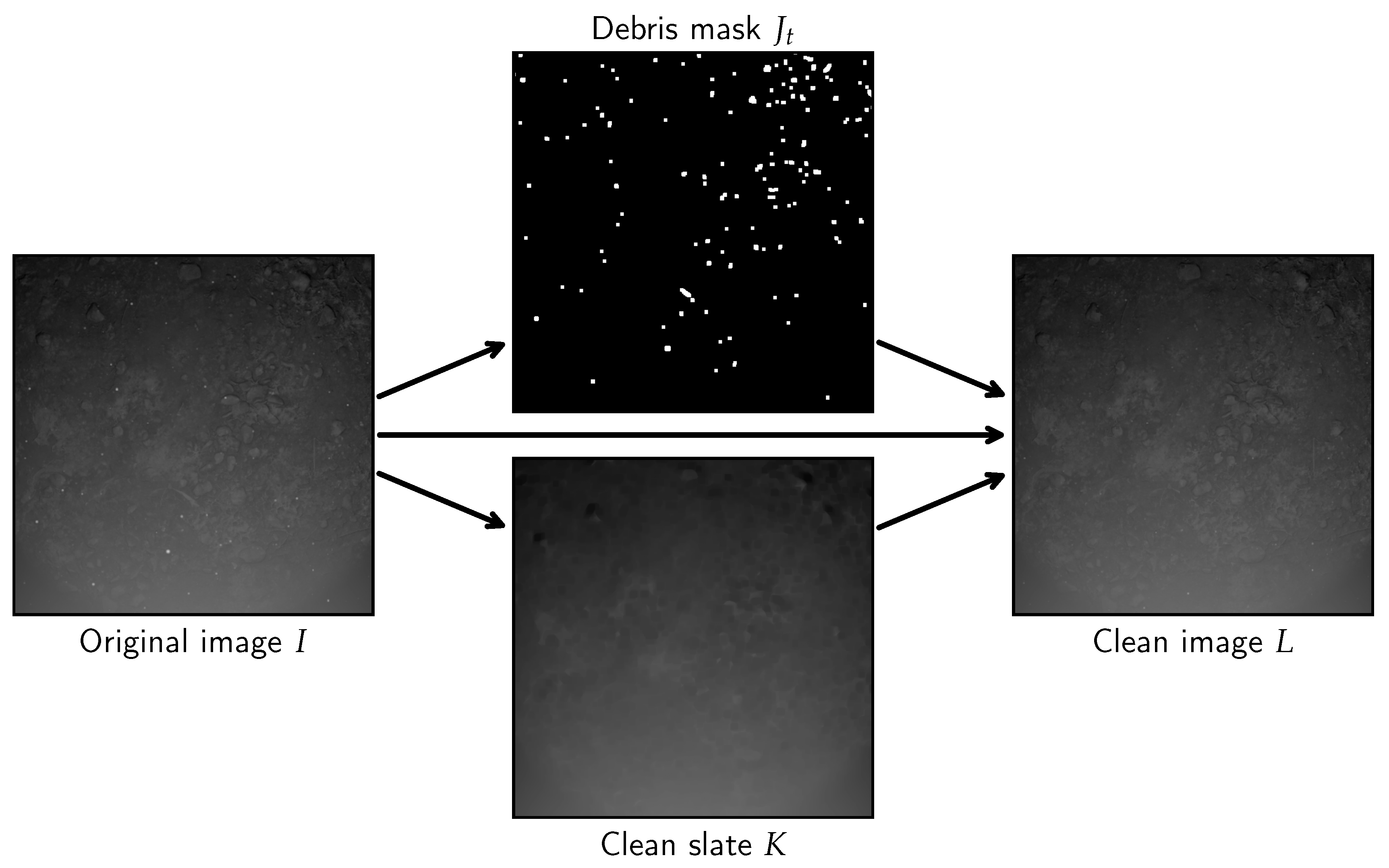

3.1. Image Preprocessing

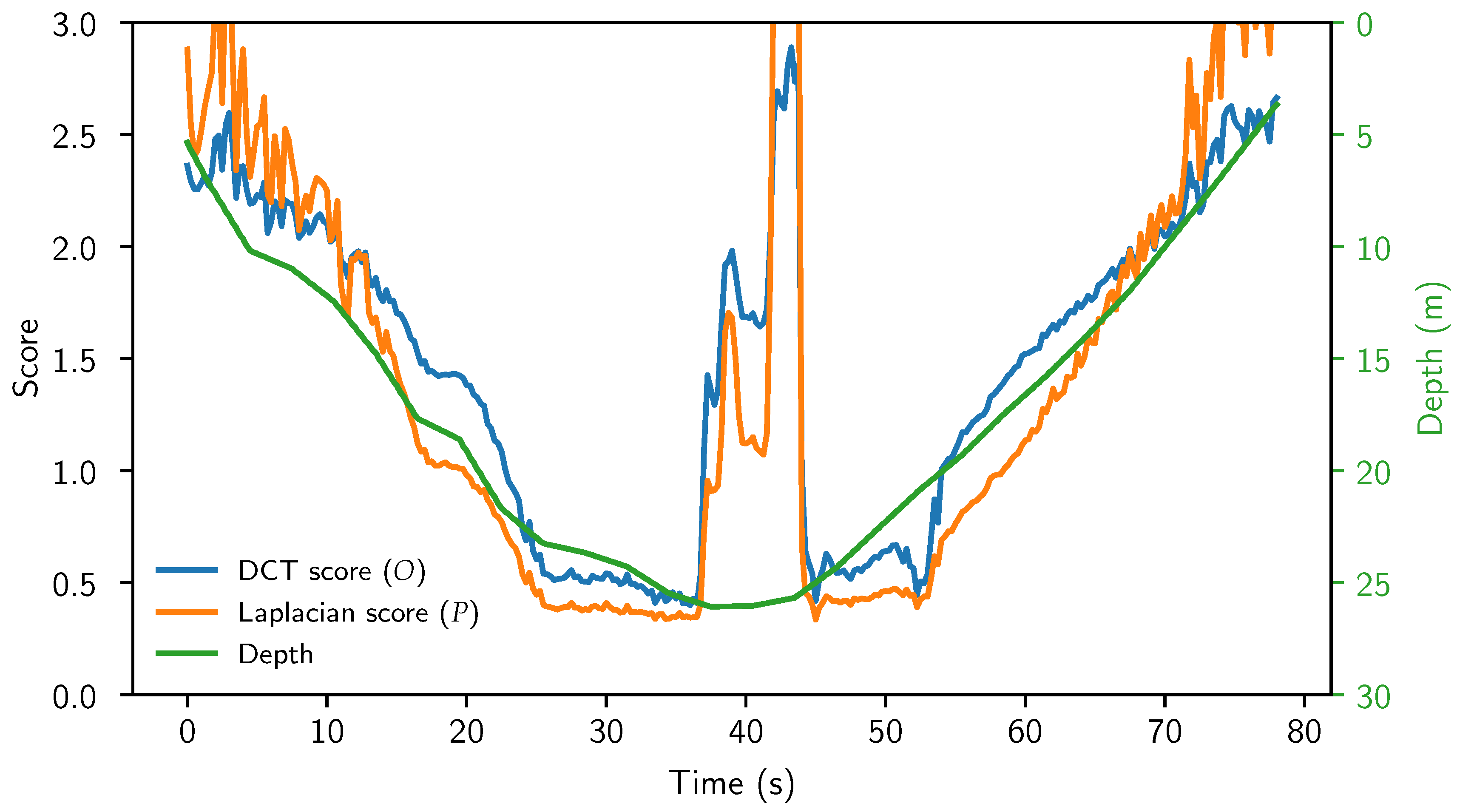

3.2. Image Quality Estimation

3.2.1. DCT Based Method

3.2.2. Laplacian Based Method

3.2.3. Proposed Method for Image Quality Estimation (D-L Method)

3.2.4. Control Algorithm

3.3. Hyperparameter Selection

3.3.1. Debris Removal Related Parameters

3.3.2. Image Quality Estimation Parameters

3.3.3. Control Parameters

4. Evaluation and Results

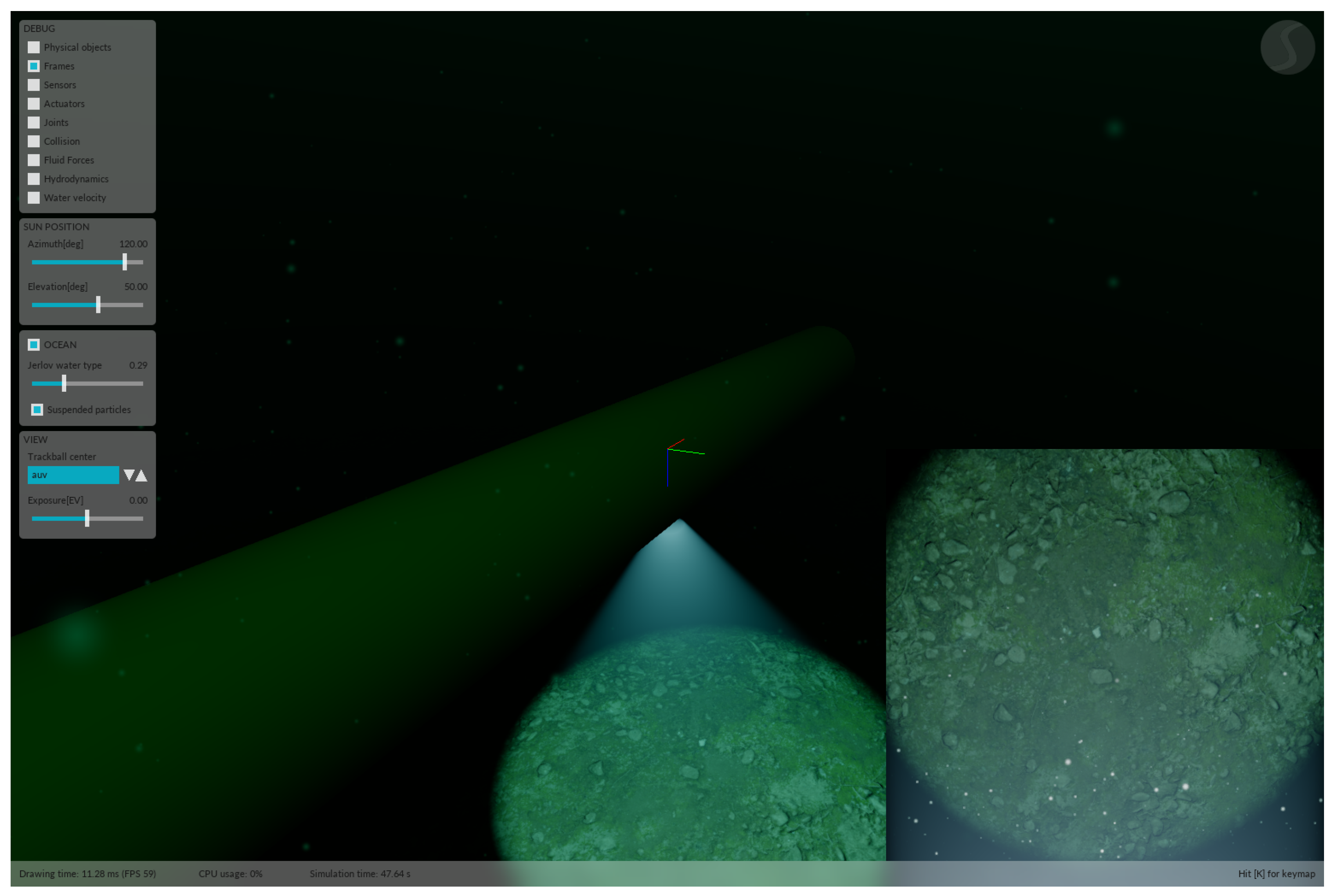

4.1. Evaluation Environment

1. It must support simulation of underwater environments.
2. It must be able to produce images with physically accurate turbidity effects.
3. It must support dynamic (movable) underwater lighting.
4. It would ideally be compatible with the ROS (Robot Operating System).

4.2. Evaluation Methodology

4.3. Evaluation Results

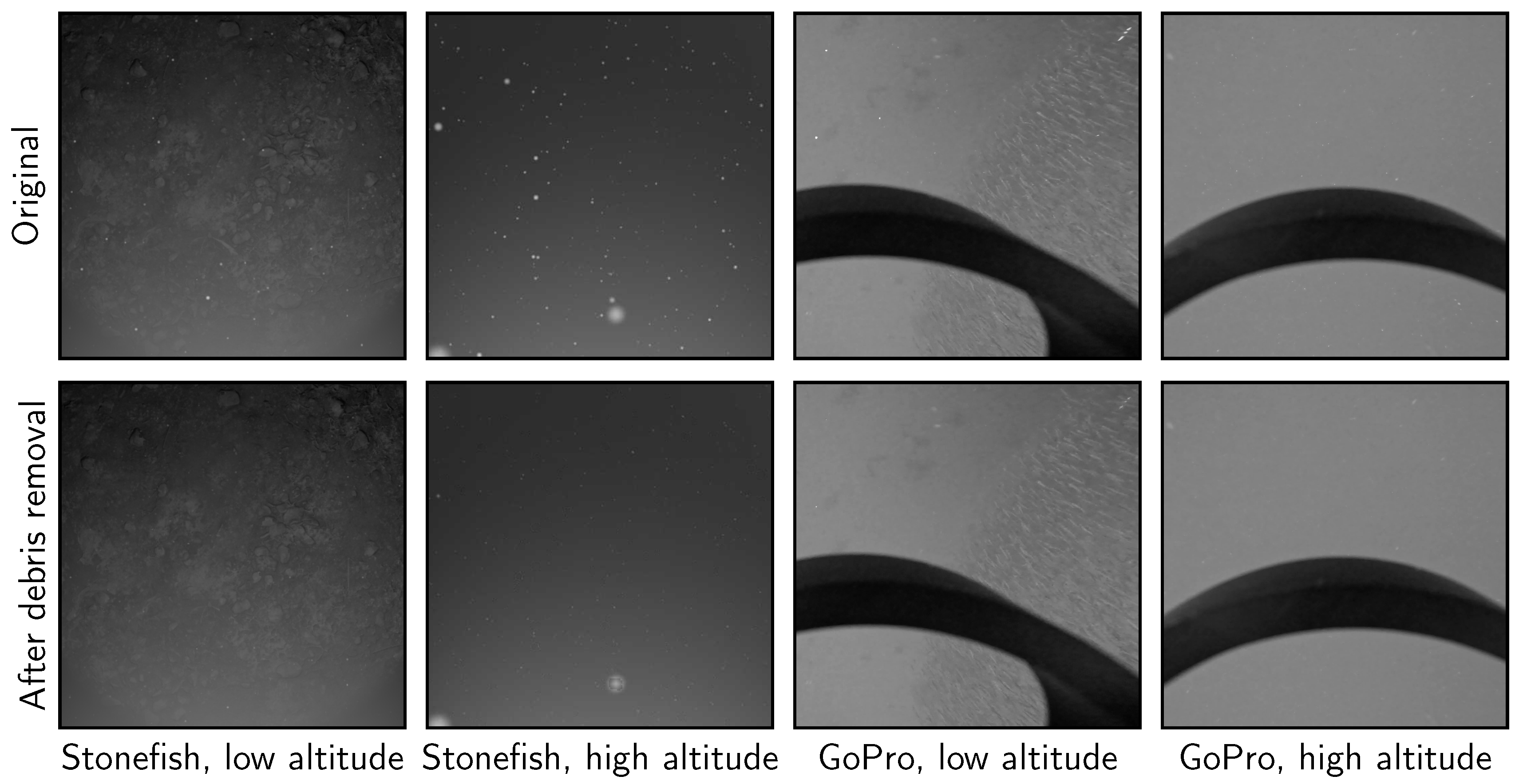

4.3.1. Debris Removal

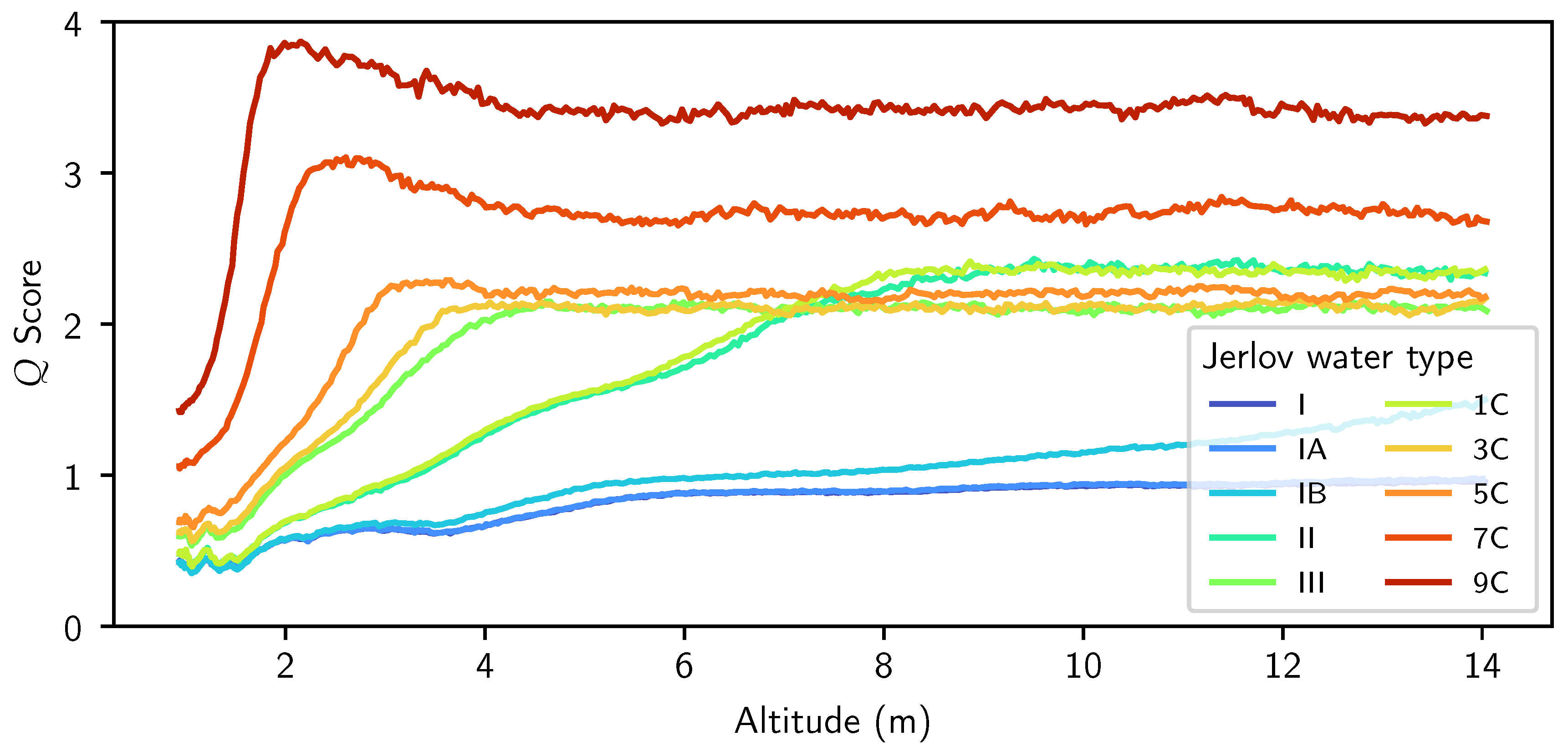

4.3.2. Image Quality Estimation

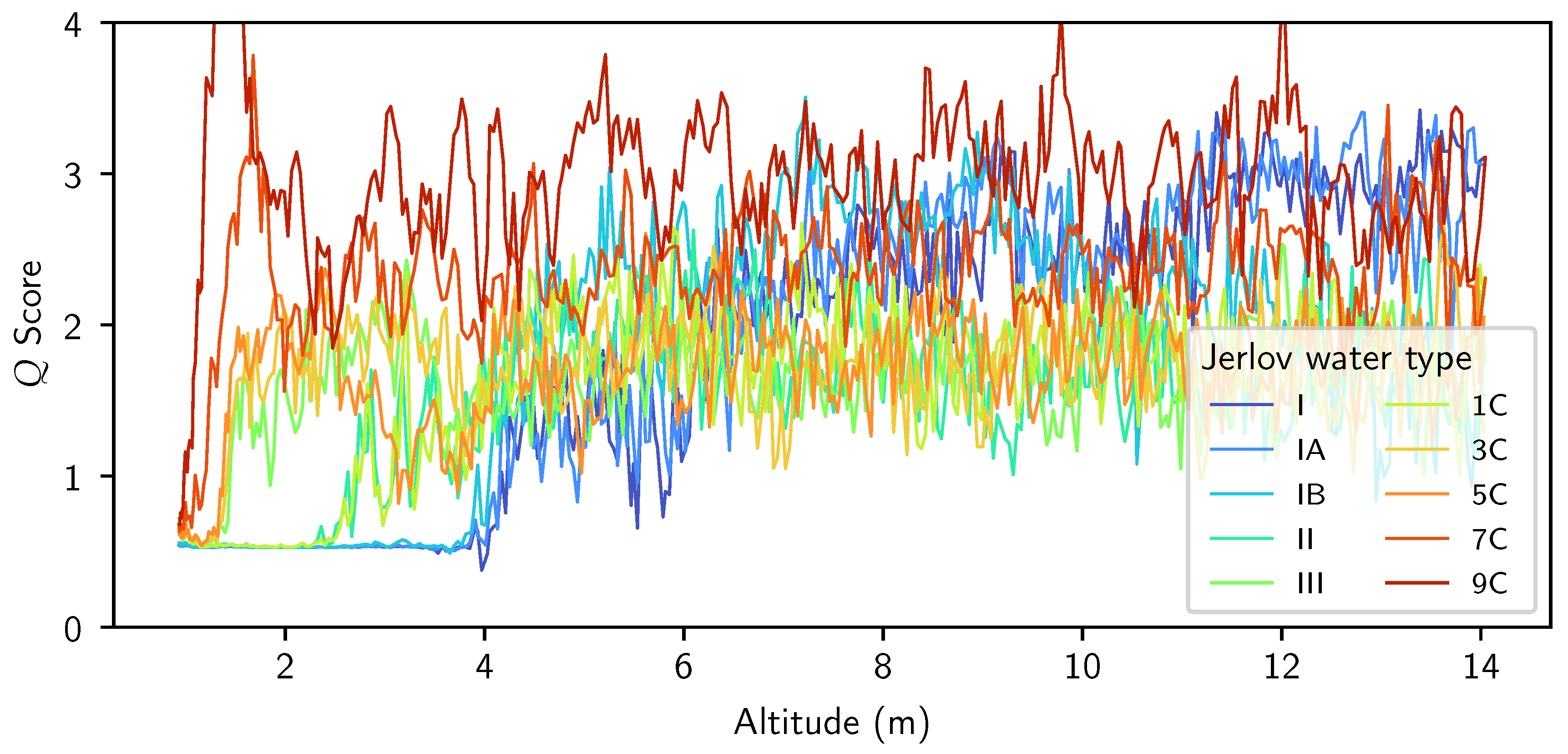

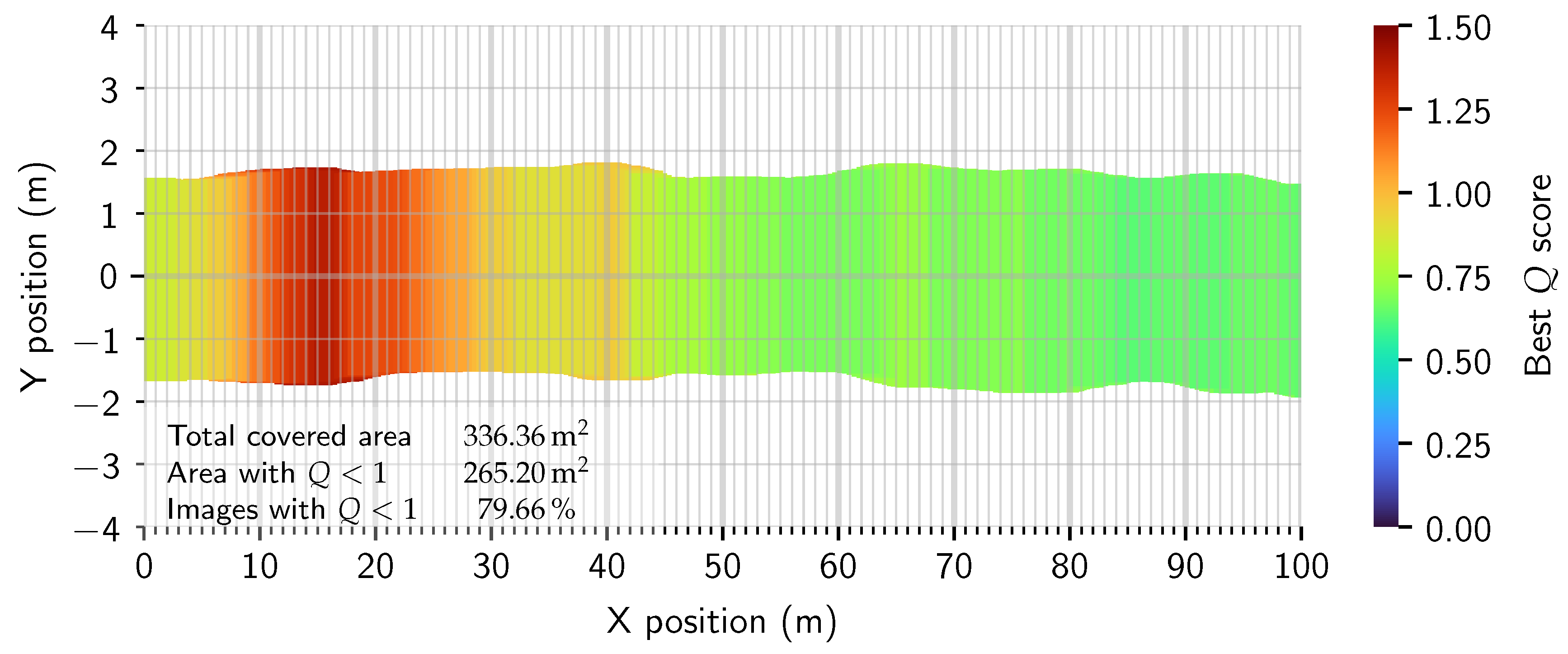

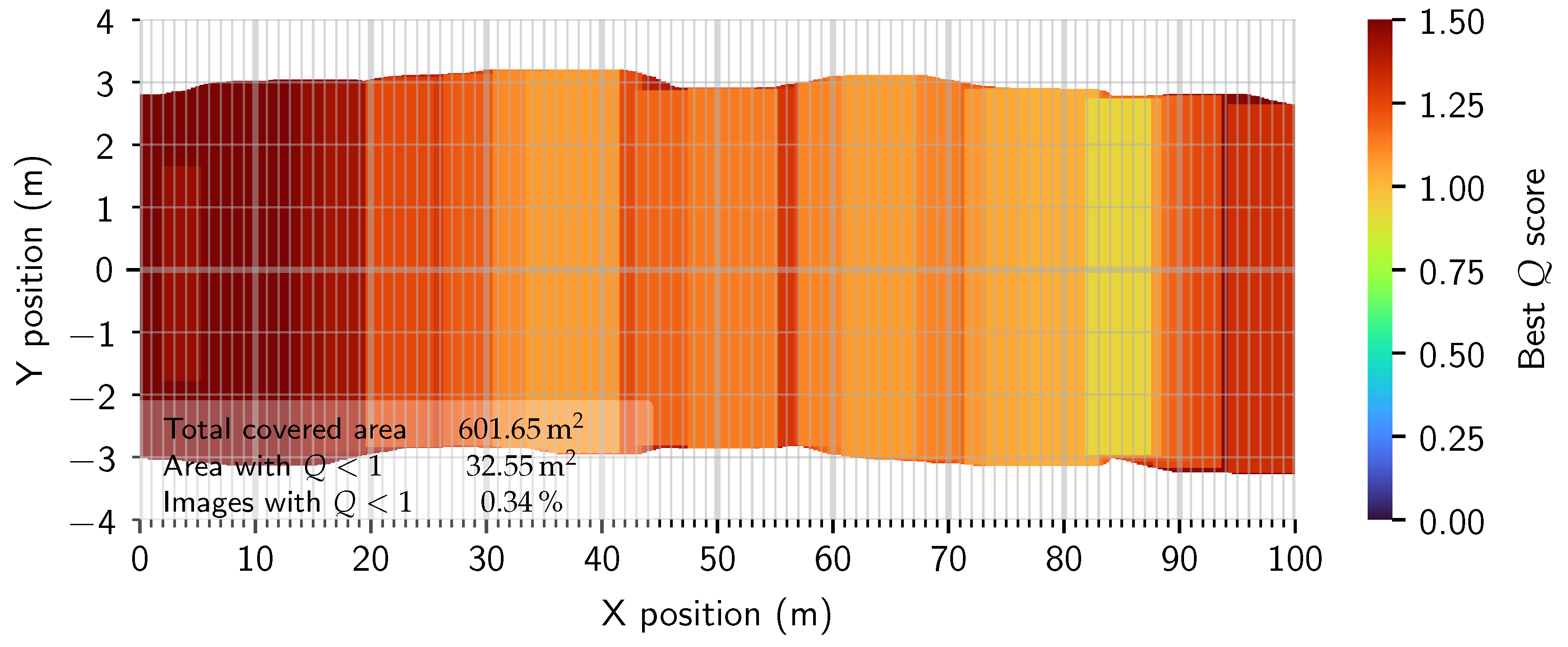

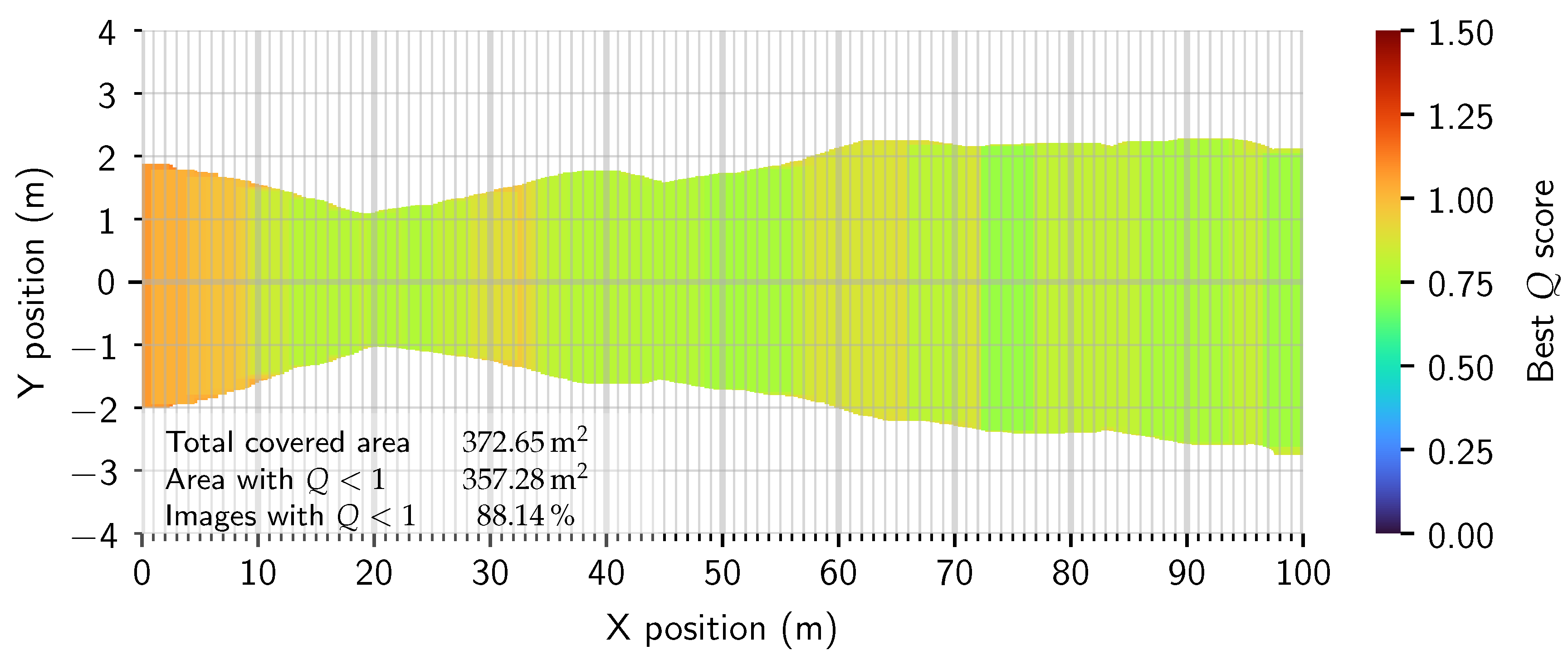

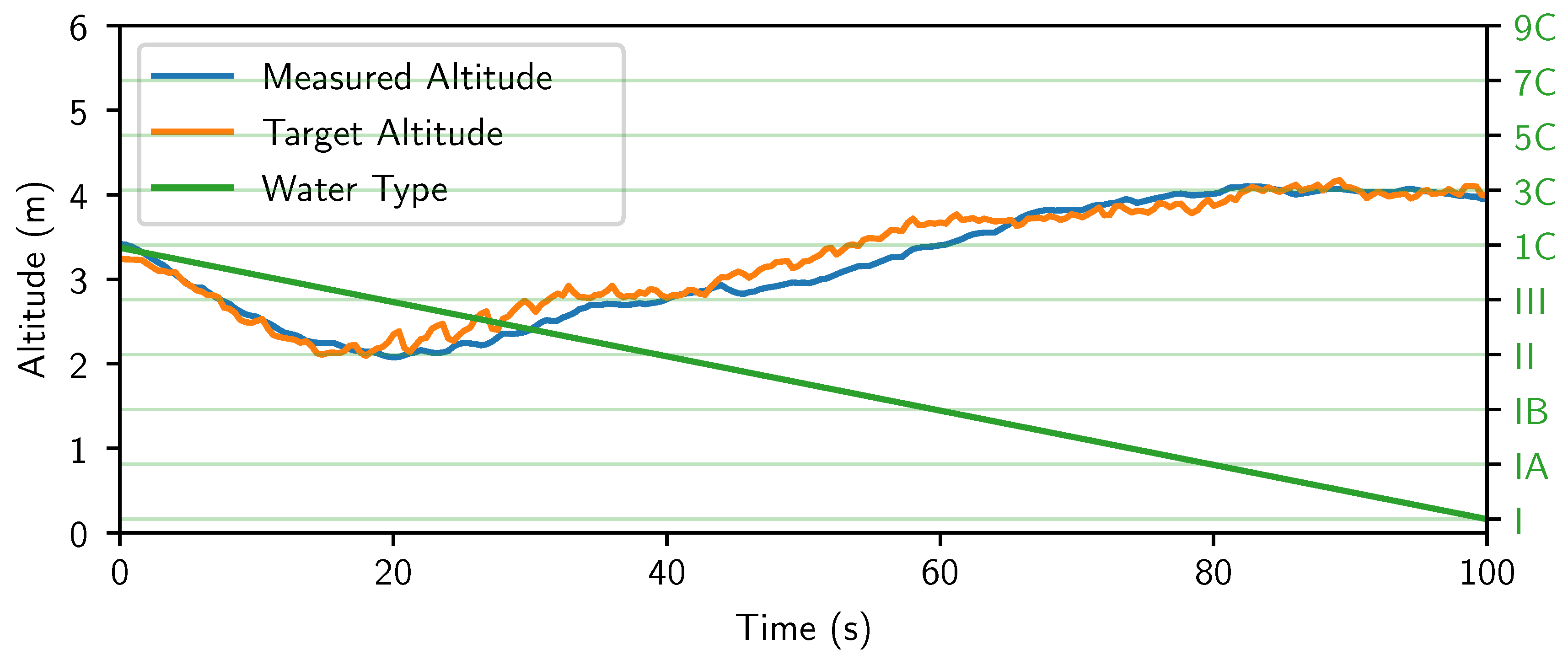

4.3.3. Control System

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AMC | Australian Maritime College |
| AUV | Autonomous Underwater Vehicle |
| CNN | Convolutional Neural Network |
| CTD | Conductivity, Temperature, Depth |
| DCP | Dark Channel Prior |
| DCT | Discrete Cosine Transform |
| FOV | Field of View |
| RGB | Red, Green, Blue |
| ROS | Robot Operating System |

Appendix A

| Name | Description | Value |
|---|---|---|
| I | Image from AUV camera | Sensor reading (512 × 512 monochrome image) |
| J | Grayscale image with values corresponding to the significance of debris in I | Calculated |
|  | Variant of J thresholded at t | Calculated |
| K | Blurred and eroded variant of I, missing most details | Calculated |
| L | Clean variant of I with debris removed | Calculated |
| M | Frequency distribution of L | Calculated |
| N | 1D frequency data consisting of the mean of the top proportion of values in each bin of M | Calculated |
| O | Image score using the frequency-domain technique | Calculated |
| P | Image score using the Laplacian technique | Calculated |
| Q | Final image score | Calculated |
| a | Current measured altitude | Sensor reading |
|  | Rough target altitude | Calculated |
|  | Minimum safe altitude | 2 m |
|  | Maximum safe altitude | 15 m |
|  | Safe rough target altitude | Calculated |
|  | Final target altitude | Calculated |
| b | Size of the rolling average | 10 samples (4 s at 2.5 Hz) |
| t | Threshold used to produce the thresholded variant of J | 8/255 |
| w | Weight function used to calculate O | 394 px Blackman window shifted right by 26 px |
|  | Blur kernel for I | 15 px window |
|  | Erosion kernel for I | 3 px window |
|  | Dilation kernel for I | 5 px window |
|  | Blur kernel for K | 5 px window |
|  | Erosion kernel for K | 7 px window |
|  | Blur kernel for M | 11 px window |
|  | Blur kernel for M | 25 px window |
|  | Width of I | Sensor reading (512 px) |
|  | Proportion of values kept in each bin when computing N | 0.1 (10%) |
|  | Correction factor for the DCT-based algorithm | 15 |
|  | Correction bias for the DCT-based algorithm | 0 |
|  | Correction factor for the Laplacian-based algorithm | 2.1 |
|  | Correction bias for the Laplacian-based algorithm | 0.2 |
|  | Weight given to the lower value in the final averaging that yields Q | 0.7 |
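Appendix A fixes the constants of the scoring stage but not every formula that uses them. The Python sketch below shows one plausible reading, assuming numpy is available. Its assumptions are: M is a 2D array whose columns are the frequency bins; N is the mean of the top 10% of values in each column; w is a 394-sample Blackman window starting 26 bins from the left of a 512-bin axis; O is the w-weighted mean of N; each raw score is corrected as factor × score + bias; and Q gives weight 0.7 to the lower corrected score and 0.3 to the higher one. All function names are hypothetical, the blur kernels applied to M are omitted, and this is not the authors' implementation.

```python
import numpy as np

# Constants taken from Appendix A.
IMAGE_WIDTH = 512            # width of I (px)
TOP_PORTION = 0.1            # portion of values kept in each bin when forming N
WINDOW_LEN = 394             # length of the Blackman window w (px)
WINDOW_SHIFT = 26            # right shift of w (px); assumed to be an offset from bin 0
DCT_FACTOR, DCT_BIAS = 15.0, 0.0
LAP_FACTOR, LAP_BIAS = 2.1, 0.2
LOWER_WEIGHT = 0.7           # weight given to the lower corrected score in Q


def weight_function(width=IMAGE_WIDTH, length=WINDOW_LEN, shift=WINDOW_SHIFT):
    """Hypothetical w: Blackman window occupying [shift, shift + length), zero elsewhere."""
    if shift + length > width:
        raise ValueError("shifted window does not fit in the given width")
    w = np.zeros(width)
    w[shift:shift + length] = np.blackman(length)
    return w


def top_portion_means(m, portion=TOP_PORTION):
    """Hypothetical N: mean of the largest `portion` of values in each column (bin) of M."""
    m = np.asarray(m, dtype=float)
    if m.ndim != 2:
        raise ValueError("M is expected to be a 2D array")
    k = max(1, int(round(portion * m.shape[0])))
    # Sort every column in ascending order and average its k largest entries.
    return np.sort(m, axis=0)[-k:, :].mean(axis=0)


def frequency_score(m):
    """Hypothetical O: mean of N weighted by w."""
    n = top_portion_means(m)
    w = weight_function(width=n.shape[0])
    return float(np.dot(w, n) / w.sum())


def corrected(score, factor, bias):
    """Assumed linear correction applied to a raw score."""
    return factor * score + bias


def final_score(o, p, lower_weight=LOWER_WEIGHT):
    """Hypothetical Q: weighted mean that gives more weight to the lower corrected score."""
    o_c = corrected(o, DCT_FACTOR, DCT_BIAS)
    p_c = corrected(p, LAP_FACTOR, LAP_BIAS)
    low, high = min(o_c, p_c), max(o_c, p_c)
    return lower_weight * low + (1.0 - lower_weight) * high
```

Under these assumptions, raw scores O = 0.02 and P = 0.1 correct to 0.3 and 0.41, so Q = 0.7 × 0.3 + 0.3 × 0.41 = 0.333. Weighting the lower score more heavily makes Q conservative: a single poor estimate pulls the final score down more than a single good estimate lifts it.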

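The altitude rows of Appendix A suggest a post-processing chain from the rough target altitude to the final target altitude. The sketch below assumes, without confirmation from the source, that the rough target is first clamped to the 2 to 15 m safe range and that the rolling average of size b = 10 (4 s at 2.5 Hz) is then taken over the clamped values. How the rough target is derived from Q is not specified here, so it is taken as an input; the class name `TargetAltitudeFilter` is hypothetical.

```python
from collections import deque

MIN_SAFE_ALTITUDE_M = 2.0    # minimum safe altitude (Appendix A)
MAX_SAFE_ALTITUDE_M = 15.0   # maximum safe altitude (Appendix A)
ROLLING_WINDOW = 10          # b: 10 samples = 4 s at 2.5 Hz


class TargetAltitudeFilter:
    """Hypothetical post-processing of the rough target altitude.

    Assumption: the rough target is clamped to the safe range first, and the
    rolling average of size b is then taken over the clamped values.
    """

    def __init__(self, min_alt=MIN_SAFE_ALTITUDE_M, max_alt=MAX_SAFE_ALTITUDE_M,
                 window=ROLLING_WINDOW):
        if not min_alt < max_alt:
            raise ValueError("min_alt must be below max_alt")
        self.min_alt = min_alt
        self.max_alt = max_alt
        # A deque with maxlen discards the oldest sample automatically.
        self.history = deque(maxlen=window)

    def safe_target(self, rough_target):
        """Clamp the rough target altitude into [min_alt, max_alt]."""
        return min(max(rough_target, self.min_alt), self.max_alt)

    def update(self, rough_target):
        """Add one rough target (one 2.5 Hz tick) and return the final target altitude."""
        self.history.append(self.safe_target(rough_target))
        return sum(self.history) / len(self.history)
```

Feeding rough targets of 1 m, 3 m and 20 m in sequence returns 2 m, 2.5 m and about 6.67 m, because the first and last values are clamped to 2 m and 15 m before being averaged. Using a `deque` with `maxlen` keeps only the latest b samples without manual bookkeeping.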
Appendix B


| Distance from start | 0 m | 20 m | 40 m | 60 m | 80 m | 100 m |
|---|---|---|---|---|---|---|
| Water type | 1C | III | II | IB | IA | I |

| Altitude | Area Covered | Area with | Images with |
|---|---|---|---|
| dynamic | 372.65 | 357.28 | 88.14% |
| 2.0 m | 205.55 | 205.55 | 100.00% |
| 2.5 m | 270.38 | 244.37 | 89.46% |
| 3.0 m | 336.36 | 265.20 | 79.66% |
| 3.5 m | 402.59 | 231.37 | 63.39% |
| 4.0 m | 469.36 | 234.19 | 52.54% |
| 4.5 m | 536.59 | 151.34 | 16.55% |
| 5.0 m | 601.65 | 32.55 | 0.34% |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Litjens, S.; King, P.; Garg, S.; Yang, W.; Amin, M.B.; Bai, Q. Autonomous Underwater Vehicle Adaptive Altitude Control Framework to Improve Image Quality. Drones 2025, 9, 608. https://doi.org/10.3390/drones9090608
