1. Introduction

Unmanned aerial vehicles (UAVs), known for their ease of deployment and versatility, have become indispensable tools in various military and civilian applications, including search and rescue missions, reconnaissance, and surveillance [

1,

2,

3]. In drone technology, a crucial application involves monitoring complex environments and dynamic targets often characterized by uncertain intentions. Active tracking of moving targets presents a significant challenge in this domain. This challenge is further intensified when tracking occurs in cluttered and a priori unknown environments. Here, the UAV must simultaneously map its surroundings, predict the target’s motion, and plan a safe, kinodynamically feasible trajectory, all under severe computational constraints. The failure of any component in this chain can lead to mission failure, highlighting the need for a robust and integrated approach.

Tracking dynamic targets presents a complex challenge, constrained by limited onboard computation, UAV dynamics, and stringent safety requirements for obstacle avoidance [

4,

5,

6]. This challenge is traditionally addressed within the framework of maneuvering target tracking. The prevailing approach in this field relies on multiple-model methods, which simultaneously employ a set of models (and corresponding filters) to cover the possible dynamic behaviors of a target, as comprehensively surveyed by Li and Jilkov [

7].

Such filtering-based approaches form the core of many practical Electro-Optical Systems (EOSs) for UAVs, as demonstrated by Kim et al. [

8], who implemented a Kalman filter for image tracking and real-time 3D target localization on a small UAV. Their work exemplifies the successful application of classic estimation theory and also highlights the inherent system complexity and computational burden associated with achieving high-quality imaging, tracking, and measurement under SWaP constraints. To circumvent the dependency on explicit dynamic models, an alternative paradigm formulates the problem as a reactive control task.

Vision-based systems, for instance, achieve autonomous tracking by using visual trackers like LCT (Long-Term Correlation Tracking) with pixel-level feedback [

9,

10,

11], effectively bypassing the need for explicit state estimation via filtering. For instance, Kumar et al. [

12] demonstrated the detection, localization, and tracking of spherical targets using only onboard sensors. The primary focus of these approaches is the visual tracking scheme and the design of UAV control laws. By leveraging specific tracking strategies, they can achieve real-time performance. A fundamental limitation of these methods, however, is their general lack of environmental perception, which prevents them from incorporating critical safety and dynamic feasibility constraints. Regarding target prediction methodologies, some approaches employ Kalman filters integrated with coarse-grained motion models to forecast future trajectories [

13,

14,

15]; however, their reliability is often limited by model inaccuracies. To address this limitation, hybrid estimation methods, such as the Interacting Multiple Model (IMM) algorithm, have been developed to better handle target maneuverability and nonlinearities [

16,

17]. Alternatively, intent-free planning methods have been proposed that predict target motion through polynomial or Bézier curve regression [

18,

19]. A significant drawback of such methods is their susceptibility to the Runge phenomenon and catastrophic failure during boundary extrapolation.

To address these limitations, researchers use the hierarchical framework method to address these challenges [

20,

21]. Hierarchical motion planning involves an advanced geometric path planner for initial path generation and a low-level time parameterization scheme for trajectory optimization. The advanced geometric path planner identifies an obstacle-free path to satisfy primary geometric constraints. Subsequently, the low-level time parameterization method accounts for drone dynamics and generates a time-parameterized trajectory. Thus, the advanced geometric path planner, often called front-end path planning, complements the low-level time parameterization, known as back-end trajectory optimization [

22,

23]. For rotor-based unmanned aerial vehicles with non-trivial dynamics, generating trajectories directly in high-dimensional state space is time-consuming, whereas developing geometric paths and applying smoothing calculations is more efficient.

Although effective in meeting real-time demands, this decoupling often sacrifices global optimality. The separation of geometric and dynamic constraints can result in trajectories trapped in local minima, especially during local replanning with a non-zero initial state. This frequently manifests as suboptimal smoothness and inefficient maneuvering when facing sudden obstacles or evasive targets. For instance, when facing sudden obstacles or target maneuvers during the tracking process, the planned trajectory is constrained within a topologically equivalent class [

18,

24,

25]. This constraint can result in entrapment within local minima, leading to suboptimal flight smoothness and safety. Chen et al. [

18] address the challenging problem of tracking a moving target in cluttered environments using a quadrotor. Their initial proposal involves an online trajectory planning method that generates smooth, dynamically feasible, and collision-free polynomial trajectories that track a visually tracked moving target. Ding et al. [

26] propose a real-time B-spline based kinodynamic (RBK) search algorithm, transforming a position-only shortest path search into an efficient kinodynamic search by leveraging B-spline parameterization properties. Meanwhile, Dmitri et al. [

27] describe a practical path-planning algorithm for autonomous vehicles operating in unknown environments with online obstacle detection. However, both [

25,

26] suffer from significant time complexity due to the need for fine-grained search. This may lead to low computational efficiency, which is not conducive to rapid route planning for complex environments and drones.

Beyond these, a distinct line of research employs hierarchical learning and control frameworks, such as those using Safe Reinforcement Learning (SRL) from human demonstration [

28] and Hierarchical SRL with prescribed performance bounds [

29]. These methods excel in learning complex skills from demonstration and providing strong theoretical safety and stability guarantees, often via Lyapunov analysis. However, their reliance on extensive pre-training data, complex neural network computations, and precise environment models presents a fundamental challenge for resource-constrained UAVs tasked with real-time, onboard dynamic target tracking. The computational overhead and data dependency of these SRL-based approaches stand in contrast to the need for a lightweight, self-contained algorithm that requires no prior demonstrations or complex online policy learning.

Therefore, a comprehensive solution is required that not only maintains the efficiency of hierarchical planning but also bridges the gap between geometry and dynamics to achieve higher performance. Such a solution should deliver real-time computational efficiency, robustness to prediction uncertainties, strict dynamic feasibility, and tight perception-planning integration.

To this end, we propose a solution framework to adaptively navigate and plan paths for unmanned aerial vehicles tracking dynamic targets in unknown obstacle scenarios. The contributions of this article are summarized as follows, with comparisons to state-of-the-art methods such as Fast Tracker [

19]:

- (1)

A lightweight, intent-free method for motion prediction driven purely by spatiotemporal dynamics, departing from any form of fitting-based prediction (e.g., trajectory fitting in Fast Tracker) to avoid extrapolation inaccuracies.

- (2)

An environment-adaptive risk-weight mechanism for direct step-size determination in ESDF fine-grained search, enabling more efficient and reactive planning compared to fixed-step approaches.

- (3)

The proposed approach is validated through extensive simulations and subsequently implemented on a fully autonomous quadcopter system. Real-world experiments are conducted to evaluate its performance in dynamic scenarios requiring motion planning and obstacle avoidance.

Overall, trajectory planning efficiency is enhanced through a kinodynamic framework that integrates ESDF calculation directly into the search process. This method accounts for dynamic constraints and incorporates an adaptive risk-weight strategy, which reduces time complexity without extra computational cost, thereby extending the planner’s range and precision.

2. Framework Overview

In UAV perception, both LiDAR and cameras have proven effective for environmental sensing. However, LiDAR’s significant weight and power consumption present substantial drawbacks for small UAVs, where payload and energy are critically constrained. For instance, typical high-resolution LiDARs used in robotics (e.g., the Livox Mid-360 laser scanner from Livox Technology Co., Ltd., Shenzhen, China) can weigh over 250 g and consume 6 W or more [

30], whereas a lightweight camera system (e.g., the Intel RealSense D435i from Intel Corporation, Santa Clara, CA, USA) often weighs less than 100 g and consumes under 3 W [

22]. This stark contrast in Size, Weight, and Power (SWaP) underscores the distinct advantages of cameras, making them particularly suitable for vision-based UAV applications. Consequently, our work employs a depth camera as the primary sensor to address the challenging problem of autonomous mobile target tracking. This task finds critical applications in security surveillance, filmmaking, and wildlife monitoring [

13,

20], where the depth camera provides essential geometric information for robust 3D tracking and obstacle avoidance.

As illustrated in

Figure 1, our system is specifically designed for tracking a dynamic target in complex, unknown environments. The core problem we address is formally defined as: given the UAV’s current state

and the estimated state of the target

, compute a trajectory

for the UAV that minimizes a cost function

while satisfying (i) the UAV’s kinematic and dynamic constraints and collision-free conditions

, where the obstacle set

is constructed from the depth camera data; (ii) real-time replanning requirements to adapt to the target’s unpredictable motion. This formulation of the tracking problem, which fuses target estimates with depth-based obstacle mapping, aligns with and extends previous research on drone-based pursuit [

5,

20] and specifically builds upon studies published in the Drones journal concerning moving target tracking [

10,

11].

Figure 1 illustrates the software architecture of our vision-based quadrotor automatic navigation system. The suggested trajectory planning framework (highlighted in yellow) is a planning module that encompasses the Adaptive Kinodynamic path search and B-spline-based optimization method (AKBS). The hardware components (highlighted in orange) comprise the sensing hardware and the UAV with its actuator. The sensing hardware includes a depth camera and an IMU. The Euclidean Signed Distance Field (ESDF) [

31] and the state estimation module (VINS Fusion) [

2] offer low-frequency local maps and high-frequency pose estimation. Following the map update, the planning module will initiate the replanning strategy and a fixed-frequency trajectory prediction.

Our trajectory planning framework utilizes an adaptive kinodynamic path search to generate initial, collision-free trajectories efficiently. This approach is detailed in

Section 4. Subsequently, a computationally efficient optimization step refines these trajectories by adjusting their control points to enhance smoothness and guarantee dynamic feasibility, as elaborated in

Section 5. A geometric controller tracks the desired trajectories generated by the real-time replanning module during flight [

32]. The resulting attitude and throttle commands are then transmitted to the PX4 flight controller to guide the aerial vehicle toward the dynamic target. The experimental setup and results are detailed in

Section 6.

3. Intent-Free Target Trajectory Prediction

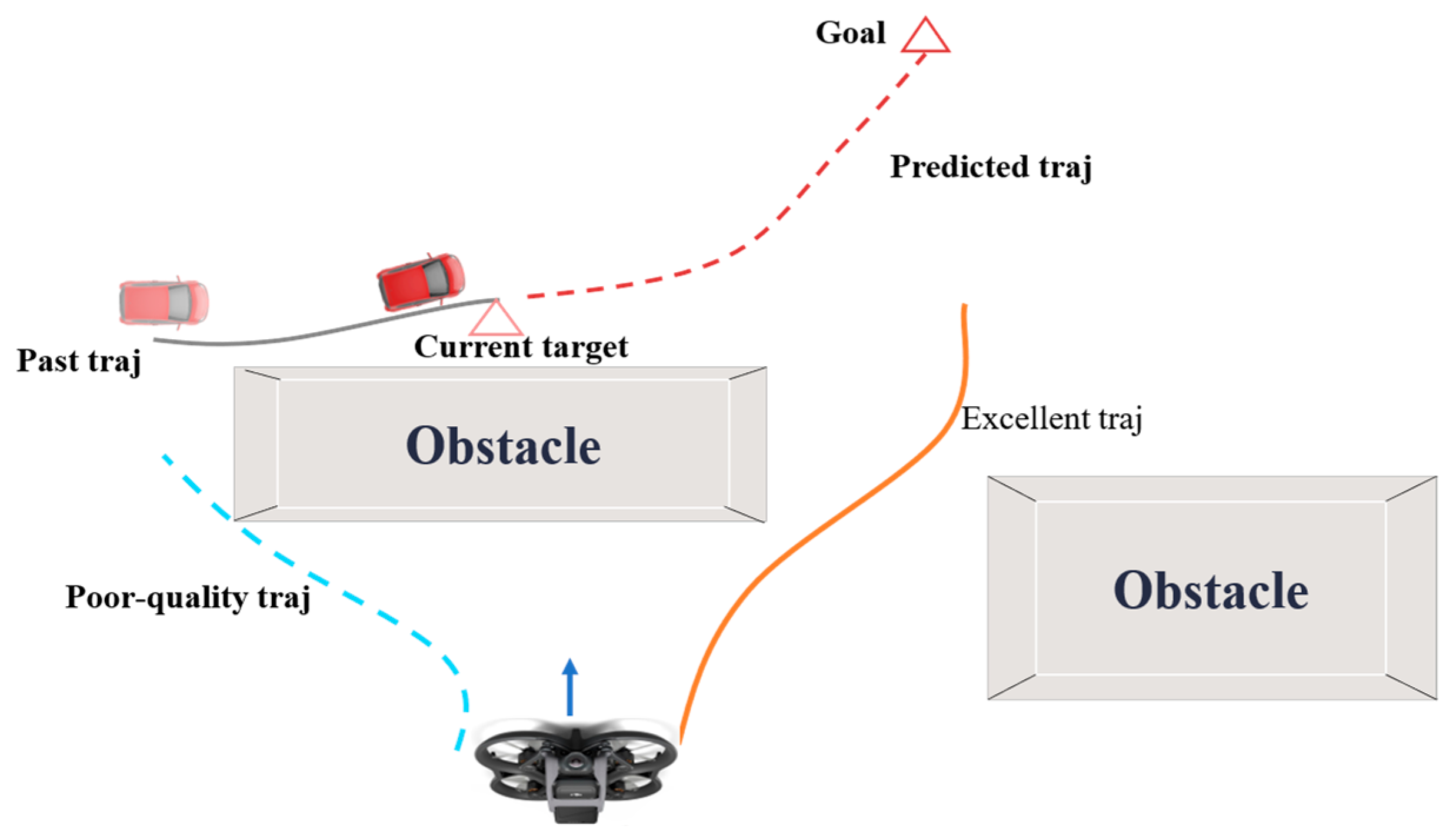

Trajectory prediction is essential for aerial drones to track moving targets, as conceptually illustrated in

Figure 2. The figure contrasts two approaches: methods without prediction (red trajectories) can only react to the target’s current estimated position, resulting in inefficient, oscillatory paths that lag behind the target’s motion and greatly increase the risk of loss—a catastrophic failure in applications like surveillance. In contrast, our prediction-informed method (green trajectory) anticipates the target’s future motion. This enables the UAV to plan a smoother, more efficient path that proactively intercepts the predicted path, thereby minimizing tracking error. The surrounding obstacles, perceived by the depth camera, further define the feasible space for these trajectories, underscoring the necessity of prediction in complex environments. However, generating an optimal trajectory is challenging, especially for local replanning from a non-zero initial state, due to the target’s unknown intent and dynamic characteristics. Consequently, the planning module must perform high-frequency replanning. Longer prediction horizons generally lead to more globally optimal planning results, provided the predictions remain accurate.

Assuming the target’s current position

and velocity

can be acquired via onboard sensors or communication, the objective is to predict its future position

at a fixed, known time horizon

. Algorithm 1 outlines the proposed intent-free trajectory prediction method for moving target. The proposed intent-free trajectory prediction algorithm is designed to address a critical challenge in vision-based UAV tracking: maintaining pursuit of a dynamic target in the absence of high-level intent information. In practical scenarios such as tracking a non-cooperative vehicle or a wild animal, the target’s ultimate goals and planned route are unknown to the UAV. Our algorithm operates under this key assumption, relying solely on real-time kinematic observations (position and velocity) from the onboard vision system. This makes it particularly suitable for applications like surveillance and filmmaking, where the target’s behavior is unpredictable, and the planner must react swiftly based on limited information. The core objective is to generate a short-term, kinematically feasible prediction to enable the UAV’s planner to proactively intercept the target, as opposed to lagging behind it.

| Algorithm 1:

Intent-free trajectory prediction algorithm for moving target

|

| | Input: , , |

| | Output: Predicted trajectory |

| 1 | |

| 2 | |

| 3 | While do |

| 4 | for do |

| 5 | |

| 6 | end for |

| 7 | |

| 8 | Return |

Prior to the presentation of Algorithm 1, this section details the kinematic modeling of dynamic target. The model employs a second-order chain integral formulation, utilizing acceleration as the control input, as defined below (Note that the following formulation is independent of any knowledge regarding the target’s motion):

The state vector encompasses the target’s kinematic properties, where denotes its 2D position, and represents its velocity. The control input is defined as the target’s acceleration, which drives the state evolution. The system matrix and the input matrix govern the internal dynamics and how the input affects the state, respectively. and represent the identity matrix and the zero matrix, respectively.

In Algorithm 1,

calculates a preliminary endpoint position

based on the target’s motion model:

The position

serves as an estimate of the final state, providing the heuristic cost required to reach it. In line 4,

denotes the discrete control input, defined as follows:

In Line 6, the process

computes the target state

using Equation (1). This subsequent state is then used to select the optimal control input by applying a time-energy optimization principle:

where

denotes the actual cost, and

denotes the heuristic cost.

The coefficient balances the weight of these two cost components. Notably, Equation (5) employs the Euclidean distance from the target to the estimated position as a proxy for time. Once Equation (5) is computed, the optimal control input corresponding to the target position is selected and appended to the predicted trajectory sequence . This operation is executed in Line 7.

Compared to learning-based predictors [

10], our method is data-efficient and does not require extensive offline training on specific motion datasets. It is robust to novel, unseen target behaviors since it is based on fundamental kinematics rather than pattern matching. However, a key limitation is its inability to leverage known contextual information or historical patterns for long-horizon prediction, which learning-based methods can potentially exploit if such data is available. Compared to other model-based predictors, such as the Constant Velocity (CV) or Constant Acceleration (CA) Kalman filters, our algorithm offers a significant advantage by explicitly generating and evaluating a set of kinematically feasible motion primitives. While a CV/CA model assumes a single, fixed motion model, our approach naturally encompasses a spectrum of maneuvers (e.g., sharp turns, deceleration) through the discrete control set

making it more adaptable to agile targets.

The primary trade-off lies in computational cost. Simple filters like CV are computationally lighter, whereas our method involves online optimization over a control space, demanding greater processing power. This design choice is justified for our target tracking application, where prediction accuracy over a short horizon is paramount for collision-free interception. In summary, our predictor fills a niche between overly simplistic filters and data-hungry learning models, offering a principled, kinematics-driven approach for short-term prediction in unstructured environments.

4. Front-End: Adaptive Kinodynamic Path Searching

In dynamic target tracking, the trajectory planning module requires high-frequency replanning. Traditional methods, which generate trajectories based on the shortest path [

33] (right curve,

Figure 3), often result in dynamically infeasible and unsafe trajectories. This issue is particularly pronounced during local replanning with a non-zero initial state. In contrast, the proposed method produces a dynamically feasible and safe trajectory (left curve,

Figure 3) by fully accounting for the aerial vehicle’s initial state and performing path searches informed by obstacle proximity. This work operates under the assumption that the target’s position is either perfectly perceived by the sensing algorithms or communicated to the UAV without obstruction.

Our kinodynamic search method builds upon a hybrid A* algorithm [

18], expanding nodes (motion primitives) generated by discretizing the control input. This process identifies a safe and dynamically feasible trajectory within a voxel grid map. A key contribution is the thorough utilization of the ESDF map’s prior information to design adaptive search steps and pruning schemes, enabling an efficient, collision-free trajectory search. The complete front-end search process is outlined in Algorithm 2, where

and

denote the open and closed sets, respectively. The open set is a priority queue that stores candidate nodes to be expanded, typically sorted by the sum of the cost from the start node and a heuristic estimate to the goal. The

operation selects the node with the lowest cost for expansion. The closed set records nodes that have already been visited and expanded to avoid redundant searches and prevent infinite loops.

implements the core node expansion process. It generates new candidate nodes by applying kinematically feasible motion primitives from the current node. The

method (used as

and

) checks whether a specific node is currently present in the open set or closed set, respectively. This is fundamental for the graph search logic to determine if a node is a new discovery, needs an update, or has already been processed. The

function checks whether the node lies in an occupied space (collision check) using the ESDF. The algorithm maintains a parent-pointer for each node during the expansion phase (line 19:

), which records the optimal predecessor of the node. When the

condition is met at node

, the Return Path() operation is invoked. This operation does not rely on a separately stored path variable. Instead, it dynamically reconstructs the optimal path by starting from the terminal node and sequentially tracing backwards through the chain of parent pointers until the start node is reached.

| Algorithm 2: Adaptive Kinodynamic Path Searching |

| 1: | Input: initial state , goal state |

| 2: | Initialize() |

| 3: | while is not empty do |

| 4: | , |

| 5: | if then |

| 6: | Return Path() |

| 7: | end if |

| 8: | |

| 9: | for in do |

| 10: | if then |

| 11: | continue; |

| 12: | end |

| 13: | |

| 14: | if then |

| 15: | |

| 16: | else if then |

| 17: | continue; |

| 18: | else if |

| 19: | , |

| 20: | |

| 21: | end if |

| 22: | end for |

| 23: | end while |

Instead of straight lines, we use motion primitives respecting the aerial drone dynamic as graph edges. Motion primitives are represented by the struct node.

4.1. Motion Primitive

While the original Hybrid A* algorithm in [

27] was designed for car-like robots in a 2D plane, our work constitutes a non-trivial extension to 3D space for quadrotor navigation, with key adaptations in state representation and motion primitives. The search state is defined as

, and node expansion (

Expand()) is governed by the UAV’s kinodynamic model presented in Equation (6) of this paper, which captures the full 3D translational dynamics—a fundamental departure from the 2D bicycle model in [

27]. A critical enhancement for aerial navigation is the treatment of orientation: although yaw is not explicitly included in the kinodynamic search, the desired yaw angle

for target tracking is geometrically derived from the generated path by aligning the UAV’s heading with the horizontal velocity vector

. Furthermore, leveraging the differential flatness property of UAV systems [

34], the entire state and control inputs can be algebraically determined from the flat outputs

. Thus, the trajectory and yaw profile generated by our planner fully define the UAV’s motion, enabling accurate tracking by the downstream geometric controller [

25]. We first discuss how to generate motion primitives in

Expand(). Similarly to [

34], using the differential flatness theory, the state of the aerial drone in path searching is represented by

. With the third derivative of displacement

as the control input

, the state transition equation is as follows:

Here, the symbol

denotes the direct sum, which constructs the matrices

and

by stacking the enclosed matrix block diagonally three times, with all off-diagonal blocks being zero.

is the expansion step,

. In three-dimensional space, uniform discrete control inputs

. To fully leverage the prior information from the ESDF—a product of the mapping module that is reused by the planner without incurring additional computational cost—we introduce a risk weight parameter

, defined as:

where

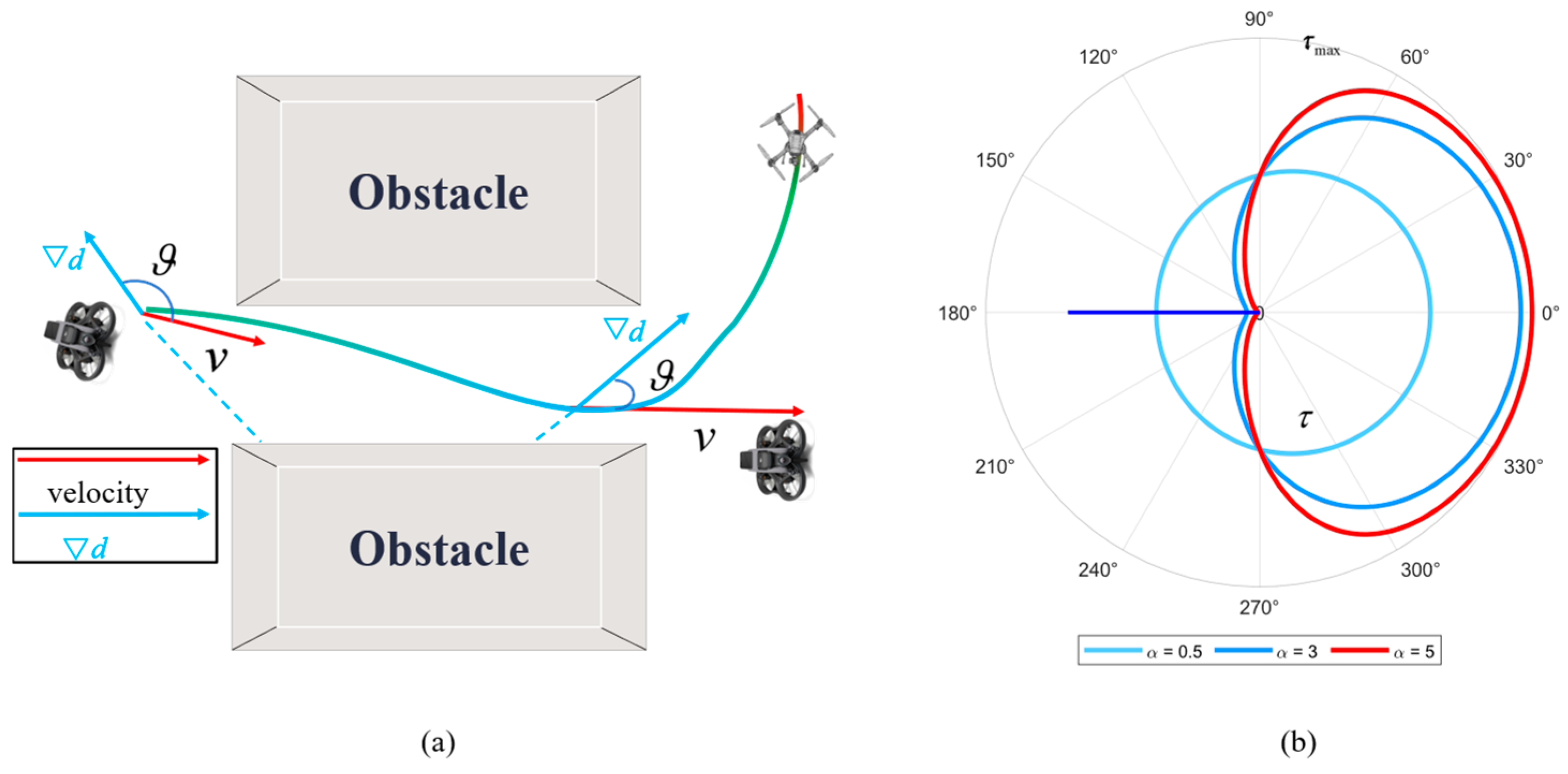

is the gradient of the ESDF. As shown in

Figure 4, when the velocity direction of the aerial drone is toward the obstacle, the gradient direction given by the ESDF is opposite to the velocity direction, which aggravates the maneuvering danger. Conversely, obstacle information can be disregarded when the gradient direction aligns with the velocity direction. The expansion step

of the motion primitive is given by the following formula:

where

is the rate-of-change coefficient. Evidently, the expansion step size is maximized when the directional angle is minimal. Conversely, the step size is minimized when the direction is opposed, as illustrated in

Figure 4b.

Unlike traditional search-based path planning algorithms such as A*, which typically consider a fixed number of spatially adjacent nodes (e.g., 8 in 2D or 26 in 3D), the proposed algorithm incorporates a dynamically adjustable point expansion step size mechanism, as shown in

Figure 5.

The non-uniform step expansion strategy differentiates our search algorithm and enhances aerial pathfinding in several key aspects. First, the well-designed mapping function in Equation (9) dynamically adjusts the expansion step size based on the risk weight

, which is derived from obstacle proximity and velocity alignment. This mechanism ensures conservative steps near obstacles for safety while allowing larger steps in open spaces to enhance search efficiency, thereby preventing node expansion in hazardous proximity. Second, since computational time correlates with the number of expanded nodes, larger steps reduce the total node count, simultaneously promoting trajectories away from obstacles for improved safety. Third, compared to traditional A* [

33], our method sparsely populates the search space, lowering computational load and enhancing real-time performance. Finally, the strategy fully incorporates the initial state during expansion, enabling dynamically feasible solutions for high-frequency replanning from non-zero initial states.

4.2. Actual Cost and Heuristic Cost

Following the terminology of A*, the cost of each node can be expressed as . In this formula, denotes the actual cost from the initial state to the current state , while represents the heuristic cost to search faster.

To achieve a trade-off between control-effort and trajectory duration, we minimize the cost function defined as:

In Algorithm 2,

calculates the cost of a motion primitive for each node extension, which is determined by the discrete control inputs and their duration. Under this formulation, the optimal search path is composed of a sequence of these motion primitives. Therefore, the cost function

is defined as follows:

An admissible and informative heuristic is essential for accelerating the search process. To this end, the problem is formulated as a two-point boundary value problem [

18,

32]. Furthermore, the acceleration constraint is relaxed to reduce computational load.

Here, denotes the positional difference between the start and end points, and represents the velocity difference. represents three directions. Equation (12) incorporates the UAV’s initial velocity into the path search.

calculates this heuristic cost

by applying Pontryagin’s minimum principle to minimize the cost-to-go from the current state to the target state.

So, . Adjusting the expansion step size of motion primitives offers a tunable trade-off between path quality and computational completeness. Empirical results from both simulations and physical experiments validate the algorithm’s robust performance.

5. Back-End: Trajectory Optimization

While the proposed search algorithm efficiently generates an initial kinodynamically feasible path, the resulting path, like many sampling-based or graph-search methods, may still be in close proximity to obstacles due to the discrete nature of the search and the myopic nature of the cost function. This is a known limitation of such front-end path finders [

35]. To address this issue and fully leverage the distance information in free space, we employ a B-spline-based trajectory optimization as the back-end. This two-stage strategy combines the robustness of the search algorithm in complex environments with the ability of gradient-based optimization to refine the trajectory for safety and smoothness. The key is to transform the sparse, discrete path waypoints from the front-end into a continuous, smooth, and collision-free trajectory that explicitly maintains a safe distance from obstacles.

5.1. Boundary Constraint

Boundary constraints define the fixed conditions for the initial state

and the final state

This work employs a B-spline for representing the UAV’s three-dimensional trajectory [

36,

37]. The core of our optimization is the formulation of a collision cost term that actively pushes the trajectory away from obstacles using the ESDF. As defined in Equation (20), this term penalizes control points where the distance to the nearest obstacle is below a safety threshold, ensuring the final optimized trajectory maintains a safe clearance.

The initial state of

k degree B-spline is determined only by the initial

k control points and the time interval. Given position constraint

, the relationship between position and control points is defined as follows:

Owing to the recursive property of B-splines, their derivatives are also B-splines. Consequently, the velocity and acceleration control points, denoted as

and

, respectively, can be derived from Equation (13):

where

represents the knot span. For a uniform B-spline, the knot vector is defined such that the time difference between consecutive knots is constant. This time difference, denoted as

, is termed the knot span. Therefore, the boundary constraint of the aerial drone can be expressed by the following formula:

where

represent control points. The vectors

represent the position, velocity, and acceleration at the trajectory’s start and end points, respectively. To accommodate high-frequency replanning, Equation (15) is applied iteratively throughout the process.

5.2. Trajectory Optimization

For a

k-degree B-spline defined by

n + 1 control points

, boundary constraints fix the positions of the first and last

k control points. Therefore, we optimize the remaining

n + 1 − 2

k control points and define the total cost function as follows:

The total cost function includes smoothness cost (), collision cost (), and dynamic cost (). , , and represent a trade-off between smoothness, safety, and dynamic feasibility.

In the context of 3D motion planning for UAVs, the dynamic constraints and operational requirements for vertical motion often differ from those in the horizontal plane. For instance, the acceleration and jerk limits along the z-axis are typically more conservative due to the need to directly counteract gravity and ensure stable ascent/descent. To accommodate these distinct characteristics and to enable independent control over the agility in the horizontal plane and the stability in the vertical direction, we formulate separate smoothness cost terms for the xy-plane and the z-axis.

The smoothness cost

is defined using the squared norm of higher-order derivatives of the trajectory segments. To independently regulate the smoothness in the horizontal plane and the vertical axis, as motivated above, we decompose the cost into two orthogonal components using projection matrices. Let

and

be the projection matrices that extract the horizontal (x, y) and vertical (z) components from a 3D vector, respectively. The smoothness cost is then formulated as:

where

and

are their respective weighting coefficients. This formulation allows for fine-grained tuning; for example, a larger

can be set to enforce a smoother, more conservative vertical profile, while a smaller

permits more aggressive maneuvers in the horizontal plane.

Noting the particular property of the B-spline, we convert the higher-order derivative information into the geometry information of the trajectory. So we rewrite

:

While the two formulations are fundamentally equivalent, Equation (18) offers distinct advantages: it relies solely on the trajectory’s geometric information, independent of time allocation, and it directly relates the cost to the optimization variables.

For the collision cost

, it is expressed as the repulsive force of the obstacle acting on each control point:

Here, represents the distance from the i-th control point to the nearest obstacle, obtained from the ESDF gradient map, and is the safety distance threshold. A penalty is applied when falls below .

Dynamic feasibility is enforced by constraining all velocity and acceleration control points [

38]. Consequently, penalties are imposed on the maximum velocity and acceleration of the aerial vehicle, formulated as:

The function is an even function that applies no penalty when the velocity or acceleration magnitude remains below a specified threshold. When the threshold is exceeded, a two-stage penalty is activated: a cubic term imposes a progressively increasing cost for exceeding the maximum allowable rate, while a quadratic term is added to ensure numerical stability and prevent unbounded growth.

5.3. Optimization Strategy

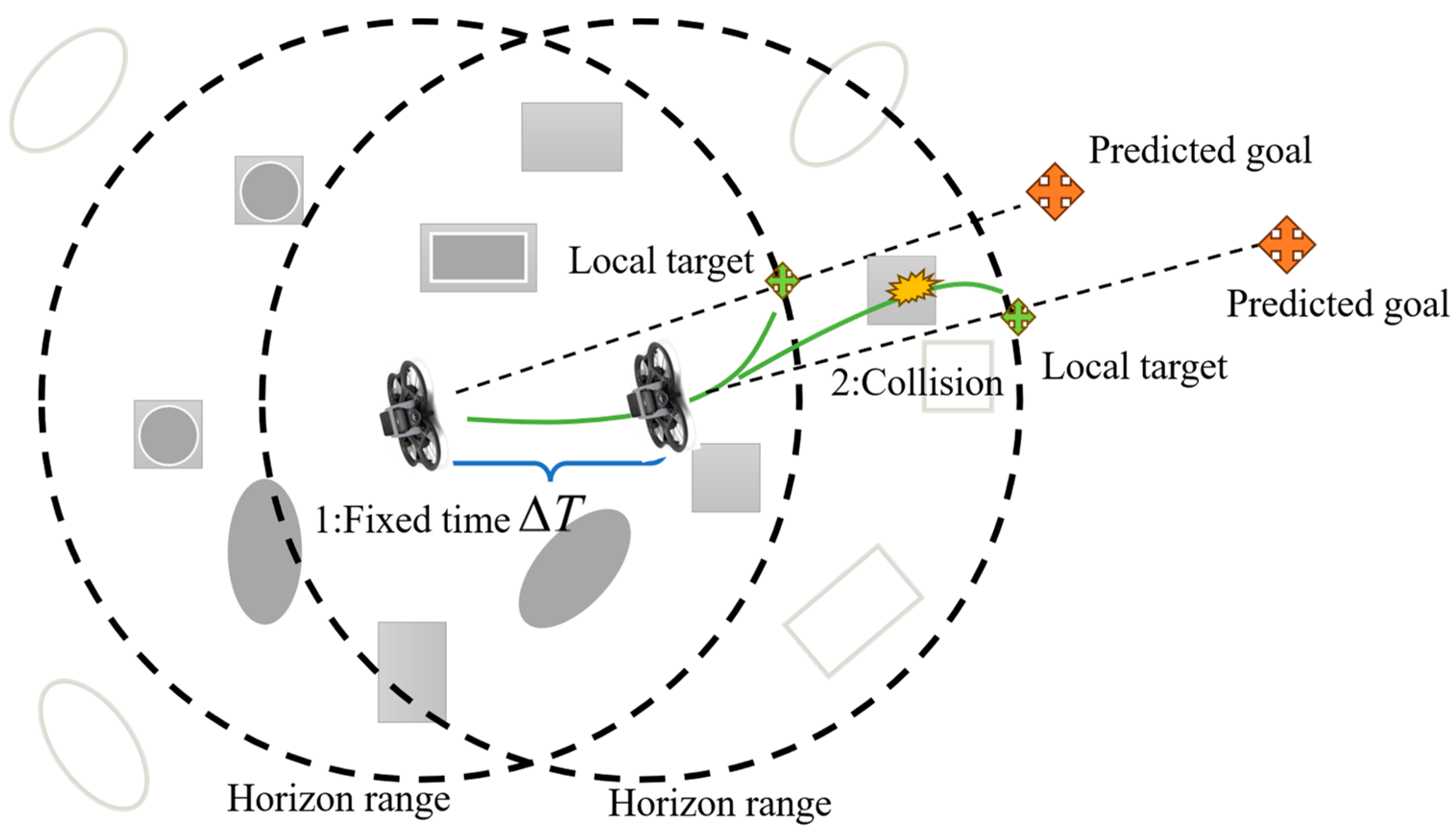

This local optimization strategy, also referred to as the Re-Planning Strategy, is illustrated in

Figure 6. The process begins by establishing a global target using the prediction data from Algorithm 1. If this global target lies outside the horizon space (denoted by the dotted circle), a line is projected from the UAV’s current position to the global target. The local target is then defined as the point where this line intersects the horizon space boundary. Since planning outside this space is inefficient, all path search and trajectory optimization are confined within it.

Re-planning is triggered under two conditions, as shown in

Figure 7. (1) Safety Check after Optimization: Our planning framework consists of a front-end path search that uses a discrete obstacle inflation for geometric feasibility, followed by a back-end trajectory optimization that employs soft constraints for smoothness and more precise obstacle avoidance. After the optimized trajectory is generated, a final safety validation is performed by discretizing the trajectory and rigorously checking for collisions against the ESDF. A re-planning cycle is immediately triggered if any discretized point on the optimized trajectory penetrates an obstacle (i.e., the signed distance ≤ 0). This ensures that the final output is strictly safe, even if the soft-constrained optimizer occasionally produces a trajectory that slightly cuts corners due to cost balancing. (2) Periodic Re-planning: The motion planning module is also invoked periodically at fixed time intervals of T = 0.5 s. This ensures system reactivity to new obstacles and dynamic changes that may not have been present during the previous planning cycle.

7. Conclusions

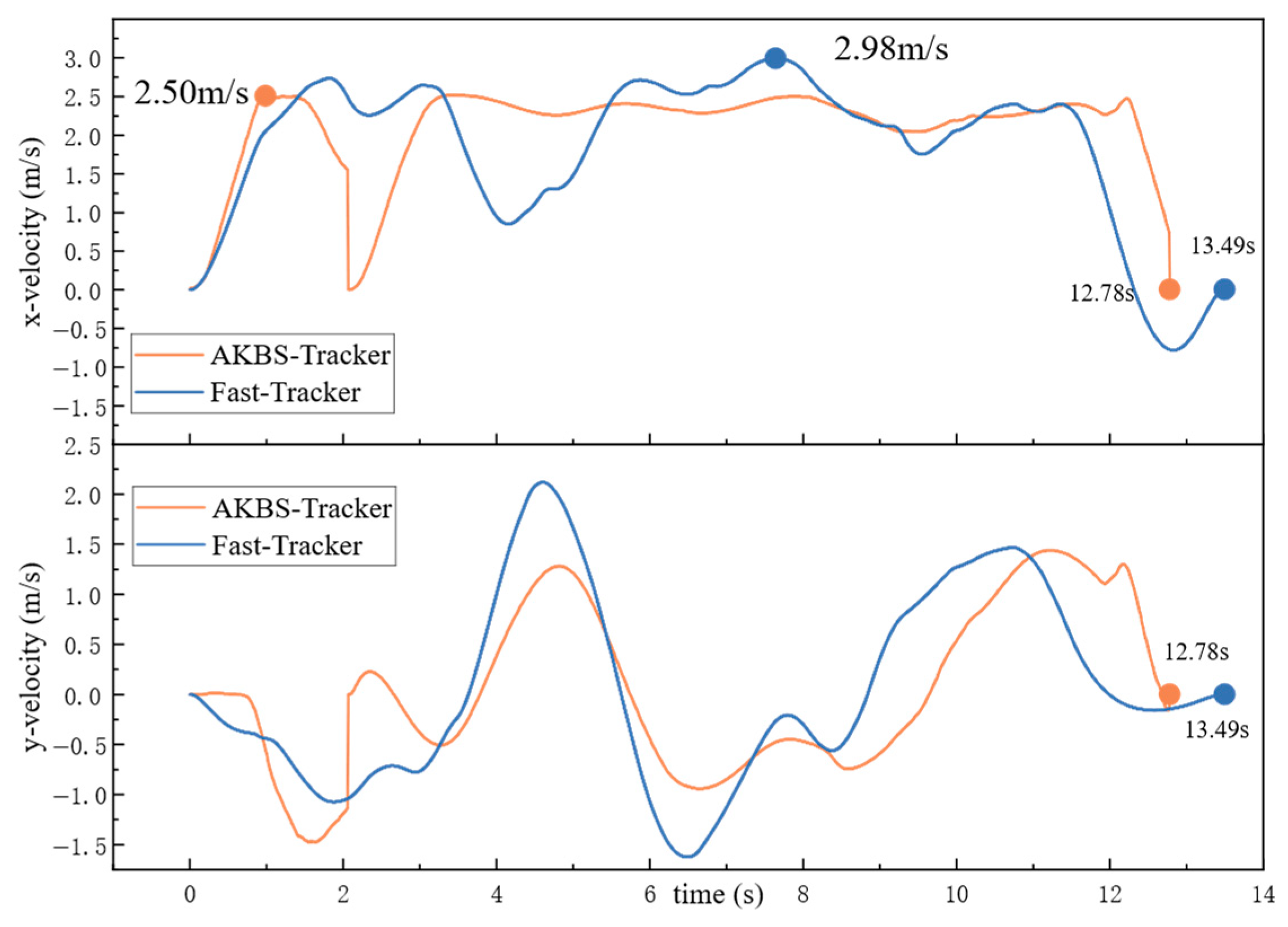

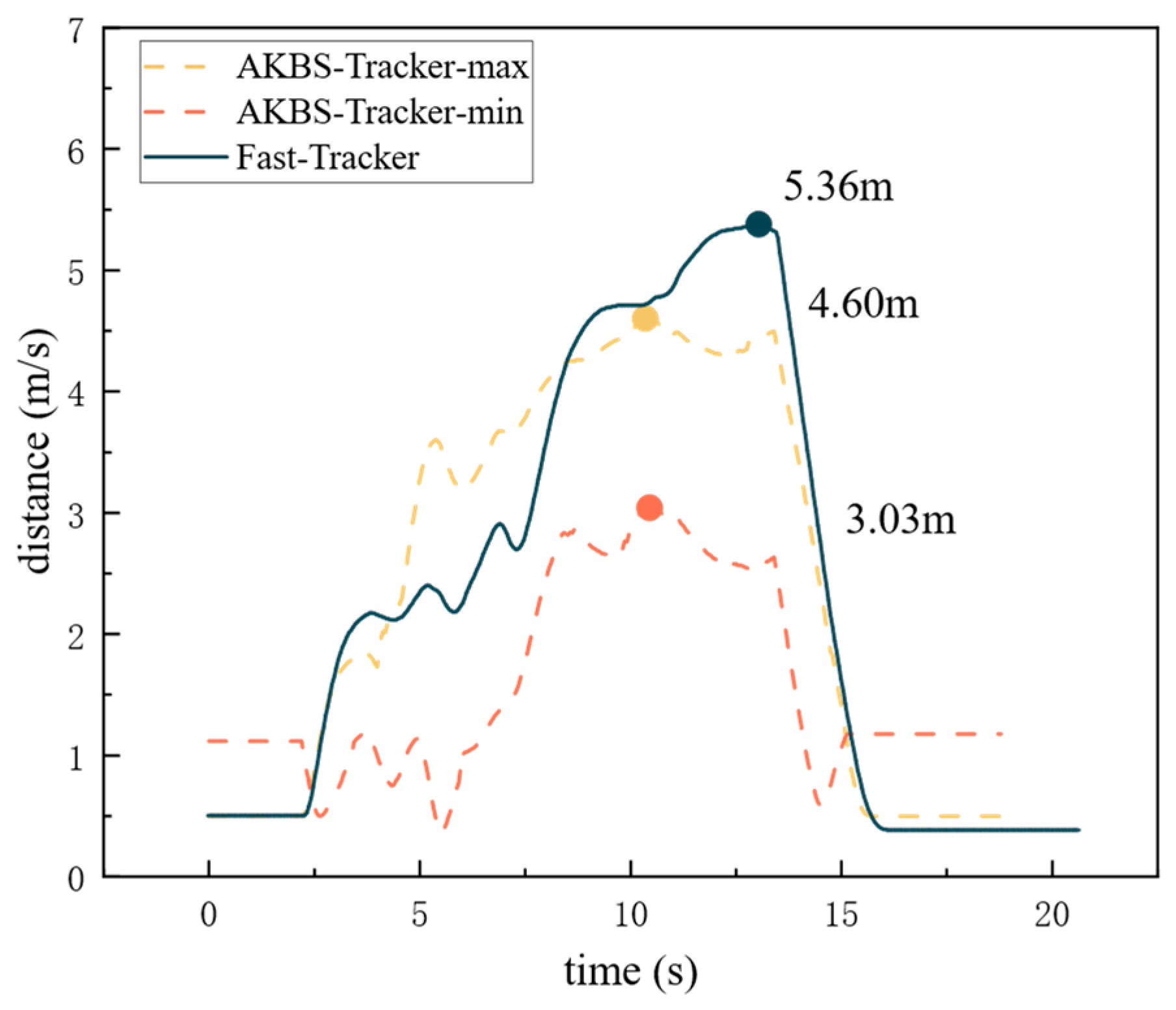

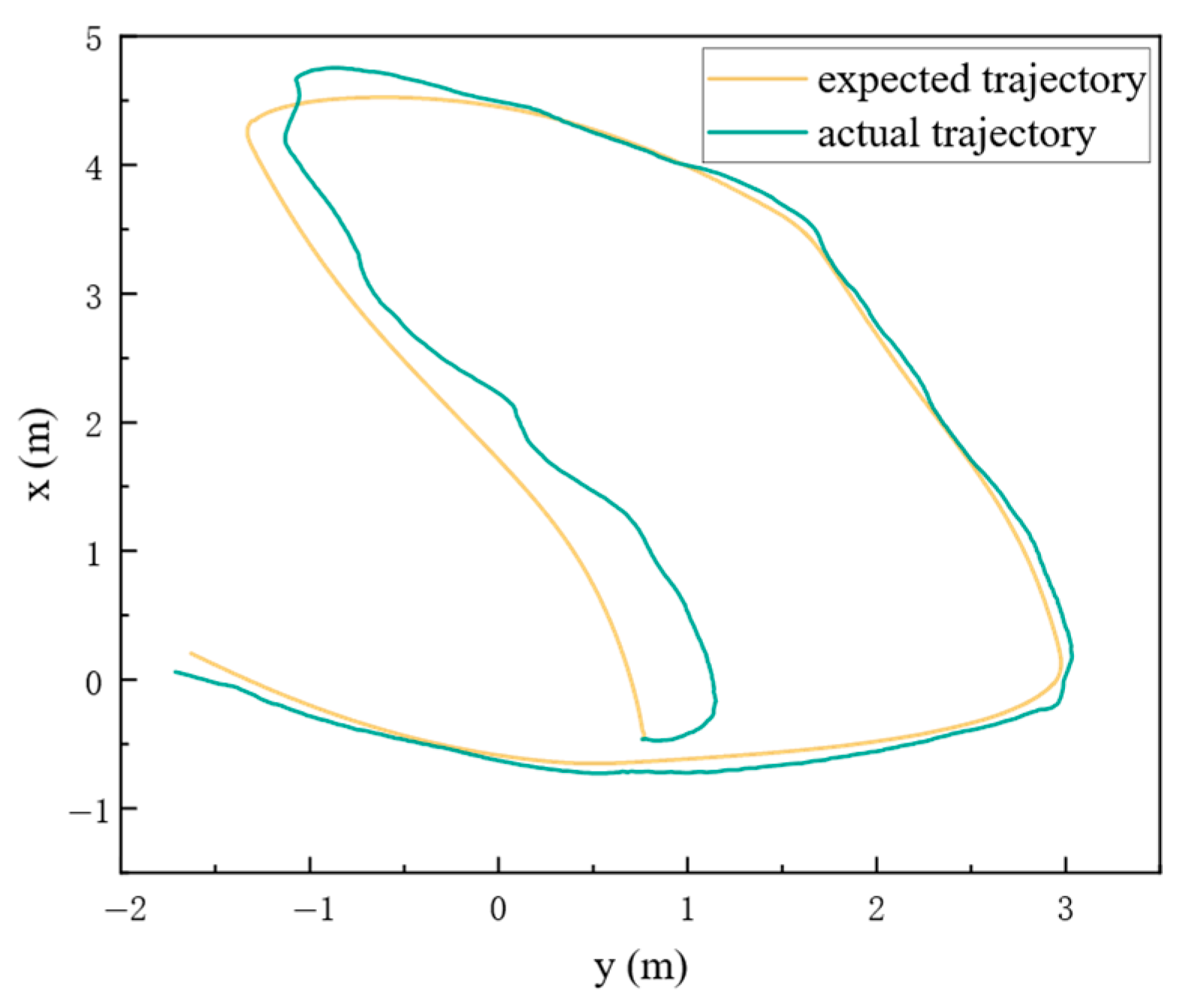

This paper introduced an integrated onboard motion planning framework for autonomous drones, with contributions in two key areas. First, we propose a lightweight, intent-free method for motion prediction driven purely by spatiotemporal dynamics, which provides a critical foundation for tracking unpredictable targets. Building upon this, a hierarchical planner combining front-end kinodynamic path searching and back-end B-spline trajectory optimization was developed to address the challenge of high-speed onboard replanning. The front-end search efficiently generates a safe, dynamically feasible initial path by incorporating a novel risk-weight parameter to reduce computational complexity. Subsequently, the back-end optimization refines this path for smoothness and safety using boundary constraints and gradient-based methods, leveraging the convex hull property of B-splines to enhance optimization efficiency. The proposed framework was rigorously validated through extensive simulations and real-world experiments, demonstrating robust performance. Our conclusions can be summarized as follows:

- (1)

For trajectory planning for single drone target tracking, the proposed AKBS-Tracker generates shorter trajectories than the traditional Fast-Tracker, with notable advantages in trajectory smoothness, time and tracking distance.

- (2)

The AKBS-Tracker decreased flight time effectively while maintaining the planned speed within the defined maximum speed limit, demonstrating its advantage in sustaining dynamic feasibility.

- (3)

In a real-world experiment conducted in an unfamiliar indoor environment, the AKBS-Tracker successfully executed motion planning for a single UAV. It adeptly maintained a smooth and dynamic trajectory while skillfully avoiding collisions with obstacles. This experiment validates the system’s capability to operate safely and effectively in practical scenarios.

This research significantly improves autonomous UAV efficiency, safety, and practical applicability for target tracking. The potential impact extends to various applications, such as surveillance, delivery, and exploration. Nevertheless, acknowledging the limitations of our study is crucial. Given the limits of our study, we did not take into account the communications of tracking and visual occlusion. Subsequent research should take into account the robustness to sensor noise and communication delay. This can be achieved by scaling the approach, integrating advanced sensors, creating adaptive algorithms, conducting real-world experiments, and facilitating practical implementation in various applications.