Automated UAV Object Detector Design Using Large Language Model-Guided Architecture Search

Highlights

- PhaseNAS introduces a phase-aware, dynamic NAS framework driven by large language models for UAV perception tasks.

- It generates YOLOv8 variants that raise mAP@50:95 by up to 1.78 points on COCO and 0.88 points on VisDrone2019 while using fewer parameters and FLOPs than the baselines.

- PhaseNAS enables automated, resource-adaptive model design, making real-time, high-performance perception feasible for UAVs and edge devices.

- Its structured template and adaptive LLM resource allocation strategies can be extended to broader AI applications beyond aerial object detection.

Abstract

1. Introduction

1. Phase-Aware Dynamic LLM Allocation: We introduce a resource-adaptive NAS strategy that adjusts LLM capacity according to search phase, balancing broad exploration with precise refinement for UAV detection tasks.

2. Structured Detection Architecture Templates: We design a parameterized template system that reliably maps LLM prompts to executable YOLO-style detection architectures, reducing errors and code failures in the search loop.

3. Zero-Shot Detection Scoring: We propose a training-free detection scoring mechanism for rapid, accurate evaluation of candidate detectors on UAV datasets such as VisDrone2019, accelerating search without full model retraining.

4. Comprehensive UAV Evaluation: We demonstrate the effectiveness of PhaseNAS on both classic classification benchmarks (with lightweight models) and challenging UAV detection tasks, showing state-of-the-art mAP and efficiency on datasets such as COCO and VisDrone2019.

2. Materials and Methods

2.1. Dynamic Search Process

1. Modular Component Selection: All architectural components must be selected from predefined functional groups (e.g., convolutional layers, residual blocks).

2. Dimensional Compatibility: Adjacent modules must preserve dimensional consistency through strict channel matching, ensuring seamless integration.

3. Hardware-Aware Constraints: The computational complexity of the generated architectures must remain within predefined limits, ensuring practical applicability across diverse deployment scenarios.
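The three rules above can be sketched as a small validity check that runs on each candidate before it is scored. This is a minimal illustration, not the PhaseNAS implementation: the `Block` record, the `ALLOWED_GROUPS` set, and the per-block GFLOPs field are hypothetical names introduced here.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical functional groups; the real block catalogue is not listed in this section.
ALLOWED_GROUPS = {"conv", "residual"}


@dataclass(frozen=True)
class Block:
    group: str     # functional group the block was drawn from
    in_ch: int     # input channels
    out_ch: int    # output channels
    gflops: float  # estimated cost of this block (assumed to be precomputed)


def validate(blocks: List[Block], max_gflops: float) -> List[str]:
    """Return human-readable rule violations; an empty list means the candidate is valid."""
    errors = []
    # Rule 1: every component must come from a predefined functional group.
    for i, b in enumerate(blocks):
        if b.group not in ALLOWED_GROUPS:
            errors.append(f"block {i}: unknown group '{b.group}'")
    # Rule 2: output channels of a block must equal input channels of the next one.
    for i, (a, b) in enumerate(zip(blocks, blocks[1:])):
        if a.out_ch != b.in_ch:
            errors.append(f"blocks {i}->{i + 1}: channel mismatch {a.out_ch} != {b.in_ch}")
    # Rule 3: total computational cost must stay within the predefined limit.
    total = sum(b.gflops for b in blocks)
    if total > max_gflops:
        errors.append(f"total cost {total:.2f} GFLOPs exceeds limit {max_gflops:.2f}")
    return errors
```

Returning the violations as text, rather than a bare boolean, is one possible design choice: the messages could be fed back into the next LLM prompt so that the model can repair the candidate.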

2.1.1. Exploration Phase

2.1.2. Refinement Phase

2.2. Search Space Definition

1. Available Building Blocks: Each network is constructed from a predefined set of residual and convolutional blocks with various kernel sizes and activation functions. The search is restricted to combinations of these standardized modules, ensuring compatibility and reproducibility across all candidate architectures.

2. Channel Compatibility: The output channel of any block must match the input channel of the subsequent block to ensure seamless tensor propagation.

3. Input/Output Constraints: The model input must be a three-channel image, and the output must be suitable for the classification head.

4. Resource Constraints: The parameter count and network FLOPs are constrained within practical deployment ranges to ensure efficiency.

1. YOLOv8 as Baseline: The search is anchored on the official YOLOv8n and YOLOv8s backbones, allowing modifications and reordering of backbone, neck, and head blocks, while constraining overall parameters and FLOPs to remain similar to the base models.

2. Block-Level Modifications: Candidate architectures are generated by reconfiguring, replacing, or inserting blocks within the backbone and neck, provided input/output channels and tensor shapes remain compatible.

3. Innovative Block Extensions:

   - YOLOv8+: Models in this variant are generated by reorganizing existing YOLOv8 blocks and tuning their parameters, without introducing new block types.

   - YOLOv8*: In addition to the modifications allowed in YOLOv8+, this variant explicitly introduces two novel blocks, SCDown and PSA. These custom blocks are only available in the YOLOv8* search space, enabling the framework to explore more expressive and powerful architectures beyond the original YOLOv8 design. They are particularly beneficial for UAV scenarios, where preserving fine-grained features and improving small-object localization under limited computational budgets is critical.

4. Multi-Scale and Detection Head Compatibility: All searched architectures must support multi-scale feature outputs and remain compatible with the YOLO detection heads.

5. Resource Constraints: Parameter count and FLOPs are kept within the original YOLOv8n/s budgets to ensure real-time performance and fair comparison.

2.3. Algorithm Overview

Algorithm 1: PhaseNAS Architecture Search

Require: initial architecture, small LLM (for Exploration), large LLM (for Refinement), thresholds, pool size K

Ensure: optimal architecture
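Since only the inputs and output of Algorithm 1 are listed here, the following is a rough sketch of how a two-phase loop with those inputs could be organized, not the authors' procedure. The toy architecture encoding, the `Proposer` callables standing in for LLM calls, the single `explore_threshold`, and the step counts are all assumptions made for illustration.

```python
import random
from typing import Callable, List

Arch = List[int]                   # toy encoding: one channel width per stage (assumption)
Proposer = Callable[[Arch], Arch]  # stands in for one LLM call that edits an architecture


def phase_search(init: Arch, small_llm: Proposer, large_llm: Proposer,
                 score: Callable[[Arch], float], is_valid: Callable[[Arch], bool],
                 explore_threshold: float, pool_size: int,
                 explore_steps: int = 50, refine_steps: int = 20) -> Arch:
    # Exploration: the cheaper proposer fills a pool of valid candidates
    # whose proxy score clears the (assumed) exploration threshold.
    pool = [init]
    for _ in range(explore_steps):
        cand = small_llm(random.choice(pool))
        if is_valid(cand) and score(cand) >= explore_threshold:
            pool.append(cand)
            # Keep only the K best candidates seen so far.
            pool = sorted(pool, key=score, reverse=True)[:pool_size]
    # Refinement: the stronger proposer edits the current best,
    # and only strict improvements are accepted.
    best = max(pool, key=score)
    for _ in range(refine_steps):
        cand = large_llm(best)
        if is_valid(cand) and score(cand) > score(best):
            best = cand
    return best
```

In this sketch the expensive proposer is called only `refine_steps` times, which is the intuition behind allocating LLM capacity by phase; how PhaseNAS actually decides when to switch phases is governed by the thresholds named in Algorithm 1.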

2.4. LLM-Compatible Architecture Representation

2.5. Task Adaptation: From Classification to Object Detection

2.5.1. NAS Score for Classification

2.5.2. NAS Score for Object Detection

- Input Perturbation

- Feature Map Extraction

- Multi-Scale Difference

- BatchNorm Scaling

- Final NAS Score

- Repetition and Aggregation
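The six steps listed above can be strung together as in the sketch below. The formulas are not given in this section, so this version is modeled loosely on Zen-score style proxies and should be read as an assumption: the log of the summed multi-scale difference, the additive log BatchNorm term, and the names `extract`, `bn_scales`, and `eps` are all illustrative.

```python
import math
import random
from typing import Callable, List, Sequence

Features = List[List[float]]  # one flattened feature map per detection scale


def nas_det_score(extract: Callable[[Sequence[float]], Features],
                  bn_scales: Sequence[float], input_dim: int,
                  eps: float = 0.01, repeats: int = 8, seed: int = 0) -> float:
    rng = random.Random(seed)
    scores = []
    for _ in range(repeats):
        # Input perturbation: a random input and a slightly perturbed copy.
        x = [rng.gauss(0.0, 1.0) for _ in range(input_dim)]
        x_pert = [v + eps * rng.gauss(0.0, 1.0) for v in x]
        # Feature map extraction at every scale for both inputs.
        feats, feats_pert = extract(x), extract(x_pert)
        # Multi-scale difference: mean absolute change per scale, summed over scales.
        diff = sum(
            sum(abs(a - b) for a, b in zip(f, g)) / len(f)
            for f, g in zip(feats, feats_pert)
        )
        # BatchNorm scaling: add the log of each BN scale factor (assumed form).
        bn_term = sum(math.log(s) for s in bn_scales)
        # Final score for this draw; the small constant guards against log(0).
        scores.append(math.log(diff + 1e-12) + bn_term)
    # Repetition and aggregation: average over the random draws.
    return sum(scores) / len(scores)


def toy_extract(x: Sequence[float]) -> Features:
    # Hypothetical three-scale "backbone": scaled input, then two pairwise poolings.
    p3 = [2.0 * v for v in x]
    p4 = [p3[i] + p3[i + 1] for i in range(0, len(p3) - 1, 2)]
    p5 = [p4[i] + p4[i + 1] for i in range(0, len(p4) - 1, 2)]
    return [p3, p4, p5]
```

Calling `nas_det_score(toy_extract, [1.0, 1.0], input_dim=16)` returns a single float; no training step is involved, which is what makes this kind of score usable inside a fast search loop.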

3. Results

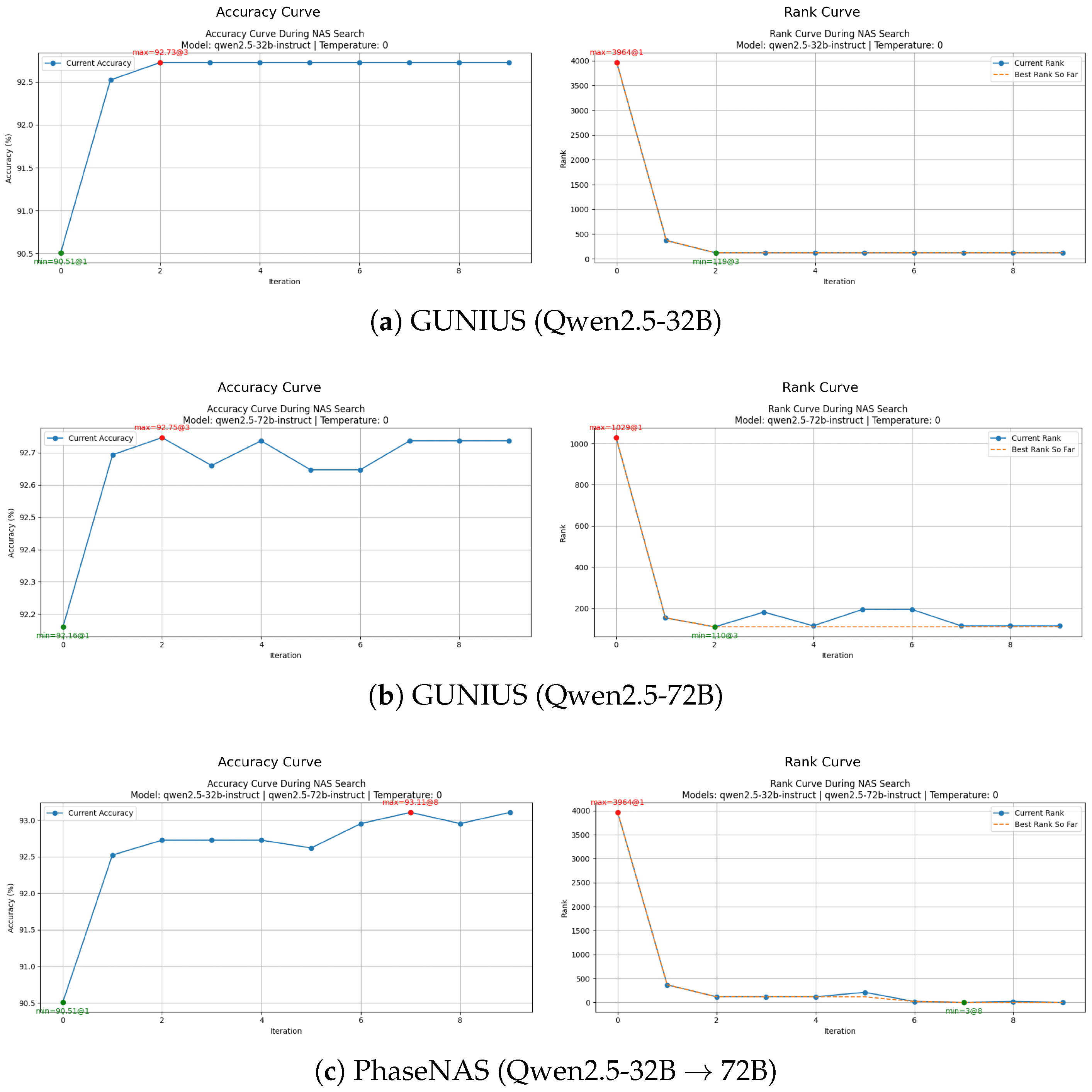

3.1. Comparison with LLM-Based NAS Baselines

3.2. Generalization to Classification and Detection Tasks

- Model Size: Limit total parameters for edge device deployment.

- FLOPs: Bound computational cost for real-time efficiency.

- Latency: Ensure practical inference speed on target hardware.

- Depth: Prevent over-deep, hard-to-optimize models.
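A simple way to apply these four limits is to report which ones a candidate breaks. The sketch below assumes the four quantities have already been measured; the `Budget` and `Candidate` records and the latency and depth numbers in the usage note are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Budget:
    max_params_m: float    # model size limit, millions of parameters
    max_gflops: float      # computational cost limit
    max_latency_ms: float  # inference latency limit on the target hardware
    max_depth: int         # maximum number of layers


@dataclass(frozen=True)
class Candidate:
    params_m: float
    gflops: float
    latency_ms: float
    depth: int


def violations(c: Candidate, b: Budget) -> List[str]:
    """Return the names of the constraints the candidate breaks, in a fixed order."""
    checks = {
        "model size": c.params_m <= b.max_params_m,
        "FLOPs": c.gflops <= b.max_gflops,
        "latency": c.latency_ms <= b.max_latency_ms,
        "depth": c.depth <= b.max_depth,
    }
    return [name for name, ok in checks.items() if not ok]
```

For example, with a budget of `Budget(3.2, 8.7, 10.0, 30)` (the first two values match the YOLOv8n baseline, the last two are made up), `violations(Candidate(3.5, 8.2, 12.0, 25), budget)` flags model size and latency.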

3.3. Classification Results and Analysis

3.4. Object Detection Results and Analysis

1. COCO Results:

   - YOLOv8n series: YOLOv8n+ and YOLOv8n* improve mAP@50:95 from 37.3 (YOLOv8n; 3.2 M parameters, 8.7 GFLOPs) to 38.0 and 39.1, respectively, both with fewer parameters and lower FLOPs than the baseline.

   - YOLOv8s series: YOLOv8s+ and YOLOv8s* achieve mAP@50:95 of 45.4 and 46.1, respectively, while reducing parameter count and FLOPs compared to the baseline YOLOv8s (44.9; 11.2 M parameters, 28.6 GFLOPs).

2. VisDrone2019 Results:

   - YOLOv8n series: On the challenging VisDrone2019 benchmark, PhaseNAS improves mAP@50:95 from 18.5 (YOLOv8n) to 18.8 (YOLOv8n+) and 19.1 (YOLOv8n*), while maintaining lower parameter and FLOPs budgets.

   - YOLOv8s series: Similarly, mAP@50:95 increases from 22.5 (YOLOv8s) to 22.7 (YOLOv8s+) and 23.4 (YOLOv8s*), further verifying the effectiveness of PhaseNAS in drone-based detection scenarios.
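The absolute and relative changes quoted alongside these results follow from simple arithmetic against the baseline. A small helper, introduced here only for illustration, makes the calculation explicit:

```python
from typing import Tuple


def delta(baseline: float, value: float) -> Tuple[float, float]:
    """Return (absolute change, relative change in percent) versus the baseline."""
    abs_change = value - baseline
    return abs_change, 100.0 * abs_change / baseline


# COCO, YOLOv8n (37.30) vs. YOLOv8n* (39.08): about +1.78 points, roughly +4.8%.
map_abs, map_rel = delta(37.30, 39.08)
# GFLOPs, YOLOv8s (28.6) vs. YOLOv8s* (22.4): roughly -21.7%.
_, flops_rel = delta(28.6, 22.4)
```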

4. Discussion

Limitations and Future Directions

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| NAS | Neural Architecture Search |

| LLM | Large Language Model |

| FLOPs | Floating Point Operations |

| mAP | mean Average Precision |

| UAV | Unmanned Aerial Vehicle |

| CNN | Convolutional Neural Network |

Appendix A. Details of LLM Prompt Design

Appendix A.1. System Content

Appendix A.2. User Input

Appendix A.3. Initial Structure and Prompt

Appendix A.4. Experimental Prompt

Appendix B. Correlation Between NAS_det and mAP

| Model | YAML | PT | mAP |
|---|---|---|---|
| YOLOv8n | 2.02 | 11.22 | 37.3 |
| YOLOv8s | 3.07 | 12.53 | 44.9 |
| YOLOv8m | 4.10 | 18.36 | 50.2 |
| YOLOv8l | 4.99 | 25.27 | 52.9 |
| YOLOv8x | 5.51 | 25.21 | 53.9 |

| Pair | Spearman ρ | Pearson r |
|---|---|---|
| YAML vs. mAP | 1.000 | 0.994 |
| PT vs. mAP | 1.000 | 0.996 |
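For readers who want to recompute rank and linear correlations from tabulated scores, the sketch below implements Pearson's r and Spearman's ρ with the standard library only. It uses the five rows printed in the model table of this appendix as input. The helper names are introduced here, and `ranks` assumes there are no ties.

```python
import math
from typing import List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equally long sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def ranks(v: Sequence[float]) -> List[float]:
    """1-based ranks in ascending order (no tie handling)."""
    order = sorted(range(len(v)), key=lambda i: v[i])
    out = [0.0] * len(v)
    for rank, idx in enumerate(order, start=1):
        out[idx] = float(rank)
    return out


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho as the Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


# Rows for YOLOv8n, s, m, l, x as printed in the table.
yaml_scores = [2.02, 3.07, 4.10, 4.99, 5.51]
pt_scores = [11.22, 12.53, 18.36, 25.27, 25.21]
map_values = [37.3, 44.9, 50.2, 52.9, 53.9]

for name, scores in (("YAML", yaml_scores), ("PT", pt_scores)):
    print(name, round(spearman(scores, map_values), 3), round(pearson(scores, map_values), 3))
```

Coefficients recomputed from these five rounded rows need not reproduce the reported values exactly. For instance, the PT column as printed ranks YOLOv8l slightly above YOLOv8x, which lowers a rank correlation computed from the printed values alone.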

References

1. Ramachandran, A.; Sangaiah, A.K. A review on object detection in unmanned aerial vehicle surveillance. Int. J. Cogn. Comput. Eng. 2021, 2, 84–97.

2. Du, D.; Zhu, P.; Wen, L.; Bian, X.; Lin, H.; Hu, Q.; Peng, T.; Zheng, J.; Wang, X.; Zhang, Y.; et al. VisDrone-DET2019: The vision meets drone object detection in image challenge results. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Seoul, Republic of Korea, 27–28 October 2019.

3. Wang, P.; Zhao, J. SOD-YOLO: Enhancing YOLO-Based Detection of Small Objects in UAV Imagery. arXiv 2025, arXiv:2507.12727.

4. Zheng, Y.; Jing, Y.; Zhao, J.; Cui, G. LAM-YOLO: Drones-based small object detection on lighting occlusion attention mechanism YOLO. Comput. Vis. Image Underst. 2025, 235, 104489.

5. Lu, L.; He, D.; Liu, C.; Deng, Z. MASF-YOLO: An Improved YOLOv11 Network for Small Object Detection on Drone View. arXiv 2025, arXiv:2504.18136.

6. Huang, M.; Mi, W.; Wang, Y. EDGS-YOLOv8: An Improved YOLOv8 Lightweight UAV Detection Model. Drones 2024, 8, 337.

7. Nguyen, P.T.; Nguyen, G.L.; Bui, D.D. LW-UAV–YOLOv10: A Lightweight Model for Small UAV Detection on Infrared Data Based on YOLOv10. Geomatica 2025, 77, 100049.

8. Gong, J.; Liu, H.; Zhao, L.; Maeda, T.; Cao, J. EEG-Powered UAV Control via Attention Mechanisms. Appl. Sci. 2025, 15, 10714.

9. Liu, S.; Zha, J.; Sun, J.; Li, Z.; Wang, G. EdgeYOLO: An edge-real-time object detector. In Proceedings of the 42nd Chinese Control Conference (CCC), Tianjin, China, 24–26 July 2023; pp. 9130–9135.

10. Chen, D.; Zhang, L. SL-YOLO: A stronger and lighter drone target detection model. arXiv 2024, arXiv:2411.11477.

11. Micheal, A.A.; Micheal, A.; Gopinathan, A.; Barathi, B.U.A. Deep Learning-based Multi-class Object Tracking with Occlusion Handling Mechanism in UAV Videos. Res. Sq. 2024.

12. Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLOv8 (Version 8.0.0). GitHub Repository. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 1 November 2025).

13. Elsken, T.; Metzen, J.H.; Hutter, F. Neural architecture search: A survey. J. Mach. Learn. Res. 2019, 20, 1–21.

14. Real, E.; Aggarwal, A.; Huang, Y.; Le, Q.V. Regularized evolution for image classifier architecture search. Proc. AAAI Conf. Artif. Intell. 2019, 33, 4780–4789.

15. Real, E.; Moore, S.; Selle, A.; Saxena, S.; Suematsu, Y.L.; Tan, J.; Le, Q.V.; Kurakin, A. Large-Scale Evolution of Image Classifiers. In Proceedings of the 34th International Conference on Machine Learning (ICML 2017), Sydney, NSW, Australia, 6–11 August 2017; Volume 70, pp. 2902–2911. Available online: https://proceedings.mlr.press/v70/real17a.html (accessed on 1 November 2025).

16. Zoph, B.; Le, Q. Neural architecture search with reinforcement learning. arXiv 2016, arXiv:1611.01578.

17. Liu, H.; Simonyan, K.; Yang, Y. DARTS: Differentiable architecture search. arXiv 2018, arXiv:1806.09055.

18. Nasir, M.U.; Earle, S.; Togelius, J.; James, S.; Cleghorn, C. LLMatic: Neural architecture search via large language models and quality diversity optimization. In Proceedings of the Genetic and Evolutionary Computation Conference, Melbourne, Australia, 14–18 July 2024.

19. Chen, A.; Dohan, D.; So, D.R. EvoPrompting: Language models for code-level neural architecture search. arXiv 2023, arXiv:2302.14838.

20. Rahman, M.H.; Chakraborty, P. LeMo-NADe: Multi-parameter neural architecture discovery with LLMs. arXiv 2024, arXiv:2402.18443.

21. Zheng, M.; Su, X.; You, S.; Wang, F.; Qian, C.; Xu, C.; Albanie, S. Can GPT-4 perform neural architecture search? arXiv 2023, arXiv:2304.10970.

22. Wu, X.; Wu, S.H.; Wu, J.; Feng, L.; Tan, K.C. Evolutionary computation in the era of large language model: Survey and roadmap. arXiv 2024, arXiv:2401.10034.

23. Slimani, H.; El Mhamdi, J.; Jilbab, A. Deep Learning Structure for Real-time Crop Monitoring Based on Neural Architecture Search and UAV. Braz. Arch. Biol. Technol. 2024, 67, e24231141.

24. Asghari, O.; Ivaki, N.; Madeira, H. UAV Operations Safety Assessment: A Systematic Literature Review. ACM Comput. Surv. 2025, 57, 1–37.

25. Wang, Y.; Li, J.; Yang, X.; Peng, Q. UAV–Ground Vehicle Collaborative Delivery in Emergency Response: A Review of Key Technologies and Future Trends. Appl. Sci. 2025, 15, 9803.

26. Alqudsi, Y.; Makaraci, M. UAV Swarms: Research, Challenges, and Future Directions. J. Eng. Appl. Sci. 2025, 72, 12.

27. Yu, C.; Liu, X.; Wang, Y.; Liu, Y.; Feng, W.; Xiong, D.; Tang, C.; Lv, J. GPT-NAS: Evolutionary Neural Architecture Search with the Generative Pre-Trained Model. J. Latex Cl. Files 2025, 14, 1–11.

28. Morris, C.; Jurado, M.; Zutty, J. LLM Guided Evolution - The Automation of Models Advancing Models. In Proceedings of the Genetic and Evolutionary Computation Conference (GECCO ’24), Melbourne, Australia, 14–18 July 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 377–384.

29. Yu, Y.; Zutty, J. LLM-Guided Evolution: An Autonomous Model Optimization for Object Detection. In Proceedings of the Genetic and Evolutionary Computation Conference Companion (GECCO ’25 Companion), Málaga, Spain, 14–18 July 2025; Association for Computing Machinery: New York, NY, USA, 2025; pp. 2363–2370.

30. Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. arXiv 2024, arXiv:2405.14458.

31. Dong, X.; Yang, Y. NAS-Bench-201: Extending the scope of reproducible neural architecture search. arXiv 2020, arXiv:2001.00326.

32. Lin, M.; Wang, P.; Sun, Z.; Chen, H.; Sun, X.; Qian, Q.; Li, H.; Jin, R. Zen-NAS: A zero-shot NAS for high-performance image recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 347–356.

| Method | Core Paradigm | Tasks | Detection | Dynamic LLM Allocation | Structured Template | Zero-Shot Score |
|---|---|---|---|---|---|---|
| LLMatic [18] | LLM + QD search | Cls. | No | No | No | No |
| EvoPrompting [19] | Evo-prompting | Cls. | No | No | No | No |
| GPT-NAS [27] | GPT + EA-guided NAS | Cls. | No | No | No | No |
| LLM-GE (GECCO’24) [28] | LLM-guided evolution + source-level code edits | Cls. | No | No | No | No |
| LeMo-NADe [20] | Code-level param NAS | Cls. | No | No | Yes | No |
| GENIUS [21] | Agentic LLM NAS | Cls. | No | No | Yes | No |
| LLM-GE (GECCO’25) [29] | LLM-guided evolution + YOLO YAML | Det. | Yes | No | Yes | No |
| PhaseNAS (Ours) | Phase-aware LLM NAS | Cls. + Det. | Yes | Yes | Yes | Yes |

| Variant | Scope | Key Changes | New Blocks |
|---|---|---|---|
| YOLOv8 (baseline) | Official n/s backbones; standard neck/head; multi-scale (P3–P5) | – | No |
| YOLOv8+ (reconfig) | Block-level reconfiguration within backbone/neck | Re-order/tune blocks; keep I/O shapes | No |
| YOLOv8* (extended) | YOLOv8+ with optional modules | SCDown; PSA (toggled per candidate) | Yes |

| Method | Zen-Score | Search Time (min) | CIFAR-10 Acc. (%) | CIFAR-100 Acc. (%) |
|---|---|---|---|---|
| Zen-NAS | 99.20 | 7.43 | 96.72 ± 0.07 | 79.95 ± 0.08 |
| PhaseNAS | 99.43 | 1.20 (−83.9%) | 96.80 ± 0.06 | 80.76 ± 0.07 |
| Zen-NAS | 111.63 | 19.07 | 96.00 ± 0.08 | 80.78 ± 0.07 |
| PhaseNAS | 111.33 | 7.06 (−63.0%) | 96.65 ± 0.05 | 81.22 ± 0.06 |
| Zen-NAS | 121.38 | 67.46 | 96.94 ± 0.06 | 81.24 ± 0.09 |
| PhaseNAS | 121.44 | 9.01 (−86.6%) | 97.33 ± 0.04 | 81.35 ± 0.07 |

| Dataset | Family | Model | mAP@50:95 (mean ± sd) | ΔmAP | Params (M) | GFLOPs |
|---|---|---|---|---|---|---|
| COCO | YOLOv8n | YOLOv8n | 37.30 ± 0.05 | – | 3.2 | 8.7 |
| COCO | YOLOv8n | YOLOv8n+ | 38.02 ± 0.04 | +0.72 (+1.9%) | 3.0 (−6.3%) | 8.2 (−5.7%) |
| COCO | YOLOv8n | YOLOv8n* | 39.08 ± 0.07 | +1.78 (+4.8%) | 2.95 (−7.8%) | 8.5 (−2.3%) |
| COCO | YOLOv8s | YOLOv8s | 44.90 ± 0.08 | – | 11.2 | 28.6 |
| COCO | YOLOv8s | YOLOv8s+ | 45.42 ± 0.05 | +0.52 (+1.1%) | 10.3 (−8.0%) | 25.0 (−12.6%) |
| COCO | YOLOv8s | YOLOv8s* | 46.08 ± 0.09 | +1.18 (+2.6%) | 9.9 (−11.6%) | 22.4 (−21.7%) |
| VisDrone2019 | YOLOv8n | YOLOv8n | 18.50 ± 0.04 | – | 3.2 | 8.7 |
| VisDrone2019 | YOLOv8n | YOLOv8n+ | 18.82 ± 0.05 | +0.32 (+1.7%) | 3.0 (−6.3%) | 8.2 (−5.7%) |
| VisDrone2019 | YOLOv8n | YOLOv8n* | 19.10 ± 0.08 | +0.60 (+3.2%) | 2.95 (−7.8%) | 8.5 (−2.3%) |
| VisDrone2019 | YOLOv8s | YOLOv8s | 22.50 ± 0.07 | – | 11.2 | 28.6 |
| VisDrone2019 | YOLOv8s | YOLOv8s+ | 22.68 ± 0.09 | +0.18 (+0.8%) | 10.3 (−8.0%) | 25.0 (−12.6%) |
| VisDrone2019 | YOLOv8s | YOLOv8s* | 23.38 ± 0.10 | +0.88 (+3.9%) | 9.9 (−11.6%) | 22.4 (−21.7%) |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kong, F.; Shan, X.; Hu, Y.; Li, J. Automated UAV Object Detector Design Using Large Language Model-Guided Architecture Search. Drones 2025, 9, 803. https://doi.org/10.3390/drones9110803
