GPLVINS: Tightly Coupled GNSS-Visual-Inertial Fusion for Consistent State Estimation with Point and Line Features for Unmanned Aerial Vehicles

Highlights

- Proposes GPLVINS, a UAV state estimator that extends GVINS into a tightly coupled GNSS-visual-inertial nonlinear optimization framework using both point and line features. The added line features compensate for the scarcity of point features that limits traditional point-based VIO in texture-sparse environments, and they stabilize 6-DoF pose estimation.

- Modifies the standard LSD line feature extraction algorithm: non-maximum suppression and a length threshold filter out short line segments, which reduces computational cost. Line reprojection residuals are then integrated into the optimization, further improving positioning accuracy.

- Compares GPLVINS with GVINS, PL-VIO, and VINS-Fusion in indoor, outdoor, and indoor-outdoor transition scenarios, where GPLVINS demonstrates superior positioning performance. The system handles drastic lighting changes, loss of GNSS signals, and feature degradation, making it better suited to the practical operational requirements of UAVs.

- Offers a more reliable state estimation scheme for UAV autonomous navigation. In GNSS-constrained scenarios or scenarios with sparse visual features in particular, line features supply additional environmental constraints and reduce the risk of pose drift. This lays a foundation for later extensions to stereo vision and to larger-scale textureless scenarios.
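The line reprojection residual mentioned in the highlights can be illustrated with the endpoint-to-line distance form used in PL-VIO. This is a sketch under assumptions: this excerpt does not state the exact residual used by GPLVINS, and the symbols $\mathbf{l}$, $\mathbf{s}$, $\mathbf{e}$ are introduced here for illustration only. $\mathbf{l} = [l_1, l_2, l_3]^{\top}$ denotes the 3D line projected onto the normalized image plane, and $\mathbf{s}$, $\mathbf{e}$ are the homogeneous coordinates of the two endpoints of the detected segment.

```latex
\mathbf{r}_{l} =
\begin{bmatrix}
d(\mathbf{s}, \mathbf{l}) \\
d(\mathbf{e}, \mathbf{l})
\end{bmatrix},
\qquad
d(\mathbf{p}, \mathbf{l}) = \frac{\mathbf{p}^{\top}\mathbf{l}}{\sqrt{l_{1}^{2} + l_{2}^{2}}}
```

The residual vanishes when both detected endpoints lie on the projected line, so it constrains the line's position and orientation without requiring the endpoints themselves to be matched across frames.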

Abstract

1. Introduction

- Based on GVINS, GPLVINS incorporates line constraints to enhance the localization performance and robustness of the system in low-texture environments, effectively addressing the problem of UAV positioning failure in such settings.

- The LSD line feature detection algorithm is modified with an NMS strategy that filters out short line segments. This reduces line detection time substantially and keeps the system within the real-time requirements of UAV applications.

- Experimental datasets were collected with a self-developed UAV and used, together with open-source datasets, to validate the performance of GPLVINS. Experiments demonstrate that GPLVINS achieves superior positioning in indoor, outdoor, and transition scenarios, with enhanced robustness under feature degradation and lighting changes.
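The short-segment filtering described above can be sketched as a post-processing step on detected segments. This is a minimal illustration, not the authors' implementation: the function names, the thresholds (`min_length`, `angle_tol_deg`, `dist_tol`), and the suppression criterion (a shorter segment is dropped when it is nearly parallel to, and close to, a longer segment already kept) are all assumptions, since this excerpt does not give the exact rule.

```python
import math

def seg_length(s):
    x1, y1, x2, y2 = s
    return math.hypot(x2 - x1, y2 - y1)

def seg_angle(s):
    # Undirected orientation in [0, pi).
    x1, y1, x2, y2 = s
    return math.atan2(y2 - y1, x2 - x1) % math.pi

def point_to_segment_distance(px, py, s):
    x1, y1, x2, y2 = s
    dx, dy = x2 - x1, y2 - y1
    denom = dx * dx + dy * dy
    if denom == 0.0:  # degenerate segment: fall back to point distance
        return math.hypot(px - x1, py - y1)
    # Clamp the projection parameter so the closest point stays on the segment.
    t = max(0.0, min(1.0, ((px - x1) * dx + (py - y1) * dy) / denom))
    return math.hypot(px - (x1 + t * dx), py - (y1 + t * dy))

def filter_segments(segments, min_length=30.0, angle_tol_deg=5.0, dist_tol=5.0):
    """Length threshold followed by a greedy NMS (hypothetical criterion)."""
    # Step 1: length threshold screening.
    candidates = [s for s in segments if seg_length(s) >= min_length]
    # Step 2: greedy NMS, longest segments first.
    candidates.sort(key=seg_length, reverse=True)
    angle_tol = math.radians(angle_tol_deg)
    kept = []
    for s in candidates:
        mx, my = (s[0] + s[2]) / 2.0, (s[1] + s[3]) / 2.0
        suppressed = False
        for k in kept:
            d_angle = abs(seg_angle(s) - seg_angle(k))
            d_angle = min(d_angle, math.pi - d_angle)  # wrap around pi
            if d_angle <= angle_tol and point_to_segment_distance(mx, my, k) <= dist_tol:
                suppressed = True
                break
        if not suppressed:
            kept.append(s)
    return kept

segments = [
    (0, 0, 100, 0),   # long horizontal segment
    (10, 2, 90, 2),   # near-duplicate of the first
    (0, 0, 5, 0),     # too short
    (0, 50, 0, 150),  # long vertical segment
]
kept = filter_segments(segments)
print(kept)
```

Fewer surviving segments mean fewer descriptors to compute and match, which is where the reduction in line-processing time would come from in a pipeline of this kind.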

2. Related Work

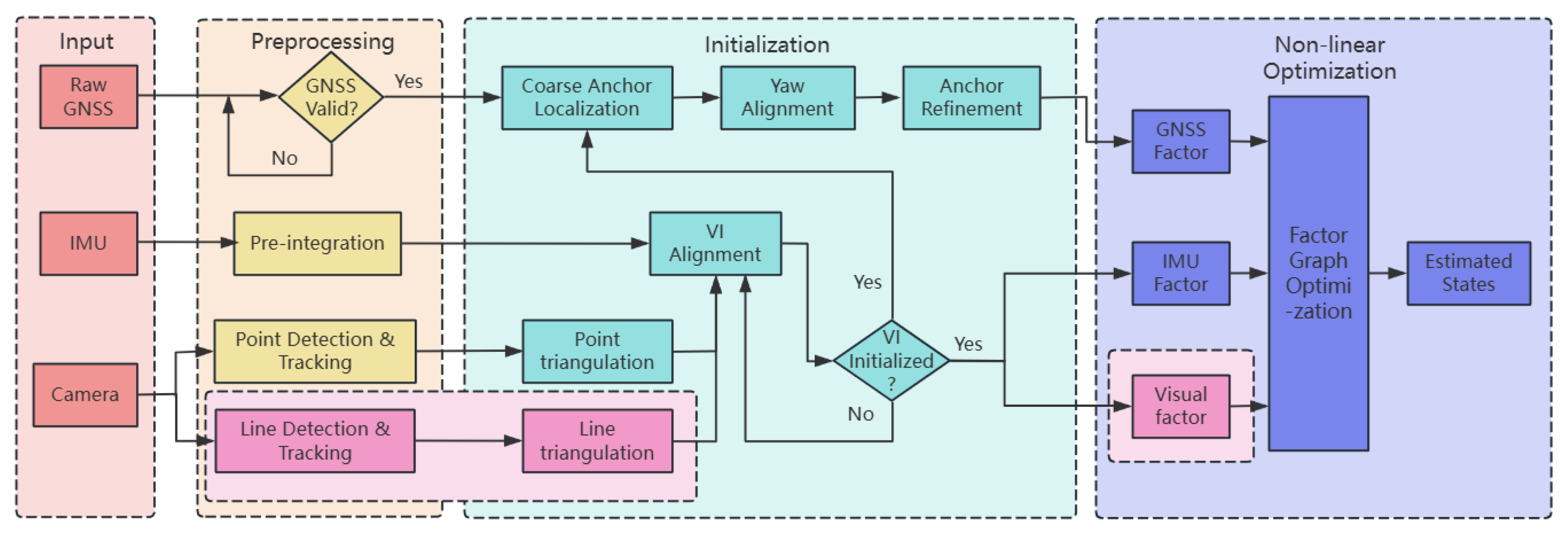

3. Method

3.1. MAP Estimation

3.2. Data Preprocessing

3.2.1. Preprocessing of Raw GNSS Data

3.2.2. IMU Data Preprocessing

3.2.3. Image Data Preprocessing

3.3. System Initialization

3.3.1. Feature Triangulation

3.3.2. Visual-Inertial Alignment

3.3.3. GNSS Initialization

3.4. Nonlinear Optimization

3.4.1. IMU Factor

3.4.2. Visual Factor

3.4.3. GNSS Factor

4. Experiment

4.1. Performance Validation Experiments for the Modified LSD

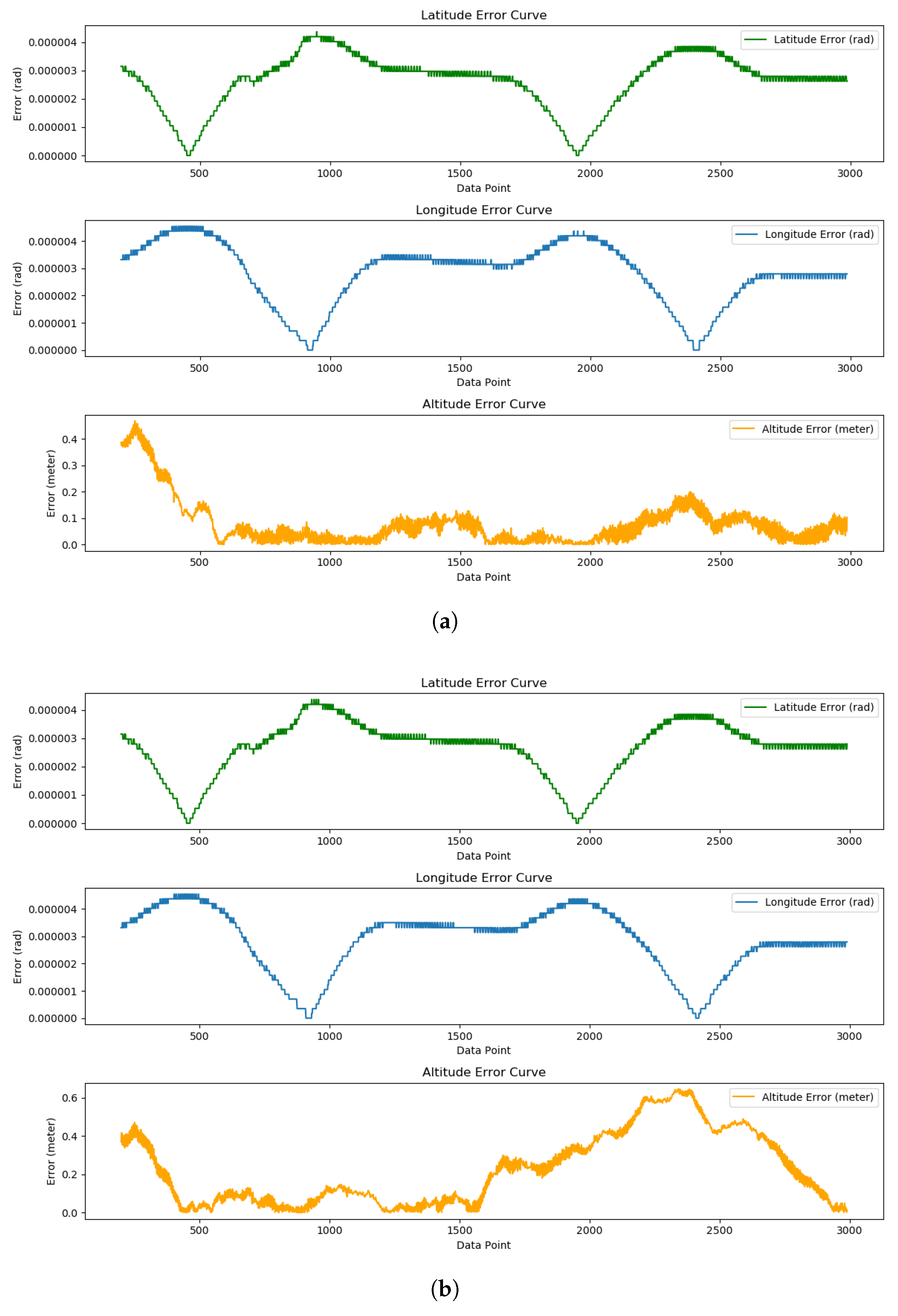

4.2. Experimental Evaluation of Outdoor Scenarios

4.3. Experimental Evaluation of Indoor and Outdoor Scenarios

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Qin, T.; Li, P.; Shen, S. VINS-Mono: A Robust and Versatile Monocular Visual-Inertial State Estimator. IEEE Trans. Robot. 2018, 34, 1004–1020.

- Cao, S.; Lu, X.; Shen, S. GVINS: Tightly Coupled GNSS–Visual–Inertial Fusion for Smooth and Consistent State Estimation. IEEE Trans. Robot. 2022, 38, 2004–2021.

- von Gioi, R.G.; Jakubowicz, J.; Morel, J.-M.; Randall, G. LSD: A Fast Line Segment Detector with a False Detection Control. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 722–732.

- Geneva, P.; Eckenhoff, K.; Lee, W.; Yang, Y.; Huang, G. OpenVINS: A Research Platform for Visual-Inertial Estimation. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 4666–4672.

- Yang, Y.; Geneva, P.; Eckenhoff, K.; Huang, G. Visual-Inertial Odometry with Point and Line Features. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 2447–2454.

- Bai, Y.; Yang, F.; Liu, T.; Zhang, J.; Hu, X.; Wang, Y. Research on Lidar Vision Data Fusion Algorithm Based on Improved ORB-SLAM2. In Proceedings of the 2025 4th International Conference on Artificial Intelligence, Internet of Things and Cloud Computing Technology (AIoTC), Guilin, China, 8–10 August 2025; pp. 174–179.

- Leutenegger, S.; Lynen, S.; Bosse, M.; Siegwart, R.; Furgale, P. Keyframe-Based Visual-Inertial Odometry Using Nonlinear Optimization. Int. J. Robot. Res. 2014, 34, 314–334.

- Duan, C.; Liu, R.; Li, N.; Li, S.; Tang, Q.; Dai, Z.; Zhu, X. Tightly Coupled RTK-Visual-Inertial Integration with a Novel Sliding Ambiguity Window Optimization Framework. IEEE Trans. Intell. Transp. Syst. 2025, early access.

- Shi, J. Good Features to Track. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 21–23 June 1994; pp. 593–600.

- Stumberg, L.V.; Usenko, V.; Cremers, D. Direct Sparse Visual-Inertial Odometry Using Dynamic Marginalization. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 2510–2517.

- Xia, C.; Li, X.; Li, S.; Zhou, Y. Invariant-EKF-Based GNSS/INS/Vision Integration with High Convergence and Accuracy. IEEE/ASME Trans. Mechatron. 2024, early access.

- He, Y.; Zhao, J.; Guo, Y.; He, W.; Yuan, K. PL-VIO: Tightly-Coupled Monocular Visual-Inertial Odometry Using Point and Line Features. Sensors 2018, 18, 1159.

- Wen, H.; Tian, J.; Li, D. PLS-VIO: Stereo Vision-inertial Odometry Based on Point and Line Features. In Proceedings of the 2020 International Conference on High Performance Big Data and Intelligent Systems, Shenzhen, China, 23–23 May 2020; pp. 1–7.

- Angelino, C.V.; Baraniello, V.R.; Cicala, L. UAV Position and Attitude Estimation Using IMU, GNSS and Camera. In Proceedings of the 2012 15th International Conference on Information Fusion, Singapore, 9–12 July 2012; pp. 735–742.

- Baker, S.; Matthews, I. Lucas-Kanade 20 Years On: A Unifying Framework. Int. J. Comput. Vis. 2004, 56, 221–255.

- Kaehler, A.; Bradski, G. Learning OpenCV 3: Computer Vision in C++ with the OpenCV Library; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2016.

- Zhang, L.; Koch, R. An Efficient and Robust Line Segment Matching Approach Based on LBD Descriptor and Pairwise Geometric Consistency. J. Vis. Commun. Image Represent. 2013, 24, 794–805.

| Metric | GPLVINS | PL-VIO |
|---|---|---|
| Point Feature Detection and Tracking (ms) | 12 | 12 |
| Line Feature Detection, LSD (ms) | 16 (modified) | 65 |
| Line Feature Tracking (ms) | 9 | 15 |
| Output Frequency of State Estimation Results (Hz) | 10 | 5 |
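The timing figures above can be combined into a per-frame front-end total and a line-detection speedup. The sketch below only does that arithmetic. It assumes the three stages run sequentially on one thread, which this excerpt does not state, and the dictionary keys and function names are illustrative.

```python
# Per-frame timings in milliseconds, copied from the table above.
timings_ms = {
    "GPLVINS": {"point": 12, "line_detect": 16, "line_track": 9},
    "PL-VIO": {"point": 12, "line_detect": 65, "line_track": 15},
}
# Reported output frequency of state estimation results, in Hz.
output_hz = {"GPLVINS": 10, "PL-VIO": 5}

def front_end_total_ms(stages):
    # Assumption: stages run back to back, so their times add.
    return sum(stages.values())

def output_period_ms(rate_hz):
    return 1000.0 / rate_hz

totals = {name: front_end_total_ms(s) for name, s in timings_ms.items()}
lsd_speedup = timings_ms["PL-VIO"]["line_detect"] / timings_ms["GPLVINS"]["line_detect"]

for name, total in totals.items():
    period = output_period_ms(output_hz[name])
    print(f"{name}: {total} ms front end, {period:.0f} ms output period")
print(f"Line detection speedup: {lsd_speedup:.2f}x")
```

Under the sequential assumption, the GPLVINS front end takes 37 ms per frame against 92 ms for PL-VIO, and the modified detector accounts for most of that difference.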

| Evaluation Data Type | GPLVINS | GVINS |
|---|---|---|
| Latitude/° | | |
| Longitude/° | | |
| Altitude/m | 0.117652 | 0.292413 |

| RMSE Type | t-Statistic | p-Value | Significance (α = 0.05) |
|---|---|---|---|
| Latitude RMSE | 4.129 | | Significant () |
| Longitude RMSE | 8.856 | | Highly Significant () |
| Altitude RMSE | 41.63 | | Extremely Significant () |

| Dataset Name | GPLVINS | GVINS | PL-VIO | VINS-Fusion |
|---|---|---|---|---|
| Conference Room | 2.277% | 4.054% | 2.871% | 3.184% |
| Parking Lot | 2.263% | 6.172% | 7.264% | - |
| Subway Station | 3.103% | 4.537% | 7.143% | - |
| MH-02-easy | 0.248% | 0.471% | 0.285% | 0.264% |
| MH-03-medium | 0.201% | 0.238% | 0.203% | 0.188% |
| MH-05-difficult | 0.339% | 0.651% | 0.471% | 0.529% |
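The per-dataset figures above can be summarized as a relative error reduction against a baseline and as the best-scoring method per dataset. The sketch below does only this arithmetic on the tabulated values. It assumes that a lower percentage is better, since this excerpt does not define the metric, and it omits entries marked "-". The helper names are illustrative.

```python
# Error percentages copied from the table above; "-" entries are omitted.
errors_pct = {
    "Conference Room": {"GPLVINS": 2.277, "GVINS": 4.054, "PL-VIO": 2.871, "VINS-Fusion": 3.184},
    "Parking Lot": {"GPLVINS": 2.263, "GVINS": 6.172, "PL-VIO": 7.264},
    "Subway Station": {"GPLVINS": 3.103, "GVINS": 4.537, "PL-VIO": 7.143},
    "MH-02-easy": {"GPLVINS": 0.248, "GVINS": 0.471, "PL-VIO": 0.285, "VINS-Fusion": 0.264},
    "MH-03-medium": {"GPLVINS": 0.201, "GVINS": 0.238, "PL-VIO": 0.203, "VINS-Fusion": 0.188},
    "MH-05-difficult": {"GPLVINS": 0.339, "GVINS": 0.651, "PL-VIO": 0.471, "VINS-Fusion": 0.529},
}

def reduction_vs(row, baseline, method="GPLVINS"):
    """Relative error reduction of `method` over `baseline`, in percent."""
    return 100.0 * (row[baseline] - row[method]) / row[baseline]

def best_method(row):
    """Method with the lowest error (assumes lower is better)."""
    return min(row, key=row.get)

for dataset, row in errors_pct.items():
    gain = reduction_vs(row, "GVINS")
    print(f"{dataset}: {gain:.1f}% lower than GVINS, best = {best_method(row)}")
```

On these numbers GPLVINS has the lowest value on five of the six datasets; on MH-03-medium, VINS-Fusion is lower (0.188% against 0.201%).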

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, X.; Li, S.; Lu, R.; Zhu, X. GPLVINS: Tightly Coupled GNSS-Visual-Inertial Fusion for Consistent State Estimation with Point and Line Features for Unmanned Aerial Vehicles. Drones 2025, 9, 801. https://doi.org/10.3390/drones9110801
