1. Introduction

With the development of technology, unmanned aerial vehicles (UAVs or drones) are becoming an integral part of various fields of activity, including logistics, environmental monitoring, and search and rescue operations. However, their uncontrolled use creates significant security threats, including illegal surveillance, smuggling, terrorist attacks, and cyber sabotage. The development of effective methods for detecting and classifying UAVs is therefore one of the key tasks of modern security systems. The main goal of such systems is the timely detection and neutralization of drone-related threats, especially near critical infrastructure facilities such as airports, power plants, military bases, government agencies, and industrial enterprises [1,2]. The penetration of UAVs into protected areas may result in leaks of confidential information, disruption of strategically important facilities, and even human casualties. In addition, UAV detection systems play an important role in enforcing legislation that governs the use of airspace, especially in restricted-access areas. Consequently, there is a growing need for technologies that ensure highly accurate and reliable drone detection in real time. Existing UAV detection and classification technologies are based on various physical principles, each with its own characteristics and limitations. Hybrid models (sensor fusion), which integrate data from several sources, are actively being developed to improve accuracy and reliability. However, individual technologies, such as radar, radio frequency (RF), acoustic, and optical–electronic methods, also demonstrate high efficiency in UAV detection and classification.

Radar systems detect UAVs from the reflection of radio waves, using Doppler processing, aperture synthesis, and radar cross-section (RCS) analysis [3]. Different frequency bands, including the L-, S-, C-, X-, Ka-, and W-bands, provide different levels of detection accuracy and range. Signal modulation schemes include FMCW (Frequency-Modulated Continuous Wave), LFPM (Linear Frequency Phase Modulation), FSK (Frequency Shift Keying), LFM (Linear Frequency Modulation), and SFM (Step Frequency Modulation). One of the key approaches in radar detection is the analysis of micro-Doppler signatures, which reveal characteristic features of UAV movement such as propeller rotation [4,5,6]. Deep neural networks (DNNs), particularly convolutional neural networks (CNNs), are used for classification: they analyze spectrograms of radar signals to distinguish object types. For example, ResNet-SP models achieve a classification accuracy of up to 98.97% by analyzing micro-Doppler effects. However, radar methods may struggle in urban conditions and when detecting slow-moving or hovering objects. RCS analysis methods are also used to classify UAVs based on their radar reflectivity; for example, statistical model-selection criteria such as AIC (Akaike Information Criterion) and BIC (Bayesian Information Criterion) achieve an accuracy of up to 97.73% at a signal-to-noise ratio (SNR) of 10 dB [7]. Systems based on bistatic radars operating at 5G frequencies [8] are also being developed; they detect UAVs with an accuracy of up to 100% and localize them with an accuracy of 75%.
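The spectrogram inputs mentioned above can be illustrated with a short sketch. The following Python/NumPy code simulates a toy radar return made of a constant body Doppler line plus a sinusoidally phase-modulated rotor component and converts it into a magnitude spectrogram with a Hann-windowed short-time Fourier transform. The signal model, the function names, and all parameter values are illustrative assumptions, not settings taken from refs. [4,5,6].

```python
import numpy as np


def stft_spectrogram(x, win_len=128, hop=32):
    """Magnitude spectrogram in dB of a complex baseband signal (Hann-windowed STFT)."""
    window = np.hanning(win_len)
    n_frames = 1 + (len(x) - win_len) // hop
    frames = np.stack([x[i * hop:i * hop + win_len] * window for i in range(n_frames)])
    spec = np.fft.fftshift(np.fft.fft(frames, axis=1), axes=1)  # zero Doppler at the centre column
    return 20.0 * np.log10(np.abs(spec) + 1e-12)                 # shape: (n_frames, win_len)


def simulate_rotor_echo(fs=4000.0, duration=1.0, f_body=200.0, f_rot=40.0, beta=30.0):
    """Toy echo: body Doppler tone plus a blade return whose phase oscillates at the
    rotation rate f_rot (peak micro-Doppler deviation = beta * f_rot = 1200 Hz here)."""
    t = np.arange(int(fs * duration)) / fs
    body = np.exp(2j * np.pi * f_body * t)
    blade = 0.5 * np.exp(1j * beta * np.sin(2 * np.pi * f_rot * t))
    return body + blade


spec = stft_spectrogram(simulate_rotor_echo())
# Columns are Doppler bins of width fs / win_len = 31.25 Hz; the 200 Hz body line sits near column 70.
body_bin = int(np.argmax(spec.mean(axis=0)))
```

Each row of `spec` is one time slice; rendered as an image, the periodic blade sidebands around the body line form the kind of micro-Doppler pattern that CNN classifiers learn from. A real pipeline would use measured I/Q data and tuned window parameters.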

Radio frequency methods analyze UAV control signals and include passive RF sensing, compressive sensing, and RF fingerprinting [9,10]. These methods can not only detect a drone but also locate its operator. Passive RF sensing based on signal preamble analysis reduces computational costs and achieves a detection accuracy of up to 99.94% at distances of up to 150 m. However, the effectiveness of these methods decreases when drones use encrypted or non-standard communication channels. Machine learning methods such as XGBoost [11] and multi-channel deep neural networks (MC-DNNs) combined with feature generation (FEG) are used to improve detection accuracy; for example, MC-DNN-based systems achieve an accuracy of up to 98.4% by analyzing unique RF signatures [9]. Deep learning algorithms such as CNNs are also used to classify RF signals, reaching an accuracy of up to 99% even at low SNR (0 dB).
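Preamble-based passive RF detection can be sketched as a matched filter: the receiver slides a known preamble over the captured samples and looks for a normalized correlation peak. The NumPy code below is a minimal illustration under simplifying assumptions (real-valued ±1 symbols, additive Gaussian noise, a hypothetical 64-symbol preamble); it is not the detector used in the cited works.

```python
import numpy as np


def detect_preamble(rx, preamble):
    """Return (offset, score) of the best match of a known preamble in a received signal.

    The score is the correlation normalized by the energy of both the preamble and the
    received window, so it lies in [0, 1] and is insensitive to the received power."""
    corr = np.abs(np.correlate(rx, preamble, mode="valid"))
    # Energy of every received window of len(preamble) samples (aligned with `corr`).
    window_energy = np.convolve(np.abs(rx) ** 2, np.ones(len(preamble)), mode="valid")
    score = corr / (np.sqrt(window_energy) * np.linalg.norm(preamble) + 1e-12)
    offset = int(np.argmax(score))
    return offset, float(score[offset])


# Example: a random +/-1 preamble buried in noise starting at sample 300.
rng = np.random.default_rng(0)
preamble = rng.choice([-1.0, 1.0], size=64)
rx = 0.5 * rng.standard_normal(1000)
rx[300:364] += preamble
offset, score = detect_preamble(rx, preamble)
```

Normalizing by the window energy keeps a strong noise burst from producing a false peak simply because it is loud.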

Acoustic methods are based on the analysis of sound waves generated by UAV motors and propellers [12,13,14]. These systems use spectral analysis and the identification of characteristic sound patterns to detect and classify drones. Deep neural networks such as CNNs and transformers are used to classify acoustic signatures; for example, CNN-based systems achieve an accuracy of up to 96.3% at distances of up to 500 m [13]. However, acoustic methods are limited under high background noise and at long distances. To improve efficiency, signal processing techniques such as sound intensity analysis and spectral analysis are used to determine the direction of the noise source. Machine learning-based systems have also demonstrated an accuracy of up to 99% in detecting UAVs from their acoustic characteristics.
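As an illustration of the spectral analysis step, the sketch below estimates the dominant tonal component of an audio frame, which for a multirotor is typically related to the blade-passing frequency. It is a minimal NumPy example on a synthetic signal; the 180 Hz tone, its harmonic, and the noise level are hypothetical values chosen for demonstration.

```python
import numpy as np


def dominant_frequency(signal, fs):
    """Frequency (Hz) of the strongest spectral peak of a real signal, ignoring DC."""
    windowed = signal * np.hanning(len(signal))     # Hann window reduces spectral leakage
    spectrum = np.abs(np.fft.rfft(windowed))
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    spectrum[0] = 0.0                               # suppress the DC component
    return float(freqs[int(np.argmax(spectrum))])


# Synthetic rotor hum: a hypothetical 180 Hz tone, its 360 Hz harmonic, and white noise.
fs = 8000
t = np.arange(fs) / fs                              # 1 s of audio -> 1 Hz frequency resolution
rng = np.random.default_rng(1)
hum = np.sin(2 * np.pi * 180 * t) + 0.5 * np.sin(2 * np.pi * 360 * t) + 0.3 * rng.standard_normal(fs)
f_peak = dominant_frequency(hum, fs)
```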

Despite significant progress in UAV detection systems based on the above technologies, improving optical–electronic channels remains one of the key tasks [15,16,17]. The main difficulty is that UAVs are often small objects that are hard to detect against complex backgrounds, especially at long distances or in poor visibility. In addition, their visual characteristics, such as shape, size, and trajectory, can resemble those of birds, which significantly complicates classification and increases the number of false positives. Solving this problem requires more advanced image processing algorithms capable of accurately detecting and classifying small objects even against highly complex backgrounds. In this context, modern computer vision methods such as YOLO (You Only Look Once) and DETR (Detection Transformer) are widely used for object detection. These algorithms differ in architecture and detection approach, and, most importantly, they outperform earlier detectors such as SSD and Faster R-CNN in both accuracy and FPS. YOLO uses a one-stage detection method, which ensures high processing speed, while RT-DETR (Real-Time DETR) relies on transformers and attention mechanisms, allowing more effective analysis of complex scenes with many objects. However, starting with the tenth version, the YOLO family has surpassed the latest version of RT-DETR in accuracy metrics and FPS, which makes it more suitable for practical detection and classification problems. In this paper, an improved model named YOLOv12-ADBC (Advanced for Drone and Bird Classification) was developed based on the YOLOv12m architecture. The model features an enhanced neck module incorporating a hierarchical multi-scale feature integration mechanism with adaptive spatial weighting, which increases the sensitivity of the network to visually similar objects such as drones and birds. This architectural refinement improves the accuracy and robustness of classification, especially in cluttered or noisy visual environments. As a result, it increases the reliability of the optical–electronic channel in Sensor Fusion-based airspace monitoring systems. To achieve these objectives, the following tasks were carried out:

- A review of related works was conducted;
- A dataset containing the classes “Drone” and “Bird” was created;
- Data augmentation was applied, and YOLO family models (v8–v12) were trained, validated, and tested;
- RT-DETR-L was included as a representative transformer baseline to contextualize the gains of recent YOLO models by jointly analyzing accuracy (precision/recall, mAP50, mAP50–95) and efficiency (FPS) under a shared evaluation protocol;
- The results were analyzed to identify the most effective architecture for drone and bird classification;
- An improved YOLOv12-ADBC model was developed by integrating an adaptive hierarchical feature aggregation mechanism into the neck of YOLOv12m;
- The YOLOv12-ADBC model was trained and evaluated on the same dataset with the same performance metrics;
- An ablation and like-for-like comparison of YOLOv12-ADBC was conducted under different training recipes, quantifying their impact on precision, recall, mAP50, and mAP50–95;
- Three video sequences were prepared for inference testing under realistic monitoring scenarios;
- Practical recommendations were provided for integrating the optimal model into an existing Sensor Fusion architecture.

The novelty of this study lies in the first systematic comparative analysis of YOLOv8–YOLOv12 models on a dedicated “Drone vs. Bird” dataset and in the development of the enhanced YOLOv12-ADBC architecture. By integrating an Adaptive Hierarchical Feature Integration module into the neck, the model achieves improved feature fusion and a better ability to distinguish drones from visually similar birds, making it suitable for integration into Sensor Fusion systems. Distinguishing drones from birds in optical–electronic channels is complicated by the small angular size of the objects, scale variations, low contrast against the sky, partial occlusions, motion blur, and adverse weather, all of which increase the likelihood of false positives. Existing methods fall roughly into two groups. The first comprises CNN-based approaches of the YOLO family, which use multiscale aggregation and attention mechanisms to preserve the features of small objects and suppress background noise. The second comprises real-time transformer detectors, such as RT-DETR-L, which offer an alternative mechanism for cross-scale context modeling. In this paper, YOLOv12-ADBC is compared with both groups to test whether hierarchical multiscale feature integration in the model neck improves the ability to distinguish visually similar UAVs and birds under realistic conditions.

2. Related Works

Numerous recent studies have applied YOLO architectures (versions v5–v11) to UAV detection and classification in video streams. These methods recognize UAVs and other aerial objects efficiently in real time owing to a balanced combination of accuracy, processing speed, and model compactness. This section briefly reviews the latest studies that develop YOLO-based visual detectors (versions 5–11) for detecting and classifying UAVs as aerial targets, with particular emphasis on the reported accuracy and FPS metrics.

In ref. [18], the authors described TGC-YOLOv5, a model modified with a dual attention mechanism (GAM and Coordinate Attention) and with transformer blocks introduced into the architecture. Owing to improved feature aggregation, the model became more sensitive to small objects in complex shooting conditions. Experiments showed a mean average precision (mAP) of ≈94.2% on a sample of drone images, making it effective for real-time use. The articles [19,20] describe DB-YOLOv5, a modified version of YOLOv5 with a dual backbone aimed at improving feature representation in scenes with urban backgrounds. The model was trained on specialized samples covering different UAV viewing angles and lighting conditions. As a result, a stable accuracy of mAP ≈ 94.8% was achieved, which makes this model applicable in video surveillance.

In refs. [21,22,23,24], the authors developed modified versions of the YOLOv7 visual detector. In ref. [21], the YOLOv7-UAV model is adapted to detect UAVs against backgrounds of sky, urban architecture, and trees. Its architecture includes a modified neck structure that improves the detection of low-visibility objects. The model showed high metrics: P = 95.3%, R = 90.4%, and mAP@0.5 = 93.8%. In ref. [22], attention modules were introduced into the neck and head of the YOLOv7 detector. The model was trained on a specially prepared set of images of different UAV types. The achieved results, P = 96.2%, R = 91.5%, and mAP ≈ 94.5%, indicate its reliability in real time. The F-YOLOv7 model presented in ref. [23] was developed as a lightweight version for accelerated execution on resource-limited devices. It retained a high accuracy of mAP ≈ 94.2% despite the reduced number of parameters and ran at up to 128 FPS, which makes it especially valuable for mobile monitoring systems. In ref. [24], YOLOv7 is combined with a rule-based tracker to detect and track drones in a video stream. The model copes successfully with UAV classification in complex scenes with intersecting trajectories and camera movement; the accuracy was 95.09% and the recall was 96.56%, demonstrating stable tracking.

Visual detectors for UAV detection and classification based on the YOLOv9, YOLOv10, and YOLOv11 models are presented in refs. [25,26,27]. The YOLOv9 model described in ref. [25] was implemented with the Programmable Gradient Information (PGI) module and tested on the Jetson Nano platform. Training was performed on a combined dataset of over 9000 drone images taken from different viewing angles. The model achieved mAP50 = 95.7%, precision = 94.7%, recall = 86.4%, F1 = 0.903, and FPS ≈ 24, demonstrating a balance between accuracy and efficiency. BRA-YOLOv10, considered in ref. [26], implements a two-layer attention mechanism and an additional output layer for small objects. It was trained on the SIDD small aerial vehicle dataset and achieved P = 98.9%, R = 92.3%, mAP50 = 96.5%, and FPS = 118, making it one of the best models in terms of aggregate metrics. YOLOv11, described in ref. [27], is a lightweight architecture with an adaptive CCFM neck and an HWD module. It was trained on the Anti-UAV dataset, which covers drones of various types under different lighting conditions, and achieved precision = 96.9%, recall = 89.8%, mAP50 = 95.2%, and FPS ≈ 91.

Table 1 presents the comparative characteristics of visual detectors for UAV detection and classification based on the adaptation of neural networks of the YOLO family. The results of the training, testing and experimental studies on these models are presented from papers published no earlier than 2023 in order to demonstrate the latest achievements in this field.

In refs. [28,29,30,31,32], the authors developed UAV detection and classification models based on YOLOv8. The DRBD-YOLOv8 model proposed in ref. [28] introduces lightweight architectural optimizations, including depthwise separable convolutions and an enhanced RCELAN neck with BiFPN feature fusion, enabling efficient multiscale UAV detection. A novel DN-ShapeIoU loss function improves bounding-box regression accuracy for small aerial targets. Trained on the DUT Anti-UAV dataset, the model achieves precision = 94.6%, recall = 92%, mAP50 ≈ 95.1%, and mAP50–95 = 55.4%, providing a compact yet high-performance solution suitable for real-time anti-UAV applications.

In ref. [29], YOLOv8 was deployed with NVIDIA DeepStream, which allowed it to be tested under limited computing power. The architecture was adapted to 720p video streams at 30 FPS. The achieved accuracy was mAP50 = 96.2% and F1 = 0.91, with a stable speed of ≈42 FPS.

Alongside YOLO and RT-DETR, other families of detectors such as Faster R-CNN and SSD have also been widely explored for UAV detection tasks. These models demonstrate high accuracy in object recognition; however, their relatively heavy architectures often result in lower inference speed, which constrains their applicability in real-time aerial monitoring. Transformer-based detectors (e.g., DETR and its variants) have shown strong performance in complex visual scenes, but they typically require large-scale training resources and may be less efficient when deployed on resource-limited platforms. Thus, despite the progress achieved across different approaches, challenges remain in balancing accuracy, robustness, and efficiency for scenarios involving visually similar objects such as drones and birds. This gap provided the motivation for developing the YOLOv12-ADBC architecture in the present study.

3. Materials and Methods

The YOLO (You Only Look Once) series of models is widely used in real-time object detection tasks, demonstrating high performance in both speed and accuracy. With the transition from YOLOv8 to YOLOv12, the architecture of this line has evolved significantly—each iteration introduced structural improvements aimed at eliminating architectural limitations of previous versions and improving recognition quality, especially when working with small objects such as UAVs and birds.

YOLOv8 implemented an anchor-free architecture, eliminating the need for predefined anchor boxes. The model predicts bounding boxes directly relative to grid-cell center points, which increases versatility in detecting objects of various shapes and sizes [33,34]. The decoupled head processes classification and box regression separately, reducing mutual interference between the two tasks and improving detection accuracy. Feature fusion was improved through a Path Aggregation Network (PAN), which efficiently merges activation maps from different levels. These measures increased accuracy in complex scenes while maintaining high inference speed.
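The anchor-free decoding described above can be sketched as follows, assuming the Ultralytics-style convention in which each grid cell predicts distances from its center to the left, top, right, and bottom box edges in units of the feature-map stride. The distribution focal loss (DFL) stage that produces these distances in YOLOv8 is omitted, and the function name is hypothetical.

```python
import numpy as np


def decode_ltrb(distances, grid_xy, stride):
    """Decode anchor-free predictions into xyxy boxes in pixels.

    distances: (N, 4) predicted distances (left, top, right, bottom) in units of stride.
    grid_xy:   (N, 2) integer grid-cell indices (col, row) of each prediction.
    stride:    downsampling factor of the feature map (e.g. 8, 16, 32).
    """
    centers = (grid_xy + 0.5) * stride     # anchor points = cell centers in pixels
    lt = distances[:, :2] * stride         # distances to the left/top edges
    rb = distances[:, 2:] * stride         # distances to the right/bottom edges
    return np.concatenate([centers - lt, centers + rb], axis=1)


# One prediction in cell (col=10, row=4) of a stride-8 feature map.
boxes = decode_ltrb(np.array([[1.0, 0.5, 1.0, 1.5]]), np.array([[10, 4]]), stride=8)
```

Because no anchor shapes are involved, the same head can describe thin, wide, or tiny objects without tuning anchor priors to the dataset.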

The YOLOv9 model focuses on training stability and on improving the transfer of features through a deep network. Its main innovation is Programmable Gradient Information (PGI), a mechanism that prevents gradient decay at great depths through controlled information filtering [35]. Additionally, YOLOv9 uses the GELAN (Generalized Efficient Layer Aggregation Network) architecture, which combines the ideas of ELAN and CSPNet. GELAN provides flexible feature aggregation, improving object representation at different scales. This is critical for recognizing small objects, since their features are preserved along the entire signal path. Experiments have shown gains in mAP and recall over previous versions, especially in tasks with densely distributed targets.

YOLOv10 focuses on optimizing how predictions are matched to ground-truth objects during training. Its consistent dual assignment scheme (one-to-many and one-to-one label assignment) removes the need for Non-Maximum Suppression (NMS) at inference, so predictions are bound to objects without post-processing [36]. The architecture includes spatial–channel decoupled downsampling, which reduces resolution without losing the spatial and channel features critical for detecting small targets. Large-kernel convolutions expand the receptive field, allowing context to be captured without blurring local details. Overall, YOLOv10 demonstrated reliable small-object detection and reduced inference time, which makes it applicable on resource-constrained devices.
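For context, the post-processing that NMS-free designs avoid is sketched below: a minimal, class-agnostic greedy NMS in NumPy. Detectors such as YOLOv8, YOLOv9, and YOLOv11 still apply a step of this kind at inference, whereas the one-to-one head of YOLOv10 and RT-DETR do not require it. The IoU threshold of 0.5 is an illustrative choice.

```python
import numpy as np


def box_area(b):
    """Area of xyxy boxes; works for a single box (4,) or an array (N, 4)."""
    return (b[..., 2] - b[..., 0]) * (b[..., 3] - b[..., 1])


def iou(box, boxes):
    """IoU between one xyxy box and an (N, 4) array of xyxy boxes."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    return inter / (box_area(box) + box_area(boxes) - inter + 1e-9)


def nms(boxes, scores, iou_thr=0.5):
    """Greedy NMS: keep the highest-scoring box, discard boxes that overlap it, repeat."""
    order = np.argsort(scores)[::-1]
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        order = rest[iou(boxes[best], boxes[rest]) < iou_thr]
    return keep


boxes = np.array([[10, 10, 50, 50], [12, 12, 52, 52], [100, 100, 140, 140]], dtype=float)
scores = np.array([0.9, 0.8, 0.7])
kept = nms(boxes, scores)  # the second box overlaps the first (IoU ~ 0.82) and is removed
```

The sequential loop is what adds latency and can wrongly suppress true objects in crowded scenes, which motivates the NMS-free designs discussed here.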

RT-DETR-L is used as a real-time transformer baseline alongside the YOLO family. The architecture couples a convolutional backbone with a lightweight hybrid encoder that processes multi-scale features in two stages: the Attention-based Intra-Scale Feature Interaction (AIFI) module, which applies self-attention within each resolution to refine local structure, and the Cross-Scale Feature-Fusion Module (CCFM), which aggregates information across scales, preserving small-object cues while retaining global context. After encoding, IoU-aware query selection picks a fixed number of informative image tokens as the initial decoder queries; the decoder then iteratively refines these queries and outputs the final bounding boxes and class labels through auxiliary prediction heads [17]. A salient property of RT-DETR is that it needs no NMS post-processing: predictions are matched one-to-one to objects during training, so duplicate detections are suppressed by the transformer itself, which reduces latency and mitigates errors in crowded scenes. The L variant is employed as a compromise between accuracy and speed, delivering competitive mAP on small targets with real-time performance.

YOLOv11 continued this development toward greater spatial sensitivity and model depth. The updated C3k2 convolutional block improved the stability of the gradient flow and made the training of deep layers more robust. SPPF (Spatial Pyramid Pooling-Fast) lets the model extract features at several scales simultaneously, which is especially important for objects of non-uniform size [37]. The C2PSA (Convolutional block with Parallel Spatial Attention) attention mechanism enhances significant spatial features without substantially increasing the computational load. These architectural elements improved YOLOv11’s ability to detect small and partially occluded objects on complex backgrounds. As a result, YOLOv11 achieved high accuracy while maintaining the speed typical of YOLO.
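The pooling core of SPPF can be sketched in NumPy as below. It chains three 5 × 5 max-pooling operations (stride 1, same padding) and concatenates the intermediate results with the input; the 1 × 1 convolutions that surround this core in the real block are omitted, and the function names are illustrative. Chaining works because two successive 5 × 5 pools cover the same window as one 9 × 9 pool (and three cover 13 × 13), which is how SPPF reproduces the multi-scale windows of the original SPP at lower cost.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def max_pool_same(x, k):
    """k x k max pooling with stride 1 and 'same' padding on a float (C, H, W) array."""
    p = k // 2
    padded = np.pad(x, ((0, 0), (p, p), (p, p)), constant_values=-np.inf)
    windows = sliding_window_view(padded, (k, k), axis=(1, 2))  # (C, H, W, k, k)
    return windows.max(axis=(-2, -1))


def sppf_pool(x, k=5):
    """Three chained k x k max pools, concatenated with the input along channels."""
    y1 = max_pool_same(x, k)
    y2 = max_pool_same(y1, k)
    y3 = max_pool_same(y2, k)
    return np.concatenate([x, y1, y2, y3], axis=0)  # (4C, H, W)


x = np.random.default_rng(0).random((2, 16, 16))
out = sppf_pool(x)
```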

The most significant architectural and functional improvements were implemented in YOLOv12 [38]. The model combines attention and residual feature aggregation in a hybrid architecture aimed at the accurate real-time detection and classification of objects of different scales. The central element of YOLOv12 is the Area Attention (A2) module, which applies attention over segments (areas) of the feature map. Unlike classical self-attention, A2 divides the map into M segments and calculates a weighting coefficient αᵢ for each segment. The output map is formed according to Equation (1), where Fᵢ are the features in the i-th segment and αᵢ is the normalized attention weight. This allows adaptive scaling of the attention zone, enhancing sensitivity to small and poorly distinguishable objects, such as distant UAVs and birds or partially occluded targets.
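The per-segment weighting described above can be illustrated with the NumPy sketch below. It is not the Ultralytics A2 implementation: the segment score (mean activation), the softmax normalization, and the reassembly of the re-weighted segments into a map of the original size are assumptions made only to show how normalized weights αᵢ rescale the features Fᵢ of M = 4 horizontal segments.

```python
import numpy as np


def segment_weighting(feat, m=4):
    """Illustrative per-segment weighting on a (C, H, W) feature map.

    The map is split into m horizontal strips F_i; each strip gets a score (its mean
    activation, an assumption), the scores are softmax-normalized into alpha_i, and each
    strip is rescaled by alpha_i before the strips are stacked back together."""
    segments = np.array_split(feat, m, axis=1)
    scores = np.array([s.mean() for s in segments])
    alpha = np.exp(scores - scores.max())   # numerically stable softmax
    alpha /= alpha.sum()
    out = np.concatenate([a * s for a, s in zip(alpha, segments)], axis=1)
    return out, alpha


feat = np.random.default_rng(0).random((8, 32, 32))
out, alpha = segment_weighting(feat)
```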

To increase the influence of small objects on the loss function, YOLOv12 introduces a scale-dependent weight, given in Equation (2), which depends on the object scale (computed from the height and width of both the object and the image), a gain coefficient λ, and a small coefficient tending to zero.

This increases the contribution of small objects to the final loss and improves their detection. In addition, the coordinate localization loss includes weighting by object area (Equation (3)): the localization error of the i-th object is computed from its actual coordinates xᵢ and yᵢ, its predicted coordinates, and its width ωᵢ and height hᵢ.
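The sketch below illustrates how a scale-dependent weight and an area-weighted localization term favor small objects. The specific formulas are assumptions chosen for illustration, not the exact expressions of Equations (2) and (3) or the YOLOv12 code: the relative scale is taken as s = sqrt(w·h / (W·H)), the weight as 1 + λ / (s + ε), and the localization term as the squared center error divided by the box area. All function names and parameter values are hypothetical.

```python
import numpy as np


def scale_weight(w, h, img_w, img_h, lam=0.1, eps=1e-6):
    """Hypothetical scale-dependent weight that grows as the relative object scale shrinks.

    s = sqrt(w*h / (img_w*img_h)) is the relative scale, lam the gain coefficient,
    and eps a small constant that prevents division by zero."""
    s = np.sqrt((w * h) / (img_w * img_h))
    return 1.0 + lam / (s + eps)


def area_weighted_loc_error(xy_true, xy_pred, wh):
    """Hypothetical localization term: squared center error divided by box area (w*h),
    so the same pixel error costs more for a small box than for a large one."""
    sq_err = np.sum((xy_true - xy_pred) ** 2, axis=1)
    return sq_err / (wh[:, 0] * wh[:, 1])


# A 16 x 16 and a 128 x 128 box in a 640 x 640 image, both with a 2-pixel center error.
wh = np.array([[16.0, 16.0], [128.0, 128.0]])
xy_true = np.array([[100.0, 100.0], [300.0, 300.0]])
xy_pred = xy_true + np.array([2.0, 0.0])
weights = scale_weight(wh[:, 0], wh[:, 1], 640, 640)
loss = weights * area_weighted_loc_error(xy_true, xy_pred, wh)
```

With these assumed forms, the small box receives a weight of about 5.0 versus 1.5 for the large one, and its localization term is larger for the same pixel error.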

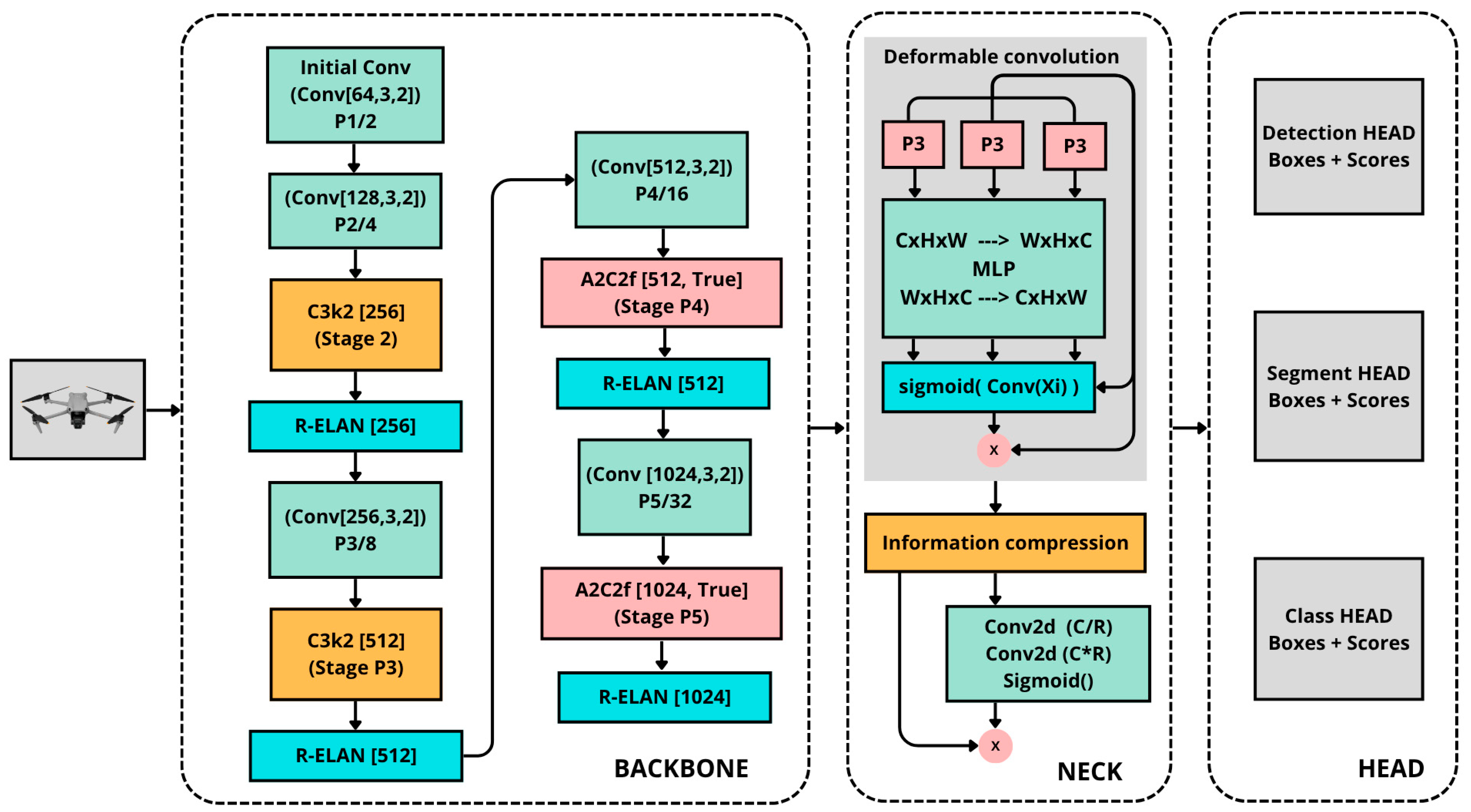

Despite these results, certain applied tasks, such as the classification of visually similar objects like drones and birds, require further improvement of the model’s ability to distinguish structurally similar targets amid visual noise and complex scenes. To address this challenge, an enhanced version of YOLOv12, named YOLOv12-ADBC (Advanced for Drone and Bird Classification), was developed (Figure 1). The key improvement concerns the neck architecture, where traditional feature fusion methods are replaced by the Adaptive Hierarchical Feature Integration Network (AHFIN) module [39]. AHFIN operates in several stages and aims at efficiently fusing features of different scales while taking into account their spatial consistency and relative importance. In the first stage, each input feature tensor Xᵢ from a different level of the hierarchy is processed by a gradient compression block consisting of a dimensional permutation, a multilayer perceptron (MLP), and an inverse permutation. The result is used to form a spatially adaptive weight map Wᵢ that reflects the significance of each feature element; this map is calculated with a 1 × 1 convolution followed by a sigmoid activation (Formula (4)). The original tensor Xᵢ is then weighted element-wise by the mask Wᵢ, which enhances or suppresses local features depending on their significance (Formula (5)). To form an integrated feature map Xfused, all adjusted feature maps are aggregated according to Formula (6). To reduce dimensionality and prevent redundancy, Xfused is processed by a 1 × 1 compressive convolution (Formula (7)).

To improve training stability and gradient propagation in the deep architecture, a gradient compression module is used. It consists of two consecutive convolutional layers with a ReLU activation between them: the first convolution reduces the number of channels, extracting the most significant features, and the second restores the original dimensionality. The output is an attention mask that highlights informative areas of the feature space; applied to the original tensor, it enhances significant channels and suppresses unimportant ones. Integrating AHFIN into YOLOv12-ADBC provides multi-level adaptation and feature fusion, which is critical for distinguishing visually and structurally similar objects, such as UAVs and birds with similar outlines, sizes, and textures. Through spatial feature alignment, stepwise weighting, information and gradient compression, and a focus on key image areas, the model highlights the distinctive features of each class more accurately. This increases sensitivity to subtle differences between UAVs and birds, ensuring reliable classification despite visual noise, complex backgrounds, and large scale variability.
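A forward-only NumPy sketch of the AHFIN data flow described above is shown below. It assumes that the input levels have already been resized to a common resolution and channel count, that the re-weighted maps are aggregated by summation, and that the gradient compression module uses 1 × 1 convolutions followed by a sigmoid; the weights are random, and all names are hypothetical. In the study itself, the module is part of the YOLOv12-ADBC neck trained with PyTorch.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def conv1x1(x, weight):
    """1 x 1 convolution: (C_out, C_in) weight applied to a (C_in, H, W) map."""
    return np.einsum("oc,chw->ohw", weight, x)


def spatial_weight_map(x, mlp_w1, mlp_w2, proj):
    """First stage: permute to channels-last, apply a two-layer MLP over channels,
    permute back, then a 1 x 1 conv to one channel and a sigmoid -> mask in (0, 1)."""
    h = np.transpose(x, (1, 2, 0))               # (H, W, C)
    h = np.maximum(h @ mlp_w1, 0.0) @ mlp_w2     # MLP over the channel axis (ReLU hidden layer)
    h = np.transpose(h, (2, 0, 1))               # back to (C, H, W)
    return sigmoid(conv1x1(h, proj))             # (1, H, W) spatial weight map


def channel_gate(x, w_reduce, w_restore):
    """Gradient compression module: reduce channels, ReLU, restore channels,
    sigmoid (assumed) to obtain a mask, then rescale the input element-wise."""
    mask = sigmoid(conv1x1(np.maximum(conv1x1(x, w_reduce), 0.0), w_restore))
    return x * mask


def ahfin_forward(levels, params):
    """Reweight each level with its spatial mask, sum the results (assumed aggregation),
    compress with a 1 x 1 conv, and apply the channel gate."""
    fused = np.zeros_like(levels[0])
    for x, level_params in zip(levels, params["levels"]):
        fused += spatial_weight_map(x, *level_params) * x
    compressed = conv1x1(fused, params["compress"])
    return channel_gate(compressed, params["reduce"], params["restore"])


# Example with random weights: three levels already resized to (16, 20, 20).
rng = np.random.default_rng(0)
C, HID, C_OUT = 16, 8, 8
params = {
    "levels": [(0.1 * rng.standard_normal((C, HID)), 0.1 * rng.standard_normal((HID, C)),
                0.1 * rng.standard_normal((1, C))) for _ in range(3)],
    "compress": 0.1 * rng.standard_normal((C_OUT, C)),
    "reduce": 0.1 * rng.standard_normal((C_OUT // 2, C_OUT)),
    "restore": 0.1 * rng.standard_normal((C_OUT, C_OUT // 2)),
}
levels = [rng.standard_normal((C, 20, 20)) for _ in range(3)]
out = ahfin_forward(levels, params)
```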

However, for an objective assessment of the effectiveness, it is important to train the previous versions of YOLO described above on a user dataset. In order to identify the most productive model, five neural network architectures of medium complexity available in the Ultralytics repository were selected and tested: YOLOv8m, YOLOv9m, YOLOv10m, RT-DETR-L, YOLOv11m, and YOLOv12m. The YOLOv12-ADBC model is modified from YOLOv12m. The choice of versions with the m (medium) index is justified by the need to achieve a balance between recognition accuracy and computational efficiency, which is especially important when processing video streams with limited hardware resources. Variants with a lower capacity (

s—small) are often inferior in detecting small objects on a complex background, while older models (

l and

x) require significant amounts of video memory and increased inference time, which may be unjustified in the absence of a critical gain in accuracy. A specialized dataset was developed for training, validating, and testing five YOLO models, including two classes of objects, such as UAVs and birds. In total, the dataset contained 7291 images selected in such a way as to simulate complex recognition scenarios: the presence of small objects at a distance, a variety of backgrounds (sky, forest, buildings), and different illumination and viewing angles (

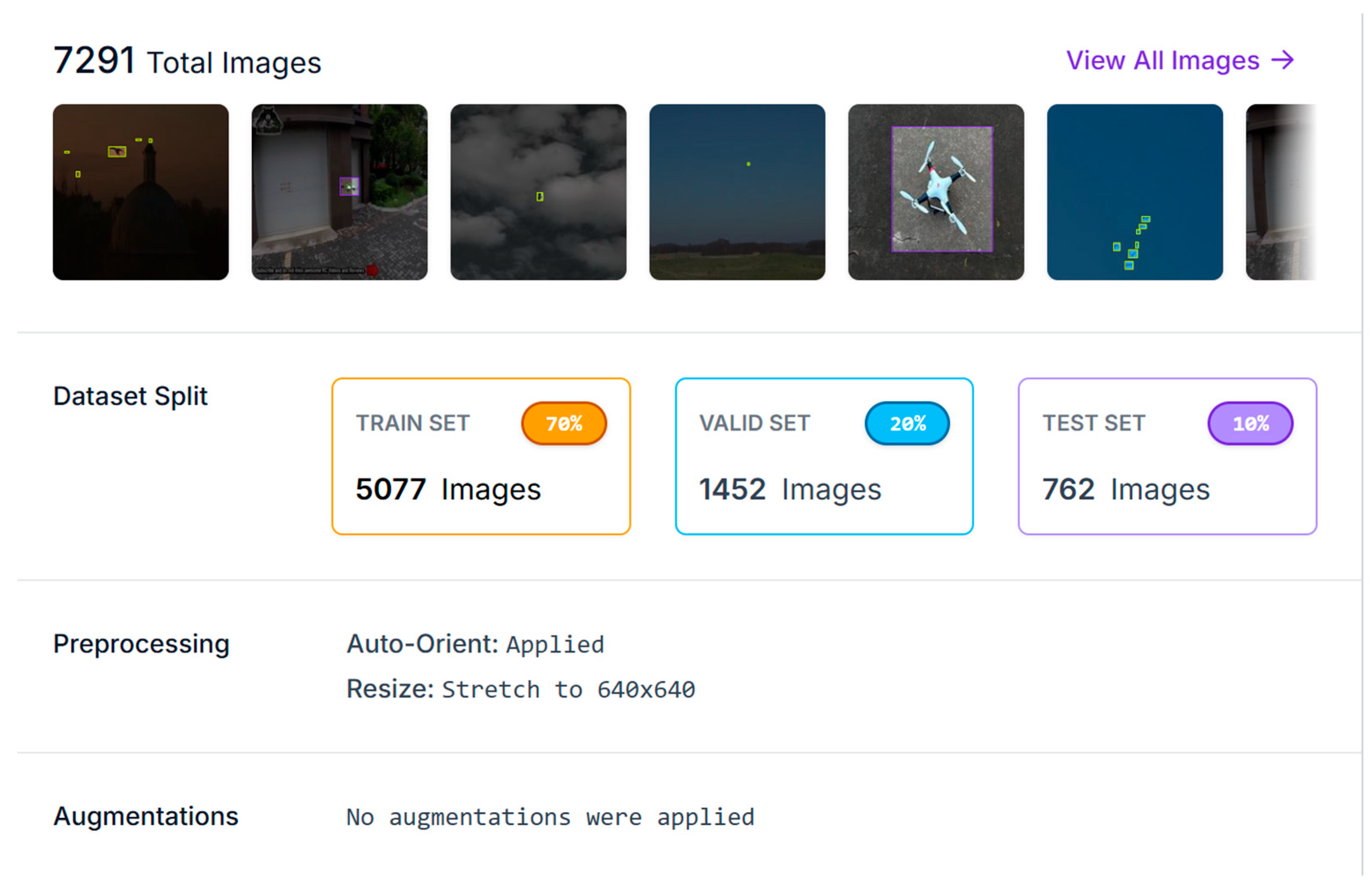

Figure 2). This approach allows us to bring the experimental conditions closer to real tasks of optical–electronic surveillance of airspace.

The dataset was compiled from open sources such as Roboflow, Ultralytics HUB, and Kaggle, supplemented with frames from our own video recordings of UAV and bird flights captured with a TD-8543IE3N camera. The data were labeled and pre-processed on the Roboflow.com platform, which allowed objects to be annotated efficiently and images to be split automatically into three subsets: 70% for training, 20% for validation, and 10% for testing. Each YOLO model was adapted to its own annotation format (Figure 3) using “yaml” configuration files, which ensured correct integration of the data into the corresponding architecture.
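The 70/20/10 split and an Ultralytics-style data configuration can be reproduced locally with a sketch like the one below. It assumes a flat list of image names and a fixed shuffle seed; the file names, directory layout, and class order (0: Drone, 1: Bird) are hypothetical, and Roboflow’s own split may round the subset sizes differently.

```python
import random


def split_dataset(items, train=0.7, val=0.2, seed=42):
    """Shuffle deterministically and split into train/val/test; test takes the remainder."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = int(len(items) * train)
    n_val = int(len(items) * val)
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]


images = [f"img_{i:05d}.jpg" for i in range(7291)]   # placeholder file names
train_set, val_set, test_set = split_dataset(images)

# Ultralytics-style dataset description; paths and class order are illustrative.
DATA_YAML = """\
path: datasets/drone_bird
train: images/train
val: images/val
test: images/test
names:
  0: Drone
  1: Bird
"""
```

With 7291 images this yields 5103 training, 1458 validation, and 730 test images.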

To prepare and launch the models in the Python environment, a software module was developed that implements augmentation and runs training, validation, and testing with the Ultralytics and PyTorch libraries. To improve the generalization ability of the models, advanced augmentation methods were used to artificially increase the diversity of the training dataset. Color transformations of hue, saturation, and brightness in the HSV color space are implemented as random shifts (Equation (8)) with amplitudes Δh = ±0.015, Δs = ±0.7, and Δv = ±0.4. These transformations simulate different lighting conditions and atmospheric interference, making the model more robust to variability in external conditions. Vertical and horizontal flips are also applied, each with a probability of 0.5. Each flip corresponds to a mirror transformation of the coordinate system (Equation (9)):

$$x' = W - x \ \text{(horizontal flip)}, \qquad y' = H - y \ \text{(vertical flip)} \tag{9}$$

where W and H are the width and height of the image, respectively. These operations allow the model to handle objects moving in different directions and increase the variability of representations in the training set.
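The color and flip augmentations can be sketched as follows. The HSV part is a literal reading of the shift formulation, with all HSV channels normalized to [0, 1]; production pipelines such as Ultralytics may apply some of these amplitudes differently (for example, saturation and value as multiplicative gains). The box flip applies the mirror mapping x' = W − x (or y' = H − y) to both corners and reorders them so that x1 < x2 and y1 < y2. Function names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)


def random_hsv_shifts(h_amp=0.015, s_amp=0.7, v_amp=0.4):
    """Draw random shifts uniformly from [-amp, amp] for hue, saturation and value."""
    return rng.uniform(-1.0, 1.0, 3) * np.array([h_amp, s_amp, v_amp])


def apply_hsv_shift(hsv, shifts):
    """Shift an (H, W, 3) float HSV image whose channels are normalized to [0, 1]."""
    out = hsv + shifts
    out[..., 0] %= 1.0                               # hue is circular, so it wraps around
    out[..., 1:] = np.clip(out[..., 1:], 0.0, 1.0)   # saturation and value are clipped
    return out


def flip_boxes(boxes, img_w, img_h, horizontal=True):
    """Mirror xyxy pixel boxes; the min/max corners swap roles after mirroring."""
    flipped = boxes.astype(float).copy()
    if horizontal:
        flipped[:, [0, 2]] = img_w - boxes[:, [2, 0]]   # x' = W - x
    else:
        flipped[:, [1, 3]] = img_h - boxes[:, [3, 1]]   # y' = H - y
    return flipped


shifts = random_hsv_shifts()
augmented = apply_hsv_shift(np.full((4, 4, 3), 0.5), shifts)
box = np.array([[10, 20, 50, 60]])
flipped_h = flip_boxes(box, 640, 480, horizontal=True)
flipped_v = flip_boxes(box, 640, 480, horizontal=False)
```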

Mosaic augmentation was applied to all images in the dataset: according to Equation (10), four images Iᵢ are combined into one, each after a random transformation Tᵢ (shifting, scaling, cropping, or shearing).
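A simplified version of the Mosaic operation can be sketched in NumPy as follows. Each Tᵢ is reduced here to a translation followed by a crop: four images are tiled around a random center on an enlarged canvas, their boxes are shifted accordingly, and the central window is kept. The random per-tile scaling and shearing of full implementations are omitted, and the function name and grey fill value are illustrative assumptions.

```python
import numpy as np


def mosaic4(images, boxes_list, s=640, rng=None):
    """Simplified 4-image mosaic.

    images:     four (s, s, 3) uint8 arrays, assumed already resized to s x s;
    boxes_list: four (N_i, 4) arrays of xyxy pixel boxes.
    The tiles meet at a random center; the central s x s window is returned together
    with the shifted boxes clipped to it.
    """
    if rng is None:
        rng = np.random.default_rng()
    pad = s // 2
    canvas = np.full((3 * s, 3 * s, 3), 114, dtype=np.uint8)            # grey background
    xc, yc = (int(v) + pad for v in rng.uniform(0.5 * s, 1.5 * s, 2))   # center, canvas coords
    offsets = [(xc - s, yc - s), (xc, yc - s), (xc - s, yc), (xc, yc)]  # tile top-left corners
    shifted = []
    for img, boxes, (ox, oy) in zip(images, boxes_list, offsets):
        canvas[oy:oy + s, ox:ox + s] = img
        shifted.append(boxes + np.array([ox, oy, ox, oy]))
    crop = canvas[s:2 * s, s:2 * s]                             # central s x s window
    b = np.clip(np.concatenate(shifted) - s, 0, s)              # to crop coordinates, clipped
    keep = (b[:, 2] - b[:, 0] > 1) & (b[:, 3] - b[:, 1] > 1)    # drop boxes cut away by the crop
    return crop, b[keep]


rng = np.random.default_rng(0)
tiles = [np.full((640, 640, 3), v, dtype=np.uint8) for v in (10, 20, 30, 40)]
tile_boxes = [np.array([[200, 200, 440, 440]]) for _ in range(4)]
crop, crop_boxes = mosaic4(tiles, tile_boxes, rng=rng)
```

Because the random center always falls inside the central window, the crop is fully covered by the four tiles and typically contains parts of all of them, which raises the density of small objects per training frame.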

This increases the density of small objects in the frame and improves how they are learned, especially amid visual noise and varied backgrounds. An additional augmentation method, MixUp, applied with a probability of 0.1, forms a linear combination of two images and their corresponding annotations y₁ and y₂ (Equation (11)). This method reduces the likelihood of overfitting and increases the robustness of YOLO models to background overlays and partial occlusions while preserving the small visual details critical for recognizing UAVs and birds.
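MixUp for detection can be sketched as below. The mixing ratio is drawn from Beta(32, 32), as in the Ultralytics implementation, which keeps it close to 0.5; because boxes cannot be averaged meaningfully, the two annotation sets are concatenated rather than linearly blended. The probability p = 0.1 matches the value reported above, while the function names are hypothetical.

```python
import numpy as np


def mixup(img1, boxes1, img2, boxes2, rng, alpha=32.0):
    """Blend two same-sized uint8 images with ratio lam ~ Beta(alpha, alpha) and merge boxes."""
    lam = rng.beta(alpha, alpha)
    mixed = img1.astype(np.float32) * lam + img2.astype(np.float32) * (1.0 - lam)
    return mixed.astype(np.uint8), np.concatenate([boxes1, boxes2], axis=0), lam


def maybe_mixup(img1, boxes1, img2, boxes2, rng, p=0.1):
    """Apply MixUp with probability p; otherwise return the first sample unchanged."""
    if rng.random() < p:
        mixed, boxes, _ = mixup(img1, boxes1, img2, boxes2, rng)
        return mixed, boxes
    return img1, boxes1


rng = np.random.default_rng(0)
a = np.full((64, 64, 3), 200, dtype=np.uint8)
b = np.full((64, 64, 3), 100, dtype=np.uint8)
mixed, boxes, lam = mixup(a, np.array([[5, 5, 20, 20]]), b, np.array([[30, 30, 60, 60]]), rng)
```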

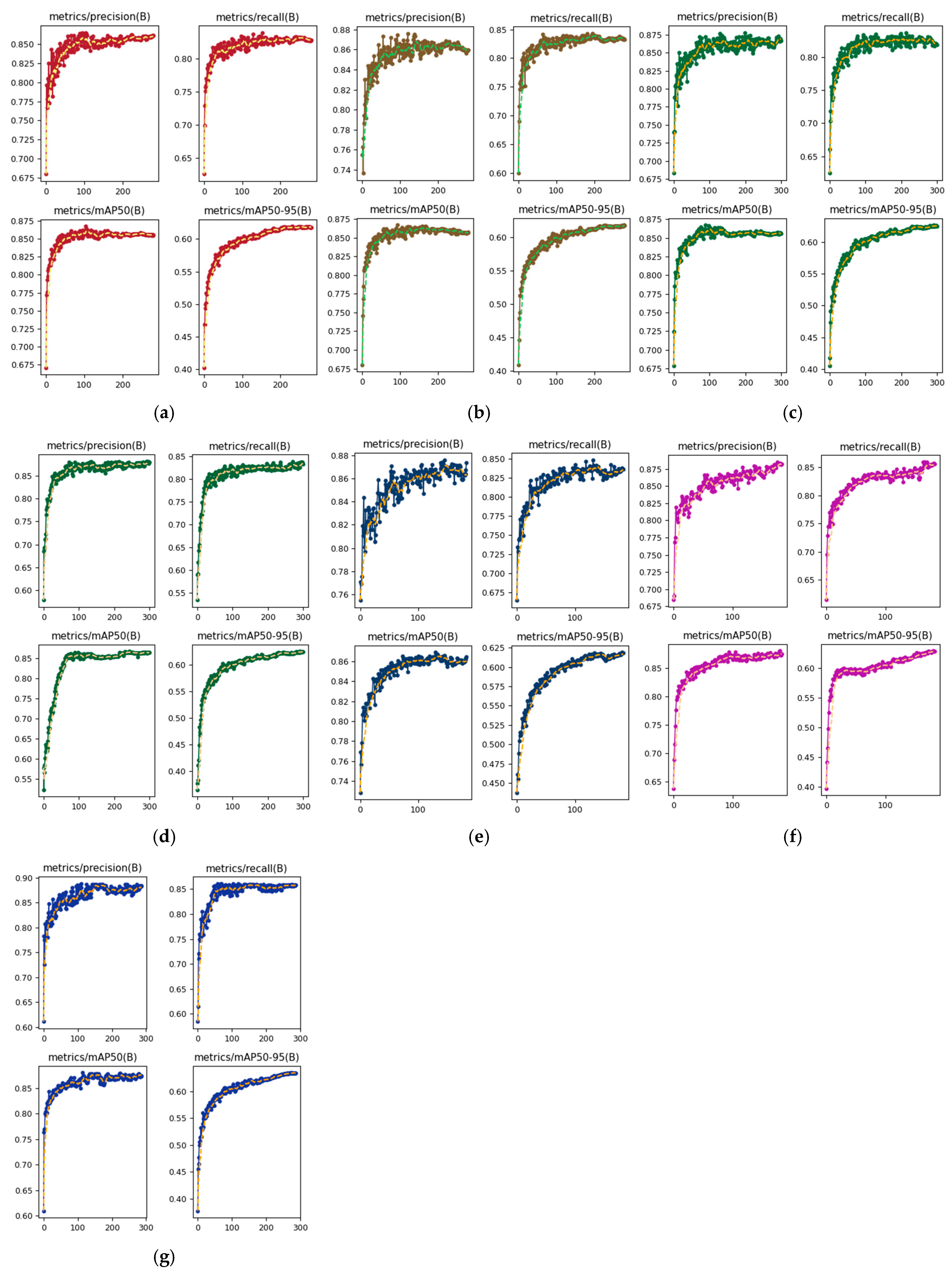

For training, the maximum number of epochs was set to 300, using the AdamW optimizer with the following initial parameters: learning rate lr0 = 0.0001, batch size B = 16, and weight decay wd = 0.0005. Early stopping was enabled, halting training if no progress was observed for 50 epochs. All stages of training, validation, testing, and subsequent inference were run on an NVIDIA RTX 4090 GPU, which provides sufficient throughput and memory for medium-complexity models. To ensure compatibility with the PyTorch and Ultralytics frameworks, the CUDA Toolkit 12.1 software stack was used under Ubuntu 22.04 LTS. The input resolution was fixed at 640 × 640, and automatic mixed precision (FP16/AMP) was enabled. Gradient accumulation over two steps provided an effective batch size of 32. The learning rate followed cosine annealing with a 3-epoch warm-up, and an exponential moving average of the weights was maintained. A global random seed of 42 was set for reproducibility. The best checkpoint was selected by the highest mAP50–95 on the validation set, with metrics logged at every epoch.
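The training recipe above maps onto Ultralytics training arguments roughly as follows. This is a sketch under assumptions rather than the authors’ script: the two-step gradient accumulation is assumed to be obtained through the nominal batch size nbs = 32 (Ultralytics accumulates max(round(nbs / batch), 1) batches per optimizer step), the augmentation values are taken from the text, and the data file name is hypothetical. The exponential moving average of the weights is maintained by the Ultralytics trainer itself.

```python
# Shared recipe for all baselines, expressed as Ultralytics train() keyword arguments.
TRAIN_ARGS = dict(
    epochs=300, patience=50,                 # max epochs; early stopping after 50 stale epochs
    optimizer="AdamW", lr0=1e-4, weight_decay=5e-4,
    batch=16, nbs=32,                        # nominal batch 32 -> accumulate 2 steps
    imgsz=640, amp=True,                     # 640 x 640 input, mixed precision
    cos_lr=True, warmup_epochs=3,            # cosine annealing with 3 warm-up epochs
    seed=42, deterministic=True,
    hsv_h=0.015, hsv_s=0.7, hsv_v=0.4,       # color augmentation amplitudes
    fliplr=0.5, flipud=0.5, mosaic=1.0, mixup=0.1,
)

MODELS = ["yolov8m.pt", "yolov9m.pt", "yolov10m.pt", "rtdetr-l.pt", "yolo11m.pt", "yolo12m.pt"]


def accumulation_steps(batch, nbs):
    """Number of batches accumulated per optimizer step (same rule as Ultralytics)."""
    return max(round(nbs / batch), 1)


def train_all(data="drone_bird.yaml", device=0):
    """Train every baseline with the shared recipe; requires the `ultralytics` package."""
    from ultralytics import RTDETR, YOLO  # imported lazily so the config can be inspected without it

    for weights in MODELS:
        model = RTDETR(weights) if weights.startswith("rtdetr") else YOLO(weights)
        model.train(data=data, device=device, **TRAIN_ARGS)


effective_batch = TRAIN_ARGS["batch"] * accumulation_steps(TRAIN_ARGS["batch"], TRAIN_ARGS["nbs"])
```

Calling `train_all()` would launch the six runs sequentially. By default, Ultralytics selects `best.pt` by a fitness score of 0.1·mAP50 + 0.9·mAP50–95, so selecting strictly by mAP50–95, as described above, would require reading the per-epoch `results.csv` log.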

To isolate the contributions of the AHFIN module and of the Mosaic and MixUp augmentations, an ablation study of the YOLOv12-ADBC architecture was conducted. Experimental conditions were held constant: a single dataset, an identical training schedule, a fixed input image size, and the same hardware and software environment. Comparisons used four metrics: Precision, Recall, mAP50, and mAP50–95. A configuration without AHFIN (equivalent to the standard YOLOv12m) served as the reference point. Four configurations with AHFIN enabled were included in the analysis: AHFIN, AHFIN + Mosaic, AHFIN + MixUp, and AHFIN + Mosaic + MixUp. This design separates the contribution of the training recipe from that of the augmentations, so that changes in the metrics can be attributed correctly.

At the inference stage, each of the trained YOLO models was tested on three videos containing UAV flight scenes filmed from fixed observation points in the far visual contact zone. These conditions closely approximate real-world optical–electronic surveillance tasks, where targets appear as small, fast-moving objects that are often obscured by the background or other interference. The inference results were visualized as bounding boxes labeled with the class and confidence level. This allowed both a qualitative and a quantitative assessment of how well the models detect complex, hard-to-distinguish targets. For the quantitative assessment, a dedicated Python script based on the ultralytics library was developed to analyze the video files automatically. Three key metrics were recorded while successive video frames were processed: Detection Accuracy (Ad), Average Confidence (Ac), and FPS. Detection Accuracy was defined as the ratio of two quantities: the number of frames in which the drone was correctly identified with no false-class objects (birds) present, and the total number of processed frames. Formally, it is calculated using Equation (12):

$$A_d = \frac{N_c}{N_t} \tag{12}$$

where $N_c$ is the number of frames containing only correctly identified UAVs (no false alarms for birds), and $N_t$ is the total number of video frames.
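Equation (12) can be computed from per-frame class predictions as in the sketch below. Following the text, any "bird" detection counts as a false alarm. A frame counts toward $N_c$ only when it contains at least one "drone" detection and no "bird" detection. The lowercase class names and the function name are hypothetical.

```python
def detection_accuracy(frames):
    """A_d = N_c / N_t.

    frames: one entry per processed video frame, each a list of predicted class names.
    A frame is correct if it has at least one 'drone' and no 'bird' detection.
    """
    n_t = len(frames)
    if n_t == 0:
        raise ValueError("no frames processed")
    n_c = sum(1 for labels in frames if "drone" in labels and "bird" not in labels)
    return n_c / n_t
```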

Average Confidence (Ac) is defined as the mean confidence score that the model assigns to detected drone-class objects in each frame. To make the assessment stricter, an erroneously detected bird-class object was treated as a false positive, and the frame's confidence was set to zero in the average. The average therefore reflects both the confidence of correct detections and the penalty for false positives, in accordance with Equation (13):

$$A_c = \frac{1}{N_t} \sum_{i=1}^{N_t} C_i \tag{13}$$

where $C_i$ is the average confidence over all objects in frame $i$, set to zero if an object of the “bird” class is present.
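A matching sketch for Equation (13). The text does not say how frames without any drone detection enter the average. Here they contribute $C_i = 0$, which is an assumption, as are the (class, confidence) tuple format and the function name.

```python
def average_confidence(frames):
    """A_c = (1 / N_t) * sum of C_i over all processed frames.

    frames: list of per-frame detections, each a list of (class_name, confidence).
    C_i is the mean confidence of the frame's 'drone' detections; it is zero when
    a 'bird' is detected in the frame or (assumption) when no drone is detected.
    """
    n_t = len(frames)
    if n_t == 0:
        raise ValueError("no frames processed")
    total = 0.0
    for dets in frames:
        if any(cls == "bird" for cls, _ in dets):
            continue  # false alarm in this frame: C_i = 0
        confs = [conf for cls, conf in dets if cls == "drone"]
        if confs:
            total += sum(confs) / len(confs)
    return total / n_t
```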

FPS was estimated with the built-in YOLO().track() method, which reports the model’s processing speed on a given video file. The following section presents the results of the experimental studies of the trained YOLO models.
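One plausible way to turn per-frame timings into an FPS figure is sketched below. It assumes each frame's timing is a dict shaped like the `speed` attribute of an Ultralytics `Results` object, with milliseconds for `preprocess`, `inference`, and `postprocess`. The text does not state whether the authors averaged these timings or measured wall-clock time. `fps_from_speeds` is a hypothetical helper.

```python
def fps_from_speeds(speeds):
    """Mean frames per second from per-frame timing dicts given in milliseconds."""
    if not speeds:
        raise ValueError("no frames")
    total_ms = sum(s["preprocess"] + s["inference"] + s["postprocess"] for s in speeds)
    return 1000.0 * len(speeds) / total_ms
```

With a trained model, the timings could be collected as `speeds = [r.speed for r in model.track(source="video.mp4", stream=True)]`, where the file name is a placeholder.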

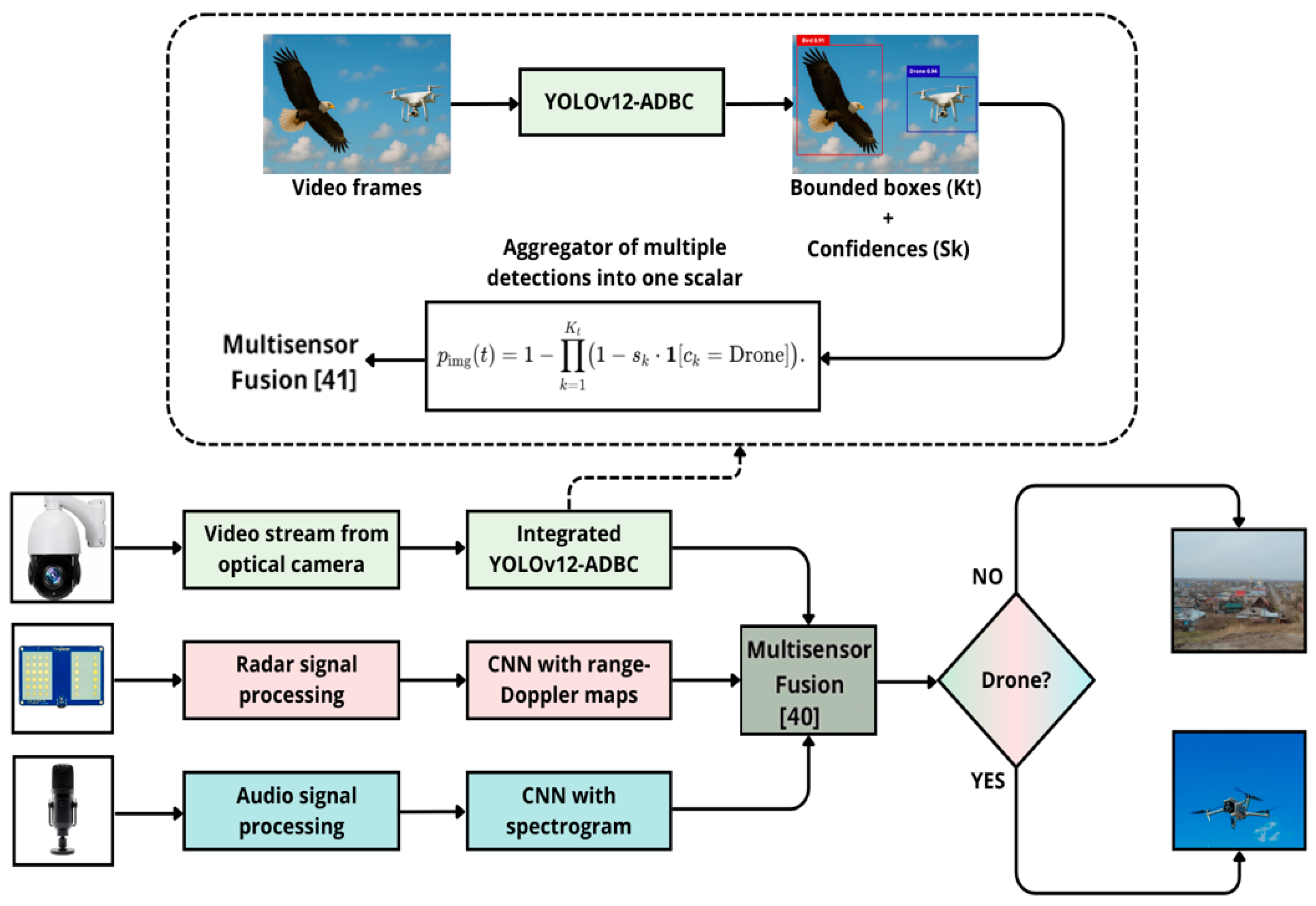

Based on the comparative analysis of the examined YOLO versions (YOLOv8–YOLOv12 and YOLOv12-ADBC), practical recommendations will be formulated for implementing the optimal visual detector within an existing Sensor Fusion system. The architecture presented in ref. [40] was selected as the Sensor Fusion model. This publication [40] is one of the most authoritative and widely cited works in the field of multisensor UAV detection. The Sensor Fusion architecture integrates three distinct channels (optical, radar, and acoustic). It uses convolutional neural networks for feature extraction and logistic regression to combine the probabilistic outputs. The YOLO models under consideration can be used to upgrade the electro-optical channel, thereby improving the accuracy of UAV and bird detection and classification. At this stage, the focus is limited to practical recommendations. Experiments on the direct integration of the visual detector into the Sensor Fusion system are not conducted or analyzed here; they will be the subject of a separate study.

5. Discussion

The results obtained for training, validation, testing, and inference for the YOLOv8m–YOLOv11m models, as well as the enhanced YOLOv12-ADBC, demonstrate consistent and robust performance in the task of UAV and bird classification. These models, trained within a unified framework, provide a representative basis for comparative analysis. Earlier research introduced modifications to standard architectures, such as GBS-YOLOv5 [18], DB-YOLOv5 [19], YOLOv7 + Attention [21], and F-YOLOv7 [22]. These achieved high accuracy (mAP up to 96.6%) and fast inference speeds (up to 128 FPS), but the architectures were often tailored to specific scenarios or hardware constraints. Similarly, recent advances such as YOLOv8-DroneTrack [29] and BRA-YOLOv10 [25] reached mAP50 values of up to 96.8%, with inference speeds from 42 to 118 FPS, although these approaches typically target narrow application domains. In contrast, the models proposed in this study, including YOLOv12-ADBC, are evaluated on a generalized and challenging dataset. This allows a more objective assessment of their generalization capabilities and architectural contributions.

The comparison of the models based on the metrics presented in

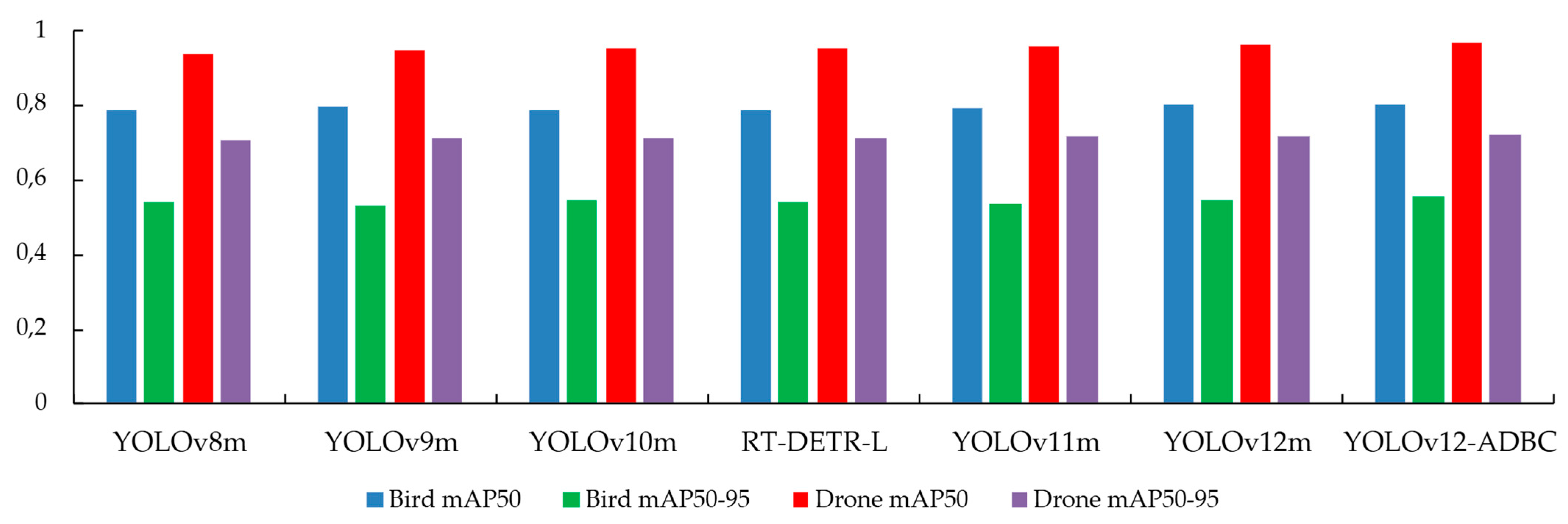

Table 2 shows that YOLOv12-ADBC achieves the strongest overall performance among all tested versions, outperforming not only the YOLO family baselines (v8m–v11m, v12m) but also the transformer baseline RT-DETR-L, while the baseline YOLOv12m already demonstrates balanced precision (0.88187), recall (0.85466), and accuracy across IoU thresholds (mAP50 = 0.87654, mAP50–95 = 0.62911). The integration of the AHFIN training recipe in YOLOv12-ADBC further improves these results to 0.89253 (Precision), 0.86432 (Recall), 0.88123 (mAP50), and 0.63391 (mAP50–95). In practical terms, the higher precision indicates a reduction in false positives during UAV detection, whereas the improved recall reflects greater sensitivity to true UAV instances across varied scenes. The gains at both IoU thresholds—especially the consistent increase in mAP50 and mAP50–95—suggest more reliable detection under changes in object scale and background complexity, which is consistent with the expected effect of AHFIN on stabilizing training and enhancing feature aggregation. Taken together, these improvements confirm that the architectural and training modification yields tangible benefits in both accuracy and robustness, thereby justifying the practical recommendation to adopt YOLOv12-ADBC as the preferred optical module within sensor-fusion frameworks.

A more detailed analysis of the per-class results in Table 3 confirms the advantage of integrating the AHFIN recipe in YOLOv12-ADBC. For the drone class, YOLOv12-ADBC attains mAP50 = 0.96407 and mAP50–95 = 0.71749. The absolute gains over YOLOv12m (0.95674/0.71465) are +0.00733 and +0.00284, corresponding to +0.77% and +0.40% in relative terms. Both values are the best among all models. Apart from YOLOv12m, the nearest competitor is YOLOv11m with mAP50 = 0.95126 and mAP50–95 = 0.71199, which remains below the proposed configuration. For the bird class, YOLOv12-ADBC reaches mAP50 = 0.80024 and mAP50–95 = 0.55051. The improvements over YOLOv12m (0.79629/0.54357) are +0.00395 and +0.00694, that is, +0.50% and +1.28% in relative terms. These values also exceed all earlier baselines. For example, the strongest non-AHFIN result on the stricter metric is YOLOv10m with bird mAP50–95 = 0.54438, which is still below 0.55051. Taken together, the per-class gains indicate that the AHFIN training recipe improves multi-scale feature aggregation and increases sensitivity to subtle visual cues. The effect is most visible in bird mAP50–95 and drone mAP50, reflecting better localization under varied object sizes and challenging backgrounds.
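The absolute and relative per-class gains quoted above follow from simple arithmetic. The check below reproduces them from the values given in the text. The helper name is illustrative.

```python
def gains(baseline, improved):
    """Absolute gain and relative gain (in %) of improved over baseline."""
    absolute = improved - baseline
    return absolute, 100.0 * absolute / baseline


# Per-class values quoted in the text: (YOLOv12m, YOLOv12-ADBC).
per_class = {
    "drone mAP50": (0.95674, 0.96407),
    "drone mAP50-95": (0.71465, 0.71749),
    "bird mAP50": (0.79629, 0.80024),
    "bird mAP50-95": (0.54357, 0.55051),
}
for name, (base, new) in per_class.items():
    abs_gain, rel_gain = gains(base, new)
    print(f"{name}: +{abs_gain:.5f} ({rel_gain:+.2f}%)")
```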

The ablation analysis in Table 4 shows that, relative to the original YOLOv12m, the full YOLOv12-ADBC configuration (AHFIN + Mosaic + MixUp) delivers a consistent improvement: Precision +1.21%, Recall +1.13%, mAP50 +0.54%, and mAP50–95 +0.76%. The largest contribution comes from the AHFIN training recipe (without additional augmentation). Relative to the baseline, it yields +0.87% in Precision, +0.63% in Recall, +0.38% in mAP50, and +0.43% in mAP50–95. Adding augmentations on top of AHFIN yields further moderate gains. Moving to AHFIN + Mosaic gives ≈ +0.18%/+0.25%/+0.11%/+0.14% across the four metrics, and the AHFIN + MixUp configuration yields ≈ +0.13%/+0.18%/+0.08%/+0.12%. The transition from AHFIN alone to the full configuration adds ≈ +0.34% Precision, +0.49% Recall, +0.16% mAP50, and +0.33% mAP50–95. Consider the share of the overall gain between the baseline and the final model. AHFIN accounts for ≈72% of the improvement in Precision, ≈56% in Recall, ≈70% in mAP50, and ≈57% in mAP50–95; the Mosaic/MixUp augmentations provide the remainder. Without AHFIN, the augmentations alone yield only limited gains over the baseline (e.g., Mosaic + MixUp without AHFIN: +0.42% Precision, +0.38% Recall, +0.24% mAP50, +0.28% mAP50–95). This confirms the key role of the AHFIN recipe. Mosaic and MixUp act as stabilizing amplifiers: they primarily increase Recall while maintaining or slightly improving Precision and aggregate mAP.
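The attribution of the overall gain to AHFIN can be reproduced from the relative gains in the text. The AHFIN share is the AHFIN-only gain divided by the full-configuration gain, and the remainder is attributed to Mosaic/MixUp. The inputs are the rounded percentages from the text, so the results match the reported shares only to the nearest percent.

```python
def share_of_gain(partial_gain, total_gain):
    """Percentage of the baseline-to-final improvement attributable to one component."""
    return 100.0 * partial_gain / total_gain


# Relative gains (%) over YOLOv12m quoted in the text: (AHFIN only, full configuration).
ablation = {
    "Precision": (0.87, 1.21),
    "Recall": (0.63, 1.13),
    "mAP50": (0.38, 0.54),
    "mAP50-95": (0.43, 0.76),
}
for metric, (ahfin, full) in ablation.items():
    ahfin_share = share_of_gain(ahfin, full)
    print(f"{metric}: AHFIN ~{ahfin_share:.0f}%, Mosaic/MixUp ~{100 - ahfin_share:.0f}%")
```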

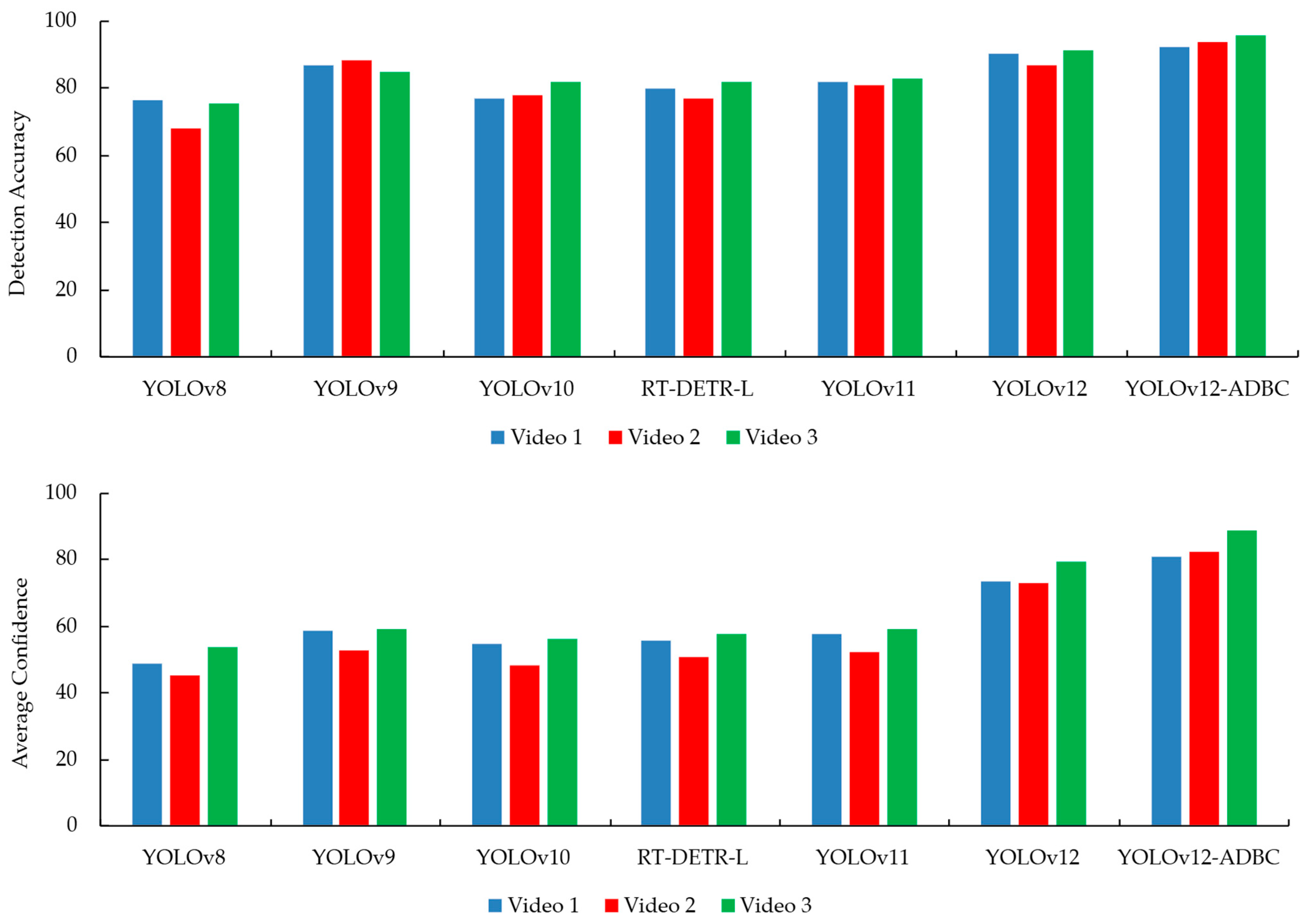

The testing results on video streams from three scenarios (Table 5, Figure 6) demonstrate the superior practical performance of YOLOv12-ADBC. The model attains the highest detection accuracy in all three videos (92.16%, 93.41%, and 95.49%), whereas YOLOv12m reaches 89.73%, 86.61%, and 90.74%. The absolute gains are +2.43, +6.80, and +4.75 percentage points (pp). YOLOv12-ADBC also provides consistently higher average confidence: 80.64%, 81.96%, and 88.57% versus 73.29%, 72.53%, and 79.14% for YOLOv12m, which corresponds to +7.35 pp, +9.43 pp, and +9.43 pp. The transformer baseline RT-DETR-L shows markedly lower values across all scenarios. Its detection accuracy is 79.61% on Video 1, 76.48% on Video 2, and 81.69% on Video 3, and its average confidence is 55.62%, 50.37%, and 57.48%. Relative to RT-DETR-L, YOLOv12-ADBC improves detection accuracy by +12.55 pp, +16.93 pp, and +13.80 pp for Videos 1–3, and raises average confidence by +25.02 pp, +31.59 pp, and +31.09 pp. These results indicate not only higher accuracy but also substantially more stable confidence estimates during continuous video inference. Such stability is critical in real-world monitoring, where reliable UAV recognition requires maintaining both accuracy and confidence across changing environments.

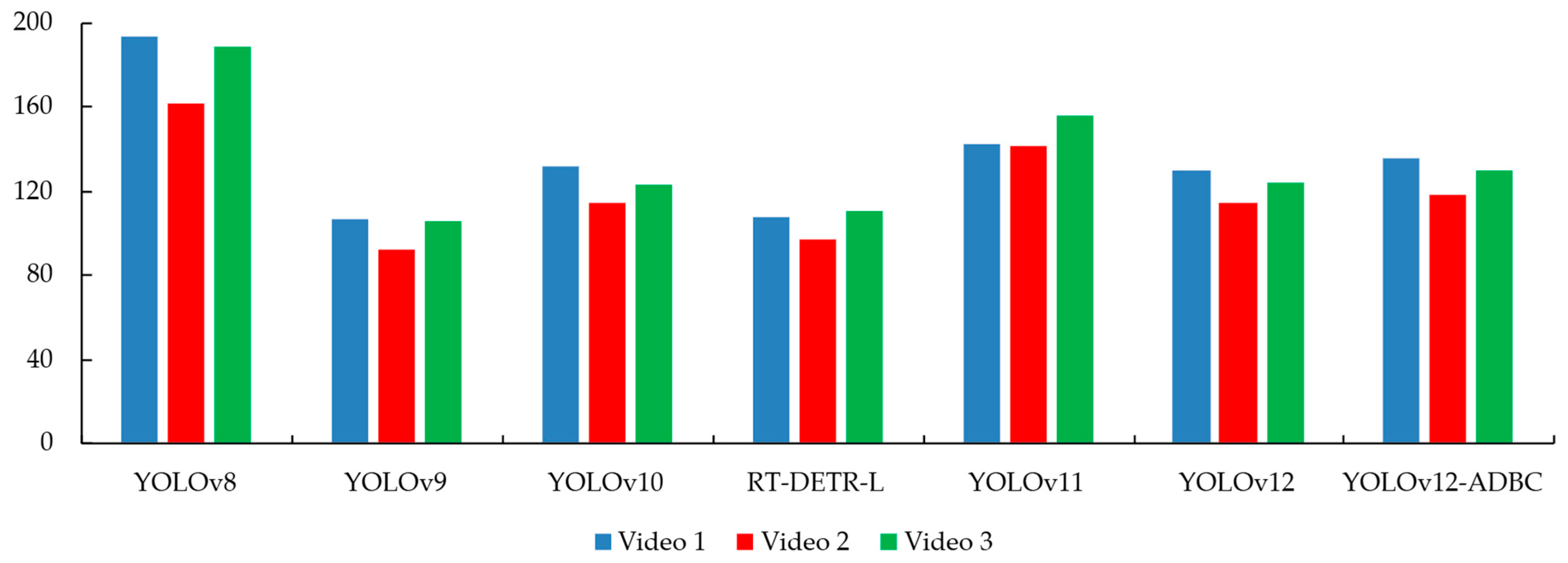

The only metric on which YOLOv12-ADBC shows a relative disadvantage compared with other models is processing speed. Across the three video sequences (Figure 8), its average FPS values are 135, 118, and 129, respectively (Table 6, Figure 7). By contrast, YOLOv8m significantly outperforms all competitors in throughput, achieving 191, 161, and 188 FPS. This makes it particularly attractive for lightweight deployments where computational efficiency is prioritized over detection robustness. Nevertheless, despite its more complex architecture, YOLOv12-ADBC maintains real-time performance suitable for practical applications such as surveillance, online monitoring, and autonomous systems. Importantly, even its lowest measured rate (118 FPS) remains well above the typical operational threshold of 30–60 FPS required for smooth video inference. Consequently, the improved accuracy and confidence of YOLOv12-ADBC are achieved without compromising the feasibility of real-time deployment.
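The real-time margin can be checked by converting frame rates into per-frame time budgets. This assumes that the reported FPS is the reciprocal of the mean per-frame processing time:

$$
t_{\text{frame}} = \frac{1}{\text{FPS}}: \qquad \frac{1\ \text{s}}{118} \approx 8.5\ \text{ms}, \qquad \frac{1\ \text{s}}{60} \approx 16.7\ \text{ms}, \qquad \frac{1\ \text{s}}{30} \approx 33.3\ \text{ms}
$$

$$
\frac{118}{60} \approx 2.0, \qquad \frac{118}{30} \approx 3.9
$$

Even the slowest sequence therefore leaves roughly a 2× margin over a 60 FPS stream and about 3.9× over a 30 FPS camera.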

A comparative analysis across all metrics and scenarios confirms that YOLOv12-ADBC is the most balanced and effective solution for visual detection tasks involving UAVs and birds. The model consistently achieves the best results in mAP50, mAP50–95, precision, recall, and prediction confidence during video inference, which is especially important under visual noise and object occlusion. A key factor behind these results is the use of the base YOLOv12 architecture, which improves feature representation through more coherent information flow across hierarchical levels. In particular, the decisive enhancement was the integration of a tailored spatial feature aggregation block in the neck of the model, implemented as the Adaptive Hierarchical Feature Integration Network (AHFIN) module. This module aligns features of different resolutions, mitigates spatial inconsistencies, and emphasizes relevant object cues. This capability is especially critical for the accurate classification of visually similar targets such as birds and drones. Thus, the architectural improvements in YOLOv12-ADBC have increased not only accuracy but also the model’s robustness in real-world conditions, while maintaining acceptable processing speed.

The YOLOv12-ADBC model is well suited for integration into data fusion algorithms because of its versatile architecture and its ability to process features effectively at multiple scales. These qualities make it particularly valuable for mid-level fusion. In this setting, visual features can be combined with information from other sensors before the final classification stage, for example radar-based micro-Doppler signatures [42] or the spectral characteristics of RF and acoustic signals [43]. This enables a more comprehensive and reliable representation of the target that leverages the strengths of each modality. Owing to its high prediction confidence and low tendency toward false positives, YOLOv12-ADBC also works effectively in late fusion frameworks. The outputs of the optical channel can be reliably merged with results from other sensors, such as radar [44], RF, or acoustic modules, to produce more accurate final decisions [41]. This is particularly relevant in scenarios where sensors detect the presence of a target but cannot ensure its correct identification. An important advantage of YOLOv12-ADBC for real-time aerial monitoring systems is its high video processing speed (118 to 135 FPS). This ensures timely responses to potential threats while meeting the performance requirements of a wide range of practical applications, including video surveillance and autonomous navigation. Integrating YOLOv12-ADBC into multisensor platforms makes it possible to compensate for the limitations of individual channels. For instance, following initial detection by a radar, the model can refine the visual features of the target, especially when drones and birds exhibit similar RCS profiles. Moreover, the model can provide visual confirmation when RF signals are absent or ambiguous, which is critical for identifying drones operating in radio silence. Similarly, in noisy environments or at long distances where acoustic sensors become unreliable, YOLOv12-ADBC remains a dependable source of information.

Based on this, the YOLOv12-ADBC detector (Figure 9) is proposed as a practical solution for the optical channel of the multisensor detection system. To integrate the selected visual detector, the set of detections on each frame ($K_t$) must be transformed into a scalar probability of drone presence in the frame in accordance with Formula (14). In this formula, $c_k \in \{\text{Drone}, \text{Bird}\}$ is the class label and $s_k \in [0, 1]$ is the confidence score of the corresponding detection.
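The sketch below assumes one common rule for Formula (14): $p_{\text{img}}(t)$ is the highest "Drone" score among the frame's detections $K_t$, or 0 when no drone is detected. Under this rule, bird detections never raise the drone probability. The labels follow the text ($c_k \in$ {Drone, Bird}), and the function name is hypothetical.

```python
def drone_probability(detections):
    """Collapse one frame's detections K_t into a scalar p_img(t).

    detections: list of (class_label, score) pairs, class_label in {"Drone", "Bird"},
    score in [0, 1]. Assumed rule: maximum 'Drone' score, or 0.0 if there is none.
    """
    for label, score in detections:
        if label not in ("Drone", "Bird"):
            raise ValueError(f"unexpected class label: {label!r}")
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score out of range: {score}")
    return max((s for c, s in detections if c == "Drone"), default=0.0)
```

A noisy-OR over drone scores, $1 - \prod_k (1 - s_k)$, would be an alternative that rewards several agreeing boxes in the same frame.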

This approach is required because, in the original work [40], the optical channel used a CNN-based classifier pre-trained on ImageNet that returned a scalar probability of drone presence for each frame. The YOLOv12-ADBC detector runs faster than the video camera (30 FPS), so exactly one scalar $p_{\text{img}}(t)$ is formed at each time step, corresponding to each input frame. The network’s surplus throughput ensures minimal processing delay. It also allows the optical channel to be synchronized with the radar and acoustic probabilities, which are likewise sampled on the camera’s frame-time grid. To align the time series, either the “hold-last-sample” strategy or window averaging is used according to Formula (15), where $p_m$ denotes the output probability of modality $m$.
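Both alignment strategies named for Formula (15) can be sketched as resampling each modality's timestamped probabilities onto the camera's 30 FPS frame grid. The window length, the sorted-timestamp input format, the value 0.0 before a modality's first sample, and all function names are assumptions.

```python
import bisect


def hold_last_sample(times, values, t):
    """Most recent value at or before time t; 0.0 before the first sample (assumed)."""
    i = bisect.bisect_right(times, t)
    return values[i - 1] if i > 0 else 0.0


def window_average(times, values, t, window):
    """Mean of samples with timestamps in (t - window, t]; falls back to hold-last-sample."""
    lo = bisect.bisect_right(times, t - window)
    hi = bisect.bisect_right(times, t)
    if hi > lo:
        return sum(values[lo:hi]) / (hi - lo)
    return hold_last_sample(times, values, t)


def align_to_frames(times, values, n_frames, fps=30.0, window=None):
    """Resample one modality's sorted (times, values) stream onto frame times k / fps."""
    grid = [k / fps for k in range(n_frames)]
    if window is None:
        return [hold_last_sample(times, values, t) for t in grid]
    return [window_average(times, values, t, window) for t in grid]
```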

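The final decision is formed according to the scheme of [40] using logistic regression, in accordance with Formula (16):

$$P(\text{drone} \mid t) = \sigma\left(a_0 + a_1\, p_{\text{img}}(t) + a_2\, p_{\text{rad}}(t) + a_3\, p_{\text{aud}}(t)\right), \qquad \sigma(z) = \frac{1}{1 + e^{-z}} \tag{16}$$

where $p_{\text{rad}}$ and $p_{\text{aud}}$ are the probabilistic outputs of the radar and acoustic channels, $\sigma$ is the logistic function, and the coefficients $a_0$, $a_1$, $a_2$, and $a_3$ are determined by minimizing the loss function.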
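A minimal sketch of the late-fusion step: a logistic model over the three channel probabilities, with $a_0, \dots, a_3$ fitted by batch gradient descent on the mean log-loss. The text only states that the coefficients minimize a loss function. The choice of log-loss, the optimizer, the learning rate, and the epoch count are assumptions, and the function names are hypothetical.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fuse(a, p_img, p_rad, p_aud):
    """P(drone) = sigmoid(a0 + a1 * p_img + a2 * p_rad + a3 * p_aud)."""
    return sigmoid(a[0] + a[1] * p_img + a[2] * p_rad + a[3] * p_aud)


def fit_fusion(samples, labels, lr=0.5, epochs=2000):
    """Fit a0..a3 by batch gradient descent on the mean binary cross-entropy.

    samples: list of time-aligned (p_img, p_rad, p_aud) tuples.
    labels: 1 if a drone is present, 0 otherwise.
    """
    a = [0.0, 0.0, 0.0, 0.0]
    n = len(samples)
    for _ in range(epochs):
        grad = [0.0, 0.0, 0.0, 0.0]
        for (pi, pr, pa), y in zip(samples, labels):
            err = fuse(a, pi, pr, pa) - y  # derivative of the log-loss w.r.t. the logit
            for j, x in enumerate((1.0, pi, pr, pa)):
                grad[j] += err * x / n
        a = [aj - lr * gj for aj, gj in zip(a, grad)]
    return a
```

In use, `fit_fusion` would be trained on time-aligned channel probabilities with ground-truth drone labels, and `fuse` would then be applied once per camera frame.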

This modification shows that modern object detectors can be embedded into existing Sensor Fusion architectures without changing their fundamental mathematical structure. The approach is expected to improve the system’s robustness to challenging imaging conditions and external interference. It also lays the groundwork for more advanced fusion strategies in which YOLOv12-ADBC outputs are combined with radar micro-Doppler characteristics, radio spectral signatures, or acoustic data. The practical recommendation thus positions YOLOv12-ADBC as a robust optical module in multisensor aerial monitoring systems, capable of compensating for the limitations of individual channels and providing mission-critical visual verification. This supports the expectation that integrating modern visual detectors into established fusion schemes can substantially improve the overall reliability, responsiveness, and practical value of UAV detection systems in airspace protection tasks. This expectation is subject to the experimental validation planned in future work.

6. Conclusions

(1) In this study, we conducted a comprehensive evaluation of the performance of RT-DETR-L and several YOLO-based models—YOLOv8m, YOLOv9m, YOLOv10m, YOLOv11m, YOLOv12m, and the proposed YOLOv12-ADBC—for the task of visual detection and classification of drones and birds. The experimental setup included supervised training, multi-metric quantitative evaluation, and real-world video stream testing under complex background conditions.

(2) Among all the models tested, YOLOv12-ADBC demonstrated the most consistent and superior performance. It achieved the highest precision (0.89253), recall (0.86432), and mAP metrics (mAP50 = 0.88123, mAP50–95 = 0.63391), confirming its robustness in distinguishing UAVs from visually similar classes such as birds. In video inference, the model also maintained the highest average confidence and detection accuracy across three different scenarios, indicating strong generalization in real-world environments.

(3) When compared with recent solutions from the literature—including GBS-YOLOv5, YOLOv7 with attention mechanisms, and BRA-YOLOv10—the YOLOv12-ADBC model provides comparable or superior accuracy, while preserving architectural flexibility and not requiring task-specific modifications or specialized deployment platforms.

(4) The strong performance of YOLOv12-ADBC can be attributed to its architectural enhancements. The backbone of YOLOv12 ensures efficient multiscale feature representation, while the integration of the Adaptive Hierarchical Feature Integration Network (AHFIN) in the neck module enhances spatial feature fusion. AHFIN effectively aligns and emphasizes informative features from multiple scales, which is critical for the accurate classification of structurally similar targets like drones and birds.

(5) Based on the ablation study, the full training recipe for YOLOv12-ADBC is justified for deployment: AHFIN provides the principal accuracy gains relative to YOLOv12m, while Mosaic and MixUp yield additional but modest improvements and stabilize recall without degrading precision or aggregate mAP.

(6) Despite its lower inference speed compared with lighter models such as YOLOv8m, YOLOv12-ADBC remains within real-time performance limits (FPS ≥ 118), making it applicable to aerial surveillance, autonomous systems, and multi-sensor fusion pipelines. Future research will focus on deployment on edge devices, adaptation to additional object classes, and further integration with asynchronous sensor networks for enhanced decision-making in complex operational environments.

(7) Future research directions will include:

- Benchmarking the proposed YOLOv12-ADBC model on edge devices, such as NVIDIA Jetson platforms and NPUs, to validate performance under resource-constrained conditions;
- Conducting experimental Sensor Fusion studies that integrate the optical channel with radar, RF, and acoustic sensors to evaluate system-level performance;
- Investigating alternative Sensor Fusion strategies beyond logistic regression, including Support Vector Machines (SVM), Random Forest, Gradient Boosting, deep neural networks, and Bayesian models, to improve classification reliability and robustness in multi-modal scenarios.