Highlights

This paper proposes the FedGTD-UAV framework, introducing the application of federated transfer learning to UAV swarm ground small-target detection and significantly enhancing efficiency and performance. The main highlights are as follows:

What are the main findings?

- Proposes FedGTD-UAVs, the first FTL framework for UAV swarm ground small-target detection, combining centralized pre-training with federated fine-tuning to maintain over 98% performance under severe non-IID data.

- Introduces a lightweight GCNet module and SPD-Conv backbone, achieving 44.2% mAP@0.5 on VisDrone2019 and a 3.2× better accuracy–efficiency trade-off.

What is the implication of the main finding?

- Enables privacy-preserving collaborative learning across UAV swarms without sharing raw data, significantly improving generalization under data heterogeneity.

- Achieves real-time inference at 217 FPS with enhanced robustness against occlusions, facilitating practical deployment in dynamic environments.

Abstract

Swarm-based UAV cooperative ground target detection faces critical challenges including sensitivity to small targets, susceptibility to occlusion, and data heterogeneity across distributed platforms. To address these issues, we propose FedGTD-UAVs, a privacy-preserving federated transfer learning (FTL) framework optimized for real-time swarm perception tasks. Our solution integrates three key innovations: (1) an FTL paradigm that employs centralized pre-training on public datasets followed by federated fine-tuning of sparse parameter subsets; under severe non-Independent and Identically Distributed (non-IID) data distributions, this paradigm preserves data privacy while retaining over 98% of detection performance; (2) a Space-to-Depth Convolution (SPD-Conv) backbone that replaces lossy downsampling with lossless space-to-depth operations, preserving fine-grained spatial features critical for small targets; (3) a lightweight Global Context Network (GCNet) module that leverages contextual reasoning to capture long-range dependencies, thereby enhancing robustness against occluded objects while maintaining real-time inference at 217 FPS. Extensive validation on the VisDrone2019 and CARPK benchmarks demonstrates state-of-the-art performance: 44.2% mAP@0.5 (surpassing YOLOv8s by 12.1%) with a 3.2× superior accuracy-efficiency trade-off. Traditional centralized learning relies on global data sharing and poses privacy risks, while standard federated learning degrades significantly under non-IID data; this framework resolves the core conflict between data privacy protection and detection performance, providing a secure and efficient solution for real-world deployment in complex dynamic environments.

1. Introduction

Unmanned aerial vehicle (UAV) technology has revolutionized applications requiring ground target detection, spanning precision agriculture, traffic monitoring, disaster response, and security surveillance [1]. Recent reviews [2,3] have also demonstrated the excellent cross-domain applicability of UAVs. However, individual UAVs face inherent limitations including restricted fields of view, susceptibility to occlusion, constrained computational resources, and vulnerability to environmental interference. These limitations are particularly pronounced in detecting small targets (occupying fewer than 20 pixels) within complex aerial imagery, where conventional detection methods exhibit significant performance degradation [4].

Collaborative perception through UAV swarms offers a compelling solution by leveraging distributed sensing capabilities and collective intelligence. Aggregating multi-perspective observations can enhance situational awareness, improve detection robustness, and increase coverage efficiency [5]. Nevertheless, conventional centralized coordination approaches introduce critical bottlenecks: massive raw data transmission strains communication bandwidth, centralized storage of sensitive imagery creates privacy vulnerabilities, and inherent scalability constraints arise from bandwidth limitations and single-point-of-failure risks.

To overcome these limitations of centralized paradigms, federated learning (FL) has emerged as a solution well suited to UAV swarm perception. FL reframes the collaborative learning process: instead of aggregating raw data, swarm members collaboratively train a shared model by exchanging only model parameters or gradients. This approach addresses the trilemma of bandwidth, privacy, and scalability. Transmitting compressed model updates instead of raw image data can reduce bandwidth consumption by orders of magnitude, a critical advantage for bandwidth-constrained aerial networks, where communication can account for up to 90% of total training energy consumption [7]. Meanwhile, sensitive visual data remains localized within individual UAVs, which greatly reduces the risk of information leakage or malicious interception in security-sensitive applications such as border patrols. Furthermore, the distributed architecture reduces reliance on a central server as a single point of failure, allowing the UAV swarm to maintain collaborative operation even under dynamic network partitioning or partial member disconnections.

Deploying FL in UAV swarms nevertheless raises its own challenges. A primary challenge stems from severe data heterogeneity and distribution shifts: UAV swarms operate across diverse geographical regions, weather conditions, and dynamic mission scenarios, resulting in highly non-Independent and Identically Distributed (non-IID) data distributions. Traditional FL algorithms suffer considerable performance degradation under such heterogeneity, with studies reporting accuracy drops exceeding 50% [6]. UAV mobility further exacerbates this issue by inducing temporal distribution shifts during environmental transitions. Compounding this challenge are stringent resource constraints and the need for communication efficiency. UAV platforms operate under tight computational, memory, and energy budgets. The frequent exchange of large model parameters in conventional FL imposes prohibitive communication costs, which is particularly problematic in bandwidth-constrained aerial environments, where communication overhead can account for up to 90% of total training energy consumption [7]. Furthermore, the inherent limitations of detecting small targets in aerial imagery present a distinct obstacle. Minimal target footprints, variations in altitude, and complex backgrounds challenge standard convolutional architectures, which lose critical spatial information during downsampling, severely compromising detection accuracy for small targets.

To overcome the interconnected challenges of data privacy, severe non-IID data distributions, and the computational constraints of UAVs in ground target detection, we propose FedGTD-UAVs. While federated learning addresses privacy, its performance plummets under non-IID data and high communication costs. Similarly, existing detection models often lose critical spatial information through downsampling or lack the contextual reasoning needed for cluttered scenes without being too heavy for UAVs. Our framework bridges these gaps through three key contributions:

- Unlike existing methods that fail to resolve these issues, we introduce the first FTL framework explicitly tailored for collaborative perception in UAV swarms, ensuring robust performance under challenging non-IID data distributions while safeguarding data privacy.

- Compared to prevailing detectors (e.g., YOLO series, RT-DETR), we present a computationally efficient detection architecture that uniquely combines SPD-Conv for enhanced spatial feature preservation and GCNet for contextual attention, optimized for on-device execution.

- Rigorous empirical validation on established benchmarks (VisDrone2019 and CARPK) demonstrates the framework’s state-of-the-art performance, achieving 44.2% mAP@0.5 (a 12.1% improvement over YOLOv8s) while operating at 217 FPS, with in-depth ablation studies further validating the effectiveness of each component.

The remainder of this paper is structured as follows. Section 2 reviews foundational work in FL and UAV target detection. Section 3 details the FedGTD-UAVs methodology and training protocols. Comprehensive experimental evaluations, including ablation studies and comparative analysis, are presented in Section 4. Section 5 concludes the paper, summarizing key findings and outlining future research directions.

2. Related Work

2.1. Federated Learning for Collaborative Perception

FL has established itself as a transformative paradigm for privacy-preserving distributed intelligence. While foundational frameworks [8,9] pioneered communication-efficient model aggregation, domain-specific implementations reveal persistent challenges. Autonomous driving systems have integrated FL with YOLOv7-E and Grad-CAM [10] to reduce data privacy risks by 40%, yet these approaches incur prohibitive computational costs unsuitable for resource-constrained platforms. Hierarchical FL architectures [11] effectively mitigate straggler effects in large-scale deployments but demonstrate limited adaptability to dynamic network topologies. For UAV applications specifically, lightweight models such as MobileNet-SSD [12] enable real-time perception (capable of processing at a speed of 18 frames per second) through parallel computing, but sacrifice significant accuracy under the severe non-IID data distributions typical of aerial operations. Recent innovations include encrypted aggregation protocols tailored for horizontal FL [13], the utilization of vehicular networks to construct distributed deep neural networks from multi-source sensors [14], and debiased learning techniques that directly address statistical heterogeneity [15].

Despite these advances, current FL frameworks exhibit critical limitations when applied to UAV swarm scenarios. Model heterogeneity stemming from divergent hardware capabilities across swarm members leads to performance fragmentation [16]. The dynamic topologies inherent in mobile UAV operations exacerbate communication bottlenecks, particularly under bandwidth constraints where frequent model updates incur prohibitive overhead [17]. Furthermore, adversarial robustness against Byzantine attacks during open-air operations remains largely unaddressed [18]. However, the core driving force behind adopting FL in UAV swarms precisely stems from the inherent conflict between the stringent operational constraints of aerial platforms and the high resource demands of centralized learning paradigms. The dual constraints of energy and bandwidth render raw data transmission impractical, whereas FL’s parameter exchange mechanism offers an efficient alternative by minimizing data transfer volumes. Its inherent design provides robust protection for sensitive mission data, preventing leaks to central nodes. Meanwhile, the distributed architecture aligns perfectly with the decentralized operational requirements of military/emergency UAV swarms, ensuring system resilience without the need for continuous cloud connectivity. While FL-assisted autonomous vehicles demonstrate promising multi-sensor fusion capabilities, they fail to accommodate UAV-specific operational constraints including mobility-induced data distribution shifts, meteorological disruptions, and mission-critical latency thresholds [19]. This further underscores the urgent need to optimize federated learning frameworks specifically for UAV swarm scenarios.

2.2. Ground Target Detection in Aerial Systems

Significant progress has been achieved in deep learning-based detection for single-UAV platforms. Researchers have addressed key challenges prevalent in aerial imagery, particularly scale variation, through multi-scale feature extraction architectures such as Feature Pyramid Networks (FPN) [20] and DSF-Net [21], as well as attention mechanisms such as DHA-Net [22], ASOE [23], OFEM [24], and YOLO-S [25], the last of which reports inference 25% to 50% faster than YOLOv3. Beyond scale, substantial efforts have focused on improving computational efficiency through depthwise separable convolution (DSC) [26], dilated convolution (DWC) [27], and deformable convolutions [28]; on handling occlusion [29]; and on enabling lightweight model deployment via pruning (with a 65.93% reduction in the number of parameters) [30], knowledge distillation [31,32], and sparsity optimization [33].

2.3. Swarm Intelligence and Collaborative Perception

Centralized approaches to swarm perception face fundamental limitations in practical deployment scenarios. PPO-based coordination frameworks [34] and terrain-aware planning systems [35] require continuous transmission of raw sensory data to central processing units, violating essential privacy constraints. While robust coordination algorithms (DADM/LDPTC) [36] and mixed-integer linear programming formulations [37] demonstrate resilience in adversarial environments, they rely on unrealistic assumptions of stable network connectivity—a condition rarely maintained in dynamic swarm operations. Recent FL implementations reduce communication overhead but lack three critical components: spatial preservation mechanisms essential for small-target detection in aerial imagery, contextual reasoning modules necessary for occlusion resolution, and effective adaptation strategies for non-IID data distributions where performance typically degrades by over 50% under label skew.

This analysis reveals a significant research void: no existing framework concurrently addresses privacy preservation, communication efficiency, small-target sensitivity, and non-IID robustness in dynamic UAV swarms. Our study bridges this critical gap through the co-design of FTL, SPD-Conv spatial preservation, and GCNet contextual reasoning—establishing a comprehensive solution tailored to the unique challenges of swarm-based perception.

3. Methodology

3.1. Framework Overview

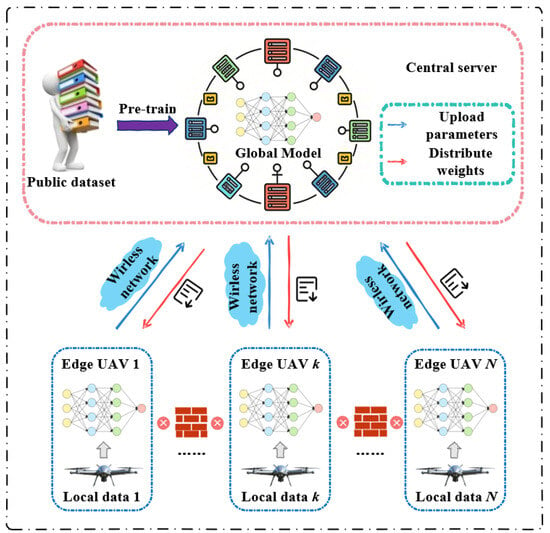

The proposed FedGTD-UAVs framework addresses the core challenges of collaborative perception in UAV swarms through a co-designed FTL architecture. As illustrated in Figure 1, our system employs a central server coordinating $N$ UAV agents, where each agent $i$ possesses locally acquired sensory data $\mathcal{D}_i$. The operational workflow begins with knowledge initialization, where the server pre-trains a global detection model on a large-scale public dataset $\mathcal{D}_{\text{pub}}$ (e.g., MS COCO [38]). This foundational phase imbues the model with generalized feature representations essential for diverse operational environments. The pre-trained model is subsequently distributed to all participating UAVs, establishing a common knowledge baseline while eliminating the prohibitive cost of training complex models from scratch on resource-constrained platforms.

Figure 1.

The overall architecture of FedGTD-UAV framework.

The core innovation lies in our two-stage optimization strategy. First, during the central pre-training phase, we leverage transfer learning to bootstrap model capabilities:

$$\theta_0 = \arg\min_{\theta} \mathcal{L}_{\text{det}}(\theta; \mathcal{D}_{\text{pub}})$$

where $\mathcal{L}_{\text{det}}$ denotes the detection loss function, $\mathcal{D}_{\text{pub}}$ is the public pre-training dataset, and $\theta_0$ denotes the resulting pre-trained weights. Second, in the federated fine-tuning phase, each UAV selectively adapts only a sparse parameter subset $\theta_s \subset \theta$ using its private data $\mathcal{D}_i$, dramatically reducing computation and communication overhead. The criteria for selecting $\theta_s$ are based on the architectural sensitivity and task-specificity of different network components. Specifically, we fine-tune only the parameters of the detection head and the neck network, while keeping the backbone feature extractor frozen. This is motivated by the fact that the backbone, pre-trained on large-scale datasets, learns general feature representations that are transferable across domains. In contrast, the neck and head layers are more specialized for the specific detection task and benefit most from adaptation to local data distributions, thereby maximizing the efficacy of fine-tuning while minimizing the number of trainable parameters. This dual-phase approach achieves three critical advantages: (1) communication efficiency through compact update exchange, (2) accelerated convergence via superior initialization, and (3) inherent data privacy preservation by design.
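The split between frozen and fine-tuned parameters can be illustrated with a minimal sketch. It assumes that parameters are stored under names whose prefixes identify the network stage; the prefixes `neck.` and `head.`, the toy parameter names, and their sizes are hypothetical and are not taken from the actual model.

```python
from typing import Dict, List

# Hypothetical parameter-name prefixes; a real detector's layer names will differ.
TRAINABLE_PREFIXES = ("neck.", "head.")


def select_sparse_subset(state: Dict[str, List[float]]) -> Dict[str, List[float]]:
    """Return only the parameters that are fine-tuned locally and exchanged."""
    return {name: values for name, values in state.items()
            if name.startswith(TRAINABLE_PREFIXES)}


def communicated_fraction(state: Dict[str, List[float]],
                          subset: Dict[str, List[float]]) -> float:
    """Share of all scalar weights that must be transmitted per round."""
    total = sum(len(v) for v in state.values())
    sent = sum(len(v) for v in subset.values())
    return sent / total


# Toy model: a large frozen backbone plus a small trainable neck and head.
toy_state = {
    "backbone.conv1.weight": [0.0] * 600,
    "backbone.conv2.weight": [0.0] * 200,
    "neck.fuse.weight": [0.0] * 120,
    "head.cls.weight": [0.0] * 80,
}
subset = select_sparse_subset(toy_state)
fraction = communicated_fraction(toy_state, subset)
print(sorted(subset), fraction)
```

In this toy setting only one fifth of the weights leave the device; the real ratio depends on the actual sizes of the backbone, neck, and head.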

3.2. Federated Transfer Learning Protocol

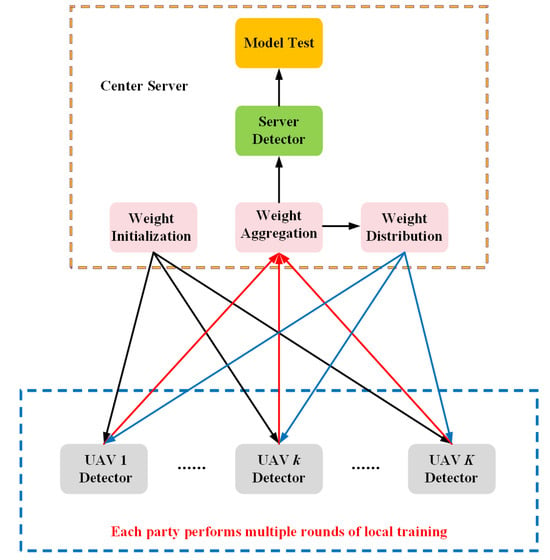

Building upon the Flower framework [39], we develop a customized FTL protocol specifically engineered for UAV swarm dynamics. Algorithm 1 formally describes the FedGTD-UAV training protocol, with Figure 2 illustrating the workflow of a single communication round. This process operates iteratively over T communication rounds according to the following cyclical procedure.

Figure 2.

FedGTD-UAVs workflow for a single communication round.

UAV Update enhances FL in UAV swarms through three swarm-aware innovations. First, dynamic UAV selection adapts to UAV availability by considering real-time connectivity and energy constraints. Second, selective parameter fine-tuning updates only the task-specific weights $\theta_s$ for $E$ local epochs, minimizing the local detection loss: $\min_{\theta_s} \mathcal{L}_{\text{det}}(\theta_s; \mathcal{D}_i)$. Third, weighted aggregation scales UAV updates proportionally to their dataset size $|\mathcal{D}_i|$, mitigating data distribution skew across the swarm.
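One common way to write dataset-size-weighted aggregation is shown below. The notation is an assumption introduced for illustration: $\mathcal{S}_t$ is the set of UAVs selected in round $t$, $n_i$ is the number of local samples on UAV $i$, and $\theta_{s,i}^{t+1}$ is the fine-tuned subset returned by UAV $i$.

$$\theta_s^{t+1} = \sum_{i \in \mathcal{S}_t} \frac{n_i}{\sum_{j \in \mathcal{S}_t} n_j}\, \theta_{s,i}^{t+1}$$

Under this form, a UAV holding three times as many samples as another contributes three times the weight to the aggregated subset.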

Algorithm 1.

Federated Transfer Learning Protocol for FedGTD-UAVs.

The FTL paradigm reduces on-device computation via prior knowledge, while selective parameter updates minimize bandwidth usage. Inherent data locality ensures privacy and disconnection resilience.
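A single communication round, as described above, can be sketched as follows. This is an illustrative outline under stated assumptions rather than the protocol as implemented: the client fields (`connected`, `energy`, `num_samples`, `target`), the energy threshold, and the toy `local_update` rule that stands in for real detector training are all hypothetical.

```python
from typing import Dict, List

Params = Dict[str, List[float]]


def select_available(clients: List[dict], min_energy: float) -> List[dict]:
    """Keep UAVs that are connected and have enough battery (hypothetical fields)."""
    return [c for c in clients if c["connected"] and c["energy"] >= min_energy]


def local_update(global_subset: Params, client: dict, epochs: int) -> Params:
    """Stand-in for E epochs of local fine-tuning: each epoch moves every
    weight halfway toward a client-specific target value."""
    updated = {name: list(values) for name, values in global_subset.items()}
    for _ in range(epochs):
        for name in updated:
            updated[name] = [w + 0.5 * (client["target"] - w) for w in updated[name]]
    return updated


def weighted_aggregate(updates: List[Params], sizes: List[int]) -> Params:
    """Average the returned subsets, weighting each UAV by its sample count."""
    total = float(sum(sizes))
    return {
        name: [
            sum(u[name][k] * n for u, n in zip(updates, sizes)) / total
            for k in range(len(updates[0][name]))
        ]
        for name in updates[0]
    }


def run_round(global_subset: Params, clients: List[dict],
              min_energy: float = 0.2, epochs: int = 1) -> Params:
    selected = select_available(clients, min_energy)
    if not selected:
        return global_subset  # no reachable UAV: keep the previous subset
    updates = [local_update(global_subset, c, epochs) for c in selected]
    sizes = [c["num_samples"] for c in selected]
    return weighted_aggregate(updates, sizes)


clients = [
    {"connected": True, "energy": 0.9, "num_samples": 300, "target": 1.0},
    {"connected": True, "energy": 0.8, "num_samples": 100, "target": 3.0},
    {"connected": False, "energy": 0.9, "num_samples": 500, "target": 9.0},
    {"connected": True, "energy": 0.1, "num_samples": 500, "target": 9.0},
]
global_subset = {"head.cls.weight": [0.0, 0.0]}
new_subset = run_round(global_subset, clients)
print(new_subset)
```

Only the first two UAVs participate here: the third is disconnected and the fourth falls below the energy threshold, so neither influences the aggregated weights.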

3.3. SPD-Conv for Spatial Fidelity Preservation

Conventional detectors typically rely on lossy downsampling operations, such as strided convolutions or max-pooling, to expand the receptive field and reduce computational cost. However, these operations inevitably discard fine-grained spatial information. This shortcoming is particularly prominent in aerial image object detection, where targets (such as vehicles and pedestrians) often occupy very few pixels—usually less than 20 pixels—so that even minor loss of spatial information may lead to missed detections or localization errors.

To address this issue, we propose a novel structure called SPD-Conv (Space-to-Depth Convolution) to replace conventional destructive downsampling methods. SPD-Conv adopts a “reorganize-then-refine” paradigm that achieves effective feature map scaling without losing information. As depicted in Figure 3, SPD-Conv transforms an input feature map $X \in \mathbb{R}^{H \times W \times C}$ through two stages:

$$X' = \mathrm{SPD}(X; s) \in \mathbb{R}^{\frac{H}{s} \times \frac{W}{s} \times s^2 C}, \qquad Y = \mathrm{Conv}_{\text{stride}=1}(X')$$

where $\mathrm{SPD}(\cdot)$ denotes a parameter-free space-to-depth transformation that reorganizes spatial information into channels, with $s$ as a scaling factor. This decoupling offers three advantages: (1) preservation of spatial details critical to small-target detection, (2) elimination of aliasing artifacts inherent in strided convolutions, and (3) efficient integration into CNN backbones with minimal computational overhead. As a model-agnostic primitive, SPD-Conv enhances architectures (e.g., YOLO, SSD, RetinaNet) while significantly improving small-object sensitivity. By constructing information-rich feature hierarchies, it directly addresses core UAV-based detection challenges and enables robust perception in occluded environments.
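The space-to-depth stage can be made concrete with a small pure-Python sketch. It assumes a feature map stored as nested lists indexed `[row][col][channel]` and a particular ordering of the sub-maps along the channel axis; that ordering is a convention chosen here for illustration. The subsequent non-strided convolution is omitted, and the inverse function is included only to show that no values are discarded.

```python
from typing import List

FeatureMap = List[List[List[float]]]  # indexed as [row][col][channel]


def space_to_depth(x: FeatureMap, s: int = 2) -> FeatureMap:
    """Rearrange each s-by-s spatial block into the channel dimension."""
    h, w = len(x), len(x[0])
    if h % s or w % s:
        raise ValueError("height and width must be divisible by the scale")
    out = []
    for i in range(0, h, s):
        row = []
        for j in range(0, w, s):
            channels: List[float] = []
            # Visit the s*s offsets in a fixed order and stack their channels.
            for di in range(s):
                for dj in range(s):
                    channels.extend(x[i + di][j + dj])
            row.append(channels)
        out.append(row)
    return out


def depth_to_space(y: FeatureMap, s: int = 2) -> FeatureMap:
    """Inverse rearrangement, included to show that nothing was lost."""
    hs, ws = len(y), len(y[0])
    c = len(y[0][0]) // (s * s)
    x = [[[] for _ in range(ws * s)] for _ in range(hs * s)]
    for i in range(hs):
        for j in range(ws):
            for di in range(s):
                for dj in range(s):
                    start = (di * s + dj) * c
                    x[i * s + di][j * s + dj] = y[i][j][start:start + c]
    return x


# A 4x4 single-channel map whose value encodes its position (row * 4 + col).
feature = [[[float(4 * r + c)] for c in range(4)] for r in range(4)]
packed = space_to_depth(feature, s=2)
restored = depth_to_space(packed, s=2)
print(len(packed), len(packed[0]), len(packed[0][0]))
```

The output resolution is halved in each spatial dimension while the channel count grows by a factor of four, so the total number of values is unchanged, in contrast to a stride-2 convolution or pooling layer.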

Figure 3.

(a) Original image. (b) Transformed feature map. (c) Four sub-images after downsampling (scale = 2). (d) Sub-feature maps concatenated along channel dimension. (e) Feature refinement via non-strided convolution on this information-rich representation.

3.4. GCNet for Contextual Ambiguity Resolution

Traditional backbone networks suffer from limited receptive fields in their generated feature maps, leading to performance degradation in UAV scenarios with severe target occlusions. To address this challenge, we introduce a Global Context (GC) module with targeted simplifications and lightweight design adaptations. While preserving its capability to capture long-range dependencies, we significantly reduce the computational complexity of the module, enabling seamless integration into our real-time object detection framework. This module combines the advantages of Non-local Networks and Squeeze-and-Excitation Networks (as shown in Figure 4c), providing a concise and efficient method for global context modeling. As depicted in Figure 4b, the simplified non-local block can be abstracted into three steps.

Figure 4.

Global context (GC) block architecture. The module computes a global attention map using a 1 × 1 convolution and softmax. This map aggregates features into a single context vector. A lightweight bottleneck, with two 1 × 1 convolutions, LayerNorm, and ReLU, transforms this context feature. The transformed feature is then added to the original input, enriching features with global context.

The first step is global attention pooling. A 1 × 1 convolution and a softmax function are employed to obtain attention weights, followed by attention pooling to acquire global context features. The second step is feature transformation via a 1 × 1 convolution $W_v$. The third step is feature aggregation. The global context features are aggregated onto the features at each position using addition. We regard this as a global context modeling framework (as shown in Figure 4a). With the detailed architecture illustrated in Figure 4d, the module processes input features through

$$c = \sum_{j=1}^{N_p} \frac{\exp(W_k x_j)}{\sum_{m=1}^{N_p} \exp(W_k x_m)}\, x_j, \qquad z_i = x_i + W_{v2}\,\mathrm{ReLU}\big(\mathrm{LN}(W_{v1}\, c)\big)$$

where $x_i$ and $z_i$ are the input and output features at position $i$, and $W_k$, $W_{v1}$, and $W_{v2}$ are learnable transformations. This design reduces computational complexity from $O(N_p^2)$ to $O(N_p)$ for $N_p$ spatial positions, while addressing two key challenges: (1) partial observations in occlusions through holistic context integration and (2) false positives in cluttered backgrounds via attention-based noise suppression. The synthesized global context $c$ resolves ambiguities by enriching local features with scene-level semantics. This enables robust detection of occluded targets and suppression of distracting clutter under real-time constraints.
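The three steps of the GC block (attention pooling, bottleneck transform, broadcast addition) can be sketched in plain Python. This is a simplified illustration under assumptions: features are stored as a list of per-position channel vectors, the names `w_k`, `w_v1`, and `w_v2` are placeholders for the learnable transformations, the toy weights are arbitrary, and LayerNorm is applied without learnable scale or shift.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[Vector]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def softmax(scores: Vector) -> Vector:
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def layer_norm(v: Vector, eps: float = 1e-5) -> Vector:
    mean = sum(v) / len(v)
    var = sum((a - mean) ** 2 for a in v) / len(v)
    return [(a - mean) / math.sqrt(var + eps) for a in v]


def gc_block(x: List[Vector], w_k: Vector, w_v1: Matrix,
             w_v2: Matrix) -> Tuple[List[Vector], Vector, Vector]:
    # 1) Global attention pooling: one score per position, softmax over positions.
    attn = softmax([sum(a * b for a, b in zip(w_k, xj)) for xj in x])
    channels = len(x[0])
    context = [sum(a * xj[ch] for a, xj in zip(attn, x)) for ch in range(channels)]
    # 2) Bottleneck transform: reduce, normalize, ReLU, expand.
    hidden = [max(0.0, h) for h in layer_norm(matvec(w_v1, context))]
    delta = matvec(w_v2, hidden)
    # 3) Aggregation: add the same context-derived vector to every position.
    z = [[xc + d for xc, d in zip(xi, delta)] for xi in x]
    return z, attn, context


# Three spatial positions with four channels each; all weights are toy values.
x = [[1.0, 0.0, 2.0, 0.0], [0.0, 1.0, 0.0, 2.0], [2.0, 2.0, 1.0, 1.0]]
w_k = [0.0, 0.0, 0.0, 0.0]                         # zero scores give uniform attention
w_v1 = [[1.0, 0.0, 0.0, 0.0], [0.0, 2.0, 0.0, 0.0]]  # 4 -> 2 reduction
w_v2 = [[0.0, 1.0], [0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]  # 2 -> 4 expansion
z, attn, context = gc_block(x, w_k, w_v1, w_v2)
print(attn, context)
```

The context vector is computed once and shared by all positions, so the cost grows linearly with the number of positions rather than quadratically as in a full non-local block.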

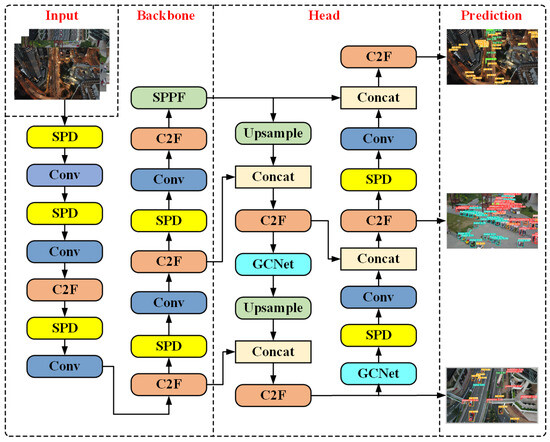

3.5. Integrated Architecture Design

The complete GTDetector-UAVs architecture (Figure 5) integrates these innovations. The backbone utilizes SPD-Conv blocks combined with C2F modules for multi-scale feature extraction, preserving spatial details across all scales. The neck network incorporates SPPF to enhance contextual understanding, while the detection head leverages GCNet blocks to improve contextual reasoning by capturing long-range dependencies, thereby strengthening robustness against occluded objects. This co-designed architecture balances three roles: SPD-Conv ensures sensitivity to small targets, GCNet mitigates contextual ambiguities, and the federated framework facilitates efficient swarm-wide knowledge sharing. Within the FedGTD-UAVs framework, GTDetector-UAVs is implemented as a unified target detector for both local inference on UAVs and global aggregation at the server.

Figure 5.

Architecture of the proposed GTDetector-UAVs.

4. Experimental Validation and Analysis

To evaluate the efficacy and generalizability of FedGTD-UAVs for collaborative perception in UAV swarms, we conduct comprehensive experiments across multiple dimensions. This section details the experimental setup, including (1) benchmark datasets and preprocessing, (2) implementation details (hardware and software), and (3) evaluation metrics. Our analysis examines three key aspects: component contributions via ablation studies, FL robustness under non-IID data distributions, and comparisons with state-of-the-art methods.

4.1. Dataset Construction and Preprocessing

We validate FedGTD-UAVs using two complementary aerial datasets with distinct characteristics.

VisDrone2019, curated and publicly released by the AISKYEYE Team (Tianjin, China), is a widely adopted benchmark in UAV visual analysis. It includes 261,908 video frames and 10,209 static images from 14 Chinese cities, spanning urban to rural scenes, varying object densities, and 10 categories with a long-tail distribution. The static images are split into 6471 for training, 548 for validation, and 3190 for testing. As shown in Figure 6, cars dominate (62.3%), while awning-tricycles are rare (0.7%), mirroring real-world patterns and posing generalization challenges.

Figure 6.

Class distribution in VisDrone2019 dataset, reflecting real-world transportation frequency.

CARPK was developed and open-sourced by the Shanghai AI Laboratory (Shanghai, China), serving as a critical benchmark for large-scale parking lot vehicle detection research. It contains 89,777 vehicle instances at 40 m altitude (1280 × 720 resolution) from four parking lots. We apply an 8:3:3 split, yielding 1120 training, 420 validation, and 420 testing samples for domain adaptation evaluation.

To emulate real-world FL challenges, we apply two partitioning strategies. Stratified IID partitioning preserves class proportions per UAV via stratified sampling, with a reserved global test set.

For non-IID scenarios, we use Dirichlet partitioning after merging the training and validation sets, with the concentration parameter vector $\boldsymbol{\alpha}$ defining sub-dataset weights. Smaller $\alpha$ values produce highly skewed and class-imbalanced client data, effectively mimicking challenging but realistic UAV swarm scenarios where devices operate in distinct regions or perform different tasks. With the $\alpha$ setting adopted in our experiments, this generates partitions with heterogeneity, class imbalance, and dependencies, as shown in Figure 7. The original test set remains unchanged for evaluation.
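A label-based Dirichlet split can be sketched as follows. This is a minimal illustration under assumptions, not the exact partitioning code used in the experiments: each sample is assumed to carry a single class label (detection images contain several objects, so a dominant class would have to be chosen first), and the values `alpha=0.3`, four clients, and the synthetic labels are hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, List


def dirichlet_sample(alpha: float, k: int, rng: random.Random) -> List[float]:
    """Draw from a symmetric Dirichlet via normalized Gamma variates."""
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(k)]
    total = sum(draws)
    return [d / total for d in draws]


def dirichlet_partition(labels: List[int], num_clients: int,
                        alpha: float, seed: int = 0) -> List[List[int]]:
    """Assign sample indices to clients, class by class, with Dirichlet shares."""
    rng = random.Random(seed)
    by_class: Dict[int, List[int]] = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    clients: List[List[int]] = [[] for _ in range(num_clients)]
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        shares = dirichlet_sample(alpha, num_clients, rng)
        start, cumulative = 0, 0.0
        for client_id, share in enumerate(shares):
            cumulative += share
            if client_id == num_clients - 1:
                end = len(indices)  # the last client takes the remainder
            else:
                end = min(len(indices), round(cumulative * len(indices)))
            clients[client_id].extend(indices[start:end])
            start = end
    return clients


# Synthetic labels for 1000 samples over 10 classes (illustrative only).
labels = [i % 10 for i in range(1000)]
parts = dirichlet_partition(labels, num_clients=4, alpha=0.3)
print([len(p) for p in parts])
```

Lower `alpha` values concentrate each class on fewer clients, while large values approach an even split; every sample is assigned to exactly one client in either case.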

Figure 7.

Distribution of categories in the dataset after Dirichlet partitioning (). Categories: 0: Pedestrian, 1: People, 2: Bicycle, 3: Car, 4: Van, 5: Truck, 6: Tricycle, 7: Awning-tricycle, 8: Bus, 9: Motor.

This dual-dataset approach assesses performance in complex environments (VisDrone2019), task-specific generalization (CARPK), and resilience to data skew (Dirichlet partitioning), addressing key gaps in aerial federated perception.

4.2. Experimental Setup

For reproducibility and fair comparison, all experiments use the hardware and software configurations in Table 1. Dataset-specific protocols include 300 epochs for VisDrone2019 and 100 for CARPK, with mosaic augmentation disabled in the final 10 epochs to avoid feature distortion. Hyperparameters are consistent and detailed in Table 2.

Table 1.

Hardware and software specifications.

Table 2.

Hyperparameter configuration.

This setup ensures (1) reproducibility via containerization, (2) efficient convergence through tailored epochs, and (3) stable training by disabling augmentation late-stage. The batch size of 16 balances GPU memory and gradient stability.

4.3. Performance Evaluation Metrics

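We assess detection accuracy, efficiency, and complexity. Accuracy metrics are as follows:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}, \qquad AP = \int_0^1 P(R)\, dR, \qquad mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i$$

where $TP$, $FP$, and $FN$ are true positives, false positives, and false negatives; $N$ is the number of classes; and $AP_i$ is the AP for class $i$.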

We emphasize mAP@0.5 (IoU threshold 0.5) and mAP@0.5:0.95 (averaged over IoU 0.5 to 0.95 in 0.05 steps) for localization precision in UAV tasks.
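The building blocks of these metrics can be sketched in a few functions. This is an illustrative outline under assumptions: boxes are given as `(x1, y1, x2, y2)` corners, AP is computed with the common all-point interpolation of the precision-recall curve, and the matching of detections to ground truth (which determines the true-positive and false-positive counts at each IoU threshold) is omitted. All numeric inputs are toy values.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(recalls: Sequence[float], precisions: Sequence[float]) -> float:
    """Area under the precision-recall curve (recalls sorted ascending)."""
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Make precision monotonically non-increasing from right to left.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_ap(per_class_ap: Sequence[float]) -> float:
    return sum(per_class_ap) / len(per_class_ap)


# IoU thresholds for mAP@0.5:0.95, from 0.50 to 0.95 in steps of 0.05.
thresholds: List[float] = [round(0.5 + 0.05 * k, 2) for k in range(10)]

overlap = iou((0.0, 0.0, 2.0, 2.0), (1.0, 1.0, 3.0, 3.0))
precision, recall = precision_recall(tp=8, fp=2, fn=4)
ap = average_precision([0.5, 1.0], [1.0, 0.5])
print(overlap, precision, recall, ap, thresholds)
```

mAP@0.5 uses only the first threshold when deciding whether a detection counts as a true positive, whereas mAP@0.5:0.95 repeats the evaluation at all ten thresholds and averages the results.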

Efficiency is measured by frames per second (FPS) for real-time inference. Complexity uses FLOPs per inference and parameter count (Params) for resource assessment on UAV hardware.

This framework evaluates detection reliability (mAP variants), latency (FPS), and deployability (FLOPs/Params), addressing UAV swarm needs.

4.4. Ablation Study of Architectural Innovations

Given YOLOv8’s leading industrial performance and benchmark status in academia, we selected it as the core baseline model to rigorously evaluate the generalization capability and deployment value of our architectural innovations in complex real-world scenarios. Comprehensive ablation studies (Table 3) quantitatively validate the critical contributions of each architectural innovation component within the FedGTD-UAV framework to its overall performance.

Table 3.

Ablation study of FedGTD-UAV components on VisDrone2019 dataset.

Firstly, the SPD-Conv module significantly enhances small-target detection capabilities—essential for UAV applications—yielding a 3.1% absolute improvement in mAP@0.5 by preserving fine-grained spatial details during downsampling. Complementing this, the GCNet attention mechanism improves precision by 0.4% through contextual reasoning that effectively mitigates false positives in cluttered environments. Most notably, the FTL variant alone brings a substantial gain of 9.5% in mAP@0.5, underscoring the paramount importance of federated knowledge transfer in overcoming individual data limitations.

Secondly, the pairwise combinations reveal that FTL serves as a strong base for integration. While combining SPD-Conv and GCNet yields an additive effect (+3.5% vs. baseline), integrating either module with FTL results in higher performance gains (+4.6% and +4.7%, respectively), indicating that FTL provides a more robust feature representation for the subsequent modules to build upon.

Most notably, the full integration of all three components achieves 44.2% mAP@0.5, a 12.1% absolute gain over the baseline. This is slightly below the naive sum of the individual gains (3.1% from SPD-Conv, 0.4% from GCNet, and 9.5% from FTL, totaling 13.0%), yet it substantially outperforms every single-component and pairwise configuration. The result indicates that the components are complementary: SPD-Conv preserves critical target details, GCNet suppresses environmental noise, and FTL integrates cross-domain expertise across the UAV swarm.

Operationally, despite the computational overhead introduced by GCNet (adding 1.6M parameters), FedGTD-UAVs maintains real-time viability at 217.3 FPS (4.6 ms latency) on embedded platforms. Crucially, FTL alone delivers 78.5% of the full framework’s accuracy gain with minimal computational penalty, providing substantial deployment flexibility for resource-constrained UAV platforms where model size and inference speed are paramount.
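The latency and gain-share figures quoted above follow from short arithmetic on the reported numbers, reproduced here as a check:

$$\text{latency} = \frac{1000\ \text{ms}}{217.3} \approx 4.60\ \text{ms}, \qquad \frac{9.5}{12.1} \approx 0.785 = 78.5\%, \qquad 3.1 + 0.4 + 9.5 = 13.0$$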

4.5. Validation of GCNet Effectiveness

To evaluate the effectiveness of the GCNet attention mechanism, comparative experiments were conducted under identical experimental conditions against other mainstream attention mechanisms such as SE, HAM, and AFF. All experiments were systematically evaluated on the VisDrone2019 dataset. Table 4 provides a detailed summary of various performance metrics after integrating each attention mechanism into the model.

Table 4.

Experimental results on different attention mechanisms.

Experimental results indicate that although GCNet has a slightly deeper architecture than the SE mechanism, it significantly outperforms mainstream attention mechanisms such as SE in both detection accuracy and real-time performance, demonstrating superior comprehensive performance. Furthermore, compared to the baseline model, the lightweight GCNet introduces only a marginal increase in the number of parameters, which remains within an acceptable range. These findings sufficiently demonstrate that the proposed lightweight GCNet can effectively capture long-range dependencies in complex backgrounds, thereby markedly enhancing robustness in detecting occluded targets.

4.6. Federated Learning Performance Under Data Heterogeneity

Under homogeneous data conditions (Table 5), our federated framework exhibits robust knowledge aggregation capabilities. The global model attains 44.2% mAP@0.5—comparable to the local models (43.6–44.5%)—while demonstrating superior performance in the more stringent mAP@0.5:0.95 metric (29.5% vs. 28.5–29.1%). This represents a 0.4–1.0% improvement in localization precision, underscoring that collaborative learning enhances model robustness even in data-homogeneous environments, where local models already exhibit strong performance.

Table 5.

Performance on IID VisDrone2019 dataset (data partitioned via stratified random sampling).
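The reason mAP@0.5:0.95 is the more stringent of the two metrics can be illustrated with a short sketch. It assumes the COCO convention of averaging over ten IoU thresholds from 0.50 to 0.95 in steps of 0.05; the helper names are hypothetical and the boxes are made-up examples, not data from the paper.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

# COCO-style thresholds: 0.50, 0.55, ..., 0.95
IOU_THRESHOLDS = [0.5 + 0.05 * i for i in range(10)]

def thresholds_passed(pred, truth):
    """Count how many of the ten thresholds a single prediction satisfies."""
    overlap = iou(pred, truth)
    return sum(overlap >= t for t in IOU_THRESHOLDS)

# A 10x10 box predicted one pixel off in both directions.
truth = (0, 0, 10, 10)
pred = (1, 1, 11, 11)
print(round(iou(pred, truth), 3))      # 0.681
print(thresholds_passed(pred, truth))  # 4
```

The slightly shifted prediction counts as correct at the 0.5 threshold but fails six of the ten thresholds, which is why gains on mAP@0.5:0.95 are read as gains in localization precision.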

Under heterogeneous conditions with severe data skew (Table 6), the federated framework displays remarkable resilience to distributional disparities. Local models trained on skewed distributions exhibit substantial performance fragmentation, with mAP@0.5 varying from 37.9% to 59.2%. In contrast, the global model achieves a consistent 43.6% mAP@0.5, surpassing three of the local UAV models by absolute margins of 3.9–5.7 percentage points, with 64.2% precision and 31.7% recall. The 28.1% mAP@0.5:0.95 further attests to enhanced localization stability amid challenging data heterogeneity. Notably, while the proposed framework demonstrates strong performance in addressing statistical heterogeneity (non-IID data), evaluating its robustness under adversarial conditions (e.g., Byzantine attacks) remains a critical extension for future research, particularly for deployment in safety-critical scenarios.

Table 6.

Performance on non-IID VisDrone2019 dataset (non-IID partitioning via Dirichlet distribution with ).
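A common way to produce such a Dirichlet-based non-IID split is sketched below. This is an illustrative sketch rather than the authors' partitioning script: it assumes each image has been assigned a single label (for example, its dominant category), the function name is hypothetical, and the concentration parameter `alpha` is left as an argument because its value is not recoverable from this section. Smaller `alpha` values give more severe label skew.

```python
import numpy as np

def dirichlet_partition(labels, num_clients, alpha, seed=0):
    """Split sample indices across clients with label skew controlled by alpha."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    client_indices = [[] for _ in range(num_clients)]

    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)
        rng.shuffle(idx)
        # Share of this class that each client receives.
        shares = rng.dirichlet(alpha * np.ones(num_clients))
        # Turn cumulative shares into split points within idx.
        cuts = (np.cumsum(shares)[:-1] * len(idx)).astype(int)
        for client, part in enumerate(np.split(idx, cuts)):
            client_indices[client].extend(part.tolist())

    return client_indices
```

Every sample is assigned to exactly one client, so the union of the client subsets reproduces the original dataset while the per-client class proportions differ.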

To further validate the superiority of our framework, we compared FedGTD-UAVs with two classic federated learning algorithms under the same non-IID setting. As shown in Table 7, FedAvg and FedProx achieved only marginal improvements over isolated local training and performed poorly under severe data heterogeneity. This is because they aggregate the entire model, and under non-IID data such aggregation can distort the global model through client drift. In contrast, our method achieved a significantly higher mAP@0.5, outperforming FedAvg by 3.5% and FedProx by 3.9%. Moreover, since our method transmits only a small subset of critical parameters, it is much better suited to bandwidth-constrained UAV networks than approaches that transmit the full model.

Table 7.

Performance comparison with classical federated learning algorithms under non-IID data.
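The difference between full-model aggregation and aggregating only a parameter subset can be sketched as follows. This is a simplified illustration under stated assumptions, not the paper's implementation: the parameter names (`backbone`, `head`) and the choice of which parameters are shared are hypothetical, and the sample-count weighting simply follows the FedAvg convention.

```python
def aggregate_shared(global_params, client_updates, shared_keys):
    """Weighted average restricted to the parameters named in shared_keys.

    global_params:  dict mapping parameter name -> list of floats
    client_updates: list of (num_samples, params) pairs; each params dict
                    only needs to contain the shared keys
    shared_keys:    names of the parameters that clients transmit
    """
    total = sum(n for n, _ in client_updates)
    # Parameters outside shared_keys keep their pre-trained global values.
    new_params = {name: list(values) for name, values in global_params.items()}
    for key in shared_keys:
        size = len(global_params[key])
        new_params[key] = [
            sum(n * params[key][i] for n, params in client_updates) / total
            for i in range(size)
        ]
    return new_params

# Two hypothetical UAV clients that fine-tune and upload only the head.
global_params = {"backbone": [1.0, 1.0], "head": [0.0, 0.0]}
updates = [(100, {"head": [1.0, 2.0]}), (300, {"head": [3.0, 6.0]})]
print(aggregate_shared(global_params, updates, ["head"]))
# {'backbone': [1.0, 1.0], 'head': [2.5, 5.0]}
```

Only the entries listed in `shared_keys` cross the network, so the upload size scales with the subset rather than with the full model, and the frozen parameters cannot drift apart across clients.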

As illustrated in Figure 8, our framework attains significantly accelerated convergence, reaching optimal performance in 200 epochs—33% faster than centralized training. This efficiency arises from parallelized learning across UAV clients, where local updates generate momentum during global aggregation, thereby preserving robustness against data heterogeneity.

Figure 8.

Convergence comparison under IID and non-IID settings. FedGTD-UAVs achieves 33% faster convergence than centralized training, reaching optimum in 200 epochs.

Table 8 underscores a key advantage of our federated approach: superior localization precision, as evidenced by 29.5% mAP@0.5:0.95 under IID conditions—a 10.5% relative improvement over centralized training—while maintaining comparable classification confidence.

Table 8.

Comparative performance across training paradigms on VisDrone2019 dataset.

These results substantiate that, notwithstanding non-IID discrepancies, the framework sustains performance close to that under IID conditions: the global model reaches 43.6% mAP@0.5 under non-IID partitioning versus 44.2% under IID partitioning, a retention of about 98.6%. This demonstrates strong robustness for perception tasks in dynamic environments.
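The degree of retention can be checked directly from the global-model scores quoted above for the IID and non-IID settings. The calculation assumes that retention is defined as the non-IID score divided by the IID score, which this section does not spell out:

```latex
\text{retention}_{\text{mAP@0.5}} = \frac{43.6}{44.2} \approx 0.986,
\qquad
\text{retention}_{\text{mAP@0.5:0.95}} = \frac{28.1}{29.5} \approx 0.953
```

Under this definition, the figure of over 98% corresponds to mAP@0.5; the stricter mAP@0.5:0.95 metric is retained at roughly 95%.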

4.7. Benchmarking Against State-of-the-Art Methods

The category-specific analysis in Table 9 demonstrates substantial improvements in safety-critical classes, including trucks (+15.9% mAP@0.5), motorcycles (+15.8%), and pedestrians (+15.1%). These enhancements stem from our architectural innovations: SPD-Conv bolsters small-target detection, GCNet mitigates occlusion-related ambiguities, and FTL facilitates effective cross-domain adaptation.

Table 9.

Per-category performance comparison on the VisDrone2019 dataset.
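The claim that SPD-Conv helps small targets rests on its space-to-depth step, which halves the spatial resolution without discarding any pixel. The following NumPy sketch shows that rearrangement in isolation. It is an illustration under assumptions: the function name and channel ordering are ours, and in SPD-Conv the rearranged tensor would subsequently pass through a non-strided convolution, which is omitted here.

```python
import numpy as np

def space_to_depth(x, scale=2):
    """Rearrange (C, H, W) into (C * scale**2, H // scale, W // scale)."""
    c, h, w = x.shape
    if h % scale or w % scale:
        raise ValueError("H and W must be divisible by scale")
    # Split each spatial axis into (block index, offset within block).
    x = x.reshape(c, h // scale, scale, w // scale, scale)
    # Move the two offset axes next to the channel axis.
    x = x.transpose(0, 2, 4, 1, 3)
    return x.reshape(c * scale * scale, h // scale, w // scale)

x = np.arange(16).reshape(1, 4, 4)
y = space_to_depth(x)
print(y.shape)        # (4, 2, 2)
print(y[0].tolist())  # [[0, 2], [8, 10]]
```

Each output channel is one of the four sub-sampled grids of the input, so every original value survives the downsampling, unlike a stride-2 convolution or pooling layer, which skips or merges positions.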

Comprehensive benchmarking in Table 10 establishes new performance standards: 44.2% mAP@0.5 on VisDrone2019 (+12.1% vs. YOLOv8s) and 73.2% mAP@0.5:0.95 on CARPK (+7.2% vs. state-of-the-art), achieved with a 54.7% reduction in computational requirements compared to YOLOv7.

Table 10.

Comparison with state-of-the-art methods on aerial datasets.

Figure 9 illustrates the consistent superiority of our approach across varying confidence thresholds, maintaining over 60% precision at 70% recall—a crucial attribute for safety-sensitive UAV operations, where minimizing false negatives is paramount.

Figure 9.

Precision–recall curves for state-of-the-art models on the VisDrone2019 dataset.

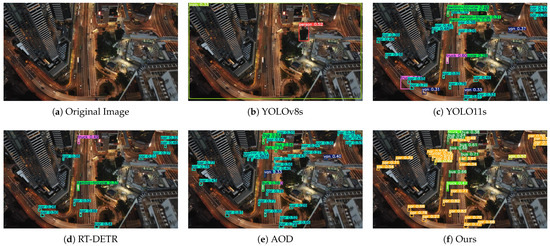
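An operating point such as "precision at 70% recall" can be read off a precision–recall curve as sketched below. The sketch assumes detections have already been matched to ground truth at a fixed IoU threshold; the function name and the sample detections are hypothetical and do not come from the paper's experiments.

```python
def precision_at_recall(detections, num_ground_truth, target_recall):
    """Best precision among operating points whose recall >= target_recall.

    detections: list of (confidence, is_true_positive) pairs, one per
                predicted box after matching against ground truth.
    Returns None if the target recall is never reached.
    """
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    true_positives = 0
    best = None
    # Lowering the confidence threshold admits one more detection per step.
    for rank, (_, is_tp) in enumerate(ranked, start=1):
        true_positives += int(is_tp)
        recall = true_positives / num_ground_truth
        precision = true_positives / rank
        if recall >= target_recall and (best is None or precision > best):
            best = precision
    return best

detections = [(0.9, True), (0.8, True), (0.7, False), (0.6, True), (0.5, False)]
print(precision_at_recall(detections, num_ground_truth=4, target_recall=0.7))  # 0.75
```

A model whose curve lies higher at a given recall produces fewer false alarms for the same fraction of targets found.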

To provide an intuitive visual assessment, we present qualitative comparisons of the proposed FedGTD-UAVs model against leading detection models on representative scenes from the VisDrone2019 dataset. These scenes cover varying lighting conditions (e.g., bright daylight and low-light nighttime) and occlusion levels (e.g., minimal and dense). The results are illustrated in Figures 10–12.

Figure 10.

Qualitative comparison under bright daylight conditions with slight occlusion on the VisDrone2019 dataset.

Figure 11.

Qualitative comparison under low-light conditions at night on the VisDrone2019 dataset.

Figure 12.

Qualitative comparison under complex lighting conditions with heavy occlusion on the VisDrone2019 dataset.

Across these comparisons, FedGTD-UAVs consistently outperforms the baselines. In bright daylight with minimal occlusion (Figure 10), our model localizes targets more accurately; in the right half of the figure in particular, the other models show missed or false detections that our model avoids. This is likely attributable to SPD-Conv’s enhancement of spatial details and GCNet’s modeling of global context. Under low-light nighttime conditions (Figure 11), where the baselines degrade because features are blurred, FedGTD-UAVs maintains robust detection, benefiting from the cross-domain knowledge fusion of federated transfer learning, which yields more generalized representations. For instance, on the highway in the middle of the figure, our model detects vehicles markedly more accurately than the other models. In densely occluded scenes with mixed lighting (Figure 12), the areas near the edges of the figure, which are prone to occlusion by trees or buildings, deserve particular attention: our model retains its advantage there as well. It minimizes missed and false detections through synergistic module interactions: SPD-Conv preserves critical details, GCNet resolves contextual ambiguities, and the federated framework enables efficient knowledge sharing across clients. These results underscore the model’s efficacy in addressing real-world UAV detection challenges.

These results substantiate the efficacy of the three proposed enhancements: SPD-Conv’s spatial detail preservation, GCNet’s global context modeling, and federated transfer learning’s cross-domain knowledge fusion. Consequently, FedGTD-UAVs adapts well to target occlusions under varying lighting conditions and in complex environments, making it well suited to real-time object detection in dynamic UAV applications such as road surveillance.

5. Conclusions

This study demonstrates that the proposed FedGTD-UAVs framework represents a significant advancement over existing federated learning methods for UAV swarms. By systematically addressing the key limitations of prior work (susceptibility to non-IID data degradation, inefficiency in detecting small targets, and the lack of a privacy-preserving collaborative mechanism), our work provides a more robust, accurate, and practical solution for real-world deployment. The framework is specifically designed to address three key challenges in UAV swarm perception: reliable detection of small targets, robustness to occlusions, and resilience to data heterogeneity. By integrating SPD-Conv, GCNet, and federated transfer learning, our architecture delivers superior performance in aerial object detection. Specifically, SPD-Conv enhances detection accuracy by preserving critical spatial details of small targets, while GCNet strengthens robustness against occluded objects through contextual reasoning that effectively captures long-range dependencies. Furthermore, the federated transfer learning mechanism enables efficient knowledge sharing across the swarm, thereby overcoming inherent data heterogeneity issues in distributed systems.

Extensive evaluations demonstrate that FedGTD-UAVs sets new benchmarks across multiple metrics. On the VisDrone2019 dataset, it achieves 44.2% mAP@0.5, a 12.1% improvement over YOLOv8s, and retains over 98% of its performance under extreme non-IID data conditions, in contrast to isolated local training, where mAP@0.5 varies from 37.9% to 59.2% across clients (a 56.2% relative spread). The framework also supports real-time inference at 217 FPS with 57.6 GFLOPs, yielding a 3.2× better accuracy–efficiency trade-off. Furthermore, federated aggregation raises localization precision to 29.5% mAP@0.5:0.95, surpassing centralized training, which suggests that aggregation acts as an effective regularizer.

Future research efforts will focus on the following key areas: First, we will explore dynamic model pruning for bandwidth-limited swarm communications, Byzantine-robust aggregation mechanisms for contested environments, and cross-modal federated learning incorporating infrared and SAR sensors to enhance the robustness and efficiency of algorithms in complex scenarios. Second, we will combine real-world data collection with synthetic data generation techniques to build multimodal datasets covering diverse weather conditions such as rain, fog, and nighttime, which will be used to train and validate more generalizable models. Third, we will conduct large-scale UAV swarm simulations and ultimately deploy and validate the technology on physical drone platforms to promote its application in mission-critical scenarios like disaster response and perimeter security—where privacy-preserving collaborative perception is essential for success in dynamic operational environments.

Author Contributions

Conceptualization, L.Z.; methodology, X.J.; validation, Y.C.; formal analysis, X.J.; investigation, Y.C.; resources, L.Z.; data curation, X.J.; writing—original draft preparation, X.J.; writing—review and editing, L.Z.; funding acquisition, L.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Grant No. 61473114), the Key Scientific Research Projects of Higher Education Institutions in Henan Province (Grant No. 24B520006), and the Open Fund of Key Laboratory of Grain Information Processing and Control (Henan University of Technology), Ministry of Education (Grant No. KFJJ2024013).

Data Availability Statement

The original contributions presented in the study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Gohari, A.; Ahmad, A.B.; Rahim, R.B.A.; Supa’at, A.S.M.; Abd Razak, S.; Gismalla, M.S.M. Involvement of Surveillance Drones in Smart Cities: A Systematic Review. IEEE Access 2022, 10, 56611–56628.

- Zhang, S.; He, Y.; Gu, Y.; He, Y.; Wang, H.; Wang, H.; Yang, R.; Chady, T.; Zhou, B. UAV-Based Defect Detection and Fault Diagnosis for Static and Rotating Wind Turbine Blades: A Review. Nondestruct. Test. Eval. 2025, 40, 1691–1729.

- He, Y.; Liu, Z.; Guo, Y.; Zhu, Q.; Fang, Y.; Yin, Y.; Wang, Y.; Zhang, B.; Liu, Z. UAV-Based Sensing and Imaging Technologies for Power System Detection, Monitoring and Inspection: A Review. Nondestruct. Test. Eval. 2024, 39, 1–68.

- Hua, W.; Chen, Q. A Survey of Small Object Detection Based on Deep Learning in Aerial Images. Artif. Intell. Rev. 2025, 58, 162.

- Han, Y.; Zhang, H.; Li, H.; Jin, Y.; Lang, C.; Li, Y. Collaborative Perception in Autonomous Driving: Methods, Datasets, and Challenges. IEEE Intell. Transp. Syst. Mag. 2023, 15, 131–151.

- Zhu, H.; Xu, J.; Liu, S.; Jin, Y. Federated Learning on Non-IID Data: A Survey. Neurocomputing 2021, 465, 371–390.

- Dang, X.T.; Vu, B.M.; Nguyen, Q.S.; Tran, T.T.M.; Eom, J.S.; Shin, O.S. A Survey on Energy-Efficient Design for Federated Learning over Wireless Networks. Energies 2024, 17, 6485.

- Konečný, J.; McMahan, H.B.; Ramage, D.; Richtárik, P. Federated Optimization: Distributed Machine Learning for On-Device Intelligence. arXiv 2016, arXiv:1610.02527.

- Konečný, J.; McMahan, H.B.; Yu, F.X.; Richtárik, P.; Suresh, A.T.; Bacon, D. Federated Learning: Strategies for Improving Communication Efficiency. arXiv 2016, arXiv:1610.05492.

- Devarajan, G.G.; Thirunavukkarasan, M.; Mahalinghum, M.; Arockiam, D.; Wu, M.E.; Mahapatra, R.P. Explainable Federated Learning Based Secure and Transparent Object Detection Model for Autonomous Vehicles. IEEE Trans. Consum. Electron. 2025, 71, 6263–6270.

- Behera, S.; Adhikari, M.; Menon, V.G.; Khan, M.A. Large Model-Assisted Federated Learning for Object Detection of Autonomous Vehicles in Edge. IEEE Trans. Veh. Technol. 2024, 74, 1839–1848.

- Lu, Y.R.; Sun, D. Development of Real-Time Unmanned Aerial Vehicle Urban Object Detection System with Federated Learning. J. Aerosp. Inf. Syst. 2024, 21, 547–553.

- Liu, Y.; Huang, A.; Luo, Y.; Huang, H.; Liu, Y.Z.; Chen, Y.Y.; Feng, L.C.; Chen, T.J.; Yu, H.; Yang, Q. FedVision: An Online Visual Object Detection Platform Powered by Federated Learning. arXiv 2020, arXiv:2001.06202.

- Wang, S.; Li, C.; Ng, D.W.K.; Eldar, Y.C.; Poor, H.V.; Hao, Q.; Xu, C. Federated Deep Learning Meets Autonomous Vehicle Perception: Design and Verification. IEEE Netw. 2022, 37, 16–25.

- Xu, Y.Y.; Lin, C.S.; Wang, Y.C.F. Bias-Eliminating Augmentation Learning for Debiased Federated Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 20442–20452.

- Li, C.; Deng, C.; Zhang, Y.; Wan, S. Federated Meta-Learning Based Computation Offloading Approach with Energy-Delay Tradeoffs in UAV-Assisted VEC. IEEE Trans. Mob. Comput. 2025, 24, 10978–10991.

- Fu, Z.; Liu, J.; Mao, Y.; Qu, L.; Xie, L.; Wang, X. Energy-Efficient UAV-Assisted Federated Learning: Trajectory Optimization, Device Scheduling, and Resource Management. IEEE Trans. Netw. Serv. Manag. 2025, 22, 974–988.

- Niu, S.; Xu, X.; Wu, T.; Xu, Y.; Liu, C.; Sun, Z. UAV-Assisted Federated Edge Learning Framework Based on Hierarchical Differential Privacy and Model Segmentation. In Proceedings of the 2024 43rd Chinese Control Conference (CCC), Kunming, China, 28–31 July 2024; IEEE: New York, NY, USA, 2024; pp. 8792–8797.

- Al Farsi, A.; Khan, A.; Mughal, M.R.; Bait-Suwailam, M.M. Privacy and Security Challenges in Federated Learning for UAV Systems: A Systematic Review. IEEE Access 2025, 13, 86599–86615.

- Liu, G.; Cao, Z.; Liu, S.; Song, B.; Liu, Z. An Improved SSD Method for Infrared Target Detection Based on Convolutional Neural Network. J. Comput. Methods Sci. Eng. 2022, 22, 1393–1408.

- Zhang, F.; Yang, C. DSF-Net: Dual-Stream Fused Network for Video Frame Interpolation. IEEE Signal Process. Lett. 2023, 30, 1122–1126.

- Wu, X.; Wang, L.; Guan, J.; Ji, H.; Xu, L.; Hou, Y.; Fei, A. DHANet: Dual-Stream Hierarchical Interaction Networks for Multimodal Drone Object Detection. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5406013.

- Li, Z.; Lian, S.; Pan, D.; Wang, Y.; Liu, W. AD-Det: Boosting Object Detection in UAV Images with Focused Small Objects and Balanced Tail Classes. Remote Sens. 2025, 17, 1556.

- Lyu, Y.; Zhang, T.; Li, X.; Liu, A.; Shi, G. LightUAV-YOLO: A Lightweight Object Detection Model for Unmanned Aerial Vehicle Image. J. Supercomput. 2025, 81, 105.

- Betti, A.; Tucci, M. YOLO-S: A Lightweight and Accurate YOLO-Like Network for Small Target Detection in Aerial Imagery. Sensors 2023, 23, 1865.

- Bai, Z.; Pei, X.; Qiao, Z.; Wu, G.; Bai, Y. Improved YOLOv7 Target Detection Algorithm Based on UAV Aerial Photography. Drones 2024, 8, 104.

- Zhao, X.; Zhang, W.; Xia, Y.; Zhang, H.; Zheng, C.; Ma, J.; Zhang, Z. G-YOLO: A Lightweight Infrared Aerial Remote Sensing Target Detection Model for UAVs Based on YOLOv8. Drones 2024, 8, 495.

- Yu, C.; Shin, Y. MCG-RTDETR: Multi-Convolution and Context-Guided Network with Cascaded Group Attention for Object Detection in Unmanned Aerial Vehicle Imagery. Remote Sens. 2024, 16, 3169.

- Nian, Z.; Yang, W.; Chen, H. AEFFNet: Attention Enhanced Feature Fusion Network for Small Object Detection in UAV Imagery. IEEE Access 2025, 13, 26494–26505.

- Qu, J.; Li, Q.; Pan, J.; Sun, M.; Lu, X.; Zhou, Y.; Zhu, H. SS-YOLOv8: Small-Size Object Detection Algorithm Based on Improved YOLOv8 for UAV Imagery. Multimed. Syst. 2025, 31, 42.

- Han, Z.; Jia, D.; Zhang, L. LT-DETR: Lightweight UAV Object Detection and Dual Knowledge Distillation for Remote Sensing Scenarios. Meas. Sci. Technol. 2025, 36, 036005.

- Min, X.; Zhou, W.; Hu, R.; Wu, Y.; Pang, Y.; Yi, J. LWUAVDet: A Lightweight UAV Object Detection Network on Edge Devices. IEEE Internet Things J. 2024, 11, 24013–24023.

- Fan, Q.; Li, Y.; Deveci, M.; Zhong, K.; Kadry, S. LUD-YOLO: A Novel Lightweight Object Detection Network for Unmanned Aerial Vehicle. Inf. Sci. 2025, 686, 121366.

- Arranz, R.; Carraminana, D.; de Miguel, G.; Besada, J.A.; Bernardos, A.M. Application of Deep Reinforcement Learning to UAV Swarming for Ground Surveillance. Sensors 2023, 23, 8766.

- Granadeno, P.A.A.; Cleland-Huang, J. Land-Coverage Aware Path-Planning for Multi-UAV Swarms in Search and Rescue Scenarios. arXiv 2025, arXiv:2505.08060.

- Fan, J.; Lei, L.; Cai, S.; Shen, G.; Cao, P.; Zhang, L. Area Surveillance with Low Detection Probability Using UAV Swarms. IEEE Trans. Veh. Technol. 2023, 73, 1736–1752.

- Ma, T.; Lu, P.; Deng, F.; Geng, K. Air–Ground Collaborative Multi-Target Detection Task Assignment and Path Planning Optimization. Drones 2024, 8, 110.

- MS COCO. Available online: https://github.com/cocodataset/cocoapi (accessed on 6 October 2025).

- Beutel, D.J.; Topal, T.; Mathur, A.; Qiu, X.; Fernandez-Marques, J.; Gao, Y.; Sani, L.; Li, K.H.; Parcollet, T.; de Gusmão, P.P.B.; et al. Flower: A Friendly Federated Learning Research Framework. arXiv 2020, arXiv:2007.14390.

- McMahan, H.B.; Moore, E.; Ramage, D.; Hampson, S.; Arcas, B.A.Y. Communication-Efficient Learning of Deep Networks from Decentralized Data. In Proceedings of the 20th International Conference on Artificial Intelligence and Statistics (AISTATS 2017), Fort Lauderdale, FL, USA, 20–22 April 2017; PMLR 2017; Volume 54, pp. 1273–1282.

- Li, T.; Sahu, A.K.; Zaheer, M.; Sanjabi, M.; Talwalkar, A.; Smith, V. Federated Optimization in Heterogeneous Networks. Proc. Mach. Learn. Syst. 2020, 2, 429–450.

- Ultralytics-YOLOv5. Available online: https://github.com/ultralytics/YOLOv5 (accessed on 6 October 2025).

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Ultralytics-YOLOv8. Available online: https://github.com/ultralytics/ultralytics (accessed on 6 October 2025).

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-Time Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024.

- Zhang, K.; Zhou, Y. Abnormal Object Detection of the Transmission Lines with YOLOv5 and Federated Learning. In Proceedings of the 2023 IEEE International Conference on Development and Learning (ICDL), Macau, China, 9–11 November 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 512–517.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).