An Intelligent Passive System for UAV Detection and Identification in Complex Electromagnetic Environments via Deep Learning

Abstract

1. Introduction

| Method | Advantages | Disadvantages |
|---|---|---|
| Radar-based method [12,13,18,19] | High performance | Difficult to detect small UAVs; high cost |
| Optical-based method [14,15,20,21] | Provides intuitive results | Short detection range; susceptible to weather |
| Acoustic-based method [16,17,22] | Low cost | Short detection range; easily affected by noise |
| RF-based method [34,35,36,39] | Low cost; long range | Easily affected by interference |

- A passive UAV detection and identification system is proposed, with a modular architecture comprising a multi-beam directional antenna, a wideband spectrum analyzer, and a computing unit. The system enables real-time acquisition, processing, and identification of RF signals and is specifically designed to ensure robust operation in complex electromagnetic environments.

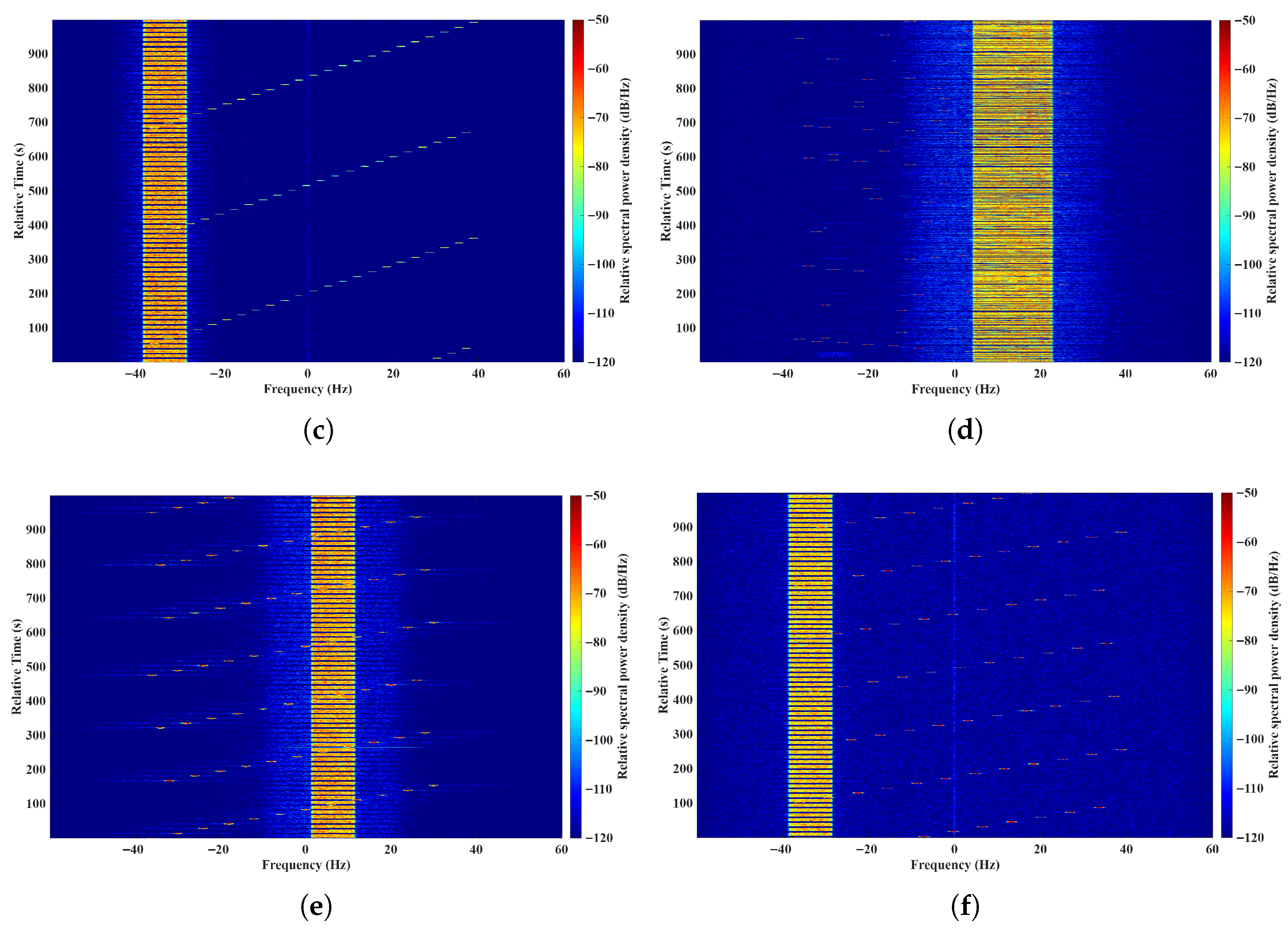

- A two-stage deep learning framework is proposed, which integrates a YOLO-based detector for frequency-hopping signals (FHSs) with a CNN-based UAV model classifier. The YOLO module rapidly and accurately localizes FHS regions in spectrograms, while the CNN extracts discriminative features from those regions for precise UAV model identification, thereby enhancing both accuracy and robustness under severe interference.

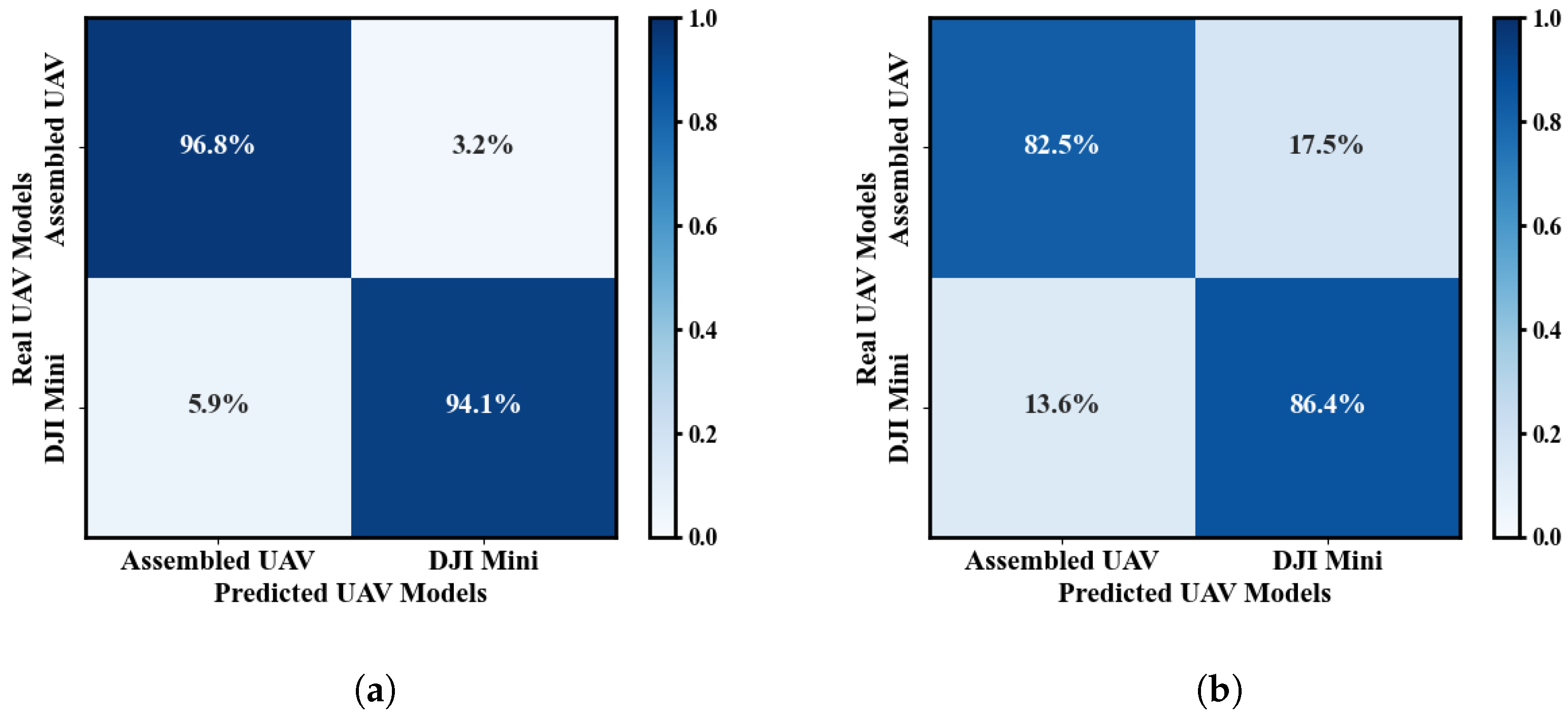

- Extensive measurements were conducted under diverse line-of-sight (LoS) and non-line-of-sight (NLoS) conditions across a campus. The results show that the proposed system achieves over 96% detection and identification accuracy under LoS conditions and improves identification accuracy by more than 15% under NLoS conditions compared with conventional full-spectrogram classification methods.
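The data flow of the two-stage framework described above (localize signal regions in a spectrogram, then classify each cropped region) can be sketched as follows. This is only an illustration under stated assumptions, not the authors' implementation: a simple energy threshold stands in for the YOLO detector, `placeholder_classifier` is a hypothetical stub where the trained CNN would go, and the FFT size, hop length, threshold margin, and synthetic test signal are all arbitrary choices.

```python
import numpy as np

def spectrogram_db(iq, n_fft=256, hop=128):
    """Return a dB-magnitude spectrogram of shape (n_fft, n_frames)."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(iq) - n_fft) // hop
    frames = np.stack([iq[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    spectrum = np.fft.fftshift(np.fft.fft(frames, axis=1), axes=1)
    return 20.0 * np.log10(np.abs(spectrum).T + 1e-12)

def detect_regions(spec_db, margin_db=15.0):
    """Stage 1 stand-in (NOT YOLO): box each contiguous run of time frames
    that contains bins more than margin_db above the median level."""
    mask = spec_db > np.median(spec_db) + margin_db
    active = mask.any(axis=0)
    boxes, start = [], None
    for t, on in enumerate(np.append(active, False)):
        if on and start is None:
            start = t
        elif not on and start is not None:
            rows = np.flatnonzero(mask[:, start:t].any(axis=1))
            boxes.append((int(rows[0]), int(rows[-1]) + 1, start, t))
            start = None
    return boxes  # (f_start, f_stop, t_start, t_stop) in bin/frame indices

def placeholder_classifier(patch):
    """Stage 2 stand-in (hypothetical): a trained CNN would map the patch to a UAV model."""
    return "unlabelled"

def identify(iq, classifier):
    """Run both stages: detect regions, crop them, classify each crop."""
    spec_db = spectrogram_db(iq)
    return [(box, classifier(spec_db[box[0]:box[1], box[2]:box[3]]))
            for box in detect_regions(spec_db)]

# Synthetic check: weak complex noise plus one strong narrowband burst.
rng = np.random.default_rng(0)
n = 16384
iq = 0.01 * (rng.standard_normal(n) + 1j * rng.standard_normal(n))
k = np.arange(4096, 8192)
iq[k] += np.exp(2j * np.pi * 0.125 * k)
results = identify(iq, placeholder_classifier)
print(results)
```

Cropping before classification is what lets the second stage ignore the rest of the spectrogram, which is the property the framework relies on under interference.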

2. Spectrogram-Based Framework for Passive UAV Detection and Identification

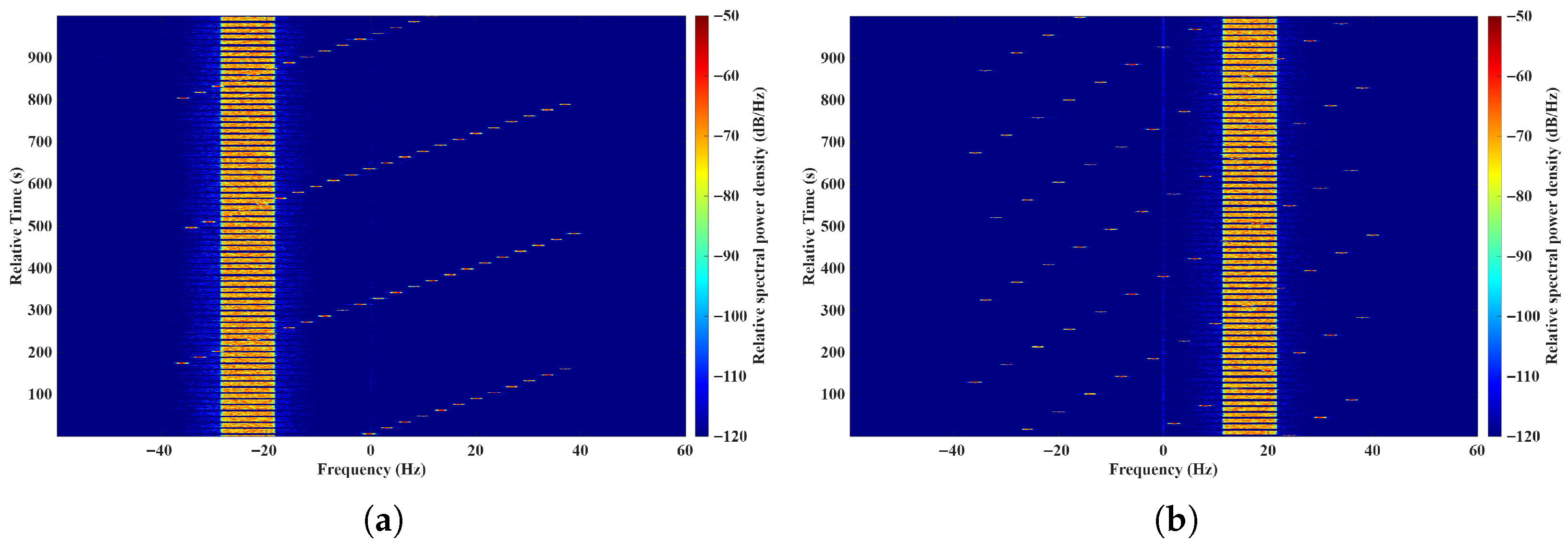

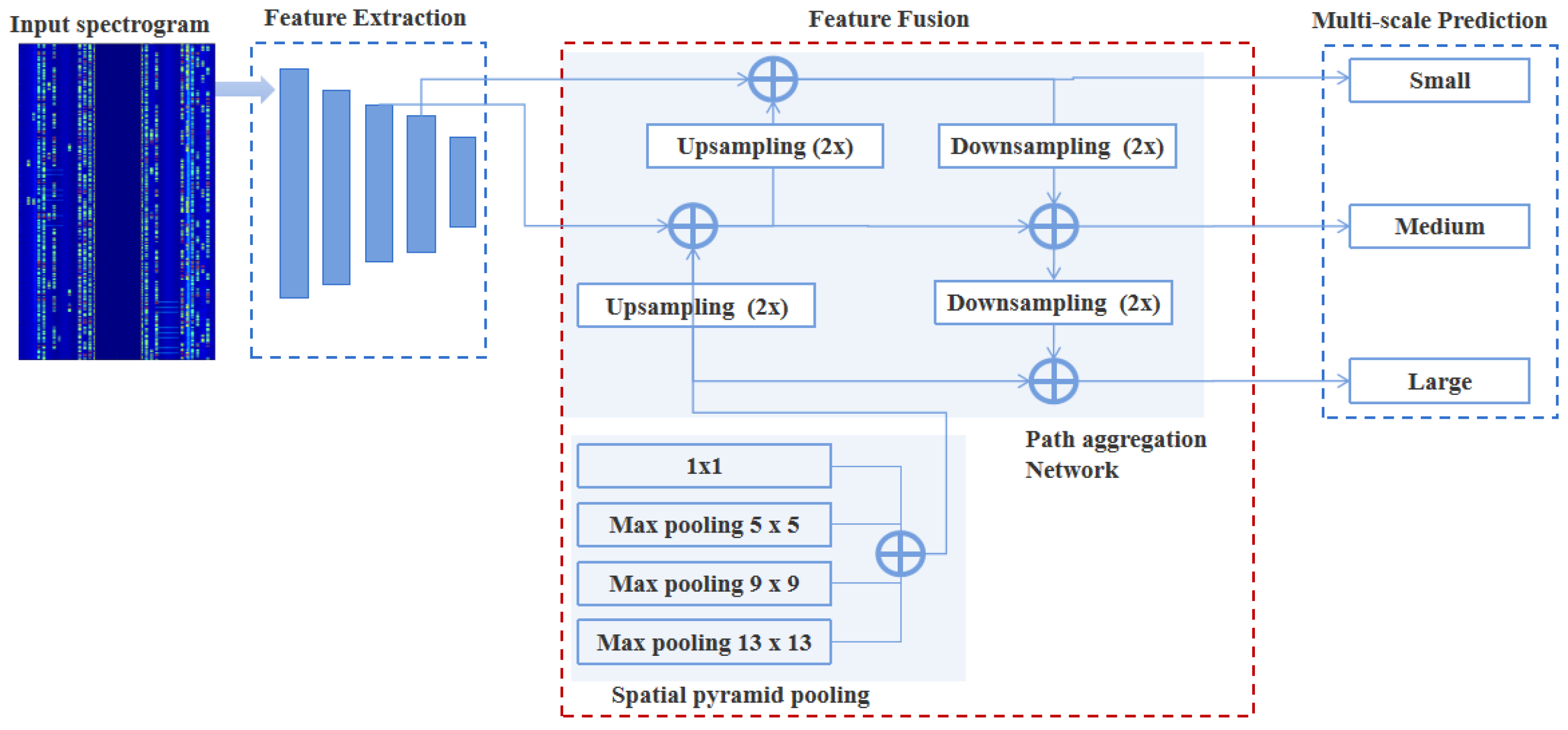

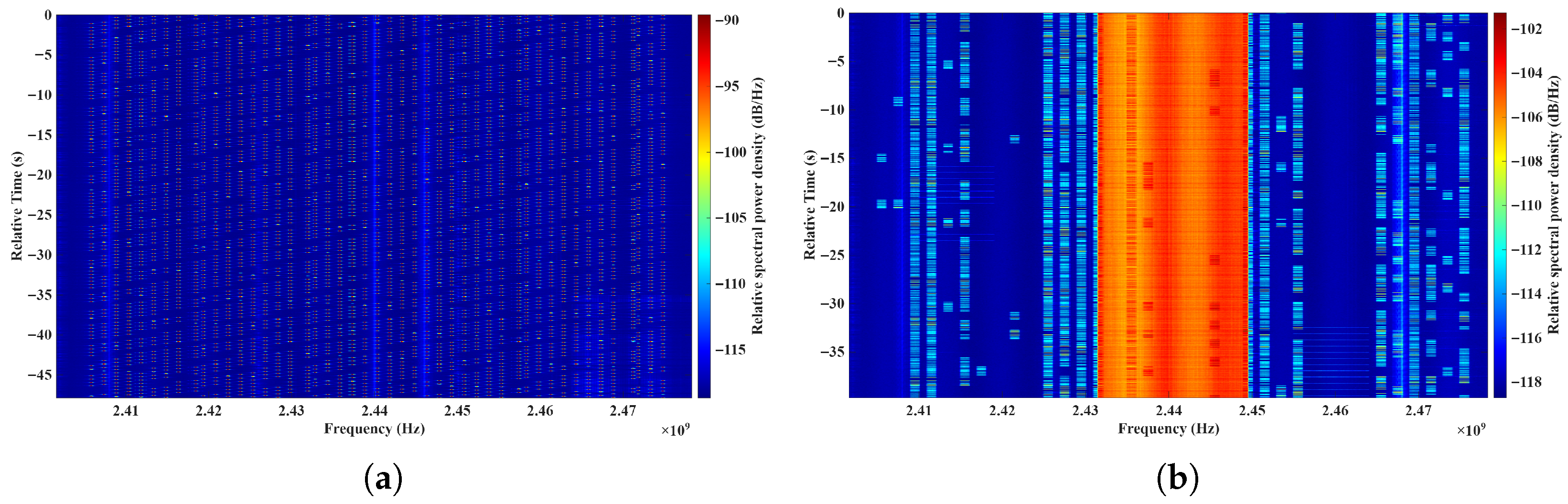

2.1. Characteristics of Frequency Hopping and Video Transmission Signals

2.2. Architecture of the Passive UAV Detection and Identification System

3. System Hardware and Algorithm Design

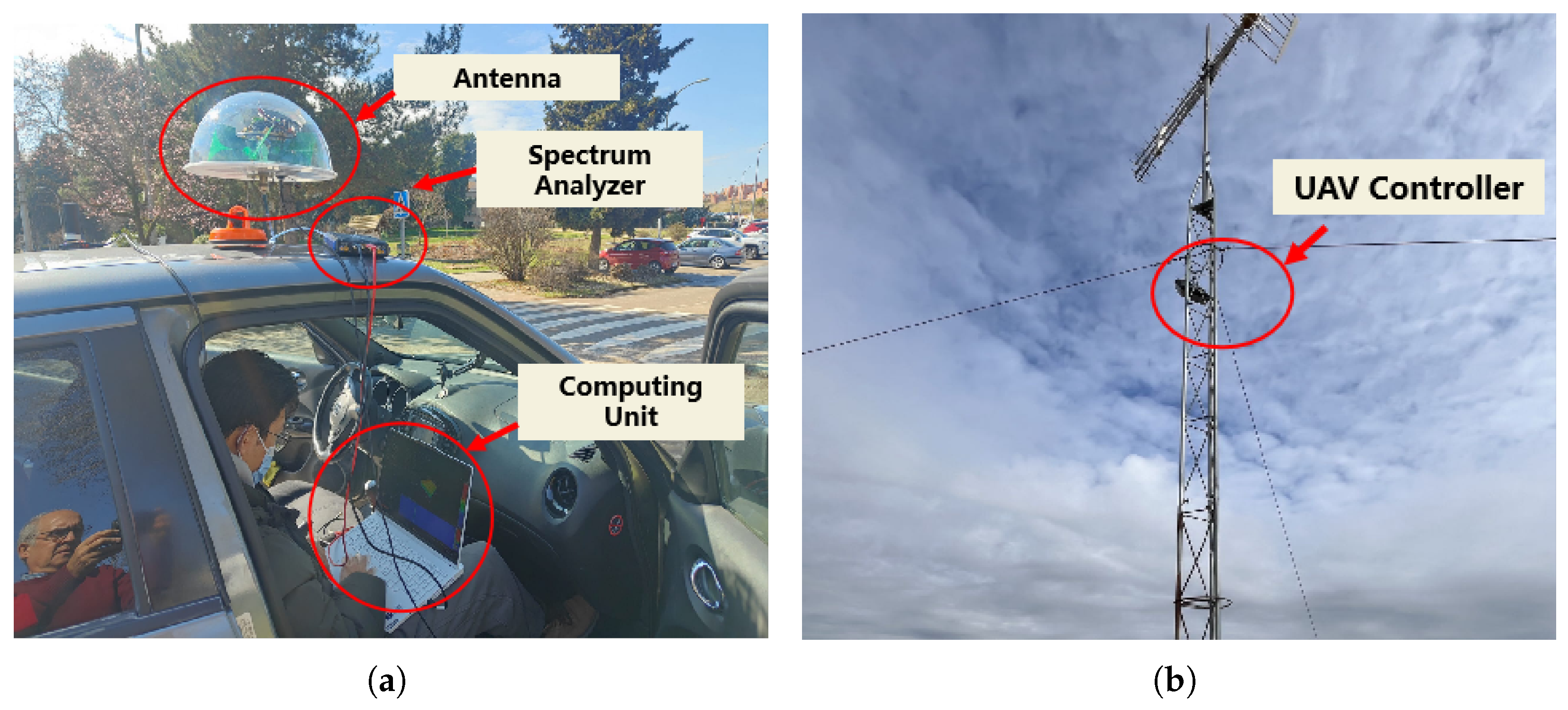

3.1. Hardware Platform

3.2. Algorithm Design

4. Experimental Validation and Analysis

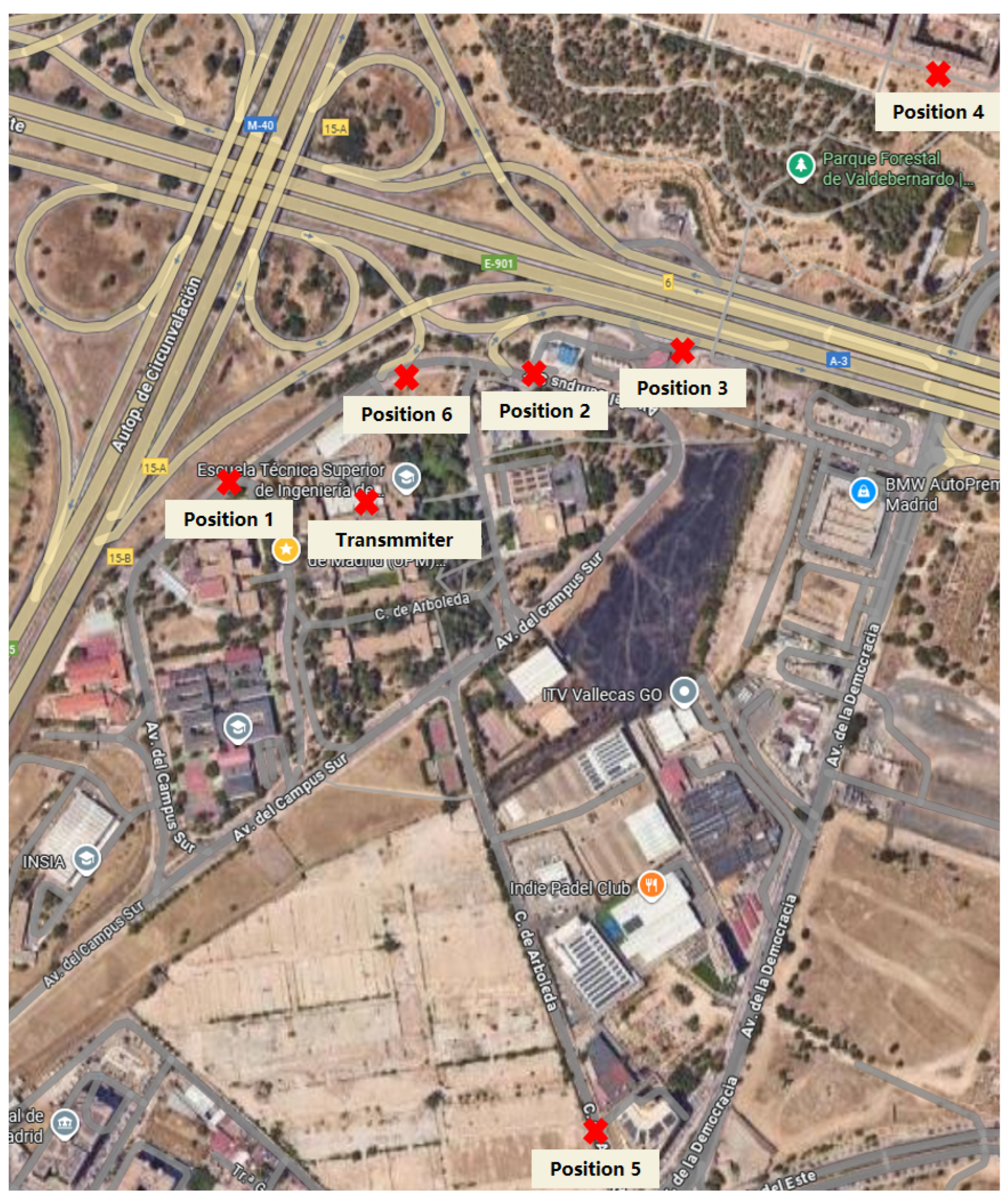

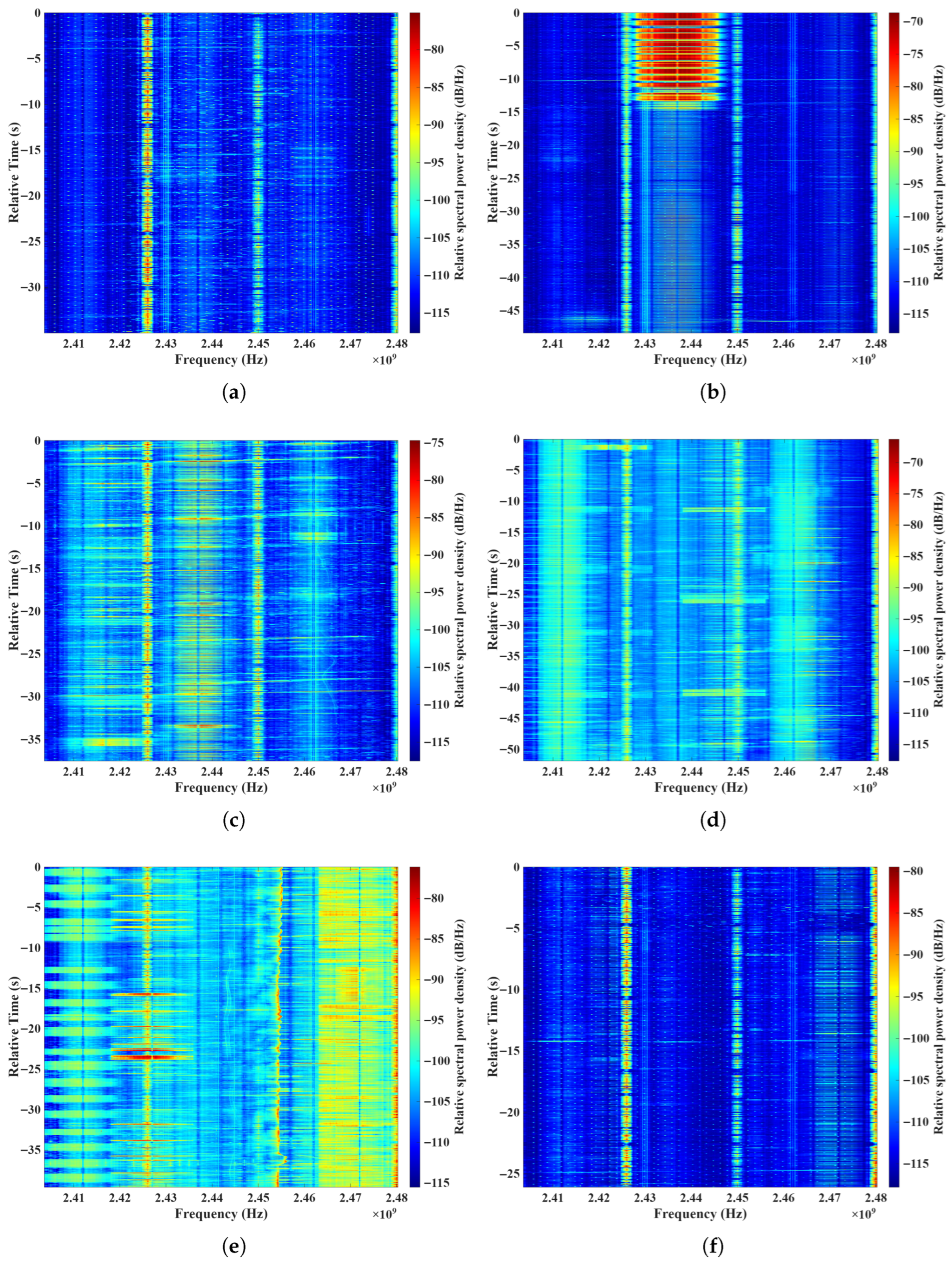

4.1. Measurement Setup

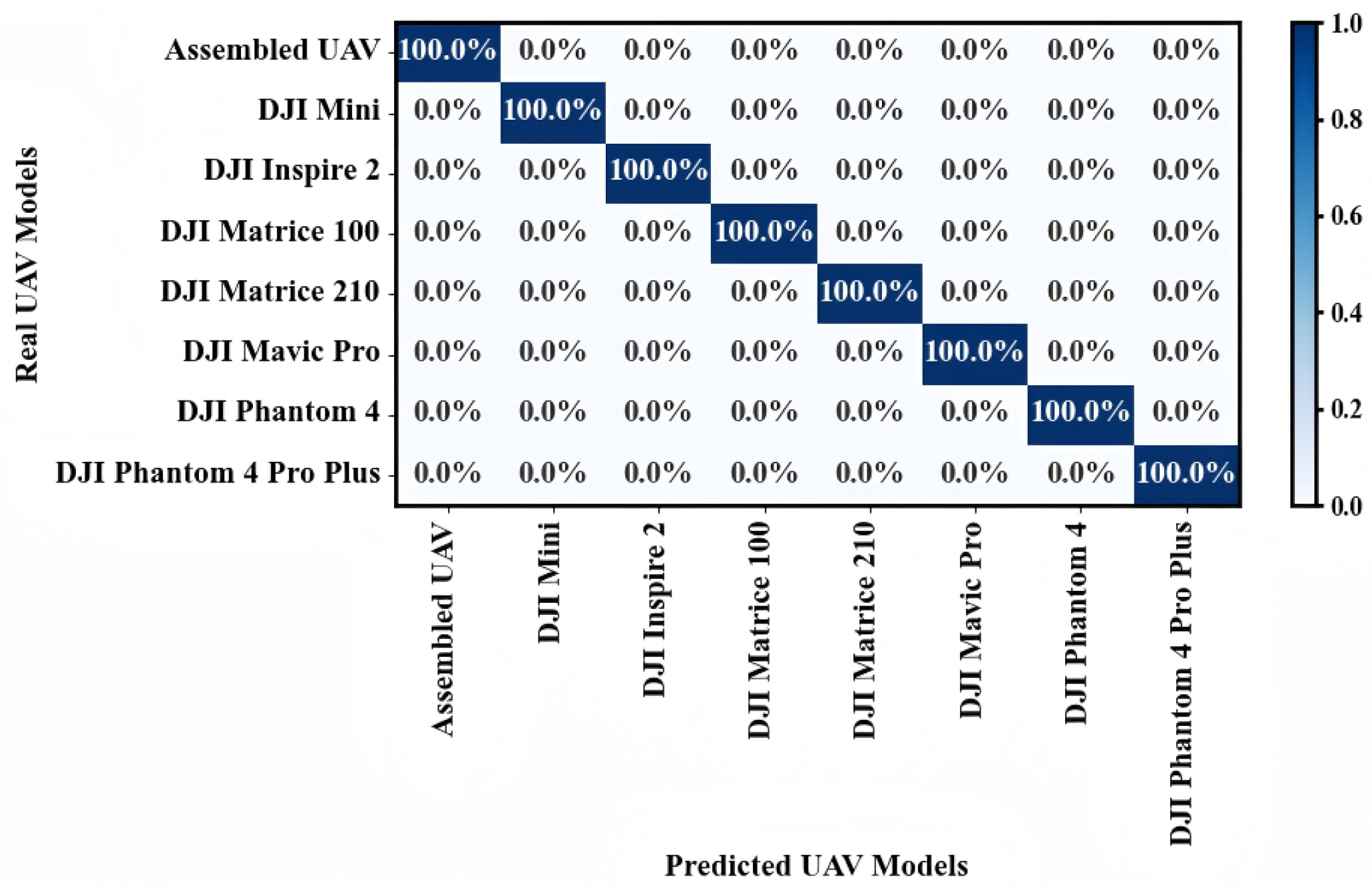

4.2. Performance Evaluation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Xu, J.; Liu, X.; Jin, J.; Pan, W.; Li, X.; Yang, Y. Holistic Service Provisioning in a UAV-UGV Integrated Network for Last-Mile Delivery. IEEE Trans. Netw. Serv. Manage. 2025, 22, 380–393.

- Wen, J.; Wang, F.; Su, Y. A Bi-Layer Collaborative Planning Framework for Multi-UAV Delivery Tasks in Multi-Depot Urban Logistics. Drones 2025, 9, 512.

- Bonilla-Marquez, A.; Guzman-Flores, O.; Rodriguez-Gallo, Y.; Pimentel-Hernandez, K. Seed Spreading UAV Prototype for Precision Agriculture Development. In Proceedings of the IEEE Central America and Panama Student Conference (CONESCAPAN), Panama, Panama, 24–27 September 2024; pp. 1–6.

- He, D.; Hou, H. UAV-Assisted Legitimate Wireless Surveillance: Performance Analysis and Optimization. In Proceedings of the IEEE International Conference on Unmanned Systems (ICUS), Nanjing, China, 18–20 October 2024; pp. 1975–1979.

- Dousai, N.; Loncaric, S. Detecting Humans in Search and Rescue Operations Based on Ensemble Learning. IEEE Access 2022, 10, 26481–26492.

- Dumencic, S.; Lanca, L.; Jakac, K.; Ivic, S. Experimental Validation of UAV Search and Detection System in Real Wilderness Environment. Drones 2025, 9, 473.

- Chen, Y.; Zhu, Q.; Wang, J.; Jia, Z.; Wang, X.; Lin, Z. UAV-Aided Efficient Informative Path Planning for Autonomous 3D Spectrum Mapping. IEEE Trans. Cognit. Commun. Netw. 2025; early access.

- Wang, J.; Zhu, Q.; Lin, Z.; Chen, J.; Ding, G.; Wu, Q.; Gu, G.; Gao, Q. Sparse Bayesian Learning-Based Hierarchical Construction for 3D Radio Environment Maps Incorporating Channel Shadowing. IEEE Trans. Wirel. Commun. 2024, 23, 14560–14574.

- Sathyamoorthy, D. A review of security threats of unmanned aerial vehicles and mitigation steps. Def. Secur. Anal. 2015, 6, 81–97.

- Proposed Rule on Remote Identification of Unmanned Aircraft Systems. Available online: https://www.federalregister.gov/documents/2019/12/31/2019-28100/remote-identification-of-unmanned-aircraft-systems (accessed on 25 August 2025).

- Chen, Y.; Zhu, L.; Yao, C.; Gui, G.; Yu, L. A Global Context-Based Threshold Strategy for Drone Identification Under the Low SNR Condition. IEEE Internet Things J. 2023, 10, 1332–1346.

- Aziz, N.; Fodzi, M.; Shariff, K.; Haron, M.; Yu, L. Analysis on drone detection and classification in LTE-based passive forward scattering radar system. Int. J. Integr. Eng. 2023, 15, 112–123.

- Patel, J.; Fioranelli, F.; Anderson, D. Review of radar classification and RCS characterisation techniques for small UAVs or drones. IET Radar Sonar Navigat. 2018, 12, 911–919.

- Ashraf, M.; Sultani, W.; Shah, M. Dogfight: Detecting drones from drones videos. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 7063–7072.

- Rozantsev, A.; Lepetit, V.; Fua, P. Detecting flying objects using a single moving camera. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 879–892.

- Al-Emadi, S.; Al-Ali, A.; Mohammed, A.; Al-Ali, A. Audio based drone detection and identification using deep learning. In Proceedings of the 15th International Wireless Communications & Mobile Computing Conference (IWCMC), Tangier, Morocco, 24–28 June 2019; pp. 459–464.

- Zelnio, A.; Case, E.; Rigling, B. A low-cost acoustic array for detecting and tracking small RC aircraft. In Proceedings of the IEEE 13th Digital Signal Processing Workshop and 5th IEEE Signal Processing Education Workshop (DSP/SPE), Marco Island, FL, USA, 4–7 January 2009; pp. 121–125.

- Shin, D.; Jung, D.; Kim, D.; Ham, J.; Park, S. A distributed FMCW radar system based on fiber-optic links for small drone detection. IEEE Trans. Instrum. Meas. 2017, 66, 340–347.

- Oh, B.; Guo, X.; Wan, F.; Toh, K.; Lin, Z. Micro-Doppler Mini-UAV Classification Using Empirical-Mode Decomposition Features. IEEE Geosci. Remote Sens. Lett. 2018, 15, 227–231.

- Yuan, S.; Sun, B.; Zou, Z.; Huang, H.; Wu, P.; Li, C.; Dang, Z.; Zhao, Z. IRSDD-YOLOv5: Focusing on the Infrared Detection of Small Drones. Drones 2023, 7, 393.

- Fang, H.; Xia, M.; Zhou, G.; Chang, Y.; Yan, L. Infrared Small UAV Target Detection Based on Residual Image Prediction via Global and Local Dilated Residual Networks. IEEE Geosci. Remote Sens. Lett. 2022, 19, 7002305.

- Yue, X.; Liu, Y.; Wang, J.; Song, H.; Cao, H. Software Defined Radio and Wireless Acoustic Networking for Amateur Drone Surveillance. IEEE Commun. Mag. 2018, 56, 90–97.

- Ma, Z.; Zhang, R.; Ai, B.; Zeng, L.; Niyato, D. Deep Reinforcement Learning for Energy Efficiency Maximization in RSMA-IRS-Assisted ISAC System. IEEE Trans. Veh. Technol. 2025; early access.

- Jiang, H.; Shi, W.; Zhang, Z.; Pan, C.; Wu, Q.; Shu, F. Large-Scale RIS Enabled Air-Ground Channels: Near-Field Modeling and Analysis. IEEE Trans. Wirel. Commun. 2025, 24, 1074–1088.

- Su, Y.; Cheng, Q.; Liu, Z. Integrated Sensing and Communication for UAV Detection: A Reinforcement Learning-based Approach. In Proceedings of the IEEE Cyber Science and Technology Congress (CyberSciTech), Boracay Island, Philippines, 5–8 November 2024; pp. 540–543.

- Yang, Z.; Wang, Q.; Liu, G.; Ma, Z. UAV Detection Based on OTFS ISAC System. In Proceedings of the IEEE 101st Vehicular Technology Conference (VTC2025-Spring), Oslo, Norway, 17–20 June 2025; pp. 1–7.

- Nie, W.; Han, Z.; Zhou, M.; Xie, L.; Jiang, Q. UAV Detection and Identification Based on WiFi Signal and RF Fingerprint. IEEE Sens. J. 2021, 21, 13540–13550.

- Toonstra, J.; Kinsner, W. Transient analysis and genetic algorithms for classification. In Proceedings of the IEEE WESCANEX 95. Communications, Power, and Computing. Conference Proceedings, Winnipeg, MB, Canada, 15–16 May 1995; pp. 432–437.

- Song, P.; Zhang, N.; Zhang, H.; Guo, F. Blind Estimation Algorithms for I/Q Imbalance in Direct Down-Conversion Receivers. In Proceedings of the IEEE 88th Vehicular Technology Conference (VTC-Fall), Chicago, IL, USA, 27–30 August 2018; pp. 1–5.

- Klein, R.; Temple, M.; Mendenhall, M.; Reising, D. Sensitivity Analysis of Burst Detection and RF Fingerprinting Classification Performance. In Proceedings of the IEEE International Conference on Communications (ICC), Dresden, Germany, 14–18 June 2009; pp. 1–5.

- Jana, S.; Kasera, S. On fast and accurate detection of unauthorized wireless access points using clock skews. IEEE Trans. Mob. Comput. 2010, 9, 449–462.

- Xie, Z.; Xu, L.; Ni, G.; Wang, Y. A new feature vector using selected line spectra for pulsar signal bispectrum characteristic analysis and recognition. Chin. J. Astron. Astrophys. 2007, 7, 565–571.

- Patel, H.; Temple, M.; Baldwin, R. Improving ZigBee device network authentication using ensemble decision tree classifiers with radio frequency distinct native attribute fingerprinting. IEEE Trans. Rel. 2015, 64, 221–233.

- Xiao, Y.; Zhang, X. Micro-UAV detection and identification based on radio frequency signature. In Proceedings of the International Conference on Systems and Informatics (ICSAI), Shanghai, China, 2–4 November 2019; pp. 1056–1062.

- Xie, Y.; Jiang, P.; Gu, Y.; Xiao, X. Dual-Source Detection and Identification System Based on UAV Radio Frequency Signal. IEEE Trans. Instrum. Meas. 2021, 70, 2006215.

- Li, Q.; Wang, F.; Zhang, Y.; Li, R.; Shi, S.; Li, Y. Novel Micro-UAV Identification Approach Assisted by Combined RF Fingerprint. IEEE Sens. J. 2024, 24, 26802–26813.

- Zhu, G.; Li, S.; Liu, Y.; Zhu, Q.; Zhang, J.; Mao, K.; Zhou, Z. Space clustering and identification based on full-domain channel characteristics for UAV communication networks. Chin. J. Radio Sci. 2024, 39, 432–441. (In Chinese)

- Mao, K.; Zhu, Q.; Wang, X.; Ye, X.; Gomez-Ponce, J.; Cai, X. A Survey on Channel Sounding Technologies and Measurements for UAV-Assisted Communications. IEEE Trans. Instrum. Meas. 2024, 73, 8004624.

- Li, M.; Hao, D.; Wang, J.; Wang, S.; Zhong, Z.; Zhao, Z. Intelligent Identification and Classification of Small UAV Remote Control Signals Based on Improved Yolov5-7.0. IEEE Access 2024, 12, 41688–41703.

- Radio-Frequency Control and Video Signal Recordings of Drones. Available online: https://zenodo.org/records/4264467 (accessed on 25 August 2025).

| Parameters | Value |
|---|---|
| Real-time Receiving Bandwidth | Max 245 MHz |
| Real-time Transmitting Bandwidth | 120 MHz |
| Minimum Detectable Signal Interval | Minimum 10 ns |
| Maximum Receiving Power | +23 dBm |
| Maximum Transmitting Power | +20 dBm |
| Internal Amplifier | −170 dBm/Hz |
| Amplitude Accuracy | Typical ±0.5 dB |
| Frequency Reference Accuracy | 0.5 ppm |
| Resolution Bandwidth | 62 MHz to 200 MHz |
| Attenuator Adjustment Range | 50 dB/70 dB (0.5 dB steps) |
| FPGA Model | XC7A200T-2 |
| GPS Synchronization | Timestamp precision ±10 ns |
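To give a sense of scale for the 0.5 ppm frequency reference accuracy listed above, the short sketch below converts it into a worst-case frequency offset in hertz. The 2.4 GHz and 5.8 GHz carriers are assumptions chosen because UAV links commonly use those bands; they are not taken from the table, and the function name is hypothetical.

```python
def max_frequency_offset_hz(carrier_hz, accuracy_ppm=0.5):
    """Worst-case tuning offset implied by a reference accuracy given in ppm."""
    return carrier_hz * accuracy_ppm * 1e-6

# Assumed example carriers (not from the parameter table).
for carrier_hz in (2.4e9, 5.8e9):
    offset_khz = max_frequency_offset_hz(carrier_hz) / 1e3
    print(f"{carrier_hz / 1e9:.1f} GHz carrier: offset up to about {offset_khz:.1f} kHz")
```

Under these assumptions the offset is on the order of a few kilohertz, which is small compared with the receiving bandwidth listed in the table.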

| Test Positions | Traditional Method Accuracy [39] | Proposed Method Accuracy | Difference |
|---|---|---|---|
| 1 (LoS) | 87.6% | 96.3% | +8.7% |
| 2 (LoS) | 77.4% | 89.3% | +11.9% |
| 3 (LoS) | 75.7% | 86.5% | +10.8% |
| 6 (LoS) | 84.5% | 92.2% | +7.7% |
| 4 (NLoS) | 61.0% | 81.1% | +20.1% |
| 5 (NLoS) | 57.9% | 72.8% | +14.9% |
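The Difference column in the table above is an absolute gain in percentage points (proposed accuracy minus traditional accuracy). As a quick cross-check, the sketch below recomputes the gains from the tabulated values and averages them per propagation condition; the variable and function names are illustrative only.

```python
# (position, condition, traditional accuracy %, proposed accuracy %), copied from the table.
rows = [
    (1, "LoS", 87.6, 96.3),
    (2, "LoS", 77.4, 89.3),
    (3, "LoS", 75.7, 86.5),
    (6, "LoS", 84.5, 92.2),
    (4, "NLoS", 61.0, 81.1),
    (5, "NLoS", 57.9, 72.8),
]

def mean_gain(rows, condition):
    """Average accuracy gain, in percentage points, over one propagation condition."""
    gains = [proposed - baseline
             for _, cond, baseline, proposed in rows if cond == condition]
    return sum(gains) / len(gains)

for condition in ("LoS", "NLoS"):
    print(f"{condition}: mean gain = {mean_gain(rows, condition):.1f} percentage points")
```

The mean gain comes out at roughly 9.8 percentage points for the four LoS positions and 17.5 percentage points for the two NLoS positions.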

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhu, G.; Briso, C.; Liu, Y.; Lin, Z.; Mao, K.; Li, S.; He, Y.; Zhu, Q. An Intelligent Passive System for UAV Detection and Identification in Complex Electromagnetic Environments via Deep Learning. Drones 2025, 9, 702. https://doi.org/10.3390/drones9100702