1. Introduction

The recent surge in vehicular communication demands has given rise to the concept of the Internet of Vehicles (IoV). As a subset of the Internet of Things (IoT), the IoV consists of mobile vehicles outfitted with sensors, processors, and software, enabling them to communicate via the Internet or other networks [1,2]. The IoV is a decentralized system that ensures the security and privacy of both vehicular and user data, integrating various technologies to provide reliable communication tools [3,4].

Vehicle-to-everything (V2X) communication within the IoV facilitates data sharing from vehicles to infrastructure (V2I), among vehicles (V2V), with pedestrians (V2P), roadside units (V2R), and unmanned aerial vehicles (V2U). This integration is vital for the intelligent management of vehicular and network data traffic, promoting safer roads and improved vehicular energy efficiency. However, V2X communication has its challenges. The high mobility and varying densities of vehicles in the IoV necessitate continuous communication links for reliable data exchange. Fixed infrastructure, such as roadside units (RSUs) and base stations (BSs), often cannot provide sufficient communication and computational services, resulting in reduced quality of service (QoS).

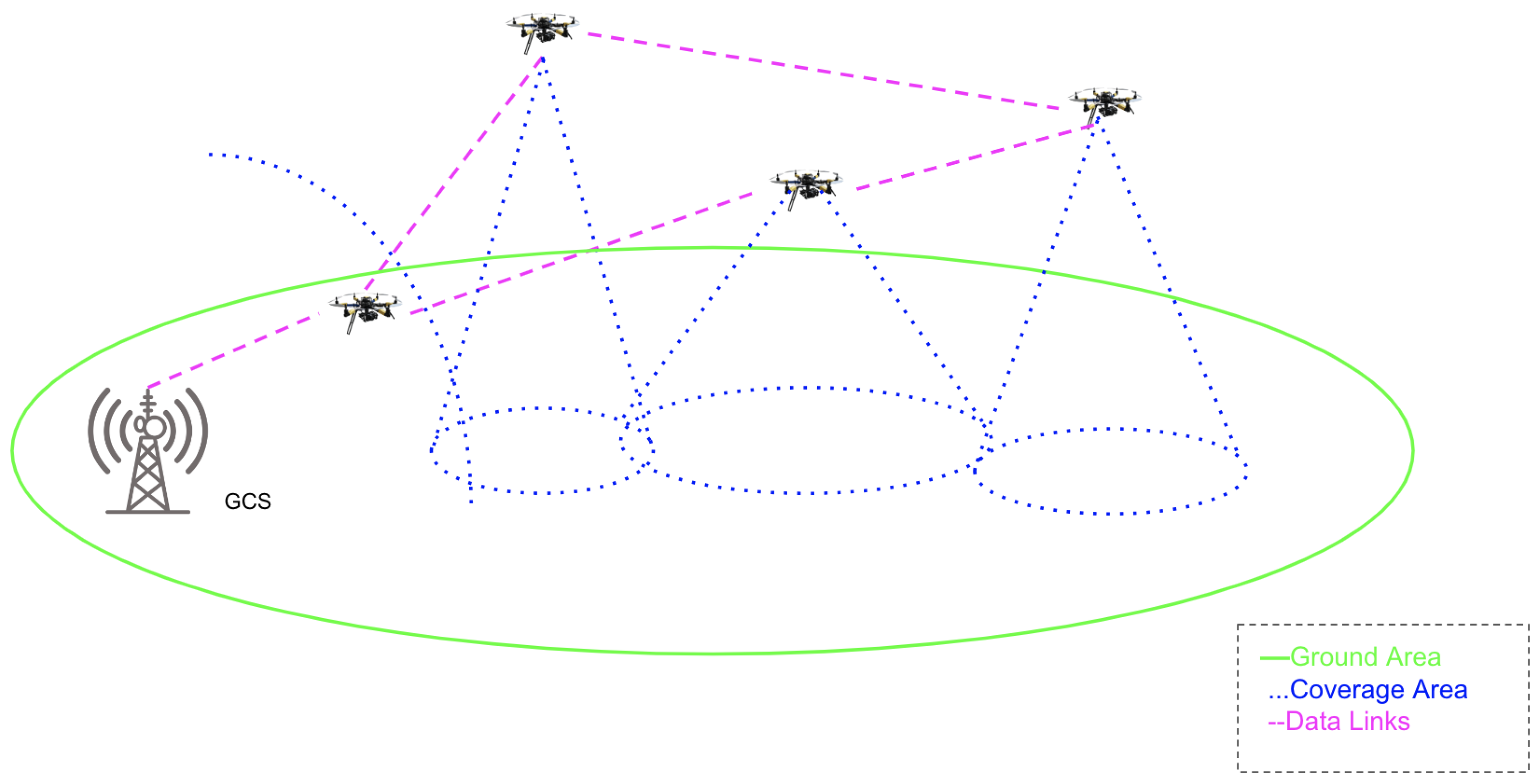

Incorporating Unmanned Aerial Vehicles (UAVs) into the IoV can significantly enhance the communication infrastructure by providing better line-of-sight (LOS) connectivity. This integration supports load balancing, mobility management, routing solutions, and cost-effective communication. UAVs are capable of autonomous operation and are equipped with sensors, computing units, cameras, GPS, and wireless transceivers. They can navigate predetermined flight paths, interact with their environment, and dynamically alter their routes during flight when needed, making them a valuable addition to the IoV [5]. Specifically, UAVs can address the constraints of fixed roadside units (RSUs) by altering their speed and position dynamically, enabling them to collect and relay data across different regions [6].

The remainder of the paper is structured as follows:

Section 2 summarizes the existing survey on the IoV, UAV, and UAV assisted IoV networks.

Section 3 provides a foundational discussion on the principles of IoV and UAV networks with a focus on vehicular communication technologies and UAV transceiver components with UAV communication architecture.

Section 4 explores AI/ML-based resource management in IoVs (

Section 4.1), UAVs (

Section 4.2), and UAV-assisted IoV networks (

Section 4.3). Furthermore, in this section, we divide the resources in UAV-assisted IoV network into different categories, namely deployment, task offloading, trajectory, resource allocation, spectrum sharing, clustering, and energy optimization and review the research in all the categories. In

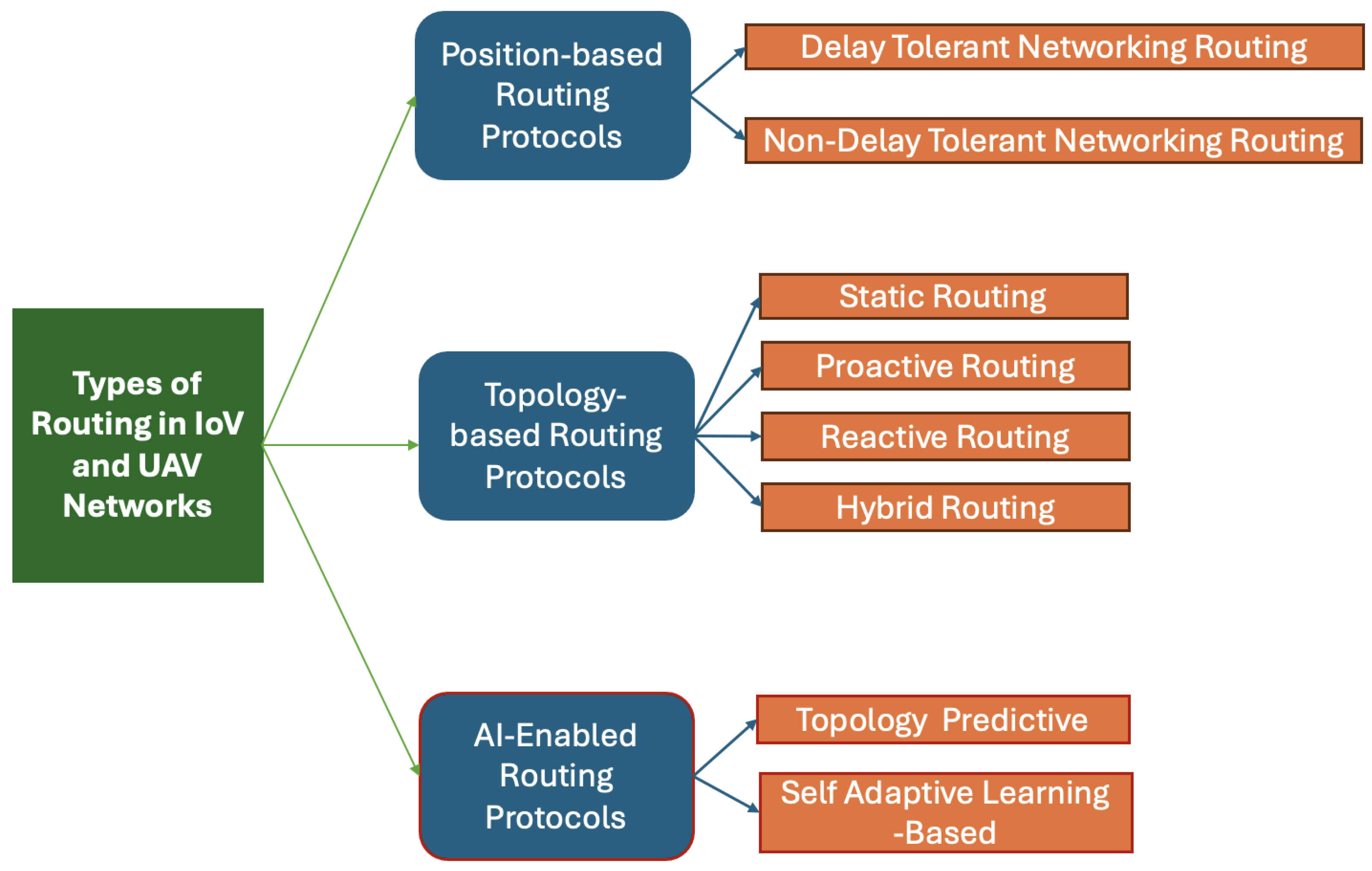

Section 5, we firstly introduce the types of routing (

Section 5.1) in IoV and UAV networks, namely position-based, topology-based and AI-based routing. After this, the research in the area of AI-enabled routing protocols in IoV (

Section 5.2), UAV (

Section 5.3), and UAV-assisted IoV networks (

Section 5.4) is reviewed and critically discussed.

Section 6 outlines the challenges, open issues, and prospective future research in ML/AI-based UAV-IoV networks, and finally,

Section 7 concludes the paper.

2. Related Work and Survey Contribution

In the last decade, AI has been integrated into vehicular networks as a potent solution for diverse communication and traffic challenges. Coupled with V2X technology, AI enables sophisticated vehicular applications such as traffic management, autonomous vehicle navigation, and data management. Machine Learning (ML) and Deep Learning (DL), as prominent branches of AI, are heavily employed in the IoV to tackle complex problems by leveraging the abundant data available [7].

Most deep learning models require extensive historical data that include a variety of traffic features for training [8]. However, in vehicular communications, this historical data, which encompasses routing, channel conditions, vehicle mobility, and resources, is often not available, making supervised DL methods impractical. As a result, Reinforcement Learning (RL) has become a powerful alternative in the IoV domain, allowing vehicles to independently make decisions for various networking tasks [9,10,11]. In RL, agents, usually vehicles, gather data about their dynamic environment and make informed decisions to achieve goals such as resource and mobility management, routing options, and traffic forecasting.

The extensive research on AI/ML applications in wireless networks and Vehicular Ad hoc Network (VANET) communications is thoroughly documented in scholarly articles. Liang et al. [12] investigate the application of AI/ML in analyzing mobility and traffic patterns in dynamic vehicular networks, proposing methods to improve network performance in security, handover, resource management, and congestion control. The authors in [13] categorize vehicular research related to transportation and networks, outlining vehicular network scenarios that utilize AI/ML for data offloading, mobile edge computing (MEC), network security, and transportation elements such as platooning, autonomous navigation, and safety. Furthermore, in [14], the authors present a detailed review of ML techniques in vehicular networks, focusing on resource and network traffic management and reliability. This work was further extended by [15] to encompass cognitive radio (CR), beamforming, routing, orthogonal frequency-division multiple access (OFDMA), and non-orthogonal multiple access (NOMA) tasks.

An overview of ML, CR, VANET, and CR-VANET architectures, including open issues and future challenges, is presented in [16]. The applications of AI/ML in CR-VANETs and in autonomous vehicular networks, as well as their combination, are also reviewed in that paper. In [17], the authors first discuss Federated Learning (FL) and its use in wireless IoT. The survey then points out and discusses the technical challenges for FL-based vehicular IoT, along with future research directions. The survey paper [18] critically reviews the ML and Deep Reinforcement Learning (DRL) models for MEC decision-based offloading in the IoV. The main focus of the paper is on buffer- and energy-aware ML-enabled Quality of Experience (QoE) optimization; it summarizes the recent related research and methods and presents their comparison. In [19], the authors survey and analyze resource allocation scenarios and also present the design challenges for resource management in VANETs using ML. In [20], a detailed overview is presented of the RL and DRL techniques in IoV networks, covering joint user association and beamforming, caching, data-offloading decisions, energy-efficient management of resources, and vehicular infrastructure management. Future trends, challenges, and open issues in 6G-based IoV are then discussed.

In [21], the primary ML concepts for wireless sensor networks (WSNs) and VANETs are summarized briefly, along with open issues and challenges. In [22], a comprehensive survey of AI/ML techniques is presented, followed by the strengths and weaknesses of these AI models for the VANET environment, including safety, traffic, and infotainment applications, security, routing, and resource and mobility management. In [23], the authors survey resource allocation techniques for DSRC, Cellular-V2X (C-V2X), and heterogeneous VANETs. The AI/ML techniques are reviewed with respect to their integration in VANETs and their use in designing several resource allocation tasks related to user association, handover, and virtual resource management for V2V and V2I communications. However, AI/ML for V2X is not the main focus of the paper.

In [24], the RL-based routing schemes are classified according to whether the learning process is centralized or distributed. The authors also survey position-based, cluster-based, and topology-based routing protocols. The survey in [25] summarizes the vehicular network and Smart Transport Infrastructure (STI) in detail. The paper deals with FL and its application in vehicular networks. It elaborates on vehicular IoTs (VIoTs), blockchain, FL, and intelligent transportation infrastructure. Then, the FL- and blockchain-based security and privacy applications in the VANET environment are discussed in detail. The challenges arising from the integration of FL and blockchain are pointed out in the survey, with an indication of future research directions. In [26], the survey presents a compilation of network-controlled functions that have been optimized through data-driven approaches in vehicular environments. The research related to the integration of AI/ML and V2X communications in areas such as handover and resource management, user association, caching, routing, beamforming optimization, and QoS prediction is extensively reviewed. This survey classifies the training architecture as a centralized, distributed, or federated model for each ML technique. The time complexity of the supervised, unsupervised, and RL models used in the literature is discussed. In [27], the authors focus on resource management and computational offloading in a 6G vehicle-to-everything (V2X) network using FL. The paper explains the taxonomy of computational offloading in vehicular networks but cites only a few papers on AI-driven computational offloading, focusing more on the different scenarios and challenges related to the network, resource management, computational offloading, and security and privacy issues in highly mobile vehicular networks.

In [28], the authors cover the applications of UAV-based IoV networks. This work does not include the detailed implementation of AI/ML in UAV-based IoV and only mentions a few papers related to Software-Defined Network (SDN)-based fog computing and AI/ML networks. However, it covers areas such as privacy, security, congestion and network delays, and communication protocols. In [29], the authors review the Internet of Drones (IoD) and classify the IoD-UAV according to its applications in the areas of resource allocation, aerial surveillance and security, and mobility in all the possible IoT-based fields. This survey concludes that the most used AI technique in IoD is Convolutional Neural Networks (CNNs) and that the most common areas of research are resource and mobility management. However, this survey completely ignores IoD-based IoV networks. In [30], the role of UAVs in different scenarios, such as smart farming and air quality indexing, is discussed. One section briefly discusses the use of UAVs in communications as base stations, relays, and radio and distribution units. However, this survey does not cover UAV-assisted vehicular communication and UAV-based resource management in depth. Similarly, in [31], the authors primarily review UAV applications in 5G networks, public safety, millimeter waves, and radio-based sensing. One section of the paper reviews the application of ML to UAV trajectory optimization and computational offloading for 5G networks and to UAV-driven federated edge learning. For computational offloading, the authors do not cite any papers and only explain the application through two diagrams. The summary of the survey papers with AI/ML applications in IoV networks is provided in Table 1.

In our review of existing literature on UAV-based IoV, we noted the lack of exploration into the use of ML for resource management and routing within UAV or IoD-based IoV systems. To date, no comprehensive survey has been published on this topic. Our paper provides an in-depth analysis of the integration of Autonomous Vehicles with UAVs and the deployment of AI/ML in UAV-based IoV for the allocation of physical and computational resources, as well as for routing algorithms. We discuss current AI/ML solutions in UAV-IoV, identify challenges and issues, and propose directions for future research.

4. AI-Based Resource Management

Addressing challenges such as high user volume, stringent QoS requirements, enhanced coverage, and cost reduction for end users requires efficient management of the various resources in UAV-assisted networks. Effective resource management is vital in overcoming challenges associated with resource scarcity.

Section 4 discusses the research contributions in resource management in (1) IoV, (2) UAV, and (3) UAV-based IoV networks utilizing AI. The research in this area is focused on optimizing the communication resources based on the applications and services. For this reason, we divided the resource management problem into different categories based on IoV/UAV deployment, task offloading, UAV/IoV trajectory optimization, resource allocation, spectrum sharing, UAV/IoV clustering, and energy optimization, as shown in Figure 5.

4.1. AI for Resource Management in Internet of Vehicles

Authors have considered various objectives for AI-based resource allocation, including load balancing, improved QoS or QoE, and the minimization of energy and latency. This section is dedicated to reviewing the contributions and research advancements in AI-based resource allocation within the IoV networks.

4.1.1. AI-Based Vehicular Clustering

In VANETs, vehicles are clustered to group nodes with similar attributes, based on predefined parameters or proximity, which facilitates the organized management of network parameters. Clustering in VANETs provides several benefits, such as resolving hidden node problems, creating manageable groups based on proximity, and efficient bandwidth utilization through frequency reuse. Typically, vehicular clustering involves designating one vehicle as the cluster head (CH), while gateway nodes (GWs), within the transmission range of multiple CHs, help distribute the load. Clustering allows VANETs to leverage both wireless and wired infrastructure features effectively. Extensive research on communication protocols and strategies for ad hoc networks has identified clustering as a beneficial approach. Given the variable speeds and numbers of vehicles on the road at any time, developing a reliable mechanism for vehicle clustering is essential to evenly distribute the load on roadside units (RSUs). Supervised and unsupervised machine learning techniques have shown great promise in efficiently managing vehicular clusters.

In [58], the main objective is to maximize the information capacity of the VANET by maximizing the information capacities between the head vehicle and the RSU and among vehicles. Cluster-Enabled Cooperative Scheduling based on RL (CCSRL) is introduced to schedule vehicles and manage communication resources to maximize information capacity. The CCSRL primarily considers factors such as distance metrics, vehicle stability, bandwidth efficiency, velocity, density, and channel conditions to arrange the vehicles in different clusters as well as in different classes. Auxiliary vehicles are selected by considering factors such as the vehicle's speed deviation, its alignment with the direction of the CH vehicle, and the quality of the channel. Initially, the RSU selects the cluster head vehicle; afterward, each new CH is selected by the previous CH. The batch size and the number of vehicles in a cluster are kept small in this research for two reasons: a larger batch size prevents the RL algorithm from achieving global optimization, and as the number of vehicles in a cluster increases, the number of motion states increases and the head vehicle takes a long time to make a final decision. As a result, the convergence time of the CCSRL increases as the batch size increases. The transmission delay, throughput, and packet delivery ratio are the metrics used to evaluate the algorithm's performance.

In [59], to mitigate the effects of unreliable V2V links, each V2V pair determines whether to use the V2V mode or the V2I mode based on actual link qualities. A combined problem of selecting transmission modes, allocating radio resources, and controlling power for cellular V2X communications is defined to maximize the total capacity of V2I. A two-timescale federated DRL-based algorithm is further developed to help obtain robust models: graph-based vehicle clustering groups nearby vehicles on a large timescale, while vehicles in the same cluster cooperate to train the robust global DRL model through FL on a small timescale. The proposed algorithm outperforms the other DRL-based decentralized learning schemes without transfer learning. However, when compared with the centralized algorithm, the proposed model does not achieve a better data rate when the number of V2V pairs increases and the outage threshold increases. Moreover, the convergence reward of the proposed model increases slowly and remains below that of the centralized algorithm.

In [60], the authors employ clustering by incorporating link reliability status, k-connectivity, and a relative velocity factor into a fuzzy logic scheme. Each vehicle calculates a leadership value for itself and its one-hop neighbors by exchanging messages. They also employ an improved Q-learning (IQL) approach for selecting the gateway or cluster head vehicle. The proposed two-level clustering scheme demonstrates superior performance by maintaining higher throughput as vehicle speeds increase and reducing the likelihood of route changes compared to other classical Q-learning algorithms. However, the authors acknowledge that as the action and state space grow, the complexity and computational cost of the proposed algorithm are expected to increase significantly, and they do not deal with complex vehicular scenarios.

The authors in [61] use Q-learning to select the optimal next-hop grid. They use grid-based routing, which divides a geographic area into small grids, allowing Q-learning to determine the optimal sequence of grids from source to destination. Once the optimal grid is selected, the agents choose the best relay vehicle within that grid using a greedy or Markov prediction method. Buses are given higher priority in vehicle selection because of their fixed routes and schedules, enhancing the scheme's performance. Simulations indicate that this hierarchical routing scheme improves the delivery ratio and throughput, although it results in similar or slightly increased delay, hop count, and packet-forwarding frequency compared to other position-based routing protocols for various time slots. The authors do not provide any information about the complexity and control information overhead of the proposed model. This is an important aspect of the research problem, as the proposed protocol incurs extra overhead for computing the Q-table compared to the other protocols considered.
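The grid-level decision described for [61] can be sketched with tabular Q-learning as follows. This is a minimal illustration rather than the authors' implementation: the grid size, the reward values (+10 for reaching the destination grid, -1 per hop), the learning parameters, and the function names `train_grid_q` and `greedy_grid_path` are all assumptions made for the example, and the relay-vehicle selection inside a grid is omitted.

```python
import random

MOVES = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # neighbouring grids: up, down, left, right


def train_grid_q(rows, cols, dest, episodes=2000, alpha=0.5, gamma=0.9, eps=0.2, seed=0):
    """Learn Q(grid, move) for reaching the destination grid (hypothetical rewards)."""
    rng = random.Random(seed)
    q = {((r, c), a): 0.0 for r in range(rows) for c in range(cols) for a in range(4)}

    def step(state, a):
        r, c = state[0] + MOVES[a][0], state[1] + MOVES[a][1]
        if not (0 <= r < rows and 0 <= c < cols):
            return state, -1.0  # move leaves the map: stay put, still pay the hop cost
        nxt = (r, c)
        return nxt, (10.0 if nxt == dest else -1.0)

    for _ in range(episodes):
        state = (rng.randrange(rows), rng.randrange(cols))
        for _ in range(4 * rows * cols):  # cap the episode length
            if state == dest:
                break
            if rng.random() < eps:  # explore
                a = rng.randrange(4)
            else:  # exploit the current estimate
                a = max(range(4), key=lambda x: q[(state, x)])
            nxt, reward = step(state, a)
            future = 0.0 if nxt == dest else max(q[(nxt, x)] for x in range(4))
            q[(state, a)] += alpha * (reward + gamma * future - q[(state, a)])
            state = nxt
    return q


def greedy_grid_path(q, src, dest, rows, cols):
    """Follow the highest-valued move from src until dest or a hop limit is reached."""
    path, state = [src], src
    for _ in range(rows * cols):
        if state == dest:
            break
        a = max(range(4), key=lambda x: q[(state, x)])
        r, c = state[0] + MOVES[a][0], state[1] + MOVES[a][1]
        if 0 <= r < rows and 0 <= c < cols:
            state = (r, c)
        path.append(state)
    return path


q_table = train_grid_q(4, 4, dest=(3, 3))
print(greedy_grid_path(q_table, (0, 0), (3, 3), 4, 4))
```

The table holds one entry per (grid, move) pair, which is the overhead the paragraph above notes is not quantified in [61].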

In recent years, research has shifted from supervised learning to Q-learning-based RL algorithms to address clustering issues in VANETs for time-sensitive applications. References [58,59,60,61] focus on Q-learning-based RL with a limited action and state space, which keeps the Q-table small and reduces computational expense. However, V2V and V2I communications are time-critical, and large Q-tables can be impractical for time-sensitive applications. Consequently, there is a need to investigate Deep RL algorithms for VANET clustering to manage the increasing complexity as the action and state space expand. Deep Q-Networks (DQN) use neural networks to replace the Q-table, taking the state as input and predicting Q values based on historical data. The research work in the area of vehicular clustering for resource management is summarized in Table 2 based on the objectives of the research, the algorithm designed, and the metrics used to evaluate the performance of the proposed algorithm.
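To make the Q-table scaling concern concrete, the short sketch below counts the entries a tabular agent must store as state features are added. The feature names, the 10-bin discretization, and the five actions are hypothetical values chosen only for illustration; they are not taken from the surveyed papers.

```python
from math import prod


def q_table_entries(bins_per_feature, n_actions):
    """Number of Q-values a tabular agent must store: |S| x |A|."""
    n_states = prod(bins_per_feature)  # one state per combination of feature bins
    return n_states * n_actions


# Hypothetical clustering state: speed, distance to RSU, link quality (10 bins each)
small = q_table_entries([10, 10, 10], n_actions=5)
# Adding density, direction, and bandwidth features at the same resolution
large = q_table_entries([10] * 6, n_actions=5)
print(small, large)  # 5000 5000000
```

Doubling the number of features here multiplies the table size by a factor of 1000, which is the growth that motivates replacing the table with a function approximator such as a DQN.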

4.1.2. AI-Based Vehicular Spectrum Sharing

Spectrum sharing involves managing the distribution of spectrum among Vehicular Cognitive Radio (VCR) users while ensuring QoS. It can be categorized based on spectrum utilization into unlicensed and licensed types. In unlicensed spectrum sharing, all users have equal priority, while in licensed spectrum sharing, primary users (PUs) are prioritized over secondary users (SUs). SUs can access both types of spectrum sharing only when PUs are not utilizing the spectrum. Additionally, spectrum sharing can be classified as centralized or distributed. In centralized spectrum sharing, a central node controls spectrum allocation and access, while in distributed spectrum sharing, each node independently manages spectrum access. Cooperative and non-cooperative approaches are also employed in spectrum sharing within VCR networks.

In [62], the authors use multi-agent RL (MARL) to develop a distributed spectrum sharing and power allocation algorithm that enhances the performance of both V2V and V2I links together. In the RL environment, multiple V2V links try to access the V2I spectrum. The V2V links act as agents and refine their spectrum allocation and power control strategies based on their individual environmental observations. Instead of considering continuous values for power control, this paper considers only four power levels, which eases learning by reducing the dimensions of the action space. The proposed model is centralized in the training stage and decentralized in the implementation stage. The proposed MARL algorithm and a single-agent RL (SARL) algorithm are evaluated for comparison purposes. The proposed model considerably improves the overall system-level performance.
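The effect of quantizing the transmit power into four levels can be sketched as follows. This is an illustrative sketch only: the specific power values in `POWER_LEVELS_DBM`, the number of sub-bands, and the helper names are assumptions for the example and should not be read as the configuration used in [62].

```python
import random
from itertools import product

# Hypothetical four power levels in dBm; -100 dBm stands in for "transmitter off"
POWER_LEVELS_DBM = (23, 15, 5, -100)


def build_action_space(n_subbands, power_levels=POWER_LEVELS_DBM):
    """Enumerate every (sub-band index, power level) pair an agent may choose."""
    return list(product(range(n_subbands), power_levels))


def epsilon_greedy(q_values, epsilon, rng):
    """Return a random action index with probability epsilon, else the best one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=q_values.__getitem__)


actions = build_action_space(n_subbands=4)
print(len(actions))  # 16 discrete actions per V2V agent
```

With four sub-bands and four power levels, each V2V agent chooses among 16 discrete actions, so a value-based method with one output per action can be applied directly instead of a continuous-control algorithm.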

In [63], the authors use the same MARL-based approach, including the four-level power control that reduces the dimensionality of the action space, for NOMA communication. In addressing spectrum allocation issues in V2X communications, the objectives are to enhance the overall throughput of V2I links while increasing the success probability of V2V channels within a specified time constraint, T. However, the reward function in this study is not defined, and no reward penalty is provided. Moreover, the complexity of the action and state space is not elaborated. The convergence of the reward function is not reported, so it is difficult to draw conclusions about the performance of the proposed algorithm.

In [64], the authors address three issues in a single framework for CR-VANETs: reliable Cooperative Spectrum Sensing (CSS), channel indexing for selective Spectrum Sensing (SS), and optimal channel allocation to CR SUs. For CSS, local SS decisions are combined with critical attributes, such as the geographical position and timestamp of the sensing signal acquisition, and a DRL technique is used to obtain a global CSS session. All vehicles (static and mobile) and UAVs are considered as SUs. Selective channel-based spectrum sensing is employed to reduce the sensing overload on CR users. A time series analysis is used with a deep learning-based Long Short-Term Memory (LSTM) model to index PU channels for selective SS. Finally, for channel allocation in CR-VANETs, the complex environment is modelled as a Partially Observable Markov Decision Process (POMDP) and solved using a value iteration-based algorithm. To mitigate the dimensionality problem associated with the DRL algorithm, an approximation method is used to reduce the size of the action and state space. The reward function formulated in this research is highly unstable: it stabilizes for only a few episodes and then drops and starts fluctuating again. Moreover, the probability of PU detection drops as the speed of vehicles increases.

In [65], the proposed resource management mechanism achieves intelligent and dynamic control of the entire VANET. The BS of each cell acts as the DRL agent. The environment encompasses the entire vehicular communication network, including the BS, the Intelligent Reconfigurable Surface (IRS)-aided channel, and the vehicles. The objective of the DRL-based scheme is to jointly optimize the transmission power vector of head vehicles, the IRS reflection phase shift, and the BS detection matrix to maximize network energy efficiency under given latency constraints. The channel state information (CSI) and the status of the VANET are collected and sent to the DRL agent, which then takes action and receives the corresponding reward from the environment. Since the state and action variables of the DRL-based resource control and allocation scheme are continuous, the Deep Deterministic Policy Gradient (DDPG) algorithm is employed to solve the optimization model. The proposed model performs better than the baseline models in terms of system energy efficiency. However, the complexity of the model increases with the number of neurons in the actor and critic networks, and this per-step cost must be multiplied by the number of episodes and the number of time slots in each episode. No comparison of the proposed algorithm's convergence is provided to gauge the efficiency of the proposed scheme.
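The complexity remark above can be illustrated with a rough operation count. The sketch below is an approximation under stated assumptions: it counts only the multiply-accumulate operations of one forward pass through fully connected actor and critic networks and ignores backpropagation, target networks, and replay sampling. The layer sizes, episode count, and slot count are hypothetical and are not taken from [65].

```python
def dense_macs(layer_sizes):
    """Multiply-accumulate operations for one forward pass of a dense network."""
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))


def ddpg_forward_cost(actor_layers, critic_layers, episodes, slots_per_episode):
    """Per-step actor + critic cost, scaled by episodes and time slots per episode."""
    per_step = dense_macs(actor_layers) + dense_macs(critic_layers)
    return per_step * episodes * slots_per_episode


# Hypothetical sizes: 8-dim state, 2-dim action, two hidden layers of 64 neurons
cost = ddpg_forward_cost([8, 64, 64, 2], [10, 64, 64, 1], episodes=100, slots_per_episode=50)
print(cost)  # 47680000
```

Because the hidden-layer term grows with the product of adjacent layer widths, widening the hidden layers dominates the cost long before the episode or slot counts do.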

In [66], a resource allocation problem is solved for V2X communications, aimed at maximizing the sum rate of V2I communications while ensuring the latency and reliability of V2V communications. This is achieved by jointly considering frequency spectrum allocation and transmission power control. The authors formulate the resource allocation problem as a decentralized Discrete-time and Finite-state Markov Decision Process (DFMDP) in which the V2V links are the agents; the state is the local channel information, such as the interference channels from other V2V links; the action space is the power allocation and spectrum multiplexing factor of the V2V and V2I links; and the reward function is based on the sum rate of V2I communications and the delivery probability of V2V communications. The aim is to select the spectrum bands and transmission powers that best satisfy the different QoS requirements of both V2I and V2V communications. To handle the continuous action space, the authors implemented a Deep Neural Network (DNN)-based DDPG framework, which achieves higher efficiency than the random resource allocation scheme in terms of the sum rate of V2X communications and the delivery probability of V2V communications.
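A reward of the kind described, combining the V2I sum rate with the V2V delivery probability, might look like the sketch below. The exact reward in [66] is not reproduced here: the equal weights, the 1 MHz bandwidth, the use of the Shannon capacity formula for the rate, and the function names are assumptions made for illustration.

```python
import math


def shannon_rate(bandwidth_hz, sinr_linear):
    """Achievable rate in bit/s from the Shannon capacity formula."""
    return bandwidth_hz * math.log2(1.0 + sinr_linear)


def v2x_reward(v2i_sinrs, v2v_delivered, bandwidth_hz=1e6, w_v2i=0.5, w_v2v=0.5):
    """Weighted sum of V2I sum rate (Mbit/s) and V2V delivery ratio (hypothetical weights)."""
    if not v2v_delivered:
        raise ValueError("at least one V2V link is required")
    sum_rate_mbps = sum(shannon_rate(bandwidth_hz, s) for s in v2i_sinrs) / 1e6
    delivery_ratio = sum(v2v_delivered) / len(v2v_delivered)
    return w_v2i * sum_rate_mbps + w_v2v * delivery_ratio


# Two V2I links at a linear SINR of 3; one of two V2V payloads delivered in time
print(v2x_reward([3.0, 3.0], [True, False]))  # 2.25
```

The two terms have different units, so in practice the weights also act as a scaling factor; how they are balanced determines whether the agent favours V2I throughput or V2V reliability.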

In [67], the authors propose an RL-based decentralized resource allocation mechanism for V2V communication that applies to both unicast and broadcast scenarios. The V2V links are the agents and select their spectrum and transmit power so as to minimize interference to V2I and V2V links. V2I capacity and V2V latency are chosen to show the performance of the proposed algorithm. As the number of vehicles increases, the interference grows, which lowers the V2V link capacity and makes it hard to guarantee the latency. In the proposed solution, the transmit power is divided into three levels, and the agents select among them based on their state information. The DRL agent is able to determine autonomously how to adjust power levels based on the remaining time and intelligently allocates resources based on local observations, resulting in significant improvements in the V2V success rate and V2I capacity compared to conventional methods. The authors modify the action state (the action taken by each agent), and the agents update their actions asynchronously, with only one or a small subset of V2V links updating their actions in each time slot. This approach allows agents to observe environmental changes caused by the actions of other agents.
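The asynchronous update idea can be sketched as a schedule that lets only a few agents change their action in each time slot. The round-robin ordering used here is an assumption for illustration; the source states only that one agent or a small subset updates per slot, not how that subset is chosen.

```python
def async_update_schedule(n_agents, n_slots, per_slot=1):
    """For each time slot, list the agent indices allowed to update their action."""
    schedule = []
    for t in range(n_slots):
        start = (t * per_slot) % n_agents
        schedule.append([(start + k) % n_agents for k in range(per_slot)])
    return schedule


# Three V2V agents, one update per slot: agents take turns, the others hold their action
print(async_update_schedule(3, 4))  # [[0], [1], [2], [0]]
```

While one agent updates, the remaining agents keep their previous actions, so the updating agent observes an environment that already reflects the others' most recent choices.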

In [68], the authors propose a multi-hop broadcast protocol for spectrum resource management, named the Global Optimization algorithm based on Experience Accumulation (GOEA), to facilitate coordination among vehicles in channel selection, aiming to mitigate packet loss resulting from channel collisions. Moreover, a dynamic spectrum access model is proposed based on RL and a Recurrent Neural Network (RNN+DQN) algorithm. It is noted that as the number of vehicular users increases relative to the number of channels, the performance of the proposed RNN+DQN model deteriorates significantly. Both the proposed DRL model and GOEA perform well only when the number of users is low; their performance degrades as the vehicular density increases.

In [69], the authors further extend their work [70] and maximize the spectrum efficiency using a mobility-aware, priority-based channel allocation method based on DRL, where channels are allocated to vehicles according to their Service Mobility Factor (SMF) and priority. LSTM networks are employed to capture the temporal variation in service requests due to user mobility, which is then integrated with DRL. The bandwidth allocation policy is optimized using the proposed algorithm. The reward is calculated based on the user's SMF, transmission cost, and used bandwidth. Additionally, the performance of LSTM+DQN and LSTM+A2C is compared in terms of reward function convergence and spectral efficiency. Both models show superior performance for reward convergence. This study used a large real-time vehicular speed dataset, and the proposed model handles big data efficiently. However, the loss incurred by the proposed model keeps fluctuating because the environment keeps changing.

Research on spectrum sharing extensively employs RL algorithms and their variants. The primary goal is to maximize throughput and spectral efficiency while minimizing latency for vehicular users. DQN-based algorithms are used to handle large state spaces, and DDPG-based algorithms are used where the state and action variables are continuous. However, as vehicle numbers and the complexity of these spaces grow, RL algorithm performance tends to decline [69]. To address this, clustering approaches have been implemented to stabilize the system and lower access latency by decreasing direct connections to the cellular network [65]. Moreover, distributed approaches are favored in the literature because they reduce message overhead among vehicles compared to centralized methods. The research work in the area of spectrum sharing is summarized in Table 3 based on the objectives of the research, the algorithm designed, and the metrics used to evaluate the performance of the proposed algorithm.

4.1.3. AI in Ground Trajectory Management

Vehicular ground trajectory prediction is vital for the safety of intelligent self-driving vehicles. It predicts traffic behaviour on the road and informs future maneuvers based on these predictions, including drivers’ responses to sudden trajectory changes. Additionally, changes in vehicle trajectory and position impact the Field of View (FOV) in V2V communication as road blockage and vehicle density increase. Consequently, researchers are concentrating on trajectory prediction and have proposed numerous effective AI-based methods.

In [71], the authors predict the leading vehicle's trajectory using a method based on the joint time-series modelling approach (JTSM). The proposed model is compared with the constant Kalman filter (CKF), LSTM, and multiple LSTM (MLLSTM). The proposed model shows significant improvement in terms of root mean square error (RMSE). In [72], the authors predict vehicles' trajectories using the LSTM algorithm. The predicted value is then provided to the QL algorithm to determine the optimal resource allocation policy for the nodes. The real-world vehicle trajectory data used in this research were provided by Didi Chuxing, a ride-sharing company. The ultimate goal is to enhance the QoS for non-safety-related services in MEC-based vehicular networks, and the proposed model outperforms the other models.
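The RMSE metric used to compare trajectory predictors can be computed as in the sketch below. It assumes that a trajectory is a sequence of (x, y) positions sampled at the same time instants and that the error at each instant is the Euclidean distance between the predicted and actual positions; the surveyed papers may define the per-sample error differently.

```python
import math


def trajectory_rmse(predicted, actual):
    """Root mean square of the Euclidean position errors over a trajectory."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("trajectories must be non-empty and of equal length")
    squared_errors = [
        (px - ax) ** 2 + (py - ay) ** 2
        for (px, py), (ax, ay) in zip(predicted, actual)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Every predicted point is offset by (3, 4) metres, i.e. 5 metres from the truth
print(trajectory_rmse([(3.0, 4.0), (13.0, 4.0)], [(0.0, 0.0), (10.0, 0.0)]))  # 5.0
```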

In [73], the paper employs an LSTM encoder to encode the states of the target vehicle, enabling the prediction of its maneuvers. Trajectory prediction is then achieved using the predicted maneuvers along with map information. Finally, based on interaction-related factors, traffic rules (such as red lights), and map information, nonlinear optimization methods are utilized to refine and optimize the initial future trajectory. In addition, with the advancement of neural networks, various RNN architectures have been extensively utilized.

In [

74], the authors employed two groups of LSTM networks to predict the trajectory of a target vehicle. One group is used to model the trajectories of surrounding vehicles, while the other group focuses on modelling the interactions between these surrounding vehicles. In [

75], TraPHic, a model based on the CNN-LSTM hybrid network to predict the trajectories of traffic participants, is proposed. This model inputs the state and surrounding objects of the main vehicle into CNN-LSTM networks to extract their features. These features are then combined with the LSTM decoder to predict the main vehicle’s trajectory. However, this algorithm only predicts the trajectory of one object per operation. Similarly, in [

76], the authors employ a CNN-LSTM framework using a “box” method to detect and eliminate outliers in vehicle trajectories to obtain valid data. These data are then processed through the convolutional and maximum pooling layers to extract interaction-aware features, which are subsequently fed into an LSTM and a fully connected layer for prediction. The model’s hyper-parameters are optimized using the Grid Search (GS) algorithm.
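The outlier-removal step described above can be illustrated with a short sketch. It assumes that the "box" method refers to the standard box-plot (interquartile range) rule, which the source does not confirm; the function name `iqr_filter` and the whisker factor `k = 1.5` are illustrative choices, not details taken from [76].

```python
import statistics


def iqr_filter(values, k=1.5):
    """Keep only the samples that fall inside the box-plot whiskers.

    Assumption: the "box" method is the usual IQR rule, with bounds
    Q1 - k * IQR and Q3 + k * IQR. The factor k = 1.5 is the common default.
    """
    # statistics.quantiles with n=4 returns the three quartile cut points.
    q1, _median, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if lower <= v <= upper]


# Hypothetical speed samples (m/s) along one trajectory; 100.0 is a sensor glitch.
speeds = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 100.0]
clean_speeds = iqr_filter(speeds)
```

In practice the filter would be applied per feature (for example position, speed, or acceleration) before the cleaned trajectories are passed to the convolutional layers.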

This research primarily focuses on applying supervised learning techniques to the vehicular ground trajectory, a subject extensively studied within autonomous vehicular environments. The key areas of interest include driving-style prediction [

71], driving maneuvers [

73], and trajectory prediction for safe driving [

74,

75]. However, the impact of vehicular trajectory on resource management in ad hoc vehicular networks remains under-explored. Vehicular trajectory prediction is crucial, as the channel condition between vehicles in a highly mobile network depends on the LOS, and even minor variations in V2V communication can significantly degrade channel conditions, affecting the overall system performance. Additionally, since VANETs operate as multi-agent networks with independently moving agents, a minor positional shift of one agent can influence others. RL algorithms show promise in predicting trajectory effects on the system, but further study is needed to understand the impact of trajectory control on resource management using Machine Learning. The research work in the area of vehicular ground trajectory optimization for resource management is summarized in

Table 4 based on the objectives of the research, the algorithm designed, and the metrics used to evaluate the performance of the proposed algorithm.

4.1.4. AI in Task Offloading

Task offloading refers to the transfer of data from one device to another or the migration of the end user from one communication network to another. This paper focuses on the offloading of data or tasks between devices to distribute network resources and balance the load in highly mobile vehicular networks. Nowadays, vehicles are outfitted with sensors, cameras, transceivers, and onboard computing devices to facilitate communication with other vehicles and the surrounding infrastructure. Vehicular offloading involves transferring or migrating computations to cloud or fog nodes to augment vehicle capabilities. This offloading process allows for the remote processing of vehicular applications within the cloud or fog infrastructure. When vehicular application computations take place at the cloud level, it is known as cloud computing. Alternatively, when vehicular applications demand low latency and high computational resources, computations are performed on fog-level servers, a practice known as fog or edge computing. Task offloading can be binary or partial. In binary offloading, the entire task is either executed locally by the vehicle or transferred to the fog server for execution. With partial offloading, the vehicle performs a portion of the task locally, while the remainder is offloaded to the vehicular edge server for completion.
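The difference between binary and partial offloading can be made concrete with a minimal delay model. The sketch below is an illustration under stated assumptions, not a model taken from any of the surveyed papers: the task is described only by its size in bits and its CPU cycles, result download time is ignored, and in the partial case the local and offloaded portions are assumed to run in parallel. All names (`Task`, `f_local`, `rate`, `f_edge`, `alpha`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Task:
    bits: float    # input data size in bits (hypothetical field)
    cycles: float  # CPU cycles needed to process the whole task (hypothetical field)


def local_delay(task, f_local):
    """Delay when the vehicle executes the whole task (f_local in cycles/s)."""
    return task.cycles / f_local


def edge_delay(task, rate, f_edge):
    """Delay when the whole task is uploaded (rate in bit/s) and run at the edge."""
    return task.bits / rate + task.cycles / f_edge


def binary_decision(task, f_local, rate, f_edge):
    """Binary offloading: the entire task runs either locally or at the edge."""
    if edge_delay(task, rate, f_edge) < local_delay(task, f_local):
        return "edge"
    return "local"


def partial_delay(task, alpha, f_local, rate, f_edge):
    """Partial offloading: a fraction alpha is offloaded, the rest runs locally.

    Assumption: both portions execute in parallel, so the slower one dominates.
    """
    local_part = (1.0 - alpha) * task.cycles / f_local
    remote_part = alpha * (task.bits / rate + task.cycles / f_edge)
    return max(local_part, remote_part)


def best_partial_split(task, f_local, rate, f_edge, steps=100):
    """Grid search over the offloaded fraction alpha in [0, 1]."""
    candidates = [i / steps for i in range(steps + 1)]
    return min(candidates, key=lambda a: partial_delay(task, a, f_local, rate, f_edge))
```

Under this model, binary offloading is the special case where `alpha` is restricted to 0 or 1, which is why a partial split can never be slower than the better of the two binary options.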

In [

77], the authors proposed a multi-platform intelligent offloading and resource allocation algorithm to dynamically organize the computing resources. The task-offloading problem is dealt with as a multi-class classification problem where the K-Nearest Neighbor (KNN) algorithm selects the best option available out of cloud computing, mobile edge computing, or local computing platforms. The system makes decisions to compute the complete task locally or decides to offload it to the MEC or the cloud. In addition, when the task is offloaded to a desired server, RL is implemented to solve the resource allocation strategy. The state is defined as the MEC computing capacity, the actions are the offloading decision and computation resource allocation, and the reward is the minimum total cost. The proposed joint optimization is compared with full MEC and full local techniques, and it is concluded that the proposed scheme reduces the total system cost and optimizes the overall system performance. However, the proposed RL model is not compared with any other AI or conventional mathematical optimization techniques.
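Treating the offloading decision as multi-class classification can be sketched with a plain k-nearest-neighbour vote. The two normalized features used here (task size and required compute) and the training samples are invented purely for illustration; the features actually used in [77] are not given in this section.

```python
import math
from collections import Counter


def knn_predict(samples, labels, query, k=3):
    """Return the majority label among the k samples closest to the query."""
    # Rank training samples by Euclidean distance to the query point.
    order = sorted(range(len(samples)), key=lambda i: math.dist(samples[i], query))
    votes = Counter(labels[i] for i in order[:k])
    return votes.most_common(1)[0][0]


# Hypothetical training data: (normalized task size, normalized compute demand).
samples = [
    (0.10, 0.10), (0.20, 0.15), (0.15, 0.20),  # small tasks
    (0.50, 0.50), (0.55, 0.45), (0.60, 0.50),  # medium tasks
    (0.90, 0.90), (0.95, 0.85), (0.85, 0.95),  # large tasks
]
labels = ["local"] * 3 + ["mec"] * 3 + ["cloud"] * 3

platform = knn_predict(samples, labels, (0.52, 0.48))
```

The predicted class only selects the platform; the subsequent resource allocation would still be handled by a separate RL stage, as described above.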

A study similar to [

77] is conducted by the authors in [

78]. They approached the task-offloading problem in the same manner by proposing two offloading layers. The first layer uses a Random Forest (RF) model to decide whether a task is computed locally or offloaded to an MEC or cloud computing (CC) server. The second layer is a DRL model for resource allocation, in which vehicles send their traveling state, location, and task information to the MEC server. The RSU/BS is responsible for collecting the MEC server status, managing spectrum and computing resources among vehicles with task offloading requests, and combining this information into an environmental state. The RSU/BS then sends the combined environment state to the agent. The agent learns a resource allocation policy for each vehicle that maximizes the total accumulated reward. The T-Drive trajectory dataset, published by Microsoft, is used for model training and testing. This study is limited in scope, and the reward function is not well formulated, which significantly harms the convergence of the reward.

In [

79], the authors divide regions into different vehicular fog cloud (VFC) systems, each consisting of moving vehicles, a remote cloud, one or more vehicular fogs (VFs), and a VF resource manager (VFRM). Each VF has restricted resources, and the VFRM controls the assignment of resources to the VF to fulfill service latency requirements. The authors treat the offloading problem as partial offloading. To implement the proposed strategy, a proximal policy optimization (PPO)-based RL algorithm is used because it can handle the continuous action space, for which Q-learning is not suitable. In terms of computational time, the proposed PPO-RL model does not perform better than the plain PPO algorithm, because it also requires the computations of the heuristic model with which it is combined.

In [

80], to tackle the scarcity of computational resources, a selection criterion is proposed to select volunteer vehicles capable of executing the computationally intensive task. For the volunteer vehicle identification, that is, the task-offloading decision, the authors used various state-of-the-art ML-based regression techniques, including LR, SVR, KNN, DT, RF, GB, XGBoost, AdaBoost, and ridge regression. For the training and testing of the models, a vehicular onboard unit computing capability dataset is collected. It comprises three datasets that share the same seven features but differ in the number of samples. The results for the task execution time and delay are also validated against a simulation environment developed using the NS3 simulator. One drawback of the proposed scheme is that it is not delay-tolerant, even though the computing and transmission delays in task offloading are critical to consider.

In [

81], a model named ARTNet is proposed to make an AI-enabled V2X framework for maximizing resource utilization at the fog layer and minimizing the average end-to-end delay of time-critical IoV applications in a distributed fashion. The software-defined network (SDN) controller selects the secondary agents who, in the case of SDN failure, support the underlying architecture. Moreover, the ARTNet, implemented with the secondary agents, takes the data offloading decision to minimize the end-to-end delay based on the reward function. The energy consumption, average latency, average overload probability, and energy shortfall are considered as evaluation metrics. The ARTNet model achieves success through lower latency, reduced energy consumption, and minimized energy shortfall by intelligently distributing tasks at the fog layer using resource pooling. Additionally, ARTNet assigns tasks to fog nodes with fewer tasks and optimizes the performance. However, the proposed model is a simple Q-learning-based RL model whose reward convergence analysis is not provided. The authors could have used other heuristic algorithms in the RL model instead of Q-learning for comparison purposes, or they could have compared it with other variants of RL models to present the effectiveness of the proposed model.
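Since the model above is characterized as a simple Q-learning-based RL model, the core update can be sketched as follows. This is the generic tabular Q-learning rule, not the ARTNet implementation: the state encoding, the action set (an index of the fog node receiving the task), and the reward (for example a negative end-to-end delay) are assumptions made for illustration.

```python
import random
from collections import defaultdict


def q_update(q, state, action, reward, next_state, actions, alpha=0.1, gamma=0.9):
    """One tabular Q-learning step:
    Q(s, a) += alpha * (r + gamma * max_a' Q(s', a') - Q(s, a))
    """
    best_next = max(q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


def choose_action(q, state, actions, epsilon, rng):
    """Epsilon-greedy selection: explore with probability epsilon, else exploit."""
    if rng.random() < epsilon:
        return rng.choice(actions)
    return max(actions, key=lambda a: q[(state, a)])


# Hypothetical setup: two fog nodes; states are opaque labels for queue levels.
fog_nodes = [0, 1]
q_table = defaultdict(float)  # unseen (state, action) pairs start at 0.0
rng = random.Random(0)

# Offloading to node 0 in state "s0" incurred a delay of 2 time units.
new_value = q_update(q_table, "s0", 0, reward=-2.0, next_state="s1", actions=fog_nodes)
greedy = choose_action(q_table, "s0", fog_nodes, epsilon=0.0, rng=rng)
```

A reward convergence analysis of the kind the survey finds missing would track the accumulated reward per episode while this update is applied repeatedly.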

In [

82], the authors perform queue-length resource allocation. At the controller level, the network safety flows are managed. The safety flows have a higher priority ratio based on the criticality, and the non-safety flows have less priority. The bandwidth allocation is the main fairness allocation criterion to obtain the maximum rate for different applications. The simulation environment uses mininet-wifi for multiple RSUs and vehicles to communicate in V2V and V2I scenarios. The authors implemented LSTM, CNN, and DNN and compared their results with one another. The LSTM outperforms all models in terms of accuracy. AI-supervised learning is implemented, but the study provides limited information about the data collection, the size of the data samples, and the features used to classify the flows.

In [

83], the authors introduced automated slice resource control and updated the management system using two ML models. The first ML model predicts future resources at the network edges based on the user traffic streamed at each edge, classifying the traffic type to determine the specific resources required at any given physical resource location. The second ML model focuses on the resource utilization of virtual machines (VMs). It predicts future resource usage to decide on scaling specific types of virtual network functions (VNFs), ensuring service availability. An RNN model is used to automate the resource management for the IoV network and is compared with the Auto-Regressive Integrated Moving Average (ARIMA) model in terms of accuracy. The dataset used in this research is called the GWAT-13 Materna dataset with 12 attributes, available on Materna 13, an open source directory. It has three traces spanning a three-month period, with each trace containing data for 850 VMs on average. The prediction results of the ML models are used by the Automated Slice Resource Control and Update Management System (ASR-CUMS) to decide the resource requirements and update the physical resources. The dataset used is synthetic and is used for the reliable prediction of the available network resources.

In [

84], the authors framed the computation offloading problem as a multi-agent deep reinforcement learning (MADRL) problem, aimed at selecting the best MEC server to execute tasks for multiple vehicles. Each vehicle’s state, which includes real-time location and task information, is considered. The objective is to minimize the total task execution delay across the entire system over a given period, and the task execution delay is used as the primary performance metric. Initially, the data center trains the actor and critic networks in a centralized manner. Subsequently, vehicles make task-offloading decisions in a distributed way. The reward function is formulated as the task completion time when vehicles offload the task. However, the penalty associated with a wrong action taken by the agent is not specified. The evaluation shows that the proposed scheme, MDRCO, achieves superior performance compared to the NN algorithm and the AC algorithm.

In [

85], the authors proposed a Lyapunov-optimization-based Multi-Agent Deep Deterministic Policy Gradient algorithm (L-MADDPG) for task offloading and resource allocation with the ultimate objective of minimizing the system energy under the queue stability and latency constraints of the vehicular network. The authors adopt a binary offloading approach to offload the task to MEC. Each vehicle keeps a local computation queue and an offloading task queue. The MADDPG determines the best possible offloading policies based on the computational ratio and queue length at the edge server. The state space includes the speed of the vehicle, the computational resources available, and the maximum available power at the vehicle. The action space includes the task-offloading policy, the local resources allocated to the task, and the size of the offloading task. The reward function is based on the amount of energy consumed in the local processing of the task and includes all the computational ratios and energy constraints. The proposed L-MADDPG is compared with other state-of-the-art RL-based algorithms. The reward convergence of the proposed model is the same as that of the simple MADDPG model. For energy consumption, the proposed model outperforms the other models. However, as the number of vehicles grows, the local computation at VEC grows to 4 times the number of vehicles. The study does not address time complexity or the slow convergence of the proposed algorithm, which is evident from the results presented.

Most studies have focused on binary task offloading, often neglecting partial offloading. For example, the binary offloading issue is addressed in [

77,

78]. In [

77], the offloading problem is tackled using a state-of-the-art RL algorithm, which struggles with computational time and the dimensions of the action and state space. To address these limitations, Ref. [

78] employs DRL, which handles the high dimensionality and reduces computational complexity. The technique of partial offloading is utilized in [

79], where Q-learning is replaced with PPO within the RL model to manage complexity. However, this approach is not benchmarked against DDPG, which is known to yield better outcomes in partial offloading scenarios with high-dimensional action spaces. Lyapunov optimization, a well-established method for task offloading, has yet to be fully explored with AI to address the intricate time and computation complexities of the task-offloading problem. In [

85], the authors successfully integrate Lyapunov optimization with an RL algorithm, indicating a promising but under-explored area that warrants further research attention beyond merely the energy consumption of the system. The research work in the area of task offloading for resource management is summarized in

Table 5 based on the objectives of the research, the algorithm designed, and the metrics used to evaluate the performance of the proposed algorithm.

4.2. AI for Resource Management in UAV Networks

The mobility and LOS links offered by UAVs present them as a viable alternative to fixed base stations in wireless communication networks. Likewise, AI has garnered significant interest in this field due to its capacity to learn from data and the environment, enabling autonomous decision-making. Consequently, the research community is actively pursuing the integration of intelligence into UAV networks through various AI algorithms. This section discusses the potential applications of AI in UAV-based wireless networks, which can serve as a foundational platform for UAV-based vehicular networks.

4.2.1. AI in UAV Deployment

The placement of UAVs is critical in resource management, affecting transmit power, coverage, and the QoS of the communication system. UAVs may be deployed in various configurations, including two-dimensional, three-dimensional, single-UAV, and multi-UAV formations. In a two-dimensional setup, the UAV’s altitude is fixed, whereas a three-dimensional approach takes into account all three spatial coordinates. Optimizing UAV placement has produced enhanced outcomes, which are elaborated upon in this section.

In [

86], the authors combined the features of FL and MARL for UAV deployment and resource allocation in urban areas using a multi-agent collaborative environment learning (MACEL) algorithm. The main goal is to enhance the overall utility of the multi-UAV communication network through strategic adjustments in the positioning, channel allocation, and power configuration of individual UAVs. In this paper, the individual cumulative reward obtained by a single UAV with MACEL is not better than with the MADQL network, because each UAV in MADQL only pursues its own reward maximization and does not share information cooperatively with the others as in MACEL. Moreover, as the number of users increases, the proposed model increases the UAVs’ power while adjusting the UAVs’ locations to mitigate the effects of interference and energy consumption. Similarly, as the number of UAVs is increased (for UAVs = 6), the co-channel interference increases, and although MACEL optimizes the UAV deployment, the interference does not reach satisfactory levels. One way to reduce the interference and optimize network capacity could be to increase the number of discrete power levels, but this would increase the complexity and computational load of the network as the action space grows with it.

In [

87], the authors find the optimal placement of each UAV-BS that minimizes energy consumption. The load prediction algorithm (LPA), which is based on two supervised ML algorithms, namely RF and Generalized Regression Neural Network (GRNN), is used to predict macro-cell congestion based on the load history generated by the mobile network. Then, the UAV-BSs Clustering and Positioning Algorithm (UCPA) is implemented to calculate the required quantity of UAV-BSs for each congested macro-cell to minimize the corresponding user congestion, alongside identifying the optimal placement of each UAV-BS within the coverage region of the congested macro-cell. The proposed model demonstrates better overall throughput, signal-to-noise ratio (SNR), and number of users supported by UAV-BS. This study comprehensively covers the UAV and non-UAV-based congestion control by setting up the simulated network with real-time data and evaluating the performance of the system under minimum throughput and SNR requirements. However, the overhead reduction during the higher user demand needs to be investigated further for the proposed intelligent system to be implemented in 5G and 6G networks, which require shorter delays and higher throughput.

In [

88], the authors proposed an approach to the deployment of multiple UAV-mounted Re-Configurable Intelligent Surfaces (RISs) serving multiple downlink users. This paper jointly optimizes the active beamformers at both the macro and small-cell base stations, the phase shift matrix at each RIS, the trajectories/velocities of UAVs, and sub-carrier allocations for microwave and mmWave transmissions, with the objective of minimizing the overall transmit power of the system. The underlying problem is a non-convex mixed integer programming problem, so it is decomposed into two distinct sub-problems. The first sub-problem focuses on optimizing the trajectories/velocities of UAVs, the phase shifts of RISs, and sub-carrier allocations for microwave transmissions, and is solved with a distributed algorithm based on the dueling-DQN learning approach. The second deals with the design of active beam-forming and sub-carrier allocation for mmWave transmissions and is solved using the SCA method. The performance of the proposed model is compared with other baseline algorithms in terms of transmit power against the minimum data rate (increases with optimized location), the number of reflecting elements at the RISs (transmit power decreases as the number of reflecting elements increases), and the number of antennas at the MBS (transmit power decreases as the number of antennas increases). However, the number of UAVs is fixed to 2, and the effects of a large number of UAVs on the power requirements, interference, and SNR are not considered in this study.

In [

89], the authors proposed a Multi-objective Joint DDPG (MJDDPG) algorithm to maximize the aggregated data collection and energy transmission within the urban monitoring network while simultaneously minimizing the energy expenditure of the UAVs and optimizing the UAV flight patterns. The results show that the data collection and amount of energy transfer by the UAVs fluctuate a lot throughout the training phase. Moreover, as the number of nodes increases, the energy consumption of UAVs in the case of the proposed model deteriorates as compared to other baseline models. In this study, the experimental results of the proposed model are not compared with any other models to prove the authenticity of the model. In addition to this, it is noted that the efficiency and validity of the designed reward function can be exploited mathematically to improve the performance of the proposed algorithm. The dimensionality effects of the large action and the state space are not considered, and the time and computational complexity of the algorithm are not discussed.

In [

90], the authors consider a swarm of HAPSs for communication and aim to compare the RL and swarm intelligence (SI) algorithms. In the SI algorithm, each HAPS supports a fixed number of users, and one of the HAPSs does not support any user at all. In the RL algorithm, the number of users supported by each HAPS changes dynamically, every HAPS supports some users, and the total number of users supported by all the HAPSs is significantly higher than in the SI algorithm. The scope of this study is very limited: it neither covers complicated HAPS scenarios with hybrid solutions/algorithms nor compares the results with other baseline algorithms.

In wireless communication research, UAV deployment is considered an optimization sub-problem alongside others such as user-UAV association and UAV transmit power [

86], as well as energy optimizations [

87]. The primary goals include maximizing QoE, sum rate, network throughput [

87], the lifetime, the fairness, and the spectrum efficiency [

88]. The three-dimensional deployment of UAVs poses a significant challenge in UAV-based communications and has not been thoroughly explored. Furthermore, the optimization problem of determining the 3D locations for UAV-BSs is NP-hard and lacks a deterministic polynomial-time solution. Heuristic and numerical methods have been used to approximate the optimal locations for UAV-BSs. Additionally, collision avoidance and accurate channel estimation are critical areas that must be addressed with UAV deployment in cellular networks using RL-based algorithms. Currently, AI-based solutions yield overly optimistic results in offline/simulated environments, highlighting the need for their implementation in realistic communication settings. The research work in the area of UAV deployment for resource management is summarized in

Table 6 based on the objectives of the research, the algorithm designed, and the metrics used to evaluate the performance of the proposed algorithm.

4.2.2. AI in UAV Spectrum Management

Spectrum-sharing networks combine aerial UAVs and terrestrial communication devices, all of which depend on the allocated spectrum for tasks such as information transmission and data relaying. These networks function under three spectrum-sharing paradigms: overlay, underlay, and interweave. In overlay mode, UAVs gain access to extra bandwidth for their transmissions while supporting terrestrial transmissions. Underlay mode permits multiple nodes to concurrently share the same band while strictly managing mutual interference. In interweave mode, UAVs opportunistically transmit information when terrestrial signals are absent. Effective spectrum-sharing strategies allow both UAVs and terrestrial devices to improve their communication capabilities. UAVs can connect to terrestrial access points for high data rate and secure transmissions, and also act as aerial access points to bolster terrestrial communication.

In [

91], the authors proposed a dynamic information exchange management scheme in a UAV network based on LSTM and the DQN algorithm to improve the average collision rate, throughput, and reward function based on the frame rate, sending bit rate, and total packet error rate. The performance of the proposed LSTM+DQN model is not considerably better than the DQN and Q-learning model for average packet collision rate. For the throughput of the dynamic time slot allocation system, the proposed model converges slowly along with other comparative models and does not show better throughput maximization as compared to the other models. It is noted that the action space of each UAV agent either shares information with all other UAVs or waits to share. This makes the action space grow as all UAVs are exchanging information at the same time. In addition to this, the action space is defined as a binary operation and the best channel allocation factor is a continuous time function. Clearly, this makes the proposed model more complex and slows down the convergence, which is evident based on the results obtained in the research.

In [

92], the authors proposed a DQN-based task offloading and channel allocation scheme with the objective of gathering the expected data packets while ensuring they meet the delay constraints for each packet and keeping the data computational processing and time processing costs to a minimum. The volunteer vehicles need to choose the right number of sub-channels to minimize the task uploading delay, while the UAV must select the most efficient task processing model. This is a complex integer programming problem, and to solve it, the Lagrange Duality Method and DQN are deployed. The mean cost (data preparation, transmission, calculation, and downloading) is evaluated based on transmission power, the velocity of UAVs, the computing capacity, and the distance between vehicles and UAVs. The proposed DQN scheme performs better than the other Q-learning-based techniques in terms of the convergence of reward value and the computational time, except for the DQN-based Double-Option Scheme, as both use neural networks to predict the Q-value. Moreover, as the number of vehicles increases, the cost of the system also increases, which is a drawback of the proposed model as it is unable to serve all the vehicles simultaneously.

In [

93], the authors perform joint power allocation and scheduling for a UAV swarm network. In the network, one drone is selected as a leader, and all other drones are made to be a group of drones following the leader. Every group transmits the update of its local FL model to the leader drone so it can combine all the local parameters for global parameter updates to the global model. While the drones exchange updates, the wireless transmissions are affected by many internal and external losses and interference. In order to assess the influence of wireless variables such as fading, transmission delay, and UAV antenna angle variations caused by environmental factors like wind and mechanical vibrations on FL efficiency, a comprehensive convergence analysis is conducted. Subsequently, a strategy for joint power allocation and scheduling is introduced to enhance the convergence speed of the FL. One drawback of the study is that as the variance of the angle deviation increases, the FL convergence takes more time, which can only be compensated by increasing the bandwidth of the system.

UAVs bring a novel dynamic aspect to spectrum sharing in cellular communications. In the design of radio frequency networks, the installation of equipment and the allocation of the spectrum are traditionally carried out for individual cells. However, the movement of UAVs necessitates a more dynamic approach to cell design, taking into account factors such as UAV mobility, altitude, the number of UAVs deployed, as well as their coverage areas. AI-based spectrum sharing in UAV-enabled wireless networks is facilitated through the implementation of RL algorithms. RL models based on neural networks have been effective in various domains, but they tend to converge slowly when applied to spectrum sharing in wireless communications [

91,

92], a challenge also observed with FL-based models [

93]. Furthermore, the reward function is critical in system optimization, making the selection of appropriate parameters and their interrelationships vital for agents to make correct decisions. The research work in the area of the UAV spectrum sharing for resource management is summarized in

Table 7 based on the objectives of the research, the algorithm designed, and the metrics used to evaluate the performance of the proposed algorithm.

4.2.3. AI in Aerial Trajectory Management

Energy-efficient trajectory planning for UAVs has attracted significant research interest lately, with numerous solutions suggested for UAV-enabled wireless networks. Generally, the current strategies for energy-efficient UAV trajectory planning fall into two categories: non-ML-based methods and ML-based methods. This section delves into the ML-based methods for optimizing UAV trajectories.

In [

94], the authors proposed an FL-based method for the joint optimization of the UAV position, the local accuracy of the FL model, and the user computation and communication resources. The joint problem is decomposed into three separate sub-problems. Compared with fixed-altitude UAV-assisted FL, the proposed algorithm achieves better learning performance and reduces the system’s overall energy consumption. The horizontal trajectory of the UAV makes the problem non-convex, and the Successive Convex Approximation (SCA) technique is implemented to make it convex. The Dinkelbach method is applied to optimize the FL local accuracy. Finally, the Karush–Kuhn–Tucker (KKT) conditions are used to optimize the system bandwidth. The proposed method’s performance (system cost reduction) improves as the altitude of the UAV increases. In addition to this, as the bandwidth of the system increases, it supports more users and reduces the UAV energy consumption. However, this research is based on a single-UAV system, and more complex multi-UAV scenarios, including 3D vertical trajectories with collision avoidance and UAV transmission power, need to be considered to evaluate the performance of the proposed scheme.
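The Dinkelbach method mentioned above solves a fractional objective by repeatedly solving a parametric, non-fractional problem. The sketch below shows the general iteration on a toy problem rather than the FL local-accuracy sub-problem of [94]: it maximizes a ratio N(x)/D(x) over a finite candidate set, and the `rate` and `power` functions (a rate-per-power ratio with a fixed circuit power of 1.0) are purely hypothetical stand-ins.

```python
import math


def dinkelbach(candidates, numerator, denominator, tol=1e-9, max_iter=100):
    """Maximize numerator(x) / denominator(x) over a finite candidate set.

    Assumes denominator(x) > 0 for every candidate. Each iteration solves the
    parametric problem max_x N(x) - lam * D(x) and then updates lam to the
    ratio achieved at the maximizer; the loop stops when the parametric
    optimum is (numerically) zero.
    """
    lam = 0.0
    x_best = candidates[0]
    for _ in range(max_iter):
        x_best = max(candidates, key=lambda x: numerator(x) - lam * denominator(x))
        gap = numerator(x_best) - lam * denominator(x_best)
        if abs(gap) < tol:
            break
        lam = numerator(x_best) / denominator(x_best)
    return x_best, numerator(x_best) / denominator(x_best)


def rate(p):
    """Hypothetical achievable rate for transmit power p (normalized units)."""
    return math.log2(1.0 + p)


def power(p):
    """Hypothetical total power: transmit power plus a fixed circuit power."""
    return p + 1.0


power_levels = [0.1 * i for i in range(1, 51)]
best_power, best_ratio = dinkelbach(power_levels, rate, power)
```

In a continuous setting the inner maximization would be replaced by a convex solver, but the outer update of `lam` stays the same.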

In [

95], the authors integrate DNN in UAV at MEC for communication resource allocation, model optimization, and UAV trajectory control to minimize the service latency while ensuring that the requirements of learning accuracy and energy consumption are met. The resulting problem is characterized as a non-convex mixed integer nonlinear programming (MINLP) problem, so the original problem is divided into three sub-problems that are solved iteratively. By optimizing the trajectory, the UAV positions itself closer to its serving devices, thereby providing better channel conditions and reducing transmission latency. Although the proposed algorithm runs in polynomial time, its complexity is high, making its implementation challenging, particularly when the network scale is extremely large. Moreover, the task-offloading problem is based on binary model selection variables, and each task is supported by DNN at the edge or locally. This significantly increases energy consumption and computational complexity, which degrades the performance of the system.

In [

96], the authors maximize the sum rate of a UAV-enabled multi-cast network by jointly designing the UAV movement, the RIS reflection matrix, and the beam-forming from the UAV to the users, using a multi-pass deep Q Network (BT-MP-DQN). In the proposed model, the UAV is the agent, and the beam-forming control and trajectory design are the system actions. The movement of the UAV is a discrete action, whereas the beam-forming design is a continuous action. However, the UAV movement is not transformed into a continuous action, so the problem remains a non-convex MINLP. In addition, the proposed scheme is not compared with any baseline model to validate the results.

The potential for mobility that UAVs offer holds promising prospects but also introduces new challenges and technical obstacles. In UAV-assisted wireless networks, the optimization of UAV trajectories is critical, taking into account key performance metrics such as bandwidth [

94], sum-rate maximization [

96], energy consumption, and service latency [

95]. Additionally, trajectory optimization must consider the dynamic nature and diversity of UAV types. Despite numerous studies on UAV trajectory optimization, several issues remain unresolved, including the optimization of UAV trajectories based on the mobility patterns of ground users to enhance coverage performance and the development of obstacle- and collision-aware trajectory optimization for UAVs. Furthermore, the horizontal trajectory makes the problem non-convex, and it is often presumed that AI-based RL techniques can handle this non-convexity directly. In practice, leaving the non-convexity to the RL model leads to slow convergence. The research work in the area of UAV trajectory management is summarized in

Table 8 based on the objectives of the research, the algorithm designed, and the metrics used to evaluate the performance of the proposed algorithm.

4.2.4. AI in UAV Task Offloading and Resource Allocation

Numerous MEC-based solutions have been developed to meet the QoS requirements of data-heavy mobile applications. However, deploying static edge servers in isolated, mountainous, or disaster-prone regions may not be practical, and in such cases UAVs become valuable. Because they can maintain LoS communications, UAVs can be used for task offloading and to improve download performance. Research typically addresses task offloading and resource allocation concurrently, with objectives such as maximizing throughput and minimizing energy consumption. The configuration of a UAV-enabled MEC system depends strongly on the application scenario in which the UAVs are deployed. UAVs can function as relays or offloading units that temporarily handle data during high-traffic periods, operating as base stations to absorb the surge in user demand and thereby enhancing system capacity. Using UAVs as relays not only increases system capacity but also broadens coverage. To manage the system load, as in VEC, minor data tasks are computed locally while larger datasets are offloaded to UAVs. The main challenges in data offloading and resource allocation are controlling delays and managing the relay power. The primary goals of resource allocation and data offloading are to establish connections, secure high data transfer rates, and allocate targets efficiently.

In [

97], the authors address the integration of UAVs and terrestrial UEs in cellular networks. The key challenge is managing inter-cell interference caused by the reuse of time–frequency resource blocks (RBs). A novel approach based on a first p-tier RB coordination criterion is proposed. The study aims to enhance wireless transmission quality for UAVs while minimizing interference with terrestrial UEs, with the objective of minimizing the UAV’s ergodic outage duration (EOD). The complexity of the problem is tackled using a hybrid of a Dueling Double Deep Q-Network (D3QN) and Twin Delayed Deep Deterministic Policy Gradient (TD3). The proposed UAV-based system with the RL model is effective in minimizing service latency and enhancing communication quality. The study highlights the importance of practical channel modelling and advanced optimization techniques for managing the complex interference environment in cellular-connected UAV networks. However, the resulting MINLP problem has no closed-form solution, and the authors rely entirely on the RL algorithm to find the optimum, which increases the computational and time complexity.

In [

98], a multi-agent DRL approach was proposed to develop an efficient resource management method for UAV-assisted IoT communication systems. The resource-management algorithm jointly handles bandwidth allocation, throughput optimization, interference mitigation, and power usage management. The DRL model is combined with the K-means algorithm for clustering and a round-robin scheduling algorithm for the service request queues. Accuracy, RMSE, and testing time (s) are used as metrics to compare the proposed method with previous works, but the throughput prediction and power consumption rates are not compared with other models or previous work. It is therefore difficult to assess the overall performance of the proposed algorithm.

In [

99], the authors investigate dynamic resource allocation in multi-UAV-enabled communication networks, where each UAV autonomously communicates with a ground user by selecting its user, power level, and sub-channel without exchanging information with other UAVs. The long-term resource allocation problem is formulated as a stochastic game aimed at maximizing expected rewards. In this context, each UAV acts as a learning agent in the MARL model, and each resource allocation solution corresponds to an action taken by the UAVs. The reward function is based on each UAV’s individual user, sub-channel, and power level decisions. However, a UAV that cannot find a user with a satisfactory QoS is treated as nonfunctional for the network. This simplification makes the problem and the designed reward function too simple to capture the complexity of the system, so a richer reward function needs to be designed for efficient UAV use.
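
The agent structure described above can be sketched as follows. This is a generic independent tabular Q-learning agent, not the algorithm of [99]: the class name `UAVAgent`, the state encoding, the learning-rate and discount values, and the way rewards are supplied are all assumptions made for illustration.

```python
import itertools
import random


class UAVAgent:
    """One UAV learning on its own, without exchanging information with other UAVs."""

    def __init__(self, n_users, power_levels, n_subchannels,
                 alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
        # Joint action = (user index, power level, sub-channel index).
        self.actions = list(itertools.product(
            range(n_users), power_levels, range(n_subchannels)))
        self.q = {}  # (state, action index) -> estimated value, default 0
        self.alpha, self.gamma, self.epsilon = alpha, gamma, epsilon
        self.rng = random.Random(seed)

    def select(self, state):
        """Epsilon-greedy choice of an action index."""
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.actions))
        values = [self.q.get((state, a), 0.0) for a in range(len(self.actions))]
        return max(range(len(values)), key=values.__getitem__)

    def update(self, state, action, reward, next_state):
        """Standard one-step Q-learning update."""
        best_next = max(self.q.get((next_state, a), 0.0)
                        for a in range(len(self.actions)))
        old = self.q.get((state, action), 0.0)
        target = reward + self.gamma * best_next
        self.q[(state, action)] = old + self.alpha * (target - old)


# Hypothetical usage: 2 users, 2 power levels, 3 sub-channels.
agent = UAVAgent(n_users=2, power_levels=(1, 2), n_subchannels=3, epsilon=0.0)
agent.update(state=0, action=5, reward=1.0, next_state=0)
chosen = agent.select(0)
```

Because the joint action space grows as the product of users, power levels, and sub-channels, the reward signal is the main lever for steering each agent, which is why the simplicity of the reward function matters in this setting.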

In [

100], the authors proposed a MARL approach to manage bandwidth, throughput, interference, and power usage effectively while offloading tasks to the UAV. An actor–critic-based RL technique (A2C) is implemented in the UAVs to offload the computational tasks of the ground users and achieve the minimum mission time. The proposed method is compared with a greedy method and achieves a better average response time. However, it is not compared with other advanced RL-based methods, such as DDPG, in terms of computational and time complexity.

In [

101], the authors study task offloading from UAVs to MEC servers to minimize the latency and energy consumption of the UAVs. Each UAV is associated with its corresponding task by keeping track of the available energy and selecting the optimal MEC server. Two Q-learning models are proposed and compared with a greedy algorithm. However, the study does not provide any information about the agents, states, or actions used in the algorithm. Moreover, no reward function is defined, and the complexity of the proposed model is not discussed.

In [

102], the authors perform task offloading to manage resources while minimizing energy and latency in high-altitude balloon (HAB) networks. The HABs dynamically determine the optimal user association, service sequence, and task allocation to minimize the weighted sum of energy and time consumption for all users. A Support Vector Machine (SVM)-based FL algorithm is proposed to determine the user association. The non-convexity is handled by splitting the main problem into two sub-problems: (a) optimizing the service sequence and (b) optimizing the task allocation. As the number of users varies, the SVM-based global learning algorithm achieves a better accuracy rate and utility than the proposed SVM-FL algorithm. The energy consumption is lower than that of the baseline models because the HABs make users compute tasks locally. Moreover, although the task computation time is lower than that of the other algorithms, it remains high.

Given the diverse application scenarios, selecting the most suitable offloading technique is crucial for improving network throughput, bandwidth, interference [

97,

98,

99,

100], energy consumption, and latency [

101,

102]. In scenarios with a large number of users and network nodes, such as densely populated urban areas with heterogeneous networks, deep learning approaches and optimization-based algorithms impose higher overhead on UAVs because of their iterative nature and longer computation and training times. Cooperative UAV-enabled hybrid algorithms are presented as a viable option, as they leverage a multi-agent system that allows for combinations of relay nodes and MEC servers. This approach enables a better selection of offloading algorithms to prevent excessive delays. The energy efficiency, flight time, and type of UAV selected for resource management and task offloading directly affect the UAVs’ ability to serve as a long-term, viable alternative to the MEC server. Moreover, most studies formulate a binary offloading and allocation problem in which a task is either computed at the device level or completely offloaded to the UAVs. This limits the practical use of UAVs because, owing to their energy constraints, they often cannot compute the complete task. The research work in the area of UAV-based task offloading and resource allocation for resource management is summarized in

Table 9 based on the objectives of the research, the algorithm designed, and the metrics used to evaluate the performance of the proposed algorithm.
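
The limitation of binary offloading noted above can be illustrated with a small worked example. The latency model below is a deliberately simplified sketch: it assumes the local and offloaded parts run in parallel, ignores energy and the downlink, and uses hypothetical parameter values (task size, CPU frequencies, uplink rate) that are not taken from any of the reviewed works.

```python
def latency(rho, bits, cycles, f_local, f_uav, rate):
    """Completion time when a fraction rho of the task is offloaded.

    The local and offloaded parts are assumed to execute in parallel.
    """
    t_local = (1.0 - rho) * cycles / f_local
    t_offload = rho * bits / rate + rho * cycles / f_uav  # uplink + UAV compute
    return max(t_local, t_offload)


# Hypothetical task and platform parameters.
params = dict(bits=1e6, cycles=1e9, f_local=1e9, f_uav=5e9, rate=1e7)

# Binary offloading: compute everything locally (rho = 0) or on the UAV (rho = 1).
binary_latency = min(latency(0.0, **params), latency(1.0, **params))

# Partial offloading: search a grid of offloading fractions.
fractions = [i / 100 for i in range(101)]
best_rho = min(fractions, key=lambda r: latency(r, **params))
partial_latency = latency(best_rho, **params)
```

Since the binary choices are special cases of the partial model, the partial solution can never be worse under this model, and with these assumed numbers it splits the task so that both parts finish at nearly the same time.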

4.3. AI for Resource Management in UAV-IoV Networks

Vehicular networks are heterogeneous and highly dynamic, with fast-moving wireless nodes. This makes them more complex and calls for networking algorithms that can meet stringent network control and resource-allocation demands, such as efficient spectrum sharing, transmission power maximization, and computational resource management that minimizes the energy requirements of UAVs and of the vehicles’ local computation. In contrast to terrestrial networks, UAV-IoV networks are three-dimensional, and the UAV-BS itself moves along with the vehicles on the roads. Therefore, traditional optimization techniques are unable to capture the resulting complex patterns. Resource management in UAV-IoV networks is divided into radio resource allocation and computational resource management. Radio resource allocation is further divided into spectrum and channel access optimization, and its main goal is to limit channel interference, power usage, and network congestion. Computational resource management includes service, task, and traffic offloading in MEC, where the edge cloud nodes are located in BSs and/or UAVs. This decentralization yields faster response times than centralized deployments. In this section, we review AI-based resource allocation research conducted in UAV-IoV networks.

4.3.1. AI Deployment of UAV-IoV Systems

The integration of UAVs and vehicles within an AI-based IoV network enabled by UAVs is an under-researched area. Most studies focus on UAVs with static IoT users, cellular BS, vehicles, and RSUs. However, considering the high mobility of both UAVs and vehicles, vehicle clustering on roads and UAV deployment in the aerial network become critical due to the rapidly changing channel conditions between UAVs and vehicles. Additionally, with vehicles traveling at varying speeds, maintaining favorable channel conditions to ensure good QoS is essential, necessitating a mechanism for adequate connection time.

In [

103], the authors proposed an FL-based approach to the development of IoV-based applications. The authors used the Gale–Shapley algorithm to match the lowest-cost UAV to each sub-region, and the UAV performs the local training. Based on the traversal and transmission cost function, the multi-dimensional node-coverage cost is converted into a single-dimensional node-coverage cost. The simulation results show that the UAV with the lowest marginal cost of node coverage is assigned to each sub-region for task completion. However, neither the UAV energy constraint nor the effect of the flight time on the node coverage has been considered. Moreover, the proposed technique is not compared with previous works or any other baseline model to provide a comprehensive analysis in terms of throughput maximization, energy consumption, and computational complexity.
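
For readers unfamiliar with the matching step, the following is a minimal sketch of the classic Gale–Shapley procedure applied to sub-regions and UAVs. It is not the implementation of [103]: the cost table, the rule that both sides rank partners by increasing cost, and all names are assumptions, and the sketch further assumes equal numbers of sub-regions and UAVs with complete preference lists.

```python
def gale_shapley(proposer_prefs, acceptor_prefs):
    """Return a stable matching {proposer: acceptor}.

    Assumes equally many proposers and acceptors and complete preference lists.
    """
    # rank[a][p] = position of proposer p in acceptor a's list (lower is better).
    rank = {a: {p: i for i, p in enumerate(prefs)}
            for a, prefs in acceptor_prefs.items()}
    next_choice = {p: 0 for p in proposer_prefs}
    engaged = {}  # acceptor -> proposer currently held
    free = list(proposer_prefs)
    while free:
        p = free.pop()
        a = proposer_prefs[p][next_choice[p]]
        next_choice[p] += 1
        current = engaged.get(a)
        if current is None:
            engaged[a] = p
        elif rank[a][p] < rank[a][current]:
            engaged[a] = p
            free.append(current)  # the displaced proposer tries again
        else:
            free.append(p)        # rejected, will propose to its next choice
    return {p: a for a, p in engaged.items()}


# Hypothetical coverage costs: cost[region][uav].
cost = {"R1": {"U1": 3, "U2": 1}, "R2": {"U1": 2, "U2": 4}}
region_prefs = {r: sorted(c, key=c.get) for r, c in cost.items()}
uav_prefs = {u: sorted(cost, key=lambda r: cost[r][u]) for u in ("U1", "U2")}
matching = gale_shapley(region_prefs, uav_prefs)
```

In this toy instance each sub-region ends up with its cheapest UAV, but in general the procedure guarantees only stability (no sub-region and UAV would both prefer each other over their assigned partners), not minimum total cost.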

In [

104], the authors deployed UAVs as relays to improve the communication efficiency between the model owner (server) and the workers (vehicles). The paper combines auction-integration (AI) formations to integrate UAVs into groups of IoV elements, with the aim of maximizing the total revenue of a single UAV. The algorithm becomes more complex as the number of UAVs grows, because the number of UAV partition sets that must be searched increases exponentially. The model is not affected by changes in the number of vehicles. However, an increase in the overall cell size reduces the communication efficiency of the proposed model, and the authors did not address this issue.

A vast amount of research has been conducted on the deployment of vehicles and UAVs individually using AI. Yet the deployment of UAVs in relation to the distribution of vehicles on roads remains unexplored. The energy constraints, the relative speed of UAVs to vehicles on the road, and the UAVs’ brief flight duration present significant challenges in managing communication resources within UAV-based IoV networks. The research work in the area of UAV and vehicular deployment for resource management in UAV-assisted IoV networks is summarized in

Table 10 based on the objectives of the research, the algorithm designed, and the metrics used to evaluate the performance of the proposed algorithm.