EUAVDet: An Efficient and Lightweight Object Detector for UAV Aerial Images with an Edge-Based Computing Platform

Abstract

1. Introduction

| Method | Parameters (M) | FLOPs (G) | AP(%) | FPS | |||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| AP | AP | AP | AP | mAP | AP | mAP | Nano | Orin | |||

| YOLOv3-tiny [23] | 8.68 | 12.9 | 4.0 | 11.6 | 13.9 | 16.7 | 6.95 | 14.8 | 6.2 | 14.8 | 75.0 |

| YOLOv5-s [24] | 7.01 | 15.8 | 9.5 | 24.1 | 39.6 | 29.8 | 16.1 | 25.8 | 13.6 | 12.5 | 65.0 |

| YOLOx-tiny | 5.04 | 15.2 | 9.0 | 25.1 | 28.8 | 31.9 | 18.8 | 27.6 | 16.1 | 10.8 | 55.1 |

| YOLOx-s [25] | 8.94 | 26.8 | 10.0 | 26.4 | 34.8 | 33.4 | 19.8 | 28.9 | 17.0 | 9.4 | 46.7 |

| YOLOv7-tiny [26] | 6.03 | 13.1 | 11.1 | 26.9 | 39.1 | 35.0 | 18.5 | 29.5 | 15.4 | 16.3 | 70.0 |

| YOLOv10-n | 2.28 | 6.7 | 9.4 | 27.4 | 34.4 | 30.8 | 17.8 | 26.0 | 14.3 | 22.3 | 82.6 |

| YOLOv10-s [27] | 7.20 | 21.6 | 12.5 | 33.5 | 46.1 | 37.0 | 22.0 | 30.6 | 17.2 | 11.3 | 54.5 |

| EUAVDet-n | 1.21 | 6.2 | 9.5 | 28.9 | 34.9 | 31.3 | 18.3 | 26.1 | 14.3 | 23.4 | 84.7 |

| EUAVDet-s | 4.44 | 21.4 | 13.0 | 34.1 | 40.4 | 37.7 | 22.3 | 31.0 | 17.4 | 11.6 | 55.6 |

| YOLOv8-n | 3.01 | 8.1 | 9.6 | 28.6 | 38.2 | 31.9 | 18.4 | 26.2 | 14.4 | 19.5 | 78.0 |

| YOLOv8-s [28] | 11.13 | 28.7 | 13.0 | 33.1 | 41.5 | 38.0 | 22.4 | 31.0 | 17.3 | 6.4 | 45.0 |

| EUAVDet-n | 1.34 | 6.9 | 10.5 | 29.8 | 36.0 | 32.9 | 19.2 | 27.1 | 14.9 | 21.1 | 79.6 |

| EUAVDet-tiny | 2.86 | 15.0 | 12.8 | 34.1 | 40.6 | 37.2 | 22.1 | 30.5 | 17.0 | 13.2 | 56.0 |

| EUAVDet-s | 4.96 | 25.6 | 14.0 | 35.3 | 42.1 | 39.2 | 23.5 | 32.4 | 18.1 | 8.6 | 52.7 |

2. Related Works

2.1. Lightweight Object Detection

2.2. Multi-Scale Feature Fusion

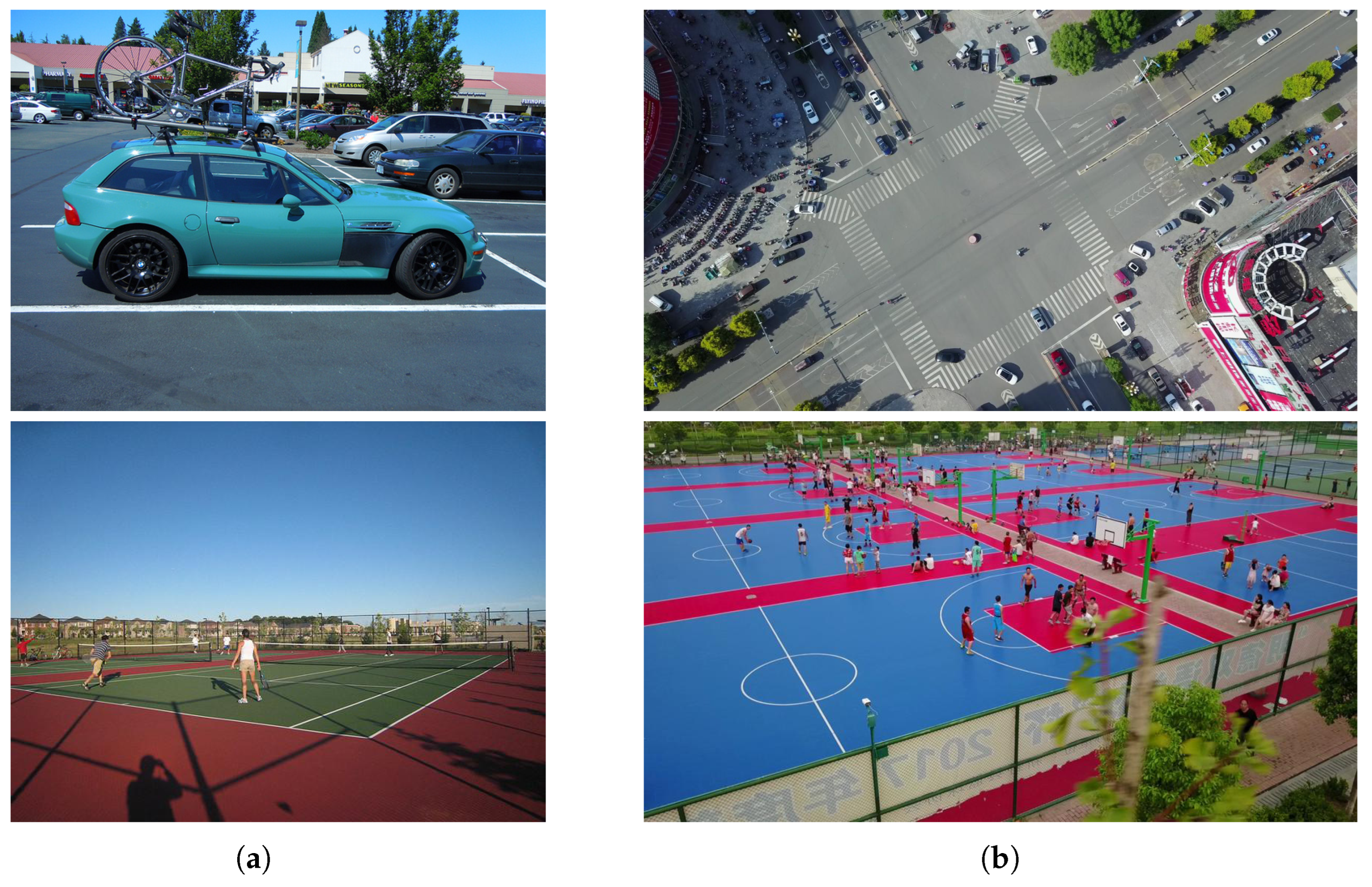

2.3. Object Detection for UAV Aerial Images

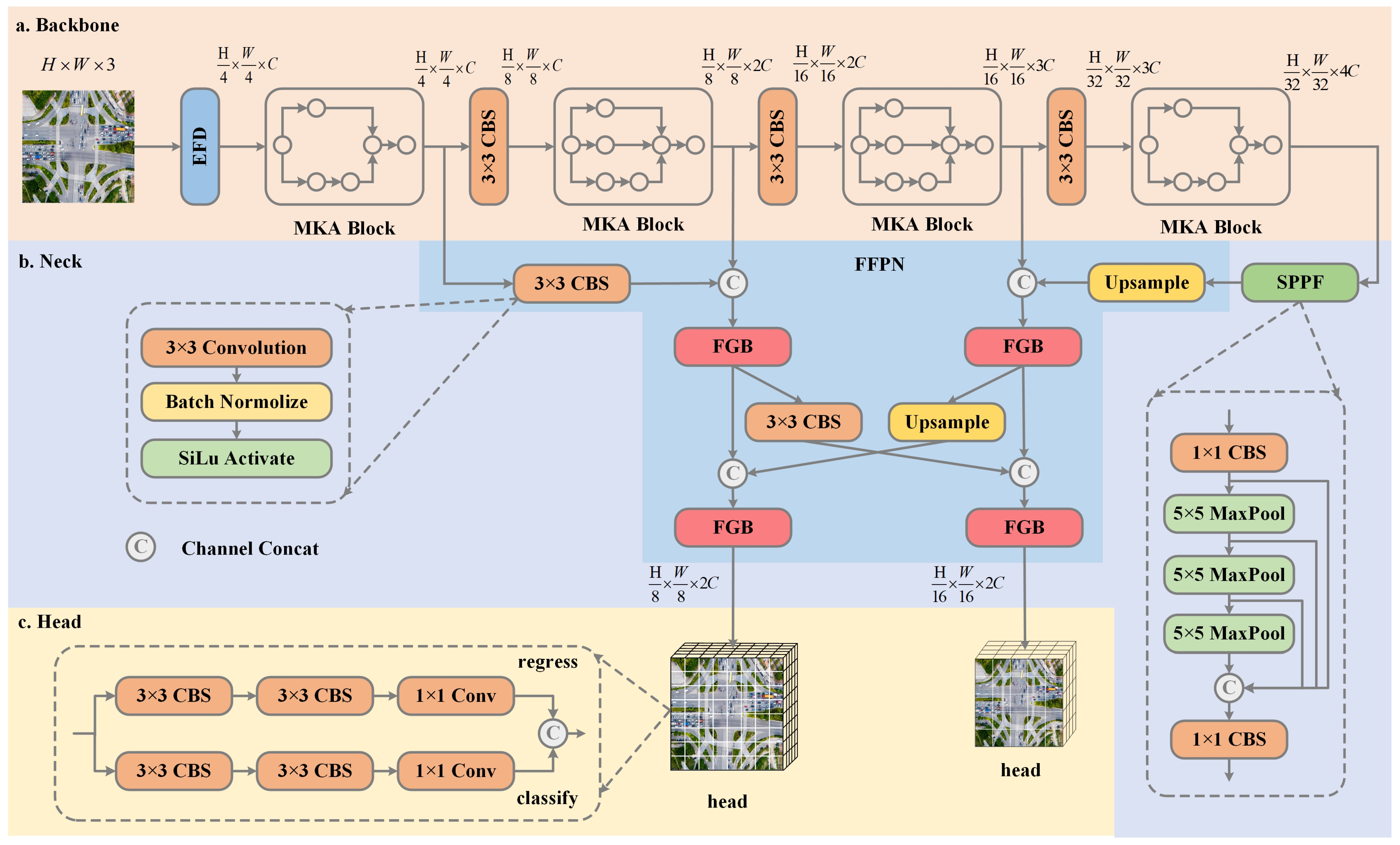

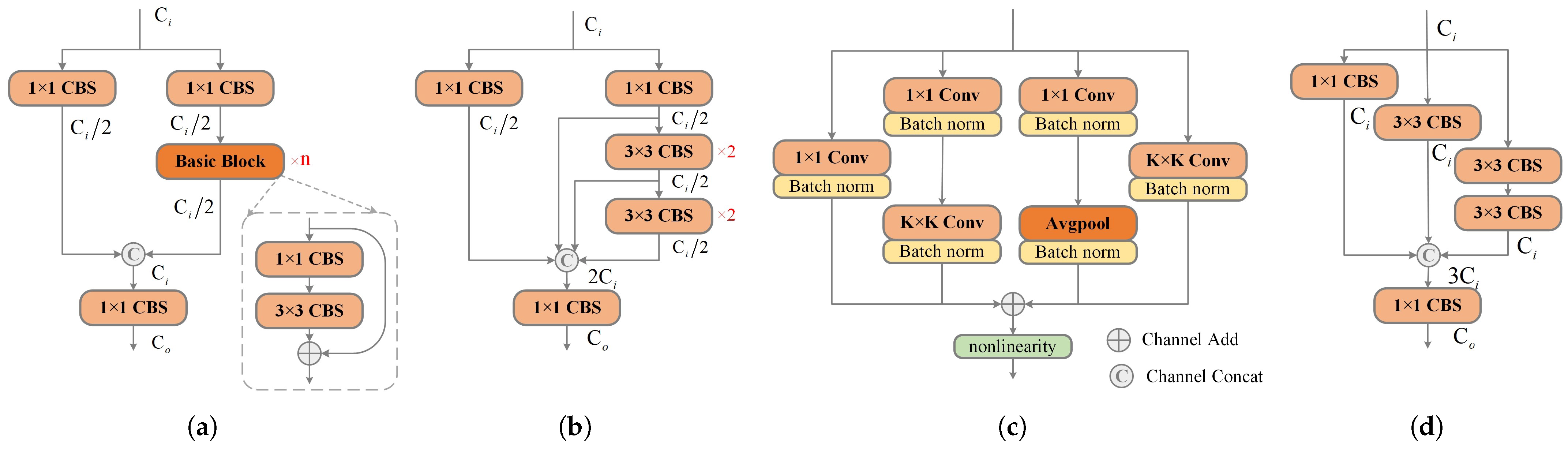

3. Proposed Method

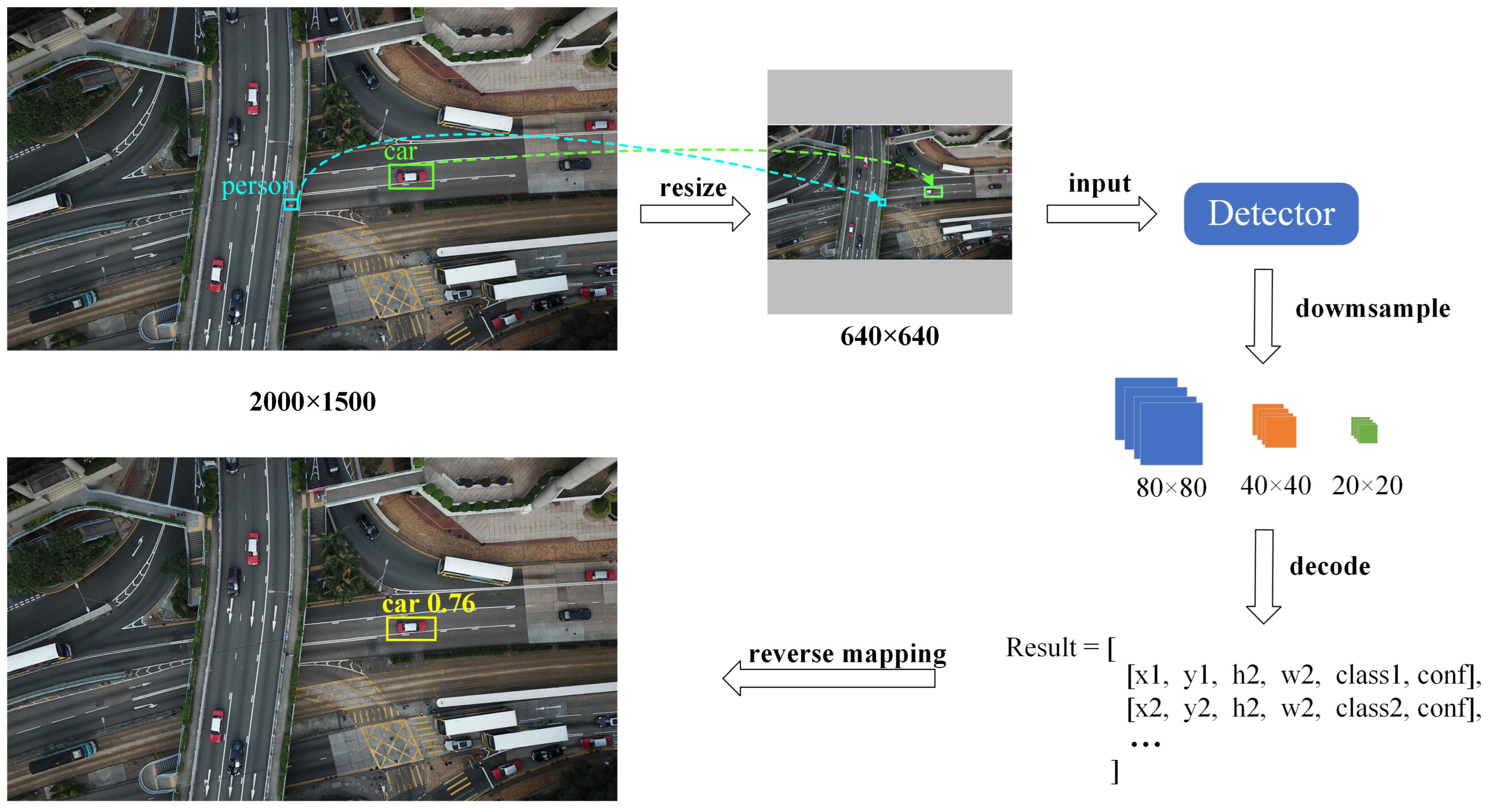

3.1. Efficient Feature Downsampling Module

3.2. Multi-Kernel Aggregation Block

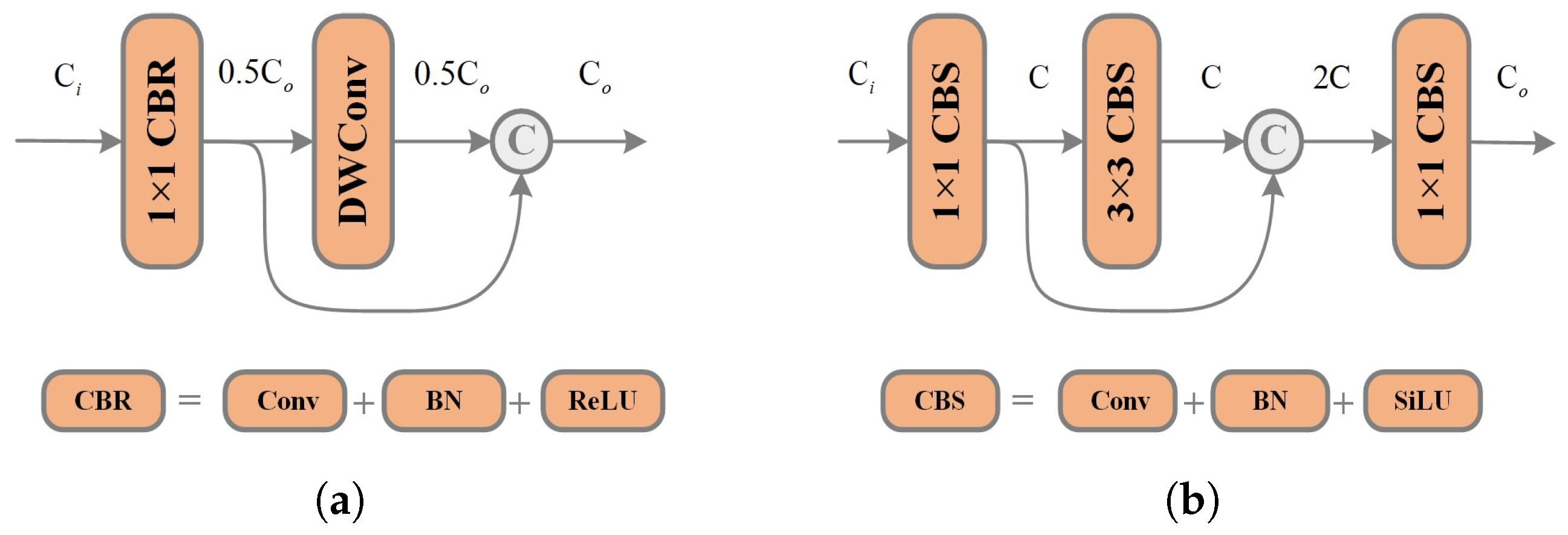

3.3. Faster Ghost Module

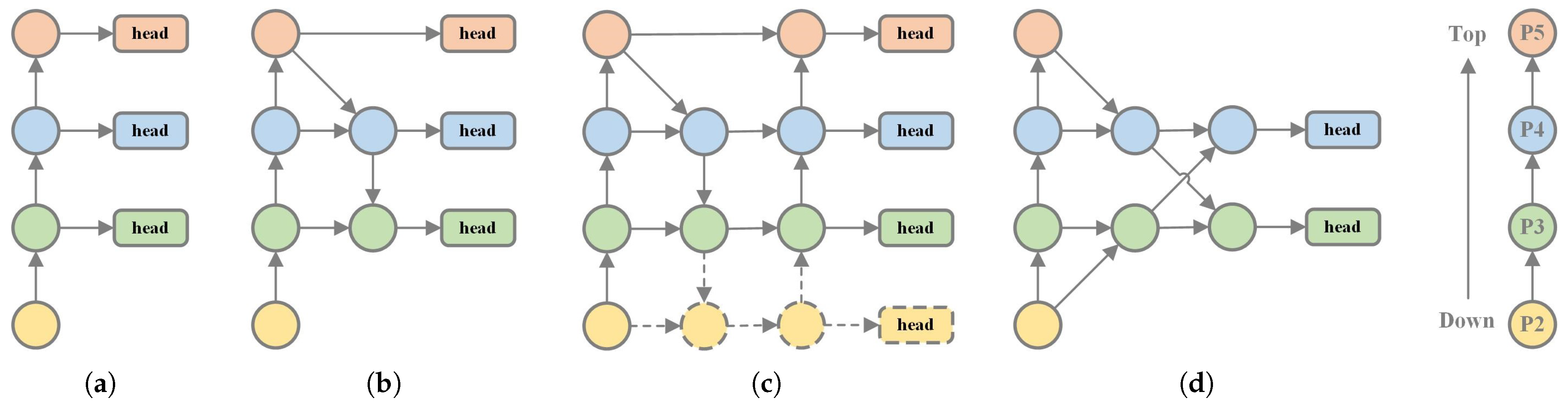

3.4. Focused Feature Pyramid Network

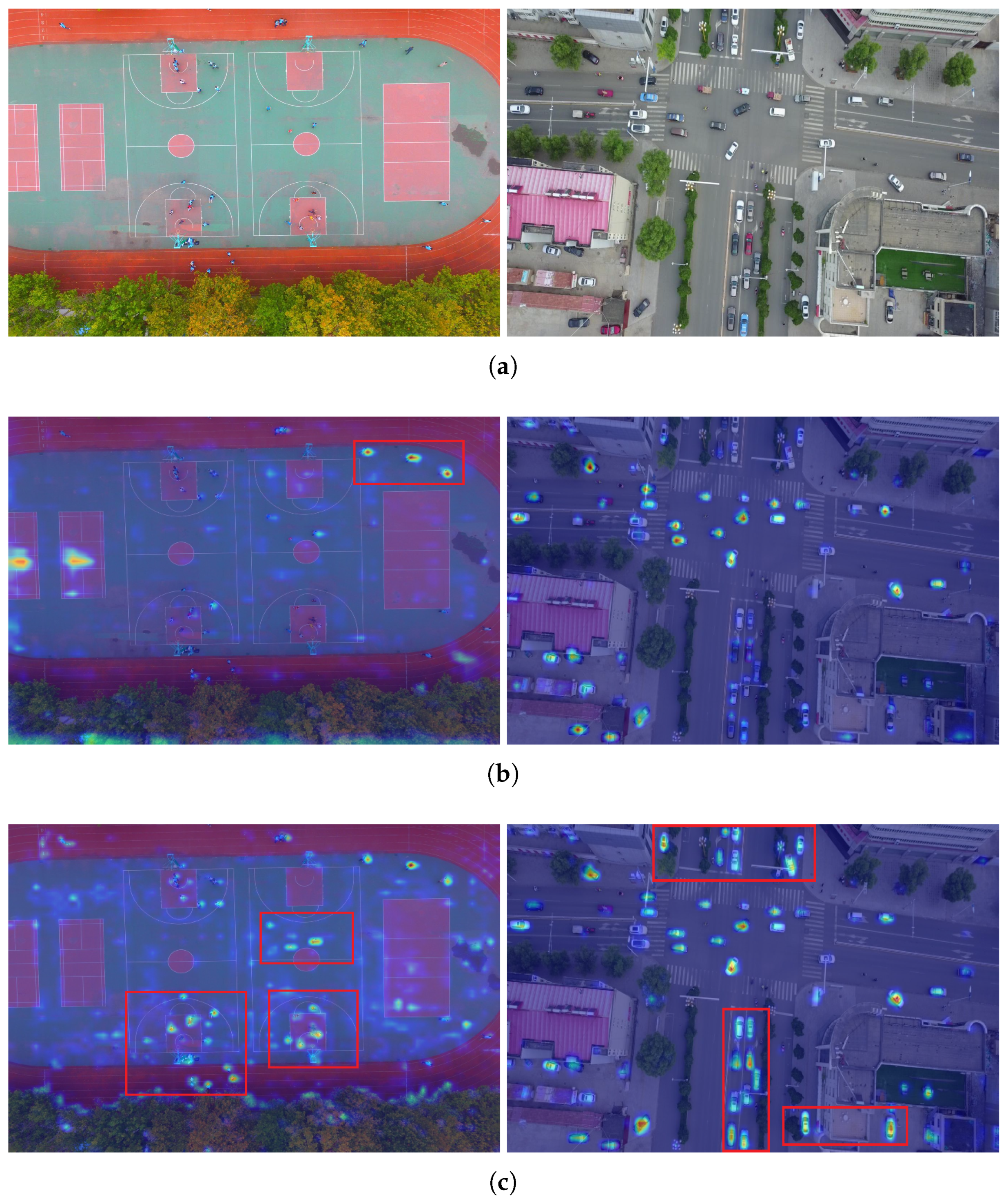

4. Experiments

4.1. Datasets

4.2. Evaluation Metrics

4.3. Implementation Details

4.4. Ablation Study

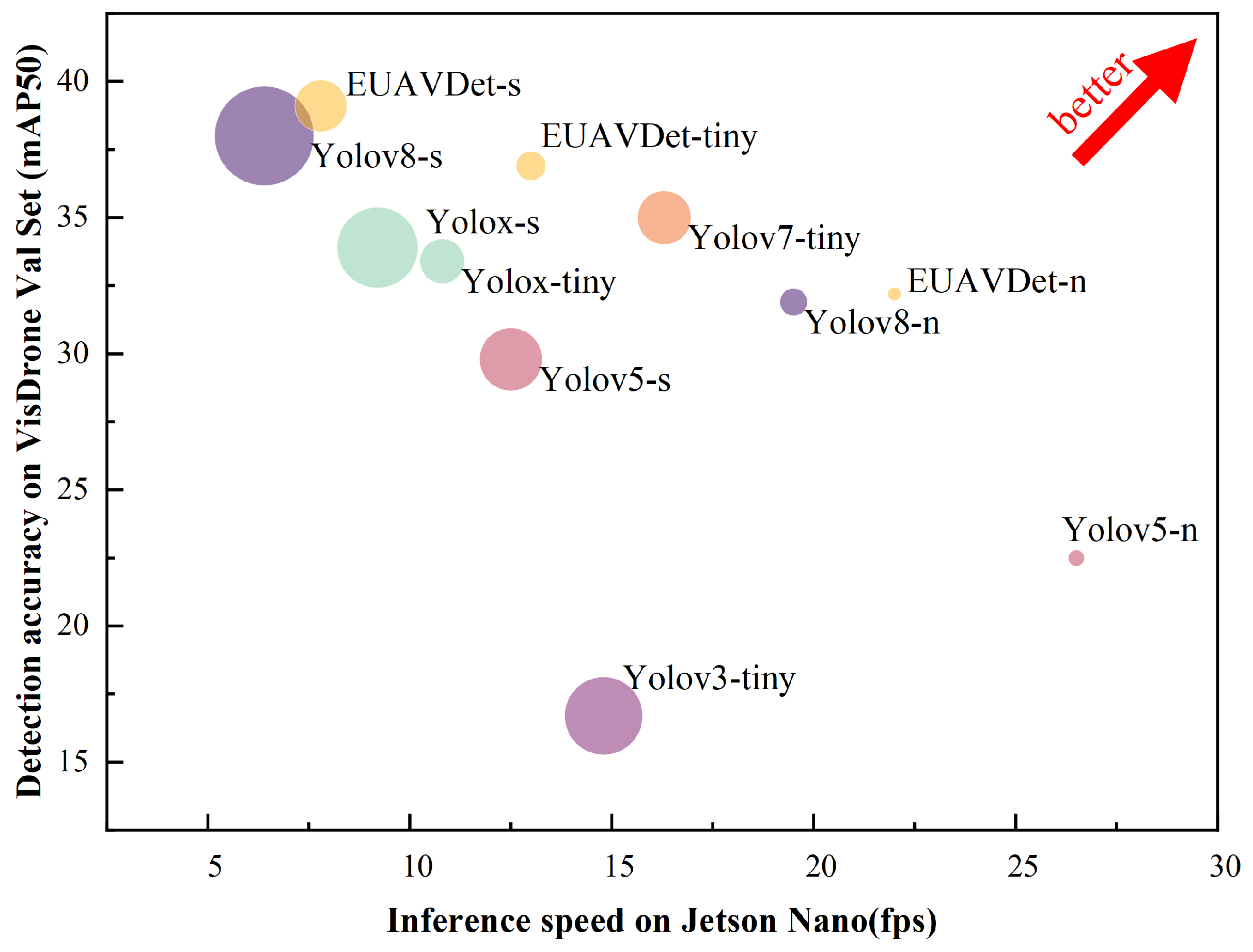

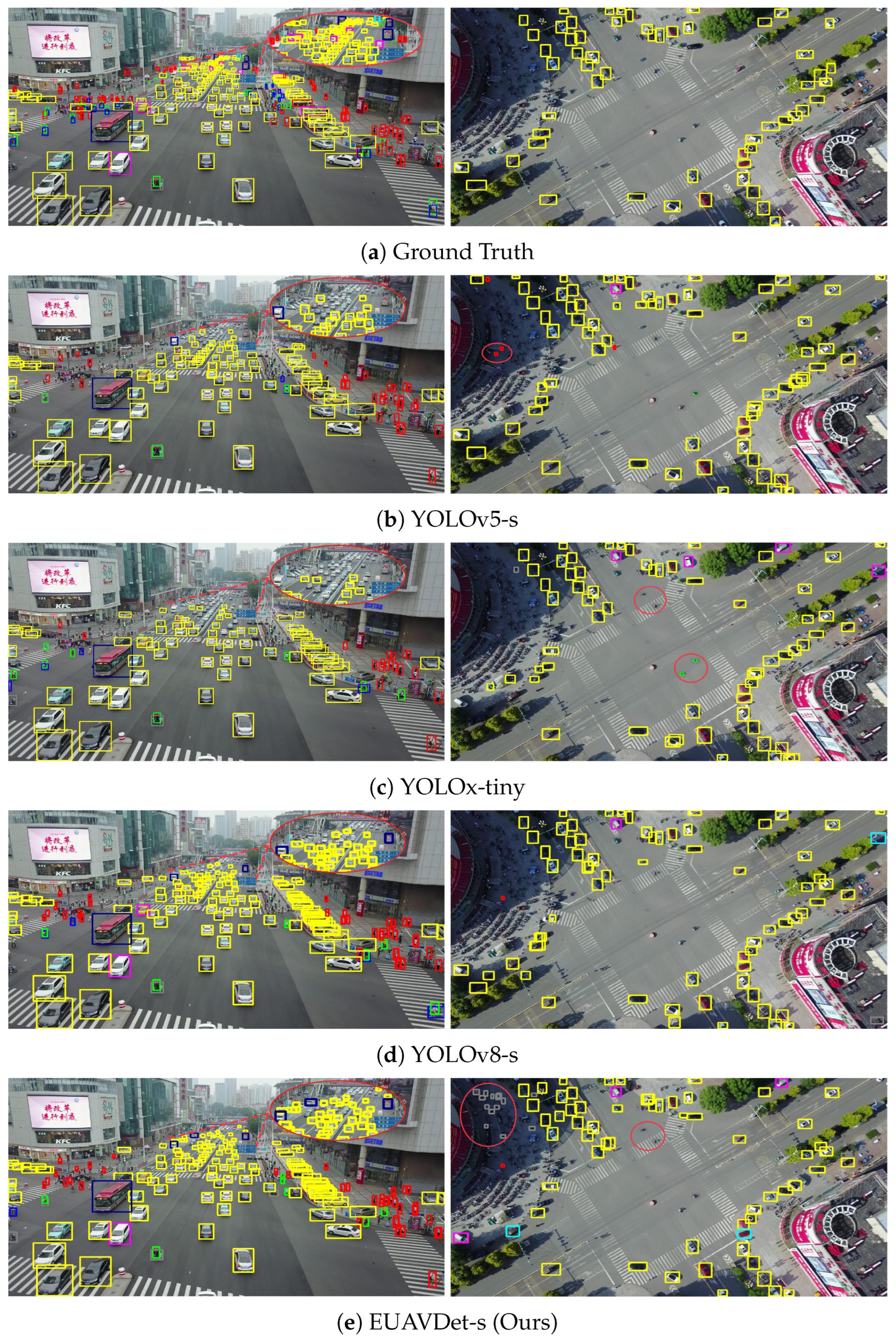

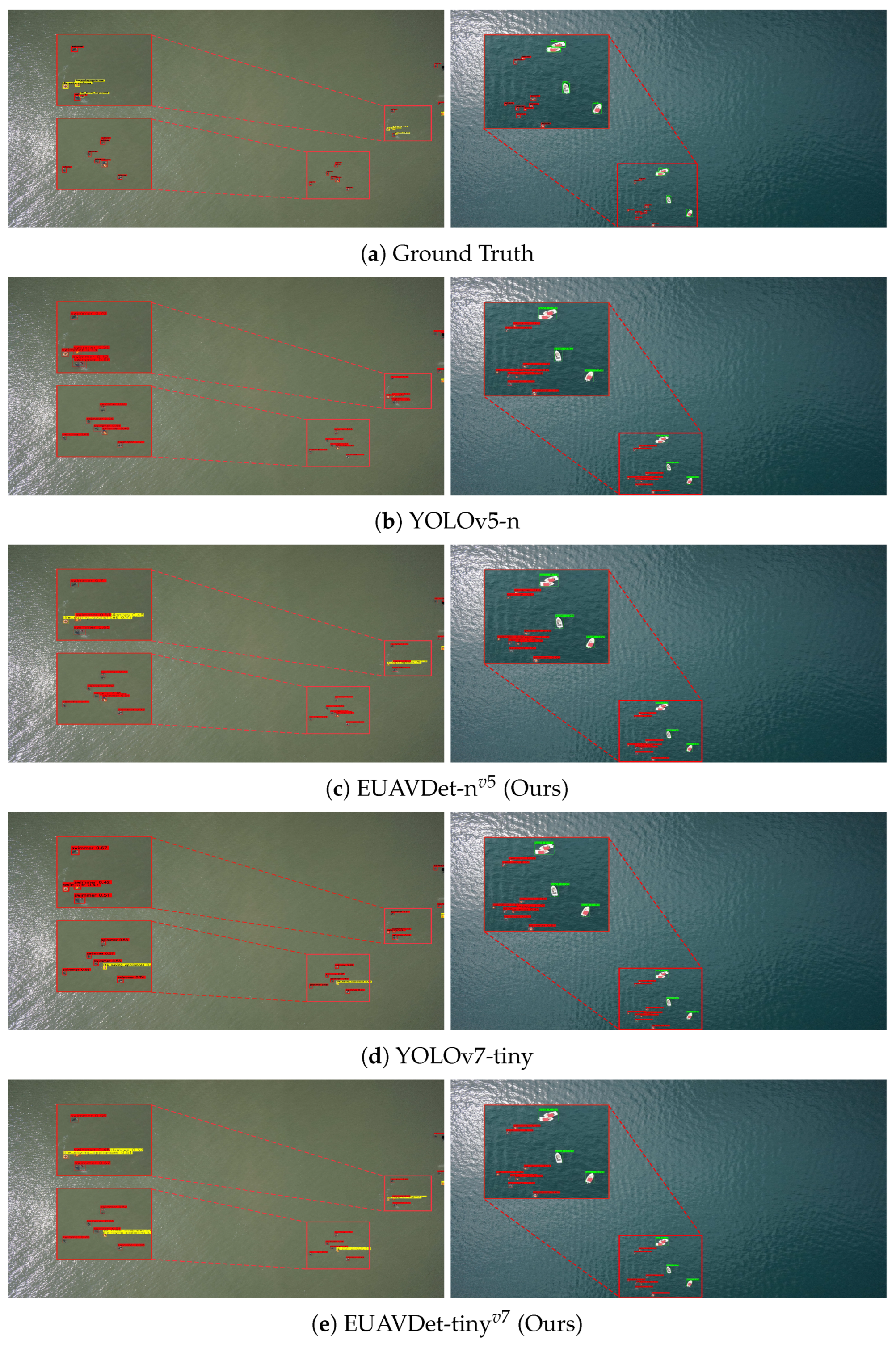

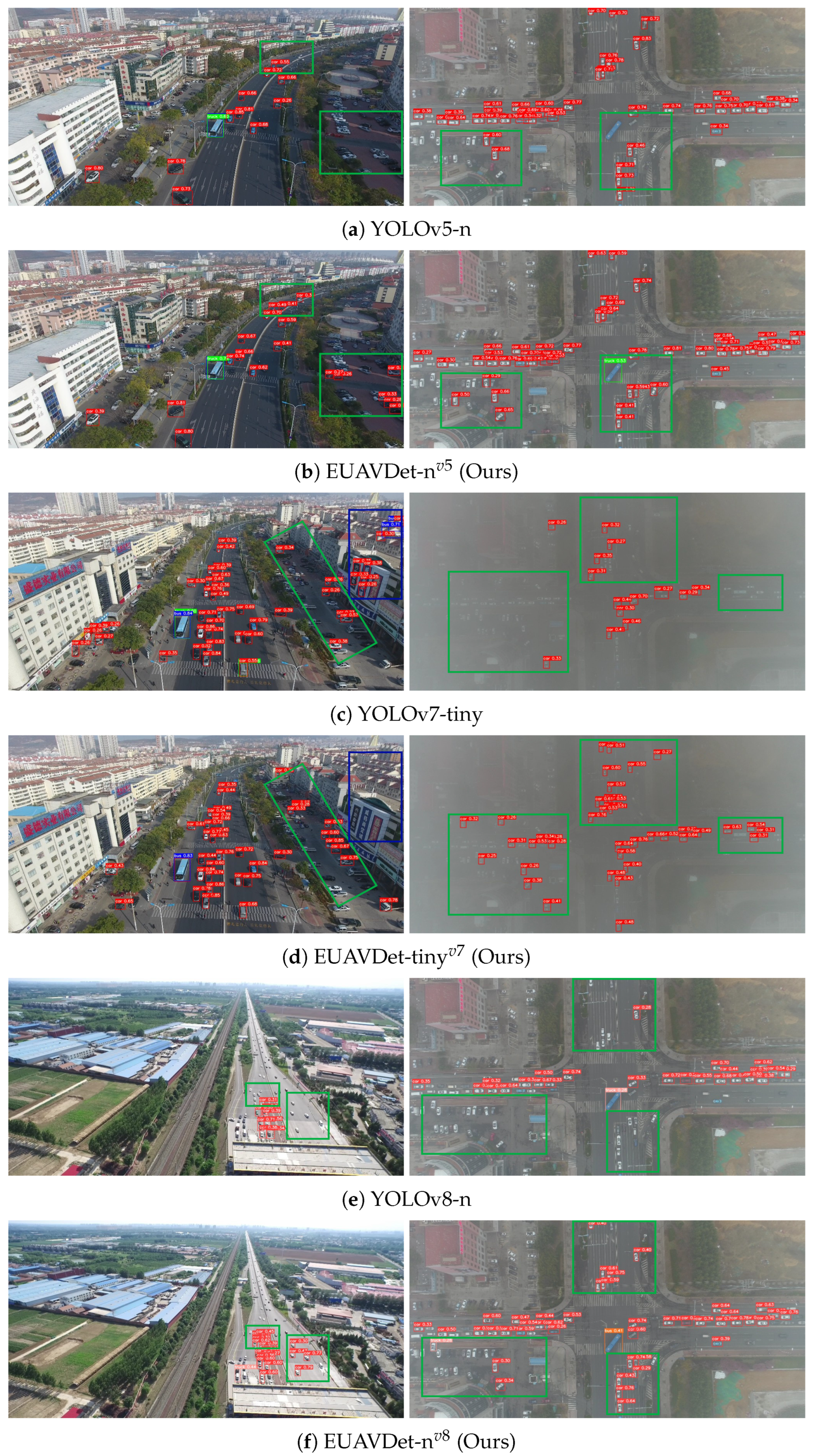

4.5. Comparison with the State-of-the-Art Methods

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Chen, C.; Zheng, Z.; Xu, T.; Guo, S.; Feng, S.; Yao, W.; Lan, Y. YOLO-Based UAV Technology: A Review of the Research and Its Applications. Drones 2023, 7, 190. [Google Scholar] [CrossRef]

- Zhang, Z.; Zheng, H.; Cao, J.; Feng, X.; Xie, G. FRS-Net: An Efficient Ship Detection Network for Thin-Cloud and Fog-Covered High-Resolution Optical Satellite Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 2326–2340. [Google Scholar]

- Cao, Z.; Kooistra, L.; Wang, W.; Guo, L.; Valente, J. Real-time object detection based on uav remote sensing: A systematic literature review. Drones 2023, 7, 620. [Google Scholar] [CrossRef]

- Koay, H.V.; Chuah, J.H.; Chow, C.O.; Chang, Y.L.; Yong, K.K. YOLO-RTUAV: Towards real-time vehicle detection through aerial images with low-cost edge devices. Remote Sens. 2021, 13, 4196. [Google Scholar] [CrossRef]

- Hernández, D.; Cecilia, J.M.; Cano, J.C.; Calafate, C.T. Flood detection using real-time image segmentation from unmanned aerial vehicles on edge-computing platform. Remote Sens. 2022, 14, 223. [Google Scholar] [CrossRef]

- Fan, Y.; Chen, W.; Jiang, T.; Zhou, C.; Zhang, Y.; Wang, X. Aerial Vision-and-Dialog Navigation. arXiv 2022, arXiv:2205.12219. [Google Scholar]

- Liu, S.; Zhang, H.; Qi, Y.; Wang, P.; Zhang, Y.; Wu, Q. AerialVLN: Vision-and-Language Navigation for UAVs. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), IEEE, Paris, France, 2–6 October 2023; pp. 15338–15348. [Google Scholar]

- Zhang, P.; Zhong, Y.; Li, X. SlimYOLOv3: Narrower, faster and better for real-time UAV applications. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27–28 October 2019. [Google Scholar]

- Lu, Y.; Gong, M.; Hu, Z.; Zhao, W.; Guan, Z.; Zhang, M. Energy-based CNNs Pruning for Remote Sensing Scene Classification. IEEE Trans. Geosci. Remote Sens. 2023, 61, 3000214. [Google Scholar] [CrossRef]

- Li, Z.; Liu, X.; Zhao, Y.; Liu, B.; Huang, Z.; Hong, R. A lightweight multi-scale aggregated model for detecting aerial images captured by UAVs. J. Vis. Commun. Image Represent. 2021, 77, 103058. [Google Scholar]

- Lee, J.; Wang, J.; Crandall, D.; Šabanović, S.; Fox, G. Real-time, cloud-based object detection for unmanned aerial vehicles. In Proceedings of the 2017 First IEEE International Conference on Robotic Computing (IRC), IEEE, Taichung, Taiwan, 10–12 April 2017; pp. 36–43. [Google Scholar]

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the Computer Vision—ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part V 13. Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755. [Google Scholar]

- Zhu, P.; Wen, L.; Du, D.; Bian, X.; Fan, H.; Hu, Q.; Ling, H. Detection and tracking meet drones challenge. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 7380–7399. [Google Scholar] [CrossRef]

- Guo, X. A novel Multi to Single Module for small object detection. arXiv 2023, arXiv:2303.14977. [Google Scholar]

- Zhang, R.; Shao, Z.; Huang, X.; Wang, J.; Wang, Y.; Li, D. Adaptive dense pyramid network for object detection in UAV imagery. Neurocomputing 2022, 489, 377–389. [Google Scholar] [CrossRef]

- Zhao, L.; Zhu, M. MS-YOLOv7: YOLOv7 Based on Multi-Scale for Object Detection on UAV Aerial Photography. Drones 2023, 7, 188. [Google Scholar] [CrossRef]

- Zhang, Z.; Xia, W.; Xie, G.; Xiang, S. Fast Opium Poppy Detection in Unmanned Aerial Vehicle (UAV) Imagery Based on Deep Neural Network. Drones 2023, 7, 559. [Google Scholar] [CrossRef]

- Du, B.; Huang, Y.; Chen, J.; Huang, D. Adaptive Sparse Convolutional Networks with Global Context Enhancement for Faster Object Detection on Drone Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 13435–13444. [Google Scholar]

- Yin, Y.; Cheng, X.; Shi, F.; Zhao, M.; Li, G.; Chen, S. An Enhanced Lightweight Convolutional Neural Network for Ship Detection in Maritime Surveillance System. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 5811–5825. [Google Scholar] [CrossRef]

- Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. Ghostnet: More features from cheap operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1580–1589. [Google Scholar]

- Ding, X.; Zhang, X.; Han, J.; Ding, G. Diverse branch block: Building a convolution as an inception-like unit. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 10886–10895. [Google Scholar]

- Lee, Y.; Hwang, J.w.; Lee, S.; Bae, Y.; Park, J. An energy and GPU-computation efficient backbone network for real-time object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019; pp. 752–760. [Google Scholar]

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Jocher, G. YOLOv5 by Ultralytics; Zenodo: Geneva, Switzerland, 2020. [Google Scholar] [CrossRef]

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. Yolox: Exceeding yolo series in 2021. arXiv 2021, arXiv:2107.08430. [Google Scholar]

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475. [Google Scholar]

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. arXiv 2024, arXiv:2405.14458. [Google Scholar]

- Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLO, 2023; Ultralytics Inc.: Seattle, WA, USA, 2023. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 1–9. [Google Scholar] [CrossRef] [PubMed]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969. [Google Scholar]

- Dong, Z.; Wang, M.; Wang, Y.; Zhu, Y.; Zhang, Z. Object detection in high resolution remote sensing imagery based on convolutional neural networks with suitable object scale features. IEEE Trans. Geosci. Remote Sens. 2019, 58, 2104–2114. [Google Scholar] [CrossRef]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the Computer Vision—ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. Centernet: Keypoint triplets for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6569–6578. [Google Scholar]

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520. [Google Scholar]

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. Shufflenet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856. [Google Scholar]

- Cui, C.; Gao, T.; Wei, S.; Du, Y.; Guo, R.; Dong, S.; Lu, B.; Zhou, Y.; Lv, X.; Liu, Q.; et al. PP-LCNet: A lightweight CPU convolutional neural network. arXiv 2021, arXiv:2109.15099. [Google Scholar]

- Li, Y.; Chen, Y.; Dai, X.; Chen, D.; Liu, M.; Yuan, L.; Liu, Z.; Zhang, L.; Vasconcelos, N. Micronet: Improving image recognition with extremely low flops. In Proceedings of the IEEE/CVF International Conference on Computer Vision, New Orleans, LA, USA, 18–24 June 2021; pp. 468–477. [Google Scholar]

- Ding, X.; Zhang, X.; Ma, N.; Han, J.; Ding, G.; Sun, J. Repvgg: Making vgg-style convnets great again. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13733–13742. [Google Scholar]

- Zhao, L.; Gao, J.; Li, X. NAS-kernel: Learning suitable Gaussian kernel for remote sensing object counting. IEEE Geosci. Remote Sens. Lett. 2023, 20, 6010105. [Google Scholar] [CrossRef]

- Peng, C.; Li, Y.; Shang, R.; Jiao, L. RSBNet: One-shot neural architecture search for a backbone network in remote sensing image recognition. Neurocomputing 2023, 537, 110–127. [Google Scholar] [CrossRef]

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125. [Google Scholar]

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768. [Google Scholar]

- Ghiasi, G.; Lin, T.Y.; Le, Q.V. Nas-fpn: Learning scalable feature pyramid architecture for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7036–7045. [Google Scholar]

- Tan, M.; Pang, R.; Le, Q.V. Efficientdet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790. [Google Scholar]

- Zhang, D.; Zhang, H.; Tang, J.; Wang, M.; Hua, X.; Sun, Q. Feature pyramid transformer. In Proceedings of the Computer Vision—ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XXVIII 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 323–339. [Google Scholar]

- Chen, C.F.R.; Fan, Q.; Panda, R. Crossvit: Cross-attention multi-scale vision transformer for image classification. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 357–366. [Google Scholar]

- Arani, E.; Gowda, S.; Mukherjee, R.; Magdy, O.; Kathiresan, S.; Zonooz, B. A comprehensive study of real-time object detection networks across multiple domains: A survey. arXiv 2022, arXiv:2208.10895. [Google Scholar]

- Li, H.; Li, J.; Wei, H.; Liu, Z.; Zhan, Z.; Ren, Q. Slim-neck by GSConv: A better design paradigm of detector architectures for autonomous vehicles. arXiv 2022, arXiv:2206.02424. [Google Scholar]

- Liu, S.; Huang, D. Receptive field block net for accurate and fast object detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 385–400. [Google Scholar]

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976. [Google Scholar]

- Zhang, Z. Drone-YOLO: An efficient neural network method for target detection in drone images. Drones 2023, 7, 526. [Google Scholar] [CrossRef]

- Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 based on transformer prediction head for object detection on drone-captured scenarios. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 2778–2788. [Google Scholar]

- Li, G.; Bai, Z.; Liu, Z.; Zhang, X.; Ling, H. Salient object detection in optical remote sensing images driven by transformer. IEEE Trans. Image Process. 2023, 32, 5257–5269. [Google Scholar] [CrossRef]

- Van Etten, A. You only look twice: Rapid multi-scale object detection in satellite imagery. arXiv 2018, arXiv:1805.09512. [Google Scholar]

- Akyon, F.C.; Altinuc, S.O.; Temizel, A. Slicing aided hyper inference and fine-tuning for small object detection. In Proceedings of the 2022 IEEE International Conference on Image Processing (ICIP), IEEE, Bordeaux, France, 16–19 October 2022; pp. 966–970. [Google Scholar]

- Wang, Y.; Yang, Y.; Zhao, X. Object detection using clustering algorithm adaptive searching regions in aerial images. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 651–664. [Google Scholar]

- Huang, Y.; Chen, J.; Huang, D. UFPMP-Det: Toward accurate and efficient object detection on drone imagery. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 22 February–1 March 2022; Volume 36, pp. 1026–1033. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 13–19 June 2020; pp. 390–391. [Google Scholar]

- Varga, L.A.; Kiefer, B.; Messmer, M.; Zell, A. Seadronessee: A maritime benchmark for detecting humans in open water. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 2260–2270. [Google Scholar]

- Du, D.; Qi, Y.; Yu, H.; Yang, Y.; Duan, K.; Li, G.; Zhang, W.; Huang, Q.; Tian, Q. The Unmanned Aerial Vehicle Benchmark: Object Detection and Tracking. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 375–391. [Google Scholar]

| Components | Params (M) | FLOPs (G) | AP (%) | mAP (%) | Latency (ms) | |||

|---|---|---|---|---|---|---|---|---|

| EFD | MKAB | FGM | FFPN | |||||

| - | - | - | - | 3.01 | 8.2 | 31.9 | 18.4 | 51.4 |

| ✓ | 3.01 | 8.5 | 32.4 | 18.7 | 55.7 | |||

| ✓ | 2.67 | 8.0 | 32.5 | 18.7 | 48.6 | |||

| ✓ | 2.30 | 7.4 | 31.8 | 18.4 | 48.3 | |||

| ✓ | 2.03 | 7.8 | 32.4 | 18.7 | 47.7 | |||

| ✓ | ✓ | 2.67 | 8.1 | 32.8 | 19.1 | 51.1 | ||

| ✓ | ✓ | 1.83 | 7.2 | 32.4 | 18.6 | 47.4 | ||

| ✓ | ✓ | ✓ | ✓ | 1.34 | 6.9 | 32.9 | 19.2 | 47.2 |

| Method | Params (M) | FLOPs (G) | AP (%) | mAP (%) | AP (%) | mAP (%) | Latency (ms) |

|---|---|---|---|---|---|---|---|

| C2f [28] | 3.01 | 8.2 | 31.9 | 18.4 | 26.2 | 14.4 | 51.4 |

| ELAN [26] | 2.71 | 8.6 | 23.7 | 13.3 | 18.1 | 9.8 | 50.6 |

| DBB [21] | 4.45 | 8.1 | 31.8 | 18.4 | 25.7 | 14.1 | 49.8 |

| MKAB | 2.67 | 8.0 | 32.5 | 18.7 | 26.7 | 14.7 | 48.6 |

| Method | Params (M) | FLOPs (G) | AP (%) | AP (%) | AP (%) | AP (%) | AP (%) | AP (%) |

|---|---|---|---|---|---|---|---|---|

| YOLOv8-n [28] | 3.01 | 8.2 | 9.6 | 28.6 | 38.2 | 5.7 | 22.6 | 35.3 |

| YOLOv8-n + EFD | 3.01 | 8.5 | 10.0 | 29 | 38.2 | 6.1 | 23.0 | 33.4 |

| YOLOv8-n + MKAB | 2.67 | 8.0 | 10.1 | 28.8 | 38.6 | 5.9 | 22.9 | 36.2 |

| EUAVDet-n | 1.34 | 6.9 | 10.5 | 29.8 | 37.7 | 6.4 | 23.3 | 37.3 |

| YOLOv8-s [28] | 11.13 | 28.7 | 13.0 | 33.1 | 41.5 | 7.8 | 26.8 | 40.6 |

| YOLOv8-s + EFD | 11.14 | 29.2 | 13.8 | 34.9 | 41.2 | 8.3 | 27.8 | 38.7 |

| YOLOv8-s + MKAB | 9.80 | 28.2 | 13.8 | 34.7 | 42.0 | 8.1 | 27.4 | 39.8 |

| EUAVDet-s | 4.96 | 25.6 | 14.0 | 35.3 | 42.1 | 8.4 | 28.5 | 39.5 |

| Method | Parames (M) | FLOPs (G) | AP(%) | FPS | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| AP | AP | AP | AP | AP | mAP | Nano | Orin | |||

| YOLOv5-n [24] | 1.77 | 4.2 | 26.2 | 42.0 | 55.9 | 70.2 | 36.0 | 38.4 | 24.5 | 92.0 |

| EUAVDet-n | 1.03 | 4.0 | 34.4 | 45.0 | 55.8 | 74.6 | 39.7 | 40.9 | 24.8 | 94.5 |

| YOLOv7-tiny [26] | 6.03 | 13.1 | 33.9 | 42.8 | 58.0 | 72.9 | 38.9 | 40.2 | 15.4 | 63.2 |

| EUAVDet-tiny | 1.60 | 7.2 | 35.2 | 45.9 | 55.9 | 76.7 | 39.3 | 41.3 | 19.3 | 73.2 |

| YOLOv8-n [28] | 3.01 | 8.1 | 20.7 | 37.6 | 58.7 | 59.4 | 33.6 | 33.9 | 19.7 | 73.5 |

| EUAVDet-n | 1.34 | 6.9 | 22.7 | 38.6 | 61.2 | 61.6 | 35.7 | 35.7 | 19.9 | 74.1 |

| YOLOv10-n [27] | 2.28 | 6.7 | 18.7 | 37.1 | 57.9 | 58.9 | 33.7 | 33.6 | 20.8 | 80.5 |

| EUAVDet-n | 1.21 | 6.2 | 18.3 | 39.8 | 57.2 | 60.8 | 34.3 | 35.0 | 21.7 | 83.1 |

| Method | Parames (M) | FLOPs (G) | AP(%) | FPS | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| AP | AP | AP | AP | AP | mAP | Nano | Orin | |||

| YOLOv5-n [24] | 1.77 | 4.2 | 9.0 | 23.7 | 32.0 | 27.5 | 12.4 | 14.0 | 26.5 | 97.5 |

| EUAVDet-n | 1.03 | 4.0 | 9.4 | 24.2 | 28.4 | 28.8 | 10.4 | 14.2 | 26.8 | 98.6 |

| YOLOv7-tiny [26] | 6.03 | 13.1 | 9.3 | 26.2 | 30.3 | 32.9 | 12.1 | 15.6 | 16.3 | 67.2 |

| EUAVDet-tiny | 1.60 | 7.2 | 10.2 | 28.0 | 34.5 | 33.6 | 14.9 | 16.8 | 20.5 | 77.5 |

| YOLOv8-n [28] | 3.01 | 8.1 | 9.8 | 24.8 | 30.3 | 26.4 | 16.2 | 15.2 | 20.8 | 79.5 |

| EUAVDet-n | 1.34 | 6.9 | 10.9 | 28.0 | 27.4 | 29.4 | 18. | 17.0 | 21.0 | 82.2 |

| YOLOv10-n [27] | 2.28 | 6.7 | 11.2 | 27.5 | 27.9 | 28.1 | 17.4 | 16.3 | 23.1 | 84.6 |

| EUAVDet-n | 1.21 | 6.2 | 11.1 | 27.9 | 23.6 | 28.5 | 17. | 16.6 | 24.3 | 86.8 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wu, W.; Liu, A.; Hu, J.; Mo, Y.; Xiang, S.; Duan, P.; Liang, Q. EUAVDet: An Efficient and Lightweight Object Detector for UAV Aerial Images with an Edge-Based Computing Platform. Drones 2024, 8, 261. https://doi.org/10.3390/drones8060261

Wu W, Liu A, Hu J, Mo Y, Xiang S, Duan P, Liang Q. EUAVDet: An Efficient and Lightweight Object Detector for UAV Aerial Images with an Edge-Based Computing Platform. Drones. 2024; 8(6):261. https://doi.org/10.3390/drones8060261

Chicago/Turabian StyleWu, Wanneng, Ao Liu, Jianwen Hu, Yan Mo, Shao Xiang, Puhong Duan, and Qiaokang Liang. 2024. "EUAVDet: An Efficient and Lightweight Object Detector for UAV Aerial Images with an Edge-Based Computing Platform" Drones 8, no. 6: 261. https://doi.org/10.3390/drones8060261

APA StyleWu, W., Liu, A., Hu, J., Mo, Y., Xiang, S., Duan, P., & Liang, Q. (2024). EUAVDet: An Efficient and Lightweight Object Detector for UAV Aerial Images with an Edge-Based Computing Platform. Drones, 8(6), 261. https://doi.org/10.3390/drones8060261