A Motion-Aware Siamese Framework for Unmanned Aerial Vehicle Tracking

Abstract

1. Introduction

2. Related Works

2.1. UAV Visual Tracking Algorithms

2.2. The Siamese Trackers

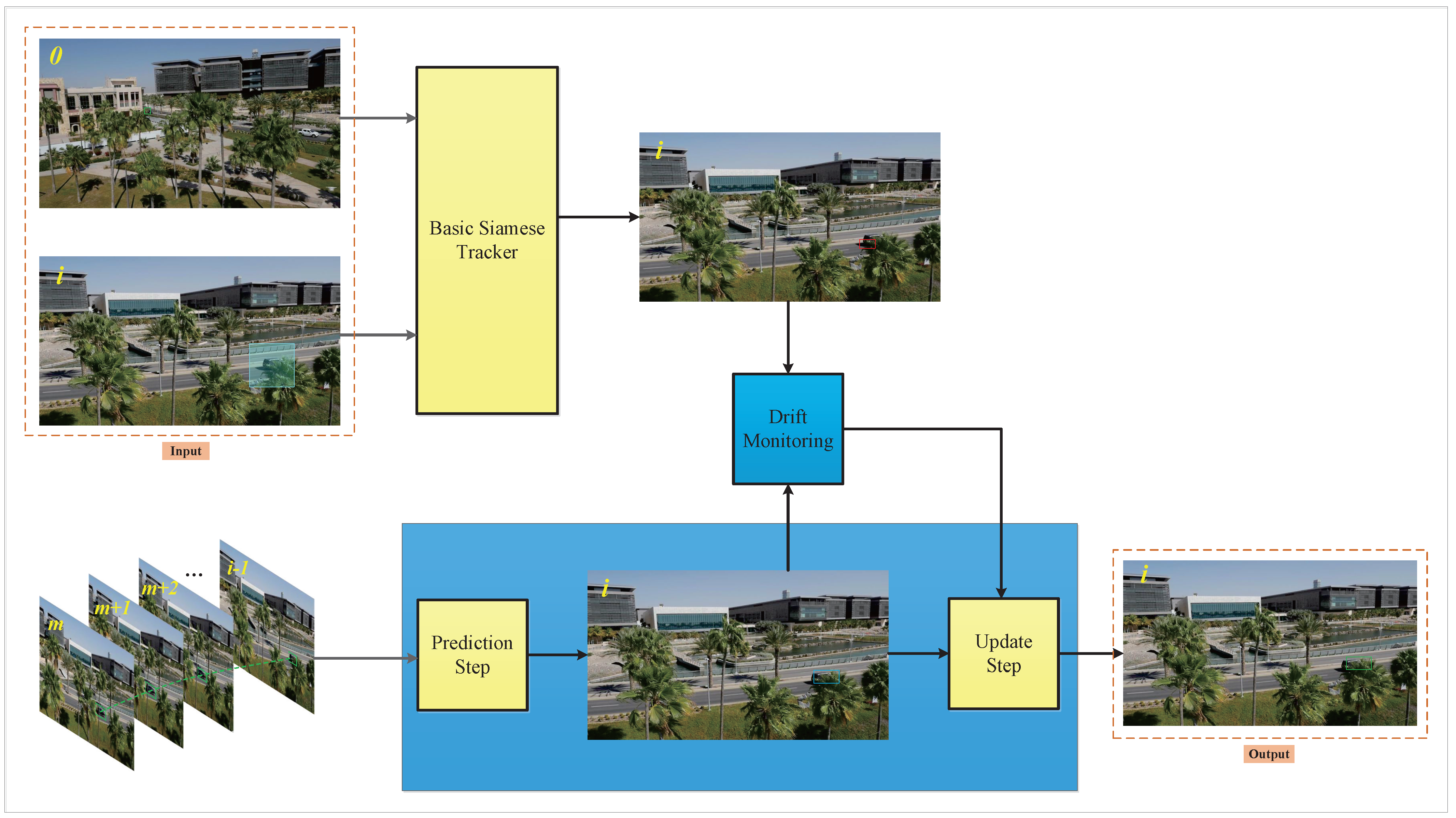

3. Motion-Aware Siamese Framework

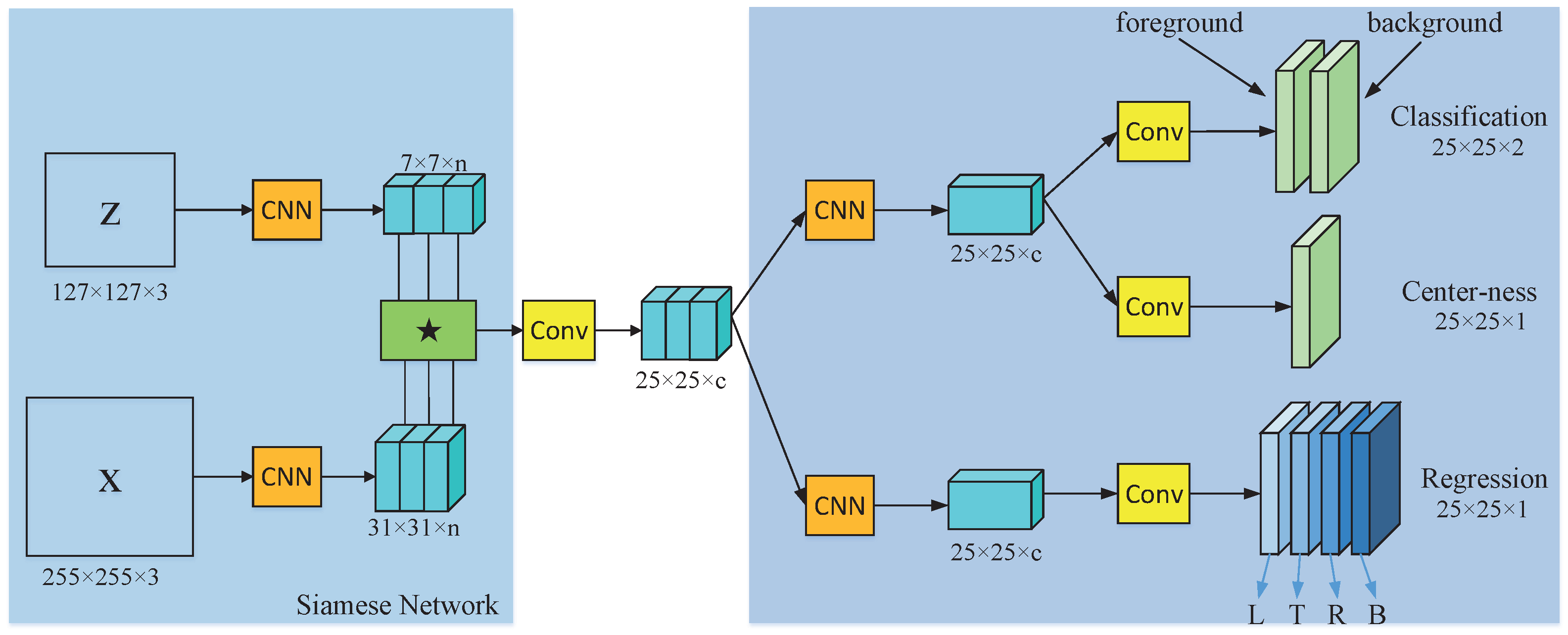

3.1. Basic Siamese Tracker

3.2. Motion Information Prediction by Using the Kalman Filter

3.3. Drift Monitoring and Tracking Recovery

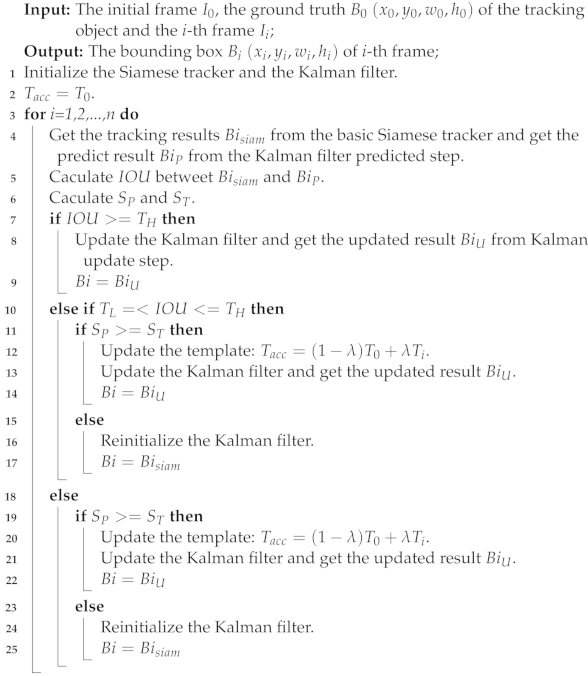

Algorithm 1: The proposed motion-aware Siamese (MaSiam) framework algorithm

4. Experiments

4.1. Experimental Platform and Parameters

4.2. Quantitative Experiment

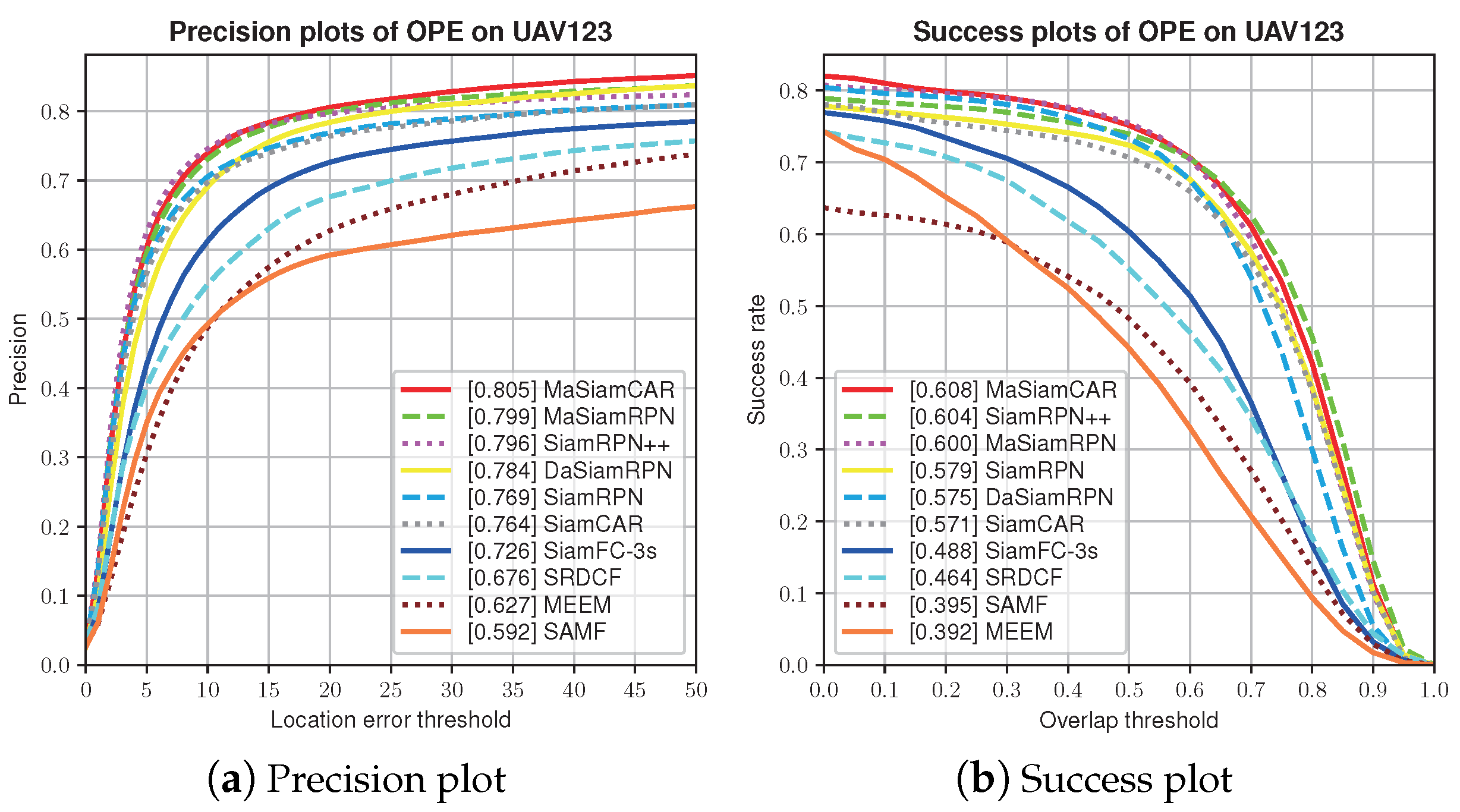

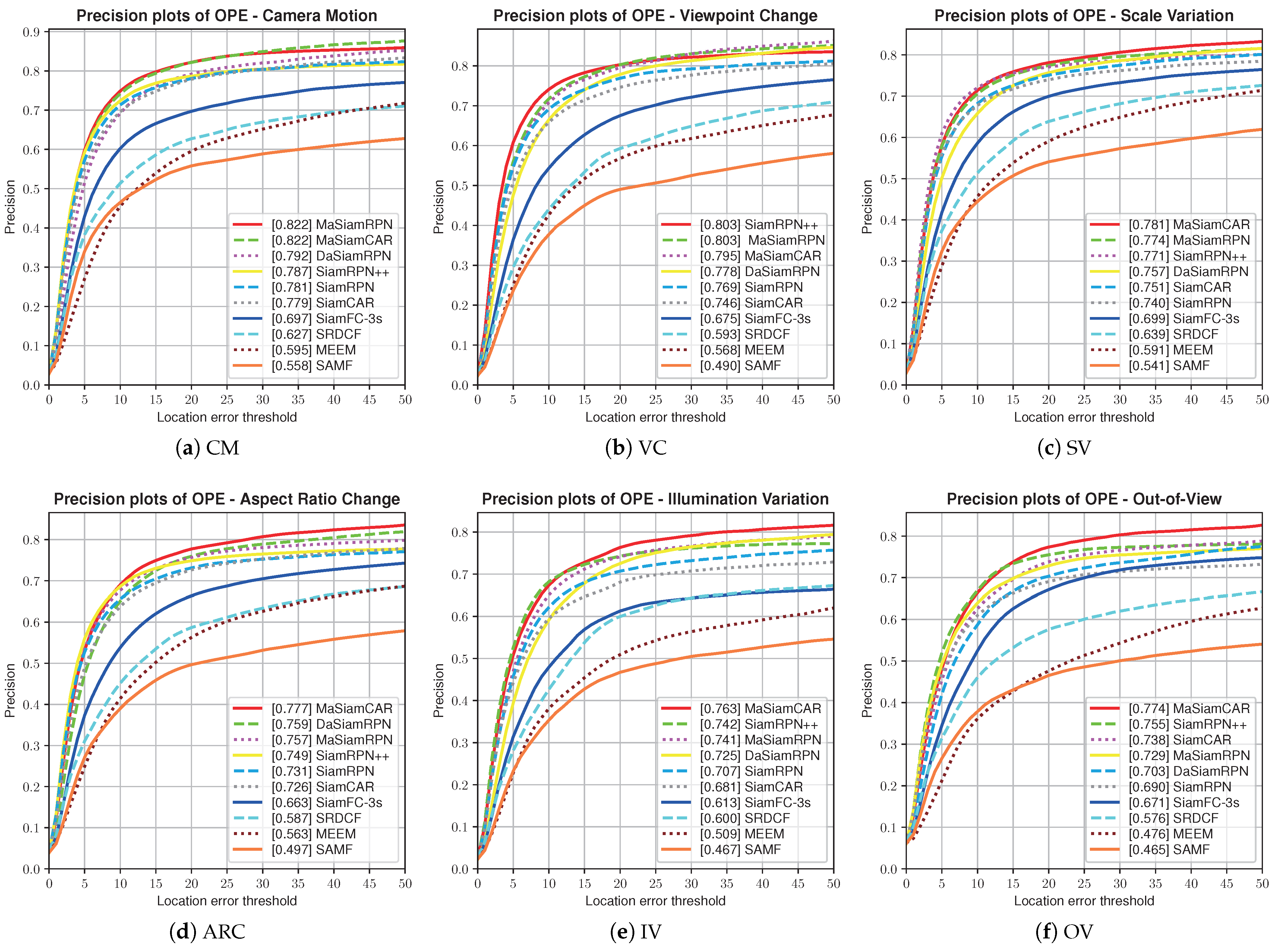

4.2.1. Experimental Analysis Using the UAV123 Dataset

- (1)

- Overall evaluation

- (2)

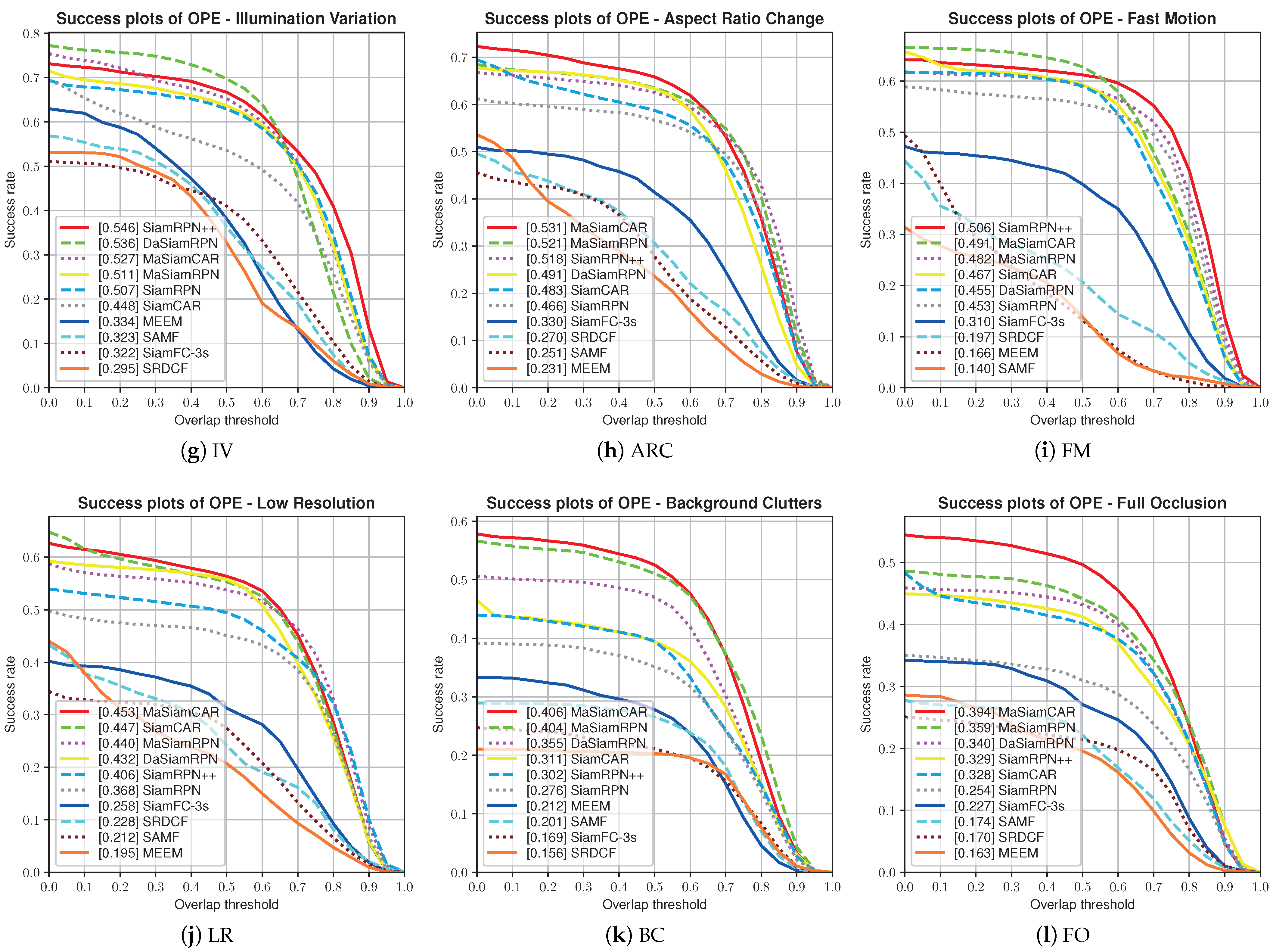

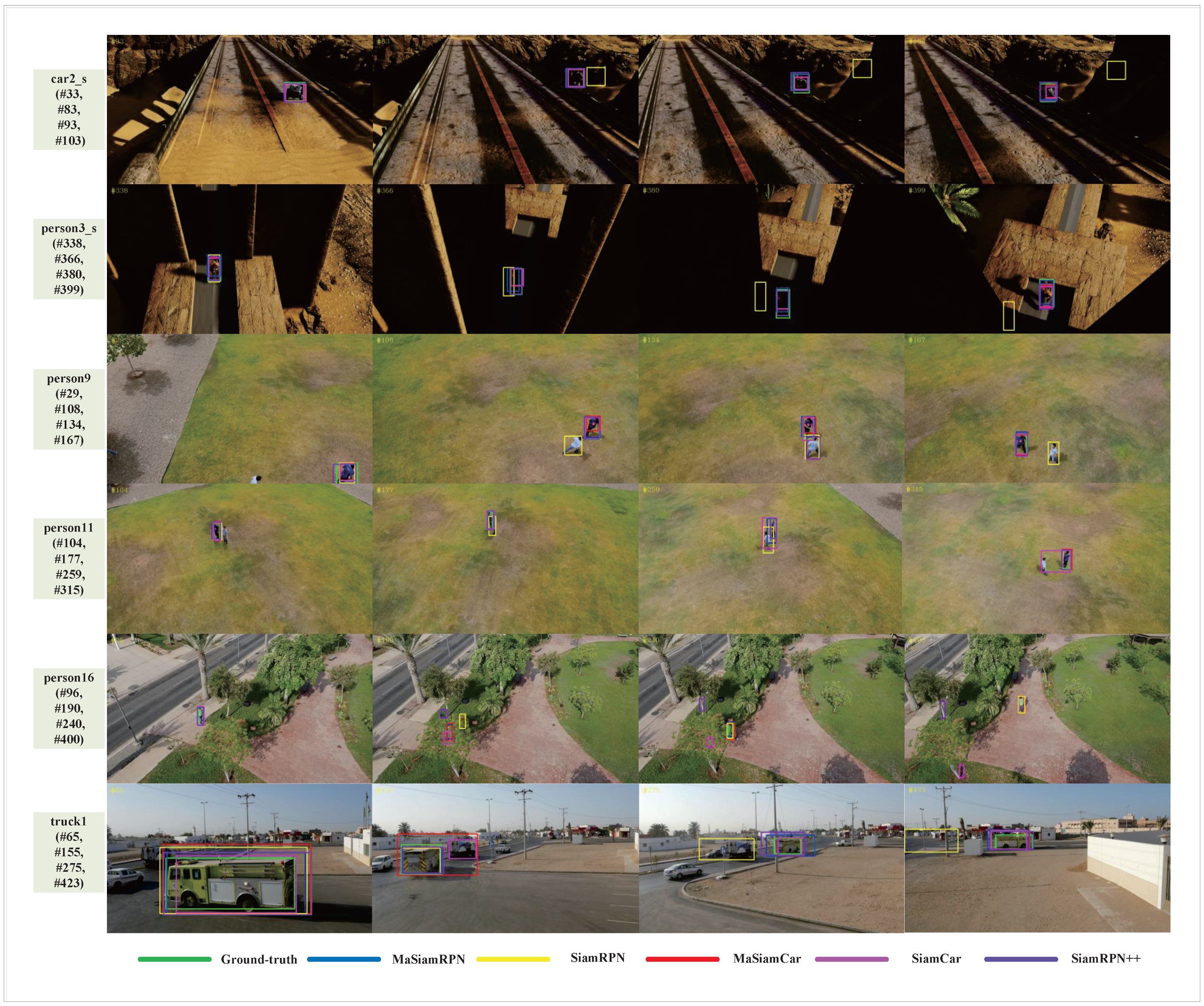

- Attribute evaluation

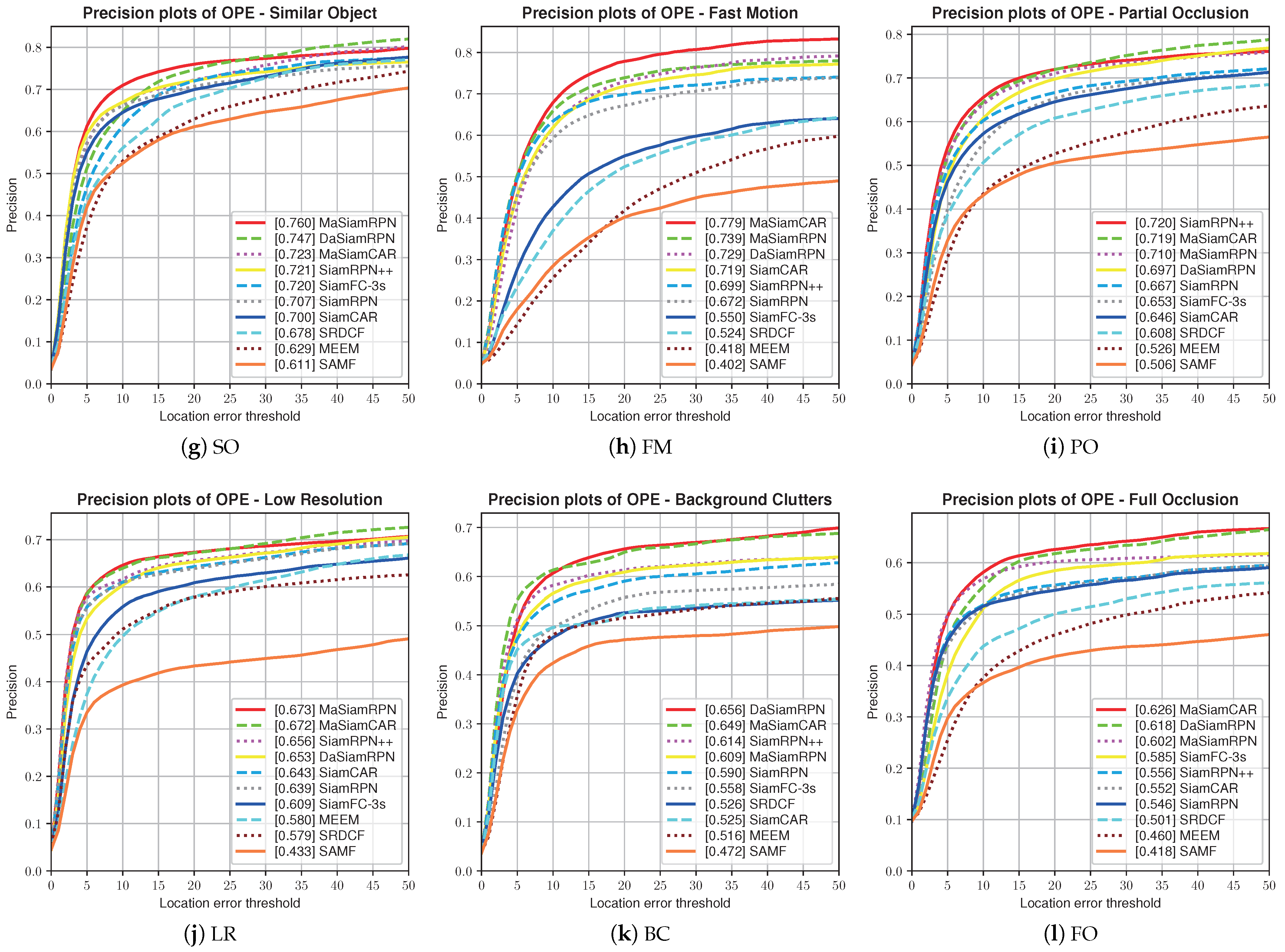

4.2.2. Experimental Analysis Using the UAV20L Dataset

- (1) Overall evaluation

- (2) Attribute evaluation

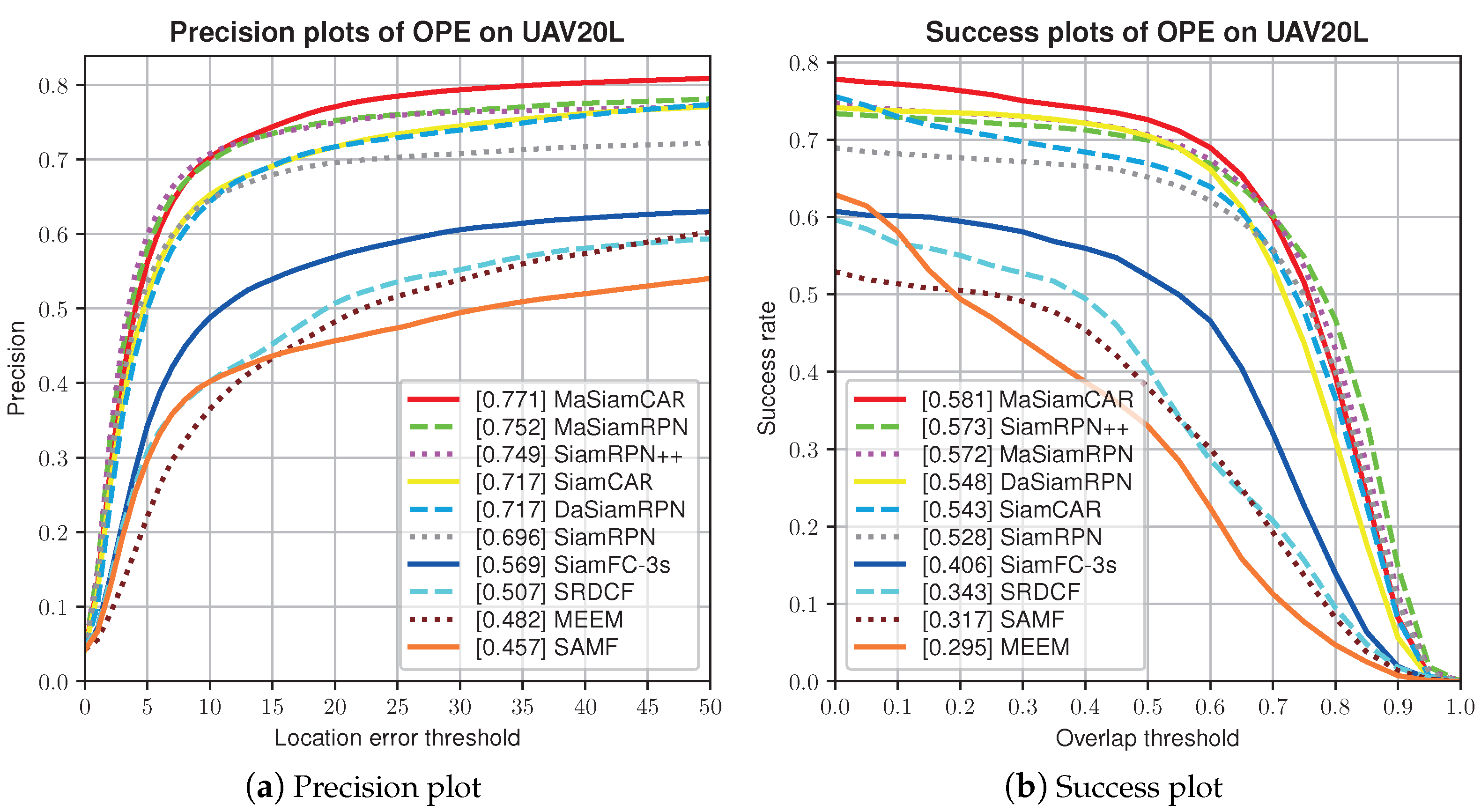

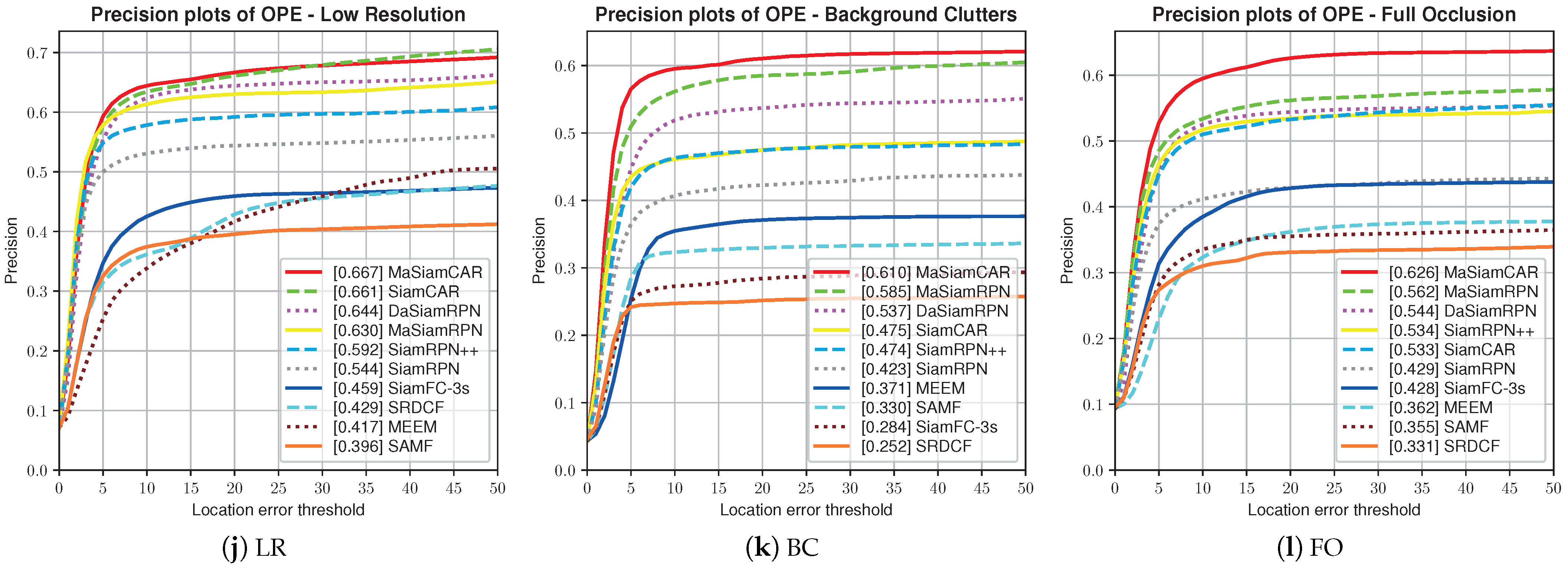

4.2.3. Experimental Analysis Using the UAVDT Dataset

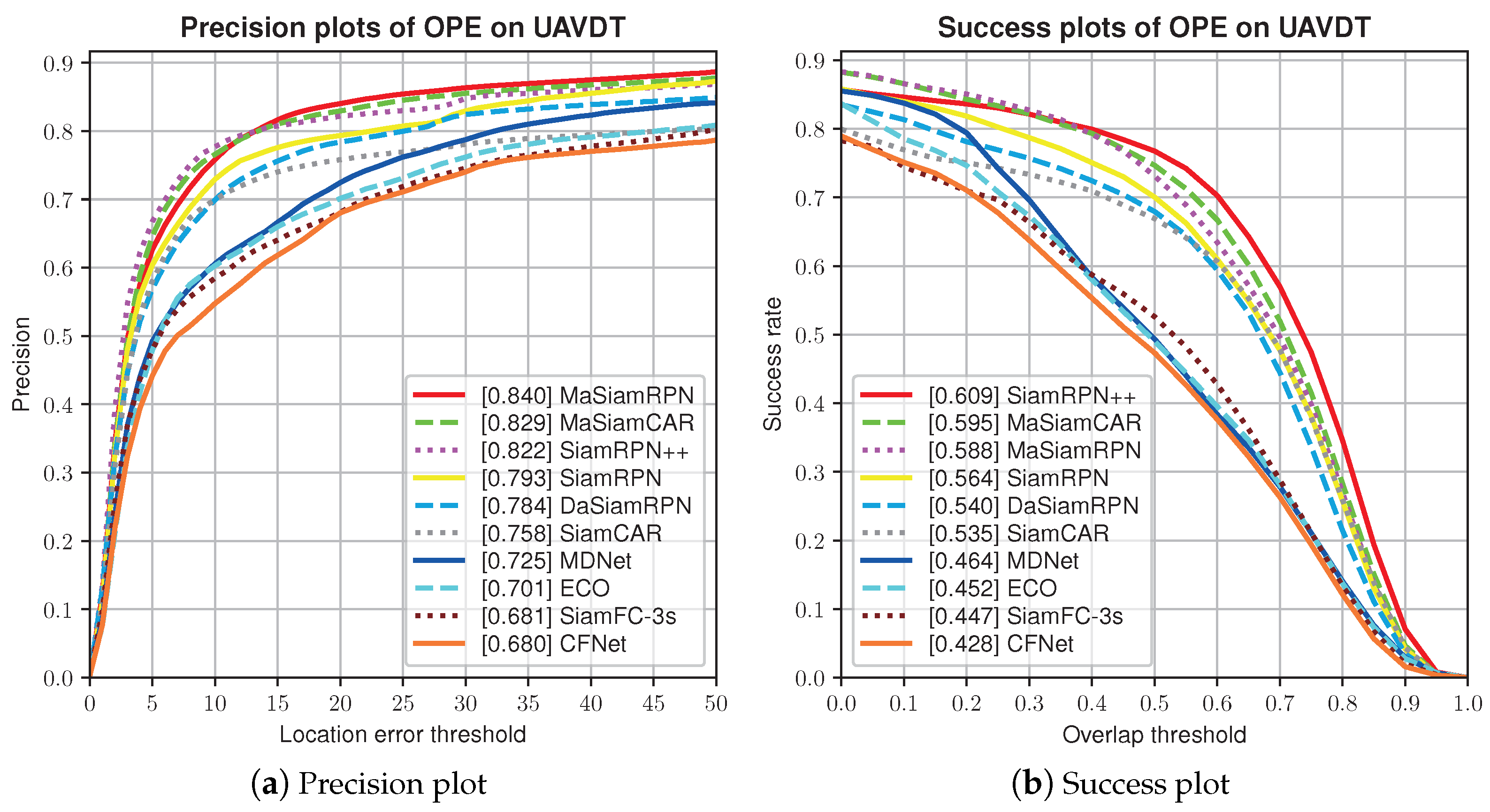

- (1) Overall evaluation

- (2) Attribute evaluation

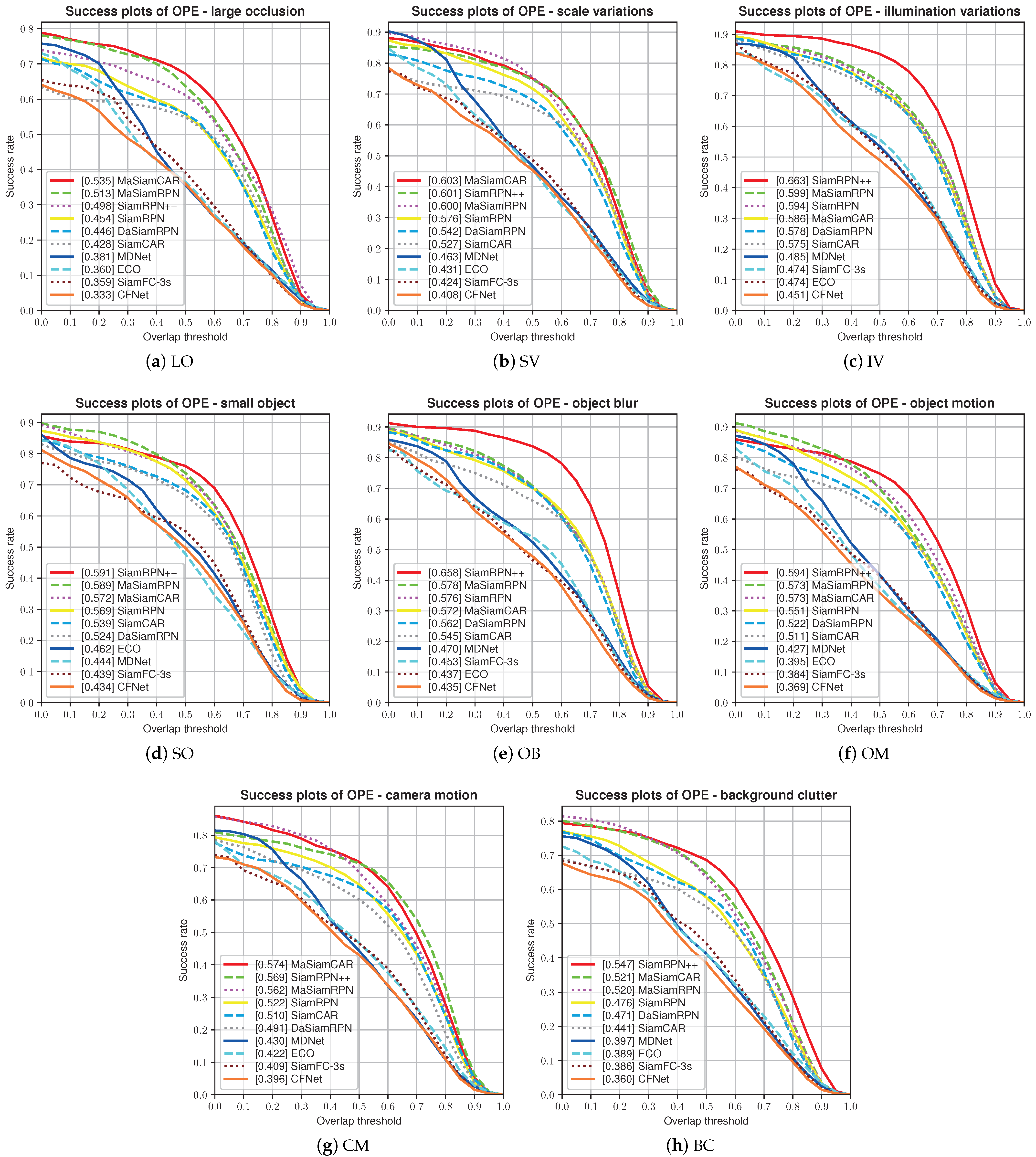

4.3. Qualitative Experimental Analysis

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Condition 1 | Condition 2 | Strategy |
|---|---|---|
|  |  | Update the Kalman filter |
|  |  | Siamese tracker drift, update the template and retrack |
|  |  | Kalman predict drift, re-initialize the Kalman filter |
|  |  | Siamese tracker drift, update the template |
|  |  | Kalman predict drift, re-initialize the Kalman filter |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sun, L.; Zhang, J.; Yang, Z.; Fan, B. A Motion-Aware Siamese Framework for Unmanned Aerial Vehicle Tracking. Drones 2023, 7, 153. https://doi.org/10.3390/drones7030153
