Development of a Novel Lightweight CNN Model for Classification of Human Actions in UAV-Captured Videos

Abstract

1. Introduction

- Efficient detection of human actions in UAV frames with the proposed lightweight CNN model, which is built on depthwise separable convolutions.

- An approach suitable for implementation on multiple embedded systems deployed in specific, sensitive areas.

- A new method for real-time human action monitoring that helps make cities smarter at low cost.

- Recognition of human actions for military purposes or for smart-city search and rescue operations, with results that the relevant central authorities can easily monitor on their smartphones.

- A method that improves the performance of UAV-based human action recognition (HAR) for search and rescue, giving more effective and precise results than existing methods in the literature.
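The saving that makes depthwise separable convolutions attractive for a lightweight model can be made concrete by counting weights. The sketch below is illustrative only and is not taken from the HarNet implementation: the helper names and the example layer (3 × 3 kernel, 32 input and 64 output channels) are hypothetical, and bias terms are ignored.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a standard k x k convolution from c_in to c_out channels (no bias)."""
    return k * k * c_in * c_out


def separable_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a depthwise separable convolution (no bias).

    Depthwise step: one k x k filter per input channel.
    Pointwise step: a 1 x 1 convolution that mixes c_in channels into c_out.
    """
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


if __name__ == "__main__":
    # Hypothetical example layer: 3 x 3 kernel, 32 input and 64 output channels.
    k, c_in, c_out = 3, 32, 64
    std = standard_conv_params(k, c_in, c_out)
    sep = separable_conv_params(k, c_in, c_out)
    print(f"standard:  {std} weights")   # 18432
    print(f"separable: {sep} weights")   # 2336
    # The ratio equals 1/c_out + 1/k**2, about 0.127 for this layer.
    print(f"ratio:     {sep / std:.3f}")
```

For this layer the separable version needs roughly one eighth of the weights of a standard convolution; stacking such layers is what keeps the parameter count of a lightweight CNN low.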

2. Review of Related Works

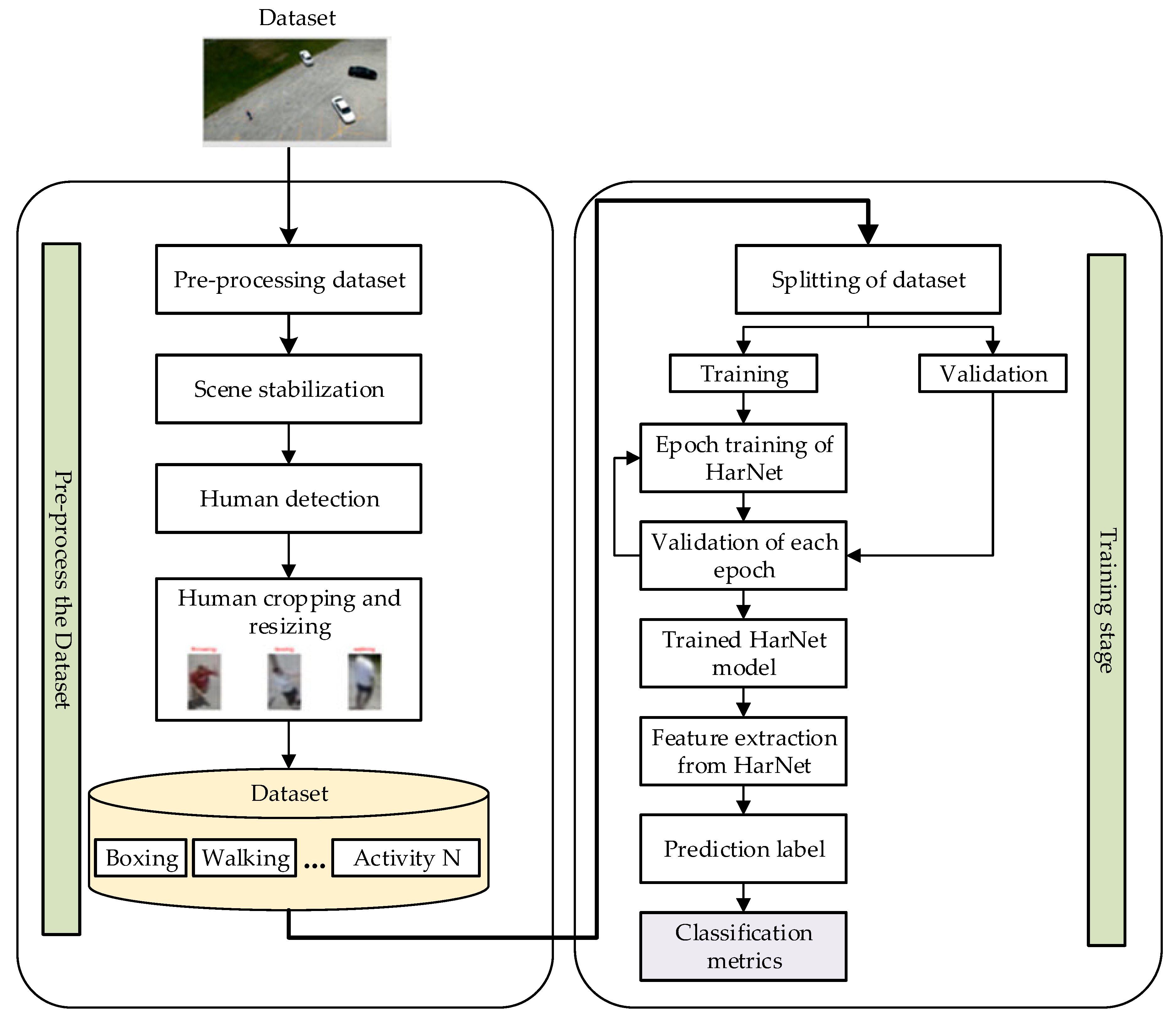

3. Methodology

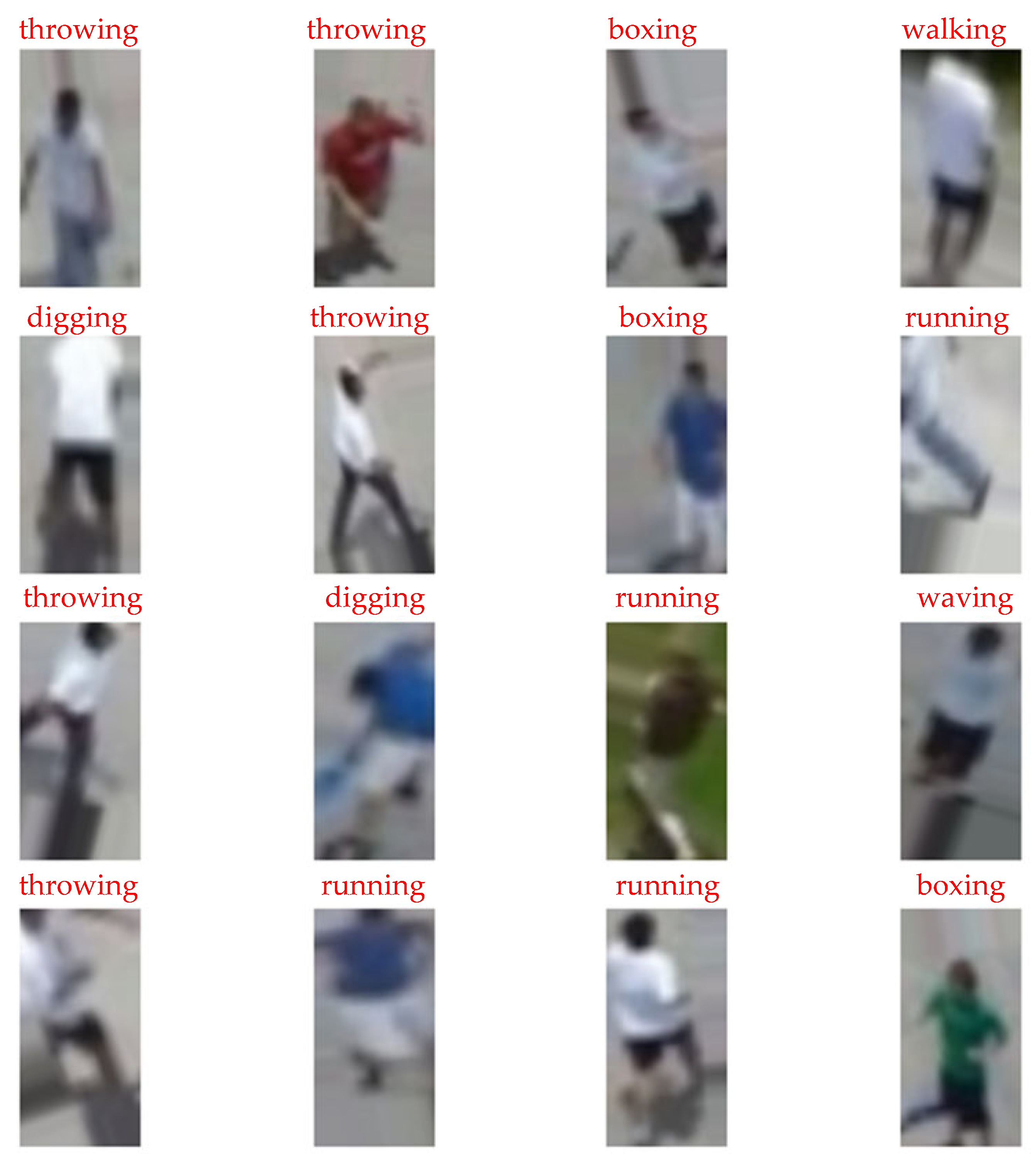

3.1. Proposed Dataset

3.2. Pre-Processing of the Dataset

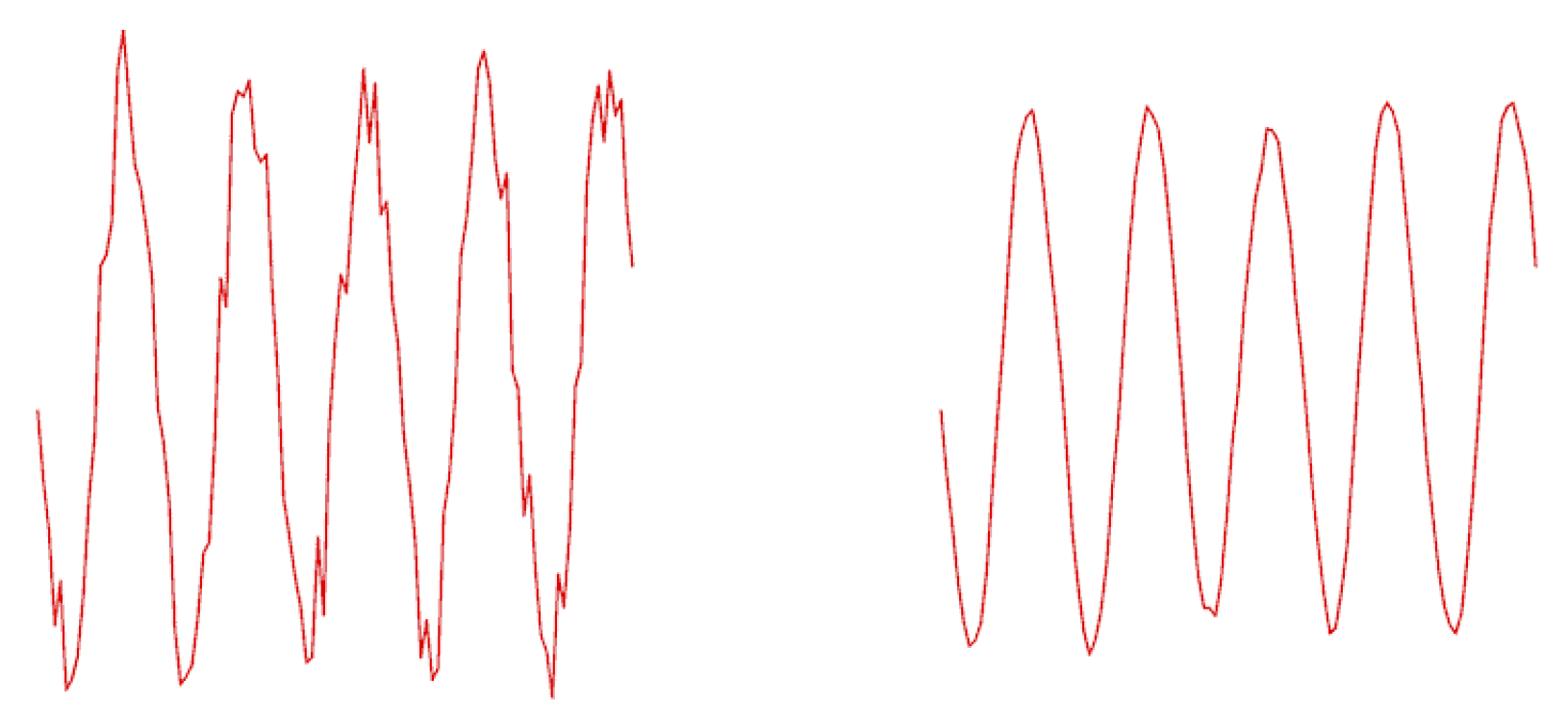

3.2.1. Video Stabilization

3.2.2. Human Detection and Cropping

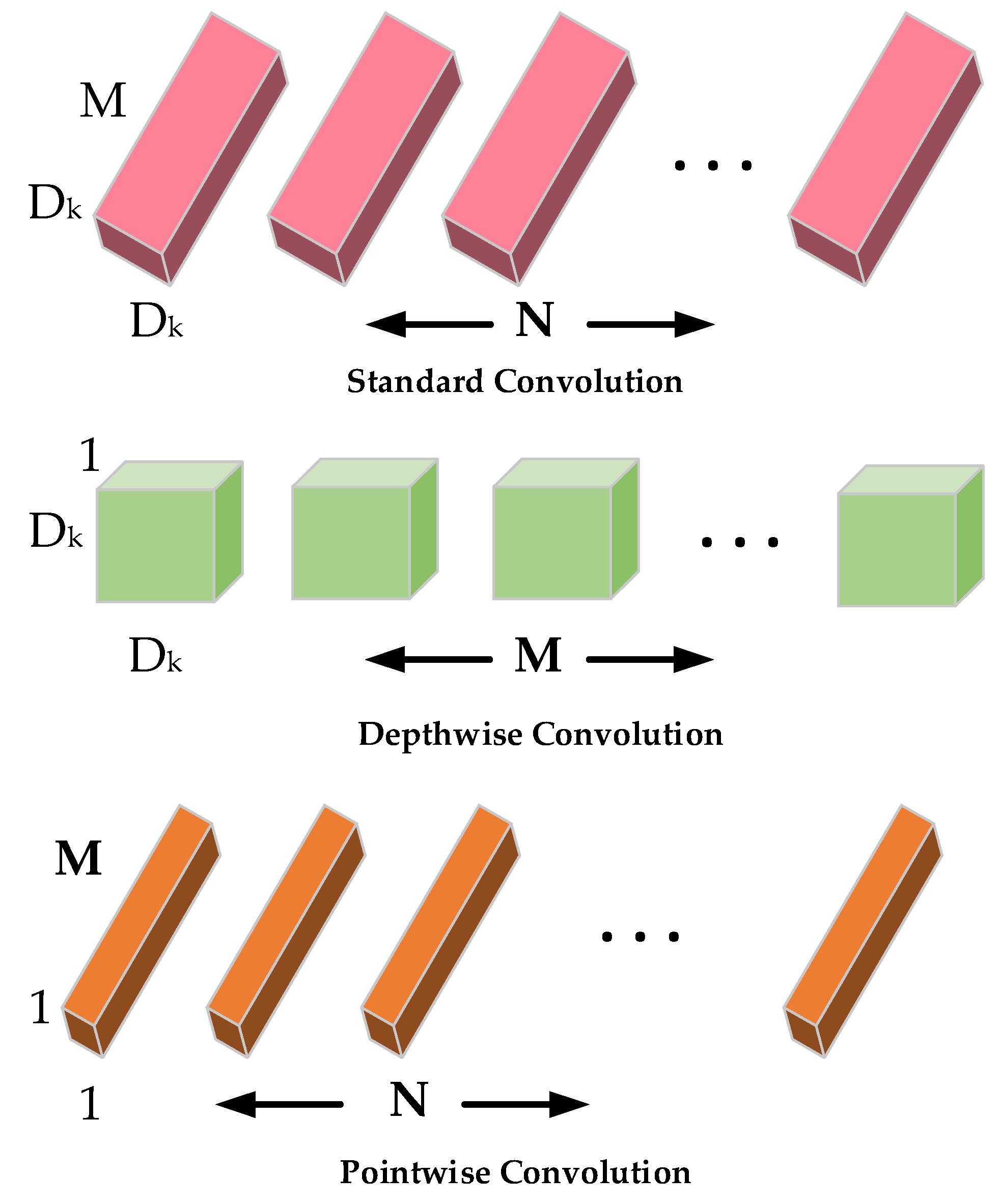

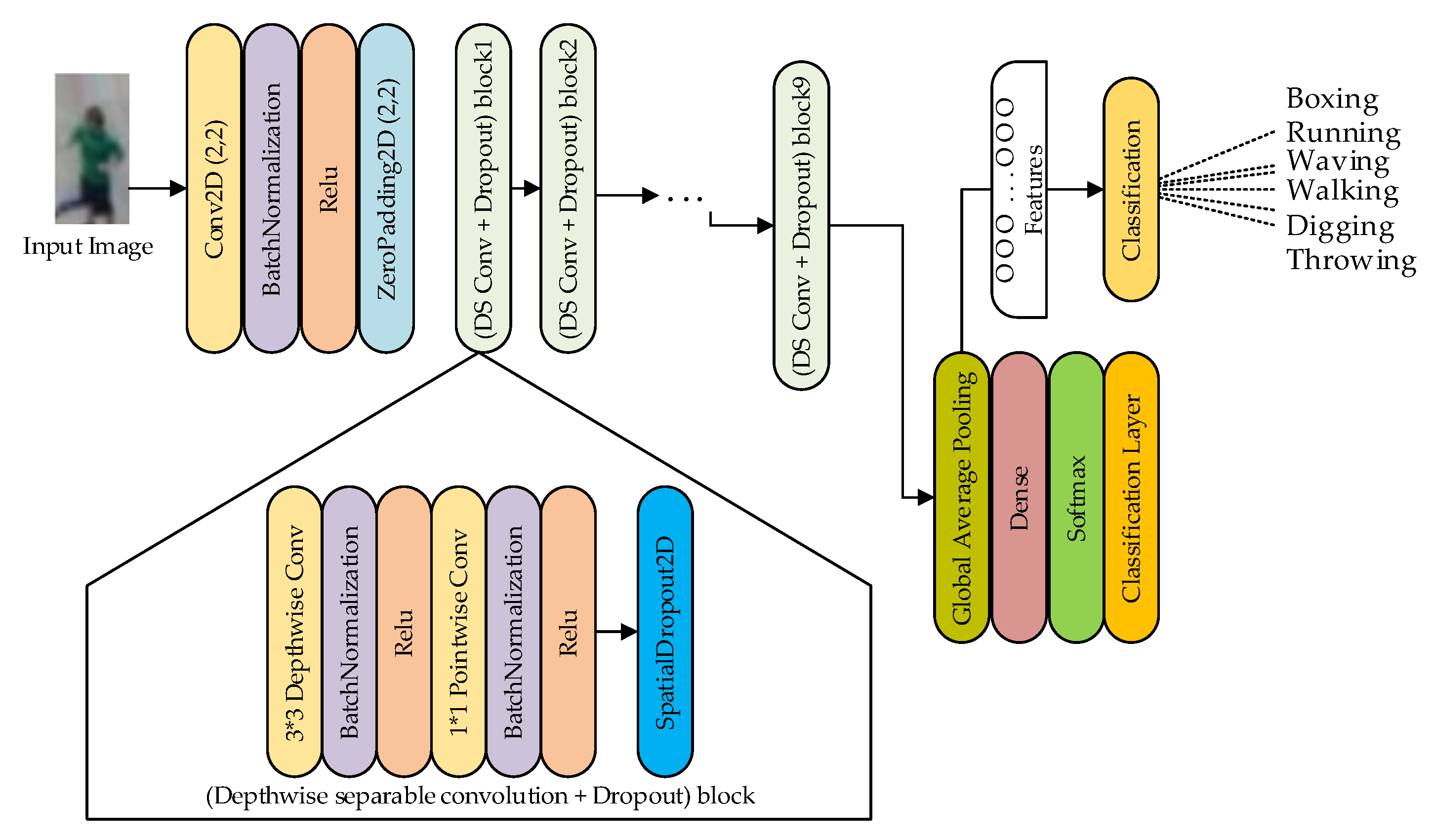

3.3. Proposed HarNet Model for UAV-Based HAR

4. Results

4.1. Performance Metrics

- Accuracy measures how often the model predicts the correct class label for an input. It is calculated by dividing the number of correct predictions by the total number of predictions, as shown in Equation (9).

- Recall measures the model's ability to find all instances of a particular class. It is calculated by dividing the number of correct predictions for a class (true positives) by the total number of actual instances of that class (true positives plus false negatives), as shown in Equation (10).

- Precision measures how reliable the model's predictions of a particular class are. It is calculated by dividing the number of correct predictions for a class (true positives) by the total number of predictions of that class (true positives plus false positives), as shown in Equation (11).

- F1-score combines precision and recall into a single value by taking their harmonic mean; a higher score indicates better performance. The calculation is shown in Equation (12).
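The definitions above can be sketched in plain Python by counting, for each class, its true positives, how often it was predicted, and how often it actually occurs (a one-vs-rest view). This is an illustrative sketch, not the authors' evaluation code; the function name and the five example labels are hypothetical. scikit-learn's `classification_report` computes the same per-class quantities.

```python
from collections import Counter


def classification_metrics(y_true, y_pred):
    """Overall accuracy plus per-class (precision, recall, F1), one-vs-rest."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and equally long")

    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)

    tp = Counter(t for t, p in pairs if t == p)  # correct predictions per class
    predicted = Counter(y_pred)                  # TP + FP per class
    actual = Counter(y_true)                     # TP + FN per class ("support")

    per_class = {}
    for cls in sorted(set(y_true) | set(y_pred)):
        precision = tp[cls] / predicted[cls] if predicted[cls] else 0.0
        recall = tp[cls] / actual[cls] if actual[cls] else 0.0
        # F1 is the harmonic mean of precision and recall
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        per_class[cls] = (precision, recall, f1)
    return accuracy, per_class


if __name__ == "__main__":
    # Hypothetical labels for five test frames
    y_true = ["Boxing", "Boxing", "Running", "Running", "Walking"]
    y_pred = ["Boxing", "Running", "Running", "Walking", "Walking"]
    acc, report = classification_metrics(y_true, y_pred)
    print(f"accuracy = {acc:.2f}")  # 0.60
    for cls, (p, r, f) in report.items():
        print(f"{cls:8s} P={p:.2f} R={r:.2f} F1={f:.2f}")
```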

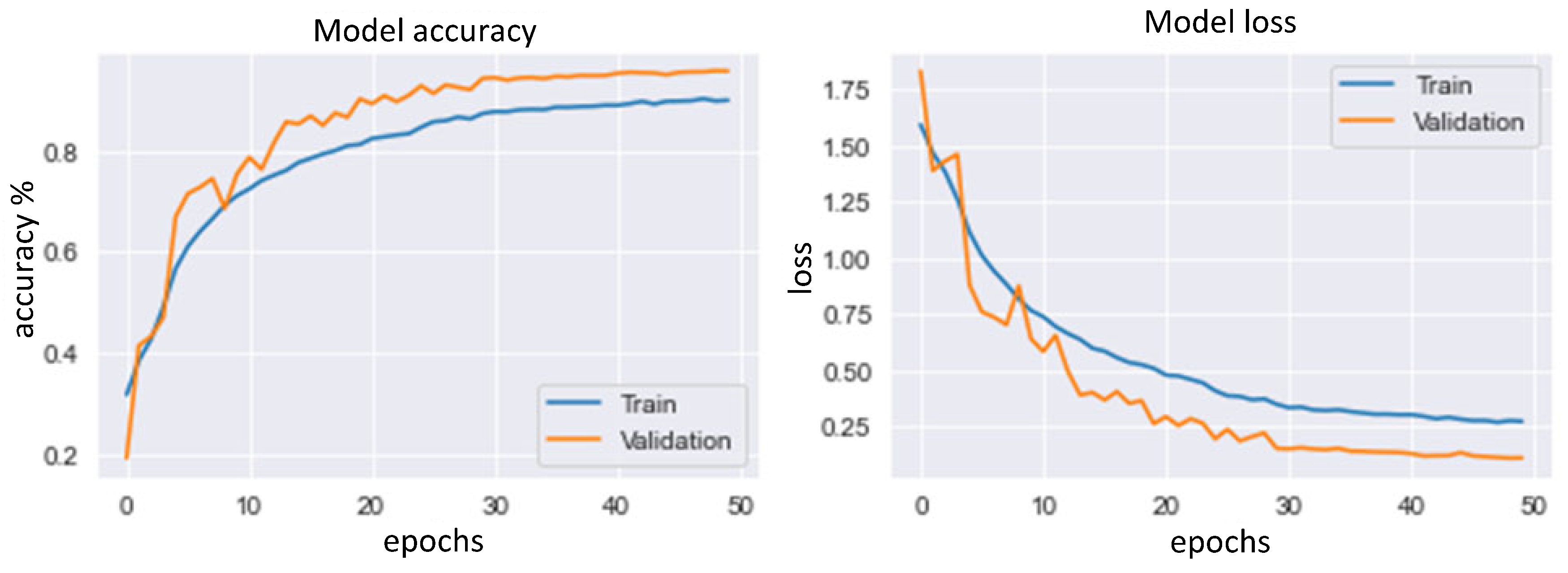

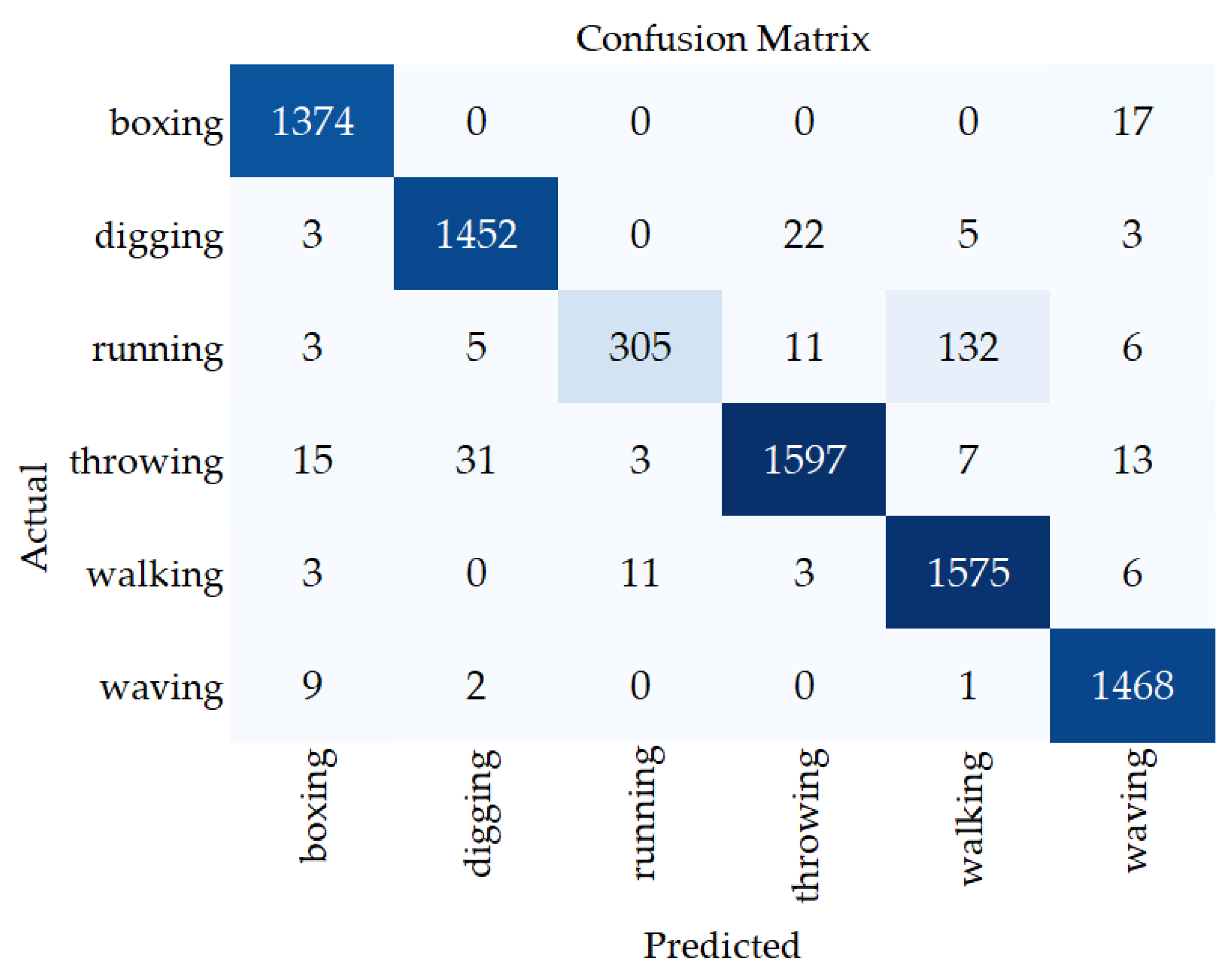

4.2. Experimental Results for the Proposed HarNet Model

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Abro, G.E.M.; Zulkifli, S.A.B.M.; Masood, R.J.; Asirvadam, V.S.; Laouti, A. Comprehensive Review of UAV Detection, Security, and Communication Advancements to Prevent Threats. Drones 2022, 6, 284.

- Yaacoub, J.-P.; Noura, H.; Salman, O.; Chehab, A. Security Analysis of Drones Systems: Attacks, Limitations, and Recommendations. Internet Things 2020, 11, 100218.

- Mohamed, N.; Al-Jaroodi, J.; Jawhar, I.; Idries, A.; Mohammed, F. Unmanned Aerial Vehicles Applications in Future Smart Cities. Technol. Forecast. Soc. Chang. 2020, 153, 119293.

- Mohsan, S.A.H.; Khan, M.A.; Noor, F.; Ullah, I.; Alsharif, M.H. Towards the Unmanned Aerial Vehicles (UAVs): A Comprehensive Review. Drones 2022, 6, 147.

- Zhang, N.; Wang, Y.; Yu, P. A Review of Human Action Recognition in Video. In Proceedings of the 2018 IEEE/ACIS 17th International Conference on Computer and Information Science (ICIS), Singapore, 6–8 June 2018; pp. 57–62.

- Mottaghi, A.; Soryani, M.; Seifi, H. Action Recognition in Freestyle Wrestling Using Silhouette-Skeleton Features. Eng. Sci. Technol. Int. J. 2020, 23, 921–930.

- Agahian, S.; Negin, F.; Köse, C. An Efficient Human Action Recognition Framework with Pose-Based Spatiotemporal Features. Eng. Sci. Technol. Int. J. 2019, 23, 196–203.

- Arshad, M.H.; Bilal, M.; Gani, A. Human Activity Recognition: Review, Taxonomy and Open Challenges. Sensors 2022, 22, 6463.

- Aydin, I. Fuzzy Integral and Cuckoo Search Based Classifier Fusion for Human Action Recognition. Adv. Electr. Comput. Eng. 2018, 18, 3–10.

- Othman, N.A.; Aydin, I. Challenges and Limitations in Human Action Recognition on Unmanned Aerial Vehicles: A Comprehensive Survey. Trait. Signal 2021, 38, 1403–1411.

- Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of Deep Learning: Concepts, CNN Architectures, Challenges, Applications, Future Directions; Springer International Publishing: New York, NY, USA, 2021; Volume 8, ISBN 4053702100444.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90.

- Zaremba, W.; Sutskever, I.; Vinyals, O. Recurrent Neural Network Regularization. arXiv 2014, arXiv:1409.2329.

- Hinton, G.E. A Practical Guide to Training Restricted Boltzmann Machines. In Neural Networks: Tricks of the Trade. Lecture Notes in Computer Science; Montavon, G., Orr, G.B., Müller, K.R., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; Volume 7700, pp. 599–619.

- Mliki, H.; Bouhlel, F.; Hammami, M. Human Activity Recognition from UAV-Captured Video Sequences. Pattern Recognit. 2020, 100, 107140.

- CRCV | Center for Research in Computer Vision at the University of Central Florida. Available online: https://www.crcv.ucf.edu/data/UCF-ARG.php (accessed on 2 July 2021).

- Sultani, W.; Shah, M. Human Action Recognition in Drone Videos Using a Few Aerial Training Examples. Comput. Vis. Image Underst. 2021, 206, 103186.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks. Commun. ACM 2020, 63, 139–144.

- Perera, A.G.; Law, Y.W.; Chahl, J. Drone-Action: An Outdoor Recorded Drone Video Dataset for Action Recognition. Drones 2019, 3, 82.

- Cheron, G.; Laptev, I.; Schmid, C. P-CNN: Pose-Based CNN Features for Action Recognition. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 3218–3226.

- Jhuang, H.; Gall, J.; Zuffi, S.; Schmid, C.; Black, M.J. Towards Understanding Action Recognition. In Proceedings of the 2013 IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013; pp. 3192–3199.

- Kotecha, K.; Garg, D.; Mishra, B.; Narang, P.; Mishra, V.K. Background Invariant Faster Motion Modeling for Drone Action Recognition. Drones 2021, 5, 87.

- Liu, C.; Szirányi, T. Real-Time Human Detection and Gesture Recognition for on-Board UAV Rescue. Sensors 2021, 21, 2180.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Song, W.; Shumin, F. SSD (Single Shot MultiBox Detector). Ind. Control. Comput. 2019, 32, 103–105.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot Multibox Detector. In Computer Vision—ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2016; Volume 9905, pp. 21–37.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Computer Vision—ECCV 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2014; Volume 8693, pp. 740–755.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Haar, L.V.; Elvira, T.; Ochoa, O. An Analysis of Explainability Methods for Convolutional Neural Networks. Eng. Appl. Artif. Intell. 2023, 117, 105606.

- Alaslani, M.G.; Elrefaei, L.A. Convolutional Neural Network Based Feature Extraction for IRIS Recognition. Int. J. Comput. Sci. Inf. Technol. 2018, 10, 65–78.

- Chen, L.; Li, S.; Bai, Q.; Yang, J.; Jiang, S.; Miao, Y. Review of Image Classification Algorithms Based on Convolutional Neural Networks. Remote Sens. 2021, 13, 4712.

- Geist, M. Soft-Max Boosting. Mach. Learn. 2015, 100, 305–332.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-Based Learning Applied to Document Recognition. Proc. IEEE 1998, 86, 2278–2323.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning. In Proceedings of the 31st AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 4278–4284.

- Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. ShuffleNet V2: Practical Guidelines for Efficient CNN Architecture Design. In Computer Vision—ECCV 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2018; Volume 11218, pp. 122–138.

- Wu, J.; Gao, Z.; Hu, C. An Empirical Study on Several Classification Algorithms and Their Improvements. In Advances in Computation and Intelligence; Cai, Z., Li, Z., Kang, Z., Liu, Y., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2009; Volume 5821, pp. 276–286.

- A Comparison Between Various Human Detectors and CNN-Based Feature Extractors for Human Activity Recognition via Aerial Captured Video Sequences. Available online: https://www.researchgate.net/publication/361177545_A_Comparison_Between_Various_Human_Detectors_and_CNN-Based_Feature_Extractors_for_Human_Activity_Recognition_Via_Aerial_Captured_Video_Sequences (accessed on 20 December 2022).

- Peng, H.; Razi, A. Fully Autonomous UAV-Based Action Recognition System Using Aerial Imagery. In Advances in Visual Computing; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2020; Volume 12509.

| Authors | Recognized Actions | Method | Dataset | Accuracy |
|---|---|---|---|---|
| Hazar Mliki et al. [16] | 10 human actions, including digging, boxing, and running | CNN model (GoogLeNet architecture) | UCF-ARG dataset [17] | 68% (low accuracy) |
| Waqas Sultani et al. [18] | 8 human actions, including biking, diving, and running | DML for HAR model generation and Wasserstein GAN to generate aerial features from ground frames | | 68.2%; one limitation of DML is that it requires access to various labels for each task for the corresponding data |
| A.G. Perera et al. [19] | 13 human actions, including walking, kicking, jogging, punching, stabbing, and running | P-CNN | The authors' own Drone-Action dataset | 75.92%; the dataset was collected at low altitude and low speed |
| Kotecha et al. [20] | 5 human actions: fighting, lying, shaking hands, waving hand, and waving both hands | FMFM and AAR | The authors' own dataset | 90% (reasonable accuracy) |
| Liu et al. [21] | 10 human actions, including walking, standing, and making a phone call | DNN model and OpenPose algorithm | The authors' own dataset | 99.80%; high accuracy is achievable at low altitude |

| Class Number | Action | Number of Actors | Instances per Actor | Total Videos per Action | Cropped Frames after Human Detection |
|---|---|---|---|---|---|
| 1 | Boxing | 12 | 4 | 48 | 5844 |
| 2 | Walking | 12 | 4 | 48 | 7112 |
| 3 | Digging | 12 | 4 | 48 | 6239 |
| 4 | Throwing | 12 | 4 | 48 | 7511 |
| 5 | Running | 12 | 4 | 48 | 1939 |
| 6 | Waving | 12 | 4 | 48 | 6689 |
| Total | | 72 | 24 | 288 | 35,334 |

| Parameter | Value |
|---|---|
| Software | Python |
| Framework | Keras and TensorFlow |
| Image size | 120 × 60 pixels |
| Optimizer | Adam |
| Loss type | Categorical cross-entropy |
| Initial learning rate | 1 × 10⁻⁶ |
| Mini-batch size | 64 |
| Epochs | 50 |
| Activation function | Softmax |
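The loss and activation rows of the table above pair a softmax over the six action classes with categorical cross-entropy. The following framework-free sketch shows what those two functions compute for a single sample, assuming softmax is applied at the output layer and targets are one-hot. The logits are hypothetical, and this is not the Keras implementation; during training Keras averages the per-sample loss over each mini-batch by default.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_cross_entropy(one_hot, probs, eps=1e-12):
    """Per-sample loss -sum(t * log p); eps guards against log(0)."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(one_hot, probs))


if __name__ == "__main__":
    classes = ["Boxing", "Digging", "Running", "Throwing", "Walking", "Waving"]
    logits = [2.0, 0.5, 0.1, -1.0, 0.0, 0.3]  # hypothetical network output
    target = [1, 0, 0, 0, 0, 0]               # true class: Boxing
    probs = softmax(logits)
    predicted = classes[probs.index(max(probs))]
    loss = categorical_cross_entropy(target, probs)
    print(predicted, round(loss, 4))
```

With a one-hot target the loss reduces to minus the log-probability of the true class, so a uniform prediction over six classes costs log 6 ≈ 1.79 and the loss approaches 0 as the true class's probability approaches 1.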

| Action | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| Boxing | 0.9765 | 0.9878 | 0.9821 | 1391 |
| Digging | 0.9745 | 0.9778 | 0.9761 | 1485 |
| Running | 0.9561 | 0.6602 | 0.7810 | 462 |
| Throwing | 0.9780 | 0.9586 | 0.9682 | 1666 |
| Walking | 0.9157 | 0.9856 | 0.9494 | 1598 |
| Waving | 0.9703 | 0.9919 | 0.9810 | 1480 |
| Accuracy | | | 0.9615 | 8082 |
| Macro average | 0.9618 | 0.9270 | 0.9396 | 8082 |
| Weighted average | 0.9621 | 0.9615 | 0.9600 | 8082 |
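The two summary rows of the classification report can be recomputed from the per-class rows: the macro average is the plain mean over the six actions, while the weighted average weights each action by its support. The sketch below copies the table values; the helper names are illustrative. Because the inputs are already rounded to four decimals, a recomputed average may differ from the reported one in the last digit.

```python
# Per-class values copied from the classification report above:
# action -> (precision, recall, f1, support)
REPORT = {
    "Boxing":   (0.9765, 0.9878, 0.9821, 1391),
    "Digging":  (0.9745, 0.9778, 0.9761, 1485),
    "Running":  (0.9561, 0.6602, 0.7810, 462),
    "Throwing": (0.9780, 0.9586, 0.9682, 1666),
    "Walking":  (0.9157, 0.9856, 0.9494, 1598),
    "Waving":   (0.9703, 0.9919, 0.9810, 1480),
}
METRICS = ("precision", "recall", "f1")


def macro_average(report, i):
    """Unweighted mean over classes: every class counts equally."""
    return sum(row[i] for row in report.values()) / len(report)


def weighted_average(report, i):
    """Mean over classes weighted by support (number of test samples)."""
    total = sum(row[3] for row in report.values())
    return sum(row[i] * row[3] for row in report.values()) / total


if __name__ == "__main__":
    for i, name in enumerate(METRICS):
        print(f"{name:9s} macro={macro_average(REPORT, i):.4f} "
              f"weighted={weighted_average(REPORT, i):.4f}")
```

Two properties of the table follow from these definitions. The weighted recall equals the overall accuracy, since it reduces to the total number of correct predictions divided by all 8082 test frames. The macro recall (0.9270) sits well below the weighted recall (0.9615) because Running, the class with the lowest recall, also has the smallest support and therefore counts for more in the unweighted mean.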

| Model Name | Parameters (Millions) | Loss | Accuracy |
|---|---|---|---|
| MobileNet | 3.3 | 0.29 | 92.24% |
| VGG-16 | 14.7 | 0.39 | 86.54% |
| VGG-19 | 20.1 | 0.61 | 81.39% |
| Xception | 20.99 | 0.49 | 87.32% |
| Inception-ResNet-V2 | 54.43 | 0.45 | 87.26% |
| DenseNet201 | 18.44 | 0.30 | 91.80% |
| Proposed HarNet model | 2.2 | 0.07 | 96.15% |
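Parameter counts translate directly into the storage an embedded device needs for the weights. The sketch below converts the counts in the table above into approximate sizes. It assumes every weight is stored as a 4-byte float32 value (1 byte under int8 quantization) and ignores activations, runtime memory, and file-format overhead; the helper name is illustrative.

```python
# Parameter counts in millions, copied from the comparison table above.
PARAMS_M = {
    "MobileNet": 3.3,
    "VGG-16": 14.7,
    "VGG-19": 20.1,
    "Xception": 20.99,
    "Inception-ResNet-V2": 54.43,
    "DenseNet201": 18.44,
    "Proposed HarNet model": 2.2,
}


def weight_size_mb(params_millions, bytes_per_param=4):
    """Approximate weight storage in megabytes (10**6 bytes).

    Assumes every parameter has the same width: 4 bytes for float32
    (the default) or 1 byte for int8 quantization.
    """
    return params_millions * bytes_per_param


if __name__ == "__main__":
    # List models from smallest to largest weight footprint.
    for name, p in sorted(PARAMS_M.items(), key=lambda kv: kv[1]):
        print(f"{name:22s} {weight_size_mb(p):7.1f} MB (float32)")
```

Under the float32 assumption the proposed model's weights take about 8.8 MB, compared with about 13.2 MB for MobileNet and about 218 MB for Inception-ResNet-V2.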

| Method | Accuracy | Number of Actions |
|---|---|---|
| Hazar Mliki et al. 2020 [16]: optical flow (stabilization) + GoogLeNet (classification) | 68% | 5 |
| Waqas Sultani et al. 2021 [18]: GAN + DML | 68.2% | 8 |
| Nouar Aldahoul et al. 2022 [40]: EfficientNetB7_LSTM (classification) | 80% | 5 |
| Peng et al. 2020 [41]: SURF (stabilization) + Inception-ResNet-3D (classification) | 85.83% | 5 |
| Proposed HarNet model | 96.15% | 6 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Othman, N.A.; Aydin, I. Development of a Novel Lightweight CNN Model for Classification of Human Actions in UAV-Captured Videos. Drones 2023, 7, 148. https://doi.org/10.3390/drones7030148