Smart Drone Surveillance System Based on AI and on IoT Communication in Case of Intrusion and Fire Accident

Abstract

1. Introduction

- Both YOLOv8 and the Cascade Classifier are integrated into the flight system and complement each other in object detection, achieving high accuracy and speed for surveillance purposes.

- The distance maintenance and yaw rotation algorithms, both based on the PID controller, are described in detail to give the reader a thorough understanding of AI-assisted drone control.

- An algorithm for potentially dangerous object avoidance is proposed, which utilizes a straight strategy to dodge the approaching object based on the trained model.

- The main strength of this paper is that it combines computer vision models and UAV control algorithms into one smart system, with a tight connection between the two: the drone control algorithms take their inputs from the AI models. The paper therefore describes not only the flight control methods in detail but also the automatic operation driven by the trained object detection models.

2. Related Work

3. Materials and Method

3.1. Drone Components

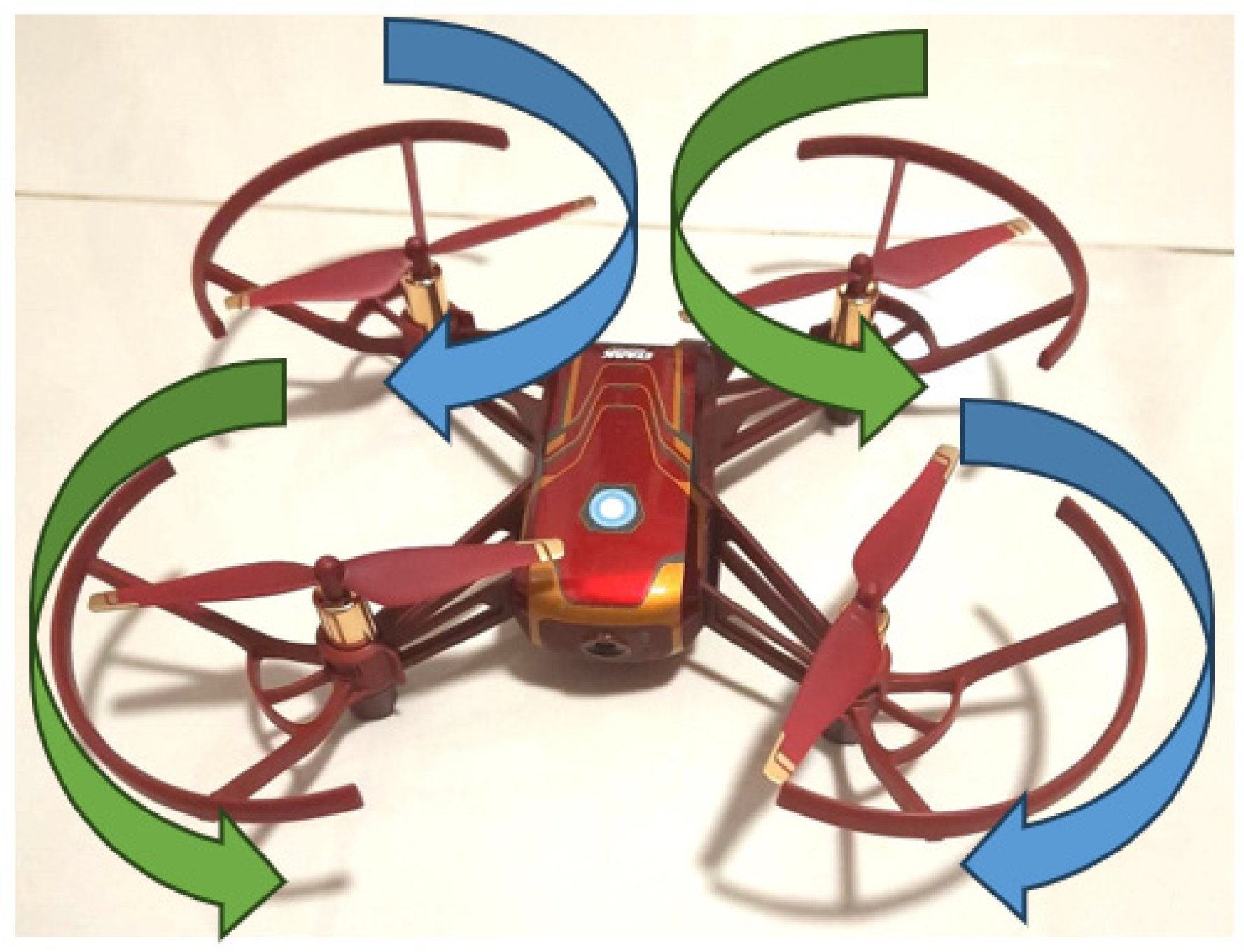

3.2. Drone Working Principle

- Left/right velocity: −100 to 100 cm/s;

- Forward/backward velocity: −100 to 100 cm/s;

- Up/down velocity: −100 to 100 cm/s;

- Yaw velocity: −100 to 100°/s.

- Move to left or move to right: 20 to 500 cm;

- Move forward or move backward: 20 to 500 cm;

- Rotate clockwise or anticlockwise: 1–360°.
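
The ranges listed above can be enforced in software before a command is sent to the drone. The following is a minimal sketch under that assumption, not the paper's implementation; the helper names `clamp`, `rc_command`, `move_distance_cm`, and `rotation_deg` are hypothetical. The tuple returned by `rc_command` follows the order of the velocity list above (left/right, forward/backward, up/down, yaw).

```python
def clamp(value, low, high):
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def rc_command(left_right, forward_backward, up_down, yaw):
    """Return the four velocity set-points, each clamped to -100..100."""
    return tuple(
        int(clamp(v, -100, 100))
        for v in (left_right, forward_backward, up_down, yaw)
    )


def move_distance_cm(distance):
    """Clamp a translation distance to the 20..500 cm range listed above."""
    return int(clamp(distance, 20, 500))


def rotation_deg(angle):
    """Clamp a rotation angle to the 1..360 degree range listed above."""
    return int(clamp(angle, 1, 360))


if __name__ == "__main__":
    print(rc_command(150, -20, 0, -130))  # out-of-range values are limited
    print(move_distance_cm(5))            # too-short moves are raised to 20 cm
```

Clamping silently is one possible design choice; rejecting out-of-range requests with an error would be an equally reasonable alternative.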

3.3. Computer Vision Technologies

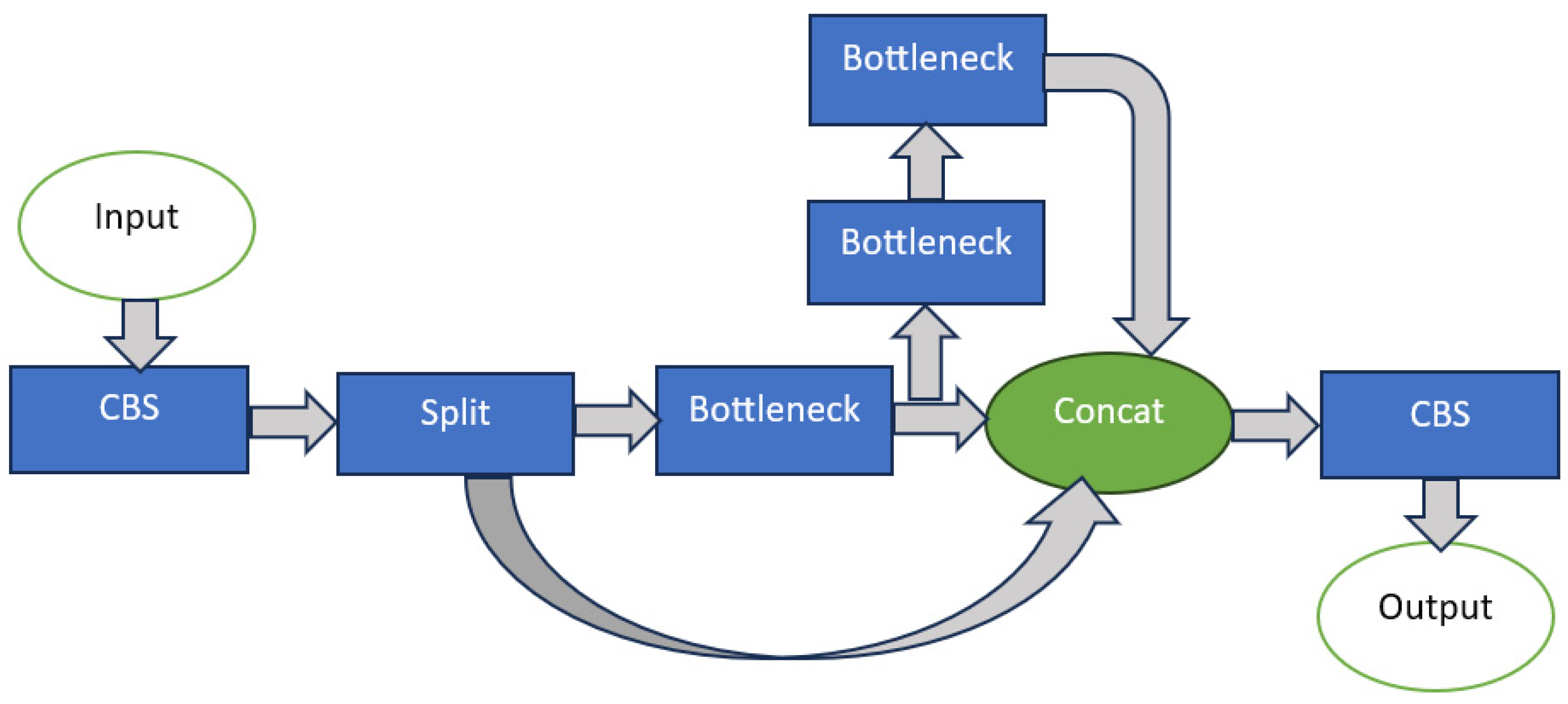

3.3.1. YOLOv8

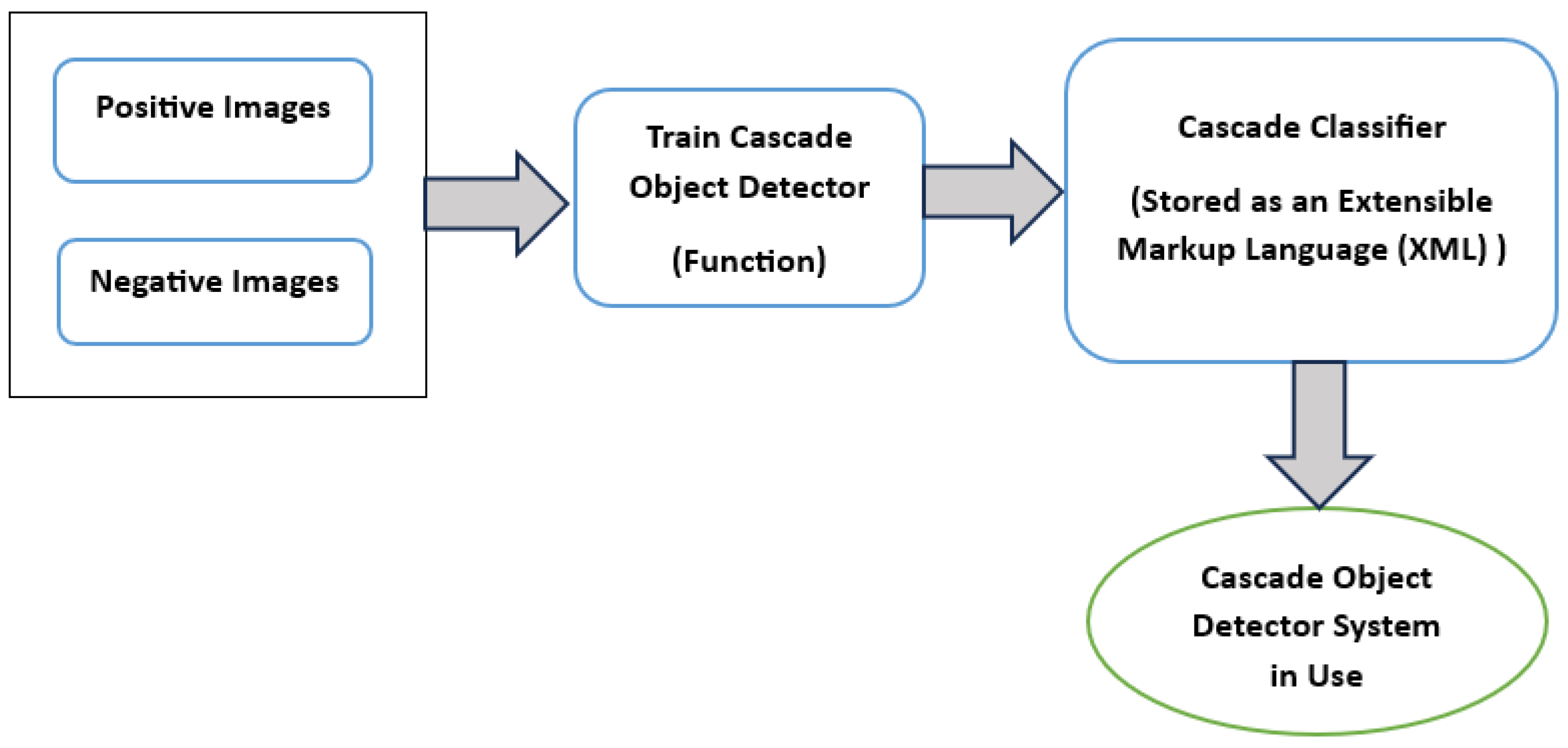

3.3.2. Cascade Classifier

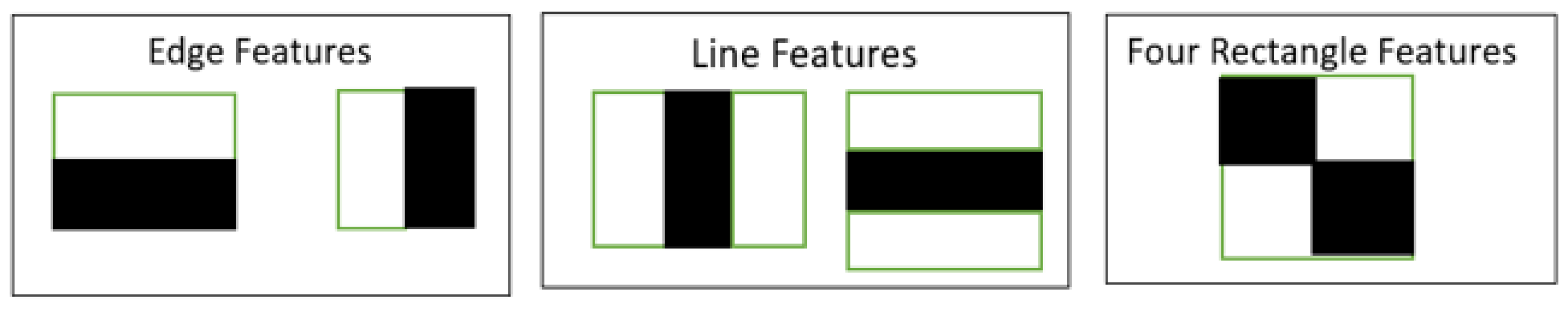

- Step 1: Gathering the Haar Features. In a detection window, a Haar feature is the result of computations on neighboring rectangular regions. The pixel intensities in each region are summed, and the feature value is the difference between these sums. Figure 8 shows the Haar feature types.

- Step 2: Creating Integral Images. Integral images speed up the calculation of these Haar features. Rather than summing every pixel, the method constructs sub-rectangles and array references for each of them, and the Haar features are then computed from these.

- Step 3: Adaboost Training. Adaboost selects the best features and trains the classifiers that use them. It combines weak classifiers into a robust classifier that the algorithm uses to find objects. Weak learners are produced by sliding a window across the input image and calculating Haar features for each area of the image. The resulting difference is compared with a learned threshold that separates non-objects from objects. Each of these is a weak classifier, whereas a strong classifier needs many Haar features to be accurate. The last phase merges these weak learners into strong ones using cascading classifiers.

- Step 4: Implementing Cascading Classifiers. The Cascade Classifier comprises several stages, each containing a group of weak learners. Boosting trains the weak learners, producing a highly accurate classifier from the average prediction of all weak learners. Based on this prediction, the classifier either moves on to the next region (negative) or reports that an object was identified (positive). Because most windows contain nothing of interest, the stages are designed to discard negative samples as quickly as possible.
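
The steps above can be illustrated with a small pure-Python sketch. It is a simplified illustration rather than the OpenCV implementation: the function names are hypothetical, only one two-rectangle Haar feature type is shown, and each weak learner is reduced to a single threshold test with a fixed weight (a weighted vote stands in for the boosted prediction).

```python
def integral_image(img):
    """Return an (H+1) x (W+1) summed-area table with a zero first row and column."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the pixels in the rectangle with top-left (x, y), using four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def haar_two_rect_horizontal(ii, x, y, w, h):
    """Two-rectangle feature: left-half sum minus right-half sum (w must be even)."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


def cascade_accepts(stages, feature_values):
    """Evaluate a window against the cascade.

    Each stage is (weak_learners, stage_threshold), where a weak learner is
    (feature_index, threshold, weight). The window is rejected as soon as
    one stage score falls below its stage threshold.
    """
    for weak_learners, stage_threshold in stages:
        score = sum(
            weight
            for idx, thr, weight in weak_learners
            if feature_values[idx] >= thr
        )
        if score < stage_threshold:
            return False  # early rejection: later stages are never evaluated
    return True


if __name__ == "__main__":
    image = [[9, 9, 1, 1],
             [9, 9, 1, 1]]  # bright left half, dark right half
    ii = integral_image(image)
    feature = haar_two_rect_horizontal(ii, 0, 0, 4, 2)
    stages = [([(0, 10, 1.0)], 0.5)]  # illustrative thresholds, not trained values
    print(feature, cascade_accepts(stages, [feature]))
```

Once the integral image is built, every rectangle sum costs four array lookups regardless of rectangle size, which is what makes evaluating many Haar features per window affordable.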

3.3.3. Evaluation Metrics

3.4. Human-Tracking Algorithms

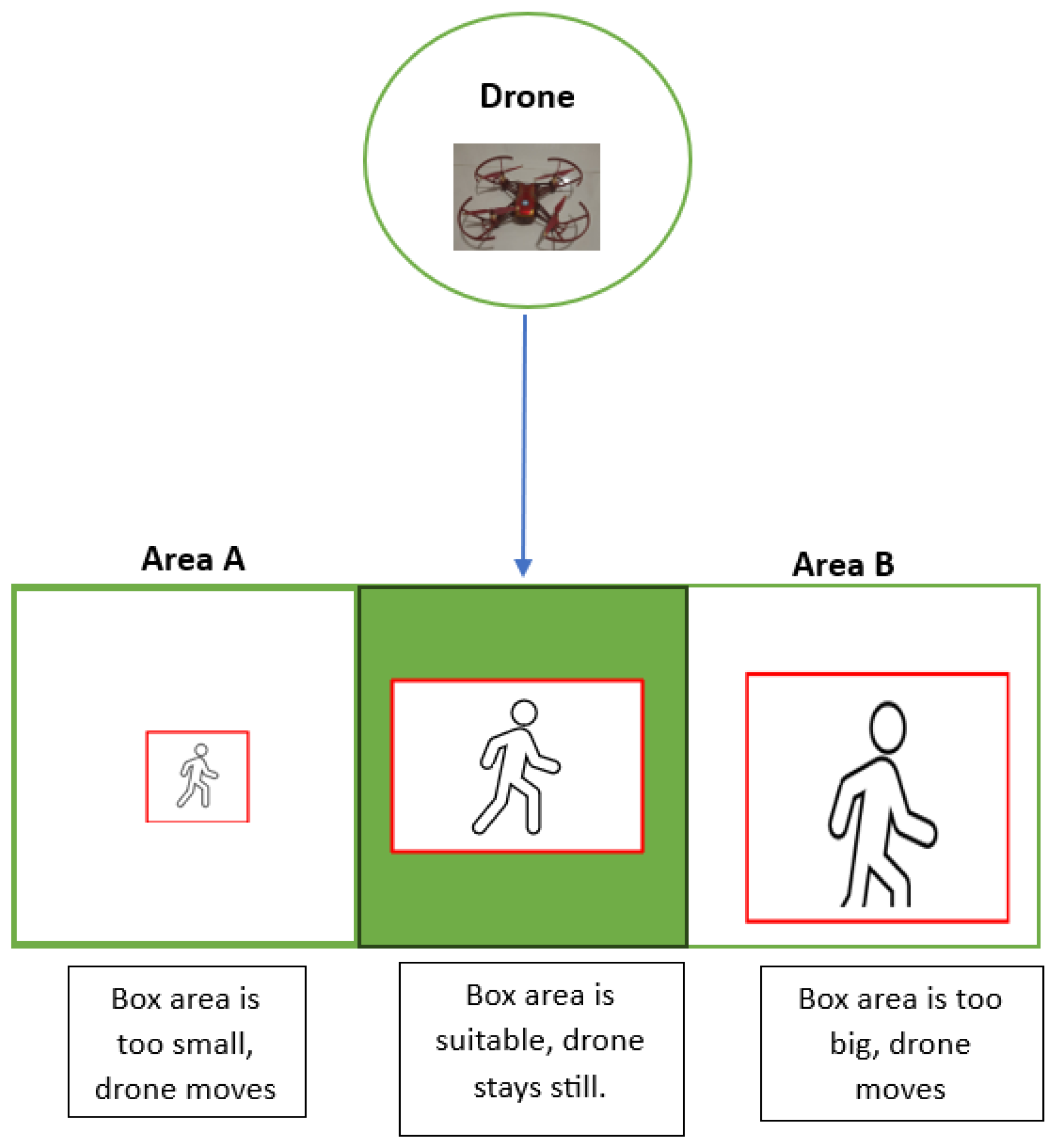

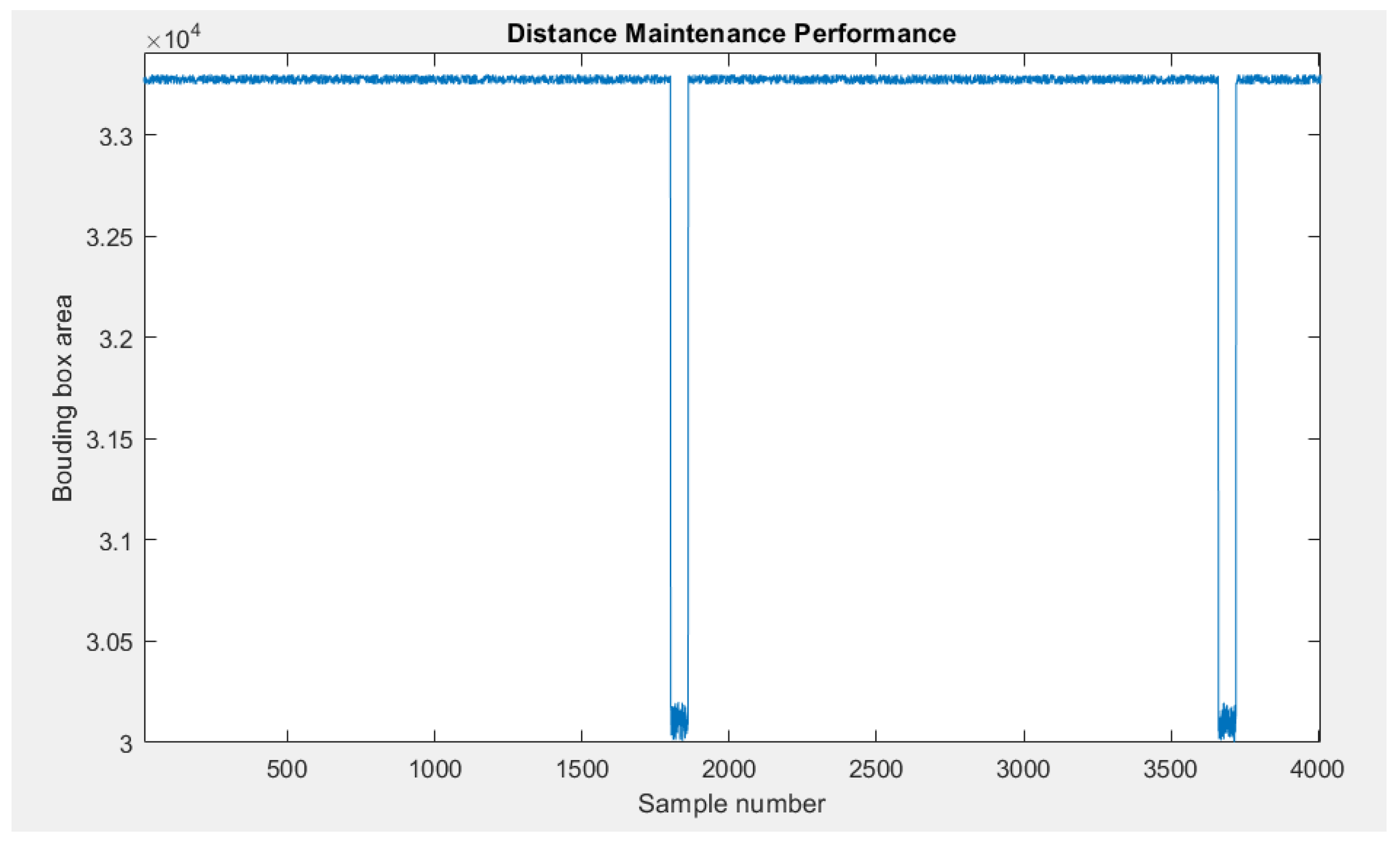

3.4.1. Distance Maintenance

- If the box area < A → Drone is too far away → 2 front motor speed is increased → Drone moves forward.

- If the box area > B → Drone is too close → 2 back motor speed is increased → Drone moves backward.

- If the box area ∈ [A, B] → Drone is at the proper distance from the object → Drone maintains motor speed.
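
The three rules above amount to a threshold test on the bounding-box area. A minimal sketch follows, assuming the thresholds A and B are passed in as `area_min` and `area_max`; the function name and the numeric values in the example are hypothetical, since the text does not give the threshold values.

```python
def distance_action(box_area, area_min, area_max):
    """Map the detected bounding-box area to a distance-keeping action.

    area_min and area_max play the roles of A and B in the rules above.
    """
    if area_min > area_max:
        raise ValueError("area_min must not exceed area_max")
    if box_area < area_min:
        return "forward"   # box too small: drone is too far away
    if box_area > area_max:
        return "backward"  # box too large: drone is too close
    return "hold"          # box area within [A, B]: keep current motor speed


if __name__ == "__main__":
    # Hypothetical thresholds in square pixels, for illustration only.
    for area in (5000, 30000, 60000):
        print(area, distance_action(area, 20000, 40000))
```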

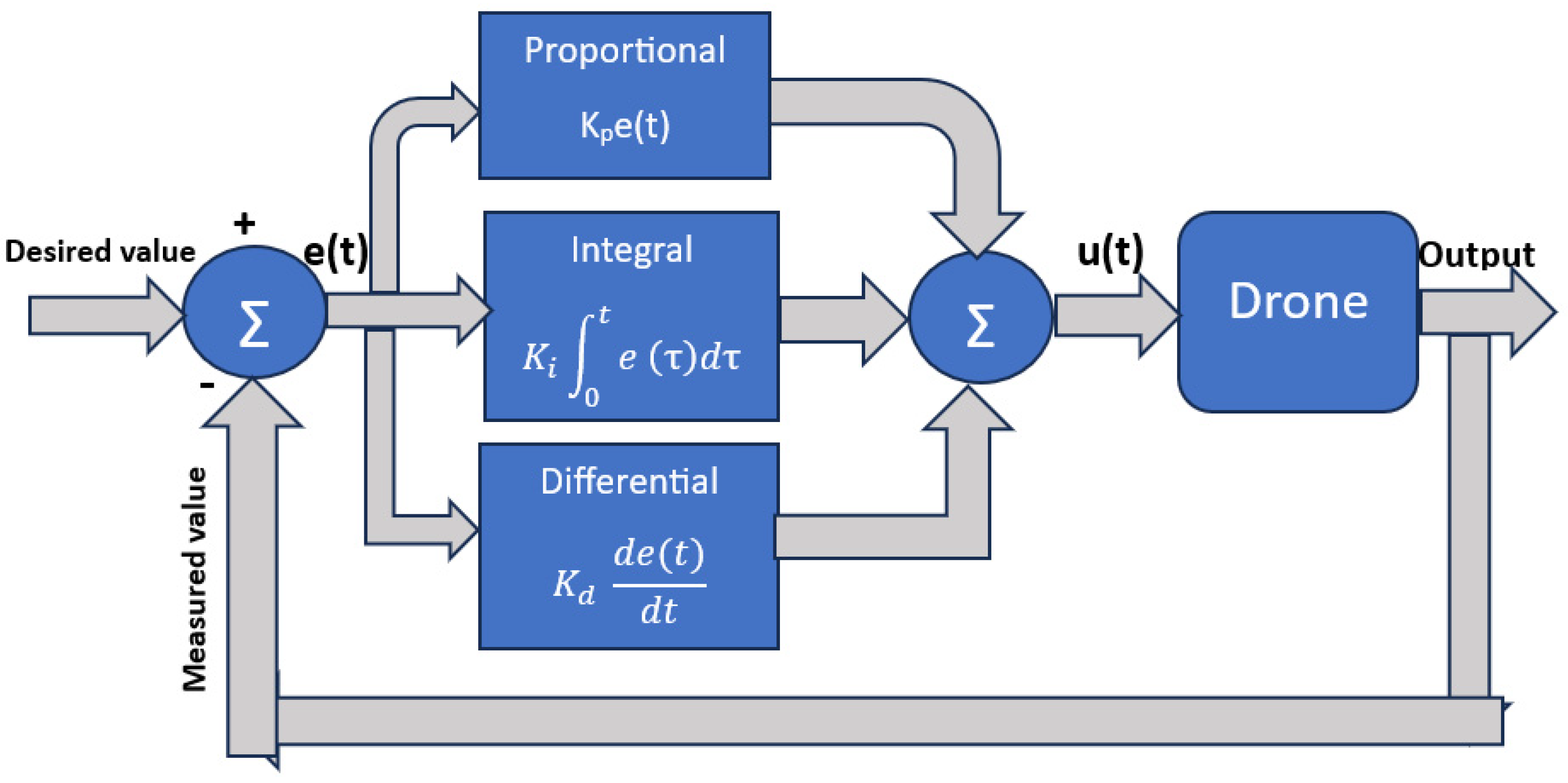

- The P-controller is an essential element of control systems. It provides a direct control action proportional to the error between the target setpoint and the measured process variable. The drone’s controller continuously modifies the motor speed according to the difference between the desired and detected box areas so that the drone keeps the appropriate distance from the object. If the drone is nearer than intended, the back motors are slowed to move it gently backward, and vice versa. The difference between the required and measured rectangle areas determines how much correction is made; larger differences yield stronger adjustments.

- The D-controller aids system control by monitoring the rate of change of the error between the target value and the measured value. When the drone reaches the proper distance, the D-controller helps keep it steady by looking at how quickly the drone’s speed is changing. If the drone is going backward or downward too fast, the D-controller adjusts the output to slow it down. This helps the drone stay at the desired distance smoothly, ensuring stability and precise speed control.

- The I-controller continuously sums the error signal over time and uses the integrated value to adjust the control inputs. If the drone deviates from its setpoint, the integral controller accounts for the duration and magnitude of the accumulated error and applies a proportional corrective action. The P and D controllers can make quick adjustments but struggle to remove minor, persistent errors, which leads to steady-state errors; the I-controller addresses these residual errors.

- u(t): PID control variable.

- Kp, Ki, and Kd are the proportional, integral, and derivative coefficients, respectively.

- e(t) is the error between the desired and current values.

- Kp should be large enough that a significant error produces a proportionately high control output. Kd should be set higher if the error changes rapidly. Ki should be chosen so that the residual error caused by the accumulated past error is eliminated.
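
The symbols defined above correspond to the standard PID law, u(t) = Kp·e(t) + Ki·∫e(τ)dτ + Kd·de(t)/dt. A minimal discrete-time sketch of that law is given below; the class name, the gain values in the example, and the fixed time step are assumptions for illustration and are not taken from the paper.

```python
class PID:
    """Discrete PID controller: u = Kp*e + Ki*sum(e*dt) + Kd*(e - e_prev)/dt."""

    def __init__(self, kp, ki, kd):
        self.kp = kp
        self.ki = ki
        self.kd = kd
        self.integral = 0.0     # running approximation of the integral of e(t)
        self.prev_error = None  # no derivative is available on the first sample

    def update(self, error, dt):
        """Return the control variable u for the current error sample."""
        if dt <= 0:
            raise ValueError("dt must be positive")
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


if __name__ == "__main__":
    # Hypothetical gains; the error could be desired area minus detected area.
    pid = PID(kp=0.5, ki=0.1, kd=0.2)
    for e in (10.0, 6.0, 2.0):
        print(round(pid.update(e, dt=1.0), 3))
```

In a flight loop the returned value would typically be limited to the drone's accepted velocity range before being sent; that limiting step is left out here to keep the controller itself visible.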

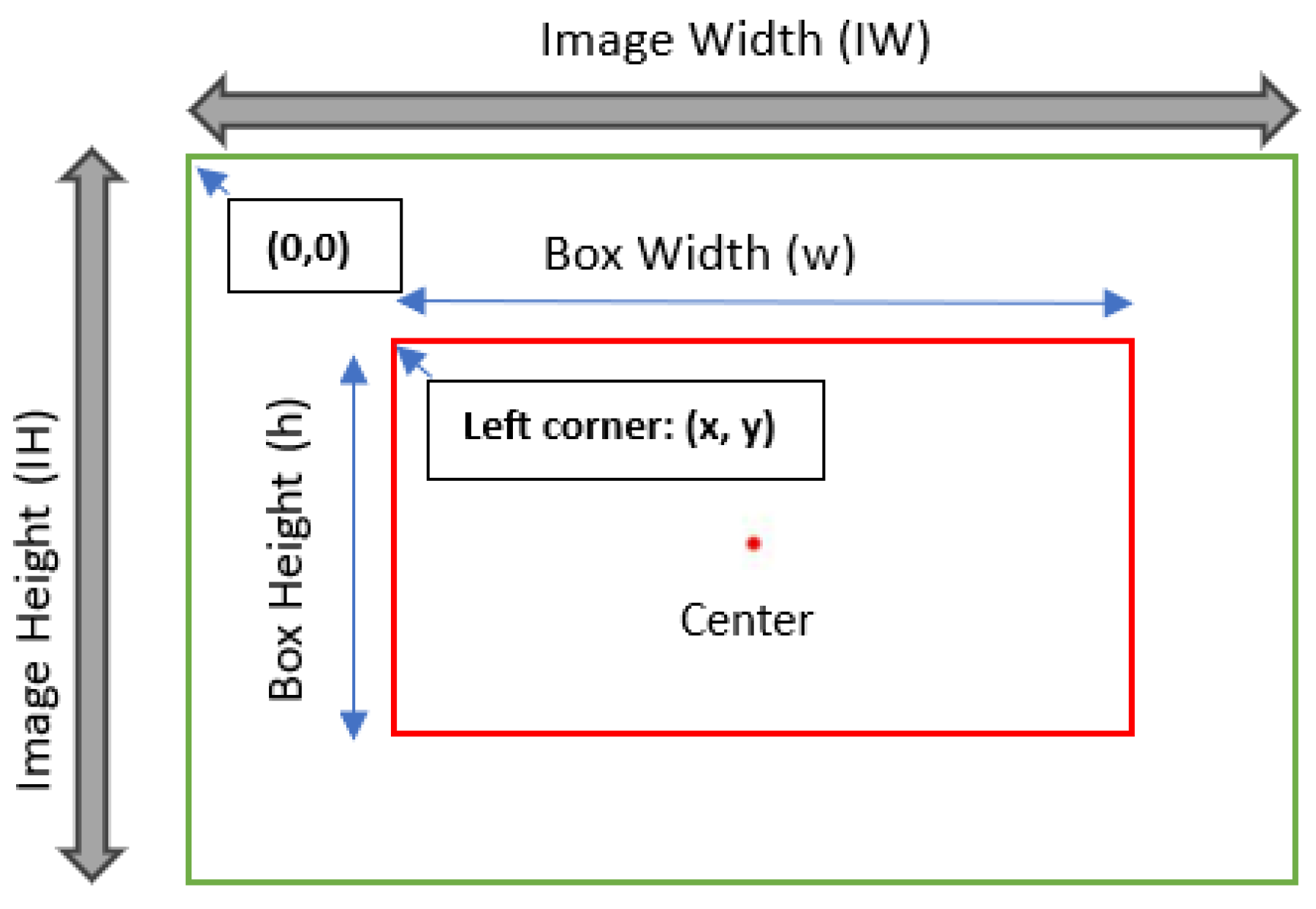

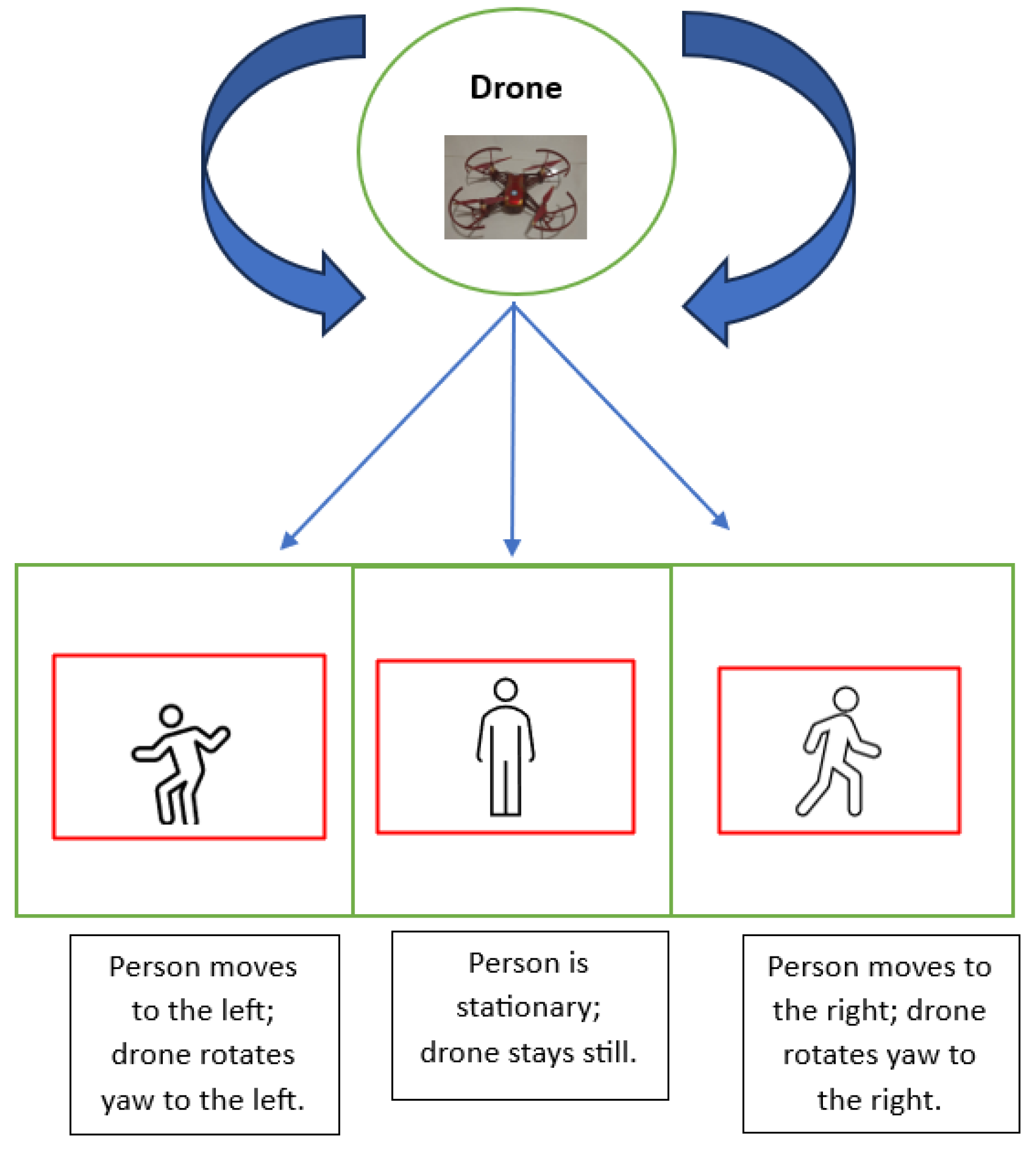

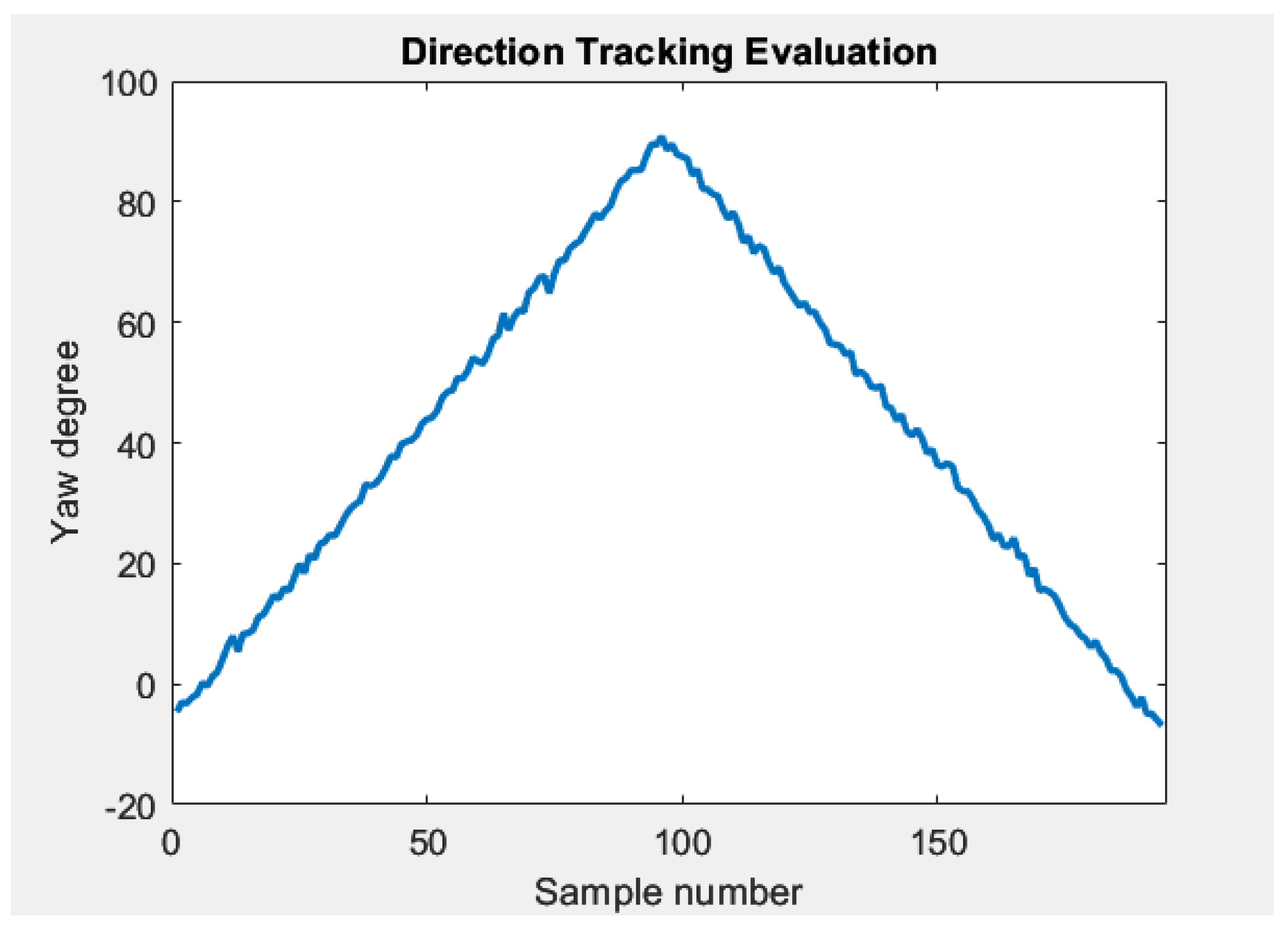

3.4.2. Yaw Rotation for Object Position Adaption

- If the x-coordinate of the rectangle center < x-coordinate of the image center → Target moves to the left → PID adjusts the drone yaw to increase the left motor speed.

- If the x-coordinate of the rectangle center > the x-coordinate of the image center → Target moves to the right → PID adjusts the drone yaw to increase the right motor speed.
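
The comparison in the two rules above can be expressed as a signed horizontal error that is then fed to the PID controller. The sketch below assumes an OpenCV-style box given by its top-left x-coordinate and width; the function names and the optional `deadband` parameter are hypothetical additions for illustration.

```python
def yaw_error(box_x, box_w, image_width):
    """Signed horizontal offset (pixels) of the box center from the image center.

    Negative values mean the target is left of center, positive values right.
    Assumes box_x is the left edge of the box and box_w its width.
    """
    box_center_x = box_x + box_w / 2
    return box_center_x - image_width / 2


def yaw_direction(error, deadband=0):
    """Classify the yaw correction; deadband is an assumed tolerance in pixels."""
    if error < -deadband:
        return "left"
    if error > deadband:
        return "right"
    return "centered"


if __name__ == "__main__":
    err = yaw_error(box_x=100, box_w=50, image_width=640)
    print(err, yaw_direction(err))
```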

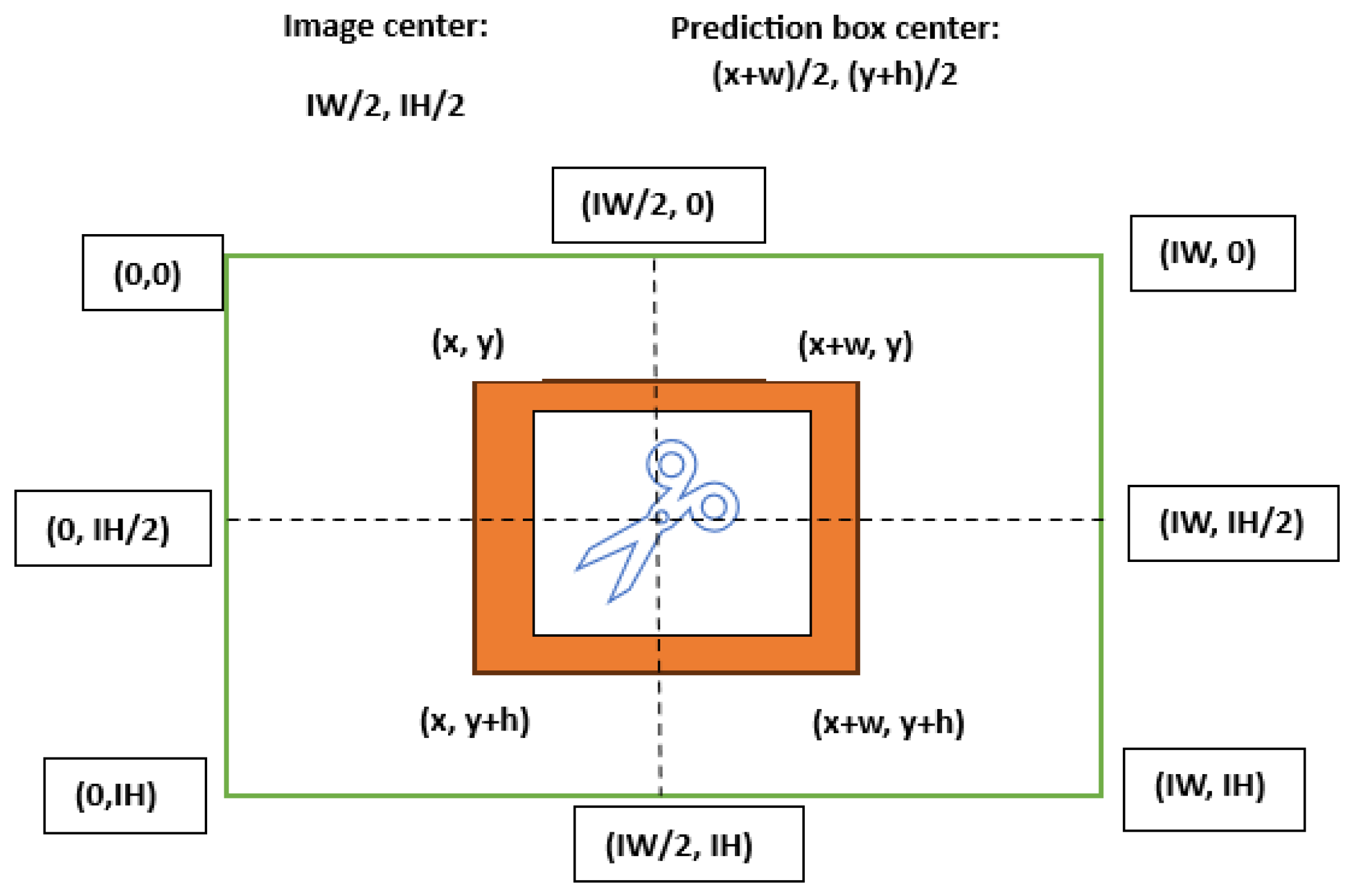

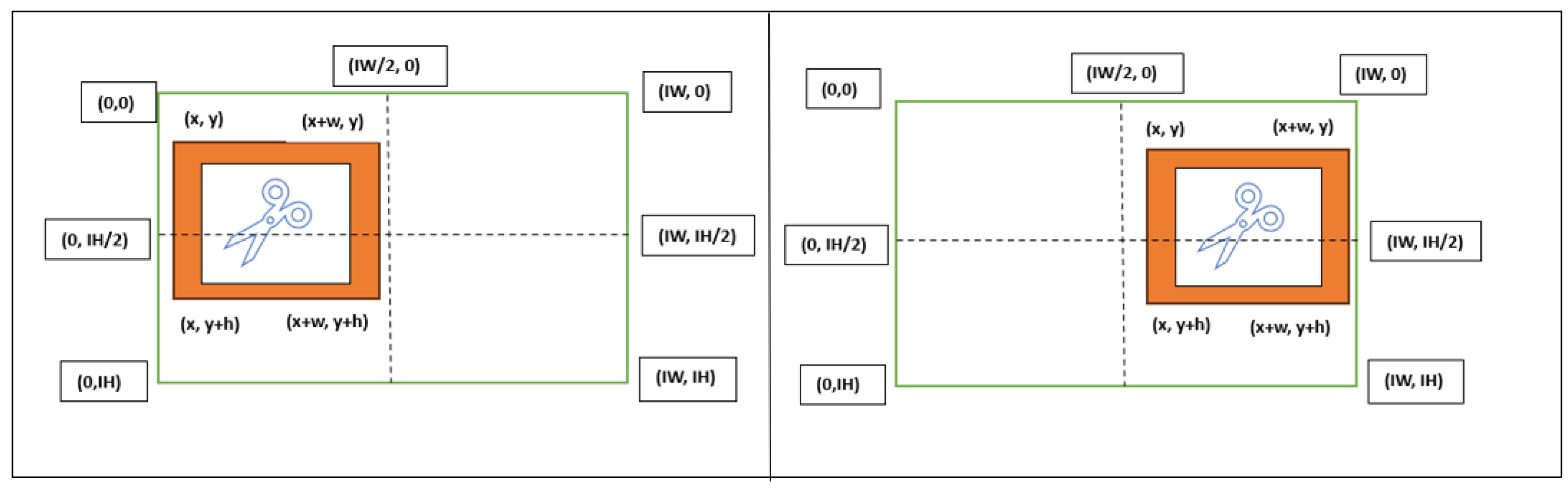

3.4.3. Potentially Dangerous Object Avoidance

- If (x + w)/2 ∈ [0, IW/2] and (y + h)/2 ∈ [0, IH]:

- If (x + w)/2 ∈ [IW/2, IW] and (y + h)/2 ∈ [0, IH]:

- If (x + w)/2 = IW/2 and (y + h)/2 = IH/2:
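
The three conditions above partition the image by the position of the detected object's center. The sketch below only classifies that region and deliberately does not choose a maneuver, because the action attached to each condition is not given in this text. The function takes the center coordinates directly (`cx`, `cy`), so it makes no assumption about how they are derived from the box; the function name, the region labels, and the handling of the shared boundary at IW/2 are assumptions.

```python
def object_region(cx, cy, image_width, image_height):
    """Classify where the object center lies in the image.

    The exact image center is tested first, because the left and right
    intervals in the conditions above both include IW/2. Any other point
    on the vertical midline is assigned to the left half (an assumption).
    """
    if not (0 <= cx <= image_width and 0 <= cy <= image_height):
        return "outside"
    if cx == image_width / 2 and cy == image_height / 2:
        return "center"
    if cx <= image_width / 2:
        return "left_half"
    return "right_half"


if __name__ == "__main__":
    print(object_region(100, 200, 640, 480))
    print(object_region(500, 200, 640, 480))
    print(object_region(320, 240, 640, 480))
```

With pixel coordinates an exact match on the image center is rare, so a practical implementation would probably accept a small tolerance around it.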

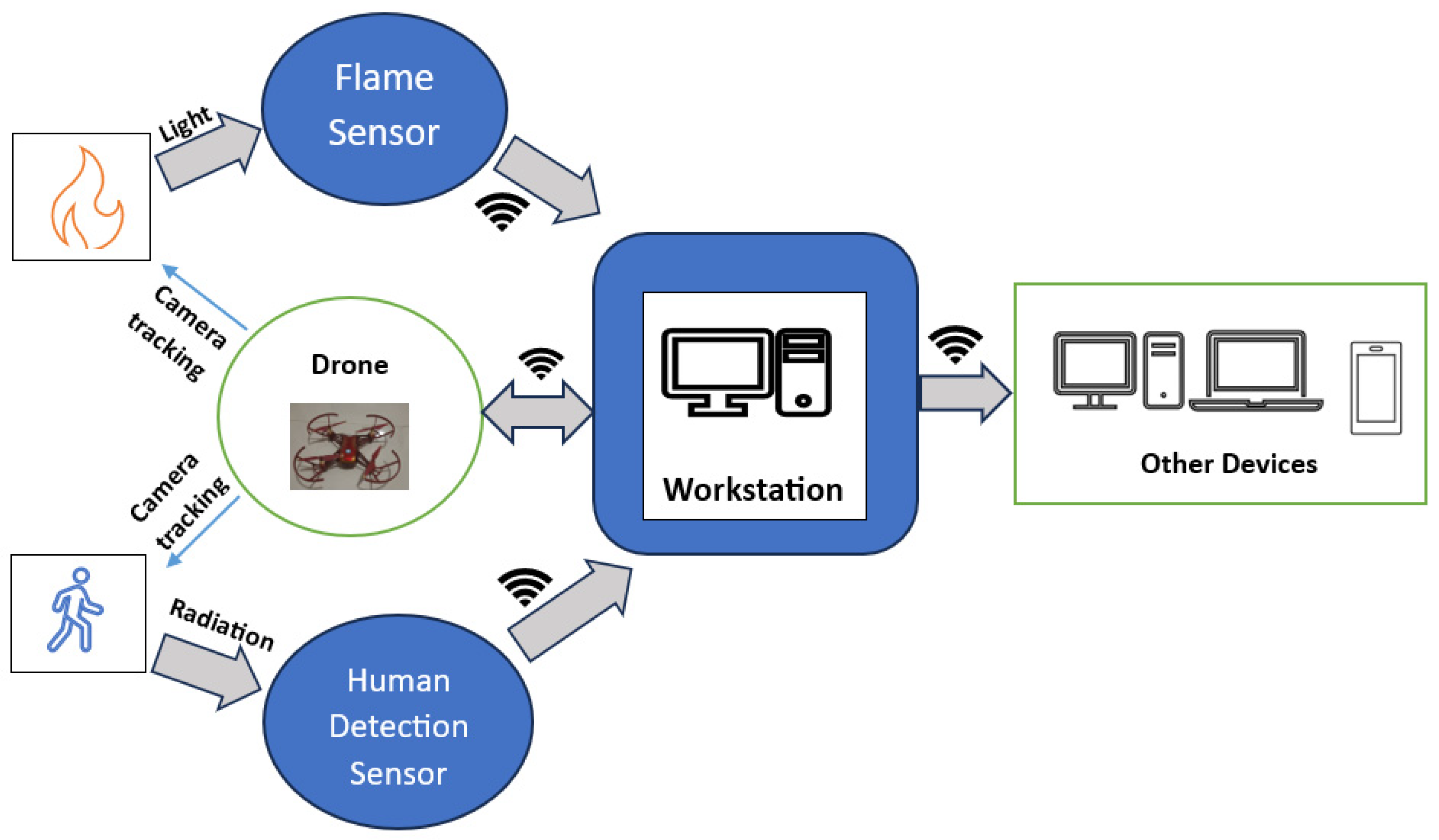

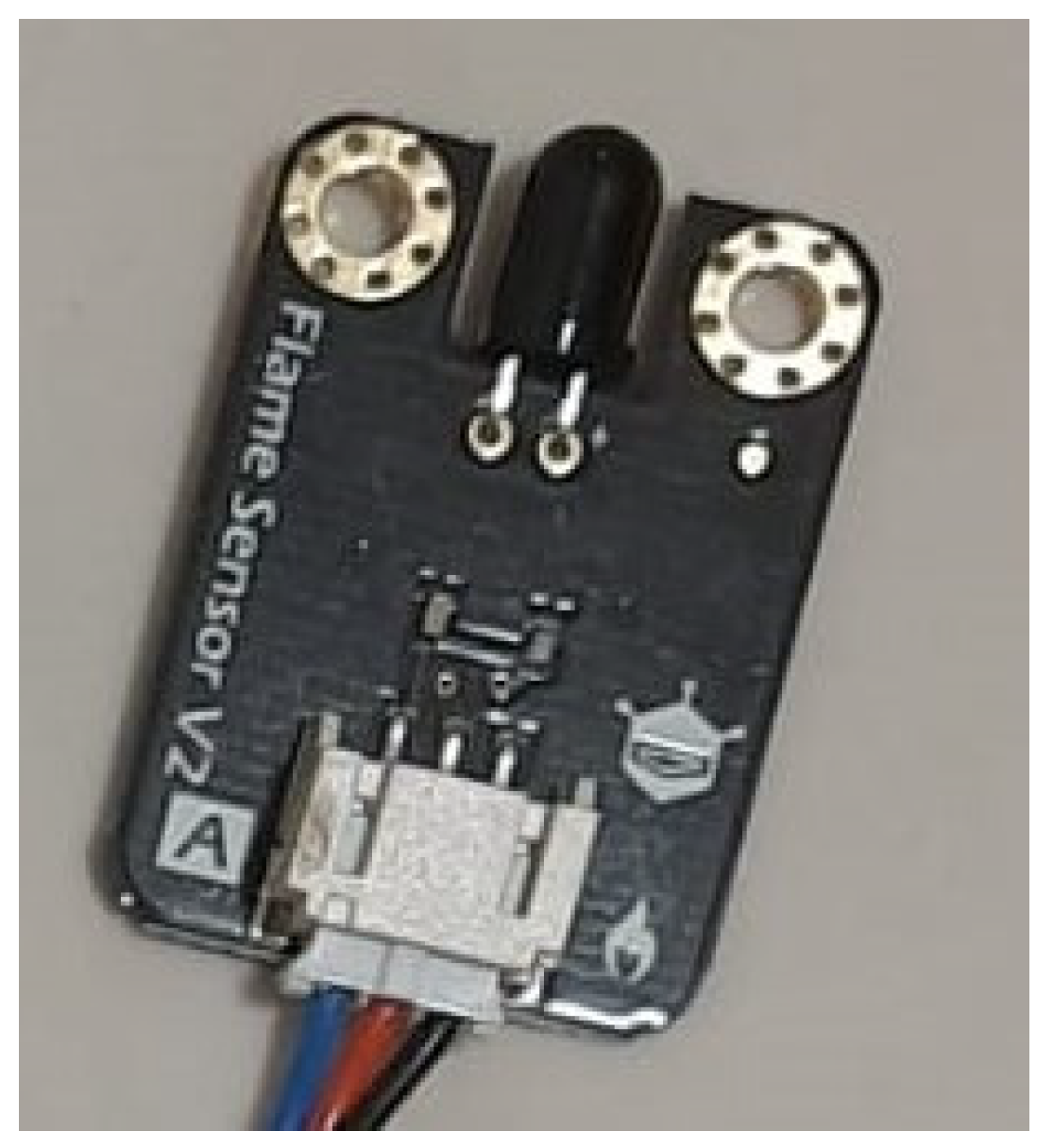

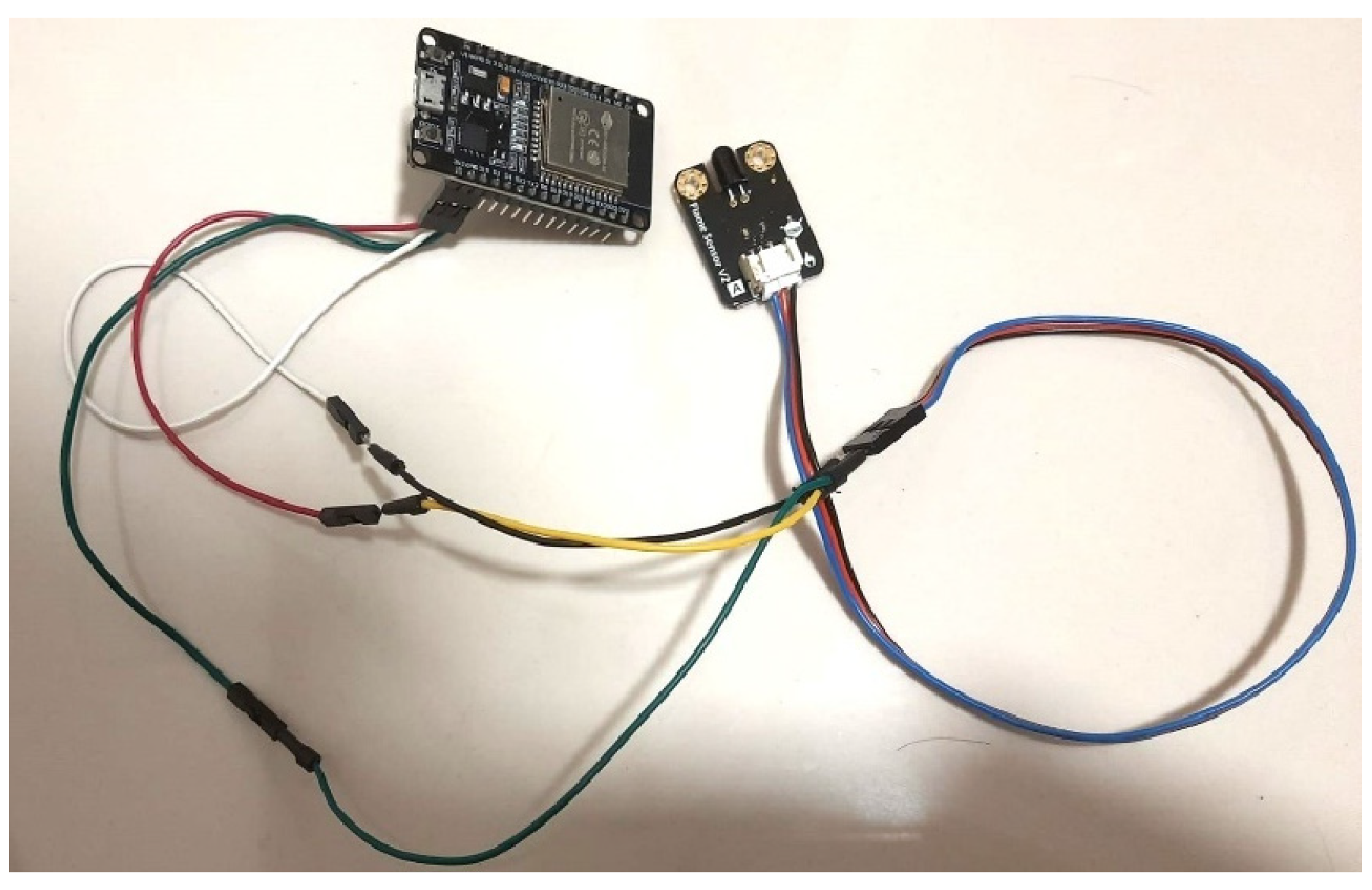

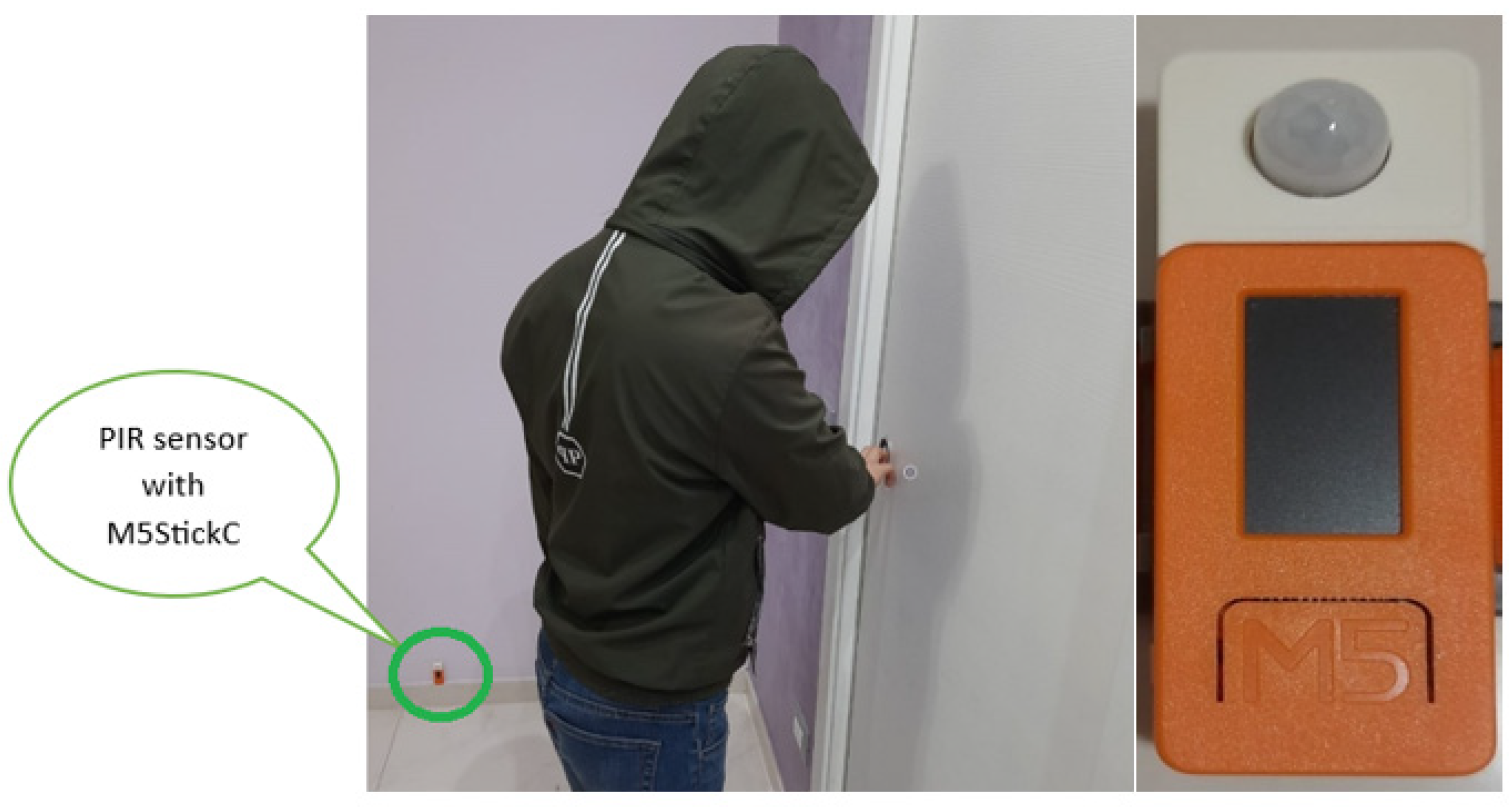

3.5. Sensor Utilization

4. Experiments and Results

4.1. Experimental Setup

4.2. Results and Analysis

4.2.1. Computer Vision Test Performance

4.2.2. Person Detection

4.2.3. Evaluation on the Distance Maintenance

4.2.4. Evaluation of Direction Rotation

4.2.5. Potentially Dangerous Object Detection

4.2.6. Fire Detection

4.3. System Overview

5. Conclusions

Funding

Data Availability Statement

Conflicts of Interest


| Task | Model | Precision (%) | Recall (%) | AP (%) |
|---|---|---|---|---|
| Person, knife, bottle, cup, cell phone, scissors detection | YOLOv8 | 88.4 | 86.5 | 88.9 |
| Person, knife, bottle, cup, cell phone, scissors detection | YOLOv7 | 85.1 | 84.8 | 85.3 |
| Person, knife, bottle, cup, cell phone, scissors detection | YOLOv5 | 72.5 | 71.2 | 72.7 |
| Flame detection | Cascade Classifier | 89.1 | 88.3 | 90.1 |


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hoang, M.L. Smart Drone Surveillance System Based on AI and on IoT Communication in Case of Intrusion and Fire Accident. Drones 2023, 7, 694. https://doi.org/10.3390/drones7120694