4.1. Measurement Error Prediction

Errors in the individual terms of the point cloud computation in Equation (1) can contribute to the overall system error. It is further assumed in this study that, with a rigorous calibration procedure in place, errors such as those in boresighting are at least one order of magnitude smaller than those from the IMU orientation. For simplicity of analysis, boresighting errors are not modeled in this study. Similarly, the lever arm error is assumed to be negligible. Therefore, only the contributions of UAS orientation, positioning, timing, and LIDAR errors are considered in the error prediction model.

First, small angular errors in the UAS roll, pitch, and heading angles are considered. In addition, a rotating or vibrating airframe experiences additional angular errors due to timing uncertainties, and the combined angular error is modeled with a skew-symmetric matrix. Ideally, the leading angular error term is at a sub-degree level for the sensor used in the system, whereas the other two terms are substantially smaller.
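As a rough illustration of how small angular errors can enter through a skew-symmetric matrix, the Python sketch below builds the matrix for a rotation-error vector and adds a timing-induced term equal to the angular rate multiplied by the timing error. The function names, the additive first-order form, and the numbers in the example are assumptions for illustration, not the exact model used in this study.

```python
import math

def skew(v):
    """Skew-symmetric matrix [v]x, so that skew(v) applied to r equals the cross product v x r."""
    x, y, z = v
    return [[0.0, -z, y],
            [z, 0.0, -x],
            [-y, x, 0.0]]

def matvec(m, r):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(m[i][j] * r[j] for j in range(3)) for i in range(3)]

def total_angular_error(attitude_err, angular_rate, dt):
    """Assumed first-order model (rad): sensed-attitude error plus the rotation
    accumulated during an unknown timing offset dt (s) at angular_rate (rad/s)."""
    return [a + w * dt for a, w in zip(attitude_err, angular_rate)]

# Hypothetical example: 0.03 deg roll/pitch and 0.1 deg heading errors,
# a 10 deg/s roll rate from vibration, and a 1 ms timing error.
deg = math.radians(1.0)
err = total_angular_error([0.03 * deg, 0.03 * deg, 0.1 * deg],
                          [10.0 * deg, 0.0, 0.0], 1e-3)
# Displacement of a point 15 m along the down axis caused by the angular error.
displacement = matvec(skew(err), [0.0, 0.0, 15.0])
```

In this hypothetical case the timing term adds only 0.01 deg of roll error; it grows with the angular rate, which is why damping the sensor mount keeps it small.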

Next, the UAS position error, including the impact of timing uncertainties, is represented as the position error plus the timing error multiplied by the velocity of the antenna in the G frame.
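The position term can be sketched in the same first-order spirit: the timing error adds the antenna velocity multiplied by the timing offset. The helper names and the 1 ms timing error in the example are hypothetical.

```python
import math

def uas_position_error(pos_err, velocity, dt):
    """Assumed first-order UAS position error in the G frame (m):
    navigation position error plus velocity (m/s) times timing error dt (s)."""
    return [p + v * dt for p, v in zip(pos_err, velocity)]

def magnitude(vec):
    """Euclidean length of a 3-vector."""
    return math.sqrt(sum(c * c for c in vec))

# Hypothetical level flight: 5 m/s due north, no vertical velocity, 1 ms timing error,
# and no navigation position error, to isolate the timing contribution.
timing_only = uas_position_error([0.0, 0.0, 0.0], [5.0, 0.0, 0.0], 1e-3)
```

With zero vertical velocity the timing term has no vertical component, and its magnitude scales linearly with speed.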

Finally, the LIDAR measurement error is considered in the L frame in the forward, right, and down directions. Since the LIDAR points toward the ground, the LIDAR forward direction coincides with the vehicle down direction. The position error without timing error is composed of the LIDAR ranging error, projected onto the direction of point x, and the right and downward angular errors with respect to the LIDAR.

The error in x is thus modeled with

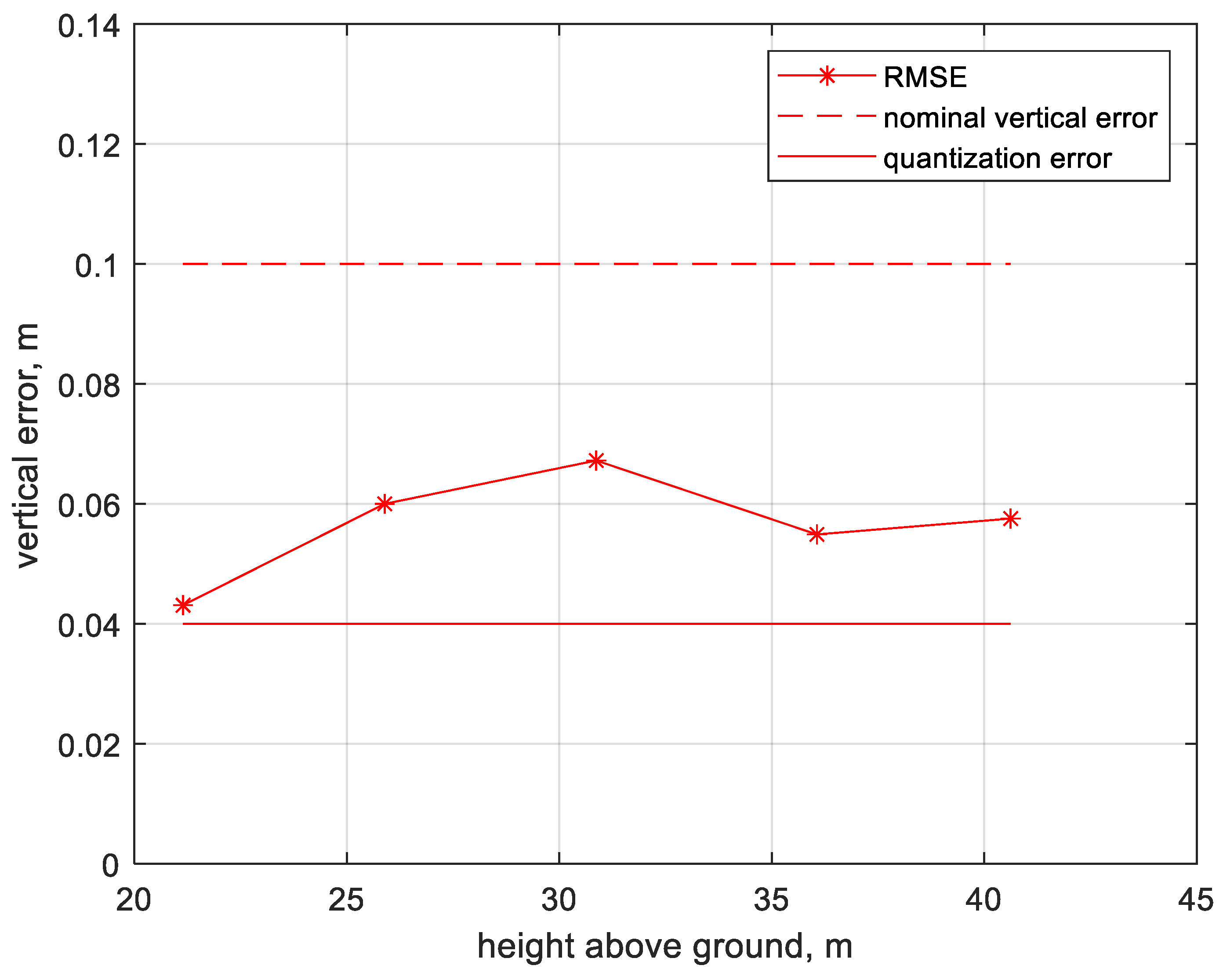

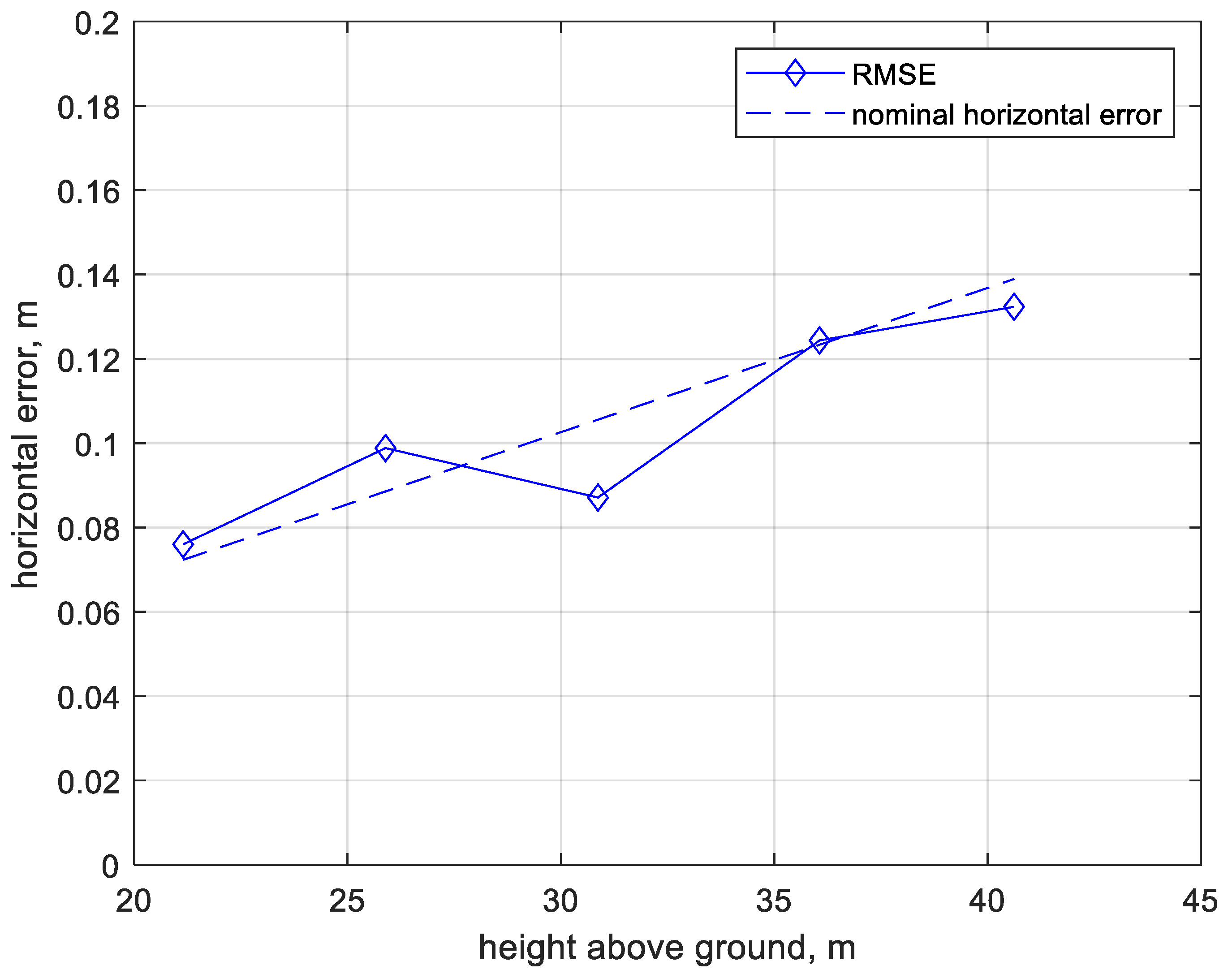

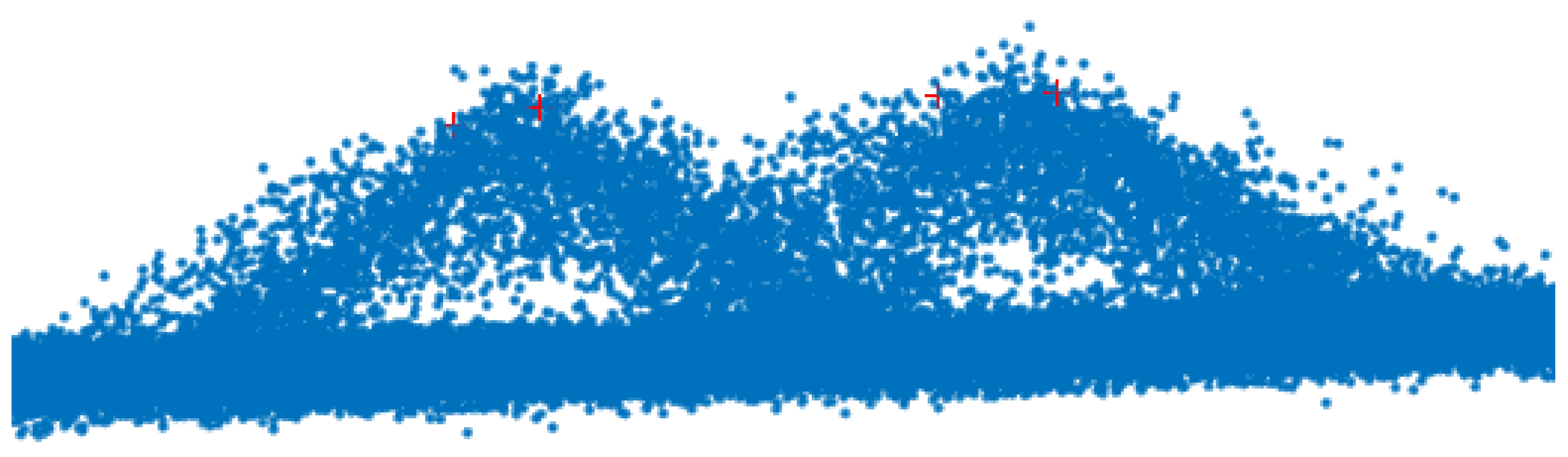

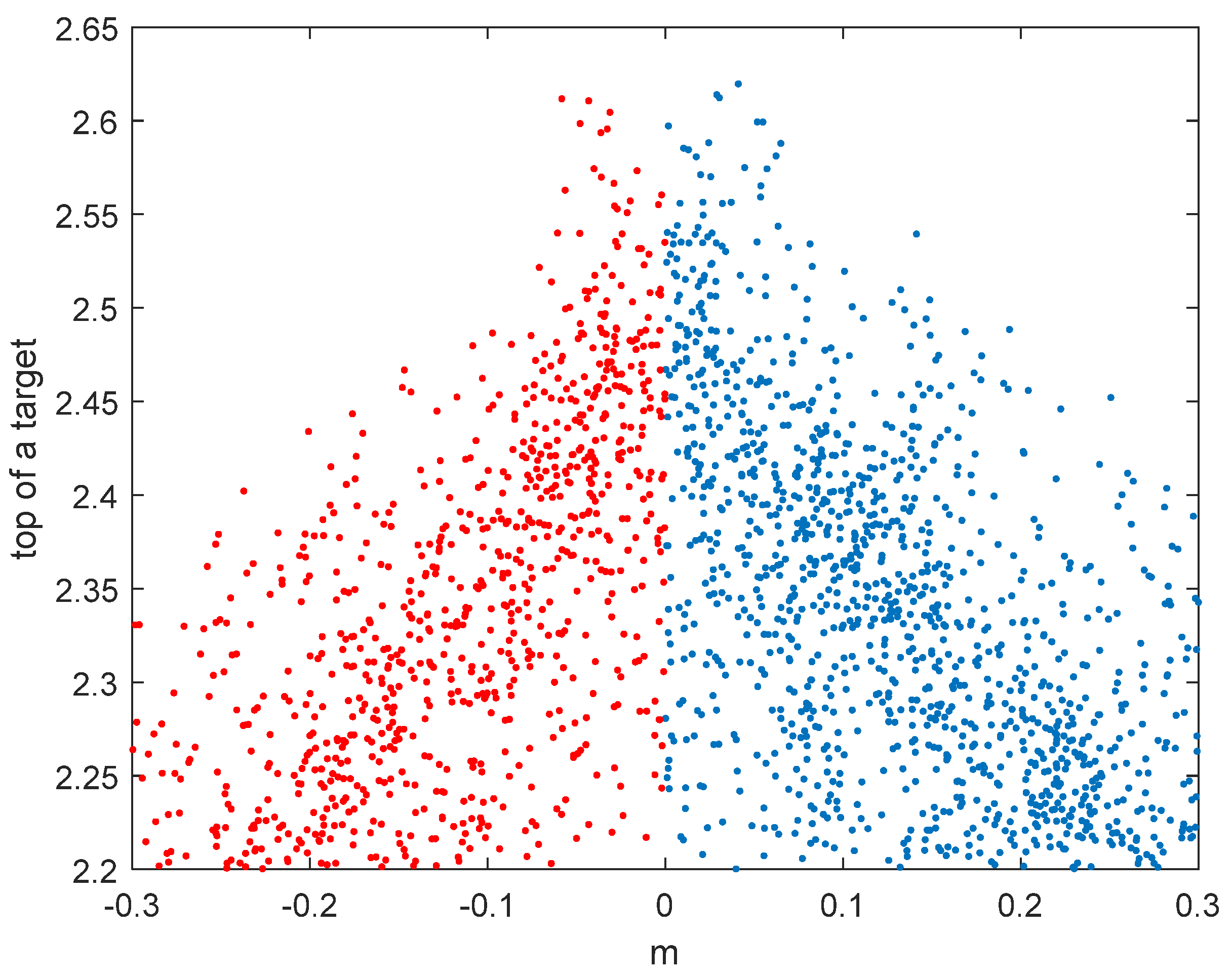

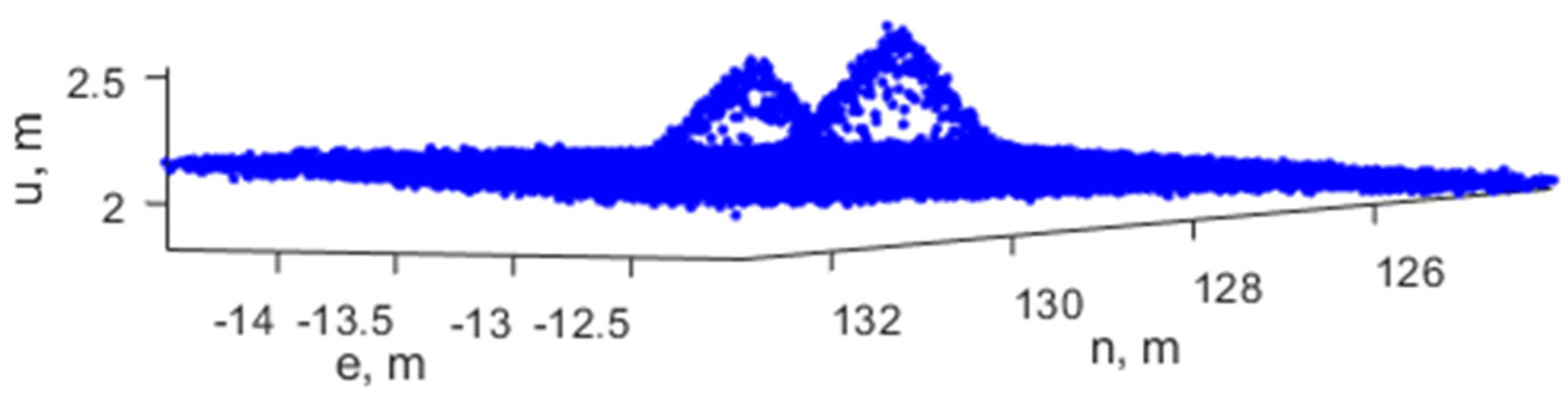

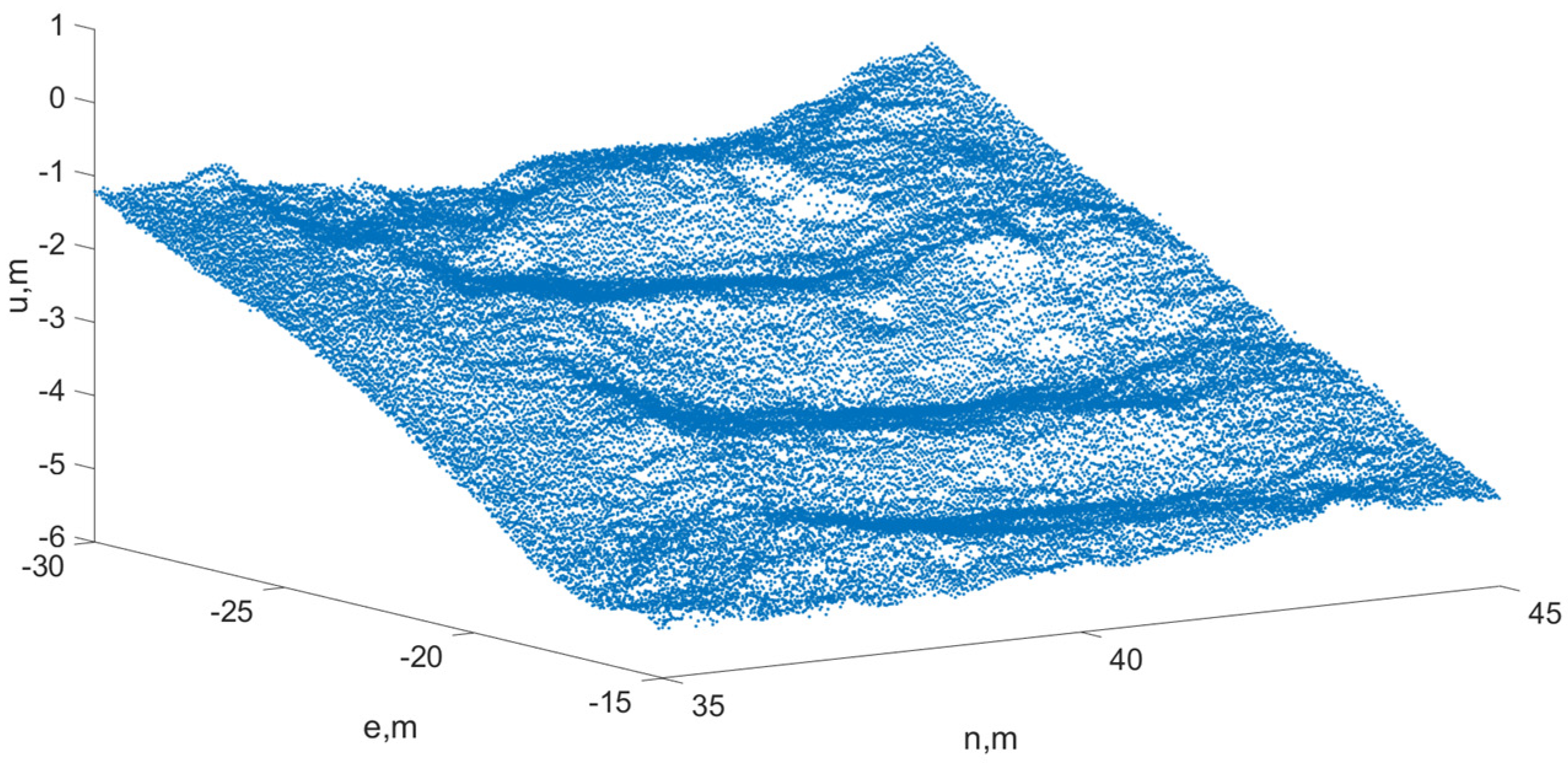
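The LIDAR term described above can be sketched with a small-angle approximation: for a point measured at range ρ along the LIDAR forward axis, the ranging error stays along the forward axis, while the right and downward angular errors displace the point by roughly the range multiplied by each angle (in radians). This form and the example values are assumptions for illustration, not a transcription of the model equations above.

```python
import math

def lidar_error_lframe(rng, d_range, d_right, d_down):
    """Small-angle LIDAR error (m) in the L frame as (forward, right, down).
    rng: measured range (m); d_range: ranging error (m);
    d_right, d_down: right and downward angular errors (rad)."""
    return [d_range, rng * d_right, rng * d_down]

# Hypothetical values: 15 m range, 3 cm ranging error, 0.1 deg angular errors.
err_l = lidar_error_lframe(15.0, 0.03, math.radians(0.1), math.radians(0.1))
```

At 15 m, a 0.1 deg angular error corresponds to about 2.6 cm, and this angular part grows linearly with range, unlike the ranging term.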

Equation (8) can be used to predict the 3D error magnitude in a global frame for individual scan points. Notably, the LIDAR errors (the ranging error and the two angular errors) are not considered systematic errors. Instead, the LIDAR error term from Equation (7) is modeled as a random process that is uncorrelated both among multiple points within the same scan and among repeated scans of the same point from a moving LIDAR. The other components of Equation (8) may be correlated among the points within the same scan but are likely uncorrelated among repeated scans. Therefore, the total error in each point is expected to include a major random component and a minor systematic component. Since the random component is caused by the LIDAR, it is considered a relative error, whereas the systematic component is largely related to errors in the G frame and is therefore an absolute error.

In a set of points X that are approximately collocated horizontally in the G frame, the vertical dimension can be estimated from all the points in the set. In this study, this estimate was computed as the mean or median value of the points. Therefore, a dense raw point cloud can be preprocessed, decimated, and turned into a more accurate elevation model. The expected accuracy improves significantly with the number of points. For example, the down-sampled point could be the average of all the points in X, as shown in Equation (9).

The standard deviation of the random vertical errors in the down-sampled point is reduced by a factor equal to the square root of the number of points in X. With a sufficiently large number of points in X, the random and relative errors approach zero, and the systematic and absolute errors therefore dominate.
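A quick Monte Carlo check illustrates both statements: averaging N points shrinks the random part of the vertical error by about √N, while a shared systematic offset survives the averaging. The noise level, bias, and trial counts below are hypothetical, and the raw points are assumed to have independent random errors.

```python
import random
import statistics

def simulate_mean_height_error(n_points, sigma, bias, trials=2000, seed=0):
    """Return (mean, standard deviation) of the error of a mean-down-sampled height.
    Each raw point error = shared systematic bias + independent Gaussian noise (sigma)."""
    rng = random.Random(seed)
    errors = []
    for _ in range(trials):
        heights = [bias + rng.gauss(0.0, sigma) for _ in range(n_points)]
        errors.append(sum(heights) / len(heights))
    return statistics.mean(errors), statistics.stdev(errors)

# Hypothetical: 5 cm random error per point and a 2 cm systematic offset.
single = simulate_mean_height_error(1, 0.05, 0.02)
averaged = simulate_mean_height_error(100, 0.05, 0.02)
```

For 100 points the spread drops from about 5 cm to about 0.5 cm, whereas the mean error stays near the 2 cm offset.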

Alternatively, the down-sampled point can be calculated from the median value of all the points in X. Median values are less likely to be affected by outliers in the set. An implicit assumption is that all the points in the set share similar heights within a small horizontal neighborhood (centimeter to decimeter level), which is valid for most smooth surfaces. The median value shown in Equation (10) is therefore expected to be a robust estimate. To better identify all the points belonging to each set, optimization methods will be applied in future work [55].
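The mean and median options can be compared with a simple grid-based decimation. Here the set X is approximated by the points falling in the same square horizontal cell; this gridding, the cell size, and the sample heights (including one elevated return, e.g., from vegetation) are assumptions for illustration, not the point-selection method of this study.

```python
import math
import statistics
from collections import defaultdict

def decimate(points, cell_size, method="median"):
    """Down-sample (x, y, z) points to one point per square horizontal cell.
    Horizontal coordinates are averaged; height uses the mean or the median."""
    height_of = statistics.median if method == "median" else statistics.mean
    cells = defaultdict(list)
    for x, y, z in points:
        key = (math.floor(x / cell_size), math.floor(y / cell_size))
        cells[key].append((x, y, z))
    result = {}
    for key, pts in cells.items():
        xs, ys, zs = zip(*pts)
        result[key] = (statistics.mean(xs), statistics.mean(ys), height_of(zs))
    return result

# Hypothetical points in one 10 cm cell; the 11.50 m return is an outlier.
cell_points = [(0.01, 0.02, 10.02), (0.03, 0.05, 9.98), (0.05, 0.01, 10.01),
               (0.07, 0.08, 10.00), (0.02, 0.06, 9.99), (0.04, 0.04, 11.50)]
by_median = decimate(cell_points, 0.1, "median")
by_mean = decimate(cell_points, 0.1, "mean")
```

The median height stays at about 10.005 m, while the single outlier pulls the mean up to 10.25 m.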

While the error model can predict horizontal and vertical errors separately, it does not account for the target surface. The texture, smoothness, and slope of a surface can contribute to the errors in the point cloud. For instance, a horizontal error can be perceived as a vertical error on a sloped surface. Vegetation on the surface can also introduce additional uncertainty; as a result, the optimal choice of down-sampling method, i.e., mean vs. median values, may depend on the target surface. In general, the UAS-LIDAR system measures smooth, flat surfaces free of vegetation with lower errors.
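The slope effect mentioned above can be quantified with simple geometry: a horizontal error of δh along the downslope direction of a plane with slope angle s appears as a vertical discrepancy of about δh·tan(s). The function name and example values are illustrative assumptions.

```python
import math

def apparent_vertical_error(horizontal_err, slope_deg):
    """Vertical discrepancy (m) caused by a horizontal error (m) taken along
    the downslope direction of a planar surface with the given slope (degrees)."""
    return horizontal_err * math.tan(math.radians(slope_deg))

# Hypothetical: a 5 cm horizontal error on flat ground, a 30 deg slope, and a 45 deg slope.
flat = apparent_vertical_error(0.05, 0.0)
moderate = apparent_vertical_error(0.05, 30.0)
steep = apparent_vertical_error(0.05, 45.0)
```

On a 30 deg slope, a 5 cm horizontal error already maps to roughly 2.9 cm of vertical error.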

Furthermore, this error model is generic and applicable to any UAS-LIDAR system in which the LIDAR is synchronized to an onboard navigation system. However, implementing Equation (8) requires intermediate data, such as the error models of the navigation and synchronization, which may not be available from a commercial system.

4.2. An Illustrative Example of the Error Prediction Model

The presented error model helps quantify the contributions of individual error sources to a single point in a LIDAR point cloud. As an illustrative example, consider a typical slow and smooth flight (speed = 5 m/s, with no vibration or vertical velocity considered) in which the UAS holds a constant altitude of 15 m above ground. UAS flight control is often based on a standalone GNSS receiver, which can only achieve meter-level accuracy; for example, the 3D position error of GPS alone is 4.5 m (95% value) [56]. However, the UAS is not required to fly at a precise altitude. Instead, the precise positions of the UAS and the LIDAR are computed in the PPK solution. Since all UAS flights discussed in this work took place under open-sky conditions, at least 15 GNSS satellites from GPS and GLONASS combined were typically visible, which was always sufficient for a successful PPK or RTK solution. The precise LIDAR position, rather than the approximate flight altitude, is used to compute the point cloud as shown in Equation (1).

Based on the typical performance provided by the manufacturer [52], 1-standard-deviation values are assumed for both the orientation and positioning errors. The lever arm between the LIDAR and the antenna is treated as known. The LIDAR points downward, and the UAS is further assumed to be level and facing north. The error magnitude at a ground point x directly underneath the LIDAR is analyzed and illustrated below.

Consider first the error component contributed by the orientation uncertainty. In a level flight with little vibration, it is assumed that any unsensed orientation change within the timing uncertainty is negligible, so that the timing-induced angular error can be ignored. Although this assumption may be too optimistic for the UAS in some practical flight conditions, it is acceptable for the presented sensing system because the vibration of the sensing system can be damped or separated from the vibration of the UAS airframe. In this case, the orientation error follows a simplified model.

Since the distance between x and the LIDAR is much greater than the lever arm, the main contribution of the orientation error comes from the term that rotates the LIDAR-to-point vector, and the lever-arm term can be neglected. This yields the component of the overall error in x that is caused by the orientation uncertainty, with its errors provided in the North, East, and vertical directions, respectively.
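For the level, north-facing geometry of this example, the orientation term can be checked numerically. Treating the roll, pitch, and heading errors as small rotations about the North, East, and Down axes (an assumed convention) and the ground point as lying 15 m straight below the LIDAR, the displacement is the cross product of the angle-error vector with the LIDAR-to-point vector. The angle values below are hypothetical, not the manufacturer figures from [52].

```python
import math

def cross(a, b):
    """Cross product of two 3-vectors."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def orientation_error_nadir(height, angle_err):
    """Small-angle displacement (N, E, vertical) in metres of a point `height` m
    directly below the LIDAR, for angle_err = (roll, pitch, heading) errors in rad."""
    return cross(angle_err, [0.0, 0.0, height])

# Hypothetical 1-sigma errors: 0.03 deg roll and pitch, 0.1 deg heading.
e_orient = orientation_error_nadir(15.0, [math.radians(0.03), math.radians(0.03),
                                          math.radians(0.1)])
```

Under these assumptions the heading error drops out for a nadir point and the vertical component is zero to first order, so 0.03 deg roll and pitch errors produce only about 8 mm of horizontal error per axis at 15 m.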

Similarly, the error component caused by UAS positioning can be estimated from the assumed positioning errors.

In this simplified model, the contribution of the timing error is purely horizontal and proportional only to the UAS velocity; its magnitude is bounded by the product of the flight speed and the timing uncertainty.

A greater contribution comes from the LIDAR error. As mentioned earlier, the ranging error and the right and downward angular errors are assumed for this LIDAR at a conservative error level. This LIDAR error propagates into the overall error of point x.
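Because the LIDAR forward axis points down, the ranging error maps almost entirely into the vertical direction for a nadir point, while the two angular errors map into horizontal error. The sketch below applies an assumed LIDAR-to-NED rotation for a level, north-facing UAS, with the L-frame right axis pointing East and the L-frame down axis completing a right-handed frame (pointing South); the actual mounting rotation of the system may differ.

```python
def lframe_to_ned_nadir(err_l):
    """Rotate an L-frame (forward, right, down) error into (North, East, Down).
    Assumed matrix columns: forward -> Down, right -> East, L down -> South."""
    r_l_to_ned = [[0.0, 0.0, -1.0],
                  [0.0, 1.0, 0.0],
                  [1.0, 0.0, 0.0]]
    return [sum(r_l_to_ned[i][j] * err_l[j] for j in range(3)) for i in range(3)]

# Hypothetical L-frame error: 3 cm ranging, 2.6 cm right, 2.6 cm down.
err_ned = lframe_to_ned_nadir([0.03, 0.026, 0.026])
```

The rotation only redistributes the components, so the 3D magnitude of the LIDAR error is unchanged; what changes is which part lands in the vertical.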

It is evident from comparing Equations (11)–(15) that the LIDAR is the dominant error source for point x. Since the majority of the LIDAR error is considered a random process that is independent among points, as mentioned earlier, integrating and synchronizing the LIDAR with the navigation measurements does not introduce substantial systematic errors into the LIDAR points. As a result, the error magnitude is on the order of 0.1 m in both the horizontal and vertical directions for a typical low-altitude flight.
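To see how the individual components combine, a root-sum-square budget can be formed per axis, assuming the components are mutually independent. All numbers in the example are hypothetical placeholders chosen to mirror the qualitative picture above (small orientation and timing terms, centimeter-level positioning, and a conservative LIDAR term); they are not the values used in this study.

```python
import math

def rss_budget(components):
    """Per-axis total 1-sigma error (N, E, vertical) in metres, assuming the
    components in the dict name -> (sigma_N, sigma_E, sigma_V) are independent."""
    return tuple(math.sqrt(sum(c[i] ** 2 for c in components.values())) for i in range(3))

def dominant_source(components):
    """Name of the component with the largest 3D (root-sum-square) magnitude."""
    return max(components, key=lambda k: math.sqrt(sum(s * s for s in components[k])))

# Hypothetical 1-sigma contributions (m) for a nadir point at 15 m altitude.
budget = {
    "orientation": (0.008, 0.008, 0.0),
    "positioning": (0.02, 0.02, 0.03),
    "timing": (0.0005, 0.0, 0.0),
    "lidar": (0.05, 0.05, 0.05),
}
total = rss_budget(budget)
```

With these placeholder values the LIDAR term dominates every axis, and the per-axis totals remain close to the LIDAR term alone, which is the pattern the comparison above describes.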